Abstract

This study introduces a new scrambled response model for handling sensitive variables. A generalized estimator of the population variance that uses two auxiliary variables is then developed, both under an existing scrambled response model and under the new model. Expressions for the bias, mean square error (MSE), and minimum MSE are derived to the first order of approximation. Simulation experiments and an empirical application show that the proposed generalized estimator attains the smallest MSE under both scrambled response models, outperforming existing estimation techniques. The study also evaluates, through simulations and the empirical study, the level of privacy protection that the models afford respondents.


Introduction

Questions about sensitive characteristics, for example family income, induced abortions, or criminal activities, may cause refusal bias, response bias, or both. Warner1 introduced the randomized response technique (RRT) to overcome this difficulty when the response is qualitative. In RRT, the way the information is collected protects the privacy of the respondent. This work was later extended to quantitative responses through scrambled response models. Pollock and Bek2 presented the theory of additive and multiplicative scrambled randomized response (SRR) techniques. Many researchers have since proposed different scrambling mechanisms, including Himmelfarb and Edgell3, Eichhorn and Hayre4, Gupta et al.5, Diana and Perri6, Hussain and Khan7, Zaman et al.8, and Azeem9. Other survey researchers have improved the estimation stage using additive models. Sousa et al.10 first presented ratio-type estimators of the population mean of a sensitive study variable using a non-sensitive auxiliary variable. In addition, Koyuncu et al.11, Gupta et al.12, Saleem et al.13, Shahzad et al.14, Sanaullah et al.15,16, Saleem and Sanaullah17, Khalid et al.18 and Juarez-Moreno et al.19 presented various estimators of the population mean of a sensitive variable under different RRT models.

Variation is present everywhere in everyday life; no two individuals or objects are identical. Many fields therefore need estimates of a population variance, for example of climate factors across locations or of blood pressure levels. A medical researcher needs to understand how much the effect of a particular HIV treatment dose varies from person to person in order to decide whether to reduce or change the treatment for a particular patient. In practice, the variance to be estimated often concerns a sensitive issue. In survey sampling, auxiliary variables are used at the estimation stage to increase the precision of population variance estimators. Estimation of the population variance of a non-sensitive study variable has been studied by many statisticians, such as Gupta and Shabbir20, Asghar et al.21, Sanaullah et al.22, Niaz et al.23 and Zaman and Bulut24. Singh et al.25 first introduced an estimator of the population variance of a sensitive study variable based on a multiplicative scrambled response model using auxiliary information. They presented different procedures for estimating the variance using the estimators of Das and Tripathi26 and Isaki27. Later, Gupta et al.28 presented three variance estimators under Diana and Perri’s6 RRT model using two scrambling variables. Aloraini et al.29 proposed separate and combined variance estimators under stratified sampling, following the strategy of Gupta et al.28.

The present study follows the methodology of Gupta et al.28 and suggests a new generalized exponential estimator of the finite population variance of a sensitive variable. The bias and mean square error of the proposed estimator are derived to the first order of approximation. The article is organized as follows. The “Sampling strategy for scrambled response model” section discusses the sampling strategy for the scrambled response model of Diana and Perri6. The “The proposed estimator and its class of estimators” section presents the proposed generalized estimator with two auxiliary variables under the existing model, together with expressions for its bias and MSE. In the “The proposed RRT model and estimator-II” section, we propose a generalized randomized response model and adapt the basic variance estimator, the ratio estimator, and the proposed generalized estimator to it. Privacy protection measures for the models are discussed in the “Privacy levels” section. A real-data application is presented in the “An application of the proposed model” section, a simulation study in the “Simulation study” section, and concluding remarks in the “Conclusion” section.

Sampling strategy for scrambled response model

Let a simple random sample without replacement (SRSWOR) of size n be drawn from a finite population U = {U1, U2,…, UN}. Let Y be the true response on the sensitive quantitative variable, let X1 and X2 be non-sensitive auxiliary variables positively correlated with Y, and let Z denote the scrambled response. The sample and population variances are

$$s_{y}^{2}=\frac{\sum_{i=1}^{n}(y_{i}-\overline{y})^{2}}{n-1},\quad s_{x1}^{2}=\frac{\sum_{i=1}^{n}(x_{1i}-\overline{x}_{1})^{2}}{n-1},\quad s_{x2}^{2}=\frac{\sum_{i=1}^{n}(x_{2i}-\overline{x}_{2})^{2}}{n-1},\quad s_{z}^{2}=\frac{\sum_{i=1}^{n}(z_{i}-\overline{z})^{2}}{n-1},$$

$$\sigma_{y}^{2}=\frac{\sum_{i=1}^{N}(Y_{i}-\overline{Y})^{2}}{N-1},\quad \sigma_{x1}^{2}=\frac{\sum_{i=1}^{N}(X_{1i}-\overline{X}_{1})^{2}}{N-1},\quad \sigma_{x2}^{2}=\frac{\sum_{i=1}^{N}(X_{2i}-\overline{X}_{2})^{2}}{N-1},\quad \sigma_{z}^{2}=\frac{\sum_{i=1}^{N}(Z_{i}-\overline{Z})^{2}}{N-1},$$

and the population and sample means are

$$\overline{Y}=\frac{1}{N}\sum_{i=1}^{N}Y_{i},\quad \overline{X}_{1}=\frac{1}{N}\sum_{i=1}^{N}X_{1i},\quad \overline{X}_{2}=\frac{1}{N}\sum_{i=1}^{N}X_{2i},\quad \overline{Z}=\frac{1}{N}\sum_{i=1}^{N}Z_{i},$$

$$\overline{y}=\frac{1}{n}\sum_{i=1}^{n}y_{i},\quad \overline{x}_{1}=\frac{1}{n}\sum_{i=1}^{n}x_{1i},\quad \overline{x}_{2}=\frac{1}{n}\sum_{i=1}^{n}x_{2i},\quad \overline{z}=\frac{1}{n}\sum_{i=1}^{n}z_{i}.$$

To obtain the bias and mean square error, define the error terms

$$s_{z}^{2}=\sigma_{z}^{2}(1+\delta_{z}),\quad s_{x1}^{2}=\sigma_{x1}^{2}(1+\delta_{x1}),\quad s_{x2}^{2}=\sigma_{x2}^{2}(1+\delta_{x2}),\quad \overline{z}=\overline{Z}(1+e_{z}),$$

that is, \(\delta_{z}=\frac{s_{z}^{2}-\sigma_{z}^{2}}{\sigma_{z}^{2}}\), \(\delta_{x1}=\frac{s_{x1}^{2}-\sigma_{x1}^{2}}{\sigma_{x1}^{2}}\), \(\delta_{x2}=\frac{s_{x2}^{2}-\sigma_{x2}^{2}}{\sigma_{x2}^{2}}\) and \(e_{z}=\frac{\overline{z}-\overline{Z}}{\overline{Z}}\). With \(C_{z}=\sigma_{z}/\overline{Z}\), these satisfy, to the first order of approximation,

$$E(\delta_{z})=E(\delta_{x1})=E(\delta_{x2})=E(e_{z})=0,$$

$$E(\delta_{z}^{2})=\theta(\lambda_{400}-1),\quad E(\delta_{x1}^{2})=\theta(\lambda_{040}-1),\quad E(\delta_{x2}^{2})=\theta(\lambda_{004}-1),\quad E(e_{z}^{2})=\theta C_{z}^{2},$$

$$E(\delta_{z}e_{z})=\theta\lambda_{300}C_{z},\quad E(\delta_{x1}e_{z})=\theta\lambda_{120}C_{z},\quad E(\delta_{x2}e_{z})=\theta\lambda_{102}C_{z},$$

$$E(\delta_{x1}\delta_{x2})=\theta(\lambda_{022}-1),\quad E(\delta_{z}\delta_{x1})=\theta(\lambda_{220}-1),\quad E(\delta_{z}\delta_{x2})=\theta(\lambda_{202}-1).$$
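The first-order expectations above can be checked numerically. The sketch below assumes a hypothetical normal population of scrambled responses Z (the population size, sample size, seed and replicate count are illustrative choices, not values from this study). It draws repeated SRSWOR samples and compares the Monte Carlo averages of \(\delta_z^2\) and \(e_z^2\) with \(\theta(\lambda_{400}-1)\) and \(\theta C_z^2\), where \(\lambda_{400}\) is computed as the standardized fourth central moment of Z.

```python
import numpy as np

rng = np.random.default_rng(11)
N, n, reps = 20_000, 50, 4000
theta = 1.0 / n

# Hypothetical finite population of scrambled responses Z.
Z = rng.normal(20.0, 4.0, N)
sigma2_z = np.var(Z, ddof=1)            # (N - 1) divisor, as in the definitions
Zbar = Z.mean()
C_z = np.sqrt(sigma2_z) / Zbar
lam400 = (np.sum((Z - Zbar) ** 4) / (N - 1)) / sigma2_z**2

delta_z = np.empty(reps)
e_z = np.empty(reps)
for r in range(reps):
    z = Z[rng.choice(N, size=n, replace=False)]    # one SRSWOR sample
    delta_z[r] = (np.var(z, ddof=1) - sigma2_z) / sigma2_z
    e_z[r] = (z.mean() - Zbar) / Zbar

print("E(delta_z^2):", np.mean(delta_z**2), "vs", theta * (lam400 - 1))
print("E(e_z^2):    ", np.mean(e_z**2), "vs", theta * C_z**2)
```

Because \(\theta = 1/n\) ignores the finite population correction, the agreement is closest when n is small relative to N.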

Based on the Diana and Perri6 RRT model Z = TY + S, Gupta et al.28 introduced a basic variance estimator and some ratio-type estimators. The basic variance estimator is

The MSE of t0 is

The ratio estimator is given by

The MSE of the ratio estimator tratio is

The generalized ratio estimator is

The MSE of the generalized ratio estimator is

where \(\lambda_{rsa}=\frac{\eta_{rsa}}{\eta_{200}^{r/2}\,\eta_{020}^{s/2}\,\eta_{002}^{a/2}}\) (written \(\mu_{rsa}\) in several expressions below), \(\eta_{rsa}=\frac{1}{N-1}\sum_{i=1}^{N}(Z_{i}-\overline{Z})^{r}(X_{1i}-\overline{X}_{1})^{s}(X_{2i}-\overline{X}_{2})^{a}\), and \(\theta=\frac{1}{n}\).
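For reference, the standardized moments can be computed directly from population arrays. The following is a minimal sketch, assuming NumPy and following the definition with the (N − 1) divisor. The function name `lambda_rsa` is ours, and the first argument is whichever variable carries the first index (the scrambled response Z in the expectations given earlier).

```python
import numpy as np


def lambda_rsa(u, x1, x2, r, s, a):
    """Standardized moment eta_rsa / (eta_200^(r/2) * eta_020^(s/2) * eta_002^(a/2)).

    u carries the first index, x1 the second and x2 the third; every eta uses
    the (N - 1) divisor, so the common factor cancels only partly.
    """
    u, x1, x2 = (np.asarray(v, dtype=float) for v in (u, x1, x2))
    N = u.size
    du, d1, d2 = u - u.mean(), x1 - x1.mean(), x2 - x2.mean()

    def eta(p, q, t):
        # Mixed central moment with the (N - 1) divisor.
        return np.sum(du**p * d1**q * d2**t) / (N - 1)

    scale = eta(2, 0, 0) ** (r / 2) * eta(0, 2, 0) ** (s / 2) * eta(0, 0, 2) ** (a / 2)
    return eta(r, s, a) / scale


# Hypothetical population, for illustration only.
rng = np.random.default_rng(0)
x1 = rng.gamma(4.0, 2.0, 500)
x2 = 0.5 * x1 + rng.normal(0.0, 1.0, 500)
z = 2.0 * x1 + rng.normal(0.0, 3.0, 500)
print(lambda_rsa(z, x1, x2, 4, 0, 0), lambda_rsa(z, x1, x2, 2, 2, 0))
```

With these definitions, \(\lambda_{110}\) is the correlation between the first two variables, which gives a quick sanity check.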

The proposed estimator and its class of estimators

The proposed estimator

In this section, a generalized exponential estimator is presented following Koyuncu et al.11. The proposed estimator has the form

where \({k}_{1}\), \({k}_{2}\) and \({k}_{3}\) are unrestricted constants chosen to minimize the MSE of the estimator, and \({\lambda }_{1}\) and \({\lambda }_{2}\) are generalization constants that can be set to suitable values, known parameters, or functions of known parameters to obtain different efficient or existing estimators. Table 1 shows a few examples obtained by assigning different values to the constants.

To obtain the bias and MSE, we express Eq. (7) in terms of the error terms defined earlier:

The mean square error of the generalized estimator t1D is given by

Differentiating Eq. (10) with respect to k1, k2 and k3 and simplifying, the optimum values of the constants are

Substituting the optimum values of the constants into Eq. (10), the minimum MSE of the estimator simplifies to

where

$$A_{1}=\sigma_{y}^{4}\left[\frac{\lambda_{1}^{2}}{4}(\mu_{040}-1)+\lambda_{2}^{2}(\mu_{004}-1)-\lambda_{1}\lambda_{2}(\mu_{022}-1)\right]+\frac{\sigma_{z}^{4}(\mu_{400}-1)}{(\sigma_{T}^{2}+1)^{2}}+\frac{4\overline{Z}^{4}\sigma_{T}^{4}C_{z}^{2}}{(\sigma_{T}^{2}+1)^{2}}+\frac{\overline{Z}^{2}\sigma_{T}^{2}\sigma_{z}^{2}\mu_{300}C_{z}}{\sigma_{T}^{2}+1}-\frac{2\sigma_{y}^{2}}{\sigma_{T}^{2}+1}\left[\sigma_{z}^{2}\left\{\frac{\lambda_{1}}{2}(\mu_{220}-1)-\lambda_{2}(\mu_{202}-1)\right\}+2\overline{Z}^{2}\sigma_{T}^{2}\left\{\frac{\lambda_{1}}{2}\mu_{120}-\lambda_{2}\mu_{102}\right\}C_{z}\right],$$

$$A_{2}=\sigma_{y}^{2}\left[\frac{\lambda_{1}}{2}(\mu_{040}-1)-\lambda_{2}(\mu_{022}-1)\right]-\frac{\sigma_{z}^{2}(\mu_{220}-1)}{\sigma_{T}^{2}+1}+\frac{2\overline{Z}^{2}\sigma_{T}^{2}\mu_{120}C_{z}}{\sigma_{T}^{2}+1},$$

$$A_{3}=\sigma_{y}^{2}\left[\frac{\lambda_{1}}{2}(\mu_{022}-1)-\lambda_{2}(\mu_{004}-1)\right]-\frac{\sigma_{z}^{2}(\mu_{202}-1)}{\sigma_{T}^{2}+1}+\frac{2\overline{Z}^{2}\sigma_{T}^{2}\mu_{120}C_{z}}{\sigma_{T}^{2}+1},$$

$$A_{4}=A_{2}(\mu_{004}-1)+A_{3}(\mu_{022}-1),\quad A_{5}=A_{2}(\mu_{022}-1)+A_{3}(\mu_{040}-1),\quad A_{6}=(\mu_{040}-1)(\mu_{004}-1)-(\mu_{022}-1)^{2},$$

and

$$A_{7}=\sigma_{y}^{4}+\theta A_{1}+\theta(\mu_{040}-1)\left(\frac{A_{4}}{A_{6}}\right)^{2}+\theta(\mu_{004}-1)\left(\frac{A_{5}}{A_{6}}\right)^{2}+2\theta\frac{A_{2}A_{4}}{A_{6}}-2\theta\frac{A_{3}A_{5}}{A_{6}}+2\theta(\mu_{022}-1)\frac{A_{4}A_{5}}{A_{6}^{2}}.$$
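The optimization step can be illustrated generically. Assume the first-order MSE in Eq. (10) is written as a quadratic form \(MSE(\mathbf{k}) = c - 2\mathbf{b}^{\top}\mathbf{k} + \mathbf{k}^{\top}A\mathbf{k}\) in \(\mathbf{k}=(k_1,k_2,k_3)^{\top}\), with A symmetric positive definite. Setting the derivatives to zero then gives the linear system \(A\mathbf{k}=\mathbf{b}\) and the minimum \(c-\mathbf{b}^{\top}\mathbf{k}_{opt}\). The entries of A, b and c below are hypothetical placeholders, not the coefficients of Eq. (10).

```python
import numpy as np


def minimize_quadratic_mse(A, b, c):
    """Minimize MSE(k) = c - 2 b^T k + k^T A k over unrestricted k.

    The gradient -2 b + 2 A k vanishes at the solution of A k = b; for a
    symmetric positive definite A this is the unique minimizer, and the
    minimum equals c - b^T k_opt.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    k_opt = np.linalg.solve(A, b)
    return k_opt, c - b @ k_opt


def mse(k, A, b, c):
    """Evaluate the quadratic MSE at k."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    k = np.asarray(k, dtype=float)
    return c - 2 * b @ k + k @ A @ k


# Hypothetical coefficients, for illustration only.
A = np.array([[2.0, 0.5, 0.2], [0.5, 1.0, 0.1], [0.2, 0.1, 1.5]])
b = np.array([1.0, 0.3, 0.2])
c = 5.0
k_opt, mse_min = minimize_quadratic_mse(A, b, c)
print(k_opt, mse_min)
```

Solving the linear system directly, rather than inverting A, is the numerically preferred way to obtain the optimum constants.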

Mathematical comparison of the proposed class of estimators with \({t}_{0}\)

i. \(\frac{MSE\left({t}_{0}\right)}{MSE\left({t}_{1D(1)}\right)}=\frac{{B}_{1}}{{B}_{2}}>1\) holds iff \({B}_{1}\) > \({B}_{2}\), where \({B}_{1}=\left(\frac{1}{{({\sigma }_{T}^{2}+1)}^{2}}\right)\left({\sigma }_{z}^{4}({\mu }_{400}-1)+4{\sigma }_{T}^{4}{\overline{Z} }^{4}{C}_{z}^{2}-4{\sigma }_{z}^{2}{\sigma }_{T}^{2}{\overline{Z} }^{2}{\mu }_{300}{C}_{z}\right)\), \({B}_{2}=\frac{{\sigma }_{y}^{4}}{\theta }{\left({k}_{1}-1\right)}^{2}+{k}_{1}^{2}{B}_{21}+{k}_{2}^{2}{\sigma }_{x1}^{4}\left({\mu }_{040}-1\right)+2{k}_{1}{k}_{2}{\sigma }_{x1}^{2}{B}_{22}\), \({B}_{21}=\frac{{\sigma }_{y}^{4}}{4}\left({\mu }_{040}-1\right)+\frac{{\sigma }_{z}^{4}\left({\mu }_{400}-1\right)}{{{(\sigma }_{T}^{2}+1)}^{2}}+4\frac{{\overline{Z} }^{4}{\sigma }_{T}^{4}{C}_{z}^{2}}{{{(\sigma }_{T}^{2}+1)}^{2}}+\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\sigma }_{z}^{2}{\mu }_{300}{C}_{z}}{{(\sigma }_{T}^{2}+1)}-2\frac{{\sigma }_{y}^{2}}{{\sigma }_{T}^{2}+1}\left[\frac{{\sigma }_{z}^{2}}{2}\left({\mu }_{220}-1\right)+{{\overline{Z} }^{2}\sigma }_{T}^{2}{{\mu }_{120}C}_{z}\right]\), \({B}_{22}=\frac{{\sigma }_{y}^{2}}{2}\left({\mu }_{040}-1\right)-\frac{{\sigma }_{z}^{2}\left({\mu }_{220}-1\right)}{{(\sigma }_{T}^{2}+1)}+2\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\mu }_{120}}{{(\sigma }_{T}^{2}+1)}{C}_{z}\).

ii. \(\frac{MSE\left({t}_{0}\right)}{MSE\left({t}_{1D(2)}\right)}=\frac{{B}_{1}}{{B}_{3}}>1\) holds iff \({B}_{1}\) > \({B}_{3}\), where \({B}_{3}=\frac{{\sigma }_{y}^{4}}{\theta }{\left({k}_{1}-1\right)}^{2}+{k}_{1}^{2}{B}_{31}+{k}_{2}^{2}{\sigma }_{x1}^{4}\left({\mu }_{040}-1\right)+2{k}_{1}{k}_{2}{\sigma }_{x1}^{2}{B}_{32}\), \({B}_{31}={\sigma }_{y}^{4}{\lambda }_{2}^{2}\left({\mu }_{004}-1\right)+\frac{{\sigma }_{z}^{4}\left({\mu }_{400}-1\right)}{{{(\sigma }_{T}^{2}+1)}^{2}}+4\frac{{\overline{Z} }^{4}{\sigma }_{T}^{4}{C}_{z}^{2}}{{{(\sigma }_{T}^{2}+1)}^{2}}+\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\sigma }_{z}^{2}{\mu }_{300}{C}_{z}}{{(\sigma }_{T}^{2}+1)}+2\frac{{\sigma }_{y}^{2}}{{\sigma }_{T}^{2}+1}\left[{\sigma }_{z}^{2}{\lambda }_{2}\left({\mu }_{202}-1\right)+2{{\overline{Z} }^{2}\sigma }_{T}^{2}{{\lambda }_{2}\mu }_{102}{C}_{z}\right]\), \({B}_{32}=2\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\mu }_{120}}{{(\sigma }_{T}^{2}+1)}{C}_{z}-{\sigma }_{y}^{2}{\lambda }_{2}\left({\mu }_{022}-1\right)-\frac{{\sigma }_{z}^{2}\left({\mu }_{220}-1\right)}{{(\sigma }_{T}^{2}+1)}.\)

iii. \(\frac{MSE\left({t}_{0}\right)}{MSE\left({t}_{1D(3)}\right)}=\frac{MSE\left({t}_{0}\right)}{MSE\left({t}_{ratio}\right)}=\frac{{B}_{1}}{{B}_{4}}>1\) holds iff \({B}_{1}\) > \({B}_{4}\), where \({B}_{4}={\sigma }_{y}^{4}{\lambda }_{2}^{2}\left({\mu }_{004}-1\right)+\frac{{\sigma }_{z}^{4}\left({\mu }_{400}-1\right)}{{{(\sigma }_{T}^{2}+1)}^{2}}+4\frac{{\overline{Z} }^{4}{\sigma }_{T}^{4}{C}_{z}^{2}}{{{(\sigma }_{T}^{2}+1)}^{2}}+\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\sigma }_{z}^{2}{\mu }_{300}{C}_{z}}{{(\sigma }_{T}^{2}+1)}+2\frac{{\sigma }_{y}^{2}}{{\sigma }_{T}^{2}+1}\left[{\sigma }_{z}^{2}{\lambda }_{2}\left({\mu }_{202}-1\right)+2{{\overline{Z} }^{2}\sigma }_{T}^{2}{{\lambda }_{2}\mu }_{102}{C}_{z}\right]\).

iv. \(\frac{MSE\left({t}_{0}\right)}{MSE\left({t}_{1D(4)}\right)}=\frac{{B}_{1}}{{B}_{5}}>1\) holds iff \({B}_{1}\) > \({B}_{5}\), where \({B}_{5}=\left[\frac{{\sigma }_{z}^{4}\left({\mu }_{400}-1\right)}{{{(\sigma }_{T}^{2}+1)}^{2}}+4\frac{{\overline{Z} }^{4}{\sigma }_{T}^{4}{C}_{z}^{2}}{{{(\sigma }_{T}^{2}+1)}^{2}}+\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\sigma }_{z}^{2}{\mu }_{300}{C}_{z}}{{(\sigma }_{T}^{2}+1)}\right]+{k}_{2}^{2}{\sigma }_{x1}^{4}\left({\mu }_{040}-1\right)+{k}_{3}^{2}{\sigma }_{x2}^{4}\left({\mu }_{004}-1\right)+2{k}_{2}{\sigma }_{x1}^{2}\left[2\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\mu }_{120}}{{(\sigma }_{T}^{2}+1)}{C}_{z}-\frac{{\sigma }_{z}^{2}\left({\mu }_{220}-1\right)}{{(\sigma }_{T}^{2}+1)}\right]-2{k}_{1}{k}_{3}{\sigma }_{x2}^{2}\left[2\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\mu }_{120}}{{(\sigma }_{T}^{2}+1)}{C}_{z}-\frac{{\sigma }_{z}^{2}\left({\mu }_{202}-1\right)}{{(\sigma }_{T}^{2}+1)}\right]+2{k}_{3}{\sigma }_{x1}^{2}{\sigma }_{x2}^{2}\left({\mu }_{022}-1\right)\).

v. \(\frac{MSE\left({t}_{0}\right)}{MSE\left({t}_{1D(5)}\right)}=\frac{{B}_{1}}{{B}_{6}}>1\) holds iff \({B}_{1}\) > \({B}_{6}\), where \({B}_{6}={B}_{21}+{k}_{2}^{2}{\sigma }_{x1}^{4}\left({\mu }_{040}-1\right)+2{k}_{2}{\sigma }_{x1}^{2}{B}_{22}\).

vi. \(\frac{MSE\left({t}_{0}\right)}{MSE\left({t}_{1D(7)}\right)}=\frac{{B}_{1}}{{B}_{7}}>1\) holds iff \({B}_{1}\) > \({B}_{7}\), where \({B}_{7}={B}_{31}+{k}_{2}^{2}{\sigma }_{x1}^{4}\left({\mu }_{040}-1\right)+2{k}_{2}{\sigma }_{x1}^{2}{B}_{32}\).

vii. \(\frac{MSE\left({t}_{0}\right)}{MSE\left({t}_{1D(8)}\right)}=\frac{{B}_{1}}{{B}_{8}}>1\) holds iff \({B}_{1}\) > \({B}_{8}\), where \({B}_{8}={\sigma }_{y}^{4}\left[\frac{{\lambda }_{1}^{2}}{4}\left({\mu }_{040}-1\right)+\left({\mu }_{004}-1\right)-{\lambda }_{1}\left({\mu }_{022}-1\right)\right]+\frac{{\sigma }_{z}^{4}\left({\mu }_{400}-1\right)}{{{(\sigma }_{T}^{2}+1)}^{2}}+4\frac{{\overline{Z} }^{4}{\sigma }_{T}^{4}{C}_{z}^{2}}{{{(\sigma }_{T}^{2}+1)}^{2}}+\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\sigma }_{z}^{2}{\mu }_{300}{C}_{z}}{{(\sigma }_{T}^{2}+1)}-2\frac{{\sigma }_{y}^{2}}{{\sigma }_{T}^{2}+1}\left[{\sigma }_{z}^{2}\left\{\frac{{\lambda }_{1}}{2}\left({\mu }_{220}-1\right)-\left({\mu }_{202}-1\right)\right\}+2{{\overline{Z} }^{2}\sigma }_{T}^{2}\left\{\frac{{\lambda }_{1}}{2}{\mu }_{120}-{\mu }_{102}\right\}{C}_{z}\right]\).

viii. \(\frac{MSE\left({t}_{0}\right)}{MSE\left({t}_{1D(9)}\right)}=\frac{{B}_{1}}{{B}_{9}}>1\) holds iff \({B}_{1}\) > \({B}_{9}\), where \({B}_{9}={\sigma }_{y}^{4}{\lambda }_{2}^{2}\left({\mu }_{004}-1\right)+\frac{{\sigma }_{z}^{4}\left({\mu }_{400}-1\right)}{{{(\sigma }_{T}^{2}+1)}^{2}}+4\frac{{\overline{Z} }^{4}{\sigma }_{T}^{4}{C}_{z}^{2}}{{{(\sigma }_{T}^{2}+1)}^{2}}+\frac{{{\overline{Z} }^{2}\sigma }_{T}^{2}{\sigma }_{z}^{2}{\mu }_{300}{C}_{z}}{{(\sigma }_{T}^{2}+1)}+2\frac{{\sigma }_{y}^{2}}{{\sigma }_{T}^{2}+1}\left[{\sigma }_{z}^{2}{\lambda }_{2}\left({\mu }_{202}-1\right)+2{{\overline{Z} }^{2}\sigma }_{T}^{2}{{\lambda }_{2}\mu }_{102}{C}_{z}\right]\).

Conditions (i)–(viii) above are the conditions under which the proposed class of estimators performs better than the estimator \({t}_{0}\).

The proposed RRT model and estimator-II

The proposed RRT model

Our scrambled randomized response model combines multiplicative, additive, and subtractive scrambling. Y is the sensitive variable of interest and is therefore subject to social desirability bias. S and R are two independent scrambling variables, both uncorrelated with Y. We assume

Rewriting, we get

(i) Estimating \({\sigma }_{ZNP }^{2}\) by its unbiased estimator \({s}_{Z }^{2}\), we obtain

$$t_{NP1}=\frac{s_{Z}^{2}-(1-g)^{2}\sigma_{R}^{2}\,\overline{z}^{2}}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]}-a^{2}\sigma_{S}^{2}.\tag{16}$$

Writing \(s_{Z}^{2}=\sigma_{Z}^{2}(1+\delta_{Z})\) and \(\overline{z}=\overline{Z}(1+e_{0})\), Eq. (16) becomes

$$t_{NP1}=\frac{\sigma_{Z}^{2}(1+\delta_{Z})-(1-g)^{2}\sigma_{R}^{2}\overline{Z}^{2}(1+e_{0})^{2}}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]}-a^{2}\sigma_{S}^{2},\tag{17}$$

$$t_{NP1}=\frac{\sigma_{Z}^{2}-(1-g)^{2}\sigma_{R}^{2}\overline{Z}^{2}}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]}-a^{2}\sigma_{S}^{2}+\frac{\sigma_{Z}^{2}\delta_{Z}-(1-g)^{2}\sigma_{R}^{2}\overline{Z}^{2}(e_{0}^{2}+2e_{0})}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]},\tag{18}$$

$$t_{NP1}-\sigma_{Y}^{2}=\frac{\sigma_{Z}^{2}\delta_{Z}-(1-g)^{2}\sigma_{R}^{2}\overline{Z}^{2}(e_{0}^{2}+2e_{0})}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]}.\tag{19}$$

Taking expectations in (19), the bias is

$$Bias\left(t_{NP1}\right)=-\theta\frac{(1-g)^{2}\sigma_{R}^{2}\overline{Z}^{2}}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]}C_{Z}^{2},\tag{20}$$

and, squaring (19) and taking expectations to the first order of approximation, the MSE is

$$MSE\left(t_{NP1}\right)=\frac{\theta}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]^{2}}\left[\sigma_{Z}^{4}(\lambda_{400}-1)+4(1-g)^{4}\sigma_{R}^{4}\overline{Z}^{4}C_{Z}^{2}-4(1-g)^{2}\sigma_{R}^{2}\sigma_{Z}^{2}\overline{Z}^{2}\lambda_{300}C_{Z}\right].\tag{21}$$

(ii) Ratio estimator under the proposed randomized response model:

$$t_{NP2}=\left[\frac{s_{Z}^{2}-(1-g)^{2}\sigma_{R}^{2}\,\overline{z}^{2}}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]}-a^{2}\sigma_{S}^{2}\right]\frac{\sigma_{X1}^{2}}{s_{X1}^{2}}.\tag{22}$$

Rewriting (22) in terms of the error terms, we get

$$t_{NP2}=\left[\frac{\sigma_{Z}^{2}(1+\delta_{Z})-(1-g)^{2}\sigma_{R}^{2}\overline{Z}^{2}(1+e_{0})^{2}}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]}-a^{2}\sigma_{S}^{2}\right]\frac{\sigma_{X1}^{2}}{\sigma_{X1}^{2}(1+\delta_{X1})},\tag{23}$$

$$t_{NP2}=\left[\sigma_{Y}^{2}+\frac{\sigma_{Z}^{2}\delta_{Z}-(1-g)^{2}\sigma_{R}^{2}\overline{Z}^{2}(e_{0}^{2}+2e_{0})}{\left[g^{2}+(1-g)^{2}(\sigma_{R}^{2}+1)\right]}\right](1+\delta_{X1})^{-1}.\tag{24}$$

Expanding \((1+\delta_{X1})^{-1}\approx 1-\delta_{X1}+\delta_{X1}^{2}\) and taking expectations in (24) to the first order of approximation, the bias and MSE are

$$Bias\left(t_{NP2}\right)=\frac{\theta}{G}\left[\sigma_{Y}^{2}(\lambda_{040}-1)G-\sigma_{Z}^{2}(\lambda_{220}-1)-(1-g)^{2}\sigma_{R}^{2}\overline{Z}^{2}(C_{Z}-2\lambda_{120})C_{Z}\right],\tag{25}$$

$$MSE\left(t_{NP2}\right)=\frac{\theta}{G}\left[\frac{\sigma_{Z}^{4}(\lambda_{400}-1)+4(1-g)^{4}\sigma_{R}^{4}\overline{Z}^{4}C_{Z}^{2}-4(1-g)^{2}\sigma_{R}^{2}\sigma_{Z}^{2}\overline{Z}^{2}\lambda_{300}C_{Z}}{G}-2\sigma_{Y}^{2}\left\{\sigma_{Z}^{2}(\lambda_{220}-1)-2(1-g)^{2}\sigma_{R}^{2}\overline{Z}^{2}\lambda_{120}C_{Z}\right\}+\sigma_{Y}^{4}(\lambda_{040}-1)G\right],\tag{26}$$

where

$$G=\left[{g}^{2}+(1-{g)}^{2}\left({\sigma }_{R}^{2}+1\right)\right].$$
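A direct transcription of Eqs. (16) and (22) is sketched below. The function and argument names are hypothetical, and the inputs in the usage line are illustrative numbers rather than study data.

```python
def g_factor(g, var_R):
    """G = g^2 + (1 - g)^2 (sigma_R^2 + 1), as defined after Eq. (26)."""
    return g**2 + (1 - g) ** 2 * (var_R + 1)


def t_np1(s2_z, zbar, g, a, var_R, var_S):
    """Eq. (16): [s_Z^2 - (1 - g)^2 sigma_R^2 zbar^2] / G - a^2 sigma_S^2."""
    return (s2_z - (1 - g) ** 2 * var_R * zbar**2) / g_factor(g, var_R) - a**2 * var_S


def t_np2(s2_z, zbar, s2_x1, var_x1, g, a, var_R, var_S):
    """Eq. (22): t_NP1 multiplied by the ratio sigma_X1^2 / s_X1^2."""
    return t_np1(s2_z, zbar, g, a, var_R, var_S) * var_x1 / s2_x1


# Illustrative inputs only (not study data).
print(t_np1(s2_z=10.0, zbar=3.0, g=0.5, a=1.0, var_R=0.04, var_S=4.0))
```

As a sanity check, with g = 1 the model reduces to Z = Y + aS, G equals 1, and Eq. (16) reduces to \(s_Z^2 - a^2\sigma_S^2\).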

The proposed estimator under the proposed RRT model

The exponential estimator in (7) can be adapted to the proposed model with two non-sensitive auxiliary variables as

Rewriting (27), we get

To the first order of approximation, the bias and MSE of \({t}_{1N}\) are

Differentiating with respect to \({w}_{1},{w}_{2}\) and \({w}_{3}\), the optimum values are \({w}_{1}=\frac{{\sigma }_{y}^{4}}{{\sigma }_{y}^{4}+\theta {B}_{8}}\), \({w}_{2}=\frac{-{w}_{1}{B}_{4}}{{\sigma }_{x1}^{2}{B}_{5}}\) and \({w}_{3}=\frac{{w}_{1}{B}_{6}}{{\sigma }_{x2}^{2}{B}_{7}}.\)

Substituting these optimum values, the minimum MSE is

where \({D}_{1}=\frac{1}{{\left[{g}^{2}+{\left(1-g\right)}^{2}\left({\sigma }_{R}^{2}+1\right)\right]}^{2}}\left[{\sigma }_{z}^{4}\left({\mu }_{400}-1\right)+{4\left(1-g\right)}^{4}{\sigma }_{z}^{4}{\overline{Z} }^{4}{C}_{z}^{2}-2{\left(1-g\right)}^{2}{\sigma }_{z}^{2}{\sigma }_{R}^{2}{\overline{Z} }^{2}{\mu }_{300}{C}_{z}\right]\), \({D}_{2}=\frac{1}{\left[{g}^{2}+{\left(1-g\right)}^{2}\left({\sigma }_{R}^{2}+1\right)\right]}\left[{\sigma }_{z}^{2}\{{v}_{1}\left({\mu }_{220}-1\right)+{v}_{2}\left({\mu }_{202}-1\right)\}-{2\left(1-g\right)}^{2}{\sigma }_{R}^{2}{\overline{Z} }^{2}\{{v}_{1}{\mu }_{120}+{v}_{2}{\mu }_{102}\}{C}_{z}\right]\), \({D}_{3}={v}_{1}^{2}\left({\mu }_{040}-1\right)+{v}_{2}^{2}\left({\mu }_{004}-1\right)-2{v}_{1}{v}_{2}\left({\mu }_{022}-1\right)\), \({D}_{4}=\theta \left({D}_{1}+{\sigma }_{y}^{4}{D}_{2}-2{\sigma }_{y}^{2}{D}_{3}\right)\), \({B}_{1}={D}_{1}+{\sigma }_{y}^{4}\left[\frac{{v}_{1}^{2}}{2}\left({\mu }_{040}-1\right)+{v}_{2}^{2}\left({\mu }_{004}-1\right)-2{v}_{1}{v}_{2}\left({\mu }_{022}-1\right)\right]-{\sigma }_{y}^{2}\left[{v}_{1}{D}_{2}-{v}_{2}{D}_{3}+2{v}_{1}{v}_{2}\left({\mu }_{022}-1\right)\right]\), \({B}_{2}= {D}_{2}+\left[{\sigma }_{y}^{2}\left({\mu }_{040}-1\right)+2\left({\mu }_{022}-1\right)\right]{v}_{1}\), \({B}_{3}= {D}_{3}+\left[{\sigma }_{y}^{2}\left({\mu }_{022}-1\right)+2\left({\mu }_{004}-1\right)\right]{v}_{2}\),\({B}_{4}=\left[{B}_{2}\left({\mu }_{004}-1\right)-{B}_{3}\left({\mu }_{022}-1\right)\right],\) \({B}_{5}={\sigma }_{X1}^{2}\left[\left({\mu }_{040}-1\right)\left({\mu }_{004}-1\right)-{\left({\mu }_{022}-1\right)}^{2}\right],\) \({B}_{6}=\left[{B}_{2}\left({\mu }_{022}-1\right)-{B}_{3}\left({\mu }_{040}-1\right)\right],\) \({B}_{7}={\sigma }_{X2}^{2}\left[\left({\mu }_{004}-1\right)\left({\mu }_{040}-1\right)-{\left({\mu }_{022}-1\right)}^{2}\right],\) and,

Privacy levels

Many privacy protection measures have been proposed in the literature. In this study, the measure of Yan et al.30 is used to compute the privacy level of Diana and Perri’s6 model and of the proposed randomized response model.

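The privacy protection measure of Yan et al. is the expected squared difference between the scrambled and the true response, \(\Delta = E{\left({Z}_{i}-{Y}_{i}\right)}^{2}\); a larger Δ indicates greater privacy.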

a. Diana and Perri’s6 model is

$$Z=RY+S.\tag{33}$$

Its privacy protection level is

$$\begin{aligned} \Delta_{D} = & E\left( {Z_{i} - Y_{i} } \right)^{2} \\ = & \sigma_{R}^{2} \left( {\mu_{Y}^{2} + \sigma_{y}^{2} } \right) + \sigma_{s}^{2} . \\ \end{aligned}\tag{34}$$

b. The privacy protection level of the proposed randomized response model is

$$\begin{aligned} \Delta_{PN} = & E\left( {Z_{i} - Y_{i} } \right)^{2} \\ = & \left( {\mu_{Y}^{2} + \sigma_{y}^{2} + a^{2} \sigma_{s}^{2} } \right)\left( {1 + \left( {1 - g} \right)^{2} \sigma_{R}^{2} } \right) - \left( {\mu_{Y}^{2} + \sigma_{y}^{2} } \right). \\ \end{aligned}\tag{35}$$

c. Comparing the privacy protection levels of Diana and Perri’s6 model and the proposed model,

$$\Delta_{PN}-\Delta_{D}=\left(\mu_{Y}^{2}+\sigma_{y}^{2}\right)\sigma_{R}^{2}g\left(g-2\right)-\sigma_{s}^{2}\left[1-a^{2}\left(1+\left(1-g\right)^{2}\sigma_{R}^{2}\right)\right],\tag{36}$$

so the proposed model offers more privacy, \(\Delta_{PN}>\Delta_{D}\), iff

$$g\left(g-2\right)>\frac{\sigma_{s}^{2}\left[1-a^{2}\left(1+\sigma_{R}^{2}\right)\right]}{\sigma_{R}^{2}\left(\mu_{Y}^{2}+\sigma_{y}^{2}+a^{2}\sigma_{s}^{2}\right)}.$$
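The privacy expressions can be checked numerically. The sketch below assumes independent normal scrambling variables with E(R) = 1 and E(S) = 0, as in the application. The parameter values and function names are hypothetical. It codes Eqs. (34)–(36), derives the privacy condition by rearranging (36), and compares Eq. (35) with a Monte Carlo average of \((Z-Y)^2\).

```python
import numpy as np


def privacy_dp(mu_y, var_y, var_R, var_S):
    """Eq. (34): Delta_D = E(Z - Y)^2 for Z = R Y + S, with E(R) = 1, E(S) = 0."""
    return var_R * (mu_y**2 + var_y) + var_S


def privacy_np(mu_y, var_y, var_R, var_S, g, a):
    """Eq. (35): Delta_PN = E(Z - Y)^2 for Z = [g + (1 - g) R](Y + a S)."""
    m2 = mu_y**2 + var_y                    # E(Y^2)
    return (m2 + a**2 * var_S) * (1 + (1 - g) ** 2 * var_R) - m2


def privacy_gap(mu_y, var_y, var_R, var_S, g, a):
    """Eq. (36): closed form of Delta_PN - Delta_D."""
    m2 = mu_y**2 + var_y
    return m2 * var_R * g * (g - 2) - var_S * (1 - a**2 * (1 + (1 - g) ** 2 * var_R))


def proposed_more_private(mu_y, var_y, var_R, var_S, g, a):
    """Delta_PN > Delta_D, using (1-g)^2 = 1 + g(g-2) to isolate g(g-2) in (36)."""
    m2 = mu_y**2 + var_y
    rhs = var_S * (1 - a**2 * (1 + var_R)) / (var_R * (m2 + a**2 * var_S))
    return g * (g - 2) > rhs


# Monte Carlo check with hypothetical parameter values.
rng = np.random.default_rng(1)
m = 200_000
mu_y, sd_y, sd_R, sd_S, g, a = 3.0, 0.5, 0.2, 2.0, 0.4, 0.5
Y = rng.normal(mu_y, sd_y, m)
R = rng.normal(1.0, sd_R, m)
S = rng.normal(0.0, sd_S, m)
Z_np = (g + (1 - g) * R) * (Y + a * S)
print(np.mean((Z_np - Y) ** 2), privacy_np(mu_y, sd_y**2, sd_R**2, sd_S**2, g, a))
```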

An application of the proposed model

Following Saleem and Sanaullah17, this section presents a real-life application to assess the efficiency of the proposed RRT model relative to the existing model.

A survey was conducted to collect real data for estimating the true variance of the cumulative grade point average (CGPA) of students of the Department of Statistics, Forman Christian College University, Lahore, who studied the course Statistical Methods in Spring 2023. The ninety students registered in the three sections of this course form the population. The study variable Y is the CGPA of a student, and the two auxiliary variables are X1, the weekly study hours, and X2, the number of courses studied in recent semesters. For the scrambling variables, S is normal with mean 0 and standard deviation 2, and R is normal with mean 1 and standard deviation 0.02. Some characteristics of the population are as follows.

For the Diana and Perri6 model, \(Z=RY+S\):

For the proposed model, \({Z}_{NP}=g\left(Y+aS\right)+\left(1-g\right)R\left(Y+aS\right)\):

Table 2 shows the MSE estimates under the model of Diana and Perri6 and under the proposed model. The results are obtained for two sample sizes, n = 20 and n = 38. Under both models, the proposed estimator gives the minimum MSE among the estimators considered.
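The data-collection step can be sketched as follows. The sketch uses the scrambling distributions stated above (S ~ N(0, 2²), R ~ N(1, 0.02²)) and an SRSWOR of size 20 from N = 90 units. The CGPA values and the constants g and a are hypothetical, because the survey data and design constants are not reproduced here. For illustration only, the same draws of R and S feed both models; in a real survey each respondent would answer under one model.

```python
import numpy as np

rng = np.random.default_rng(3)
N, n = 90, 20
Y = rng.uniform(2.0, 4.0, N)        # hypothetical CGPA values, not the survey data
g, a = 0.5, 1.0                     # hypothetical design constants


def scramble(y, rng):
    """Return responses under Z = R Y + S and under the proposed model Z_NP."""
    R = rng.normal(1.0, 0.02, y.size)     # R ~ N(1, 0.02^2), as in the application
    S = rng.normal(0.0, 2.0, y.size)      # S ~ N(0, 2^2), as in the application
    z_dp = R * y + S
    z_np = g * (y + a * S) + (1 - g) * R * (y + a * S)
    return z_dp, z_np


idx = rng.choice(N, size=n, replace=False)     # SRSWOR of size n = 20
z_dp, z_np = scramble(Y[idx], rng)
print("Z = RY + S: s_z^2 =", np.var(z_dp, ddof=1), " zbar =", z_dp.mean())
print("Z_NP:       s_z^2 =", np.var(z_np, ddof=1), " zbar =", z_np.mean())
```

Both models keep \(E(Z)=E(Y)\) because E(R) = 1 and E(S) = 0, so \(\overline{z}\) estimates the mean of the true responses.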

Simulation study

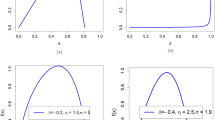

In this section, we conduct a simulation study to evaluate the performance of the proposed generalized exponential-type estimators by comparing them with existing variance estimators.

Population I:

Population II:

For both populations, we consider samples of three sizes: 200, 300, and 500. Different values of the variances of the scrambling variables, Var(S) and Var(T), are used in the simulation.
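The specifications of Populations I and II are not given here, so the following is only a sketch of the Monte Carlo design. It assumes a hypothetical normal population and the model Z = TY + S with independent T ~ N(1, Var(T)) and S ~ N(0, Var(S)). It also assumes the moment-inversion form \(t_0=(s_z^2-\sigma_T^2\overline{z}^2-\sigma_S^2)/(\sigma_T^2+1)\), which follows from \(Var(Z)=(\sigma_T^2+1)\sigma_y^2+\sigma_T^2\overline{Z}^2+\sigma_S^2\) when E(T) = 1 and E(S) = 0. The helper names and numeric settings are ours.

```python
import numpy as np


def t0_estimate(z, var_T, var_S):
    """Assumed moment-inversion estimator of sigma_y^2 under Z = T Y + S."""
    s2_z = np.var(z, ddof=1)
    zbar = z.mean()
    return (s2_z - var_T * zbar**2 - var_S) / (var_T + 1)


def empirical_mse(Y, n, var_T, var_S, reps, rng):
    """Mean and empirical MSE of t0 over `reps` SRSWOR samples of size n.

    Fresh scrambling draws T and S are generated for every sampled
    respondent in every replicate.
    """
    target = np.var(Y, ddof=1)              # sigma_y^2 with the (N - 1) divisor
    est = np.empty(reps)
    for r in range(reps):
        y = Y[rng.choice(Y.size, size=n, replace=False)]
        T = rng.normal(1.0, np.sqrt(var_T), n)
        S = rng.normal(0.0, np.sqrt(var_S), n)
        est[r] = t0_estimate(T * y + S, var_T, var_S)
    return est.mean(), np.mean((est - target) ** 2)


rng = np.random.default_rng(2024)
Y = rng.normal(50.0, 10.0, 2000)            # hypothetical finite population
for n in (200, 300, 500):
    mean_t0, mse_t0 = empirical_mse(Y, n, var_T=0.01, var_S=4.0, reps=1000, rng=rng)
    print(n, round(mean_t0, 2), round(mse_t0, 2))
```

The same loop can be repeated over several values of Var(S) and Var(T), and over the other estimators, to fill a table of empirical MSEs.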

Table 3 reports the privacy protection levels of the RRT models discussed in this study. Following Gupta et al.31, the unified measure of an estimator is given by

where i = 0, ratio, 1D, NP1, NP2, 1 and j = D, PN; \(MSE({t}_{i})\) is the theoretical MSE of estimator \({t}_{i}\), and \({\Delta }_{j}\) is the privacy level of Diana and Perri’s6 model (j = D) or of the proposed model (j = PN), as discussed in the “Privacy levels” section.

Tables 4, 5 and 6 give the MSE and percent relative efficiency (PRE) results for the proposed and existing estimators discussed in this article. The PRE is computed as

where i = ratio, gratio, and gep.

The results are presented in Tables 4, 5 and 6. Tables 4 and 5 give the numerical results for the estimators discussed in the “Sampling strategy for scrambled response model” and “The proposed estimator and its class of estimators” sections, whereas Table 6 gives the results for the estimators discussed in the “The proposed RRT model and estimator-II” section under the proposed model. The values in Tables 4, 5 and 6 confirm that the existing estimators of Gupta et al.28 are less efficient than the generalized estimator. Comparing the results under the two models, the proposed model yields smaller MSE values than the model of Diana and Perri6. The MSE also decreases as the variance of S increases.

A smaller value of \(\vartheta\) is preferred. Tables 3, 4 and 5 present the unified measure along with the PRE of the estimators. The proposed generalized estimator using two auxiliary variables performs efficiently under both Diana and Perri’s model and the proposed RRT model, and its values of \(\vartheta\) are smaller than those of the other estimators.
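The bookkeeping behind the PRE and unified-measure columns can be sketched as follows. This assumes the usual definition \(PRE(t_i)=100\times MSE(t_0)/MSE(t_i)\) and reads the unified measure of Gupta et al.31 as \(\vartheta_{ij}=MSE(t_i)/\Delta_j\), so that smaller is better. Both forms are assumptions, and the MSE and privacy values in the usage example are hypothetical, not results from Tables 3–6.

```python
def pre(mse_reference, mse_candidate):
    """Percent relative efficiency of a candidate estimator w.r.t. a reference."""
    return 100.0 * mse_reference / mse_candidate


def unified_measure(mse, privacy):
    """Assumed unified measure: MSE divided by the privacy level Delta (smaller is better)."""
    return mse / privacy


def rank_by_unified_measure(results):
    """Sort (name, mse, privacy) tuples from the smallest to the largest unified measure."""
    return sorted(results, key=lambda item: unified_measure(item[1], item[2]))


# Hypothetical numbers, for illustration only.
results = [("t0", 4.0, 2.0), ("tratio", 3.0, 2.0), ("t1D", 1.5, 2.0)]
for name, mse, privacy in rank_by_unified_measure(results):
    print(name, pre(4.0, mse), unified_measure(mse, privacy))
```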

Conclusion

This study addressed the estimation of the population variance of a sensitive study variable using non-sensitive auxiliary variables. A generalized exponential-type estimator based on Diana and Perri’s6 randomized response model was introduced and evaluated against the estimators proposed by Gupta et al.28, as detailed in Tables 4, 5 and 6. The comparison showed that the proposed estimator was consistently more efficient. We also introduced a new generalized scrambled response model and applied it to the conventional variance and ratio estimators and to the proposed estimator. In the “An application of the proposed model” section, a real survey-based study applying the proposed RRT model was presented. Under both our model and that of Diana and Perri6, the proposed estimator achieved a smaller MSE than the conventional variance and ratio estimators, and its efficiency improved further as the sample size increased. A simulation study was also conducted; its findings, summarized in Tables 3, 4, 5 and 6, compare the expected variances, MSE, and percent relative efficiency (PRE). The generalized proposed estimator under the proposed randomized response model provided the minimum MSE for both populations, outperforming the corresponding estimators under Diana and Perri’s6 model. Overall, the proposed generalized estimator, combined with the new scrambled response model, performed well in both the real and the simulated settings, which suggests that this approach can improve the precision and reliability of population variance estimation in sensitive surveys.

Data availability

The data set used and/or analysed during the current study is available from the corresponding author on reasonable request.

References

1. Warner, S. L. Randomized response: A survey technique for eliminating evasive answer bias. J. Am. Stat. Assoc. 60(309), 63–69 (1965).

2. Pollock, K. H. & Bek, Y. A comparison of three randomized response models for quantitative data. J. Am. Stat. Assoc. 71(356), 884–886 (1976).

3. Himmelfarb, S. & Edgell, S. E. Additive constants model: A randomized response technique for eliminating evasiveness to quantitative response questions. Psychol. Bull. 87(3), 525 (1980).

4. Eichhorn, B. H. & Hayre, L. S. Scrambled randomized response methods for obtaining sensitive quantitative data. J. Stat. Plan. Inference 7, 307–316 (1983).

5. Gupta, S., Shabbir, J. & Sehra, S. Mean and sensitivity estimation in optional randomized response models. J. Stat. Plan. Inference 140(10), 2870–2874 (2010).

6. Diana, G. & Perri, P. F. A class of estimators for quantitative sensitive data. Stat. Pap. 52(3), 633–650 (2011).

7. Hussain, Z. & Khan, K. On estimation of sensitive mean using scrambled data. World Appl. Sci. J. 23(9), 1201–1206 (2013).

8. Zaman, Q., Ijaz, M. & Zaman, T. A randomization tool for obtaining efficient estimators through focus group discussion in sensitive surveys. Commun. Stat. Theory Methods 52(10), 3414–3428 (2023).

9. Azeem, M. Using the exponential function of scrambling variable in quantitative randomized response models. Math. Methods Appl. Sci. 1, 1 (2023).

10. Sousa, R., Shabbir, J., Real, P. C. & Gupta, S. Ratio estimation of the mean of a sensitive variable in the presence of auxiliary information. J. Stat. Theory Pract. 4(3), 495–507 (2010).

11. Koyuncu, N., Gupta, S. & Sousa, R. Exponential-type estimators of the mean of a sensitive variable in the presence of non-sensitive auxiliary information. Commun. Stat. Simul. Comput. 43(7), 1583–1594 (2014).

12. Gupta, S., Kalucha, G. & Shabbir, J. A regression estimator for finite population mean of a sensitive variable using an optional randomized response model. Commun. Stat. Simul. Comput. 46(3), 2393–2405 (2017).

13. Saleem, I., Sanaullah, A. & Hanif, M. Double-sampling regression-cum-exponential estimator of the mean of a sensitive variable. Math. Popul. Stud. 26(3), 163–182 (2019).

14. Shahzad, U., Perri, P. F. & Hanif, M. A new class of ratio-type estimators for improving mean estimation of nonsensitive and sensitive variables by using supplementary information. Commun. Stat. Simul. Comput. 48(9), 2566–2585 (2019).

15. Sanaullah, A., Saleem, I. & Shabbir, J. Use of scrambled response for estimating mean of the sensitivity variable. Commun. Stat. Theory Methods 49(11), 2634–2647 (2020).

16. Sanaullah, A., Saleem, I., Gupta, S. & Hanif, M. Mean estimation with generalized scrambling using two-phase sampling. Commun. Stat. Simul. Comput. 51, 1–15 (2020).

17. Saleem, I. & Sanaullah, A. Estimation of mean of a sensitive variable using efficient exponential-type estimators in stratified sampling. J. Stat. Comput. Simul. 92(2), 232–248 (2022).

18. Khalid, A., Sanaullah, A., Almazah, M. M. A. & Al-Duais, F. S. An efficient ratio-cum-exponential estimator for estimating the population distribution function in the existence of non-response using an SRS design. Mathematics 11, 1312. https://doi.org/10.3390/math11061312 (2023).

19. Juárez-Moreno, P. O., Santiago-Moreno, A., Sautto-Vallejo, J. M. & Bouza-Herrera, C. N. Scrambling reports: New estimators for estimating the population mean of sensitive variables. Mathematics 11(11), 2572 (2023).

20. Gupta, S. & Shabbir, J. Variance estimation in simple random sampling using auxiliary information. Hacettepe J. Math. Stat. 37(1), 57 (2008).

21. Asghar, A., Sanaullah, A. & Hanif, M. Generalized exponential type estimator for population variance in survey sampling. Rev. Colomb. Estad. 37(1), 213–224 (2014).

22. Sanaullah, A., Hanif, M. & Asghar, A. Generalized exponential estimators for population variance under two-phase sampling. Int. J. Appl. Comput. Math. 2(1), 75–84 (2016).

23. Niaz, I., Sanaullah, A., Saleem, I. & Shabbir, J. An improved efficient class of estimators for the population variance. Concurr. Comput. Pract. Exp. 34(4), e6620 (2022).

24. Zaman, T. & Bulut, H. A new class of robust ratio estimators for finite population variance. Sci. Iran. https://doi.org/10.24200/sci.2022.57175.5100 (2022).

25. Singh, S., Sedory, S. A. & Arnab, R. Estimation of finite population variance using scrambled responses in the presence of auxiliary information. Commun. Stat. Simul. Comput. 44(4), 1050–1065 (2015).

26. Das, A. K. & Tripathi, T. P. Use of auxiliary information in estimating the finite population variance. Sankhya 40, 139–148 (1978).

27. Isaki, C. T. Variance estimation using auxiliary information. J. Am. Stat. Assoc. 78(381), 117–123 (1983).

28. Gupta, S., Qureshi, M. N. & Khalil, S. Variance estimation using randomized response technique. REVSTAT Stat. J. 18(2), 165–176 (2020).

29. Aloraini, B., Khalil, S., Qureshi, M. N. & Gupta, S. Estimation of population variance for a sensitive variable in stratified sampling using randomized response technique. REVSTAT Stat. J. 1, 1 (2022).

30. Yan, Z., Wang, J. & Lai, J. An efficiency and protection degree-based comparison among the quantitative randomized response strategies. Commun. Stat. Theory Methods 38(3), 400–408 (2008).

31. Gupta, S., Mehta, S., Shabbir, J. & Khalil, S. A unified measure of respondent privacy and model efficiency in quantitative RRT models. J. Stat. Theory Pract. 12, 506–511 (2018).

Acknowledgements

This work was supported by Princess Nourah bint Abdulrahman University Researchers Supporting Project Number (PNURSP2023R368), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Author information

Contributions

I.S.: conceptualization, theoretical and mathematical framework, software, data visualization and analysis, and writing of the first draft. A.S.: conceptualization, supervision, data visualization and analysis, writing of the first draft, and editing and reviewing of the final draft. L.A.A.-E. & A.A.-M.: editing, reviewing and proofreading of the final draft; project management, resources and funding. S.B.: editing, formatting and reviewing of the final draft of the manuscript. All authors reviewed the final draft of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Saleem, I., Sanaullah, A., Al-Essa, L.A. et al. Efficient estimation of population variance of a sensitive variable using a new scrambling response model. Sci Rep 13, 19913 (2023). https://doi.org/10.1038/s41598-023-45427-2
