Abstract

Heart rate (HR) is a crucial physiological signal that can be used to monitor health and fitness. Traditional methods for measuring HR require wearable devices, which can be inconvenient or uncomfortable, especially during sleep and meditation. Noncontact HR detection methods employing microwave radar can be a promising alternative. However, the existing approaches in the literature usually use high-gain antennas and require the sensor to face the user’s chest or back, making them difficult to integrate into a portable device and unsuitable for sleep and meditation tracking applications. This study presents a novel approach for noncontact HR detection using a miniaturized Soli radar chip embedded in a portable device (Google Nest Hub). The chip has a \(6.5 \text{ mm } \times 5 \text{ mm } \times 0.9 \text{ mm }\) dimension and can be easily integrated into various devices. The proposed approach utilizes advanced signal processing and machine learning techniques to extract HRs from radar signals. The approach is validated on a sleep dataset (62 users, 498 h) and a meditation dataset (114 users, 1131 min). The approach achieves a mean absolute error (MAE) of 1.69 bpm and a mean absolute percentage error (MAPE) of \(2.67\%\) on the sleep dataset. On the meditation dataset, the approach achieves an MAE of 1.05 bpm and a MAPE of \(1.56\%\). The recall rates for the two datasets are \(88.53\%\) and \(98.16\%\), respectively. This study represents the first application of the noncontact HR detection technology to sleep and meditation tracking, offering a promising alternative to wearable devices for HR monitoring during sleep and meditation.

Introduction

Sleep is essential for overall well-being, having a significant impact on physical and mental health. Research indicates that poor sleep quality and inadequate sleep can lead to various health issues, including obesity, diabetes, cardiovascular disease, and depression1. Additionally, meditation has been found to have multiple benefits, such as reducing stress, anxiety, and depression and enhancing attention and cognitive function2. Therefore, accurately tracking sleep and meditation patterns is crucial for individuals to improve their practice and maintain optimal health and wellness3.

The integration of smart beds/mattresses, equipped with advanced sensing technologies such as pressure sensors4,5, presents a promising avenue for systematic, preventive, personalized, and non-invasive assessment of sleep quality. These technologies enable the measurement of critical parameters like respiration rate (RR) and heart rate (HR). However, their limitations, including bulkiness, high cost, and immobility, pose challenges to widespread adoption and deployment in diverse settings. In contrast, wearable devices like smartwatches have gained popularity for sleep and meditation tracking. They can collect data on movement and HR using sensors like accelerometers and photoplethysmography (PPG)6. Nevertheless, wearing a device during sleep or meditation can be uncomfortable and inconvenient, potentially leading to incomplete or inaccurate data6. Remote photoplethysmography (rPPG)7,8,9,10 has also emerged as a compelling alternative to wearable devices. It offers valuable insights, including HR measurement, by detecting changes in reflected light intensity from a user’s skin. However, rPPG’s applicability is limited by its requirement to “see” the user’s skin, making it unsuitable for comprehensive sleep and meditation tracking due to constraints related to varying light conditions, sleep postures, and concerns regarding user privacy.

Portable devices like the Google Nest Hub11, equipped with microwave radar technology, introduce an innovative approach to monitoring sleep and meditation quality. These devices eliminate the discomfort and inconvenience associated with wearing tracking devices by providing valuable insights through motion tracking and RR monitoring12. In comparison to their rPPG7,8,9,10 counterparts, radar systems offer several advantages, including enhanced privacy protection, independence from light conditions, and reduced power consumption. They can also penetrate clothing and blankets, and are suitable for various postures during sleep and meditation. Yet, it is important to acknowledge that current portable devices, including the Google Nest Hub, cannot monitor HR, a crucial biometric for comprehensive sleep and meditation tracking, due to existing technical challenges. The implementation of HR measurement in portable devices is therefore an area of great interest.

Figure 1. (a) A Google Nest Hub monitors a user’s HR contactlessly from a bedside table. The device integrates a radar chip called Soli, operating in the 60 GHz frequency band. (b) The Soli radar chip measures 6.5 mm × 5 mm × 0.9 mm and comprises one transmit antenna and three receive antennas on the package. (c) The proposed HR detection approach includes three signal processing blocks to detect the user’s presence and extract micro-motion waveforms, a machine learning block to extract the pulse waveform and related pseudo-spectrum, and a post-processing block to smooth HRs. (d) An overnight sleep example. The upper plot illustrates the user’s sleep position, while the lower plot shows the HRs estimated by the proposed method (i.e., Soli HR) and the ECG HRs as the ground truth. The Soli HR accurately tracks slow HR fluctuations over time under various sleep positions.

Related work

Noncontact HR detection using microwave radar13,14,15,16,17 represents a promising technology to integrate HR monitoring into portable sleep- and meditation-tracking devices. This approach emits electromagnetic waves toward the target subject. It measures the reflected signals, which exhibit periodic variations related to the HR due to changes in blood flow with each heartbeat. HR information can be extracted from the reflected signals using advanced signal processing (SP) and machine learning (ML) techniques without physical contact. Although significant efforts have been dedicated to this field over the past few decades, two challenges still need to be solved for using radar in portable sleep and meditation-tracking devices.

Firstly, most existing systems rely on high-gain antennas to improve the signal-to-noise ratio (SNR) and reduce environmental interference by directing the radiated energy onto the user’s body. However, this method requires larger antennas and produces a narrower field of view (FOV), which typically restricts the user to a position directly in front of the radar sensor.

Secondly, the weak micro-motion of the heartbeat is often overshadowed by respiration motion and random body motion, which are typically stronger than the heartbeat by one or two orders of magnitude. In the frequency domain, the weak heartbeat signal can be easily masked by the harmonic components and sidelobes of these two interfering motions. Thus, traditional spectral analysis techniques, such as Fourier transform (FT) and band-pass filter (BPF)17, fail to perform satisfactorily. Various methods have been developed to address this issue, including ensemble empirical mode decomposition (EEMD)18, wavelet19, RELAX20, Kalman filter21, independent component analysis (ICA)22, and ML23. However, the authors of these papers have primarily focused on cases where the radar sensor is directed straight at the user’s chest or back. Detecting HR from the sides presents an even greater challenge due to the smaller magnitude of heartbeat micro-motion and the obstruction of the arms and shoulders. In fact, a recent study23 found that “the left and right orientations are unsuitable for monitoring HR and RR” when examining the HR performance for different user orientations. Unfortunately, users may assume various poses during sleep. The most common sleep position, supine, leads to the side orientation, making it difficult to acquire reliable HRs using a portable device at the bedside. More advanced techniques are required to overcome this challenge.

This article introduces an innovative approach for continuously monitoring a user’s HR during sleep and meditation, utilizing a Google Nest Hub placed on a bedside table (Fig. 1a). The Google Nest Hub incorporates a miniaturized radar chip named Soli, which operates in the 60 GHz frequency band and includes one transmit antenna and three receive antennas, all on the package, with a small dimension of \(6.5 \text{ mm } \times 5 \text{ mm } \times 0.9 \text{ mm }\) (Fig. 1b). We propose a combined SP and ML approach to address the challenges of weak heartbeat micro-motion and interference from respiration and random body motion (as depicted in Fig. 1c). The approach involves using three SP blocks to detect the user’s presence and extract micro-motion waveforms from different body parts. Then, an ML-based pulse extraction block is used to extract the heart pulse waveform and associated pseudo-spectrum from the micro-motion waveforms, from which we detect the HR. Finally, a post-processing block is employed to smooth the HR sequence.

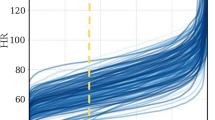

We have tested the proposed method on a sleep dataset (62 users, 498 h) and a meditation dataset (114 users, 1131 min), obtaining a mean absolute error (MAE) of 1.69 bpm (beats per minute) and 1.05 bpm, a mean absolute percentage error (MAPE) of \(2.67\%\) and \(1.56\%\), and recall rates of \(88.53\%\) and \(98.16\%\), respectively. We provide a sleep-tracking example in Fig. 1d, which shows the overnight Soli HR sequence compared to its electrocardiogram (ECG) counterpart and the user’s sleep position. Soli’s HR estimates track gradual HR changes across different sleep positions and closely match the ECG HRs.

To the best of our knowledge, this article represents a significant contribution to three areas of research: (1) the use of a miniaturized radar chip in a commercial portable device for noncontact HR detection; (2) the study of noncontact HR detection for sleep and meditation tracking, including challenging sleep positions where the radar sensor views users from their sides; and (3) the evaluation of this technology on large-scale datasets, including 62 users’ overnight sleep data and 114 users’ meditation data under various practical conditions. Overall, it introduces the novel application of noncontact HR detection technology to sleep and meditation tracking, providing a promising solution for noncontact HR monitoring in these domains.

Results

Below, we present the HR results on the sleep and meditation datasets.

Sleep tracking

To collect sleep data, we used a Google Nest Hub placed on a bedside table (refer to Fig. 1a) to record Soli radar data. The distance between the Soli sensor and the user’s torso varied from 0.3 to 1.5 m. We also attached an ECG sensor to the user’s body to record ECG signals as a reference. For each session, a user was brought in to sleep overnight, ranging from 6 to 9 h. We had a proctor present to record the user’s sleep positions and events (such as apnea, hypopnea, and arousal).

A total of 232 sessions of data have been collected. Of these, 66 sessions do not have ECG data files, 41 sessions have a timestamp misalignment issue between Soli and ECG data, and 3 sessions have poor-quality ECG signals. After removing these sessions, 122 sessions with valid ECG data were identified. These sessions correspond to 122 unique users and a total of 975 h. We randomly partitioned the 122 sessions into two groups, 60 sessions (477 h) for ML training and 62 sessions (498 h) for performance evaluation. As shown in Fig. 2a, five different sleep positions were recorded from these 62 test sessions: supine (204 h, \(41\%\) of total data), left lateral (123 h, \(25\%\)), right lateral (128 h, \(26\%\)), prone (24 h, \(5\%\)), and sitting-up (19 h, \(4\%\)). The reference ECG HRs, derived by applying FFT to the ECG waveforms, range from 40 to 100 bpm, with \(95\%\) of the samples falling within the range of 45−85 bpm (Fig. 2b). The sleep-tracking HR performance below is based on the 62 test sessions.

During data processing, the overnight data is segmented into 60 s overlapping samples with a step size of 15 s. Each 60 s sample is processed through SP and ML blocks, as illustrated in Fig. 1c, to obtain an HR estimate. The post-processing block then smooths the HR estimates over time. Thus, the first HR estimate is obtained in 60 s and updated every 15 s. The performance metrics are evaluated based on the smoothed HR estimates.
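The segmentation described above can be sketched in a few lines. This is only an illustration under stated assumptions: the helper name `sliding_windows` is hypothetical, the 15 Hz sample rate is taken from the decimated burst rate given in the Preprocessing section, and the 5 min recording length is arbitrary.

```python
def sliding_windows(n_samples, sample_rate_hz, window_s, step_s):
    """Return (start, stop) sample-index pairs of overlapping windows."""
    win = int(round(window_s * sample_rate_hz))
    step = int(round(step_s * sample_rate_hz))
    return [(s, s + win) for s in range(0, n_samples - win + 1, step)]

# Assumed inputs: 5 min of data at the 15 Hz decimated rate.
windows = sliding_windows(n_samples=5 * 60 * 15, sample_rate_hz=15,
                          window_s=60, step_s=15)

# Time (in seconds) at which each window is complete and an HR estimate
# could first be produced: 60 s for the first window, then every 15 s.
ready_times_s = [stop / 15 for _, stop in windows]
```

With `window_s=16` and `step_s=4`, the same helper would reproduce the shorter windows used in the meditation study.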

To provide a benchmark for comparison, we evaluate the conventional BPF approach17 on the sleep dataset. This comparison with BPF, a widely accepted reference method, establishes a familiar baseline for evaluating the effectiveness and novelty of our approach. The ML block in Fig. 1c is replaced by several SP blocks, including a BPF block (with a passband of 40–200 bpm) to extract heart pulse waveforms, an FT block to obtain the related spectrum, and a block to select the best HR over various micro-motion waveforms based on the peak-to-average ratio (PAR). As shown in Table 1, the BPF approach yields MAE and MAPE values of up to 21.0 bpm and \(31.2\%\), respectively. The 95th percentile absolute error (AE) and absolute percentage error (APE) of the BPF approach are up to 67.3 bpm and \(98.5\%\), respectively. The recall rate is \(73\%\), indicating that the BPF approach cannot determine the HRs in \(27\%\) of the samples. Furthermore, the R-squared value, also known as the coefficient of determination and defined in Eq. (6), is negative because the mean squared error (MSE) exceeds the HR variance. These performance metrics indicate that the BPF approach performs poorly on the sleep data.

In contrast, our proposed method achieves good HR accuracy, as evidenced by its MAE and MAPE values of 1.69 bpm and \(2.67\%\), respectively. The 95th percentile AE and APE are 5.50 bpm and \(8.49\%\), indicating that the error stays within these bounds for \(95\%\) of the samples. The R-squared value is \(89.92\%\). Moreover, the method has a recall rate of \(88.53\%\), with the remaining \(11.47\%\) of samples (not sessions or users) undetermined. Figure 3a,b depicts the distribution of HR errors through the error histogram and the corresponding AE cumulative distribution function (CDF). Note that the recall rate in the sleep study is relatively low compared to its meditation counterpart, which is presented in the following section. This discrepancy can mainly be attributed to environmental factors. The sleep data collection occurred overnight in an uncontrolled environment, leading to increased body motion of participants due to the noisy surroundings and discomfort caused by ECG attachment. These factors negatively impacted both the recall rate and HR accuracy.
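To make the reported metrics concrete, the following sketch computes them for a toy list of HR estimates. Several details are assumptions rather than the authors' exact definitions: undetermined samples are represented by `None`, the 95th percentile uses the nearest-rank rule, and R-squared is taken as 1 − MSE/variance of the reference HR, which is consistent with the remark that R-squared becomes negative when the MSE exceeds the HR variance. The function name `hr_metrics` and the sample values are hypothetical.

```python
import math

def hr_metrics(estimates, references):
    """Accuracy metrics over determined samples; estimates may contain None."""
    pairs = [(e, r) for e, r in zip(estimates, references) if e is not None]
    n = len(pairs)
    abs_err = sorted(abs(e - r) for e, r in pairs)
    mean_ref = sum(r for _, r in pairs) / n
    mse = sum((e - r) ** 2 for e, r in pairs) / n
    var = sum((r - mean_ref) ** 2 for _, r in pairs) / n
    return {
        "recall": n / len(references),            # fraction of samples with an estimate
        "mae": sum(abs_err) / n,                  # bpm
        "mape": 100.0 * sum(abs(e - r) / r for e, r in pairs) / n,  # percent
        "ae_p95": abs_err[max(0, math.ceil(0.95 * n) - 1)],  # nearest-rank percentile
        "r2": 1.0 - mse / var,                    # assumed form of R-squared
    }

# Toy values, not taken from the study.
good = hr_metrics([60.0, 62.0, None, 71.0, 80.0], [61.0, 62.0, 65.0, 70.0, 78.0])
bad = hr_metrics([90.0, 40.0], [60.0, 62.0])      # MSE far above the variance
```

In the second call the squared errors dwarf the spread of the reference HRs, so `bad["r2"]` is negative, mirroring the behavior reported for the BPF baseline.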

The HR accuracies are further analyzed in Fig. 4a–c for various sleep positions, HR bands, and data sessions. In each figure, blue bars indicate MAEs, red bars indicate the 95th percentile AE, and green curves represent recall rates. The y-axes on the left and right sides show HR errors in bpm and percentage recall rates, respectively. The results in Fig. 4a demonstrate that the four lying sleep positions (supine, left lateral, right lateral, and prone) yield similar HR accuracies with an MAE of less than 1.7 bpm and a 95th percentile AE of less than 5.6 bpm. The sitting-up position performs slightly worse than the lying positions, with an MAE of 3.48 bpm and a 95th percentile AE of 9.81 bpm.

Figure 4. (a) The HR accuracy of the proposed approach is shown for various sleep positions. The proposed approach achieves an MAE of less than 1.7 bpm for all sleep positions except for the sitting-up pose, which is 3.48 bpm. (b) The HR accuracy of the proposed approach is shown for various actual HR bands. The proposed approach achieves an MAE of less than 2.4 bpm for all HR bands except for the 90–100 bpm band, where the MAE is 5.65 bpm. (c) The HR accuracy of the proposed approach is shown for various sessions. The proposed approach achieves an MAE of less than 3 bpm for 59 out of 62 sessions (\(95.2\%\)).

Similarly, in Fig. 4b, we observe good HR accuracy when the true HR is between 40 and 90 bpm, with an MAE of less than 2.4 bpm and a 95th percentile AE of less than 5.8 bpm. The HR accuracy degrades slightly when the true HR is between 90 bpm and 100 bpm, with an MAE of 5.65 bpm and a 95th percentile AE of 14.60 bpm. The lower HR accuracy for the sitting-up position and high HR bands can be traced back to the small data size available for these two categories (Fig. 2a,b). The insufficient data size causes the ML model to be inadequately trained for these categories, leading to worse performance.

The HR accuracy over different sessions (i.e., users) is shown in Fig. 4c, where sessions are sorted by MAE from the smallest to the largest. As per the figure, 59 sessions (\(95.2\%\)) have an MAE less than 3 bpm, 58 sessions (\(93.6\%\)) have a 95th percentile AE less than 10 bpm, and 60 sessions (\(96.8\%\)) have a recall rate greater than \(50\%\). Overall, 57 sessions (\(91.9\%\)) perform satisfactorily, with an MAE less than 3 bpm, a 95th percentile AE less than 10 bpm, and a recall rate greater than \(50\%\).

Figures 1d and 5a,b show three examples of overnight sleep tracking, with the user’s sleep position in the upper plot and the proposed Soli HR and ECG HR (ground truth) displayed in the lower plot. The Soli HR estimates align well with the ECG HR, accurately tracking slow HR fluctuations over time. Fig. 5a illustrates Soli’s ability to track oscillating HR variations during overnight sleep, while Fig. 5b demonstrates its capability to detect HR in a sitting-up position. We remark that the proposed approach cannot track rapid HR changes reflecting heart rate variability (HRV), which contributes to the HR errors discussed above. We also observe from Fig. 5a that Soli fails to detect HR in the first half hour of this example. Enhancing the recall rate of sleep tracking and investigating the feasibility of monitoring HRV using Soli technology could be of great interest.

Meditation tracking

The meditation data collection (DC) platform is a modification of the sleep-tracking platform, with the ECG sensor replaced by a fingertip PPG for convenience. To evaluate the performance of this approach, we conducted 24 test cases (outlined in Table 2) with users in the supine position. Categories A, B, and C represent the primary cases with users lying still at two different distances (0.6 m and 1.0 m) from the sensor to the chest, two blanket conditions (with and without blanket), and three breathing patterns (regular, deep, and rapid breathing). Users were asked to perform jumping jacks before DC for rapid breathing cases. Cases of Category D are used to test the approach’s robustness against various body motions. Cases of Category E are used to test the approach’s robustness against a second-person aggressor lying, standing, or walking around the DC environment. It is worth mentioning that the objective of this study is not to track the user’s HR while in motion. Instead, the HR monitoring is temporarily halted when substantial body movements are detected by the presence detection block depicted in Fig. 1c and resumed when the user is motionless again. As a result, the motion duration in Cases of Category D is not factored into the performance assessment. Additionally, this study does not aim to detect the HR of the second person in cases of Category E.

In this meditation study, each data session lasts 2 to 3 min, with each user performing 5 to 7 sessions from different test cases. We have collected 1131 min of data from 114 users, with the data sizes for each test case presented in Table 2. Figure 6 displays the distribution of ground-truth HR obtained from the PPG data, which shows slightly higher HRs (45 to 115 bpm) compared to its sleep counterpart (Fig. 2b). It is important to note that a separate dataset has been collected for ML training for this meditation study. Thus, all data in Table 2 were used for performance evaluation. The data collection for ML training followed the same protocol outlined in Table 2, albeit with a distinct set of subjects. We gathered approximately 876 min of data from a cohort of 60 participants.

Figure 9. Two meditation HR examples using our proposed approach. (a) shows an example in Case C3, where the user performed jumping jacks before data collection, and (b) shows an example in Case E2, where a second person lay still on the non-device side. In both cases, the Soli HR matches well with the PPG ground truth, indicating the accuracy of our proposed approach.

For the meditation application, we employ the same SP algorithm and ML model utilized in sleep tracking. However, we make specific adjustments to the sample length and step size, setting them to 16 and 4 s, respectively, to ensure compliance with the latency requirements of the meditation application. Consequently, in this meditation study, our proposed approach delivers the initial heart rate measurement within a timeframe of 16 s, followed by subsequent updates every 4 s.

Table 3 presents the HR accuracy achieved by our proposed approach on the meditation dataset, and Fig. 7a,b shows the related HR error histogram and CDF of HR AE, respectively. The proposed approach achieves good HR accuracy, with MAE and MAPE values of 1.05 bpm and \(1.56\%\), respectively, and the corresponding 95th percentiles of AE and APE being 3.24 bpm and \(4.69\%\). The recall rate is \(98.16\%\), with only \(1.84\%\) of samples remaining undetermined. The R-squared value is \(95.26\%\). All of these performance metrics are better than their sleep counterparts.

Figure 8 presents the HR accuracies for various test cases. The proposed approach achieves high accuracy across all cases, with the MAE of all cases except Case C4 being less than 1.5 bpm and the corresponding 95th percentile AE being less than 6.5 bpm. Case C4 is slightly worse than the others, with the MAE and the 95th percentile AE being 3.0 bpm and 11.1 bpm, respectively. Interestingly, Cases C4 and D3 perform differently even under similar conditions, possibly due to outliers. Overall, the figure shows the resilience of the proposed method, showcasing its ability to operate effectively up to 1.0 m with a blanket and successfully handle various breathing patterns, body motions, and even second-person aggressors (despite the limited data size of Category E).

Figures 9a,b illustrate two examples of meditation in test cases C3 and E2, respectively. In Fig. 9a, a decrease in HRs is observed from both the Soli and PPG sensors, which can be attributed to the jumping jack exercise performed before data collection. On the other hand, Fig. 9b depicts a scenario where a second person lies still on the non-device side. In both cases, the Soli HRs exhibit strong alignment with the PPG HRs, indicating a high level of agreement between the two measurements. The MAE is around 1.4 bpm for Fig. 9a and 0.8 bpm for Fig. 9b.

Discussion

Detecting HR without physical contact is a valuable yet challenging task, especially for sleep and meditation tracking. This study presents a novel solution that leverages a miniaturized Soli radar chip integrated into a portable device (Google Nest Hub). The proposed approach harnesses advanced SP and ML techniques to extract HR information from radar signals, enabling precise measurements during sleep and meditation sessions. The effectiveness of the proposed approach is validated using two datasets: a sleep dataset comprising data from 62 users and 498 h and a meditation dataset with data from 114 users and 1131 min. The results show that the proposed approach achieves an MAE of 1.69 bpm and a MAPE of \(2.67\%\) on the sleep dataset and an MAE of 1.05 bpm and a MAPE of \(1.56\%\) on the meditation dataset. The recall rates for the two datasets are \(88.53\%\) and \(98.16\%\), respectively. Furthermore, the proposed method is robust against various sleep positions, HR bands, body motions, and even the presence of a second-person aggressor. These results suggest that noncontact HR monitoring technology has promising potential for continuous and convenient monitoring of sleep and meditation.

Future work will focus on enhancing the sleep-tracking recall rate and improving Soli’s capability to track rapid HR changes for HRV detection, another critical biometric for meditation. Another interesting research area is RF-Seismocardiogram (SCG), which aims to extract more comprehensive heartbeat information beyond HR and HRV for monitoring various cardiovascular conditions of the user, especially during sleep24. With further developments, noncontact heart monitoring technology enabled by Soli and other radar technologies may become essential for continuously and conveniently monitoring sleep and meditation.

Methods

Hardware

The second-generation Soli radar chip, illustrated in Fig. 1b, is utilized in our investigation. The chip is integrated into the Google Nest Hub, as depicted in Fig. 1a. This compact chip measures \(6.5 \text{ mm } \times 5 \text{ mm } \times 0.9 \text{ mm }\) and operates at 60 GHz with one transmit antenna and three receive antennas. Its diminutive size allows for integration into various portable devices and smartphones. The receive antennas are configured in an L shape, with 2.5 mm spacing between them. The chip employs frequency-modulated continuous wave (FMCW) waveforms25, also known as chirps, with a transmit power of 5 mW. These chirps sweep frequencies from 58 GHz to 63.5 GHz, resulting in a bandwidth of \(B = 5.5\) GHz and a range resolution of approximately \(\frac{c}{2B}= 2.7\) cm, where c denotes the speed of light. The received signals are sampled at an ADC sampling rate of 2 MHz, with each chirp comprising 256 samples. Chirps are repeated at a rate of 3000 Hz, with 20 chirps organized into a burst that repeats at a burst rate of 30 Hz. The chip enters an idle mode between chirps and bursts, resulting in an active duty cycle of roughly \(\frac{256 \times 20}{2\times 10^6} \times 30 = 7.68\%\) within each 33 ms burst period, compliant with the FCC waiver26. The essential operational parameters of the Soli radar chip are presented in Table 4. For a more comprehensive understanding of the Soli radar chip and the FMCW principle, interested readers may refer to the related papers16,27,28,29.
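The derived quantities in this paragraph follow directly from the stated chirp parameters. The short calculation below reproduces them as a sanity check; the variable names are illustrative only, and the values are those quoted in the text.

```python
C = 299_792_458.0                      # speed of light, m/s

# Chirp parameters as stated in the text.
bandwidth_hz = 63.5e9 - 58e9           # 5.5 GHz sweep
adc_rate_hz = 2e6
samples_per_chirp = 256
chirps_per_burst = 20
burst_rate_hz = 30

range_resolution_m = C / (2 * bandwidth_hz)                # about 2.7 cm

chirp_active_s = samples_per_chirp / adc_rate_hz           # 128 microseconds
burst_active_s = chirp_active_s * chirps_per_burst         # 2.56 ms of sampling per burst
burst_period_s = 1 / burst_rate_hz                         # about 33 ms
duty_cycle = burst_active_s * burst_rate_hz                # about 7.68 %
```

Each burst therefore contains 2.56 ms of active sampling inside a burst period of about 33 ms, which is where the 7.68% figure comes from.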

Preprocessing

The ADC samples from the three receivers are initially directed to the preprocessing block, as shown in Fig. 1c. Within the preprocessing block, the chirps within each burst are first averaged. Then the bursts are decimated from 30 to 15 Hz by simply averaging two adjacent bursts, which enhances the SNR. Consequently, the data is decimated to one chirp every 0.067 s. FFT is then applied to the decimated data along the fast-time, i.e., the 256 samples of each chirp, generating complex-valued range profiles. An example of decimated data from the three receivers of 60 s duration is illustrated in Fig. 10a, with the x-axis representing time and the y-axis representing the ADC sample index within a chirp.
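A minimal sketch of these three preprocessing steps for a single receiver is given below. It is not the authors' implementation: the function names are hypothetical, a naive DFT stands in for the FFT to avoid external dependencies, no window function is applied because none is mentioned in the text, and the toy input uses 16 samples per chirp instead of 256 to keep the example fast.

```python
import cmath
import math

def average_chirps(burst):
    """Average the chirps of one burst sample by sample."""
    n = len(burst)
    return [sum(chirp[k] for chirp in burst) / n for k in range(len(burst[0]))]

def decimate_by_two(frames):
    """Average each pair of adjacent frames (e.g. 30 Hz -> 15 Hz)."""
    return [[(a + b) / 2 for a, b in zip(frames[i], frames[i + 1])]
            for i in range(0, len(frames) - 1, 2)]

def dft(x):
    """Naive DFT along fast time; a real pipeline would use an FFT."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def range_profiles(bursts):
    """bursts[b][c][s]: burst, chirp, ADC sample -> complex range profiles."""
    frames = [average_chirps(b) for b in bursts]
    return [dft(f) for f in decimate_by_two(frames)]

# Toy input: 4 bursts x 3 chirps x 16 samples, one beat tone at range bin 3.
bursts = [[[math.cos(2 * math.pi * 3 * t / 16) for t in range(16)]
           for _ in range(3)] for _ in range(4)]
profiles = range_profiles(bursts)
# The toy input is real-valued, so only the first half of the bins is searched.
peak_bin = max(range(8), key=lambda k: abs(profiles[0][k]))
```

Four input bursts yield two range profiles after decimation, and the synthetic beat tone appears at range bin 3.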

Presence detection

The presence detection block in this study serves three functions: firstly, to determine whether a user is present; secondly, to determine whether the user is still; and thirdly, to determine the distance of the user from the sensor. When the presence detection block detects a still user, it activates the subsequent HR monitoring blocks to measure the user’s HR. Otherwise, it pauses the HR detection process until a still user is detected again. By including these functionalities in the presence detection block, the system avoids producing HR estimates from data corrupted by large body motion.

In the presence detection stage, we first apply a clutter filter to the complex-valued range profiles to eliminate strong stationary clutter originating from the background and the coupling between transmit and receive antennas. The clutter filter first calculates the average range profile over time (or chirps) and subtracts it from the original ones. It assumes that these clutter signals remain constant over time. The power of the so-processed range profiles is then computed and combined over receivers to form a power range profile image. As shown in Fig. 10b, the bright line in the power range profile image signifies user presence. A simple constant false alarm rate (CFAR)30 detector is used to detect the user and determine the associated distance. Upon user detection, the peak range bin signal is extracted from the complex-valued range profiles (after the clutter filter). An FFT is then executed to obtain a Doppler spectrum. Given that Doppler indicates the user’s motion speed, the ratio of low Doppler energy to high Doppler energy is calculated to determine the user’s stillness.
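The clutter filter and detection steps can be illustrated with the following sketch. The text does not specify which CFAR variant or parameters are used, so a basic cell-averaging CFAR with hypothetical `guard`, `train`, and `scale` values is assumed here, applied to the time-averaged power per range bin. All function names and the synthetic scene (static clutter at bin 2, a slowly moving reflector at bin 10) are illustrative.

```python
import cmath
import math
import random

def remove_clutter(profiles):
    """Subtract the time-averaged range profile (static clutter) from each profile."""
    n_t, n_bins = len(profiles), len(profiles[0])
    mean = [sum(p[k] for p in profiles) / n_t for k in range(n_bins)]
    return [[p[k] - mean[k] for k in range(n_bins)] for p in profiles]

def power_image(per_rx_profiles):
    """Clutter-filter each receiver, then sum |.|^2 over receivers: image[t][k]."""
    filtered = [remove_clutter(p) for p in per_rx_profiles]
    n_t, n_bins = len(filtered[0]), len(filtered[0][0])
    return [[sum(abs(rx[t][k]) ** 2 for rx in filtered) for k in range(n_bins)]
            for t in range(n_t)]

def ca_cfar_peak(power, guard=1, train=4, scale=5.0):
    """Strongest bin exceeding scale x the mean of its training cells, else None."""
    best, n = None, len(power)
    for k in range(n):
        cells = [power[j] for j in range(k - guard - train, k + guard + train + 1)
                 if 0 <= j < n and abs(j - k) > guard]
        if power[k] > scale * sum(cells) / len(cells):
            if best is None or power[k] > power[best]:
                best = k
    return best

rng = random.Random(0)

def synth_rx(phase_offset, n_t=20, n_bins=32):
    """Synthetic range profiles: noise + static clutter + a moving reflector."""
    frames = []
    for t in range(n_t):
        frame = [complex(rng.gauss(0, 0.01), rng.gauss(0, 0.01)) for _ in range(n_bins)]
        frame[2] += 50.0  # strong static clutter, removed by the filter
        frame[10] += cmath.exp(1j * (phase_offset + 0.5 * math.sin(2 * math.pi * t / n_t)))
        frames.append(frame)
    return frames

image = power_image([synth_rx(0.0), synth_rx(0.7)])
mean_power = [sum(row[k] for row in image) / len(image) for k in range(32)]
user_bin = ca_cfar_peak(mean_power)
```

Although the clutter at bin 2 is fifty times stronger than the moving reflector, it is constant over time and vanishes after mean subtraction, so the detector selects bin 10.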

Micro-motion extraction

Once a still user is detected, 16 range bin signals are extracted from the complex-valued range profiles around the detected user distance. The micro-motion extraction algorithm is applied to each range bin independently, yielding one micro-motion waveform per range bin.

The micro-motion extraction process involves two main steps: beamforming and phase extraction. In the first step, signals from the three receivers are combined using a maximum ratio combining (MRC) method to filter out the desired reflection signal. To perform the MRC beamforming, we first stack the signals from the three receivers into a \(3 \times 1\) vector, denoted by \(\textbf{x}(l) \in {\mathscr {C}}^{3\times 1}\), where l denotes the chirp index. A \(3 \times 3\) covariance matrix \(\textbf{Q}\) is then computed using the formula:

\[\textbf{Q} = \frac{1}{L} \sum _{l=1}^L \left( \textbf{x}(l) - {\hat{\textbf{x}}}\right) \left( \textbf{x}(l) - {\hat{\textbf{x}}}\right) ^H \tag{1}\]

In (1), \({\hat{\textbf{x}}} = \frac{1}{L} \sum _{l=1}^L \textbf{x}(l)\) represents the stationary clutter at the current range bin, \((\cdot )^H\) denotes the Hermitian matrix transpose, and L is the number of chirps (in the example in Fig. 10, this is 900). The dominant eigenvector of \(\textbf{Q}\), denoted by \(\textbf{w}\), is computed using a power iteration method31. Finally, the three channels are combined using \(\textbf{w}\) to produce a complex-valued scalar sequence \(y(l) = \textbf{w}^H \textbf{x}(l)\).
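The beamforming step can be sketched as follows. The code assumes the covariance is the sample average of the mean-removed outer products, which matches the description of \({\hat{\textbf{x}}}\) as the stationary clutter; the fixed iteration count, the all-ones starting vector, and the synthetic steering vector `a` are illustrative choices, not details from the paper.

```python
import cmath
import math
import random

def covariance(xs):
    """Sample covariance Q of the receiver vectors x(l) after removing their mean."""
    L, n = len(xs), len(xs[0])
    mean = [sum(x[i] for x in xs) / L for i in range(n)]
    Q = [[0j] * n for _ in range(n)]
    for x in xs:
        d = [x[i] - mean[i] for i in range(n)]
        for i in range(n):
            for j in range(n):
                Q[i][j] += d[i] * d[j].conjugate() / L
    return Q

def dominant_eigenvector(Q, iters=50):
    """Power iteration: repeatedly apply Q and renormalize."""
    n = len(Q)
    w = [1 + 0j] * n  # assumed start; must not be orthogonal to the answer
    for _ in range(iters):
        v = [sum(Q[i][j] * w[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(abs(c) ** 2 for c in v))
        w = [c / norm for c in v]
    return w

def combine(xs, w):
    """y(l) = w^H x(l)."""
    return [sum(wi.conjugate() * xi for wi, xi in zip(w, x)) for x in xs]

# Synthetic data: one phase-modulated reflection seen through steering vector a,
# plus static clutter and weak noise on each of the three receivers.
rng = random.Random(1)
a = [cmath.exp(1j * 0.7 * i) for i in range(3)]
clutter = [2 + 1j, -1 + 0.5j, 0.3 - 2j]
xs = []
for l in range(900):
    s = cmath.exp(1j * 0.3 * math.sin(2 * math.pi * l / 50))
    xs.append([a[i] * s + clutter[i]
               + complex(rng.gauss(0, 0.01), rng.gauss(0, 0.01)) for i in range(3)])

w = dominant_eigenvector(covariance(xs))
y = combine(xs, w)
# Cosine of the angle between w and the true steering vector (1.0 = aligned).
alignment = abs(sum(wi.conjugate() * ai for wi, ai in zip(w, a))) / math.sqrt(3)
```

For a 3 × 3 matrix, power iteration needs only a handful of matrix-vector products, which suits an embedded device; in this toy case the recovered weights align almost perfectly with the steering vector.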

The objective of the second step is to extract phase information from the combined signal y(l). Note that the conventional phase extraction technique is ineffective in this problem due to the small heartbeat and respiration micro-motion magnitude compared to the wavelength. Instead, a circle-fitting technique25,32 is used. This technique assumes that the desired reflection signal has a constant modulus over time. Thus, it can be formulated as the following optimization problem:

\[\min _{\eta ,\, r} \sum _{l=1}^L \left( \left| y(l) - \eta \right| ^2 - r^2 \right) ^2 \tag{2}\]

where \(\eta \in {\mathscr {C}}\) and \(r \in {\mathscr {R}}\) represent the center and radius of the fitted circle, respectively. The optimization problem of (2) can be solved using a closed-form solution25.
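One well-known closed-form circle fit is the algebraic least-squares (Kåsa) fit, sketched below as an illustration. Whether this matches the exact closed-form solution of the cited reference is an assumption. The idea is that \(|y - \eta |^2 = r^2\) can be rewritten as a linear equation in the unknowns \(2\,\text{Re}(\eta )\), \(2\,\text{Im}(\eta )\), and \(r^2 - |\eta |^2\), which is then solved by ordinary least squares. The helper names `solve3` and `fit_circle` are hypothetical.

```python
import cmath
import math

def solve3(M, v):
    """Solve a 3x3 real linear system by Gaussian elimination with pivoting."""
    A = [row[:] + [v[i]] for i, row in enumerate(M)]
    for c in range(3):
        p = max(range(c, 3), key=lambda r: abs(A[r][c]))
        A[c], A[p] = A[p], A[c]
        for r in range(c + 1, 3):
            f = A[r][c] / A[c][c]
            for k in range(c, 4):
                A[r][k] -= f * A[c][k]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        x[r] = (A[r][3] - sum(A[r][k] * x[k] for k in range(r + 1, 3))) / A[r][r]
    return x

def fit_circle(ys):
    """Algebraic least-squares circle fit to complex samples; returns (eta, r)."""
    # |y|^2 = 2 Re(eta) Re(y) + 2 Im(eta) Im(y) + (r^2 - |eta|^2)
    rows = [(y.real, y.imag, 1.0) for y in ys]
    b = [abs(y) ** 2 for y in ys]
    M = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    v = [sum(r[i] * bi for r, bi in zip(rows, b)) for i in range(3)]
    p = solve3(M, v)                       # normal equations
    eta = complex(p[0] / 2, p[1] / 2)
    radius = math.sqrt(p[2] + abs(eta) ** 2)
    return eta, radius

# Samples on a short arc, as produced by sub-wavelength micro-motion.
true_eta, true_r = 3 - 2j, 0.5
ys = [true_eta + true_r * cmath.exp(1j * (0.2 + 1.2 * i / 49)) for i in range(50)]
eta, radius = fit_circle(ys)
```

Even though the samples cover only part of the circle, the fit recovers the center and radius of this noise-free example; with noisy data and very short arcs, algebraic fits of this kind are known to become biased.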

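Once we estimate the circle center \(\eta\), we can extract the micro-motion waveform, denoted here by d(l), using the following equation:

\[d(l) = \frac{c}{4 \pi f_0} \, \text{ unwrap }\left( \angle \left( y(l) - \eta \right) \right) \tag{3}\]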

with \(f_0\) denoting the center frequency. Here, \(\text{ unwrap }(\cdot )\) denotes the unwrap function, which corrects the radian phase angles by adding multiples of \(\pm 2 \pi\) such that the phase change from the previous sample is less than \(\pi\).
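The phase-to-displacement conversion can be illustrated as follows. The sketch assumes the standard two-way radar relation in which a displacement d changes the phase by \(4 \pi f_0 d / c\), takes \(f_0\) as the midpoint of the stated 58 to 63.5 GHz sweep, and assumes that the phase increases with distance; the function names and the synthetic 2 mm motion are illustrative.

```python
import cmath
import math

C = 299_792_458.0   # speed of light, m/s
F0_HZ = 60.75e9     # assumed: midpoint of the 58-63.5 GHz sweep

def unwrap(phases):
    """Add multiples of 2*pi so successive samples differ by at most pi."""
    out = [phases[0]]
    for p in phases[1:]:
        d = p - out[-1]
        d -= 2 * math.pi * round(d / (2 * math.pi))
        out.append(out[-1] + d)
    return out

def micro_motion(ys, eta, f0_hz=F0_HZ):
    """Displacement waveform (meters) from the phase of y(l) about the center eta."""
    phases = unwrap([cmath.phase(y - eta) for y in ys])
    scale = C / (4 * math.pi * f0_hz)
    return [scale * p for p in phases]

# Synthetic check: a 2 mm sinusoidal motion, large enough to wrap the phase.
eta = 3 - 2j
true_d = [2e-3 * math.sin(2 * math.pi * l / 100) for l in range(300)]
ys = [eta + 0.5 * cmath.exp(1j * (0.4 + 4 * math.pi * F0_HZ * d / C)) for d in true_d]
est_d = micro_motion(ys, eta)

# The absolute offset is arbitrary, so compare motion relative to the first sample.
max_err = max(abs((e - est_d[0]) - (t - true_d[0])) for e, t in zip(est_d, true_d))
```

At this frequency the wavelength is about 4.9 mm, so a 2 mm motion sweeps more than \(\pi\) radians and the unwrap step is needed to recover it; heartbeat motion is far smaller, which is why the accuracy of the estimated center \(\eta\) matters so much.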

Figure 10c displays the 16 micro-motion waveforms extracted from the sleep data example. While some waveforms exhibit respiration motion, the heart pulse signal is scarcely discernible. We employ an ML technique below to extract the pulse waveform and its associated pseudo-spectrum from the micro-motion waveforms.

ML-based pulse waveform and pseudo-spectrum extraction

A neural network (NN) has been designed to extract the heart pulse waveform and its corresponding pseudo-spectrum from the micro-motion waveforms. The NN comprises two blocks, depicted in Fig. 11. The first block focuses on extracting the pulse waveform from the 16 micro-motion waveforms, as shown in Fig. 10c. The so-obtained pulse waveform is illustrated in the upper plot of Fig. 10d. The second block takes this pulse waveform as input and generates a pseudo-spectrum, visualized in the lower plot of Fig. 10d.

At the bottom of Fig. 11, we present a modified residual neural network (ResNet) layer33, which is used in both NN blocks. The modified ResNet layer comprises a 1D convolution (Conv) layer followed by a separable convolution (Separable-Conv)34 layer. We use Separable-Conv for the second stage to reduce the number of parameters. As in the standard ResNet, a shortcut connection, two batch normalizations, and two rectified linear units (ReLU) are included.

As shown in Fig. 11, in the first block, each of the 16 micro-motion waveforms is processed through three ResNet layers before being passed to a summation layer. The output of the summation layer is then processed through a ResNet layer and a standard 1D Conv layer, ultimately producing the pulse waveform output. The number of filters and the corresponding kernel size for each ResNet layer are shown in the figure. The ResNet weights are shared across the 16 input branches.

The second NN block begins by processing the pulse waveform through a ResNet layer. The resulting output is then passed through a full-size FFT layer, two half-size FFT layers, and four quarter-size FFT layers in parallel. In the half-size FFT layers, the input signal is divided into two segments, and an FFT is applied to each segment independently. The quarter-size FFT layers follow the same process with four segments. This design makes HR detection more robust to random body motion, which is usually non-stationary and therefore has different spectra in different time intervals. By examining the spectra at the different FFT outputs, the NN can better reject the interference of random body motion. Zero-padding is applied to all seven FFTs so that they share the same output length (1024) and the same frequency granularity (0.88 bpm). The FFT outputs are then cropped to the frequency band of interest (35–200 bpm, \(N=189\)) and concatenated. The concatenated data are processed by two ResNet layers and one SoftMax layer, generating a pseudo-spectrum of the pulse waveform. The HR and its associated confidence level are determined by detecting the peak of the pseudo-spectrum and comparing its amplitude with that of the second peak.
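The multi-resolution FFT front end and the peak-based readout can be sketched without the learned layers. The example below is illustrative only: the 15 Hz sampling rate is an assumption inferred from the stated granularity (15 × 60 / 1024 ≈ 0.88 bpm), the exact number of retained bins depends on how the band edges are handled, and the confidence score (one minus the ratio of the second peak to the first) is a hypothetical choice, since the text only says the two amplitudes are compared. The names `multires_spectra` and `peak_and_confidence` are not from the study.

```python
import numpy as np

def multires_spectra(pulse, fs, nfft=1024, band_bpm=(35.0, 200.0)):
    """Magnitude spectra of the full signal, its 2 halves, and its 4 quarters.

    Every segment is zero-padded to nfft, so all 7 spectra share one frequency
    grid. Segments longer than nfft would be truncated by rfft.
    """
    freqs_bpm = np.fft.rfftfreq(nfft, d=1.0 / fs) * 60.0
    keep = (freqs_bpm >= band_bpm[0]) & (freqs_bpm <= band_bpm[1])
    spectra = []
    for parts in (1, 2, 4):
        for segment in np.array_split(pulse, parts):
            magnitude = np.abs(np.fft.rfft(segment, n=nfft))
            spectra.append(magnitude[keep])   # crop to the band of interest
    return freqs_bpm[keep], np.stack(spectra)

def peak_and_confidence(freqs_bpm, pseudo_spectrum):
    """HR at the largest interior local maximum, plus a hypothetical confidence."""
    p = np.asarray(pseudo_spectrum, dtype=float)
    peaks = np.flatnonzero((p[1:-1] > p[:-2]) & (p[1:-1] >= p[2:])) + 1
    if peaks.size == 0:
        return None, 0.0
    ranked = peaks[np.argsort(p[peaks])[::-1]]   # peaks sorted by amplitude
    second = p[ranked[1]] if ranked.size > 1 else 0.0
    return freqs_bpm[ranked[0]], 1.0 - second / p[ranked[0]]
```

A burst of body motion that corrupts one quarter of the window affects the full-size spectrum, one half-size spectrum, and one quarter-size spectrum, while the remaining four stay clean, which gives the subsequent layers something consistent to lock onto.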

The designed NN is very lightweight, with only 10,248 parameters, of which 9,815 are trainable. As a result, the entire pipeline, including the NN model, data acquisition/transmission, signal processing, and visualization, can run in real time on a laptop (e.g., MacBook Pro with 2.6 GHz 6-Core Intel Core i7) or a portable device (e.g., Google Nest Hub with Amlogic S905D3 quad-core Cortex-A55 processor), updating the HR estimate every 4 s. The NN was implemented using TensorFlow (version 2.13.0)35,36 and trained in the Google Colaboratory environment37. We used 477 h of sleep data and 478 min of meditation data for training. The training data were collected from different users using the same DC protocols as the test data. Separate NN models were trained for the sleep and meditation tracking applications because of the differences in input data lengths.

During NN training, we used both the ECG and PPG waveforms, along with their respective pseudo-spectra, as ground-truth labels. To facilitate training, we pre-processed and normalized both the ECG/PPG waveforms and their pseudo-spectra. We first detected individual peaks in the raw ECG/PPG waveforms and then modulated them with Gaussian pulses. This process effectively replaced the heartbeat pulses with standard Gaussian pulses, preserving the timing information of the heartbeats while removing other unrelated features. A similar approach was applied to process the spectrum labels. An MSE loss function was used for the waveform output, and a cross-entropy loss function was used for the pseudo-spectrum output. By combining these two loss functions, we optimized the overall performance of the NN. The Adam optimizer38 was used for training.
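The label construction and the two-term loss can be sketched as follows. This is an illustration under assumptions that the text does not specify: the pulse widths `sigma_s` and `sigma_bpm`, the normalization of the spectrum label to unit sum, and the weighted sum with weight `alpha` are all hypothetical, and heartbeat peak times are taken as given rather than detected from raw ECG/PPG.

```python
import numpy as np

def gaussian_pulse_train(peak_times_s, fs, num_samples, sigma_s=0.05):
    """Waveform label: one unit-height Gaussian pulse per detected heartbeat."""
    t = np.arange(num_samples) / fs
    label = np.zeros(num_samples)
    for tp in peak_times_s:
        label += np.exp(-0.5 * ((t - tp) / sigma_s) ** 2)
    return label

def gaussian_spectrum_label(hr_bpm, freqs_bpm, sigma_bpm=2.0):
    """Spectrum label: a Gaussian bump at the reference HR, normalized to sum to 1."""
    g = np.exp(-0.5 * ((freqs_bpm - hr_bpm) / sigma_bpm) ** 2)
    return g / g.sum()

def combined_loss(wave_pred, wave_label, spec_pred, spec_label, alpha=1.0, eps=1e-12):
    """MSE on the waveform plus alpha times cross-entropy on the pseudo-spectrum."""
    mse = np.mean((wave_pred - wave_label) ** 2)
    cross_entropy = -np.sum(spec_label * np.log(spec_pred + eps))
    return mse + alpha * cross_entropy
```

Normalizing the spectrum label to unit sum makes it directly comparable with a SoftMax output, which is what allows a cross-entropy term to be used for that branch.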

Post-processing

The HR sequence obtained from the signal processing (SP) and machine learning (ML) blocks is further refined in the post-processing block. First, HRs with low confidence levels are discarded, and simple linear interpolation is used to recover these rejected HR samples from neighboring samples. However, if the number of consecutive rejected samples exceeds the length of the subsequent median filter, the HRs during that period are considered undetermined.

A median filter is applied to ensure smoothness in the HR sequence, followed by a Gaussian smoothing filter. For sleep tracking, the median filter length is set to 10 min, while the Gaussian smoothing filter has a length of 1 min. The median and Gaussian filter lengths for meditation tracking are set to 20 s. These filter lengths are chosen to optimize the balance between preserving accurate HR information and reducing noise in the respective tracking scenarios.
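The post-processing chain can be sketched as below. The sketch makes several assumptions: filter lengths are given in samples (with one HR estimate every 4 s, 10 min would correspond to 150 samples and 20 s to 5 samples), undetermined samples are returned as NaN, signal edges are handled by edge padding, and the Gaussian kernel standard deviation is one sixth of its length. The confidence threshold and the name `postprocess_hr` are hypothetical.

```python
import numpy as np

def _windows(x, k):
    """All length-k sliding windows of x, edge-padded so there are len(x) rows."""
    left = k // 2
    padded = np.pad(x, (left, k - 1 - left), mode="edge")
    return np.lib.stride_tricks.sliding_window_view(padded, k)

def postprocess_hr(hr, conf, conf_thresh, median_len, gauss_len):
    hr = np.asarray(hr, dtype=float)
    rejected = np.asarray(conf, dtype=float) < conf_thresh
    n = hr.size
    if rejected.all():
        return np.full(n, np.nan)

    # Runs of rejected samples longer than the median filter stay undetermined.
    undetermined = np.zeros(n, dtype=bool)
    run_start = None
    for i in range(n + 1):
        if i < n and rejected[i]:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if i - run_start > median_len:
                undetermined[run_start:i] = True
            run_start = None

    # Linear interpolation across rejected samples.
    idx = np.arange(n)
    filled = np.interp(idx, idx[~rejected], hr[~rejected])

    # Median filter followed by Gaussian smoothing.
    med = np.median(_windows(filled, median_len), axis=1)
    t = np.arange(gauss_len) - (gauss_len - 1) / 2.0
    kernel = np.exp(-0.5 * (t / (gauss_len / 6.0)) ** 2)
    kernel /= kernel.sum()
    smooth = _windows(med, gauss_len) @ kernel

    smooth[undetermined] = np.nan
    return smooth
```

Tying the gap limit to the median filter length is a natural choice: a gap shorter than the window is outvoted by real samples inside the window, whereas a longer gap would leave the median determined entirely by interpolated values.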

Performance metrics

The accuracy of Soli HR is evaluated using five performance metrics: recall rate, MAE, MAPE, 95th percentile AE, and 95th percentile APE. The recall rate measures the percentage of data samples with determined HR values. It is calculated by dividing the number of samples with determined HRs by the total number of samples. AE and APE are computed for each determined HR sample to quantify the differences between the Soli HR and the ground-truth HR. The MAE is then determined by averaging the AEs over the investigated sample set, providing a measure of the average absolute error, i.e.,

\[\text{MAE} = \frac{1}{N} \sum _{n=1}^N \left| \text{hr}_n - \widehat{\text{hr}}_n \right| ,\]

with \(\text{hr}_n\) and \(\widehat{\text{hr}}_n\) representing the reference and estimated HRs of the \(n\)th sample, respectively, and \(N\) the number of samples in the set. Similarly, the MAPE is calculated by averaging the APEs, indicating the average percentage error, i.e.,

\[\text{MAPE} = \frac{1}{N} \sum _{n=1}^N \frac{\left| \text{hr}_n - \widehat{\text{hr}}_n \right| }{\text{hr}_n} \times 100\% .\]

Additionally, the 95th percentile AE and 95th percentile APE are calculated from the distribution of AEs and APEs, respectively. These metrics represent the value below which \(95\%\) of the absolute or percentage errors fall, providing insight into the performance at higher error levels.

In Tables 1 and 3, we have also included the R-squared value, denoted as \(\text{R}^2\), as an additional metric to offer insights into the model’s performance. This statistical measure quantifies the proportion of HR variation that the model can predict compared to the total variation in HR. It is calculated as:

\[\text{R}^2 = 1 - \frac{\sum _{n=1}^N \left( \text{hr}_n - \widehat{\text{hr}}_n \right) ^2}{\sum _{n=1}^N \left( \text{hr}_n - \bar{\text{hr}} \right) ^2},\]

with \(\bar{\text{hr}} = \frac{1}{N} \sum _{n=1}^N \text{hr}_n\) representing the averaged HR over all data samples.
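The metrics of this section can be computed as in the following sketch. It assumes that undetermined HR estimates are encoded as NaN and that all error metrics, including \(\text{R}^2\) and its mean reference HR, are evaluated over the determined samples only; the function name `hr_metrics` is hypothetical.

```python
import numpy as np

def hr_metrics(hr_ref, hr_est):
    """Recall, MAE, MAPE, 95th percentile AE/APE, and R^2 for HR estimates."""
    hr_ref = np.asarray(hr_ref, dtype=float)
    hr_est = np.asarray(hr_est, dtype=float)
    determined = ~np.isnan(hr_est)          # assumed encoding of undetermined HRs
    ref, est = hr_ref[determined], hr_est[determined]
    ae = np.abs(ref - est)                  # absolute error in bpm
    ape = 100.0 * ae / ref                  # absolute percentage error in %
    ss_res = np.sum((ref - est) ** 2)
    ss_tot = np.sum((ref - ref.mean()) ** 2)
    return {
        "recall": determined.mean(),
        "mae": ae.mean(),
        "mape": ape.mean(),
        "ae_p95": np.percentile(ae, 95),
        "ape_p95": np.percentile(ape, 95),
        "r2": 1.0 - ss_res / ss_tot,
    }
```

For example, with reference HRs of 60, 70, 80, 90, and 100 bpm and estimates of 61, 69, undetermined, 92, and 100 bpm, the recall rate is 0.8, the MAE is 1 bpm, and \(\text{R}^2\) is 0.994.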

Ethical approval

All experiments and methods were performed in accordance with the relevant guidelines and regulations. Informed consent was obtained from all subjects and/or their legal guardian(s). The human heart rate collection protocol was approved by the Institutional Review Board of Google (IRB no. Pro00042166).

Data availability

The datasets produced and/or analyzed during the study are currently not publicly available. Requests for access to the data may be directed to the corresponding author.

References

CDC. Sleep and chronic disease (2021).

Tang, Y. Y., Hölzel, B. K. & Posner, M. I. The neuroscience of mindfulness meditation. Nat. Rev. Neurosci. 16, 213–225. https://doi.org/10.1038/nrn3916 (2015).

Kratz, L. J., Mekic, M., van der Veen, J. & Kerkhof, G. A. Wearables for monitoring sleep and sleep-related disorders: A comprehensive review. Sleep Med. Rev. 55, 101382. https://doi.org/10.1016/j.smrv.2020.101382 (2020).

Spillman Jr, W. B. et al. A ‘smart’ bed for non-intrusive monitoring of patient physiological factors. Meas. Sci. Technol. 15, 1614. https://doi.org/10.1088/0957-0233/15/8/032 (2004).

Laurino, M. et al. A smart bed for non-obtrusive sleep analysis in real world context. IEEE Access 8, 45664–45673. https://doi.org/10.1109/ACCESS.2020.2976194 (2020).

Olsen, M. K., Choi, M. H., Kwon, H. J. & Byun, J. Y. Validity and reliability of wearable sleep-tracking devices. J. Clin. Sleep Med. 16, 2123–2128. https://doi.org/10.5664/jcsm.7803 (2020).

Poh, M.-Z., McDuff, D. J. & Picard, R. W. Non-contact, automated cardiac pulse measurements using video imaging and blind source separation. Opt. Express 18, 10762–10774. https://doi.org/10.1364/OE.18.010762 (2010).

de Haan, G. & van Leest, A. Improved motion robustness of remote-PPG by using the blood volume pulse signature. Physiol. Meas. 35, 1913. https://doi.org/10.1088/0967-3334/35/9/1913 (2014).

Boccignone, G. et al. An open framework for remote-PPG methods and their assessment. IEEE Access 8, 216083–216103. https://doi.org/10.1109/ACCESS.2020.3040936 (2020).

Bae, S. et al. Prospective validation of smartphone-based heart rate and respiratory rate measurement algorithms. Commun. Med. 2, 1–10. https://doi.org/10.1038/s43856-022-00102-x (2022).

Google. Sleep sensing on Google Nest Hub (2021).

Lin, J. C. & Salinger, J. Microwave measurement of respiration, in 1975 IEEE MTT-S International Microwave Symposium Digest, 285–287 (IEEE, 1975).

Li, C., Cummings, J., Lam, J., Graves, E. S. & Wu, W. Radar remote monitoring of vital signs. IEEE Microwave Mag. 10, 47–56 (2009).

Li, C., Lubecke, V. M., Boric-Lubecke, O. & Lin, J. A review on recent advances in Doppler Radar sensors for noncontact healthcare monitoring. IEEE Trans. Microw. Theory Tech. 61, 2046–2060. https://doi.org/10.1109/TMTT.2013.2256924 (2013).

Li, C. et al. A review on recent progress of portable short-range noncontact microwave radar systems. IEEE Trans. Microw. Theory Tech. 65, 1692–1706. https://doi.org/10.1109/TMTT.2017.2650911 (2017).

Mercuri, M. et al. Vital-sign monitoring and spatial tracking of multiple people using a contactless radar-based sensor. Nat. Electron. 2, 252–262. https://doi.org/10.1038/s41928-019-0258-6 (2019).

Park, J.-Y. et al. Preclinical evaluation of noncontact vital signs monitoring using real-time IR-UWB radar and factors affecting its accuracy. Sci. Rep. 11, 23602 (2021).

Motin, M. A., Karmakar, C. K. & Palaniswami, M. An EEMD-PCA approach to extract heart rate, respiratory rate and respiratory activity from PPG signal, in 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 3817–3820, https://doi.org/10.1109/EMBC.2016.7591560 (2016).

Tariq, A. & Ghafouri-Shiraz, H. Vital signs detection using Doppler radar and continuous wavelet transform, in Proceedings of the 5th European Conference on Antennas and Propagation (EUCAP), 285–288 (2011).

Li, C., Ling, J., Li, J. & Lin, J. Accurate Doppler radar noncontact vital sign detection using the RELAX algorithm. IEEE Trans. Instrum. Meas. 59, 687–695. https://doi.org/10.1109/TIM.2009.2025986 (2010).

Arsalan, M., Santra, A. & Will, C. Improved contactless heartbeat estimation in FMCW radar via Kalman filter tracking. IEEE Sens. Lett. 4, 1–4. https://doi.org/10.1109/LSENS.2020.2983706 (2020).

Muramatsu, S., Yamamoto, M., Takamatsu, S. & Itoh, T. Non-contact heart sound measurement using independent component analysis. IEEE Access 10, 98625–98632. https://doi.org/10.1109/ACCESS.2022.3206467 (2022).

Iyer, S. et al. mm-Wave radar-based vital signs monitoring and arrhythmia detection using machine learning. Sensors 22, 3106 (2022).

Ha, U., Assana, S. & Adib, F. Contactless seismocardiography via deep learning radars, in Proceedings of the 26th Annual International Conference on Mobile Computing and Networking, MobiCom ’20, https://doi.org/10.1145/3372224.3419982 (Association for Computing Machinery, New York, NY, USA, 2020).

Alizadeh, M., Shaker, G., Almeida, J. C. M. D., Morita, P. P. & Safavi-Naeini, S. Remote monitoring of human vital signs using mm-wave FMCW radar. IEEE Access 7, 54958–54968. https://doi.org/10.1109/ACCESS.2019.2912956 (2019).

Federal Communications Commission. Order granting petition for waiver of section 15.255(c)(3) of the commission’s rules applicable to radars used for short-range interactive motion sensing in the 57–64 GHz frequency band (2018).

Trotta, S. et al. SOLI: A tiny device for a new human machine interface, in 2021 IEEE International Solid-State Circuits Conference (ISSCC), Vol. 64, 42–44, https://doi.org/10.1109/ISSCC42613.2021.9365835 (2021).

Hayashi, E. et al. RadarNet: Efficient gesture recognition technique utilizing a miniature radar sensor, in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, CHI ’21, https://doi.org/10.1145/3411764.3445367 (Association for Computing Machinery, 2021).

Lien, J. et al. Soli: Ubiquitous gesture sensing with millimeter wave radar. ACM Trans. Graph. https://doi.org/10.1145/2897824.2925953 (2016).

Richards, M. A. Fundamentals of Radar Signal Processing (McGraw-Hill, 2005).

Golub, G. H. & Van Loan, C. F. Matrix Computations (Johns Hopkins Univ. Press, 1996).

Mercuri, M. et al. Automatic radar-based 2-D localization exploiting vital signs signatures. Sci. Rep. 12, 7651 (2022).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016).

Chollet, F. Xception: Deep learning with depthwise separable convolutions, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017).

Abadi, M. et al. Tensorflow: Large-scale machine learning on heterogeneous distributed systems. Software available from tensorflow.org. https://www.tensorflow.org/ (2015).

Abadi, M. et al. Tensorflow: Large-scale machine learning on heterogeneous distributed systems. arXiv:1603.04467 (2016).

Carneiro, T. et al. Performance analysis of Google Colaboratory as a tool for accelerating deep learning applications. IEEE Access 6, 61677–61685. https://doi.org/10.1109/ACCESS.2018.2874767 (2018).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

Acknowledgements

The authors gratefully acknowledge the support and contributions of Jessie Yang, Keith Klumb, Jenna Drumright, Billie Whitehouse, Madeleine Gong, Ben Wong, Alex Paniutin, Ranjit Deshpande, Shruti Gupta, Alan Laursen, William Tran, Rafal Protasiuk, Farhana Khan, Andrew Bartunek and other unnamed individuals in program management, hardware and software development, and data collection.

Ethics declarations

Competing interests

This study was funded by Alphabet Inc. All authors are affiliated with Alphabet, either as current employees or former employees, and may hold stock as a component of their standard compensation package.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, L., Lien, J., Li, H. et al. Soli-enabled noncontact heart rate detection for sleep and meditation tracking. Sci Rep 13, 18008 (2023). https://doi.org/10.1038/s41598-023-44714-2
