Abstract

For patients suffering from central nervous system tumors, prognosis estimation, treatment decisions, and postoperative assessments are made from the analysis of a set of magnetic resonance (MR) scans. Currently, the lack of open tools for standardized and automatic tumor segmentation and generation of clinical reports, incorporating relevant tumor characteristics, leads to potential risks from the inherent subjectivity of decisions. To tackle this problem, the proposed Raidionics open-source software has been developed, offering both a user-friendly graphical user interface and a stable processing backend. The software includes preoperative segmentation models for each of the most common tumor types (i.e., glioblastomas, lower grade gliomas, meningiomas, and metastases), together with one early postoperative glioblastoma segmentation model. Preoperative segmentation performances were quite homogeneous across the four different brain tumor types, with an average Dice around 85% and patient-wise recall and precision around 95%. Postoperatively, performances were lower with an average Dice of 41%. Overall, the generation of a standardized clinical report, including the tumor segmentation and features computation, requires about ten minutes on a regular laptop. The proposed Raidionics software is the first open solution enabling an easy use of state-of-the-art segmentation models for all major tumor types, including preoperative and postsurgical standardized reports.

Introduction

Central nervous system (CNS) tumors, further classified into taxonomic categories as per iterative editions from the World Health Organization1, depict all possible tumors originating from the brain or spinal cord. Given more than 100 subtypes, the heterogeneity in tumor expression (i.e., location, growth rate, or invasiveness) leads to a likewise heterogeneous prognosis. Most patients will experience neurological and cognitive deficits over time2, with survival expectancy ranging from weeks to several years depending on the tumor type and grade. Primary tumors, emanating from the brain itself or its supporting tissues, represent the vast majority of CNS tumors, as opposed to secondary tumors, which arise elsewhere in the body and then spread to the brain (i.e., metastases). Among the former, the most frequent subtypes arise either from the brain’s glial tissue (i.e., gliomas) or from the meninges (i.e., meningiomas). The most aggressive gliomas, further categorized as glioblastomas (noted GBM), remain among the most difficult cancers to treat with an extremely short overall survival3. Less aggressive entities, categorized as diffuse lower-grade gliomas (noted LGG), are infiltrative neoplasms like other gliomas, highly invasive, and impossible to resect4. Initial tumor discovery, treatment decisions, and preoperative prognosis assessment are based on the analysis of a set of magnetic resonance (MR) scans. To maximize patient outcome and facilitate optimal treatment decisions, the utmost accuracy during the diagnostic phase is required from the multidisciplinary team of surgeons, radiologists, and oncologists. The coupling of MR scans to genetic and histopathological findings from tissue analysis5 has proven beneficial for narrowing the tumor classification and identifying the presence of mutations, further helping to refine clinical outcomes and guide clinical decision making6,7.
Currently, informative tumor characteristics are estimated from the MR scans either through crude measuring techniques (e.g., eyeballing or short-axis diameter estimation) or after manual tumor delineation. However, such procedures are either inherently time-consuming or often liable to intra- and inter-rater variability. A lack of user-friendly software solutions for retrieving quantitative and standardized information for patients with intracranial tumors stands out as a major hurdle preventing widespread access in clinical practice, clinical research, or tumor registries.

The task of automatic brain tumor segmentation from preoperative MR scans is an actively researched field8,9,10. Multiple previous studies did not disambiguate between CNS tumor types11,12,13,14,15 and trained generic segmentation models, investigating at the same time other tasks such as classification or survival estimation. Most studies investigating brain tumor segmentation globally have used the public dataset from the BraTS challenge16. Community attention has been sustained thanks to the MICCAI challenge occurring every year since 2012, promoting research on glioma sub-regions segmentation and classification to predict clinical biomarkers status. The dataset contains a patient cohort of up to 2040 patients and multiple MR sequences included for each patient: T1-weighted (T1w), gadolinium-enhanced T1-weighted (T1c), T2-weighted fluid attenuated inversion recovery (FLAIR), and T2-weighted (T2). As a result, the most studied CNS tumor in the literature is by far the glioma, including both GBM and LGG. The current state-of-the-art baseline method for tumor segmentation is the nnU-Net framework17, which is a typical encoder-decoder architecture coupled to a smart parameters optimization scheme for preprocessing and training, to cater to the input dataset. Average Dice scores of about 90% have been reached over contrast-enhancing tumor, necrosis, or edema sub-regions. For the meningioma subtype, a literature review has made an inventory of all studies performed between 2008 and 202018. At best, no more than 130 patients were included to train models using widespread 3D neural network architectures, achieving average Dice scores around 90%19,20. In our recent study21, a much larger dataset with 700 patients was used, for similar overall performances. The validation was extended to show robustness across MR scan resolution and tumor volume.
Finally, brain metastasis segmentation has been investigated over multicentric and multi-sequence datasets of up to 200 patients22,23,24,25, achieving on average up to 80% Dice score using either the DeepLabV326 or the DeepMedic architecture27.

To summarize, the task of CNS tumor segmentation has been well investigated on preoperative MR scans, favoring GBM and LGG subtypes through unrestricted access to an open and annotated dataset. Conversely, segmentation in postoperative MR scans has been scarcely addressed as of yet due to its unparalleled difficulty and lack of public data. Recently, Lotan et al. proposed to fuse two of the top-ranked BraTS methods for performing both pre- and postoperative GBM segmentation28. Over the 20 postoperative MR samples constituting the test set, an average Dice score of 74% was reached over the contrast-enhancing subregion. On a larger dataset including 500 patients, an average Dice score of 69% was reported using all MR sequences as input and an ensemble of nnU-Net models29. Finally, similar performances were reached on a dataset including up to 900 patients and multiple MR sequences, also leveraging the nnU-Net architecture30. Being able to generate high-quality automatic segmentations is a mandatory initial step to provide reproducible and trustworthy standardized reports to characterize the tumor (RADS). The ultimate objective is to assist the clinical team in making the best assessment regarding treatment options and patient outcome. However, the segmentation quality has only very recently reached an acceptable threshold and as such the literature on RADSs for CNS tumors is scarce. For preoperative glioblastoma surgery, tumor features such as volume, multifocality, and location with respect to cortical and subcortical structures were presented31. An excellent agreement between features collected from the automatic segmentation and the manual segmentation was reported. For post-treatment investigations, a structured set of rules was suggested, without any automatic segmentation or image analysis support32.

For use in routine clinical practice, the aforementioned segmentation models or RADS methods must be packaged into well-rounded solutions directly usable by most practitioners. A web imaging platform for radiology is being developed, Open Health Imaging Foundation (OHIF)33, leaving the possibility to interface developed methods through custom plugins, and run processes on a server either locally to a hospital with access to PACS or remote. MONAI, a multipotent toolbox covering a wide range of use-cases including brain tumors, is being actively developed34. While the MONAI Label component is meant as a development tool for creating or refining segmentation models through manual annotation, the MONAI Deploy component focuses on bringing AI-driven applications into the healthcare imaging domain. Even though custom plugins can be developed in both solutions, no trained models for CNS tumor segmentation or standardized reporting are available. On the other hand, less advanced or refined solutions have been developed, focusing on the task at hand. A toolkit has been developed for running preoperative GBM segmentation models from the BraTS challenge, with a very minimalistic graphical user interface (GUI)35. Inside the 3D Slicer software36, often used by clinicians for performing semi-manual tumor delineation, plugins have been developed to facilitate the deployment of custom models with DeepInfer37 or with existing brain tumor segmentation models with DeepSeg38. To conclude, some focus has recently been dedicated to the accessibility of developed methods, yet not many solutions are providing trained segmentation models for CNS tumors other than preoperative gliomas. In instances where trained segmentation models and inference scripts are publicly available, some extent of computer science and programming knowledge is required for running inference scripts locally on MR scans. However, this process is too overwhelming for most clinicians and hospital practitioners. 
Finally, no open solution exists offering the possibility to perform clinical reporting in a standardized fashion (i.e., RADS).

In the initial publication39, a Raidionics prototype was introduced as the first open-source solution offering the possibility to segment the most frequent brain tumor types in preoperative MR scans (namely glioblastoma, diffuse lower-grade glioma, meningioma, and metastasis). One RADS mode was also available for describing the segmented tumor in terms of overall location in the brain and respective location against cortical and subcortical structures. In this paper, the first complete and stable Raidionics software version is presented, including the following novelties. First, (i) the GUI has been completely redesigned, requiring only a few clicks and no programming skills to run segmentation and reporting tasks, either for single patients or entire cohorts. At the same time, the processing backbone remains independently available to users with programming experience or for PACS integration. Second, (ii) the preoperative segmentation models have been improved, trained and validated using various datasets, reaching performances on par with or better than state-of-the-art reported performances. Third, (iii) an early postoperative glioblastoma residual tumor segmentation model has been included, the first open-access model for the task. Finally, (iv) a standardized report for postsurgical assessment has been incorporated.

Data

In this work, four curated datasets were leveraged to train and develop the proposed methods, one for each considered CNS tumor type. For glioblastoma (GBM), 2125 T1c patient scans were gathered from 15 institutions. For diffuse lower-grade glioma (LGG), 678 FLAIR patient scans were compiled from four institutions. For meningioma, 706 T1c patient scans were retrieved from two institutions, and finally 394 T1c patient scans were collected from two institutions for metastasis. More in-depth descriptions of the different datasets have been reported in a previous study39.

All tumors were manually delineated by trained raters, under supervision of neuroradiologists and neurosurgeons. The tumor was defined as gadolinium-enhancing tissue, including non-enhancing enclosed necrosis or cysts, in T1c scans and as the hyperintense region in FLAIR scans. Initial segmentations were performed using either a region growing algorithm40 or a grow-cut algorithm41, followed by manual correction.

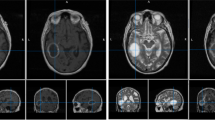

Illustration of the Raidionics software GUI in single patient mode, after generating the standardized report over a glioblastoma case. The left side presents the tumor characteristics belonging to the report, the central part offers a set of 2D views, and the right panel shows loaded MR scans and corresponding annotations.

Methods

To make the segmentation models and standardized generation of clinical reports easily available to a wider audience, the Raidionics software has been developed with a special care towards the user interface design. In the following sections, the different components of the software are described, the strategy for training the segmentation models is presented, and both pipelines for pre- and postoperative clinical reporting are explained.

The institutional review board approval was obtained from the Norwegian regional ethics committee (REK ref. 2019/510). Written informed consent was obtained from patients included in this study as required for each participating hospital. All methods were carried out in accordance with relevant guidelines and regulations.

Modes

In Raidionics, two main modes are available: single patient mode (illustrated in Fig. 1) and batch/study mode (illustrated in Fig. 2). In single patient mode, direct visualization of and interaction with the patient’s data and corresponding results are available. In this mode, the GUI is split into three main components, starting with the left side panel relating to patient import and browsing of standardized reports. Patients can be saved, closed, reloaded, and renamed, with only one patient displayed at any given time. In the center panel, three 2D viewers are proposed for displaying the selected MR scan following standard axial, coronal, and sagittal slicing. All views are interconnected and aligned under the same 3D location, adjustable by mouse clicking, and represented by the two cross-hair green dotted lines. Finally, the panel on the right side of the interface lists all MR scans and corresponding annotations or structural atlases for each scan, for the given patient. While only one MR scan can be toggled visible at a time, multiple annotations or structural atlases can be freely overlaid. Each overlaid item can be customized in color and opacity, improving the display, and allowing the generation of illustrations. By selecting the Actions tab, automatic segmentation or standardized reporting processes can be launched for the current patient. In batch/study mode, cohorts of patients can be loaded and processed sequentially, without any direct visualization or possibility to interact with the results. The GUI is likewise split into three components in this mode with a left side panel relating to study creation and import. For each study, patients can be imported either by careful selection of a few patient folders, or by selection of a cohort folder (i.e., containing multiple folders, one per patient). The launching of a segmentation or reporting process over an entire cohort can also be performed from within each study.
In the center panel, all included patients for the current study are listed, can be removed, and can be opened in single patient mode for viewing and interaction purposes. Finally, the right panel proposes different summary tables, starting with a content summary listing all files included for all patients. In addition, annotation and standardized reporting tables are included to provide an overview of all extracted parameters for the patients of a given cohort.

Data import

Data can be imported in Raidionics in different formats, either as raw DICOM folders originating from PACS or as converted volumes in popular formats such as NIfTI (.nii, .nii.gz), MetaImage (.mhd), or NRRD (.nrrd). More generally, all formats accepted by SimpleITK42, the underlying Python library used for reading converted volumes, are likewise supported. Upon import, all MR scans are internally converted to NIfTI format for subsequent processing. Annotation files can also be imported manually, requiring matching volume parameters (i.e., shape, spacing, and orientation) to the corresponding MR scan. Upon loading, if multiple MR scans were imported for the current patient, the annotation must be linked to the proper MR scan using the drop-down selector (named Parent MRI, in the right side panel).

Within Raidionics, all MR scans are expected to be associated to data timestamps organized in ascending order, allowing the disambiguation between preoperative and postoperative content loaded simultaneously for a patient. Timestamps can be manually edited in the right side panel of the single patient mode interface.

Overall, four approaches are available for loading patient data into the software. First, a DICOM folder can be selected, and a pop-up window will allow for selecting specific MR acquisitions or importing all available acquisitions in a bulk. Second, converted MR scans can be individually selected, automatically linked to the current data timestamp. Third, an entire folder can be selected, either containing multiple converted MR scan files or multiple sub-folders. In the latter, the data is assumed to be split into data timestamps, and loaded data will be organized as such. Finally, reopening a patient folder, previously saved through Raidionics, can be done by selecting the corresponding custom scene file (.raidionics).

Storing results

For each patient, all results are stored inside the corresponding folder, including a custom .raidionics file for fast reloading. This concept is similar to scene files from 3D Slicer stored as .mrml on disk. All volume files are stored as NIfTI (i.e., MR scan, segmentations, and atlases), statistics are stored as comma-separated values files (.csv), and standardized reports are stored as text files (.txt), json files (.json), and csv files (.csv).

Tumor segmentation

All preoperative CNS tumor segmentation models included in the software have been trained with the AGU-Net architecture21 using five levels with \(\{16, 32, 128, 256, 256\}\) as filter sizes, deep supervision, multiscale input, and single attention modules. Unlike the originally published architecture, all batch normalization layers have been removed and a patch-wise approach for training and inference was followed, with \(160^{3}\) voxels as patch dimension. The preprocessing was limited to a \(0.75\,\text {mm}^{3}\) isotropic resampling, skull-stripping (except for the meningioma subtype), intensity clipping to remove the 0.05% highest values, and finally intensity normalization and scaling to [0, 1]. While more advanced normalization algorithms exist in the literature43, increased computational costs would be expected. Training has been performed with batch size four and using the Adam optimizer with an initial learning rate of \(5 \cdot 10^{-4}\). In addition, a gradient accumulation of 8 steps was performed, resulting in a virtual batch size of 32 samples, using the open TensorFlow model wrapper implementation44. The number of updates per epoch has been limited to 512, and an early stopping scheme was set up to stop training after 15 consecutive epochs without validation loss improvement.
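As an illustration of the intensity preprocessing and of the virtual batch size arithmetic described above, a minimal sketch in plain Python is given below. It is not the Raidionics implementation: the function names are hypothetical, and the exact way the clipping bound is derived from the 0.05% fraction is an assumption made for the example.

```python
def clip_and_scale(intensities, clip_fraction=0.0005):
    """Clip the highest `clip_fraction` of values, then scale to [0, 1].

    Hypothetical helper: the rank-based choice of the upper bound is an
    assumption, not the method used in the Raidionics backend.
    """
    if not intensities:
        return []
    ordered = sorted(intensities)
    # Rank of the largest value that is kept unchanged.
    upper_index = max(0, int(len(ordered) * (1.0 - clip_fraction)) - 1)
    upper = ordered[upper_index]
    lower = ordered[0]
    span = upper - lower
    if span == 0:
        # Constant input: nothing to scale.
        return [0.0] * len(intensities)
    return [(min(value, upper) - lower) / span for value in intensities]


def virtual_batch_size(batch_size, accumulation_steps):
    """Number of samples contributing to one optimizer update."""
    return batch_size * accumulation_steps


# A toy "scan" with one extreme outlier that would otherwise dominate scaling.
scan = list(range(2000)) + [10**6]
scaled = clip_and_scale(scan)
print(min(scaled), max(scaled))
print(virtual_batch_size(4, 8))
```

With a batch size of four and 8 accumulation steps, the second helper reproduces the virtual batch size of 32 samples stated in the text.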

For early postoperative segmentation of glioblastomas, the model available in Raidionics has been introduced in a recent study30.

Features extraction and standardized reporting (RADS)

The overall process for segmentation and standardized report generation with relevant tumor characteristics is depicted in Fig. 3. For the generation of standardized preoperative clinical reports in a reproducible fashion, the computation of tumor characteristics was performed after alignment to a standard reference space, the symmetric Montreal Neurological Institute (MNI) ICBM2009a atlas45,46. The patient’s input MR scan was registered to the corresponding atlas file using the SyN method from the Advanced Normalization Tools (ANTs)47. The collection of computed tumor features includes: volume, laterality, multifocality, cortical structure location profile, and subcortical structure location profile. Resectability features, being specifically tailored for glioblastomas, are not available for the other CNS tumor types. A more in-depth description of the computed parameters is available in our previous study48.

For postsurgical assessment, both preoperative and postoperative volumes, extent of resection (EOR), and EOR patient classification are automatically extracted, following the latest guidelines49.
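The extent of resection is conventionally expressed as the percentage of the preoperative tumor volume that was removed. The sketch below shows that arithmetic only, with a hypothetical function name; the patient classification step is deliberately left out because its thresholds come from the cited guidelines49 and are not restated in this paper.

```python
def extent_of_resection(preop_volume_ml, postop_volume_ml):
    """Return the extent of resection (EOR) in percent.

    Assumes both volumes are expressed in the same unit (here ml) and that
    the preoperative volume is strictly positive.
    """
    if preop_volume_ml <= 0:
        raise ValueError("preoperative volume must be strictly positive")
    return 100.0 * (preop_volume_ml - postop_volume_ml) / preop_volume_ml


# Illustrative values only: 34 ml before surgery, 1.7 ml of residual tumor.
print(round(extent_of_resection(34.0, 1.7), 1))
```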

Deployment

Installation executables have been created for cross-platform use of Raidionics, compatible with Windows (\(\ge\) 10), Ubuntu Linux (\(\ge\) 18.04), and macOS (\(\ge\) Catalina 10.15) including ARM-based Apple M1. The selected inference engine to run the segmentation models is ONNX Runtime, supporting models from various deep learning frameworks and widely compatible across hardware, drivers, and operating systems.

The core computational backend (i.e., without any GUI) is also available for experienced users, allowing for direct use either through the command-line interface, as a Python library, or as a Docker container. In addition, a Raidionics 3D Slicer plugin is available, directly leveraging the backend Docker container.

Results

Experiments were carried out on multiple machines with the following specifications: Intel Xeon W-2135 CPU @3.70 GHz x 12, 64 GB of RAM, NVIDIA Tesla V100S (32GB), and a regular hard-drive. The implementation was done in Python 3.7, using PySide6 v6.2.4 for the GUI, TensorFlow v2.8 for training the segmentation models, and ONNX Runtime v1.12.1 for running model inference.

Segmentation performance

All preoperative CNS segmentation models were trained from scratch under the same k-fold cross-validation paradigm whereby one fold was used as validation set, one fold as test set, and all remaining folds constituted the training set. For the glioblastoma subtype, a leave-one-hospital-out cross-validation paradigm was followed, equivalent to a 15-fold cross-validation. Pooled estimates, computed from each fold’s results, are reported for each measurement50. Overall, measurements are reported as mean and standard deviation (indicated by ±) in the tables.
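For readers unfamiliar with pooled estimates, the sketch below shows one common way of combining per-fold means and standard deviations: a size-weighted mean and a pooled within-fold standard deviation. This is an assumption made for illustration; the exact estimator used in this study is the one described in the cited reference50, and the function name is hypothetical.

```python
import math


def pooled_estimates(folds):
    """Combine per-fold statistics into a pooled mean and standard deviation.

    `folds` is a list of (n_samples, mean, std) tuples, one per fold.
    Assumes at least one fold has more than one sample.
    """
    total = sum(n for n, _, _ in folds)
    pooled_mean = sum(n * mean for n, mean, _ in folds) / total
    degrees_of_freedom = sum(n - 1 for n, _, _ in folds)
    pooled_variance = (
        sum((n - 1) * std ** 2 for n, _, std in folds) / degrees_of_freedom
    )
    return pooled_mean, math.sqrt(pooled_variance)


# Two illustrative folds of different sizes (values are made up).
mean, std = pooled_estimates([(10, 0.80, 0.10), (30, 0.90, 0.10)])
print(round(mean, 3), round(std, 3))
```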

A summary of the segmentation performances for the models packaged in Raidionics is provided in Table 1. For all preoperative models, an average Dice score of 85% and patient-wise F1-score of 95% are achieved, highlighting a high segmentation quality. The lowest Dice score of 78% is obtained for the LGG subtype, which can be explained by the diffuse nature of such tumors, more difficult to fully delineate in FLAIR MR scans. Overall, segmentation performances are largely stable across the different CNS tumor subtypes, with extremely accurate sensitivity and specificity of the different models. The models are quite conservative with few false positives, and simultaneously efficient with few tumors missed. For multifocal tumors, often with satellite foci clearly smaller than the main focus, an average object-wise recall of 80% is achieved, indicating a struggle to properly segment tiny structures. A similar decrease in object-wise precision can be acknowledged, around 87% on average, symptomatic of a segmentation excess over false positive areas, locally resembling contrast-enhancing tumor tissue.
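The voxel-wise and patient-wise metrics reported above follow standard definitions, which can be sketched as below. The helper names are hypothetical, and the rule deciding when a patient counts as a true positive (for instance a minimum overlap with the ground truth) is not specified here and is left to the caller.

```python
def dice_score(predicted_voxels, reference_voxels):
    """Dice overlap between two segmentations given as voxel index sets."""
    predicted, reference = set(predicted_voxels), set(reference_voxels)
    if not predicted and not reference:
        return 1.0  # Both empty: treated as perfect agreement.
    overlap = len(predicted & reference)
    return 2.0 * overlap / (len(predicted) + len(reference))


def precision_recall_f1(true_positives, false_positives, false_negatives):
    """Precision, recall, and F1-score from detection counts."""
    found = true_positives + false_positives
    expected = true_positives + false_negatives
    precision = true_positives / found if found else 0.0
    recall = true_positives / expected if expected else 0.0
    total = precision + recall
    f1 = 2.0 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Toy example: two voxels each, one in common.
print(dice_score({(0, 0, 0), (0, 0, 1)}, {(0, 0, 1), (0, 0, 2)}))
# Made-up counts giving 95% precision and recall.
print(precision_recall_f1(95, 5, 5))
```

The same counting function applies to the object-wise metrics, with individual tumor foci rather than patients as the unit being counted.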

To further investigate segmentation performances from a tumor volume standpoint, an empirical categorization was made to single out small tumors. The volume cut-off was set to 2 ml for all CNS tumor subtypes, except for the LGG subtype where it was set to 5 ml. With a volume cut-off set at approximately one-tenth of the average tumor volume for each subtype, enough cases are featured in the small tumor category for providing relevant results. The categorized segmentation performances based on tumor volume are reported in Table 2. Unsurprisingly, segmentation performances obtained over non-small tumors are excellent with 99% patient-wise recall and up to 90% Dice-TP for the metastasis subtype. In comparison, the average Dice score for the small tumors category lies closer to 60%, achieving barely 75% patient-wise recall globally. Such performances are less enticing as they highlight limitations for using the packaged models to perform early-stage tumor detection. However, larger performance discrepancies for the small tumors category across the different CNS subtypes can be observed. The lowest Dice and patient-wise recall values are repeatedly obtained for the LGG subtype, whereas a 92% patient-wise recall is obtained for the metastasis subtype. Compared to previous publications using the AGU-Net architecture in a full volume fashion over the same task21,39, using a patch-wise strategy improved segmentation performances overall, especially over small tumors.
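The volume-based categorization can be sketched as follows, using the cut-offs stated above (2 ml, or 5 ml for LGG). The names are hypothetical, and treating a tumor exactly at the cut-off as non-small is an assumption, since the text does not say which side the boundary falls on.

```python
# Cut-offs in ml as stated in the text; dictionary keys are illustrative labels.
SMALL_TUMOR_CUTOFF_ML = {
    "GBM": 2.0,
    "LGG": 5.0,
    "meningioma": 2.0,
    "metastasis": 2.0,
}


def tumor_volume_ml(voxel_count, spacing_mm):
    """Volume in ml from a voxel count and the voxel spacing in mm."""
    sx, sy, sz = spacing_mm
    return voxel_count * sx * sy * sz / 1000.0  # 1 ml = 1000 mm^3


def is_small_tumor(volume_ml, subtype):
    """True when the tumor falls strictly below the subtype cut-off."""
    return volume_ml < SMALL_TUMOR_CUTOFF_ML[subtype]


volume = tumor_volume_ml(3000, (1.0, 1.0, 1.0))
print(volume, is_small_tumor(volume, "GBM"), is_small_tumor(volume, "LGG"))
```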

Runtime experiments

A comparison in runtime processing speed using Raidionics for generating the segmentation masks and standardized reports is provided in Table 3. For each CNS tumor subtype, a representative MR scan, with dimension in voxels indicated in parenthesis in the table, was processed five times in a row and speed results were averaged. Two different machines were used: a high-end desktop computer with an Intel Xeon W-2135 CPU (@3.7GHz) and 64GB of RAM (noted Desktop), and a mid-end laptop computer with an Intel Core Processor (i7@1.9GHz) and 16GB of RAM (noted Laptop).

For preoperative tasks, an average of one minute is necessary for generating the tumor segmentation mask, and around six minutes in total for computing the standardized report, using the desktop machine. When using a computer with less computational power, the processing speed is halved on average as indicated by the laptop runtime results. A large runtime variation can also be noticed from the MR scan dimensions. The image registration to MNI space takes three times longer over the highest resolution images, whereas the computation of the standardized report in itself remains around two minutes overall. Considerable speed improvement would be obtained by downsampling the high resolution MR scans before computing the standardized report, especially when processing a patient cohort. Regarding the postoperative task, a combination of MR scans is required, including T1-weighted and contrast-enhanced T1-weighted sequences. As such, the brain must be segmented independently in four MR scans, increasing the runtime to 40 seconds on average. Similarly, the tumor must be segmented in both pre- and postoperative contrast-enhanced T1-weighted scans. Hence, the generation of a postoperative standardized report requires from five minutes on the desktop machine to ten minutes on the laptop. In general, brain segmentation is three to four times faster to perform than tumor segmentation due to a design choice. On one hand, the brain segmentation model is run in single-shot inference over a downsampled version of the whole input MR scan. On the other hand, iterative inference is performed over patches from the input MR scan with tumor segmentation models. As a result, the segmentation runtime increases with the number of patches to process.
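To make the link between scan dimensions and tumor segmentation runtime concrete, the sketch below counts the patches needed to cover a volume with a sliding window. The function name is hypothetical, and the tiling scheme (non-overlapping patches by default, with the last patch shifted to cover the border) is an assumption for illustration; the stride actually used by the backend is not stated in this paper.

```python
import math


def patch_count(volume_shape, patch_size=160, stride=None):
    """Number of cubic patches needed to cover a 3D volume.

    `stride` defaults to `patch_size`, i.e. non-overlapping patches.
    """
    stride = stride or patch_size
    count = 1
    for dimension in volume_shape:
        if dimension <= patch_size:
            steps = 1  # A single (padded) patch covers this axis.
        else:
            steps = math.ceil((dimension - patch_size) / stride) + 1
        count *= steps
    return count


# A volume twice the patch size along two axes needs four patches.
print(patch_count((320, 320, 160)))
# Halving the stride increases the number of inference calls.
print(patch_count((320, 320, 160), stride=80))
```

Under these assumptions, the number of inference calls grows with the product of the per-axis steps, which is consistent with the runtime increase observed for the largest MR scans.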

Discussion

In this study, the Raidionics software has been presented for enabling the use of CNS tumor segmentation models and standardized reporting methods, through a carefully designed GUI. The software is the first to provide access to competitive preoperative segmentation models for the most common CNS tumor types (i.e., GBM, LGG, meningioma, metastasis) in addition to an early postoperative GBM segmentation model. Standardized reports can be generated to automatically and reproducibly characterize a preoperative tumor or provide a postsurgical assessment through volume and extent of resection computation. Furthermore, new preoperative CNS tumor segmentation models were trained using the AGU-Net architecture, and thoroughly validated. The use of a patch-wise approach, conversely to the full volume approach, allows for a more efficient segmentation of smaller tumors, with a drop in performances noticed below a 2 ml volume cut-off. For reference, the average glioblastoma, meningioma, and metastasis volumes in our datasets are 34 ml, 19 ml, and 17 ml, respectively. In that regard, the reported performances only start decreasing for tumors more than ten times smaller than average.

Previously, the preoperative CNS segmentation models included in the Raidionics prototype were all trained following a full volume approach39, with a well-identified drawback in the inability to segment accurately the smallest structures. The use of patch-wise techniques leads to improved recall performances, but sometimes at the expense of precision due to the generation of more false positive predictions. By training our AGU-Net architecture with a patch-wise approach, higher recall performances were obtained whereas satisfactory precision performances were retained. For preoperative glioblastoma segmentation, an average F1-score of almost 97% is reported in this study, higher than the 94% reported over the same patient cohort using the nnU-Net architecture48. Models trained with both architectures reached 98% recall, but the AGU-Net discriminates better with up to 95% precision. Whereas overall segmentation performances are satisfying, the performances for the smallest tumors with a \(<2\) ml volume still need to be improved, especially for early-stage tumor detection during screening or incidental finding. Postoperatively, only the segmentation of glioblastoma is supported in the software as it represents the only CNS tumor subtype currently investigated in the literature. While the reported metrics seem substandard, they were shown to be comparable to human rater performance on real world MRI scans30.

Currently, the standardized preoperative reports provide a CNS tumor analysis focusing heavily on overall location in the brain and respective location in relation to cortical and subcortical structures. As it stands, the set of extracted characteristics may not be sufficiently exhaustive to be used as part of preoperative surgical assessment meetings. Similarly, the postoperative standardized report limits itself to the most important parameter to assess, the extent of resection. Nevertheless, the robust, reproducible, and standardized computation of such reports is the first of its kind to be freely available and the list of computed characteristics can be extended in the future. As a side note, Raidionics allows the user to provide already acquired tumor segmentation masks (i.e., manually or semi-automatically) for computing the standardized report. Bypassing the automatic segmentation process can be extremely valuable as segmentation models are not perfect and might fail to segment, either fully or partially.

Compared to the initial prototype, Raidionics is now a well-rounded and stable software solution, working across all major operating systems, with a welcoming GUI. Clinical end-users can generate the needed segmentations or reports in a few clicks, over single cases or patient cohorts, and directly visualize the results within the software. Users with programming knowledge have the possibility to circumvent the use of the GUI altogether. A stand-alone backend library, used for running the segmentation and standardized reporting tasks, has also been made available both as a Python package and as a Docker image. Furthermore, the possibility is given to integrate the Raidionics backend into other frameworks with relative ease. For example, a plugin for 3D Slicer has been developed, using the Docker image for running all computation. In a similar fashion, a direct integration towards PACS or inside OHIF can be established in the near future. To include a larger assortment of segmentation models in the future, the decision was made to use an open standard for machine learning interoperability (i.e., ONNX). Models trained using the most common deep learning frameworks (i.e., TensorFlow, PyTorch) can be easily converted to ONNX and deployed inside Raidionics. In addition, the ONNX runtime libraries have been designed to maximize performance across hardware and should provide a better user experience.

The Raidionics environment is under active development with the intent to release more segmentation models and expand the list of characteristics constituting the standardized reports. Better postoperative segmentation models will be investigated, not only for remnant tumor detection but also for postoperative complications such as hemorrhages or infarctions. For research and benchmarking purposes, a metrics computation module and a heatmap location generator module are prospective components to be included. Finally, open-source and state-of-the-art models could be included to extend the set of brain structures to segment. As additional future work, the proposed software could be extended to other clinical areas where the segmentation of organs such as the liver and kidneys remains challenging51. Methods used for their automated segmentation generally need improvement due to unclear boundaries, inhomogeneous intensities, and the presence of adjacent organs. For instance, capsule networks could be favored for classification or segmentation as they better model features’ spatial relationships52. All users are invited to provide feedback for improvement or contribute code directly to the Raidionics environment at https://github.com/raidionics. In addition, project collaborations for testing the software in clinical practice or data-sharing for the training of better models are more than welcome.

Data availability

The data analyzed in this study is subject to the following licenses/restrictions: patient data are protected under GDPR and cannot be publicly distributed. Requests to access these datasets should be directed to David Bouget (david.bouget@sintef.no) for consideration.

Accession codes

The Raidionics environment with all related information is available at https://github.com/raidionics. More specifically, all trained models can be accessed at https://github.com/raidionics/Raidionics-models/releases/tag/1.2.0, the Raidionics software can be found at https://github.com/raidionics/Raidionics, and the corresponding 3D Slicer plugin at https://github.com/raidionics/Raidionics-Slicer.

References

Louis, D. N. et al. The 2021 WHO classification of tumors of the central nervous system: A summary. Neuro. Oncol. 23, 1231–1251. https://doi.org/10.1093/neuonc/noab106 (2021).

Day, J. et al. Neurocognitive deficits and neurocognitive rehabilitation in adult brain tumors. Curr. Treat. Opt. Neurol. 18, 1–16. https://doi.org/10.1007/s11940-016-0406-5 (2016).

Lapointe, S., Perry, A. & Butowski, N. A. Primary brain tumours in adults. The Lancet 392, 432–446. https://doi.org/10.1016/S0140-6736(18)30990-5 (2018).

Cancer Genome Atlas Research Network. Comprehensive, Integrative Genomic Analysis of Diffuse Lower-Grade Gliomas. New Engl. J. Med. 372, 2481–2498. https://doi.org/10.1056/NEJMoa1402121 (2015).

Kaya, B. et al. Automated fluorescent miscroscopic image analysis of ptbp1 expression in glioma. PLoS ONE 12, e0170991 (2017).

Appin, C. L. & Brat, D. J. Molecular genetics of gliomas. Cancer J. 20, 66–72. https://doi.org/10.1097/PPO.0000000000000020 (2014).

Jiao, Y. et al. Frequent ATRX, CIC, FUBP1 and IDH1 mutations refine the classification of malignant gliomas. Oncotarget 3, 709, https://doi.org/10.18632/oncotarget.588 (2012).

Wadhwa, A., Bhardwaj, A. & Verma, V. S. A review on brain tumor segmentation of MRI images. Magn. Reson. Imaging 61, 247–259. https://doi.org/10.1016/j.mri.2019.05.043 (2019).

Tiwari, A., Srivastava, S. & Pant, M. Brain tumor segmentation and classification from magnetic resonance images: Review of selected methods from 2014 to 2019. Pattern Recogn. Lett. 131, 244–260. https://doi.org/10.1016/j.patrec.2019.11.020 (2020).

Havaei, M. et al. Brain tumor segmentation with Deep Neural Networks. Med. Image Anal. 35, 18–31. https://doi.org/10.1016/j.media.2016.05.004 (2017).

Pereira, S., Pinto, A., Alves, V. & Silva, C. A. Brain tumor segmentation using convolutional neural networks in MRI images. IEEE Trans. Med. Imaging 35, 1240–1251. https://doi.org/10.1007/s10916-019-1416-0 (2016).

Myronenko, A. 3D MRI brain tumor segmentation using autoencoder regularization. In International MICCAI brainlesion workshop, 311–320, https://doi.org/10.1007/978-3-030-11726-9_28 (Springer, 2019).

Ranjbarzadeh, R. et al. Brain tumor segmentation based on deep learning and an attention mechanism using MRI multi-modalities brain images. Sci. Rep. 11, 1–17. https://doi.org/10.1038/s41598-021-90428-8 (2021).

Naser, M. A. & Deen, M. J. Brain tumor segmentation and grading of lower-grade glioma using deep learning in MRI images. Comput. Biol. Med. 121, 103758. https://doi.org/10.1016/j.compbiomed.2020.103758 (2020).

Sun, L., Zhang, S., Chen, H. & Luo, L. Brain tumor segmentation and survival prediction using multimodal MRI scans with deep learning. Front. Neurosci. 13, 810. https://doi.org/10.3389/fnins.2019.00810 (2019).

Menze, B. H. et al. The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med. Imaging 34, 1993–2024. https://doi.org/10.1109/TMI.2014.2377694 (2014).

Isensee, F., Jaeger, P. F., Kohl, S. A., Petersen, J. & Maier-Hein, K. H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 18, 203–211. https://doi.org/10.1038/s41592-020-01008-z (2021).

Neromyliotis, E. et al. Machine learning in meningioma MRI: past to present. A narrative review. J. Magn. Reson. Imaging 55, 48–60. https://doi.org/10.1002/jmri.27378 (2022).

Laukamp, K. R. et al. Fully automated detection and segmentation of meningiomas using deep learning on routine multiparametric MRI. Eur. Radiol. 29, 124–132. https://doi.org/10.1007/s00330-018-5595-8 (2019).

Laukamp, K. R. et al. Automated meningioma segmentation in multiparametric MRI: comparable effectiveness of a deep learning model and manual segmentation. Clin. Neuroradiol. 31, 357–366. https://doi.org/10.1007/s00062-020-00884-4 (2021).

Bouget, D., Pedersen, A., Hosainey, S. A. M., Solheim, O. & Reinertsen, I. Meningioma segmentation in T1-weighted MRI leveraging global context and attention mechanisms. Front. Radiol. 1, https://doi.org/10.3389/fradi.2021.711514 (2021).

Charron, O. et al. Automatic detection and segmentation of brain metastases on multimodal MR images with a deep convolutional neural network. Comput. Biol. Med. 95, 43–54. https://doi.org/10.1016/j.compbiomed.2018.02.004 (2018).

Liu, Y. et al. A deep convolutional neural network-based automatic delineation strategy for multiple brain metastases stereotactic radiosurgery. PLoS ONE 12, e0185844. https://doi.org/10.1371/journal.pone.0185844 (2017).

Grøvik, E. et al. Deep learning enables automatic detection and segmentation of brain metastases on multisequence MRI. J. Magn. Reson. Imaging 51, 175–182. https://doi.org/10.1002/jmri.26766 (2020).

Grøvik, E. et al. Handling missing MRI sequences in deep learning segmentation of brain metastases: a multicenter study. NPJ Digital Med. 4, 33. https://doi.org/10.1038/s41746-021-00398-4 (2021).

Chen, L.-C., Papandreou, G., Schroff, F. & Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv preprint https://doi.org/10.48550/arXiv.1706.05587 (2017).

Kamnitsas, K. et al. DeepMedic for brain tumor segmentation. In Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries: Second International Workshop, BrainLes 2016, with the Challenges on BRATS, ISLES and mTOP 2016, Held in Conjunction with MICCAI 2016, Athens, Greece, October 17, 2016, Revised Selected Papers 2, 138–149, https://doi.org/10.1007/978-3-319-55524-9_14 (Springer, 2016).

Lotan, E. et al. Development and practical implementation of a deep learning-based pipeline for automated pre-and postoperative glioma segmentation. Am. J. Neuroradiol. 43, 24–32. https://doi.org/10.3174/ajnr.A7363 (2022).

Nalepa, J. et al. Deep learning automates bidimensional and volumetric tumor burden measurement from MRI in pre-and post-operative glioblastoma patients. Comput. Biol. Med. 154, 106603. https://doi.org/10.1016/j.compbiomed.2023.106603 (2023).

Helland, R. H. et al. Segmentation of glioblastomas in early post-operative multi-modal MRI with deep neural networks. arXiv preprint https://doi.org/10.48550/arXiv.2304.08881 (2023).

Kommers, I. et al. Glioblastoma surgery imaging-reporting and data system: Standardized reporting of tumor volume, location, and resectability based on automated segmentations. Cancers 13, 2854. https://doi.org/10.3390/cancers13122854 (2021).

Weinberg, B. D. et al. Management-based structured reporting of posttreatment glioma response with the brain tumor reporting and data system. J. Am. Coll. Radiol. 15, 767–771. https://doi.org/10.1016/j.jacr.2018.01.022 (2018).

Urban, T. et al. LesionTracker: Extensible open-source zero-footprint web viewer for cancer imaging research and clinical trials. Can. Res. 77, e119–e122. https://doi.org/10.1158/0008-5472.CAN-17-0334 (2017).

Cardoso, M. J. et al. MONAI: An open-source framework for deep learning in healthcare. arXiv preprint https://doi.org/10.48550/arXiv.2211.02701 (2022).

Kofler, F. et al. BraTS Toolkit: Translating BraTS brain tumor segmentation algorithms into clinical and scientific practice. Front. Neurosci. 125, https://doi.org/10.3389/fnins.2020.00125 (2020).

Pieper, S., Halle, M. & Kikinis, R. 3D Slicer. In 2004 2nd IEEE international symposium on biomedical imaging: nano to macro (IEEE Cat No. 04EX821), 632–635, https://doi.org/10.1109/ISBI.2004.1398617 (IEEE, 2004).

Mehrtash, A. et al. DeepInfer: Open-Source Deep Learning Deployment Toolkit for Image-Guided Therapy. In Medical Imaging 2017: Image-Guided Procedures, Robotic Interventions, and Modeling, vol. 10135, 410–416, https://doi.org/10.1117/12.2256011 (SPIE, 2017).

Zeineldin, R. A., Weimann, P., Karar, M. E., Mathis-Ullrich, F. & Burgert, O. Slicer-DeepSeg: Open-source deep learning toolkit for brain tumour segmentation. Curr. Direct. Biomed. Eng. 7, 30–34. https://doi.org/10.1515/cdbme-2021-1007 (2021).

Bouget, D. et al. Preoperative brain tumor imaging: Models and software for segmentation and standardized reporting. Front. Neurol. 1500, https://doi.org/10.3389/fneur.2022.932219 (2022).

Huber, T. et al. Reliability of semi-automated segmentations in glioblastoma. Clin. Neuroradiol. 27, 153–161. https://doi.org/10.1007/s00062-015-0471-2 (2017).

Vezhnevets, V. & Konouchine, V. GrowCut: Interactive multi-label ND image segmentation by cellular automata. In Proceedings of the Graphicon, vol. 1, 150–156 (Citeseer, 2005).

Yaniv, Z., Lowekamp, B. C., Johnson, H. J. & Beare, R. SimpleITK image-analysis notebooks: A collaborative environment for education and reproducible research. J. Digit. Imaging 31, 290–303. https://doi.org/10.1007/s10278-017-0037-8 (2018).

Goceri, E. Intensity normalization in brain MR images using spatially varying distribution matching. In 11th International Conference on Computer Graphics, Visualization, Computer Vision and Image Processing (CGVCVIP 2017), 300–304 (2017).

Pedersen, A., de Frutos, J. P. & Bouget, D. andreped/GradientAccumulator: v0.4.0, https://doi.org/10.5281/zenodo.7831244 (2023).

Fonov, V. S., Evans, A. C., McKinstry, R. C., Almli, C. R. & Collins, D. Unbiased nonlinear average age-appropriate brain templates from birth to adulthood. NeuroImage S102, https://doi.org/10.1016/S1053-8119(09)70884-5 (2009).

Fonov, V. et al. Unbiased average age-appropriate atlases for pediatric studies. Neuroimage 54, 313–327. https://doi.org/10.1016/j.neuroimage.2010.07.033 (2011).

Avants, B. B., Epstein, C. L., Grossman, M. & Gee, J. C. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med. Image Anal. 12, 26–41. https://doi.org/10.1016/j.media.2007.06.004 (2008).

Bouget, D. et al. Glioblastoma surgery imaging-reporting and data system: Validation and performance of the automated segmentation task. Cancers 13, 4674. https://doi.org/10.3390/cancers13184674 (2021).

Karschnia, P. et al. Prognostic validation of a new classification system for extent of resection in glioblastoma: A report of the RANO resect group. Neuro Oncol. 24, vii255–vii255. https://doi.org/10.1093/neuonc/noac193 (2022).

Killeen, P. R. An alternative to null-hypothesis significance tests. Psychol. Sci. 16, 345–353. https://doi.org/10.1111/j.0956-7976.2005.01538.x (2005).

Dura, E., Domingo, J., Göçeri, E. & Martí-Bonmatí, L. A method for liver segmentation in perfusion MR images using probabilistic atlases and viscous reconstruction. Pattern Anal. Appl. 21, 1083–1095 (2018).

Goceri, E. Classification of skin cancer using adjustable and fully convolutional capsule layers. Biomed. Signal Process. Control 85, 104949 (2023).

Acknowledgements

The authors thank all clinical practitioners from the different hospitals who contributed by providing MR scans and manual tumor annotations. Data were processed in digital labs at HUNT Cloud, Norwegian University of Science and Technology, Trondheim, Norway. R.H.H. is supported by a grant from The Research Council of Norway, grant number 323339. D.B., I.R., and O.S. are partly funded by the Norwegian National Research Center for Minimally Invasive and Image-Guided Diagnostics and Therapy.

Funding

Open access funding provided by Norwegian University of Science and Technology.

Author information

Contributions

D.B. and R.H.H. conceived and conducted the experiments; D.A. and V.G. designed the software; A.P. and D.B. developed the software; D.B. analyzed the results; I.R. and O.S. acquired the funding. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bouget, D., Alsinan, D., Gaitan, V. et al. Raidionics: an open software for pre- and postoperative central nervous system tumor segmentation and standardized reporting. Sci Rep 13, 15570 (2023). https://doi.org/10.1038/s41598-023-42048-7

DOI: https://doi.org/10.1038/s41598-023-42048-7
