Abstract

With the expansion of electronic health records(EHR)-linked genomic data comes the development of machine learning-enable models. There is a pressing need to develop robust pipelines to evaluate the performance of integrated models and minimize systemic bias. We developed a prediction model of symptomatic Clostridioides difficile infection(CDI) by integrating common EHR-based and genetic risk factors(rs2227306/IL8). Our pipeline includes (1) leveraging phenotyping algorithm to minimize temporal bias, (2) performing simulation studies to determine the predictive power in samples without genetic information, (3) propensity score matching to control for the confoundings, (4) selecting machine learning algorithms to capture complex feature interactions, (5) performing oversampling to address data imbalance, and (6) optimizing models and ensuring proper bias-variance trade-off. We evaluate the performance of prediction models of CDI when including common clinical risk factors and the benefit of incorporating genetic feature(s) into the models. We emphasize the importance of building a robust integrated pipeline to avoid systemic bias and thoroughly evaluating genetic features when integrated into the prediction models in the general population and subgroups.

Similar content being viewed by others

Introduction

With biobank and genetic data integrated with electronic health records (EHR) comes the development of predictive models designed for healthcare applications. There is an urgent need to develop robust modeling pipelines using machine learning (ML) to determine whether EHR-derived common clinical risk factors can predict the phenotype of interest and whether adding genetic factors can improve model performance. Several factors have to be considered during the model development, including (1) selection bias for the biobank data; (2) case–control imbalance; (3) temporal bias in feature acquisition; (4) impact of confounding factors; (5) optimal model selection to capture multi-way interactions; and (6) predictive power and generalizability of the final models. Here we choose Clostridioides difficile infection (CDI) as a proof-of-concept given its complexity and clinical importance. The purpose of this study is to develop an integrated pipeline for predicting symptomatic CDI using common EHR-derived clinical and genetic risk factors. Focusing on symptomatic CDI is driven by the fact that testing and treatment for CDI are not recommended in asymptomatic individuals1.

CDI is considered the most common cause of healthcare-associated diarrhea and is listed as one of the top five urgent antimicrobial resistance threats by the Centers for Disease Control and Prevention (https://www.cdc.gov/drugresistance/biggest-threats.html). Existing literature reporting CDI prediction focuses on three outcomes—symptomatic infection, severity of the infection, and recurrence. The prediction models developed in this field vary by setting, patient recruitment, data source, study design, feature selection, and algorithms. EHR-based studies have become popular due to their improved predictability, specificity, and generalizability. Host genetic susceptibility to CDI and epidemiology of C. difficile strains have been topics of investigations2,3. Intestinal inflammatory cytokines correlate more closely to disease severity than pathogen burden4. The same mechanism (inflammatory cytokines) applies to the inflammatory cytokine signature (plasma level of IL-6, IL-8, and TNF-α) for the prediction of COVID-19 severity and survival5. A previous candidate gene approach revealed that genetic polymorphisms, rs4073(–251T>A) or rs2227306(+ 781T/C) from a pro-inflammatory cytokine, IL-8, are associated with IL-8 production and predisposition to CDI6,7,8 with functional impact (eFigure 1). Genetic markers are not yet included in any established disease scoring system or clinical decision tool for risk stratification of CDI due to unclear causality, small effect size, complex gene by environment (GxE) interaction, and data availability/accessibility.

Materials and methods

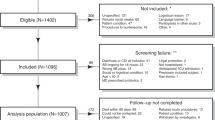

The study was conducted and reported according to the transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD) guideline9. The Geisinger Institutional Review Board approved this study to meet “Non-human subject research” using de-identified information. All research was performed in accordance with relevant guidelines/regulations. Geisinger built and performs regular updates to the de-identified structured EHR database for research linked to the MyCode Community Health Initiative biorepository10,11,12. The structured EHR and matching genetics data allowed us to conduct a retrospective study on primary CDI3. Informed consent was obtained from all subjects and/or their legal guardian(s) for the MyCode patients. The analysis pipeline, as well as CDI-specific study parameters, are illustrated in Fig. 1.

Robust phenotyping algorithm

The phenotype algorithm used to identify CDI cases and controls from EHR data was developed by eMERGE entitled “Phenotype Algorithm Pseudo Code (August 16, 2012)” and collected at PhenoKB (https://phekb.org/phenotype/clostridium-difficile-colitis). This algorithm adopted the golden standard, which used laboratory test data to identify CDI cases. Based on clinical symptoms, we first identified adults (age ≥ 18) with CDI from the Geisinger EHR. Three or more consecutive liquid stools within a day may be tested for C. difficile based on recommended guidelines13,14. The polymerase chain reaction was used as the laboratory reference standard test3. Patients tested for C. difficile but with negative results or exposed to antibiotics with high or moderate risk (Appendix-1) served as controls. Nongenetic risk factors collected in this study included index age, people in healthcare settings, antibiotic treatment, underlying comorbidities such as inflammatory bowel disease (IBD), type 2 diabetic mellitus (T2DM), HIV, cancer, medications such as chemo, transplant, corticosteroid, anti-TNF, proton pump inhibitors(PPI). The observation window for each risk factor was empirically defined to avoid an uneven sampling of the disease trajectory, which might lead to temporal bias in feature selection15. Clinical risk factors and demographic information were extracted from the structured EHR based on the International Classification of Disease (ICD)-related codes and medication codes (Appendix 1).

Using data from January 1, 2009, through December 31, 2017, we identified 6,035 cases and 72,241 controls. Of these, 5911 cases and 69,086 controls had self-reported European ancestry. Overall, 22.4% (1156/14,148 for case/control) of European (EUR) patients enrolled in the MyCode project with genetic data available.

Data pre-processing

The entire cohort of participants with EUR ancestry (n = 5911/69,086) was first split based on the availability of genetic data. No missing data was observed in any of the included clinical variables. The MyCode samples were genotyped as previously reported3. Both SNPs (rs2227306 and rs4076) from IL-8 passed the quality control without missingness. The genetic variable is routinely treated in a dosage manner (0, 1, 2). Data extraction and pre-processing details (z-scored index age, binary codes for other variables) have previously been described3.

Either the χ2 test, substituted by Fisher exact test for frequency per group ≤ 5% or the ANOVA test was performed to screen for the bivariate association. A heatmap was created to show the significance of this bivariate association based on the log-transformed p-value from each bivariate χ2 test (Fig. 2).

Association among features and performance of models in prediction of CDI in MyCode and nonMyCode samples with (simulated) genotypes included. (a, b) Heatmaps to show the significant association between variables employed in the prediction model using the training dataset. Data extraction and pre-processing details (z-scored index age, binary codes for other variables) have previously been described. Association among variables (index age further dummy coded) was assessed using a bivariate χ2 test. (c, d) To examine the discrimination power of each modeling algorithm in the testing dataset, we estimated the AUROC using common clinical risk factors for CDI with or without rs2227306 as predictors. Here the genotypes of rs2227306 were simulated in the nonMyCode samples. (e) The summary of AUROCs of the optimal modeling algorithms (gbm and xgbDART) versus glm using simulated rs2227306 genotype. P values represent the result of the DeLong test to compare AUROC between models with or without (simulated) genetic data included, with or without PSM for index age and sex.

Simulations to determine predictive power

A genotype simulation study was conducted to determine different modeling algorithms' predictive power and generalizability in the nonMyCode cohort. The genotypes of rs2227306 (IL-8) in this cohort of 4755 cases and 54,938 controls were created by a simulation strategy based on an assumption of a binomial probability distribution of each allele equaling to the prior parameter, MAF, estimated from the corresponding MyCode subgroups stratified by CDI, age, and sex (Table 2).

where R is a vector or a matrix of the summary statistics derived from the MyCode cohort, N and MAF represent the number of subjects and the corresponding MAF for each subcategory. This simulation strategy considers confounding factors such as age and sex, which may impact the association between the genetic variant and the outcome variable.

Individual SNP association testing

Genotype and phenotype association was conducted using Logistic Regression after controlling covariates such as age or sex in subgroups stratified by sex or age (binary).

Controlling confounding

Age and sex were identified as confounding factors for the association between genetic/nongenetic risk factors and CDI (see “Result”). They were selected as covariates in a Logistic Regression model to create propensity scores(R MatchIt package). We chose “nearest neighbor matching without replacement” to create a more balanced case:control ratio at 1:5 or 1:10, as shown in eFigure 2.

Selecting machine learning (ML) algorithms

Model development and optimization were based on selecting the optimal sampling approach followed by a comparison of the eight classification algorithms, including Logistic Regression (glm), Gradient Boosted Classification (gbm), Extreme Gradient Boosting with the dropout regularization for regression trees (xgbDART), Bagging for tree (treebag), Neural Network [nnet, using 1-layer fully connected neural networks (shallow)], C5.0 (c5), LogitBoost (lb), and Support Vector Machine (SVM). We selected these models based on model/algorithmic diversity, performance on similar tasks, model interpretability, and ease of implementation16. Specifically, these set of algorithms are well-established with good performance for tabular data in a wide range of classification tasks16. Furthermore, Ensemble methods17,18 (C5.0, xgbDART, Bagging, LogitBoost) that combine multiple weak learners to create a stronger model, can lead to improved predictive performance and better generalizability. Finally, by creating decision boundaries that are complex and not linear, Neural Network and SVM are capable of capturing non-linear relationships between the variables.

Evaluation metrics

We assessed the performance of the multivariable model in the prediction of CDI mainly by sensitivity, specificity, Positive Predictive Value (PPV), Negative Predictive Value (NPV), precision, recall, F1 score, Matthews Correlation Coefficient (MCC), accuracy, and Area Under the curve (AUROC) for a classification problem.

where TP = True positives; TN = True negatives; FP = False positives; FN = False negatives. Recall = TP/(TP + FN); Precision = TP/(TP + FP). Both F1 Score and MCC are calculated based on the confusion matrix and are reliable metrics for evaluating binary classification models, particularly in imbalanced datasets. F1 score combines precision and recall to provide a balanced evaluation of a model’s accuracy. MCC considers the overall balance between the four elements of the confusion matrix. The value of MCC ranges from − 1 to 1, where 1 or − 1 indicates the complete agreement or disagreement between predicted classes and actual classes, respectively, and 0 indicates completely random guessing. The result of these metrics for the training and testing datasets across eight ML algorithms was summarized in eTable3. AUROC is a graphical representation of the model’s TP rate (sensitivity/recall) versus the FP rate (1-specificity) at various probability thresholds. AUROC is insensitive to the class distribution and is often preferred over accuracy for imbalanced datasets.

Addressing data imbalance

The imbalance of a case:control dataset can lead to biased model training, where the ML models tends to favor the majority class and underestimate the minority class, resulting in high accuracy by always predicting the majority class. The oversampled data improve ML models for the prediction of minority class when there is a significant unbalanced case–control cohort, as shown in this study (~ 1:12). We performed oversampling (using Synthetic Minority Oversampling Technique, SMOTE, and random oversampling, ROSE) of the minority class during the model training to address the class imbalance and used F1 score and MCC to assess the model performance.

The SMOTE function oversampled the minority class (rare events) using bootstrapping (perc.over = 100) and k-nearest neighbor (k = 5) to synthetically create additional observations of that event and undersampling the majority class (perc.under = 200). For each case in the original dataset belonging to the minority class, perc.over/100 new examples of that class were created. ROSE applied smoothed bootstrapping to draw artificial samples from the feature space around the minority class without undersampling the majority class. We oversampled the minority class to reach a case:control ratio of 1:1 in a training dataset.

Model optimization

Both MyCode and nonMyCode samples were split into training and holdout data(testing) with 7:3 ratio at the beginning of this study. All the models were tuned in training data and tested in holdout data(testing data), as described in Fig. 1. The fivefold repeated CV has been applied to the corresponding training data from either MyCode or nonMyCode cohorts. The nonMyCode samples were considered as an additional dataset to determine the overfitting. However, the genotypes for nonMyCode samples were not available, which is very common for the non-biobank population. That is why we impute the genotype data for nonMyCode samples by simulation to determine the generalizability of the optimal models. A hyperparameter tuning grid was used to train the model with five-fold repeated cross-validation (CV) and ten repeats (R caret package). Model tuning was performed by an automatic grid search for each algorithm parameter randomly (eTable1 for the tuning parameters used in each final model). Finally, the testing set was used to calculate the model AUROC.

We also compared optimal models with the benchmark algorithm glm19,20, and extracted the feature importance of the 12 included variables. The ranks of each variable in feature importance, particularly the genetic variable, were compared across the algorithms. The DeLong test was used to compare AUROCs from two modeling algorithms and compare AUROCs of the best model with or without the genetic feature included21,22. For each model, the AUROC was calculated first, and the 95% confidence interval (95% CI) of AUROC was also computed. The covariance matrix \(Cov\left(\widehat{A}, \widehat{B}\right)\), where \(\widehat{A}\) and \(\widehat{B}\) were the estimated AUROC for models A and B, respectively, of the AUROCs between the two models was calculated. The formula for the covariance between two sample proportions, \(\widehat{A}, and \widehat{B}\), was given by: \(Cov\left(\widehat{A}, \widehat{B}\right)\) = \(\frac{(1-\widehat{A})\widehat{A}}{{n}_{a}}+ \frac{(1-\widehat{B})\widehat{B}}{{n}_{b}}+ \frac{\widehat{B}\widehat{A}}{{n}_{b}{n}_{a}} (\frac{\widehat{A}}{{n}_{a}}+ \frac{\widehat{B}}{{n}_{b}}-1)\), where \({n}_{a} and {n}_{b}\) were the sample sizes for models A and B, respectively. The Z statistic (\(\frac{\widehat{A}-\widehat{B}}{SE(\widehat{A}-\widehat{B})}\)), where \(SE(\widehat{A}-\widehat{B})= \sqrt{Cov(\widehat{A},\widehat{B}})\), and p-value were computed under an assumption of normal distribution. This p-value represented the probability of observing a difference in AUROC as extreme as the one observed in the data, assuming the null hypothesis where there was no difference between the two models.

Results

Patients demographics

The entire dataset was split based on the availability of the genetic data. Demographic and clinical information for MyCode and nonMyCode cohorts are listed in Table 1. The case:control ratio in MyCode(n = 1156/14,148, 1:11.55) was comparable to that in the nonMyCode cohort(n = 4755/54,938, 1:12.24). This ratio (~ 1:12) indicated approximately a ten-fold enrichment for controls as shown in the previously reported EHR-based large populational studies(more than 1:100). Several demographic features (e.g., sex, age) and known clinical risk factors showed significant differences between case and control groups in MyCode and nonMyCode cohort. Their bi-variate association among all predictive variables is illustrated in Fig. 2a and b. Antibiotics were the most significant risk factor for CDI.

Genotype–phenotype association

The Logistic Regression analysis showed a significant association between rs2227306 genotype and CDI only in young MyCode patients (β = 0.138, p = 0.048 vs. β = 0.062, p = 0.263) after controlling for sex. After controlling for age, this nominal association was only observed in females (β = 0.119, p = 0.034 vs β = 0.053, p = 0.427). The minor alleles from both SNPs with the higher expression level of CXCL8 and CXCL6 were associated with an increased risk for CDI.

Comparing oversampling methods to manage the case–control imbalance

SMOTE outperformed ROSE in seven out of eight examined algorithms(Fig. 3). When using xgbDART and gbm models, SMOTE in the training dataset led to better F1 (0.264 and 0.272, respectively) than the ROSE (0.253 and 0.261, respectively) in the testing dataset. The process without resampling provided the worst F1 (0.037 and 0.056, respectively). SMOTE was chosen for the following analyses. Both MCC and F1 scores showed that SMOTE yielded a slightly higher value than ROSE with or without the genetic feature included according to xgbDART and gbm models in the testing dataset (eTable 2).

Comparing the upsampling strategies in the MyCode testing dataset using F1 score as the metric for performance. The SMOTE function oversampled the minority class (a rare event) using bootstrapping (perc.over = 100) and k-nearest neighbor (k = 5) to synthetically create additional observations of that event and undersampling the majority class (perc.under = 200). For each case in the original dataset belonging to the minority class, perc.over/100 new examples of that class will be created. The ROSE function oversampled the minority class without undersampling the majority class. Here we make the case:control ratio in the training dataset equaled to 1:1 for both oversampling strategies. F1 score, the weighted average of Precision and Recall, was selected to determine the performance of oversampling. Summary of the sample sizes for training with or without upsampling (SMOTE or ROSE) and testing dataset stratified by genetic data availability. ROSE upsampling cases (n = 782) to 9931 so that case:control ratio is 1:1 with controls (n = 9931) for the training dataset. SMOTE upsampling cases (n = 782) to 1564 so that case:control ratio is 1:1 with controls (n = 1564) for the training dataset.

Predicting CDI in MyCode patients with or without propensity score matching (PSM)

The best tuning parameters selected for each model based on the largest ROC value from the training dataset were summarized in eTable 1. Among eight algorithms examined, we found that using the 11 clinical risk factors with rs2227306, in conjunction with gbm and xgbDART led to superior results (with the genetic feature, AUROCgbm = 0.72[0.694–0.746] versus AUROCglm = 0.684[0.655–0.713], p = 1.92e−07) (Fig. 2c). There was no significant difference between gbm and xgbDART (AUROCxgbDART = 0.715[0.689–0.742], p = 0.247). The genetic feature was always ranked higher than known risk factors in gbm and xgbDART compared to glm, suggesting non-parametric algorithms can better capture some nonlinear interaction(See the radar plots in Fig. 4). Compared to the base model, the integrated model provided the best discriminative power in the testing dataset, particularly for gbm (AUROC = 0.710 [0.683–0.737] vs. 0.72 [0.694–0.746], p = 0.006). xgbDART showed similar trends, better with the genetic feature, but did not reach a statistical significance (AUROC = 0.710 [0.683–0.736], vs. 0.715 [0.689–0.742], p = 0.304). Both gbm and xgbDART models provided a more balanced sensitivity (0.508 and 0.518 versus 0.481) and specificity (0.792 and 0.796 versus 0.777) and better PPV (0.178 and 0.183 versus 0.161) and NPV (0.948 and 0.949 versus 0.944) when compared to glm. No significant difference between age and sex was observed after the PSM (Table 1, eFigure 2). The algorithms with the best performance after matching (e.g. 1:10 ratio) remained gbm (AUROCgbm = 0.711 [0.681–0.740]) and xgbDART (AUROCxgbDART = 0.701 [0.671–0.731]) with rs2227306 (Fig. 2e). The former showed no statistically significant improvement over the model without rs2227306 (AUROCglm = 0.703 [0.675–0.732]). A similar trend was observed in the matching cohort with a 1:5 ratio (Fig. 2e). Both gbm and xgbDART models ranked the genetic feature the highest in feature importance(Fig. 4 the top two rows), while glm did not. glm also ranked index age and sex lower.

Feature importance for the cohort with or without (simulated) genetic data was plotted for two selected models (gbm and xgbDART), which outperformed other models (glm and nnet). This study was based on 12 features, including one genetic risk factor, rs2227036, from IL8. Feature importance from glm and nnet was always plotted as a control to compare the rank of the features weighted by optimal modeling algorithms (gbm and xgbDART) in MyCode (top two rows) and nonMyCode samples (bottom two rows). The genetic feature was weighted the top tier in gbm and xgbDART but not in glm and nnet irrespective of PSM in the MyCode cohort.

Predictive power in nonMyCode patients

The simulated genotype in the nonMyCode cohort can recapitulate the nominal association between rs2227306 and CDI (Table 2). Consistent with the result from MyCode patients, with the simulated genetic feature included, gbm (AUROCgbm = 0.820 [0.809–0.831]) and xgbDART (AUROCxgbDART = 0.819 [0.808–0.831]) outperformed other modeling algorithms (e.g., AUROCglm = 0.751 [0.737–0.765], p = 1.68e−57) (Fig. 2d). Again, rs2227306 was ranked higher in gbm and xgbDARTthan glm (Fig. 4 the bottom two rows). The result of performance metrics (sensitivity, specificity, PPV, NPV, precision, recall, F1 score, and accuracy) for the prediction of CDI in the training and testing datasets across eight ML algorithms using SMOTE as the oversampling method was summarized in eTable3.

Discussion

We developed a prediction model of symptomatic CDI by integrating common risk factors extracted from electronic health records and genetic risk factors (rs2227306/IL8). Our modeling pipeline included steps to minimize systemic bias in the final models while adhering to best practices to improve model transparency. These steps included (1) applying robust and validated phenotyping, (2) selecting and optimizing a range of ML algorithms with a focus on attributes such as generalizability, interpretability, potential interactions, and bias-variance trade-off, (3) addressing data imbalance and performing extensive simulation studies to determine the predictive power in samples without genetic information, and finally using PSM to control for confounding factors.

Overall, our results supported that decision tree-based Ensemble methods such as gbm and xgbDART demonstrated superior discriminative power than glm(logistic regression)16. Both gbm and xgbDART are based on the boosting algorithm17,18 which is an ensemble technique that sequentially builds multiple weak learners, each focusing on the mistakes of its predecessor and assigning higher weights to misclassified instances to correct them in subsequent iterations. Ensemble methods often work better than individual ML algorithms due to the following reasons17,18: (1) Handing imbalanced data by giving more weight to the minority class and balancing the predictions as shown in this study; (2) Reduced Bias and Variance by combing multiple individual models(weak learners) to create a single and more robust model(strong learners); (3) Complementary learning by leveraging the complementary strengths of individual algorithms with less overfitting and improved generalization; (4) Tackling complex relationships which cannot be effectively captured by a single model; (5) Robustness to noisy data because of the averaging or voting mechanisms which often are less affected by noisy data points and outliers.

The improvements gained by including common genetic variants in the optimal models were limited and age- or sex-dependent after PSM. This finding was consistent with the decreased genetic heritability observed in late-onset compared to early-onset in multiple complex disease traits23, and rs2227306 (IL-8) was related to early-onset of disease24.

Importance of genetic risk factor across modeling algorithms

Feature importance was considered a measure of the individual contribution of the feature for a particular classifier, regardless of the shape or direction of the feature effect. The genetic feature was consistently ranked high in the optimal models, even higher in PSM subgroups. This finding corroborates the clinical value of genetic information in prediction models. The conclusion made from the MyCode sample could partially be extended to the larger nonMyCode sample. The potential interaction between the genetic feature and clinical risk factors beyond age and sex was not recognized and implemented in the genotype simulation, which may prevent the conclusion made from the MyCode sample could fully be extended to the larger nonMyCode sample, leading to more uncertainty of the discriminative power in the model with the simulated genetic feature included. The importance of the genetic feature was ranked lowest in the Logistic Regression-based model, suggesting Logistic Regression may underestimate the contribution of the genetic variant for the prediction of CDI, highlighting the importance of capturing multi-way interactions when assessing the value of common genetic variants with a small effect size in prediction models25.

Clinical relevance of the selected genetic variant from IL-8

Previous candidate gene approach of genetic predisposition of CDI has revealed a promoter polymorphism –rs4073(–251T>A) and its linkage disequilibrium SNP, rs2227306(+ 781T/C), from a pro-inflammatory cytokine, IL-8—can result in increased IL-8 production and predisposes subjects to CDI6, recurrent CDI8, or severity of CDI7. They are both eQTL for a gene cluster located at 4q13.3 (eFigure 1), encoding several members of the CXC chemokine family such as CXCL8 (aka IL-8), CXCL6, CXCL5, CXCL1, and more, which promote the recruitment of neutrophils to the site of infection.

Minor alleles from both SNPs are associated with an increased expression level of CXCL8 (GTEx). Increased IL-8 protein levels and CXCL5 and IL8 message levels have been associated with prolonged disease26. Intrarectal administration of TcdA/TcdB in a mouse model increases the expression of inflammatory mediators such as CXCL1, the murine ortholog of human IL-827. PheWAS analyses from UKBIOBANK (eFigure 1) confirmed that the top phenotypes associated with rs2227306 included some laboratory variables such as “Neutrophil count”, “Neutrophil percentage”, “White blood cell count”, the latter of which contributed to a composite risk score developed in earlier studies in the prediction of CDI severity28,29 (Table 3). Our study is the first to evaluate the integrated model by including the genetic and common clinical risk factors using various optimized ML algorithms to predict CDI.

In the context of other similar studies

Since the testing and treatment are not recommended in asymptomatic carriers of C. difficile1, we direct our focus on symptomatic CDI. The results from this study may eventually facilitate at-risk patient stratification for targeted treatment in patients more likely to benefit from emerging prevention or treatment options such as a vaccine, fidaxomicin, monoclonal antibodies, and fecal microbiota transplantation. Results may also support more granular substratification for therapeutic trials.

Controls were defined as patients without CDI, based on negative molecular laboratory results or exposed to similar risk factors, such as antibiotic use. Because of using the eMERGE phenotype algorithm with the inclusion/exclusion criteria to define controls, our case:control ratio was approximately 1:10, which was a tenfold enrichment of controls with increased risk for CDI, compared to more than 1:100 ratio summarized from other retrospective cohorts studies (Table 3). This enrichment would make the prediction more challenging.

The results from a subgroup with PSM suggested that the contribution of the genetic variant in model prediction was minimum in elderly patients. This finding was consistent with the decreased genetic heritability observed in late-onset compared to early-onset in multiple complex disease traits23, and rs2227306 (IL-8) was related to early-onset of disease24.

As summarized in Table 3, the majority of the predictive models for CDI30,31,32,33,34,35,36 are not based on large EHR or claims databases until recently37,38,39,40,41,42,43, whereas studies performed on recurrence44,45,46,47 or severity28,29,48,49 include very small cohorts. Further, the existing studies do not compare algorithms; instead, they focus on the amount of information extracted necessary for improved prediction. In general, the amount of information, such as the number of variables, correlates with model performance; however, including hundreds of variables can lead to models with lower interpretability and reduced generalizability to other healthcare systems. For example, some institution-specific features can rank in the top tier in feature importance; therefore, a healthcare-based model may have limited discriminative power in the prediction of individuals from other healthcare systems when geographic, social-economic, and clinical management environments differ significantly.

The generalizability of developed prediction models from a single healthcare system to others is debatable37. Since this study aims to utilize only common clinical risk factors readily available in most EHRs to build a prediction model, the conclusion made from this study could have better generalizability and may be easier to implement elsewhere. For these reasons, we propose that this integrated model is more transferable to EHR than complex models with manually curated variables and datasets.

Strength and limitation

The strength of this study lies in the following, (1) development of a prediction model of symptomatic CDI by integration of genetic and common clinical risk factors; (2) evaluation of several advanced ML algorithms to compare their performance; (3) identification of an association between the genetic variant and the outcome variable, which was confounded by age and sex; (4) determination of the value of the genetic feature in its contribution to the model performance, in general, and propensity score matched subgroups; and (5) identification of the selection bias in the cohort with genetic data available.

This study has some limitations, including (1) the accuracy of EHR data collection and recording processes that may vary by clinician, hospital, and over time to possibly prevent the generalizability of developed models to other healthcare systems; (2) our data came from a single healthcare system with a patient population that was predominantly European ancestry. The features selected from this homogenous population may not best represent or cover the complexity of feature space derived from a heterogenous population; (3) we only tested a common genetic variant with a high MAF. We expect the polygenic risk score developed from the consortium-based GWAS with individual effect size estimated from thousands of genetic variants would better represent the genetic liability to CDI and other complex diseases.

In conclusion, we showed that developing robust prediction models for CDI, and perhaps other complex conditions, requires a step-wise approach to ensure the highest level of transparency and lowest possible systemic bias. This study leveraged CDI as a disease model to demonstrate that although genetic information may improve predictions, the benefit of including genetic feature(s) in the prediction models should be thoroughly evaluated.

Data availability

The patient-level EHR data analyzed in this study may be shared with a third party upon execution of data sharing agreement for reasonable requests. Such requests should be addressed to V. Abedi. All the codes can be found at TheDecodeLab/Prediction_of_CDI_by_EHR_and_Genetics (https://github.com/TheDecodeLab/Prediction_of_CDI_by_EHR_and_Genetics).

Abbreviations

- AUROC:

-

Area under the receiver operating characteristic

- Treebag:

-

Bagging for tree

- BMI:

-

Body Mass Index

- CXCL:

-

Chemokine (C–X–C motif) ligand

- C. difficile :

-

Clostridioides difficile

- CDI:

-

Clostridioides difficile Infection

- CV:

-

Cross-validation

- EHR:

-

Electronic health records

- eQTL:

-

Expression quantitative trait loci

- xgbDART:

-

Extreme gradient boosting with the dropout regularization for regression trees

- GWAS:

-

Genome-wide association study

- gbm:

-

Gradient boosting machine

- IBD:

-

Inflammatory bowel disease

- IL-8:

-

Interleukin-8

- ICD:

-

International classification of disease

- ML:

-

Machine learning

- MHC:

-

Major histocompatibility complex

- MAF:

-

Minor allele frequency

- MCC:

-

Matthews correlation coefficient

- nnet:

-

Neural network

- NPV:

-

Negative predictive value

- PheWAS:

-

Phenome-wide association study

- PPV:

-

Positive predictive value

- PPI:

-

Proton-pump inhibitor

- SVM:

-

Support vector machine

- PSM:

-

Propensity score matching

- SMOTE:

-

Synthetic minority oversampling technique

- ROSE:

-

Random oversampling

- T2DM:

-

Type 2 diabetic mellitus

References

Khanna, S. & Pardi, D. S. Clostridium difficile infection: New insights into management. Mayo Clin. Proc. 87, 1106–1117. https://doi.org/10.1016/j.mayocp.2012.07.016 (2012).

Berkell, M. et al. Microbiota-based markers predictive of development of Clostridioides difficile infection. Nat. Commun. 12, 2241. https://doi.org/10.1038/s41467-021-22302-0 (2021).

Li, J. et al. Variants at the MHC region associate with susceptibility to Clostridioides difficile infection: A genome-wide association study using comprehensive electronic health records. Front. Immunol. 12, 638913. https://doi.org/10.3389/fimmu.2021.638913 (2021).

El Feghaly, R. E. et al. Markers of intestinal inflammation, not bacterial burden, correlate with clinical outcomes in Clostridium difficile infection. Clin. Infect. Dis. 56, 1713–1721. https://doi.org/10.1093/cid/cit147 (2013).

Del Valle, D. M. et al. An inflammatory cytokine signature predicts COVID-19 severity and survival. Nat. Med. 26, 1636–1643. https://doi.org/10.1038/s41591-020-1051-9 (2020).

Jiang, Z. D. et al. A common polymorphism in the interleukin 8 gene promoter is associated with Clostridium difficile diarrhea. Am. J. Gastroenterol. 101, 1112–1116. https://doi.org/10.1111/j.1572-0241.2006.00482.x (2006).

Czepiel, J. et al. The presence of IL-8 +781 T/C polymorphism is associated with the parameters of severe Clostridium difficile infection. Microb. Pathog. 114, 281–285. https://doi.org/10.1016/j.micpath.2017.11.066 (2018).

Garey, K. W. et al. A common polymorphism in the interleukin-8 gene promoter is associated with an increased risk for recurrent Clostridium difficile infection. Clin. Infect. Dis. 51, 1406–1410. https://doi.org/10.1086/657398 (2010).

Collins, G. S., Reitsma, J. B., Altman, D. G. & Moons, K. G. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD statement. BMC Med. 13, 1. https://doi.org/10.1186/s12916-014-0241-z (2015).

Carey, D. J. et al. The Geisinger MyCode community health initiative: An electronic health record-linked biobank for precision medicine research. Genet. Med. 18, 906–913. https://doi.org/10.1038/gim.2015.187 (2016).

Abul-Husn, N. S. et al. Genetic identification of familial hypercholesterolemia within a single US health care system. Science 354, 6319. https://doi.org/10.1126/science.aaf7000 (2016).

Dewey, F. E. et al. Distribution and clinical impact of functional variants in 50,726 whole-exome sequences from the DiscovEHR study. Science 354, 6319. https://doi.org/10.1126/science.aaf6814 (2016).

Burnham, C. A. & Carroll, K. C. Diagnosis of Clostridium difficile infection: An ongoing conundrum for clinicians and for clinical laboratories. Clin. Microbiol. Rev. 26, 604–630. https://doi.org/10.1128/CMR.00016-13 (2013).

McDonald, L. C. et al. Clinical practice guidelines for Clostridium difficile infection in adults and children: 2017 update by the infectious diseases society of america (IDSA) and society for healthcare epidemiology of America (SHEA). Clin. Infect. Dis. 66, 987–994. https://doi.org/10.1093/cid/ciy149 (2018).

Yuan, W. et al. Temporal bias in case-control design: Preventing reliable predictions of the future. Nat. Commun. 12, 1107. https://doi.org/10.1038/s41467-021-21390-2 (2021).

Borisov, V. et al. Deep neural networks and tabular data: A survey. IEEE Trans. Neural Netw. Learn. Syst. https://doi.org/10.1109/TNNLS.2022.3229161 (2022).

Zhou, Z.-H. Ensemble methods foundations and algorithms. in Ensemble Methods, 23–95 (2012).

Rokach, L. Chapter 3. Introduction to ensemble learning. in Ensemble Learning Pattern Classification Using Ensemble Methods 2nd Edition, 51–104, https://doi.org/10.1142/9789811201967_0003 (2019).

Abedi, V. et al. Prediction of long-term stroke recurrence using machine learning models. J. Clin. Med. https://doi.org/10.3390/jcm10061286 (2021).

Abedi, V. et al. Predicting short and long-term mortality after acute ischemic stroke using EHR. J. Neurol. Sci. 427, 117560. https://doi.org/10.1016/j.jns.2021.117560 (2021).

DeLong, E. R., DeLong, D. M. & Clarke-Pearson, D. L. Comparing the areas under two or more correlated receiver operating characteristic curves: A nonparametric approach. Biometrics 44, 837–845 (1988).

Sun, X. & Xu, W. Fast implementation of DeLong’s algorithm for comparing the areas under correlated receiver operating characteristic curves. IEEE Signal Process. Lett. 21, 1389–1393. https://doi.org/10.1109/lsp.2014.2337313 (2014).

Mars, N. et al. Polygenic and clinical risk scores and their impact on age at onset and prediction of cardiometabolic diseases and common cancers. Nat. Med. 26, 549–557. https://doi.org/10.1038/s41591-020-0800-0 (2020).

Emonts, M. et al. Polymorphisms in genes controlling inflammation and tissue repair in rheumatoid arthritis: A case control study. BMC Med. Genet. 12, 36. https://doi.org/10.1186/1471-2350-12-36 (2011).

Xu, D. et al. Quantitative disease risk scores from EHR with applications to clinical risk stratification and genetic studies. NPJ Digit. Med. 4, 116. https://doi.org/10.1038/s41746-021-00488-3 (2021).

El Feghaly, R. E., Stauber, J. L., Tarr, P. I. & Haslam, D. B. Intestinal inflammatory biomarkers and outcome in pediatric Clostridium difficile infections. J. Pediatr. 163, 1697–1704. https://doi.org/10.1016/j.jpeds.2013.07.029 (2013).

Hirota, S. A. et al. Intrarectal instillation of Clostridium difficile toxin A triggers colonic inflammation and tissue damage: Development of a novel and efficient mouse model of Clostridium difficile toxin exposure. Infect. Immun. 80, 4474–4484. https://doi.org/10.1128/IAI.00933-12 (2012).

Drew, R. J. & Boyle, B. RUWA scoring system: A novel predictive tool for the identification of patients at high risk for complications from Clostridium difficile infection. J. Hosp. Infect. 71, 93–94. https://doi.org/10.1016/j.jhin.2008.09.020 (2009) (Author reply 94–95).

Lungulescu, O. A., Cao, W., Gatskevich, E., Tlhabano, L. & Stratidis, J. G. CSI: A severity index for Clostridium difficile infection at the time of admission. J. Hosp. Infect. 79, 151–154. https://doi.org/10.1016/j.jhin.2011.04.017 (2011).

Garey, K. W. et al. A clinical risk index for Clostridium difficile infection in hospitalised patients receiving broad-spectrum antibiotics. J. Hosp. Infect. 70, 142–147. https://doi.org/10.1016/j.jhin.2008.06.026 (2008).

Tanner, J., Khan, D., Anthony, D. & Paton, J. Waterlow score to predict patients at risk of developing Clostridium difficile-associated disease. J. Hosp. Infect. 71, 239–244. https://doi.org/10.1016/j.jhin.2008.11.017 (2009).

Dubberke, E. R. et al. Development and validation of a Clostridium difficile infection risk prediction model. Infect. Control Hosp. Epidemiol. 32, 360–366. https://doi.org/10.1086/658944 (2011).

Chandra, S., Thapa, R., Marur, S. & Jani, N. Validation of a clinical prediction scale for hospital-onset Clostridium difficile infection. J. Clin. Gastroenterol. 48, 419–422. https://doi.org/10.1097/MCG.0000000000000012 (2014).

Smith, L. A. et al. Development and validation of a Clostridium difficile risk assessment tool. AACN Adv. Crit. Care 25, 334–346. https://doi.org/10.1097/NCI.0000000000000046 (2014).

van Werkhoven, C. H. et al. Identification of patients at high risk for Clostridium difficile infection: Development and validation of a risk prediction model in hospitalized patients treated with antibiotics. Clin. Microbiol. Infect. 21(786), e781-788. https://doi.org/10.1016/j.cmi.2015.04.005 (2015).

Tilton, C. S. & Johnson, S. W. Development of a risk prediction model for hospital-onset Clostridium difficile infection in patients receiving systemic antibiotics. Am. J. Infect. Control 47, 280–284. https://doi.org/10.1016/j.ajic.2018.08.021 (2019).

Wiens, J., Guttag, J. & Horvitz, E. A study in transfer learning: Leveraging data from multiple hospitals to enhance hospital-specific predictions. J. Am. Med. Inform. Assoc. 21, 699–706. https://doi.org/10.1136/amiajnl-2013-002162 (2014).

Wiens, J., Campbell, W. N., Franklin, E. S., Guttag, J. V. & Horvitz, E. Learning data-driven patient risk stratification models for Clostridium difficile. Open Forum Infect. Dis. 1, 045. https://doi.org/10.1093/ofid/ofu045 (2014).

Baggs, J. et al. Identification of population at risk for future Clostridium difficile infection following hospital discharge to be targeted for vaccine trials. Vaccine 33, 6241–6249. https://doi.org/10.1016/j.vaccine.2015.09.078 (2015).

Press, A. et al. Developing a clinical prediction rule for first hospital-onset Clostridium difficile infections: A retrospective observational study. Infect. Control Hosp. Epidemiol. 37, 896–900. https://doi.org/10.1017/ice.2016.97 (2016).

Zilberberg, M. D., Shorr, A. F., Wang, L., Baser, O. & Yu, H. Development and validation of a risk score for Clostridium difficile infection in medicare beneficiaries: A population-based cohort study. J. Am. Geriatr. Soc. 64, 1690–1695. https://doi.org/10.1111/jgs.14236 (2016).

Oh, J. et al. A generalizable, data-driven approach to predict daily risk of Clostridium difficile infection at two large academic health centers. Infect. Control Hosp. Epidemiol. 39, 425–433. https://doi.org/10.1017/ice.2018.16 (2018).

Aukes, L. et al. A risk score to predict clostridioides difficile infection. Open Forum Infect. Dis. 8, 052. https://doi.org/10.1093/ofid/ofab052 (2021).

Hebert, C., Du, H., Peterson, L. R. & Robicsek, A. Electronic health record-based detection of risk factors for Clostridium difficile infection relapse. Infect. Control Hosp. Epidemiol. 34, 407–414. https://doi.org/10.1086/669864 (2013).

LaBarbera, F. D., Nikiforov, I., Parvathenani, A., Pramil, V. & Gorrepati, S. A prediction model for Clostridium difficile recurrence. J. Community Hosp. Intern. Med. Perspect. 5, 26033. https://doi.org/10.3402/jchimp.v5.26033 (2015).

Escobar, G. J. et al. Prediction of recurrent Clostridium difficile infection using comprehensive electronic medical records in an integrated healthcare delivery system. Infect. Control Hosp. Epidemiol. 38, 1196–1203. https://doi.org/10.1017/ice.2017.176 (2017).

Cobo, J. et al. Prediction of recurrent clostridium difficile infection at the bedside: The GEIH-CDI score. Int. J. Antimicrob. Agents 51, 393–398. https://doi.org/10.1016/j.ijantimicag.2017.09.010 (2018).

Na, X. et al. A multi-center prospective derivation and validation of a clinical prediction tool for severe Clostridium difficile infection. PLoS ONE 10, e0123405. https://doi.org/10.1371/journal.pone.0123405 (2015).

Li, B. Y., Oh, J., Young, V. B., Rao, K. & Wiens, J. Using machine learning and the electronic health record to predict complicated Clostridium difficile infection. Open Forum Infect. Dis. 6, 186. https://doi.org/10.1093/ofid/ofz186 (2019).

Origuen, J. et al. Toxin B PCR amplification cycle threshold adds little to clinical variables for predicting outcomes in Clostridium difficile infection: A retrospective cohort study. J. Clin. Microbiol. 57, 18. https://doi.org/10.1128/JCM.01125-18 (2019).

Acknowledgements

We would like to extend our thanks to Drs. Brodginski, Alison M; Martinez, Raquel M; Olson, Jordan; Kreis, Steve; and Heiter, Barb J. for clinical data validation.

Author information

Authors and Affiliations

Contributions

Study conception and design: J.L. and V.A. Data extraction and systematic literature search: J.L. D.C., V.S., V.S. Code development: J.L. Analysis of results: J.L. Data preparation and pre-processing: J.L. and V.A.; Interpretation of the findings: J.L. and V.A. Preparation of the first draft of the manuscript: J.L. and V.A. Critically evaluated the manuscript: D.C., P.S., D.M.W., R.Z., and V.A. All authors reviewed the results and approved the final version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, J., Chaudhary, D., Sharma, V. et al. An integrated pipeline for prediction of Clostridioides difficile infection. Sci Rep 13, 16532 (2023). https://doi.org/10.1038/s41598-023-41753-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-41753-7

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.