Abstract

Self-related visual information, especially one’s own face and name, are processed in a specific, prioritized way. However, the spatio-temporal brain dynamics of self-prioritization have remained elusive. Moreover, it has been unclear whether this prioritization is an effect of enhancement and amplification, or rather a facilitating automatization of processing self-referential information. In this EEG study, 25 married women (who changed their surnames after marriage, so that their past and present surnames could be used as stimuli) performed a detection task with faces and names from five categories: self, self from the past, friend, famous, and unknown person. The aim was to determine the temporal and spatial characteristics of early electrophysiological markers of self-referential processing. We report results of event-related component (ERP) and time–frequency analyses. In the ERPs, the earliest self-relevance effect was displayed only 300 ms after stimulus onset in the midfrontal N2, and later in the parietal P3b, independently of the stimulus type. No self-relevance effect was found on the N170 component. However, local theta power at the occipito-temporal (visual) areas and inter-regional theta phase coherence between the visual and midfrontal areas showed that self-relevance differentiation of faces began already about 100–300 ms after stimulus onset. No such early effects were found for names. The results are discussed in terms of the time-course, functional localization, stimulus-specificity, and automatization of self-prioritization.

Similar content being viewed by others

Introduction

Everyone knows from their own experience that self-related information has a sort of special priority. The effects of self-prioritization have been observed in various contexts and situations. Hearing one’s own name or seeing one’s own face capture and hold attention1,2,3,4; it is difficult to ignore personally relevant stimuli5,6,7, and self-related information or traits are remembered better8,9,10,11,12,13. Regardless of the functional specificity of those effects, the prioritization of self-related information is usually assumed to be quick and automatic14,15,16. For instance, looking at one's own name has been claimed to capture one’s attention automatically, unintentionally, and preconsciously1. This seems to imply that: (a) self-prioritization occurs early in the time-course of processing self-related information, thus its effects should be observed in modulations of early components of the electroencephalogram (EEG); (b) self-prioritization occurs already at the perceptual levels of processing, thus its effects should be observed already in the activity of sensory areas. Furthermore, it is often assumed that self-prioritization entails enhancement and amplification of processing self-related information17,18,19. However, neither of the above has been unequivocally supported by available empirical evidence. In this study, we attempt to address those issues in terms of visual perception of the most self-related stimuli: our own face and our own name.

When does self-prioritization occur?

Several studies observed the first differentiation between self- and other-faces at about 170 ms after stimulus onset. Self-faces evoked a larger amplitude of the N170 component of the event-related potential (ERP)20,21,22,23,24. The N170 is considered as the earliest marker of formation and activation of structural face representations25,26, thus the results would suggest that self-prioritization begins already along with this first stage of face processing. However, no self-face effect on the N170 component was reported even more often27,28,29,30,31,32,33,34,35,36,37. It is worth noticing that the effects on the N170 were more frequently observed for familiar faces than for the self faces, but according to a recent review by Caharel and Rossion38, even this familiarity effect was found only in about half of the analyzed N170 studies.

In contrast, a number of EEG studies showed the earliest difference between self and other faces only at about 250 ms after stimulus onset. It was usually observed as a negative deflection of the ERP over the inferior temporal sites, called N25030,31,33,36,39. This N250 effect is assumed to reflect activation of the face recognition units40, and is sensitive to identity-specific representations of known faces41. The effect of N250 for self- and familiar specificity was found also for names42 and owned objects43. The latency of about 250 ms may be considered as relatively late effect—when compared to the results showing the first effects of face-specific neural processing at about 100 ms44,45,46,47, and evidence for categorical perception of facial emotional expressions occurring as early as 90–140 ms after stimulus onset48,49,50. Some studies suggest the N250 depends on the level of familiarity, therefore reaches the highest amplitude for self-face27,36. The available results are, however, not entirely consistent with the assumption of a quick and early, presumably pre-conscious, self-prioritization.

Where does an early self-prioritization occur?

In several studies on the visual self-relevance, the first ERP self-effects were found in the occipito-temporal visual areas. This includes some of the aforementioned studies showing the self-face effect on the N17021,22,23,24—the component localized in the fusiform face area51,52. Similarly, the self-face effect on the N250 has been observed at occipito-temporal or occipito-parietal sites27,30,31,33,36,42. On the other hand, many studies have found the first signs of the self-face discrimination outside the visual areas; either as a decrease of the midfrontal N235,53 or centro-temporal P228 (for effects in occipito-temporal P2 see54; and frontal P2 see27), or as an increase of the parietal P3b3,29,30,34,36,55,56,57. Finally, several studies have found the first effects of self-prioritization occurring at both sensory and frontal areas21,22,23. In conclusion, the available literature seems to show three patterns of the functional anatomy of the early effects of self-prioritization: either (i) self-related processing is facilitated already in the sensory (visual) areas, or (ii) it is prioritized over fronto-central areas, or (iii) both. It remains unclear what factors could explain this discrepancy. Given the discrepant evidence, we cannot draw any firm conclusion on the spatio-temporal dynamics of the identification and processing of self-related sensory information.

Is it amplification or automatization?

Another issue concerns the nature of the self-prioritization, which is most often accounted as an enhancement or amplification of the self-related information processing. A number of studies have shown that amplitude of the P3b component (centro-parietal P300) of the ERP increases in response to self-relevance, irrespectively to stimulus type. Such P3b effect was observed for self-faces3,29,30,34,36,37,55,56,57,58,59, as well as for visually presented own name3,56,60,61,62 or online nickname63, autobiographical information18, and trait adjectives evaluation64,65. The self-related increase of the P3b has usually been interpreted as an enhancement of neural processing, presumably related to increased allocation of attention. However, such interpretation may be questioned. First of all, there is still an ongoing debate on the functional role of the P3b component66,67,68,69,70. Secondly, an increase of the P3b was also observed along with a decrease in attentional demands (see e.g., Refs.71,72,73,74,75), which shows that the P3b can be decreased when an enhanced involvement of task-related processes is entailed by increased task demands.

Less ambiguous for interpretation are the aforementioned self-related modulations of the N170, N250, and N2. More ambiguous, however, seem to be the actual results. While in some studies the N170 and N250 were found to be larger in response to self-face or self-name21,22,24,33,42,76; others reported a decrease of the N250 in response to self-relevance27,30,31,36,43. Some studies showed significant self-related enhancement of the N170 and at the same time self-related decrease of the P221,23, while others did not find the effect in the N170 yet still showed the P2 decrease at occipito-temporal sites27,54. The midfrontal N2, in turn, was found to have the largest amplitude in response to written own name60. However, a decrease of the midfrontal N2 amplitude was observed in response to self-faces, compared to other, familiar and unfamiliar faces23,34,35. The N2 amplitude was also decreased when arbitrary faces were assigned and recognized as own53. Thus, the self-related effects of the N2 are even less conforming to the enhancement hypothesis.

Larger amplitudes of the visual-evoked potentials are usually interpreted as an increased involvement of the processes reflected in these ERPs (e.g., Ref.77). An increase of the midfrontal N2 often reflects a stronger involvement of some forms of executive control78. It is therefore plausible that the N2 decrease reflects a reduced need for control in terms of e.g., allocation of attentional resources27,53 onto processing self-related information, or accessing self-related memories33. Taken together, the findings of decreased ERP amplitudes in the self-referential conditions suggest that, at least in some cases, self-prioritization may not entail enhancement and amplification. Rather, it may be an effect of perceptual facilitation attained through a less effortful and more automatic processing of self-related information. To complicate the issue even further, self-prioritization might involve both the enhancement and automatization; the two effects could occur at different stages or levels of processing.

Are the effects of self-prioritization stimulus-specific?

The effects of early self-prioritization may be either stimulus-specific or independent of the information type. Faces are biologically significant and ecologically-salient stimuli79, whereas names/words are learned symbols. Face-specific processing begins by activating the ventral fusiform face area (FFA), predominantly in the right hemisphere, whereas written words—the occipito-temporal visual word form area (VWFA) in the left hemisphere. The structural characteristics and complexity of faces and words differ, thus, their processing may involve amplification and automatization at different stages and levels of processing80,81,82,83. The available results show that all three discussed above issues related to self-prioritization—the time-course, functional localization, and character of the effects (amplification or facilitation)—may differ significantly depending on the stimulus type. However, since the results are not consistent, it remains unclear whether—and if so, then how exactly—the self-face and self-name effects differ in terms of their time-courses and functional anatomy.

This study

In this study, we examined self-related modulations of the EEG data recorded during a simple visual detection task in an experiment by Kotlewska and Nowicka56. The stimuli were faces and names from five categories: current self, self from the past, friend, famous, and unknown. Thus, self-relevance was graded from most (current self-face/name) to least (unknown face/name) self-relevant. While the earlier study56 was focused specifically on similarities between the present and past selves, in the current study we examined the EEG data in terms of the spatiotemporal neural dynamics underlying self-relevance differentiation at early stages of visual processing. The aim was twofold: (a) to determine general and stimulus-specific spatio-temporal changes in electrophysiological brain activity related to the increasing self-relevance; and (b) to interpret and discuss the findings in relation to the four issues introduced above: the time-course, functional localization, nature (amplification or facilitation), and stimulus-specificity of the self-prioritization effects.

Following the previous studies reporting the effects of self-face and self-name processing, we analyzed the N170, N2, and P3b components of the ERPs. However, our main focus was on the local and inter-regional time–frequency activity in the theta-band. While the ERP analysis is an essential tool for investigating the time-course of stimulus-related processes, it reveals only a fraction of the activity of interest. The time–frequency analysis allows to detect band-specific spectral dynamics of local brain activity as well as of long-distance functional connectivity84,85. As the ERPs do not give a coherent picture of precise spatio-temporal characteristics of self-prioritization, the oscillatory activity may reveal some missing parts of the puzzle. For instance, a stronger theta activity over midfrontal areas was reported for familiar and famous faces than unknown ones86, and for self-relevant personally familiar face (participants’ grandmother) than for unknown face87. Theta oscillations were also found to differentiate upright and inverted faces and bodies88. Also, while theta activity did not differentiate spoken own-name when it was task-irrelevant89,90, a larger left-lateralized theta synchronization was observed when the own-name was task-relevant90. Most importantly, Miyakoshi and colleagues31 showed a smaller increase of local theta-band phase coherence in the fusiform area in response to self-face.

Methods

Participants

Twenty-five females participated in the study (mean age 35.7; SD 8.7). All participants had normal or corrected-to-normal vision, reported normal color vision and no history of neurological disorders. All were paid for their participation. Only married woman who have changed their surnames after marriage were recruited, so that their current and past surnames could be used in the 'present self' and 'past self' task conditions, respectively. The required minimal length of marriage was three years, and it varied from 3 to 37 years (mean 9.64; SD 8.86). The experimental protocol was approved by the Human Ethics Committee of the SWPS University of Social Sciences and Humanities (Warsaw, Poland). The study was carried out in accordance with the guidelines and regulations of the Declaration of Helsinki, and written informed consent was obtained from each participant before the experiment.

Stimuli and procedure

Participants performed a simple visual detection task. One stimulus was presented in each trial of the task and participants responded by pressing a dedicated response button regardless of the stimulus type. The stimuli were faces and names from five categories: (1) present participants' marital names and current photos of their faces, (2) participants' family names and photos of their faces from the past, (3) names and faces of participants' close friends, (4) names and faces of famous persons (celebrities), and (5) names and faces of persons unknown to participants. Before the experiment, participants delivered photographs presenting their current face, photo of their face taken just before marriage, and the current photo of a female close friend. One restriction was made on the choice of the friend: first and last names (past or present) of the participant and the friend had to differ. All names were of Polish origin. Mean length of names was 15 letters. The length did not differ significantly between the categories (for details see56). The stimulus set was tailored individually for each participant. Famous and unknown names were selected to match the length of first and last name, and faces of famous and unknown persons (downloaded from the Internet) were matched to the age and appearance of the participants. Before the experiment, each participant confirmed whether she was familiar with the famous and unknown people selected for their stimulus set.

Pictures of faces were rendered grayscale. The faces were extracted from their backgrounds. Picture luminance was matched to the color statistics of a single picture. One image of face was shown per category. In the name condition, stimuli consisted of first and last names (further referred to as “names”), and were written with white letters. The stimuli were presented on a black background. The stimulus size ranged from about 2 × 3° to 2 × 6° for names, and 5 × 4° to 5 × 6° for faces. The stimuli were displayed centrally, at fixation, on a 19’ computer monitor.

Participants were asked to respond to each stimulus as quickly as possible by pressing always the same button on a response pad (Cedrus, San Pedro, CA, USA). The instructions were introduced twice: first verbally by the experimenter and then displayed on the monitor before the experiment started. Subjects had to confirm their understanding of the instruction by pressing the appropriate button.

The stimulus presentation procedure is shown in Fig. 1 (the example picture is a photograph of one of the authors). Each trial consisted of the following sequence of events: a blank screen displayed for 1 s, a fixation cross (white cross) displayed for 1 s, and the target stimulus displayed until the response. A new trial began automatically after the response, or after 3 s if the participant did not respond. The stimulus sequence was pseudo-randomized so that no more than three stimuli of the same type or the same condition were presented consecutively. Stimulus of each type and category was repeated 30 times. The task lasted approximately 15 min. The task was performed in an acoustically and electrically shielded room.

EEG recording and preprocessing

EEG was recorded from 62 scalp sites using Brain Products Quick Amp amplifier and BrainVision Recorder® software (Brain Products, Gilching, Germany). Ag–AgCl electrodes were mounted on an elastic cap (ActiCAP, Munich, Germany) and positioned according to the extended 10–20 system. Electrode impedance was kept below 5 kΩ. The EEG was recorded against an average of all channels calculated by the amplifier hardware. The sampling rate was 500 Hz. Off-line EEG data preprocessing was done using BrainVision Analyzer® software (version 2, Brain Products, Gilching, Germany). Data were re-referenced to the mean signal from earlobes, filtered with a 0.1–80 Hz band-pass and a 50 Hz band-rejection filter (Butterworth zero-phase filters, attenuation of 12 dB/octave), and split into segments beginning at− 800 ms and ending at 1000 ms relative to target onset. Segmented data were baselined to the first 200 ms before target onset (i.e., − 200 to 0 ms). Ocular artifacts were corrected by means of independent component analysis (ICA). One ICA component representing blinks was rejected per participant. Next, after resetting the baseline, the Analyzer’s semi-automatic artifact rejection method was applied. The maximum permitted voltage step per sampling point was 50 μV, the maximum permitted absolute difference between two values in the segment was 200 μV, and the lowest permitted activity in the 100 ms interval was 0.5 μV. The overall average of segments that passed the artifact rejection was 98% (range 94–100%) The averages of accepted segments in the name and face conditions, respectively, were: 98 and 97% for present self, 98 and 97% for past self, 98 and 97% for friend, 97 and 97% for famous, 97 and 97% for unknown.

Event-related potential (ERP) analysis

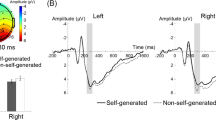

Before the ERP analysis, artifact-free segments were averaged over each experimental condition per participant. The ERPs of interest—N170, midfrontal N2, and P3b—have all been well-established in the literature, both empirically and theoretically, thus the electrode sites for the ERP analysis were selected a priori. Data inspection confirmed that the ERPs had the expected topographies with amplitude peaks at the selected sites (see Fig. 2). The ERP time-windows were determined using a collapsed localizer approach. i.e., on the waveforms averaged across the relevant experimental conditions (cf. Ref.91). All the analyzed ERPs formed regular waveforms with well-defined peaks within the selected time-windows (see Fig. 2). The amplitudes of the ERPs were measured as a mean amplitude in a given time-window. The amplitudes of the visual-evoked N170 component were measured from the occipito-temporal sites PO7 and PO8 (cf. Ref.92). The latencies of face-evoked N170 were about 10 ms longer than of the name-evoked N170 (see Fig. 2), thus, to avoid any between-condition artifacts of mismatch between the peaks, we measured the N170 in a 150–190 ms window for faces, and 140–180 ms for names. (We compared the obtained statistical results with the analysis of one time-window for each condition, 140–190 ms, and found the same pattern of effects.) The midfrontal N2 component was measured at FCz (cf. Ref.23) as mean amplitude 250–350 ms after target onset, relative to its preceding P2 component measured within 180–220 ms (the N2 was assessed peak-to-peak to nullify potential impact of between-condition variance in the preceding P2, cf. Refs.93,94). The P3b component was measured at Pz (cf. Ref.67) as mean voltage 350–550 ms after target onset.

Event-related potentials (ERPs). Panel (A) shows grand averages of visual evoked potentials (VEPs) from occipito-temporal areas in the left and right hemisphere (PO7 and PO8) for faces (left panel) and names (right panel). The head maps show topographies of the N170 component. The data are averaged across the Self Relevance conditions because the effects of this factor on N170 amplitudes were not significant. The depicted N1 results show typical hemispheric lateralization of face and name processing. Panels (B) and (C) show grand averages of the ERPs from FCz and Pz, respectively. The data are averaged across the Stimulus Type conditions because Self Relevance effects did not differ significantly between faces and names. The head maps show topographies of the midfrontal N2 (B) and centro-parietal P3b (C) for the five Self Relevance conditions, also averaged across the Stimulus Type conditions. Colors of frames denote the conditions of Self Relevance. The N2 topography data were high-pass filtered above 2 Hz to remove the slow parietal positivity that otherwise would mask the N2 peak. The depicted results show that the stimulus self relevance decreased the N2 amplitudes and increased the P3b amplitudes. Stimulus Type did not interact with these Self Relevance effects. For all panels: Negative voltage points upward. Time-point zero is stimulus onset. The gray areas indicate the time windows in which the ERP components were measured for statistical analysis. The head maps depict topographies of the ERP components selected by the gray areas. The maps are min–max scaled, with positive polarity in red, negative polarity in blue. The head view is from above.

EEG power and phase decomposition

The segmented artifact-free data was decomposed into time–frequency representations using custom-written MATLAB (The MathWorks, Natick, MA, USA) scripts based on published code84,95. To obtain power and phase values, the single-trial data was convoluted via complex Morlet wavelets defined as complex sine waves tapered by a Gaussian. The power spectrum of the EEG was multiplied by the spectrum of complex Morlet wavelets with the frequencies of the wavelets increased logarithmically from 1 to 40 Hz in 40 steps. The number of wavelet cycles in the Gaussian that tapers the sine waves was increased logarithmically from 3 to 8. This allowed to obtain an optimal tradeoff between temporal and frequency resolutions96.

The obtained power values (µV2) were baseline-corrected as a percentage change relative to the pre-stimulus baseline at each frequency band. The baseline was computed as the average power across all experimental conditions, from − 400 to − 100 ms before target onset. For statistical evaluations of target-related theta modulations, power values from relevant sites (PO7 and PO8 for analysis of visual activity; FCz, Fz, and AFz for analysis of midfrontal activity) were averaged over a time–frequency window of 100–300 ms and 5–7 Hz. This theta window was specified based on previous studies and confirmed after inspection of the data. More detailed descriptions of the time–frequency decomposition method (including the algorithm details) can be found in our previous articles72,73,94.

Multivariate spatio-spectral decomposition

To estimate the global topology of the task-related theta activity, we used the method of generalized eigenvalue decomposition (GED97,98,99; also referred to as joint decorrelation100). The GED is a feature-guided multivariate source separation technique that contrasts and maximally separates two covariance matrices of two a priori-specified data-features of interest. The first matrix is the channel (electrode) covariance of the relevant signal. Because our aim here was to isolate theta activity, the signal matrix (S) was derived from the data narrow band-filtered in the theta band98,101. The filter was centered at 5 Hz, and the range of the Gaussian spectral full-width at half-maximum (FWHM) was 3 Hz. The second matrix is the channel covariance of the reference data. Thus, our reference matrix (R) was derived from the broadband (unfiltered) EEG. Both matrices were computed for the entire post-target epoch. The covariance matrices were calculated for each participant, separately for the face and name conditions. To avoid potential stimulus-evoked transient artifacts, the phase-locked part of the signal was removed from the single-trial data prior to the GED analyses102. Next, the GED was applied to the matrices, which yielded a set of 62 spatial components (each being a weighted combination of all 62 channels) of the theta-band signal for each subject per condition. The components are specified by their eigenvalues that show the S/R ratio indicating the importance of each component, and eigenvectors that provide the parameters of the spatial filters, i.e., the sensor weights constituting each spatial component.

In the next step, we determined which of the obtained components were significantly contributing to the overall stimulus-related theta signal97. To this end, we used a non-parametric permutation testing method, which was a modified version of a parallel analysis method101. According to Hayton et al.103, parallel analysis is one of the most accurate methods of factor retention in exploratory factor analysis. Zuure et al.101 utilized this method for thresholding the GED components. First, theta-filtered and broadband signals were randomly assigned to the S and R matrices and submitted to the GED, which generated a random distribution of eigenvalues. One thousand iterations of the randomization were performed, and 62 eigenvalues extracted from the randomly swapped data at each iteration. After all iterations, the eigenvalues above the 95th percentile of the explained variance were selected from the full set of eigenvalues (62 × 1000) to create a random dataset (a null hypothesis distribution). This was done for each subject. The resulting datasets were averaged to obtain the group-level distribution, and compared against the eigenvalues of theta components obtained from the GED. Any component with eigenvalue greater than the maximal eigenvalue generated for the random distribution was considered significant. At the group level, three components with the highest eigenvalues exceeded the retention threshold (α = 0.05) and were selected for further analysis.

The obtained eigenvectors were used to construct spatial topographies of the three significant theta components. The component spatial representations were computed as the eigenvector multiplied by the S covariance matrix104. It is assumed here that the component topographies represent the underlying EEG sources projecting the signal onto the electrodes97. (Note however that the GED components do not indicate neural sources in the sense of delineating the exact functional anatomy.) Lastly, for further inspection, component-specific time-series data (the component eigenvector multiplied by the single-subject EEG signal) was decomposed into its time–frequency representation through convolution with complex Morlet wavelets, as previously described for sensor-data analysis.

Inter-site phase coherence (ISPC)

To estimate theta-band connectivity between the midfrontal and visual areas (indicated in the GED analysis), the obtained phase angles (derived from the complex wavelet convolution analysis, as described above) were used to compute inter-site phase coherence (ISPC)105,106. (This measure is also referred to as: inter-site phase connectivity, inter-site phase clustering, inter-channel phase synchrony or ICPS, and inter-site phase-locking value or PLV.) Before the phase decomposition for ISPC analysis, the surface Laplacian filter107,108 (also called current source density or current scalp density) was applied to the single-trial data. We used a 10th-order Legendre polynomial, and lambda was set at 1e−5. The Laplacian is a spatial filtering method that increases spatial selectivity and attenuates volume conduction confounds, allowing effective assessments of long-distance phase-based connectivity107,109. ISPC estimates the consistency between band-specific phase angle values at two sites or areas of activity. It is computed as the length of the mean vector of differences between a distribution of phase angle differences over trials, at each time–frequency point. The ISPC value varies from 0 to 1, where 0 indicates no phase synchrony between two sites, i.e., randomly distributed phases, and 1 indicates identical phase values at two sites, i.e., a perfect inter-site phase coherence. The obtained ISPC values were baseline-corrected as a percentage change relative to the pre-stimulus baseline, using the same method and the same baseline time-window as for power analysis. For statistical analysis, ISPC between the FCz site (used as “seed”) and the posterior PO7 and PO8 sites was averaged over a time–frequency window of 50–400 ms and 4–8 Hz—specified after inspection of the condition-averaged time–frequency results.

Inferential statistics

To determine both main effects and interactions between the experimental factors, all depended variables were analyzed by means of repeated-measures ANOVAs. The behavioral data were submitted into a 2 × 5 repeated measures ANOVA with Stimulus Type (faces, names) and Self Relevance (present self, past self, friend, famous, unknown) as within-subject factors. The N2 and P3b components of the ERP were analyzed using the same ANOVA design as for the behavioral data. The N170 component and theta-band activity (both occipito-temporal local power and ISPC) were analyzed using a 2 × 5 × 2 repeated measures ANOVA with Stimulus Type (faces, names), Self Relevance (present self, past self, friend, famous, unknown), and Hemisphere (left, right) as within-subject factors. When the Stimulus Type × Self Relevance interaction was significant, further analyses of Self Relevance effects were carried out separately for faces and names. The Greenhouse–Geisser correction was applied when the factor Self Relevance had more than one degree of freedom in the numerator to adjust for violations of sphericity. When the main effect of Self Relevance was significant, pairwise comparisons between the Self Relevance conditions were performed. The Bonferroni correction for multiple comparisons was applied to all pairwise tests. Statistical analyses were done in IBM SPSS Statistics 28.

Results

The RT, P2, and P3b results from this experiment have been reported previously in Kotlewska and Nowicka56. The data were reprocessed and reanalyzed for the purpose of this study.

Behavioral results

The overall mean RT was 408 ms (SD = 257 ms). Stimulus Type and Self Relevance did not affect the RTs, F ≤ 2.00, p ≥ 0.10.

Event-related potentials (ERPs)

Occipito-temporal N170

Figure 2A depicts grand averages of the ERPs from the PO7 and PO8 sites, separately for the two Stimulus Type conditions. The figure shows an opposite hemispheric lateralization of the N170 amplitudes for faces (left panel) and names (right panel), indicated by the interaction between Stimulus Type and Hemisphere, F1,24 = 42.68, p < 0.001, ηp2 = 0.64. As expected, names evoked a larger N170 in the left hemisphere (PO7) than in the right hemisphere (PO8), F1,24 = 29.02, p < 0.001, ηp2 = 0.55; whereas the N170 evoked by faces tended to be larger in the right hemisphere than in the left, F1,24 = 4.14, p = 0.053, ηp2 = 0.15. Importantly, Self Relevance did not significantly affect the N170 amplitudes. In the face condition, the main effect of Self Relevance did not reach the level of significance, F4,96 = 2.23, p = 0.084, ηp2 = 0.09 (Self × Hemisphere: F < 1.0, n.s.), and the pairwise comparisons showed no significant differences between the five Self categories, p’s ≥ 0.35. In the name condition, the Self Relevance effect was far from being significant, F < 1.0, n.s. The Self × Stimulus Type interaction was also not significant F < 1.0.

Midfrontal N2

Grand-average ERP waveforms from FCz are shown in Fig. 2B. The N2 peaked around 300 ms after stimulus onset, and was larger for faces than names, F1,24 = 12.54, p = 0.002, ηp2 = 0.34. Importantly, the main effect of Self Relevance was significant, F4,96 = 5.92, p < 0.001, ηp2 = 0.20. Moreover, the Stimulus Type × Self Relevance interaction was not significant, F4,96 = 1.97, p = 0.12, ηp2 = 0.08, indicating that the Self Relevance effect on the midfrontal N2 did not differ significantly between faces and names. The N2 amplitudes increased from smallest for present self, to larger for past self, intermediate for friend, and to largest for the famous and unknown faces; linear trend: F1,24 = 27.97 p < 0.001, ηp2 = 0.54. Pairwise comparisons showed significant differences between present self and famous, p = 0.002, and between present self and unknown, p < 0.001.

We also quantified the positive peak that preceded the N2, i.e., P2 occurring at about 200 ms, and found no significant effects of Self Relevance, F ≤ 1.61, p ≥ 0.20 (cf. Ref.56).

Centro-parietal P3b

The grand-average ERPs from Pz are depicted in Fig. 2C. The P3b component peaked between 350–550 ms. Its amplitude was generally larger for faces than names, F1,24 = 37.03, p < 0.001, ηp2 = 0.61. Importantly, Self Relevance modulated the P3b amplitude, F4,96 = 16.25, p < 0.001, ηp2 = 0.40, in the direction opposite to the N2 effect. The P3b amplitude decreased from largest for self-relevant categories (present and past self), to intermediate for friend, and to smallest for famous and unknown categories; linear trend: F1,24 = 30.31 p < 0.001, ηp2 = 0.56. As for the midfrontal N2, the interaction between Stimulus Type and Self Relevance was not significant, F = 1.0, indicating similar processing of Self Relevance in the centro-parietal area for faces and names. Pairwise comparisons showed that both the present and past self-face-evoked P3b were significantly larger than the P3b in the other three categories: self vs. friend, p’s ≤ 0.005, self vs. famous, p’s < 0.001, and self vs. unknown, p’s ≤ 0.002. See Table 1 for the estimated amplitudes of the ERP components for each experimental condition with standard error of the mean and confidence intervals.

Occipito-temporal theta power

Figure 3 shows EEG power from the sites PO7 and PO8, separately for faces (upper panel) and names (lower panel). The time–frequency maps (on the left) depict power spectra averaged over the PO7/8 electrodes and Self Relevance conditions, along with topographies of theta-band power. The graphs (in the middle) and head maps (on the right) show grand averages and topographies of theta power for each condition of Self Relevance.

Stimulus-related modulations of EEG power at the occipito-temporal sites PO7 and PO8 in the face (A) and name conditions (B). The time–frequency plots on the left depict power spectra averaged over the Self Relevance conditions and PO7/8 sites, along with topographies for the relevant time–frequency window. The charts in the middle show grand averages of theta power over time for each task condition. The head maps on the right show topographies of theta power for the analyzed time–frequency window. The colors of lines and frames denote Self Relevance conditions. The depicted results show that the increasing self-relevance of faces, but not names, attenuated the stimulus-related increase of the occipito-temporal theta power.

As seen in the figure, there was a prominent burst of theta power over the occipito-temporal sites at about 100–300 ms after stimulus onset. Expectedly, as in the visual-evoked potentials, there was a significant interaction between Stimulus Type and Hemisphere, F1,24 = 21.70, p < 0.001, ηp2 = 0.48, indicating a right-lateralization for faces, and a reversed asymmetry for names. The main effects of Stimulus Type and Hemisphere were insignificant, Fs ≤ 1.0. Most importantly, the main effect of Self Relevance was significant, F4,96 = 4.40, p = 0.006, ηp2 = 0.16, and so was its interaction with Stimulus Type, F4,96 = 3.78, p = 0.012, ηp2 = 0.14. The following analysis of Self Relevance effects were therefore conducted separately for faces and names.

In the face condition, the main effect of Self Relevance was significant, F4,96 = 6.53, p < 0.001, ηp2 = 0.21. Theta power increased from smallest for present self to largest for the famous and unknown categories. This linear trend was significant, F1,24 = 14.14, p < 0.001, ηp2 = 0.37. The interaction of Self Relevance and Hemisphere was not significant, F < 1.0. Pairwise comparisons showed that present self-elicited significantly lower posterior theta power compared to famous face, p = 0.005, and to unknown face, p = 0.042. The past self differed significantly from famous face, p = 0.037. A marginal difference was found between friend’s face and unknown face, p = 0.053. No significant difference was found between the two self categories (present vs. past, n.s.).

In the name condition, none of the Self Relevance effects on posterior theta power reached the level of statistical significance (Fs < 1.0, n.s., see Fig. 3).

Theta topology (GED results)

Our sensor-level results showed the most prominent theta power bursts over the lateral occipito-temporal areas. However, in the face condition, a noteworthy stimulus-related increase of theta power was present also over anterior midline sites (see the head maps in Fig. 3A). Anterior midline activity has been indicated in fMRI studies as related to processing of self-related information10,110,111,112,113. Hence, our next questions were (a) whether anterior theta was functionally connected with posterior theta, and (b) whether this connectivity was related to processing of the stimulus self-relevance, as was the local posterior activity. We therefore proceeded to examine source-level topology of the whole-brain theta-band activity, aiming at more precise delineating the sites that could be further examined in terms of their local activity and their inter-regional functional connectivity. To this end, we used the GED-based method of multivariate spatio-spectral decomposition (see “Methods” for details).

The GED results revealed three components significantly contributing to the overall stimulus-related theta-band signal (see the left panel of Fig. 4). Spatial topographies of the components averaged across participants are shown in Fig. 4, along with plots of averaged time–frequency decomposition of the components. Note that the components' power spectra showed very similar time courses of the stimulus-related modulations, indicating that the components contributed to the overall theta variance within the same time-window of about 100–400 ms after target onset.

Theta topology defined by the GED. The scree plot on the left shows averaged eigenvalues of all GED components, with statistical significance cutoff determined by permutation testing. Eigenvalues indicate the importance of each component for separating the signal covariance matrix from the reference covariance matrix (i.e., S/R power ratio). The head maps show activation topographies of the three significant components of theta activity defined by GED. The plots below show time–frequency power decompositions of the three GED components, indicating homogenous activity in the theta band.

The first component isolated the local lateral-posterior theta, which was already demonstrated as the strongest theta signal in the sensor-level analysis above. The anterior theta was in turn isolated in the third component, confirming the anterior midline theta roughly indicated on the sensor-level topography. Most interesting is the second component, which isolated again the lateral-posterior areas, but this time along with the midfrontal site. Apparently, this component was driven not by a local source, but anatomically distributed sources captured into one component.

Inter-regional theta phase coherence (ISPC results)

The spatio-temporal activation pattern isolated in the second GED component seems to indicate a network of nodes that were parallelly recruited, and time–frequency synchronized in the theta-band (cf. Refs.97,114). The midfrontal activation indicated in this component presumably represents the midfrontal theta115, which is often interpreted as a hub of long-distance connectivity that entrains task-related functional nodes through inter-regional phase coherence in the theta band73,116,117. Following this interpretation, in the next step of analysis, we used the midfrontal site (FCz electrode) as a “seed” of inter-regional connectivity (cf. Refs.73,118,119) and examined ISPC between the midfrontal and lateral-occipital activations.

The ISPC results showed a conspicuous increase of phase coherence between the two sites (relative to baseline) from 100 to 400 ms after stimulus onset, covering the full range of theta band. The omnibus ANOVA showed two relevant interactions. The Stimulus Type × Hemisphere interaction, F1,24 = 22.06, p < 0.001, ηp2 = 0.48, indicated again the lateralized effects for faces and names. More importantly, the Stimulus Type × Self Relevance interaction, F4,96 = 2.80, p = 0.038, ηp2 = 0.10, indicated a significant difference between the face and name conditions in the effects of Self Relevance.

The ISPC results for the face condition is shown in Fig. 5. The main effect of face self-relevance did not reach significance, F4,96 = 2.16, p = 0.094, ηp2 = 0.08, but the linear trend was again significant, F1,24 = 10.39 p = 0.004, ηp2 = 0.30, reflecting a gradual increase of the signal, from weakest for present self to strongest for self-irrelevant categories, consistently with the effects on posterior theta power. Pairwise comparisons of present self-face with the other face categories reached the alpha-unadjusted significance level (p’s = 0.048, 0.026, 0.007, 0.008, respectively from past self to unknown), but did not survive the Bonferroni correction (p’s = 0.48, 0.26, 0.071, 0.084, respectively). In conclusion, the theta ISPC results showed a trend consistent with the theta power results. In the name condition, none of the effects of Self Relevance was significant, Fs < 1.0. Estimations of theta-band power and ISPC for each experimental condition are provided in Table 2.

Inter-site phase coherence (ISPC) between the midfrontal and lateral-posterior areas in the face conditions. The time–frequency plot shows ISPC averaged over the Self Relevance conditions and hemispheres, along with a topographical map of theta-band ISPC for the analyzed time–frequency window. The chart in the middle shows grand averages of theta ISPC over time for the five Self Relevance conditions. The head maps on the right show topographies of theta ISPC. The colors of lines and frames denote the Self Relevance conditions. “Hotter” colors on time–frequency plot and head maps indicate more robust phase synchronization. The black on white dot on head maps denotes the midfrontal “seed”. The depicted results show that the stimulus-related increase of theta phase-synchrony between the midfrontal and posterior areas was smallest in the self-face condition.

Anterior midline theta power

In the last analysis step, we examined the local anterior midline theta activity, indicated in the second and third GED components. To this end, power from the anterior sites AFz, Fz, and FCz was measured at the same time–frequency window as occipital power, and entered the omnibus ANOVA (with AFz, Fz, and FCz, instead PO7 and PO8). The results showed that anterior theta power was generally larger for faces than names, F1,24 = 9.69, p = 0.005, ηp2 = 0.29. Marginally significant was the interaction Stimulus Type × Self Relevance × Electrode, F8,192 = 2.42, p = 0.056, ηp2 = 0.09, because in some conditions power tended to be largest at AFz. However, we found no significant differences between the self-relevance conditions, Fs ≤ 2.09, p ≥ 0.12.

Discussion

Summary of results

Behavioral results

No differences in RTs between experimental conditions were found. Specifically, no RTs effects for self-referential stimuli were observed although such effects have been reported in previous studies (e.g., Ref.3, for review see Ref.120). It is worth mentioning that the task used in the current study was very simple; it did not require discriminating between stimuli, thus no in-depth processing of incoming information was required. Still, analyses of neural activity showed several significant effects (see below). It should be noted, however, that our EEG results showed self-relevance effects on amplitudes and not latencies of the stimulus-related modulations. Thus, the RT result is not incompatible with the EEG results.

ERP results

The amplitudes of visual-evoked N170 showed typical effects of stimulus-specific hemispheric lateralization. Faces tended to evoke larger N170 in the right hemisphere than in the left, in line with evidence for lateralization of face processing121,122 and particularly the asymmetry of the FFA52,123,124. In turn, the N170 evoked by names was larger in the left hemisphere, presumably reflecting the involvement of the visual word form area125,126,127. Importantly, self-relevance of the visual stimuli did not affect the N170.

In our ERP results, the first significant effect of self-relevance appeared only at about 300 ms after stimulus onset. The self-relevant stimuli evoked a smaller midfrontal N2 component than the stimuli from the other categories, which is in line with several previous studies23,27,33,35,53. The self-relevance effect was then reversed into a larger P3b amplitudes in the self-relevant conditions, also in accordance with previous studies3,18,31,36,37,55,58,59,128. Importantly, neither the N2 nor P3b self-referential effects differed between faces and names, thus the effects may reflect a domain-general self-prioritization.

The ERP results therefore suggest that (a) self-relevance affects stimulus processing only at later, post-perceptual stages of processing, carried out in the midfrontal and parietal areas, and (b) the self-relevance effects are independent of the type of processed information. However, our time–frequency results show that such conclusions would be premature.

Time–frequency results

The EEG power results showed typical stimulus-related bursts of local activity in the theta-band over visual areas in both hemispheres, emerging within 100–300 ms after stimulus onset. Importantly, the self-related faces (present and past self) elicited a smaller increase of this occipito-temporal theta power than the self-irrelevant faces (i.e., famous and unknown face). Moreover, this early effect of self-relevance was present only for faces and not for names. Unlike the ERPs, the theta power results show that self-relevance may modulate already the early perceptual processing in visual areas, and this early self-relevance effect is stimulus-specific. This result corresponds with the previous observation of lower local theta phase synchrony in the right fusiform area during seeing self-face, compared to other familiar faces31.

In the next step, we identified the whole-brain topology of theta-band activity using a GED-based spatial filtering method97. The GED results showed that the second most-prominent area of theta activity—after the posterior (visual) theta—was over the midfrontal area. We then assessed inter-site phase coherence between the midfrontal and occipito-temporal areas of activity, to estimate their functional connectivity. The ISPC results showed that there was a significant burst of stimulus-related connectivity in the theta-band between these areas. This is in line with evidence showing that midfrontal theta—typically originating from the anterior cingulate cortex (ACC) and medial frontal cortex (MFC)—functions as a “hub” of long-distance task-related connectivity, which is carried out through theta phase coherence73,115,116. This stimulus-related phasic increase of theta ISPC tended to be smaller in the self-face condition than in the other face conditions. No such effect was found for names. Thus, although power and phase are generally independent of each other84, our results of theta occipital power and ISPC are consistent in showing the smallest stimulus-related modulations for self-face, and no self-relevance effects for names.

The time-course and functional anatomy of self-effects in visual perception

The first two questions we have asked were: how early in the processing chain is self-relevance differentiated from other sensory information, and where in the brain does the differentiation occur? The present findings of stimulus-related modulations of local occipito-temporal theta power suggest that self-face is differentiated within the first 100–300 ms, and the differentiation entails modulations of local activity in visual areas as well as of functional connectivity between the visual and midfrontal areas. The results conform to the previous findings showing an early local activity related to self-processing occurring parallelly in both the visual and midfrontal areas21,22,23. It also corresponds to fMRI studies showing the involvement of midfrontal areas in self-referential processing110,111,112,129, recently demonstrated also in primates130. As these local posterior and midfrontal areas are assumed to be part of an integrated system (e.g., a self-attention network131), we certainly should expect them to be functionally connected. Our ISPC results show that such inter-regional connectivity may be mechanistically carried out through a synchronization of theta-band activity, which is in line with the current accounts of long-distance neuronal communication132,133,134.

Stimulus-specificity of early self-relevance effect

An important aspect of the present results is that the early self-effect in the theta-band activity was found for faces and not for names. Thus, our results provide a potentially important data point contributing to understanding the specificity of face processing. The critical neural nodes involved in visual perception of faces and words—the FFA and VWFA—are anatomically located in similar areas of the fusiform gyri, in the right and left hemisphere, respectively81. In the present results, the assumed involvement of the two areas in processing faces and words is presumably reflected by the opposite hemispheric lateralization of the N170. Based on the functional anatomy, it would be reasonable to expect a similar time-course of self-referential differentiation of faces and names. However, the results showed the contrary. While the latency of name-evoked N170 was generally about 10 ms shorter than for face-evoked N170 (which might reflect the slightly more posterior localization of the VWFA than the FFA), the self-name differentiation was found only at about 300 ms after stimulus onset, as a modulation of the midfrontal N2 (and then P3b). The early self-differentiation of faces is plausibly based on the biologically determined mechanisms of face perception. The differentiation of self-name may rely on more time-consuming neural apparatus, because it may also need to involve later, semantic stages of processing, in addition to early visual encoding126.

The N170 discrepancy

Interestingly, as in several previous studies27,28,29,30,31,32,33,34,35,36, the self-face differentiation was not reflected in the N170, which suggests that this early visual ERP may not be sensitive to one’s own facial identity. In this line, it has been argued that the N170 may reflect specifically the processing of stimulus structural configurations, independently of facial expression or identity. This however contradicts with studies reporting positive N170 self-face effects21,22,23,24, and it is not clear what could explain the discrepancy. The problem is analogous to the issue of encoding facial emotions. While in some studies early signs of processing facial expressions were observed over the visual occipito-temporal areas48,135,136, others found such effects only over the fronto-central regions137,138,139. This sparked a debate on whether facial expressions are encoded along with the perceptual processing of facial structural configurations (as indexed by the N170) and what is the neural architecture of integrations between these aspects of face processing25,126. The same questions apply to the issue of encoding self-relevance.

One study demonstrated that the N170 could show the effects of facial expressions only because the N170 was spatially and temporally overlapping with activity from the expression-sensitive early posterior negativity, and not because the N170 per se is sensitive to facial expressions140. If the N170 is indeed not sensitive to face identity—as the present results seem suggest, possibly a similar overlap might occur during processing of face identity. This might explain why some studies did observe the N170 effects21,22,23,24. That is, the early structural processing and the identity processing might be carried out parallelly through partially overlapping but independent processes. We may speculate that the former might be expressed in the ERPs as the N170, while the latter in the modulations of occipito-temporal theta-band activity, but it obviously requires further evidence.

On the other hand, the meta-analysis by Hinojosa et al.25 showed that the N170 may in fact be affected by facial identity and expressions. This may therefore also explain the observations of the positive N170 self-face effects21,22,23,24. Still, it does not explain the discrepancy between the studies. It may therefore be that the self-face sensitivity of the N170 reveals only under specific conditions. Plausibly, specific task demands interact with and modulate the early self-referential visual processing, possibly by some top-down executive regulations of task-set parameters (see the section below on the issue of stimulus task-relevance).

Another relevant issue in this context is the neurobiological meaning of an enhanced N170. In terms of changes in the actual neural dynamics, the self-face related increase of the N170 amplitude may reflect either a stronger activation of the same neural sources or additional sources activated specifically by self-faces. Most plausibly, the N170 originates from a massive synchronized increase of postsynaptic neural activity, both time-locked and phase-locked to stimulus onset. Accordingly, the enhanced N170 (either to faces in general, or to self-face specifically) would reflect an increased recruitment of those neural sources92.

Self-relevance decreases stimulus-related activity

Another question we have asked here was whether self-referential processing entails an amplification of the self-related information, as it has often been assumed. Contradictory to this assumption, our results showed that self-relevance decreased the stimulus-related effects on the N2, posterior theta power, and theta ISPC. The interpretation of these effects is relatively straightforward—they do not show an amplification, but rather a smaller involvement of the underlying process, compared to the self-unrelated stimuli. The only exception was the P3b, but, as mentioned in the introduction, its interpretation is not as straightforward. The self-related P3b amplitude increase might reflect a decrease of the involvement of the underlying process, not their amplification or enhancement. It is also unclear what exact processes are reflected by the P3b (see the “Introduction”).

The smaller stimulus-related amplitude increase of the midfrontal N2 in the self-related conditions, compared to the self-unrelated conditions, may indicate a smaller involvement of executive control in visual encoding and response execution73,78,94,141. The previous studies on self-prioritization usually interpreted such N2 effects in a similar fashion, as a decreased need for control of allocation of attentional or memory resources27,33,53.

The role of theta oscillations in visual processing

A strong theta-band activity in the posterior occipital cortex in response to an incoming stimulus is one of the electrophysiological signatures of receiving input in the visual system. Thus, it seems to reflect an aspect of basic visual processing. Unlike alpha-band oscillations, theta-band oscillations respond to increased processing- or task-demands only with an increase of amplitude or power—the effect termed as an event-related synchronization142. Thus, we may assume that the observed modulations of theta activity impact stimulus processing proportionally to the magnitude of these modulations. Thereby, the smaller increase of the stimulus-related theta activity in the self-related conditions indicates a smaller involvement of the processing “engines”. If the self-prioritization was obtained by attentional enhancement or amplification of self-related information, then we should observe an increase of the related theta activity.

How then to interpret the observed self-face-related theta effects? As proposed by Fries132, theta rhythm may serve resetting the gamma phase. More specifically, the function of theta activity is related to the governing attentional sampling of local gamma activity143,144. In the visual cortex, theta rhythm is engaged in top-down control loop of the gamma-band feedforward processing132,145,146. By resetting the gamma, theta oscillations control the input sent to the higher visual levels144. Weaker theta synchronization in the occipital areas may therefore indicate a less attentional or less controlled processing. Thus, it might be hypothesized that the smaller theta effects in visual areas reflect a more automatic processing of the stimuli. In other words, processing of self-related stimuli may be more automatized than processing of other information, at least in the visual areas. Further, the observed weaker stimulus-related theta ISPC in the self-relevant conditions suggests lower connectivity, therefore a lower inter-regional coupling147, which also may reflect a higher degree of automatization. In this line, Tacikowski, Freiburghaus and Ehrsson6 demonstrated that self-face recognition was associated with less cognitive interference than recognition of other faces. They have suggested that processing self-related information becomes automatized as a function of repetition: “the more frequently and consistently a mental representation is accessed, the lower its threshold of activation becomes”6 (p. 93). After sufficient training, certain representation activates in the presence of a trigger. This process becomes automatic, i.e., involuntary, preconscious, and performed with minimal cognitive effort or control. In conclusion, the present findings suggest that visual perception of one’s own face might be facilitated at the early sensory stages by means of a less effortful and more automatic processing of self-related information.

Interestingly, a recent macaque study proposed that less theta-rhythmic visual processing may indicate a more continuous processing of stimuli, which may subserve processing of behaviorally relevant information148. However, in that study, selective attention actually reduced, not increased, the strength of theta modulations in the visual cortex. This opens an alternative account of our results, as simply reflecting the effect of more focused attention on the self-related information. Still, such an effect of selective attention was not yet shown in humans. Hence, the issue seems opening a new line of research on the functional meaning of theta activity in self-referential visual processing.

Evoked and induced theta activity

The time–frequency-representation of task-related modulations of EEG power is a mix of evoked and induced activity (see e.g., Refs.84,149). The evoked activity is phase-aligned with the event onset, whereas the induced activity is the signal that is time-locked but not phase-locked to the event onset. Although it is not entirely clear what the two aspects of EEG signal reflect in terms of cognitive operations, it is often assumed that evoked activity may reflect transient non-sinusoidal oscillations that are event-like and time-intermittent (cf. Ref.150), whereas the presence of induced activity is usually interpreted as evidence for rhythmic, sinusoidal, and stimulus independent (or endogenous) oscillations84,149. Therefore, an issue to be addressed in future is the dissociation between the two types of activity and determining their functions in the processing of self-related stimuli.

In the current study, we did not have enough statistical power per participant to reliably examine this issue. Thus, we briefly report only an exploratory examination of a general difference between the two signals in the responses to faces. We isolated induced and evoked power by subtracting the ERP from the single-trial EEG signal, and then decomposed the phase-locked and non-phase-locked signals into their time–frequency representations (using the same method as for total power, see “Methods”; for details see Refs.73,151). The results showed that the early posterior (visual) stimulus-related theta-band activity (100–300 ms, 5–7 Hz) was present in both evoked and induced power (see Fig. 6). This suggests that the visual effects observed here in the total power analysis may include both types of neural oscillations. This is in agreement with the fact that the spectral analysis showed the self-face effects that were not detected in the ERPs.

Face-related modulations of total (left panel), evoked (phase-locked; middle panel), and induced (non-phase-locked, right panel) EEG power from the occipito-temporal sites PO7 and PO8, along with topographies for the relevant time–frequency window (100–300 ms, 5–7 Hz). The time–frequency plots depict power spectra averaged over the Self Relevance conditions and PO7/8 sites.

Task-relevance of self-stimuli

A factor that should also be considered when comparing visual self-effects from different studies is the specificity of task-demands, and particularly whether self-related information is task-related or task-irrelevant. Active and passive stimulus recognition are two different processes. Active recognition of one’s own face and its intentional (task-related) discrimination among other faces involve the top-down control and intentional conscious prioritization of self-relevant information6,152. Several studies on self-prioritization stressed the role of top-down attentional modulations in self-referential processing131,153,154, e.g., by showing the involvement of the lateral prefrontal areas and anterior cingulate cortex155. Self-prioritization appears to be an attentional effect especially from the perspective of research that failed to find self-effects when the self-related information was not task-relevant156,157,158. On the other hand, several other studies did find task-irrelevant self-referential effects17,159. Both the active and passive viewing of self-face activate brain regions associated with the face representation, e.g., the fusiform gyrus and supramarginal gyrus. Also, the anterior midline areas typically involved in self-related processing—the medial prefrontal cortex and posterior cingulate cortex—are often found active even if self-relevance discrimination was not explicitly required by the task160,161,162. In the present study, the task did not require active stimulus discrimination, and the results still showed several self-related modulations of the brain activity. Taken together, the results suggest that the self-face differentiation occurs automatically.

Self-effects vs. familiarity effects

It is often raised as an issue whether self-face evokes different brain responses because it is a highly familiar stimulus156,163. Here, not only the familiar faces (famous and friend categories) evoked a different response than the self-face, but also the effects of familiar stimuli did not differ from the unknown stimuli. This adds evidence for the interpretation of the self-relevance effects, and brings us to the conclusion that personally relevant stimuli are more than just highly familiar stimuli (for similar results see Refs.153,164,165).

Data availability

The data supporting the reported findings are available from the corresponding author upon reasonable request.

References

Alexopoulos, T., Muller, D., Ric, F. & Marendaz, C. I, me, mine: Automatic attentional capture by self-related stimuli. Eur. J. Soc. Psychol. 42, 770–779 (2012).

Moray, N. Attention in dichotic listening: Affective cues and the influence of instructions. Q. J. Exp. Psychol. 11, 56–60 (1959).

Tacikowski, P. & Nowicka, A. Allocation of attention to self-name and self-face: An ERP study. Biol. Psychol. 84, 318–324 (2010).

Wójcik, M. J., Nowicka, M. M., Kotlewska, I. & Nowicka, A. Self-face captures, holds, and biases attention. Front. Psychol. 8, 1–11 (2018).

Brédart, S., Delchambre, M. & Laureys, S. One’s own face is hard to ignore. Q. J. Exp. Psychol. 59, 46–52 (2006).

Tacikowski, P., Freiburghaus, T. & Ehrsson, H. H. Goal-directed processing of self-relevant information is associated with less cognitive interference than the processing of information about other people. J. Exp. Soc. Psychol. 68, 93–100 (2017).

Zhao, S., Uono, S., Li, C., Yoshimura, S. & Toichi, M. The influence of self-referential processing on attentional orienting in frontoparietal networks. Front. Hum. Neurosci. 12, 199 (2018).

Chen, Y. et al. Evidence for implicit self-positivity bias: An event-related brain potential study. Exp. Brain Res. 232, 985–994 (2014).

Conway, M. A. & Dewhurst, S. A. The self and recollective experience. Appl. Cogn. Psychol. 9, 1–19 (1995).

Heatherton, T. F. et al. Medial prefrontal activity differentiates self from close others. Soc. Cogn. Affect. Neurosci. 1, 18–25 (2006).

Moran, J. M., Macrae, C. N., Heatherton, T. F., Wyland, C. L. & Kelley, W. M. Neuroanatomical evidence for distinct cognitive and affective components of self. J. Cogn. Neurosci. 18, 1586–1594 (2006).

Symons, C. S. & Johnson, B. T. The self-reference effect in memory: A meta-analysis. Psychol. Bull. 121, 371–394 (1997).

Tanguay, A. N. et al. The ERP correlates of self-knowledge: Are assessments of one’s past, present, and future traits closer to semantic or episodic memory?. Neuropsychologia 110, 65–83 (2018).

Cunningham, S. J. & Turk, D. J. Editorial: A review of self-processing biases in cognition. Q. J. Exp. Psychol. 70, 987–995 (2017).

Legrand, D. & Ruby, P. What is self-specific? Theoretical investigation and critical review of neuroimaging results. Psychol. Rev. 116, 252–282 (2009).

Tong, F. & Nakayama, K. Robust representations for faces: Evidence from visual search. J. Exp. Psychol. Hum. Percept. Perform. 25, 1016–1035 (1999).

Bola, M., Paź, M., Doradzińska, Ł & Nowicka, A. The self-face captures attention without consciousness: Evidence from the N2pc ERP component analysis. Psychophysiology 58, 1–13 (2021).

Gray, H. M., Ambady, N., Lowenthal, W. T. & Deldin, P. P300 as an index of attention to self-relevant stimuli. J. Exp. Soc. Psychol. 40, 216–224 (2004).

Sedikides, C. & Gregg, A. P. Self-enhancement food for thought. Perspect. Psychol. Sci. 3, 102–116 (2008).

Caharel, S., Courtay, N., Bernard, C., Lalonde, R. & Rebaï, M. Familiarity and emotional expression influence an early stage of face processing: An electrophysiological study. Brain Cogn. 59, 96–100 (2005).

Caharel, S. et al. The effects of familiarity and emotional expression on face processing examined by ERPs in patients with schizophrenia. Schizophr. Res. 95, 186–196 (2007).

Geng, H., Zhang, S., Li, Q., Tao, R. & Xu, S. Dissociations of subliminal and supraliminal self-face from other-face processing: Behavioral and ERP evidence. Neuropsychologia 50, 2933–2942 (2012).

Keyes, H., Brady, N., Reilly, R. B. & Foxe, J. J. My face or yours? Event-related potential correlates of self-face processing. Brain Cogn. 72, 244–254 (2010).

Zeman, P. M., Till, B. C., Livingston, N. J., Tanaka, J. W. & Driessen, P. F. Independent component analysis and clustering improve signal-to-noise ratio for statistical analysis of event-related potentials. Clin. Neurophysiol. 118, 2591–2604 (2007).

Hinojosa, J. A., Mercado, F. & Carretié, L. N170 sensitivity to facial expression: A meta-analysis. Neurosci. Biobehav. Rev. 55, 498–509 (2015).

Olivares, E. I., Iglesias, J., Saavedra, C., Trujillo-Barreto, N. J. & Valdés-Sosa, M. Brain signals of face processing as revealed by event-related potentials. Behav. Neurol. https://doi.org/10.1155/2015/514361 (2015).

Alzueta, E., Melcón, M., Poch, C. & Capilla, A. Is your own face more than a highly familiar face?. Biol. Psychol. 142, 100–107 (2019).

Caharel, S. et al. ERPs associated with familiarity and degree of familiarity during face recognition. Int. J. Neurosci. 112, 1499–1512 (2002).

Cygan, H. B., Tacikowski, P., Ostaszewski, P., Chojnicka, I. & Nowicka, A. Neural correlates of own name and own face detection in autism spectrum disorder. PLoS One 9, e86020 (2014).

Miyakoshi, M., Kanayama, N., Nomura, M., Iidaka, T. & Ohira, H. ERP study of viewpoint-independence in familiar-face recognition. Int. J. Psychophysiol. 69, 119–126 (2008).

Miyakoshi, M., Kanayama, N., Iidaka, T. & Ohira, H. EEG evidence of face-specific visual self-representation. Neuroimage 50, 1666–1675 (2010).

Pierce, L. J. et al. The N250 brain potential to personally familiar and newly learned faces and objects. Front. Hum. Neurosci. 5, 111 (2011).

Rubianes, M. et al. Am I the same person across my life span? An event-related brain potentials study of the temporal perspective in self-identity. Psychophysiology 58, e13692. https://doi.org/10.1111/psyp.13692 (2020).

Scott, L. S., Luciana, M., Wewerka, S. & Nelson, C. A. Electrophysiological correlates of facial self-recognition in adults and children. Cogn. Brain Behav. 9, 211–238 (2005).

Sui, J., Zhu, Y. & Han, S. Self-face recognition in attended and unattended conditions: An event-related brain potential study. NeuroReport 17, 423–427 (2006).

Tanaka, J. W., Curran, T., Porterfield, A. L. & Collins, D. Activation of preexisting and acquired face representations: The N250 event-related potential as an index of face familiarity. J. Cogn. Neurosci. 18, 1488–1497 (2006).

Żochowska, A., Jakuszyk, P., Nowicka, M. M. & Nowicka, A. Are covered faces eye-catching for us? The impact of masks on attentional processing of self and other faces during the COVID-19 pandemic. Cortex 149, 173–187 (2022).

Caharel, S. & Rossion, B. The N170 is sensitive to long-term (personal) familiarity of a face identity. Neuroscience 458, 244–255 (2021).

Eick, C. M., Ambrus, G. G. & Kovács, G. Inhibition of the occipital face area modulates the electrophysiological signals of face familiarity: A combined cTBS-EEG study. Cortex 141, 156–167 (2021).

Schweinberger, S. R. Neurophysiological correlates of face recognition. In Oxford Handbook of Face Perception (eds Calder, A. J. et al.) 346–367 (Oxford Academic, 2011). https://doi.org/10.1093/oxfordhb/9780199559053.013.0018.

Wuttke, S. J. & Schweinberger, S. R. The P200 predominantly reflects distance-to-norm in face space whereas the N250 reflects activation of identity-specific representations of known faces. Biol. Psychol. 140, 86–95 (2019).

Tacikowski, P., Cygan, H. B. & Nowicka, A. Neural correlates of own and close-other’s name recognition: ERP evidence. Front. Hum. Neurosci. 8, 1–10 (2014).

Miyakoshi, M., Nomura, M. & Ohira, H. An ERP study on self-relevant object recognition. Brain Cogn. 63, 182–189 (2007).

Liu, J., Harris, A. & Kanwisher, N. Stages of processing in face perception: An MEG study. Nat. Neurosci. 5, 910–916 (2002).

Debruille, J. B., Guillem, F. & Renault, B. ERPs and chronometry of face recognition: Following-up Seeck et al. and George et al. NeuroReport 9, 3349–3353 (1998).

Feng, W., Martinez, A., Pitts, M., Luo, Y. J. & Hillyard, S. A. Spatial attention modulates early face processing. Neuropsychologia 50, 3461–3468 (2012).

Halit, H., de Haan, M. & Johnson, M. H. Modulation of event-related potentials by prototypical and atypical faces. NeuroReport 11, 1871–1875 (2000).

Rellecke, J., Sommer, W. & Schacht, A. Does processing of emotional facial expressions depend on intention? Time-resolved evidence from event-related brain potentials. Biol. Psychol. 90, 23–32 (2012).

Pourtois, G., Grandjean, D., Sander, D. & Vuilleumier, P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cereb. Cortex 14, 619–633 (2004).

Jacques, C. & Caharel, S. The time course of categorical perception of facial expressions. Neuropsychologia 177, 108424 (2022).

Deffke, I. et al. MEG/EEG sources of the 170-ms response to faces are co-localized in the fusiform gyrus. Neuroimage 35, 1495–1501 (2007).

Rossion, B., Joyce, C. A., Cottrell, G. W. & Tarr, M. J. Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. Neuroimage 20, 1609–1624 (2003).

Woźniak, M., Kourtis, D. & Knoblich, G. Prioritization of arbitrary faces associated to self: An EEG study. PLoS One 13, e0190679 (2018).

Alzueta, E., Kessel, D. & Capilla, A. The upside-down self: One’s own face recognition is affected by inversion. Psychophysiology 58, e13919. https://doi.org/10.1111/PSYP.13919 (2021).

Cygan, H. B., Nowicka, M. M. & Nowicka, A. Impaired attentional bias toward one’s own face in autism spectrum disorder: ERP evidence. Autism Res. 15, 241–253 (2022).

Kotlewska, I. & Nowicka, A. Present self, past self and close-other: Event-related potential study of face and name detection. Biol. Psychol. 110, 201–211 (2015).

Ninomiya, H., Onitsuka, T., Chen, C. H., Sato, E. & Tashiro, N. P300 in response to the subject’s own face. Psychiatry Clin. Neurosci. 52, 519–522 (1998).

Żochowska, A., Nowicka, M. M., Wójcik, M. J. & Nowicka, A. Self-face and emotional faces—Are they alike?. Soc. Cogn. Affect. Neurosci. 16, 593–607 (2021).

Żochowska, A., Jakuszyk, P., Nowicka, M. M. & Nowicka, A. The self and a close-other: Differences between processing of faces and newly acquired information. Cereb. Cortex bhac201, 1–17 (2022).

Fan, W. et al. Electrophysiological correlation of the degree of self-reference effect. PLoS One 8, e80289 (2013).

Fischler, I., Jin, Y. S., Boaz, T. L., Perry, N. W. & Childers, D. G. Brain potentials related to seeing one’s own name. Brain Lang. 30, 245–262 (1987).

Nowicka, A., Cygan, H. B., Tacikowski, P., Ostaszewski, P. & Kuś, R. Name recognition in autism: EEG evidence of altered patterns of brain activity and connectivity. Mol. Autism 7, 38 (2016).

Niu, G. et al. Behavioural and ERP evidence of the self-advantage of online self-relevant information. Sci. Rep. 10, 1–10 (2020).

Kotlewska, I. & Nowicka, A. Present-self, past-self and the close-other: Neural correlates of assigning trait adjectives to oneself and others. Eur. J. Neurosci. 44, 2064–2071 (2016).

Luo, Y., Huang, X., Chen, Y., Jackson, T. & Wei, D. Negativity bias of the self across time: An event-related potentials study. Neurosci. Lett. 475, 69–73 (2010).

Asanowicz, D. et al. The response relevance of visual stimuli modulates the P3 component and the underlying sensorimotor network. Sci. Rep. 10, 1–20 (2020).

Verleger, R. Effects of relevance and response frequency on P3b amplitudes: Review of findings and comparison of hypotheses about the process reflected by P3b. Psychophysiology 57, e13542 (2020).

Verleger, R., Asanowicz, D., Werner, L. & Śmigasiewicz, K. Biased odds for heads or tails: Outcome-evoked P3 depends on frequencies of guesses. Psychophysiology 52, 1048–1058 (2015).

Twomey, D. M., Murphy, P. R., Kelly, S. P. & O’Connell, R. G. The classic P300 encodes a build-to-threshold decision variable. Eur. J. Neurosci. 42, 1636–1643 (2015).

Polich, J. Updating P300: An integrative theory of P3a and P3b. Clin. Neurophysiol. 118, 2128–2148 (2007).

Neuhaus, A. H. et al. Event-related potentials associated with Attention Network Test. Int. J. Psychophysiol. 76, 72–79 (2010).

Asanowicz, D., Wołoszyn, K., Panek, B. & Wronka, E. On the locus of the effect of alerting on response conflict: An event-related EEG study with a speed-accuracy tradeoff manipulation. Biol. Psychol. 145, 62–75 (2019).

Asanowicz, D., Panek, B. & Kotlewska, I. Selection for action: The medial frontal cortex is an executive hub for stimulus and response selection. J. Cogn. Neurosci. 33, 1442–1469 (2021).

Hopfinger, J. B. & Mangun, G. R. Reflexive attention modulates processing of visual stimuli in human extrastriate cortex. Psychol. Sci. 9, 441 (1998).

Śmigasiewicz, K., Asanowicz, D., Westphal, N. & Verleger, R. Bias for the left visual field in rapid serial visual presentation: Effects of additional salient cues suggest a critical role of attention. J. Cogn. Neurosci. 27, 266–279 (2015).

Zhao, K. et al. Event-related potential correlates of the collective self-relevant effect. Neurosci. Lett. 464, 57–61 (2009).

Vogel, E. K. & Luck, S. J. The visual N1 component as an index of a discrimination process. Psychophysiology 37, 190–203 (2000).

Folstein, J. R. & van Petten, C. Influence of cognitive control and mismatch on the N2 component of the ERP: A review. Psychophysiology 45, 152–170 (2008).

Cellerino, A., Borghetti, D. & Sartucci, F. Sex differences in face gender recognition in humans. Brain Res. Bull. 63, 443–449 (2004).

Haxby, J. V., Hoffman, E. A. & Gobbini, M. I. The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233 (2000).

Dien, J. A tale of two recognition systems: Implications of the fusiform face area and the visual word form area for lateralized object recognition models. Neuropsychologia 47, 1–16 (2009).