Abstract

Here, we study the problem of decoding information transmitted through unknown quantum states. We assume that Alice encodes an alphabet into a set of orthogonal quantum states, which are then transmitted to Bob. However, the quantum channel that mediates the transmission maps the orthogonal states into non-orthogonal states, possibly mixed. If an accurate model of the channel is unavailable, then the states received by Bob are unknown. In order to decode the transmitted information we propose to train a measurement device to achieve the smallest possible error in the discrimination process. This is achieved by supplementing the quantum channel with a classical one, which allows the transmission of information required for the training, and resorting to a noise-tolerant optimization algorithm. We demonstrate the training method in the case of minimum-error discrimination strategy and show that it achieves error probabilities very close to the optimal one. In particular, in the case of two unknown pure states, our proposal approaches the Helstrom bound. A similar result holds for a larger number of states in higher dimensions. We also show that a reduction of the search space, which is used in the training process, leads to a considerable reduction in the required resources. Finally, we apply our proposal to the case of the phase flip channel reaching an accurate value of the optimal error probability.

Similar content being viewed by others

Introduction

The states of quantum systems have properties that distinguish them from their classical counterparts. Unknown quantum states cannot be perfectly and deterministically copied1 and entangled states exhibit correlations without classical equivalence2. These are deeply related to the impossibility of discriminating non-orthogonal quantum states. If this were the case, then unknown quantum states could be perfectly and deterministically copied and entangled states could be used to implement superluminal communications3. Consequently, the discrimination of non-orthogonal quantum states has become an important research subject due to its implications for the foundations of the quantum theory4,5 and quantum communications6,7. For example, the problem of implementing quantum teleportation, entanglement sharing, and dense coding through a partially entangled pure state can be solved by local discrimination of non-orthogonal pure states8,9,10,11,12,13,14,15.

The discrimination of quantum states can be naturally stated in the context of two parties that attempt to communicate: Alice encodes information representing the letters of an alphabet through a set \(\Omega _1\) of orthogonal pure quantum states, which is transmitted through a communication channel. The channel transforms the orthogonal states into a new set \(\Omega _2\) of states, which might become non-orthogonal and mixed. Bob then receives them and performs a generalized quantum measurement to discriminate the states and to retrieve the information encoded by Alice. Both parties are assumed to know the generation probabilities of the orthogonal states in \(\Omega _1\) and the set \(\Omega _2\) of non-orthogonal states in advance. To decode the transmitted information, the parties agree on a figure of merit which is subsequently optimized to obtain the best single-shot generalized quantum measurement. This leads to several discrimination strategies such as minimum-error discrimination16,17,18, pretty-good measurement19,20, unambiguous discrimination21,22,23,24, maximum-confidence discrimination25,26, and fixed-rate of inconclusive result27,28. Various discrimination strategies have already been experimentally demonstrated29,30,31,32,33,34,35,36,37.

Typical figures of merit for state discrimination are functions of the generation probabilities and the conditional probabilities between the states transmitted by Alice and Bob’s measurement outcomes, which in turn also depend on the generalized measurement used by Bob and the states received by Bob. Thereby, optimizing any figure of merit and choosing the best-generalized measurement become difficult problems. Analytic solutions are only known for a small number of states or families of states defined by a few parameters, such as symmetric states. This adverse scenario imposes the use of numerical optimization techniques such as semidefinite programming (SDP)38,39,40 or neural networks41,42.

Quantum state discrimination has also been studied in the context of unknown quantum states. In this case, the communication channel maps the states in \(\Omega _1\) into a new set of states \(\Omega _2\) that are unknown to the communicating parties. Surprisingly, it has been shown that it is still possible to unambiguously discriminate between unknown states, which is achieved through a programmable discriminator43,44,45,46,47,48. This device has quantum registers that allow it to store the unknown states to be discriminated. In addition, the device is universal, that is, it does not depend on the actual unknown states to be discriminated44 and achieves a success probability close to the optimum.

In this article, we are interested in discriminating unknown quantum states when information about them is not readily available. An example of this situation is free-space quantum communication49,50,51,52,53, where information is encoded into states of light and transmitted through the atmosphere. This exhibits local and temporal variations in the refractive index, which can greatly modify the state of light and makes it difficult to characterize the transmitted states54,55. Since neither Alice nor Bob has access to the density matrices of the transmitted states, no known approaches can be applied. To solve this problem, we propose the training of a measurement device to optimally discriminate a set of unknown non-orthogonal quantum states. We assume that the action of this device is defined by a large set of control parameters, such that a given set of parameter values corresponds to the realization of a positive operator-valued measure (POVM). Given a fixed figure of merit for the discrimination process, it is iteratively optimized in the space of the control parameters. The optimization is driven by a gradient-free stochastic optimization algorithm56,57,58, which approximates the gradient of the figure of merit by a finite difference. This requires at each iteration evaluations of the figure of merit at two different points in the control parameter space. Thereby, the training is driven by experimentally acquired data. Furthermore, stochastic optimization methods have been shown to be robust against noise59, so they are a standard choice in experimental contexts. The training of the measurement device is carried out until approaches the optimal value of the figure of merit within a prescribed tolerance.

We illustrate our approach by studying minimum-error discrimination, where the figure of merit is the average retrodiction error. This figure of merit can be experimentally evaluated if, during the training step, Alice communicates the labels of the states that she sent to Bob through a classical channel. Minimum-error discrimination plays a key role in quantum imaging60, quantum reading61, image discrimination62, error-correcting codes63, and quantum repeaters64. This problem does not have a closed analytical solution except for sets of states with high symmetry. Our approach may also implement other discrimination strategies at the expense of resorting to more elaborate optimization algorithms. We first consider the minimum-error discrimination of two unknown non-orthogonal pure states. In this case, the minimum of the average error probability, which can be analytically calculated, is given by the Helstrom bound. We show that it is possible to train the measurement device to reach values very close to the Helstrom bound. We extend our analysis to d unknown non-orthogonal quantum states using d-dimensional symmetric states. Discrimination of this class of states plays an important role in processes such as quantum teleportation10,11, entanglement swapping14, and dense coding15 when carried out with partially entangled states and has already been implemented experimentally34. In this case, our approach also leads to the optimal single-shot generalized quantum measurement. However, it requires a large number of iterations. This is a consequence of the dimension of the control parameter space that scales as \(d^4\). We also show that the use of a priori information effectively reduces the number of iterations, where we consider the use of initial conditions close to the optimal measurement as well as the reduction of the dimension of the control parameter space by assuming a particular property of the optimal measurement. Finally, we consider the discrimination of unknown mixed quantum states generated by quantum channels such as phase flip.

This article is organized as follows: in “Methods” we introduce our approach to the discrimination of unknown orthogonal quantum states. In “Results” we study the properties of our approach by means of several numerical experiments. In “Conclusion” we summarize and conclude.

Methods

Alice encodes the information to be transmitted into a set \(\Omega _1=\{|\psi _q\rangle \}\) of N mutually orthogonal pure states that are generated with probabilities \(\{\eta _q\}\). The communication channel transforms the states in \(\Omega _1\) into the unknown states \(\{\rho _q\}\) in \(\Omega _2\), pure or mixed. By unknown we mean that Alice and Bob do not have explicit access to the density matrices \(\{\rho _q\}\). For simplicity, we assume that the relation between states in \(\Omega _1\) and \(\Omega _2\) is one-to-one and that the action of the channel does not change the generation (or a priori) probabilities. Upon receiving each state, Bob tries to decode the information sent by Alice using a positive operator-valued measure \(\{E_m\}\) composed of positive semi-definite matrices \(E_m\) such that \(\sum _mE_m=I \), the identity operator. The probability of obtaining the m-th measurement outcome given that the state \(\rho _q\) was sent is \(P(E_m|\rho _q)=Tr(E_m\rho _q)\). We assume that if Bob obtains the m-th measurement result, he concludes that Alice attempted to transmit the state \(|\psi _m\rangle \). This decoding rule leads to errors unless the states in \(\Omega _2\) are mutually orthogonal, which leads Bob to seek to minimize the occurrence of errors in the discrimination process. Thereby, Bob needs to find the optimal POVM that minimizes the figure of merit that accounts for the errors.

Several quantum state discrimination strategies are known, each defined by a particular figure of merit. Here, we focus on minimum-error discrimination, where the number of states to be identified is equal to the number of elements of the POVM. The probability of correctly identifying the state \(|\psi _q\rangle \) is given by \(P(E_q|\rho _q)\). Since the states in \(\Omega _2\) are generated with probabilities \(\{\eta _q\}\), the average probability of correctly identifying all states is given by

The average error probability is \(p_{err}=1-p_{corr}\). This probability, which is a function of the POVM \(\{E_m\}\) and of the unknown states \(\{\rho _k\}\), is minimized over the POVM space in order to train the measurement device. We assume that the states are fixed, that is, every time Alice aims to transmit the state \(|\psi _k\rangle \), Bob receives the same state \(\rho _k\). Since the transmitted states \(\Omega _2\) behave as a set of unknown fixed parameters, this optimization cannot be carried out with numerical methods that require explicit access to the density matrices, such as SDP.

Although \(p_{err}\) cannot be evaluated numerically, it can be obtained experimentally. The value of \(p_{err}\) corresponds to a sum over probabilities \(\eta _lTr(\rho _lE_l)\), which must be independently estimated. In order to do this Alice sends N copies of each state \(|\psi _l\rangle \) to Bob, communicating classically the label of them. These states play the role of training set. Bob measures the states with the corresponding POVM, which allows estimating the value of \(Tr(\rho _lE_l)\). We simulate the experiment that allows us to estimate this value. For simulation purposes the states \(\{\rho _l\}\) are known and thus we calculate the probabilities

These are used to generate a random number \(n_l\) from a binomial distribution with success probability \(p_l\) on a sample of size N. The probability \(p_l\) is estimated as \(n_l/N\). This procedure is repeated for each state in the set \(\{\rho _k\}\) and for each one of the POVMs at each iteration. Thereby, the average error probability is estimated as

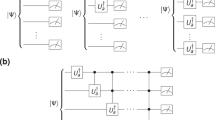

We consider that the measurement device implements a POVM using the direct sum extension. According to this, the Hilbert space \({{{\mathscr {H}}}}_s\) of the states to be discriminated is complemented with an ancilla space \({{{\mathscr {H}}}}_a\), obtaining an extended Hilbert space \({{{\mathscr {H}}}}_e={{{\mathscr {H}}}}_s\oplus {{{\mathscr {H}}}}_a\). The POVM is implemented by applying a unitary transformation U on \({{{\mathscr {H}}}}_e\) followed by a projective measurement on \({{{\mathscr {H}}}}_e\). This procedure requires adding fewer dimensions than the extension by means of the tensor product65. Let be \(d_s\) and \(d_a\) the dimensions of \({{{\mathscr {H}}}}_s\) and \({{{\mathscr {H}}}}_a\) respectively, so that the dimension of the extended system \({{{\mathscr {H}}}}_e\) is \(d=d_s+d_a\). In order to implement a POVM \(\{E_i\}\) with n elements of rank r we require \(d=rn\), so that the dimension of the ancillary system has to be \(d_a=rn-d_s\). Considering that the projective measurement on the extended system is \(\{|\,{j}\,\rangle \langle \,{j}\,|\}\), the POVM implemented has elements

where \(|\,{\varphi ^{(i)}_j}\,\rangle = \sum _{k=1}^{d_s} \langle \,{ r(i-1)+j}\,|U^\dagger |\,{k}\,\rangle |\,{k}\,\rangle \) are unnormalized state. This POVM is obtained by grouping d outcomes of the projective measurement into n set of r elements, where each group represents the result of a POVM element. For optimization purposes, the unitary matrix U is parametrized in terms of an unconstrained complex matrix Z of order \(rn\times d\). Through the QR decomposition, the matrix Z is projected into an isometric matrix S, which fulfills \(S^\dagger S = I_{d\times d}\). The isometric matrix S determines d rows of U, while the remaining have to be filled with free parameters determined only up to unitarity. The construction of the matrix U is required to obtain a physical implementation of the POVM, however, in order to obtain an explicit expression of the POVM the isometric matrix S is enough. The computation of the POVM can be done efficiently by reshaping techniques. Reshaping S into a rank-3 tensor \(S_{ijk}\) of size \(n\times r\times d\), the r-rank POVM is obtained as \(E_i = M_i^\dag M_i\), where the components of the matrices \(M_i\) are \(\langle \,{j}\,|M_{i}|\,{k}\,\rangle =S_{ijk}\). For a full-rank POVM the matrices \(M_i\) have size \(d\times d\), while for an observable they have size \(1\times d\). The average error probability \(p_{err}\) can be thus regarded as a function \(f({{\varvec{z}}})\) of the complex vector \({{{\varvec{z}}}}\) whose coefficients are given by the matrix elements of Z, that is, we have \(f({{\varvec{z}}})=p_{err}({{{\varvec{z}}}})\).

We assume that neither Alice nor Bob knows the states in \(\Omega _2\). Therefore, we cannot numerically evaluate the error probability \(p_{err}\) or its derivatives. Besides, given that we consider POVMs implemented by direct sum extension, the shift parameter rule can not be applied to evaluate the gradient66,67. Nevertheless, the error probability \(p_{err}\) can be evaluated experimentally, which allows us to overcome this problem with a gradient-free optimization algorithm. We use the Complex simultaneous perturbation stochastic approximation (CSPSA)56,57,58. This is based on the iterative rule

where \({{{\varvec{z}}}}_k\) is a complex vector in the control parameter space at the k-th iteration, \(a_k\) is a positive gain coefficient, and \({{{\varvec{g}}}}_{k}\) is an approximation of the gradient of the figure of merit \(f({{{\varvec{z}}}})\) whose components are given by

In the expression above, the quantities \(f({{{\varvec{z}}}_{k,+}})\) and \(f({{{\varvec{z}}}_{k,-}})\) are the values of the figure of merit on the vectors

where \(c_k\) is a positive gain coefficient and \(\Delta _k\) is a vector whose components are randomly generated at each iteration from the set \(\{1,-1,i,-i\}\). CSPSA allows for the existence of noise \(\zeta _{k,\pm }\) in the evaluations \(f({{{\varvec{z}}}_{k,\pm }})\).

The gain coefficients are defined by the sequences

and

where \(\{s, r, A, a, b\}\) are gain parameters. The values of the gain parameters are chosen to achieve the best possible rate of convergence. Therefore, the selection of the values itself becomes a costly optimization problem whose solution depends on the objective function and the particular optimizer. To avoid this problem, two sets of gain parameters are commonly used. Standard gain parameters with \(s=0.602\), \(r=0.101\), \(A=10{,}000.0\), \(a=2.25\), and \(b=0.5\), which provide fast convergence in the regime of a small number of iterations, and asymptotic gain parameters with \(s=1.0\), \(r=0.166\), \(A=0.0\), \(a=2.0\) and \(b=0.5\), which provide fast convergence in the regime of a large number of iterations.

According to Eq. (6), the optimization algorithm requires in each iteration the value of the objective function at two different points in the search space. In our case, this implies that two POVMs must be measured in each iteration to acquire the statistic required to estimate the average error probability. Each POVM is evaluated using an ensemble of size N and consequently the total ensemble size \(N_t\) used by the measurement training proposed here after \(k_t\) iterations is given by \(N_t=2Nk_t\). Therefore, if the measurement training is limited by a fixed amount of total ensemble size \(N_t\), it must be distributed between the ensemble size N to measure each POVM and the total number of iterations \(k_t\) in such way that the best optimum is achieved. In addition, inexpensive classical processing is also required at each iteration.

Results

We start to analyze our approach by considering the simplest case, namely, the discrimination of two unknown orthogonal pure states. We assume that Alice prepares the states \(\{|0\rangle ,|1\rangle \}\) with a priori probabilities \(\eta _0\) and \(\eta _1\), respectively. These orthogonal states are transformed by the communication channel into the states,

where the parameter s corresponds to the real-valued inner product \(\langle \psi _0|\psi _1\rangle \). In this scenario, the optimal average error probability is given by the Helstrom bound18

which can be achieved by measuring an observable.

We assume that the value of s is unknown. The training of the measurement device is carried out without the use of a priori information. In particular, the training does not use the facts that the transmitted states are pure and that the optimal measurement is an observable. For a given value of s our training procedure leads to a quantum measurement characterized by a value \({\tilde{p}}_{err}\) close to the optimal value given by the Helstrom bound. This is depicted in Fig. 1a, which shows the value of \({\tilde{p}}_{err}\) achieved by the training procedure as a function of s for \(\eta _0=\eta _1=1/2\). Since the optimization algorithm is stochastic, for each value of s we repeat the procedure considering 100 randomly chosen initial guesses in the control parameter space and 100 iterations. The statistic generated by each POVM is simulated using an ensemble size \(N=150\). In Fig. 1a the solid black line describes the Helstrom bound while the solid blue dots indicate the median of \({\tilde{p}}_{err}\) calculated over the 100 repetitions. The blue error bars describe the interquartile range. As is apparent from this figure, the training of the measurement device provides a median of \({\tilde{p}}_{err}\) that is very close to the Helstrom bound, where the difference \(|{\tilde{p}}_{err}-p_{err}|\) is on the order of \(10^{-2}\). The training procedure leads to similar results for other values of generation probabilities.

The total ensemble \(N_t\) can be split among the total number \(k_t\) of iterations and the ensemble size N is used to estimate the statistics of each POVM. This raises the question of whether for a fixed total ensemble better accuracy is achieved by increasing \(k_t\) or N. Figure 1b,c show the impact on \({\tilde{p}}_{err}\) for different splittings of \(N_t=15\times 10^3\). In Fig. 1b we have \(N=1500\) and \(k_t=10\) and in Fig. 1c we have \(N=50\) and \(k_t=300\). As these two figures indicate, a much better accuracy is obtained by splitting the total ensemble in a small ensemble N and a large number \(k_t\) of iterations. In particular, in Fig. 1c the difference \(|{\tilde{p}}_{err}-p_{err}|\) is in the order of \(10^{-3}\), that is, one order of magnitude smaller than in the case of Fig. 1a. Furthermore, the interquartile range becomes narrower indicating less variability in the set of estimates \(\{{\tilde{p}}_{err}\}\) for a given \(p_{err}\).

The features exhibited by Fig. 1 can be explained by two properties of the optimization algorithm. First, we use asymptotic gain parameters, which guarantee convergence for a high number of iterations, and second, the optimization algorithm tolerates noise in the figure of merit evaluation. Therefore, for a fixed total ensemble size \(N_t\), it seems better to increase the number of iterations as much as possible as long as the ensemble N allows to overcome the error in the figure of merit evaluation. Furthermore, since in the first few iterations the optimization algorithm is still far from the optimum, accurate figure-of-merit evaluations do not contribute to algorithm convergence.

Median of the estimated minimum-error probability \({\tilde{p}}_{err}\) as a function of the inner product s between two unknown pure states given by Eq. (11) for \(\eta _0=\eta _1=1/2\). Solid black line corresponds to the minimum-error probability \(p_{err}\) given by the Helstrom bound in Eq. (12). Solid blue dots correspond to the median of \({\tilde{p}}_{err}\) calculated over 100 initial conditions for each value of s. Blue error bars indicate the interquartile range. (a) \(N=150\) and \(k_t=100\), (b) \(N=1500\) and \(k_t=10\), and (c) \(N=50\) and \(k_t=300\). Asymptotic gain parameters are used.

The training of the measurement device for the discrimination of a larger number of states is demonstrated via symmetric states. These are given by the expression

where d is the dimension of \({{{\mathscr {H}}}}_s\), \(\omega = \exp {(2\pi i / d)}\), and the coefficients \(c_m\) are constrained by the normalization condition. As long as the generation probabilities are equal, d non-orthogonal symmetric states can be identified by measuring an observable whose eigenstates are given by the Fourier transform of the canonical base \(\{|m\rangle \}\) (with \(m=0,\dots ,d-1\)). The minimum-error discrimination of symmetric states has been experimentally demonstrated with high accuracy in dimensions up to \(d=21\)34. The discrimination of symmetric states typically arises in the processes of quantum teleportation, entanglement swapping and dense coding. These use a maximally entangled quantum channel as resource. If the entanglement decreases along the generation of the channel, then the performance of the process can be enhanced by resorting to the local discrimination of symmetric states, where the coefficients \(c_k\) entering in Eq. (13) are given by the real coefficients of the partially entangled state. If in addition, the channel coefficients are unknown, then our approach can be used.

In the case of three symmetric states, the channel coefficients are parameterized as \(c_0=\cos (\theta _1/2)\cos (\theta _2/2)\) and \(c_1=\sin (\theta _1/2)\cos (\theta _2/2)\) with \(\theta _1\) and \(\theta _2\) in the interval \([0,\pi ]\). Figure 2a shows \({\tilde{p}}_{err}\) as a function of \(\theta _1\) for a particular value of \(\theta _2\). The solid black line corresponds to the optimal minimum error discrimination probability \(p_{err}\) while the solid blue dots indicate the median of \({\tilde{p}}_{err}\) calculated on 100 initial conditions for each value of \(\theta _1\) after \(10^3\) iterations using an ensemble size \(N=10^3\). The difference \(|p_{err}-{\tilde{p}}_{err}|\) is on the order of \(10^{-2}\), as in the case of Fig. 1, but is obtained with a higher number of iterations and a larger ensemble size. In the case of a higher number of states we resort to a bi-parametric family of symmetric states given by \(c^2_k\propto 1-\root d \of {[(k-j_0+1)/(d-j_0)]\alpha }\) if \(k\ge j_0\) and \(c^2_k\propto 1\) if \(k<j_0\), where \(j_0=1,\dots ,d-1\) and \(\alpha \in [0,1]\). Figure 2b,c show the behavior of \({\tilde{p}}_{err}\) as a function of \(\alpha \) and \(j_0=2\) for \(d=4\) and \(d=5\), respectively. In both cases the ensemble size is \(N=300\) and the median was calculated over 100 initial conditions for each value of \(\alpha \). As in Fig. 2a, the difference \(|p_{err}-{\tilde{p}}_{err}|\) is on the order of \(10^{-2}\). To achieve this result, however, it was necessary to increase the number of iterations to \(6\times 10^3\) and \(1.2\times 10^4\) for \(d=4\) and \(d=5\), respectively.

Thus, as we increase the number n of states to be discriminated and the dimension d of the Hilbert space, the number of iterations \(k_t\) and the ensemble size N required to achieve a given tolerance also increase. This is due to the fact that the dimension of the search space, that is, the number of parameters that control the measurement device, increases as \(nd^2\). In addition, the probabilities entering in the estimate of \(p_{err}\) of Eq. (3) are obtained using as a resource a given ensemble size. As the number of probabilities increases it is necessary to increase the ensemble size N to obtain probability estimates that lead to a given tolerance.

Median of the estimated minimum-error probability \({\tilde{p}}_{err}\) for symmetric states as a function of: (a) \(\theta _1\) for \(d=3\), (b) \(\alpha \) for \(d=4\), and (c) \(\alpha \) for \(d=5\). Solid black line corresponds to the optimal minimum-error probability \(p_{err}\). Solid blue dots correspond to the median of \({\tilde{p}}_{err}\) calculated over 100 initial conditions for each set of symmetric states. Blue error bars indicate the interquartile range. (a) \(N=300\) and \(k_t=300\). (b) \(N=300\) and \(k_t=6\times 10^3\). (c) \(N=300\) and \(k_t=1.2\times 10^4\). Asymptotic gain parameters are used.

Median of the estimated minimum-error probability \({\tilde{p}}_{err}\) as a function of the inner product s between two unknown pure states given by Eq. (11). Solid black line corresponds to the minimum-error probability \(p_{err}\) given by the Helstrom bound of Eq. (12). Solid blue dots indicate the median of \({\tilde{p}}_{err}\) calculated over 100 initial conditions for each value of s. Blue error bars indicate the interquartile range. (a) \(\eta _0=\eta _1=1/2\), (b) \(\eta _0=1/3\) and \(\eta _1=2/3\), and (c) \(\eta _0=2/5\) and \(\eta _1=3/5\). Simulations are carried out with an ensemble size \(N=50\) and total number of iterations \(k_t=50\). Asymptotic gain parameters are used.

So far we have considered training the measurement device assuming the most general quantum measurement. This typically conveys an increase in the resources required for the training. As our previous simulations indicate, as we increase the number n of states to discriminate, as well as the dimension d, the training of the measurement device consumes even more resources, that is, larger ensembles and higher iteration numbers. To reduce the resources required for training, it is customary to reduce the dimension of the search space. This is done by imposing a set of conditions on the measurement device. This occurs when we have a priori information that allows us to ascertain that the optimal measurement satisfies a given condition. For instance, if the non-orthogonal states to be discriminated via the minimum-error strategy are pure and \(n=d\), then we can assume that the optimal POVM is an observable. This effectively decreases the dimension of the search space. Another possibility is that we are interested in reaching a given value of the minimum-error probability in a particular family of measurement devices, in which case we don’t need the optimal measurement.

This is depicted in Fig. 3, where we reproduce the Helstrom bound for states in Eq. (11) by optimizing in the set of observables. In Fig. 3a we show the case of equal generation probabilities. In this case, the training was carried out using an ensemble size \(N=50\) and a total number of iterations \(k_{t}=50\), which leads to a difference \(|p_{err}-{\tilde{p}}_{err}|\) is on the order of \(10^{-3}\). This result can be compared to the one illustrated in Fig.1c, where the same ensemble size is used but with a much larger number of iterations \(k_t=300\). Therefore, the reduction in the dimension of the search space leads to a reduction of the total ensemble \(N_{tot}\) by a factor of 6. A similar result holds in Fig. 3b,c for different values of the generation probabilities. Let us note that the initial condition in the search space is randomly chosen, that is, we do not assume as initial condition an observable close to the optimal one.

Discrimination of unknown non-orthogonal mixed single-qubit states generated by a phase flip channel. (a) Solid red line indicates the median value of \({\tilde{p}}_{err}\) for two randomly chosen states, calculated over 100 repetitions, as a function of the number of iterations. Solid blue line corresponds to the optimal value of \(p_{err}\) obtained via semidefinite programming. (b) Solid red line indicates the median value of \(|{\tilde{p}}_{err}-p_{err}|\) for 2000 randomly chosen states calculated over 100 repetitions, as a function of the number of iterations. Shaded green area corresponds to the interquartile range and ensemble size \(N=300\). Standard gain parameters are used.

Finally, we consider a more realistic scenario in which two single-qubit orthogonal states \(| \psi _0 \rangle \) and \(| \psi _1 \rangle \) generated by Alice are subjected to the action of a phase flip channel68. The action of this channel onto a single-qubit density matrix \(\rho \) is defined by the relation

where the parameter p represent the strength of the channel and \(\sigma _z=|0\rangle \langle 0|-|1\rangle \langle 1|\). The phase flip channel nullifies the off-diagonal terms in the density operator with respect to the canonical basis \(\{|0\rangle ,|1\rangle \}\) while decreasing the purity. We assume that the states transmitted by Alice are random pure states and that the value of \(p=3/5\) is unknown. The fidelity between the pure states and the noisy states in Fig. 4a is 0.785.

Figure 4a shows the result of training the measurement device to discriminate the states generated by the phase flip channel after acting on states \(|\psi _0\rangle \) and \(|\psi _1\rangle \). State \(|\psi _0\rangle \) is chosen randomly according to a Haar-uniform distribution, which fixes the state \(|\psi _1\rangle \). The training was initialized with the measurement that optimally discriminates the pure states sent by Alice. Fig. 4a displays the median (continuous red line) of \({\tilde{p}}_{err}\), calculated over 100 independent repetitions of the optimization procedure, as a function of the number of iterations. The continuous blue line corresponds to the solution \(p_{err}\) of the optimization of the minimum error for the states generated by the phase flip channel via semidefinite programming. Clearly, the value of \({\tilde{p}}_{err}\) obtained by training the measurement device converges to the optimal value \(p_{err}\). The interquartile range, described by the shaded green area, is very narrow indicating a very small variability of the training with respect to the initial conditions. Similar results hold for other values of the parameter p, which controls the convergence rate toward the optimal value of \(p_{err}\). Figure 4b displays the median of \(|{\tilde{p}}_{err}-p_{err}|\) averaged over 1000 pairs of states \(|\psi _0\rangle \) and \(|\psi _1\rangle \), where each state \(|\psi _0\rangle \) is independently chosen according to a Haar-uniform distribution and the optimization procedure is repeated 100 times, as a function of the number of iterations. As can be seen in this figure, all optimization attempts converge for all pairs of states while exhibiting a very narrow interquartile range. Thus, measurement training provides consistent and accurate results.

Conclusions

Here, we have studied the problem of discriminating unknown non-orthogonal quantum states. This situation occurs when two parties try to transmit information encoded in orthogonal quantum states that are transformed into non-orthogonal states by the action of a partially characterized quantum channel. Since the communicating parties do not know the states generated by the channel, standard approaches to discriminate non-orthogonal quantum states cannot be applied. Instead, we have proposed to train a single-shot measurement to optimally discriminate unknown non-orthogonal quantum states. This device is controlled by a large set of parameters, such that a given set of parameter values corresponds to the realization of a positive operator-valued measure (POVM). The measurement device is iteratively optimized in the space of the control parameters, or search space, to achieve the minimum value of the error probability, that is, we seek to implement the minimum-error discrimination strategy. The optimization is driven by a gradient-free stochastic optimization algorithm that approximates the gradient of the error probability by a finite difference. This requires at each iteration evaluation of the error probability at two different points in the search space. Thereby, the training is driven by experimentally acquired data. The choice of a stochastic optimization method is based on its robustness against noise.

We have studied the proposed approach using numerical simulations. First, we have shown that our approach leads to values of the error probability that are very close to the optimum. This was done in the case of two 2-dimensional unknown non-orthogonal pure states, where the optimal value of the average error probability is given by the Helstrom bound. Since the training method requires the estimation of probabilities, the total ensemble is regarded as a resource. This is divided evenly throughout the iterations of the training method. We have shown that the best results, that is, a value of the error probability closer to the Helstrom bound, can be obtained for a fixed total ensemble size by increasing the number of iterations. Thereafter, we have extended our result to the case of d d-dimensional symmetric states for \(d=3,4,5\), where our method also provides accurate results. However, to achieve a fixed accuracy as we increase the number of states and the dimension, it is necessary to increase the ensemble size and, consequently, the number of iterations. To avoid this, we have reduced the dimension of the search space by assuming that the required measurement has some special property. In particular, we have assumed that the optimal measurement is an observable. Thereby, in the case of two non-orthogonal pure states we have achieved a considerable reduction by a factor 1/6 in the ensemble size, which leads to an equal reduction in the number of iterations. Finally, we have applied the training procedure to the phase flip channel and shown that it is possible to achieve a value of the error probability close to the optimal one. Note that our proposal does not require data post-processing methods, such as maximum likelihood or Bayesian inference, which helps reduce computational cost and avoids exponential scaling of multipartite quantum states.

Our proposal finds applications whenever two parties intend to communicate through a channel whose characterization is difficult or costly. For instance, processes such as quantum teleportation, entanglement swapping, and dense coding, when performed through a partially entangled channel, can become a problem of local discrimination of non-orthogonal states8,9,10,11,12,13. If the description of the entangled channel is not available, then the states to be discriminated are unknown, in which case our method can also be applied. Recently, the problem of optimally discriminating between different configurations of a complex scattering system has been studied69 from the point of view of quantum state discrimination, where several non-orthogonal quantum states of light are associated with different hypotheses about a scattering system. These must be resolved with the best possible accuracy, which is limited by the Helstrom bound in the simplest case. Our training method can also be applied to this problem by finding the best average error probability.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Wootters, W. K. & Zurek, W. H. A single quantum cannot be cloned. Nature 299, 802–803. https://doi.org/10.1038/299802a0 (1982).

Bell, J. S. On the einstein podolsky rosen paradox. Phys. Physique 1, 195–200. https://doi.org/10.1103/physicsphysiquefizika.1.195 (1964).

Gisin, N. Quantum cloning without signaling. Phys. Lett. A 242, 1–3. https://doi.org/10.1016/s0375-9601(98)00170-4 (1998).

Pusey, M. F., Barrett, J. & Rudolph, T. On the reality of the quantum state. Nature Phys. 8, 475–478. https://doi.org/10.1038/nphys2309 (2012).

Schmid, D. & Spekkens, R. W. Contextual advantage for state discrimination. Phys. Rev. X 8, 011015. https://doi.org/10.1103/physrevx.8.011015 (2018).

Chefles, A. Quantum state discrimination. Contemp. Phys. 41, 401–424. https://doi.org/10.1080/00107510010002599 (2000).

Bae, J. & Kwek, L.-C. Quantum state discrimination and its applications. J. Phys. A: Math. Theor. 48, 083001. https://doi.org/10.1088/1751-8113/48/8/083001 (2015).

Delgado, A., Roa, L., Retamal, J. C. & Saavedra, C. Entanglement swapping via quantum state discrimination. Phys. Rev. A 71, 012303. https://doi.org/10.1103/PhysRevA.71.012303 (2005).

Solís-Prosser, M. A., Delgado, A., Jiménez, O. & Neves, L. Deterministic and probabilistic entanglement swapping of nonmaximally entangled states assisted by optimal quantum state discrimination. Phys. Rev. A 89, 012337. https://doi.org/10.1103/PhysRevA.89.012337 (2014).

Neves, L., Solís-Prosser, M. A., Delgado, A. & Jiménez, O. Quantum teleportation via maximum-confidence quantum measurements. Phys. Rev. A 85, 062322. https://doi.org/10.1103/PhysRevA.85.062322 (2012).

Roa, L., Delgado, A. & Fuentes-Guridi, I. Optimal conclusive teleportation of quantum states. Phys. Rev. A 68, 022310. https://doi.org/10.1103/PhysRevA.68.022310 (2003).

Chefles, A. Unambiguous discrimination between linearly independent quantum states. Phys. Lett. A 239, 339–347. https://doi.org/10.1016/S0375-9601(98)00064-4 (1998).

Marques, B. et al. Optimal entanglement concentration for photonic qutrits encoded in path variables. Phys. Rev. A 87, 052327. https://doi.org/10.1103/PhysRevA.87.052327 (2013).

Solís-Prosser, M. A., Delgado, A., Jiménez, O. & Neves, L. Deterministic and probabilistic entanglement swapping of nonmaximally entangled states assisted by optimal quantum state discrimination. Phys. Rev. A 89, 012337. https://doi.org/10.1103/physreva.89.012337 (2014).

Pati, A. K., Parashar, P. & Agrawal, P. Probabilistic superdense coding. Phys. Rev. A 72, 012329. https://doi.org/10.1103/physreva.72.012329 (2005).

Holevo, A. Statistical decision theory for quantum systems. J. Multivar. Anal. 3, 337–394. https://doi.org/10.1016/0047-259x(73)90028-6 (1973).

Yuen, H., Kennedy, R. & Lax, M. Optimum testing of multiple hypotheses in quantum detection theory. IEEE Trans. Inf. Theory 21, 125–134. https://doi.org/10.1109/tit.1975.1055351 (1975).

Helstrom, C. W. Quantum detection and estimation theory. J. Stat. Phys. 1, 231–252. https://doi.org/10.1007/bf01007479 (1969).

Hausladen, P. & Wootters, W. K. A ‘pretty good’ measurement for distinguishing quantum states. J. Mod. Opt. 41, 2385–2390. https://doi.org/10.1080/09500349414552221 (1994).

Hausladen, P., Jozsa, R., Schumacher, B., Westmoreland, M. & Wootters, W. K. Classical information capacity of a quantum channel. Phys. Rev. A 54, 1869–1876. https://doi.org/10.1103/PhysRevA.54.1869 (1996).

Ivanovic, I. How to differentiate between non-orthogonal states. Phys. Lett. A 123, 257–259. https://doi.org/10.1016/0375-9601(87)90222-2 (1987).

Dieks, D. Overlap and distinguishability of quantum states. Phys. Lett. A 126, 303–306. https://doi.org/10.1016/0375-9601(88)90840-7 (1988).

Peres, A. How to differentiate between non-orthogonal states. Phys. Lett. A 128, 19. https://doi.org/10.1016/0375-9601(88)91034-1 (1988).

Chefles, A. & Barnett, S. M. Optimum unambiguous discrimination between linearly independent symmetric states. Phys. Lett. A 250, 223–229. https://doi.org/10.1016/s0375-9601(98)00827-5 (1998).

Croke, S., Andersson, E., Barnett, S. M., Gilson, C. R. & Jeffers, J. Maximum confidence quantum measurements. Phys. Rev. Lett. 96, 070401. https://doi.org/10.1103/physrevlett.96.070401 (2006).

Jiménez, O., Solís-Prosser, M. A., Delgado, A. & Neves, L. Maximum-confidence discrimination among symmetric qudit states. Phys. Rev. A 84, 062315. https://doi.org/10.1103/physreva.84.062315 (2011).

Bagan, E., Muñoz-Tapia, R., Olivares-Rentería, G. A. & Bergou, J. A. Optimal discrimination of quantum states with a fixed rate of inconclusive outcomes. Phys. Rev. A 86, 040303(R). https://doi.org/10.1103/physreva.86.040303 (2012).

Herzog, U. Optimal state discrimination with a fixed rate of inconclusive results: Analytical solutions and relation to state discrimination with a fixed error rate. Phys. Rev. A 86, 032314. https://doi.org/10.1103/physreva.86.032314 (2012).

Cook, R. L., Martin, P. J. & Geremia, J. M. Optical coherent state discrimination using a closed-loop quantum measurement. Nature 446, 774–777. https://doi.org/10.1038/nature05655 (2007).

Barnett, S. M. & Riis, E. Experimental demonstration of polarization discrimination at the helstrom bound. J. Mod. Opt. 44, 1061–1064. https://doi.org/10.1080/09500349708230718 (1997).

Clarke, R. B. M. et al. Experimental realization of optimal detection strategies for overcomplete states. Phys. Rev. A 64, 012303. https://doi.org/10.1103/physreva.64.012303 (2001).

Mohseni, M., Steinberg, A. M. & Bergou, J. A. Optical realization of optimal unambiguous discrimination for pure and mixed quantum states. Phys. Rev. Lett. 93, 200403. https://doi.org/10.1103/physrevlett.93.200403 (2004).

Waldherr, G. et al. Distinguishing between nonorthogonal quantum states of a single nuclear spin. Phys. Rev. Lett. 109, 180501. https://doi.org/10.1103/physrevlett.109.180501 (2012).

Solís-Prosser, M., Fernandes, M., Jiménez, O., Delgado, A. & Neves, L. Experimental minimum-error quantum-state discrimination in high dimensions. Phys. Rev. Lett. 118, 100501. https://doi.org/10.1103/physrevlett.118.100501 (2017).

Solís-Prosser, M. A., Jiménez, O., Delgado, A. & Neves, L. Enhanced discrimination of high-dimensional quantum states by concatenated optimal measurement strategies. Quant. Sci. Technol. 7, 015017. https://doi.org/10.1088/2058-9565/ac37c4 (2021).

Solís-Prosser, M. A. et al. Experimental multiparty sequential state discrimination. Phys. Rev. A 94, 042309. https://doi.org/10.1103/PhysRevA.94.042309 (2016).

Gómez, S. et al. Experimental quantum state discrimination using the optimal fixed rate of inconclusive outcomes strategy. Sci. Rep. 12, 17312. https://doi.org/10.1038/s41598-022-22314-w (2023).

Holevo, A. Bounds for the quantity of information transmitted by a quantum communication channel. Probl. Inf. Transm. 9, 177–183 (1973).

Eldar, Y. C., Megretski, A. & Verghese, G. C. Designing optimal quantum detectors via semidefinite programming. IEEE Trans. Inf. Theory 49, 1007–1012 (2003).

Eldar, Y. C. A semidefinite programming approach to optimal unambiguous discrimination of quantum states. IEEE Trans. Inf. Theory 49, 446–456 (2003).

Chen, H., Wossnig, L., Severini, S., Neven, H. & Mohseni, M. Universal discriminative quantum neural networks. Quantum Mach. Intell. 3, 1. https://doi.org/10.1007/s42484-020-00025-7 (2020).

Patterson, A. et al. Quantum state discrimination using noisy quantum neural networks. Phys. Rev. Res. 3, 013063. https://doi.org/10.1103/physrevresearch.3.013063 (2021).

Dušek, M. & Bužek, V. Quantum-controlled measurement device for quantum-state discrimination. Phys. Rev. A 66, 022112. https://doi.org/10.1103/physreva.66.022112 (2002).

Bergou, J. A. & Hillery, M. Universal programmable quantum state discriminator that is optimal for unambiguously distinguishing between unknown states. Phys. Rev. Lett. 94, 160501. https://doi.org/10.1103/physrevlett.94.160501 (2005).

Hayashi, A., Hashimoto, T. & Horibe, M. Reexamination of optimal quantum state estimation of pure states. Phys. Rev. A 72, 032325. https://doi.org/10.1103/physreva.72.032325 (2005).

Bergou, J. A., Bužek, V., Feldman, E., Herzog, U. & Hillery, M. Programmable quantum-state discriminators with simple programs. Phys. Rev. A 73, 062334. https://doi.org/10.1103/physreva.73.062334 (2006).

Probst-Schendzielorz, S. T. et al. Unambiguous discriminator for unknown quantum states: An implementation. Phys. Rev. A 75, 052116. https://doi.org/10.1103/physreva.75.052116 (2007).

Zhou, T. Unambiguous discrimination between two unknown qudit states. Quant. Inf. Process. 11, 1669–1684. https://doi.org/10.1007/s11128-011-0327-x (2011).

Ursin, R. et al. Entanglement-based quantum communication over 144km. Nature Phys. 3, 481–486. https://doi.org/10.1038/nphys629 (2007).

Toyoshima, M. et al. Free-space quantum cryptography with quantum and telecom communication channels. Acta Astronaut. 63, 179–184. https://doi.org/10.1016/j.actaastro.2007.12.012 (2008).

Jin, X.-M. et al. Experimental free-space quantum teleportation. Nature Photon. 4, 376–381. https://doi.org/10.1038/nphoton.2010.87 (2010).

Steinlechner, F. et al. Distribution of high-dimensional entanglement via an intra-city free-space link. Nat. Commun. 8, 15971. https://doi.org/10.1038/ncomms15971 (2017).

Liao, S.-K. et al. Satellite-relayed intercontinental quantum network. Phys. Rev. Lett. 120, 030501. https://doi.org/10.1103/physrevlett.120.030501 (2018).

Anguita, J. & Cisterna, J. Algorithmic decoding of dense oam signal constellations for optical communications in turbulence. Opt. Express 30, 13540–13555. https://doi.org/10.1364/OE.455425 (2022).

Anguita, J. A., Neifeld, M. A. & Vasic, B. V. Turbulence-induced channel crosstalk in an orbital angular momentum-multiplexed free-space optical link. Appl. Opt. 47, 2414–2429. https://doi.org/10.1364/AO.47.002414 (2008).

Utreras-Alarcón, A., Rivera-Tapia, M., Niklitschek, S. & Delgado, A. Stochastic optimization on complex variables and pure-state quantum tomography. Sci. Rep. 9, 16143. https://doi.org/10.1038/s41598-019-52289-0 (2019).

Zambrano, L., Pereira, L., Niklitschek, S. & Delgado, A. Estimation of pure quantum states in high dimension at the limit of quantum accuracy through complex optimization and statistical inference. Sci. Rep. 10, 12781. https://doi.org/10.1038/s41598-020-69646-z (2020).

Gidi, J. A. et al. Stochastic optimization algorithms for quantum applications, https://doi.org/10.48550/ARXIV.2203.06044 (2022).

Rambach, M. et al. Robust and efficient high-dimensional quantum state tomography. Phys. Rev. Lett. 126, 100402. https://doi.org/10.1103/physrevlett.126.100402 (2021).

Tan, S.-H. et al. Quantum illumination with gaussian states. Phys. Rev. Lett. 101, 253601. https://doi.org/10.1103/physrevlett.101.253601 (2008).

Pirandola, S. Quantum reading of a classical digital memory. Phys. Rev. Lett. 106, 090504. https://doi.org/10.1103/physrevlett.106.090504 (2011).

Nair, R. & Yen, B. J. Optimal quantum states for image sensing in loss. Phys. Rev. Lett. 107, 193602. https://doi.org/10.1103/physrevlett.107.193602 (2011).

Lloyd, S., Giovannetti, V. & Maccone, L. Sequential projective measurements for channel decoding. Phys. Rev. Lett. 106, 250501. https://doi.org/10.1103/physrevlett.106.250501 (2011).

van Loock, P. et al. Hybrid quantum repeater using bright coherent light. Phys. Rev. Lett. 96, 240501. https://doi.org/10.1103/physrevlett.96.240501 (2006).

Chen, P.-X., Bergou, J. A., Zhu, S.-Y. & Guo, G.-C. Ancilla dimensions needed to carry out positive-operator-valued measurement. Phys. Rev. A 76, 060303(R). https://doi.org/10.1103/physreva.76.060303 (2007).

Li, J., Yang, X., Peng, X. & Sun, C.-P. Hybrid quantum-classical approach to quantum optimal control. Phys. Rev. Lett. 118, 150503. https://doi.org/10.1103/physrevlett.118.150503 (2017).

Schuld, M., Bergholm, V., Gogolin, C., Izaac, J. & Killoran, N. Evaluating analytic gradients on quantum hardware. Phys. Rev. A 99, 032331. https://doi.org/10.1103/physreva.99.032331 (2019).

Nielsen, M. A. & Chuang, I. L. Quantum Computation and Quantum Information: 10th Anniversary. (Cambridge University Press, Cambridge, 2011).

Bouchet, D., Rachbauer, L. M., Rotter, S., Mosk, A. P. & Bossy, E. Optimal control of coherent light scattering for binary decision problems. Phys. Rev. Lett. 127, 253902. https://doi.org/10.1103/PhysRevLett.127.253902 (2021).

Acknowledgements

This work was supported by ANID – Millennium Science Initiative Program – ICN17_012. AD was supported by FONDECYT Grants 1231940 and 1230586. LP was supported by ANID-PFCHA/DOCTORADO-BECAS-CHILE/2019-772200275, the CSIC Interdisciplinary Thematic Platform (PTI+) on Quantum Technologies (PTI-QTEP+), the CAM/FEDER Project No. S2018/TCS-4342 (QUITEMAD-CM), and the Proyecto Sinérgico CAM 2020 Y2020/TCS-6545 (NanoQuCo-CM). LZ was supported by ANID-PFCHA/DOCTORADO-NACIONAL/2018-21181021, EU NextGen Funds, the Government of Spain (Severo Ochoa CEX2019-000910-S), Fundació Cellex, Fundació Mir-Puig and Generalitat de Catalunya (CERCA). DC was supported by ANID-PFCHA/DOCTORADO-NACIONAL/2023-21231465.

Author information

Authors and Affiliations

Contributions

L.P., L.Z., and A.D. formulated the proposed method. D.C., L.P., and L.Z. performed the numerical simulations. All authors contributed equally to the analysis of the results and the writing of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Concha, D., Pereira, L., Zambrano, L. et al. Training a quantum measurement device to discriminate unknown non-orthogonal quantum states. Sci Rep 13, 7460 (2023). https://doi.org/10.1038/s41598-023-34327-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-34327-0

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.