Abstract

This work presents the straightforward design of an integral controller with an anti-windup structure that prevents undesirable behavior under actuator saturation and improves the closed-loop performance of a class of nonlinear oscillators. The proposed integral controller has an adaptive gain that includes the absolute value of the control error, so that the integral action is turned off when the controller saturates. The closed-loop stability analysis is performed within the Lyapunov framework, from which it is concluded that the closed-loop system behaves in an asymptotically stable way. The methodology is applied to a Rikitake-type oscillator in a single-input single-output (SISO) configuration, for both regulation and trajectory tracking. For comparison, an equivalent fixed-gain integral controller is also implemented to assess the anti-windup properties of the proposed control structure. Numerical experiments show the superior performance of the proposed controller.


Introduction

The control of nonlinear systems with highly complex behavior is currently an important issue in science and engineering1,2,3,4. As is well known, nonlinear systems can present steady-state multiplicity, with possible unstable homoclinic and heteroclinic manifolds5,6. The local presence of zero eigenvalues at equilibrium points7,8, input multiplicity, and related phenomena9,10 can also affect the controllability of a specific nonlinear system and complicate the correct design of control laws11,12,13.

The control of nonlinear systems, including chaotic dynamical systems, has been studied for several years14,15,16,17,18. Chaos control via adaptive, sliding-mode, predictive, input-to-state linearizing, fuzzy-logic, neural-network, and robust proportional-integral (PI) controllers, among other approaches, has been reported in the open literature19,20,21,22,23,24,25. However, most of these designs rely on complex mathematical frameworks and must be coupled, for example, with sophisticated optimization algorithms and nonlinear system models, which can complicate their real-time application and their operational adjustment by engineers25. Other issues also remain. One of them concerns the physical restrictions of chaotic oscillators and their manipulated control inputs: the state variables of an oscillator belong to a compact set bounded from above and below, and the manipulated inputs are confined to intervals between a minimum and a maximum physical value26,27,28.

From the above, a classical control problem arises: saturation of the control action. The importance of accounting for control input saturation in the design of practical control systems is well established. Saturation degrades the expected closed-loop performance of the system and, in extreme conditions, may lead to closed-loop instability29.

Saturation of the control input has mainly been addressed through anti-windup designs, and applications to linear systems and PI controllers dominate the open literature30,31,32,33. PI controllers are widely used in both linear and nonlinear systems. The proportional term stabilizes the system's behavior close to the required reference or set point, but high proportional gains are needed to reduce the offset34, i.e., the difference between the current value of the controlled variable and the set point, which makes the control action very sensitive. Proportional controllers are also sensitive to noisy measurements, and when the system reaches the set point the proportional action vanishes, so the system effectively operates in open loop; if an external disturbance is then present, the system can become unstable34. To improve the performance of a proportional controller, an integral term of the control error can be added; the integral term eliminates the offset, keeps the controller active, and rejects some external disturbances35. For these reasons, controllers based solely on the integral term have also been used to regulate several systems.

From the perspective of linear controllers, actuator saturation has been analyzed through the integral windup phenomenon (also called integrator windup or reset windup). It refers to the situation in a proportional-integral (PI) feedback regulator in which a large set-point change occurs and the integral term accumulates a significant error during the rise; the controller then overshoots, and the output keeps increasing while this accumulated error is unwound.

These physical restrictions have important consequences for control designs that include the integral term of a PI controller. If the controller reaches saturation before the system reaches the required reference point or trajectory, the closed-loop system is said to be in a windup condition: the integral part of the controller theoretically keeps adding control effort, but the actuator is physically saturated, so the demanded action cannot be delivered. In other words, the process input is limited at the bottom or top of its physical range, the control error remains constant, and the specific consequence is excessive overshoot35.

Anti-windup designs for linear systems likewise dominate the open literature36,37,38,39. They can involve turning the controller off for periods of time until the response falls back into a satisfactory range; this becomes necessary when the regulator can no longer affect the controlled variable. In practical applications, this task is done manually by process engineers.

This problem can be addressed in several ways: by initializing the integrator to a preset value, for instance its value before the problem occurred; by increasing the set point along a suitable ramp; or by disabling the integral function until the controlled process variable enters the controllable region. Other options prevent the integral term from accumulating above or below predetermined bounds, or back-calculate the integral term so as to constrain the process within feasible bounds. Alternatively, the integral term can be reset to zero every time the control error equals or crosses zero. This prevents the regulator from driving the system to accumulate the same error integral in the opposite direction to that caused by the disturbance40.
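To make the windup mechanism and one of these remedies concrete, the Python sketch below compares a plain integral controller with a clamped one, in which integration is frozen while the actuator is saturated and the error would push it further into saturation. This is a textbook anti-windup scheme, not the controller proposed in this work; the first-order plant, gain, limits, and set-point schedule are hypothetical choices made only to expose the effect.

```python
# Toy illustration of integrator windup and clamping (conditional integration).
# Everything here is hypothetical: the plant dy/dt = -y + u, the gain, the
# limits and the set-point schedule are chosen only to expose windup.


def sat(v, lo, hi):
    """Clip v to the actuator range [lo, hi]."""
    return max(lo, min(hi, v))


def run(clamp, ki=2.0, u_min=0.0, u_max=0.5, dt=0.01, t_end=20.0):
    """Integral-only control u = sat(ki * z), dz/dt = r - y.

    The set point is 1.0 for t < 10 (unreachable, because y cannot exceed
    u_max = 0.5 at steady state) and 0.2 afterwards. Returns the histories
    of the integrator state z and of the output y.
    """
    y = z = 0.0
    zs, ys = [], []
    for k in range(int(round(t_end / dt))):
        t = k * dt
        r = 1.0 if t < 10.0 else 0.2
        e = r - y
        v = ki * z                      # unsaturated controller output
        u = sat(v, u_min, u_max)
        pushing_up = v >= u_max and e > 0
        pushing_down = v <= u_min and e < 0
        # Clamping: stop integrating while the actuator is saturated and the
        # error would drive it further into saturation.
        if not (clamp and (pushing_up or pushing_down)):
            z += e * dt
        y += (-y + u) * dt              # explicit Euler step of the plant
        zs.append(z)
        ys.append(y)
    return zs, ys
```

With `clamp=False` the integrator state keeps growing while the output is pinned at the limit, so after the set point drops the actuator stays saturated for a long time; with `clamp=True` the integrator stays near the value that just saturates the actuator, and the output settles at the new set point.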

Anti-windup control design for nonlinear systems remains a real challenge because of the practical need for realizable controllers. Examples include linearizing controllers obtained via plant inversion, whose drawback is their reliance on a predictive phenomenological model, and optimal control techniques based on Pontryagin's maximum principle or the Euler-Lagrange approach, which have important applications such as secure data transmission and the stabilization of chemical systems and chaotic oscillators41,42,43. For these reasons, linear PI controllers have been used successfully, and several approaches that avoid windup by turning off the integral part of the controller through different algorithms have been designed44,45,46,47,48; however, these controllers have complex structures that are difficult to implement physically.

In this work, a simple control strategy is proposed that uses only the integral of the control error, weighted by an adaptive gain that automatically turns off the control action when the controller saturates, thereby avoiding windup. The proposed controller is successfully applied to a class of nonlinear chaotic oscillators for regulation and trajectory tracking.

Chaotic oscillator model

Nonlinear oscillator models have been employed as benchmarks for synchronization in the framework of secure data transmission; practical examples include the Chen, Van der Pol, and Rikitake chaotic oscillators, among others.

The Rikitake chaotic dynamical system is a model that attempts to explain the irregular polarity switching of the Earth’s geomagnetic field49,50. The frequent and irregular reversals of the Earth’s magnetic field inspired several early studies involving electrical currents within the Earth’s molten core. One of the first such models to report reversals was the Rikitake-type two-disk dynamo model51. The system exhibits Lorenz-type chaos and orbits around two unstable fixed points. This system describes the currents of two coupled dynamo disks.

The 3-D dynamics of the Rikitake-type dynamo system are described as follows:

They can also be described in vector form:

where \({\bf {x}}=\left[ \begin{matrix}x_1\\ x_2\\ x_3\\ \end{matrix}\right] ,\) \({\bf {A}}=\left[ \begin{matrix}-1&{}1&{}0\\ 0&{}-1&{}0\\ 0&{}0&{}-\delta \\ \end{matrix}\right] , \) \({\bf {f}}\left( {\bf {x}}\right) =\left[ \begin{matrix}0\\ x_1x_3\\ \gamma ^2\left( 1-x_1x_2\right) \\ \end{matrix}\right] ,\) and \({\bf {B}}=\left[ \begin{matrix}0\\ 0\\ 1\\ \end{matrix}\right] .\)
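Assuming the usual control-affine arrangement, with \(u\) the scalar input entering through \({\bf {B}}\), the vector form and its component-wise expansion implied by these matrices would read:

```latex
\dot{\bf x} = {\bf A}{\bf x} + {\bf f}({\bf x}) + {\bf B}\,u
\quad\Longleftrightarrow\quad
\left\{
\begin{aligned}
\dot x_1 &= -x_1 + x_2,\\
\dot x_2 &= -x_2 + x_1 x_3,\\
\dot x_3 &= -\delta\, x_3 + \gamma^2\left(1 - x_1 x_2\right) + u.
\end{aligned}
\right.
```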

The parameter values are \(\delta =0.01\) and \(\gamma =2.0\).

Here, \({\bf {x}}\ \in {\mathbb {R}}^3\) is the state variable vector, which belongs to a compact set \(\Phi \) and is therefore naturally bounded; \({\bf {f}}({\bf {x}})\) is assumed to be a smooth vector field, \({\bf {f}}\left( \cdot \right) : {\mathbb {R}}^3\rightarrow {\mathbb {R}}^3\); and \(u({\bf {x}})\in{ \mathbb {R}}\) is the scalar control input.
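A minimal Python sketch of this model follows, assuming \(\dot{\bf x} = {\bf A}{\bf x} + {\bf f}({\bf x}) + {\bf B}u\) with the matrices and parameter values above. The helper names (`rikitake`, `rk4_step`, `simulate_open_loop`) are hypothetical, and a fixed-step classical Runge-Kutta integrator is swapped in for the MATLAB ode23s solver used in the study, so individual trajectories will not match the published figures point by point.

```python
# Sketch: open-loop Rikitake-type oscillator, assuming dx/dt = A x + f(x) + B u
# with the matrices given in the text (delta = 0.01, gamma = 2.0).
DELTA = 0.01
GAMMA = 2.0


def rikitake(x, u=0.0, delta=DELTA, gamma=GAMMA):
    """Return dx/dt for state x = (x1, x2, x3) and scalar input u."""
    x1, x2, x3 = x
    return (
        -x1 + x2,                                      # row 1 of A x
        -x2 + x1 * x3,                                 # row 2 of A x + f(x)
        -delta * x3 + gamma**2 * (1.0 - x1 * x2) + u,  # row 3; u enters via B
    )


def rk4_step(fun, x, dt, *args):
    """One classical fourth-order Runge-Kutta step for dx/dt = fun(x, *args)."""
    k1 = fun(x, *args)
    k2 = fun(tuple(xi + 0.5 * dt * ki for xi, ki in zip(x, k1)), *args)
    k3 = fun(tuple(xi + 0.5 * dt * ki for xi, ki in zip(x, k2)), *args)
    k4 = fun(tuple(xi + dt * ki for xi, ki in zip(x, k3)), *args)
    return tuple(
        xi + dt / 6.0 * (a + 2 * b + 2 * c + d)
        for xi, a, b, c, d in zip(x, k1, k2, k3, k4)
    )


def simulate_open_loop(x0=(0.1, 0.1, 0.1), t_end=100.0, dt=0.01):
    """Integrate the uncontrolled system (u = 0); return the state history."""
    xs = [x0]
    x = x0
    for _ in range(int(round(t_end / dt))):
        x = rk4_step(rikitake, x, dt)
        xs.append(x)
    return xs
```

Since the open-loop regime is described as chaotic, small changes in step size or solver alter individual trajectories; only the qualitative behavior should be compared.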

Control design

Proposition 1

The integral controller in (3) stabilizes the dynamic behavior of system (2) for both regulation and trajectory tracking:

Proof of Proposition 1

Let us define the control error dynamics of system (1) under controller (3) as:

Then, Eq. (4) is rewritten in vector notation:

with: \({\bf {e}}=\left[ \begin{array}{l} e_1 \\ e_2 \\ e_3 \\ e_4 \\ \end{array} \right] , \) \(\Gamma ({\bf {e}})=-\left[ \begin{array}{llll} 1 &{} -1 &{} 0 &{} 0 \\ 0 &{} 1 &{} 0 &{} 0 \\ 0 &{} 0 &{} \delta &{} -k_3\text {abs}(e_3) \\ 0 &{} 0 &{} -1 &{} 0 \\ \end{array} \right] , \) \({\bf {F}}({\bf {e}})=\left[ \begin{array}{c} 0 \\ e_1e_3 \\ -\gamma ^2e_1e_2 \\ 0 \\ \end{array} \right] , \) and \(\Delta =\left[ \begin{array}{l} 0 \\ 0 \\ \gamma ^2+\delta x_{3r} \\ 0 \\ \end{array} \right] . \)
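The sketch below evaluates these error dynamics numerically, assuming the vector form of Eq. (5) reads \(\dot{\bf e} = \Gamma({\bf e}){\bf e} + {\bf F}({\bf e}) + \Delta\) with the matrices exactly as listed. Written out, the fourth row gives \(\dot e_4 = e_3\), so \(e_4\) behaves as the integral of \(e_3\), and the third row contains the term \(k_3\,\text{abs}(e_3)\,e_4\), i.e., an integral action whose gain scales with \(|e_3|\). The function name is hypothetical.

```python
def error_dynamics(e, k3, x3r, delta=0.01, gamma=2.0):
    """Evaluate de/dt = Gamma(e) e + F(e) + Delta for e = (e1, e2, e3, e4).

    Assumes the vector form of Eq. (5) is de/dt = Gamma(e) e + F(e) + Delta,
    with Gamma(e), F(e) and Delta exactly as listed in the text.
    """
    e1, e2, e3, e4 = e
    # Gamma(e) = -[[1, -1, 0, 0], [0, 1, 0, 0],
    #              [0, 0, delta, -k3|e3|], [0, 0, -1, 0]]
    gamma_mat = [
        [-1.0, 1.0, 0.0, 0.0],
        [0.0, -1.0, 0.0, 0.0],
        [0.0, 0.0, -delta, k3 * abs(e3)],  # adaptive integral gain k3|e3|
        [0.0, 0.0, 1.0, 0.0],              # de4/dt = e3: integrator state
    ]
    F = [0.0, e1 * e3, -gamma**2 * e1 * e2, 0.0]
    Delta = [0.0, 0.0, gamma**2 + delta * x3r, 0.0]
    lin = [sum(g * ej for g, ej in zip(row, e)) for row in gamma_mat]
    return [a + b + c for a, b, c in zip(lin, F, Delta)]
```

For example, `error_dynamics((1.0, 2.0, 3.0, 4.0), k3=1.0, x3r=1.0)` evaluates each row term by term and returns approximately `[1.0, 1.0, 7.98, 3.0]`.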

The control error is defined as \({\bf {e}}={\bf {x}}-{\bf {x}}_r\), i.e., the difference between the actual state vector and the reference vector. The reference vector \({\bf {x}}_r\) is constant in the regulation case and time-varying in the tracking case.

By assuming that \(0\le \Vert {\bf {e}}\Vert \le {e}_B\); \(0\le {e}_B <\infty \), where \({e}_B\) is the finite upper bound of the control error, let us define:

Let us consider the following quadratic form as a Lyapunov function:

The corresponding time derivative is defined as:

Substituting Eq. (5) into Eq. (9) yields:

Equation (10) yields:

By applying the Rayleigh inequality to Eq. (11):
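For reference, the Rayleigh inequality states that, for any real symmetric matrix \({\bf M}\), the quadratic form is bracketed by the extreme eigenvalues of \({\bf M}\). Which matrix plays this role in Eqs. (11) and (12) is not stated in the surviving text, so \({\bf M}\) is a generic placeholder here. For a non-symmetric matrix such as \(\Gamma({\bf e})\), the inequality applies to its symmetric part, because \({\bf e}^T\Gamma{\bf e} = {\bf e}^T\tfrac12(\Gamma+\Gamma^T){\bf e}\).

```latex
\lambda_{\min}({\bf M})\,\Vert {\bf e}\Vert^2 \;\le\; {\bf e}^{T}{\bf M}\,{\bf e} \;\le\; \lambda_{\max}({\bf M})\,\Vert {\bf e}\Vert^2,
\qquad {\bf M} = {\bf M}^{T}\in\mathbb{R}^{4\times 4}.
```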

Then, substituting Eqs. (12) to (15) into Eq. (11) yields:

where:

In Eq. (17), \(\Vert {\bf {e}}\Vert _{\Gamma ^{*}}\) is defined as

Therefore, by an ultimate-boundedness argument, the regulation error \({\bf {e}}(t)\) is uniformly bounded for any initial condition \({\bf {e}}(t_0)\), in the sense that \({\bf {e}}(t)\in \{{\bf {e}}\ |\ \Vert {\bf {e}}\Vert \le \mathfrak {R}\}\) for some \(\mathfrak {R}>0\), and finally:
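As a generic illustration of how ultimate-boundedness arguments of this kind usually conclude (not necessarily the exact form of the final bound here), suppose the Lyapunov derivative satisfies \(\dot V \le -cV + \eta\) with constants \(c>0\) and \(\eta\ge 0\), and take \(V = \tfrac12\Vert{\bf e}\Vert^2\) as an assumed quadratic form. The comparison lemma then gives:

```latex
V(t) \le V(t_0)\,e^{-c(t-t_0)} + \frac{\eta}{c}\left(1 - e^{-c(t-t_0)}\right)
\quad\Longrightarrow\quad
\limsup_{t\to\infty} \Vert {\bf e}(t)\Vert \le \sqrt{\frac{2\eta}{c}} .
```

The radius of the ultimate bound therefore shrinks as the decay rate \(c\) grows or the residual term \(\eta\) shrinks, and \(\eta = 0\) recovers asymptotic convergence.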

Numerical experiments and results

Numerical simulations were carried out on a personal computer with an Intel Core i7 processor. The system of ordinary differential equations in Eq. (5) was solved numerically with the ode23s solver of MATLAB\(^{ \text {TM}}\), from the initial conditions \(x_{10} = 0.1\), \(x_{20} = 0.1\) and \(x_{30} = 0.1\), following McMillen51. A single-input single-output (SISO) control configuration is selected, with \(x_3\) as the controlled variable. The system operates in open loop from start-up until \(t = 100\) time units, when controller (3) is turned on. A first set of simulations addresses regulation, with the set point \(x_{3r} = 1.0\); a second set addresses tracking, where system (1) is forced to follow the trajectory \(x_{3r} = 2.5\ \sin (0.1t + 0.5)\). For both control objectives, the control input is saturated between the following lower and upper bounds:

We set \(u_{min}=-10\) and \(u_{max}=20\).
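These bounds are assumed to act through the standard saturation map, which passes the controller output unchanged inside \([u_{min}, u_{max}]\) and clips it to the nearest limit outside. A minimal Python sketch with the values above (the function name `sat` is hypothetical):

```python
U_MIN, U_MAX = -10.0, 20.0  # actuator limits used in the simulations


def sat(v, u_min=U_MIN, u_max=U_MAX):
    """Standard saturation: return v clipped to the interval [u_min, u_max]."""
    if u_min > u_max:
        raise ValueError("u_min must not exceed u_max")
    return min(max(v, u_min), u_max)
```

For example, `sat(25.0)` returns `20.0`, `sat(-15.0)` returns `-10.0`, and `sat(3.5)` returns `3.5`.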

For comparison purposes, a similar standard integral controller with a fixed gain is applied as follows:

To make the operating conditions of controllers (21) and (3) as similar as possible, both control laws use the same gain \(k_1 = -1.0\).
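The comparison can be sketched in Python under explicit assumptions. The proposed law is assumed to be \(u = \text{sat}(k\,|e_3|\,z)\) and the fixed-gain law (21) to be \(u = \text{sat}(k\,z)\), with \(\dot z = e_3 = x_3 - x_{3r}\); both forms are inferred from the third and fourth rows of \(\Gamma({\bf e})\), not copied from Eqs. (3) and (21). Parameters, limits, gain, initial conditions, and switch-on time follow the text, while the fixed-step RK4 integrator and all function names are hypothetical substitutes for the authors' MATLAB setup.

```python
import math

# Hypothetical reconstruction, not the authors' code. Assumed laws:
#   proposed (Eq. 3):     u = sat(K * |e3| * z),  dz/dt = e3 = x3 - x3r(t)
#   fixed gain (Eq. 21):  u = sat(K * z)
DELTA, GAMMA = 0.01, 2.0       # model parameters from the text
U_MIN, U_MAX = -10.0, 20.0     # actuator limits from the text
K = -1.0                       # shared gain k1 = -1.0 from the text
T_ON = 100.0                   # controllers switched on at t = 100


def sat(v):
    """Clip the unsaturated controller output to [U_MIN, U_MAX]."""
    return min(max(v, U_MIN), U_MAX)


def control(state, t, x3r, adaptive):
    """Saturated input; zero during the open-loop phase t < T_ON."""
    if t < T_ON:
        return 0.0
    e3 = state[2] - x3r(t)
    gain = K * abs(e3) if adaptive else K  # adaptive k|e3| versus fixed k
    return sat(gain * state[3])


def rhs(state, t, x3r, adaptive):
    """Augmented closed loop: plant states x1..x3 plus integrator state z."""
    x1, x2, x3, _ = state
    u = control(state, t, x3r, adaptive)
    dz = x3 - x3r(t) if t >= T_ON else 0.0  # integrate e3 only after switch-on
    return (
        -x1 + x2,
        -x2 + x1 * x3,
        -DELTA * x3 + GAMMA**2 * (1.0 - x1 * x2) + u,
        dz,
    )


def _shift(state, k, h):
    """Return state + h * k component-wise."""
    return tuple(s + h * ki for s, ki in zip(state, k))


def simulate(adaptive, x3r=lambda t: 1.0, t_end=200.0, dt=0.01):
    """Fixed-step RK4 run from x0 = (0.1, 0.1, 0.1); returns (ts, xs, us)."""
    state = (0.1, 0.1, 0.1, 0.0)
    ts, xs, us = [], [], []
    for i in range(int(round(t_end / dt))):
        t = i * dt
        ts.append(t)
        xs.append(state)
        us.append(control(state, t, x3r, adaptive))
        k1 = rhs(state, t, x3r, adaptive)
        k2 = rhs(_shift(state, k1, 0.5 * dt), t + 0.5 * dt, x3r, adaptive)
        k3 = rhs(_shift(state, k2, 0.5 * dt), t + 0.5 * dt, x3r, adaptive)
        k4 = rhs(_shift(state, k3, dt), t + dt, x3r, adaptive)
        state = tuple(
            s + dt / 6.0 * (a + 2 * b + 2 * c + d)
            for s, a, b, c, d in zip(state, k1, k2, k3, k4)
        )
    return ts, xs, us
```

Calling `simulate(adaptive=True)` and `simulate(adaptive=False)` gives the two regulation runs, and passing `x3r=lambda t: 2.5 * math.sin(0.1 * t + 0.5)` gives the tracking case. Because the controller forms are inferred, results from this sketch should not be read as reproducing the published figures.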

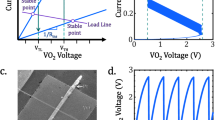

Figure 1 shows the open-loop and closed-loop dynamic behavior of the controlled state variable \(x_3\) for the regulation case. Under the proposed controller, the trajectory reaches the reference point \(x_{3r} = 1.0\) almost immediately. Under the integral controller, the trajectory shows larger oscillatory overshoots, and the controller is unable to regulate \(x_3\), which exhibits a sustained oscillation.

Figures 2 and 3 show the open-loop and closed-loop behavior of the uncontrolled state variables \(x_1\) and \(x_2\), respectively. As a consequence of the behavior of the controlled variable \(x_3\), the oscillations are suppressed under the proposed controller, and the trajectories are smoothly led to a steady state. Under the integral controller, in contrast, the uncontrolled state variables keep oscillating after the control action starts before finally reaching a steady state.

Figure 4 shows the corresponding phase portrait under the conditions of Figs. 1, 2 and 3. Under the proposed controller, the orbit converges to the steady state described above, with \(x_3 = x_{3r}\), and the oscillations are suppressed. The orbit induced by the integral controller, however, keeps oscillating with a large amplitude.

These behaviors of the state variables can be explained by the control efforts of the two controllers, shown in Fig. 5. The proposed controller behaves smoothly and practically never reaches saturation. As expected, it exhibits the desired anti-windup response, leading the controlled state variable to the required set point and preventing the oscillatory response of the uncontrolled state variables described above. The integral control law, by contrast, hits both the lower and the upper saturation limits after it is turned on, which is the windup effect. Its control effort is very high because of the large oscillation at the start of closed-loop operation and the sustained oscillation at steady state. In practical applications, these characteristics are undesirable because they can physically damage the actuator. Finally, for the regulation case, Fig. 6 shows the dynamic behavior of the regulation error E. Once the controllers are turned on at \(t = 100\) time units, the regulation error under the proposed controller goes to zero, in agreement with the results above. For the integral control law, the expected oscillatory behavior is observed, showing that the required set point is not reached.
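One simple way to quantify the lower and upper saturation visible in Fig. 5 is to count how often a logged control signal sits on each limit. The helper below is a hypothetical metric, not one used in the paper; it takes any list of sampled control values together with the bounds \(u_{min}=-10\) and \(u_{max}=20\).

```python
def saturation_report(us, u_min=-10.0, u_max=20.0, tol=1e-9):
    """Return (fraction of samples at the lower limit, fraction at the upper).

    `us` is a list of sampled control values; a sample counts as saturated
    when it lies within `tol` of a limit.
    """
    if not us:
        raise ValueError("empty control history")
    n = len(us)
    low = sum(1 for u in us if u <= u_min + tol) / n
    high = sum(1 for u in us if u >= u_max - tol) / n
    return low, high
```

For instance, `saturation_report([20.0, -10.0, 0.0, 5.0])` returns `(0.25, 0.25)`; a controller with the anti-windup behavior described above would be expected to give fractions near zero after switch-on.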

The proposed controller can also force the controlled state variable to follow the sinusoidal trajectory described above, which changes the control objective to trajectory tracking. A similar set of numerical simulations was performed to compare the proposed controller and the integral controller. Figure 7 shows the open-loop and closed-loop dynamic behavior of the controlled state variable \(x_3\), with the controllers turned on at \(t = 100\) time units. The proposed controller drives the trajectory almost instantaneously to the required sinusoidal reference without overshoot, whereas the integral control law causes large overshoots and is not able to reach the required trajectory.
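The tracking reference and the corresponding error \(e_3 = x_3 - x_{3r}\), following the convention \({\bf e} = {\bf x} - {\bf x}_r\) defined earlier, can be written as follows; the helper names are hypothetical. The reference has period \(2\pi/0.1 \approx 62.8\) time units.

```python
import math


def x3_ref(t):
    """Sinusoidal reference x3r(t) = 2.5 sin(0.1 t + 0.5) for the tracking case."""
    return 2.5 * math.sin(0.1 * t + 0.5)


def tracking_error(ts, x3s):
    """Pointwise tracking error e3(t) = x3(t) - x3r(t) for logged samples."""
    return [x3 - x3_ref(t) for t, x3 in zip(ts, x3s)]
```

For example, `x3_ref(0.0)` is \(2.5\sin(0.5) \approx 1.19856\), and a state that follows the reference exactly gives a zero error sequence.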

Figures 8 and 9 show the dynamic behavior of the uncontrolled state variables \(x_1\) and \(x_2\) in the tracking case. The sinusoidal behavior of the controlled state variable \(x_3\) suppresses the complex oscillations of the uncontrolled states, which then reach a steady state faster.

As in the regulation case, Fig. 10 shows the phase portrait for the tracking case. Again, the wide orbit, associated with oscillatory behavior, is produced by the integral controller, whereas the proposed control law yields a narrow orbit that forces the \(x_3\) trajectory onto the sinusoidal reference.

Figure 11 shows the control effort of both controllers. The integral controller again hits the lower and upper saturation limits, which prevents it from forcing the system onto the reference trajectory and produces large oscillations in the control effort, which, as mentioned, is undesirable. The proposed controller, in contrast, exhibits an anti-windup effect that prevents saturation and allows it to achieve the required closed-loop objective. Note that its control signal oscillates smoothly, as required to maintain the desired trajectory. Finally, Fig. 12 shows the tracking error: the proposed controller achieves its objective adequately, without time delay, overshoot, or long settling times, whereas the integral control law does not reach the desired trajectory and shows large oscillations.

Conclusion

This work presents an alternative design for a class of integral controllers with adaptive gain. The adaptive gain is a function of the absolute value of the control error, and its main purpose is to turn off the control action when the controller saturates, thereby preventing windup. The methodology is successfully applied to a Rikitake-type chaotic oscillator for both regulation and trajectory tracking, where it prevents windup under control saturation. Numerical experiments illustrate the performance of the methodology, and the proposed controller is compared with an equivalent integral controller with a fixed control gain.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Edalati, L., Sedigh, A. K., Shooredeli, M. A. & Moarefianpour, A. Adaptive fuzzy dynamic surface control of nonlinear systems with input saturation and time-varying output constraints. Mech. Syst. Signal Process. 100, 311–329 (2018).

Tanaskovic, M., Fagiano, L., Novara, C. & Morari, M. Data-driven control of nonlinear systems: An on-line direct approach. Automatica 75, 1–10 (2017).

Wang, H., Liu, P. X., Zhao, X. & Liu, X. Adaptive fuzzy finite-time control of nonlinear systems with actuator faults. IEEE Trans. Cybern. 50, 1786–1797 (2019).

Montoya, O. D. & Gil-González, W. Nonlinear analysis and control of a reaction wheel pendulum: Lyapunov-based approach. Eng. Sci. Technol. Int. J. 23, 21–29 (2020).

Li, X. & Wang, H. Homoclinic and heteroclinic orbits and bifurcations of a new Lorenz-type system. Int. J. Bifurc. Chaos 21, 2695–2712 (2011).

Tigan, G. & Llibre, J. Heteroclinic, homoclinic and closed orbits in the Chen system. Int. J. Bifurc. Chaos 26, 1650072 (2016).

Chen, Y. & Yang, Q. A new Lorenz-type hyperchaotic system with a curve of equilibria. Math. Comput. Simul. 112, 40–55 (2015).

Wei, Z. & Yang, Q. Dynamical analysis of a new autonomous 3-D chaotic system only with stable equilibria. Nonlinear Anal. Real World Appl. 12, 106–118 (2011).

Sprott, J. C. Strange attractors with various equilibrium types. Eur. Phys. J. Spec. Top. 224, 1409–1419 (2015).

Zhou, P. & Yang, F. Hyperchaos, chaos, and horseshoe in a 4D nonlinear system with an infinite number of equilibrium points. Nonlinear Dyn. 76, 473–480 (2014).

Bao, H. et al. Memristor-based canonical Chua’s circuit: Extreme multistability in voltage–current domain and its controllability in flux-charge domain. Complexity 2018, 5935637 (2018).

Li, T. & Yu, L. Exact controllability for first order quasilinear hyperbolic systems with zero eigenvalues. Chin. Ann. Math. 24, 415–422 (2003).

Yuan, Z., Zhao, C., Di, Z., Wang, W. X. & Lai, Y. C. Exact controllability of complex networks. Nat. Commun. 4, 1–9 (2013).

Kapitaniak, T. Synchronization of chaos using continuous control. Phys. Rev. E 50, 1642–1644 (1994).

Tavazoei, M. S. & Haeri, M. Chaos control via a simple fractional-order controller. Phys. Lett. A 372, 798–807 (2008).

Mahmoodabadi, M. & Jahanshahi, H. Multi-objective optimized fuzzy-PID controllers for fourth order nonlinear systems. Eng. Sci. Technol. Int. J. 19, 1084–1098 (2016).

Rajagopal, K., Vaidyanathan, S., Karthikeyan, A. & Duraisamy, P. Dynamic analysis and chaos suppression in a fractional order brushless DC motor. Electr. Eng. 99, 721–733 (2017).

Din, Q. Complexity and chaos control in a discrete-time prey–predator model. Commun. Nonlinear Sci. Numer. Simul. 49, 113–134 (2017).

Kumar, S., Matouk, A. E., Chaudhary, H. & Kant, S. Control and synchronization of fractional-order chaotic satellite systems using feedback and adaptive control techniques. Int. J. Adapt. Control Signal Process. 35, 484–497 (2021).

Emiroglu, S. Nonlinear model predictive control of the chaotic Hindmarsh–Rose biological neuron model with unknown disturbance. Eur. Phys. J. Spec. Top. 231, 979–991 (2022).

Nazzal, J. M. & Natsheh, A. N. Chaos control using sliding-mode theory. Chaos Solit. Fract. 33, 695–702 (2007).

Femat, R. & Solis-Perales, G. Robust Synchronization of Chaotic Systems Via Feedback (Springer, 2009).

Golouje, Y. N. & Abtahi, S. M. Chaotic dynamics of the vertical model in vehicles and chaos control of active suspension system via the fuzzy fast terminal sliding mode control. J. Mech. Sci. Technol. 35, 31–43 (2021).

Mani, P., Rajan, R., Shanmugam, L. & Joo, Y. H. Adaptive control for fractional order induced chaotic fuzzy cellular neural networks and its application to image encryption. Inf. Sci. 491, 74–89 (2019).

Tabatabaei, S. M., Kamali, S., Jahed-Motlagh, M. R. & Yazdi, M. B. Practical explicit model predictive control for a class of noise-embedded chaotic hybrid systems. Int. J. Control Autom. Syst. 17, 857–866 (2019).

Niemann, H. A controller architecture with anti-windup. IEEE Control Syst. Lett. 4, 139–144 (2019).

Galeani, S., Tarbouriech, S., Turner, M. & Zaccarian, L. A tutorial on modern anti-windup design. Eur. J. Control 15, 418–440 (2009).

Niemann, H. A controller architecture with anti-windup. IEEE Control Syst. Lett. 4, 139–144 (2020).

Hu, C. et al. Lane keeping control of autonomous vehicles with prescribed performance considering the rollover prevention and input saturation. IEEE Trans. Intell. Transport. Syst. 21, 3091–3103 (2019).

Chiah, T. L., Hoo, C. L. & Chung, E. C. Y. Study of anti-windup PI controllers with different coupling–decoupling tuning gains in motor speed. Asian J. Control 24, 2581–2590 (2022).

Dwivedi, P., Bose, S., Pandey, S. et al. Comparative analysis of PI control with anti-windup schemes for front-end rectifier. in 2020 IEEE First International Conference on Smart Technologies for Power, Energy and Control (STPEC). 1–6 (IEEE, 2020).

Chen, Y., Ma, K. & Dong, R. Dynamic anti-windup design for linear systems with time-varying state delay and input saturations. Int. J. Syst. Sci. 53, 2165–2179 (2022).

Aguilar-Ibanez, C. et al. PI-type controllers and \(\sigma \)-\(\delta \) modulation for saturated DC-DC buck power converters. IEEE Access 9, 20346–20357 (2021).

Aguilar-López, R. & Neria-González, M. Closed-Loop Productivity Analysis of Continuous Chemical Reactors under Different Tuning Rules in PI Controller. (2022).

Astrom, K. J. PID Controllers: Theory, Design, and Tuning (The International Society of Measurement and Control, 1995).

Cristofaro, A., Galeani, S., Onori, S. & Zaccarian, L. A switched and scheduled design for model recovery anti-windup of linear plants. Eur. J. Control 46, 23–35 (2019).

Lin, Z. Control design in the presence of actuator saturation: from individual systems to multi-agent systems. Sci. China Inf. Sci. 62, 1–3 (2019).

Shin, H.-B. & Park, J.-G. Anti-windup PID controller with integral state predictor for variable-speed motor drives. IEEE Trans. Ind. Electron. 59, 1509–1516 (2011).

Azar, A. T. & Serrano, F. E. Design and modeling of anti wind up PID controllers. in Complex System Modelling and Control Through Intelligent Soft Computations. 1–44 (2015).

Huang, B., Zhai, M., Lu, B. & Li, Q. Gain-scheduled anti-windup PID control for LPV systems under actuator saturation and its application to aircraft. Aerosp. Syst. 5, 445–454 (2022).

Rajagopal, K., Kingni, S. T., Khalaf, A. J. M., Shekofteh, Y. & Nazarimehr, F. Coexistence of attractors in a simple chaotic oscillator with fractional-order-memristor component: Analysis, FPGA implementation, chaos control and synchronization. Eur. Phys. J. Spec. Top. 228, 2035–2051 (2019).

Rigatos, G. & Abbaszadeh, M. Nonlinear optimal control and synchronization for chaotic electronic circuits. J. Comput. Electron. 20, 1050–1063 (2021).

Aguilar-López, R. Chaos suppression via Euler–Lagrange control design for a class of chemical reacting system. Math. Probl. Eng. 2018, 1–6 (2018).

Tahoun, A. H. Anti-windup adaptive PID control design for a class of uncertain chaotic systems with input saturation. ISA Trans. 66, 176–184 (2017).

Lopes, A. N., Leite, V. J., Silva, L. F. & Guelton, K. Anti-windup TS fuzzy PI-like control for discrete-time nonlinear systems with saturated actuators. Int. J. Fuzzy Syst. 22, 46–61 (2020).

Hou, Y.-Y. Design and implementation of EP-based PID controller for chaos synchronization of Rikitake circuit systems. ISA Trans. 70, 260–268 (2017).

Lorenzetti, P. & Weiss, G. Saturating PI control of stable nonlinear systems using singular perturbations. IEEE Trans. Autom. Control (2022).

Tahoun, A. Anti-windup adaptive PID control design for a class of uncertain chaotic systems with input saturation. ISA Trans. 66, 176–184 (2017).

Donato, S., Meduri, D. & Lepreti, F. Magnetic field reversals of the earth: A two-disk Rikitake dynamo model. Int. J. Mod. Phys. B 23, 5492–5503 (2009).

Llibre, J. & Messias, M. Global dynamics of the Rikitake system. Physica D 238, 241–252 (2009).

McMillen, T. The shape and dynamics of the Rikitake attractor. Nonlinear J. 1, 1–10 (1999).

Funding

This work was supported by the Secretaría de Investigación y Posgrado of the Instituto Politécnico Nacional (SIP-IPN) under the research grant SIP20221338.

Author information


Contributions

R.A.L. conceptualized the study and conducted the numerical experiments; J.L.M.M. conducted the design, performed the analysis, and organized funding. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aguilar-López, R., Mata-Machuca, J.L. Stabilization of a chaotic oscillator via a class of integral controllers under input saturation. Sci Rep 13, 5927 (2023). https://doi.org/10.1038/s41598-023-33201-3


DOI: https://doi.org/10.1038/s41598-023-33201-3
