Abstract

The Drift-Diffusion Model (DDM) is widely accepted for two-alternative forced-choice decision paradigms thanks to its simple formalism and close fit to behavioral and neurophysiological data. However, this formalism presents strong limitations in capturing inter-trial dynamics at the single-trial level and endogenous influences. We propose a novel model, the non-linear Drift-Diffusion Model (nl-DDM), that addresses these issues by allowing the existence of several trajectories to the decision boundary. We show that the non-linear model performs better than the drift-diffusion model for an equivalent complexity. To give better intuition on the meaning of nl-DDM parameters, we compare the DDM and the nl-DDM through correlation analysis. This paper provides evidence of the functioning of our model as an extension of the DDM. Moreover, we show that the nl-DDM captures time effects better than the DDM. Our model paves the way toward more accurately analyzing across-trial variability for perceptual decisions and accounts for peri-stimulus influences.

Similar content being viewed by others

Introduction

Perceptual decision-making has been studied extensively from behavioral1,2 and neurophysiological3,4 perspectives, as it is omnipresent in daily activities. When decisions are timed, evidence accumulation models accurately describe human and animal behavior. They assume that decisions are made when enough sensory evidence has been gathered.

Among them, the Diffusion Decision Model (DDM, also called Drift-Diffusion Model)5 suggests that evidence is accumulated linearly, with a constant drift. The accumulation is additionally subject to Gaussian noise; hence the decision state can be seen as a particle following a Brownian motion. The popularity of this model yields from its intuitive formalism and good fit to behavioral1 and neurophysiological data3. It has also been shown that the DDM formalizes the optimal strategy for decision-making under time constraints6,7. Interestingly, other forms of decision models such as the Leaky-Competing Accumulator model8, and attractor models9,10 can be formulated equivalently to the DDM under certain constraints6,11.

The DDM accounts for global statistics of the behavior by describing the Response Time (RT) distribution and error rate. A major limitation of this model is that it does not consider inter-trial variability. However, behavioral studies have shown that sequential effects12 impact prior expectations and the subsequent decision process13. Traditionally, expectations are modeled through the starting point, or bias, of the accumulation process5. Recent accounts have suggested that choice history affects subsequent drift rates14. Together, these studies suggest that these parameters could be intertwined and vary over time, as participants become more familiar with the task. To address this issue,15,16 proposed an extended DDM, where starting points are uniformly distributed and drifts follow a Gaussian distribution without explicit dependence between them. However, this only provides global statistics about perceptual responses without insight into single decisions or inter-trial interactions. Moreover, the linear accumulation does not describe the variation of the dynamics at the scale of the single decision, which seems inconsistent with the aforementioned empirical observations.

Linear evidence accumulation also assumes that evidence accumulation is independent of the decision state or of the time that passes. While some models consider the effect of time on the decision17, or dynamics close to the threshold18,19, no model to our knowledge accounts for initial dynamics. For example, ambiguous stimuli could yield flat initial drifts. This is partially translated into non-decision time, as it is assumed to be when sensory evidence is processed in the brain without contributing to the decision process.

Previous attempts at single-trial fitting of decisions have been made through attractor models9,20,21, and it has been shown that these models can be reduced to a Drift-Diffusion Model6,22, that is in that case, a Langevin equation with a non-linear drift23. Its dynamics allow for transitions between decision states under fluctuating stimuli11. However, the link between each parameter and the dynamics of the model is complicated to interpret. Moreover, the reduction proposed assumes a reflection symmetry of the network to obtain the given form. This seems limiting, in particular when each perceptual decision recruits different sensory modalities.

Moreover, while the few parameters of the DDM are advantageous in terms of complexity, it can be a limiting factor when analyzing endogenous effects, such as fatigue or training on decisions. Previous works have shown that post-stroke fatigue increases the non-decision time along experiment time, while RTs tended to decrease in healthy participants24. Increased environmental requirements in terms of workload can decrease RTs and alter accuracy25. While some models have taken into account the passing of time within each trials17, no models have tried to account for more global fluctuations to our knowledge.

Here we propose a straightforward one-dimensional non-linear form to address these limitations: the non-linear Drift-Diffusion Model (nl-DDM). It recreates double-well-like dynamics from an evidence-accumulation perspective without assuming reflection symmetry. We show its validity and compare its fitting performances to these of the DDM. We first provide a formal description of the nl-DDM, relating it to the DDM. Then, we fit the models on two human behavior datasets: a lexical classification task already published26, and a multisensory classification task. Then, we used correlations to compare the parameters of both models on data simulated from DDM parameters and provide an empirical explanation of the effect of the nl-DDM parameters with analogies on the DDM. We show that it fits data equally well as the DDM while providing drift variability like the extended DDM. The dependency of the drift rate on the decision state provides a framework for more refined analyses of the decision process. Last, we considered the time spent performing the lexical task and showed that the nl-DDM modeled behavioral data significantly better than the DDM in that instance, supporting the necessity to account for the experiment time. We provide open-source code pluggable onto the PyDDM toolbox22 for reproducibility and easy use of our model.

Results

In this paper, we introduce the non-linear Drift-Diffusion Model (nl-DDM) and show that it performs better than the DDM in terms of fitting accuracy on behavior. To this aim, we fitted both models on two datasets: a lexical classification dataset previously published in26, on which we also modeled the effects of the time spent doing the experiment, and a multi-sensory classification task. To provide insight into the meaning of nl-DDM parameters, we also performed correlation analyses between nl-DDM and DDM parameters on data generated from DDM parameters and subsequently fitted by the nl-DDM.

nl-DDM formalism

Our goal was to propose a simple model in which trajectories are attracted to a boundary. Placing ourselves in the context of two-alternative choice paradigms, our model needed two attractive states. In one dimension, this forces the existence of an unstable fixed-point between the two stable fixed-points27.

Therefore, the model we propose follows a Langevin equation where the drift varies with the state of the decision. The drift equation can be written in the following form:

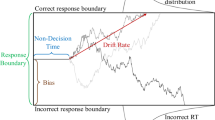

where x represents the decision variable and dx its variation in infinitesimal time dt. N(t) is a Gaussian white noise term. \(-k(x+a)(x-z)(x-a)\) represents the drift, and depends on several parameters. The parameter k is a time constant of the system, and a and z determine where the attractors, or decision boundaries, lie. \(\pm a\) represent the two attractive states, and we constrain z to the interval \(]-a, a[\) to obtain z the unstable fixed-point. In this case, the drift corresponds to the deterministic part of the equation and depends on the current decision state. A summary of the parameters of the nl-DDM is given Fig. 1, which can be compared to the description of the DDM Fig. 5. In the following, we provide a formal explanation of the meaning of each parameter.

Description of the Non-linear Drift-Diffusion Model (nl-DDM). The decision state is represented by a decision variable x traveling from a starting point (for example, drawn from a uniform distribution, centered around \(x_0\) and of width \(2s_z\). It is represented as “SP” on the figure) to a boundary (“Correct boundary” or “Incorrect boundary”) under the influence of a drift. Here, the drift depends on the current state of the decision. Depending on the position of \(x_0\) relative to z, the drift will hence have different shapes. The trajectory is also impacted by white noise so that real trajectories are similar to the thin blue lines. From the stimulus onset, the decision process is delayed by a certain non-decision time (\(T_{nd}\)). Over an ensemble of decisions, probability density functions of correct and error response times can be created, as displayed here.

The interpretation of k as a time constant is straightforward from the equation: as k increases, a decision is reached faster for any given set of parameters.

To build an intuition for the other parameters, we first consider the potential function derived from the drift term (Fig. 2):

This profile is called a double-well potential profile.

Parameter manipulation on the nl-DDM. (A–C) Potential functions of the nl-DDM for different z (A Shifting z changes the relative attractiveness of each boundary, a (B Shifting a changes the accuracy and the speed of decisions), and k (C Shifting k changes the speed of decisions). The parameters are always the same for the solid black curve: \(a=1, k=1, z=0\), allowing for a comparison of the effects of the different parameters. (D) Trajectories in the absence of noise for different values of \(x_0\), under \(a=1, k=1, z=0\). It becomes clear that the drift range for each trajectory depends on the starting point. The trajectory approaches the boundary asymptotically and will eventually be crossed since noise is omnipresent.

From Fig. 2, we can see that there are two sinks at \(\pm a\), as well as a source at z, which emerge from the topology of the system. Therefore, \(\pm a\) are the decision boundaries and control, along with z, the speed-accuracy trade-off. Taking a the boundary for correct responses and \(-a\) for incorrect ones, we can see that moving z closer to \(-a\) makes the \(-a\)-well shallower and the a-well deeper (Fig. 2A). In other words, the correct decision becomes more attractive than the incorrect one. The gradient becoming more positive on the interval [z, a], the trajectories starting on that interval also reach the correct decision faster.

By reducing a, both wells become shallower, making decisions slower (Fig. 2B). However, for a given noise scale, this also means that any perturbation in the wrong direction is easier to correct because a small perturbation in the other direction can counterbalance that effect. When the wells are deep, the decision variable is driven rapidly to the stable fixed-point, making perturbations less reversible.

We can also observe the impact of k on the potential function in Fig. 2C.

Similar to the DDM, we can fit RTs by solving the Fokker-Planck equation corresponding to the Langevin equation (Eq. 1)22. Then, a non-decision time \(T_{nd}\) shifts the resulting distribution and accounts for biological transmission delays.

This model is similar to the Double-Well Model (DWM), which emerges from attractor network models11,23. The potential profile of the DWM indeed takes the form:

Comparing this equation to Eq. (2), we observe a term in \(x^3\) that is absent from the DWM, because of the reflection symmetry assumption made in the DWM23,27. However, when \(z=0\) and \(\mu =0\), we observe the equivalence of the systems:

This equivalence is coherent with the interpretation of z and \(\mu\) as the impact of the stimulus on the decision and shows that in the absence of a stimulus, the two models follow the same behavior. Because the nl-DDM is not assuming reflection symmetry, the presence of a stimulus impacts the trajectories generated by the two models in different ways.

Model performance and comparisons

Behavioral results

It is helpful to obtain each participant’s RTs and decision accuracy for decision-making analysis, particularly for decision model fitting.

We used two datasets in this paper, described in the Methods section. They both consist of classification tasks performed by human participants. One of them is a dataset collected by Wagenmakers et al.26, in which participants had to assess whether a word presented on screen existed or not. The second one is a dataset in which participants were shown visual stimuli or a combination of visual and auditory stimuli on screen and had to classify them according to their type (either “face” or “number+sound”).

We describe here the validation conducted on the multi-sensory dataset. Analyses of the lexical dataset26 are discussed later.

On average, participants were shown \(49.82 \pm 2.42 \%\) (mean ± standard deviation, \(N=25\)) of “number+sound” stimuli, indicating the quasi-equiprobability of each stimulus. We then performed mixed-model ANOVAs on their RTs and response accuracy for both stimulus-response mapping (between-subject factor) and stimulus (within-subject factor). Across all participants and stimulus types, the mean RT is \(535 \pm 61\) ms , with an accuracy of \(98.59 \pm 0.95 \%\). For the “face” stimulus, participants responded after \(539 \pm 56\) ms with an average accuracy of \(98.51 \pm 1.17 \%\). Participants responded to the “number + sound” stimulus after \(531 \pm 69\) ms on average with an accuracy of \(98.68 \pm 0.94\%\). The difference in performance between the types of stimuli is not significant in terms of accuracy (Table 1) nor RTs (Table 2).

In the “face-left” stimulus-response mapping, where participants were instructed to click left upon face stimulus presentation and right when they were presented with a number+sound stimulus, participants responded on average within \(531 \pm 74\) ms with an accuracy of \(98.48 \pm 1.12\%\) (\(N=15\)). Participants who underwent the “face-right” mapping (\(N=10\)) responded within \(541 \pm 30\) ms with an accuracy of \(98.77\pm 0.60 \%\). The effect of the stimulus-response mapping on accuracy and RT was not significant (Tables 3 and 4). We note a marginal interaction effect between stimulus-response mapping and stimulus type on the accuracy (\(p=0.052\), Table 1). However, pairwise post-hoc comparisons revealed no difference between interaction sub-conditions (\(p_{Holm}>0.310\)).

These results show the uniformity of participant responses across mappings and stimuli.

Comparison of loss values

Parameter fitting was performed using PyDDM22 for both the nl-DDM and the DDM, minimizing the negative log-likelihood function. We fitted a model per participant and model type, resulting in 25 DDM and 25 nl-DDM on the sensory classification dataset. The DDM was fitted using 6 parameters (1 boundary, 2 drifts, i.e. one per stimulus, 1 starting point, 1 starting-point variability, 1 non-decision time), and the nl-DDM consisted of 7 parameters (k, a, 2z (one per stimulus), 1 starting point and variability, 1 non-decision time).

We computed the Bayesian Information Criterion (BIC) for each model fitted on the multi-sensory dataset to establish a comparison of model performance that considers the sample size and number of parameters for each model. This is indeed necessary when comparing performance across model types, since the number of parameters is different. We observed that the nl-DDM fitted RT data significantly better than the DDM, even when accounting for the number of parameters (Fig. 3, Shapiro-Wilk test: \(W=0.928, p=0.08\), one-tailed paired t-test, \(t(49)=1.714, p=0.046, \texttt {Cohen's}\, d=0.343, N=25\)).

Distribution of the differences between the BIC obtained after fitting the nl-DDM and fitting the DDM on the multi-sensory classification dataset. A more negative difference means a better fit of the nl-DDM compared to the DDM. This figure has been generated using JASP (0.16.0.0)28(see https://jasp-stats.org/).

Comparison of parameters

We compared the parameters of the DDM and nl-DDM using data generated from DDM parameters, varying the parameters \(B, \nu , x_0\) and \(s_z\) consecutively. Each parameter varied 100 times, resulting in 400 generated datasets, to which the parameters \(k, z, a, x_0\) and \(s_z\) of the nl-DDM were fitted. Although we fit the parameters separately for each stimulus, we merge all the results to build relations between the parameters of the DDM and the parameters of the nl-DDM.

The correlation matrix of the nl-DDM and DDM parameters across all models is given Fig. 4. Note that, since the DDM parameters were artificially varied, the correlation coefficients within DDM parameters were discarded from our analysis.

We empirically computed the relation between the parameters within the nl-DDM. We observed a strong negative correlation between a the boundary and k the time constant. This corresponds to their opposed effect on the attractiveness of the correct response. Increasing either will make the decision more attractive, so to keep the same attractiveness of the correct response, if one increases, the other should decrease. In our data, since the noise term was constant, these two terms are strongly correlated. Note that the effect of each parameter remains different, as shown on Fig. 2B and C. While increasing k deepens both wells, increasing a will deepen the wells and pull them apart. Effectively, the relation between a and k is non-linear, as seen on Supplementary Fig. S2. We noted a positive correlation between k and z, which can also be understood with Fig. 2A,C: if k increases, the correct decision well becomes deeper and thus more attractive, and to correct for this effect, z needs to increase as well. k and \(s_z\) the half-width of the starting-point distribution of the nl-DDM were related, while a related to the middle of the distribution \(x_0\) and to its half-width \(s_z\). This can be explained by the symmetric effect of k on the depth of the potential wells (Fig. 2C) and the asymmetric effect of a (Fig. 2B). In the same line, z correlated positively to \(x_0\) and negatively to \(s_z\). Increasing z results in slower correct and less accurate decisions. To maintain the same speed-accuracy trade-off, the starting-point distribution can be shifted towards the correct-decision boundary and it variability diminished so that a larger portion of it is located to the right of z, which is the attracting zone of the correct-decision boundary. Last, the starting-point parameters \(x_0\) and \(s_z\) in the nl-DDM positively correlated with each other.

Upon cross-model comparison, we first observed that the middles of the starting-point distributions and their width correlated positively, which was expected. \(x_0\) of the nl-DDM and \(s_z\) of the DDM were consequently also positively correlated, since they both correlated positively to \(s_z\) of the nl-DDM, and a negatively to \(s_z\) of the DDM for the same reason. \(x_0\) of the DDM also correlated negatively with z of the nl-DDM: increasing \(x_0\) in the DDM results in faster correct decisions and more accurate decisions. Decreasing z in the nl-DDM has the same effect, as the correct-decision well deepens and a larger proportion of the starting-point distribution is located to the right of z, hence towards the correct decision. \(s_z\) of the DDM additionally correlated negatively to z and positively to k. Increasing \(s_z\) with \(x_0=0\) in the DDM results in faster decisions and lower accuracy. Decreasing z in the nl-DDM results in faster correct decisions, which is coherent with the effect of increasing \(s_z\). Increasing k makes decisions faster and since the potential wells deepen (Fig. 2C), the decisions are also more prone to noise and hence less accurate. All these effects mirror the ones observed upon increasing \(s_z\) in the DDM.

The DDM boundary B correlated positively to k, a, z and \(x_0\) and negatively to \(s_z\) of the nl-DDM. Increasing B results in improved accuracy at the cost of slower decisions. Consistent with this effect, increasing a also increases the accuracy. Shifting the starting-point distribution towards the correct decision, i.e. to the right of z, while decreasing its variability results in more correct decisions (Fig. 2D), explaining the observed correlation. Increasing z results in slower correct responses due to the correct potential well being shallower (Fig. 2A), which mirrors the loss of speed implemented by an increase of B. Increasing k results in overall faster and less accurate decisions, which contradicts the effects of the boundary increase in the DDM. However, we also noted that k correlated positively with z and negatively with \(s_z\) of the nl-DDM. These two parameters being positively and negatively correlated to B respectively, the increase of k upon increasing of B should be a consequence of these correlations.

We also observed a significant negative correlation between z and \(\nu\). This relationship was also expected, as increasing the drift \(\nu\) in the DDM results in faster correct decisions. Mirroring this effect, z regulates the relative attractiveness of each decision well. As z becomes more negative, the correct decision (corresponding to decision boundary \(+a\)) becomes more attractive, and hence correct decisions are made faster. Another explanation for this can be derived from Fig. 2: if we shift z closer to 0, the negative and positive wells of 2 will tend to be at the same level. It means that the mean maximum drift will decrease towards zero as z increases closer to the middle of the two boundaries \(\pm a\). In other words, increasing z will decrease the drift, hence the negative correlation. Conversely, a correlated positively with \(\nu\) since pulling the potential well apart makes them more attractive (Fig. 2B). z also correlated positively with \(x_0\) of the DDM, which was expected as both have an impact on the proportion of trials that reach either boundary in the absence of noise. we noted a negative correlation between DDM drift and nl-DDM starting-point distribution. Following29, higher DDM starting-point variability results in faster error responses. Therefore, across models, if the drift becomes greater in the DDM, hence making error responses slower, the starting-point variability in the nl-DDM should diminish to have the same effect.

Correlation matrix of all parameters, computed from the parameters fitted over data simulated from DDM parameters. Pearson correlation coefficients were computed over N = 400 observations. This figure was obtained using the matplotlib (3.5.2)30-based Python library seaborn (0.11.2)31 (see https://matplotlib.org/ and https://seaborn.pydata.org/) \(^\star :p<0.05, ^{\star \star }:p<0.01, ^{\star \star \star }:p<0.001\).

Effects of time passing

The time spent performing the task is likely to impact the decision strategy, and we seek to model these effects using the DDM and the nl-DDM. We thus added a time condition to the lexical classification dataset, corresponding to whether the trial was performed in the first half of the experiment (“early” condition) or the second (“late” condition). Participant 2 did not complete all the blocks, and was eliminated from our analyses. Therefore, the following analyses are presented over 16 subjects, each exposed to all the conditions.

Model fitting

The drift and z of the DDM and nl-DDM varied as a function of the stimulus complexity (common, rare, very rare, non-existent word), and the boundary of the DDM varied depending on both the instruction (speed/accuracy) and the time condition, resulting in 4 boundaries. In the nl-DDM the effects were modeled separately using a and k, fitting k depending on the time condition and a according to the instruction. Then, the starting-point distribution and non-decision time were fitted over all trials for each model. We compared the fitting performance of the two models. The BIC of the nl-DDM was significantly smaller than the BIC obtained by the DDM (Shapiro-Wilk test: \(W=0.773, p<0.001\), one-sided Wilcoxon signed-rank test (\(BIC_{nl-DDM}<BIC_{DDM}\): \(W=34, p=0.042\)), which shows that splitting the effects of instruction and experiment time yielded better results than combining them.

Analysis of the parameters of the lexical classification dataset

We fitted both the DDM and the nl-DDM taking into account the instruction, the time of the experiment (early/late trial) and the word type for each trial. In the DDM, we hence fitted 4 drifts, corresponding to the 4 word types, and 4 boundaries, corresponding to \(2 \texttt {instructions}\times 2 \texttt {times}\). Conversely in the nl-DDM, we fitted 4z parameters (one per word type), 2a (one per instruction) and 2k (one per time of the experiment). We then performed paired t-tests to assess the discriminability of the parameters across conditions. We first compared the drift and z parameters across word types. In both the DDM and nl-DDM, we observed significant differences in the drifts and z between all word type pairs, except between rare and non-existent words (Tables 5, 6). The two models therefore discriminate between word types equally well. We observed that k differed significantly between the two time conditions (\(t(15)=4.553, p<0.001\)), and a differed significantly between the two instruction conditions (\(t(15)=4.879, p<0.001\)). Comparing the boundaries of the DDM resulted in 6 comparisons, corresponding to the Bonferroni-corrected \(\alpha =\frac{0.05}{6}=0.008\). We noted no significant difference between early and late trials in the accuracy instruction (\(t(15)=2.784, p=0.014\)), while all the other differences were significant (Table 7). The behavioral analysis (Supplementary Information 3) however underlined significant differences in RTs and accuracy across time of the experiment. The effects of time passing are therefore better transcribed by the nl-DDM than the DDM.

Discussion

We presented a non-linear model of decision-making. This model takes the form of a Langevin equation, and provides a framework in which individual trajectories of the decision variable can have different shapes under the same global parameters (Fig. 2D). We have shown that this model predicts behavioral data equally well as the DDM. From the formalism we have described, it becomes clear that inter-trial variability in drift emerges from the dynamics of the nl-DDM, offering the possibility for further single-trial analyses and modeling.

The interpretation of the nl-DDM parameters may seem counter-intuitive at first, particularly when considering that decisions are made faster when the boundaries are further apart. Our correlation analysis provided insight into bridging the meaning of nl-DDM and DDM parameters. The difference is that in the DDM, the gradient of the drift is constant, whereas it varies in decision space with the nl-DDM. By pulling the boundaries further apart, we effectively reduce the impact of one attractor on the other, making each of them more attractive. Therefore, a decision can be reached faster, at the price of accuracy. Similarly, increasing the drift in the DDM is equivalent to shifting z towards the negative boundary in the nl-DDM, as they both result in fast correct responses. However, note that these parameters are not entirely equivalent as we did not find a perfect mapping between them, meaning that the nl-DDM is conceptually different from the DDM (Fig. 4, see also Supplementary Information 2, Figs. S1 and S2).

We have shown that the nl-DDM can also account for changes in the decision dynamics that do not relate directly to a change in the experiment but rather to the state of the participants. While such an account necessitates an exponential multiplication of parameters in the DDM, the nl-DDM requires a simple duplication of the parameters. Indeed, if we again consider the case where there are two conditions (here, instruction and time), the DDM requires \(n_{\texttt {instructions}} \times n_{\texttt {times}}\) boundaries, while the nl-DDM requires \(n_{\texttt {instructions}}+n_{\texttt {times}}\) boundary parameters (a and k). The version we showed here only split the trials into two instruction and time conditions, which results in the same number of parameters in the DDM and in the nl-DDM. Note however that any more instances of either condition would have meant more parameters in the DDM relative to the nl-DDM.

We argue that drift and starting-point variability are intertwined, as transcribed in the nl-DDM and in alignment with the view that evidence accumulation starts in anticipation of stimulus apparition32. EEG research has shown a matching between pre-stimulus activity and confidence ratings in human participants33,34. The starting-point distribution models pre-stimulus states, and in the DDM the drift relates to the quality of the integrated stimulus4, with more ambiguous stimuli corresponding to lower drift rates. At the single-trial level, drift variability relates to the variation of the quality of stimulus perception and processing in the brain1. In our model, the starting point directly impacts evidence accumulation, allowing for a uniform theory of decision-making that includes explicit co-dependency of parameters. Some general forms of the DDM include a variance of the drift. In the nl-DDM, we have not implemented this possibility, as we assumed that the inter-trial variability of the drift emerged from the variability of the starting point. In neurophysiological terms, we assumed that the pre-stimulus arousal and stimulus expectations led to differences in the rate of evidence accumulation. This is supported by past observations, according to which pre-stimulus brain activation impacts RTs35,36. Pre-stimulus brain activity also modifies perceptual37 and pain38 thresholds. Therefore, depending on the pre-stimulus activity, decisions can be made even in the absence of actual evidence33,39, or under ambiguous evidence40,41. Along the same lines,42 have shown that biases were implemented through local changes in accumulation rate, which supports the intertwining of accumulation rate and pre-stimulus states. However,34,43,44,45 argue that pre-stimulus brain states should only affect the decision criterion, not how well participants perceived the stimuli. Translating the signal-detection theory to the evidence-accumulation scheme16, pre-stimulus states should only be changing the decision boundary, or equivalently the starting point, and not the drift rate. For example,34 found that pre-stimulus alpha power did not impact the accuracy of visual evidence accumulation, but only the confidence in the decision.33 found similar results with auditory stimuli. Although these observations seem to contradict our assumption that the starting point impacts the evidence-accumulation process, both phenomena could co-exist, as more extreme starting points are more attracted to the closer attractor. This results in fast and confident observations, although little evidence has been accumulated (we would be located at a plateau in our model), i.e., even if the stimulus was not well perceived.

The current analysis uses a form of the DDM without all variabilities proposed by Ratcliff and Tuerlinckx29. The reason is two-fold. First, we wanted to use simple forms of both models to emphasize the characteristics of the ground parameters of each model. One may argue that the DDM should then have been fitted using a single point as the starting point. However, this would have introduced a confound when comparing the two models. Indeed, a major advantage of the nl-DDM is the variety of dynamics that it offers depending on the starting point. Since the DDM can also be improved by adding starting-point variability, implementing it in both models seemed to be a fair compromise. Second, and related to the first, any source of variability that could have been introduced in the DDM could also have been implemented in the nl-DDM. Besides starting-point variability, non-decision time variability could also exist in the nl-DDM. As argued above, the drift and starting-point variability should be intertwined, meaning that fitting a variability of the drift would be redundant to some extent in the nl-DDM, but we could implement variability in k, which, according to our analyses on time that passes, could be a natural mirror of the remaining effects of drift variability in the DDM.

The dynamics that we propose here is rooted in empirical observations made in neurophysiological studies. More specifically, three phases can be identified in the decision trajectories: an inertia stage, a quasi-linear evidence accumulation stage, and a plateau stage. The initial inertia relates directly to the brain activation needed to integrate sensory evidence.35 and36 have shown in human EEG studies that the brain activity prior to stimulus presentation changed the speed of responses. More specifically, they showed that the more pre-activated the required sensory area, the faster the decision. The nl-DDM mimics this behavior at the single-trial level: for trials starting close to the unstable fixed-point (i.e., further from the correct decision well), the trajectories start with a plateau-like stage, whereby little evidence is accumulated because the brain would need to process the stimulus more intensively in order to extract information from it, before integrating evidence faster. This initial inertia is circumvented by shifting the starting point closer to the decision well, resulting in faster and more accurate responses. The initial inertia in the DDM is referred to as the non-decision time and encompasses both sensory processing and motor planning and execution. The nl-DDM assumes therefore that part of these processes participates in the decision process, which goes beyond the conceptualization of decision-making as the sequence of sensation, perception, and motion.

A recent review from46 shows the limitations of existing evidence-accumulation models. We try to address several of them with the nl-DDM, including the possibility for analyses beyond the global description of RTs and the formulation of initial and final dynamic changes during the decision process. In particular, our formal description has shown that different shapes of decision trajectories can co-exist within the same framework, not solely because of noise, but because of meaningful variability. We expect this model to be further used to gain insight into the across-trial variability of decisions.

The current study considered that the input was presented at the beginning of the trial and affected the decision in a constant fashion. We could also imagine more dynamic cases, where the input is processed over a finite period and participants accumulate evidence during stimulus presentation, as has been done in past DDM analyses47,48. In non-stationary contexts, the input can be considered as a variation of z in time. By shifting z to either boundary, more trajectories are attracted to the opposite boundary, hence increasing the likelihood of correct answers. In addition, it can be inferred from our formal analysis that changing z means changing the drift rate. This change in input could also explain error-correcting behaviors49 and spontaneous changes of mind50. When the stimulus ends, the DDM is modified so that the drift is null, i.e. evidence is no longer accumulated. Therefore, changes of mind are the result of noise in the system. Conversely, stimulus termination could be modeled through shifting z in the nl-DDM, which effectively modifies the drift rate of the current decision, in a way that the decision variable could toggle towards the opposite boundary upon stimulus disappearance. Conceptually, the drift in the nl-DDM not only relates to the accumulation of evidence but also encompasses decision processes related to the post-processing of evidence.

We have shown that, while similar to the DWM11 derived from attractor models23, the nl-DDM is equivalent to it only in the absence of input. A question that remains open is that of the mechanism underlying this equation. From the reduction computed in the paper by23, it would seem that a network of three populations could produce the dynamics we have described. However, the main assumption of the reduction was that the network was invariant through reflection. We argue that the mechanisms described by the nl-DDM are similar to these of the DWM, but offer a broader range of applications beyond the case of symmetrical models.

Extending this model to multiple-choice situations is another interesting ground of research. The DDM is inapplicable in such situations. The nl-DDM would require structural changes for multiple choices. Indeed, the decision variable’s trajectory is here modeled in a one-dimensional space, where the alternatives are represented as attractors. Its multiple-choice variant would require more attractors. In 1D-space, adding more stable fixed-points will result in two issues. First, traveling from one alternative to another may require passing through other decision wells, which seems incoherent with behavior. Second, adding stable fixed-points requires the implementation of as many unstable fixed-points, which would mean a two-fold increase in the number of parameters when adding one choice. A simpler solution would be to switch to a 2D-space, so there could be a central unstable fixed-point, and the subjective preference for each alternative would determine the position of each stable fixed-point.

Methods

Drift-Diffusion model

The Drift-diffusion model5 is characterized by a linear accumulation disturbed by additive noise. Formally, this can be written as the following Langevin equation (Eq. (4)):

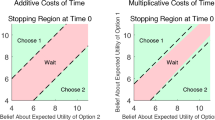

where x represents the decision variable, an abstract quantity representing the state of the decision, dx its infinitesimal variation in time dt, and N(t) is a Gaussian white noise, parameterized by its standard deviation \(\sigma\). Figure 5 gives a representation of this model.

Description of the Drift-Diffusion model (DDM). The decision state is represented through a decision variable that travels from a starting point that can be drawn for example from a uniform distribution, centered around \(x_0\) and of width \(2s_z\). The decision state is represented through a decision variable x traveling from a starting point (for example, drawn from a uniform distribution, centered around \(x_0\) and of width \(2s_z\). It is represented as “SP” on the figure) to a boundary (“Correct boundary” or “Incorrect boundary”) under the influence of a constant drift (dotted line). The trajectory is also impacted by white noise so that real trajectories are similar to the thin blue lines. From the stimulus onset, the decision process is delayed by a certain non-decision time (\(T_{nd}\)). Over an ensemble of decisions, RT distributions of correct and error responses can be estimated, as displayed here.

Evidence is accumulated following Eq. (4) until a decision boundary \(A>0\) or \(-A\) is reached. Typically, the positive boundary corresponds to correct decisions and the negative one to incorrect responses.

Finally, the starting point of accumulation is called the bias and is defined as a single point within the two boundaries. In general forms of this model, it is also possible to consider that the starting point is drawn from a uniform distribution centered around the bias \(x_0\) and of width \(2s_z\), such that \([x_0-s_z,x_0+s_z] \subseteq ]-A,A[\)51, or from other parametric distributions15. We will consider uniformly distributed starting points in our fitting to provide a fair comparison of the two models without loss of generality.

The boundary separation represents the speed-accuracy trade-off. Indeed, if this separation is bigger, decisions are less impacted by noise and hence more accurate, but at the same time, they will take longer to reach from a given starting point. In contrast, the drift mainly impacts the speed of response, as a higher drift will lead to faster correct responses and longer incorrect responses.

Fitting is typically done globally over RTs. In fact, the trajectories defined by the equation cross the decision boundaries, forming a RT distribution usually compared to an exponentially modified Gaussian. In order to obtain a close fit, it is necessary to define a non-decision time (noted \(T_{nd}\)), which corresponds to the time necessary for sensory processing of the stimulus, motor planning and execution, independently of the decision process.

Data collection and processing

In order to test the quality of the fitting of the nl-DDM, we use RTs from a classification task performed by humans described thereafter. The paradigm was initially implemented to assess the relation between RTs and emotion valence of visual stimuli.

Classification task with different sensory modalities

We first tested the quality of the nl-DDM by fitting it to data we collected. 25 (11 female, 14 male) healthy right-handed participants aged \(27.72 \pm 8.96\) (mean ± standard deviation) with normal or corrected-to-normal vision and hearing consented to taking part in a perceptual classification task experiment. EEG brain activity was also recorded (not reported here). The experiment was performed under the local ethics committee approval of the Comité d’Ethique de la Recherche Paris-Saclay (CER-Paris-Saclay, invoice notice nb. 102). All the methods described were performed in accordance with the guidelines and regulations stated by this committee and disclosed in the invoice. An interview preceded the experiment to check with the participants for non-inclusion criteria (existing neurological and psychiatric disorders, uncorrected visual and hearing deficiencies). Informed consent was obtained from all the participants included in this study.

Participants were presented at each trial with images of faces or images of numbers, and had to respond with mouse clicks to report what stimulus they perceived. A sound accompanied images of numbers to suppress any ambiguity. Participants were instructed to respond using their right hand. To control for possible differences in motor response speeds between the two fingers, one group of participants (\(N=15\)) was instructed to report faces with a left click and numbers with a right click (“face-left” stimulus-response mapping), while the other (\(N=10\)) was given the opposite instruction (“face-right” stimulus-response mapping). Responses were constrained to 2 s after stimulus onset. No feedback on the performance was given to participants. At each trial, each stimulus had a \(50\%\) chance of occurring.

Each participant performed 480 classification trials, split into 8 blocks of 60 trials each. Between each block, participants were offered a break of free duration. Each trial followed the sequence described Fig. 6. First, a central red cross appeared on the screen, indicating a pause period. After 1.5 s, the cross became white as a signal for trial start. The white cross stayed for 1.5 s, after which a video clip of visual noise appeared: 9 frames of noise of 100 ms each were displayed. After the noise clip, a last frame of random visual noise was presented, and the stimulus appeared on top of it. The last frame stayed intact until the end of the trial, and the stimulus was displayed over it for 200ms. The trial was terminated upon participant response or timed out after 2 s. A trial lasted for about 5 s, resulting in blocks of about 5 min each.

Timeline of a single trial. Each trial is preceded by a rest period, followed by a baseline period (necessary for EEG processing, not reported here), each lasting 1.5 s. A noise clip consisting of 9 random-dot frames of 100 ms each indicates the arrival of the stimulus in a non-stimulus-specific fashion. The stimulus then appears on a noisy visual background for 100 ms. The same noisy background frame then lasts until the participant’s response and times out after 2 s otherwise.

We used face sketches as used in52, which were generated from the Radboud Face Dataset53. Number stimuli were generated at the beginning of the session for each participant, under the constraint that they were 3-digit integers. In total, 10 different face stimuli and 10 different number stimuli were used for each participant.

Lexical classification dataset from Wagenmakers et al.26

To model the effects of time passing and discard the possibility of better performances emerging from the fitting algorithm or data acquisition, we also lead our analyses on a bigger pre-existing dataset taken from26. 17 human participants performed a classification task, as they were randomly presented with real or invented words. The invented words were generated from real words by changing a vowel, and the real words were labeled in three categories depending on their frequency (frequent, rare, or very rare). In total, stimuli were split into 4 categories of interest. Each participant performed 20 blocks of 96 trials each, with as many invented words as real words in each block. Participants were given the additional instruction to define the speed-accuracy trade-off in each block: they alternated between blocks where speed was emphasized and blocks where accuracy was more important. Responses were limited to 3 s, and trials with RTs below 180 ms were discarded to avoid anticipatory responses. More details can be found in26, and the dataset can be accessed from here.

Behavioral analyses

We are interested in comparing model parameters between the DDM and the nl-DDM. It is important to check whether participants’ performance across stimulus-response mappings and stimuli is coherent in terms of RTs and accuracy. Indeed, the multi-sensory experimental paradigm we defined entails two types of stimuli and two motor commands for the choices. In addition, we have created two experimental groups, which were instructed to respond with opposite motor commands. First, we computed the percentage of stimuli in each class to verify that the stimuli were globally equiprobable for each participant. Since we designed the experiment to display each stimulus with the same probability at each trial, we expect this number to be close to \(50\%\). Otherwise, participants could opt for a strategy that prioritizes one response against the other. Then, we performed two mixed-model ANOVAs, respectively testing RTs and accuracy. The stimulus-response mapping was considered a between-subject factor and the stimulus type a within-subject factor.

In the lexical classification data26, the effect of the time of the experiment is of special importance, as well as its interaction with the other experimental conditions (i.e. the word type and the instruction). We therefore performed two repeated-measures ANOVAs, respectively testing RTs and mean accuracy, assessing the effects of time (first half of the trials or second, resulting in two conditions: early vs. late trials), stimulus frequency, and instruction (accuracy or speed). One of the participants (participant 2) did not perform the \(9^{th}\) block of the experiment, which removed a significant portion of trials in one of the conditions. This participant was removed from the analyses, and the analyses were therefore performed over 16 participants. Post-hoc analyses were performed using the Holm correction.

Data fitting

The classical way of fitting evidence-accumulation models is by fitting one drift for each stimulus category separately. In that case, the positive and negative boundaries still correspond to correct and incorrect responses respectively, and the starting points are taken from the same distribution regardless of the stimulus. Consequently, one pair of boundaries \(\pm B\), the middle of the starting-point distribution \(x_0\) and its half-width \(s_z\), and two drifts \(\nu _0\) and \(\nu _1\) (corresponding respectively to “face” and “number+sound” trials) have to be fitted in the DDM. Similarly, one pair of stable fixed-points \(\pm a\), one time scale k, the middle of the starting-point distribution \(x_0\) and its half-width \(s_z\), and two unstable fixed-points \(z_0\) and \(z_1\) (that will tune the drift in the “face” and “number+sound” stimuli respectively) are needed for the nl-DDM. In both cases we fix the noise parameter to \(\sigma =0.3\). As explained by5, since the speed-accuracy trade-off is determined by the boundary separation, fitting two parameters among drift, boundary, and noise is constraining enough. In addition, each model requires fitting a non-decision time \(T_{nd}\) per stimulus type. Hence, 6 parameters must be fitted per participant for the DDM, against 7 for the nl-DDM.

We used the PyDDM toolbox22 for the fitting, minimizing the negative log-likelihood function and an implicit resolution. The nl-DDM indeed does not have explicit solutions when z is not centered. The log-likelihood is such that the more negative, the closer the modeled distribution of RTs is to the empirical RT histogram.

Fitting the lexical classification dataset26

With this dataset, we were interested in modeling the effects of time passing throughout the experiment. Therefore, we created an artificial condition based on the sequence of blocks, which characterized the trials as happening early (i.e. within the first half of the experiment) or late (i.e. within the second half of the experiment). We discarded participant 2, for whom we did not have data for the \(9^{th}\) block. All the other participants performed 20 blocks alternating between speed and accuracy instructions, therefore each time condition held 5 blocks with each instruction. Within the DDM framework and for each participant, one drift was computed per stimulus type, resulting in 4 drift terms: \(\nu _1 , \nu _2 , \nu _3 ,\nu _{NW}\), corresponding respectively to frequent, rare, very rare, and non-existent word stimuli. 4 boundaries were fitted, corresponding to 2 instructions \(\times 2\) time conditions. The non-decision time, starting point, and starting-point variability were fitted for each participant over all trials. The within-trial noise parameter was fixed to 0.3. Hence, each model consisted of 11 parameters.

Within the nl-DDM framework, we fitted for each participant one z per stimulus type (\(z_1\), \(z_2\), \(z_3\), \(z_{NW}\)). In addition, one parameter a was fitted per instruction condition and one parameter k per time condition, resulting in 4 more parameters. Similar to the DDM, the non-decision time, starting point, and starting-point variability were fitted over all trials from each participant and the noise scale is set to 0.3.

As previously, we used PyDDM22 with negative log-likelihood minimization and implicit resolution.

Performance comparison

Since the fitting on both datasets was performed using a different number of parameters and samples, we computed the Bayesian Information Criterion for each model, defined as:

That way, a penalty for more samples and parameters is considered. The negative log-likelihood is the fitting score.

Hence, we compare each loss pairwise, using a one-sided paired-sample t-test. Indeed, we want to test whether the nl-DDM is better than the DDM with these three metrics, hence testing the hypothesis \(\text {{BIC}}_\text {nl-DDM}<\text {{BIC}}_\text {DDM}\).

Comparison of parameters

For a better understanding of the parameters of the nl-DDM, their interaction and their meaning in the DDM framework, we computed the Pearson’s correlation coefficients of DDM and nl-DDM parameters over simulated experiments. In order to obtain correlation coefficients within nl-DDM parameters as well as across DDM and nl-DDM parameters, we varied one by one the DDM parameters \(B, \nu , x_0\) and \(s_z\), and simulated 500 data points for each parameter combination. The nl-DDM parameters \(k, z, a, x_0\) and \(s_z\) were subsequently fit to the generated datasets. Table 8 summarizes the sampling of DDM parameters as well as the default value for each parameter. We explored 100 variations of each parameter, resulting in 400 generated datasets. Since the noise parameters \(\sigma =0.3\) and \(T_{nd}=0.3s\) were kept constant when simulating the DDM, we also fixed \(\sigma =0.3\) and \(T_{nd}=0.3\) in the nl-DDM.

We computed the correlation matrix between all the parameters of both models. This allows for a first look into first-order interactions between model parameters, within and across model types. Since the correlations within DDM parameters were irrelevant due to their artificial manipulation, these coefficients were not computed. The correlation coefficients were computed using Pearson’s \(\rho\), defined as:

Data availability

The lexical classification dataset is already made available by Wagenmakers et al.26 and can be accessed here. The multi-sensory classification dataset as well as the Python code are available at https://github.com/IsabHox/nl-DDM.git. The corresponding author can be contacted regarding these datasets.

References

Ratcliff, R. & McKoon, G. The diffusion decision model: Theory and data for two-choice decision tasks. Neural Comput. 20, 873–922. https://doi.org/10.1162/neco.2008.12-06-420 (2008).

Ratcliff, R. & Smith, P. L. A comparison of sequential sampling models for two-choice reaction time. Psychol. Rev. 111, 333–367. https://doi.org/10.1037/0033-295X.111.2.333 (2004).

Gold, J. I. & Shadlen, M. N. Neural computations that underlie decisions about sensory stimuli. Trends Cogn. Sci. 5, 10–16. https://doi.org/10.1016/S1364-6613(00)01567-9 (2001).

Gold, J. I. & Shadlen, M. N. The neural basis of decision making. Annu. Rev. Neurosci. 30, 535–574. https://doi.org/10.1146/annurev.neuro.29.051605.113038 (2007).

Ratcliff, R. A theory of memory retrieval. Psychol. Rev. 85, 59–108. https://doi.org/10.1037/0033-295X.85.2.59 (1978).

Bogacz, R., Brown, E., Moehlis, J., Holmes, P. & Cohen, J. D. The physics of optimal decision making: A formal analysis of models of performance in two-alternative forced-choice tasks. Psychol. Rev. 113, 700–765. https://doi.org/10.1037/0033-295X.113.4.700 (2006).

Moehlis, J., Brown, E., Holmes, P. & Cohen, J. D. Optimizing reward rate in two alternative choice tasks: Mathematical formalism. Tech. Rep. 04-01 Princeton University, Center for the Study of Brain, Mind and Behavior, 2004).

Usher, M. & McClelland, J. L. The time course of perceptual choice: The leaky, competing accumulator model. Psychol. Rev. 108, 550–592. https://doi.org/10.1037/0033-295X.108.3.550 (2001).

Wang, X.-J. Probabilistic decision making by slow reverberation in cortical circuits. Neuron 36, 955–968. https://doi.org/10.1016/S0896-6273(02)01092-9 (2002).

Ditterich, J., Mazurek, M. E. & Shadlen, M. N. Microstimulation of visual cortex affects the speed of perceptual decisions. Nat. Neurosci. 6, 891–898. https://doi.org/10.1038/nn1094 (2003).

Prat-Ortega, G., Wimmer, K., Roxin, A. & de la Rocha, J. Flexible categorization in perceptual decision making. Nat. Commun. 12, 1283. https://doi.org/10.1038/s41467-021-21501-z (2021).

Abrahamyan, A., Silva, L. L., Dakin, S. C., Carandini, M. & Gardner, J. L. Adaptable history biases in human perceptual decisions. Proc. Natl. Acad. Sci. USA 113, E3548–E3557. https://doi.org/10.1073/pnas.1518786113 (2016).

Glaze, C. M., Kable, J. W. & Gold, J. I. Normative evidence accumulation in unpredictable environments. eLife 4, e08825. https://doi.org/10.7554/eLife.08825 (2015).

Urai, A. E., de Gee, J. W., Tsetsos, K. & Donner, T. H. Choice history biases subsequent evidence accumulation. eLife 8, e46331. https://doi.org/10.7554/eLife.46331 (2019).

Ratcliff, R. & Rouder, J. N. Modeling response times for two-choice decisions. Psychol. Sci. 9, 347–356. https://doi.org/10.1111/1467-9280.00067 (1998).

Ratcliff, R. & Rouder, J. N. A diffusion model account of masking in two-choice letter identification. J. Exp. Psychol. 26, 127–140. https://doi.org/10.1037/0096-1523.26.1.127 (2000).

Cisek, P., Puskas, G. A. & El-Murr, S. Decisions in changing conditions: The urgency-gating model. J. Neurosci. 29, 11560–11571. https://doi.org/10.1523/JNEUROSCI.1844-09.2009 (2009).

Busemeyer, J. R. & Townsend, J. T. Decision field theory: A dynamic-cognitive approach to decision making in an uncertain environment. Psychol. Rev. 100, 432–459. https://doi.org/10.1037/0033-295X.100.3.432 (1993).

Schurger, A. Specific relationship between the shape of the readiness potential, subjective decision time, and waiting time predicted by an accumulator model with temporally autocorrelated input noise. eNeurohttps://doi.org/10.1523/ENEURO.0302-17.2018 (2018).

Wong, K.-F. & Wang, X.-J. A recurrent network mechanism of time integration in perceptual decisions. J. Neurosci. 26, 1314–1328. https://doi.org/10.1523/JNEUROSCI.3733-05.2006 (2006).

Wong, K.-F., Huk, A. C., Shadlen, M. N. & Wang, X.-J. Neural circuit dynamics underlying accumulation of time-varying evidence during perceptual decision making. Front. Comput. Neurosci.https://doi.org/10.3389/neuro.10.006.2007 (2007).

Shinn, M., Lam, N. H. & Murray, J. D. A flexible framework for simulating and fitting generalized drift-diffusion models. eLife 9, e56938. https://doi.org/10.7554/eLife.56938 (2020).

Roxin, A. & Ledberg, A. Neurobiological models of two-choice decision making can be reduced to a one-dimensional nonlinear diffusion equation. PLoS Comput. Biol. 4, e1000046. https://doi.org/10.1371/journal.pcbi.1000046 (2008).

Ulrichsen, K. M. et al. Dissecting the cognitive phenotype of post-stroke fatigue using computerized assessment and computational modeling of sustained attention. Eur. J. Neurosci. 52, 3828–3845. https://doi.org/10.1111/ejn.14861 (2020).

Lefferts, W. K. et al. Changes in cognitive function and latent processes of decision-making during incremental ascent to high altitude. Physiol. Behav. 201, 139–145. https://doi.org/10.1016/j.physbeh.2019.01.002 (2019).

Wagenmakers, E.-J., Ratcliff, R., Gomez, P. & McKoon, G. A diffusion model account of criterion shifts in the lexical decision task. J. Mem. Lang. 58, 140–159. https://doi.org/10.1016/j.jml.2007.04.006 (2008).

Strogatz, S. H. Nonlinear Dynamics and Chaos: With Applications to Physics, Biology, Chemistry, and Engineering (Westview Press, a member of the Perseus Books Group, 2015), second edition edn. OCLC: ocn842877119.

JASP Team. JASP (Version 0.16.4)[Computer software] (2022).

Ratcliff, R. & Tuerlinckx, F. Estimating parameters of the diffusion model: Approaches to dealing with contaminant reaction times and parameter variability. Psychon. Bull. Rev. 9, 438–481. https://doi.org/10.3758/BF03196302 (2002).

Hunter, J. D. Matplotlib: A 2d graphics environment. Comput. Sci. Eng. 9, 90–95. https://doi.org/10.1109/MCSE.2007.55 (2007).

Waskom, M. L. seaborn: statistical data visualization. J. Open Source Softw. 6, 3021. https://doi.org/10.21105/joss.03021 (2021).

Grosjean, M., Rosenbaum, D. A. & Elsinger, C. Timing and reaction time. J. Exp. Psychol. 130, 256–272. https://doi.org/10.1037/0096-3445.130.2.256 (2001).

Wöstmann, M., Waschke, L. & Obleser, J. Prestimulus neural alpha power predicts confidence in discriminating identical auditory stimuli. Eur. J. Neurosci. 49, 94–105. https://doi.org/10.1111/ejn.14226 (2019).

Samaha, J., Iemi, L. & Postle, B. R. Prestimulus alpha-band power biases visual discrimination confidence, but not accuracy. Conscious. Cogn. 54, 47–55. https://doi.org/10.1016/j.concog.2017.02.005 (2017).

Petro, N. M., Thigpen, N. N., Garcia, S., Boylan, M. R. & Keil, A. Pre-target alpha power predicts the speed of cued target discrimination. NeuroImage 189, 878–885. https://doi.org/10.1016/j.neuroimage.2019.01.066 (2019).

Chen, Y. et al. The weakened relationship between prestimulus alpha oscillations and response time in older adults with mild cognitive impairment. Front. Hum. Neurosci. 14, 48. https://doi.org/10.3389/fnhum.2020.00048 (2020).

van Dijk, H., Schoffelen, J.-M., Oostenveld, R. & Jensen, O. prestimulus oscillatory activity in the alpha band predicts visual discrimination ability. J. Neurosci. 28, 1816–1823. https://doi.org/10.1523/JNEUROSCI.1853-07.2008 (2008).

Taesler, P. & Rose, M. Prestimulus theta oscillations and connectivity modulate pain perception. J. Neurosci. 36, 5026–5033. https://doi.org/10.1523/JNEUROSCI.3325-15.2016 (2016).

Barik, K., Daimi, S. N., Jones, R., Bhattacharya, J. & Saha, G. A machine learning approach to predict perceptual decisions: An insight into face pareidolia. Brain Inf. 6, 2. https://doi.org/10.1186/s40708-019-0094-5 (2019).

Rassi, E., Wutz, A., Müller-Voggel, N. & Weisz, N. Prestimulus feedback connectivity biases the content of visual experiences. Proc. Natl. Acad. Sci. USA 116, 16056–16061. https://doi.org/10.1073/pnas.1817317116 (2019).

Railo, H., Piccin, R. & Lukasik, K. M. Subliminal perception is continuous with conscious vision and can be predicted from prestimulus electroencephalographic activity. Eur. J. Neurosci. 54, 4985–4999. https://doi.org/10.1111/ejn.15354 (2021).

Kloosterman, N. A. et al. Humans strategically shift decision bias by flexibly adjusting sensory evidence accumulation. eLife 8, e37321. https://doi.org/10.7554/eLife.37321 (2019).

Benwell, C. S. Y., Coldea, A., Harvey, M. & Thut, G. Low pre-stimulus EEG alpha power amplifies visual awareness but not visual sensitivity. Eur. J. Neurosci. 55, 3125–3140. https://doi.org/10.1111/ejn.15166 (2021).

Iemi, L., Chaumon, M., Crouzet, S. M. & Busch, N. A. Spontaneous neural oscillations bias perception by modulating baseline excitability. J. Neurosci. 37, 807–819. https://doi.org/10.1523/JNEUROSCI.1432-16.2016 (2017).

Lange, J., Oostenveld, R. & Fries, P. Reduced occipital alpha power indexes enhanced excitability rather than improved visual perception. J. Neurosci. 33, 3212–3220. https://doi.org/10.1523/JNEUROSCI.3755-12.2013 (2013).

Evans, N. J. & Wagenmakers, E.-J. Evidence accumulation models: Current limitations and future directions. TQMP 16, 73–90. https://doi.org/10.20982/tqmp.16.2.p073 (2020).

Huk, A. C. & Shadlen, M. N. Neural activity in macaque parietal cortex reflects temporal integration of visual motion signals during perceptual decision making. J. Neurosci. 25, 10420–10436. https://doi.org/10.1523/JNEUROSCI.4684-04.2005 (2005).

Shinn, M., Ehrlich, D. B., Lee, D., Murray, J. D. & Seo, H. Confluence of timing and reward biases in perceptual decision-making dynamics. J. Neurosci. 40, 7326–7342. https://doi.org/10.1523/JNEUROSCI.0544-20.2020 (2020).

Rabbitt, P. M. Errors and error correction in choice-response tasks. J. Exp. Psychol. 71, 264–272. https://doi.org/10.1037/h0022853 (1966).

Pleskac, T. J. & Busemeyer, J. R. Two-stage dynamic signal detection: A theory of choice, decision time, and confidence. Psychol. Rev. 117, 864–901. https://doi.org/10.1037/a0019737 (2010).

Laming, D. R. Information Theory of Choice-Reaction Times (Academic Press, 1968).

Yang, Y.-F., Brunet-Gouet, E., Burca, M., Kalunga, E. K. & Amorim, M.-A. Brain processes while struggling with evidence accumulation during facial emotion recognition: An ERP study. Front. Hum. Neurosci. 14, 340. https://doi.org/10.3389/fnhum.2020.00340 (2020).

Langner, O. et al. Presentation and validation of the Radboud faces database. Cogn. Emotion 24, 1377–1388. https://doi.org/10.1080/02699930903485076 (2010).

Acknowledgements

The authors thank Nicolas Nieto for his discussions on the fitting method.

Author information

Authors and Affiliations

Contributions

I.H.: conception of the model; data analysis; interpretation; drafting; revisions. S.C.: conception of the model; data analysis; revisions. M.C.: conception of the model; revisions. S.G.: conception of the model; revisions. A.D.: revisions. M.A.A.:data acquisition; revisions.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hoxha, I., Chevallier, S., Ciarchi, M. et al. Accounting for endogenous effects in decision-making with a non-linear diffusion decision model. Sci Rep 13, 6323 (2023). https://doi.org/10.1038/s41598-023-32841-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-32841-9

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.