Abstract

Object detection is one of the critical technologies in autonomous driving. To improve detection precision, a novel optimization algorithm is presented to enhance the performance of the YOLOv5 model. First, by improving the hunting behavior of the grey wolf optimizer (GWO) and incorporating it into the whale optimization algorithm (WOA), a modified whale optimization algorithm (MWOA) is proposed. MWOA uses the population’s concentration ratio to calculate \(p_h\), which selects between the hunting branches of GWO and WOA. Tested on six benchmark functions, MWOA shows better global search ability and stability. Second, the C3 module in YOLOv5 is replaced by G-C3 and an extra detection head is added, yielding a highly optimizable detection network, G-YOLO. On a self-built dataset, 12 initial hyperparameters of the G-YOLO model are optimized by MWOA using a score fitness function of compound indicators, which produces the final hyperparameters and the whale optimization G-YOLO (WOG-YOLO) model. Compared with the YOLOv5s model, the overall mAP increases by 1.7\(\%\), the mAP of pedestrians increases by 2.6\(\%\), and the mAP of cyclists increases by 2.3\(\%\).

Introduction

Autonomous driving integrates environmental perception, dynamic planning, and control execution in automobiles, and it has received considerable scholarly attention in recent years [1]. Object detection serves as the principal perception method for autonomous vehicles, and the crux of this task is to enhance detection accuracy.

Object detection algorithms can be divided into two major classes: two-stage detectors, e.g. Faster R-CNN [2], TS4Net [3], AccLoc [4], and Part-\(A^2\) net [5], and one-stage detectors, e.g. YOLO [6], CG-SSD [7], and PAOD [8]. Two-stage algorithms generate region proposals, then classify and localize objects according to them. In contrast, one-stage algorithms perform classification and localization directly on pre-defined candidate proposals. In general, two-stage detectors achieve greater accuracy but are more time-consuming than one-stage detectors.

Several improvements have been proposed to obtain more robust and accurate detection models. Shi et al. [9] introduced GIoU into K-means++ to obtain better anchors. Carranza-García et al. [10] used an evolutionary algorithm to search for optimal region-based anchors. Wang et al. [11] proposed a feature extraction network to ensure that small objects are correctly detected. Wang et al. [12] adopted a dynamic attention module to improve detection performance. As a model with arbitrary hyperparameters leads to unsatisfactory performance, optimization algorithms, e.g. Bayesian optimization [13] and the fitness sorted rider optimization algorithm [14], have been used to find the optimal hyperparameter group.

Quickly and accurately finding the optimal combination of high-dimensional parameters is a major problem, which metaheuristic optimization algorithms such as ant colony optimization (ACO) [15], particle swarm optimization (PSO) [16], the whale optimization algorithm (WOA) [17], grey wolf optimization (GWO) [18], and the firefly algorithm (FA) [19] aim to solve. Among these algorithms, WOA is known for its simplicity and outstanding global solving ability. It has been applied to finding optimal hyperparameter groups [20,21,22], data clustering [23,24,25,26], multi-objective problems [27,28], etc. However, the performance of canonical WOA is limited by slow convergence and unsatisfactory accuracy. Therefore, WOA has been improved by balancing exploration and exploitation [29,30], integrating other algorithms [31,32,33], and using better update strategies [34,35]. For example, in SHADE-WOA, Chakraborty et al. [36] added an extra parameter \(\alpha\) to control the exploration and exploitation phases. In WhaleFOA [37], the original FOA’s random search strategy is replaced by WOA’s hunting strategy to enrich FOA’s global exploration capability. Chen et al. [38] developed a double adaptive weight strategy; their results show that WOA with this strategy has better global optimization capability.

Building on the above literature, a novel modified whale optimization algorithm (MWOA) is proposed by fusing the structure of WOA and the hunting strategy of GWO with multi-faceted improvements. The core procedures of MWOA are as follows. The scaling factor is calculated using an adaptive update formula based on the population’s fitness. To improve GWO’s optimization performance, the position of the local optimum is added as one instructor along with the \(\alpha\) wolf, \(\beta\) wolf, and \(\delta\) wolf in GWO’s hunting strategy. The instruction vectors \(V^t_{k,i}\) are improved using a new formula and weighted by fitness. Then the population’s concentration ratio is used as the controller of MWOA’s hunting branches. To verify the performance of MWOA, six multi-dimensional benchmark functions are used as fitness functions. The test results show that MWOA performs better than the compared algorithms. A novel G-YOLO network is proposed, and MWOA is used to optimize its hyperparameters. A self-built dataset including pedestrians, cyclists, and cars serves as the training set and test set of G-YOLO, and the final whale optimization G-YOLO (WOG-YOLO) model shows stronger detection ability and stability.

Proposed MWOA algorithm

Description of WOA and GWO

The canonical WOA is inspired by the foraging mechanism of humpback whales and defines three behaviors to search for the global best solution. Its optimization stages can be summarized as follows: initialize the population and related parameters, calculate each individual’s fitness and identify the global best solution, then update the individuals’ positions through the following formulas:

where \(\vec {X_i^t}\) and \(\vec {X}_i^{t+1}\) are the positions of the i-th individual in the t-th and \((t+1)\)-th iterations respectively, \(\vec {X^t_*}\) is the optimal position in the t-th iteration, \(p_h\), \(r_1\), and \(r_2\) are random numbers in the range [0, 1], l is a random number in the range \([-1,1]\), b is the spiral constant (in this paper, b equals 1), and a is the scaling factor, which depends on the current iteration step t and the maximum number of iteration steps T.

Repeat the above steps until the end requirements are satisfied.
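The canonical update described above can be sketched in NumPy as follows. This is a minimal illustration of the standard WOA rules from the original WOA paper [17] (encircling, random search, and spiral hunting with a linearly decreasing scaling factor), not the implementation used in this study. The function name `woa_step`, the per-individual scalar draws of \(r_1\) and \(r_2\), and the minimization convention are assumptions made for the example.

```python
import numpy as np


def woa_step(X, fitness, t, T, b=1.0, rng=None):
    """One canonical WOA iteration for a minimization problem (illustrative).

    X is an (N, d) array of positions; fitness maps a (d,) vector to a float.
    Returns the updated positions and the best position of iteration t.
    """
    rng = np.random.default_rng() if rng is None else rng
    N, _ = X.shape
    f = np.array([fitness(x) for x in X])
    X_best = X[np.argmin(f)].copy()        # optimal position X_* in iteration t
    a = 2.0 - 2.0 * t / T                  # scaling factor, linear from 2 to 0
    X_new = np.empty_like(X)
    for i in range(N):
        r1, r2 = rng.random(), rng.random()
        A = 2.0 * a * r1 - a
        C = 2.0 * r2
        p_h = rng.random()                 # branch selector in [0, 1]
        l = rng.uniform(-1.0, 1.0)         # spiral parameter in [-1, 1]
        if p_h < 0.5:
            if abs(A) < 1.0:
                # Encircle the current best individual.
                D = np.abs(C * X_best - X[i])
                X_new[i] = X_best - A * D
            else:
                # Explore around a randomly chosen individual.
                X_rand = X[rng.integers(N)]
                D = np.abs(C * X_rand - X[i])
                X_new[i] = X_rand - A * D
        else:
            # Spiral hunting toward the best individual.
            D = np.abs(X_best - X[i])
            X_new[i] = D * np.exp(b * l) * np.cos(2.0 * np.pi * l) + X_best
    return X_new, X_best
```

Calling `woa_step` repeatedly for t = 0, ..., T-1 and keeping the best position seen so far reproduces the loop outlined above.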

The standard GWO selects the \(\alpha\) wolf, \(\beta\) wolf, and \(\delta\) wolf from the wolf pack according to each individual’s fitness, then updates each individual’s position by the following formulas:

where \(\vec {X_i^t}\) and \(\vec {X}_i^{t+1}\) are the positions of the i-th individual in the t-th and \((t+1)\)-th iterations respectively, \(\vec {X_k^t}\) is the position of leader k, where k denotes the \(\alpha\) wolf, \(\beta\) wolf, or \(\delta\) wolf, and A and C are the same as in the WOA formulas.
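For comparison with the WOA update, the standard GWO rule from the original GWO paper [18] can be sketched as below: each individual is pulled toward the three best wolves and the three guided positions are averaged. The function name `gwo_step` and the per-dimension draws of the random vectors are assumptions of this sketch, and it is not the code used in the experiments.

```python
import numpy as np


def gwo_step(X, fitness, a, rng=None):
    """One standard GWO iteration for a minimization problem (illustrative).

    X is an (N, d) array with N >= 3; a is the current scaling factor.
    """
    rng = np.random.default_rng() if rng is None else rng
    N, d = X.shape
    f = np.array([fitness(x) for x in X])
    leaders = X[np.argsort(f)[:3]]          # alpha, beta, delta wolves
    X_new = np.empty_like(X)
    for i in range(N):
        guided = []
        for X_k in leaders:
            A = 2.0 * a * rng.random(d) - a
            C = 2.0 * rng.random(d)
            D = np.abs(C * X_k - X[i])      # distance to leader k
            guided.append(X_k - A * D)      # position suggested by leader k
        X_new[i] = np.mean(guided, axis=0)  # equal-weight average of the three
    return X_new
```

Note that the three leaders contribute with equal weight here; the modification described in the next section replaces this plain average with fitness-based weights and adds a fourth instructor.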

Modified WOA

Adaptive scaling factor

In WOA and GWO, the scaling factor decreases linearly to control the transition from global optimization to local optimization. However, this approach does not match practice, as most optimization problems are complicated non-linear processes. Hence an adaptive scaling factor formula is proposed as follows:

where \(f^t_*\) and \(f^{t-1}_*\) are the optimal fitness in the current iteration and last iteration respectively.

In the above formula, the scaling factor is modulated by \(\frac{f^{t-1}_*}{f^t_*}\), which expands the search scope if \(\frac{f^{t-1}_*}{f^t_*}<1\) and shrinks it if \(\frac{f^{t-1}_*}{f^t_*}\ge 1\). The cosine function introduces non-linearity into the scaling factor. Furthermore, the minimum value function is used to ensure the scaling factor is greater than or equal to zero.

Improved GWO’s hunting strategy

In WOA, the optimal individual’s position instructs the update of the other individuals’ positions. This method can facilitate convergence but has poor robustness: it may stagnate around a local optimum. To accelerate the convergence of local optimization and strengthen the ability to search for global solutions, the position update formula of GWO is introduced to replace the original optimal position update method. Furthermore, each individual’s historical optimal position \(\vec {X^t_l}\) is introduced to calculate \(\vec {V^t_l}\). The new position update formula is as follows:

where \(w_\alpha\), \(w_\beta\), \(w_\delta\), and \(w_l\) denote the weights of \(\alpha\), \(\beta\), \(\delta\), and the local optimal position, and \(\vec {V_\alpha ^t}\), \(\vec {V_\beta ^t}\), \(\vec {V_\delta ^t}\), and \(\vec {V_l^t}\) denote the instruction vectors of \(\alpha\), \(\beta\), \(\delta\), and each individual’s historical optimal position.

The weights \(w_\alpha ^t\), \(w_\beta ^t\), \(w_\delta ^t\), and \(w_l^t\) depend on the fitness of their respective positions. Taking minimization as an example, a position with smaller fitness receives a greater weight, and the weights are normalized into the range [0, 1]. The weights can be calculated by:

where \(f_k^t\) denotes the fitness at position k, and the index j in \(\sum {\frac{1}{f_j}}\) ranges over \(\alpha\), \(\beta\), \(\delta\), and each individual’s historical optimal position l.
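The description above (inverse fitness, normalized by \(\sum {\frac{1}{f_j}}\)) suggests weights of the form \(w_k = \frac{1/f_k}{\sum_j 1/f_j}\). The following sketch computes them under that reading; it assumes strictly positive fitness values, and the small `eps` guard against division by zero is an addition of this example rather than part of the described method.

```python
import numpy as np


def fitness_weights(f, eps=1e-12):
    """Normalized inverse-fitness weights for minimization.

    f holds the fitness of alpha, beta, delta, and the historical optimum l.
    Assumes positive fitness; eps is a hypothetical guard against f == 0.
    """
    f = np.asarray(f, dtype=float)
    inv = 1.0 / (f + eps)
    return inv / inv.sum()     # weights lie in [0, 1] and sum to 1


weights = fitness_weights([1.0, 2.0, 4.0, 4.0])
```

With the fitness values 1, 2, 4, and 4, the weights come out as approximately 0.5, 0.25, 0.125, and 0.125, so the fittest position dominates the update without silencing the others.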

In GWO, a random number \(C_i\) in the range [0, 2] is used to control the influence of the optimal position. However, this method is uncontrollable: it lacks a precise trade-off between global optimization and local optimization.

To solve this issue, \(|1-a|\) and \(1-|1-a|\) are introduced to improve the original \(\vec {V_{k,i}^t}\). \(|1-a|\) controls the influence of \(\alpha\), \(\beta\), and \(\delta\), while \(1-|1-a|\) controls the influence of the historical optimal position l. As a decreases from 2 to 0, \(|1-a|\) first decreases from 1 to 0 and then increases from 0 to 1. This method fully utilizes the positions of \(\alpha\), \(\beta\), and \(\delta\) and can facilitate convergence in both the early and final stages. In the middle stage, \(|1-a|\) is close to 0 and \(1-|1-a|\) is close to 1, so the position update is mainly instructed by l and random variations, which gives the population better global optimization ability. \(\vec {V_t}\) can be expressed as follows:
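The behavior of the two gains over the course of a run can be checked numerically. The sketch below evaluates \(|1-a|\) and \(1-|1-a|\) on a few values of a between 2 and 0; the evenly spaced values of a are only a stand-in for the adaptive scaling factor, and the variable names are chosen for this example.

```python
import numpy as np

# Stand-in values for the scaling factor as it falls from 2 to 0.
a = np.linspace(2.0, 0.0, 5)

leader_gain = np.abs(1.0 - a)        # |1 - a|: influence of alpha, beta, delta
history_gain = 1.0 - leader_gain     # 1 - |1 - a|: influence of historical optimum l

for a_t, g_lead, g_hist in zip(a, leader_gain, history_gain):
    print(f"a={a_t:.1f}  |1-a|={g_lead:.1f}  1-|1-a|={g_hist:.1f}")
```

For a = 2, 1.5, 1, 0.5, 0 the leader gain runs 1, 0.5, 0, 0.5, 1 while the history gain runs 0, 0.5, 1, 0.5, 0, which matches the early, middle, and final stages described above.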

where k in \(X_{k}^t\) stands for \(\alpha\), \(\beta\), or \(\delta\).

During the optimization process, a warmup technique is used: in the first \(N_{warmup}\) (e.g. 2) iterations, the scaling factor is set to a very small value (e.g. 0.1), and after the warmup iterations the scaling factor reverts to its normal behavior. This method helps the entire population find a better optimization direction and recognize the most efficient way to improve its fitness.
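A minimal sketch of the warmup logic is given below. It uses the example values from the text (2 warmup iterations, a warmup value of 0.1); counting iterations from zero and the linear decay used after warmup are assumptions of this example, and the linear decay is only a placeholder for the adaptive scaling factor.

```python
def scaling_factor_with_warmup(t, T, n_warmup=2, a_warmup=0.1):
    """Scaling factor for iteration t (0-based) out of T iterations.

    During the first n_warmup iterations a small constant is returned.
    Afterwards a linear decay from 2 to 0 is used as a placeholder; it is
    NOT the adaptive formula of the method, only a stand-in for illustration.
    """
    if t < n_warmup:
        return a_warmup
    return 2.0 * (1.0 - t / T)


schedule = [scaling_factor_with_warmup(t, T=10) for t in range(10)]
```

A small scaling factor keeps the first steps close to the current leaders, so the population settles on a direction before the wider search begins.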

Incorporation of improved GWO’s hunting strategy into WOA

In WOA, spiral hunting and the normal optimal position update method are used, and each has a 50\(\%\) probability of being executed. As the spiral hunting method has a larger search scope and the optimal position update method searches in a comparatively local scope, a new probability \(p_{h}\) is proposed. \(p_{h}\) serves as the probability of the spiral hunting method, and it decreases gradually to 0 as a decreases. This branch control method keeps the population neither too concentrated nor too sparse.

where a is given above in Eq. (8), \(\theta\) is the population’s concentration ratio, \(\sum _{i=1}^N f_i^{t-1}\) is the sum of all individuals’ fitness, N denotes the number of individuals in the population, and \(f_*^{t-1}\) is the best fitness of the current population.

A crucial problem of swarm intelligence is that the population’s concentration ratio \(\theta\) gradually becomes very large. A larger \(\theta\) means narrower population diversity, which makes it harder to continue global optimization. Therefore, the population’s concentration ratio is calculated and used, through \(p_h\), to keep \(\theta\) in check.

The flowchart of MWOA is shown in Fig. 1.

Benchmark function test

Six multi-dimensional benchmark functions [36] are used to verify the effectiveness and precision of MWOA. F1, F2, F5, and F6 have many local minima, so an optimization algorithm is prone to stagnate around them. F6 possesses many global minimum positions with the same value, and its minimum value is determined by the dimension. F3 and F4 are bowl-shaped and do not have local minima. The number of hyperparameters of an object detection model is generally fewer than 20, so the dimension d of the test functions is set to 20. They are listed as follows:

PSO [16], WOA [17], GWO [18], WhaleFOA [37], and MWOA are employed to solve the above functions. To obtain objective results, the common parameters are set consistently: the maximum number of iterations is 100, and the number of individuals in the population is 50. In PSO, the local coefficient and global coefficient are both set to 2.05, and the minimum and maximum weights of a particle are 0.4 and 0.8. In WhaleFOA, the safety threshold value is 0.8, and the percentage of producers is 0.2. The test functions and MWOA are implemented using NumPy [39]. The core algorithms of PSO, GWO, WOA, and WhaleFOA are implemented by mealpy [40].

All algorithms are tested on a device with an i5-10600KF processor and 32.0 GB RAM. Each benchmark function is run independently thirty times, and Table 1 shows the best and worst results among the thirty solutions. On F1, F2, F5, and F6, MWOA shows a more robust global search ability and rarely falls into stagnation. On bowl-shaped problems like F3 and F4, MWOA achieves better accuracy and stability. To examine the iteration process, Fig. 2 provides the average convergence curves of PSO, GWO, WOA, WhaleFOA, and MWOA. Compared with the other algorithms, MWOA converges faster during both the early and final stages.
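The evaluation protocol (independent runs, best and worst result per function) can be sketched as follows. The two test functions are common textbook examples of a bowl-shaped function (sphere) and a function with many local minima (Rastrigin); they are chosen for illustration and are not claimed to be among F1 to F6. The random-search optimizer is likewise a hypothetical stand-in for PSO, GWO, WOA, WhaleFOA, or MWOA, and the budget of 5000 evaluations mirrors 50 individuals over 100 iterations.

```python
import numpy as np


def sphere(x):
    """Bowl-shaped function with a single minimum of 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2))


def rastrigin(x):
    """Function with many local minima and a global minimum of 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(10.0 * x.size + np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x)))


def random_search(fitness, d, bounds, n_evals, rng):
    """Stand-in optimizer: best value among uniformly sampled points."""
    lo, hi = bounds
    X = rng.uniform(lo, hi, size=(n_evals, d))
    return min(fitness(x) for x in X)


def best_and_worst(fitness, d=20, bounds=(-5.12, 5.12), runs=30, n_evals=5000):
    """Run the optimizer independently `runs` times with different seeds."""
    results = [
        random_search(fitness, d, bounds, n_evals, np.random.default_rng(seed))
        for seed in range(runs)
    ]
    return min(results), max(results)
```

Swapping `random_search` for a real optimizer with the same signature keeps the protocol unchanged, and the gap between the best and worst result gives a simple picture of stability across runs.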

WOG-YOLO

Network structure

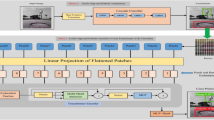

YOLOv5 [41] is one of the most widely used and practical object detectors, known for its high detection speed and elegant structure. Nevertheless, limited by its grid-based mechanism, YOLOv5 is weak at detecting small objects. A new YOLOv5-based structure, named G-YOLO, is therefore proposed. The attention mechanism in SKNet [42] is introduced into G-YOLO’s backbone network, and the original C3 block is replaced by the G-C3 block. As convolution with a \(3\times 3\) kernel is sensitive to small features and convolution with a \(5\times 5\) kernel is sensitive to larger features, SKConv can switch between the \(3\times 3\) and \(5\times 5\) receptive fields to capture smaller-scale or larger-scale features. However, using both convolutions is not cost-effective compared with a single \(3\times 3\) convolution. Hence depth-wise convolution [43] replaces the vanilla convolution, and the \(5\times 5\) convolution is replaced by a \(3\times 3\) convolution with dilation 2. The improved SKConv has the same receptive field with fewer parameters. The structures of G-C3, the improved SKConv, and GhostConv are shown in Fig. 3a–c.
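The claim that a dilated \(3\times 3\) convolution covers the same field as a \(5\times 5\) convolution, with far fewer weights when applied depth-wise, can be checked with simple arithmetic. The helper names and the channel count of 128 below are assumptions chosen for illustration; bias terms are ignored.

```python
def effective_kernel(k, dilation):
    """Side length of the area covered by a k x k kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)


def conv_params(c_in, c_out, k, groups=1):
    """Number of weights in a 2-D convolution (no bias)."""
    return (c_in // groups) * c_out * k * k


c = 128  # hypothetical channel count, chosen only for the comparison

standard_5x5 = conv_params(c, c, 5)               # vanilla 5x5 convolution
depthwise_3x3_d2 = conv_params(c, c, 3, groups=c)  # depth-wise 3x3, dilation 2
```

Here `effective_kernel(3, 2)` evaluates to 5, and for 128 channels the vanilla \(5\times 5\) convolution needs 409600 weights against 1152 for the depth-wise dilated \(3\times 3\) convolution. A depth-wise convolution does not mix channels, which is one reason such layers are usually paired with point-wise or ordinary convolutions elsewhere in the block.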

An extra detection head is added to G-YOLO to detect small objects more effectively and precisely. The number of branches in the PAN structure changes from 3 to 4: a new branch using the \(160\times 160\) feature map is added for small objects. Because the new network requires more parameters, GhostConv [43] is introduced into the G-C3 block to keep the network lightweight. GhostConv takes advantage of both vanilla convolution and depth-wise convolution, so the number of trainable parameters drops sharply without losing much detection precision. As a result, the parameter size of G-YOLO is close to that of the widely used lightweight model YOLOv5s. The new structure is shown in Fig. 4.
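The effect of the fourth head on the prediction grid can be illustrated with the feature-map sizes for a \(640\times 640\) input. YOLOv5 predicts at strides 8, 16, and 32; a \(160\times 160\) map corresponds to stride 4, which is inferred here from the stated map size rather than taken from the network definition. The function name is hypothetical.

```python
def head_grid_sizes(image_size=640, strides=(4, 8, 16, 32)):
    """Side length of the feature map seen by each detection head."""
    return [image_size // s for s in strides]


three_heads = head_grid_sizes(strides=(8, 16, 32))   # original YOLOv5 heads
four_heads = head_grid_sizes()                       # with the extra stride-4 head

cells_three = sum(g * g for g in three_heads)
cells_four = sum(g * g for g in four_heads)
```

The three original heads cover 8400 grid cells in total, while the four-head layout covers 34000, with the new \(160\times 160\) map alone contributing 25600. Each cell of that map corresponds to a \(4\times 4\) pixel patch, which is why it suits small objects, and the added cost is what motivates the lightweight GhostConv blocks.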

Data preparation and processing

Dataset

A great deal of previous research into autonomous driving has focused on the detection of cars, cyclists, and pedestrians using the KITTI dataset [44]. Nevertheless, the precision for pedestrians and cyclists is unsatisfactory in comparison with that for cars, and such low accuracy falls short of what practical autonomous driving requires. The images containing vehicles, cyclists, and pedestrians are extracted from the KITTI dataset, and vans and trucks are relabelled as cars. The final dataset contains 5325 images.

Data augmentation

In YOLOv5, mosaic is used as an image augmentation method, which gives the YOLOv5 network a considerable improvement in both precision and recall. The input image should be square in YOLOv5, but the width and height of images in the KITTI dataset are 1240 and 370 pixels respectively. Thus a large part of the input image is padded with blank pixels. To reduce the padded area, three images are concatenated vertically into one image before the mosaic method is applied.
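The benefit of stacking three frames can be estimated with a short calculation. The sketch below concatenates three blank placeholder frames of the stated size and computes how much of a \(640\times 640\) square would be padding when the longer side is scaled to 640 with the aspect ratio preserved. The helper `padded_fraction` is written for this example and simplifies the actual YOLOv5 preprocessing.

```python
import numpy as np


def padded_fraction(width, height, target=640):
    """Fraction of a target x target square left as padding after resizing
    the image so that its longer side equals `target` (aspect ratio kept)."""
    scale = target / max(width, height)
    new_w, new_h = round(width * scale), round(height * scale)
    return 1.0 - (new_w * new_h) / (target * target)


# Placeholder frames with the stated size (height 370, width 1240, 3 channels).
frames = [np.zeros((370, 1240, 3), dtype=np.uint8) for _ in range(3)]
stacked = np.concatenate(frames, axis=0)   # vertical concatenation

single = padded_fraction(1240, 370)
triple = padded_fraction(stacked.shape[1], stacked.shape[0])
```

Under these assumptions a single frame leaves roughly 70\(\%\) of the square as padding, whereas the stacked \(1240\times 1110\) image leaves roughly 10\(\%\).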

Optimization of YOLOv5’s hyperparameters

Hyperparameters are a critical part of a convolutional network: they control the entire training process and have a great impact on the performance of the final detection model. For models with a brand-new framework, tuning them one by one can be time-consuming and inefficient. Furthermore, inadequately tuned hyperparameters cannot fully reflect the performance of the model. Well-tuned hyperparameters can boost recall and precision by setting suitable thresholds, which is instrumental in obtaining a performant model.

In this paper, 12 hyperparameters of G-YOLO are chosen to evolve using MWOA; their names and ranges are shown in Table 2. To keep the cost manageable, the number of individuals is set to 5 and the number of iterations is set to 10.

The fitness function receives the new hyperparameters, dispatches them to G-YOLO, and then starts the training of the detection model. After the G-YOLO training process, the evaluation score of the model is passed back to MWOA as the fitness. Trained with the optimal hyperparameters, the final whale optimization G-YOLO (WOG-YOLO) model is obtained.
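The loop between the optimizer and the detector can be sketched as a fitness wrapper. Everything named below is hypothetical: the three entries of `SEARCH_SPACE` and their ranges are placeholders rather than the 12 hyperparameters of Table 2, `train_and_evaluate` stands for whatever routine trains G-YOLO and returns its evaluation score, and negating the score assumes the optimizer minimizes.

```python
import numpy as np

# Hypothetical search space: names and ranges are placeholders, not Table 2.
SEARCH_SPACE = {
    "lr0": (1e-4, 1e-1),
    "momentum": (0.6, 0.98),
    "weight_decay": (0.0, 1e-3),
}


def decode(position):
    """Map a position in [0, 1]^d onto the hyperparameter ranges."""
    position = np.clip(np.asarray(position, dtype=float), 0.0, 1.0)
    return {
        name: lo + float(p) * (hi - lo)
        for p, (name, (lo, hi)) in zip(position, SEARCH_SPACE.items())
    }


def make_fitness(train_and_evaluate):
    """Wrap a training routine as a fitness function for the optimizer.

    train_and_evaluate(hyp) is assumed to train the detector with the given
    hyperparameters and return a score where higher is better.
    """
    def fitness(position):
        hyp = decode(position)
        score = train_and_evaluate(hyp)
        return -score      # assumption: the optimizer minimizes
    return fitness
```

Because every fitness evaluation is a full training run, the small population and iteration budget stated above translate directly into the number of models trained during the search.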

The evaluation indicators are P, R, F1, and mAP. P refers to precision, the ratio of the number of correct detection results TP to the total number of detection results (\(TP+FP\)). R refers to recall, the ratio of the number of correct detection results TP to the number of actual objects (\(TP+FN\)). F1 is the harmonic mean of P and R and therefore considers both. The indicators and scores are calculated by the following formulas:

where k is the number of classes.
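The standard definitions of these indicators can be written out in a few lines. The sketch below covers precision, recall, F1, and mAP as the mean of per-class average precision over k classes; the compound score used as the fitness is not reproduced, and the function names and sample counts are chosen for this example.

```python
def precision(tp, fp):
    """P = TP / (TP + FP); returns 0.0 when there are no detections."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """R = TP / (TP + FN); returns 0.0 when there are no actual objects."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2.0 * p * r / (p + r) if (p + r) else 0.0


def mean_average_precision(ap_per_class):
    """mAP: mean of the per-class average precision over k classes."""
    return sum(ap_per_class) / len(ap_per_class)


p = precision(tp=8, fp=2)
r = recall(tp=8, fn=8)
f1 = f1_score(p, r)
```

With 8 true positives, 2 false positives, and 8 false negatives, precision is 0.8, recall is 0.5, and F1 is about 0.615, which shows how the harmonic mean is pulled toward the weaker of the two indicators.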

Results

The experiment is run on Ubuntu 18.04 with an NVIDIA A2000 GPU. The batch size is 8, the number of training epochs is 100, the image size is \(640\times 640\), the confidence threshold is 0.25, and the NMS IoU threshold is 0.5.

The default and the optimized hyperparameters are shown in Table 3. The most representative indicator, mAP, is used in the evaluation of YOLOv5s, WOG-YOLO, YOLOv7 [45], YOLOX [46], and Faster R-CNN [2], and the results are shown in Table 4. The ablation study of WOG-YOLO is shown in Table 5, and the loss curve is shown in Fig. 5. The mAP of YOLOv5s is 92.5\(\%\) and its F1-score is 90.0\(\%\). Adding an extra detection head (YOLOv5-4heads) improves the mAP by 0.6\(\%\). Replacing the C3 module of YOLOv5-4heads with the lightweight G-C3 module (G-YOLO) reduces the mAP by 0.3\(\%\). Compared with the YOLOv5s model, the final WOG-YOLO’s overall mAP increases by 1.7\(\%\), its mAP for pedestrians increases by 2.6\(\%\), and its mAP for cyclists increases by 2.3\(\%\). As pedestrians and cyclists have comparatively smaller features than cars, these gains indicate that the WOG-YOLO model is more sensitive to small objects and has greater precision.

As shown in Fig. 6, WOG-YOLO detects small objects well. Moreover, when part of an object is occluded by other things, WOG-YOLO still has reasonable detection ability.

Conclusion

To accurately identify objects in autonomous driving, a stable and effective detection algorithm is needed. A novel and efficient optimization algorithm combining WOA and GWO is proposed for improving the G-YOLO model.

The hunting strategy of GWO is improved and integrated into WOA, which forms the basic structure of MWOA. Furthermore, an adaptive scaling factor, the population concentration ratio, and an improved position update method are implemented in MWOA. In comparison with PSO, GWO, WOA, and WhaleFOA, tests on different kinds of benchmark functions show that MWOA has greater precision and better global solving ability.

By replacing the C3 block with the G-C3 block and adding an extra detection head, the highly optimizable G-YOLO is proposed. To improve G-YOLO’s performance, 12 hyperparameters are optimized by MWOA. The G-YOLO model is trained and evaluated using the self-built dataset containing 5325 images, and the final whale optimization G-YOLO (WOG-YOLO) model is obtained. Compared with the 92.5\(\%\) mAP and 90.0\(\%\) F1 of YOLOv5s, WOG-YOLO is 1.7\(\%\) better in mAP and 1.0\(\%\) better in F1. For small objects like pedestrians and cyclists, WOG-YOLO increases the respective mAP by 2.6\(\%\) and 2.3\(\%\).

In conclusion, the proposed method is an applicable and highly optimized approach to obtain a robust and efficient detection model in autonomous driving.

Data availability

The datasets generated and analysed during the current study are available in the KITTI dataset.

References

Munir, F. et al. Exploring thermal images for object detection in underexposure regions for autonomous driving. Appl. Soft Comput. 121, 108793. https://doi.org/10.1016/j.asoc.2022.108793 (2022).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. in Advances in Neural Information Processing Systems (Cortes, C., Lawrence, N., Lee, D., Sugiyama, M. & Garnett, R. eds.). Vol. 28 (Curran Associates, Inc., 2015).

Zhou, J., Feng, K., Li, W., Han, J. & Pan, F. TS4Net: Two-stage sample selective strategy for rotating object detection. Neurocomputing 501, 753–764. https://doi.org/10.1016/j.neucom.2022.06.049 (2022).

Piao, Z., Wang, J., Tang, L., Zhao, B. & Wang, W. AccLoc: Anchor-Free and two-stage detector for accurate object localization. Pattern Recognit. 126, 108523. https://doi.org/10.1016/j.patcog.2022.108523 (2022).

Shi, S., Wang, Z., Shi, J., Wang, X. & Li, H. From points to parts: 3D object detection from point cloud with part-aware and part-aggregation network. IEEE Trans. Pattern Anal. Mach. Intell. 43, 2647–2664. https://doi.org/10.1109/TPAMI.2020.2977026 (2021).

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016).

Ma, R. et al. CG-SSD: Corner guided single stage 3D object detection from LiDAR point cloud. ISPRS J. Photogram. Remote Sens. 191, 33–48. https://doi.org/10.1016/j.isprsjprs.2022.07.006 (2022).

Xiao, J., Jiang, H., Li, Z. & Gu, Q. Rethinking prediction alignment in one-stage object detection. Neurocomputing 514, 58–69. https://doi.org/10.1016/j.neucom.2022.09.132 (2022).

Shi, Q.-X. et al. Manipulator-based autonomous inspections at road checkpoints: Application of faster YOLO for detecting large objects. Defence Technol. 18, 937–951. https://doi.org/10.1016/j.dt.2021.04.004 (2022).

Carranza-García, M., Lara-Benítez, P., García-Gutiérrez, J. & Riquelme, J. C. Enhancing object detection for autonomous driving by optimizing anchor generation and addressing class imbalance. Neurocomputing 449, 229–244. https://doi.org/10.1016/j.neucom.2021.04.001 (2021).

Wang, X. et al. LDS-YOLO: A lightweight small object detection method for dead trees from shelter forest. Comput. Electron. Agricult. 198, 107035. https://doi.org/10.1016/j.compag.2022.107035 (2022).

Wang, X., Wang, X., Li, C., Zhao, Y. & Ren, P. Data-attention-YOLO (DAY): A comprehensive framework for mesoscale eddy identification. Pattern Recognit. 131, 108870. https://doi.org/10.1016/j.patcog.2022.108870 (2022).

Wang, Y., Wang, H. & Peng, Z. Rice diseases detection and classification using attention based neural network and Bayesian optimization. Expert Syst. Appl. 178, 114770. https://doi.org/10.1016/j.eswa.2021.114770 (2021).

Lokku, G., Reddy, G. H. & Prasad, M. N. G. OPFaceNet: OPtimized Face Recognition Network for noise and occlusion affected face images using Hyperparameters tuned convolutional neural network. Appl. Soft Comput. 117, 108365. https://doi.org/10.1016/j.asoc.2021.108365 (2022).

Dorigo, M., Maniezzo, V. & Colorni, A. Ant system: Optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cybernet. Part B (Cybernetics) 26, 29–41. https://doi.org/10.1109/3477.484436 (1996).

Cortez, R., Garrido, R. & Mezura-Montes, E. Spectral richness PSO algorithm for parameter identification of dynamical systems under non-ideal excitation conditions. Appl. Soft Comput. 128, 109490. https://doi.org/10.1016/j.asoc.2022.109490 (2022).

Mirjalili, S. & Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 95, 51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008 (2016).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007 (2014).

Xie, H. et al. Improving K-means clustering with enhanced Firefly algorithms. Appl. Soft Comput. 84, 105763. https://doi.org/10.1016/j.asoc.2019.105763 (2019).

Zhou, J. et al. Optimization of support vector machine through the use of metaheuristic algorithms in forecasting TBM advance rate. Eng. Appl. Artif. Intell. 97, 104015. https://doi.org/10.1016/j.engappai.2020.104015 (2021).

Chen, K., Badji, A., Laghrouche, S. & Djerdir, A. Polymer electrolyte membrane fuel cells degradation prediction using multi-kernel relevance vector regression and whale optimization algorithm. Appl. Energy 318, 119099. https://doi.org/10.1016/j.apenergy.2022.119099 (2022).

Xiong, G. et al. Parameter extraction of solar photovoltaic models by means of a hybrid differential evolution with whale optimization algorithm. Solar Energy 176, 742–761. https://doi.org/10.1016/j.solener.2018.10.050 (2018).

Ghany, K. K. A., AbdelAziz, A. M., Soliman, T. H. A. & Sewisy, A.A.E.-M. A hybrid modified step Whale Optimization Algorithm with Tabu Search for data clustering. J. King Saud Univ. Comput. Inf. Sci. 34, 832–839. https://doi.org/10.1016/j.jksuci.2020.01.015 (2022).

Liu, W., Shao, Y., Chen, K., Li, C. & Luo, H. Whale optimization algorithm-based point cloud data processing method for sewer pipeline inspection. Autom. Construct. 141, 104423. https://doi.org/10.1016/j.autcon.2022.104423 (2022).

Jadhav, A. N. & Gomathi, N. WGC: Hybridization of exponential grey wolf optimizer with whale optimization for data clustering. Alex. Eng. J. 57, 1569–1584. https://doi.org/10.1016/j.aej.2017.04.013 (2018).

Soppari, K. & Chandra, N. S. Development of improved whale optimization-based FCM clustering for image watermarking. Comput. Sci. Rev. 37, 100287. https://doi.org/10.1016/j.cosrev.2020.100287 (2020).

Santos, C. E. D. S., Sampaio, R. C., Coelho, L. D. S., Bestard, G. A. & Llanos, C. H. Multi-objective adaptive differential evolution for SVM/SVR hyperparameters selection. Pattern Recognit. 110, 107649. https://doi.org/10.1016/j.patcog.2020.107649 (2021).

Chekuri, R. B. R., Eshwar, D., Kotteda, T. K. & Srikanth Varma, R. S. Experimental and thermal investigation on die-sinking EDM using FEM and multi-objective optimization using WOA-CS. Sustain. Energy Technol. Assess. 50, 101860. https://doi.org/10.1016/j.seta.2021.101860 (2022).

Sun, Y. & Chen, Y. Multi-population improved whale optimization algorithm for high dimensional optimization. Appl. Soft Comput. 112, 107854. https://doi.org/10.1016/j.asoc.2021.107854 (2021).

Seyyedabbasi, A. WOASCALF: A new hybrid whale optimization algorithm based on sine cosine algorithm and levy flight to solve global optimization problems. Adv. Eng. Softw. 173, 103272. https://doi.org/10.1016/j.advengsoft.2022.103272 (2022).

Mostafa Bozorgi, S. & Yazdani, S. IWOA: An improved whale optimization algorithm for optimization problems. J. Comput. Des. Eng. 6, 243–259. https://doi.org/10.1016/j.jcde.2019.02.002 (2019).

Zhang, L. et al. Dynamic modeling for a 6-DOF robot manipulator based on a centrosymmetric static friction model and whale genetic optimization algorithm. Adv. Eng. Softw. 135, 102684. https://doi.org/10.1016/j.advengsoft.2019.05.006 (2019).

Liu, M., Yao, X. & Li, Y. Hybrid whale optimization algorithm enhanced with Lévy flight and differential evolution for job shop scheduling problems. Appl. Soft Comput. 87, 105954. https://doi.org/10.1016/j.asoc.2019.105954 (2020).

Luo, J. et al. Multi-strategy boosted mutative whale-inspired optimization approaches. Appl. Math. Model. 73, 109–123. https://doi.org/10.1016/j.apm.2019.03.046 (2019).

Yang, W. et al. A multi-strategy whale optimization algorithm and its application. Eng. Appl. Artif. Intell. 108, 104558. https://doi.org/10.1016/j.engappai.2021.104558 (2022).

Chakraborty, S., Sharma, S., Saha, A. K. & Chakraborty, S. SHADE-WOA: A metaheuristic algorithm for global optimization. Appl. Soft Comput. 113, 107866. https://doi.org/10.1016/j.asoc.2021.107866 (2021).

Fan, Y. et al. Boosted hunting-based fruit fly optimization and advances in real-world problems. Expert Syst. Appl. 159, 113502. https://doi.org/10.1016/j.eswa.2020.113502 (2020).

Chen, H., Yang, C., Heidari, A. A. & Zhao, X. An efficient double adaptive random spare reinforced whale optimization algorithm. Expert Syst. Appl. 154, 113018. https://doi.org/10.1016/j.eswa.2019.113018 (2020).

Harris, C. et al. Array programming with NumPy. Nature 585, 357–362. https://doi.org/10.1038/s41586-020-2649-2 (2020).

Thieu, N. V. & Mirjalili, S. MEALPY: A framework of the state-of-the-art meta-heuristic algorithms in Python. https://doi.org/10.5281/zenodo.7068595 (2022).

Jocher, G. et al. Ultralytics/yolov5: v6.2—YOLOv5 classification models, Apple M1, reproducibility, ClearML and Deci.ai integrations. https://doi.org/10.5281/zenodo.7002879 (2022).

Li, X., Wang, W., Hu, X. & Yang, J. Selective kernel networks. in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2019).

Han, K. et al. Ghostnet: More features from cheap operations. in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2020).

Geiger, A., Lenz, P. & Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. in 2012 IEEE Conference on Computer Vision and Pattern Recognition. 3354–3361. https://doi.org/10.1109/CVPR.2012.6248074 (2012).

Wang, C.-Y., Bochkovskiy, A. & Liao, H.-Y. M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. https://doi.org/10.48550/arXiv.2207.02696. arXiv:2207.02696 [cs] (2022).

Ge, Z., Liu, S., Wang, F., Li, Z. & Sun, J. YOLOX: Exceeding YOLO Series in 2021. https://doi.org/10.48550/arXiv.2107.08430. arXiv:2107.08430 [cs] (2021).

Acknowledgements

This work was supported by the China Shandong Province Major Scientific and Technological Innovation Project (Grant No. 2019JZZY010443) and the China Shandong Province Key Research and Development Program (Major Scientific and Technological Innovation Project) (Grant No. 2020CXGC011004).

Author information

Contributions

L.X., W.Y. wrote the main manuscript text, W.Y., L.X. reviewed and edited the original document, and J.J. prepared the datasets. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, L., Yan, W. & Ji, J. The research of a novel WOG-YOLO algorithm for autonomous driving object detection. Sci Rep 13, 3699 (2023). https://doi.org/10.1038/s41598-023-30409-1

DOI: https://doi.org/10.1038/s41598-023-30409-1
