Abstract

Accurate radiogenomic classification of brain tumors is important for improving diagnosis, prognosis, and treatment planning for patients with glioblastoma. In this study, we propose a novel two-stage MGMT Promoter Methylation Prediction (MGMT-PMP) system that fuses latent deep features with radiomic features to predict the genetic subtype of glioblastoma. A novel fine-tuned deep learning architecture, the Deep Learning Radiomic Feature Extraction (DLRFE) module, is proposed for latent feature extraction; its features are fused with radiomic features (GLCM, HOG, and LBP) that quantify the spatial distribution and size of tumorous structures. The novel rejection algorithm proved significantly effective in identifying and removing non-informative (negative) training instances from the original dataset. The fused feature vectors are then used to train and test k-NN and SVM classifiers. The 2021 RSNA Brain Tumor challenge dataset (BraTS-2021) consists of four structural mpMRI sequences, viz. fluid-attenuated inversion-recovery, T1-weighted, T1-weighted contrast-enhanced, and T2-weighted. We report classification performance on this dataset, for the first time in published form, in terms of accuracy, F1-score, and Matthews correlation coefficient. Jackknife tenfold cross-validation was used for training and testing on the BraTS-2021 dataset. The highest classification performance for detecting MGMT methylation status in patients with glioblastoma is (96.84 ± 0.09)%, (96.08 ± 0.10)%, and (97.44 ± 0.14)% in accuracy, sensitivity, and specificity, respectively. Deep learning feature extraction combined with radiogenomic features, fusing imaging phenotypes and molecular structure and using the rejection algorithm, was found to perform outstandingly in detecting the MGMT methylation status of glioblastoma patients. 
The approach relates the genomic variation with radiomic features forming a bridge between two areas of research that may prove useful for clinical treatment planning leading to better outcomes.


Introduction

Brain cells divide by the billions; when this division becomes uncontrollable and forms abnormal regions, the growth is declared tumorous, and brain cancers are among the cancers with the highest mortality rates worldwide for adults as well as children1. The location of a tumor in the brain and its growth rate are highly unpredictable, and brain tumors are broadly classified as primary or secondary. Primary tumors, which originate inside the brain, are the deadliest and are malignant most of the time. Gliomas account for 80% of primary brain tumors; of Grades I to IV, only Grade I grows slowly and is benign2,3. Grade II and III gliomas grow quickly and frequently require prompt treatment. Grade IV gliomas, the most aggressive type, also known as glioblastoma (GB), are the most challenging in terms of prognosis and clinical outcome. In adults, GB and diffuse astrocytic glioma, owing to their extreme intrinsic heterogeneity in shape and microscopic anatomy, are the most dangerous tumors of the central nervous system. Despite numerous treatment options, the prognosis of GB patients has not improved substantially during the last 20 years4,5,6,7,8,9,10. The World Health Organization (WHO) released its classification of central nervous system tumors, emphasizing the utility of integrated diagnostics, in which clinical tumor diagnosis integrates molecular-cytogenetic features11. O6-methylguanine-DNA methyltransferase (MGMT) is a DNA repair enzyme that plays a vital role in chemoresistance to alkylating agents; the methylation of its promoter is a promising prognostic factor for predicting chemotherapy response in early-diagnosed GB12,13,14. In this respect, the most common course of treatment for GB comprises surgery, radiotherapy, and adjuvant chemotherapy15. 
The effectiveness of chemotherapy is often tied to the promoter methylation status of MGMT, an important biomarker; MGMT functions as a repair mechanism for guanine nucleotides and prevents cell death caused by alkylating agents12,16. Under normal circumstances, the presence of the MGMT enzyme is beneficial because it repairs DNA damage. In GB patients, however, MGMT decreases the effectiveness of chemotherapy by counteracting the alkylating chemotherapeutic agents. This work concerns the prediction of MGMT promoter methylation status ("Preprocessing"), which causes MGMT gene silencing; methylation leads to favorable results in GB patients treated with alkylating-agent chemotherapy, which stops cell division by cross-linking DNA strands. Therefore, predicting the methylation status of the MGMT promoter in GB can support decision-making and treatment planning, and it is the sole objective of this research.

Magnetic resonance imaging (MRI), another non-invasive technique, is currently used to detect minor structural details that are difficult to discriminate with computed tomography (CT). For complicated GB MRI scans, manual analysis by expert radiologists and physicians is tedious and time-consuming17. Complex cases require comparing the tumorous region with neighboring regions to exploit more of the perceptual information stored in the image for improved classification. Doing this manually is impractical when a large number of images must be handled. Early GB detection with reliable prediction results is important for the patient's health11. Consequently, novel approaches attract constant interest from research groups working toward prompt and reliable tumor detection. Machine learning (ML) and its variant deep learning (DL) are key enablers of artificial intelligence (AI) for discriminative feature extraction, turning images into useful information.

The RSNA ASNR MICCAI Brain Tumor Segmentation BraTS 2021 challenge (BraTS-2021 dataset) is based on multi-institutional, multi-parametric Magnetic Resonance Imaging (mpMRI) scans18. This article addresses the prediction of MGMT promoter methylation status, a genetic characteristic of glioblastoma, using baseline MRI scans acquired before surgery and during preoperative preparation. We propose a novel classification framework that distinguishes MGMT-methylated from MGMT-unmethylated tumors using the challenging BraTS-2021 dataset. The former class is designated as 1 (MGMT+) and the latter as 0 (MGMT−).

The current practice for genetic analysis of cancer requires surgically obtained tissue samples. Moreover, genetic characterization of the tumor takes weeks before a conclusion is reached18, and its results may necessitate a subsequent surgery. The idea here is to predict cancer genetics from magnetic resonance imaging, an approach called radiogenomics, which might improve therapy results while reducing the number of surgical procedures. Successful radiogenomics would ease the burden of brain cancer by enabling minimally invasive diagnosis and treatment. This pre-surgical strategy has the potential to improve the management and survival prospects of brain cancer patients.

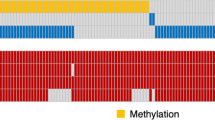

The MGMT gene is regulated by an epigenetic mechanism: methylation of the CpG island of the MGMT promoter. MGMT promoter methylation suppresses expression of the MGMT gene, thereby decreasing MGMT enzyme activity in the cell. Because MGMT methylation increases the effectiveness of chemotherapy, the methylation status is often taken into consideration when determining the course of treatment18,19,20. The traditional method for determining MGMT methylation status involves extracting tumor tissue through surgery. After extraction, determining the methylation status of the tumor is a time-consuming process that can take up to weeks. Further, after determination, additional invasive procedures may be necessary to identify the optimal treatment18,21.

Radiogenomic testing of MGMT methylation helps reduce the invasiveness of current testing procedures. Radiogenomics aims to predict cellular genomics from a tissue's phenotypic image characteristics22,23. In gliomas, radiomics is commonly used to predict survival and to evaluate the potential of chemotherapeutic treatments24. Several radiogenomic models have been developed to predict MGMT methylation status in GB patients25,26,27. However, radiogenomic models suffer from a lack of standardization because of variation in methodology, software, and radiologists' readings23. Recently, Zhang et al.28 introduced a blockchain-based data-sharing scheme with fine-grained access control. They separated the public and private parts of electronic medical records, which were then encrypted separately using symmetric searchable encryption (SSE), and the SSE symmetric keys were in turn encrypted using attribute-based encryption. This allows patients to share data securely. For GB, the radiogenomic approach to MGMT methylation testing consists of evaluating magnetic resonance images (MRIs) of the brain29. To extend current radiogenomic analysis methods, artificial intelligence can be employed to construct complex predictive deep learning radiogenomic models30.

Deep convolutional neural networks are employed for a vast array of tasks, including medical image analysis31,32 and image classification14,33. A deep learning architecture has encoding blocks arranged in multiple layers, and the feature maps of lower layers are forwarded to subsequent layers of increasing complexity. Owing to sparse interactions, a convolutional neural network (CNN)34 needs far fewer connections and trainable parameters than a fully connected shallow neural network. CNN-based transfer learning has long been well established35,36,37,38,39 and has been used extensively to analyze different imaging databases37,38,40,41, including neuroimaging42, MRI, CT (computed tomography)36, and ultrasound images43. Transfer learning using CNNs based on AlexNet and GoogleNet trained on the ImageNet dataset is a well-known deep learning approach44. CNNs are extensively used in vision-related applications, including object detection45, spanning classification46, and segmentation47. Combining data preprocessing and augmentation with transfer learning can improve classification results. In our case, since the dataset is enormous, preprocessing alone, together with a fine-tuned CNN architecture, appears to be of great value.

The features used for training should have discriminative ability that can be exploited to separate the regions on either side of the hyperplane with maximum confidence in the target label. In this context, there is a gap for efficient and robust automated systems for brain tumor detection using MRI. Hsieh et al.48 categorized brain tumors based on region-of-interest (ROI) detection, feature selection, and feature extraction. They used local textural features, including global histogram moments, on 107 glioma images (73 low-grade; 34 high-grade). However, the work was reported on a limited dataset and lacked features from other static feature extraction methods. Cheng et al.49 used a T1-weighted contrast-enhanced brain MRI dataset50 containing three tumor types (glioma, pituitary, and meningioma) and experimented with three feature extraction methods, namely intensity histogram, bag-of-words (BoW), and gray level co-occurrence matrix (GLCM), finding that BoW performs relatively better at a higher computational cost. Their accuracy was limited by the absence of preprocessing that could yield more discriminative features, and a hybrid of the three feature spaces was not explored. Similarly, Sachdeva et al.51 extracted color and textural features from segmented ROIs, used a genetic algorithm to select features with optimum fitness, and reported an accuracy of 94.90% using a genetic algorithm-based artificial neural network (GA-ANN). However, in large datasets, colored images have different color tints, which makes staining procedures an essential step. Further, dynamic features need to be explored with deep learning algorithms to obtain more discriminative features.

Claro et al.52 used a hybrid feature space formed by textural features, such as Tamura (coarseness, contrast, directionality, line-likeness, regularity, and roughness), gray level run length matrix (GLRLM), histogram of oriented gradients (HOG), morphology, and local binary patterns (LBP), merged with features extracted by seven CNN architectures for glaucoma classification. Their 30,862-dimensional feature space was reduced using the gain ratio, which ranks features by their performance, yielding an optimal setting for glaucoma detection. They found the GLCM descriptor combined with transfer learning-based features to be the most effective for their specific problem. The work remains to be explored on larger, more diverse, multi-parametric, and multi-institutional datasets, with dynamic features using residual feature maps concatenated across successive layers. Garcia et al.53 addressed imbalanced datasets using ensemble classifiers with feature space partitioning. The partitioning parameters were optimized using a hybrid metaheuristic called GACE, which combines a genetic algorithm (GA) with the cross-entropy (CE) method. Further work using generative adversarial networks (GAN) could tackle the underlying class imbalance. Shaban et al.54 introduced a hybrid feature selection methodology that extracts features with optimal characteristics from COVID-19 CT images. The feature selection comprises fast and accurate selection stages. They used an enhanced version of k-NN that avoids being trapped by rigid heuristics when choosing the neighbors of the tested subject. The work could be extended to other ML classifiers, as well as to DL classification techniques that involve features from high-level abstraction layers.

In this research, we propose a novel two-stage MGMT Promoter Methylation Prediction (MGMT-PMP) system that precisely quantifies the image structure of GB from FLAIR, T1w, T2, and T1Gd mpMRIs. We selected popular feature types, viz. GLCM, HOG, and LBP, and fused them with novel deep learning features to form a hybrid feature set (HFS). The system differs from previous approaches in three aspects. First, it engages a novel Deep Learning Radiomic Feature Extraction (DLRFE) module that brings dynamic, problem-specific features into the classification process, leading to promising results. Second, it provides different categories of second-order statistics and local textural features, exploiting the strengths of each feature extraction module. Third, it uses a rejection algorithm to filter redundant and irrelevant instances (images) out of the RSNA dataset, improving class discrimination and leading to faster convergence. A comparison with recent techniques is also presented for performance analysis.

The key contributions of this research work are summarized as follows:

- This work addresses the MGMT promoter methylation status, which affects the efficiency of chemotherapy in GB patients; 'MGMT+' status increases the effectiveness of chemotherapy.
- A novel two-stage prediction system for MGMT promoter methylation status that precisely quantifies the image structure of glioblastoma using FLAIR, T1w, T2, and T1Gd mpMRIs.
- A novel deep learning-based feature extraction module that extracts dynamic features based on the problem structure.
- A hybrid feature set formed by fusing deep features with static features extracted by GLCM, HOG, and LBP.
- Radiogenomic classification on the RSNA ASNR MICCAI Brain Tumor Segmentation BraTS 2021 challenge dataset, with 348,642 mpMRI scans, reported for the first time in published form using accuracy, F1-score, and Matthews correlation coefficient.
- A rejection algorithm for removing redundant and irrelevant instances from the dataset.
- A detailed complexity analysis of the individual feature sets and of the hybrids formed by fusing dynamic and static feature sets.
- A comparison of the proposed work with other state-of-the-art techniques.

The rest of the paper is organized as follows: "Importance in the Clinical management and the survival of GB patients" briefly describes the impact of the study on the clinical management and survival of GB patients, "Material and methods" presents the materials and methods, "Results and discussion" details the results and discussion, and section "Conclusions" concludes the paper. The abbreviations used throughout this article are listed in Table 1.

Importance in the clinical management and the survival of GB patients

Gliomas, with symptoms of varying severity, are a serious threat to the central nervous system. BraTS-2021 focuses on the molecular representation and structure of the underlying tumor for intraoperative neurosurgery and provides a preoperative basis using mpMRI data18,40. Its objective has been the localization of brain tumor sub-regions that are microscopically distinct in structure. Identifying tumor boundaries in MRI is important for surgical treatment planning, for intraoperative brain incision, for monitoring tumor growth, and for planning the radiotherapy and chemotherapy maps (RCM) that follow surgical treatment. Despite the poor prognosis of GB patients, the tumor's MGMT promoter methylation status, which can be determined by radiogenomic classification of mpMRI scans, is extremely important for predicting chemotherapy response. Further, because the proposed framework is fine-tuned to run on low-cost GPU-based portable machines, it could greatly help medical practitioners assist patients and manage post-surgical treatment more effectively.

Some recent studies have focused on the notion of information freshness. Yang et al.55 sought to offset the effects of COVID-19 by controlling the spread of the epidemic, introducing an optimization scheme based on Age of Information (AoI), a measure of information freshness, with artificial intelligence-based diagnostic bots. First, they formed a health-state monitoring system in which diagnostic biosensing data were transmitted through bots using edge servers. They then derived the AoI problem in closed form and proposed algorithms for bot placement and channel selection using stochastic learning.

Material and methods

The proposed framework (Fig. 1), the MGMT Promoter Methylation Prediction (MGMT-PMP) system, forms a highly discriminative feature set, HFS, in two stages. In stage one, features are extracted with the DLRFE module from the last flattened layers of the convolutional neural network; in stage two, second-order statistics and local textural features are extracted. The features from both stages are merged to form the HFS, which is then used for radiogenomic classification by a strong ML classifier such as SVM or k-NN. This section describes the dataset, preprocessing, and feature extraction, followed by the prediction model.
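The fusion-then-classify step can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that fusion is plain column-wise concatenation of per-image feature blocks and that features are z-scored with training-split statistics before a Euclidean k-NN majority vote. The helper names `fuse_features` and `knn_predict` and the synthetic feature blocks are hypothetical.

```python
import numpy as np


def fuse_features(*blocks):
    """Concatenate per-image feature blocks (rows = images) into one hybrid vector per image."""
    return np.hstack([np.asarray(b, dtype=float) for b in blocks])


def knn_predict(train_x, train_y, test_x, k=3):
    """Binary k-NN (labels 0/1): Euclidean distance on z-scored features, majority vote."""
    mu = train_x.mean(axis=0)
    sd = train_x.std(axis=0)
    sd[sd == 0] = 1.0  # guard against constant columns
    tr = (train_x - mu) / sd  # scaling statistics come from the training split only
    te = (test_x - mu) / sd
    dist = np.linalg.norm(te[:, None, :] - tr[None, :, :], axis=2)
    nearest = np.argsort(dist, axis=1)[:, :k]  # k closest training rows per test row
    return (train_y[nearest].mean(axis=1) > 0.5).astype(int)  # odd k avoids ties


# Synthetic stand-ins: 8 "deep" and 13 "GLCM" features for 20 images per class.
rng = np.random.default_rng(0)
deep = np.vstack([rng.normal(0, 1, (20, 8)), rng.normal(3, 1, (20, 8))])
glcm = np.vstack([rng.normal(0, 1, (20, 13)), rng.normal(3, 1, (20, 13))])
y = np.array([0] * 20 + [1] * 20)

hfs = fuse_features(deep, glcm)  # shape (40, 21)
pred = knn_predict(hfs, y, np.vstack([np.zeros(21), np.full(21, 3.0)]))
print(hfs.shape, pred)
```

Fitting the scaling on the training split only keeps the held-out fold unseen; an SVM could replace `knn_predict` without changing the fusion step.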

Dataset

The proposed framework was evaluated on the publicly available RSNA-MICCAI Brain Tumor Radiogenomic Classification dataset (BraTS-2021)56, released for a featured code competition aimed at predicting the status of a genetic biomarker to support brain cancer treatment. BraTS-2021 is set to become a common benchmark for GB segmentation algorithms, providing multi-institutional, multi-parametric Magnetic Resonance Imaging (mpMRI) images of 2000 glioma patients. The preoperative MRI images were divided into training, validation, and testing cohorts. For the classification task, target labels based on MGMT promoter methylation status are provided for 585 subjects. The testing cohort is currently not accessible, and the validation data for 87 subjects are provided without labels. We therefore used the labeled training data and partitioned it for testing using tenfold cross-validation. The available modalities are T1 weighted (T1w), T1 weighted contrast enhancement (T1wCE), T2 weighted (T2w), and fluid-attenuated inversion-recovery (FLAIR). BraTS-2021 focuses on two tasks: first, segmentation of tumor sub-regions, and second, radiogenomic classification of MGMT promoter methylation status18. Fig. 2 shows some rescaled mpMRI images from the BraTS-2021 dataset, with sagittal, axial, and coronal views from top to bottom; the variation across columns represents the intra-class variance of the dataset. The specifications of the training and testing instances in BraTS-2021, taken from the given "train" directory, are listed in Table 2. Files carrying redundant information are removed using the rejection algorithm (RA); its trimming effect is shown in the lower part of Table 2, which lists the specifications of the reduced dataset ("Preprocessing").
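Because only the 585 labeled training subjects are usable, evaluation relies on tenfold cross-validation. The sketch below shows one way to generate the folds; it assumes the split is made at the subject level (so slices of one patient never appear in both the training and test folds), which the text does not state explicitly, and the generator name `tenfold_indices` is hypothetical.

```python
import numpy as np


def tenfold_indices(n_subjects, n_folds=10, seed=0):
    """Yield (train_idx, test_idx) pairs; each subject is held out exactly once."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_subjects)  # shuffle subjects once
    folds = np.array_split(order, n_folds)  # nearly equal folds (sizes differ by at most 1)
    for i in range(n_folds):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != i])
        yield train_idx, test_idx


splits = list(tenfold_indices(585))
print([len(test) for _, test in splits])
```

A per-fold metric (e.g., accuracy) averaged over the ten folds, together with its standard deviation, yields figures in the "mean ± deviation" form used in the abstract.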

In the absence of a testing cohort, the BraTS-2021 dataset comprises 348,642 training instances. Sharing this dataset can be useful provided that its security-related issues are addressed. The large amount of data generated by the heterogeneous Internet of Medical Things (IoMT) may be sent to cloud servers for pathological analysis and diagnosis. This direct mode is convenient for both patients and clinicians; however, the open communication channel raises numerous security and privacy issues. In this context, Wang et al.57 recently considered the physical-layer security and over-centralized server problems in wireless medical sensor networks and proposed a reliable authentication protocol using cutting-edge blockchain technology and physically unclonable functions, with biometric information handled by a fuzzy extractor scheme.

Preprocessing

Standardized preprocessing procedures were applied to all mpMRI scans in the BraTS-2021 dataset. Preprocessing included conversion to the NIfTI file format58, co-registration to SRI2459, an MRI-based atlas template of normal adult human brain anatomy, resampling to 1 mm³ isotropic resolution, and skull stripping, which isolates the cortex and cerebellum from the skull and non-brain areas, as illustrated in Fig. 3. All preprocessing steps are handled with publicly available tools such as the Cancer Imaging Phenomics Toolkit (CaPTk), including the Federated Tumor Segmentation (FeTS) tool18,60,61,62.

The preprocessing pipeline begins with converting DICOM files to NIfTI files18,58. The files for radiogenomic classification, one of the two BraTS-2021 tasks, are not co-registered, whereas for segmentation, the other task, the data are registered to a standard template and provided as NIfTI files. Conversion to NIfTI removes the associated metadata, including all Protected Health Information (PHI), from the DICOM files. In addition, skull stripping reduces the possibility of facial reconstruction, which could otherwise be used to recognize the patient; it is performed with a representation learning-based methodology that models a brain shape prior and is independent of the MRI input63,64,65.

For radiogenomic classification, all mpMRI images are preprocessed as illustrated in Fig. 3 to generate skull-stripped volumes, which are finally converted from NIfTI back to DICOM format. A two-step data deidentification process, comprising the RSNA CTP (Clinical Trials Processor) anonymizer18 and whitelisting of the resulting DICOM files, is then carried out. This removes all unnecessary tags from the DICOM headers, ensuring that all PHI entries are removed.

For radiogenomic classification, the images are segregated into two classes, "0" and "1", based on MGMT promoter methylation status; the T1W, T1WCE, FLAIR, and T2W images are combined within each class according to the annotations assigned by expert radiologists and pathologists. The images are then converted from DICOM (12 bits) to JPEG (8 bits) format and resized from 512 × 512 to 64 × 64 to speed up training, which is unavoidable for such an enormous dataset and avoids the memory issues encountered on an average-priced GPU-based portable system.
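The bit-depth reduction and resizing can be illustrated with NumPy. This is a sketch under stated assumptions: the 12-to-8-bit mapping simply drops the four least-significant bits (a linear rescale of 0..4095 to 0..255), and the 512 → 64 resize averages non-overlapping 8 × 8 blocks. The conversion tool, intensity windowing, and interpolation actually used for the JPEG export are not specified here, and the function names are hypothetical.

```python
import numpy as np


def to_uint8(slice12):
    """Map 12-bit intensities (0..4095) to 8 bits (0..255) by dropping the 4 low-order bits."""
    s = np.clip(np.asarray(slice12), 0, 4095).astype(np.uint16)
    return (s >> 4).astype(np.uint8)


def downsample(img, out=64):
    """Shrink a square image by averaging non-overlapping blocks (512 -> 64 uses 8 x 8 blocks)."""
    n = img.shape[0]
    if img.shape != (n, n) or n % out:
        raise ValueError("expects a square image whose side is a multiple of `out`")
    f = n // out
    return img.reshape(out, f, out, f).mean(axis=(1, 3))


def preprocess(slice12, out=64):
    """12-bit 512 x 512 slice -> 8-bit out x out image."""
    small = downsample(to_uint8(slice12).astype(np.float64), out)
    return np.round(small).astype(np.uint8)


demo = np.random.default_rng(1).integers(0, 4096, size=(512, 512))
small = preprocess(demo)
print(small.shape, small.dtype)
```

Each output pixel stores 1 byte instead of 2 and there are 64 times fewer pixels, which is where the memory saving on a modest GPU comes from.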

Pseudocode for rejection algorithm

The rejection algorithm, illustrated in Fig. 4, is run separately for each class. Its sole objective is to remove instances that do not add discriminative information to the radiogenomic classification process. The "datapath" of one of the two binary classes is set, and the entire image set of that class, MGMT− (Class "0") or MGMT+ (Class "1"), is loaded exclusively. The number of images is fetched to serve as the stopping criterion for the algorithm. The algorithm checks for redundant/irrelevant images and removes them one by one from the class using a threshold on the sum of pixel values (Th). After thorough investigation, Th = 0 was empirically found to give optimal results. Once all images have passed through the RA, the output lists the details of each rejected file, and reloading the revised datapath yields the improved class details. The initial instance count of a class should equal the number of rejected files plus the final class size (after RA). The RA reduced the number of instances by 26.75% and 27.51% for classes "0" and "1", respectively.
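The rejection step can be sketched in a few lines of NumPy. The sketch assumes the images of one class are already loaded as arrays (file loading via the datapath is omitted) and that an image is rejected when its pixel sum is at most Th; since pixel sums are non-negative, Th = 0 discards exactly the blank, all-zero slices. The function name `rejection_algorithm` is hypothetical.

```python
import numpy as np


def rejection_algorithm(images, th=0):
    """Split one class's images into kept images and indices of rejected ones.

    An image is rejected when the sum of its pixel values is <= th.
    """
    kept, rejected = [], []
    for idx, img in enumerate(images):  # the image count acts as the stopping criterion
        # Cast before summing so that uint8 pixel values cannot overflow.
        if np.asarray(img, dtype=np.int64).sum() <= th:
            rejected.append(idx)
        else:
            kept.append(img)
    # Consistency check described in the text: initial count = rejected + final size.
    assert len(images) == len(kept) + len(rejected)
    return kept, rejected


blank = np.zeros((64, 64), dtype=np.uint8)
tissue = np.full((64, 64), 40, dtype=np.uint8)
kept, rejected = rejection_algorithm([blank, tissue, blank, tissue, tissue])
reduction = 100 * len(rejected) / 5  # percentage reduction for this toy class
print(len(kept), rejected, reduction)
```

For this toy class the call keeps 3 images, rejects indices 0 and 2, and reports a 40% reduction, the same quantity the text reports per class (26.75% and 27.51%).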

Deep learning-based latent shape features

We propose the DLRFE module to obtain radiomic characteristics through deep learning by extracting features from the fully connected layers. The module's parameters are tuned for minimal memory requirements and computational overhead, achieving maximum efficiency on average-priced GPU-based computer systems. It consists of three convolution blocks, as illustrated in Fig. 5. The weights and biases are kept small using L2 regularization, and a dropout layer after the third block improves generalization.

The DLRFE module consists of two networks: an encoder, which infers latent variables from the input image, and a fully connected network, which infers the prediction of the deep learning architecture. The input to the encoder network is a 64 × 64 image, and the output is a classification prediction. The encoder network consists of three convolutional blocks (Block-1, Block-2, and Block-3) followed by three fully connected layers. Each block consists of convolutional, ReLU, and max-pooling layers, with batch normalization after Block-2 and a dropout layer after Block-3.
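To make the tensor sizes concrete, the helper below traces output shapes and trainable-parameter counts through a DLRFE-like stack. The 3 × 3 kernels with same padding, 2 × 2 max pooling, single-channel input, and filter counts (32, 64, 128) are hypothetical placeholders, since those hyperparameters are not given in this section; only the 64 × 64 input, the block order, batch normalization after Block-2, dropout after Block-3, and the FC sizes 512/64/2 come from the text.

```python
def conv_out(size, kernel=3, stride=1, pad=1):
    """Spatial size after a square convolution."""
    return (size + 2 * pad - kernel) // stride + 1


def pool_out(size, window=2, stride=2):
    """Spatial size after max pooling."""
    return (size - window) // stride + 1


def trace_dlrfe(input_size=64, in_ch=1, filters=(32, 64, 128), fc=(512, 64, 2), k=3):
    """List (layer, output_shape, trainable_params) for a DLRFE-like stack.

    Filter counts, kernel size, and input channels are hypothetical placeholders.
    """
    rows, size, ch = [], input_size, in_ch
    for b, out_ch in enumerate(filters, start=1):
        size = conv_out(size, kernel=k, pad=k // 2)  # 'same' padding keeps the size
        rows.append((f"block{b}_conv_relu", (size, size, out_ch), k * k * ch * out_ch + out_ch))
        size, ch = pool_out(size), out_ch
        rows.append((f"block{b}_maxpool", (size, size, ch), 0))
        if b == 2:
            rows.append(("batchnorm", (size, size, ch), 2 * ch))  # scale and shift per channel
        if b == 3:
            rows.append(("dropout", (size, size, ch), 0))
    width = size * size * ch
    rows.append(("flatten", (width,), 0))
    for i, units in enumerate(fc, start=1):
        rows.append((f"FC{i}", (units,), width * units + units))  # weights + biases
        width = units
    return rows


for name, shape, params in trace_dlrfe():
    print(f"{name:18s} {str(shape):16s} {params}")
```

Under these placeholder settings the flattened vector has 8 × 8 × 128 = 8192 entries, and FC1 alone holds most of the parameters, which is why the input resolution dominates memory use.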

After the feature maps are flattened into a 1D vector, the fully connected layers connect every neuron to the neurons of the next layer, enabling dynamic extraction of latent features. The three fully connected layers of the DLRFE module, FC1, FC2, and FC3, have 512, 64, and 2 neurons, respectively. A SoftMax layer converts the non-normalized outputs of the two FC3 neurons into a probability distribution over the two classes, with values in the range [0, 1]. The probability of the kth class is given by the normalized exponential function \(P_{k}=\frac{e^{O_{k}}}{\sum_{m=1}^{M}e^{O_{m}}}\), where \(O_{k}\) is the activation of the kth class and M = 2 is the number of classes. The cross-entropy loss (CEL) compares two label distributions, the target t(x) and the predicted p(x): \(L(t,p)=-\sum_{\forall x}t(x)\log p(x)\). During backpropagation, the weights are updated by minimizing the cost function \(C=-\frac{1}{J}\sum_{j=1}^{J}\ln p(t^{j}\mid x^{j})\), where J is the size of the training set, \(x^{j}\) is a training sample with target label \(t^{j}\), and \(p(t^{j}\mid x^{j})\) is its classification probability. The cost is minimized by stochastic gradient descent, applied epoch-wise with each epoch divided into mini-batches. The weight of layer l at iteration i + 1 is \(W_{l}^{i+1}=W_{l}^{i}+\Delta W_{l}^{i+1}\), where \(\Delta W_{l}^{i+1}\) is the corresponding weight update computed from the gradient of C. Training proceeds iteratively, alternating forward and backward passes.
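The SoftMax, cost, and weight-update equations above can be checked numerically. The NumPy sketch below trains only a linear FC3-like layer (64 inputs, 2 outputs) on synthetic features using full-batch gradient descent, so the update \(\Delta W = -\eta\,\partial C/\partial W\) without momentum, L2 penalty, or mini-batching is an assumption. It relies on the identity that the gradient of the mean cross-entropy with respect to the logits is \((P - T)/J\), where T holds one-hot targets.

```python
import numpy as np


def softmax(o):
    """P_k = exp(O_k) / sum_m exp(O_m), row-wise; subtracting the row max avoids overflow."""
    e = np.exp(o - o.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def cost(p, t):
    """C = -(1/J) * sum_j ln p(t^j | x^j) for integer labels t."""
    return -np.mean(np.log(p[np.arange(len(t)), t]))


def gd_step(W, b, x, t, lr=0.1):
    """One update W <- W + dW with dW = -lr * dC/dW for a linear 2-output layer."""
    p = softmax(x @ W + b)
    grad_o = (p - np.eye(W.shape[1])[t]) / len(t)  # dC/dO for softmax + cross-entropy
    return W - lr * x.T @ grad_o, b - lr * grad_o.sum(axis=0)


rng = np.random.default_rng(0)
x = rng.normal(size=(100, 64))  # stand-in for 64-dimensional FC2 activations
t = (x[:, 0] + x[:, 1] > 0).astype(int)  # synthetic binary labels
W, b = np.zeros((64, 2)), np.zeros(2)

c0 = cost(softmax(x @ W + b), t)  # equals ln 2 for all-zero weights
for _ in range(50):
    W, b = gd_step(W, b, x, t)
c1 = cost(softmax(x @ W + b), t)
print(round(c0, 4), c1 < c0)
```

With all-zero weights both classes get probability 0.5, so the starting cost is ln 2 ≈ 0.6931; the loop then lowers it, as expected for a small enough step on this convex loss.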

Radiomic and radiogenomic features

Radiogenomics is based on quantitative data collected from medical images of individuals with known genomic phenotypes, and the aim is to design a prediction framework that stratifies patients by clinical outcome. The genomic fractional variation in the tumor DNA can be captured by multiple radiomic features during radiogenomic analysis of mpMRI scans18,66,67. The BraTS-2021 dataset for the radiogenomic classification task is based on mpMRI scans and MGMT promoter methylation status. Many research groups are using this dataset to develop machine learning-based solutions that predict MGMT promoter methylation status from radiomic features of gray-level images. Numerous radiomic feature extraction methods have been introduced and exploited depending on the problem structure and the corresponding solution domain. In addition to the latent shape features ("Deep Learning-based Latent Shape Features"), we selected second-order statistics and textural features to build the HFS. We used three feature extraction modules (FEMs), which we consider the most effective for radiogenomic classification tasks, each corresponding to an individual feature extraction strategy: GLCM, HOG, and LBP. Each module independently extracts diverse features from each mpMRI scan.

GLCM features

Many researchers have successfully employed textural features to solve classification problems68,69, including the categorization of brain tumors40,70. We used a GLCM-based FEM, which relies on the spatial relationship of pixels in an image. These highly discriminative features describe texture through the repeatability of pixel groups with specific values that co-occur in a two-dimensional relationship in an image71,72. The GLCM is a square N × N matrix, where N is the number of distinct gray levels in the image. Let the matrix be denoted by M; each element M(i, j) represents the frequency of occurrence of a particular relationship between two pixel intensities in the input image, where i is the gray level of the pixel at location (x, y) and j is the gray level of a neighboring pixel at distance d from it in orientation θ. The relationship between the ith and jth intensities thus depends on two parameters, d and θ, evaluated in four directions (0°, 45°, 90°, 135°) in 45° increments without repeating symmetric directions. We selected 13 Haralick features, listed in Table 3 together with the formulae for their calculation and comprehensive definitions73. These features are computed from M, where \(p_{ij}\) is the (i, j)th entry of M normalized by the sum of all entries of M, and \(m_{i}\), \(m_{j}\) and \(\sigma_{i}\), \(\sigma_{j}\) are the means and standard deviations of the ith row and jth column of M. The direction-averaged feature values are fused to form the GLCM-based feature vector \(x_{GLCM}=[F_{1}\ F_{2}\ F_{3}\dots F_{13}]^{t}\).
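The normalization of M into \(p_{ij}\) and the direction averaging can be sketched with NumPy for a subset of the 13 Haralick features (angular second moment, contrast, correlation, and inverse difference moment). This is an illustrative sketch rather than the exact formulas of Table 3: the correlation uses the standard marginal means and standard deviations of \(p_{ij}\), and the function names are hypothetical.

```python
import numpy as np


def haralick_subset(glcm_counts):
    """Four Haralick features from one GLCM of raw pair counts."""
    p = np.asarray(glcm_counts, dtype=float)
    p = p / p.sum()  # p_ij: normalize so all entries sum to 1
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()  # marginal means
    sd_i = np.sqrt((((i - mu_i) ** 2) * p).sum())
    sd_j = np.sqrt((((j - mu_j) ** 2) * p).sum())
    return {
        "asm": (p ** 2).sum(),  # angular second moment (energy)
        "contrast": (((i - j) ** 2) * p).sum(),
        # undefined (division by zero) if the GLCM holds a single gray level
        "correlation": ((i - mu_i) * (j - mu_j) * p).sum() / (sd_i * sd_j),
        "idm": (p / (1.0 + (i - j) ** 2)).sum(),  # inverse difference moment
    }


def direction_averaged(glcms):
    """Average each feature over the GLCMs of the four directions (0, 45, 90, 135 degrees)."""
    feats = [haralick_subset(g) for g in glcms]
    return {name: float(np.mean([f[name] for f in feats])) for name in feats[0]}


f = haralick_subset(np.diag([1, 2, 3]))
print({k: round(v, 4) for k, v in f.items()})
```

A purely diagonal GLCM (every pixel pair shares the same gray level) gives zero contrast and unit correlation and homogeneity, a quick sanity check on the formulas.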

A schematic diagram of the GLCM calculation is shown in Fig. 6. In the GLCM illustration (Fig. 6b), the cyan cells in the top row and left column are the gray levels present in the input matrix. Figure 6b shows the GLCM computed from the input matrix in Fig. 6a at 0° and \(d=1\), highlighting the neighboring intensity pair (3,0): in Fig. 6a, a pixel with intensity 3 is immediately followed by a pixel with intensity 0 four times, so the corresponding GLCM entry, marked with a yellow circle in Fig. 6b, is 4. The entire GLCM for a single orientation is calculated in the same way from all adjacent pixel-intensity pairs.
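The GLCM construction and feature computation described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the direction-to-offset mapping, the helper names (`glcm`, `haralick_subset`, `glcm_features`), the base-2 logarithm in the entropy, and the toy image are our choices, and only five of the 13 Haralick features in Table 3 are shown.

```python
import numpy as np

# Assumed (row, col) step per direction; rows grow downwards, so 90 degrees points up.
DIRECTIONS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def glcm(img, d, theta, levels):
    """Non-symmetric co-occurrence counts M(i, j) for pixel pairs at distance d, angle theta."""
    dr, dc = DIRECTIONS[theta][0] * d, DIRECTIONS[theta][1] * d
    rows, cols = img.shape
    M = np.zeros((levels, levels), dtype=np.int64)
    # Visit only pixels whose neighbour at (r + dr, c + dc) lies inside the image.
    for r in range(max(0, -dr), min(rows, rows - dr)):
        for c in range(max(0, -dc), min(cols, cols - dc)):
            M[img[r, c], img[r + dr, c + dc]] += 1
    return M

def haralick_subset(M):
    """Five of the 13 Haralick features, computed from the normalized GLCM p_ij."""
    p = M / M.sum()
    i, j = np.indices(p.shape)
    mi, mj = (i * p).sum(), (j * p).sum()            # marginal means
    si = np.sqrt(((i - mi) ** 2 * p).sum())          # marginal standard deviations
    sj = np.sqrt(((j - mj) ** 2 * p).sum())
    nz = p[p > 0]
    return {
        "contrast": float(((i - j) ** 2 * p).sum()),
        "energy": float((p ** 2).sum()),             # angular second moment
        "homogeneity": float((p / (1.0 + (i - j) ** 2)).sum()),  # inverse difference moment
        "correlation": float(((i - mi) * (j - mj) * p).sum() / (si * sj)),
        "entropy": float(-(nz * np.log2(nz)).sum()),
    }

def glcm_features(img, d, levels):
    """Features averaged over the four directions for one distance d."""
    per_dir = [haralick_subset(glcm(img, d, t, levels)) for t in DIRECTIONS]
    return {k: float(np.mean([f[k] for f in per_dir])) for k in per_dir[0]}
```

For the toy image `img = np.array([[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]])`, `glcm(img, 1, 0, 4)[0, 1]` is 2, because gray level 0 is immediately followed by gray level 1 twice, which is the same kind of count as the four (3,0) pairs in Fig. 6.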

HOG features

HOG features have performed well in classification tasks because of the highly discriminative characteristics produced by their extraction procedure74,75. The HOG module extracts gradient magnitude and direction information from the input image, which describes the shape of the structures it contains. The HOG feature extraction process is illustrated in Fig. 7a–e. Figure 7a shows an original mpMRI scan (BraTS-2021 dataset), and Fig. 7b shows a schematic of cells and a block superimposed on the original input image. Figure 7c shows the HOG descriptors with a schematic of a block and a cell, both depicted separately in Fig. 7d, and Fig. 7e shows a magnified view of a single cell. The feature extraction strategy consists of three steps:

i. The gradient magnitude and direction are computed at each pixel. The Sobel kernel is used to obtain the gradients \({E}_{x}\) and \({E}_{y}\) in the x and y directions, from which the magnitude and angle at every pixel are \({M}_{HOG\left|g(i,j)\right|}=\sqrt{{E}_{x}{(i,j)}^{2}+{E}_{y}{(i,j)}^{2}}\) and \({M}_{{HOG}_{{\theta }_{g}(i,j)}}={\mathrm{tan}}^{-1}\left(\frac{{E}_{y}(i,j)}{{E}_{x}(i,j)}\right)\), where \(\left|g(.)\right|\) denotes the magnitude and \({\theta }_{g}(.)\) the direction of the gradient, and i and j index rows and columns, respectively.

ii. The input image is divided into small cells of size (r × s), as shown schematically in Fig. 7b,d.

iii. Finally, the cells are combined into overlapping blocks, each of size (p × q). In each block, a histogram of the oriented gradients falling into each bin is computed and then L2-normalized to reduce the effect of illumination variation. The normalized block vector is \({M}_{HOG{T}^{N}}=\frac{T}{\sqrt{{\Vert T\Vert }_{2}^{2}+{\epsilon }^{2}}}\), where \(T\) is the non-normalized block histogram vector and \(\epsilon\) is a small constant that prevents division by zero.

The features collected from all the normalized blocks are fused to form the feature descriptor \({x}_{HOG}\) for the entire image.
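Steps i to iii can be condensed into a short NumPy sketch. It is a simplified illustration, not the descriptor used in this work: it assumes hard assignment of unsigned orientations (0° to 180°) to bins without interpolation, blocks that overlap with a stride of one cell, and the per-block L2 normalization of step iii, and it uses `np.arctan2` in place of \({\mathrm{tan}}^{-1}({E}_{y}/{E}_{x})\) to avoid dividing by zero. The function names and default values are our assumptions.

```python
import numpy as np

def sobel_gradients(img):
    """Sobel responses E_x and E_y, with edge replication at the borders."""
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    ky = kx.T
    H, W = img.shape
    padded = np.pad(img.astype(float), 1, mode="edge")
    ex, ey = np.zeros((H, W)), np.zeros((H, W))
    for di in range(3):
        for dj in range(3):
            window = padded[di:di + H, dj:dj + W]
            ex += kx[di, dj] * window
            ey += ky[di, dj] * window
    return ex, ey

def hog_descriptor(img, cell=8, block=2, bins=9, eps=1e-6):
    # Step i: gradient magnitude |g| and unsigned direction in degrees.
    ex, ey = sobel_gradients(img)
    mag = np.hypot(ex, ey)
    ang = np.rad2deg(np.arctan2(ey, ex)) % 180.0
    idx = np.minimum((ang // (180.0 / bins)).astype(int), bins - 1)
    # Step ii: magnitude-weighted orientation histogram for every cell.
    ncy, ncx = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((ncy, ncx, bins))
    for cy in range(ncy):
        for cx in range(ncx):
            rows = slice(cy * cell, (cy + 1) * cell)
            cols = slice(cx * cell, (cx + 1) * cell)
            hist[cy, cx] = np.bincount(idx[rows, cols].ravel(),
                                       weights=mag[rows, cols].ravel(),
                                       minlength=bins)
    # Step iii: overlapping blocks of block x block cells, each L2-normalized.
    feats = []
    for by in range(ncy - block + 1):
        for bx in range(ncx - block + 1):
            T = hist[by:by + block, bx:bx + block].ravel()
            feats.append(T / np.sqrt(np.sum(T ** 2) + eps ** 2))
    return np.concatenate(feats)
```

With 32 × 32 cells, 2 × 2-cell blocks, and 9 bins, a hypothetical 64 × 64 input has a single block position and therefore yields 2 × 2 × 9 = 36 values; larger inputs produce proportionally more block positions.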

LBP features

The local binary pattern (LBP) descriptor is another efficient feature extraction operator; it assigns a label to each pixel of an image by thresholding its neighborhood and interpreting the result as a binary number76. LBP offers gray-level invariance and, in its rotation-invariant form, rotation invariance77. The module describes the local spatial structure of an image by encoding the relationship between each pixel and its neighbors on a circle. The binary output of the LBP operator is obtained per pixel from the differences \({G}_{k}-{G}_{c}\) between the central pixel \({G}_{c}\) and its surrounding pixels \({G}_{k}\), k = 1, 2, …, p, where p = 8 for a 3 × 3 receptive area30. The working principle of the LBP operator on a pixel is illustrated in Fig. 8. Each difference is binarized with a step function, which assigns every neighbor to one of two groups, and the LBP value of the central pixel is given by:

\({LBP}_{p,r}({G}_{c})=\sum_{k=1}^{p}H\left({G}_{k}-{G}_{c}\right){2}^{k-1}, \quad H(x)=\left\{\begin{array}{ll}1, & x\ge 0\\ 0, & x<0\end{array}\right.\)

The threshold function H(x) returns 1 for arguments greater than or equal to zero and 0 otherwise. In the above equation, r is the radius, i.e., the distance between the central pixel and its neighboring pixels, and p is the number of neighboring pixels, excluding the central pixel78,79. In Fig. 8, r = 1 and p = 8 are used with a 3 × 3 receptive area. In the last stage, the binary codes of zeros and ones are converted to decimal numbers to form an LBP image80.
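The per-pixel LBP operator described above can be sketched with NumPy for r = 1 and p = 8. The neighbor ordering (starting at the top-left neighbor and proceeding clockwise) is an assumption: any fixed ordering gives a valid LBP code, but the decimal values depend on it. Border pixels are skipped rather than padded, and the function name is hypothetical.

```python
import numpy as np

# Assumed circular order of the eight neighbours for r = 1 (clockwise from top-left).
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_image(img):
    """LBP code of every interior pixel: sum of H(G_k - G_c) * 2**k over the neighbours."""
    img = img.astype(np.int64)
    H, W = img.shape
    centre = img[1:-1, 1:-1]                              # G_c for all interior pixels
    codes = np.zeros_like(centre)
    for k, (dr, dc) in enumerate(OFFSETS):
        neighbour = img[1 + dr:H - 1 + dr, 1 + dc:W - 1 + dc]   # G_k aligned with G_c
        codes += (neighbour - centre >= 0).astype(np.int64) << k
    return codes
```

A 256-bin histogram of the resulting LBP image, for example `np.bincount(lbp_image(img).ravel(), minlength=256)`, can then serve as a texture feature vector.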

Jackknife cross-validation

Jackknife cross-validation with ten folds was used in this work for parametric optimization, including the formulation of the training and test data. It is a commonly used approach for verifying the robustness and reliability of system performance (Fig. 1) and for model selection among candidate algorithms81. In this technique, the data are divided into 10 folds, or partitions (\({F}_{i};i=1,2,\dots ,10\)). In each round, a single fold is held out for testing, and the remaining (K − 1) folds are partitioned into training and validation sets82. The diagonal folds, shown as crossed rectangles (\({F}_{i}{A}_{i};i=1,2,\dots ,10\)), represent the test partitions, and the off-diagonal folds, shown as plain rectangles (\({F}_{i}{A}_{j};i,j=1,2,\dots ,10;\ i\ne j\)), represent the training partitions, which are divided into training and validation sets. The model with the best parameters is then evaluated on the test data. Because the class distribution of the dataset is imbalanced, we used stratified cross-validation to obtain a close approximation of the generalization accuracy (\({A}_{i};i=1,2,\dots ,10\)); stratification preserves the class proportions of the whole dataset in every training and test partition83. The performance (Ac) estimated by cross-validation from the test-fold accuracies \({A}_{i}\in \Re\), i = 1, 2, …, K, is given by:

The average performance \(\overline{A}\) is given by:

\(\overline{A}=\frac{1}{K}\sum_{i=1}^{K}{A}_{i}\)
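The stratified fold bookkeeping described above can be sketched as follows. This is an illustrative sketch, not the authors' code: the helper names `stratified_folds` and `cross_validate`, the round-robin assignment of each class to folds, and the `fit_predict` callback are our assumptions, and the inner training/validation split used for parameter selection is omitted for brevity. The function returns the fold accuracies \({A}_{i}\) and their mean \(\overline{A}\).

```python
import numpy as np

def stratified_folds(y, k=10, seed=0):
    """Assign every sample a fold id so that each fold keeps the overall class ratio."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    fold = np.empty(len(y), dtype=int)
    for cls in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == cls))
        fold[idx] = np.arange(len(idx)) % k          # deal the class round-robin over folds
    return fold

def cross_validate(X, y, fit_predict, k=10, seed=0):
    """Hold out each fold F_i once; return (A_1, ..., A_K) and their mean."""
    y = np.asarray(y)
    fold = stratified_folds(y, k, seed)
    accs = []
    for i in range(k):
        test = fold == i
        y_hat = fit_predict(X[~test], y[~test], X[test])   # train on K - 1 folds
        accs.append(np.mean(y_hat == y[test]))             # A_i on the held-out fold
    return np.array(accs), float(np.mean(accs))
```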

Classification model

The individual features, their combinatorial hybrids, and the HFS were classified with SVM and k-NN, the two ML algorithms selected to find a reliable classification solution. The SVM was evaluated with linear, RBF, and polynomial kernels. A brief overview of the selected classifiers is given below.

k-Nearest neighbors (k-NN) classification

The k-NN classifier is proximity based: a query (test) instance q is assigned to the class that is most represented among its nearest neighbors. The distance d is measured from q to the training instances \({x}_{t}\) with class labels \({y}_{t}\), and q is assigned the class \({y}_{u}\) that has the most members among its k nearest neighbors84,85. For \(k=1\), the test instance is simply assigned the class of its single nearest neighbor. Over F features, the Minkowski distance \({d}_{mk}\), a generalized distance metric, is given by:

\({d}_{mk}\left(q,{x}_{t}\right)={\left(\sum_{f=1}^{F}{\left|{q}_{f}-{x}_{t,f}\right|}^{r}\right)}^{1/r}\)

where larger values of r give more influence to the feature on which q and \({x}_{t}\) differ the most. Commonly used distance metrics include the Euclidean (the L2 norm, i.e., the Minkowski distance with r = 2), Mahalanobis, and Chi-square distances, as given in Table 4. Another widely used variant is distance-weighted voting, in which q receives a vote V from each of its nearest neighbors, weighted inversely to that neighbor's distance from q85:

where \(1\left({y}^{u},{y}^{t}\right)\) is unity when both labels match, zero otherwise, and z determines the type of distance measure being adopted during classification.
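A minimal NumPy sketch of k-NN with a Minkowski distance and optional inverse-distance voting is shown below. It assumes a vote weight of 1/d, which is one common choice; the exact weighting exponent used in this work is not reproduced here, and the function names are hypothetical.

```python
import numpy as np

def minkowski(q, X, r=2):
    """Minkowski distance of order r between q and every row of X (r = 2: Euclidean)."""
    return np.sum(np.abs(X - q) ** r, axis=1) ** (1.0 / r)

def knn_predict(q, X, y, k=1, r=2, weighted=False, eps=1e-12):
    """Majority (or inverse-distance-weighted) vote among the k nearest neighbours."""
    d = minkowski(q, X, r)
    nearest = np.argsort(d, kind="stable")[:k]
    votes = {}
    for t in nearest:
        w = 1.0 / (d[t] + eps) if weighted else 1.0   # assumed weight: 1 / distance
        votes[y[t]] = votes.get(y[t], 0.0) + w
    return max(votes, key=votes.get)
```

With k = 1 both variants reduce to the nearest-neighbor rule; weighting matters only when k > 1, where it lets a few very close neighbors outvote a larger number of distant ones.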

Support vector machine classification

The SVM is a supervised learning method that, given training pairs (\({x}_{t}\), \({y}_{t}\)), where \({x}_{t}\) is a sample from the data set X in the sample space S and \({y}_{t}\in \{-1,+1\}\) is its label, separates the classes by a hyperplane with the largest possible margin on either side86. The training points closest to the hyperplane, i.e., the least confidently classified ones, are the support vectors. Keeping the margin as large as possible improves the generalization of the model. With a soft margin, an outlier (noise point) has only a limited influence on the decision boundary during training87, whereas the SoftMax layer of a CNN is influenced by such a point because of its probability-based working principle. For this reason, the SVM is favored as a strong classifier with a reduced error rate. For linearly separable classes, the hyperplane function for the ith class is given by:

\({g}_{i}\left(\underline{x}\right)={\mathbf{w}}_{i}^{T}\underline{x}+b\)

where \(\underline{x}\) is the input training vector, \({\mathbf{w}}_{i}\) is the weight vector orthogonal to the hyperplane for the ith class, and \(b\) is the bias of the decision plane. The distance between the hyperplane and the closest points on either side defines the margin, which is maximized when the norm \(\Vert \mathbf{w}\Vert\) is minimized. To find the optimal separating hyperplane, the SVM maximizes the margin:

\(\underset{\mathbf{w},b}{\max }\frac{2}{\Vert \mathbf{w}\Vert }\iff \underset{\mathbf{w},b}{\min }\frac{1}{2}{\Vert \mathbf{w}\Vert }^{2}\quad \text{subject to}\quad {y}_{t}\left({\mathbf{w}}^{T}{\underline{x}}_{t}+b\right)\ge 1\ \forall t\)
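The margin-maximization idea described above can be illustrated with a soft-margin linear SVM trained by full-batch subgradient descent, minimizing \(\frac{\lambda }{2}{\Vert \mathbf{w}\Vert }^{2}+\frac{1}{n}\sum_{t}\max \left(0,1-{y}_{t}\left({\mathbf{w}}^{T}{x}_{t}+b\right)\right)\). This is a teaching sketch with hypothetical names and hyperparameters; it covers only the linear kernel, whereas the RBF and polynomial variants used in this work require a kernelized solver.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=300):
    """Soft-margin linear SVM via subgradient descent; y must be in {-1, +1}."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        viol = margins < 1                         # points on or inside the margin
        grad_w = lam * w - (y[viol, None] * X[viol]).sum(axis=0) / n
        grad_b = -y[viol].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def decision(X, w, b):
    """g(x) = w^T x + b; its sign gives the predicted class."""
    return X @ w + b

def margin_width(w):
    """Geometric width 2 / ||w|| of the margin."""
    return 2.0 / np.linalg.norm(w)
```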

Performance measures

The model is evaluated with the confusion matrix, accuracy, sensitivity, specificity, precision, negative predictive value, receiver operating characteristic (ROC) curves, F1-score, and Matthews correlation coefficient. "TP" is the number of images predicted to have a specific promoter methylation status that truly have that status. "FN", also known as Type II error, is the number of images predicted not to have a specific status that truly have it, and "FP", also known as Type I error, is the number of images predicted to have a specific status that truly do not have it. "TN" is the number of images that neither have a specific promoter methylation status nor are labeled as having it by the classifier.

Accuracy, A, defines the effectiveness of a classification model as a quantitative measure. It can be calculated by using the following relationship: \(A=\left(TP+TN\right)/\left(TP+FP+FN+TN\right) \times 100\).

Sensitivity, Sn, measures the ability of a model to identify instances of the MGMT+ class. It is computed as \({S}_{n}=TP/\left(TP+FN\right)\). This measure is also known as recall (Rc) or true positive rate (TPR).

Specificity, Sp, measures the ability of a classifier to identify instances of the MGMT− class. It is computed as \({S}_{p}=TN/\left(TN+FP\right)\). Specificity is also known as the true negative rate (TNR).

Precision, Pr, is the proportion of cases predicted positive that truly belong to the MGMT+ class. It is calculated as \({P}_{r}=TP/\left(TP+FP\right)\). Precision is also known as the positive predictive value (PPV).

Negative predictive value, NPV, is the proportion of cases predicted negative that truly belong to the MGMT− class. It is calculated as \(NPV=TN/\left(TN+FN\right)\).

The F1-score, which lies in the range [0, 1], is the harmonic mean of Pr and Rc: \({F}_{1}\text{-score}=\left(2\times {P}_{r}\times {R}_{c}\right)/\left({P}_{r}+{R}_{c}\right)\). It is particularly informative when the dataset is class-imbalanced.

The Matthews correlation coefficient (MCC) is an important performance measure for binary classification problems. It ranges over [− 1, + 1], where + 1 indicates perfect agreement between predictions and labels, − 1 indicates total disagreement, and 0 indicates performance no better than random prediction. Mathematically, MCC is calculated as \(MCC=\left(TP\times TN-FP\times FN\right)/\sqrt{\left(TP+FN\right)\left(TN+FN\right)\left(TP+FP\right)\left(TN+FP\right)}\).
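The measures defined above can be computed directly from the four confusion-matrix counts. The Python sketch below is illustrative only (the function name and dictionary keys are ours) and assumes no denominator is zero.

```python
import math

def metrics(tp, fn, fp, tn):
    """Performance measures from confusion-matrix counts (MGMT+ is the positive class)."""
    sn = tp / (tp + fn)                     # sensitivity, recall, TPR
    sp = tn / (tn + fp)                     # specificity, TNR
    pr = tp / (tp + fp)                     # precision, PPV
    npv = tn / (tn + fn)                    # negative predictive value
    acc = 100.0 * (tp + tn) / (tp + fn + fp + tn)   # accuracy in percent
    f1 = 2 * pr * sn / (pr + sn)
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fn) * (tn + fn) * (tp + fp) * (tn + fp))
    return {"A": acc, "Sn": sn, "Sp": sp, "Pr": pr, "NPV": npv, "F1": f1, "MCC": mcc}
```

For example, `metrics(tp=89, fn=1, fp=9, tn=1)` gives an accuracy of 90% but an MCC of 80/420 ≈ 0.19, which illustrates why MCC and the F1-score are reported alongside accuracy for imbalanced data.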

The receiver operating characteristic (ROC) curve describes the classifier's overall performance across its entire operating range by plotting TPR against FPR over all decision thresholds. TPR is Sn, whereas FPR is the number of MGMT− instances predicted as MGMT+ divided by the total number of MGMT− instances88. The mathematical formulation of FPR is \(FPR=FP/\left(TN+FP\right)\).
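The ROC curve and its AUC can be obtained by sweeping the decision threshold over the classifier scores, as in the NumPy sketch below. The function names are hypothetical; tied scores are grouped so that they move the curve together, and the area is computed with the trapezoidal rule.

```python
import numpy as np

def roc_points(scores, labels):
    """(FPR, TPR) pairs obtained by thresholding at every distinct score."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)            # 1 = MGMT+, 0 = MGMT-
    order = np.argsort(-scores, kind="mergesort")      # highest score first
    s, y = scores[order], labels[order]
    last = np.r_[np.flatnonzero(np.diff(s)), len(s) - 1]   # last index of each score group
    tp = np.cumsum(y)[last]
    fp = np.cumsum(1 - y)[last]
    tpr = np.r_[0.0, tp / tp[-1]]
    fpr = np.r_[0.0, fp / fp[-1]]
    return fpr, tpr

def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))
```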

Ethical approval

All the procedures were performed in accordance with the relevant guidelines and regulations.

Results and discussion

We evaluated the proposed framework on categorizing mpMRI scans into MGMT+ and MGMT− instances. After preprocessing, the GB images are used for latent and radiomic feature extraction, feature fusion is investigated, and the resulting HFS is passed to a strong classification algorithm. All experiments were conducted with open-source libraries and standard programming tools on a Dell G7 laptop with an Intel® Core™ i7 8th Generation CPU, 32 GB RAM, and an NVIDIA GTX 1060 GPU with 6 GB of memory and 1280 CUDA cores.

Experimental setup

The setup began with selecting appropriate parameter values for extracting the latent and radiomic features. The experiments use tenfold Jackknife cross-validation on the BraTS-2021 dataset. The selection of optimal parameters is discussed in "Selection of optimal parameters". Contrast normalization to the range [0, 1] was carried out before the classification stage. The classification results ("Dataset") were generated on unseen test samples of the test folds, whose hidden labels were used only for performance measurement. Sections "Performance analysis of DL-based latent feature extraction" and "Analysis of parameters for Deep learning and radiomic feature methods" examine the latent features and the feature extraction parameters, and sections "Performance analysis of individual FEMs" and "Performance analysis of HFS" analyze the classification performance of the individual and hybrid features, respectively. Section "Computational time analysis of the framework" reports the time taken by the two feature extraction stages and by classification during the training and testing phases. Finally, section "Performance comparison" compares the proposed technique with existing schemes in terms of classification performance, followed by its shortcomings and recommendations for future work by groups working in related areas.

Selection of optimal parameters

The classification performance of our framework, the MGMT-PMP system, depends on the tuning of numerous parameters. The following subsections present the analysis of the optimal values for the DLRFE module, latent feature extraction, the radiomic feature modules, and the machine learning models. Only k-NN and SVM classifiers were used, and the parameters were selected on the basis of classification accuracy. These optimal values are used in the subsequent sections.

k-NN model

The proposed model was tested with four variants of the k-NN classifier (\(k\in \{1,3,5,7\}\)) for categorizing the HFS into MGMT+ and MGMT− classes. Without RA, the k-NN classifier performed best at \(k=1\) on the BraTS-2021 dataset, as illustrated in Table 5. The performance of the k-NN model with RA is shown in Table 6.

Figure 9 illustrates the confusion matrices for varying numbers of epochs for radiogenomic classification of the BraTS-2021 dataset with the k-NN classifier and RA. After RA is applied to the original dataset, the correlation between features of the same class reduces the number of false events for the test instances. Comparing the classification results in Tables 5 and 6 shows that RA improves the discriminative features (Fig. 9), thereby suppressing characteristics based on similar or overlapping features. The confusion matrices in Fig. 9 show good results at both high and low epoch counts, where the deep learning module is used only for dynamic feature extraction and k-NN is used for training and testing on these features.

The improvement in classification performance due to RA is marked, as shown in Fig. 10, which visualizes the quantitative analysis in Tables 5 and 6. Figure 10a shows how accuracy varies with the number of training epochs of the DLRFE module, with and without RA. Accuracy is initially transitory, then converges and remains steady until training ends at 50 epochs. Figure 10b shows the ML-based classification accuracy of a k-NN model on latent features with and without RA; the x-axis gives the number of epochs for which the network was trained before the DL features were extracted from the last fully connected layers ("Deep learning-based latent shape features"). Classification accuracy is insensitive to the number of epochs, so fewer epochs are sufficient to obtain discriminative features for the proposed framework. The F1-score is a reliable measure for imbalanced datasets such as BraTS-2021. Figure 10c shows the F1-score trends for the original and RA-based datasets as the number of training epochs for the representation-learning-based discriminative features varies. The RA-modified dataset performed best with latent features extracted at the fifth epoch. Features from low-epoch models correspond to an underfitted, high-bias network, yet they generalized well in the downstream machine learning classifier, in line with Occam's razor, which favors the simplest adequate solution. A higher number of epochs leads the network to fit even the noise distribution, causing overfitting and a loss of generalization, so that the resulting high-variance model performs worse on unseen instances. Figure 10d shows a similar trend for MCC, again indicating the performance improvement due to RA.
Figure 10e shows the AUC (ROC), with a marked improvement with RA. Figure 10f shows the Pr trend, indicating the performance rise due to RA, and Fig. 10g shows the corresponding Rc trends with and without RA. Table 5 indicates that, without RA, the prediction threshold between the positive and negative sides appears to be shifted towards the negative side, which increases Sn by reducing FNs, as illustrated in Table 7, which gives the cross-validated (tenfold) confusion matrix components for latent features extracted after different numbers of epochs. For the same reason, Sp decreases because FPs increase. Pr and Rc follow the same pattern as Sp and Sn, respectively. With RA, Rc decreases slightly because FNs increase slightly, but Sp rises from (70.48 ± 0.00)% to (97.08 ± 0.10)% (Tables 5 and 6, respectively, at epochs = 5 with k-NN for k = 1). The overall effect of RA is a balanced increase in Sp and Sn, to (97.08 ± 0.10)% and (95.86 ± 0.14)%, respectively, as illustrated in Table 6 (k-NN with k = 1, epochs = 5). In other words, high Sn (fewer FNs) means that positive cases are rarely missed, and high Sp (fewer FPs) means that negative cases are rarely misclassified as positive. Figure 10h shows the PPV and NPV trends without RA, which follow from the threshold shift described above. Figure 10i compares NPV with and without RA. Without RA, the predictive threshold is shifted to the negative side so that FNs are reduced. With RA, the predictive threshold between positive and negative subjects is balanced so that the false events are divided almost equally across it, as illustrated in Table 7, resulting in balanced PPV and NPV values. At relatively higher epoch values for the deep learning features, the NPV with RA is higher than the NPV without RA because of a relatively lower number of FNs (Table 7).

Performance analysis of RA for the BraTS-2021 dataset based on: (a) accuracy variation of the DL model with softmax as the classifier, (b) accuracy variation of the k-NN (ML) model, with the x-axis showing the number of epochs used to retrieve \({x}_{latent}\), (c) F1-score plot, (d) MCC plot, (e) AUC (ROC) variation with epochs, (f) precision variation with epochs, (g) recall variation with epochs, (h) PPV and NPV plots for varying epochs without RA, (i) NPV plots with and without RA.

SVM model

Three variants of the SVM classifier, with linear, polynomial (order 2, 3, and 4), and radial basis function (RBF) kernels, were used to classify the HFS into MGMT+ and MGMT− classes using latent features. Higher-order polynomials (order > 4) did not produce significant results. Table 8 shows the SVM results for binary classification of BraTS-2021 with RA using latent features extracted at the fifth epoch. The RBF-kernel SVM performed best among the variants for the proposed model.

In another study, the number of cross-validation folds was varied from 10 to 50 in steps of 10 for both the k-NN and SVM classifiers, and the accuracy and F1-score were recorded. The performance differences between fold counts were negligible, whereas the classification time increased with the number of folds. Ten folds were therefore found optimal and used throughout the article.

Performance analysis of DL-based latent feature extraction

The DLRFE module extracts dynamic features from the fully connected layers for the mpMRI scans. Convolution is a local operation: when the kernel is small, a convolutional layer can be viewed as a fully connected layer whose connections are restricted to a local neighborhood and whose weights are shared, and pooling is likewise local. Fully connected layers, in contrast, are global: each neuron is connected to every neuron in the next layer, so information from every input reaches each output class, and the final decision is based on the whole image89. The activations of the first two fully connected layers, FC1 and FC2, are extracted from the tuned architecture in a forward pass for the training and test partitions and fused to form the latent features \({x}_{latent}\). The weights of these two fully connected layers determine the decision for either class. Table 9 shows how the number of unknowns varies with the number of layers of the deep learning architecture, and Table 10 gives the parameter set finalized after empirical tuning.
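The statement that a small-kernel convolution is a restricted fully connected layer can be checked numerically. The NumPy sketch below builds the weight matrix of a dense layer that reproduces a one-dimensional, stride-1, unpadded convolution: every row holds the same kernel (weight sharing) and is zero outside a local window (locality). The `latent_features` helper assumes that fusing the FC1 and FC2 activations means concatenating them per image; the text states only that they are fused, and all names here are hypothetical.

```python
import numpy as np

def conv1d_valid(x, k):
    """Cross-correlation as used in CNN layers (no padding, stride 1)."""
    m = len(k)
    return np.array([np.dot(x[i:i + m], k) for i in range(len(x) - m + 1)])

def conv_as_dense_matrix(n, k):
    """Weight matrix of a fully connected layer that reproduces conv1d_valid."""
    m = len(k)
    W = np.zeros((n - m + 1, n))
    for i in range(n - m + 1):
        W[i, i:i + m] = k          # same kernel in every row, zeros elsewhere
    return W

def latent_features(fc1_act, fc2_act):
    """Assumed fusion: per-image concatenation of FC1 and FC2 activations."""
    return np.concatenate([fc1_act, fc2_act], axis=1)
```

For an 8-sample input and a 3-tap kernel, `conv_as_dense_matrix(8, k) @ x` equals `conv1d_valid(x, k)`; a general fully connected layer would instead allow all 6 × 8 weights to differ.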

Analysis of parameters for deep learning and radiomic feature methods

The deep learning (latent) and radiomic feature extraction, which constitute stages 1 and 2 of the proposed methodology, involve several parameters whose optimal values must be selected before the HFS is formed. We select these values experimentally for each feature extraction category in turn.

Feature space visualization of deep learning-based FC1 and FC2

The t-SNE (t-distributed Stochastic Neighbor Embedding, a variant of Stochastic Neighbor Embedding, SNE) plots of the radiogenomic classification of test cases using the deep learning-based features \({x}_{FC1}\), \({x}_{FC2}\), and \({x}_{latent}\) are shown in Fig. 11a–c for the original dataset and in Fig. 11d–f for the reduced dataset with RA, using the 1-NN classifier (\(k=1\); "Performance analysis of DL-based latent feature extraction"). Each high-dimensional feature vector is mapped non-linearly to a two-dimensional plane. t-SNE improves on SNE mainly by alleviating the tendency of points to crowd in the central region of the map. Although FC1 and FC2 without RA show marked false events due to overlapping regions (Fig. 11a–c), the fused DL features improve the results. Similarly, with RA (Fig. 11d–f), the features clearly discriminate between the vectors of the two classes. Our proposed system exploits these features further to find the complex hyperplane that discriminates between the MGMT+ and MGMT− classes.

The scatter plot for latent features mapped to ℜ2 using the t-SNE technique illustrating Stage 1 for classification of mpMRI scans into MGMT+ (“1”) and MGMT− (“0”) classes for the original dataset: (a) FC1 features \({x}_{FC1}\), (b) FC2 features \({x}_{FC2}\), and (c) latent features \({x}_{latent}\). The results depicting visual classification with the rejection algorithm are: (d) \({x}_{FC1}^{^{\prime}}\) (e) \({x}_{FC2}^{^{\prime}}\), and (f) \({x}_{latent}^{^{\prime}}\).
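The crowding argument rests on the low-dimensional similarity kernel. The NumPy sketch below is our illustration, not the authors' pipeline: it computes jointly normalized map similarities with the Student-t kernel used by t-SNE and, for comparison, a Gaussian kernel as in symmetric SNE. The heavier tail of the t kernel keeps much more similarity between moderately distant map points, so dissimilar points can be placed far apart without crowding the center. The function name and the three-point example are hypothetical.

```python
import numpy as np

def low_dim_affinities(Y, kernel="student"):
    """Jointly normalized pairwise similarities q_ij of a 2-D embedding Y."""
    d2 = np.sum((Y[:, None, :] - Y[None, :, :]) ** 2, axis=-1)   # squared distances
    if kernel == "student":
        w = 1.0 / (1.0 + d2)       # t-SNE: Student-t with one degree of freedom
    else:
        w = np.exp(-d2)            # symmetric SNE: Gaussian kernel
    np.fill_diagonal(w, 0.0)       # a point is not its own neighbour
    return w / w.sum()
```

For map points at distances 0.5 and 3 from a reference point, the Student-t kernel gives the farther pair 1/8 of the nearer pair's similarity, whereas the Gaussian kernel gives it less than 0.1% of it.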

GLCM feature extraction process

We experimented with five distance values in the range [1, 5], each combined with four directions θ; for each value of d, the GLCM features were averaged over the four directions. Treating the directions independently, without averaging, yields four GLCMs per distance, i.e., a total of 20 GLCMs. Several combinations of GLCMs, and hybrids of their features based on cross-averaging GLCMs over different combinations of θ, were also investigated for classifying mpMRI scans into MGMT+ and MGMT− classes. The performance of the GLCM features varies with d. Figure 12a shows the array of offsets used to try different combinations of d and θ, and Fig. 12b shows the relationship between d and accuracy. The classification accuracy first rises with d and then falls off, with a local rise along the way. The best results for our problem were obtained at \(d=3\) with direction averaging over four angles in 45° increments, without the repeated symmetric view; this relative distance to the neighboring pixels was therefore used in the feature extraction process.
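The 20 offsets for d ∈ [1, 5] and the four directions can be generated programmatically. The sketch below assumes the (row, column) convention in which rows grow downwards, so θ = 90° points up (the convention used, for example, by MATLAB's graycomatrix); the function name is hypothetical, and the ordering of the authors' offset array may differ.

```python
# Unit (row, col) step for each direction, rows growing downwards.
UNIT_STEP = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def glcm_offsets(distances=range(1, 6), angles=(0, 45, 90, 135)):
    """All (row, col) offsets for the given distances and directions, grouped by d."""
    return [(d * UNIT_STEP[a][0], d * UNIT_STEP[a][1])
            for d in distances for a in angles]
```

`glcm_offsets()` returns 20 offsets; the four entries for the selected distance d = 3 are (0, 3), (−3, 3), (−3, 0), and (−3, −3), whose features are averaged to give \({x}_{GLCM}\).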

HOG feature extraction process

The HOG descriptor parameters were tuned for our radiogenomic classification problem. We varied the number of bins, the block size, and the cell size individually and recorded the classification accuracy, as illustrated in Fig. 13. Figure 13a shows the effect of the number of bins: classification accuracy first rises and then falls off, with its peak in the early, rising part of the curve. This reflects a compromise between the number of features and generalization: more bins produce more features, which degraded performance because of the curse of dimensionality. Figure 13b shows the effect of the block sizes permitted by the input image size; larger block sizes performed best, with 9 bins and a block size of 32 × 32. Figure 13c shows classification accuracy as a function of cell size: increasing the cell size, up to just below its maximum possible value, improved classification performance.

A large HOG cell size captures large-scale spatial characteristics but loses small-scale features. There is therefore a compromise between cell size and the level of detail required by the inherent structure of the problem. Similarly, local illumination changes are better captured with a smaller HOG block size, because important information is lost when pixel intensities are averaged over a large block with many pixels; significant changes in local pixels can therefore be explored by reducing the block size. The parameters governing the best individual performance were determined with the k-NN classifier, and the optimum values among the trial options are given in Table 11. The optimum results for our problem were obtained with a cell size of 32 × 32, a block size of 2 × 2, and 9 bins, giving a 36-dimensional \({x}_{HOG}\).

LBP features

We investigated the LBP parameters that significantly affect the module's performance, namely the number of neighbors p, the radius r, and the receptive area, to understand how they relate to model performance in radiogenomic classification. Figure 14 shows the effect of the individual LBP parameters. Figure 14a shows classification accuracy as a function of p; increasing p encodes more detail about the surroundings of each pixel. The optimum classification accuracy in this trial is 70.44%, obtained with the minimum number of features (59) and \(r=1\). Figure 14b shows how the radius r, which defines the circle on which the neighbors are selected, influences classification accuracy: with the optimum value of p and \(r=5\), the accuracy is 92.11%. The optimal parameters for the LBP feature extraction module are given in Table 12. The LBP parametric analysis was carried out using the 1-NN classifier with RA, which significantly influenced the performance of the framework.
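The figure of 59 features is consistent with the uniform-pattern LBP histogram for p = 8: each of the 58 codes with at most two circular 0/1 transitions gets its own bin, and all remaining codes share one bin. The Python sketch below builds that mapping; the function names are hypothetical, and we assume, without confirmation from the text, that this is the encoding behind the reported count.

```python
def transitions(code, p=8):
    """Number of 0/1 changes around the circular p-bit pattern."""
    bits = [(code >> i) & 1 for i in range(p)]
    return sum(bits[i] != bits[(i + 1) % p] for i in range(p))

def uniform_lbp_lut(p=8):
    """Map each p-bit code to a bin: one per uniform code, one shared by the rest."""
    uniform = [c for c in range(2 ** p) if transitions(c, p) <= 2]
    index = {c: i for i, c in enumerate(uniform)}
    other = len(uniform)                       # shared bin for non-uniform codes
    return [index.get(c, other) for c in range(2 ** p)], other + 1

def lbp_histogram(codes, p=8):
    """Uniform-pattern histogram of a sequence of LBP codes."""
    lut, nbins = uniform_lbp_lut(p)
    hist = [0] * nbins
    for c in codes:
        hist[lut[c]] += 1
    return hist
```

For p = 8 there are 2 codes with no transitions and p(p − 1) = 56 with exactly two, so the histogram has 58 + 1 = 59 bins.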

Performance analysis of individual FEMs

Various values of k for k-NN and the main SVM variants were tried, and 1-NN performed best. Table 13 gives the performance measures on BraTS-2021 with and without RA. Every extraction module achieves reasonable performance. A closer analysis, however, shows that the proposed latent features with RA perform relatively better across all performance metrics. HOG features, tuned to the structure of the problem, also give adequate classification results with RA. Figure 15 compares the feature sets by size and classification accuracy. FC2, from the proposed deep learning architecture, has a marked effect relative to its 9.35% share of the HFS. Next in this respect is the HOG set, with parameters optimized for our problem, which achieves remarkable individual accuracy with a 5.26% share of the HFS.

Performance analysis of HFS

This set of experiments follows a combinatorial strategy using the individual features from both stages, i.e., latent features from Stage 1 and radiomic features from Stage 2. Table 14 gives the distribution of features in the HFS by category and their proportionate share, and Fig. 16 illustrates the two-stage HFS formation for the proposed radiogenomic classification framework. The feature extraction modules are fused in multiple combinations of two, three, four, and five feature types. Each experiment is evaluated on BraTS-2021 with RA using the performance measures in Tables 15, 16 and 17. After RA is applied to the dataset, FC1, FC2, and HOG features perform adequately whether used individually or in combination. Tables 13 and 15 show that the individual features FC1, FC2, and HOG yield maximum accuracies of 96.61%, 94.54%, and 90.23%, respectively, whereas hybrids of two features raise the accuracy further, up to 96.88%. In Tables 16 and 17, combinations of three (10 sets), four (5 sets), and five features (1 set) were hybridized to form the HFS with a k-NN classifier and RA, yielding a maximum accuracy of 96.94%. Hybrids with more than two feature subsets thus give only a slight further improvement, to 96.90%, 96.92%, and 96.94% for FC1-GLCM-HOG, FC1-GLCM-HOG-LBP, and FC1-FC2-GLCM-HOG-LBP (HFS), respectively. We attribute these results mainly to RA removing similar features from the original dataset, so that the HFS combines the diversity of five distinct feature extraction modules. Each module, with its own solution domain, extracts the most favorable and discriminative characteristics from the mpMRI scans.
When features extracted from the RA-reduced BraTS-2021 dataset were fused, they collectively produced better results: compared with the results without RA, overall accuracy increased by 17.59% for individual features and by 17.99% for hybrid strategies.
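The combinatorial fusion described above can be sketched as follows. This is a minimal illustration, assuming that fusion means concatenating the per-image feature vectors (consistent with the hybrid vector length being the sum of the individual lengths); the feature dimensions and the helper names `enumerate_hybrids` and `fuse` are hypothetical, not taken from the paper. Enumerating subsets of the five feature types reproduces the set counts quoted in the text: 10 pairs, 10 triples, 5 quadruples and 1 full set.

```python
from itertools import combinations

import numpy as np

# Feature types named in the text; the dimensions are HYPOTHETICAL
# placeholders, not the vector lengths used in the paper.
FEATURE_DIMS = {"FC1": 8, "FC2": 4, "GLCM": 6, "HOG": 12, "LBP": 10}


def enumerate_hybrids(names, sizes=(2, 3, 4, 5)):
    """Map each subset size k to all k-combinations of the feature names."""
    return {k: list(combinations(names, k)) for k in sizes}


def fuse(features, combo):
    """Fuse one image's features by concatenation (an assumed fusion rule)."""
    return np.concatenate([features[name] for name in combo])


hybrids = enumerate_hybrids(list(FEATURE_DIMS))
for k, combos in hybrids.items():
    print(f"{k} features: {len(combos)} sets")

rng = np.random.default_rng(0)
image_features = {name: rng.random(dim) for name, dim in FEATURE_DIMS.items()}
hfs = fuse(image_features, tuple(FEATURE_DIMS))
print(hfs.shape)  # (40,) = 8 + 4 + 6 + 12 + 10
```

Concatenation keeps every module's descriptors intact, so the full HFS carries all five views of the scan; the price is a longer vector for the downstream k-NN classifier.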

Table 18 presents a sensitivity analysis of the number of epochs used in the proposed deep learning feature extraction, and consequently in ML-based classification, on the radiogenomic classification dataset with RA, with the radiomic features serving as static feature extraction techniques. Increasing the number of epochs beyond five influences the performance measures only marginally (an increase of 0.8%).

Computational time analysis of the framework

It is worthwhile to analyze the space and time requirements of the proposed framework, the latter in terms of CPU time. To this end, CPU time was measured for the feature extraction modules individually and in groups, for the ML/DL classifiers after applying RA, and for training and testing on a per-image basis. The hybrid feature extraction time is simply the sum of the times taken by the individual FEMs.
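A minimal sketch of this per-image CPU-time accounting is shown below, assuming each feature extraction module is a function that maps an image to a vector. The toy extractors (a histogram and a gradient magnitude) and the helper `time_extractors` are hypothetical stand-ins for FC1, FC2, GLCM, HOG and LBP. Python's `time.process_time` measures the CPU time of the current process, and the hybrid entry is the plain sum of the module times, as stated above.

```python
import time
from typing import Callable, Dict

import numpy as np

Extractor = Callable[[np.ndarray], np.ndarray]


def time_extractors(image: np.ndarray, extractors: Dict[str, Extractor],
                    repeats: int = 5) -> Dict[str, float]:
    """Mean CPU time per image (ms) for each module, plus their sum as 'HFS'."""
    times: Dict[str, float] = {}
    for name, extract in extractors.items():
        start = time.process_time()
        for _ in range(repeats):
            extract(image)
        times[name] = 1000.0 * (time.process_time() - start) / repeats
    # Hybrid extraction time is taken as the plain sum of module times.
    times["HFS"] = sum(times.values())
    return times


# HYPOTHETICAL toy extractors standing in for the real FEMs.
toy_extractors: Dict[str, Extractor] = {
    "histogram": lambda img: np.histogram(img, bins=32)[0],
    "gradient": lambda img: np.hypot(*np.gradient(img)).ravel(),
}

rng = np.random.default_rng(0)
img = rng.random((128, 128))
print(time_extractors(img, toy_extractors))
```

Averaging over several repeats smooths out timer granularity; one-off costs such as preprocessing or network training would be measured separately.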

Fig. 17a–c show the time requirements as a function of the number of epochs: deep learning feature extraction time (DLFET) in minutes, machine learning training time (MLTrgT) in minutes, and machine learning testing time (MLTstT) in ms per image, respectively. Figure 17a shows that the latent feature extraction time rises with the number of epochs; five epochs were found to be optimal (see "Selection of optimal parameters"), giving high classification accuracy for the HFS. Applying RA also reduces the feature extraction time, which accounts for the gap between the two curves.

Effect of RA on (a) deep learning feature extraction time (minutes), (b) ML training time and (c) ML testing time for radiogenomic classification using the BraTS-2021 dataset and a 1-NN classifier (DLFET: deep learning feature extraction time; MLTrgT: machine learning training time; MLTstT: machine learning testing time).

In Fig. 17b, the machine learning training time remains almost uniform; the x-axis represents the number of epochs at which the latent features were extracted. The training features are obtained as activations from a forward pass through the deep learning architecture, and these latent features are then used to train a 1-NN classifier. The RA curve requires less time than the original dataset, which has a larger number of training instances. Fig. 17c shows a similar trend for the per-image testing time (in milliseconds) of the 1-NN classifier: with RA, the per-image testing time is consistently lower, since each test image is compared against fewer stored training instances.
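The dependence of 1-NN cost on the number of stored instances can be illustrated with a brute-force implementation that counts distance evaluations. This is a sketch under stated assumptions: Euclidean distance, random synthetic features, and a random ~70% subset as a hypothetical stand-in for instance rejection. It is not the paper's RA, and the class name `OneNN` is illustrative.

```python
import numpy as np


class OneNN:
    """Brute-force 1-nearest-neighbour classifier with Euclidean distance."""

    def fit(self, X, y):
        # Training only stores the instances, so memory and any per-instance
        # work grow with the number of training instances.
        self.X_ = np.asarray(X, dtype=float)
        self.y_ = np.asarray(y)
        return self

    def predict_one(self, x):
        """Return (predicted label, number of distance evaluations)."""
        dists = np.linalg.norm(self.X_ - np.asarray(x, dtype=float), axis=1)
        return self.y_[np.argmin(dists)], dists.size


rng = np.random.default_rng(1)
X = rng.random((1000, 64))            # synthetic feature vectors
y = rng.integers(0, 2, size=1000)     # synthetic binary labels

# HYPOTHETICAL stand-in for instance rejection: keep a random ~70% subset.
keep = rng.random(1000) < 0.7
full = OneNN().fit(X, y)
reduced = OneNN().fit(X[keep], y[keep])

x_test = rng.random(64)
print(full.predict_one(x_test)[1], reduced.predict_one(x_test)[1])
```

Every test image costs one distance per retained instance, so shrinking the training set shortens per-image testing time in direct proportion.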

Fig. 18a shows the feature extraction time (FET) per image. Although LBP has a relatively higher extraction cost, attributable to the large radius that was found optimal for its individual contribution (see "LBP features"), the proposed system remains computationally tractable, requiring only 8.67 ms per image for HFS extraction, i.e. the sum of the per-module extraction times. These computations exclude the preprocessing time, the training time for deep feature extraction, and the RA time, because these steps are performed only once and need not be repeated unless new test data are introduced, which must then follow the same procedure defined earlier. The higher time cost of LBP is justified by its performance gain relative to the other FEMs. Figure 18b illustrates the feature vector size for the individual and hybrid features, the latter being the sum of the former. Figure 18c shows the classification time for the individual FEMs and their hybrid. Classification time grows with the length of the feature vector, so HFS has a higher classification time than any individual FEM.
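The trends in Fig. 18b and 18c can be summarized by a rough cost model. It assumes that HFS is formed by concatenation and that the 1-NN classifier compares each test vector against all \(N\) stored training vectors using a distance whose cost is linear in the feature length. Here \(\mathcal{M} = \{\text{FC1}, \text{FC2}, \text{GLCM}, \text{HOG}, \text{LBP}\}\), \(d_m\) is the vector length of module \(m\), and \(T_m\) is its per-image extraction time; these symbols are introduced for illustration only.

\[
d_{\mathrm{HFS}} = \sum_{m \in \mathcal{M}} d_m, \qquad
T_{\mathrm{HFS}} = \sum_{m \in \mathcal{M}} T_m, \qquad
C_{\text{1-NN}}(d) \propto N\, d .
\]

Since \(d_{\mathrm{HFS}} > d_m\) for every module, the per-image classification cost of HFS exceeds that of any individual FEM at the same \(N\).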

Performance comparison

The classification performance of our proposed framework has been compared with existing brain tumor classification solutions that use well-known radiomic features on the CE-MRI dataset. To the best of our knowledge, no work on the radiogenomic BraTS-2021 dataset has been published so far. We therefore implemented four techniques from the contemporary literature17,40,79,90 with optimal parameters and compared them on the BraTS-2021 dataset, using accuracy as the performance measure. Table 19 shows that the proposed framework performs better than the others. Radiomic features are texture-oriented, whereas latent features are oriented to the problem structure. The proposed system uses discriminative HFS feature vectors in which each module acquires knowledge associated with the problem structure. The combinatorial fusion of the different modules exploits multiple solution domains, resulting in superior performance.
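For reference, the measures used in this work can be computed from binary predictions as below. This is a generic sketch, assuming MGMT-methylated is coded as the positive class (1); the function name `binary_metrics` is hypothetical, the toy labels are made up purely for illustration, and zero denominators (e.g. no positive instances) are not handled.

```python
import numpy as np


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity, specificity and F1-score in percent."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == positive) & (y_pred == positive)))
    tn = int(np.sum((y_true != positive) & (y_pred != positive)))
    fp = int(np.sum((y_true != positive) & (y_pred == positive)))
    fn = int(np.sum((y_true == positive) & (y_pred != positive)))
    return {
        "accuracy": 100.0 * (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": 100.0 * tp / (tp + fn),   # true positive rate
        "specificity": 100.0 * tn / (tn + fp),   # true negative rate
        "f1": 100.0 * 2 * tp / (2 * tp + fp + fn),
    }


# Made-up labels: 1 = methylated, 0 = unmethylated.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
print(binary_metrics(y_true, y_pred))
```

Here there are 3 true positives, 1 false negative, 5 true negatives and 1 false positive, giving 80% accuracy, 75% sensitivity, about 83.3% specificity and a 75% F1-score.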

Using the RA-reduced dataset improves radiogenomic classification accuracy by 16.57%. The superior performance of the proposed MGMT-PMP system with RA rests on three factors: first, a simpler model with lower algorithmic complexity, owing to the more focused, highly discriminative features obtained with the two-stage HFS; second, a lower computational cost due to the rejection of 27.18% of the instances; and third, improved generalization on test instances owing to more discriminative feature vectors.
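The computational saving from rejecting instances can be made concrete with simple arithmetic. This assumes a brute-force 1-NN classifier that compares each test image against every retained training instance, with \(N\) instances of feature length \(d\) before rejection, \(N'\) after, per-image cost \(C \propto N d\), and a rejection fraction \(r = 0.2718\):

\[
N' = (1 - r)\,N = 0.7282\,N, \qquad
\frac{C'}{C} = \frac{N' d}{N d} = 0.7282 .
\]

At a fixed feature length, the number of distance evaluations per test image therefore falls by about 27.18%. The actual wall-clock saving also depends on implementation details not modeled here.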

Future challenges and recommendations

The main challenge for the proposed system is that it requires a clinical trial to validate it as a second opinion against expert assessment of the patient's data. Another challenge is to define a systematic clinical setup for managing patients with GB. Moreover, a study is required to evaluate the impact of the proposed technique, together with an associated clinical management system, on the survival rate of GB patients.

Deep learning-based prediction strategies, used here as latent feature extractors, are complex and opaque: performance metrics such as accuracy, F1-score, sensitivity, and specificity depend on an enormous parameter space. In this context, the XAI literature91,92 proposes that deep learning architectures be examined as transparent white boxes, particularly for multi-modal data fusion.

Conclusions

In this study, we proposed MGMT-PMP, a novel classification system that bridges imaging and genomics to predict radiogenomic classes from genetic variation associated with response to radiation. In this context, a novel fine-tuned CNN architecture, the DLRFE module, is proposed for latent feature extraction; it captures features that dynamically fuse quantitative knowledge about the spatial distribution and size of the tumorous structure in mpMRI scans of the brain. The result is a dynamic feature vector that is used for radiogenomic classification. Further, in the proposed scheme, several radiomic features, namely GLCM, HOG, and LBP, are extracted from the mpMRI scans of the BraTS-2021 challenge dataset. The novel rejection algorithm was found to be very effective in selecting and delineating instances from the main dataset. The latent features are fused with the radiomic features to form a hybrid feature collection (HFS), which is then classified by k-NN with different numbers of neighbors. On mpMRI scans, k-NN with \(k=1\) achieved 97.28% training and 96.94% test classification accuracy. We compared the proposed technique with existing brain tumor classification techniques on the BraTS-2021 dataset and observed a significant increase in radiogenomic classification performance using RA. The results establish that fusing dynamic and static features in HFS improves classification performance compared with individual features. This study has implications in several directions. First, it links genomic variation with radiomic data characterization algorithms, building a bridge between two independent areas of research.
Second, the system could be used in real-time brain tumor surgery for the removal of leftover tumor cells by chemotherapeutic treatment, as an alternative to, or in conjunction with, radiotherapy. Third, the proposed system requires no dedicated machine and could serve as a portable, low-cost solution for brain surgery. Finally, this study could have a significant impact on the clinical management and survival of GB patients.

Data availability

All data used in this article are publicly available at: https://www.kaggle.com/competitions/rsna-miccai-brain-tumor-radiogenomic-classification/data.

Change history

30 March 2023

A Correction to this paper has been published: https://doi.org/10.1038/s41598-023-32199-y

References

Siegel, R. L. et al. Colorectal cancer statistics, 2020. CA Cancer J. Clin. 70(3), 145–164 (2020).

Tandel, G. S. et al. A review on a deep learning perspective in brain cancer classification. Cancers 11(1), 111 (2019).

Goodenberger, M. L. & Jenkins, R. B. Genetics of adult glioma. Cancer Genet. 205(12), 613–621 (2012).

Louis, D. N. et al. cIMPACT-NOW: a practical summary of diagnostic points from Round 1 updates. Brain Pathol. 29(4), 469–472 (2019).

Louis, D. N. et al. cIMPACT-NOW update 1: not otherwise specified (NOS) and not elsewhere classified (NEC). Acta Neuropathol. 135(3), 481–484 (2018).

Brat, D. J. et al. cIMPACT-NOW update 3: recommended diagnostic criteria for “Diffuse astrocytic glioma, IDH-wildtype, with molecular features of glioblastoma, WHO grade IV”. Acta Neuropathol. 136(5), 805–810 (2018).

Ellison, D. W. et al. cIMPACT-NOW update 4: diffuse gliomas characterized by MYB, MYBL1, or FGFR1 alterations or BRAFV600E mutation. Acta Neuropathol. 137(4), 683–687 (2019).

Brat, D. J. et al. cIMPACT-NOW update 5: recommended grading criteria and terminologies for IDH-mutant astrocytomas. Acta Neuropathol. 139(3), 603–608 (2020).

Louis, D. N. et al. cIMPACT-NOW update 6: new entity and diagnostic principle recommendations of the cIMPACT-Utrecht meeting on future CNS tumor classification and grading. Wiley Online Library (2020).

Bakas, S. et al. Overall survival prediction in glioblastoma patients using structural magnetic resonance imaging (MRI): advanced radiomic features may compensate for lack of advanced MRI modalities. J. Med. Imaging 7(3), 031505 (2020).

Louis, D. N. et al. The 2016 World Health Organization classification of tumors of the central nervous system: A summary. Acta Neuropathol. 131(6), 803–820 (2016).

Yu, W. et al. O6-methylguanine-DNA methyltransferase (MGMT): Challenges and new opportunities in glioma chemotherapy. Front. Oncol. 9, 1547 (2020).

Rivera, A. L. et al. MGMT promoter methylation is predictive of response to radiotherapy and prognostic in the absence of adjuvant alkylating chemotherapy for glioblastoma. Neuro Oncol. 12(2), 116–121 (2010).

Zhu, W. et al. The application of deep learning in cancer prognosis prediction. Cancers 12(3), 603 (2020).

Klein, E. et al. Glioblastoma organoids: pre-clinical applications and challenges in the context of immunotherapy. Front. Oncol. 2755 (2020).

Oldrini, B. et al. MGMT genomic rearrangements contribute to chemotherapy resistance in gliomas. Nat. Commun. 11(1), 1–10 (2020).

Sultan, H. H., Salem, N. M. & Al-Atabany, W. Multi-classification of brain tumor images using deep neural network. IEEE Access 7, 69215–69225 (2019).

Baid, U. et al. The RSNA-ASNR-MICCAI BraTS 2021 benchmark on brain tumor segmentation and radiogenomic classification. arXiv preprint arXiv:2107.02314 (2021).

Cao, V. T. et al. The correlation and prognostic significance of MGMT promoter methylation and MGMT protein in glioblastomas. Neurosurgery 65(5), 866–875 (2009).

Reifenberger, G. et al. Predictive impact of MGMT promoter methylation in glioblastoma of the elderly. Int. J. Cancer 131(6), 1342–1350 (2012).

Cankovic, M. et al. The role of MGMT testing in clinical practice: A report of the association for molecular pathology. J. Mol. Diagn. 15(5), 539–555 (2013).

Mazurowski, M. A. Radiogenomics: What it is and why it is important. J. Am. Coll. Radiol. 12(8), 862–866 (2015).

Shui, L. et al. The era of radiogenomics in precision medicine: an emerging approach to support diagnosis, treatment decisions, and prognostication in oncology. Front. Oncol. 3195 (2021).

Wang, J. et al. An MRI-based radiomics signature as a pretreatment noninvasive predictor of overall survival and chemotherapeutic benefits in lower-grade gliomas. Eur. Radiol. 31(4), 1785–1794 (2021).

Li, Z.-C. et al. Multiregional radiomics features from multiparametric MRI for prediction of MGMT methylation status in glioblastoma multiforme: A multicentre study. Eur. Radiol. 28(9), 3640–3650 (2018).

Kong, Z. et al. 18F-FDG-PET-based Radiomics signature predicts MGMT promoter methylation status in primary diffuse glioma. Cancer Imaging 19(1), 1–10 (2019).

Jiang, C. et al. Fusion radiomics features from conventional MRI predict MGMT promoter methylation status in lower grade gliomas. Eur. J. Radiol. 121, 108714 (2019).

Zhang, L. et al. BDSS: Blockchain-based data sharing scheme with fine-grained access control and permission revocation in medical environment. KSII Trans. Internet Inf. Syst. (TIIS) 16(5), 1634–1652 (2022).

Habib, A. et al. MRI-based radiomics and radiogenomics in the management of low-grade gliomas: Evaluating the evidence for a paradigm shift. J. Clin. Med. 10(7), 1411 (2021).

Trivizakis, E. et al. Artificial intelligence radiogenomics for advancing precision and effectiveness in oncologic care. Int. J. Oncol. 57(1), 43–53 (2020).