Abstract

Cross-modal hashing is an efficient method to embed high-dimensional heterogeneous modal feature descriptors into a consistency-preserving Hamming space with low-dimensional. Most existing cross-modal hashing methods have been able to bridge the heterogeneous modality gap, but there are still two challenges resulting in limited retrieval accuracy: (1) ignoring the continuous similarity of samples on manifold; (2) lack of discriminability of hash codes with the same semantics. To cope with these problems, we propose a Deep Consistency-Preserving Hash Auto-encoders model, called DCPHA, based on the multi-manifold property of the feature distribution. Specifically, DCPHA consists of a pair of asymmetric auto-encoders and two semantics-preserving attention branches working in the encoding and decoding stages, respectively. When the number of input medical image modalities is greater than 2, the encoder is a multiple pseudo-Siamese network designed to extract specific modality features of different medical image modalities. In addition, we define the continuous similarity of heterogeneous and homogeneous samples on Riemann manifold from the perspective of multiple sub-manifolds, respectively, and the two constraints, i.e., multi-semantic consistency and multi-manifold similarity-preserving, are embedded in the learning of hash codes to obtain high-quality hash codes with consistency-preserving. The extensive experiments show that the proposed DCPHA has the most stable and state-of-the-art performance. We make code and models publicly available: https://github.com/Socrates023/DCPHA.

Similar content being viewed by others

Introduction

Recently, various advanced medical imaging technologies have been applied in modern clinical analysis with the advancement of medical care1. Hospitals are generating a large number of multi-modal neuroimages every moment, therefore, it is necessary to establish an effective neuroimage cross-modal approximate nearest neighbor retrieval system to assist clinicians in navigating the data. Neuroimage cross-modal retrieval aims to provide doctors with similar neuroimages from different modalities that have been diagnosed. An effective neuroimage cross-modal retrieval system can reduce the error rate of clinical diagnosis for novice doctors and improve the efficiency of clinical diagnosis for skilled physicians.

The remarkable achievements have been made in large-scale data processing based on deep neural network in computer vision2,3,4,5, Internet of Things (IoT)6,7,8, nearest neighbor retrieval9, 10, and intelligent networks11, 12. The nearest neighbor retrieval methods are solved by learning discriminative representations in the common space, which can be roughly classified into cross-modal hash retrieval and cross-modal real-value retrieval by classifying the types of values in the common space10, 13. Cross-modal hashing is an efficient method to embed high-dimensional heterogeneous modal feature descriptors into a low-dimensional Hamming space. Due to the trade-off between retrieval efficiency and storage cost, learning to hash has been widely used in approximate nearest neighbor retrieval of large-scale multi-media data, in particular, using cross-modal hashing to assist doctors in effective clinical diagnosis has also attracted increasing attention from researchers.

Since features of different modalities usually belong to various data distributions and are generated from different manifold spaces. Therefore, a basic challenge of cross-modal retrieval is to bridge the modality gap. Most existing cross-modal hashing methods have been available to bridge the heterogeneous modality-gap14,15,16, but there are still two challenges leading to the limitation of retrieval accuracy: (1) ignoring the continuous similarity of samples on stream shape; (2) lack of discriminability of hash codes with the same semantics. Our research argued that (1) is the reason for (2) and (2) is the result of (1). Therefore, we propose a Deep Consistency-Preserving Hash Auto-encoders model, called DCPHA, based on the multi-manifold property of multi-modal hash codes distributed in Hamming space. In addition, we define the continuous similarity of heterogeneous and homogeneous samples on Riemann manifolds from the perspective of multiple sub-manifolds, respectively, and propose two constraints, i.e., multi-semantic consistency and multi-manifold similarity-preserving. And we prove theoretically that the multi-manifold similarity-preserving constraint has manifold preserving invariance.

The main contributions of our work can be summarized as follows:

-

(1)

We propose a Deep Consistency-Preserving Hash Auto-encoders model, called DCPHA, based on the multi-manifold property of the feature distribution for neuroimage cross-modal retrieval. DCPHA is an end-to-end model consisting of asymmetric auto-encoders and two semantics-preserving attention branches.

-

(2)

We propose multi-semantic consistency and multi-manifold similarity-preserving constraints based on the multi-manifold property of multi-modal hash codes. And it is proved theoretically that the multi-manifold similarity-preserving constraint has manifold preserving invariance.

-

(3)

Without loss of generality, we comprehensively evaluate the DCPHA on four benchmark datasets and implement detailed ablation experiments to validate the effectiveness of the DCPHA. The extensive experiments demonstrate the advantages of the proposed DCPHA compared to 15 advanced cross-modal retrieval methods.

Deep consistency-preserving hash auto-encoders

In this section, the proposed model DCPHA is described in detail, including formulations, deep architecture and objective function. The deep architecture of DCPHA is shown in Fig. 1. The DCPHA model consists of asymmetric auto-encoders and two semantics-preserving attention branches. The encoder is used to extract features from neuroimages of different modalities, and the decoder is designed to map the features into Hamming space by a non-linear transformation. The semantics-preserving attention branches work in the encoding and decoding stages respectively to ensure that both the learned features and the hash codes have semantics-consistency. And two constraints, i.e., multi-semantic consistency and multi-manifold similarity-preserving, are embedded in the learning of hash codes to obtain high-quality hash codes with discriminative.

The proposed DCPHA model consists of an asymmetric auto-encoders and two semantics-preserving attention branches. The encoder is used to extract features from neuroimages of different modalities, and the decoder is designed to map the features into Hamming space by a non-linear transformation. Best view in color.

Notations and definitions

In this subsection, the notations and definitions mentioned in the following equations are introduced. Without loss of generality, we suppose that there are \({\mathscr {N}}\) multi-modal sample sets in the sample space \(\psi\), \(\psi =\left\{ X_i\right\} , i\in \left[ 1,{\mathscr {N}}\right]\). Each of multi-modal sample sets \(X_i\) consists of different medical scan imagings from the same subject (e.g. MRI and PET), \(X_i=\left\{ x_i^m\right\} , m\in \left[ 1,{\mathscr {M}}\right]\), where \({\mathscr {M}}\) denotes the number of different medical scan imagings. \(x_i^m\) denotes the i-th subject of the m-th modality, assuming dimension \({\mathscr {Z}}\). Since the samples within the same multi-modal sample set originate from the same subject, they naturally share the same semantic, which is the reason why our method is appropriate for neuroimages. A one-hot vector \(\ell _i\) is assigned to each multi-modal sample set, \(\ell _i=\left[ l_1,l_2,\cdots ,l_c,\cdots ,l_C\right]\), where C denotes the number of categories. When the multi-modal sample set \(X_i\) belongs to the c-th category, \(l_c=1\) and the rest is 0. DCPHA consists of an asymmetric encoder and decoder. The purpose of the encoder is to learn the features \(f_i^m\) of sample \(x_i^m\), assuming that the dimension of \(f_i^m\) is \({\mathscr {D}}\), where \({\mathscr {D}}\ll {\mathscr {Z}}\). The decoder is designed to map the features \(f_i^m\) into Hamming space by a non-linear transformation. Let the hash code of sample \(x_i^m\) is \(h_i^m\), \(h_i^m\in \left\{ -1,1\right\} ^{\mathscr {K}}\), and our goal aims to learn an end-to-end non-linear hash function \({\mathscr {F}}\) to extract features of multi-modal medical imaging and encode them into high-quality hash codes with semantics-consistency and similarity-preserving, \(h_i^m={\mathscr {F}}\left( x_i^m;\theta \right)\). The terms, notations, definitions and types involved in this work are comprehensively shown in Table 1.

The visualization of multiple sub-manifolds in local sample space. Points A1, A2 and B1 are from two different manifolds. The green solid line connects two homogeneous manifold samples, i.e. the similarity between two homogeneous manifold samples, and the yellow solid line links two heterogeneous manifold samples, i.e. the similarity between two heterogeneous manifold samples. Best view in color.

The previous works17,18,19,20 has illustrated that multi-modal data contain multiple sub-manifolds. The visualization of multiple sub-manifolds in local sample space is shown in Fig. 2. We define the sub-manifold similarity and multi-manifold similarity from local and global respectively, as follows.

Definition 1

Heterogeneous manifold similarity. A local manifold similarity calculation definition. Assuming that there are \({\mathscr {M}}\) modal neuroimages in the sample space, and each modality contains \({\mathscr {N}}\) samples, then the heterogeneous manifold similarity \({{\textbf {S}}}_{{\textbf {H}}}\) is defined for any two samples of different modalities as Eq. (1):

with

where \(S_H\left( h_i^m,h_j^n\right)\) denotes the similarity of the heterogeneous manifold between the i-th sample of the m-th modal and the j-th sample of the n-th modal and the calculation method is shown in Eq. (2). \(\tau\) is the heat kernel constant. \(D(\cdot )\) in Eq. (3) is the modified distance metric based on the standard euclidean distance \(d(\cdot )\).

Definition 2

Homogeneous manifold similarity. A local manifold similarity calculation definition. In the sample space, the homogeneous manifold similarity \({{\textbf {S}}}_{{\textbf {I}}}\) between samples from the same modality is defined as Eq. (4):

with

where \(S_I(h_i^\cdot ,h_j^\cdot )\) denotes the homogeneous manifold similarity between the i-th sample and the j-th sample from the same modal. The calculation method is the dot product between \(\ell _2\) normalized \(h_i^{\cdot }\) and \(h_j^{\cdot }\) (i.e. cosine similarity) as shown in Eq. (5).

Definition 3

Multi-manifold similarity. A global manifold similarity calculation definition. Assuming that there are \({\mathscr {M}}\) modal neuroimages in the sample space and each modality contains \({\mathscr {N}}\) samples, then the multi-manifold similarity \({{\textbf {S}}}_{{\textbf {M}}}\) is defined as Eq. (6):

where \(S_I^1\) denotes the homogeneous manifold similarity between the samples from the 1-th modality, and \(S_I^{{\mathscr {M}}}\) similarly. \(s_H^{1,{\mathscr {M}}}\) denotes the heterogeneous manifold similarity between the 1-th modal sample and the \({\mathscr {M}}\)-th modal sample, and \(S_H^{{\mathscr {M}},1}\) similarly.

Objective functions and theory

In this subsection, the theoretical derivation of the proposed multi-semantic consistency and multi-manifold similarity-preserving constraints is presented. Alexey et al.21 propose that the criterion for a good feature representation should ensure that the mapping from the input image \(x_i^m\) to the feature \(f_i^m\) should satisfy two requirements: (1) There must be at least one feature that is similar for images of the same semantics. (2) there must be at least one feature that is sufficiently different for images of different semantics. However, the previous works14,15,16 can over-satisfy both requirements for hash codes, because these works ignore the fact that samples with the same semantics have contiguous similarity on manifold. Constructing the similarity matrix directly using semantic labels leads to samples with the same semantics being encoded into the same hash code, causing the lack of discriminability between hash codes with the same semantics. To solve the problem, we propose a multi-semantic consistency loss and a multi-manifold similarity-presering loss. The multi-semantic consistency ensures that hash codes with different semantics are discriminative. On this basis, multi-manifold similarity-preserving defines continuous similarity among samples in terms of multiple sub-manifolds, ensuring that hash codes with the same semantics have discriminability as well.

Multi-semantic consistency

The multi-semantic consistency constraint is to align the intermediate features generated by the encoder and the hash codes generated by the decoder with the high-level semantics of the input samples to guarantee that the final generated hash codes with different semantics have case-level discriminability, which is calculated as follows.

First, the sample \(x_i^m\) is learned by encoder to feature \(f_i^m\), \(f_i^m=Decoder\left( x_i^m\right)\), and the feature \(f_i^m\) is passed through the Semantic Preserving Attention Branch (SPAB) to obtain the feature prediction classification label \(y_i^m\), \(y_i^m=SPAB_E(f_i^m)\). The decoder is designed to map the features into Hamming space by a non-linear transformation. The \(f_i^m\) is fed into the decoder to obtain the hash code \(h_i^m\), \(h_i^m=Decoder\left( f_i^m\right)\). The hash code \(h_i^m\) is input into the SPAB which works in the decoding stage to obtain the hash code prediction classification label \(r_i^m\), \(r_i^m=SPAB_D(h_i^m)\). The multi-semantic consistency loss is shown in Eq. (7), where \(\parallel \cdot \parallel _{\mathscr {F}}\) denotes the Frobenius normalized.

Multi-manifold similarity-preserving

With the basis of multi-semantic consistency, multi-manifold similarity-preserving defines continuous similarity among samples in terms of multiple sub-manifolds, ensuring that hash codes with the same semantics have discriminability as well. According to previous work18,19,20, in the sample space, neuroimages of different modalities are distributed in different sub-manifolds. They are aggregated into a sophisticated multi-manifold structure. Based on the statements of Definition. (1)(2)(3), we derive the following optimization equation as Eq. (8):

where \(S_M\left( \cdot ,\cdot \right)\) denotes the multi-manifold similarity. \(I\left( \cdot ,\cdot \right)\) is an indicator function that has a value of 1 if \(\ell _i=\ell _j\) and 0 otherwise. Other notations and the corresponding explanations can be found in Table 1. \({\mathscr {J}}_2\) is the similarity-preserving loss which is defined on multi-manifolds, allowing samples with the same semantics are decoded into hash codes with discriminative.

Belkin22 used the correspondence between the Laplace and the Laplace-Beltrami operator on manifold, and the connections to heat equation, and proposed a non-linear dimensionality reduction method from Riemann space to Euclidean space (i.e. Laplacian Eigenmaps). The objective function as follows Eq. (9):

where \(H_i^m=\frac{h_i^m}{\parallel h_i^m\parallel }\), i.e. standardized feature vector.

Theorem 1

Subject to \(log\left( 1+e^{S_M\left( h_i^m,h_j^n\right) }\right) =2S_M\left( h_i^m,h_j^n\right)\), then \({\mathscr {J}}_2\) is equivalent to \({\mathscr {L}}_{laplacican}\), i.e. \({\mathscr {J}}_2\) has manifold preserving invariance.

The procedure of the theoretical proof of Theorem 1 is placed in the supplementary material. It indicates that minimizing Eq. (8) is a standard manifold embedding problem formulated by equivalent to Eq. (9). Multi-manifold similarty-preserving term essentially provides a measure of sub-manifold similarity-preserving. Therefore, Eq. (8) can be a reasonable explanation for multi-manifold similarity-preserving.

Combining Eqs. (7)(8), the objective function of DCPHA is:

where \(\alpha\) and \(\beta\) are the contribution weight parameters of \({\mathscr {J}}_1\) and \({\mathscr {J}}_2\), respectively. The third is a regularization term, which is used to avoid gradient vanishing23.

Refinement learning and optimization

The network structure of DCPHA consists of asymmetric auto-encoders and two semantics-preserving attention branchinges which working in the feature encoding and hash decoding stages, respectively. The encoder adopts a standard CNN network structure. The decoder uses a light-weight fully-connected networks. The semantics-preserving attention branch is a linear multi-layer perceptron model. Therefore Eq. (10) is a non-convex function with multiple parameters. We used a stochastic gradient descent method and iterative learning strategy with Adam optimizer24 to learn the parameters and update the network. The complete training of DCPHA consists of three steps: (1) Pre-training encoder, (2) Pre-training decoder and (3) Fine-tuning DCPHA, which are described in detail below.

Experiment

Implementation details

All experiments were conducted on a Tesla V100-SXM2 GPU using same setting. To ensure impartiality and objectivity, all comparison models adopt AlexNet as the backbone network for feature extraction. All comparison models, except that the backbone network adopts the same configuration, are all original code implementations. The batchsize is 20 and the iterations is 500. The initial learning rate is set to \(10^{-6}\).

Datasets

ADNI225 contained 579 subjects with T1-weighted sMRI and 500 subjects with PET. we adopt a single slice and strong pairing data preprocessing method. Finally, we collected 300 pairs (600 images) of sMRI and PET neuroimages as datasets.

OASIS326. We collected MRI T1-weighted and PET images of 300 subjects from the OASIS3 dataset, with a total of 600 images as the dataset. We strongly matched two different modal images of the same subject to form a cross-modal paired dataset for training. We divided the above datasets into training-set and test-set in the ratio of 8/2. The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

Compare with 15 advanced methods

In this experiments, We used the mean average precision (mAP) scores of all returned results with cosine similarity as a quantitative metric. The mAP scores jointly consider ranking information and precision and are widely used performance evaluation criteria in cross-modal hash. We report the mAP scores of the compared methods for two different cross-modal retrieval tasks: (1) retrieving PET samples using T1-wighted MRI queries (M\(\rightarrow\)P) and (2) retrieving T1-wighted MRI samples using PET queries (P\(\rightarrow\)M). On the premise of objectivity and impartiality, the comparison experiments on ADNI2 and OASIS3 datasets are shown in Tables 2, 3, respectively. From the results, DCPHA achieves state-of-the-art performance on the test-set of each dataset. The detailed analysis is as follows.

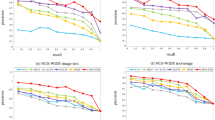

The results of neuroimage cross-modal retrieval on ADNI2 using mAP scores are shown in Table 2. As can be seen from the table, the proposed DCPHA outperforms 15 advanced counterparts. Regarding the average mAP score of 128 bits hash codes on ADNI2 dataset, DCPHA outperforms several sub-optimal models DIHN, DPN, and CSQ by \(6.86\%\), \(5.16\%\), and \(3.85\%\) respectively. In other words, our method can significantly improve the performance of neuroimage cross-modal retrieval. For further comparison, the precision curve is plotted in Fig. 3. The experimental results are consistent with the retrieved mAP results in Table 2, where DCPHA has the best performance.

We evaluated DCPHA on OASIS3 dataset for cross-modal retrieval. The mAP scores of the retrieval are shown in Table 3. The experimental results show that DCPHA has the highest retrieval mAP scores in several metrics compared to 15 advanced retrieval methods. The proposed DCPHA improves \(2.53\%\) over the best counterpart DSH from the average mAP score of 16 bits hash codes. Although the average mAP score of DSH with 32 bits hash codes is higher than DCPHA, the model constructed based on the multi-manifold property of data distribution has a great advantage in processing the multi-modal task method has a great advantage. The performance of proposed method is more stable on different lengths of hash codes. We plotted the precision curve to investigate the effectiveness of different methods for cross-modal retrieval on OASIS3 dataset as shown in Fig. 4. From the visualization, it is observed that the proposed DCPHA also outperforms all the compared methods, which is consistent with the retrieved mAP results.

The further analysis of DCPHA

In this subsection, we will further analyze our proposed DCPHA from ablation experiments, hyper-parameters sensitivity analysis and comparison on natural image benchmark dataset.

Ablation experiments

The objective function of DCPHA is mainly composed of a multi-manifold similarity-preserving loss and a multi-semantic consistency loss. In order to research the contribution of these components to the model in more detail, we developed and evaluated two variants of DCPHA. i.e. DCHA and DPHA. DCHA only uses the multi-semantic consistency loss as the objective function and DPHA only uses the multi-manifold similarity-preserving loss as the optimization objective. Table 4 shows the results of ablation experiments on ADNI2. We found that both multi-manifold similarity-preserving and multi-semantic consistency contribute to the final retrieval performance of the model. DCHA obtains better performance when the hash codes is shorter, and the mAP score of DPHA is higher when the hash code is longer, which shows that optimizing the two objective functions at the same time is better than only optimizing one of them.

Hyper-parameter sensitivity analysis

The objective function of DCPHA contains two hyper-parameters \(\alpha\) and \(\beta\), and we investigate the effect of the hyper-parameters that control the weight ratio between the losses in Eq. (10). First, we fix the length of the hash code mathcal K to 32. Then, we keep \(\alpha\) and \(\beta\) in the range of [0.1, 1] to calculate the MAP score. The result is shown in the Fig. 5. It is clear that different hyperparameters yield different performance. Considering from the average MAP, we finally chose \(\alpha =0.3\) and \(\beta =1\) as hyper-parameters for the ADNI2 dataset. By using the same scheme, we can obtain the optimal values of hyper-parameters for \(\alpha =0.1\) and \(\beta =1.0\) on the OASIS3 dataset.

Experiments on natural image benchmark datasets

In order to further measure the fitting and generalization ability of DCPHA, we conduct comparative experiments with 10 advanced cross-modal retrieval methods on the natural image benchmark datasets MIRFLICKR25K. In our experiments, we follow the dataset partition and feature exaction strategies from36, 42. In this experiment, we report the mAP scores of the compared methods for two different cross-modal retrieval tasks: 1) retrieving text using image queries (I\(\rightarrow\)T) and 2) retrieving images using text queries (T\(\rightarrow\)I). The experimental results obtained in “I\(\rightarrow\)T” and “T\(\rightarrow\)I” tasks on MIRFLICKR25K are shown in Table 5. Since our proposed multi-semantic consistency and multi-manifold similarity preserving constraints based on the multi-manifold property of multi-modal hash codes, DCPHA achieves a significant performance improvement on the multi-label benchmark dataset, i.e., MRIFLICKER25K.

Conclusion and future work

In this paper, we proposed a deep consistency-preserving hash auto-encoders model, called DCPHA, based on the multi-manifold property of hash codes distributed in Hamming space to solve the problem of lack of discriminability of hash codes with the same semantics. Specifically, DCPHA consists of a pair of asymmetric auto-encoders and two semantics-preserving attention branches that work in the feature encoding stage and hash decoding stage, respectively. In addition, two constraints, namely multi-semantic consistency and multi-manifold similarity-preserving, were embedded in the learning of hash codes. We theoretically demonstrated that our proposed multi-manifold similarity-preserving has manifold preserving invariance. As the experimental results show, the proposed DCPHA can obtain state-of-the-art performance on simple medical multi-modal image datasets (i.e., ADNI2) and multi-label natural image datasets (i.e., MIRFLICKER25K). In future work, we will build a medical multi-modal database, including diagnostic reports, audio, and construct a multi-modal hash method to accomplish mutual retrieval of data from multiple sources. And we will further explore the impact of multi-view on the generation of hash codes for multi-modal samples.

Data availability

The datasets generated during and analysed during the current study are available from the corresponding author on reasonable request.

References

Choi, J. D. et al. Choroid plexus volume and permeability at brain mri within the alzheimer disease clinical spectrum. Radiologyhttps://doi.org/10.1148/radiol.212400 (2022).

Chai, Y. et al. From data and model levels: Improve the performance of few-shot malware classification. IEEE Trans. Netw. Serv. Manag.https://doi.org/10.1109/TNSM.2022.3200866 (2022).

Chai, Y., Du, L., Qiu, J., Yin, L. & Tian, Z. Dynamic prototype network based on sample adaptation for few-shot malware detection. IEEE Trans. Knowl. Data Eng.https://doi.org/10.1109/TKDE.2022.3142820 (2022).

Liang, C., Zhu, M., Wang, N., Yang, H. & Gao, X. Pmsgan: Parallel multistage gans for face image translation. IEEE Trans. Neural Netw. Learn. Syst.https://doi.org/10.1109/TNNLS.2022.3233025 (2023).

Yu, W., Zhu, M., Wang, N., Wang, X. & Gao, X. An efficient transformer based on global and local self-attention for face photo-sketch synthesis. IEEE Trans. Image Process. 32, 483–495. https://doi.org/10.1109/TIP.2022.3229614 (2023).

Qiu, J., Chen, Y., Tian, Z., Guizani, N. & Du, X. The security of internet of vehicles network: Adversarial examples for trajectory mode detection. IEEE Netw. 35, 279–283. https://doi.org/10.1109/MNET.121.2000435 (2021).

Qiu, J. et al. A survey on access control in the age of internet of things. IEEE Internet Things J. 7, 4682–4696. https://doi.org/10.1109/JIOT.2020.2969326 (2020).

Qiu, J., Du, L., Zhang, D., Su, S. & Tian, Z. Nei-tte: Intelligent traffic time estimation based on fine-grained time derivation of road segments for smart city. IEEE Trans. Ind. Inf. 16, 2659–2666. https://doi.org/10.1109/TII.2019.2943906 (2019).

Yang, X., Wang, N., Song, B. & Gao, X. Bosr: A cnn-based aurora image retrieval method. Neural Netw. 116, 188–197. https://doi.org/10.1016/j.neunet.2019.04.012 (2019).

Hu, Z. et al. Triplet fusion network hashing for unpaired cross-modal retrieval. In Proceedings of the 2019 on International Conference on Multimedia Retrieval, 141–149, https://doi.org/10.1145/3323873.3325041 (2019).

Qiu, J. et al. Artificial intelligence security in 5g networks: Adversarial examples for estimating a travel time task. IEEE Veh. Technol. Mag. 15, 95–100. https://doi.org/10.1109/MVT.2020.3002487 (2020).

Qiu, J., Chai, Y., Tian, Z., Du, X. & Guizani, M. Automatic concept extraction based on semantic graphs from big data in smart city. IEEE Trans. Comput. Soc. Syst. 7, 225–233. https://doi.org/10.1109/TCSS.2019.2946181 (2019).

Luo, X. et al. A survey on deep hashing methods. ACM Trans. Knowl. Discov. Datahttps://doi.org/10.1145/3532624 (2020).

Jiang, Q., Cui, X. & Li, W. Deep discrete supervised hashing. IEEE Trans. Image Process. 27, 5996–6009. https://doi.org/10.1109/TIP.2018.2864894 (2018).

Hu, W. et al. Cosine metric supervised deep hashing with balanced similarity. Neurocomputing 448, 94–105. https://doi.org/10.1016/j.neucom.2021.03.093 (2021).

Shi, Y. et al. Supervised adaptive similarity matrix hashing. IEEE Trans. Image Process. 31, 2755–2766. https://doi.org/10.1109/TIP.2022.3158092 (2022).

Wang, D., Cui, P., Ou, M. & Zhu, W. Deep multimodal hashing with orthogonal regularization. In Proceedings of the 24th International Conference on Artificial Intelligence, 2291—2297. https://doi.org/10.5555/2832415.2832567 (AAAI Press, Atlanta, 2015).

Xu, L., Zeng, X., Zheng, B. & Li, W. Multi-manifold deep discriminative cross-modal hashing for medical image retrieval. IEEE Trans. Image Process. 31, 3371–3385. https://doi.org/10.1109/TIP.2022.3171081 (2022).

Liu, C., Wang, K., Wang, Y. & Yuan, X. Learning deep multimanifold structure feature representation for quality prediction with an industrial application. IEEE Trans. Ind. Inf. 18, 5849–5858. https://doi.org/10.1109/TII.2021.3130411 (2022).

Khan, A. & Maji, P. Multi-manifold optimization for multi-view subspace clustering. IEEE Transactions on Neural Networks and Learning Systems 1–13. https://doi.org/10.1109/TNNLS.2021.3054789 (2021).

Dosovitskiy, A., Springenberg, J. T., Riedmiller, M. & Brox, T. Discriminative unsupervised feature learning with convolutional neural networks. In Advances in Neural Information Processing Systems, vol. 27 (Curran Associates, Inc., Montreal, 2014).

Belkin, M. & Niyogi, P. Laplacian eigenmaps and spectral techniques for embedding and clustering. In Advances in Neural Information Processing Systems, (The MIT Press, Vancouver, 2002). https://doi.org/10.7551/mitpress/1120.003.0080

Yan, C., Gong, B., Wei, Y. & Gao, Y. Deep multi-view enhancement hashing for image retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 43, 1445–1451. https://doi.org/10.1109/TPAMI.2020.2975798 (2020).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization (2014). Preprint at https://arxiv.org/abs/1412.6980

Jack, C. R. Jr. et al. The alzheimer’s disease neuroimaging initiative (adni): Mri methods. J. Magn. Reson. Imaging 27, 685–691. https://doi.org/10.1002/jmri.21049 (2008).

LaMontagne, P. J. et al. Oasis-3: Longitudinal neuroimaging, clinical, and cognitive dataset for normal aging and alzheimer disease. MedRxivhttps://doi.org/10.1101/2019.12.13.19014902 (2019).

Zhu, H., Long, M., Wang, J. & Cao, Y. Deep hashing network for efficient similarity retrieval. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 30, https://doi.org/10.1609/aaai.v30i1.10235 (2016).

Liu, H., Wang, R., Shan, S. & Chen, X. Deep supervised hashing for fast image retrieval. In 2016 IEEE Conference on Computer Vision and Pattern Recognition, 2064–2072, https://doi.org/10.1109/CVPR.2016.227 (2016).

Li, W., Wang, S. & Kang, W. Feature learning based deep supervised hashing with pairwise labels (2015). Preprint at https://arxiv.org/abs/1511.03855

Shen, F., Gao, X., Liu, L. & et al. Deep asymmetric pairwise hashing. In Proceedings of the 25th ACM International Conference on Multimedia, 1522–1530, (California, 2017). https://doi.org/10.1145/3123266.3123345

Cao, Z., Long, M., Wang, J. & et al. Hashnet: Deep learning to hash by continuation. In 2017 IEEE International Conference on Computer Vision, 5608–5617, (IEEE, Hawaii, 2017). https://doi.org/10.1109/ICCV.2017.598

Li, Q., Sun, Z., He, R. & Tan, T. Deep supervised discrete hashing. In Advances in Neural Information Processing Systems, vol. 30 (Curran Associates, Inc, Long Beach, 2017).

Zhu, H., Gao, S. & et al. Locality constrained deep supervised hashing for image retrieval. In Proceedings of the 26th International Joint Conference on Artificial Intelligence, 3567–3573, https://doi.org/10.24963/ijcai.2017/499 (2017).

Jiang, Q. & Li, W. Asymmetric deep supervised hashing. In Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32, https://doi.org/10.1609/aaai.v32i1.11814 (2018).

Wu, D., Dai, Q., Liu, J. & et al. Deep incremental hashing network for efficient image retrieval. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 9061–9069, https://doi.org/10.1109/CVPR.2019.00928 (2019).

Zhen, L., Hu, P., Wang, X. & Peng, D. Deep supervised cross-modal retrieval. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 10394–10403, https://doi.org/10.1109/CVPR.2019.01064 (2019).

Zhang, Z. et al. Improved deep hashing with soft pairwise similarity for multi-label image retrieval. IEEE Trans. Multimed. 22, 540–553. https://doi.org/10.1109/TMM.2019.2929957 (2020).

Chen, Y. & Lu, X. Deep discrete hashing with pairwise correlation learning. Neurocomputing 385, 111–121. https://doi.org/10.1016/j.neucom.2019.12.078 (2020).

Yuan, L., Wang, T., Zhang, X. & et al. Central similarity quantization for efficient image and video retrieval. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3083–3092, https://doi.org/10.1109/CVPR42600.2020.00315 (2020).

Fan, L., Ng, K. W., Ju, C. & et al. Deep polarized network for supervised learning of accurate binary hashing codes. In Proceedings of the 29th International Joint Conference on Artificial Intelligence, 825–831, https://doi.org/10.24963/ijcai.2020/115 (2020).

Liu, C. et al. Deep hash learning for remote sensing image retrieval. IEEE Trans. Geosci. Remote Sens. 59, 3420–3443. https://doi.org/10.3390/rs12172789 (2020).

Peng, Y. & Qi, J. Cm-gans: Cross-modal generative adversarial networks for common representation learning. ACM Trans. Multimed. Comput. Commun. Appli. (TOMM) 15, 1–24 (2019).

Zheng, X., Zhang, Y. & Lu, X. Deep balanced discrete hashing for image retrieval. Neurocomputing 403, 224–236 (2020).

Passalis, N. & Tefas, A. Deep supervised hashing using quadratic spherical mutual information for efficient image retrieval. Signal Process. Image Commun. 93, 116146 (2021).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 62076044), the Natural Science Foundation of Chongqing in China(Grant No. cstc2022ycjh-bgzxm0160), and the Chongqing Graduate Research Innovation Project in China (Grant No. CYS21307).

Author information

Authors and Affiliations

Contributions

X.W. performed the visualization and validation experiments, the data analyses and wrote the manuscript. X.Z. contributed to the conception of the study and contributed significantly to analysis and manuscript preparation. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, X., Zeng, X. Deep consistency-preserving hash auto-encoders for neuroimage cross-modal retrieval. Sci Rep 13, 2316 (2023). https://doi.org/10.1038/s41598-023-29320-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-29320-6

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.