Abstract

Clinical myoelectric prostheses lack the sensory feedback and sufficient dexterity required to complete activities of daily living efficiently and accurately. Providing haptic feedback of relevant environmental cues to the user or imbuing the prosthesis with autonomous control authority have been separately shown to improve prosthesis utility. Few studies, however, have investigated the effect of combining these two approaches in a shared control paradigm, and none have evaluated such an approach from the perspective of neural efficiency (the relationship between task performance and mental effort measured directly from the brain). In this work, we analyzed the neural efficiency of 30 non-amputee participants in a grasp-and-lift task of a brittle object. Here, a myoelectric prosthesis featuring vibrotactile feedback of grip force and autonomous control of grasping was compared with a standard myoelectric prosthesis with and without vibrotactile feedback. As a measure of mental effort, we captured the prefrontal cortex activity changes using functional near infrared spectroscopy during the experiment. It was expected that the prosthesis with haptic shared control would improve both task performance and mental effort compared to the standard prosthesis. Results showed that only the haptic shared control system enabled users to achieve high neural efficiency, and that vibrotactile feedback was important for grasping with the appropriate grip force. These results indicate that the haptic shared control system synergistically combines the benefits of haptic feedback and autonomous controllers, and is well-poised to inform such hybrid advancements in myoelectric prosthesis technology.

Similar content being viewed by others

Introduction

During volitional object manipulation, haptic sensations (proprioceptive, kinesthetic, and tactile) from the biological limb are used to make grasp corrections and update internal feedforward models of the object and environment1. This model refinement helps improve the speed and dexterity of subsequent manipulations, such that an initially hesitant interaction with an unknown or fragile object becomes smoother and more efficient with more experience2,3. Sensory information is particularly important for tuning grip forces to handle fragile or brittle objects; grip force must be great enough to counteract inertia and gravity, but not large enough to crush the object4. This haptic-informed knowledge is lost in typical upper-limb prostheses, as they do not provide sensory feedback.

For the last several decades, researchers have been attempting to restore haptic feedback in upper-limb prostheses (see 2018 review by Stephens-Fripp et al.5). In particular, significant effort has been placed on the use of mechanotactile stimulations on the skin to provide prosthesis wearers with cues like grip force, grip aperture, and object slip6,7,8. Prior research has demonstrated the benefits of haptic feedback in improving discriminative and dexterous task performance with a myoelectric prosthesis9,10,11,12. Notably, vibrotactile feedback remains a simple, yet effective, method of haptic feedback in prostheses due to its compact size and low power consumption13,14,15,16,17,18.

Despite the demonstrated benefits of haptic feedback for upper-limb prostheses, in particular for grip force modulation10,11,19, consistently controlling even standard myoelectric hands remains a challenge. In the simplest myoelectric scheme, direct control, the amount of electrical activity from an agonist-antagonist muscle pair is used to control a single degree-of-freedom prosthesis terminal device. The inherent delay between the user’s interpretation of the haptic feedback and the subsequent myoelectric command could render volitional movement too slow20, as well as cognitively demanding21,22.

To reduce the user’s cognitive burden while simultaneously improving task success, researchers have focused on embedding low-level autonomous intelligence directly on the prosthesis. These autonomous systems can react to and prevent grasping errors like object slip or excessive grasp force23,24,25,26,27. Similar techniques already enjoy commercial implementation, as is the case with the direct-control myoelectric Ottobock SensorHand Speed28 prosthesis. Beyond event-triggered autonomous systems, there are also controllers which attempt to optimize grasping performance, such as maximizing the contact area between the prosthetic hand and the object29. Similarly, controllers that predict the likely sequence of desired prehensile grips without user intervention have been proposed30,31,32.

While these autonomous control strategies supplement human control to varying degrees, they fail to provide critical sensory feedback to the user during volitional (i.e., manual) prosthesis operation. This sensory feedback could be used to update the user’s manipulation strategy and thereby improve volitional control. In turn, the autonomous controller could learn the human’s successful volitional control strategies and replicate it in subsequent manipulations, thus improving task performance and reducing cognitive effort.

Control approaches that arbitrate between haptically-guided human control and autonomous control can be described as haptic shared control. Haptic shared control techniques, for example, have been incorporated in automotive applications, where haptic feedback from the autonomous system guides the driver during navigation33,34,35,36,37. In our prior work, we developed a haptic shared control approach for an upper limb prosthesis and investigated its task performance utility in a dexterous reach-to-pick-and-place task without direct vision38. In this manuscript, we build on this prior study by investigating the extent to which haptic shared control improves neural efficiency in a dexterous task. Neural efficiency here is defined as the relationship between task performance and the mental effort required to achieve that level of performance39. We have previously shown that haptic feedback provided during volitional (i.e., manual) control of a prosthesis leads to improved neural efficiency in an object stiffness discrimination task over volitional control without haptic feedback22. Mental effort in this study was assessed using functional near infrared spectroscopy (fNIRS), a noninvasive optical brain-imaging technique40.

In the present study, we employ the same neurophysiological measurements to provide a holistic assessment of the impact of haptic shared control on task performance and cognitive load in a dexterous grasp and lift task with a brittle object, thereby requiring precise force control. In particular, we investigate participants’ neural efficiency as they perform the grasp-and-lift task with either a standard myoelectric prosthesis, a myoelectric prosthesis with vibrotactile feedback of grip force, or a myoelectric prosthesis featuring haptic shared control—that is, vibrotactile feedback of grip force and low-level autonomous control of grip force integrated through an imitation-learning paradigm. We hypothesize that haptic shared control will result in the highest neural efficiency (best task performance and lowest cognitive load) compared to the standard prosthesis, followed by the prosthesis featuring vibrotactile feedback of grip force.

Methods

Participants

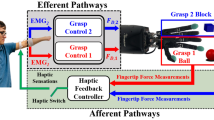

33 non-amputee participants (9 female, age 24.6 ± 3.2, 2 left-handed individuals) participated in this experimental study approved by the Johns Hopkins Medical Institute IRB (protocol #00147458). Informed consent was obtained from all participants, and all methods were performed in accordance with the relevant guidelines and regulations. Participants were pseudo-randomly assigned to one of three groups, and each group was balanced for gender. Participants in the first group completed a grasp-and-lift task using a standard myoelectric prosthesis (Standard group). Participants in the second group completed the same grasp-and-lift task using a myoelectric prosthesis with vibrotactile feedback of grip force (Vibrotactile group). Participants in the third group completed the grasp-and-lift task using a myoelectric prosthesis featuring haptic shared control (Haptic Shared Control group). Figure 1 shows one of the experimenters demonstrating the experimental setup for the Haptic Shared Control Group.

Experimental hardware

The devices used in the experiment include a mock prosthesis, vibrotactile actuator, fNIRS imaging device, and an instrumented object. Excluding the fNIRS data stream, all input and output signals were controlled through a Quanser QPIDe DAQ and QUARC real-time software in MATLAB/Simulink 2017a.

Prosthesis

The prosthesis consists of a custom thermoplastic socket that can be worn by non-amputee participants and a voluntary-closing hook-style terminal device (maximum aperture 83 mm). A Bowden cable connects the terminal device to a custom motorized linear actuator to control device opening and closing. This same prosthesis and actuator have been used and described in more detail in Thomas et al.9,22. A counterweight system was attached to the terminal device to simulate the loading conditions typically experienced by transradial amputees; it offset 500 g of the prosthesis’s 800 g mass.

The motorized linear actuator is driven in proportional, open-loop speed control mode by surface electromyography signals (sEMG) from the wrist flexor and extensor muscle groups. sEMG signals were acquired using a 16-channel Delsys Bagnoli Desktop system.

Instrumented object

Inspired by previous research41,42, an instrumented device that simulates a brittle object (\(77 \times 74 \times 139\,\text {mm}\)) was designed for the grasp-and-lift task. This object, depicted in Fig. 2, consists of a collapsible wall to signify object breakage. The object features an accelerometer to measure object movements, a magnet and Hall effect sensor to detect breaks, a 10 kg load cell to measure grip force, and a weight container to customize the object’s mass. For the present study, the mass of the object remained constant at 310 g. Conductive fabric was placed on the base of the object and surface of the testing platform to detect object lift.

Non-invasive brain imaging

Functional near infrared spectroscopy (fNIRS) utilizes near infrared light to measure cortical oxygenation changes in order to capture neural activity. Regional oxygenation concentration changes (deoxygenated and oxygenated hemoglobin and their summation total hemoglobin, total-Hb) are correlates of brain activation by oxygen consumption of neurons43,44,45,46,47. Thus, hemoglobin concentration changes are strongly linked to tissue oxygenation and metabolism. Fortuitously, the absorption spectra of oxy- and deoxy-Hb remain significantly different from each other, allowing spectroscopic separation of these compounds to be possible by using only a few sample wavelengths. fNIRS has been shown to produce similar results to other brain imaging methods, such as functional magnetic resonance imaging (fMRI)48,49and has been demonstrated in numerous prior studies50,51,52,53,54. In addition, it has better spatial resolution than electroencephalography and is also less susceptible to motion artifacts and muscle activity that may interfere with mental workload signals55. Furthermore, we have previously used fNIRS to successfully assess the effect of a myoelectric prosthesis featuring vibrotactile feedback on cognitive load in a stiffness discrimination task22. A four-optode fNIRS imager (Model 1100W; fNIR Devices, LLC, USA) was used to measure hemodynamic activity from four regions of the prefrontal cortex at a 4 Hz sampling rate. Signals were acquired and post-processed in COBI Studio (v1.5.0.51) and fnirSoft (v4.11)56. Then, a 40th order low-pass and linear-phase Finite Impulse Response (FIR) filter designed using a Hamming window and cut-off frequency of 0.1 Hz is applied to attenuate high frequency noise and physiological oscillations like heart rate and respiration rate. Then the modified Beer Lambert is applied to the filtered data to obtain the relative concentrations of hemoglobin, which are related to mental effort via neurovascular coupling40,57.

sEMG calibration and prosthesis manual control

The sEMG calibration procedure employs maximum voluntary contraction to normalize the sEMG signals between the minimum and maximum voltages of the prosthesis motor. The amplitude of wrist flexion activity is proportional to the closing speed of the prosthesis. Likewise, the amplitude of wrist extension activity is proportional to the opening speed of the prosthesis. The control equation and more details can be found in our previous study38; the only difference here is the range of voltages used to drive the current prosthesis (1.5–7 V here instead of 0.55–1.5 V in our prior work).

Vibrotactile feedback

Vibrotactile feedback of grip force was provided using a C-2 tactor (Engineering Acoustics) driven by a Syntacts amplifier (v3.1)58. The tactor was strapped to the upper-arm of the participant. Vibrotactile feedback frequency was set to 250 Hz. Vibrotactile feedback voltage \(\nu\) was proportional to the load cell voltage L from the instrumented object. As the force on the load cell increased, the amplitude of the vibration increased as shown in

The resting state of the load cell is around 4.5 V. As force is applied to the load cell, this value decreases. 4.3 V was chosen as the threshold for detecting contact on the load cell.

Haptic shared controller

The haptic shared control scheme switches between the user’s manual control (with vibrotactile feedback) of the prosthesis and an autonomous control system that attempts to mimic the user’s desired grip force. When enabled and subsequently triggered by the user, the autonomous controller independently closes the prosthesis terminal device until the user’s preset grip force is achieved.

In order to enable the controller, the user must first manually actuate the prosthesis (via sEMG) and lift the object for a minimum of one second without breaking or dropping the object. Such occurrences are identified by assessing sharp peaks in the derivative of the load cell signal, where \({\frac{dL}{dt}} > 2.5 {\frac{\text{V}}{{\text{s}}}}\) indicates a slip event, and \({\frac{dL}{dt}} > 5 {\frac{\text{V}}{{\text{s}}}}\) indicates object breakage. During this manual operation, the participant receives vibrotactile feedback of the grip force as described in the “Vibrotactile feedback” section. The average applied grip force during the one second of successful lifting is stored as the desired grip force for the shared controller. Once this value is stored, the vibrotactile feedback turns off and the blue LED on the prosthesis turns on, informing the user of the transition to autonomous control.

To activate the autonomous closing of the prosthesis terminal device, participants must generate a wrist flexion sEMG signal \(S_f\) greater than or equal to the wrist flexion threshold, \(f_L\) (without needing to sustain the activity). The closing command to the terminal device when autonomous grasping was initiated (\(h_c\)) occurred in three separate stages (\(h^1_c\), \(h^2_c\), \(h^3_c\)). First, an initial decaying signal initiated fast closing as shown in

Once the closing speed slowed below a heuristically determined threshold, the closing command was ramped up continuously until contact with the object was detected as in

where \(\frac{da}{dt}\) is the derivative of the prosthesis aperture (i.e., velocity), \(a_L\) refers to the lower velocity threshold, and \(a_U\) is the upper threshold.

Contact occurred when the load cell value L on the brittle object decreased below a threshold \(L_t\) and the aperture of the prosthesis A decreased below a threshold \(A_t\), measured by an encoder on the motor of the linear actuator. After contact, a proportional and integral controller closes the terminal device until the load cell signal is within 5% of the user’s predefined grip force \(L_{\text {d}}\) as shown in

If the autonomous control is accidentally triggered by the user, the user can activate their wrist extensors to send an “open” command, which cancels the autonomous closing. This does not disable the controller; it simply halts the autonomous closing process. If the object breaks or is dropped during the lift attempt, the autonomous controller is fully disabled, which forces the operator to then control the prosthesis in the manual mode (with haptic feedback). The controller can also be manually disabled by the user by pushing the blue LED button on the prosthesis. When the controller is disabled, the user receives a short, pulsed vibration and the LED turns off. Thus, the user will continue to stay in the autonomous mode while no grasp errors are detected, or if the user does not manually override the controller. In addition, the autonomous controller is always enabled after the user successfully lifts the object in manual mode. Signal traces for a participant using the haptic shared controller can be seen in Fig. 3.

Example signals from the first trial of a participant in the Haptic Shared Control condition as they grasped and lifted the brittle object twice. The green dashed lines indicate when the object was lifted, and the brown dotted lines indicate when the object was set down. The pink dashed lines indicate when the autonomous controller was enabled. The traces shown are sEMG flexion activity \(S_f\), closing command \(u_c\), the percent closed of the prosthesis, the load cell signal L, and the C-2 tactor vibration signal \(\nu\). The traces depict two successful grasp-and-lifts, where the first attempt was done manually with vibrotactile feedback, while the second attempt was completed using the autonomous control. Note that it is possible to identify the three stages of autonomous control (first is \(h^1_c\), the decaying command, second is \(h^2_c\), the ramping up command, and third is \(h^3_c\), the proportional integral controller command).

Experiment procedure

Before starting the experiment, each participant completed a demographics questionnaire. Next, an experimenter placed an sEMG electrode on the participant’s right wrist flexor muscle group and another on their right wrist extensor muscle group. Participants calibrated their sEMG signals using maximum voluntary contraction (MVC) of their wrist flexor and extensor muscle groups. Experimenters then placed the fNIRS imaging headset on the participant’s forehead using anatomical landmarks. The sensor pads were aligned with the vertical and horizontal symmetry axes. Care was taken to align the central markers on the left and right hemisphere sensor pads with the participant’s pupils on each hemisphere. A dark fabric band was used to cover the headset and sensor pad edges to block out any ambient light. After ensuring no hair was obstructing the sensors in the headset and confirming a comfortable fit, experimenters took baseline measurements of prefrontal cortex activity56.

While seated in front of the experimental table, participants used a GUI to complete a combined training and assessment of their sEMG signal control, modeled after the test reported in59. Participants were asked to reach and sustain three different levels of sEMG activity for five seconds at a time; each of the levels were 12.5%, 25%, and 37.5% of the user’s MVC. These values are equidistant points between 0% and 50% of MVC, which is mapped to the maximum speed of the prosthesis; these MVC percentages thus represent a reasonable range of speeds. The participant first completed one practice session for wrist flexor activity, where each of the three levels was presented once. Once practice was complete, the participant completed a test session, in which each of the three levels was presented three times. After completing the practice and testing sessions for the wrist flexor, participants repeated the same practice and test procedure for the wrist extensor.

After completing the sEMG training and assessment, participants were asked to stand to begin training for the grasp-and-lift task. If the participant was in the Vibrotactile or Haptic Shared Control group, the C-2 tactor was placed on their upper right arm. Likewise, if the participant was in the Haptic Shared Control group, the blue LED button was placed on the prosthesis. The experimenter then instructed the participant on how to close and open the prosthesis using their muscle activity. Participants were able to practice closing and opening the prosthesis until they felt comfortable. Next, the experimenter explained that the goal of the task was to grasp and lift the brittle instrumented object for three seconds without breaking or dropping it.

All participants were instructed to position the prosthesis just under a small protrusion on the collapsible wall to ensure consistent placement. Participants in the Standard group were allowed multiple attempts until they successfully lifted the object for three seconds. They were then given three more practice attempts before moving on to the actual experiment.

Participants in the Vibrotactile group were first given an overview of the feedback and were further instructed to use the feedback to find the appropriate grip force for lifting the object. They were then allowed multiple attempts until they could successfully lift the object for three seconds. Afterwards, they were given three more practice attempts before moving on to the actual experiment.

Participants in the Haptic Shared Control group were first given an overview of the shared controller, and informed about how to switch between manual and autonomous modes. They were then allowed multiple attempts until they could successfully lift the object for three seconds in the manual mode. Next, they were asked to trigger the autonomous control and lift the object (see “Haptic shared controller” section). The experimenter then demonstrated the two scenarios that automatically disabled the autonomous controller: (1) an object break, and (2) an object slip. The participant was then required to grasp and lift the object in manual mode after each demonstration in order to re-enable the autonomous controller. Finally, the experimenter demonstrated how to use the blue LED button to manually override the autonomous controller. Afterwards, the participant was allowed two more practice attempts before moving on to the actual experiment. Participants in the Haptic Shared Control group began the experiment in manual mode.

After all training had been completed, participants then completed seven one-minute trials of the grasp-and-lift task, wherein they attempted to grasp and lift the object as many times as possible within that minute without breaking or dropping the object. Participants had full view of the task. Participants were required to hold the object in the air for 3 s. A 30 s break was provided between trials.

After finishing all seven trials, participants then completed a survey regarding their subjective experience of the experiment. The questions were based on the NASA-TLX questionnaire60 and included a mix of sliding scale and short answer questions.

Metrics

The following metrics were used to analyze the three conditions from the perspective of both task performance and neural performance.

Task performance

A successful lift was defined as lifting and holding the object in the air for at least three seconds. There were no requirements for lifting height in the task. The status of each grasp attempt (successful lift or not) was recorded. In addition, the total number of successful lifts per trial was also calculated.

A safe grasping margin was defined for the instrumented object as a load cell value in the range of 3–4 V. For each grasp attempt, the 100 smallest load cell values (measured during object grasp, representing the maximum force values—refer to Fig. 3) were averaged and compared to the safe grasping interval.

Neural performance

The total concentration of hemoglobin (HbT) was used as a proxy for measuring cognitive load. The average value was extracted for each of the seven trials from four regions of the prefrontal cortex: left lateral, left medial, right medial, and right lateral.

These cognitive load measurements were combined with the total number of lifts to calculate neural efficiency as described in39. The z-scores of the number of successful lifts lasting at least 3 s without any grasp errors \(z(\text {Lift})\) and total hemoglobin concentration \(z(\text {HbT})\) were calculated to derive the neural efficiency metric as

Here, the mean and standard deviation used to calculate the z-score refer to the mean and standard deviation for all participants across all conditions. This metric describes the mental effort required to achieve a certain level of performance. A higher neural efficiency is associated with higher performance and lower cognitive load.

Survey

The post-experiment survey was a mix of sliding (0–100) and short answer questions. The sliding scale questions asked participants to rate the task’s physical demand, mental demand, and pacing. Additionally, it asked them to rate their perceived ability to complete the task, their frustration level and how much they used visual, auditory, and touch-based cues to help them complete the task. Finally, the survey prompted them to explain the strategy they employed to accomplish the task and provide any other comments about their experience.

Statistical analysis

Statistical analysis was carried out in RStudio (v4.1.0). A mixture of logistic and linear mixed models were used to assess task and neural performance. The random effects included a random intercept for the subject and a random slope for trial. Post-hoc tests were conducted with a Bonferroni correction. Model residuals were plotted and checked for homogeneity of variance and normality. A p value of 0.05 was used as the threshold for significance.

A logistic binomial mixed model was used to assess the probability of being within the safe grasping margin for each grasp attempt. The fixed effects included the trial number and the mode, where mode could be No Feedback (manual operation of the myoelectric prosthesis), Feedback (manual operation of the prosthesis with vibrotactile feedback of grip force), or Autonomous (autonomous controller operates prosthesis). The Feedback grasp attempts includes all grasp attempts from participants in the Vibrotactile group and grasp attempts from participants the Haptic Shared Control group who were manually operating the prosthesis (autonomous controller disengaged).

A separate logistic binomial mixed model was used to assess the probability of lifting the object. Individual linear mixed models were used to assess the number of lifts, the total concentration of hemoglobin for each of the four brain regions, and the neural efficiency for each of the four brain regions. The fixed effects for all models were the participant group and the trial number. For this analysis, the Vibrotactile group is separate from the Haptic Shared Control group, and does not include trials from participants in the Haptic Shared Control group manually operating the prosthesis.

Results

Three of the 33 participants who consented to participate in the study were excluded from data analysis. Of those three, one was unable to finish the experiment due to technical issues with the system. Another participant was unable to produce satisfactory sEMG signals during the calibration step, and a third participant had poor control during the experiment. This participant also exhibited poor control during the sEMG assessment, as evidenced by their high root-mean-square error during the flexion and extension sEMG assessment compared to the other participants. The following results are for the remaining 30 participants (10 in each group).

Outcome measures

Results reported for the data indicate the estimate of the fixed effects \(\beta\) and the standard error SE from linear and logistic mixed models statistical analyses. There were 531 observations for the Standard group, 522 for the Vibrotactile group, and 433 for the Haptic Shared Control group. For brevity, cognitive load results from only the right lateral prefrontal cortex will be discussed, as this region presented the most significant changes in activity. Cognitive load results from the other brain regions can be found in the Supplemental material associated with this manuscript.

Task performance

The probability of grasping the object within a safe grip force margin for each grasp attempt, where the individual data points represent the average for each trial (for all participants in each mode), and the solid lines indicate the model’s prediction. Note: the No Feedback mode refers to manual prosthesis operation, the Feedback mode refers to manual prosthesis operation with vibrotactile feedback, and the Autonomous mode refers to autonomous prosthesis operation. * indicates \(p<0.05\), ** indicates \(p<0.01\), and *** indicates \(p<0.001\).

Safe grasping margin

A binomial mixed model was used to assess the odds that a given grasp attempt was adequate for lifting the object without breaking it. Here, we compare the No Feedback, Feedback, and Autonomous modes. The No Feedback mode includes all participants in the Standard group. The Feedback mode includes participants in the Vibrotactile group as well as the Haptic Shared Control participants who were in the manual mode. The Autonomous mode includes Haptic Shared Control participants using the autonomous controller to complete the grasp-and-lift task. There were 531 observations for the No Feedback mode, 632 for the Feedback mode, and 323 for the Autonomous mode. The odds of being within a safe grasping margin were approaching a significant positive difference from 50% in the Standard mode (\(\beta =0.96, SE=0.51, p=0.06\)). However, both the Vibration mode (\(\beta =1.07, SE=0.53, p=0.045\)) and the Autonomous mode (\(\beta =2.61, SE=0.57, p<0.001\)) significantly improved the odds of being withing a safe grasping margin compared to the Standard mode. Furthermore, the Autonomous mode was significantly better than the Vibrotactile mode (\(\beta =1.55, SE=0.33, p<0.001\)) in ensuring a safe grasping margin. Experience with the task (i.e., number of trials) had no effect on the ability to grasp within the safety margin (\(\beta =-0.07, SE=0.06, p=0.22\)). See Fig. 4 for a visualization of these results. In addition to these statistical results, the number of object lifts, breaks, drops, and other grasp errors are reported for each group in Table 1. Here, other grasp errors could include attempts to lift that were neither successful nor resulted in a drop or break (e.g., the participant lifted the object and then set it down prior to the 3 s mark).

Lifting probability

A binomial mixed model was used to assess the odds that a given grasp attempt resulted in a successful lift. Here and in all subsequent results, we compare the Standard, Vibrotactile and Haptic Shared Control groups. The odds of lifting the object in the Standard group were significantly less than 50% (\(\beta =-0.96, SE=0.35, p=0.006\)). The Vibrotactile group was not better than the Standard group (\(\beta =0.30, SE=0.37, p=0.42\)). However, the Haptic Shared Control group significantly improved the probability of lifting the object compared to the Standard group (\(\beta =1.08, SE=0.36, p=0.003\)) and the Vibrotactile group (\(\beta =0.78, SE=0.37, p=0.037\)). In addition, experience with the task (i.e., number of trials) significantly improved performance across all groups (\(\beta =0.09, SE=0.04, p=0.025\)). See Fig. 5 for a visualization of these results for each group.

The probability of lifting the object for each group across trials, where the individual data points represent the average for each trial (for all participants in each group), and the solid lines indicate the model’s prediction. * indicates \(p<0.05\), ** indicates \(p<0.01\), and *** indicates \(p<0.001\).

Number of lifts

A linear mixed model was used to assess the average number of three-second lifts per trial. The average number of lifts in the Standard group was significantly higher than zero (\(\beta =1.82, SE=0.42, p<0.001\)). The Vibrotactile group was not different from the Standard group (\(\beta =0.50, SE=0.51, p=0.34\)) or the Haptic Shared Control group (\(\beta =-0.62, SE=0.51, p=0.22\)). However, the Haptic Shared Control group significantly improved the number of lifts compared to the Standard group (\(\beta =0.96, SE=0.35, p=0.006\)). In addition, experience with the task significantly improved performance across all groups (\(\beta =0.19, SE=0.04, p<0.001\)). See Fig. 6 for a visualization of these results for each group.

Neural performance

Change in average total hemoglobin concentration

The change in average total hemoglobin concentration represents the amount of cognitive load incurred. An increased concentration indicates a higher cognitive load. A linear mixed model was used to assess the hemoglobin concentration. The average total hemoglobin concentration in the right lateral prefrontal cortex was significantly higher than zero in the Standard group (\(\beta =0.71, SE=0.29, p=0.019\)). The Vibrotactile group was not significantly different from the Standard group (\(\beta =-0.10, SE=0.37, p=0.77\)). Similarly, the Haptic Shared Control group was not significantly different from the Standard group (\(\beta =-0.65, SE=0.37, p=0.086\)). Experience with the task was close to significantly improving the cognitive load (reducing total hemoglobin concentration: \(\beta =-0.05, SE=0.03, p=0.068\)). See Fig. 7 for a visualization of these results for each group.

Neural efficiency

The neural efficiency indicates the relationship between mental effort and performance. Positive neural efficiency indicates values higher than the neural efficiency grand mean across all conditions, while negative neural efficiency indicates values lower than the grand mean. A linear mixed model was used to assess neural efficiency. The neural efficiency in the Standard group was significantly less than zero (\(\beta =-0.90, SE=0.30, p=0.005\)). The Vibrotactile group was not significantly different from the Standard group (\(\beta =0.33, SE=0.37, p=0.38\)) or the Haptic Shared Control group (\(\beta =-0.58, SE=0.37, p=0.12\)). However, the neural efficiency in Haptic Shared Control group was significantly greater than in the Standard group (\(\beta =0.91, SE=0.37, p=0.021\)). In addition, experience with the task improved neural efficiency overall (\(\beta =0.12, SE=0.03, p<0.001\)). See Fig. 8 for a visualization of these results for each group.

Survey

A linear regression model was used to analyze the survey results. Participants in the Standard group provided ratings for all survey questions that were significantly different from 0 (see Table 2 for complete results). The survey responses only significantly differed by group for the following few cases. Participants in the Vibrotactile group rated their use of visual cues as significantly less than the Standard group, and in a post-hoc test with a Bonferroni correction, also less than the Haptic Shared Control group (\(\beta =-25.6, SE~=~8.21, p=0.002\)). In a post-hoc test with a Bonferroni correction, participants in the Haptic Shared Control group rated their use of somatosensory cues as significantly lower than those in the Vibrotactile group (\(\beta ~=~-29.2\), SE = 12.45, p = 0.02).

Discussion

Haptic shared control approaches have been utilized in several human–robot interaction applications with success34,37; yet, investigations regarding its effectiveness in upper-limb prostheses has been lacking. Furthermore, it is not well understood how a haptic shared control approach affects the human operator’s cognitive load and their neural efficiency. To address this gap, we developed a haptic shared control approach for a myoelectric prosthesis and holistically assessed it with both task performance and neurophysiological cognitive load metrics. Through this assessment, it was possible to understand the level of mental effort required to reach a certain level of performance. We compared this control scheme to the standard myoelectric prosthesis and a prosthesis with vibrotactile feedback of grip force in a grasp-and-lift task with a brittle object. The haptic shared control scheme arbitrated between haptically guided control of prosthesis grasping and complete autonomous control of grasping61. Here, the autonomous control replicated the human operator’s desired grasping strategy in an imitation-learning paradigm.

The primary results indicate that participants in the Haptic Shared Control group exhibited greater neural efficiency—higher task performance with similar mental effort—compared to their counterparts in the Standard group. Furthermore, vibrotactile feedback in general was instrumental in appropriately tuning grip force, which is consistent with prior literature10,11,19. This benefit combined with the improved dexterity afforded by the autonomous grasp controller substantially improved lifting ability and grip force tuning with the haptic shared control scheme compared to both the Standard and Vibrotactile control schemes.

Despite the reported benefits of haptic feedback in dexterous task performance and mental effort reduction22,62,63, vibrotactile feedback alone was not able to significantly improve lifting ability and neural efficiency compared to Standard control in this study. These results agree with findings from previous investigations on the effect of haptic feedback on grasp-and-lift of a brittle object41,42. This is likely due to the fact that feedback can only inform users of task milestones and task errors after they have occurred. In human sensorimotor control, feedforward control serves to complement feedback strategies by making predictions that guide motor action64.

Thus, for our difficult dexterous task, feedback control strategies alone were insufficient, and had to be supplemented with a feedforward understanding of the appropriate grip force necessary to grasp and lift the fragile object. Once trained, the autonomous controller offloads this burden of myoelectric feedforward control from the user, which results in a marked improvement in both performance and mental effort. This haptic shared control concept leverages the strengths of the human operator’s knowledge of the task requirements and subsequently utilizes this experience in tuning the autonomous controller. The use of the brittle object in this experiment highlights the need for advanced control schemes in prostheses, given that only the haptic shared control outperformed the standard prosthesis in terms of more appropriate grasp forces, successful lifts, and improved neural efficiency. This finding aligns with the stated benefits of haptic shared control in other human–machine interaction paradigms such as semi-autonomous vehicles and teleoperation35,36.

Due to the nature of the haptic shared control scheme in this study, participants in the Haptic Shared Control group had much less experience with the vibration feedback compared to participants in the Vibrotactile group over the course of the experimental session. Indeed, participants in the Haptic Shared Control group reported significantly less use of somatosensory cues than those in the Vibrotactile group. Thus, it is possible that the boundary between human and machine could become more seamless with additional training on the vibrotactile feedback. Other studies have shown that longer-term and extended training with haptic feedback significantly improved performance15,19.

It is worth noting here that the auditory cues from the vibrotactile actuator were likely utilized by some participants. Two participants explicitly mentioned that the sound of the tactor was as or even more salient than the tactile sensation itself. Previous research has shown that reaction time decreases with the combination of the tactile and auditory cues from vibrotactile feedback compared to the tactile cues alone65. In addition, it has also been shown that the combination of redundant, multi-modality feedback improves reaction times compared to unimodal feedback66. This type of incidental feedback is not limited to the audio-tactile cues emanating from the vibrotactor; the sounds generated by the movement of the prosthesis motor were also used by several participants across conditions. Although incidental feedback has been demonstrated to assist in dexterous tasks67, it is not enough to achieve the best performance in a task requiring quick and accurate grasp force.

Although we demonstrated the success of the haptic shared control scheme, the present study has some limitations. Only non-amputee participants were evaluated, and the task was conducted with a manufactured object, rather than everyday items. Given that the collapsible wall was a hinged mechanism, the force required to break the object could vary depending on the prosthesis placement against the wall (e.g., more force is required closer to the hinge joint compared to the top of the wall). This was partially accounted for by instructing participants to place the prosthesis underneath a visual marker on the wall. Nevertheless, it is still possible that inconsistent placement could result in inappropriate forces being applied by the autonomous controller. Furthermore, the high amount of visual focus required to achieve this placement may have resulted in similar mental effort levels across groups, as measured both by fNIRS (Fig. 7) and the survey. That the survey results showed no differences in mental effort may be explained by the notion that subjective ratings with a low sample size may not be as sensitive to mental effort changes. The present study did not include a condition involving shared control without any haptic feedback. Although such a system can be tested, careful consideration should be given to the method of communicating to the user which operating mode they are in without overburdening their visual or auditory senses. Based on our prior work38, we would expect that the haptic shared-control approach will lead to improved neural-efficiency over the shared-control approach without haptic feedback.

Future work to realize the haptic shared control concept clinically should involve verifying these present results with amputee participants and with a wider range of activities and types of objects, including real-life brittle and fragile objects. In addition, the utility of the haptic shared control system should be assessed longitudinally to understand its impact on neural efficiency and direct myoelectric control. It would also be worth evaluating the extent of learning and fatigue during long-term use of the haptic shared control as compared to the standard and vibrotactile feedback conditions. A further expansion to the autonomous system includes the ability to recognize object types in order to facilitate switching between different objects and tasks. Moreover, other approaches to haptic shared control which involve a more seamless and adaptive arbitration between volitional and autonomous control can be developed and tested. Finally, because the autonomous control can affect a user’s sense of agency, embodiment may be affected67,68. Future investigations with the haptic shared control should consider incorporating evaluations of embodiment, such as proprioceptive drift69 and embodiment questionnaires70.

Existing approaches to shared control within prosthetic systems have focused on supplementing human manual control of the prosthesis with autonomous systems29,32. These systems do not incorporate haptic feedback, and thus leave the user out of the loop. In contrast, the present study integrates haptic feedback with an autonomous controller in an imitation-learning paradigm, where the autonomous control replicates the desired grasping strategy of the human. Such a system can be expanded and generalized further to facilitate other types of human–robot interaction, such as robotic surgery and human–robot cooperation.

In summary, our results demonstrate that fNIRS can be used to assess cognitive load and neural efficiency in a complex, dynamic task conducted with a myoelectric prosthesis, and that a haptic shared control strategy in a myoelectric prosthesis ensures good task performance while incurring low cognitive burden. This is accomplished by the system’s individual components (vibrotactile feedback and the imitation-learning controller), whose benefits combine synergistically to optimize performance. These results support the need for hybrid systems in bionic prosthetics to maximize neural and dexterous performance.

Data availibility

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Johansson, R. S. & Westling, G. Roles of glabrous skin receptors and sensorimotor memory in automatic control of precision grip when lifting rougher or more slippery objects. Exp. Brain Res. 56, 550–564. https://doi.org/10.1007/BF00237997 (1984).

Gordon, A. M., Westling, G., Cole, K. J. & Johansson, R. S. Memory representations underlying motor commands used during manipulation of common and novel objects. J. Neurophysiol. 69, 25 (1993).

Johansson, R. S. & Cole, K. J. Sensory-motor coordination during grasping and manipulative actions. Curr. Opin. Neurobiol. 2, 815–823. https://doi.org/10.1016/0959-4388(92)90139-C (1992).

Gorniak, S. L., Zatsiorsky, V. M. & Latash, M. L. Manipulation of a fragile object. Exp. Brain Res. Exp. Hirnforschung Exp. Cerebrale 202, 413. https://doi.org/10.1007/S00221-009-2148-Z (2010).

Stephens-Fripp, B., Alici, G. & Mutlu, R. A review of non-invasive sensory feedback methods for transradial prosthetic hands. IEEE Access 6, 6878–6899. https://doi.org/10.1109/ACCESS.2018.2791583 (2018).

Witteveen, H. J. B., Droog, E. A., Rietman, J. S. & Veltink, P. H. Vibro- and electrotactile user feedback on hand opening for myoelectric forearm prostheses. IEEE Trans. Biomed. Eng. 59, 2219–2226. https://doi.org/10.1109/TBME.2012.2200678 (2012).

Antfolk, C. et al. Artificial redirection of sensation from prosthetic fingers to the phantom hand map on transradial amputees: Vibrotactile versus mechanotactile sensory feedback. IEEE Trans. Neural Syst. Rehabil. Eng. 21, 112–120. https://doi.org/10.1109/TNSRE.2012.2217989 (2013).

Damian, D. D., Fischer, M., Hernandez Arieta, A. & Pfeifer, R. The role of quantitative information about slip and grip force in prosthetic grasp stability. Adv. Robot. 32, 12–24. https://doi.org/10.1080/01691864.2017.1396250 (2018).

Thomas, N., Ung, G., McGarvey, C. & Brown, J. D. Comparison of vibrotactile and joint-torque feedback in a myoelectric upper-limb prosthesis. J. Neuroeng. Rehabil. 16, 70. https://doi.org/10.1186/s12984-019-0545-5 (2019).

Kim, K. et al. Haptic feedback enhances grip force control of sEMG-controlled prosthetic hands in targeted reinnervation amputees. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 798–805. https://doi.org/10.1109/TNSRE.2012.2206080 (2012).

Rosenbaum-Chou, T., Daly, W., Austin, R., Chaubey, P. & Boone, D. A. Development and real world use of a vibratory haptic feedback system for upper-limb prosthetic users. J. Prosth. Orthot. 28, 136–144. https://doi.org/10.1097/JPO.0000000000000107 (2016).

Abd, M. A., Ingicco, J., Hutchinson, D. T., Tognoli, E. & Engeberg, E. D. Multichannel haptic feedback unlocks prosthetic hand dexterity. Sci. Rep. 12, 1–17. https://doi.org/10.1038/s41598-022-04953-1 (2022).

Raveh, E., Friedman, J. & Portnoy, S. Evaluation of the effects of adding vibrotactile feedback to myoelectric prosthesis users on performance and visual attention in a dual-task paradigm Article. Clin. Rehabil. 32, 1308–1316. https://doi.org/10.1177/0269215518774104 (2018).

Raveh, E., Portnoy, S. & Friedman, J. Adding vibrotactile feedback to a myoelectric-controlled hand improves performance when online visual feedback is disturbed. Hum. Mov. Sci. 58, 32–40. https://doi.org/10.1016/J.HUMOV.2018.01.008 (2018).

Stepp, C. E., An, Q. & Matsuoka, Y. Repeated training with augmentative vibrotactile feedback increases object manipulation performance. PLoS One 7, e32743. https://doi.org/10.1371/journal.pone.0032743 (2012).

Bark, K., Wheeler, J. W., Premakumar, S. & Cutkosky, M. R. Comparison of skin stretch and vibrotactile stimulation for feedback of proprioceptive information. In Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, 2008. Haptics 2008, 71–78. https://doi.org/10.1109/HAPTICS.2008.4479916 (IEEE, 2008).

Blank, A., Okamura, A. M. & Kuchenbecker, K. J. Identifying the role of proprioception in upper-limb prosthesis control. ACM Trans. Appl. Percept. 7, 1–23. https://doi.org/10.1145/1773965.1773966 (2010).

D’Alonzo, M. & Cipriani, C. Vibrotactile sensory substitution elicits feeling of ownership of an Alien hand. PLoS One 7, e50756. https://doi.org/10.1371/journal.pone.0050756 (2012).

Clemente, F., D’Alonzo, M., Controzzi, M., Edin, B. B. & Cipriani, C. Non-invasive, temporally discrete feedback of object contact and release improves grasp control of closed-loop myoelectric transradial prostheses. IEEE Trans. Neural Syst. Rehabil. Eng. 24, 1314–1322. https://doi.org/10.1109/TNSRE.2015.2500586 (2016).

Engeberg, E. D. & Meek, S. G. Adaptive sliding mode control of grasped object slip for prosthetic hands. In 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, 4174–4179. https://doi.org/10.1109/IROS.2011.6094500 (IEEE, 2011).

Jimenez, M. C. & Fishel, J. A. Evaluation of force, vibration and thermal tactile feedback in prosthetic limbs. In Proceedings of IEEE Haptics Symposium (HAPTICS), 437–441. https://doi.org/10.1109/HAPTICS.2014.6775495 (2014).

Thomas, N., Ung, G., Ayaz, H. & Brown, J. D. Neurophysiological evaluation of haptic feedback for myoelectric prostheses. IEEE Trans. Human Mach. Syst. 51, 253–264. https://doi.org/10.1109/THMS.2021.3066856 (2021).

Salisbury, L. L. & Colman, A. B. A mechanical hand with automatic proportional control of prehension. Med. Biol. Eng. 5, 505–511. https://doi.org/10.1007/BF02479145 (1967).

Chappell, P. H., Nightingale, J. M., Kyberd, P. J. & Barkhordar, M. Control of a single degree of freedom artificial hand. J. Biomed. Eng. 9, 273–277. https://doi.org/10.1016/0141-5425(87)90013-6 (1987).

Nightingale, J. M. Microprocessor control of an artificial arm. J. Microcomput. Appl. 8, 167–173. https://doi.org/10.1016/0745-7138(85)90015-6 (1985).

Matulevich, B., Loeb, G. E. & Fishel, J. A. Utility of contact detection reflexes in prosthetic hand control. Proceedings of IEEE International Conference on Intelligent Robots and Systems 4741–4746. https://doi.org/10.1109/IROS.2013.6697039 (2013).

Osborn, L., Kaliki, R. R., Soares, A. B. & Thakor, N. V. Neuromimetic event-based detection for closed-loop tactile feedback control of upper limb prostheses. IEEE Trans. Haptics 9, 196–206. https://doi.org/10.1109/TOH.2016.2564965 (2016).

Ottobock. SensorHand Speed.

Zhuang, K. Z. et al. Shared human–robot proportional control of a dexterous myoelectric prosthesis. Nat. Mach. Intell. 1, 400–411. https://doi.org/10.1038/s42256-019-0093-5 (2019).

Edwards, A. L. et al. Application of real-time machine learning to myoelectric prosthesis control: A case series in adaptive switching. Prosthet. Orthot. Int. 40, 573–581. https://doi.org/10.1177/0309364615605373 (2016).

Mouchoux, J. et al. Artificial perception and semiautonomous control in myoelectric hand prostheses increases performance and decreases effort. IEEE Trans. Robot. 37, 1298–1312. https://doi.org/10.1109/TRO.2020.3047013 (2021).

Mouchoux, J., Bravo-Cabrera, M. A., Dosen, S., Schilling, A. F. & Markovic, M. Impact of shared control modalities on performance and usability of semi-autonomous prostheses. Front. Neurorobot. 15, 172. https://doi.org/10.3389/FNBOT.2021.768619/BIBTEX (2021).

Benloucif, A., Nguyen, A. T., Sentouh, C. & Popieul, J. C. Cooperative trajectory planning for haptic shared control between driver and automation in highway driving. IEEE Trans. Ind. Electron. 66, 9846–9857. https://doi.org/10.1109/TIE.2019.2893864 (2019).

Luo, R. et al. A workload adaptive haptic shared control scheme for semi-autonomous driving. Accident Anal. Prev. 152, 105968. https://doi.org/10.1016/J.AAP.2020.105968 (2021).

Lazcano, A. M., Niu, T., Carrera Akutain, X., Cole, D. & Shyrokau, B. MPC-based haptic shared steering system: A driver modeling approach for symbiotic driving. IEEE/ASME Trans. Mech. 26, 1201–1211. https://doi.org/10.1109/TMECH.2021.3063902 (2021).

Zhang, D., Tron, R. & Khurshid, R. P. Haptic feedback improves human–robot agreement and user satisfaction in shared-autonomy teleoperation. Proceedings—IEEE International Conference on Robotics and Automation 2021-May, 3306–3312. https://doi.org/10.1109/ICRA48506.2021.9560991 (2021).

Selvaggio, M., Cacace, J., Pacchierotti, C., Ruggiero, F. & Giordano, P. R. A shared-control teleoperation architecture for nonprehensile object transportation. IEEE Trans. Robot. 38, 569–583. https://doi.org/10.1109/TRO.2021.3086773 (2022).

Thomas, N., Fazlollahi, F., Kuchenbecker, K. J. & Brown, J. D. The utility of synthetic reflexes and haptic feedback for upper-limb prostheses in a dexterous task without direct vision. IEEE Trans. Neural Syst. Rehabil. Eng.https://doi.org/10.1109/TNSRE.2022.3217452 (2022).

Curtin, A. & Ayaz, H. Neural efficiency metrics in neuroergonomics: Theory and applications. In Neuroergonomics Vol. 22 (eds Ayaz, H. & Dehais, F.) 133–140 (Academic Press, ***, 2019). https://doi.org/10.1016/B978-0-12-811926-6.00022-1.

Ayaz, H. et al. Optical brain monitoring for operator training and mental workload assessment. Neuroimage 59, 36–47. https://doi.org/10.1016/J.NEUROIMAGE.2011.06.023 (2012).

Meek, S. G., Jacobsen, S. C. & Goulding, P. P. Extended physiologic taction: Design and evaluation of a proportional force feedback system. J. Rehabil. Res. Dev. 26, 53–62 (1989).

Brown, J. D. et al. An exploration of grip force regulation with a low-impedance myoelectric prosthesis featuring referred haptic feedback. J. Neuroeng. Rehabil. 12, 104. https://doi.org/10.1186/s12984-015-0098-1 (2015).

Ogawa, S., Lee, T. M., Kay, A. R. & Tank, D. W. Brain magnetic resonance imaging with contrast dependent on blood oxygenation. Proc. Natl. Acad. Sci. 87, 9868–9872. https://doi.org/10.1073/PNAS.87.24.9868 (1990).

Villringer, A., Planck, J., Hock, C., Schleinkofer, L. & Dirnagl, U. Near infrared spectroscopy (NIRS): A new tool to study hemodynamic changes during activation of brain function in human adults. Neurosci. Lett. 154, 101–104. https://doi.org/10.1016/0304-3940(93)90181-J (1993).

Villringer, A. & Chance, B. Non-invasive optical spectroscopy and imaging of human brain function. Trends Neurosci. 20, 435–442. https://doi.org/10.1016/S0166-2236(97)01132-6 (1997).

Obrig, H. et al. Near-infrared spectroscopy: Does it function in functional activation studies of the adult brain?. Int. J. Psychophysiol. 35, 125–142. https://doi.org/10.1016/S0167-8760(99)00048-3 (2000).

Heeger, D. J. & Ress, D. What does fMRI tell us about neuronal activity?. Nat. Rev. Neurosci. 3, 142–151. https://doi.org/10.1038/NRN730 (2002).

Fishburn, F. A., Norr, M. E., Medvedev, A. V. & Vaidya, C. J. Sensitivity of fNIRS to cognitive state and load. Front. Human Neurosci.https://doi.org/10.3389/fnhum.2014.00076 (2014).

Liu, Y. et al. Measuring speaker-listener neural coupling with functional near infrared spectroscopy. Sci. Rep. 7, 43293. https://doi.org/10.1038/srep43293 (2017).

Ayaz, H. et al. Continuous monitoring of brain dynamics with functional near infrared spectroscopy as a tool for neuroergonomic research: Empirical examples and a technological development. Front. Hum. Neurosci. 7, 871. https://doi.org/10.3389/fnhum.2013.00871 (2013).

Perrey, S. Possibilities for examining the neural control of gait in humans with fNIRS. Front. Physiol.https://doi.org/10.3389/fphys.2014.00204 (2014).

Mirelman, A. et al. Increased frontal brain activation during walking while dual tasking: An fNIRS study in healthy young adults. J. NeuroEng. Rehabil.https://doi.org/10.1186/1743-0003-11-85 (2014).

Gateau, T., Ayaz, H. & Dehais, F. In silico vs over the clouds: On-the-fly mental state estimation of aircraft pilots, using a functional near infrared spectroscopy based passive-BCI. Front. Human Neurosci. 12, 187. https://doi.org/10.3389/fnhum.2018.00187 (2018).

Ayaz, H. et al. Optical imaging and spectroscopy for the study of the human brain: Status report. Neurophotonicshttps://doi.org/10.1117/1.NPH.9.S2.S24001 (2022).

Biessmann, F., Plis, S., Meinecke, F. C., Eichele, T. & Muller, K.-R. Analysis of multimodal neuroimaging data. IEEE Rev. Biomed. Eng. 4, 26–58. https://doi.org/10.1109/RBME.2011.2170675 (2011).

Ayaz, H. et al. Using MazeSuite and functional near infrared spectroscopy to study learning in spatial navigation. J. Vis. Exp.https://doi.org/10.3791/3443 (2011).

Kuschinsky, W. Coupling of function, metabolism, and blood flow in the brain. Neurosurg. Rev. 14, 163–168. https://doi.org/10.1007/BF00310651 (1991).

Pezent, E., Cambio, B. & Ormalley, M. K. Syntacts: Open-source software and hardware for audio-controlled haptics. IEEE Trans. Haptics 14, 225–233. https://doi.org/10.1109/TOH.2020.3002696 (2021).

Prahm, C., Kayali, F., Sturma, A. & Aszmann, O. PlayBionic: Game-based interventions to encourage patient engagement and performance in prosthetic motor rehabilitation. PM &R 10, 1252–1260. https://doi.org/10.1016/J.PMRJ.2018.09.027 (2018).

Hart, S. G. & Staveland, L. E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Adv. Psychol. 52, 139–183. https://doi.org/10.1016/S0166-4115(08)62386-9 (1988).

Losey, D. P., McDonald, C. G., Battaglia, E. & O’Malley, M. K. A review of intent detection, arbitration, and communication aspects of shared control for physical human–robot interaction. Appl. Mech. Rev. 70, 010804. https://doi.org/10.1115/1.4039145 (2018).

Zhou, M., Jones, D. B., Schwaitzberg, S. D. & Cao, C. G. L. Role of haptic feedback and cognitive load in surgical skill acquisition. In Proceedings of the Human Factors and Ergonomics Society, 631–635 (2007).

Cao, C. G., Zhou, M., Jones, D. B. & Schwaitzberg, S. D. Can surgeons think and operate with haptics at the same time?. J. Gastrointest. Surg. 11, 1564–1569. https://doi.org/10.1007/S11605-007-0279-8/FIGURES/5 (2007).

Schwartz, A. B. Movement: How the brain communicates with the world. Cell 164, 1122–1135. https://doi.org/10.1016/j.cell.2016.02.038 (2016).

Bao, T. et al. Vibrotactile display design: Quantifying the importance of age and various factors on reaction times. PLoS One 14, e0219737. https://doi.org/10.1371/JOURNAL.PONE.0219737 (2019).

Diederich, A. & Colonius, H. Bimodal and trimodal multisensory enhancement: Effects of stimulus onset and intensity on reaction time. Percept. Psychophys. 66, 1388–1404. https://doi.org/10.3758/BF03195006 (2004).

Sensinger, J. W. & Dosen, S. A review of sensory feedback in upper-limb prostheses from the perspective of human motor control. Front. Neurosci. 14, 345. https://doi.org/10.3389/fnins.2020.00345 (2020).

Botvinick, M. & Cohen, J. Rubber hands ‘feel’ touch that eyes see. Nature 391, 756–756. https://doi.org/10.1038/35784 (1998).

Zbinden, J., Lendaro, E. & Ortiz-Catalan, M. Prosthetic embodiment: Systematic review on definitions, measures, and experimental paradigms. J. NeuroEng. Rehabil. 19, 1–16. https://doi.org/10.1186/S12984-022-01006-6 (2022).

Bekrater-Bodmann, R. Perceptual correlates of successful body-prosthesis interaction in lower limb amputees: Psychometric characterisation and development of the Prosthesis Embodiment Scale. Sci. Rep.https://doi.org/10.1038/S41598-020-70828-Y (2020).

Acknowledgements

We would like to thank Garrett Ung for designing and constructing the instrumented object. Thanks also to Leah Jager for providing her statistical consulting services. We also thank the National Science Foundation for funding the first author through a Graduate Research Fellowship.

Author information

Authors and Affiliations

Contributions

N.T. conceived the experimental design and integrated the hardware and software to run the experiment. N.T. and A.M. ran the user study. N.T. analyzed the data. H.A. advised on the experimental design, use of fNIRS and statistical analyses, and J.D.B. advised on the general experiment and statistical analyses. N.T. wrote the manuscript draft. All authors edited the manuscript and approve its submission.

Corresponding author

Ethics declarations

Competing interests

fNIR Devices, LLC manufactures the optical brain imaging instrument and licensed IP and know-how from Drexel University. Dr. Ayaz was involved in the technology development and thus offered a minor share in the startup firm fNIR Devices. The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. All other authors have no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Thomas, N., Miller, A.J., Ayaz, H. et al. Haptic shared control improves neural efficiency during myoelectric prosthesis use. Sci Rep 13, 484 (2023). https://doi.org/10.1038/s41598-022-26673-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-26673-2

This article is cited by

-

Muscle Oximetry in Sports Science: An Updated Systematic Review

Sports Medicine (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.