Abstract

Load forecasting provides effective and reliable guidance for power system construction and grid operation, and power utilities need accurate forecasts of upcoming energy demand. Advanced machine learning methods can support load forecasting effectively, and extreme gradient boosting is an algorithm with great research potential. However, little research treats the energy time series itself as the only (internal) variable, especially regarding feature engineering for univariate time series. Hyperparameter tuning is another issue when applying boosting methods to energy demand, and it can have a more significant effect than improving the core of the model. We take the extreme gradient boosting algorithm as the original model and combine it with the Tree-structured Parzen Estimator method to design the TPE-XGBoost model for high-performance single-lag power load forecasting. We resample the power load data of the Île-de-France regional grid provided by Réseau de Transport d’Électricité to daily values, train and optimise the TPE-XGBoost model on samples from 2016 to 2018, and test and evaluate it on samples from 2019. The optimal window width of the time series data is determined through the Discrete Fourier Transform and Pearson Correlation Coefficient methods, and five additional date features are introduced to complete feature engineering. Over 500 iterations, TPE optimisation determines the values of nine XGBoost hyperparameters and clearly improves the model. On the 2019 dataset, the TPE-XGBoost model achieves MAE = 166.020 and MAPE = 2.61%. Compared with the original model, the two metrics improve by 14.23 and 14.14% respectively; compared with eight other machine learning algorithms, the model also achieves the best metrics.


Introduction

Load forecasting is a technique used by energy utilities to predict the electrical power needed to keep demand and supply in equilibrium1. It provides a reference for the daily operation of regional power grids and for drawing up dispatching plans. Based on load forecasts, dispatchers can coordinate the output of each power plant, maintain the balance between supply and demand, and keep the grid stable. Forecasts also determine the start-stop schedule of generator sets, reducing redundant reserve capacity and power generation costs2,3. Time series forecasting with machine learning applies a model that learns automatically from previously observed values to predict future values. In recent years, power load forecasting with machine learning has shown broad research prospects, since power load is a special sequence with stable data sources from grid operators and energy utilities. Muzumdar et al. proposed a hybrid model for consumer short-term load forecasting that uses random forest, support vector regression and long short-term memory as base predictors to handle the varying traits of energy consumption4. Deng et al. proposed a Bagging-XGBoost short-term load forecasting model that can warn of the time period and the detailed value of peak load at distribution transformers5. Chen et al. proposed a short-term load forecasting framework that integrates a boosting algorithm and combines a hybrid multistep method with single-step forecasting6. Tan et al. proposed a hybrid ensemble learning model based on Long Short-Term Memory (LSTM) networks for ultra-short-term industrial power load forecasting7. Xian et al. proposed a multi-space collaboration (MSC) framework for optimal model selection that adapts well to larger candidate parameter domains8,9.

From a technical point of view, regional power grid load has stronger periodic stability than smaller systems such as bus systems, but weaker periodicity than larger national systems2. From an application point of view, regional power grids are the smallest node units in the interconnection and trading of national or cross-border grids, so load forecasting for regional grids has practical value. From the perspective of machine learning algorithm development, the core models, especially boosting ensemble algorithms, are already mature and powerful in theory. However, the feature engineering of the dataset and the hyperparameter tuning of the model strongly affect the results in application. These two aspects are interdependent, and there is little research on boosting methods for time series without external variables; in some applications external variables carry more weight, which masks the effect of the time series itself on forecast performance10.

Gradient boosting is a state-of-the-art machine learning algorithm, and Extreme Gradient Boosting (XGBoost), developed by Tianqi Chen in 2014, is one of its most widely applied implementations. Because of its excellent performance on regression and classification problems, it is often recommended as the first choice in industrial and Internet applications and is even built into machine learning platforms. However, applying it to load forecasting still poses challenges. First, XGBoost relies on many hyperparameters that must be tuned during model building, and reasonable hyperparameters directly determine the final prediction quality; an optimisation algorithm that balances both data characteristics and model characteristics is therefore vital11,12. Second, when a time series is transformed into a general supervised regression problem, it is difficult to construct data that carry historical memory while ensuring the trained model generalises sufficiently13,14. These two issues are key to applying XGBoost, and indeed any machine learning algorithm, to load forecasting and other time series tasks.

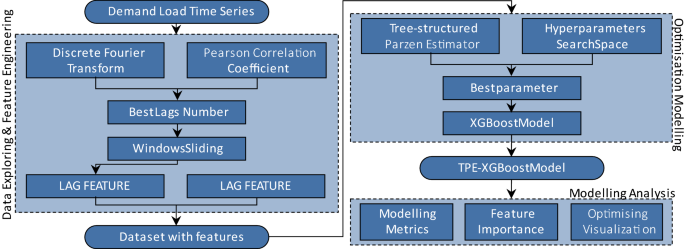

In this study, (1) we explored regional power grid demand load data for the Île-de-France region of France, determined the best sliding-window width for the machine learning model using the Discrete Fourier Transform and Pearson Correlation Coefficient methods, and added five date features to the dataset during feature engineering. (2) We designed the TPE-XGBoost algorithm by combining the Tree-structured Parzen Estimator method with the Extreme Gradient Boosting model. Compared with the original unoptimised model and eight other machine learning algorithms, our proposed model effectively improves the prediction performance for power demand load forecasting on an independent testing dataset. (3) We evaluated the TPE-XGBoost model and discussed in detail the feature engineering of the dataset and the effect of TPE optimisation on XGBoost modelling.

Material and methods

Load forecasting dataset and data exploration

Île-de-France (literally "Isle of France") is one of the 13 administrative regions of mainland France and is the capital region around Paris. Its average temperature is 11 °C and its average precipitation is 600 mm. Île-de-France is the most densely populated region of France. According to the 2019 report, the region provides a quarter of France's total employment, of which the tertiary sector accounts for nearly nine-tenths. Agriculture, forests and natural areas cover nearly 80% of its surface. The region is also France's leading industrial zone, with sectors including electronics and ICT, aviation, biotechnology, finance, mobility, automotive, pharmaceuticals and aerospace.

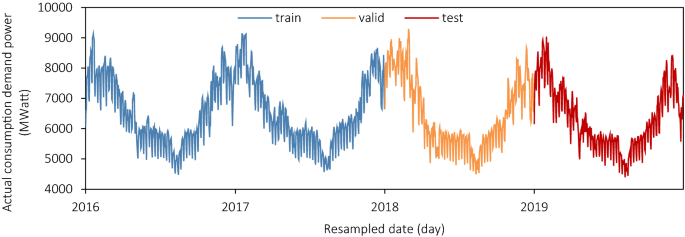

We analysed the power load in the Île-de-France region sampled every 30 min, a total of 70,128 records over four years from 2016 to 2019. The data come from the éCO2mix API provided by RTE (Réseau de Transport d'Électricité)15. The original data were resampled to the daily maximum power, giving 1461 records, shown in Fig. 1, where the y-axis is the real-time demand load power (unit: MW). As Fig. 1 shows, the yearly trends of the series are similar and clearly periodic; each cycle is V-shaped with visible seasonality. Owing to the characteristics of power load and the region's actual situation, the trend of each cycle is stable, with no apparent growth or decay.
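The resampling step can be sketched with pandas as below. This is a minimal sketch, assuming the half-hourly loads are already in a Series with a DatetimeIndex; the éCO2mix download code and field names are not given in the text, so the synthetic values and the name `load_mw` are placeholders.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the 30-min Île-de-France load (NOT real RTE data).
index = pd.date_range("2016-01-01 00:00", "2019-12-31 23:30", freq="30min")
rng = np.random.default_rng(0)
load_30min = pd.Series(8000 + 1000 * rng.standard_normal(len(index)),
                       index=index, name="load_mw")

# Keep the maximum of the 48 half-hourly values in each calendar day.
daily_max = load_30min.resample("D").max()

print(len(load_30min), len(daily_max))
```

Because the four years include the 2016 leap year, the sketch yields 70,128 half-hourly records and 1,461 daily maxima, matching the counts above.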

The dataset collected from éCO2mix is divided into three parts, shown in Fig. 1: the training dataset (2016 and 2017, blue), the validation dataset (2018, orange) and the testing dataset (2019, red). The training dataset is used to build the models, the validation dataset provides the evaluation target for optimisation, and the testing dataset checks the models' performance on several metrics. To avoid data leakage, data exploration of the time series is carried out only on the 2018 validation dataset.
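The year-based split can be expressed as contiguous date slices, with no shuffling, so that no future values leak into training. A minimal sketch, assuming a daily Series indexed by date; `chronological_split` is a hypothetical helper and the values are placeholders.

```python
import numpy as np
import pandas as pd

def chronological_split(daily: pd.Series):
    """Split by calendar year: train 2016-2017, validation 2018, test 2019."""
    train = daily.loc["2016-01-01":"2017-12-31"]
    valid = daily.loc["2018-01-01":"2018-12-31"]
    test = daily.loc["2019-01-01":"2019-12-31"]
    return train, valid, test

days = pd.date_range("2016-01-01", "2019-12-31", freq="D")
daily = pd.Series(np.arange(len(days), dtype=float), index=days)  # placeholder values
train, valid, test = chronological_split(daily)
print(len(train), len(valid), len(test))
```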

Table 1 lists the additional date features that feature engineering adds when transforming the time series into a supervised learning dataset for machine learning16.
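Date features of this kind can be derived directly from the DatetimeIndex. Table 1 is not reproduced here, so the five features below (day of week, weekend flag, day of month, day of year, month) are a hypothetical choice for illustration, as is the helper name `add_date_features`.

```python
import pandas as pd

def add_date_features(frame: pd.DataFrame) -> pd.DataFrame:
    """Append five calendar features derived from the frame's DatetimeIndex.

    Hypothetical feature set: the paper's Table 1 defines the actual five.
    """
    out = frame.copy()
    idx = out.index
    out["day_of_week"] = idx.dayofweek                   # 0 = Monday ... 6 = Sunday
    out["is_weekend"] = (idx.dayofweek >= 5).astype(int)  # Saturday or Sunday
    out["day_of_month"] = idx.day
    out["day_of_year"] = idx.dayofyear
    out["month"] = idx.month
    return out

days = pd.date_range("2019-01-01", "2019-01-07", freq="D")
demo = add_date_features(pd.DataFrame({"load_mw": range(7)}, index=days))
print(demo)
```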

Finally, the load values at the previous N time steps are added to the dataset as memory features in the form of a sliding window, named memory_feature_1 ~ memory_feature_N. The choice of N, that is, the memory length or time lag, is not arbitrary. We determine the memory length with a method that combines the Discrete Fourier Transform and the Pearson Correlation Coefficient.
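The sliding-window memory features can be built by shifting the series. A minimal sketch using pandas `shift`; the helper name `make_memory_features` is hypothetical, and the column names follow the memory_feature_1 ~ memory_feature_N convention above.

```python
import pandas as pd

def make_memory_features(load: pd.Series, n: int) -> pd.DataFrame:
    """Target = load at t0; features = loads at t0-1, ..., t0-n."""
    frame = pd.DataFrame({"target": load})
    for k in range(1, n + 1):
        frame[f"memory_feature_{k}"] = load.shift(k)  # value observed k steps earlier
    return frame.dropna()  # the first n rows lack a complete window

series = pd.Series([10.0, 11.0, 12.0, 13.0, 14.0, 15.0])
out = make_memory_features(series, n=3)
print(out)
```

Dropping the first N incomplete rows keeps every sample's window fully observed.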

Analysis of the best window width

Data-driven load forecasting with machine learning requires the dataset to be built as sliding windows, which transforms the time series problem into a supervised regression problem. The window width, also called the number of lags, has a complex effect on the result. The wider the window, the richer the memory information, because each sample carries more features. However, for machine learning algorithms based on statistical experience, too many irrelevant features can lead to poor results in practice. Conversely, too narrow a window means fewer features and may cause underfitting from insufficient information.

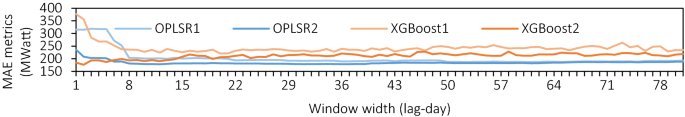

Figure 2 shows the effect of window width on the testing dataset for the XGBoost model and the linear regression model (OPLS). The x-axis is the window width of the data features and the y-axis is the mean absolute error (unit: MW) on the testing dataset (2019); models indexed 1 have no date features added. The relationship between performance and window width is not linear: as the window widens, the MAE first declines dramatically and reaches a low point, then fluctuates within a specific range and worsens as the window widens further.
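A window-width sweep like the one in Fig. 2 can be sketched as follows. This sketch assumes ordinary least squares via `numpy.linalg.lstsq` as the model and a synthetic series with yearly and weekly cycles, not the RTE data; for simplicity the test windows are built only from the test year, so its first `width` days are not predicted.

```python
import numpy as np

def lagged_matrix(series: np.ndarray, width: int):
    """Rows hold [x(t-1), ..., x(t-width)]; the matching target is x(t)."""
    n = len(series)
    X = np.column_stack([series[width - k:n - k] for k in range(1, width + 1)])
    return X, series[width:]

def ols_mae_by_width(train: np.ndarray, test: np.ndarray, widths):
    """Fit ordinary least squares for each window width and return test MAE."""
    results = {}
    for w in widths:
        X_tr, y_tr = lagged_matrix(train, w)
        X_te, y_te = lagged_matrix(test, w)
        A_tr = np.column_stack([np.ones(len(X_tr)), X_tr])  # add intercept column
        coef, *_ = np.linalg.lstsq(A_tr, y_tr, rcond=None)
        pred = np.column_stack([np.ones(len(X_te)), X_te]) @ coef
        results[w] = float(np.mean(np.abs(pred - y_te)))
    return results

# Synthetic daily load: yearly and weekly cycles plus noise (NOT the RTE data).
rng = np.random.default_rng(0)
t = np.arange(4 * 365)
load = (100 + 20 * np.sin(2 * np.pi * t / 365) + 5 * np.sin(2 * np.pi * t / 7)
        + 0.5 * rng.standard_normal(len(t)))
mae = ols_mae_by_width(load[:3 * 365], load[3 * 365:], widths=[1, 3, 7, 14, 28])
for w, v in mae.items():
    print(f"width {w:2d}: MAE = {v:.3f}")
```

On this toy series a one-day window cannot capture the weekly cycle, so its MAE is clearly higher than with a seven-day window.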

The Fourier Transform is a practical tool for extracting frequency or periodic components in signal analysis. A signal \(f\left(t\right)\) that satisfies the Dirichlet conditions on \((-\infty ,+\infty )\) can be converted into its frequency-domain components \(g(freq)\):

\[g(freq)=\int_{-\infty }^{+\infty } f(t)\,{e}^{-i2\pi \cdot freq\cdot t}\,dt\]

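Because the power load time series in this paper is sampled discretely and has limited length, the Discrete Fourier Transform, computed with the fast algorithm proposed by Bluestein17, is used instead:

\[g(fre{q}_{k})=\sum_{n=0}^{N-1} f({t}_{n})\,{e}^{-i2\pi kn/N},\qquad fre{q}_{k}=\frac{k}{N},\quad k=0,1,\ldots ,N-1\]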

where \(N=365\) is the length of the 2018 validation dataset, and the \(freq\) series contains the frequency bin centres in cycles per unit of sample spacing, starting at zero. Because the load series is real-valued, the second half of the spectrum (the negative frequencies) is the complex conjugate of the first half, so only the positive half is kept. Substituting \(period=\frac{1}{freq}\) gives:

\[g(perio{d}_{k})=g\left(\frac{1}{fre{q}_{k}}\right),\qquad perio{d}_{k}=\frac{N}{k}\]

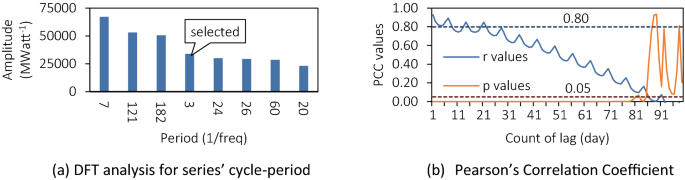

After removing the items of \(g(period)\) with \(period=inf\) and \(period=N\), the eight largest amplitudes are plotted against \(period\) as a bar chart in Fig. 3a18. Some of the periods correspond to natural time cycles: 121 days is about one-third of a year and 182 days is half a year. Others, such as 3, 24 and 26, are difficult to explain convincingly.
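The period extraction above can be sketched with NumPy's real FFT. NumPy accepts arbitrary lengths such as 365. This sketch assumes a one-sample-per-day series; the helper name `top_periods` and the synthetic 73-day and 5-day components are illustrative only.

```python
import numpy as np

def top_periods(series: np.ndarray, n_top: int = 8):
    """Return the n_top (period, amplitude) pairs with the largest FFT amplitude."""
    n = len(series)
    amplitude = np.abs(np.fft.rfft(series))  # non-negative frequencies only
    freq = np.fft.rfftfreq(n, d=1.0)         # cycles per sample (per day here)
    # Skip k = 0 (period = inf) and k = 1 (period = N), as described in the text.
    period = 1.0 / freq[2:]
    amp = amplitude[2:]
    order = np.argsort(amp)[::-1][:n_top]
    return [(float(period[i]), float(amp[i])) for i in order]

t = np.arange(365)
# Synthetic year: constant level plus components with periods of 73 and 5 days.
demo = 100 + 10 * np.sin(2 * np.pi * t / 73) + 4 * np.sin(2 * np.pi * t / 5)
result = top_periods(demo, n_top=2)
for p, a in result:
    print(f"period = {p:.2f} days, amplitude = {a:.1f}")
```

With \(N=365\), bin \(k=3\) gives a period of about 121.7 days and \(k=2\) about 182.5 days, which correspond to the 121 and 182 values above.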

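The Pearson correlation coefficient (PCC) is then used to determine the period in more detail. The PCC measures the linear relationship between two datasets:

\[PCC(\delta )=\frac{\mathrm{cov}\left({\varvec{x}}({t}_{0}),{\varvec{x}}\left({t}_{0}-\delta \right)\right)}{{\sigma }_{{\varvec{x}}({t}_{0})}\,{\sigma }_{{\varvec{x}}\left({t}_{0}-\delta \right)}}\]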

where \({\varvec{x}}({t}_{0})\) is the series to predict (the target y of the dataset) and \({\varvec{x}}\left({t}_{0}-\delta \right)\) is the series lagged by \(\delta \) steps from \({t}_{0}\). A larger PCC indicates a stronger linear correlation between the two series.

In Fig. 3b, the x-axis is the lag in time intervals and the y-axis shows the Pearson correlation coefficient (blue) and the two-tailed p-value (orange). By common statistical convention19, a Pearson coefficient greater than 0.80 (blue dotted line) indicates a strong correlation between two series, and a p-value below 0.05 (orange dotted line) indicates that the correlation is statistically significant. The orange curve shows that roughly the first 50 memory features have a significant positive correlation with the predicted target, so the minimum period should be shorter than 50. Furthermore, memory features 1 ~ 5 have Pearson coefficients greater than 0.80, that is, lags within 5 are strongly correlated. Among the FFT periods, only 3 satisfies this requirement; therefore, our model uses 3 as the window width.
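The lag analysis can be sketched with `scipy.stats.pearsonr`, which returns the coefficient and the two-tailed p-value. The helper names and the synthetic 30-day sine series are illustrative assumptions; the thresholds (r > 0.80, p < 0.05) are the ones stated above.

```python
import numpy as np
from scipy.stats import pearsonr

def lag_correlations(series: np.ndarray, max_lag: int):
    """Pearson r and two-tailed p-value between x(t0) and x(t0 - delta)."""
    out = {}
    for delta in range(1, max_lag + 1):
        r, p = pearsonr(series[delta:], series[:-delta])  # aligned t0 and t0 - delta
        out[delta] = (float(r), float(p))
    return out

def strong_lags(corrs, r_min=0.80, p_max=0.05):
    """Lags that meet both thresholds used in the text."""
    return [d for d, (r, p) in corrs.items() if r > r_min and p < p_max]

rng = np.random.default_rng(1)
t = np.arange(365)
demo = np.sin(2 * np.pi * t / 30) + 0.05 * rng.standard_normal(len(t))
corrs = lag_correlations(demo, max_lag=20)
lags = strong_lags(corrs)
print(lags)
```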

As controls, we also build models with three other window widths: 7, 14 and 28.

Extreme gradient boosting optimised by tree-structured Parzen estimator

Gradient boosting originates from Friedman's work published in 200120. XGBoost, an open-source library for extreme gradient boosting developed by Tianqi Chen21, builds tree ensembles with a series of strategies and algorithms, such as a greedy search strategy based on gradient boosting. As an additive ensemble model, XGBoost uses both the first- and second-order derivatives of the loss function from its Taylor expansion and is constructed within the probably approximately correct (PAC) framework. The objective function is:

\[obj(t)=\sum_{i=1}^{n} l\left({y}_{i},{\hat{y}}_{i}^{(t-1)}+{f}_{t}({x}_{i})\right)+\Omega ({f}_{t})\]

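where \(t\) is the boosting round, \(l\) is the loss function, \({\hat{y}}_{i}^{(t-1)}\) is the prediction for sample \(i\) after \(t-1\) rounds, \({f}_{t}\) is the tree added in round \(t\), and \(\Omega \) is the regularisation term.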

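Taking a second-order Taylor expansion of the loss function and adding the tree-structure parameters to the regularisation term, the objective function becomes:

\[obj(t)\simeq \sum_{i=1}^{n}\left[{g}_{i}{f}_{t}({x}_{i})+\frac{1}{2}{h}_{i}{f}_{t}^{2}({x}_{i})\right]+\gamma T+\frac{1}{2}\lambda \sum_{j=1}^{T}{\omega }_{j}^{2}=\sum_{j=1}^{T}\left[{G}_{j}{\omega }_{j}+\frac{1}{2}\left({H}_{j}+\lambda \right){\omega }_{j}^{2}\right]+\gamma T\]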

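where \({g}_{i}\) and \({h}_{i}\) are the first- and second-order derivatives of the loss function with respect to \({\hat{y}}_{i}^{(t-1)}\), and \({G}_{j}\) and \({H}_{j}\) are their sums over the samples in leaf \(j\); \(T\) and \(\omega \) are the structure parameters of each tree in the ensemble (the number of leaves and the leaf weights); \(\gamma \) is the minimum loss reduction required to further partition a leaf node of a single tree; \(\lambda \) is the L2 regularisation term.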

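A greedy strategy solves \(obj(t)\) for the locally optimal leaf weight \({\omega }_{j}=-\frac{{G}_{j}}{\lambda +{H}_{j}}\); substituting it back gives:

\[bes{t}_{obj(t)}=-\frac{1}{2}\sum_{j=1}^{T}\frac{{G}_{j}^{2}}{{H}_{j}+\lambda }+\gamma T\]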

With the weak learner \(t\) generated in each round, \(bes{t}_{obj(t)}\) serves as the base criterion for growing the decision tree, which controls the generalisation ability of the boosting process.
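A worked numeric instance of these formulas: the optimal leaf weight \(-G/(H+\lambda )\), each leaf's contribution \(-G^2/(2(H+\lambda ))\) to \(bes{t}_{obj(t)}\), and the resulting split gain (the decrease in \(bes{t}_{obj(t)}\), where the extra leaf costs \(\gamma \)). This sketch assumes a squared-error loss \(l=\frac{1}{2}(y-\hat{y})^2\), so \(g=\hat{y}-y\) and \(h=1\), and a previous-round prediction of zero; the helper names are hypothetical.

```python
import numpy as np

def leaf_weight_and_score(g, h, lam):
    """Optimal leaf weight -G/(H+lambda) and the leaf's term -G^2/(2(H+lambda))."""
    G, H = float(np.sum(g)), float(np.sum(h))
    return -G / (H + lam), -0.5 * G * G / (H + lam)

def split_gain(g, h, left_mask, lam, gamma):
    """Decrease in best_obj(t) when one leaf is split into two (extra leaf costs gamma)."""
    _, parent = leaf_weight_and_score(g, h, lam)
    _, left = leaf_weight_and_score(g[left_mask], h[left_mask], lam)
    _, right = leaf_weight_and_score(g[~left_mask], h[~left_mask], lam)
    return parent - (left + right) - gamma

# Squared-error loss l = (y - y_hat)^2 / 2 gives g = y_hat - y and h = 1.
y = np.array([1.0, 2.0, 10.0, 11.0])
y_hat = np.zeros_like(y)  # assumed previous-round prediction
g, h = y_hat - y, np.ones_like(y)
w, _ = leaf_weight_and_score(g, h, lam=1.0)
gain = split_gain(g, h, np.array([True, True, False, False]), lam=1.0, gamma=0.0)
print(w, gain)
```

Here \(G=-24\) and \(H=4\), so the single-leaf weight is \(24/5=4.8\), and separating the two small targets from the two large ones reduces the objective by 17.4 when \(\gamma =0\).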

More details on XGBoost can be found in the paper XGBoost: A scalable tree boosting system21.

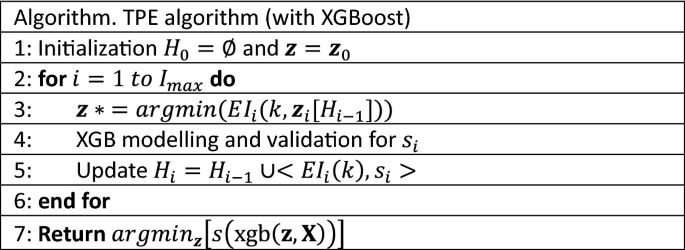

XGBoost is a powerful ensemble algorithm with numerous hyperparameters to tune for the best performance in application; however, tuning them is widely recognised as a black-box process22. We adopt the Tree-structured Parzen Estimator23 (TPE), a sequential model-based optimisation (SMBO) method based on Bayes' theorem, to optimise our XGBoost model for time series forecasting. The TPE pseudocode is shown in Fig. 4, where \({\varvec{z}}\) is a set of hyperparameters from the search space, \(s\) is the metric score of XGBoost with \({\varvec{z}}\) on the validation dataset, and \(H\) is the history of the selected \({\varvec{z}}\) and their validation scores.

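The expected improvement (EI) criterion is the core of TPE: TPE builds a probability model of the objective function and uses it to select the most promising hyperparameters to evaluate in the true objective function23:

\[E{I}_{k}({\varvec{z}})=\int_{-\infty }^{k}(k-s)\,p(s\mid {\varvec{z}})\,ds\propto {\left(q+\frac{g({\varvec{z}})}{l({\varvec{z}})}(1-q)\right)}^{-1},\qquad q=p(s<k)\]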

where \(l({\varvec{z}})\) is the density of hyperparameters whose objective values are below the threshold \(k\), and \(g({\varvec{z}})\) is the density of those whose objective values are at or above the threshold.
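The TPE loop can be illustrated with a simplified one-dimensional sketch. It assumes a single continuous hyperparameter on [low, high], Gaussian kernel density estimates (`scipy.stats.gaussian_kde`) for \(l(z)\) and \(g(z)\), and a quantile \(q=0.25\) to set the threshold \(k\). It follows the idea of TPE23, where candidates are drawn from \(l\) and the one with the largest \(l(z)/g(z)\) maximises EI. It is not the implementation in hyperopt or Optuna, and `tpe_minimize` is a hypothetical helper.

```python
import numpy as np
from scipy.stats import gaussian_kde

def tpe_minimize(objective, low, high, n_init=20, n_iter=60, q=0.25,
                 n_candidates=64, seed=0):
    """Simplified one-dimensional TPE loop that minimises objective on [low, high]."""
    rng = np.random.default_rng(seed)
    zs = list(rng.uniform(low, high, n_init))  # random warm-up trials
    ss = [objective(z) for z in zs]
    for _ in range(n_iter):
        z_arr, s_arr = np.array(zs), np.array(ss)
        k = np.quantile(s_arr, q)              # threshold k on the scores
        l = gaussian_kde(z_arr[s_arr <= k])    # density l(z) of the "good" trials
        g = gaussian_kde(z_arr[s_arr > k])     # density g(z) of the remaining trials
        cand = np.clip(l.resample(n_candidates, seed=rng).ravel(), low, high)
        # EI increases with l(z)/g(z), so evaluate the candidate with the largest ratio.
        ratio = l(cand) / np.maximum(g(cand), 1e-12)
        z_next = float(cand[np.argmax(ratio)])
        zs.append(z_next)
        ss.append(objective(z_next))
    best = int(np.argmin(ss))
    return zs[best], ss[best]

best_z, best_s = tpe_minimize(lambda z: (z - 0.3) ** 2, 0.0, 1.0)
print(best_z, best_s)
```

In the paper's setting, \(z\) would be the nine-dimensional XGBoost configuration and the objective the validation MAPE.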

We first build the XGBoost model (XGBoost) with the default values in Table 2. The TPE algorithm then optimises the nine hyperparameters in Table 2 within the search space below to obtain the TPE-XGBoost models.

Alpha: the L1 regularisation term on the leaf weights of the meta learners; decreasing it loosens the constraints on the model. It is the only hyperparameter in this paper that controls the model at the ensemble level through regularisation.

Learning rate: a weight (shrinkage) applied to each new meta learner to slow down boosting; a smaller learning rate makes the ensemble learn more slowly but more conservatively.

Max depth of single tree: a structural parameter of the meta models; deeper trees are more complex but more likely to overfit.

Minimum child weight: a structural parameter of the meta models that controls leaf nodes. If a split would produce a leaf node whose sum of instance weights is less than this value, the split is abandoned; too small a value makes the ensemble prone to overfitting.

Minimum split loss: a parameter governing leaf-node construction in the meta models. A node is not split further if the resulting loss reduction is less than this parameter; the larger it is, the more conservative the algorithm.

Subsample: the ratio of training samples drawn for each tree; a balanced subsample can prevent overfitting, but too small a subsample may leave the model unable to fit the data well enough for the application.

Colsample by tree/level/node: the subsample ratio of features (columns) per tree, per level and per node respectively, analogous to Subsample above.
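The nine hyperparameters above correspond to the following parameter names in XGBoost's scikit-learn interface (`XGBRegressor`). A minimal sketch of the search space as a plain dictionary: only the max_depth (2 to 5) and learning-rate (0.02 to 0.2) ranges are stated elsewhere in this paper; the other ranges are hypothetical placeholders for Table 2. `sample_params` is a hypothetical helper that draws one uniform random configuration, which is not TPE itself.

```python
import random

# Nine tuned hyperparameters -> (type, low, high).
# Only max_depth and learning_rate ranges come from the text; others are placeholders.
SEARCH_SPACE = {
    "reg_alpha":         ("float", 0.0, 10.0),
    "learning_rate":     ("float", 0.02, 0.2),
    "max_depth":         ("int", 2, 5),
    "min_child_weight":  ("float", 0.5, 10.0),
    "gamma":             ("float", 0.0, 5.0),   # minimum split loss
    "subsample":         ("float", 0.5, 1.0),
    "colsample_bytree":  ("float", 0.5, 1.0),
    "colsample_bylevel": ("float", 0.5, 1.0),
    "colsample_bynode":  ("float", 0.5, 1.0),
}

def sample_params(space, rng):
    """Draw one configuration uniformly from the box-shaped search space."""
    params = {}
    for name, (kind, low, high) in space.items():
        params[name] = rng.randint(low, high) if kind == "int" else rng.uniform(low, high)
    return params

params = sample_params(SEARCH_SPACE, random.Random(0))
print(params)
```

A configuration in this form could then be passed as keyword arguments to `xgboost.XGBRegressor`; that step is not executed here.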

We limit tuning to 500 iterations, and the target of each iteration is the MAPE on the validation dataset (2018). Figure 5 shows the overall workflow of this paper.

Results

Prediction models of testing dataset

XGBoost and our recommended TPE-XGBoost were trained on data with four window widths, 3d, 7d, 14d and 28d, from the training dataset (2016, 2017). In addition, the following eight machine learning algorithms were run as comparative experiments under the same conditions:

-

Three ensemble models: Gradient Boosting Decision Tree (pGBDT) and Adaptive Boosting (Adaboost) models based on scikit-learn, and Random Forest models (RandomForest) based on XGBoost.

-

One linear model: built by Ordinary Least Squares Method (OPLSR).

-

One Support Vector Machine model: based on libsvm algorithm with a Radial basis function kernel (RBF-SVR).

-

One neural network model: a multilayer perceptron (Perceptron) with three hidden layers of sizes (256, 128, 64), built with the scikit-learn framework.

-

One Neighbours Model: K-Nearest Neighbours model (KNN) with Euclidean distance metrics.

-

Single decision tree model: a decision tree (SingleTree) built with the scikit-learn framework without a max depth limit, as a contrast to the ensemble models.
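The comparison models listed above can be sketched as scikit-learn estimators. This sketch assumes library defaults for every setting the text does not state. It also makes one substitution: scikit-learn's `RandomForestRegressor` stands in for the paper's XGBoost-based random forest (`xgboost.XGBRFRegressor` would be the closer match if xgboost is installed).

```python
from sklearn.ensemble import AdaBoostRegressor, GradientBoostingRegressor, RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.neighbors import KNeighborsRegressor
from sklearn.neural_network import MLPRegressor
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

def comparison_models(seed: int = 0):
    """Eight baseline regressors; unlisted settings stay at library defaults."""
    return {
        "pGBDT": GradientBoostingRegressor(random_state=seed),
        "Adaboost": AdaBoostRegressor(random_state=seed),
        # Substitution: the paper's RandomForest is built on XGBoost, not scikit-learn.
        "RandomForest": RandomForestRegressor(random_state=seed),
        "OPLSR": LinearRegression(),
        "RBF-SVR": SVR(kernel="rbf"),
        "Perceptron": MLPRegressor(hidden_layer_sizes=(256, 128, 64), random_state=seed),
        "KNN": KNeighborsRegressor(metric="euclidean"),
        "SingleTree": DecisionTreeRegressor(max_depth=None, random_state=seed),
    }

models = comparison_models()
print(list(models))
```

Each estimator can then be fitted with `.fit(X, y)` on the same windowed training matrix and scored on the test year.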

Figure 6 shows the mean absolute error (MAE) of each model; the best model, with MAE = 166.02, is XGBoost optimised by the TPE algorithm on 3d-wide data.

TPE clearly improves XGBoost performance. The MAEs of all four XGBoost models trained with different window widths improve markedly after TPE optimisation, decreasing from 193.57, 199.46, 197.82 and 209.93 to 166.02, 184.11, 184.65 and 185.09 respectively, relative improvements of 14.23, 7.70, 6.66 and 11.83%.
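The relative improvements can be checked from the reported MAE values as \((MAE_{before}-MAE_{after})/MAE_{before}\). A short worked check:

```python
# MAE values reported above for the 3d, 7d, 14d and 28d windows.
mae_xgb = [193.57, 199.46, 197.82, 209.93]
mae_tpe = [166.02, 184.11, 184.65, 185.09]

# Relative improvement = (MAE_before - MAE_after) / MAE_before, in percent.
improvement = [100 * (b - a) / b for b, a in zip(mae_xgb, mae_tpe)]
print([round(v, 2) for v in improvement])  # [14.23, 7.7, 6.66, 11.83]
```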

The MAEs of the five ensemble learning methods (TPE-XGBoost, XGBoost, pGBDT, Random Forest and Adaboost) rise slightly with longer windows. This indirectly shows that a proper choice of window width is vital for such ensemble models to forecast time series accurately. In contrast, the OPLSR, Perceptron and RBF-SVR models improve when trained with more lagged features.

However, as Fig. 2 shows, this trend reaches a limit that is still worse than the TPE-XGBoost score and then fluctuates within a range. The best MAE over widths 1–81 is 178.539 (width = 31), clearly worse than the 166.02 of our TPE-XGBoost. We discuss this phenomenon in depth later in this paper.

Three further metrics on the testing set (MAPE, R2 and MaxError) are partly listed in Table 3. With shorter windows, our method achieves better overall metrics: MAPE = 2.61% and R2 = 0.9471. The max residual is less satisfactory, ranging from 1183 to 1436. Still, the max residual of 3d-TPE-XGBoost remains acceptable compared with the best model on this metric, 14d-OPLSR (895), and TPE optimisation reduces it to some extent relative to the unoptimised XGBoost on the 7d, 14d and 28d data.
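The four evaluation metrics used in the comparison can be written out explicitly. A NumPy sketch with a hypothetical helper `evaluate`; MAPE is returned as a fraction (0.0261 corresponds to 2.61%), and R2 uses the usual \(1-SS_{res}/SS_{tot}\) definition.

```python
import numpy as np

def evaluate(y_true, y_pred):
    """MAE, MAPE (as a fraction), R2 and MaxError for one forecast."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    ss_res = np.sum(err ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return {
        "MAE": float(np.mean(np.abs(err))),
        "MAPE": float(np.mean(np.abs(err) / np.abs(y_true))),  # multiply by 100 for %
        "R2": float(1.0 - ss_res / ss_tot),
        "MaxError": float(np.max(np.abs(err))),
    }

m = evaluate([100.0, 200.0, 400.0], [110.0, 190.0, 400.0])
print(m)
```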

Figure 7 compares predicted and real values over randomly selected 21-day periods in each of the four seasons of 2019. Our proposed 3d model closely tracks the periodic and frequency patterns of the real time series, consistent with its better MAE, MAPE and R2 metrics.

The TPE-XGBoost model performs well before December. Compared with the unoptimised XGBoost, TPE visibly reduces the forecast errors, especially in January, April and June. The December forecast is poor: almost all models perform badly in this month, and the max residual of 3d-TPE-XGBoost occurs in it.

TPE optimisation processing for XGBoost models

Three tuning methods that are also widely applied to boosting methods are chosen as the control group. All four methods share the same search space (Table 2), and the input features are the 3d data, consistent with the results in the section above. The three control methods applied to XGBoost are as follows:

-

Random Search24: random search (RS) is a family of numerical optimisation methods that do not require the gradient of the problem to be optimised; such methods are also called derivative-free or black-box methods, so RS can be used on functions that are not continuous or differentiable.

-

Simulated annealing25: Simulated annealing is a probabilistic technique for approximating the global optimum of a given function. Specifically, it is a metaheuristic to approximate global optimization in a large search space for an optimization problem.

-

Evolution Strategy: We chose Lightweight Covariance Matrix Adaptation Evolution Strategy26, where CMA-ES is a stochastic, or randomized, method for real-parameter (continuous domain) optimization of non-linear, non-convex functions.
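Two of the control methods can be sketched in a few lines on a toy one-dimensional objective. These are generic textbook versions (uniform random search and simulated annealing with Gaussian proposals, the Metropolis acceptance rule and a linear cooling schedule). They are not the specific library implementations used in the experiments. The function names and the toy objective are illustrative assumptions.

```python
import math
import random

def random_search(objective, low, high, n_iter=200, seed=0):
    """Evaluate uniformly random points and keep the best one."""
    rng = random.Random(seed)
    best_x = rng.uniform(low, high)
    best_f = objective(best_x)
    for _ in range(n_iter - 1):
        x = rng.uniform(low, high)
        fx = objective(x)
        if fx < best_f:
            best_x, best_f = x, fx
    return best_x, best_f

def simulated_annealing(objective, x0, low, high, n_iter=2000, step=0.1,
                        t0=1.0, seed=0):
    """Gaussian random-walk proposals accepted by the Metropolis rule."""
    rng = random.Random(seed)
    x, fx = x0, objective(x0)
    best_x, best_f = x, fx
    for i in range(n_iter):
        temp = t0 * (1.0 - i / n_iter) + 1e-9  # linear cooling towards zero
        cand = min(high, max(low, x + rng.gauss(0.0, step)))
        fc = objective(cand)
        # Always accept improvements; accept worse moves with probability exp(-delta/T).
        if fc <= fx or rng.random() < math.exp(-(fc - fx) / temp):
            x, fx = cand, fc
            if fx < best_f:
                best_x, best_f = x, fx
    return best_x, best_f

def toy_objective(x):
    return (x - 0.3) ** 2

rs_x, rs_f = random_search(toy_objective, 0.0, 1.0)
sa_x, sa_f = simulated_annealing(toy_objective, 0.9, 0.0, 1.0)
print(rs_x, sa_x)
```

Unlike TPE, neither method builds a model of past trials: random search ignores the history entirely, and simulated annealing only uses its current state.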

Table 4 lists the results of the optimisation process; the TPE row is the same as in the sections above. Compared with vanilla XGBoost + 3d (MAE = 194), the models optimised by the tuning methods generally perform better. This shows that hyperparameter tuning generally improves model performance without changing the core boosting algorithm.

The TPE method we recommend achieves the best results on three of the four metrics and the second best on MaxError, which demonstrates its effectiveness. It also provides preliminary, indirect evidence that SMBO methods are well suited to regional load forecasting.

Figure 8 visualises how the TPE algorithm tunes the nine hyperparameters. The objective value in each iteration is the MAPE (mean absolute percentage error) on the 2018 validation dataset, which is part of neither the training nor the testing dataset. After 500 iterations, the model reaches a validation MAPE of 0.02647 (2.647%). As the iterations increase, the validation target gradually concentrates in a narrow range. The max depth of the trees in XGBoost settles at 3 within the range 2 to 5, and the learning rate (eta) at around 0.11 within the range 0.02 to 0.2.

The best hyperparameter set, found in the 499th of 500 iterations, is listed in Table 5. More search rounds might yield a better validation MAPE but require more running time.

As a tree-based ensemble algorithm, XGBoost provides feature importance (FI) rates that represent the weight of each feature of the dataset in the model. Figure 9 compares the FI of TPE-XGBoost and the unoptimised XGBoost for two window widths (our preferred 3d and the longer 7d). For the 3d window, the optimised model assigns higher FI to features earlier than tn1: tn2 and tn3 together account for almost 40% of FI, versus less than 10% in the unoptimised model. Models with wider windows focus more on tn6 and tn7; TPE optimisation raises their FI and suppresses the contribution of tn2 ~ tn5.

Discussion

-

Power load data have clear temporal continuity: the load does not change abruptly except in extreme events (such as a grid crash). This is why linear regression (OPLSR) and the simplest models still perform well with wider windows. XGBoost, like most machine learning methods, learns from historical data and has no built-in concept of temporal continuity. We construct sliding windows to provide this memory, transforming the time series problem into a regression problem, so that training and forecasting both draw on the window features. As for the TPE method, the FI discussion above shows that it suppresses the modelling weight of the nearest memory features and increases the model's attention to farther ones. This is the immediate reason why TPE can improve modelling performance through hyperparameter control.

We believe it is necessary to use the minimum period as the window width, as a targeted treatment of the continuity characteristics of time series and load forecasting data. A window spanning the shortest period contains at least the complete memory of the data of interest without redundant information from multiple periods. Although a wider window provides richer historical information, it weakens the XGBoost algorithm's focus on data continuity and increases the risk of poor generalisation.

We believe the main effect of adding date features is to let the algorithm extract other periodicities. Time series data, including our load data, are highly cyclical, and their cycles may correspond to real-world phenomena with clear explanations. Monthly and weekly data are also cyclical, with stronger or weaker correlations between these cycles. We therefore added five date features during feature engineering to help the algorithm extract information from multiple cycles. Without date features, machine learning methods may fail to fit and forecast periodic time series effectively.

-

As mentioned above, TPE-XGBoost is a two-step process of modelling followed by validation: given the hyperparameter search space of XGBoost, TPE adjusts the hyperparameters with the goal of decreasing (or increasing) a chosen objective value. In this optimisation process, which metric to use and which data it is computed on are two crucial issues.

Usually, the optimisation target is one of the metrics used to evaluate the model, such as MAE or RMSE, both of which reasonably describe the error between predicted and real data on some scale. However, metrics used for optimisation serve a different purpose from those used for evaluation. In our TPE-XGBoost algorithm, using MAPE as the target (modelling the 2016–2017 data and predicting the true values of 2018, over 500 iterations) effectively improves MAE, RMSE, R2 and other metrics on the independent test dataset. Using another evaluation metric as the target mainly improves that metric itself; the other metrics do change, but less markedly than with MAPE.

In addition, objective values are usually obtained by k-fold cross-validation on the training set itself, which makes maximal use of the available sequences for modelling and evaluation when data are scarce. However, as its name states, this method repeats the fitting k times (five times in a 5-fold setting), and on this paper's dataset the folds differ greatly whatever metric is used, making the optimisation slow and ineffective. In fact, data are abundant for load forecasting: grid operators can trace back several years of history, so there is no shortage of data. When such a dataset is used to validate a machine learning model, the training and validation data should be independent and identically distributed. Our training, validation and test datasets are all real-world data from the same consistent source, so using an independent validation set is justified.

Therefore, as described in this paper's results and discussion, we propose to adopt MAPE metrics from a separate validation dataset as objective values in the optimization process of TPE-XGBoost.

-

This paper introduces no external variables and studies only the time series itself, yet still achieves reliable forecasts. We attribute this to the date features we introduced, which can stand in for the external variables commonly used in load forecasting, such as temperature, wind speed and social activities. These external variables are themselves periodic and would also need to be forecast, whereas the date features provide a calibration reference for the memory of the time series from another angle. We consider such a calibration reference, which is independent and identically distributed in the dataset, more valuable than actual wind speed and similar data.

Conclusions

The results in this paper show that (1) a small number of window features suffices to capture the power load time series we study: the three-day window determined by FFT and Pearson correlation analysis contains enough information to outperform longer windows. (2) XGBoost, as a practical and effective algorithm, can perform the forecasting task with fewer features, but adding TPE optimisation is clearly necessary. TPE-tuned hyperparameters get the most out of XGBoost with short windows.

In summary, this study determines the optimal window width of the time series data through the Discrete Fourier Transform and Pearson Correlation Coefficient methods and introduces five additional date features to complete feature engineering. TPE optimisation determines the values of nine XGBoost hyperparameters and clearly improves the models. Fitted on demand series from 2016 to 2018, the TPE-XGBoost model we designed achieves MAE = 166.020 and MAPE = 2.61% for single-lag forecasting in 2019. Compared with the original model, the two metrics improve by 14.23 and 14.14% respectively; compared with the other eight machine learning algorithms, the model also achieves the best metrics.

Data availability

Data are available from the éCO2mix service of the French Réseau de Transport d’Électricité (in French) at https://www.rte-france.com/eco2mix, or as a copy at https://github.com/gniqeh/TPE-XGB-TS under the MIT License.

References

Hong, T., Wang, P. & Willis, H. L. A Naïve multiple linear regression benchmark for short term load forecasting. In 2011 IEEE Power and Energy Society General Meeting 1–6 (IEEE, 2011). https://doi.org/10.1109/PES.2011.6038881.

Hong, T. & Fan, S. Probabilistic electric load forecasting: A tutorial review. Int. J. Forecast. 32, 914–938 (2016).

Hong, T. & Wang, P. On the impact of demand response: Load shedding, energy conservation, and further implications to load forecasting. In 2012 IEEE Power and Energy Society General Meeting 1–3 (IEEE, 2012). https://doi.org/10.1109/PESGM.2012.6345192.

Muzumdar, A. A., Modi, C. N. & Vyjayanthi, C. Designing a robust and accurate model for consumer-centric short-term load forecasting in microgrid environment. IEEE Syst. J. 16, 2448–2459 (2022).

Deng, X. et al. Bagging–XGBoost algorithm based extreme weather identification and short-term load forecasting model. Energy Rep. 8, 8661–8674 (2022).

Chen, Z., Chen, Y., Xiao, T., Wang, H. & Hou, P. A novel short-term load forecasting framework based on time-series clustering and early classification algorithm. Energy Build. 251, 111375 (2021).

Tan, M. et al. Ultra-short-term industrial power demand forecasting using LSTM based hybrid ensemble learning. IEEE Trans. Power Syst. 35, 2937–2948 (2020).

Xian, H. & Che, J. Multi-space collaboration framework based optimal model selection for power load forecasting. Appl. Energy 314, 118937 (2022).

Lv, S.-X. & Wang, L. Deep learning combined wind speed forecasting with hybrid time series decomposition and multi-objective parameter optimization. Appl. Energy 311, 118674 (2022).

Bergmeir, C., Hyndman, R. J. & Benítez, J. M. Bagging exponential smoothing methods using STL decomposition and Box-Cox transformation. Int. J. Forecast. 32, 303–312 (2016).

Putatunda, S. & Rama, K. A comparative analysis of hyperopt as against other approaches for hyper-parameter optimization of XGBoost. In Proceedings of the 2018 International Conference on Signal Processing and Machine Learning—SPML ’18 6–10 (ACM Press, 2018). https://doi.org/10.1145/3297067.3297080.

Elsayed, S., Thyssens, D., Rashed, A., Jomaa, H. S. & Schmidt-Thieme, L. Do we really need deep learning models for time series forecasting? https://doi.org/10.48550/ARXIV.2101.02118 (2021).

Norwawi, N. M. Sliding window time series forecasting with multilayer perceptron and multiregression of COVID-19 outbreak in Malaysia. In Data Science for COVID-19 547–564 (Elsevier, 2021). https://doi.org/10.1016/B978-0-12-824536-1.00025-3.

Mozaffari, L., Mozaffari, A. & Azad, N. L. Vehicle speed prediction via a sliding-window time series analysis and an evolutionary least learning machine: A case study on San Francisco urban roads. Eng. Sci. Technol. Int. J. 18, 150–162 (2015).

Eco2mix: Toutes les données de l’électricité en temps réel [All electricity data in real time] | RTE. https://www.rte-france.com/eco2mix.

Massaoudi, M. et al. A novel stacked generalization ensemble-based hybrid LGBM-XGB-MLP model for Short-Term Load Forecasting. Energy 214, 118874 (2021).

Rao, K. R., Kim, D. N. & Hwang, J. J. Integer fast Fourier transform. In Fast Fourier Transform—Algorithms and Applications 111–126 (Springer Netherlands, 2010). https://doi.org/10.1007/978-1-4020-6629-0_4.

Puech, T., Boussard, M., D’Amato, A. & Millerand, G. A fully automated periodicity detection in time series. In Advanced Analytics and Learning on Temporal Data Vol. 11986 (eds Lemaire, V. et al.) 43–54 (Springer International Publishing, 2020).

Benesty, J., Chen, J., Huang, Y. & Cohen, I. Mean-squared error criterion. In Noise Reduction in Speech Processing, Vol. 2 1–6 (Springer, Berlin, 2009).

Friedman, J. H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 29, 1189–1232 (2001).

Chen, T. & Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 785–794 (ACM, 2016). https://doi.org/10.1145/2939672.2939785.

Turner, R. et al. Bayesian optimization is superior to random search for machine learning hyperparameter tuning: Analysis of the black-box optimization challenge 2020. https://doi.org/10.48550/ARXIV.2104.10201 (2021).

Ozaki, Y., Tanigaki, Y., Watanabe, S. & Onishi, M. Multiobjective tree-structured Parzen estimator for computationally expensive optimization problems. In Proceedings of the 2020 Genetic and Evolutionary Computation Conference 533–541 (ACM, 2020). https://doi.org/10.1145/3377930.3389817.

Claesen, M. & De Moor, B. Hyperparameter search in machine learning. https://doi.org/10.48550/ARXIV.1502.02127 (2015).

Dowsland, K. A. & Thompson, J. M. Simulated annealing. In Handbook of Natural Computing (eds Rozenberg, G. et al.) 1623–1655 (Springer, Berlin, 2012). https://doi.org/10.1007/978-3-540-92910-9_49.

Hamano, R., Saito, S., Nomura, M. & Shirakawa, S. CMA-ES with margin: Lower-bounding marginal probability for mixed-integer black-box optimization. In Proceedings of the Genetic and Evolutionary Computation Conference 639–647 (ACM, 2022). https://doi.org/10.1145/3512290.3528827.

Author information

Contributions

All authors contributed to the study's conception and design. Z.Q. and X.W. wrote the main manuscript text, plotted the figures, prepared the code and performed the data analysis. H.B. and W.J. contributed the key ideas on the Fourier transform. F.J. provided the core concept for the optimisation part and the power load data. Z.Q. and X.W. contributed equally and should be regarded as co-first authors. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Qinghe, Z., Wen, X., Boyan, H. et al. Optimised extreme gradient boosting model for short term electric load demand forecasting of regional grid system. Sci Rep 12, 19282 (2022). https://doi.org/10.1038/s41598-022-22024-3

DOI: https://doi.org/10.1038/s41598-022-22024-3
