Abstract

Rapid and accurate detection of a C-shaped root canal on mandibular second molars can assist dentists in diagnosis and treatment. Oral panoramic radiography is one of the most effective methods of determining the root canal of teeth. There are already some traditional methods based on deep learning to learn the characteristics of C-shaped root canal tooth images. However, previous studies have shown that the accuracy of detecting the C-shaped root canal still needs to be improved. And it is not suitable for implementing these network structures with limited hardware resources. In this paper, a new lightweight convolutional neural network is designed, which combined with receptive field block (RFB) for optimizing feature extraction. In order to optimize the hardware resource requirements of the model, a lightweight, multi-branch, convolutional neural network model was developed in this study. To improve the feature extraction ability of the model for C-shaped root canal tooth images, RFB has been merged with this model. RFB has achieved excellent results in target detection and classification. In the multiscale receptive field block, some small convolution kernels are used to replace the large convolution kernels, which allows the model to extract detailed features and reduce the computational complexity. Finally, the accuracy and area under receiver operating characteristics curve (AUC) values of C-shaped root canals on the image data of our mandibular second molars were 0.9838 and 0.996, respectively. The results show that the deep learning model proposed in this paper is more accurate and has lower computational complexity than many other similar studies. In addition, score-weighted class activation maps (Score-CAM) were generated to localize the internal structure that contributed to the predictions.

Similar content being viewed by others

Introduction

Root canal systems have many anatomical variations, some of which can significantly increase the difficulty of endodontic treatments1. Cooke et al.2 first explicitly proposed the existence of type C root canals in mandibular second molars2. Walker et al. found a high incidence of type C root canals in mandibular second molars of 52% in China3. The root canal orifice of type C was 180°. The C-shaped banded grooves with the reticular connection between the root canals form a structurally complex isthmus4. The root cross-section of the C-shaped root canal is “C” type because the tooth germ on the buccal, lingual side of the root is not or only incompletely fused during development, resulting in the formation of crown roots with longitudinal groove and deformation of the extruded root canal5. Therefore, it becomes a challenge for clinicians to treat C-shaped root canal teeth.

Panoramic radiography remains the standard imaging method during preoperative assessment of the tooth root canal6. It displays each dentition and jaws on a single film through a fast and efficient process7. Cone-beam CT (CBCT) is a common technique used by dentists to accurately assess root and root canal morphology. Nevertheless, CBCT has a larger radiation volume for patients than panoramic radiography. It is not suitable to just make a simple diagnosis for patients8,9. Therefore, direct identification of C-shaped tooth root and root canal morphology by panoramic images will be effective in helping clinical diagnosis.

However, the correct identification of C-shaped root canals requires many years of experience. The same physician’s judgment of tooth root canals by CT can be limited by time and fatigue. Radiology awareness was shown to reduce the performance of root canal classification detection in the interpretation of CT of teeth due to long working hours10. Accurate classification of the type of tooth root canal plays a crucial role in the clinical management of the patient as well as in the prognosis.

Deep learning11,12, a hot research direction in the field of machine learning, has made breakthrough progress in computer vision and other artificial intelligences in recent years. CNNs are the most commonly used techniques when processing images because of their ability to extract rich details from the input information13. They have been successfully for image-based automated diagnosis in various fields, including lesion detection or classification14,15,16,17,18,19 and medical image segmentation20,21,22,23. In the field of dentistry, studies are being conducted using CNNs on various topics: morphological classification of teeth24, classification of dental implant systems25,26, dental caries27, mandibular canal segmentation28, and segmentation of teeth29. However, in pursuit of a higher precision effect, most of these studies often ignore the computational and parametric sizes of the CNN models used. Neural networks are typically over-parameterized and there is significant redundancy for convolutional neural network models30. Such CNNs are not suitable for hardware resource-constrained environments31.

This study proposes a new lightweight CNN structure that is more suitable for C-shaped root canal detection. The proposed method contains a module that can simulate human visual perception, which improves accuracy while reducing the number of parameters and computational complexity. This design will greatly optimize the use of CNN models in resource-constrained environments.

Material and methods

Classification of the root canal of mandibular second molar teeth

There are different variants of mandibular second molars, which mainly have two canals, three canals and C-shaped root canals32. In the clinical practice of root canal therapy, dentists use radiographs to assess the patient’s teeth and root canals, obtain relevant clinical information and predict the treatment difficulties. Nevertheless, dentists usually take much time and energy to judge the root canal type based on radiological images. They have difficulty determining the root canal type directly from panoramic images. In order to gain the dentists’ accuracy when assessing the mandibular second molar using only panoramic images, two dentists on the team classified the datasets blind to the labels. This work uses the Kappa33 test to calculate consistency with ground truth and its classification scores based on the classification scores of two dentists. Finally, these correlation values are constructed into a confusion matrix and the results are presented in Fig. 1. The coefficient of agreement between the two dentists and the ground truth were 0.301 and 0.413 respectively, and the coefficient of agreement between the two dentists was 0.46. It can be seen that dentists cannot well judge the root canal type of mandibular second molars from panoramic images.

Dataset

This prospective work was conducted at the Department of Stomatology, Shanxi Provincial People’s Hospital. This research included panoramic image data from patients who received in-hospital dental care between August 2019 and November 2020 and obtained informed patient consent. Table 1 below describes the gender and age information of the patients included in this work.

The data set was marked by a radiologist with 5 years of professional experience and consisted of 384 panoramic images. The first step is the cutting stage. The area related to the mandibular second molar is selected by the manual cutting method and its size is adjusted to 90 × 90. The non-square graph is filled with zero. The second step is the cleaning stage, without the tooth with structure damage, low resolution and other unsatisfactory tooth images. After that, two team dentists conducted a second review of the data set and confirmed the availability of the basic facts. The final number of images: 361 cases of C-shaped root canals and 364 cases of non-C-shaped root canals. Figure 2 shows the whole process of data set production.

The lightweight convolutional neural network with RFB

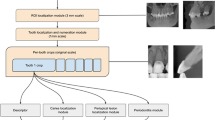

In this paper, a new neural network structure is proposed to classify root canal types, as shown in the flowchart in Fig. 3. The crucial task in this work is the classification of C-shaped root canals and non-C-shaped root canals.

The workflow chart for classification of the tooth root canal. Cut out the mandibular second molar tooth image from the panoramic image produced from the original image data. The tooth image data obtained in the previous step was inputted into different network systems to classify and compare the results. The tooth image data gained in the last step was inputted to the proposed network combining increasing receptive field modules and classical network structure method. Finally, Score-CAM is used to visualize where the network focuses when making classification decisions.

Considering the hardware constraints of the equipment and the time constraints of actual operation, this paper proposes a network FARFB that has been repeatedly debugged and modified and the network structure gives the model the best test accuracy. The network structure is shown in Fig. 4. Various network architectures and methods such as LeNet, Vgg16 and Xception were also tried in this document. The results of different network structures and methods on this data set are shown in Table 2. Experimental results show that the proposed method has better accuracy and lower resource consumption.

The network structure of FARFB (A). The capital letters A, C, F, P, S and S denote the Global Average Pooling, Convolutional, Full Connection, Pooling and SoftMax respectively. The values represent the number of channels, width and height of the feature maps. The composition structure of receptive field block (RFB) (B).

The network’s last layer is replaced by the SoftMax layer, which is used to classify several categories. After trying different configurations, this paper used 32 batches and an SGD optimizer with a momentum of 0.9 and a learning rate of 0.001. The function used to calculate the loss was the NLLLoss. This paper also used a 30-degree rotation range, horizontal flips and parallel shifts from height or width for data augmentation in the training set. This paper uses LeNet, Vgg16 and Xception to do the same calculations and keep the same parameters (except that the input image is 224 × 224 and 299 × 299 pixels). Finally, this paper chooses a combination of a lightweight convolutional neural network and RFB module as the best architecture for the current problem. Finally, to better understand the effect of the network, this paper can visually explain it and make better decisions on the model. This paper uses score-weighted class activation mapping (Score-CAM)34 to look at the areas where the network focuses when making decisions.

Multi-scale receptive fields block

FARFB proposes to extract multi-scale receptive domain features to preserve the details of tooth images and better detect C-shaped root canals. Therefore, this work has to design a network with kernels of different sizes, such as 3 × 3, 5 × 5. To lightweight this method, this paper uses a small convolution kernel instead of a large one, which usually significantly increases the temporal complexity of the model. In this work, Receptive Field Block (RFB)35 was introduced into this network structure for the sake of lightweight and better classification. RFB is combined with a lightweight CNN model for extracting deep semantic features from the images. In particular, RFB uses a multi-branch convolution structure and different size convolution kernels correspond to different receptive fields, applies extended convolution layers36 to control their eccentricities and reshapes them to produce the final representation. In the proposed method, RFB is used to find the deep and rich details of tooth root canal images.

In FARFB, an RFB module is added to the backbone network, and a branch with an RFB module is added to obtain different levels of features and feature fusion is performed at the end of the network structure. The composition structure of RFB is shown in Fig. 4B. In RFB, in addition to using 3 × 3 convolution layer to replace the 5 × 5 convolution layer, 1 × 3 and 3 × 1 convolution layers are also used to replace the 3 × 3 convolution layer. The main purpose of this is to effectively reduce the amount of computation and parameters. In addition, replacing this structure enables RFB to have a richer semantic information learning ability. A corresponding dilated pooling layer follows each branch’s specific kernel-size convolution layer. Kernel size and dilated manipulation have similar positive correlations with RF size and eccentricity in the human visual cortex. Finally, the feature mappings of all branches are connected. This makes RFB precisely what this paper needs to extract multi-scale features and minimize time complexity simultaneously. The most important reason for using RFB is that it can extract very detailed features required for medical image classification.

Training and evaluation

From the initial data set, 30% of images of each category are tested separately. A data set composed of 114 images of non-C-shaped root canals and 108 C-shaped root canals was generated. Then, the remaining images (250 images of non-C-shaped root canals and 253 images of C-shaped root canals) were used for training. The partitioning of the data set is done using open-source random algorithms. This process is shown in Fig. 5.

This paper ran the model with 150 iterations and used a premature stop strategy with ten iterations. This paper used Pytorch, a well-regarded open-source framework for building deep learning networks, running on Ubuntu 18.04.2 LTS with NVIDIA GeForce GTX 2070. This paper calculated the accuracy of each network in judging C-shaped and non-C-shaped root canals and measured the performance of the CNN model using area under the receiver operating curve (AUC), precision, recall and F1-score37. Every value was averaged among the fivefolds. Finally, this paper compares the diagnostic performance of the proposed method with the diagnosis of dentists, where one dentist (experience > 5 years) and another dentist (experience > 8 years) predicted C-shaped canals from panoramic images. Dentists evaluated panoramic images of the mandibular second molars corresponding to all test sets. The accuracy, precision, recall and AUC were calculated and compared with the proposed method.

Ethical approval

Research on human participants has been ethically reviewed and approved according to the requirements of the ethics committee of Taiyuan University of technology. According to national legislation and institutional requirements, this study has obtained written informed consent from the investigators.

Result

Performance of FARFB

Using the above FARFB model, this work achieved an average accuracy of 0.9838 for the C-shaped root canal classification of mandibular second molars (Table 2). At the same time, this paper also received other performance indices of the model, such as specificity, sensitivity and AUC of 0.9772, 0.9888 and 0.9960, respectively (Fig. 7). For the training results of the proposed method, this paper uses the fivefold cross-validation to get the average as this final result. With fivefold cross-validation, the accuracy of each fold of the model was 0.9775, 0.9865, 0.9820, 0.9820 and 0.9910, respectively. From the results, it can be seen that the proposed method has very excellent performance on each fold.

Ablation study

In this work, the method was developed in combination with the RFB module. Therefore, in this ablation study, the RFB module was removed from the proposed method as the baseline method and named it FANet. This paper compares the FARFB, which only adds the RFB module to the network structure. In this work, the same dataset and hyperparameter settings were used for both methods. The results and network model indicators of the two methods on this dataset are shown in Table 2.

The results show that the FARFB method performs better. Although FANet has fewer parameters and fewer computation than FARFB, the results of FANet are not satisfactory. FARFB achieves more than 3% higher accuracy with slightly increased parameters and calculations than FANet. This result verifies the effectiveness of the proposed FARFB method.

Comparison with traditional methods

In Fig. 6 and Table 3, this paper compares the proposed method with traditional methods in terms of accuracy, sensitivity, specificity and AUC. The accuracy, sensitivity, specificity and AUC of LeNet were 0.9117, 0.8893, 0.9298 and 0.9680, respectively. The accuracy, specificity, sensitivity and AUC of Vgg16 were 0.9712, 0.9880, 0.9704 and 0.9888, respectively. The accuracy, sensitivity, specificity, sensitivity and AUC of ResNet18 were 0.9784, 0.9737, 0.9834 and 0.9890, respectively. The accuracy, specificity, sensitivity and AUC of Xception were 0.9649, 0.9561, 0.9703 and 0.9860, respectively. From the above, it emerges from this paper that the proposed method shows a specific improvement in each performance index compared to the traditional methods.

In addition, as shown in Fig. 7, the proposed method has a minimum number of parameters and a relatively small amount of calculation. The parameters of FARFB, LeNet, vgg16, resnet18 and Xception are 0.18, 1.41, 70.30, 11.20 and 20.80, respectively (Million). Their amount of calculation are 139.50, 29.91, 15,440.00, 324.79 and 8410.00, respectively (Million Floating-point Operations per Second, MFlops). The results show that the FARFB network has minimal parameters and amount of calculation, but extremely high accuracy. This is also the ideal result of the initial design of this network structure in this paper.

The graphical performance of each network model, the number of its parameters and calculations in C-shaped and non-C-shaped root canal classification tasks (the input image size of Vgg16 is 224 × 224. The input image size of Xception is 299 × 299). The gray circles are the standard display of the number of parameters or calculations at each level.

Comparison with dentists and other studies

It is usually a challenge for dentists to find C-shaped root canals directly through panoramic images. Table 4 and Fig. 8 show the performance indicators of the proposed method and two dentists in this C-shaped root canal classification task. It can be seen that the accuracy of the two dentists is 0.6352 and 0.5770, respectively, and the AUC are 0.635 and 0.577. This shows that the proposed method has better performance than two dentists.

Table 4 also shows that the proposed method performs much better than dentists and is also better than previous methods. Compared with the method of Jeon et al., the proposed method has a 3% higher accuracy on the test set and other performance metrics are also higher.

Feature visualization of tooth images

Figure 3 shows images of C-shaped canals and non-C-shaped canals predicted using the proposed method and visualized using Score-CAM. The proposed method focuses on the feature of the intersection area of the root canal with the crown in the image and uses this feature to predict C-shaped root canals (Fig. 9a) correctly. The method focuses on the features of the area of the root canal bifurcation in the image and uses this feature to predict non-C-shaped root canals (Fig. 9b) correctly.

Score-CAM output for C-shaped and non-C-shaped type fractures classification. C-shaped root canal tooth images (a) and non-C-shaped root canal tooth images (b). Original images (top) and overlapped on the original image (bottom). The visualization certifies that the network is focusing on the correct area of the tooth.

Discussion

In this paper, a lightweight convolutional neural network model was proposed to predict C-shaped root canals in mandibular second molars by analyzing panoramic images. This paper selects several commonly used and highly representative methods in tooth image research for an experimental comparison and hopes to compare them to evaluate the effectiveness of the proposed model. Through several cross experiments, the network model proposed in this paper has the advantages of low resource consumption and high accuracy (Fig. 7). The accuracy of the proposed model in the classification of C-shaped root canals and non-C-shaped root canals is 0.9838 (CI 0.9775–0.9910), which is improved compared with the previous studies38. This paper found that adding an RFB module to a lightweight CNN can better learn and find tooth images’ depth features and details through multi-model training and ablation study. Experimental results prove that the effect of the RFB module simulating the human visual system to extract multi-scale features is very well. It helps significantly to reduce the number of parameters and time complexity. Initial efforts to identify and classify root canals were dominated by time-consuming and labor-intensive annotation by experienced dentists. Recently, breakthroughs have been made in the field of medical imaging using deep learning methods39,40,41,42,43,44. Most of the existing work on the classification of dental root canals mainly focuses on single canals, multiple canals, the binary of C-shaped root canals and multiple canals. According to the research investigation, two previous articles used deep learning to classify the root canals of mandibular first and second molars38,45. However, the results are not optimal, especially in classifying complex root canal types and a generalized approach does not exist. Meanwhile, previous studies did not consider the impact of model parameters and calculation size when classifying root canals. In most cases, limitations of hardware devices and usage environment will reduce the scope of their use scenarios. The proposed lightweight CNN model fills this deficiency and improves classification accuracy (Table 3).

In addition, to demonstrate the effectiveness of the network for learning C-shaped root canals, this paper implemented Score-CAM, an adaptive improvement of CAM46 that allows us to visualize the network’s concerns for different classifications. As shown in Fig. 9, the correct root canal region was focused by the decision-making network. Unfortunately, there are still many useless areas in the focus area of the visual map displayed by this network, which shows that the proposed network still needs improvement. This may also indicate that the lightweight shallow network is slightly lacking in the ability to extract more detailed features from tooth images.

Deep neural networks have become the most popular advanced technology in computer vision and medical imaging47,48,49. Neural networks are both computationally and memory intensive, making them difficult to deploy on embedded systems with limited hardware resources50,51. Previous studies on medical images have not considered this part, making it difficult to translate their results into actual clinical application. This will lead to wasted computing power and memory consumption of related devices. Therefore, when using the deep neural network method for medical image processing, it is necessary to consider the lightweight neural network model with the same accuracy. This reduces device resources and improves the conversion rate of research results to actual clinical applications.

This paper has several limitations. First, the training images are created by manual segmentation, which takes a lot of time. Therefore, an accurate automatic segmentation algorithm may be a further and valuable technical direction in tooth image. Second, the manually segmented tooth images have a complex background. The distance between teeth is very small, which inevitably blends with other tooth structures and the environment when segmenting the image, which to some extent prevents the model from learning the texture features of the area of interest. Third, translating the study results into practical clinical applications may still require a more significant data set to complete validation of the model’s generalization ability.

Conclusion

In this work, a lightweight CNN model is proposed to detect the presence of C-shaped root canals in mandibular second molars. Compared with the methods of previous related studies, the proposed model has higher accuracy detecting C-shaped root canals and lower resource consumption. The proposed model improves feature extraction capability by incorporating an RFB module to enhance receptive fields. The RFB module which mimics the human eye’s receptive field, provides excellent help for feature extraction from lightweight CNN models and reduces the number of model parameters. The experimental results show that the proposed FARFB is better than that of dentists in detecting root canal types of mandibular second molars and better than other traditional methods in this work.

In future studies, we will use more clinical data for feedback training to improve the comprehensive efficiency of the model. Furthermore, we will implement the segmentation algorithm of the C-shaped root canal tooth image in the panorama based on the features extracted by the proposed method in this paper. Through rapid automated detection to replace cumbersome manual operations and then assist dentists in rapid clinical diagnosis.

Data availability

The raw/processed data required to reproduce these findings cannot be shared at this time as the data also forms part of an ongoing study. Further inquiries can be directed to the corresponding author.

References

Alfawaz, H. et al. Prevalence of C-shaped canal system in mandibular first and second molars in a Saudi population assessed via cone beam computed tomography: A retrospective study. Clin. Oral Invest. 23, 107–112 (2019).

Cooke, H. G. 3rd. & Cox, F. L. C-shaped canal configurations in mandibular molars. J. Am. Dent. Assoc. 1939(99), 836–839 (1979).

Walker, R. T. Root form and canal anatomy of mandibular second molars in a Southern Chinese population. J. Endod. 14, 325–329 (1988).

Solomonov, M., Paqué, F., Fan, B., Eilat, Y. & Berman, L. H. The challenge of C-shaped canal systems: A comparative study of the self-adjusting file and ProTaper. J. Endod. 38, 209–214 (2012).

Manning, S. A. Root canal anatomy of mandibular second molars: Part II C-shaped canals. Int. Endod. J. 23, 40–45 (1990).

Araujo, G. D. T. T. et al. Influence of cone beam computed tomography versus panoramic radiography on the surgical technique of third molar removal: A systematic review. Int. J. Oral Maxillofac. Surg. 48, 1340–1347 (2019).

Choi, J.-W. Assessment of panoramic radiography as a national oral examination tool: Review of the literature. Imaging Sci. Dent. 41, 1–6 (2011).

Liu, Y.-C., Chuang, K.-S., Yu, C.-C., Chao, J.-H. & Hsu, F.-Y. Evaluation of the medical exposure doses regarding dental examinations with different X-ray instruments. Radiat. Phys. Chem. 116, 386–392 (2015).

Wrzesien, M. & Olszewski, J. Absorbed doses for patients undergoing panoramic radiography, cephalometric radiography and CBCT. Int. J. Occup. Med. Environ. Health https://doi.org/10.13075/ijomeh.1896.00960 (2017).

Krupinski, E. A., Berbaum, K. S., Caldwell, R. T., Schartz, K. M. & Kim, J. Long radiology workdays reduce detection and accommodation accuracy. J. Am. Coll. Radiol. 7, 698–704 (2010).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Radoglou-Grammatikis, P. et al. Modeling, detecting and mitigating threats against industrial healthcare systems: A combined software defined networking and reinforcement learning approach. IEEE Trans. Industr. Inf. 18, 2041–2052 (2021).

Toğaçar, M., Ergen, B. & Cömert, Z. Tumor type detection in brain MR images of the deep model developed using hypercolumn technique, attention modules and residual blocks. Med. Biol. Eng. Compu. 59, 57–70 (2021).

Bi, W. L. et al. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA 69, 127–157 (2019).

Wang, S. et al. Artificial intelligence in lung cancer pathology image analysis. Cancers 11, 1673 (2019).

Nadeem, M. W. et al. Brain tumor analysis empowered with deep learning: A review, taxonomy and future challenges. Brain Sci. 10, 118 (2020).

Rahaman, M. M. et al. A survey for cervical cytopathology image analysis using deep learning. IEEE Access 8, 61687–61710 (2020).

Li, Y. & Shen, L. Skin lesion analysis towards melanoma detection using deep learning network. Sensors 18, 556 (2018).

Chen, C. et al. Convolutional neural networks for forecasting flood process in internet-of-things enabled smart city. Comput. Netw. 186, 107744 (2021).

Chen, L. et al. DRINet for medical image segmentation. IEEE Trans. Med. Imaging 37, 2453–2462 (2018).

Zhang, J., Xie, Y., Wang, Y. & Xia, Y. Inter-slice context residual learning for 3d medical image segmentation. IEEE Trans. Med. Imaging 40, 661–672 (2020).

Peng, J. & Wang, Y. Medical image segmentation with limited supervision: A review of deep network models. IEEE Access 9, 36827–36851 (2021).

Wang, H. et al. Rib segmentation algorithm for X-ray image based on unpaired sample augmentation and multi-scale network. Neural Comput. Appl. https://doi.org/10.1007/s00521-021-06546-x (2021).

Schwendicke, F., Golla, T., Dreher, M. & Krois, J. Convolutional neural networks for dental image diagnostics: A scoping review. J. Dent. 91, 103226 (2019).

Sukegawa, S. et al. Deep neural networks for dental implant system classification. Biomolecules 10, 984 (2020).

Lee, J.-H., Kim, Y.-T., Lee, J.-B. & Jeong, S.-N. A performance comparison between automated deep learning and dental professionals in classification of dental implant systems from dental imaging: A multi-center study. Diagnostics 10, 910 (2020).

Lee, J.-H., Kim, D.-H., Jeong, S.-N. & Choi, S.-H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 77, 106–111 (2018).

Jaskari, J. et al. Deep learning method for mandibular canal segmentation in dental cone beam computed tomography volumes. Sci. Rep. 10, 1–8 (2020).

Tian, S. et al. Automatic classification and segmentation of teeth on 3D dental model using hierarchical deep learning networks. IEEE Access 7, 84817–84828 (2019).

Denil, M., Shakibi, B., Dinh, L., Ranzato, M. & Freitas, N. D. Predicting Parameters in Deep Learning. (University of British Columbia, 2013).

Toğaçar, M., Cömert, Z. & Ergen, B. Intelligent skin cancer detection applying autoencoder, MobileNetV2 and spiking neural networks. Chaos Solitons Fract. 144, 110714 (2021).

Zhang, R. et al. Use of cone-beam computed tomography to evaluate root and canal morphology of mandibular molars in Chinese individuals. Int. Endod. J. 44, 990–999 (2011).

McHugh, M. L. Interrater reliability: The kappa statistic. Biochem. Med. 22, 276–282 (2012).

Wang, H. et al. Score-CAM: Score-weighted visual explanations for convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops 24–25 (2020).

Liu, S. & Huang, D. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV) 385–400 (2018).

F, Yu & Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. (2016).

Diker, A., Cömert, Z., Avcı, E., Toğaçar, M. & Ergen, B. A novel application based on spectrogram and convolutional neural network for ECG classification. In 2019 1st International Informatics and Software Engineering Conference (UBMYK) 1–6 (IEEE, 2019).

Jeon, S.-J. et al. Deep-learning for predicting C-shaped canals in mandibular second molars on panoramic radiographs. Dentomaxillofacial Radiol. 49, 20200513 (2021).

Wang, H. et al. Comparison of machine learning methods for classifying mediastinal lymph node metastasis of non-small cell lung cancer from 18 F-FDG PET/CT images. EJNMMI Res. 7, 1–11 (2017).

Lee, H. et al. Fully automated deep learning system for bone age assessment. J. Digit. Imaging 30, 427–441 (2017).

Xue, Y., Zhang, R., Deng, Y., Chen, K. & Jiang, T. A preliminary examination of the diagnostic value of deep learning in hip osteoarthritis. PLoS ONE 12, e0178992 (2017).

Byrne, M. F. et al. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut 68, 94–100 (2019).

Wang, X. et al. Searching for prostate cancer by fully automated magnetic resonance imaging classification: Deep learning versus non-deep learning. Sci. Rep. 7, 1–8 (2017).

Song, Q., Zhao, L., Luo, X. & Dou, X. Using deep learning for classification of lung nodules on computed tomography images. J. Healthc. Eng. 2017, 1–7 (2017).

Hiraiwa, T. et al. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofacial Radiol. 48, 20180218 (2019).

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A. & Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2921–2929 (2016).

Chan, H.-P., Samala, R. K., Hadjiiski, L. M. & Zhou, C. Deep learning in medical image analysis. Deep Learn. Med. Image Anal. https://doi.org/10.1007/978-3-030-33128-3_1 (2020).

Ahmad, H. M., Khan, M. J., Yousaf, A., Ghuffar, S. & Khurshid, K. Deep learning: A breakthrough in medical imaging. Curr. Med. Imaging 16, 946–956 (2020).

Shrestha, A. & Mahmood, A. Review of deep learning algorithms and architectures. IEEE Access 7, 53040–53065 (2019).

Han, S., Mao, H. & Dally, W. J. Deep compression: Compressing deep neural networks with pruning trained quantization and huffman coding. Fiber 56, 3–7 (2015).

Wu, Y. et al. Edge computing driven low-light image dynamic enhancement for object detection. IEEE Trans. Netw. Sci. Eng. https://doi.org/10.1109/TNSE.2022.3151502 (2022).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (62176177, 61873178, 61906130), and the National Key R & D Program of China (2018AAA0102601) and the Shanxi Provincial International Cooperation Foundation (201803D421047). We gratefully appreciate the invaluable comments from anonymous reviewers and editors.

Author information

Authors and Affiliations

Contributions

L.Z.: data curation, methodology, Software, writing—original draft, writing—review and editing. F.X., Y.L.: methodology, supervision, writing—review and editing, resources. H.Z.: software, writing—review and editing. Z.X.: data curation, supervision, writing—review and editing. J.X.: conceptualization, funding acquisition, supervision. B.W.: writing—review and editing, funding acquisition, supervision, project administration. all authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, L., Xu, F., Li, Y. et al. A lightweight convolutional neural network model with receptive field block for C-shaped root canal detection in mandibular second molars. Sci Rep 12, 17373 (2022). https://doi.org/10.1038/s41598-022-20411-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-20411-4

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.