Abstract

The 25-item Emotional Processing Scale (EPS) can be used with clinical populations, but there is little research on its psychometric properties (factor structure, test–retest reliability, and validity) in individuals with psychiatric symptoms. We administered the EPS-25 to a large sample of people (N = 512) with elevated psychiatric symptoms. We used confirmatory factor analysis to evaluate three a priori models from previous research and then evaluated discriminant and convergent validity against measures of alexithymia (Toronto Alexithymia Scale-20), depressive symptoms (Patient Health Questionnaire-9), and anxiety symptoms (Generalized Anxiety Disorder-7). None of the a priori models achieved acceptable fit, and subsequent exploratory factor analysis did not yield a clear factor solution for the 25 items. A 5-factor model did, however, achieve acceptable fit when we retained only 15 items, and this solution was replicated in a validation sample. Convergent and discriminant validity correlations for this revised version, the EPS-15, were r = −0.19 to 0.46 vs. TAS-20, r = 0.07 to 0.25 vs. PHQ-9, and r = 0.29 to 0.57 vs. GAD-7. Test–retest reliability was acceptable (ICC = 0.73). This study strengthens the case for the reliability and validity of the 5-factor structure of the EPS but suggests that only 15 items should be retained. Future studies should further examine the reliability and validity of the EPS-15.

Introduction

According to the emotional processing model (EPM), acknowledging emotions and finding adaptive ways of expressing them are foundational to adaptive coping with stressful life events1,2,3. The EPM proposes that disruptions in coping can occur when people avoid or suppress their emotions, which will inhibit emotional processing and give rise to difficulties with unprocessed or uncontrolled emotions, which in turn may contribute to the development of psychiatric and somatic symptoms.

The concepts of emotional processing and the EPM are closely related to the concepts of emotional regulation and the process model of emotion regulation1,4. Both models, for example, recognize situational avoidance as a strategy to cope with upsetting events. An important distinction, however, is that “emotional regulation” refers primarily to attempts at influencing emotions to reach adaptive goals (such as stable mental health), whereas “emotional processing” refers to what disrupts the overarching process of overcoming difficult or stressful life events more broadly1,4,5. This difference in focus—strategies to manage emotions versus obstacles to natural emotional processing—leads to the study of different phenomena. According to the EPM, for example, it is important to determine whether people are experiencing difficulties understanding emotions, because such a lack of understanding is likely to hinder emotions being processed. In contrast, the process model of emotional regulation does not explicitly address this, as a lack of understanding emotions is not a strategy people can employ in order to regulate emotions.

The Emotional Processing Scale (EPS) was developed to enable the study of emotional processing in accordance with the EPM. The original version of this scale comprised 38 items and 8 subfactors1; it was later shortened to a 25-item version (EPS-25) with five subscales purportedly corresponding to five facets of emotional processing2. Avoidance refers to strategies to avoid triggering emotions surrounding an event or situation. Suppression refers to attempts to not show feelings outwardly. Impoverished emotional experience captures aspects of “alexithymia”; that is, difficulties identifying one’s own emotions related to an event. The final two subscales assess consequences of inadequate emotional processing: Signs of unprocessed emotions can, for example, manifest as nightmares, whereas Unregulated emotions can be expressed in temper tantrums.

Emotional processing deficits in individuals with elevated psychiatric symptoms

According to the EPM, a lack of adequate emotional processing will be associated with psychiatric and medically unexplained somatic symptoms1,2,5. Consistent with this hypothesis, emotional processing deficits have been identified in patients with psychogenic nonepileptic seizures6, functional neurological symptoms7, irritable bowel syndrome (IBS)8, chronic (back) pain9,10, post-traumatic stress disorder (PTSD)11,12, substance use disorder12, bipolar disorder10, and anxiety disorders13. Emotional processing deficits do not appear to be specific to psychiatric populations, however, as deficits have also been identified in populations of people with medical conditions such as ischemic heart disease14, multiple sclerosis10,15 and type 2 diabetes16.

Moreover, although several psychometric studies of the EPS-25 have been published, most of these studies have examined the EPS-25 in non-psychiatric samples, such as healthy participants, medical patients, or a combination of these17,18,19,20. Of the few validation studies on people with psychiatric problems, one had a small highly selected sample (24 patients with bipolar disorder hospitalized for depression)10, and the other investigated an unspecified population (people referred to a psychologist for various mental health problems)2. Thus, further validation of the factor structure of the EPS-25 in a large sample of people with psychiatric symptoms is needed as an initial step in determining the validity of this measure as a predictor of the development and maintenance of both psychiatric disorders and functional somatic syndromes.

Internal consistency and dimensionality

There have been several psychometric studies on the EPS-25, and studies generally report excellent internal consistency for the whole scale, and fair to good internal consistencies for the subscales2,10,17,19,20,21,22,23. In Baker et al.’s (2010) original article, the EPS-25 was administered to a mixed sample of 690 medical patients, psychiatric patients, and healthy controls2. Exploratory factor analysis revealed the 5-factor structure described above. Moreover, Gay et al. (2019) administered the EPS-25 to a combined sample of 1176 medical patients, hospitalised patients with bipolar disorder, city hall employees, and students10. An exploratory factor analysis with five factors defined a priori revealed factor loadings similar to those reported by Baker et al. (2010), although five items had cross-loadings over 0.30.

Other attempts to replicate the original 5-factor solution, however, have failed. Using two community samples (N = 1172), Spaapen (2015) conducted a confirmatory factor analysis of the 5-factor solution suggested by Baker et al. (2010), but this factor structure did not achieve acceptable fit, either in its original form or after dropping the three most problematic items18. Orbegozo et al. (2018) investigated the factor structure of the EPS-25 with confirmatory factor analysis in school and university students (N = 605), but the original 5-factor model did not achieve an acceptable fit20. Moreover, neither Kharamin et al. (2021), who used a confirmatory factor analysis of the EPS-25 among university students (N = 1283)19, nor Lauriola et al.17, who administered the EPS-25 to a combined sample of gastrointestinal patients and healthy participants (N = 696), replicated the original 5-factor structure.

Given that replication of the original findings of Baker et al. (2010) has proven difficult, several authors have explored alternative structures. Spaapen (2015) investigated a 2-factor model, with Suppression as one factor, and the other four subscales representing another factor, but confirmatory factor analysis did not establish a convincing model fit18. Other authors have added a second-order latent “emotional processing” factor (i.e., a general emotional processing capacity factor) to the five subfactors, which increased model fit17,19. Another solution has been to reduce the number of items or move items among factors to achieve adequate fit20.

Taken together, despite difficulties in replicating Baker et al.’s (2010) original findings, most previous studies support a 5-factor model, either with a second-order latent “emotional processing” factor17,19,20 or without one2,10, although establishing a 5-factor model required revisions in some studies, such as reducing items18,20. Thus, the structure of the EPS-25 proposed by Baker et al. (2010) is far from consistently replicated and needs further study.

Convergent and discriminant validity

The validity of a measure can be assessed by its correlations with other relevant measures. Because the EPS-25 purports to assess dysfunctional emotional processing, it should correlate relatively highly (convergent validity) with measures of similar constructs, such as alexithymia (i.e., difficulties identifying and expressing emotions), but correlate less strongly (discriminant validity) with measures of constructs not directly part of emotional processing (such as depression).

The convergent validity of the EPS has been studied in relation to concepts such as emotional control1, emotional regulation10,20, and alexithymia1,2. In particular, the Impoverished emotional experience factor of the EPS-25 has been proposed1,2 as similar to the alexithymia facet of difficulties identifying one’s own feelings (measured by the Toronto Alexithymia Scale-20); however, surprisingly low concurrent validity (r = 0.35) has been found10,24. In addition, evidence for the discriminant validity of the EPS-25 has been mixed; in particular, studies have found larger than hypothesized relationships between the scale and measures of negative affect such as anxiety (r = 0.47 to 0.59) and depressive symptoms (r = 0.48 to 0.63)10,19,20. Emotional processing is also proposed to have discriminant validity with another facet of alexithymia1, externally oriented thinking (Toronto Alexithymia Scale-20, facet 3), and studies have found that the EPS-25 total score is uncorrelated with this subscale10.

Test–retest reliability

Only two studies have investigated EPS-25 test–retest reliability. In a convenience sample of 17 healthy people, test–retest reliability over 4 to 6 weeks for the entire scale was 0.742. In another study, the 4-week test–retest correlation was 0.91 among 80 students19. Clearly, there is a need for further studies of the test–retest reliability of the EPS-25, particularly in populations with elevated psychiatric symptoms.

Study aims and hypotheses

The planned aim of this study was to conduct a structural validation of the EPS-25 in a sample of patients with elevated psychiatric symptoms. We hypothesized that the EPS-25 would show either a 5-factorial structure (with or without a higher-order, general emotional processing capacity latent factor) consistent with the description of Baker et al. (2010), or a 2-factorial structure consistent with Spaapen’s (2015) description of the two factors. We also hypothesized that the internal consistency would be good (α ≥ 0.80) for the EPS-25 total scale and at least fair for the five subscales (α ≥ 0.60). We also tested convergent validity of the EPS-25 with the Toronto Alexithymia Scale-20 (TAS-20), hypothesizing a relatively high correlation (r = 0.50 to 0.75) with the TAS-20 factor 1 (Difficulty Identifying Feelings). For discriminant validity, we hypothesized that the association of the EPS-25 with anxiety and depression would be lower (r = 0.25 to 0.50) than its association with the TAS-20. We also expected that the EPS would not be correlated with externally oriented thinking from the TAS-20. A further aim of this study was to evaluate EPS test–retest reliability, which we hypothesized would be adequate (i.e., ICC ≥ 0.60) over approximately 1 week. Finally, as we conducted analyses of the EPS-25, we developed an additional aim, which was to investigate whether a shorter version of the scale might be psychometrically sound.

Method

Sources of the data and participants

Data for this study were taken from the baseline (pre-intervention) assessment of four clinical trials of internet-delivered psychodynamic treatment25,26,27,28. These trials were conducted on people with an anxiety disorder or depression25, social anxiety disorder26, or somatic symptom disorder27. In all studies, adult participants were recruited from the community by advertisement and were enrolled using a safe internet platform. The main common exclusion criterion was the presence of other major psychiatric conditions for which outpatient care would be more appropriate (e.g., psychosis, suicidal ideation). In all trials, participants completed self-report questionnaires, from home, on a secure web platform. Traffic with the web platform was encrypted, and all studies proceeded in accordance with relevant data management and privacy legislation. For further information about recruitment procedures and patient criteria, see the original studies. The research was performed in accordance with the Declaration of Helsinki, all participants (N = 512) provided informed consent, and all four trials were conducted in accordance with relevant regulations and approved by the appropriate regulatory authorities (Regional Ethics Board of Linköping: 2011/400–31, 2013/361–31; Swedish Ethical Review Authority: 2019–03,317, 2020–03,490). ClinicalTrials.gov identifiers are: NCT01532219, NCT02105259, NCT04122846 and NCT04751825. Data are available on request from the corresponding author.

For factor analyses of the EPS-25, data from all four trials were used. For analyses of discriminant and convergent validity, data were taken from the baseline of only one trial28 that had data on the other validation measures. To calculate test–retest reliability, data came from only one trial27 that had an adequate number of days (range: 5–12 days) between the first and second administrations of the EPS-25 prior to treatment.

Measures

The Emotional Processing Scale (EPS-25) has 25 items which are rated on a 10-point scale from 0 (completely disagree) to 9 (completely agree). The mean of all items yields the overall score, and means of the five, 5-item subscales are also calculated: avoidance, suppression, impoverished emotional experience, signs of unprocessed emotions, and unregulated emotions. The EPS-25 was translated from English to Swedish by three people fluent in both languages, by using multiple back-and-forth rounds until a satisfactory translation was reached29. (For further details, see30).

The Toronto Alexithymia Scale (TAS-20) has 20 items rated on a scale of 1 (strongly disagree) to 5 (strongly agree)31,32, and yields a total score (range: 20 to 100) as well as scores on three facets or subscales: difficulty identifying feelings (DIF), difficulty describing feelings (DDF), and externally-oriented thinking (EOT). The TAS-20 has shown both good internal consistency and test–retest reliability in the Swedish population33.

The Patient Health Questionnaire-9 (PHQ-9) assesses depressive symptom severity. The nine items are rated 0 to 3 and summed (range: 0 to 27). The PHQ-9 has good psychometric properties, including an internal consistency in the range of Cronbach’s α = 0.86–0.8934.

The Generalized Anxiety Disorder-7 scale (GAD-7; Spitzer et al., 2006) assesses anxiety symptom severity. The seven items are rated 0 to 3 and summed (range: 0 to 21). Internal consistency is excellent (Cronbach’s α = 0.92)34.

Statistical analyses

Within a confirmatory factor analytic (CFA) framework, we used all available EPS-25 data (N = 512) and tested three different possible factor solutions in R 4.1.0 (R Core Team, 2016) with lavaan 0.6–8: (1) a 5-factor model corresponding to the original solution presented by Baker et al.2; (2) a 5-factor model with a second order “emotional processing” latent variable as found to be adequate in previous studies17,19; and (3) a 2-factor model with suppression and other factors as discussed by Spaapen (2015)18. Criteria for good model fit were: CFI and TLI at least 0.90 (ideally 0.95), RMSEA and SRMR < 0.08, and lowest possible AIC and BIC36.
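As an illustration of how the fit criteria listed above relate to the underlying test statistics, the following minimal sketch computes RMSEA, CFI, and TLI from model and baseline chi-square values using the standard textbook formulas. It is not the lavaan implementation: software packages differ in details (for example, N versus N − 1 in the RMSEA denominator, and scaled or robust variants), and the function names and the numbers in the example call are hypothetical, chosen only to show the arithmetic.

```python
import math


def fit_indices(chi2_model, df_model, chi2_base, df_base, n):
    """Textbook RMSEA, CFI and TLI from unscaled chi-square statistics.

    chi2_base/df_base refer to the baseline (independence) model.
    Assumption: RMSEA uses N - 1; some software uses N instead.
    """
    excess_model = max(chi2_model - df_model, 0.0)
    excess_base = max(chi2_base - df_base, 0.0)
    rmsea = math.sqrt(excess_model / (df_model * (n - 1)))
    denom = max(excess_model, excess_base)
    cfi = 1.0 if denom == 0 else 1.0 - excess_model / denom
    ratio_base = chi2_base / df_base
    ratio_model = chi2_model / df_model
    tli = (ratio_base - ratio_model) / (ratio_base - 1.0)
    return {"rmsea": rmsea, "cfi": cfi, "tli": tli}


def meets_criteria(indices, srmr):
    """Apply the cut-offs stated in the text: CFI/TLI >= 0.90, RMSEA/SRMR < 0.08."""
    return (
        indices["cfi"] >= 0.90
        and indices["tli"] >= 0.90
        and indices["rmsea"] < 0.08
        and srmr < 0.08
    )


# Hypothetical values for illustration only (not results from this study).
example = fit_indices(chi2_model=180.0, df_model=80, chi2_base=2080.0, df_base=105, n=262)
print(example, meets_criteria(example, srmr=0.05))
```

AIC and BIC are omitted from the sketch because they depend on the model log-likelihood rather than on the chi-square statistic alone.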

Using Jamovi37, we analysed EPS internal consistency (Cronbach’s α), investigated convergent and discriminant validity using Pearson correlations, and estimated test–retest reliability using the intraclass correlation coefficient (ICC). For the α statistic, values ≥ 0.90 are commonly regarded excellent, ≥ 0.80 good, and ≥ 0.70 acceptable. Importantly however, α also decreases substantially with fewer items in a scale; for example, going from 5 to 3 items could be expected to lower α around 0.10–0.15 units. For the r statistic, values around 0.50 are commonly regarded as indicative of a strong/large association, 0.30 is moderate/medium, and 0.10 is weak/small38. For the ICC, values ≥ 0.75 are commonly regarded excellent, ≥ 0.60 good, ≥ 0.40 fair, and < 0.40 poor39.
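The expected loss in α when a subscale is shortened can be approximated with the Spearman–Brown prophecy formula, which predicts reliability after a test is lengthened or shortened by a given factor. The sketch below is an illustration under the usual assumption of roughly parallel items, not a reproduction of the Jamovi output; the function name and the starting α values are hypothetical.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability after changing test length by `length_factor`.

    length_factor = new number of items / old number of items.
    Assumes (approximately) parallel items.
    """
    return length_factor * reliability / (1.0 + (length_factor - 1.0) * reliability)


# Going from 5 to 3 items for a few illustrative starting reliabilities.
for alpha_5 in (0.60, 0.70, 0.80):
    alpha_3 = spearman_brown(alpha_5, 3 / 5)
    print(f"alpha with 5 items = {alpha_5:.2f}, predicted with 3 items = {alpha_3:.2f}")
```

For starting values between 0.60 and 0.80 the predicted drop is roughly 0.09 to 0.13, which is in line with the approximate range given in the text.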

Results

Psychometric analysis of the EPS-25

Confirmatory factor analysis (CFA) of EPS-25

Because kurtosis was high for several items, and some items had many zero scores, models were fit using maximum likelihood estimation with robust (Huber-White) standard errors and a scaled test statistic. In the CFA using all data, none of the three a priori models of the EPS-25 achieved adequate fit (see Table 1). Theoretically sound changes in accordance with modification indices, including the removal of items (6, 8, 14, 17, 23) and the specification of reasonable residual covariances (for example, between items 18 and 19), also did not yield acceptable model fit.

Internal consistency of EPS-25

Cronbach’s alpha was excellent for the EPS-25 overall score (α = 0.92), good for the avoidance (α = 0.87) and impoverished emotional experience subscales (α = 0.83), and acceptable (α = 0.67 to 0.75) for the other three subscales.

Convergent and discriminant validity of EPS-25

As seen in Table 2, the EPS-25-total showed strong correlations with anxiety (r = 0.66) and depression (r = 0.59). A moderate correlation of impoverished emotional experience and the alexithymia factor difficulty identifying feelings (TAS-20, factor 1) was found (r = 0.35) but also a weak correlation (r = 0.21) with externally-oriented thinking (TAS-20, factor 3).

Test–retest reliability of the EPS-25

Test–retest reliability of the EPS-25 was assessed in 51 participants from Maroti et al.27 over an approximately 1-week period (M = 8.06 days, SD = 1.35, range: 5–12 days). The test–retest reliability was excellent (ICC = 0.76).
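For readers unfamiliar with the ICC, the following minimal sketch shows one common form, the two-way random-effects, absolute-agreement, single-measure coefficient (often labelled ICC(2,1)), together with the interpretation thresholds given in the Statistical analyses section. Which ICC form was used in this study is not stated here, so the choice of ICC(2,1) is an assumption made for illustration, and the function names and toy scores are hypothetical.

```python
def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    scores: list of rows, one per participant, each row holding that
    participant's score at every occasion (e.g. [test, retest]).
    """
    n = len(scores)
    k = len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def icc_label(icc):
    """Thresholds as stated in the text: >= 0.75 excellent, >= 0.60 good, >= 0.40 fair."""
    if icc >= 0.75:
        return "excellent"
    if icc >= 0.60:
        return "good"
    if icc >= 0.40:
        return "fair"
    return "poor"


# Toy data (hypothetical): every participant scores one point higher at retest.
toy = [[1, 2], [2, 3], [3, 4]]
print(icc_2_1(toy), icc_label(icc_2_1(toy)))
```

Because this form measures absolute agreement, a constant shift between occasions lowers the coefficient even though the rank order of participants is perfectly preserved.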

Development and validation of the EPS-15

Because we could not replicate any of the a priori factor structures for the 25-item version of the EPS using confirmatory factor analysis, we attempted to find a more suitable factor solution using exploratory factor analysis. Also, although the EPS-25 showed good internal consistency and test–retest reliability, discriminant validity was unsatisfactory. The EPS-25 had large relationships with depression and anxiety and was also correlated with the externally-oriented thinking facet of the alexithymia construct; these findings are not predicted by Baker et al.1.

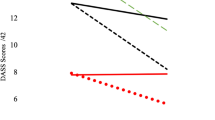

To find and validate a better factor solution, the sample was split into training (n = 262) and validation (n = 250) subsamples by randomization. We deemed these sample sizes adequate for factor analysis considering that they were close to the common recommendation of 30040, and we expected communalities to be at least moderate41. We then conducted an exploratory factor analysis (EFA) based on principal axis factoring with promax (oblique) rotation, with the intention of finding an empirically- and theoretically-sound factor solution for the data. In these analyses, we explored 1-, 2-, and 5-factor solutions as informed by the scree plot (see Fig. 1) and our theoretical understanding of the scale and the emotional processing model. We wanted to achieve distinct factors as characterized by factor loadings of all items ≥ 0.30 (ideally ≥ 0.40), with few or no substantial cross-loadings, and at least three items loading on each factor.
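The two procedural steps described above, a random split into training and validation subsamples and a check of a loading matrix against the stated criteria for distinct factors, can be sketched as follows. This is an illustration only: the seed, the function names, and the cut-off used for a "substantial" cross-loading (0.30) are assumptions, since the text does not specify them, and the loading matrices are invented.

```python
import random


def split_sample(ids, n_train, seed=0):
    """Randomly split participant ids into training and validation lists."""
    rng = random.Random(seed)
    shuffled = list(ids)
    rng.shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


def distinct_factors(loadings, primary_min=0.30, cross_max=0.30, min_items=3):
    """Check a pattern matrix (one row per item, one column per factor).

    Criteria: each item's largest absolute loading is >= primary_min,
    no other loading of that item reaches cross_max (assumed cut-off),
    and every factor has at least min_items primary items.
    """
    n_factors = len(loadings[0])
    counts = [0] * n_factors
    for row in loadings:
        abs_row = [abs(x) for x in row]
        primary = max(range(n_factors), key=lambda j: abs_row[j])
        if abs_row[primary] < primary_min:
            return False
        if any(abs_row[j] >= cross_max for j in range(n_factors) if j != primary):
            return False
        counts[primary] += 1
    return all(c >= min_items for c in counts)


train_ids, validation_ids = split_sample(range(512), n_train=262, seed=1)
print(len(train_ids), len(validation_ids))

# Invented two-factor example: three clean items per factor.
clean = [[0.7, 0.1], [0.6, 0.0], [0.5, 0.2], [0.1, 0.8], [0.0, 0.6], [0.2, 0.5]]
print(distinct_factors(clean))
```

Raising `primary_min` to 0.40 applies the stricter "ideal" criterion mentioned in the text.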

Exploratory factor analysis (EFA) of the EPS-15

The training data set was suitable for factor analysis (Bartlett’s test p < 0.001; KMO = 0.91). The scree plot (Fig. 1) was inconclusive: the knee was indicative of 1, or more probably 2, factors, whereas in parallel analysis the observed eigenvalues exceeded the simulated ones up to factor 5, even though the difference was small for factors 3 to 5. However, none of the freely estimated 1-, 2-, or 5-factor solutions for the EPS-25 achieved acceptable fit with distinct factors, that is, a solution in which each of the 25 items had a factor loading of at least 0.40 with minimal cross-loadings (see Table 1).

Development and dimensionality of the EPS-15

As neither confirmatory nor exploratory factor analysis resulted in an acceptable factor solution for the EPS-25, we sought to develop a shorter scale, the EPS-15, with a more distinct factor structure. We intended to identify a subset of the EPS-25 items that would allow for stronger model fit and distinct, yet correlated, factors. To achieve this goal, we started from the best-fitting 25-item CFA model (which had 5 factors corresponding to the conventional subscale scoring) and then deleted items stepwise, adding residual covariances where theoretically feasible, based on modification indices and our theoretical understanding (see Table 1), until exactly 3 items remained for each of the 5 factors. We subsequently validated this model in the validation data set.

Further statistical and theoretical considerations

Based on modification indices for the 25-item CFA training data 5-factor solution, item 6 (“Could not express feelings”) was moved to the impoverished emotion factor. The following 10 items were then removed step by step: 23, 17, 8, 14, 5, 6, 20, 19, 11, and 12. We found the correlation between the unregulated emotions and suppression factors to be unsatisfactory (r = 0.31, i.e., clearly lower than 0.40) and therefore replaced item 18 (“Felt urge to smash something”) with item 8 (“Reacted too much to what people said or did”) to increase the correlation between subscales to an acceptable r = 0.45. The resulting five-factor model on 15 items (three per factor) achieved acceptable model fit in terms of the RMSEA (0.050, [90% CI 0.033, 0.066]), SRMR (0.046), CFI (0.96), and TLI (0.95).

To arrive at the best-suited 15 of the 25 available items, the items were also scrutinized for adequate content validity by D.M. and R.J., who, at that time, did not know which 15 items the structural validation had suggested (see Table 3). Each item was deemed either fully indicative of its subfactor (coded as “yes”), only partly so (coded as “borderline”), or not an adequate description of the subfactor’s content (coded as “no”). Following these considerations, one additional change to the EPS-15 was made: we did not regard item 10 (“My feelings did not seem to belong to me”) as a convincing example of impoverished emotional experience understood as alexithymia42 and therefore reintroduced item 5 (“My emotions felt blunt/dull”; see Table 3) in its place.

The resulting 15-item 5-factor model (from both statistical and theoretical considerations) had improved model fit in the training data and acceptable, though not ideal, fit in the validation data (see Table 1). The average variance extracted was 47% in both the training and validation data. All factor correlations and factor loadings were 0.40 or higher in the training data and remained so in the validation data, except for the correlation between the unregulated emotions and suppression factors, which dropped to 0.36 in the validation.

Internal consistency of the EPS-15

As shown in Table 4, Cronbach’s alpha was good for the EPS-15 overall scale (α = 0.87) and for the suppression subscale (α = 0.83), and acceptable (α = 0.62–0.76) for the remaining subscales. The composite reliability coefficient (ω) was almost identical.
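Both the average variance extracted (AVE) and the composite reliability coefficient (ω) can be written as simple functions of the standardized factor loadings of a subscale. The sketch below uses the standard formulas under the assumption of a congeneric model with uncorrelated residuals; the function names and the loadings in the example are hypothetical and are not values from this study.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of a factor's items."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Omega for standardized loadings, assuming uncorrelated residuals.

    omega = (sum of loadings)^2 / ((sum of loadings)^2 + sum of residual variances),
    where each residual variance is 1 - loading^2.
    """
    total = sum(loadings) ** 2
    residual = sum(1.0 - l ** 2 for l in loadings)
    return total / (total + residual)


# Invented standardized loadings for a three-item subscale.
example_loadings = [0.7, 0.7, 0.6]
print(average_variance_extracted(example_loadings))
print(composite_reliability(example_loadings))
```

With three items loading around 0.60 to 0.70, the AVE falls below 50% while ω remains close to 0.70, which illustrates why short subscales can show acceptable reliability alongside a modest AVE.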

Convergent and discriminant validity of EPS-15

As seen in Table 5, the EPS-15 had a strong correlation with anxiety (r = 0.57) but a weak correlation with depressive symptoms (r = 0.25). Moreover, we found a moderate correlation between impoverished emotional experience and the alexithymia factor difficulty identifying feelings (r = 0.46), but no significant correlation with externally oriented thinking (TAS-20, factor 3).

Test–retest reliability of the EPS-15

The test–retest reliability for the EPS-15 over approximately 1 week (M = 8.06 days, SD = 1.35, range: 5–12 days) was good (ICC = 0.73).

Discussion

Based on data from 512 individuals with elevated psychiatric symptoms, we could not find a satisfactory factor solution for the 25-item Emotional Processing Scale. This led us to develop a briefer 15-item version of the scale, the EPS-15, for which we found an acceptable 5-factor solution that we validated using a split sample strategy. EPS-15 had good internal consistency and test–retest reliability and demonstrated discriminant validity from the construct of depressive symptoms, although less so from anxiety symptoms.

Inconsistent findings pertaining to the EPS-25

Evidence pertaining to the factor structure of the EPS-25 was inconsistent. On the one hand, the training data scree plot appeared to be indicative of one or probably two factors (suppression vs. other). On the other hand, in parallel analysis, higher eigenvalues were obtained up to the fifth factor, and a 5-factor solution was most promising in terms of model fit under both CFA and EFA. Most previous studies appear to speak for some type of 5-factor model, sometimes with a second order latent “emotional processing” factor17,19,20, but sometimes not2,10. There are several potential explanations for the difficulties we encountered in replicating these 5-factor solutions. In CFA, as suggested by Lauriola et al.17, poor fit may have been a result of this type of model not allowing for cross-loadings over factors in the same manner as EFA, which was used in the original publication2. However, undue reliance on cross-factor loadings for model fit could also indicate poorly defined factors. The existence of weak main factor loadings, in combination with strong cross-loadings, speaks against scoring of the five conventional subscales, and the brief EPS-15 did indeed achieve acceptable fit under CFA. Importantly, in our data, the EPS-25 5-factor EFA model, for which item factor loadings were estimated freely, was also not satisfactory, especially as it had a pattern of factor loadings that was clearly inconsistent with the conventional scoring of the EPS-25 subscales: items 6, 8, 19, and 23 exhibited cross-loadings, and items 9, 10, and 14 did not belong to any factor.

Properties and potential advantages of the EPS-15

The factor structure of the EPS-15 brief scale appeared to replicate over the training and validation samples. Considering that all item factor loadings were 0.40 or higher, and acceptable model fit was achieved in the CFA framework without the need to specify cross-loadings (that is, each item loaded only on its intended factor), the factor solution appeared to support scoring of five separate subscales.

The EPS-15 demonstrated discriminant validity from the construct of depressive symptoms, although to a lesser degree from anxiety symptoms. In previous studies, the EPS-25 has not shown adequate discriminant validity from either depressive or anxiety symptoms10,19,20, and we also found that the EPS-25 had a substantial relationship with both anxiety symptoms and depressive symptoms. Moreover, it has been suggested1 that the EPS should not correlate with the externally-oriented thinking subscale of the Toronto Alexithymia Scale-20, but in previous studies, subscales of the EPS-25 have been found to correlate significantly, albeit weakly, with this alexithymia facet24. In addition, two of the EPS-25 subscales in this study had moderate correlations with externally-oriented thinking, but this was not the case for the EPS-15, further strengthening EPS-15 discriminant validity.

In the only two previous studies that have examined EPS-25 test–retest reliability, it has been found to be good to excellent2,19. In the current study, both the EPS-25 and the EPS-15 demonstrated good test–retest reliability over approximately 1 week.

Compared to the EPS-25, the shorter EPS-15 will be easier to administer and complete, especially when used in combination with other scales (such as in routine care screening batteries), when space is limited (such as in epidemiological research), and when repeated measurements are conducted (such as during treatment). Moreover, without losing any of the important psychometric strengths of the EPS-25 (i.e., internal consistency, test–retest reliability), the EPS-15 was better able to discriminate emotional processing from depressive symptoms and facets of the alexithymia construct.

Limitations

There are several limitations of this study. The test–retest reliability and the convergent and discriminant validation analyses were conducted on a small sample. Also, the use of randomization to form the training and validation subsamples did not result in as stringent a validation as a true replication in data from an entirely new sample.

Participants in the current analyses self-selected to engage in internet-delivered emotion-focused treatments, and it is not clear how similar this population is to those in clinical practice or in the community, thus limiting generalizability of the findings. Moreover, the participants in the sample used for studying concurrent and discriminant validity were all diagnosed with somatic symptom disorder, and generalization to other samples, including healthy people and those with other psychiatric conditions, is limited.

The difficulties replicating the Baker et al. (2010) original 5-factor structure with adequate factor loadings for 25 items in this study could partly stem from our studying a population of individuals with elevated psychiatric symptoms. Almost all previous validation studies were on healthy samples and/or medical populations17,18,19,20. Emotional processing difficulties might differ between healthy people and those with elevated psychiatric symptoms. However, replicating the original findings of Baker et al. (2010) has been consistently difficult in other validation studies of healthy and/or medical samples as well17,18,19,20. Moreover, to find an adequate factor solution, previous authors have excluded problematic items, in effect shortening the original 25-item scale18,20. We believe that our difficulty replicating Baker et al. (2010) stems not only from differences in population but also from differences in factor analytic procedures and from “problematic” items with cross-loadings that were initially used to develop the psychometric properties of the EPS-25.

Overall discussion and future studies

Despite the interest in modelling and measuring emotional processing, considerable challenges remain, and many questions require further investigation. Further structural validation studies of the EPS-15 comparing different populations (e.g., comparing psychiatric to healthy populations) would be of interest. EPS-15 convergent validity should be further clarified by relating it to other measures of emotional processing, such as emotional awareness (measured by the Levels of Emotional Awareness Scale) or emotional regulation (measured by the Difficulties in Emotion Regulation Scale). Further item analysis of the EPS-15 would be useful, given that the average variance extracted was around 50% (barely acceptable). It would also be preferable to further reduce the error variance of the EPS-15, for example by replacing or rephrasing items with relatively low factor loadings (e.g., EPS-25 equivalent items 4, 5, 13) or a particularly poor theoretical basis (e.g., EPS-25 equivalent items 5 and 9; see Table 2).

Despite continued challenges, we believe that this study contributes to the existing body of knowledge in several ways. First, adequate test–retest reliability in a sample with elevated psychiatric symptoms (or, more specifically, somatic symptom disorder) has not been previously demonstrated. This is important as the EPS-25 is recommended for longitudinal psychotherapy research1,10,17. Second, despite reducing the number of items to 15, a 5-factor solution was retained, which is in line with previous research. This factor structure is important for clinicians, for example, who might wish to describe or address patients’ difficulties in emotional processing in five different domains. Third, we believe that this study overcomes some of the methodological shortcomings of previous research on the EPS-25, and that the EPS-15 holds promise in populations with psychiatric symptoms. In conclusion, the EPS-15 is a promising short-form questionnaire for basic and clinical studies, although further research on both its reliability and validity should be conducted.

References

Baker, R., Thomas, S., Thomas, P. W. & Owens, M. Development of an emotional processing scale. J. Psychosom. Res. 62, 167–178 (2007).

Baker, R. et al. The emotional processing scale: Scale refinement and abridgement (EPS-25). J. Psychosom. Res. 68, 83–88 (2010).

Rachman, S. Emotional processing. Behav. Res. Ther. 18, 51–60 (1980).

McRae, K. & Gross, J. Emotional regulation. Emotion 20, 1–9 (2020).

Baker, R. Emotional Processing-Healing through Feeling. (Lion Hudson plc, 2007).

Novakova, B., Howlett, S., Baker, R. & Reuber, M. Emotion processing and psychogenic non-epileptic seizures: A cross-sectional comparison of patients and healthy controls. Seizure 29, 4–10 (2015).

Williams, I. A., Howlett, S., Levita, L. & Reuber, M. Changes in emotion processing following brief augmented psychodynamic interpersonal therapy for functional neurological symptoms. Behav. Cogn. Psychother. 46, 350–366 (2018).

Phillips, K., Wright, B. J. & Kent, S. Psychosocial predictors of irritable bowel syndrome diagnosis and symptom severity. J. Psychosom. Res. 75, 467–474 (2013).

Esteves, J. E., Wheatley, L., Mayall, C. & Abbey, H. Emotional processing and its relationship to chronic low back pain: Results from a case-control study. Man. Ther. 18, 541–546 (2013).

Gay, M.-C. et al. Cross-cultural validation of a French version of the Emotional Processing Scale (EPS-25). Eur. Rev. Appl. Psychol. 69, 91–99 (2019).

Horsham, S. & Chung, M. C. Investigation of the relationship between trauma and pain catastrophising: The roles of emotional processing and altered self-capacity. Psychiatry Res. 208, 274–284 (2013).

Kemmis, L. K., Wanigaratne, S. & Ehntholt, K. A. Emotional processing in individuals with substance use disorder and posttraumatic stress disorder. Int. J. Ment. Health Addict. 15, 900–918 (2017).

Baker, R. et al. Does CBT facilitate emotional processing?. Behav. Cogn. Psychother. 40, 19–37 (2012).

Kharamin, S., Malekzadeh, M., Aria, A., Ashraf, H. & Ghafarian, S. H. R. Emotional processing in patients with ischemic heart diseases. Open Access Maced. J. Med. Sci. 6, 1627–1632 (2018).

Gay, M.-C. et al. Anxiety, emotional processing and depression in people with multiple sclerosis. BMC Neurol. 17, 43 (2017).

Pourmohammad, F. F. & Shirazi, M. Comparison quality of life and emotional processing among patients with major thalassemia, type 2 diabetes mellitus, and healthy people. Jundishapur J. Chronic Dis. Care 9, 12 (2020).

Lauriola, M. et al. The structure of the emotional processing scale (EPS-25): An exploratory structural equation modeling analysis using medical and community samples. Eur. J. Psychol. Assess. https://doi.org/10.1027/1015-5759/a000632 (2021).

Spaapen, D. Emotion Processing-Relevance to Psychotic-Like Symptoms (Springer, Berlin, 2015).

Kharamin, S., Shokraeezadeh, A. A., Shirazi, Y. G. & Malekzadeh, M. Psychometric properties of Iranian version of emotional processing scale. Open Access Maced. J. Med. Sci. 9, 217–224 (2021).

Orbegozo, U., Matellanes, B., Estévez, A. & Montero, M. Adaptación al castellano del emotional processing scale-25. Ansiedad Estrés 24, 24–30 (2018).

Górska, D. & Jasielska, A. Konceptualizacja przetwarzania emocjonalnego i jego pomiar-badania nad polską wersją skali przetwarzania emocjonalnego Bakera i współpracowników. Studia Psychologiczne 2, 75–87 (2010).

Jasielska, A. & Górska, D. Badania porównawcze przetwarzania emocjonalnego w populacjach angielskich i polskich studentów-doniesienie z badań. Studia Psychologiczne 4, 5–13 (2013).

Petermann, F. Emotional processing scale (EPS-D). Z. Für Psychiatr. Psychol. Psychother. 66, 256–257 (2018).

Gay, M.-C. Personal communication regarding errors in reporting correlations between EPS-25 and TAS-20. (2021).

Johansson, R. et al. Affect-focused psychodynamic psychotherapy for depression and anxiety through the Internet: a randomized controlled trial. PeerJ 1, e102 (2013).

Johansson, R. et al. Internet-based affect-focused psychodynamic therapy for social anxiety disorder: A randomized controlled trial with 2-year follow-up. Psychotherapy 54, 351–360 (2017).

Maroti, D. et al. Internet-administered emotional awareness and expression therapy for somatic symptom disorder with centralized symptoms: A preliminary efficacy trial. Front. Psychiatry 12, 620359 (2021).

Maroti, D. et al. Internet-based emotional awareness and expression therapy for somatic symptom disorder–a randomized controlled trial. In submission (2022).

Brislin, R. Back-translation for cross-cultural research. J. Cross-Cultural Psychol. 2, 185–216 (1970).

Holmström-Karlsson, S. Sudden gains under affektfokuserad psykodynamisk behandling för depression och ångest via Internet–förekomst, inverkan på utfall och möjliga orsaker [Unpublished master thesis]. (2012).

Bagby, R. M., Parker, J. D. A. & Taylor, G. J. The twenty-item Toronto Alexithymia scale—I. Item selection and cross-validation of the factor structure. J. Psychosom. Res. 38, 23–32 (1994).

Bagby, R. M., Taylor, G. J. & Parker, J. D. A. The twenty-item Toronto Alexithymia scale—II. Convergent, discriminant, and concurrent validity. J. Psychosom. Res. 38, 33–40 (1994).

Simonsson-Sarnecki, M. et al. A Swedish translation of the 20-item Toronto alexithymia scale: Cross-validation of the factor structure. Scand. J. Psychol. 41, 25–30 (2000).

Kroenke, K., Spitzer, R. L., Williams, J. B. W. & Löwe, B. The patient health questionnaire somatic, anxiety, and depressive symptom scales: A systematic review. Gen. Hosp. Psychiatry 32, 345–359 (2010).

Spitzer, R. L., Kroenke, K., Williams, J. B. W. & Löwe, B. A brief measure for assessing generalized anxiety disorder: The GAD-7. Arch. Intern. Med. 166, 1092 (2006).

Hu, L. & Bentler, P. M. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 6, 1–55 (1999).

jamovi (Version 1.2). The Jamovi project (2020). [Computer Software].

Cohen, J. A power primer. Psychol. Bull. 112, 155–159 (1992).

Cicchetti, D. Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Science 2, 11470 (1994).

Field, A. Discovering Statistics Using IBM SPSS Statistics 5th edn. (Sage Publications Inc, 2018).

Guadagnoli, E. & Velicer, W. F. Relation of sample size to the stability of component patterns. Psychol. Bull. 103, 265–275 (1988).

Sifneos, P. E. Affect, emotional conflict, and deficit: An overview. Psychother. Psychosom. 56, 116–122 (1991).

Funding

Open access funding provided by Karolinska Institute. This study was funded by the Söderström-Königska foundation and Karolinska Institute’s research grant.

Author information

Contributions

D.M., E.A. and R.J. made substantial contributions to the conception of the work. D.M. wrote the first draft; E.A. in particular, but also all other authors (B.L., M.L., R.J. and G.A.), made substantial contributions to the revision of the manuscript. E.A. led the formal analysis, with contributions from D.M. and R.J. G.A. and R.J. made substantial contributions to the acquisition of data.

Ethics declarations

Competing interest

The authors declare no conflict of interest. Publication of this research was approved by Dr Roger Baker, developer of the EPS-25, and Hogrefe Publishing Group, owner of the EPS-25.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Maroti, D., Axelsson, E., Ljótsson, B. et al. Psychometric properties of the emotional processing scale in individuals with psychiatric symptoms and the development of a brief 15-item version. Sci Rep 12, 10456 (2022). https://doi.org/10.1038/s41598-022-14712-x
