Abstract

Predicting the compressive strength of concrete is a complicated process due to the heterogeneous mixture of concrete and high variable materials. Researchers have predicted the compressive strength of concrete for various mixes using machine learning and deep learning models. In this research, compressive strength of high-performance concrete with high volume ground granulated blast-furnace slag replacement is predicted using boosting machine learning (BML) algorithms, namely, Light Gradient Boosting Machine, CatBoost Regressor, Gradient Boosting Regressor (GBR), Adaboost Regressor, and Extreme Gradient Boosting. In these studies, the BML model’s performance is evaluated based on prediction accuracy and prediction error rates, i.e., R2, MSE, RMSE, MAE, RMSLE, and MAPE. Additionally, the BML models were further optimised with Random Search algorithms and compared to BML models with default hyperparameters. Comparing all 5 BML models, the GBR model shows the highest prediction accuracy with R2 of 0.96 and lowest model error with MAE and RMSE of 2.73 and 3.40, respectively for test dataset. In conclusion, the GBR model are the best performing BML for predicting the compressive strength of concrete with the highest prediction accuracy, and lowest modelling error.

Similar content being viewed by others

Introduction

Literature review and problem statement

Concrete has been commonly used in construction and architecture due to its favourable engineering properties. Concrete has the characteristics of rich raw material, low price, and high compressive strength and good durability1. Concrete comprises four primary components: coarse aggregate, fine aggregate, cement, and water. Concrete's economic value allows it to be widely used in constructions and the accessibility to the material available in the local market. It also demonstrates excellent benefits over other construction materials such as steel, and concrete can be produced with minimum effort. In certain instances, supplementary materials like fly ash (PFA)2,3, blast furnace slag (GGBS)4, silica fume5, and other industrial waste/by-products are added in concrete to enhance the mechanical properties of the concrete4. The introduction of industrial waste/by-product6,7 into concrete offers environmental benefits while increasing the longevity and resiliency of concrete structures.

Among the various concrete property indices, compressive strength is the most critical because it is directly related to the structural safety and is required for determining the performance of structures throughout their life, from new structural design to old structural assessment8.

When dealing with concrete materials, one of the difficulties in selecting the appropriate materials and predicting the mechanical properties of the concrete, i.e., compressive strength, is due to cost and the availability of local material9. It is vital to have robust and reliable predictive models based on existing input and output data at the early stage to drive down the cost of making further experiments and reduce the cost associated with the risk of non-compliance concrete during construction5. With the use of suitable models, it can lead to success in finding combination inputs that can achieve meaningful outcomes and, at the same time, saves considerable time and money. However, empirical, and statistical models, such as linear and nonlinear regression, have been widely used. However, these models require laborious experimental work to develop, and can provide inaccurate results when the relationships between concrete properties and mixture composition and curing conditions are complex10.

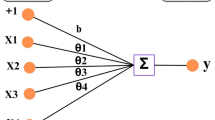

ML is a sub-class of AI that self-learning through algorithms and improves its performance based on previous datasets/experience. The distinction between AI, ML, and DL is illustrated in Fig. 1. With minimal human input, ML algorithms will automatically learn and improve over time6. ML has been widely applied in the field of engineering to solve a variety of problems i.e., predict outages, estimate angular velocity, components failure prognostics and prediction of fatigue life11,12,13,14. In civil engineering, AI and ML have been previously employed to tackle problems in various structural engineering fields15. ML application is also used in building structural design & performance assessment, improving finite element modelling of structures, and enhancing concrete properties prediction & assessment9,16,17,18,19,20.

Given the popularity of machine learning, especially in concrete technology, various studies have been conducted using ML/DL approaches10. Table 1 below shows the summary of concrete compressive strength prediction for various types of concrete using various ML and DL models. Many empirical and statistical models, i.e., linear and nonlinear regression algorithms, were employed to predict the properties of concrete10. Multiple Linear Regression (MLR)21, Support Vector Machine (SVR)22,23, Multilayer Perceptron (MLP)24, and Gradient Boosting25,26 are most used ML algorithms to predict the mechanical and chemical properties of concrete. In general, the compressive strength prediction was undertaken for several type of concrete i.e., ordinary concrete8,10, high-performance concrete25,27,28,29,30, ultra-high-performance concrete 20, and green concrete with supplementary cementitious material i.e., fly ash16,31,32, blast furnace slag4 and recycled aggregates6. ML/DL is also used to predict other mechanical and chemical properties of concrete, i.e., prediction of concrete shear strength15,24, 30], tensile strength33, flexural strength5, the thermal conductivity of concrete34, and chloride concentration of concrete35.

For the DL model, Artificial Neural Network (ANN)4,7,29,36,37,38 was widely used in most previously reported studies. The use of boosting algorithms is not extensively reported in any previous studies except the GBR models. The proposed boosting algorithms were chosen based on their popularity and frequency in other research areas such as biomedical and construction hazard analysis, which reports that the BML models have higher prediction accuracy than other ML and DL models39,40,41,42. We implemented and analysed the accuracy and error of compressive strength prediction for five different boosting algorithms, namely LBGM, CATB, GBR, ADAB, and XGB. Additionally, the BML models are enhanced using the Random Search (RS) optimization process, which involves tuning the hyper-parameters of the BML algorithms.

Objectives

The study's objective is to identify the best performing BML models, i.e., LBGM, CATB, GBR, ADAB, and XGB to predict the HPC with high volume GGBS using BML algorithms, i.e., LBGM, CATB, GBR, ADAB, and XGB. The BML models were then optimised using the Random Search (RS) optimisation process by tuning the hyper-parameters of each BML model function. Additionally, comparison studies were also conducted using commonly used ML models, i.e., linear regression, decision tree, random forest, etc., to evaluate the performance of the BML model in predicting the concrete strength.

The fundamentals behind BML algorithms models are defined in Sect. 2, followed by the statistical properties analysis of the dataset & modelling approach, findings from the optimised BML model, comparison studies between other ML models, and model validation results are provided in Sect. 3. The findings of each model’s prediction accuracy and modelling errors are concluded in Sect. 4.

Methodology

BML algorithms

Light gradient boosting machine (LBGM)

LGBM is a gradient boosting framework that uses tree-based learning algorithms developed by Microsoft50. LBGM uses two innovative sampling techniques: Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB). GOSS excludes a substantial fraction of data instances with small gradients and uses the remainder to estimate the information gain. Since data instances with large gradients contribute more to the computation of information gain, GOSS can generate a highly accurate estimate of information gain with a significantly smaller data set.

EFB allows for the grouping of mutually exclusive features, hence reducing the number of features. It also demonstrates that while determining the optimal bundling of exclusive features, a greedy approach can reach an approximation ratio of relatively high. It was reported that LGBM speeds up the training process of conventional GBDT by up to over 20 times while achieving almost the same accuracy, and it is six times faster than XGBoost50.

CAT boost regressor (CATB)

CATB is an open-sourced machine learning algorithm developed by Yandex in 2017. CATB is a decision tree algorithm based on gradient boosted decision trees. The algorithms in CATB models are a series of decision trees constructed sequentially, with each new tree having a lower loss than the prior trees. The starting parameters determine the number of trees generated, and overfitting is avoided using an overfitting detector. The processes of tree construction for a single tree in CATB algorithms include computing splits in advance, converting categorical features to numerical features, selecting the tree structure, and calculating values in leaves.

Generally, CATB employed greedy algorithms in optimising the prediction accuracy. The features of CATB models are ordered according to their splits and are then substituted in each leaf. The depth of the tree and other constraints for structure selection is specified with pre-modeling parameters, and a random permutation of classification/regression objects is conducted before the construction of each new tree. CATB models validate the model performance with a metric that indicates the direction in which the function should be improved further when deciding the construction of the next tree. CATB model surpasses leading GBR packages and achieves new state-of-the-art performance on common benchmarks51,52.

Gradient boosting regressor (GBR)

Friedman presented the GBR model as an ensemble method for regression and classification in 1999. The gradient boosting approach compares each iteration of the randomly chosen training set to the base model. In the GBR model, the lower the training data fraction, the faster the regression, as the model fits smaller data each iteration. GBR model requires the following tuning parameters: ntrees and shrinkage rate, where ntrees is the number of trees to be grown, and the shrinkage parameter, often referred to as the learning rate applied to each tree in the expansion25,53.

This algorithm's fundamental foundation is 'boosting.' The boosting process aids in transitioning prediction from a 'weak' learner via the additive training process. The essential advantage of GBR algorithms is that it avoids overfitting and makes efficient use of computational resources by using an objective function. Besides improving output performance, GBR algorithms reduce the selected error function further54.

Adaboost regressor (ADAB)

ADAB, an acronym for Adaptive Boosting, is a meta-algorithm for statistical categorization developed in 2003 by Yoav Freund and Robert Schapire. It can be combined with a variety of other types of learning algorithms to enhance performance. The output of the other learning algorithms, i.e., 'weak learners,' is combined into a weighted sum representing the boosted classifier's final output. ADAB is adaptive because it adjusts succeeding weak learners favoring instances misclassified by previous classifiers/regressors. It is less prone to overfitting than other learning algorithms in some cases29.

The individual learners in ADAB algorithms may be ineffective. Still, if their performance is marginally better than random guessing, the final model can be demonstrated to converge to a powerful learner. This technique benefits from a single best-fit decision model formed from the outcomes of several decision trees, each of which is constructed using a random selection of base features, i.e., decision factors from a training dataset55,56.

Extreme gradient boosting (XGB)

Extreme Gradient Boosting (XGB) or XGBoost is a decision tree-based ensemble ML algorithm that uses gradient boosting to make predictions for unstructured data. Tianqi Chen and Guestrin developed XGBoost, and the method uses the conventional tree gradient boosting algorithm45 to create state-of-the-art algorithms, the ‘extreme gradient boosting’23. The multiple Kaggle competition winner ‘XGBoost’ is a highly effective ML algorithm due to its scalable tree boosting system and sparsity-aware algorithm in modelling structured datasets. The algorithm has been the source of countless cutting-edge applications, and it has been the driving force behind many of these recent advances. It’s been widely used as industrial solutions such as customer churn prediction57, applicant risk assessment58, malware detection59, stock market selection60, classification of traffic accidents61, diseases identification40, and even in predicting the death of patience during SARS-COV-2(Covid-19) treatment42. The most significant benefit of XGBoost is its scalability across any condition62. In general, the XGBoost algorithms are the evolution of decision tree algorithms that were improved over time. Figure 2 below shows the development of decision tree-based algorithms to XGBoost.

Model structure

For the most part, we utilised the Python programming language on Google's Colab platform to analyse the data and create the models. An open-source, low-code machine learning library ‘PyCaret’ was used in research63. Figure 3 illustrates the step-by-step procedure for training, optimising, and validating the BML models in predicting the concrete compressive strength. Seven key processes are involved in the development of the optimised BML model, and each stage is described in detail below, with brief explanations:

-

i.

Data Collection – This entails collecting data from the laboratory and compiling it appropriately.

-

ii.

Data Pre-Processing – To correctly identify and arrange the acquired data, it is necessary to sort out the missing values and then normalise the dataset in preparation for model building.

-

iii.

Model Selection—For prediction and evaluation in this research, BML algorithms, i.e., LBGM, CATB, GBR, ADAB, and XGB, were utilised.

-

iv.

Hyper-parameter Optimisation – The RS approach was employed in each of the five proposed BML algorithms, and the results are compared to the original models.

-

v.

Model Validation—Validation and testing of the models were performed using the k-fold cross-validation approach, which randomly splits the dataset and minimises overfitting.

-

vi.

Model Evaluation – All the models are compared, and the best performing algorithms are selected based on evaluation metrics, i.e., R2, RMSE, MAE, MSE, RMSLE, MAPE.

-

vii.

Analysis and Reporting – The findings in the case study are reported based on comparing various ML models, optimisation parameters, and evaluation metrics.

Data collection and pre-processing

Overview

A total of 152 data of HPC compressive strength data were gathered from concrete trial mix conducted at a laboratory in Selangor, Malaysia. In general, the dataset is composed of seven concrete components: fine aggregate, coarse aggregate, ordinary Portland cement (OPC), ground granulated blast-furnace slag (GGBS), silica fume (SF), water, admixture, and moisture content (MC). The dataset also contains concrete compressive strength of a Grade 80 HPC, and the compressive strength results are available for 7, 28, 56, and 91 days. On average, each batch of concrete contains around 246 kg of GGBS and OPC, respectively.

The proportion of cementitious content in each batch is around 45% of GGBS, 45% of OPC, and 10% of SF. Similarly, the ratio of fine to coarse aggregate is 1:1, equating to 70% of total concrete volume with a 0.25 water-to-cement ratio, or 138 kg of water in each batch of concrete. Additionally, the moisture content of fine and coarse aggregate was included as input parameters since the water content in each concrete batches was adjusted according to the moisture content in the aggregates. Details of statistical metrics are listed in Table 2 below.

Data distribution analysis

The distribution correlations between the input parameters and the compressive strength are shown in Fig. 4. It illustrates the correlation between the data points by including the relative frequency distribution of each input parameter. Generally, the distribution of input parameters suggests that the dataset is appropriately distributed and fit for machine learning modelling.

Correlation coefficient analysis

Along with statistical and distribution analysis, a correlation coefficient study was performed to analyse the dataset and prepare for modelling. Pearson’s correlation coefficient approach indicated in Eq. 1 was used to calculate the correlation coefficient64. Pearson's correlation coefficient is a test statistic that shows the statistical link between two continuous variables. It is based on the covariance approach, in which the best method is considered for determining the relationship between two variables of interest. It reveals both the size of the association or correlation and the direction of the relationship. The correlation between all parameters was analysed for this research and visualized in Fig. 5 as a Pearson’s correlation heatmap.

where;

\(r\) = correlation coefficient.

\(x_{i}\) = values of the x-variable in a sample.

\(\overline{x}\) = mean of the values of the x-variable.

\(y_{i}\) = values of the y-variable in a sample.

\(\overline{y}\) = mean of the values of the y-variable.

As shown in Fig. 5 above, it can be observed that the correlation between input and output parameters is relatively low and generally in the range of -0.62 to 0.64. The range of the correlation coefficients indicates that the input variables can be considered low to moderately correlated to the compressive strength.

Data split and normalisation

The dataset's modelling proportion was randomly partitioned into two sets, i.e., training and testing dataset. Around 70% of the dataset was utilized for training the BML models, whereas 30% were used to test the models65, 65 Before training BML models, pre-processing data is required. To prevent training from being dominated by one or a few features with large magnitude, features should be normalised so that their range is consistent. The Z-score normalisation method was used in this study to normalise all values in a dataset so that the mean of all values is 0 and the standard deviation is 1. Equation 2 below shows the formula to perform a z-score normalization on every value in a dataset:

where:

x: Original value.

μ: Mean of data.

σ: Standard deviation of dataset.

Model validation using K-Fold cross-validation

Validation and testing of the models were performed using the k-fold cross-validation method illustrated in Fig. 6. In this study, a total of ten folds or k value of 10 were used. The dataset is randomly separated into test and training data and divided into k groups, using this cross-validation procedure. Validation of the model is performed on one of the groups, and training is performed on the remaining groups. The process is performed k times more until each distinct group is used as the validation set. The ultimate performance of the model is determined using test data that the model ‘not seen’ during training.

K-fold cross-validation enables the model to be trained and verified several times, resulting in a more accurate model with less overfitting. With the more traditional hold-out strategy, the dataset is partitioned into training, validation, and test sets, which reduces number of samples for model training. The model's performance is contingent upon a random selection of samples for the training, validation, and test sets.

Model evaluation

In this paper, six separate statistical measurement parameters were used to calculate the prediction efficiency of the BML models. In simpler terms, the evaluation parameters estimate the accumulated error in predictions concerning actual observations. The statistical parameters are: coefficient of determination (R2), mean absolute error (MAE), root mean squared error (RMSE), mean squared error (MSE), root mean squared logarithmic error (RMSLE), and mean absolute percentage error (MAPE). These mathematical formulations are defined in Eqs. 3–8; in this case, n is the total number of test dataset records while y′ and y are the predicted and measured values, respectively. The values of R2 would range from 0 to 1 – the closer the value is to 1, the higher fitting optimisation of the model is. The values MAE, RMSE, MSE, RMSLE, and MAPE are used to evaluate modelling error—the smaller the value, the lesser the difference between the predicted and measured values.

Results and discussion

Initial modelling

The LBGM, CATB, GBR, ADAB, and XGB algorithms were initially modelled using their default hyper-parameter settings. Each model's performance is measured in terms of prediction accuracy and error rates, i.e., R2, MSE, RMSE, MAE, RMSLE, and MAPE. The findings of the initial modelling are summarised in Table 3 below.

LBGM predicted the compressive strength of concrete with the highest prediction accuracy and the least prediction errors of all five BML models. The initial modelling of LBMG reached 0.86 and 0.94 for the training and testing prediction scores, respectively. GBR and XGB models also performed well, with prediction accuracy of 0.93 and 0.92 on the test dataset. The evaluation metrics in the LGBM model was the lowest in comparison to other BML models, with an MAE of 3.29, an RMSE of 4.10, and an RMSLE of 0.03 for test dataset. The GBR model was the second-best model in prediction errors with MAE and RMSE values of 3.24 and 4.22, respectively. The distribution of predicted results against actual results for all BML models are visualized in Fig. 7, along with the best fit line for the prediction distribution. The initial modelling suggests a reasonable prediction result; however, it is further improved by using the RS algorithm, which is discussed in detail in the following section.

In terms of prediction distribution, the LBGM and CATB models have the highest training scores of 0.86 and 0.85, respectively. In contrast, their prediction scores for the test dataset are significantly higher at 0.94 and 0.93. It suggests that both models concentrated on optimising the test score to get the maximum possible prediction accuracy. The prediction distribution indicates that the LBGM, GBR, and XBG results are closely spaced along the best fit lines compared to the CATB and ADAB models.

Model optimisation with RS algorithm

The RS algorithm focuses on the use of random combinations to optimise the hyperparameters of a model. It measures random combinations of a set of values to optimise decent outcomes, with the function tested at any number of random combinations in the parameter space. The chances of discovering the optimal parameter are relatively higher in RS algorithms compared to Grid Search algorithm due to various search patterns in the model being trained on the optimised parameters without aliasing. RS algorithms are best for lower dimensional data as this method takes less time and iterations to find the right parameter combination67. Numerous hyperparameters were optimised in this study, including n_estimator, learning_rate, max_depth, and subsample and min_sample_split. A total of 1000 iteration was performed to identify the performing model and the optimum hyperparameters for each BML models. Table 4 below shows the hyperparameters and values used for all the model, before and after optimisation process.

Based on the optimised BML models, the GBR model achieved the highest prediction accuracy of 0.96 for the test dataset followed with LBGM and CATB model with R2 of 0.95. In comparison, the optimised GBR model had the lowest prediction errors for test errors, with an MAE of 2.73, an RMSE of 3.40, respectively. For training dataset, the CATB model recorded lowest prediction error and highest prediction accuracy of 0.89. Table 5 shows the summary of prediction accuracy and evaluation metrics for the optimised BML models.

The comparison of the training and test datasets for both the initial and optimised BML models is shown in Fig. 8. In general, the RS algorithm improves prediction accuracy and reduces the modelling error for the training dataset of all BML models. However, the optimised ADAB model show a minor deficiency compared to the training results. The overall performance of BML models with RS optimisation shows that the GBR model is the best performing model with highest prediction accuracy and lowest modelling errors while the LGBM model are the best model without any optimisations with highest prediction accuracy and lowest modelling errors.

The prediction distributions of the optimised BML models appear to have a similar pattern for both the training and test datasets, with only a minor difference in prediction scores. The RS algorithms optimise the BML models to obtain a high prediction accuracy and a low error rate by tuning the hyperparameters for both the training and test datasets while simultaneously improving model performance. As presented in Fig. 9, the LBGM, CATB, and GBR all suggest a closed space between best fit and the identity line, demonstrating that the model's predictions are highly accurate.

Features importance analysis

The explainability and interpretability of ML models are active areas of research that seek to understand why and how an ML model predicts output values. Numerous techniques, including feature importance analysis (FIA), are frequently used to explain and interpret ML models68. The permutation FIA techniques are model-dependent, which means they evaluate model predictions rather than the actual data. The explainability and interpretability metrics reveal how well ML model predictions correspond to physical knowledge. Additionally, it enables the discovery of hidden correlations between targets and features that are not readily visible in the data by allowing ML models to make correct predictions.

The original dataset is updated for each feature by randomly shuffling the feature values. The model's evaluation metric for the updated dataset is computed and compared to the original dataset's evaluation metric. This procedure is repeated numerous times for each feature to get the mean and standard deviation of the permutation importance score.

In this research, the permutation FIA was performed in all BML models to understand the influence of each feature/component of concrete in predicting the compressive strength of concrete. Figure 10 below displays all the features used in the compressive strength prediction model and their relative importance. ‘Days’ are an essential feature for all BML models, and SF is the least important feature in GBR, ADAB, and XGB models. It demonstrates that changes to the day's value in the dataset substantially affect the concrete compressive strength results. In contrast, changes in SF value have a considerably low impact on the strength prediction.

Comparison between various ML algorithms

Subsequently, the initial BML models without optimisation was compared to 14 commonly used ML algorithms, including linear regression (LR), decision trees (DT), random forests (RF), and extra trees (ET), etc. Table 6 shows the summary of prediction accuracy and the evaluation metrics for 14 comparison ML models. For Table 6, only test dataset values were provided as the purpose of this section is to make comparison between BML model and other conventional models. Generally, all comparison models exhibit much lower prediction scores and more significant prediction errors than BML models. The comparison models show that the ET and RF models were the best performing model with an R2 of 0.78. Similarly, both models produced prediction errors, i.e., MAE and RMSE of 4.17 and 5.0, respectively. Overall, the comparison models demonstrate that the initial BML model outperforms all other machine learning models.

Conclusion and recommendation

Comparing all 5 BML models, the GBR model has outperformed the LBGM, CATB, ADAB, and XGB models. The GBR model optimised with RS algorithms achieved the highest prediction accuracy of 0.96 and the least prediction errors, with an MAE of 2.73, an RMSE of 3.40, and an RMSLE of 0.03. Notably, the RS algorithms optimisation technique improved the model prediction accuracy and reduced the modelling errors in all 5 BML models. Simultaneously, the evaluation of 14 commonly used ML models also suggests that the BML models have superior prediction accuracy and minimum prediction errors. These studies conclude that the optimised BML models, i.e., the GBR model are the best choice to predict the compressive strength of concrete, mainly for HPC and concrete with high volume GGBS replacements. For future research, a comparison study between ANN models with BML models or hyperparameter tuning with different optimisation algorithms, i.e., Grid Search, can be evaluated and compared with the proposed BML model's performance.

Data availability

All data generated or analysed during this study are included in this published article [and its supplementary information files]. GitHub: https://github.com/vilini007/HPC-GGBS-Concrete/blob/bae42e3d9f59743961176e43a4a504c51109e9c7/HPC_GGBS.ipynb. Google Colab: https://colab.research.google.com/drive/1fZzZfuTKI9MvD4enxCGiEVB0_d8p62Jk?usp=sharing.

References

Chung, K. L., Wang, L., Ghannam, M., Guan, M. & Luo, J. Prediction of concrete compressive strength based on early-age effective conductivity measurement. J. Build. Eng. https://doi.org/10.1016/j.jobe.2020.101998 (2020).

Nguyen, K. T., Nguyen, Q. D., Le, T. A., Shin, J. & Lee, K. Analyzing the compressive strength of green fly ash based geopolymer concrete using experiment and machine learning approaches. Constr. Build. Mater. 247, 118581. https://doi.org/10.1016/j.conbuildmat.2020.118581 (2020).

Gomaa, E., Han, T., ElGawady, M., Huang, J. & Kumar, A. Machine learning to predict properties of fresh and hardened alkali-activated concrete. Cement Concrete Composites 115(2020), 103863. https://doi.org/10.1016/j.cemconcomp.2020.103863 (2021).

Chiew, F. H. Prediction of blast furnace slag concrete compressive strength using artificial neural networks and multiple regression analysis. Proceedings - 2019 International Conference on Computer and Drone Applications, IConDA 2019, pp. 54–58, 2019, https://doi.org/10.1109/IConDA47345.2019.9034920.

Kang, M. C., Yoo, D. Y. & Gupta, R. Machine learning-based prediction for compressive and flexural strengths of steel fiber-reinforced concrete. Constr. Build. Mater. 266, 121117. https://doi.org/10.1016/j.conbuildmat.2020.121117 (2021).

Han, T., Siddique, A., Khayat, K., Huang, J. & Kumar, A. An ensemble machine learning approach for prediction and optimization of modulus of elasticity of recycled aggregate concrete. Constr. Build. Mater. 244, 118271. https://doi.org/10.1016/j.conbuildmat.2020.118271 (2020).

Singh, P., Khaskil, P. Prediction of compressive strength of green concrete with admixtures using neural networks. 2020 IEEE International Conference on Computing, Power and Communication Technologies, GUCON 2020, no. cm, pp. 714–717, 2020, https://doi.org/10.1109/GUCON48875.2020.9231230.

Feng, D. C. et al. Machine learning-based compressive strength prediction for concrete: An adaptive boosting approach. Constr. Build. Mater. 230, 117000. https://doi.org/10.1016/j.conbuildmat.2019.117000 (2020).

Mousavi, S. M., Aminian, P., Gandomi, A. H., Alavi, A. H. & Bolandi, H. A new predictive model for compressive strength of HPC using gene expression programming. Adv. Eng. Softw. 45(1), 105–114. https://doi.org/10.1016/j.advengsoft.2011.09.014 (2012).

Ben-Chaabene, W., Flah, M. & Nehdi, M. L. “Machine learning prediction of mechanical properties of concrete: Critical review. Constr. Build. Mater. 260, 119889. https://doi.org/10.1016/j.conbuildmat.2020.119889 (2020).

Aliev, K. & Antonelli, D. Proposal of a monitoring system for collaborative robots to predict outages and to assess reliability factors exploiting machine learning. Appl. Sci. (Switzerland) 11(4), 1–20. https://doi.org/10.3390/app11041621 (2021).

Bahaghighat, M., Abedini, F., Xin, Q., Zanjireh, M. M. & Mirjalili, S. Using machine learning and computer vision to estimate the angular velocity of wind turbines in smart grids remotely. Energy Rep. 7, 8561–8576. https://doi.org/10.1016/j.egyr.2021.07.077 (2021).

Dangut, M. D., Skaf, Z. & Jennions, I. K. An integrated machine learning model for aircraft components rare failure prognostics with log-based dataset. ISA Trans. 113, 127–139. https://doi.org/10.1016/J.ISATRA.2020.05.001 (2021).

Moshtaghzadeh, M., Bakhtiari, A., Izadpanahi, E. & Mardanpour, P. Artificial Neural Network for the prediction of fatigue life of a flexible foldable origami antenna with Kresling pattern. Thin-Walled Struct. 174, 109160. https://doi.org/10.1016/J.TWS.2022.109160 (2022).

Degtyarev, V. V. & Naser, M. Z. Boosting machines for predicting shear strength of CFS channels with staggered web perforations. Structures 34, 3391–3403. https://doi.org/10.1016/j.istruc.2021.09.060 (2021).

Selvaraj, S. & Sivaraman, S. Prediction model for optimized self-compacting concrete with fly ash using response surface method based on fuzzy classification. Neural Comput. Appl. 31(5), 1365–1373. https://doi.org/10.1007/s00521-018-3575-1 (2019).

Castelli, M., Vanneschi, L. & Silva, S. Prediction of high performance concrete strength using Genetic Programming with geometric semantic genetic operators. Expert Syst. Appl. 40(17), 6856–6862. https://doi.org/10.1016/j.eswa.2013.06.037 (2013).

Sun, H., Burton, H. V. & Huang, H. Machine learning applications for building structural design and performance assessment: State-of-the-art review. J. Build. Eng. 33(2020), 101816. https://doi.org/10.1016/j.jobe.2020.101816 (2021).

Naranjo-Pérez, J., Infantes, M., Fernando Jiménez-Alonso, J. & Sáez, A. A collaborative machine learning-optimization algorithm to improve the finite element model updating of civil engineering structures. Eng. Struct. https://doi.org/10.1016/j.engstruct.2020.111327 (2020).

Abuodeh, O. R., Abdalla, J. A. & Hawileh, R. A. Assessment of compressive strength of Ultra-high Performance Concrete using deep machine learning techniques. Appl. Soft Comput. J. 95, 106552. https://doi.org/10.1016/j.asoc.2020.106552 (2020).

Khademi, F., Jamal, S. M., Deshpande, N. & Londhe, S. Predicting strength of recycled aggregate concrete using Artificial Neural Network, Adaptive Neuro-Fuzzy Inference System and Multiple Linear Regression. Int. J. Sustain. Built Environ. 5(2), 355–369. https://doi.org/10.1016/j.ijsbe.2016.09.003 (2016).

Yan, K. & Shi, C. Prediction of elastic modulus of normal and high strength concrete by support vector machine. Constr. Build. Mater. 24(8), 1479–1485. https://doi.org/10.1016/j.conbuildmat.2010.01.006 (2010).

Azimi-Pour, M., Eskandari-Naddaf, H. & Pakzad, A. Linear and non-linear SVM prediction for fresh properties and compressive strength of high volume fly ash self-compacting concrete. Constr. Build. Mater. 230, 117021. https://doi.org/10.1016/j.conbuildmat.2019.117021 (2020).

Seleemah, A. A. A multilayer perceptron for predicting the ultimate shear strength of reinforced concrete beams. J. Civil Eng. Constr. Technol. https://doi.org/10.5897/JCECT11.098 (2012).

Kaloop, M. R., Kumar, D., Samui, P., Hu, J. W. & Kim, D. Compressive strength prediction of high-performance concrete using gradient tree boosting machine. Constr. Build. Mater. 264, 120198. https://doi.org/10.1016/j.conbuildmat.2020.120198 (2020).

Lee, S., Vo, T. P., Thai, H. T., Lee, J. & Patel, V. Strength prediction of concrete-filled steel tubular columns using Categorical Gradient Boosting algorithm. Eng. Struct. 238, 112109. https://doi.org/10.1016/j.engstruct.2021.112109 (2021).

Aslam, F. et al. Applications of gene expression programming for estimating compressive strength of high-strength concrete. Adv. Civil Eng. https://doi.org/10.1155/2020/8850535 (2020).

Lim, C. H., Yoon, Y. S. & Kim, J. H. Genetic algorithm in mix proportioning of high-performance concrete. Cem. Concr. Res. 34(3), 409–420. https://doi.org/10.1016/j.cemconres.2003.08.018 (2004).

Yeh, I. C. Modeling of strength of high-performance concrete using artificial neural networks. Cem. Concr. Res. 28(12), 1797–1808. https://doi.org/10.1016/S0008-8846(98)00165-3 (1998).

Milovancevic, M. et al. Prediction of shear debonding strength of concrete structure with high-performance fiber reinforced concrete. Structures 33, 4475–4480. https://doi.org/10.1016/j.istruc.2021.07.012 (2021).

Kaveh, A., Bakhshpoori, T. & Hamze-Ziabari, S. M. M5’ and mars based prediction models for properties of selfcompacting concrete containing fly ash. Periodica Polytechnica Civil Eng. 62(2), 281–294. https://doi.org/10.3311/PPci.10799 (2018).

Ahmad, A. et al. Prediction of compressive strength of fly ash based concrete using individual and ensemble algorithm. Materials 14(4), 1–21. https://doi.org/10.3390/ma14040794 (2021).

Bui, D. K., Nguyen, T., Chou, J. S., Nguyen-Xuan, H. & Ngo, T. D. A modified firefly algorithm-artificial neural network expert system for predicting compressive and tensile strength of high-performance concrete. Constr. Build. Mater. 180, 320–333. https://doi.org/10.1016/j.conbuildmat.2018.05.201 (2018).

Sargam, Y., Wang, K. & Cho, I. H. Machine learning based prediction model for thermal conductivity of concrete. J. Build. Eng. 34, 101956. https://doi.org/10.1016/j.jobe.2020.101956 (2020).

Cai, R. et al. Prediction of surface chloride concentration of marine concrete using ensemble machine learning. Cement Concrete Res. 136, 106164. https://doi.org/10.1016/j.cemconres.2020.106164 (2020).

Asteris, P. G., Kolovos, K. G., Douvika, M. G. & Roinos, K. Prediction of self-compacting concrete strength using artificial neural networks. Eur. J. Environ. Civ. Eng. 20(sup1), s102–s122. https://doi.org/10.1080/19648189.2016.1246693 (2016).

Siddique, R., Aggarwal, P. & Aggarwal, Y. Prediction of compressive strength of self-compacting concrete containing bottom ash using artificial neural networks. Adv. Eng. Softw. 42(10), 780–786. https://doi.org/10.1016/j.advengsoft.2011.05.016 (2011).

Słoński, M. A comparison of model selection methods for compressive strength prediction of high-performance concrete using neural networks. Comput. Struct. 88(21–22), 1248–1253. https://doi.org/10.1016/j.compstruc.2010.07.003 (2010).

Lin, S. S., Shen, S. L., Zhou, A. & Xu, Y. S. Risk assessment and management of excavation system based on fuzzy set theory and machine learning methods. Autom. Constr. 122, 103490. https://doi.org/10.1016/j.autcon.2020.103490 (2021).

Xu, H. et al. Identifying diseases that cause psychological trauma and social avoidance by GCN-Xgboost. BMC Bioinf. 21, 2–6. https://doi.org/10.1186/s12859-020-03847-1 (2020).

Dhananjay, B. & Sivaraman, J. Analysis and classification of heart rate using CatBoost feature ranking model. Biomed. Signal Process. Control 68, 102610. https://doi.org/10.1016/j.bspc.2021.102610 (2021).

Kivrak, M., Guldogan, E. & Colak, C. Prediction of death status on the course of treatment in SARS-COV-2 patients with deep learning and machine learning methods. Comput. Methods Programs Biomed. 201, 105951. https://doi.org/10.1016/j.cmpb.2021.105951 (2021).

Farooq, F., Ahmed, W., Akbar, A., Aslam, F. & Alyousef, R. Predictive modeling for sustainable high-performance concrete from industrial wastes: A comparison and optimization of models using ensemble learners. J. Clean. Prod. 292, 126032. https://doi.org/10.1016/j.jclepro.2021.126032 (2021).

Balf, F. R., Kordkheili, H. M. & Kordkheili, A. M. A new method for predicting the ingredients of self-compacting concrete (SCC) including fly ash (FA) using data envelopment analysis (DEA). Arab. J. Sci. Eng. 46(5), 4439–4460. https://doi.org/10.1007/s13369-020-04927-3 (2021).

Nguyen-Sy, T. et al. Predicting the compressive strength of concrete from its compositions and age using the extreme gradient boosting method. Constr. Build. Mater. 260, 119757. https://doi.org/10.1016/j.conbuildmat.2020.119757 (2020).

Asteris, P. G. & Kolovos, K. G. Self-compacting concrete strength prediction using surrogate models. Neural Comput. Appl. 31(1), 409–424. https://doi.org/10.1007/s00521-017-3007-7 (2019).

Zhang, J. et al. Modelling uniaxial compressive strength of lightweight self-compacting concrete using random forest regression. Constr. Build. Mater. 210, 713–719. https://doi.org/10.1016/j.conbuildmat.2019.03.189 (2019).

Yu, Y., Li, W., Li, J. & Nguyen, T. N. A novel optimised self-learning method for compressive strength prediction of high performance concrete. Constr. Build. Mater. 184, 229–247. https://doi.org/10.1016/j.conbuildmat.2018.06.219 (2018).

Goliatt, L. & Farage, M. R. C. An extreme learning machine with feature selection for estimating mechanical properties of lightweight aggregate concretes. 2018 IEEE congress on evolutionary computation, CEC 2018 - Proceedings, 2018, https://doi.org/10.1109/CEC.2018.8477673.

Ke, G. et al., “LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst., vol. 2017-Decem, no. Nips, pp. 3147–3155 (2017).

Hancock, J. T. & Khoshgoftaar, T. M. CatBoost for big data: An interdisciplinary review. J. Big Data https://doi.org/10.1186/s40537-020-00369-8 (2020).

Prokhorenkova, L., Gusev, G., Vorobev, A., Dorogush, A. V. & Gulin, A. Catboost: Unbiased boosting with categorical features. Adv. Neural Inf. Process. Syst. 2018, 6638–6648 (2018).

Bakouregui, A. S., Mohamed, H. M., Yahia, A. & Benmokrane, B. Explainable extreme gradient boosting tree-based prediction of load-carrying capacity of FRP-RC columns. Eng. Struct. 245, 112836. https://doi.org/10.1016/j.engstruct.2021.112836 (2021).

Gong, M. et al. Gradient boosting machine for predicting return temperature of district heating system: A case study for residential buildings in Tianjin. J. Build. Eng. 27, 100950. https://doi.org/10.1016/j.jobe.2019.100950 (2020).

Pham, B. T. et al. A novel approach for classification of soils based on laboratory tests using Adaboost, Tree and ANN modeling. Transp. Geotech. 27, 100508. https://doi.org/10.1016/j.trgeo.2020.100508 (2021).

Liu, Q., Wang, X., Huang, X. & Yin, X. Prediction model of rock mass class using classification and regression tree integrated AdaBoost algorithm based on TBM driving data. Tunnell. Undergr. Space Technol. 106, 103595. https://doi.org/10.1016/j.tust.2020.103595 (2020).

Tang, Q., Xia, G., Zhang, X., & Long, F. A customer churn prediction model based on XGBoost and MLP. Proceedings - 2020 International Conference on Computer Engineering and Application, ICCEA 2020, pp. 608–612, 2020, https://doi.org/10.1109/ICCEA50009.2020.00133.

Mustika, W. F., Murfi, H., Widyaningsih, Y. Analysis accuracy of XGBoost model for multiclass classification - a case study of applicant level risk prediction for life insurance,” Proceeding - 2019 5th International Conference on Science in Information Technology: Embracing Industry 4.0: Towards Innovation in Cyber Physical System, ICSITech 2019, pp. 71–77, 2019, https://doi.org/10.1109/ICSITech46713.2019.8987474.

Wu, D., Guo, P., Wang, P. Malware Detection based on Cascading XGBoost and Cost Sensitive. Proceedings - 2020 International Conference on Computer Communication and Network Security, CCNS 2020, pp. 201–205, 2020, https://doi.org/10.1109/CCNS50731.2020.00051.

Li, J. & Zhang, R. Dynamic weighting multi factor stock selection strategy based on XGboost machine learning algorithm. Proceedings of 2018 IEEE international conference of safety produce informatization, IICSPI 2018, pp. 868–872, 2019, https://doi.org/10.1109/IICSPI.2018.8690416.

Qu, Y., Lin, Z., Li, H. & Zhang, X. Feature recognition of urban road traffic accidents based on GA-XGBoost in the context of big data. IEEE Access 7, 170106–170115. https://doi.org/10.1109/ACCESS.2019.2952655 (2019).

Chen, T. & Guestrin, C. XGBoost: A scalable tree boosting system. Proc. ACM SIGKDD Int. Conf. Knowl. Discov. Data. Min. 13, 785–794. https://doi.org/10.1145/2939672.2939785 (2016).

“Welcome to PyCaret - PyCaret Official.” https://pycaret.gitbook.io/docs/ (accessed Apr. 25, 2022).

Lasisi, A., Sadiq, M. O., Balogun, I., Tunde-Lawal, A., & Attoh-Okine, N. A boosted tree machine learning alternative to predictive evaluation of nondestructive concrete compressive strength. Proceedings - 18th IEEE International Conference on Machine Learning and Applications, ICMLA 2019, pp. 321–324, 2019, https://doi.org/10.1109/ICMLA.2019.00060.

Nguyen, H., Vu, T., Vo, T. P. & Thai, H. T. Efficient machine learning models for prediction of concrete strengths. Constr. Build. Mater. 266, 120950. https://doi.org/10.1016/j.conbuildmat.2020.120950 (2021).

Sen Fan, R., Li, Y., Ma, T. T. Research and Application of Project Settlement Overdue Prediction Based on XGBOOST Intelligent Algorithm. iSPEC 2019 - 2019 IEEE Sustainable Power and Energy Conference: Grid Modernization for Energy Revolution, Proceedings, pp. 1213–1216, 2019, https://doi.org/10.1109/iSPEC48194.2019.8975056.

Bergstra, J. & Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 13, 281–305 (2012).

Pan, Y. & Zhang, L. Data-driven estimation of building energy consumption with multi-source heterogeneous data. Appl. Energy 268, 114965. https://doi.org/10.1016/j.apenergy.2020.114965 (2020).

Acknowledgements

The authors would like to thank Pro Concrete Consult for providing the dataset.

Author information

Authors and Affiliations

Contributions

V. R. wrote the main manuscript text and prepared figures. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rathakrishnan, V., Bt. Beddu, S. & Ahmed, A.N. Predicting compressive strength of high-performance concrete with high volume ground granulated blast-furnace slag replacement using boosting machine learning algorithms. Sci Rep 12, 9539 (2022). https://doi.org/10.1038/s41598-022-12890-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-12890-2

This article is cited by

-

Innovative machine learning approaches to predict the compressive strength of recycled plastic aggregate self-compacting concrete incorporating different waste ashes

Multiscale and Multidisciplinary Modeling, Experiments and Design (2024)

-

Prediction of the compressive strength of normal concrete using ensemble machine learning approach

Asian Journal of Civil Engineering (2024)

-

Prediction of compressive strength and tensile strain of engineered cementitious composite using machine learning

International Journal of Mechanics and Materials in Design (2024)

-

A comparative analysis of tree-based machine learning algorithms for predicting the mechanical properties of fibre-reinforced GGBS geopolymer concrete

Multiscale and Multidisciplinary Modeling, Experiments and Design (2024)

-

Machine learning intelligence to assess the shear capacity of corroded reinforced concrete beams

Scientific Reports (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.