Abstract

Community detection is a fundamental procedure in the analysis of network data. Despite decades of research, there is still no consensus on the definition of a community. To analytically test the realness of a candidate community in weighted networks, we present a general formulation from a significance testing perspective. In this new formulation, the edge-weight is modeled as a censored observation due to the noisy characteristics of real networks. In particular, the edge-weights of missing links are incorporated as well, which are specified to be zeros based on the assumption that they are truncated or unobserved. Thereafter, the community significance assessment issue is formulated as a two-sample test problem on censored data. More precisely, the Logrank test is employed to conduct the significance testing on two sets of augmented edge-weights: internal weight set and external weight set. The presented approach is evaluated on both weighted networks and un-weighted networks. The experimental results show that our method can outperform prior widely used evaluation metrics on the task of individual community validation.

Similar content being viewed by others

Introduction

Community detection is a fundamental issue in network data analysis. It aims at dividing nodes in a network into different groups called communities. It is expected that there should be more edges within each community and few edges across different communities. The community detection procedure has been widely used in many fields such as social science, biology, medicine, and chemistry1.

During the past decades, numerous community detection algorithms have been developed from different perspectives1,2,3,4. Despite these developments, the issue of deciding whether a derived community is real or not is far from being resolved. Such a community validation issue fits naturally into a framework of hypothesis testing, in which the null hypothesis is that the target community is not real.

In fact, many metrics such as modularity and conductance have been proposed for assessing the goodness of a potential community5. However, most of these metrics are not developed based on a rigorous significance testing procedure6. Theoretically, the realness of a community should be an analytical problem relative to some particular definitions of communities. Towards this direction, several research efforts have been conducted to analytically assess the realness of one candidate community, such as OSLOM7,8 , ESSC9, DSC10, CCME11, and FOCS12. Among these methods, only OSLOM and CCME focus on validating a community in weighted networks. Unfortunately, in both OSLOM and CCME, the statistical significance of a target community is assessed through the probability of association between each node and the community13. One method that can directly test the realness of a community in edge-weighted graphs is still not available.

We formulate the community significance assessment problem in edge-weighted networks as a non-parametric two-sample test issue on censored data. In this paper, the edge-weights are assumed to be non-negative and continuous. The network structure and edge-weights may contain substantial measurement errors during the network inference process14. Based on this observation, we model each edge-weight as a censored observation in survival analysis15. If there is no edge between two nodes, the corresponding edge-weight is 0, which is either unobserved or truncated. Consequently, we construct two groups of edge-weights: one group is composed of edge-weights within the community and another group is composed of edge-weights between nodes in the community and remaining nodes outside the community. If the target community is not a real community, it is reasonable to expect that there is no difference between these two groups. Therefore, we can utilize a distribution-free two-sample test procedure in censored data analysis to assess the statistical significance of the candidate community. In this paper, we choose the popular Logrank test16 to fulfill this task.

One of the characteristics of the presented formulation is that the unreliability of edge-weights is fully incorporated into the model. Meanwhile, it provides a general framework for validating weighted communities from a new angle. In particular, for un-weighted networks, we can reveal some interesting connections between our formulation and the community evaluation metric in Ref.17. We evaluate our method on both weighted networks and un-weighted networks. Experiments demonstrate that our method is comparable with prior state-of-the-art metrics on individual community assessment.

The rest of this article is arranged as follows. In the next section, our formulation is described in detail. Thereafter, the experimental results are presented and some discussions are given in the last two sections.

Method

Notations

Given a weighted network \(G = (V,E,W)\), where V is the node set, E is the edge set, and W is the set of positive weights. For a given subset of nodes S (\(S \subseteq V\)), its induced subgraph G[S] can be regarded as a candidate community. All edges incident on the nodes in S could be divided into two groups: the set of internal edges within G[S] that are incident on two nodes from S and the set of external edges of G[S] which are incident on one node from S and another node from \(V \backslash S\).

Formulation

To test if a given candidate community in the weighted network is a real one, we present a formulation based on the non-parametric two-sample test for censored data. The main workflow of our method is shown in Fig. 1, which will be elaborated below.

The main workflow of our method. (a) The network with augmented edge-weights for missing links. Each solid line represents a real edge and each dashed line denotes an augmented edge that is not included in E. In this network, there are totally 7 nodes and the candidate community is the sub-graph induced by the node set {1,2,3,4}. The size of each line is proportional to the corresponding edge-weight and the weights of augmented edges for missing links are zeros. (b) The ordered edge-weights. For the given candidate community in (a), we can construct two sets of edge-weights. One set is composed of the internal edge-weights and another set is composed of the external edge-weights. We can order these two sets to check if their empirical cumulative distribution functions are different. If the candidate community is not a real one, it can be expected that these two sets of weights have the same distribution. In Logrank test, these two sets are merged to generate a combined ordered edge-weight list. (c) Contingency tables for distinct weights. For each of the five distinct weights in (b), we can construct a contingency table. For instance, there are 2 edges whose weights are 3.2 and these two edges are internal edges, the first column of the leftmost table is (2, 0, 2). The second column of the leftmost table is (4, 12, 16) since there are 4 (and 12) internal (and external) edges whose weights are less than 3.2. (d) The final test statistic and its formula. Based on the contingency tables in (c), we can calculate the test statistic Z and the corresponding p-value to quantify the statistical significance of the candidate community. The Bonferroni correction is further applied to deliver an adjusted p-value, which is obtained by multiplying the original p-value with number of all possible communities of the same size.

In the first step of our method, we regard each edge-weight as a censored observation. As shown in Fig. 1a, the set of edge-weights is augmented by including the weights of missing links. The edge-weights of these missing links are 0s since they are either truncated or unobserved. Then, we can construct two augmented sets of edge-weights: the set of internal edge-weights \(W_{S}^{in} = \{w_{1}^{in}, w_{2}^{in},\ldots, w_{\left( {\begin{array}{c}|S|\\ 2\end{array}}\right)}^{in}\}\) and the set of external edge-weights \(W_{S}^{out} = \{w_{1}^{out}, w_{2}^{out},\ldots, w_{|S|(|V|-|S|)}^{out}\}\).

Let \(F_{in}(\cdot )\) and \(F_{out}(\cdot )\) be complementary cumulative distribution functions for internal and external weights, respectively. Then, the community assessment issue can be modeled as a two-sample test problem, in which the hypotheses under consideration are:

\(H_{0}\): \(F_{in}(w)=F_{out}(w)\);

\(H_{1}\): \(F_{in}(w)>F_{out}(w)\), for at least one w, where \(w\ge 0\) is the non-negative weight.

Note that a larger edge-weight indicates a stronger connection between two corresponding nodes and both \(F_{in}(\cdot )\) and \(F_{out}(\cdot )\) are complementary cumulative distribution functions. If \(H_{0}\) is violated and \(H_{1}\) holds, then there will be more internal edges with positive edge-weights and the internal edges are associated with larger weights. Hence, the proposed two-sample test is capable of quantifying the realness of a target community in a statistically sound manner.

To solve the above two-sample test issue, many effective methods can be utilized. Here we adopt the Logrank test due to its popularity in censored data analysis. As shown in Fig. 1b, \(W_{S}^{in}\) and \(W_{S}^{out}\) are first merged to generate a new set \(W_{S}\) and then all edge-weights in \(W_{S}\) are sorted in a non-increasing order. For each distinct edge-weight \(w_{i}\) in \(W_{S}\), we can construct a \(2\times 2\) table as shown in Fig. 1c. In Fig. 1c, \(d_{i}^{in}\), \(d_{i}^{out}\) and \(d_{i}\) denote the number of internal edges, the number of external edges and the number of edges in \(W_{S}\) whose edge-weights are \(w_{i}\). Meanwhile, \(n_{i}^{in}\), \(n_{i}^{out}\) and \(n_{i}\) denote the number of internal edges, the number of external edges and the number of edges in \(W_{S}\) whose edge-weights are not bigger than \(w_{i}\).

Based on above notations, the test statistic Z of Logrank test is:

where q is the number of distinct edge-weights in \(W_{S}\), \(N_i=n_{i}^{in}+n_{i}^{out}, E_i^{in} = \frac{n_{i}^{in}}{N_{i}}d_{i}\) and \(v_i^{in} = \frac{n_{i}^{in} d_{i}(N_{i}-d_{i})n_{i}^{out}}{(N_{i})^2(N_{i}-1)}\). When \(H_{0}\) is true, Z approximately follows a N(0, 1) distribution. In Eq. (1), each (\(d_i^{in}-E_i^{in}\)) can be regarded as the “observed minus expected” difference with respect to the number of internal edges of a specific weight. Thus, a real community should be associated with a large Z statistic. Based on this approximation, the corresponding p-value can be obtained to assess the statistical significance of G[S].

In regard to the combinatorial nature of community detection, the community significance assessment issue is actually a multiple hypothesis testing problem. Hence, we need to conduct a multiple testing correction by calculating an adjusted p-value for each candidate community. The most popular method for multiple testing correction is probably the Bonferroni correction approach, in which the original p-value is multiplied by the number of tested hypotheses to obtain an adjusted p-value. In our context, the number of tested hypotheses can be calculated as the number of possible communities of the same size. More precisely, for a given community G[S] of size |S|, its adjusted p-value is calculated as \(p_{adj}(G[S])=min\{1, p(G[S])\times \left( {\begin{array}{c}|V|\\ |S|\end{array}}\right) \}\), where p(G[S]) is original p-value of G[S] and |V| is the number of nodes of the graph. In the experiment, the adjusted p-value is used instead of the original p-value in our method.

Un-weighted special case

For un-weighted networks, the edge-weight is either 1 or 0, and thus \(q=2\) in Eq. (1). For the weight \(w_1 = 1\), we have: \(n_{1}^{in} = \left( {\begin{array}{c}|S|\\ 2\end{array}}\right) \), \(n_{1}^{out} = |S|(|V|-|S|)\), and thus \(E_1^{in} = \frac{n_{1}^{in}}{N_{1}}d_{1} = \frac{\left( {\begin{array}{c}|S|\\ 2\end{array}}\right) }{\left( {\begin{array}{c}|S|\\ 2\end{array}}\right) +|S|(|V|-|S|)}d_1\), \(v_1^{in} = \frac{n_{1}^{in}n_{1}^{out}d_{1}(N_{1}-d_{1})}{(N_{1})^2(N_{1}-1)} = \frac{\left( {\begin{array}{c}|S|\\ 2\end{array}}\right) |S|(|V|-|S|)d_1\left[ \left( {\begin{array}{c}|S|\\ 2\end{array}}\right) +|S|(|V|-|S|)-d_1\right] }{\left[ \left( {\begin{array}{c}|S|\\ 2\end{array}}\right) +|S|(|V|-|S|)\right] ^{2}\left[ \left( {\begin{array}{c}|S|\\ 2\end{array}}\right) +|S|(|V|-|S|)-1\right] }\). For the weight \(w_2 = 0\), we have: \(n_{2}^{in} = d_{2}^{in}\), \(n_{2}^{out} = d_{2}^{out}\), \(N_2 = d_2\), and thus \(E_2^{in} = \frac{n_{2}^{in}}{N_{2}}d_{2} = d_{2}^{in}\), \(v_2^{in} = 0\). Thus, Eq. (1) can be rewritten as:

In Ref.17, a new community evaluation metric has been presented, which can expressed as follows based on our notations:

Thus, we can get the mathematical relation between Z (our test statistic for un-weighted networks) and \(Z'\) (the community evaluation metric in Ref.17):

As shown in Eq. (4), we can quantitatively establish the connection between the special case of our method for un-weighted networks and one popular metric in the literature.

Results

To test whether the presented p-value is effective on community evaluation, we conduct a series of experiments according to the pipeline shown in Fig. 2. Firstly, existing community detection algorithms are employed to produce a set of identified communities on networks with ground-truth communities. Then, we use both internal validation metrics (e.g. our method, modularity) and external validation metrics (e.g. precision, recall) to quantitatively validate each identified community. Since external validation metrics are calculated based on the ground-truth information, which can be used as the “gold standard”. In other words, one internal validation metric is a good community validation index if it is highly correlated with each external validation metric on the assessment of identified communities. Based on this assumption, we calculate the Pearson’s correlation coefficient between two vectors (one is generated from an internal validation metric and another one is produced by an external validation metric), where each vector is composed of the validation index values on a set of identified communities. Finally, the Friedman test18 and three post-hoc tests: the Nemenyi test19, the Bonferroni–Dunn test20 and the Holm’s step-down test21 are employed to check if our method is significantly better than other popular internal validation metrics.

The main workflow of our experiments. Given a network with three ground-truth communities: {1, 3, 4, 5}, {2, 6, 7, 8} and {9, 10, 11, 12}, three communities are detected by the SLPAw algorithm: \(A= \{1, 3, 4, 5\}\), \(B= \{2, 6, 7, 8, 9\}\), \(C=\{10, 11, 12\}\). Each identified community can be assessed using both the internal validation metric and the external validation metric. For example, the negative log value of our method and the precision for the identified community A is 9.39 and 1, respectively. Then, a vector for each metric on the set of identified communities is readily available. For instance, the validation index vector for our method and the Jaccard coefficient is (9.39, 6.31, 7.56) and (1, 0.8, 0.75), respectively. Consequently, the Pearson’s correlation coefficient is calculated between each pair of vectors: one from an internal validation metric and another one from an external validation metric. Based on the correlation coefficients, four statistical tests are further applied to check if our method is really better than other internal validation metrics on the task of individual community assessment.

Data sets

In our experiment, we use two groups of data sets. One group is composed of four weighted PPI (Protein–Protein Interaction) networks: Collins200722, Gavin200623, Krogan2006_core24, Krogan2006_extended24. There are three sets of ground-truth communities for these weighted PPI networks, where each set is collected from one of the following databases of protein complexes: CYC200825, MIPS26 and SGD27. There are 408, 203 and 323 ground-truth communities in these three sets, respectively. Another group is composed of six real un-weighted networks: Karate28, Football29, Personal Facebook (Personal)9, Political blogs (PolBlogs)30, Books about US politics (PolBooks)31, and Railways32. The topological characteristics of six real un-weighted networks are provided in Supplementary Table S1.

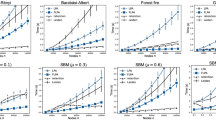

The rank distribution for each internal validation metric on (a) weighted PPI networks and (b) Un-weighted networks. Here the box plot is used to depict the distributions: minimum (lower line), lower quartile (lower edge of the box), median (center line), upper quartile (upper edge of the box) and maximum (upper line). Meanwhile, mean (star) and outlier (cross) are also plotted.

Parameter setting

We choose three classical community detection methods: SLPAw33, Infomap34 and Louvain35 to detect communities. In our experiment, we run these three methods with their default parameter settings for weighted graphs.

Experiment

We compare our method with four internal metrics: conductance36, modularity37, p-value in OSLOM7 and p-value in CCME11. The p-value of OSLOM is obtained with its default setting and the p-value of a community in CCME is the maximal p-value of all nodes within the community. Since Conductance, the p-values in our method, OSLOM and CCME are negatively correlated with Jaccard coefficient, Precision and Recall, we use the negative Pearson’s correlation coefficient for these three metrics in the performance comparison. Then, for the set of reported communities on each data set from each community detection algorithm, we can use the Pearson’s correlation coefficient with respect to each external validation metric to check which internal validation metric is better. More precisely, a better internal validation metric should have a larger correlation coefficient. The detailed results are recorded in the Supplementary Tables S2–S5. From these tables, we can obtain the rank distribution for each internal validation metric, where a larger correlation coefficient will be assigned to a smaller rank. The rank distributions on weighted PPI networks and un-weighted networks in terms of box plots are provided in Fig. 3a,b, respectively.

From Fig. 3, it can be observed that our method can achieve the smallest average rank among five internal validation metrics. To check if our method is really better than the other four internal validation metrics, we first apply the Friedman test to assess the null hypothesis that all methods have the same rank. The \(\chi _{F}^{2}\) value in the Friedman test on weighted PPI networks and un-weighted networks is 200.5704 and 45.6889, respectively. This means that the performance gaps among different internal validation metrics are statistically significant when the significance level is specified to be 0.05. Then, we further employ the Nemenyi test, the Bonferroni-Dunn test and the Holm’s step-down test to compare our method with each competing internal validation metric in a pair-wise manner.

In Table 1, we record the rank difference between our method and each competing method on both weighted PPI networks and un-weighted networks. As shown in Table 1, the rank difference values between our method and Modularity, Conductance and CCME on weighted PPI networks are larger than the critical difference (CD) thresholds for both the Nemenyi test and the Bonferroni–Dunn test when the significance level is 0.05. This indicates that our method is significantly better than Conductance, Modularity and CCME under these two tests. On the un-weighted networks, our method is significantly better than OSLOM and CCME according to the Bonferroni–Dunn test and the Nemenyi test.

In Table 2, we list the p-values for the average rank difference between our method and each competing method based on the Holm’s step-down test. Besides, the adjusted significance level for each position after sorting the p-values in a non-decreasing order is provided as well. As shown in Table 2, all the p-values on PPI networks are smaller than the corresponding adjusted significance levels. This indicates the superiority of our method over other four metrics on weighted networks is also confirmed by the Holm’s step-down test. Similar to results in Table 1, we can claim that our method is significantly better than OSLOM and CCME on un-weighted networks based on the hypothesis testing results in Table 2.

Discussion

We have presented a general approach for assessing the statistical significance of a community from weighted networks. In this new formulation, the weights of missing links are set to be zeros and all edge-weights are treated as truncated observations. Based on this assumption, the community validation issue is modeled as a two-sample test problem on censored data. The presented formulation provides a general framework for community validation from a significance testing perspective. Based on this framework, we can either reveal the rationale underlying some existing community validation metrics or develop new community evaluation measures.

References

Fortunato, S. Community detection in graphs. Phys. Rep. 486, 75–174 (2010).

Orman, G. K., Labatut, V. & Cherifi, H. Comparative evaluation of community detection algorithms: A topological approach. J. Stat. Mech: Theory Exp. 2012, P08001. https://doi.org/10.1088/1742-5468/2012/08/p08001 (2012).

Dao, V. L., Bothorel, C. & Lenca, P. Community structure: A comparative evaluation of community detection methods. Netw. Sci. 8, 1–41. https://doi.org/10.1017/nws.2019.59 (2020).

Jebabli, M., Cherifi, H., Cherifi, C. & Hamouda, A. Community detection algorithm evaluation with ground-truth data. Physica A 492, 651–706. https://doi.org/10.1016/j.physa.2017.10.018 (2018).

Chakraborty, T., Dalmia, A., Mukherjee, A. & Ganguly, N. Metrics for community analysis: A survey. ACM Comput. Surv. 50, 1–37 (2017).

Zhang, P. & Moore, C. Scalable detection of statistically significant communities and hierarchies, using message passing for modularity. Proc. Natl. Acad. Sci. U.S.A. 111, 18144–18149 (2014).

Lancichinetti, A., Radicchi, F., Ramasco, J. J. & Fortunato, S. Finding statistically significant communities in networks. PLoS ONE 6, e18961 (2011).

Lancichinetti, A., Radicchi, F. & Ramasco, J. J. Statistical significance of communities in networks. Phys. Rev. E. https://doi.org/10.1103/PhysRevE.81.046110 (2010).

Wilson, J. D., Wang, S., Mucha, P. J., Bhamidi, S. & Nobel, A. B. A testing based extraction algorithm for identifying significant communities in networks. Ann. Appl. Stat. 8, 1853–1891 (2014).

He, Z., Liang, H., Chen, Z., Zhao, C. & Liu, Y. Detecting statistically significant communities. IEEE Trans. Knowl. Data Eng. https://doi.org/10.1109/TKDE.2020.3015667 (2020).

Palowitch, J., Bhamidi, S. & Nobel, A. B. Significance-based community detection in weighted networks. J. Mach. Learn. Res. 18, 6899–6946 (2018).

Palowitch, J. Computing the statistical significance of optimized communities in networks. Sci. Rep. 9, 1–10 (2019).

He, Z., Liang, H., Chen, Z., Zhao, C. & Liu, Y. Computing exact p-values for community detection. Data Min. Knowl. Discuss. 34, 833–869 (2020).

Newman, M. E. Network structure from rich but noisy data. Nat. Phys. 14, 542–545 (2018).

Klein, J. P. & Moeschberger, M. L. Survival Analysis: Techniques for Censored and Truncated Data (Springer, 2003).

Mantel, N. Evaluation of survival data and two new rank order statistics arising in its consideration. Cancer Chemother. Rep. 50, 163–170 (1966).

Zhao, Y., Levina, E. & Zhu, J. Community extraction for social networks. Proc. Natl. Acad. Sci. U.S.A. 108, 7321–7326 (2011).

Mahrer, J. M. & Magel, R. C. A comparison of tests for the k-sample, non-decreasing alternative. Stats Med. 14, 863–871 (2010).

Nemenyi, P. Distribution-free multiple comparisons. Biometrics 18, 263 (1962).

Dunn, O. J. Multiple comparisons among means. J. Am. Stat. Assoc. 56, 52–64 (1961).

Holm, S. A simple sequentially rejective multiple test procedure. Scand. J. Stat. 6, 65–70 (1979).

Collins, S. R. et al. Toward a comprehensive atlas of the physical interactome of Saccharomyces cerevisiae. Mol. Cell. Proteomics 6, 439–450 (2007).

Gavin, A.-C. et al. Proteome survey reveals modularity of the yeast cell machinery. Nature 440, 631–636 (2006).

Krogan, N. J. et al. Global landscape of protein complexes in the yeast Saccharomyces cerevisiae. Nature 440, 637–643 (2006).

Pu, S., Wong, J., Turner, B., Cho, E. & Wodak, S. J. Up-to-date catalogues of yeast protein complexes. Nucleic Acids Res. 37, 825–831 (2009).

Mewes, H.-W. et al. MIPS: Analysis and annotation of proteins from whole genomes. Nucleic Acids Res. 32, D41–D44 (2004).

Hong, E. L. et al. Gene Ontology annotations at SGD: New data sources and annotation methods. Nucleic Acids Res. 36, D577–D581 (2008).

Zachary, W. W. An information flow model for conflict and fission in small groups. J. Anthropol. Res. 33, 452–473 (1977).

Girvan, M. & Newman, M. E. Community structure in social and biological networks. Proc. Natl. Acad. Sci. U.S.A. 99, 7821–7826 (2002).

Adamic, L. A. & Glance, N. The political blogosphere and the 2004 us election: Divided they blog. In Proc. 3rd International Workshop on Link Discovery, 36–43 (2005).

Krebs, V. Social network analysis software & services for organizations, communities, and their consultants (2013). http://www.orgnet.com

Chakraborty, T., Srinivasan, S., Ganguly, N., Mukherjee, A. & Bhowmick, S. On the permanence of vertices in network communities. In Proc. 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 1396–1405 (2014).

Xie, J., Szymanski, B. K. & Liu, X. Slpa: Uncovering overlapping communities in social networks via a speaker-listener interaction dynamic process. In IEEE 11th International Conference on Data Mining Workshops, 344–349 (2011).

Rosvall, M. & Bergstrom, C. T. Maps of random walks on complex networks reveal community structure. Proc. Natl. Acad. Sci. U.S.A. 105, 1118–1123 (2008).

Blondel, V. D., Guillaume, J.-L., Lambiotte, R. & Lefebvre, E. Fast unfolding of communities in large networks. J. Stat. Mech. Theory Exp. 2008, P10008 (2008).

Shi, J. & Malik, J. Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 22, 888–905 (2000).

Newman, M. E. Analysis of weighted networks. Phys. Rev. E 70, 056131 (2004).

Acknowledgements

This work has been supported by the Natural Science Foundation of China under Grant Nos. 61972066 and 61572094 and the Fundamental Research Funds for the Central Universities (No. DUT20YG106).

Author information

Authors and Affiliations

Contributions

Z.H. and W.C. designed research, performed research, and wrote the paper; X.W. and Y.L. analyzed data.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

He, Z., Chen, W., Wei, X. et al. On the statistical significance of communities from weighted graphs. Sci Rep 11, 20304 (2021). https://doi.org/10.1038/s41598-021-99175-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-99175-2

This article is cited by

-

Interplay between topology and edge weights in real-world graphs: concepts, patterns, and an algorithm

Data Mining and Knowledge Discovery (2023)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.