Abstract

Using device-to-device (D2D) communications and mobile edge computing (MEC) to resolve traffic overload problems is a trend in cellular networks. By jointly considering the computation capability and the maximum delay, resource-constrained terminals offload parts of their computation-intensive tasks to one nearby device via a D2D connection or to an edge server deployed at a base station via a cellular connection. In this paper, a novel cellular D2D–MEC method is proposed, which enables task offloading and resource allocation while improving the execution efficiency of each device with low latency. We consider the partial offloading strategy and divide the task into a local part and a remote part, which can be executed in parallel through different computational modes. Instead of allocating system resources from a macroscopic view, we study both the task offloading strategy and the computing efficiency of each device from a microscopic perspective. By taking both the task offloading policy and computation resource allocation into consideration, the optimization problem is formulated as one of maximizing computing efficiency. As the formulated problem is a mixed-integer non-linear problem, we propose a two-phase heuristic algorithm that jointly considers helper selection and computation resource allocation. In the first phase, we obtain the suboptimal helper selection policy. In the second phase, the MEC computation resource allocation strategy is obtained. The proposed low complexity dichotomy algorithm (LCDA) is used to match the subtask-helper pair. The simulation results demonstrate the superiority of the proposed D2D-enhanced MEC system over some traditional D2D–MEC algorithms.

Introduction

In recent years, innovative applications such as artificial intelligence, face recognition and interactive games have driven large numbers of devices to access the network1. New 5G physical-layer technologies for radio access networks, such as multi-input multi-output (MIMO), non-orthogonal multiple access (NOMA) and full-duplex (FD) transmission, further increase the network capacity as well as the number of accessible devices2. According to a forecast report by Cisco, by 2023 the number of connected terminals worldwide will reach nearly 30 billion3. The surge of emerging applications accelerates the growth of computation and network capacity relative to traditional wireless networks. The European Telecommunications Standards Institute (ETSI) took the lead in putting forward the concept of Mobile Edge Computing (MEC) in 2014 and then published a white paper on MEC technology4. MEC effectively overcomes the shortcomings of traditional mobile cloud computing: cloud computing capabilities are extended from centralized clouds to the edge of the network and processing capabilities are enhanced, providing rich and low-latency computing for ultra-dense networks (UDN) while avoiding the long latency and overload of the core network5,6.

However, compared with cloud servers, edge servers have relatively limited computing resources7. In future scenarios of Massive Machine Type Communications (MMTC) or the Internet of Things (IoT), when MEC servers with limited computing resources execute tasks for a large number of mobile users at the same time, the servers may become overloaded, which reduces the performance of the wireless system. Collaborative computing among users is an effective way to extend the execution capability of MEC. Device-to-Device (D2D) communication has attracted wide attention because it can share channel resources with cellular networks and achieve higher spectrum utilization as well as better resource scheduling8. On the other hand, computation-intensive tasks place higher requirements on highly reliable access and low-delay processing, and computation resources can be further expanded by combining D2D communication with MEC9.

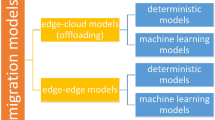

An offloading strategy speeds up the computing process and prolongs the battery life of terminal equipment10. How to efficiently realize task offloading and resource allocation is a hot issue in current research on D2D-enhanced MEC systems. Devices need to make offloading decisions according to the task requirements and decide whether the tasks should be executed locally or offloaded to a third-party device nearby via a D2D connection or to an edge server deployed in a base station via a cellular connection11,12,13. An information-centric IoT is considered in14, in which the partial offloading model is adopted and the task is divided into multiple subtasks. The computing resources of the BS are used to manage the control information to find subtask-helper pairs for the devices with several precedence-constrained subtasks14. Unlike14, a system model is proposed in Research15 for cooperative mobile edge computing, in which a device social graph model is developed to capture the social relationship among devices. The task dependency graph is closely related to the social graph, which facilitates flexible choices of task execution approaches15. Game-theoretic models for device-enhanced MEC offloading with a large number of UEs have been investigated in Study16. More specifically, the problem of offloading decision-making among users has been formulated as a sequential game, which achieves the Nash equilibrium16.

Both device access and delay requirements should be considered in offloading decisions because they are important factors affecting the quality of experience (QoE) of users17,18. The variability of mobile device capabilities and user preferences is leveraged in Reference19, which formulates the system utility metric as a measure of QoE based on the task completion time and energy consumption of a mobile device19. In20, a task model is considered to minimize the latency of local users, who have multiple independent computation tasks that can be executed in parallel but cannot be further partitioned. The tasks can be offloaded to helpers and the results can be downloaded from them over prescheduled time slots enabled by the proposed TDMA-based communications20. A system is put forward in21 from a holistic perspective. Four levels of heterogeneous cloud units with various hardware capabilities are employed in the system, including the D2D communication units and the edge cloud units21, through which a higher efficiency is achieved in terms of offloading, congestion, coverage and latency.

As the delay and energy consumption are affected by the offloading schemes of mobile users, D2D–MEC systems have been investigated in recent works from the perspective of total computation latency minimization20,22, total energy consumption minimization23,24,25,26,27,28,29,30,31,32,33 and the tradeoff between the two objectives16,34,35,36,37,38. A time division multiple access (TDMA) transmission protocol is proposed in22 for minimizing the total computation latency of mobile devices with multiple helpers22. Like22, Reference23 supposes that each device can offload to multiple helpers, jointly considering helper selection and the allocation of communication and computation resources23. Kai et al. used an Initial Task Allocation (ITA) algorithm to maximize the number of executed tasks, and tackled the problem of global energy minimization while maintaining the maximum number of executed tasks25. To minimize energy consumption, an adaptive offloading scheme is proposed in28, in which the helper controls the offloading process based on a predicted helper CPU-idling profile that specifies the amount of computation resource available for co-computing28. An optimization problem is formulated in30 to minimize the time-average energy consumption of task execution of all mobile devices, taking into consideration the incentive constraints resulting from the prevention of over-exploiting and free-riding behaviors. A Lyapunov optimization method based on online task offloading is derived accordingly30. The Lyapunov optimization framework is also leveraged in Reference31 to solve a stochastic optimization problem, which is formulated as the offloading of continuously arriving tasks of mobile devices31. A two-step algorithm has been proposed in Study32: delay-sensitive tasks are processed in the first step, while tasks of users with energy restrictions are processed in the second step. The proper offloading destination is found by the MEC server through a maximum-matching, minimum-cost graph algorithm32. Beyond traditional methods, a Q-learning algorithm and a deep Q-network algorithm are applied in27, while the long-term energy consumption in continuous time is minimized via reinforcement learning in33.

To maximize a weighted sum of reductions in task completion time and energy consumption, a MEC-enabled multi-cell wireless network is considered in34 to assist mobile users in executing computation-intensive tasks34. A latency and energy consumption minimization scenario is proposed in Work37 for a system with one BS. The formulated problem is transformed into a sub-problem of computation offloading and that of resource allocation, which are respectively solved through the Kuhn-Munkres algorithm and the Lagrangian dual method37. A dynamic social-motivated computation offloading method has been proposed in Study38, through which the task computation latency and energy consumption are jointly minimized. A Lyapunov optimization-based method and a drift-plus-penalty algorithm are used to solve this problem38.

A framework combining MEC and cache-enabled D2D communication is presented in39 to enhance computation offloading and caching capabilities39. Like39, Study40 examines task caching in the context of a device-enhanced MEC system. Sun et al.41 propose a Stackelberg game-based incentive mechanism to encourage other devices to help a device finish its computing tasks; a graph-based algorithm then finds the optimal task assignment to improve the task processing efficiency41. A basic three-node MEC system with two UEs is considered in42, whereby one UE needs computation resources and the other acts as the helper/relay42. Moreover, one AP node is attached to one MEC server, and a four-slot protocol is proposed to enable energy-efficient device-enhanced MEC. Besides, federated learning is introduced into D2D-assisted MEC networks to lower the communication cost43, and the benefits of internet service providers (ISPs) are maximized in Reference44. Finally, we compare the pros and cons of the recent research in Table 1.

The aforementioned studies mainly solve problems formulated from a macroscopic perspective, i.e., the total task completion time, energy consumption or system capacity. However, the computing efficiency of individual devices in the D2D–MEC system has not been considered. Besides, most studies adopt a binary offloading strategy to execute tasks in the system. The studies of partial offloading either ignore high-performance helpers with few tasks or neglect the computing resources of the devices and the edge server. Inspired by this, we integrate D2D communication with the MEC system and propose a novel D2D–MEC method. To facilitate our analysis, we make the following reasonable assumptions throughout this paper.

1. All devices in the network are linked to and can communicate with the edge server. Besides, devices can communicate with each other. Once a device completes its task within the time limit, its remaining computing resources can be used to help other devices, which is similar to14. A device offloads its task to a helper or the edge server only if it cannot complete the task on time.

2. Each device has a computationally intensive and time-sensitive task that must be completed within a certain time and can be segmented. As in41, the task can be executed locally, offloaded to one of the helper devices nearby or offloaded to the edge server. When a task arrives, each device first predicts, based on its computational capability, whether its local computing resources are sufficient to complete the task within the specified delay. If so, the task is executed locally. Otherwise, according to the proposed algorithm, the part that cannot be completed locally is offloaded to a helper device or the edge server.

3. As in39, the maximum time allowed for task execution is constant and equal for all devices, i.e., execution is synchronous. Only when the current task is completed can the next one start, simultaneously across devices. Asynchronous task processing deserves further investigation.

We adopt a partial offloading strategy and make full use of the computing resources within a specified delay, so as to increase the number of supported devices and their computing efficiency in the system. Since users tend to execute tasks on their own devices, a clustering algorithm is adopted to divide a task into two subtasks according to the users’ computing capability. One subtask is executed locally, and the other is offloaded to a helper device or an edge server. LCDA is proposed to match the subtask-helper pair. Then, the system limitations are analyzed in terms of the size of the task, the tolerance time and the number of access devices. Task execution, task offloading and computation resource allocation can all be completed within the time constraints. The D2D-assisted MEC system effectively increases computing efficiency, and the computation resources of both the edge server and the devices are highly utilized. The main contributions of this work are summarized as follows.

1. To improve the network capability and computing capability, we propose a collaborative D2D-assisted MEC system that supports multi-level task offloading and resource allocation. In particular, we divide the task into two subtasks through partial offloading, which can be executed locally and remotely, respectively. The offloading decision of each device is then made from the microscopic point of view instead of the task assignment from the macroscopic view used previously. Finally, the computation resource allocation of the MEC server is given. The problem is formulated as a mixed-integer non-linear problem, which is solved through a two-phase heuristic algorithm.

2. To increase the number of access devices and achieve the maximum task execution capability, the LCDA algorithm is proposed to match the subtask-helper pair in the system. The MEC server guarantees that the remaining subtasks can be completed. The simulation results show that under different profiles (such as the number of devices, the time constraints and the size of the intensive tasks), the algorithm achieves superior performance with lower time and space complexity, which effectively improves the computing capacity of the D2D–MEC system with time constraints.

3. To verify the practicability of the proposed model and the heuristic algorithm, we use the improved greedy algorithm41, the initial task assignment algorithm25, the bipartite graph matching algorithm14 and the efficient delay-aware offloading scheme32 as benchmarks for the proposed algorithm. Compared with these four traditional D2D–MEC algorithms, the results corroborate the superior performance of the proposed scheme in the scenario with strict time constraints.

The remainder of this paper is organized as follows. The system model, problem formulation and problem solution are introduced in the "Method" section. Simulation results are given in the "Simulation results" section, and conclusions are given in the "Conclusions" section.

Method

In this section, we introduce the D2D-enhanced MEC system, followed by the communication and computation model. The computation model is structured in the following three modes: Local execution mode, Local+D2D execution mode and Local+D2D+Edge execution mode. Then, depending on these three modes, we propose the optimization problem to find the solution to maximize the computational efficiency of the devices. Finally, three algorithms are utilized to solve this problem.

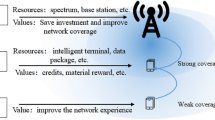

D2D–MEC system model

As shown in Fig. 1, the D2D–MEC system consists of a base station (BS) equipped with an edge server and N mobile devices owned by users. The devices are denoted by the set \({{\mathscr {N}}} \buildrel \Delta \over = \left\{ {1,2,...,N} \right\} \), which contains Cluster 1 and Cluster 2. The devices in Cluster 1 can complete their tasks locally within the time constraints and provide help to other devices. Cluster 2 represents the devices that cannot complete their tasks locally within the time delay and need to offload part of their tasks remotely. They require extra computing resources from other devices via a D2D connection or from the MEC server via a cellular connection. The sets of devices in Cluster 1 and Cluster 2 are defined as \({{{\mathscr {N}}}_1} \buildrel \Delta \over = \left\{ {1,2,...,{N_1}} \right\} , {{{\mathscr {N}}}_2} \cup {{{\mathscr {N}}}_3} \buildrel \Delta \over = \left\{ {1,2,...,{N_2}{\mathrm{+ }}{N_3}} \right\} \). In Cluster 1, Dev_a represents devices that cannot provide services to nearby devices via a D2D connection, and Dev_b represents devices that assist adjacent devices in Cluster 2, i.e., Dev_b is a helper. In Cluster 2, Dev_c refers to devices in \({{\mathscr {N}}}_2\) which obtain additional computing resources for task execution through a D2D connection, and Dev_d refers to devices in \({{\mathscr {N}}}_3\) that fail to match neighboring helpers and therefore offload their tasks to the edge server. In this process, like the work in39, the BS obtains through feedback the sequence of task sizes that the devices in Cluster 1 can take on and sends this sequence to the devices in Cluster 2 that need help. Besides, the BS can acquire the channel state information (CSI) of all devices via feedback, and orthogonal frequency-division multiple access (OFDMA) is adopted for channel access.

Communication model

Each device in \({{\mathscr {N}}}_2 \cup {{\mathscr {N}}}_3\) is allocated one sub-channel for the cellular link or D2D link. Then we can denote B and \(N_0\) as the bandwidth and the power of additive white Gaussian noise for each sub-channel respectively. If Device n chooses to offload its task remotely, using Shannon’s formula, the maximum achievable transmission rate of the D2D connection or cellular link can be expressed as

where \({P}_n^{com}\) is the transmission power of one device to its nearby helper or the edge server and h is the channel gain, which is assumed to be known at each device and to remain constant within an offloading period, although it may change at the boundary between periods45,46. In addition, this paper assumes that no objects or large buildings block radio waves in the first Fresnel region, so the LOS channel model is adopted. The notations used in this paper are summarized in Table 2.
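As an illustration of the rate model described above, the following minimal sketch evaluates the standard Shannon form for one sub-channel, using the quantities named in the text (B, \(N_0\), \({P}_n^{com}\), h). The function name `achievable_rate` and the numeric values are assumptions made for this example and are not taken from the paper or its Table 3.

```python
import math


def achievable_rate(bandwidth_hz: float, tx_power_w: float,
                    channel_gain: float, noise_power_w: float) -> float:
    """Shannon rate of one sub-channel in bits/s: B * log2(1 + P * h / N0)."""
    snr = tx_power_w * channel_gain / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)


# Illustrative values only (hypothetical, not the paper's parameters).
rate_bps = achievable_rate(bandwidth_hz=1e6, tx_power_w=0.1,
                           channel_gain=1e-6, noise_power_w=1e-10)
```

With these example numbers the signal-to-noise ratio is about 1000, so the rate is close to 10 Mbit/s on a 1 MHz sub-channel.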

Computation model

For the computation model, we consider that each device n (n \(\in {{\mathscr {N}}}\)) has a computation task characterized by \({I}_n {\mathrm{= \{ }}{{\mathrm{D}}_{\mathrm{n}}}{\mathrm{, Ap}}{{\mathrm{p}}_{\mathrm{n}}}{\mathrm{,}} t_n^{\max } {\mathrm{\} }}\). Here \({{\mathrm{D}}_{\mathrm{n}}}\) (in bits) is the data size of the task, \({\mathrm{Ap}}{{\mathrm{p}}_{\mathrm{n}}}\) is the processing density (in CPU cycles/bit), which depends on the computational complexity of the application, \({{\mathrm{C}}_{\mathrm{n}}}\) is the total number of CPU cycles required for computing \({{\mathrm{D}}_{\mathrm{n}}}\), which is given by \({{\mathrm{C}}_{\mathrm{n}}}{\mathrm{= }}{{\mathrm{D}}_{\mathrm{n}}}{\mathrm{Ap}}{{\mathrm{p}}_n}\), and \( t_n^{\max }\) is the maximum tolerable latency (in seconds). Device n can obtain the information about \({\mathrm{D}}_{\mathrm{n}}\) and \({\mathrm{Ap}}{{\mathrm{p}}_{\mathrm{n}}}\), and both values remain unchanged within the time range \( t_n^{\max }\).
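The task tuple can be represented directly in code. The sketch below is only an illustration; the class name `Task`, its field names and the numeric values are hypothetical, while the relation between cycles, data size and processing density follows the definition above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Computation task of one device (field names are illustrative)."""
    data_bits: float        # D_n, data size in bits
    cycles_per_bit: float   # App_n, processing density in CPU cycles/bit
    t_max_s: float          # t_n^max, maximum tolerable latency in seconds

    @property
    def cpu_cycles(self) -> float:
        """C_n = D_n * App_n, total CPU cycles needed for the task."""
        return self.data_bits * self.cycles_per_bit


# Hypothetical example: 2 Mbit of input at 500 cycles/bit, 1 s deadline.
task = Task(data_bits=2e6, cycles_per_bit=500.0, t_max_s=1.0)
```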

Each device can execute its computation task locally or offload part of the task to one nearby helper device or the edge server. We denote \({{{\mathscr {A}}}_{\mathrm{n}}}{\mathrm{= }}\left\{ { {\mathrm{x}}_n , y_n ,{z}_n } \right\} \) as the offloading decision of device n. Specifically, we have \({{{\mathscr {A}}}_{\mathrm{n}}}{\mathrm{= }}\left\{ {1,0,0} \right\} \) if device n can complete its task locally, \({{{\mathscr {A}}}_{\mathrm{n}}}{\mathrm{= }}\left\{ {1,1,0} \right\} \) if device n offloads part of its task to a D2D helper, and \({{{\mathscr {A}}}_{\mathrm{n}}}{\mathrm{= }}\left\{ {1,0,1} \right\} \) if device n chooses the edge server for remote execution. We can get \(1 \le {\mathrm{x}}_n + {y_n} + {z_n} \le 2\), with \({{\mathrm{x}}_n},{y_n},{z_n} \in \{ 0,1\}\).
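The three decision vectors and the constraint on their sum can be checked mechanically. The following sketch assumes, as in the three cases listed in the text, that the local component is always 1; the names `MODES`, `is_valid_decision` and `mode_of` are hypothetical.

```python
# The three execution modes named in the text, keyed by (x_n, y_n, z_n).
MODES = {
    (1, 0, 0): "local",
    (1, 1, 0): "local+d2d",
    (1, 0, 1): "local+edge",
}


def is_valid_decision(x: int, y: int, z: int) -> bool:
    """Check that every entry is binary and that 1 <= x + y + z <= 2."""
    if any(v not in (0, 1) for v in (x, y, z)):
        return False
    return 1 <= x + y + z <= 2


def mode_of(decision: tuple) -> str:
    """Return the execution mode of a decision, or raise if it is not one of the three."""
    if decision not in MODES:
        raise ValueError(f"unsupported offloading decision: {decision}")
    return MODES[decision]
```

Note that the sum constraint alone also admits vectors such as (0, 1, 1); restricting to the three listed modes is a modelling choice made in this sketch.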

As depicted in Fig. 2, Dev_a, Dev_b, Dev_c and Dev_d correspond to Dev_a, Dev_b, Dev_c and Dev_d in Fig. 1, respectively. From the perspective of the utilization of computing resources, Dev_b in Fig. 2a provides additional computing resources for Dev_c in Fig. 2b, which increases the utilization of resources in Dev_b and enables the task of Dev_c to be completed within the required time. Besides, we use \( D_{b,c}^{dev}\) to represent the subtask that Dev_b executes for Dev_c. The edge server in Fig. 2c provides additional computing resources for Dev_d in Fig. 2b. The edge server can handle a large number of tasks, and the number of tasks is also a factor affecting its processing.

We next discuss the overhead of Local computing, Local+D2D computing and Local+D2D+Edge computing in terms of three aspects (delay analysis, energy consumption and computing efficiency).

Local computing

Local computing means that a device completes the task locally with its inherent computing capability within a specified time. The time taken by a local computing device is expressed as \( T_n^{loc} = {{ C_n^{loc} } \Big / {{f}_n^{loc} }}\) (Eq. (2)),

where \({f}_n^{loc}\) represents the local computing capability of device n (in CPU cycles/s), and we use \({P}_n^{loc}\) to represent the local processing power of device n (in watt). Energy consumption brought in task processing is mainly studied, so the inherent energy consumption brought by the chip structure is not considered here. The energy consumption can then be expressed as

We define the computing efficiency(CE) of device n during \( t_n^{\max }\) as the proportion of the task handled by the device to the task that could be executed with the inherent computation capacity of the device within this time range. In this way, the local CE can be expressed as

Our goal is to enhance the CE of local execution devices, namely Dev_a and Dev_b in Cluster1 in Fig. 1. When a device executes some tasks as a helper during \( t_n^{\max }\), the CE of the device increases.
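The local quantities can be sketched as follows. The delay and the computing efficiency follow the definitions in the text (cycles divided by capability, and executed cycles divided by the cycles the device could execute within \( t_n^{\max }\)). The energy expression, power multiplied by execution time, is an assumption of this sketch, and all function names are hypothetical.

```python
def local_delay(cycles: float, f_loc: float) -> float:
    """Local execution time in seconds: C_n^loc / f_n^loc."""
    return cycles / f_loc


def local_energy(cycles: float, f_loc: float, p_loc: float) -> float:
    """Processing energy in joules, assumed here to be P_n^loc * T_n^loc."""
    return p_loc * local_delay(cycles, f_loc)


def local_ce(cycles: float, f_loc: float, t_max: float) -> float:
    """Computing efficiency: executed cycles over the capacity f_n^loc * t_n^max."""
    return cycles / (f_loc * t_max)


# Hypothetical device: 1e9 cycles of work, 2 GHz CPU, 0.8 W, 1 s deadline.
ce = local_ce(1e9, 2e9, 1.0)
```

In this example the device is busy for half of its deadline, so its local CE is 0.5 and the other half of its capacity is available for helping.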

Local + D2D computing

A device that cannot execute its entire task with its own computation capability needs to request computing resources from nearby devices. When the device finds an adjacent device that can assist in processing part of the task, the execution mode is changed to Local+D2D mode, and the assisting device is called a helper. Device n1 represents the helper and device n2 represents the neighboring device that needs help from device n1. Device n2 offloads a subtask of size \( D_{n1,n2}^{dev}\) to device n1. The delay caused by the D2D communication consists of two parts: the transmission delay of the subtask that is executed by the helper device and the processing delay in the helper. The delay for device n2 and its helper n1 and the energy consumption for device n2 can be expressed as

where \({f}_{n1}^{dev}\) represents the computation capability of the helper, \({P}_{n1,n2}^{com}\) represents the transmission power of the D2D communication link and \({P}_{n2}^{idl}\) represents the idle power of device n2 (in watts). Besides, we define \( T_{n1}^{dev'} = {{ C_{n1,n2}^{dev} } \Big / {{f}_{n1}^{dev} }}\) as the processing delay in the helper device. After the device executes a partial task as a helper, its energy consumption increases and is expressed as

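After executing the additional task as a helper, the computing efficiency of this helper device improves and can be expressed as \(CE_{n1}^{total} = {{ C_{n1}^{loc} } \Big / {{f}_{n1}^{loc} t_{n1}^{\max } }} + {{ C_{n1,n2}^{dev} } \Big / {{f}_{n1}^{dev} t_{n1}^{\max } }}\) (Eq. (9)).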
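The Local+D2D quantities described in this subsection can be sketched as below. The delay is the sum of the transmission delay and the helper's processing delay, as stated in the text, and the helper's computing efficiency adds the share of the deadline spent on the borrowed subtask. Function and parameter names are hypothetical, and the transmission delay is assumed to be the subtask size divided by the D2D link rate.

```python
def d2d_delay(subtask_bits: float, rate_bps: float,
              subtask_cycles: float, f_helper: float) -> float:
    """Delay of the offloaded part: transmission time plus helper processing time."""
    t_trans = subtask_bits / rate_bps    # assumed: D^dev / link rate
    t_proc = subtask_cycles / f_helper   # T^dev' = C^dev / f^dev
    return t_trans + t_proc


def helper_ce(own_cycles: float, subtask_cycles: float,
              f_loc: float, f_dev: float, t_max: float) -> float:
    """Helper CE: own-task share plus helped-subtask share of the deadline."""
    return own_cycles / (f_loc * t_max) + subtask_cycles / (f_dev * t_max)


# Hypothetical numbers: 1 Mbit subtask over a 10 Mbit/s link, 2e8 cycles, 1 GHz helper.
delay_s = d2d_delay(1e6, 1e7, 2e8, 1e9)
```

Here the transmission takes 0.1 s and the helper needs 0.2 s, so the offloaded part finishes after about 0.3 s; a helper that was 50% utilized by its own task rises to 70%.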

Local + D2D + edge computing

Devices that did not choose to offload the subtasks to nearby helper devices would offload their subtasks to the edge server for task execution. The execution mode is modified into Local+D2D+Edge mode. Similar to Local+D2D mode, the delay is also composed of the transmission part and processing part. The delay and energy consumption can be expressed as

where \({P}_{n,e}^{com}\) represents the transmission power of the cellular link. In this paper, time and energy consumption are considered from the perspective of battery-powered devices, so only the consumption of the devices is accounted for. The edge server is generally cable-powered and always has enough power to complete the tasks, so the computation energy consumption of the MEC server is omitted here, similar to the work in47. As in48,49, the time and energy consumed in returning the computation results from the edge server are neglected in this work, because the results are generally much smaller than the input data. The same applies to D2D computing.

Finally, we can get the total time delay and total energy consumption of device n in the current time frame expressed as

The total delay for each device can be represented by Eq. (12). \( T_n\) is the largest one of the time consumed by local execution, D2D execution and the edge server execution.
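Because the local and remote parts run in parallel, the total delay is a maximum rather than a sum. A minimal sketch of this rule, with hypothetical function names and a delay of zero standing for a mode that is not used:

```python
def total_delay(t_loc: float, t_d2d: float = 0.0, t_edge: float = 0.0) -> float:
    """Total delay of a device: the largest of the parallel execution delays."""
    return max(t_loc, t_d2d, t_edge)


def meets_deadline(t_loc: float, t_d2d: float, t_edge: float, t_max: float) -> bool:
    """True if the slowest branch still finishes within the tolerable latency."""
    return total_delay(t_loc, t_d2d, t_edge) <= t_max
```

For example, a device that computes locally for 0.9 s while its helper finishes the offloaded part after 0.6 s has a total delay of 0.9 s.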

Problem formulation

In this section, we propose an integrated framework of computing offloading and computation resource allocation in D2D–MEC wireless cellular networks. We set \({\Psi _n} = [ {\mathrm{T}}_{\mathrm{n}}^{loc} , {\mathrm{T}}_{\mathrm{n}}^{dev'} , {\lambda {\mathrm{T}}}_{\mathrm{n}}^{edg}]\), \({\mu _n} = [ D_n^{loc} , D_n^{dev} , D_n^{edg}]\), and give the optimization formulation of the problem as follows

\({\Psi _n}{{\mathscr {A}}}_n^T/ t_{\mathrm{n}}^{\max }\) represents the proportion of execution time of the device in the maximum time delay of the different modes, and we expect to get a larger one.

Since \( {\mathrm{T}}_{\mathrm{n}}^{loc} / t_{\mathrm{n}}^{\max }\) and \( {\mathrm{T}}_{\mathrm{n}}^{dev} / t_{\mathrm{n}}^{\max }\) change in the opposite direction to \( {\mathrm{T}}_{\mathrm{n}}^{edg} / t_{\mathrm{n}}^{\max }\), we prefer the former two proportions to be as high as possible and the latter to be as low as possible. Thus, we introduce a negative weight \(\lambda \) for \( {\mathrm{T}}_{\mathrm{n}}^{edg}\). Constraints C1 and C2 guarantee that the offloading decisions are valid. Constraint C3 bounds the maximum delay of each device. Constraint C4 ensures that all tasks are executed completely. Constraint C5 is the total computation resource limitation of the edge server. Constraint C6 ensures that each device that offloads its task to the edge server is allocated some computation resources. We can observe that \({\mathscr {P}}1\) is a mixed-integer non-linear problem that consists of both combinatorial variables \(\{ {{{\mathscr {A}}}_{\mathrm{n}}}\}\) and continuous variables \(\{ \mu _n, {f}_n^{edg} \}\), which is hard to solve. In the next section, we will decompose it into two phases and solve them by heuristic algorithms.
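The per-device term \({\Psi _n}{{\mathscr {A}}}_n^T/ t_{\mathrm{n}}^{\max }\) can be evaluated as a weighted inner product. The sketch below only illustrates how the negative weight penalizes time spent on the edge server; the function name and the value of the weight used in the example are hypothetical, since this section does not state the value of \(\lambda \).

```python
def device_objective(t_loc: float, t_dev: float, t_edg: float,
                     decision: tuple, t_max: float, lam: float) -> float:
    """Psi_n * A_n^T / t_max with Psi_n = [T_loc, T_dev', lam * T_edg]."""
    if lam >= 0:
        raise ValueError("the weight of the edge term is expected to be negative")
    psi = (t_loc, t_dev, lam * t_edg)
    return sum(p * a for p, a in zip(psi, decision)) / t_max


# Hypothetical device in Local+Edge mode with lam = -0.5.
value = device_objective(0.8, 0.0, 0.4, (1, 0, 1), 1.0, -0.5)
```

With these numbers the local share contributes 0.8 and the edge share subtracts 0.2, giving approximately 0.6.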

Problem decomposition and solution

The main challenge in solving \({\mathscr {P}}1\) is that both combinatorial variables \(\{ {{{\mathscr {A}}}_{\mathrm{n}}}\}\) and continuous variables \(\{ \mu _n, {f}_n^{edg} \}\) are involved. However, by analyzing the problem, we can divide it into two phases and then solve them individually.

By analyzing the six constraints, we find that constraints C1 and C2 concern task offloading, constraints C5 and C6 concern the resource allocation of the edge server, and constraints C3 and C4 apply to the whole task process. The offloading decisions \({{\mathrm{x}}_n},{y_n}{\mathrm{}},{z_n}\) satisfy \(1 \le {{\mathrm{x}}_n} + {y_n} + {z_n} \le 2\). A task executed via a D2D connection is executed on a helper device, so \(1 \le {{\mathrm{x}}_n} + {y_n} \le 2\) holds independently of the edge server, which means constraints C2 and C4 can be split. Based on this, we decompose the task into two parts: execution on a device through D2D communication and execution on the edge server.

Task assignment and offloading decision

Since our goal is to maximize the weighted sum of the computing efficiency and the proportion of edge server execution time to the total time of all devices, the main limitations of \({\mathscr {P}}1\) include the task offloading strategy and the limited computation resources of the edge server. In the first phase, we decompose the task offloading strategy and device computing efficiency acquired from \({\mathscr {P}}1\) and its mathematical formula can be expressed as

According to Eq. (9), the computing efficiency obtained from the task execution is \({{ C_n^{loc} } \Big / {{f}_n^{loc} t_n^{\max } }} + {{ C_{n,n2}^{dev} } \Big / {{f}_n^{dev} t_n^{\max } }}\). According to Eq. (2), the local execution time is \( T_n^{loc} = {{ C_n^{loc} } \Big / {{f}_n^{loc} }}\), and we know \( T_n^{dev'} = {{ C_{n,n2}^{dev} } \Big / {{f}_n^{dev} }}\). It follows that \(CE_n^{total} = {\mathrm{T}}_{\mathrm{n}}^{loc} / t_{\mathrm{n}}^{\max } + {\mathrm{T}}_{\mathrm{n}}^{dev'} / t_{\mathrm{n}}^{\max }\). The two expressions describe the same quantity from two aspects, i.e., computation resource occupation and time occupation.

We transform the constraint (C3)\(\sim \)(C4) into \((C3)^1\sim (C4)^1\), because the device that needs help belongs to \({{\mathscr {N}}}_2\). Only the device that requires additional resources via D2D connection is considered, and its task is executed locally or executed through a helper. The total delay of these devices is the largest one of the local execution delay, the delay of the device that executes D2D computing and the helper execution delay, namely constraint \((C3)^1\).

According to whether the devices can complete their tasks within the required time, we divide the devices into two clusters: local computing and remote computing. The proposed clustering method for the task-assigned algorithm (CTAA) is described in Algorithm 1. Local computing devices may have excess computing resources to provide for another device, while remote computing devices have reached the maximum computing efficiency when performing local computing. Then, the low complexity dichotomy algorithm (LCDA) described in Algorithm 2 is used to get the set of devices that execute the remaining tasks in the nearby helper devices and the edge server respectively.
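Algorithm 1 itself is not reproduced in this section, so the following is only a sketch of the clustering idea described above: a device whose required cycles fit into its capacity within the deadline goes to the local cluster and keeps a record of its spare cycles, while any other device goes to the remote cluster with a record of the cycles it cannot execute locally. The function name and the dictionary-based output are assumptions of this example.

```python
def cluster_devices(cycles: list, f_loc: list, t_max: float):
    """Split devices into helpers (spare cycles) and requesters (missing cycles)."""
    helpers, requesters = {}, {}
    for n, (c, f) in enumerate(zip(cycles, f_loc)):
        capacity = f * t_max              # cycles device n can execute in time
        if c <= capacity:
            helpers[n] = capacity - c     # Cluster 1: spare computing resource
        else:
            requesters[n] = c - capacity  # Cluster 2: part that must be offloaded
    return helpers, requesters


# Hypothetical example: two 2 GHz devices with a 1 s deadline.
helpers, requesters = cluster_devices([1e9, 3e9], [2e9, 2e9], 1.0)
```

In the example, device 0 has 1e9 spare cycles and device 1 must offload 1e9 cycles, which matches the statement that remote computing devices already run at maximum local efficiency.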

In Algorithm 2, the adjacent helper is chosen by using LCDA to match the computation resources required by a device with those provided by a helper device, so as to achieve the best matching. A device with a large task requirement is matched with a helper that offers correspondingly large resources, and vice versa. The result is sub-optimal but practical, because the optimal solution requires a perfect match, which would incur substantial extra cost.
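Algorithm 2 is not reproduced in this section, so the sketch below is not the authors' LCDA. It only illustrates one way to realize a dichotomy-style (binary search) matching between required and offered resources: helpers are sorted by spare cycles, and each requester, largest demand first, is matched by binary search to the smallest helper that can still cover its demand. Requesters without a suitable helper are sent to the edge server. The function name is hypothetical, device identifiers are assumed to be non-negative integers, and transmission delay is ignored.

```python
from bisect import bisect_left


def match_helpers(spare: dict, deficit: dict):
    """Match requesters to helpers by binary search over sorted spare capacity."""
    pool = sorted((cap, helper) for helper, cap in spare.items())
    pairs, to_edge = {}, []
    # Serve the largest demands first so that big helpers are not wasted.
    for dev, need in sorted(deficit.items(), key=lambda kv: -kv[1]):
        i = bisect_left(pool, (need, -1))  # first helper with cap >= need
        if i < len(pool):
            _, helper = pool.pop(i)        # each helper serves one device
            pairs[dev] = helper
        else:
            to_edge.append(dev)            # no helper fits: use the edge server
    return pairs, to_edge


pairs, to_edge = match_helpers({0: 5.0, 1: 2.0}, {2: 1.5, 3: 4.0, 4: 3.0})
```

In the example, device 3 is matched with helper 0, device 2 with helper 1, and device 4 falls back to the edge server. Each lookup is logarithmic in the number of helpers, although removing an entry from a Python list is linear, so a balanced tree would be needed to keep the whole matching sub-quadratic.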

Computation resource allocation

The second phase considers the allocation of computing resources at the edge server to minimize computing time. We can express the problem as

We convert (C3)\(\sim \)(C4) to \((C3)^2\) \(\sim \) \((C4)^2\). The devices offloaded to the edge server are in \({{\mathscr {N}}}_3\), which does not involve helper execution, so this conversion is reasonable. In this round of task execution, the maximum delay of all devices offloaded to the edge server is the same, i.e., \( T_{\mathrm{n}}^{\max }\). We need to ensure that the execution time of each device does not exceed the maximum time delay, namely constraint \((C3)^2\). We denote the computing resources allocated to the devices that execute tasks at the edge server as \( {\{ f}_1^{edg} ,{f}_2^{edg} ,......,{f}_{ N_3 }^{edg} \}\). The total amount of computing resources on the edge server is fixed, i.e., \(\sum \limits _{n \in {\mathcal{N}_3}} {{f}_n^{edg} }\) equals a fixed value \( {\mathrm{F}}^{{\mathrm{fog}}}\). To find the optimal solution, we only need to find the minimum value of \(\sum \nolimits _{n \in {\mathcal{N}_3}} { T_n^{edg} }\), i.e., \(\sum \nolimits _{n \in {{{\mathscr {N}}}_3}} { T_n^{edg} } = {{ {\mathrm{C}}_1^{{\mathrm{edg}}} } \Big / {{f}_1^{{\mathrm{edg}}} }} + {{ {\mathrm{C}}_2^{{\mathrm{edg}}} } \Big / {{f}_2^{{\mathrm{edg}}} }} + ...... + {{ {\mathrm{C}}_{ N_3 }^{{\mathrm{edg}}} } \Big / {{f}_{ N_3 }^{{\mathrm{edg}}} }}\). To ensure that the edge server resources are utilized effectively and all tasks are executed in the shortest time, we transform problem \({{\mathscr {P}}}_3\) to obtain the resource allocation ratio which meets the minimum requirements. Let this ratio be \( F_1^{{\mathrm{edg}}} , F_2^{{\mathrm{edg}}} ,......, F_{ N_3 }^{{\mathrm{edg}}}\), and we set \({f}_1^{edg} = \eta F_1^{{\mathrm{edg}}} ,{f}_2^{edg} = \eta F_2^{{\mathrm{edg}}} ,......,{f}_{ N_3 }^{edg} = \eta F_{ N_3 }^{{\mathrm{edg}}}\), where \(\eta = {{ F^{fog} } \Bigg / {\sum \nolimits _{n \in {{{\mathscr {N}}}_3}} { F_n^{edg} } }}\). When each device is assigned specific resources, we can transform \(\sum \nolimits _{n \in {{{\mathscr {N}}}_3}} { T_n^{edg} }\) into

We then add and subtract the sum of coefficients \(\sum \nolimits _{n = 1}^{ N_3 } { F_n^{{\mathrm{edg}}} }\), which leaves the value of the expression unchanged, and apply the mean inequality to obtain the solution

According to the property of inequality, we get

Finally, we can get the computing resources allocated to each device executing the task on the edge server. The specific algorithm is described in Algorithm 3.
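The scaling step described above can be sketched in a few lines of Python. This is a minimal illustration, not a reproduction of Algorithm 3. It assumes that the minimum requirement \(F_n^{{\mathrm{edg}}}\) of a device is the CPU frequency that finishes its \({\mathrm{C}}_n^{{\mathrm{edg}}}\) cycles exactly at the deadline, and it ignores transmission delay. The function name and argument names are hypothetical.

```python
from typing import List


def allocate_edge_resources(cycles: List[float], t_max: float, f_total: float) -> List[float]:
    """Scale each device's minimum CPU requirement so the whole edge budget is used."""
    if t_max <= 0 or f_total <= 0:
        raise ValueError("t_max and f_total must be positive")
    # Assumed minimum requirement F_n: just enough cycles/s to finish C_n cycles within t_max.
    minimum = [c / t_max for c in cycles]
    required = sum(minimum)
    if required == 0:
        raise ValueError("no workload to allocate")
    eta = f_total / required  # eta = F_fog / sum of F_n
    if eta < 1:
        # The budget cannot cover even the minimum requirements.
        raise ValueError("edge server cannot meet the deadline for every device")
    return [eta * f for f in minimum]  # f_n = eta * F_n


if __name__ == "__main__":
    workload = [2e8, 4e8, 6e8]  # CPU cycles per offloaded task (illustrative values)
    allocation = allocate_edge_resources(workload, t_max=1.0, f_total=2.4e9)
    print(allocation)
    print([c / f for c, f in zip(workload, allocation)])  # per-device execution time
```

Under these assumptions every device finishes at the same time, \(T_{\mathrm{n}}^{\max } / \eta \), so the deadline is met whenever \(\eta \ge 1\) and the whole budget \({\mathrm{F}}^{{\mathrm{fog}}}\) is consumed.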

Simulation results

In this section, simulation results of the proposed D2D–MEC system are presented to verify the performance enhancement. The channel power gain in the D2D–MEC system is modeled as \({\mathrm{h}} = {10}^{ - 3} { d^{ - \zeta }}\phi \), where \(d \sim u(0.2,30)\) (in m) is the distance between the two communicating terminals, \(\zeta \) is the path-loss exponent, assumed to be 2.523, and \(\phi \sim CN(0,1)\) is the small-scale fading, modeled as an independent and identically distributed circularly symmetric complex Gaussian variable with zero mean and unit variance27. Unless otherwise stated, the major simulation parameters are summarized in Table 3.
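As a rough illustration of the channel model, the following Python sketch draws one realization of the gain. Two points are assumptions rather than statements from the text: the power gain is taken to use \(|\phi |^2\), since \(\phi \) itself is complex, and the path-loss exponent is passed in by the caller instead of being hard-coded. The function name is hypothetical.

```python
import math
import random


def sample_channel_gain(zeta: float, rng: random.Random,
                        d_min: float = 0.2, d_max: float = 30.0) -> float:
    """Draw one channel power gain h = 1e-3 * d**(-zeta) * |phi|**2."""
    d = rng.uniform(d_min, d_max)  # distance in metres, d ~ U(0.2, 30)
    # CN(0, 1): real and imaginary parts are independent, each with variance 1/2.
    sigma = math.sqrt(0.5)
    phi = complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
    # Assumption: the power gain uses the squared magnitude of the fading coefficient.
    return 1e-3 * d ** (-zeta) * abs(phi) ** 2
```

Passing a seeded `random.Random` instance keeps repeated experiments reproducible, which is convenient when results are averaged over many independent runs.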

Task execution result

The simulation is carried out to verify the rationality of the proposed algorithm from two aspects, i.e., the task execution time and the task execution energy consumption of each device, as shown in Figs. 3 and 4. In Fig. 3, the numbers of devices that execute tasks locally, offload to a helper via a D2D connection and offload to the edge server via a cellular connection are (24, 10, 6), corresponding to access ratios of (0.6, 0.25, 0.15). The number of devices executing locally is significantly higher than the number executing remotely, and the execution time at the edge server is relatively short because the tasks offloaded there are not heavy. In addition, all tasks are completed within the maximum tolerance time.

Task execution delay and energy consumption are two important factors affecting the performance of a model. Fig. 4 gives the energy consumption of each device and the incremental energy consumption of the helper devices. The average energy consumption of local computing devices is lower than that of remote computing devices. The time and energy that devices spend executing tasks locally fluctuate, depending on their performance and the size of the assigned tasks. The results show that the proposed resource allocation model and task offloading algorithm ensure that each device can complete its assigned task with less energy consumption within the specified time delay.

Task mode comparison

To increase the computing efficiency and the access rate of the devices, and to improve the completion rate of the tasks in the system, three task execution modes are adopted, namely local computing, D2D computing and edge server computing. We measure the effectiveness of the proposed algorithm in terms of the number of devices in the system, the task size assigned to a single device and the task execution delay, and then compare the three modes. The results are averaged over 1000 independent experiments to ensure the statistical reliability of the simulation.

We set the number of devices to 40 and 80, respectively. For each setting, with the maximum delay ranging from 0.8 to 1.5 s, we collect statistics on device access in the three modes. As Fig. 5 shows, the trend is the same in both cases, so we analyze the case of 40 devices. When the maximum delay takes its smallest value (0.8 s), the number of devices that execute tasks locally is at its minimum. The number of devices that offload tasks to helpers via D2D connections stays relatively flat, because only high-performance devices that are assigned relatively small tasks are likely to execute additional tasks as helpers, and the set of such devices is relatively constant. The number of devices that execute tasks remotely is at its maximum at this point, as is the number that offload tasks to the edge server. As the maximum delay increases, the number of devices that can complete tasks locally increases slowly, while the number that offload tasks remotely decreases. The utilization of computing resources at the edge server falls, as does the number of devices served by it. The remaining computing resources on the edge server can be used in other parts of the cellular network.

The introduction of D2D communication improves the computing efficiency of the devices and effectively relieves the computing pressure on the edge server. In addition, if the maximum delay is too short, most devices cannot complete their tasks locally, which congests the connected links, and the resources allocated by the edge server may not be sufficient to complete the tasks.

As shown in Fig. 6, we conduct a statistical analysis of the device access rate in the three modes as the number of devices in the system ranges from 10 to 80. The performance configuration of the devices is shown in Table 3. When the total number of devices is less than 20, the number of local computing devices is larger. As the number of devices increases from 20 to 50, the access rates of D2D mode and edge mode change in opposite directions, and the number of devices accessing D2D mode increases steadily. When the number of devices is 40, the access rates of the three modes are (0.58, 0.3, 0.13), which is consistent with the result in Fig. 3. When the total number of devices is greater than 50, the device access rate tends to be stable in all three modes. Some further insight can be obtained from the simulation parameters in Table 3. The ranges of \({\mathrm{D}}_{\mathrm{n}}\) and \({\mathrm{Ap}}{{\mathrm{p}}_{\mathrm{n}}}\) are [0.1, 2] Mbits and [500, 2000] CPU cycles/bit respectively, the tolerance time is 1.1 s and the computation resource of a device, \(f_{\mathrm{n}}\), lies in [0.5, 2] \(\times 10^9\) CPU cycles/s. For convenience of description, the lower and upper limits of \({\mathrm{D}}_{\mathrm{n}}\) and \({\mathrm{Ap}}{{\mathrm{p}}_{\mathrm{n}}}\) are denoted by \({\mathrm{D}}_{\mathrm{n}}^{\mathrm{l}}\) and \({\mathrm{D}}_{\mathrm{n}}^{\mathrm{h}}\), \({\mathrm{App}}_{\mathrm{n}}^{\mathrm{l}}\) and \({\mathrm{App}}_{\mathrm{n}}^{\mathrm{h}}\) respectively. The task assigned to each device, i.e., the pair \(({\mathrm{D}}_{\mathrm{n}}, {\mathrm{Ap}}{{\mathrm{p}}_{\mathrm{n}}})\), is uniformly distributed on the rectangle \({\mathrm{S}} = \left\{ {\left( {{\mathrm{x,y}}} \right) |{\mathrm{D}}_{\mathrm{n}}^{\mathrm{l}} \le {\mathrm{x}} \le {\mathrm{D}}_{\mathrm{n}}^{\mathrm{h}},{\mathrm{App}}_{\mathrm{n}}^{\mathrm{l}} \le {\mathrm{y}} \le {\mathrm{App}}_{\mathrm{n}}^{\mathrm{h}}} \right\} \).
When \(\left( {{\mathrm{x,y}}} \right) \in {\mathrm{S}}\), the joint probability density is \({\mathrm{f}}\left( {{\mathrm{x,y}}} \right) = {1 \Big / {\left( {\left( {{\mathrm{D}}_{\mathrm{n}}^{\mathrm{h}} - {\mathrm{D}}_{\mathrm{n}}^{\mathrm{l}}} \right) \left( {{\mathrm{App}}_{\mathrm{n}}^{\mathrm{h}} - {\mathrm{App}}_{\mathrm{n}}^{\mathrm{l}}} \right) } \right) }}\); when \(\left( {{\mathrm{x,y}}} \right) \notin {\mathrm{S}}\), \({\mathrm{f}}\left( {{\mathrm{x,y}}} \right) = 0\). The task size that a device can execute locally is uniformly distributed on \([{\mathrm{Tsk}}_{\mathrm{n}}^{\mathrm{l}},{\mathrm{Tsk}}_{\mathrm{n}}^{\mathrm{h}}]\), where \({\mathrm{Tsk}}_{\mathrm{n}}^{\mathrm{l}}=0.5 \times 1.1 \times {10^9}\) and \({\mathrm{Tsk}}_{\mathrm{n}}^{\mathrm{h}}=2 \times 1.1 \times {10^9}\). Solving for the probability that an assigned task falls within the range a device can execute locally,

we obtain a ratio of 0.65 for the devices that execute tasks locally. When the total number of devices is 40 and 80, the theoretical optimal number of local execution devices is therefore 26 and 52 respectively. As shown in Fig. 5, the measured values are 24 and 49 respectively, and the matching degree reaches 93.27%, which is within the tolerance and consistent with our conclusion.
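The arithmetic behind these figures can be checked with a short Python sketch. The text does not define the matching degree, so the definition used here is an assumption: the mean of the measured-to-theoretical ratios over the two settings. The function name is hypothetical.

```python
def matching_degree(measured, theoretical):
    """Mean of the measured/theoretical ratios, expressed as a percentage."""
    ratios = [m / t for m, t in zip(measured, theoretical)]
    return 100.0 * sum(ratios) / len(ratios)


local_ratio = 0.65
theoretical = [local_ratio * n for n in (40, 80)]  # 26 and 52 devices
print(round(matching_degree([24, 49], theoretical), 2))  # prints 93.27
```

With this definition, 24/26 and 49/52 average to about 0.9327, which agrees with the reported 93.27%.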

We extend the task size range from [0.1, 1] to [0.1, 3] Mbits and conduct a statistical analysis of the number of devices in the three modes, since the task size also affects the task execution process of each device. As shown in Fig. 7, when the task size is small, the local and D2D modes can finish all tasks in the system. As the maximum task size increases, some devices need to execute larger tasks, and the performance of the devices limits the number that can execute tasks locally. The growing number of devices that cannot execute tasks locally affects the number of devices that execute tasks in D2D mode, which increases slightly and remains relatively stable overall. At this point, D2D mode can no longer improve the overall performance of the system, and the number of devices executed on the edge server increases to relieve system stress. Compared with traditional D2D communication, the Local + D2D + MEC mode has more significant advantages in processing tasks.

Task algorithm optimization

The mode selection algorithm we propose for the D2D–MEC system is the low complexity dichotomy algorithm (LCDA), which we compare with two baseline algorithms, namely the maximum task assignment method (MTAM) and the random task assignment method (RTAM). The MTAM algorithm lets a device select the neighboring device that provides the largest computation capability as its helper, so devices that select earlier have a higher hit rate, while those that select later are less likely to offload their tasks. The RTAM algorithm randomly selects one adjacent device as a helper for the device, chosen among the devices that can provide sufficient computing capability. Fig. 8 shows how the proposed algorithm improves the computing efficiency of the local computing devices compared with the two benchmark algorithms. Fig. 8a–c show the improvement when the number of devices is 40 and the maximum delay is (0.8 s, 1.1 s, 1.5 s), and Fig. 8d–f show it when the number of devices is 80 and the maximum delay is (0.9 s, 1.1 s, 1.3 s). Table 4 shows the number of helper devices and the average CE rate under the three algorithms in these six situations.
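The two baselines can be sketched roughly as follows. This is an illustrative reading of the descriptions above, not the authors' implementation: it assumes that each helper serves at most one requester, that requesters are served in a fixed order, and that a helper's offer is summarized by a single spare-capability number. All function and variable names are hypothetical.

```python
import random
from typing import Callable, Dict, List, Optional


def mtam_select(demand: float, spare: Dict[str, float]) -> Optional[str]:
    """MTAM-style choice: take the neighbour with the largest spare capability."""
    if not spare:
        return None
    best = max(spare, key=lambda h: spare[h])
    return best if spare[best] >= demand else None


def rtam_select(demand: float, spare: Dict[str, float], rng: random.Random) -> Optional[str]:
    """RTAM-style choice: pick uniformly among neighbours that can cover the demand."""
    capable = sorted(h for h, cap in spare.items() if cap >= demand)
    return rng.choice(capable) if capable else None


def assign(demands: List[float], spare: Dict[str, float],
           select: Callable[[float, Dict[str, float]], Optional[str]]) -> List[Optional[str]]:
    """Serve requesters in order; a chosen helper is removed (assumed one task per helper)."""
    remaining = dict(spare)
    result: List[Optional[str]] = []
    for demand in demands:
        helper = select(demand, remaining)
        if helper is not None:
            del remaining[helper]
        result.append(helper)
    return result


if __name__ == "__main__":
    helpers = {"a": 4.0, "b": 1.5}
    # The first requester takes the strongest helper, so the second one finds no match.
    print(assign([1.0, 3.0], helpers, mtam_select))
    rng = random.Random(1)
    print(assign([1.0, 3.0], helpers, lambda d, s: rtam_select(d, s, rng)))
```

In the MTAM run, the small first request occupies the strongest helper and the larger second request is left unmatched, which illustrates why devices that select earlier have a higher hit rate.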

Figure 8. Computing efficiency versus the number of local computing devices for different numbers of devices (NUM) and maximum delays (DEY): (a) NUM = 40, DEY = 0.8 s; (b) NUM = 40, DEY = 1.1 s; (c) NUM = 40, DEY = 1.5 s; (d) NUM = 80, DEY = 0.9 s; (e) NUM = 80, DEY = 1.1 s; (f) NUM = 80, DEY = 1.3 s.

When the number of devices is fixed, taking 40 as an example, the CE of the devices that need to offload reaches the maximum value, so only the local execution devices are analyzed in the figure. As the delay increases, the total number of local execution devices also increases: the numbers of devices in Fig. 8a–c are (16, 24, 30). For ease of observation, the CE depicted in the figure excludes the local CE and represents only the incremental CE of helpers. The number of nodes in the figure is the number of helpers, and the amplitude is the increase in CE. The overall improvement achieved by the proposed algorithm is superior to that of the other two algorithms.

Table 4 lists the number of helper devices and the percentage of improved efficiency under the three algorithms when the total number of devices is 40 and 80 and the maximum time delay is (0.8 s, 1.1 s, 1.5 s) and (0.9 s, 1.1 s, 1.3 s) respectively. The comparison shows that the proposed algorithm uses a relatively small number of helper devices, yet its average CE rate is significantly higher than that of the other two algorithms.

Model comparison results

The above simulation results comprehensively analyze the performance of the D2D–MEC system and show that the proposed algorithm can effectively improve the computing efficiency of the devices, enhance the capacity of the system and increase the number of devices that can access it. To further verify the performance of the algorithm, we compare it with four traditional D2D–MEC algorithms: the improved greedy algorithm41, the initial task assignment algorithm (ITA)25, the bipartite graph matching algorithm (BGMA)14, and the energy-efficient and delay-aware offloading scheme (EEDOS)32. The comparison is carried out under our proposed scenarios. The completed tasks of the five algorithms under different execution modes are illustrated in Figs. 9 and 10. Since each device is assigned one task, the number of devices in the system equals the number of tasks. The number of devices in Figs. 9 and 10 is 40 and 80 respectively, and the maximum delay is 0.8–1.5 s and 0.8–1.4 s respectively.

As an example, Fig. 9 compares the total number of devices executed through local computing and D2D computing with the number of devices executed on the edge server under different time delays. As shown in the figure, the LCDA algorithm, which gives priority to local computing and D2D computing, can complete all tasks within the specified time delay, while the other four algorithms cannot. When the delay is short, the LCDA algorithm requires more computing resources from the edge server and the EEDOS algorithm has the same trend, while the other algorithms have no significant change in demand for the edge server.

We also compare the number of completed tasks of the five algorithms. The number of devices is set to 40 and 80, and the delay constraint ranges from 0 to 1.4 s. Accordingly, we obtain the total number of tasks completed by the five algorithms at each time delay. Figures 11 and 12 show that the proposed LCDA algorithm outperforms the other four algorithms. As the time delay increases, the total number of tasks completed by the LCDA algorithm is significantly larger than that of the other four algorithms. When the delay reaches 0.8 s, all devices under the LCDA algorithm can complete their tasks, and this remains the case for longer delays, whereas at this point the other four algorithms still cannot complete all tasks.

In conclusion, compared with some traditional D2D–MEC resource allocation algorithms, the LCDA algorithm is superior under the proposed scenarios. The proposed algorithm performs better because the local and edge server computation resources are considered together and the partial offloading strategy is used in the model. By contrast, the improved greedy algorithm only considers binary offloading and idle helper devices, the ITA algorithm ignores the partial offloading strategy, the BGMA algorithm does not take the computing resources of the BS into account, and the EEDOS algorithm only considers help from idle devices and ignores high-performance devices that have few tasks to handle.

Complexity analysis

In this section, we briefly analyze the complexity of the proposed algorithm in its two phases: the computation offloading strategy and D2D computing efficiency obtained in Phase \({{\mathscr {P}}}_2\), and the edge server resource allocation in Phase \({{\mathscr {P}}}_3\). In the proposed scheme, Problem \({{\mathscr {P}}}_2\) can be divided into two sub-problems. First, the devices are divided into two clusters through Algorithm 1, with time complexity O(N), where N is the number of devices involved in resource allocation. Second, Algorithm LCDA selects the helper device and calculates its CE. The time complexity of LCDA is O(\(\log {N^1}\)), where \(N^1\) is the number of devices that have been matched in the system, and in the best and the worst case, the complexity is O(\(N^1\)) and O(\({iterator}^{{N^1}}\)) respectively, where iterator is the number of iterations used to find a matching device, as set in the fifth line of Algorithm 2. The worst case arises in the extreme situation where the devices that can complete their tasks on time can offer only few spare resources, while the devices that need help require a lot of resources in the same period. The resource allocation problem of the edge server, \({{\mathscr {P}}}_3\), can be solved in polynomial time. Accordingly, the overall computational complexity is O(N). In comparison, among the four traditional D2D–MEC algorithms, the time complexity of the greedy algorithm is O(\(N^2\)), that of the ITA algorithm is O(\(N^{N+2}\)) and that of the BGMA algorithm is O(\(TN^3\)), which are related to the time delay, and that of the EEDOS algorithm is a long polynomial. Therefore, the scheme proposed in this paper has a low computational complexity.
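To make the two complexity classes concrete, the sketch below shows a single-pass split of the devices, which is O(N), and a binary search over sorted spare capacities, which is O(log n). Both functions are generic illustrations under stated assumptions and do not reproduce Algorithm 1 or Algorithm 2: the split criterion (a task is local if its cycles fit within \(f_n T_{\mathrm{n}}^{\max }\)) and the use of a sorted list of spare capacities are assumptions, and all names are hypothetical.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple


def split_devices(workload: List[float], capacity: List[float],
                  t_max: float) -> Tuple[List[int], List[int]]:
    """Partition device indices into (local, remote) in one pass: O(N)."""
    local: List[int] = []
    remote: List[int] = []
    for n, (cycles, f) in enumerate(zip(workload, capacity)):
        # Assumed criterion: the task is local if it finishes within t_max at frequency f.
        if cycles <= f * t_max:
            local.append(n)
        else:
            remote.append(n)
    return local, remote


def smallest_sufficient(spare_sorted: List[float], demand: float) -> Optional[int]:
    """Index of the smallest spare capacity >= demand in an ascending list: O(log n)."""
    i = bisect_left(spare_sorted, demand)
    return i if i < len(spare_sorted) else None
```

For example, with workloads of (1e9, 3e9, 5e8) cycles, capacities of (1e9, 2e9, 1e9) cycles/s and a 1.1 s deadline, only the second device needs remote help.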

Conclusions

This paper proposes a multi-user D2D–MEC system to improve the computing efficiency of devices, where each device has a task of variable length to execute within a specified delay. The devices execute tasks locally unless they are unable to complete them on time, in which case they offload part of their tasks to nearby devices with ample computing resources via D2D connections or to an edge server. First, a mixed-integer non-linear problem is formulated to maximize the computing efficiency of the system. We then solve it in two phases. In the first phase, following the local computation priority, each task is divided into local execution and remote execution according to Algorithm 1. The remote executing devices first select nearby helpers to offload their tasks through Algorithm 2, and an offloading strategy is then obtained by solving the problem. The second phase considers the assignment of computing resources in the edge server, and the assignment scheme is obtained through Algorithm 3. Numerical simulation results show that, compared with some traditional D2D–MEC algorithms, the proposed algorithm effectively increases the number of access devices and completed tasks. Further, the task execution efficiency is improved, and superior performance is achieved with a lower complexity.

Through the combination of D2D communication technology and MEC, computing and spectrum resources are expanded and large-scale device access is increased. This is an application scenario of 5G that provides low-latency service for the computation-intensive tasks of mobile terminals, and it belongs to the technical field of task offloading in the D2D–MEC system. In addition, ICT, as the integration of IT (MEC) and CT (D2D) technologies, can be used for infrastructure construction in the smart city of 6G. In future work, ICT, digital twin and blockchain technologies will be applied to the Internet of Vehicles field, which can further promote research on resource management and task offloading in multi-link cooperative transmission and secure transmission. Our work has theoretical guiding significance for subsequent research.

References

Mach, P. & Becvar, Z. Mobile edge computing: A survey on architecture and computation offloading. IEEE Commun. Surv. Tutor. 19, 1628–1656. https://doi.org/10.1109/COMST.2017.2682318 (2017).

Pencheva, E. N. & Atanasov, I. I. Mobile edge service for d2d communications. In 2018 IEEE XXVII International Scientific Conference Electronics—ET 1–4 (2018). https://doi.org/10.1109/ET.2018.8549598.

System, C. Cisco annual internet report. Cisco White Paper (2020).

Fernando, N., Seng, W. L. & Rahayu, W. Mobile cloud computing: A survey. Future Gener. Comput. Syst. 29, 84–106 (2013).

Hu, S. & Li, G. Dynamic request scheduling optimization in mobile edge computing for IoT applications. IEEE Internet Things J. 7, 1426–1437. https://doi.org/10.1109/JIOT.2019.2955311 (2020).

Qin, M. et al. Power-constrained edge computing with maximum processing capacity for IoT networks. IEEE Internet Things J. 6, 4330–4343. https://doi.org/10.1109/JIOT.2018.2875218 (2019).

Yang, L., Cao, J., Cheng, H. & Ji, Y. Multi-user computation partitioning for latency sensitive mobile cloud applications. IEEE Trans. Comput. 64, 2253–2266. https://doi.org/10.1109/TC.2014.2366735 (2015).

Tang, H. & Ding, Z. Mixed mode transmission and resource allocation for D2D communication. IEEE Trans. Wirel. Commun. 15, 162–175. https://doi.org/10.1109/TWC.2015.2468725 (2016).

Lai, W., Wang, Y., Lin, H. & Li, J. Efficient resource allocation and power control for LTE-A D2D communication with pure D2D model. IEEE Trans. Veh. Technol. 69, 3202–3216. https://doi.org/10.1109/TVT.2020.2964286 (2020).

Mehrabi, M. et al. Device-enhanced MEC: Multi-access edge computing (MEC) aided by end device computation and caching—A survey. IEEE Access 7, 166079–166108. https://doi.org/10.1109/ACCESS.2019.2953172 (2019).

Mahmoodi, S. E., Uma, R. N. & Subbalakshmi, K. P. Optimal joint scheduling and cloud offloading for mobile applications. IEEE Trans. Cloud Comput. 7, 301–313. https://doi.org/10.1109/TCC.2016.2560808 (2019).

Kao, Y., Krishnamachari, B., Ra, M. & Bai, F. Hermes: Latency optimal task assignment for resource-constrained mobile computing. IEEE Trans. Mob. Comput. 16, 3056–3069. https://doi.org/10.1109/TMC.2017.2679712 (2017).

Huang, D., Wang, P. & Niyato, D. A dynamic offloading algorithm for mobile computing. IEEE Trans. Wirel. Commun. 11, 1991–1995. https://doi.org/10.1109/TWC.2012.041912.110912 (2012).

Xie, J., Jia, Y., Chen, Z., Nan, Z. & Liang, L. D2D computation offloading optimization for precedence-constrained tasks in information-centric IoT. IEEE Access 7, 94888–94898. https://doi.org/10.1109/ACCESS.2019.2928891 (2019).

Chen, X., Zhou, Z., Wu, W., Wu, D. & Zhang, J. Socially-motivated cooperative mobile edge computing. IEEE Netw. 32, 177–183. https://doi.org/10.1109/MNET.2018.1700354 (2018).

Hu, G., Jia, Y. & Chen, Z. Multi-user computation offloading with D2D for mobile edge computing. In 2018 IEEE Global Communications Conference (GLOBECOM), 1–6 (2018). https://doi.org/10.1109/GLOCOM.2018.8647906.

Oueis, J., Strinati, E. C., Sardellitti, S. & Barbarossa, S. Small cell clustering for efficient distributed fog computing: A multi-user case. In 2015 IEEE 82nd Vehicular Technology Conference (VTC2015-Fall), 1–5 (2015). https://doi.org/10.1109/VTCFall.2015.7391144.

Xu, J., Chen, L. & Ren, S. Online learning for offloading and autoscaling in energy harvesting mobile edge computing. IEEE Trans. Cognit. Commun. Netw. 3, 361–373. https://doi.org/10.1109/TCCN.2017.2725277 (2017).

Lyu, X., Tian, H., Sengul, C. & Zhang, P. Multiuser joint task offloading and resource optimization in proximate clouds. IEEE Trans. Veh. Technol. 66, 3435–3447. https://doi.org/10.1109/TVT.2016.2593486 (2017).

Xing, H., Liu, L., Xu, J. & Nallanathan, A. Joint task assignment and wireless resource allocation for cooperative mobile-edge computing. In 2018 IEEE International Conference on Communications (ICC), 1–6 (2018). https://doi.org/10.1109/ICC.2018.8422777.

Ateya, A. A., Muthanna, A. & Koucheryavy, A. 5G framework based on multi-level edge computing with D2D enabled communication. In 2018 20th International Conference on Advanced Communication Technology (ICACT), 507–512 (2018). https://doi.org/10.23919/ICACT.2018.8323812.

Xing, H., Liu, L., Xu, J. & Nallanathan, A. Joint task assignment and resource allocation for D2D-enabled mobile-edge computing. IEEE Trans. Commun. 67, 4193–4207. https://doi.org/10.1109/TCOMM.2019.2903088 (2019).

Li, Y. et al. Jointly optimizing helpers selection and resource allocation in D2D mobile edge computing. In 2020 IEEE Wireless Communications and Networking Conference (WCNC), 1–6 (2020). https://doi.org/10.1109/WCNC45663.2020.9120538.

Zhou, J., Zhang, X., Wang, W. & Zhang, Y. Energy-efficient collaborative task offloading in D2D-assisted mobile edge computing networks. In 2019 IEEE Wireless Communications and Networking Conference (WCNC), 1–6 (2019). https://doi.org/10.1109/WCNC.2019.8885523.

Kai, Y., Wang, J. & Zhu, H. Energy minimization for D2D-assisted mobile edge computing networks. In ICC 2019–2019 IEEE International Conference on Communications (ICC), 1–6 (2019). https://doi.org/10.1109/ICC.2019.8761816.

Diao, X., Zheng, J., Wu, Y. & Cai, Y. Joint computing resource, power, and channel allocations for D2D-assisted and NOMA-based mobile edge computing. IEEE Access 7, 9243–9257. https://doi.org/10.1109/ACCESS.2018.2890559 (2019).

Tang, J. et al. Energy minimization in D2D-assisted cache-enabled internet of things: A deep reinforcement learning approach. IEEE Trans. Ind. Inf. 16, 5412–5423. https://doi.org/10.1109/TII.2019.2954127 (2020).

You, C. & Huang, K. Exploiting non-causal CPU-state information for energy-efficient mobile cooperative computing. IEEE Trans. Wirel. Commun. 17, 4104–4117. https://doi.org/10.1109/TWC.2018.2820077 (2018).

Chen, X., Pu, L., Gao, L., Wu, W. & Wu, D. Exploiting massive D2D collaboration for energy-efficient mobile edge computing. IEEE Wirel. Commun. 24, 64–71. https://doi.org/10.1109/MWC.2017.1600321 (2017).

Pu, L., Chen, X., Xu, J. & Fu, X. D2D fogging: An energy-efficient and incentive-aware task offloading framework via network-assisted D2D collaboration. IEEE J. Sel. Areas Commun. 34, 3887–3901. https://doi.org/10.1109/JSAC.2016.2624118 (2016).

Jia, Q., Xie, R., Tang, Q., Li, X. & Liu, Y. Energy-efficient computation offloading in 5G cellular networks with edge computing and D2D communications. IET Commun. 13, 1122–1130 (2019).

Ranji, R., Mansoor, A. M. & Sani, A. A. EEDOS: An energy-efficient and delay-aware offloading scheme based on device to device collaboration in mobile edge computing. Telecommun. Syst. Model. Anal. Des. Manag. 73, 171–182 (2020).

Li, G., Chen, M., Wei, X., Qi, T. & Zhuang, W. Computation offloading with reinforcement learning in D2D–MEC network. In 2020 International Wireless Communications and Mobile Computing (IWCMC), 69–74 (2020). https://doi.org/10.1109/IWCMC48107.2020.9148285.

Tran, T. X. & Pompili, D. Joint task offloading and resource allocation for multi-server mobile-edge computing networks. IEEE Trans. Veh. Technol. 68, 856–868. https://doi.org/10.1109/TVT.2018.2881191 (2019).

Chai, R., Lin, J., Chen, M. & Chen, Q. Task execution cost minimization-based joint computation offloading and resource allocation for cellular D2D MEC systems. IEEE Syst. J. 13, 4110–4121. https://doi.org/10.1109/JSYST.2019.2921115 (2019).

Jia, Q. et al. Energy-efficient computation offloading in 5G cellular networks with edge computing and D2D communications. IET Commun. 13, 1122–1130. https://doi.org/10.1049/iet-com.2018.5934 (2019).

Lin, J., Chai, R., Chen, M. & Chen, Q. Task execution cost minimization-based joint computation offloading and resource allocation for cellular D2D systems. In IEEE 29th Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), 1–5 (2018). https://doi.org/10.1109/PIMRC.2018.8580887.

Gao, Y., Tang, W., Wu, M., Yang, P. & Dan, L. Dynamic social-aware computation offloading for low-latency communications in IoT. IEEE Internet Things J. 6, 7864–7877. https://doi.org/10.1109/JIOT.2019.2909299 (2019).

He, Y., Ren, J., Yu, G. & Cai, Y. D2D communications meet mobile edge computing for enhanced computation capacity in cellular networks. IEEE Trans. Wirel. Commun. 18, 1750–1763. https://doi.org/10.1109/TWC.2019.2896999 (2019).

Lan, Y., Wang, X., Wang, D., Liu, Z. & Zhang, Y. Task caching, offloading, and resource allocation in D2D-aided fog computing networks. IEEE Access 7, 104876–104891. https://doi.org/10.1109/ACCESS.2019.2929075 (2019).

Sun, W., Zhang, H., Wang, L., Guo, S. & Yuan, D. Profit maximization task offloading mechanism with D2D collaboration in MEC networks. In 2019 11th International Conference on Wireless Communications and Signal Processing (WCSP), 1–6 (2019). https://doi.org/10.1109/WCSP.2019.8928117.

Cao, X., Wang, F., Xu, J., Zhang, R. & Cui, S. Joint computation and communication cooperation for mobile edge computing. In 2018 16th International Symposium on Modeling and Optimization in Mobile, Ad Hoc, and Wireless Networks (WiOpt), 1–6 (2018). https://doi.org/10.23919/WIOPT.2018.8362865.

Zhang, X., Liu, Y., Liu, J., Argyriou, A. & Han, Y. D2D-assisted federated learning in mobile edge computing networks. In 2021 IEEE Wireless Communications and Networking Conference (WCNC), 1–7 (2021). https://doi.org/10.1109/WCNC49053.2021.9417459.

Yi, C., Huang, S. & Cai, J. Joint resource allocation for device-to-device communication assisted fog computing. IEEE Trans. Mob. Comput. 20, 1076–1091. https://doi.org/10.1109/TMC.2019.2952354 (2021).

Du, J. et al. Enabling low-latency applications in LTE-A based mixed fog/cloud computing systems. IEEE Trans. Veh. Technol. 68, 1757–1771. https://doi.org/10.1109/TVT.2018.2882991 (2019).

Li, Y. et al. Energy-efficient subcarrier assignment and power allocation in OFDMA systems with max–min fairness guarantees. IEEE Trans. Commun. 63, 3183–3195. https://doi.org/10.1109/TCOMM.2015.2450724 (2015).

Chen, X. Decentralized computation offloading game for mobile cloud computing. IEEE Trans. Parallel Distrib. Syst. 26, 974–983. https://doi.org/10.1109/TPDS.2014.2316834 (2015).

Chen, X., Jiao, L., Li, W. & Fu, X. Efficient multi-user computation offloading for mobile-edge cloud computing. IEEE/ACM Trans. Netw. 24, 2795–2808. https://doi.org/10.1109/TNET.2015.2487344 (2016).

Wang, C., Liang, C., Yu, F. R., Chen, Q. & Tang, L. Computation offloading and resource allocation in wireless cellular networks with mobile edge computing. IEEE Trans. Wirel. Commun. 16, 4924–4938. https://doi.org/10.1109/TWC.2017.2703901 (2017).

Du, J., Zhao, L., Feng, J. & Chu, X. Computation offloading and resource allocation in mixed fog/cloud computing systems with min-max fairness guarantee. IEEE Trans. Commun. 66, 1594–1608. https://doi.org/10.1109/TCOMM.2017.2787700 (2018).

Acknowledgements

This work was supported in part by the National Science and Technology Major Project of China under Grant No. 2018ZX03001026-002, and in part by the Science Technology Project of Chongqing Education Commission under Grant No. KJQN201800618. We are prepared to file patent applications on the ideas.

Author information

Contributions

X.L., J.Z., and Y.L. designed and realized the whole system, made the code, conducted experiments, and wrote this paper. M.Z., R.W., and Y.H. helped with parts of experiments and gave comments during experiments. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, X., Zheng, J., Zhang, M. et al. A novel D2D–MEC method for enhanced computation capability in cellular networks. Sci Rep 11, 16918 (2021). https://doi.org/10.1038/s41598-021-96284-w

DOI: https://doi.org/10.1038/s41598-021-96284-w
