Abstract

In this study, we aimed to develop and validate a machine learning-based mortality prediction model for hospitalized patients with heat-related illness. A total of 2393 hospitalized patients were extracted from a multicenter heat-related illness registry in Japan and divided into a training set for development (n = 1516, data from 2014 and 2017–2019) and a test set for validation (n = 877, data from 2020). Twenty-four variables, including patient characteristics, vital signs, and laboratory test data at hospital arrival, were used as predictor features for machine learning. The outcome was death during hospital stay. In validation, the developed machine learning models (logistic regression, support vector machine, random forest, and XGBoost) predicted the outcome well, with significantly higher values of the area under the precision-recall curve (AUPR) of 0.415 [95% confidence interval (CI) 0.336–0.494], 0.395 [CI 0.318–0.472], 0.426 [CI 0.346–0.506], and 0.528 [CI 0.442–0.614], respectively, than that of the conventional acute physiology and chronic health evaluation (APACHE)-II score, 0.287 [CI 0.222–0.351], which served as the reference standard. The area under the receiver operating characteristic curve (AUROC) exceeded 0.92 in all models, although the differences from APACHE-II were not statistically significant. This is the first demonstration of the potential of machine learning-based mortality prediction models for heat-related illnesses.

Introduction

Rising global temperatures owing to excessive carbon dioxide emissions and the heat island effect caused by urbanization have been endangering human health worldwide1,2. The growth of the aging population, which is vulnerable to the health effects of heat, has also increased the occurrence of heat-related diseases3. Although a large number of studies over the decades have revealed the epidemiology, risk factors, and preventative management of such diseases, reducing the occurrence of heat-related illness is challenging because it requires solutions by society as a whole, such as installation of air conditioners for the elderly or low-income citizens. In fact, numerous instances of hospitalization and eventual death of patients suffering from heat-related illness continue to be recorded. During 2014–2018, deaths due to heat-related illnesses in the United States were reported to average 702 per year4. Against this background, medical practitioners are continuously challenged to deliver high-quality care for heat-related illness.

The most important treatment for heat-related illness is rapid and effective cooling. There are various cooling strategies such as cold-water immersion, administration of cold fluids, application of ice packs or wet gauze sheets, fanning, and cooling suits2,5. In addition, more invasive methods are selected for critical patients, such as an intravascular cooling device or extracorporeal circulatory support system6,7. Occasionally, artificial ventilation, hemodialysis, or liver transplantation might be necessary for organ support8,9. However, it is difficult for clinicians to optimize therapeutic intervention according to individual patient conditions. The availability of clinical prognostic tools could be helpful in deciding these treatment options. Furthermore, the prognostic model could be used retrospectively to assess the quality of care for heat-related illness.

In recent years, prognostic tools using machine learning have been widely developed and applied in medicine, as they often outperform conventional prediction methods10. However, a machine learning-based mortality prediction model for heat-related illness has not been developed previously. In this study, we aimed to develop and validate machine learning-based mortality prediction models for use in hospitalized patients with heat-related illnesses.

Methods

Data sources and ethical approval

The data for this retrospective cohort study were obtained from the “Heatstroke study” database in Japan. A heatstroke study was undertaken by the Japanese Association for Acute Medicine (JAAM) to clarify the epidemiology of heat-related illness in Japan. The data were manually recorded by a staff member or medical doctor at each participating hospital using specific record sheets. From 2014, patients with heat-related illness who were admitted to the hospitals were included in the heatstroke study, except for the period 2015–2016, in which the heatstroke study was not conducted. Diagnosis of heat-related illness was based on the judgement of the clinician in each participating hospital. Thus, data from the heatstroke studies in 2014 and 2017–2020, from 109 to 142 participating hospitals, were extracted for our study. The heatstroke study has been described elsewhere11,12.

The heatstroke study protocol was approved by the ethics committee of Showa University Hospital. Patient information was de-identified before being provided for use in this study. The requirement for patient informed consent was waived, as this was an observational study using anonymous data. The current study was conducted in accordance with the Declaration of Helsinki.

Study population

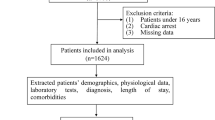

Overall, 2855 patients with heat-related illness were identified from the heatstroke study data in 2014 and 2017–2020. Of these, 285 patients were excluded because they were not hospitalized or no information was available regarding their hospitalization. Further, cases with cardiac arrest at hospital arrival and incomplete data regarding survival outcome were excluded. In total, the data of 2393 patients hospitalized with heat-related illness met the inclusion criteria. Finally, the subjects were classified into two groups: training set (n = 1516, data from 2014, 2017–2019) and test set (n = 877, data from 2020) (Fig. 1).

Outcome and variable selection

In this study, the outcome was death during hospital stay. From the heatstroke study database, 24 variables that were missing in fewer than 25% of all samples were extracted as predictor features for the outcome. These variables were age, sex, location at the onset (indoor or outdoor), vital signs (systolic blood pressure, diastolic blood pressure, heart rate, respiratory rate, and body temperature), total Glasgow coma scale (GCS), peripheral oxygen saturation (SpO2), and laboratory data [pH, base excess, hematocrit, platelet count, blood urea nitrogen (BUN), creatinine, total bilirubin, aspartate aminotransferase (AST), alanine aminotransferase (ALT), creatine kinase, sodium, potassium, glucose, and prothrombin time/international normalized ratio (PT-INR)] at patients’ hospital arrival. Missing values were imputed with the median of each variable.
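The variable screening and imputation described above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not the authors' code; the function name `select_and_impute`, the toy records, and the `lactate` column (which is not one of the study's 24 variables) are hypothetical.

```python
from statistics import median

def select_and_impute(rows, max_missing=0.25):
    """Keep columns whose missing fraction is below max_missing,
    then fill the remaining gaps with the column median."""
    n = len(rows)
    fill_values = {}
    for col in rows[0].keys():
        observed = [r[col] for r in rows if r[col] is not None]
        missing_fraction = 1 - len(observed) / n
        if missing_fraction < max_missing:
            fill_values[col] = median(observed)
    return [
        {col: (r[col] if r[col] is not None else fill)
         for col, fill in fill_values.items()}
        for r in rows
    ]

# Hypothetical toy records; None marks a missing value.
patients = [
    {"age": 70, "heart_rate": 110, "lactate": None},
    {"age": 82, "heart_rate": None, "lactate": None},
    {"age": 45, "heart_rate": 96, "lactate": 3.1},
    {"age": 66, "heart_rate": 130, "lactate": None},
    {"age": 59, "heart_rate": 88, "lactate": None},
]
cleaned = select_and_impute(patients)
print(cleaned[1])  # heart_rate filled with the median of the observed values
```

Here `heart_rate` is missing in 20% of rows and is kept, whereas `lactate` is missing in 80% and is dropped. The text does not state whether the medians were estimated on the training set alone; doing so would be the usual way to keep test data out of the fitting step.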

Development of machine learning models

Four kinds of machine learning models (logistic regression, support vector machine, random forest, and XGBoost) were trained on the selected variables to predict mortality in the training set. First, feature scaling was performed to normalize the range of the independent variables. During training, tenfold stratified cross-validation was used to avoid overfitting of the model. In short, the training data were partitioned into 10 stratified subsets. Subsequently, 9 subsets (90% of training data) were used to train the model, and the remaining subset (10% of training data) was used for validation. These training and validation processes were repeated 10 times, with each subset used once as the validation dataset, yielding 10 estimates of predictive accuracy, which were averaged to obtain a single estimate. Because our data were imbalanced for the outcome, we used cost-sensitive learning. In addition, hyperparameters (values that control the machine learning process) were optimized for each model (Supplementary Table 1).
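As an illustration of the partitioning and weighting steps, the following standard-library sketch builds stratified folds and computes class weights. It is not the authors' implementation: the helper names are hypothetical, the labels are synthetic, and the weighting rule (n_samples / (n_classes × class count), the "balanced" heuristic) is an assumption, since the text only says that cost-sensitive learning was used.

```python
import random
from collections import defaultdict

def stratified_folds(labels, k=10, seed=0):
    """Assign sample indices to k folds so that each fold keeps
    roughly the same class ratio as the full dataset."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)  # deal indices out round-robin
    return folds

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = defaultdict(int)
    for y in labels:
        counts[y] += 1
    n, n_classes = len(labels), len(counts)
    return {y: n / (n_classes * c) for y, c in counts.items()}

# Synthetic labels: 1000 patients, 50 deaths (5%).
labels = [1] * 50 + [0] * 950
folds = stratified_folds(labels, k=10)
for held_out in folds:
    train_idx = [i for fold in folds if fold is not held_out for i in fold]
    # A model would be fitted on train_idx and scored on held_out here;
    # the 10 scores are then averaged into a single estimate.
weights = balanced_class_weights(labels)
print(weights)  # the rare class receives the larger weight
```

With these synthetic labels every fold contains 5 deaths and 95 survivors, so each validation subset sees the rare outcome.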

To assess the feature importances for the model development, Gini importances were computed as the normalized total reduction of the criterion brought by the feature for random forest and XGBoost models. For the logistic regression model, absolute values of standardized beta coefficients were described.

Validation of developed machine learning models

The performance of the developed machine learning models was validated using the test data; this process was independent of the algorithm training process. We compared these models with the conventional acute physiology and chronic health evaluation (APACHE)-II score as the reference standard for prediction of the outcome. The area under the receiver operating characteristic curve (AUROC), the area under the precision-recall curve (AUPR), sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), and accuracy were measured as the performance indicators. To observe the correlation between predicted and observed probabilities of mortality during hospital stay, we created calibration plots in the test set.
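To make the two ranking metrics concrete, the sketch below computes AUROC as the probability that a randomly chosen death receives a higher score than a randomly chosen survivor, and AUPR as average precision (the mean of the precision values at each correctly ranked death). It is a dependency-free illustration with hypothetical function names and toy data; average precision is only one of several ways to summarize a precision-recall curve, the text does not say which estimator was used, and this version assumes there are no tied scores.

```python
def auroc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

def average_precision(y_true, scores):
    """Mean of precision evaluated at the rank of each positive sample."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    return total / hits

# Toy example: 3 deaths among 8 patients, with predicted risks.
y_true = [0, 0, 1, 0, 1, 0, 0, 1]
scores = [0.1, 0.2, 0.9, 0.3, 0.4, 0.05, 0.6, 0.8]
print(round(auroc(y_true, scores), 3))              # 0.933
print(round(average_precision(y_true, scores), 3))  # 0.917
```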

Libraries for data analyses and machine learning

To present the patient data, the mean with standard deviation (SD) or median with interquartile range (IQR) was used for the numerical variables. For categorical variables, counts with percentages were reported. For comparisons between two samples, the t-test and Mann–Whitney U test were used for means and medians, respectively. Frequencies were compared using the chi-square test. The two-sided significance level for all tests was set at 5% (p < 0.05). Patient characteristics were analyzed using SciPy (version 1.5.2) with Python (version 3.7.4 in Anaconda 2019.10). The machine learning models were developed using scikit-learn (version 0.21.3) with Python.

Results

Characteristics of study subjects

The baseline characteristics of the included patients are shown in Table 1. The mean age of all included patients was 65 ± 22 years, and 70.4% of the patients were men. Outdoor heat-related illness accounted for 54.9% of all patients. The mortality rate during hospital stay was only 5.2%, indicating that the analyzed dataset was highly imbalanced for the outcome. Between the training and test datasets, there were significant differences in age, location at the onset, body temperature, SpO2, pH, BUN, creatinine, total bilirubin, creatine kinase, and sodium. However, most of these differences appear to be clinically irrelevant.

Assessment of variable importances for the model development

Absolute values of standardized beta coefficients for logistic regression, as well as feature importances for the random forest and XGBoost models, were assessed, and the results are shown in Fig. 2. In all machine learning models assessed, total GCS score at hospital arrival was the most important variable for the prediction of mortality during hospital stay. Both AST and ALT levels in blood ranked among the top five features in all models. The other key variables were SpO2 and base excess for logistic regression, PT-INR and systolic blood pressure for the random forest, and SpO2 and systolic blood pressure for XGBoost.

Figure 2. (A) Absolute values of standardized beta coefficients for the logistic regression model. (B) Feature importances of variables for the random forest model. (C) Feature importances of variables for the XGBoost model. An asterisk marks a feature that is positively correlated with survival. Location (outdoor/indoor)* and gender* indicate that outdoor location and male sex, respectively, are positively correlated with survival. GCS Glasgow coma scale, AST aspartate aminotransferase, ALT alanine aminotransferase, SpO2 oxygen saturation, BUN blood urea nitrogen, PT-INR prothrombin time/international normalized ratio.

Comparison of the accuracy of the models and the reference standard in cross-validation of the training dataset

The cross-validated training accuracy of the machine learning models was 0.852 [SD 0.048] for logistic regression, 0.841 [SD 0.030] for the support vector machine, 0.918 [SD 0.023] for the random forest, and 0.946 [SD 0.008] for XGBoost. In contrast, the training accuracy of the APACHE-II score was lower, at 0.773 [SD 0.067].

Performance analysis of the developed models and the reference standard in the test dataset

Figure 3 presents the receiver operating characteristic (ROC) curves and the precision-recall (PR) curves with AUROC and AUPR values of the developed machine learning models and APACHE-II score. Validation of our developed machine learning models showed reliable performance in predicting mortality of heat-related illness, with AUROC values of 0.922 [95% confidence interval (CI) 0.868–0.975] for logistic regression, 0.920 [CI 0.866–0.974] for the support vector machine, 0.925 [CI 0.872–0.977] for the random forest, and 0.926 [CI 0.874–0.978] for XGBoost. However, none of these values differed significantly from that of the APACHE-II score, which had an AUROC of 0.867 [CI 0.801–0.934].

Figure 3. Comparison of ROC curves, PR curves, AUROC, and AUPR among the developed machine-learning models and APACHE-II score for mortality prediction. ROC Receiver operating characteristic, PR precision-recall, AUROC area under the receiver operating characteristic curve, AUPR area under the precision-recall curve, APACHE acute physiology and chronic health evaluation, CI confidence interval.

In contrast, there were significantly increased values of AUPR in all developed machine learning models (0.415 [CI 0.336–0.494] for logistic regression, 0.395 [CI 0.318–0.472] for support vector machine, 0.426 [CI 0.346–0.506] for random forest, and 0.528 [CI 0.442–0.614] for XGBoost) compared to APACHE-II score (0.287 [CI 0.222–0.351]).

The confusion matrix and evaluation measures such as sensitivity, specificity, PPV, NPV, and accuracy of the prediction models are shown in Table 2. The logistic regression model demonstrated the highest sensitivity, 0.851 [CI 0.749–0.953], and the highest NPV, 0.990 [CI 0.983–0.997], among the evaluated classifiers. On the other hand, specificity, PPV, and accuracy were highest in the XGBoost model, at 0.999 [CI 0.996–1.001], 0.875 [CI 0.646–1.104], and 0.953 [CI 0.936–0.965], respectively.
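The measures above are all proportions derived from the four cells of a confusion matrix. The sketch below computes them together with a normal-approximation (Wald) interval. The counts are hypothetical and are not taken from Table 2, and the choice of the Wald interval is an assumption: the text does not name the interval method, although upper bounds above 1, such as those reported for specificity and PPV, are what this approximation produces when a proportion is close to 1 or the denominator is small.

```python
from math import sqrt

def proportion_with_wald_ci(successes, n, z=1.96):
    """Point estimate and normal-approximation 95% interval for a proportion."""
    p = successes / n
    half_width = z * sqrt(p * (1 - p) / n)
    return p, p - half_width, p + half_width

def classification_measures(tp, fp, tn, fn):
    """Sensitivity, specificity, PPV, NPV, and accuracy from confusion-matrix cells."""
    return {
        "sensitivity": proportion_with_wald_ci(tp, tp + fn),
        "specificity": proportion_with_wald_ci(tn, tn + fp),
        "ppv": proportion_with_wald_ci(tp, tp + fp),
        "npv": proportion_with_wald_ci(tn, tn + fn),
        "accuracy": proportion_with_wald_ci(tp + tn, tp + fp + tn + fn),
    }

# Hypothetical counts for a conservative classifier on 877 patients.
measures = classification_measures(tp=7, fp=1, tn=829, fn=40)
for name, (estimate, lower, upper) in measures.items():
    print(f"{name:12s} {estimate:.3f} [{lower:.3f}, {upper:.3f}]")
```

With only 8 predicted deaths, the PPV interval in this toy example extends past 1. An interval that respects the 0 to 1 range, such as the Wilson score interval, would avoid that.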

Probability calibration curves

Probability calibration curves of the prediction models in validation are shown in Supplementary Fig. 1. None of the models was well calibrated, indicating uncertainty in the predicted probabilities. XGBoost underestimated the outcome probabilities, whereas APACHE-II, logistic regression, support vector machine, and random forest overestimated them.
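A calibration curve of this kind can be built by grouping patients into bins of predicted probability and comparing the mean prediction in each bin with the observed death rate. The following standard-library sketch shows the idea; the function name, the choice of five equal-width bins, and the toy data are assumptions for illustration and do not reproduce Supplementary Fig. 1.

```python
def calibration_curve(y_true, probs, n_bins=5):
    """Return (mean predicted probability, observed event rate, count)
    for each non-empty equal-width probability bin."""
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(y_true, probs):
        index = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[index].append((p, y))
    curve = []
    for members in bins:
        if members:
            mean_pred = sum(p for p, _ in members) / len(members)
            observed = sum(y for _, y in members) / len(members)
            curve.append((mean_pred, observed, len(members)))
    return curve

# Toy predictions that overestimate risk in every bin.
y_true = [0, 0, 0, 0, 0, 0, 0, 1, 0, 1]
probs = [0.1, 0.15, 0.3, 0.35, 0.5, 0.55, 0.7, 0.75, 0.9, 0.95]
curve = calibration_curve(y_true, probs)
for mean_pred, observed, count in curve:
    print(f"predicted {mean_pred:.3f}  observed {observed:.3f}  n={count}")
```

Points below the diagonal (observed rate lower than predicted) correspond to overestimation, and points above it to underestimation.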

Discussion

To our knowledge, the current study is the first to develop and evaluate a machine learning-based prediction model for the prognosis of heat-related illness. In summary, we selected 24 clinical predictors for mortality of heat-related illness from the Japanese heatstroke database. After training on these variables using four machine learning algorithms (logistic regression, support vector machine, random forest, and XGBoost), validation of the developed models demonstrated reliable performance with reasonably high AUROC. In terms of AUPR, all models performed significantly better than APACHE-II as the reference standard.

Heat-related illness can be severe, such as heatstroke, and is induced by an excessively hot and humid environment2. Therefore, it is certain that avoiding such an environment would be the best strategy to reduce the poor outcome of this disease. In fact, there has been growing evidence that the environment predisposes people to heat-related illness; in addition, the risk factors for heatstroke have been identified13,14. On the other hand, there are few studies on the prognosis of patients who actually develop heatstroke15,16. Owing to the lack of a specific mortality prediction tool for heat-related illness, general scoring systems for critically ill patients, such as sequential organ failure assessment (SOFA) and APACHE-II scores, have been commonly used to estimate the severity of this disease12,17. The development of specific and reliable prognostic models for heat-related illnesses is anticipated so that clinicians can make an informed decision for optimized treatment. In this context, the current study shows its importance and strength.

Recent evidence has shown the effectiveness of machine learning methods in the development of predictive models in medicine18,19. Similarly, we successfully developed a good prognostic model for heat-related illness by using machine learning algorithms in this study. In terms of AUROC, our developed models could not show statistical superiority over the conventional APACHE-II score, even though the models demonstrated AUROC values above 0.92 compared with 0.87 for the APACHE-II score. However, the current study included only 877 patients in the validation cohort. The limited sample size and lack of statistical power might be the reason why we were not able to find statistical differences in AUROC. More importantly, our data were imbalanced for the outcome, with a mortality of only 5.4% in validation. For evaluating performance on an imbalanced dataset, AUPR is more appropriate than AUROC because it is better suited to the detection of rare events. Thus, the significantly higher AUPR values of the developed models compared with APACHE-II support the effectiveness of machine learning for detecting rare cases of mortality in heat-related illness. However, calibration plots showed underestimated or overestimated predictions of outcome probability, indicating that these models should be used only for classification.
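One way to see why AUPR separates the models more clearly than AUROC is to compare each reported value with the value expected from an uninformative classifier: about 0.5 for AUROC regardless of prevalence, and roughly the outcome prevalence for AUPR. The short calculation below uses the test-set values reported in the text; the "ratio to chance" framing and the variable names are illustrative, not part of the study's analysis.

```python
# Test-set values copied from the text.
prevalence = 0.054  # in-hospital mortality in the validation cohort
aupr = {
    "APACHE-II": 0.287,
    "logistic regression": 0.415,
    "support vector machine": 0.395,
    "random forest": 0.426,
    "XGBoost": 0.528,
}
auroc = {
    "APACHE-II": 0.867,
    "logistic regression": 0.922,
    "support vector machine": 0.920,
    "random forest": 0.925,
    "XGBoost": 0.926,
}

# Chance level: about `prevalence` for AUPR, 0.5 for AUROC.
aupr_ratio = {name: value / prevalence for name, value in aupr.items()}
auroc_ratio = {name: value / 0.5 for name, value in auroc.items()}

for name in aupr:
    print(f"{name:24s} AUPR {aupr_ratio[name]:4.1f}x chance, "
          f"AUROC {auroc_ratio[name]:.2f}x chance")
```

On this scale XGBoost sits at roughly 9.8 times chance for AUPR against about 5.3 times for APACHE-II, whereas the AUROC ratios of all five predictors lie between about 1.7 and 1.9.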

Our prediction model has the potential to be used in clinical practice. Given that we used only laboratory data and clinical findings at the time of hospital presentation as the predictor variables, the prediction might be used by clinicians as a reference tool for early treatment selection, including internal cooling and cardiopulmonary bypass for severe heat-related illness, which require huge medical costs. Furthermore, the model might be used retrospectively to assess the quality of care for the treatment of heat-related illness. However, we should not use the machine learning model as a definite tool to decide treatment withdrawal.

Notably, body temperature at hospital arrival did not rank among the top five mortality predictors in any of the machine learning models. In contrast, indicators of multiple organ dysfunction were widely chosen, namely, Glasgow coma scale for dysfunction of the central nervous system, systolic blood pressure for circulatory dysfunction, SpO2 for respiratory dysfunction, AST and ALT for hepatic failure, PT-INR for coagulopathy, and base excess for metabolic disorders. Inclusion of multiple organ injury markers as parameters is similar to general severity scoring models such as SOFA and APACHE II scores20,21; however, variables specifically selected for mortality prediction of heat-related illness might yield better predictive performance than the conventional methods. For example, the liver is a common site of tissue injury in heatstroke, and liver injury is associated with poor outcome22,23. In our machine learning models, AST and ALT levels at hospital arrival were regarded as important predictors, whereas SOFA uses total bilirubin as its hepatic injury indicator and APACHE-II includes no information on hepatic injury; this difference may affect the predictive ability. In addition, renal dysfunction is relatively common in heatstroke17,24. Creatinine level is included in the SOFA and APACHE II scores; however, it was not among the important predictors of mortality in our machine learning models, suggesting that renal dysfunction as a complication of heat-related illness might not be a strong factor for poor outcome.

Although several variables such as preexisting medical conditions and coagulation abnormalities were recognized as risk factors for the occurrence or poor outcome of heatstroke25,26,27, they were not used in the development of our machine learning models because of the huge amount of missing data in the dataset. The performance of the model might improve if these variables are available for machine learning in the future structured dataset.

Our study has several limitations. First, our prediction model cannot be generalized for application on a global scale. Heat acclimatization can occur in response to heat stress; thus, vulnerability and severity of a heat-related illness can differ depending on the climate in different countries. As we used the Japanese registry database for both training and validation of the model, external validation using databases from other countries should be performed in the future. Second, we imputed missing values with the median of each variable. This method is widely used and is a simple way to impute missing data; however, it could introduce bias. Third, the evaluation measures for our prediction model had wide confidence intervals, indicating the uncertainty of the model. This can be attributed to the inadequate total sample size and the rare occurrence of the outcome (death during hospital stay). However, it is difficult to accumulate data for heat-related illness owing to its seasonal and geographic characteristics. In fact, to our knowledge, there are no larger databases with clinical parameters, including laboratory testing data for heat-related illness, than our heatstroke study registry. Further accumulation of data for such illness is crucial to increase the certainty of the machine learning prediction model. Fourth, we did not focus on the neurologic sequelae of surviving heatstroke patients, which are an important complication of the disease28. Although we could not obtain information on neurological prognosis, survival without sequelae should be the primary goal of treatment in real-world practice and thus might be a more meaningful outcome for prediction. Fifth, the APACHE-II score is not specific to heat-related illness; therefore, our study does not guarantee the superiority of machine learning models over simple statistical models that were specifically developed for heat-related illness. Finally, it could be argued that machine learning models need a computing device to calculate the results and that a separate model just for patients with heat-related illnesses would not be realistic. As our selected features were mostly vital signs, laboratory data, and patient background, we suggest using the machine learning model as a plugin to the electronic health record, after further improvement in performance and prospective studies for external validation.

Conclusions

In conclusion, a novel mortality prediction model for patients hospitalized with heat-related illness was developed using a machine learning technique. Although further improvement in the performance quality with increased sample size or inclusion of important variables, as well as prospective validation in a clinical setting are needed, our study demonstrated for the first time the potential of machine learning-based prediction models for heat-related illness.

References

1. Watts, N. et al. The 2019 report of The Lancet Countdown on health and climate change: Ensuring that the health of a child born today is not defined by a changing climate. Lancet 394, 1836–1878 (2019).

2. Epstein, Y. & Yanovich, R. Heatstroke. N. Engl. J. Med. 380, 2449–2459 (2019).

3. Choudhary, E. & Vaidyanathan, A. Heat stress illness hospitalizations—Environmental public health tracking program, 20 States, 2001–2010. MMWR Surveill. Summ. 63, 1–10 (2014).

4. Vaidyanathan, A., Malilay, J., Schramm, P. & Saha, S. Heat-related deaths—United States, 2004–2018. MMWR Morb. Mortal Wkly. Rep. 69, 729–734 (2020).

5. Bouchama, A., Dehbi, M. & Chaves-Carballo, E. Cooling and hemodynamic management in heatstroke: Practical recommendations. Crit. Care 11, R54 (2007).

6. Yokobori, S. et al. Feasibility and safety of intravascular temperature management for severe heat stroke: A prospective multicenter pilot study. Crit. Care Med. 46, e670–e676 (2018).

7. Allen, S. B. & Cross, K. P. Out of the frying pan, into the fire: A case of heat shock and its fatal complications. Pediatr. Emerg. Care 30, 904–910 (2014).

8. Ichai, P. et al. Liver transplantation in patients with liver failure related to exertional heatstroke. J. Hepatol. 70, 431–439 (2019).

9. Bi, X., Deising, A. & Frenette, C. Acute liver failure from exertional heatstroke can result in excellent long-term survival with liver transplantation. Hepatology 71, 1122–1123 (2020).

10. Schwalbe, N. & Wahl, B. Artificial intelligence and the future of global health. Lancet 395, 1579–1586 (2020).

11. Kondo, Y. et al. Comparison between the Bouchama and Japanese Association for acute medicine heatstroke criteria with regard to the diagnosis and prediction of mortality of heatstroke patients: A multicenter observational study. Int. J. Environ. Res. Public Health 16, 3433 (2019).

12. Shimazaki, J. et al. Clinical characteristics, prognostic factors, and outcomes of heat-related illness (Heatstroke Study 2017–2018). Acute Med. Surg. 7, e516 (2020).

13. Wang, Y. et al. A random forest model to predict heatstroke occurrence for heatwave in China. Sci. Total Environ. 650, 3048–3053 (2019).

14. Bobb, J. F., Obermeyer, Z., Wang, Y. & Dominici, F. Cause-specific risk of hospital admission related to extreme heat in older adults. JAMA 312, 2659–2667 (2014).

15. Yang, M. M. et al. Establishment and effectiveness evaluation of a scoring system for exertional heat stroke by retrospective analysis. Mil. Med. Res. 7, 40 (2020).

16. Hayashida, K. et al. A novel early risk assessment tool for detecting clinical outcomes in patients with heat-related illness (J-ERATO score): Development and validation in independent cohorts in Japan. PLoS ONE 13, e0197032 (2018).

17. Pease, S. et al. Early organ dysfunction course, cooling time and outcome in classic heatstroke. Intensive Care Med. 35, 1454–1458 (2009).

18. Meyer, A. et al. Machine learning for real-time prediction of complications in critical care: A retrospective study. Lancet Respir. Med. 6, 905–914 (2018).

19. Raghunath, S. et al. Prediction of mortality from 12-lead electrocardiogram voltage data using a deep neural network. Nat. Med. 26, 886–891 (2020).

20. Vincent, J. L. et al. The SOFA (Sepsis-related Organ Failure Assessment) score to describe organ dysfunction/failure. On behalf of the Working Group on Sepsis-Related Problems of the European Society of Intensive Care Medicine. Intensive Care Med. 22, 707–710 (1996).

21. Knaus, W. A., Draper, E. A., Wagner, D. P. & Zimmerman, J. E. APACHE II: A severity of disease classification system. Crit. Care Med. 13, 818–829 (1985).

22. Hassanein, T., Perper, J. A., Tepperman, L., Starzl, T. E. & Van Thiel, D. H. Liver failure occurring as a component of exertional heatstroke. Gastroenterology 100, 1442–1447 (1991).

23. Hadad, E. et al. Liver transplantation in exertional heat stroke: A medical dilemma. Intensive Care Med. 30, 1474–1478 (2004).

24. Yu, F. C. et al. Energy metabolism in exertional heat stroke with acute renal failure. Nephrol. Dial. Transplant. 12, 2087–2092 (1997).

25. Bouchama, A. et al. Prognostic factors in heat wave related deaths: A meta-analysis. Arch. Intern. Med. 167, 2170–2176 (2007).

26. el-Kassimi, F. A., Al-Mashhadani, S., Abdullah, A. K. & Akhtar, J. Adult respiratory distress syndrome and disseminated intravascular coagulation complicating heat stroke. Chest 90, 571–574 (1986).

27. Proctor, E. A. et al. Coagulopathy signature precedes and predicts severity of end-organ heat stroke pathology in a mouse model. J. Thromb. Haemost. 18, 1900–1910 (2020).

28. Yang, M. et al. Outcome and risk factors associated with extent of central nervous system injury due to exertional heat stroke. Medicine (Baltimore) 96, e8417 (2017).

Acknowledgements

We thank all investigators who coordinated or participated in the heatstroke study. We would like to thank Editage (www.editage.jp) for English language editing.

Funding

This research was supported by JSPS KAKENHI, Grant Number 19H03764.

Author information

Contributions

Concept and design: Y.H., Y.K., K.O., and H.T. Acquisition of data (heatstroke study): Y.K., T.H., S.Y., J.K., J.S., K.H., T.M., M.Y., S.T., J.Y., Y.O., Y.O., H.K., T.K., M.F., H.Y., and A.Y. Analysis and interpretation of data: Y.H., and Y.K. Drafting of the manuscript: Y.H. Revision and Discussion of the manuscript: all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hirano, Y., Kondo, Y., Hifumi, T. et al. Machine learning-based mortality prediction model for heat-related illness. Sci Rep 11, 9501 (2021). https://doi.org/10.1038/s41598-021-88581-1
