Abstract

GPS navigation is commonplace in everyday life. While it has the capacity to make our lives easier, it is often used to automate functions that were once performed exclusively by our brains. Staying mentally active is key to healthy brain aging. Is GPS navigation, then, causing more harm than good? Here we demonstrate that traditional turn-by-turn navigation promotes passive spatial navigation and, ultimately, poor spatial learning of the surrounding environment. We propose an alternative form of GPS navigation based on sensory augmentation that has the potential to fundamentally alter the way we navigate with GPS. Using a 3D spatial audio system similar to an auditory compass, we direct users towards their destination without explicit directions. Rather than being led passively through verbal directions, users are encouraged to take an active role in their own spatial navigation, leading to more accurate cognitive maps of space. Technology will always play a significant role in everyday life; however, it is important that we actively engage with the world around us. By simply rethinking the way we interact with GPS navigation, we can engage users in their own spatial navigation, leading to a better spatial understanding of the explored environment.


Introduction

Navigation is a fundamental human behavior that is critical for everyday life. Exploring new environments, understanding the current location, and remembering how to get back are essential for successful navigation1. In fact, knowing where to go and where we came from are such fundamental processes that they were once critical to our own ancestral survival2. But with the arrival of GPS and navigation apps, is this a thing of the past? And, more importantly, if these apps now perform a function that was once carried out by our brain and was critical to our survival, are they doing more harm than good?

Navigation requires the coordination of numerous perceptual and sensory processes that are further supported by several brain regions, including the hippocampus, retrosplenial cortex, striatum and entorhinal cortex, to create successful representations of space3,4,5,6,7,8. While spatial cognition is not solely attributed to one brain region, the hippocampus is well known to play a critical role in spatial learning, memory and navigation, and has long been thought to contain the “cognitive map”8,9. In fact, the creation of cognitive maps, development of spatial expertise, and exploration of novel environments can have a positive impact on hippocampal structure and function10,11,12,13,14,15. As we age, however, our hippocampus and spatial abilities decline6,16,17,18,19,20,21, and this decline even predicts the conversion from mild cognitive impairment to Alzheimer’s disease22,23,24. Therefore, creating mental maps is not only important for navigating daily life but is critically important as we age.

At the most fundamental level, cognitive maps are formed through exploration8,9,25,26,27,28. While animal work has described the formation of these spatial neural networks in detail, studies have demonstrated that humans are also quite capable of learning about space in other ways, such as by using (paper) maps29,30. Most significantly, the literature describes two main types of navigation strategies: egocentric and allocentric. Egocentric navigation is considered striatal and describes how cues within the environment relate to the individual (a set of directions), whereas allocentric navigation is hippocampal and describes how cues within the environment relate to one another (a map). While both navigation strategies have their advantages, they are thought to operate both independently and in parallel31. A true cognitive map requires the allocentric perspective of space that the hippocampus provides, so that the spatial information is flexible and can be used from any location9. Furthermore, the successful creation of cognitive maps requires active engagement in the navigation process, as spatial decision making is the primary component of active learning for the acquisition of map-based knowledge3,32.

Is it possible to find a balance between our internal navigation system and modern technology? Certainly, new technology has allowed us to go further and reach unexplored places in ways we could only have imagined before GPS was available on our mobile phones. One can get lost in the streets of Tokyo and still find one's way back without fear. Hence, we have reached a modern paradox of navigation: navigation apps allow us to explore more places while at the same time making us worse explorers. Here, we argue that current GPS apps (based on turn-by-turn navigation) promote a passive form of navigation that does not support learning or the formation of cognitive maps33. Turn-by-turn navigation is a passive navigation system based on an egocentric perspective. The user does not make any decisions about the navigation process; they simply input a desired location and follow the directions in the app.

While traditional turn-by-turn navigation is effective at leading us to a desired location, this passive form of navigation does not support spatial learning and can thus have a detrimental impact on human navigation skills and spatial cognition34,35,36,37. Several studies have observed a link between GPS navigation and poor spatial awareness. A recent study found that greater lifetime GPS experience, based on GPS habits and reliance on GPS in various navigation situations, correlated with worse spatial performance34. In addition, stronger GPS habits were tied to lower cognitive mapping abilities and less reliance on spatial strategies associated with the hippocampus. In a study directly comparing the wayfinding abilities of GPS users, map users, and direct-experience users (walking without GPS or a map), GPS users traveled longer distances, took longer to complete the navigation task, and had a worse topological understanding of the routes than map users or direct-experience users37. From an anthropological perspective, Inuit hunters in northern Canada are known for their extraordinary ability to navigate the Arctic tundra, despite the harsh, desolate terrain and the lack of reliable landmarks across large spaces. While traditional navigation tools, such as maps and compasses, supported the extraordinary perceptual skills that were developed and passed down from one generation to the next, GPS technologies replaced the need for such skills altogether. Younger generations of Inuit hunters have traded these deep-rooted wayfinding skills for modern GPS technology. While easy to use, GPS technology could not help young Inuit hunters adapt to the extreme weather or hazards of the Arctic tundra. Overdependence on GPS technology led to an increase in serious accidents and deaths, as these young hunters were essentially navigating “blindfolded”2.
Even in modern cities, in-car GPS navigation “inhibits the process of experiencing the physical world”33. Rather than aiding our ability to navigate, GPS navigation is designed to relieve us of our involvement and disengage us from our surrounding environment. After all, GPS navigation is a form of destination-oriented transport in which humans become a “passenger of their own body”, as opposed to wayfaring, which embeds us into the environment as the driver, in order to find the destination38.

Here we suggest an alternative form of GPS navigation that is made possible by 3D spatialized audio. We position a virtual auditory beacon, essentially a continuous sound virtually positioned in 3D, so that it always appears to emanate from the direction of the destination it is associated with. By means of this virtual auditory beacon, users of GPS apps can regain their active role and navigate the space as they used to: without delegating decisions (see the Materials and methods section for details of the beacon implementation). Users walk in the direction from which the beacon sounds, constrained only by the available paths leading in that direction. In this scenario, the mobile app acts as a compass that directs the user towards the destination in an allocentric manner. The goal of our research is to demonstrate that by simply rethinking the way we interact with technology and introducing sensory augmentation into the equation (in the form of 3D audio), we can have a real impact on modern navigation without compromising our own internal navigation system or our ability to create mental maps.

Materials and methods

Participants

In total, we recruited 53 individuals (19 female, 34 male; mean age = 33.8, SD = 8.21) to participate in the study. Using a Microsoft email blast, we recruited both Microsoft employees (experts) and interns (naïve). All participants gave informed consent and were compensated with a $50 Amazon gift card upon completion of the study. The study was approved by the Microsoft Research IRB committee.

Experimental groups

Participants were classified as either Experts or Naïve based on their experience with the Microsoft campus. The Naïve group (n = 24; 9 female, 15 male; mean age = 29.9, SD = 7) consisted of summer interns at Microsoft who had just begun their internship. All participants in the Naïve group had been on the Microsoft campus for less than 3 weeks at the time of the study. The Expert group (n = 29; 10 female, 19 male; mean age = 37.79, SD = 8.2) consisted of Microsoft employees who had been on the Microsoft campus for at least 6 months at the time of the study (up to 20+ years). Within each group, individuals were randomly assigned to one of two conditions: Turn-by-turn or Beacon. The Turn-by-turn group performed the task using turn-by-turn directions, and the Beacon group performed the task using the Microsoft Soundscape app (https://www.microsoft.com/en-us/research/product/soundscape/).

Prior to the study, participants answered two questions, each rated on a seven-point Likert scale, about their familiarity with the Microsoft campus and their navigation abilities. These self-reports confirmed that the Naïve group was less familiar with the campus than the Expert group (Naïve: mean = 2.1, SD = 0.92; Experts: mean = 4.7, SD = 1.44; p = 0.04) but not significantly less confident in their own navigation abilities (Naïve: mean = 3.9, SD = 1.45; Experts: mean = 4.3, SD = 1.78; p = 0.4).

Beacon implementation

GPS coordinates were used to define each desired destination and its virtual audio beacon in the mobile app Soundscape. The virtual audio beacon consists of continuously playing audio that is localized around the user such that the source always appears to come from the direction of the desired destination. The audio stream is rendered using a head-related transfer function39 and is localized 1 m away from the user in the direction of the desired location, relative to the direction in which the phone is pointing (Fig. 1).
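The geometry behind this placement can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not Soundscape's actual code: the function names are hypothetical, the destination bearing uses the standard initial great-circle bearing formula, the heading is assumed to be reported in degrees clockwise from north (as a phone compass does), and the HRTF rendering step itself is omitted. The sketch computes where, relative to the direction the phone points, a virtual source 1 m away would sit.

```python
import math


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, in degrees clockwise from north, in [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(x, y)) % 360.0


def relative_angle_deg(heading_deg, bearing_deg):
    """Signed angle from the phone's heading to the destination bearing, wrapped to [-180, 180).

    Negative values mean the destination is to the user's left, positive to the right.
    """
    return (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0


def beacon_source_position(heading_deg, bearing_deg, radius_m=1.0):
    """Listener-relative (right, forward) offset in meters of a virtual source placed
    radius_m away in the direction of the destination. An HRTF renderer (not shown)
    would spatialize the beacon audio at this position."""
    theta = math.radians(relative_angle_deg(heading_deg, bearing_deg))
    return radius_m * math.sin(theta), radius_m * math.cos(theta)
```

For example, a user facing east (heading 90°) whose destination lies due north (bearing 0°) gets `relative_angle_deg(90.0, 0.0) == -90.0`, so the source is placed about 1 m to the user's left. Recomputing this every time the heading changes is what keeps the sound anchored to the destination as the user turns.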

Implementation of the Beacon. The participant hears spatial audio that appears to come from the beacon source. To reinforce directionality, we implemented two types of sound, depending on whether the participant is pointing directly at the beacon (A) or is heading in another direction (B). This small design decision helps participants find the beacon faster.

The localization of the audio is updated continuously based on the user’s orientation (as determined by the gyroscope and magnetometer of the phone running Soundscape, Fig. 1). The beacon’s audio varies with the angle between the user’s orientation and the bearing from the user’s location to the beacon’s destination. If the angle is greater than 22.5°, a base audio track consisting of a clip-clop sound is played. If the angle is less than 22.5°, an additional track consisting of a high-pitched “ting” sound, synchronized with the clip-clop, is layered on top of the same base audio. This additional layer provides a secondary encoding of the beacon’s direction. Importantly, the audio was designed to have a minimal footprint in the participant’s auditory space so that it would not interfere with perception of the real environment, leaving the sights and sounds of the surrounding world unaffected40.
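The two-layer rule above can be written as a small selection function. This is a minimal sketch, assuming the heading and the destination bearing are both in degrees clockwise from north; the layer labels are hypothetical strings, and because the text does not say what happens at exactly 22.5°, the strict `<` comparison here is an assumption.

```python
ON_AXIS_THRESHOLD_DEG = 22.5  # threshold stated in the text


def beacon_layers(heading_deg, bearing_deg, threshold_deg=ON_AXIS_THRESHOLD_DEG):
    """Return the audio layers to play for the current heading (hypothetical labels)."""
    # Absolute angular offset between where the phone points and the destination, in [0, 180].
    offset = abs((bearing_deg - heading_deg + 180.0) % 360.0 - 180.0)
    layers = ["clip-clop"]  # base track, always playing
    if offset < threshold_deg:
        layers.append("ting")  # on-axis layer, synchronized with the base track
    return layers
```

Wrapping the offset before taking its absolute value matters near north: a heading of 350° and a bearing of 5° are only 15° apart, so `beacon_layers(350.0, 5.0)` still adds the "ting" layer.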

We believe that auditory beacons fall into the category of navigation aids: they are not designed to directly substitute for human wayfinding abilities, but to augment perceptual navigation1,41. Different beacon configurations have been explored for this purpose41,42,43. One of the earliest implementations, AudioGPS42 (2002), received positive informal feedback on audio-guided navigation by vector direction. Later, gpsTunes and Ontrack worked in a similar way but used the music the user was listening to41,43. Audio Bubbles tested whether this type of tool could be used for tourist wayfinding44. However, none of these previous efforts carried out a formal evaluation of mental map creation with such tools.

A clip of the audio is provided in the Supplementary material, but we encourage readers to download the Microsoft Soundscape app for a full experience and demo of the beacon implementation.

Soundscape scavenger hunt

Soundscape is a spatial awareness app, originally designed to assist visually impaired individuals in learning about the environment around them. The app is available in 7 countries and has guided over 500,000 trips by visually impaired users. Soundscape uses a combination of features both to guide users to a desired location (audio beacon) and to inform users about nearby places of interest or intersections (callouts). For the purposes of this experiment, we disabled the callouts feature of the app to ensure that participants navigated using only the audio beacons (continuous 3D spatialized audio that always emanates from the direction of the desired location; see Supplementary Fig. S3).

All participants took part in a scavenger hunt in which they had to locate five different points of interest (POIs) of varying difficulty on the Microsoft campus (Fig. 2). The GPS coordinates of each POI were used to set its location within the Soundscape app. We expected some users to have seen some of the POIs before (e.g., the volleyball court), whereas others were not immediately identifiable (e.g., the two benches and the hidden door of a particular building).

Overhead view of the scavenger hunt on the Microsoft campus. POI locations are denoted by the numbers and colored circles: Microsoft Sign (1; purple), Two Benches (2; red), Basketball Court (3; blue), Volleyball Court (4; orange), and Hidden Door (5; green). The locations of the pointing tasks are denoted with stars: Location A (A; yellow) and Location B (B; pink).

The Microsoft campus is very large, spanning over 2 km², but the selected area was relatively central, and the scavenger hunt was confined to a 0.12 km² region.

The order of the POIs was chosen to purposely avoid a direct path from one to the next, so that participants had to make active decisions about where to go. Pictures of each POI were displayed in the Soundscape app, one at a time, and participants were instructed to find the pictured location in the real world (see Supplementary Fig. S3A and B for further details on how the scavenger hunt was displayed in the app). While both the Turn-by-turn and Beacon groups used Soundscape to view the POIs, the Turn-by-turn group navigated to the POIs through verbal turn-by-turn directions given by the experimenter (Supplementary Fig. S4), and the Beacon group navigated to the POIs using the audio beacons in Soundscape. The Turn-by-turn group received verbal directions (taken directly from a navigation app) from the experimenter because the app's turn-by-turn directions did not have the spatial resolution to determine the precise location of each turn juncture on the Microsoft campus. Neither the Turn-by-turn group nor the Beacon group was allowed to view their location on a visual map; this feature was removed because the goal of the study was to test the effects of auditory navigation. In addition, navigating with a visual map can be more time consuming than navigating with an auditory system44. The Beacon group was not allowed to take shortcuts through buildings. Once a POI was found (as determined by Soundscape based on GPS location), a picture of the next POI was presented, the audio beacon was updated to point towards the new POI’s location, and participants were instructed to continue to the next POI. The presentation order of the POIs was the same for all participants and was specifically arranged so that every POI had multiple access routes. Soundscape recorded timestamps, GPS locations, and heading directions at a sampling rate of approximately 1 Hz throughout the entire study.
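A ~1 Hz log of timestamps, GPS positions, and headings is enough to estimate how far each participant walked. The text does not state how walked distance was computed, so the sketch below is an assumption: it sums great-circle (haversine) distances between consecutive fixes on a spherical Earth of mean radius 6,371 km. The `(timestamp_s, lat, lon, heading_deg)` tuple layout and the function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical-Earth assumption)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def path_length_m(samples):
    """Total walked distance for a time-ordered list of (timestamp_s, lat, lon, heading_deg) samples."""
    points = [(lat, lon) for _, lat, lon, _ in samples]
    # Sum the distance between each pair of consecutive GPS fixes.
    return sum(haversine_m(*p, *q) for p, q in zip(points, points[1:]))
```

Summing raw fixes also accumulates GPS jitter, so in practice some smoothing or outlier filtering of the track may be needed before the total is meaningful.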

Experimental design

Two tasks were used to assess spatial knowledge. The first was a pointing task, which is commonly used to assess spatial abilities in real-world studies45,46,47. In the pointing task (Fig. 3), a picture of a POI was presented on the phone, and participants had to point with the phone from their current location towards the POI. To confirm the direction, participants pressed a button on the screen, and the Soundscape app recorded the heading. Because the mobile phone compass has inherent error, we computed pointing error from relative differences between heading directions rather than by comparing each recorded heading directly with the true direction. At Location A (Fig. 2), the first POI (Microsoft Sign) is visible and was therefore used as the reference point for all other POIs. The error for each POI is thus the difference between (i) the angle between the headings recorded for that POI and for the reference POI (Microsoft Sign), and (ii) the angle between the true directions of that POI and of the reference POI. At Location B, however, no reference POI was visible. There, the pointing error for each POI was calculated as the average error obtained when each of the other POIs was used in turn as the reference. For example, the pointing error for the Microsoft Sign is the average of the errors for the pairs Microsoft Sign and Two Benches, Microsoft Sign and Basketball Court, Microsoft Sign and Volleyball Court, and Microsoft Sign and Hidden Door. True directions were determined from the GPS coordinates of the current location and of each POI.
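The relative-error scheme above can be sketched as follows. This is a hypothetical illustration, not the authors' analysis code: `pointed` and `actual` are assumed to be dictionaries mapping a POI name to a heading in degrees (recorded by the phone, and computed from GPS, respectively). Wrapping angles to ±180° and excluding the reference POI from its own score at Location A are assumptions, since the text does not specify either detail.

```python
def wrap180(angle_deg):
    """Wrap an angle to [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def relative_pointing_error(pointed_poi, pointed_ref, actual_poi, actual_ref):
    """Error in degrees of the pointed angular separation between a POI and a reference POI.

    A constant compass offset shifts both pointed headings equally, so it cancels here.
    """
    pointed_sep = wrap180(pointed_poi - pointed_ref)
    actual_sep = wrap180(actual_poi - actual_ref)
    return abs(wrap180(pointed_sep - actual_sep))


def location_a_errors(pointed, actual, reference):
    """Location A: every POI is scored against one visible reference POI."""
    return {
        poi: relative_pointing_error(pointed[poi], pointed[reference], actual[poi], actual[reference])
        for poi in pointed
        if poi != reference
    }


def location_b_errors(pointed, actual):
    """Location B: each POI's error is averaged over all other POIs used as references."""
    errors = {}
    for poi in pointed:
        others = [other for other in pointed if other != poi]
        errors[poi] = sum(
            relative_pointing_error(pointed[poi], pointed[o], actual[poi], actual[o]) for o in others
        ) / len(others)
    return errors
```

The design choice this illustrates: if every phone reading is off by the same 37°, all relative errors are still zero, which is why comparing differences between headings is robust to a miscalibrated compass.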

Performance of all groups and conditions on the pre-test and post-test pointing tasks. (A) Pointing error of all groups and conditions from Location A at pre-test. (B) Pointing error of all groups and conditions from Location A at post-test. (C) Improvement of all groups and conditions when pointing from Location A (pre-test minus post-test pointing error). (D) Pointing error of all groups and conditions from novel Location B at post-test. All data are presented as mean ± SEM, *p < 0.05, †p < 0.1

In the second task, participants were given a simple map of the Microsoft campus (with no distinguishing features or labels) and asked to mark the locations of all five POIs (Supplementary Fig. S6). Using a simple outline map provided an objective way to assess participants' spatial knowledge22,37. Participant maps were scanned and overlaid with a schematic containing the actual POI locations, as determined by GPS coordinates. ImageJ (imagej.nih.gov) was used to measure the distance (in pixels, later converted into meters) between the estimated and real locations of each POI, yielding an error metric.
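The map-error metric reduces to a Euclidean distance in pixels scaled to meters. This is a minimal sketch with hypothetical function names; two details are assumptions because the text does not give them: the pixel-to-meter scale is derived from a known reference distance on the scanned map, and a participant's score is the mean error over all POIs.

```python
import math


def meters_per_pixel(reference_m, reference_px):
    """Scale factor from a known real-world distance and its length on the scanned map."""
    return reference_m / reference_px


def poi_error_m(estimated_px, actual_px, scale_m_per_px):
    """Straight-line distance between estimated and actual POI positions, in meters."""
    dx = estimated_px[0] - actual_px[0]
    dy = estimated_px[1] - actual_px[1]
    return math.hypot(dx, dy) * scale_m_per_px


def participant_map_error_m(estimates, actual, scale_m_per_px):
    """Mean error over all POIs for one participant (averaging is an assumption)."""
    errors = [poi_error_m(estimates[poi], actual[poi], scale_m_per_px) for poi in actual]
    return sum(errors) / len(errors)
```

For instance, a mark placed 3 px right of and 4 px below the true location on a map scanned at 2 m/px lies 10 m from it.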

Prior to the scavenger hunt, participants performed a pre-test pointing task at the start location (Location A) to determine their familiarity with each of the POIs. After completing the scavenger hunt, participants performed two post-test pointing tasks, one from the same location as the pre-test (Location A in Fig. 2) and one from an entirely novel location (Location B in Fig. 2). Lastly, all participants were asked to mark the POI locations on the blank map.

All participants were given the same instructions and were naïve to the details of the study. They were told that they were participating in a scavenger hunt in which they had to find five different locations spread throughout the campus.

The Beacon group was taught how to interpret the 3D sound using verbal instructions and the very first landmark (Fig. 2: Landmark 1). Standing at Location A, participants were taught what a beacon sounded like and how to interpret its direction. The Turn-by-turn group was likewise instructed on how to follow turn-by-turn directions using the very first landmark (Supplementary Fig. S4). At every landmark, participants were told that this was their Nth location and then immediately set off for the next landmark.

Statistical analyses

All statistics were performed using Prism 8 (www.graphpad.com); the specific statistical tests used are reported alongside each result.

Results

We prepared a scavenger hunt with five different points of interest (POIs) that participants (n = 53) had to visit using either auditory beacons or turn-by-turn directions (Fig. 2). Participants were either experts in the terrain (with at least 6 months of exposure) or completely naïve (unfamiliar with the area, having been there for at most 3 weeks).

There were no differences between groups or conditions in familiarity with the POIs prior to the scavenger hunt

Before starting the scavenger hunt, we determined baseline familiarity with the POIs. All participants stood at the same spot and performed a pointing task in which they pointed to all five POI locations, most of which were not visible from that spot. Using pointing error as a measure of accuracy, we performed a 2 × 2 ANOVA across group (Expert and Naïve) and condition (Turn-by-turn and Beacon). We found no significant main effect of group (Fig. 3A; F(1,49) = 0.55, p = 0.46) or condition (F(1,49) = 0.003, p = 0.96), and no interaction (F(1,49) = 0.09, p = 0.76), showing that at pre-test there were no differences between groups in their ability to point to each of the POI locations (all groups: Mean = 48.55°, SD = 19.53). The means and standard deviations for all groups were as follows: Experts Turn-by-turn (Mean = 48.61°, SD = 24.01), Experts Beacon (Mean = 46.62°, SD = 13.93), Naïve Turn-by-turn (Mean = 51.08°, SD = 21.29), and Naïve Beacon (Mean = 52.45°, SD = 18.09). See Supplementary material for individual POI performance (Supplementary Fig. S2A and B).
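Every comparison in this section is a 2 × 2 between-subjects ANOVA reported as F(1,49): 53 participants minus 4 cell means leaves 49 error degrees of freedom, and each effect has 1. The sketch below computes the three F statistics from per-cell summary statistics (mean, SD, n). It is a hypothetical illustration, not the Prism 8 computation: it uses Type III sums of squares, which for a 2 × 2 design equal the squared cell-mean contrast divided by the sum of 1/n over the four cells, and it omits p-values. Per-cell sample sizes are not reported here, so any cell sizes fed into it are assumptions.

```python
CELL_ORDER = ("expert_tbt", "expert_beacon", "naive_tbt", "naive_beacon")

# +/-1 contrast coefficients over cells in CELL_ORDER; each effect has 1 df.
CONTRASTS = {
    "group": (1, 1, -1, -1),        # Expert vs Naïve
    "condition": (1, -1, 1, -1),    # Turn-by-turn vs Beacon
    "interaction": (1, -1, -1, 1),  # group x condition
}


def anova_2x2(cells):
    """cells maps each CELL_ORDER key to (mean, sd, n).

    Returns {effect: (F, df_effect, df_error)}.
    """
    means = [cells[k][0] for k in CELL_ORDER]
    sds = [cells[k][1] for k in CELL_ORDER]
    ns = [cells[k][2] for k in CELL_ORDER]
    df_error = sum(ns) - len(ns)
    # Pooled within-cell variance (mean square error).
    ms_error = sum((n - 1) * sd ** 2 for sd, n in zip(sds, ns)) / df_error
    sum_inv_n = sum(1.0 / n for n in ns)
    results = {}
    for effect, coeffs in CONTRASTS.items():
        contrast = sum(c * m for c, m in zip(coeffs, means))
        ss_effect = contrast ** 2 / sum_inv_n  # Type III SS for a +/-1 contrast
        results[effect] = (ss_effect / ms_error, 1, df_error)
    return results
```

Because the contrasts use unweighted cell means, unequal cell sizes (as here, with 24 Naïve and 29 Expert participants split across two conditions) do not let the larger cells dominate the main-effect estimates.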

Upon completion of the scavenger hunt, participants in the Beacon condition were more accurate at pointing from the initial start location (A)

To determine how well participants learned the locations of the visited POIs, we performed a pointing task after the scavenger hunt from the same starting position as the pre-test pointing task (Location A). A 2 × 2 ANOVA across group (Expert and Naïve) and condition (Turn-by-turn and Beacon) revealed a significant interaction (Fig. 3B; F(1,49) = 6.2, p < 0.05) and a significant main effect of condition (F(1,49) = 4.41, p < 0.05), but no main effect of group (F(1,49) = 1.53, p = 0.22). The means and standard deviations for all groups were as follows: Experts Turn-by-turn (Mean = 20.62°, SD = 16.51), Experts Beacon (Mean = 22.29°, SD = 11.48), Naïve Turn-by-turn (Mean = 36.66°, SD = 18.10), and Naïve Beacon (Mean = 16.90°, SD = 13.98). A post-hoc analysis (Tukey’s correction for multiple comparisons) revealed that the Naïve Beacon group was significantly more accurate than the Naïve Turn-by-turn group. These data indicate that navigating with the beacon was associated with better knowledge of the POI locations, an effect driven mainly by the Naïve Beacon users. See Supplementary material for individual POI performance (Supplementary Fig. S1).

The Naïve Beacon group showed a numerically larger, but non-significant, improvement from pre-test to post-test

To assess the amount of learning that occurred from pre-test to post-test, we compared performance on the pointing task from Location A at pre-test and post-test, using the difference in accuracy (pre-test pointing error minus post-test pointing error) as a metric of learning. A 2 × 2 ANOVA across group (Expert and Naïve) and condition (Turn-by-turn and Beacon) revealed a marginally significant interaction (Fig. 3C; F(1,49) = 3.96, p = 0.05) but no main effect of group (F(1,49) = 0.03, p = 0.85) or condition (F(1,49) = 1.96, p = 0.16). The means and standard deviations for all groups were as follows: Experts Turn-by-turn (Mean = 27.99°, SD = 29.38), Experts Beacon (Mean = 24.32°, SD = 17.72), Naïve Turn-by-turn (Mean = 14.42°, SD = 19.83), and Naïve Beacon (Mean = 35.55°, SD = 18.36). While the Naïve Beacon group improved numerically more than the Naïve Turn-by-turn group, this difference was not statistically significant. See Supplementary material for individual POI performance (Supplementary Fig. S1).

Auditory navigation improved pointing accuracy from a novel location (location B)

In addition to pointing from the pre-test location, participants also pointed to all POIs from an entirely novel location (Fig. 2, Location B) to assess how well they had acquired an allocentric representation of space. A 2 × 2 ANOVA across group (Expert and Naïve) and condition (Turn-by-turn and Beacon) revealed significant main effects of both group (F(1,49) = 4.05, p < 0.05) and condition (Fig. 3D; F(1,49) = 5.01, p < 0.05) but no interaction (F(1,49) = 1.96, p = 0.17). The means and standard deviations for all groups were as follows: Experts Turn-by-turn (Mean = 35.17°, SD = 20.94), Experts Beacon (Mean = 31.25°, SD = 12.75), Naïve Turn-by-turn (Mean = 51.18°, SD = 15.37), and Naïve Beacon (Mean = 34.12°, SD = 16.05). See Supplementary material for individual POI performance (Supplementary Fig. S2A and B).

The Beacon groups were better at identifying the POI locations on a drawn map

After the scavenger hunt, participants were asked to identify the POI locations on a simple, unlabeled map outline of the area (Fig. 4A). A 2 × 2 ANOVA across group (Expert and Naïve) and condition (Turn-by-turn and Beacon) revealed a significant main effect of condition (Fig. 4B; F(1,49) = 7.3, p < 0.01) but no significant main effect of group (F(1,49) = 2.7, p < 0.1) or interaction (F(1,49) = 3.0, p = 0.08). The means and standard deviations of the map error for all groups were as follows: Experts Turn-by-turn (Mean = 107.79 m, SD = 75.95 m), Experts Beacon (Mean = 88.32 m, SD = 53.09 m), Naïve Turn-by-turn (Mean = 177.55 m, SD = 97.0 m), and Naïve Beacon (Mean = 86.11 m, SD = 64.51 m). See Supplementary material for individual POI performance (Supplementary Fig. S2C and D, and Supplementary Figs. S5 and S7).

POI accuracy, path distance and routes taken during the scavenger hunt. (A) Estimated locations of all POIs in the Turn-by-turn (gray, left) and Beacon (blue, right) conditions. Each transparent colored circle represents one estimate by a participant and is linked to the corresponding POI flag by a transparent line, which represents the amount of error (per-POI and per-group views are available in the Supplementary material). (B) The error between estimated and actual POI locations for all groups and conditions. (C) Heat map of the routes walked in the Turn-by-turn (gray, left) and Beacon (blue, right) conditions. The maps were created with the Folium package for Python on top of OpenStreetMap. (D) The distance (decimal°) walked during the scavenger hunt by all groups and conditions, and the overlay walked by the participants in the different conditions. All data are presented as mean ± SEM, *p < 0.05. (E) The overlap (%) of the paths taken during the scavenger hunt by all groups and conditions.

There was no difference in distance traveled between groups or conditions

While all participants in the Turn-by-turn groups followed the same route to visit all five POIs, participants in the Beacon groups were free to choose any route (Fig. 4C and 4E). We performed a simple overlap analysis to determine how similar the individual routes were to one another. A 2 × 2 ANOVA across group (Expert and Naïve) and condition (Turn-by-turn and Beacon) revealed a significant main effect of condition (F(1,49) = 92.68, p < 0.0001) but no main effect of group (F(1,49) = 1.27, p = 0.26) or interaction (F(1,49) = 3.70, p = 0.06). The means and standard deviations for all groups were as follows: Experts Turn-by-turn (Mean = 0.71, SD = 0.06), Experts Beacon (Mean = 0.40, SD = 0.13), Naïve Turn-by-turn (Mean = 0.69, SD = 0.05), and Naïve Beacon (Mean = 0.48, SD = 0.06). As expected, there was more overlap between participants in the Turn-by-turn conditions, as they all followed the same directions. Despite the differences in route, there was no difference in the total distance traversed by the groups (all groups: Mean = 2267.51 m, SD = 245.93 m). A 2 × 2 ANOVA across group (Expert and Naïve) and condition (Turn-by-turn and Beacon) revealed no significant main effect of group (Fig. 4D; F(1,49) = 1.45, p = 0.23) or condition (F(1,49) = 0.52, p = 0.47), and no interaction (F(1,49) = 0.05, p = 0.81). The means and standard deviations for all groups were as follows: Experts Turn-by-turn (Mean = 2260.51 m, SD = 137.26 m), Experts Beacon (Mean = 2195.92 m, SD = 125.07 m), Naïve Turn-by-turn (Mean = 2326.60 m, SD = 188.85 m), and Naïve Beacon (Mean = 2293.08 m, SD = 428.45 m).
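The overlap measure is not defined in the text. As one hypothetical way to obtain a simple overlap score between 0 and 1, the sketch below rasterizes each participant's GPS fixes into 5 m grid cells (an assumed resolution), computes the Jaccard index (shared cells divided by all visited cells) for every pair of participants, and averages each participant's scores over everyone else passed in. Routes from one condition would be passed at a time. The local equirectangular projection is an approximation that is adequate over an area of about 0.12 km²; all names here are hypothetical.

```python
import math
from itertools import combinations

EARTH_RADIUS_M = 6_371_000.0
CELL_M = 5.0  # hypothetical grid resolution in meters


def to_cells(points, origin, cell_m=CELL_M):
    """Map (lat, lon) fixes to grid-cell indices using a local equirectangular projection."""
    lat0, lon0 = origin
    cos_lat0 = math.cos(math.radians(lat0))
    cells = set()
    for lat, lon in points:
        x = EARTH_RADIUS_M * math.radians(lon - lon0) * cos_lat0  # meters east of origin
        y = EARTH_RADIUS_M * math.radians(lat - lat0)             # meters north of origin
        cells.add((math.floor(x / cell_m), math.floor(y / cell_m)))
    return cells


def jaccard(a, b):
    """Shared cells divided by all cells visited by either route."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def mean_overlap(routes, origin, cell_m=CELL_M):
    """routes maps participant id -> list of (lat, lon). Returns each participant's
    mean Jaccard overlap with every other participant in `routes`."""
    cells = {pid: to_cells(pts, origin, cell_m) for pid, pts in routes.items()}
    scores = {pid: [] for pid in cells}
    for p, q in combinations(cells, 2):
        j = jaccard(cells[p], cells[q])
        scores[p].append(j)
        scores[q].append(j)
    return {pid: sum(s) / len(s) for pid, s in scores.items() if s}
```

With this kind of measure, participants who all follow the same verbal directions would share most cells and score close to 1, whereas freely chosen Beacon routes would share fewer cells, which matches the direction of the reported difference without reproducing its exact values.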

Discussion

The goal of the present study was to propose an alternative to turn-by-turn navigation that is effective while still engaging users with their environment to promote spatial learning. We show that navigating with auditory beacons can lead to greater exploratory behavior and to the formation of more accurate mental maps of the surrounding environment than turn-by-turn navigation. This demonstrates that it is possible to use GPS technology while promoting learning through active navigation. In this study, groups of both familiar (Experts) and unfamiliar (Naïve) participants were asked to find the locations of different POIs in a scavenger hunt across the Microsoft campus. Within each group, half of the participants were guided to the POIs using traditional turn-by-turn directions (Turn-by-turn), and the other half used the auditory navigation app Soundscape (Beacon). While the Expert group showed little difference between conditions, the Naïve Beacon group formed a more accurate spatial representation of the Microsoft campus, as reflected by their better post-test ability to point to each of the POI locations, from both a familiar and a novel location, and to identify the POI locations on a simple overhead map of the area. It was not surprising that the Experts, who were familiar with the campus, did not show a significant difference in learning, as we expected them to have already formed a reasonable mental map.

There are several limitations of the current study that we feel are important to acknowledge. First, while we were able to test four different groups, we would ideally have had larger samples in each group. A power analysis assuming a medium effect size (d = 0.5) indicated that we would need 80 participants in total. Time was a limiting factor, especially for our naïve summer interns, who were extremely busy throughout the day and whose internships were short. Participants were often run during an extended lunch break or before or after work hours. Second, the Turn-by-turn groups were given verbal directions by an experimenter rather than by a navigation app. One issue we encountered when testing turn-by-turn navigation apps was that they could not accurately inform a walking participant of when to turn. The area used for our study required participants to walk along paved and dirt paths within the Microsoft campus, and the turn-by-turn navigation apps failed to reliably direct participants to each of the POIs. Lastly, this experiment was designed for walking navigation, and the area chosen, which contains complex intersections and buildings, was selected for its absence of roads for safety reasons. Many turn-by-turn systems might be better suited for road or car navigation, where the goal is less about learning the environment and more about reaching the desired location in a timely manner (additional details on how many turns and directions were given to participants can be found in the Supplementary material). We believe, however, that our results would also translate to driving, since turn-by-turn navigation has been shown to reduce the ability to recall and sketch maps in drivers48 as well as pedestrians37.

Nevertheless, these limitations do not detract from the main points and results of this paper: (i) turn-by-turn directions are a passive form of navigation that does not support spatial learning of the surrounding environment34,35,36,37; (ii) by taking a more active role in their own navigation, participants were able to construct a better understanding of the spatial layout of a real-world environment. By using a spatial auditory navigation app to promote active navigation, we can in principle engage the hippocampus7,27,49,50,51,52, resulting in a stronger and more accurate mental map of the explored space. It is worth noting that although the Soundscape auditory navigation app was originally designed for people with visual impairments, all participants in this study had normal or corrected-to-normal vision and were therefore likely still navigating primarily by vision. The auditory navigation app served as an aid to support spatial navigation and the formation of a cognitive map53.

It is clear that GPS technology is an integral part of our everyday lives, and it is unlikely that society will give up such an important technological advance. While the benefits of this technology are undeniable, it is important to consider how navigation is implemented, since GPS is a positioning system that can support many different forms of navigation. Rather than replacing the decision-making process, our work proposes augmenting navigation with a rich sensory experience that engages perception and cognitive processing. After all, just as technology cannot replace the benefits of physical exercise, it cannot replace the benefits of staying mentally active.

While our ability to navigate might seem like an accessory to modern life, these navigational skills were once critical to our ancestral survival. It is therefore not surprising that some of the most powerful mnemonic techniques, such as the memory palace, involve placing mental images of items or facts at locations in an imaginary place, such as a building or a town. Memories become easier to recall when they are associated with physical locations, even if only in the imagination54,55. Nor is it surprising that one of the first signs of cognitive decline associated with Alzheimer’s disease is a deterioration of spatial awareness23. Importantly, recent studies have found that exercising spatial cognition might protect against age-related memory decline19. Therefore, even while using technology, it is important that we continue to engage with the world around us, as this engagement is important for brain health.

In this paper, we are not suggesting that we need to reject technology outright; we simply need to rethink the way we interact with it, designing and creating technology in ways that engage the brain rather than ignore it. Indeed, there might be hybrid solutions, or situations such as in-car navigation, where traditional turn-by-turn GPS will still outperform auditory beacons. Nevertheless, auditory beacon navigation is a step toward a new era in which automation does not simply replace our evolved functions and remove us from our environment, but instead feeds into our sensory inputs to promote active engagement with the world around us. In that regard, GPS navigation based on auditory beacons is a form of sensory augmentation that helps us create a stronger connection with our environment.

References

Carr, N. The Glass Cage: Automation and Us (WW Norton & Company, 2014).

Aporta, C. & Higgs, E. Satellite culture: global positioning systems, Inuit wayfinding, and the need for a new account of technology. Curr. Anthropol. 46, 729–753 (2005).

Chrastil, E. R., Sherrill, K. R., Hasselmo, M. E. & Stern, C. E. There and back again: hippocampus and retrosplenial cortex track homing distance during human path integration. J. Neurosci. 35, 15442–15452 (2015).

Ekstrom, A. D. et al. Cellular networks underlying human spatial navigation. Nature 425, 184–188 (2003).

Hartley, T., Maguire, E. A., Spiers, H. J. & Burgess, N. The well-worn route and the path less traveled: distinct neural bases of route following and wayfinding in humans. Neuron 37, 877–888 (2003).

Lester, A. W., Moffat, S. D., Wiener, J. M., Barnes, C. A. & Wolbers, T. The aging navigational system. Neuron 95, 1019–1035 (2017).

Moser, M. B., Rowland, D. C. & Moser, E. I. Place cells, grid cells, and memory. Cold Spring Harb. Perspect. Biol. 7, a021808 (2015).

O’Keefe, J. & Nadel, L. The Hippocampus as a Cognitive Map (Oxford University Press, 1978).

Tolman, E. C. Cognitive maps in rats and men. Psychol. Rev. 55, 189–208 (1948).

Clemenson, G. D., Gage, F. H. & Stark, C. E. L. In Environmental Enrichment and Neuronal Plasticity (ed. Chao, M. V.) (Oxford University Press, 2018).

Clemenson, G. D., Henningfield, C. M. & Stark, C. Improving hippocampal memory through the experience of a rich Minecraft environment. Front. Behav. Neurosci. 13, 57 (2019).

Maguire, E. A. et al. Knowing where and getting there: a human navigation network. Science 280, 921–924 (1998).

Maguire, E. A. et al. Navigation-related structural change in the hippocampi of taxi drivers. Proc. Natl. Acad. Sci. U.S.A. 97, 4398–4403 (2000).

Woollett, K. & Maguire, E. A. Acquiring “the knowledge” of London’s layout drives structural brain changes. Curr. Biol. 21, 2109–2114 (2011).

Woollett, K., Spiers, H. J. & Maguire, E. A. Talent in the taxi: a model system for exploring expertise. Philos. Trans. R. Soc. Lond. B Biol. Sci. 364, 1407–1416 (2009).

Bizon, J. L. & Gallagher, M. Production of new cells in the rat dentate gyrus over the lifespan: relation to cognitive decline. Eur. J. Neurosci. 18, 215–219 (2003).

Drapeau, E. et al. Spatial memory performances of aged rats in the water maze predict levels of hippocampal neurogenesis. Proc. Natl. Acad. Sci. U.S.A. 100, 14385–14390 (2003).

Kolarik, B. S. et al. Impairments in precision, rather than spatial strategy, characterize performance on the virtual Morris Water Maze: a case study. Neuropsychologia 80, 90–101 (2016).

Konishi, K. & Bohbot, V. D. Spatial navigational strategies correlate with gray matter in the hippocampus of healthy older adults tested in a virtual maze. Front. Aging Neurosci. 5, 1 (2013).

van Praag, H., Shubert, T., Zhao, C. & Gage, F. H. Exercise enhances learning and hippocampal neurogenesis in aged mice. J. Neurosci. 25, 8680–8685 (2005).

Wiener, J. M., de Condappa, O., Harris, M. A. & Wolbers, T. Maladaptive bias for extrahippocampal navigation strategies in aging humans. J. Neurosci. 33, 6012–6017 (2013).

Cushman, L. A., Stein, K. & Duffy, C. J. Detecting navigational deficits in cognitive aging and Alzheimer disease using virtual reality. Neurology 71, 888–895 (2008).

Du, A. T. et al. Magnetic resonance imaging of the entorhinal cortex and hippocampus in mild cognitive impairment and Alzheimer’s disease. J. Neurol. Neurosurg. Psychiatry 71, 441–447 (2001).

Laczó, J. et al. Human analogue of the Morris water maze for testing subjects at risk of Alzheimer’s disease. Neurodegener. Dis. 7, 148–152 (2010).

Cook, D. & Kesner, R. P. Caudate nucleus and memory for egocentric localization. Behav. Neural Biol. 49, 332–343 (1988).

Kesner, R. P., Bolland, B. L. & Dakis, M. Memory for spatial locations, motor responses, and objects: triple dissociation among the hippocampus, caudate nucleus, and extrastriate visual cortex. Exp. Brain Res. 93, 462–470 (1993).

O’Keefe, J. & Dostrovsky, J. The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 34, 171–175 (1971).

Packard, M. G. & McGaugh, J. L. Inactivation of hippocampus or caudate nucleus with lidocaine differentially affects expression of place and response learning. Neurobiol. Learn. Mem. 65, 65–72 (1996).

Frankenstein, J., Mohler, B. J., Bülthoff, H. H. & Meilinger, T. Is the map in our head oriented north? Psychol. Sci. 23, 120–125 (2012).

Meilinger, T., Frankenstein, J. & Bülthoff, H. H. Learning to navigate: experience versus maps. Cognition 129, 24–30 (2013).

Ferbinteanu, J. Contributions of hippocampus and striatum to memory-guided behavior depend on past experience. J. Neurosci. 36, 6459–6470 (2016).

Chrastil, E. R. & Warren, W. H. Active and passive spatial learning in human navigation: acquisition of survey knowledge. J. Exp. Psychol. Learn. Mem. Cognit. 39, 1520–1537 (2013).

Leshed, G., Velden, T., Rieger, O., Kot, B. & Sengers, P. In-car GPS navigation: engagement with and disengagement from the environment. In Proceedings of the Twenty-Sixth Annual CHI Conference on Human Factors in Computing Systems (CHI ’08), 1675 (ACM Press, 2008).

Dahmani, L. & Bohbot, V. D. Habitual use of GPS negatively impacts spatial memory during self-guided navigation. Sci. Rep. 10, 6310 (2020).

Münzer, S., Zimmer, H. D. & Baus, J. Navigation assistance: a trade-off between wayfinding support and configural learning support. J. Exp. Psychol. Appl. 18, 18–37 (2012).

Gardony, A. L., Brunyé, T. T., Mahoney, C. R. & Taylor, H. A. How navigational aids impair spatial memory: evidence for divided attention. Spatial Cognit. Comput. 13, 319–350 (2013).

Ishikawa, T., Fujiwara, H., Imai, O. & Okabe, A. Wayfinding with a GPS-based mobile navigation system: a comparison with maps and direct experience. J. Environ. Psychol. 28, 74–82 (2008).

Ingold, T. Being Alive: Essays on Movement, Knowledge and Description (Routledge, 2011).

Berger, C. C., Gonzalez-Franco, M., Tajadura-Jiménez, A., Florencio, D. & Zhang, Z. Generic HRTFs may be good enough in virtual reality. Improving source localization through cross-modal plasticity. Front. Neurosci. 12, 21 (2018).

Gonzalez-Franco, M., Maselli, A., Florencio, D., Smolyanskiy, N. & Zhang, Z. Concurrent talking in immersive virtual reality: on the dominance of visual speech cues. Sci. Rep. 7, 3817 (2017).

Jones, M. et al. ONTRACK: Dynamically adapting music playback to support navigation. Pers. Ubiquitous Comput. 12, 513–525 (2008).

Holland, S., Morse, D. R. & Gedenryd, H. AudioGPS: spatial audio navigation with a minimal attention interface. Pers. Ubiquitous Comput. 6, 253–259 (2002).

Strachan, S., Eslambolchilar, P., Murray-Smith, R., Hughes, S. & O’Modhrain, S. GpsTunes: controlling navigation via audio feedback. In Proceedings of the 7th International Conference on Human Computer Interaction with Mobile Devices & Services, 275–278 (2005).

McGookin, D., Brewster, S. & Priego, P. Audio Bubbles: Employing Non-speech Audio to Support Tourist Wayfinding 41–50 (Springer, 2009).

Holmes, M. C. & Sholl, M. J. Allocentric coding of object-to-object relations in overlearned and novel environments. J. Exp. Psychol. Learn. Mem. Cognit. 31, 1069–1087 (2005).

Lawton, C. A. Strategies for indoor wayfinding: the role of orientation. J. Environ. Psychol. 16, 137–145 (1996).

Wang, R. F. & Spelke, E. S. Updating egocentric representations in human navigation. Cognition 77, 215–250 (2000).

Fenech, E. P., Drews, F. A. & Bakdash, J. Z. The effects of acoustic turn-by-turn navigation on wayfinding. Proc. Hum. Fact. Ergon. Soc. Annu. Meet. 54, 1926–1930 (2010).

Buzsáki, G. & Moser, E. I. Memory, navigation and theta rhythm in the hippocampal-entorhinal system. Nat. Neurosci. 16, 130–138 (2013).

Doeller, C. F., Barry, C. & Burgess, N. Evidence for grid cells in a human memory network. Nature 463, 657–661 (2010).

Jacobs, J. et al. Direct recordings of grid-like neuronal activity in human spatial navigation. Nat. Neurosci. 16, 1188–1190 (2013).

Killian, N. J., Jutras, M. J. & Buffalo, E. A. A map of visual space in the primate entorhinal cortex. Nature 491, 761–764 (2012).

Rossier, J., Haeberli, C. & Schenk, F. Auditory cues support place navigation in rats when associated with a visual cue. Behav. Brain Res. 117, 209–214 (2000).

Baddeley, A. et al. (eds) Episodic Memory: New Directions in Research (Oxford University Press, 2002). http://doi.org/10.1093/acprof:oso/9780198508809.001.0001.

Burgess, N., Becker, S., King, J. A. & O’Keefe, J. Memory for events and their spatial context: models and experiments. Philos. Trans. R. Soc. Lond. B Biol. Sci. 356, 1493–1503 (2001).

Acknowledgements

The authors would like to thank Microsoft Research for its continued support of this research, as well as the Enable team who created Microsoft Soundscape: Adam Glass, Melanie Kneisel, Daniel Tsirulnikov, Amy Karlson, Jarnail Chudge, Arturo Toledo, Emily Greene and Rico Malvar. The authors would also like to thank Matthew Bennett and Scott Reitherman for the interoceptive sound design of the Beacons, as well as the members of the Extended Perception Interaction and Cognition (EPIC) team (Ken Hinckley, Bill Buxton and Eyal Ofek) and the Ability team (Merrie Morris and Ed Cutrell). Finally, we thank the Stark lab at the University of California, Irvine.

Author information

Contributions

G.D.C. and M.G.F. wrote the manuscript. A.M. designed the beacon functionality and the Soundscape app. G.D.C. designed the experiment. A.F. built the scavenger hunt on the Soundscape app. G.D.C. analyzed the data, and M.G.F. produced the heatmap visualizations of the GPS data. G.D.C. and M.G.F. collected and recorded the data. All authors edited the manuscript.

Ethics declarations

Competing interests

Microsoft is an entity with a financial interest in the subject matter or materials discussed in this manuscript. All authors were employed by Microsoft at the time of the studies. Nonetheless, the authors declare that the current manuscript presents balanced and unbiased results and that the studies were conducted following scientific research standards. All experiments were performed in accordance with relevant guidelines and regulations. The study was approved by the Microsoft Research institutional review board, and data were collected with the approval and written consent of each participant in accordance with the Declaration of Helsinki.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Clemenson, G.D., Maselli, A., Fiannaca, A.J. et al. Rethinking GPS navigation: creating cognitive maps through auditory clues. Sci Rep 11, 7764 (2021). https://doi.org/10.1038/s41598-021-87148-4

DOI: https://doi.org/10.1038/s41598-021-87148-4

This article is cited by

-

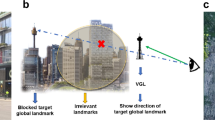

Using virtual global landmark to improve incidental spatial learning

Scientific Reports (2022)

-

Redesigning navigational aids using virtual global landmarks to improve spatial knowledge retrieval

npj Science of Learning (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.