Abstract

Climate change and emerging drug resistance make the control of many infectious diseases increasingly challenging and undermine reliance on drug treatment as the sole solution to the problem. As disease transmission often depends on environmental conditions that can be modified, such modifications may become crucial to risk reduction if we can assess their potential benefit at policy-relevant scales. However, the value of environmental management for this purpose has so far received little attention. Here, using the parasitic disease fasciolosis in UK livestock as a case study, we demonstrate how mechanistic hydro-epidemiological modelling can be applied to understand the drivers of disease risk and the efficacy of environmental management across a large, heterogeneous domain. Our results show how weather and other environmental characteristics interact to define disease transmission potential, and reveal that environmental interventions such as risk-avoidance management strategies can provide a valuable alternative or complement to current treatment-based control practice.


Introduction

Many infectious diseases have strong environmental components to their transmission. The role of the environment in infectious disease dynamics varies with the transmission pathway. At one extreme, transmission is mainly governed by direct host-to-host contact for disease agents that cannot survive long in the landscape (e.g. influenza). At the other, environmental conditions become increasingly important the longer pathogens are able to survive outside their final host (e.g. waterborne diseases like cholera, and infections transmitted through vectors or intermediate hosts that live and develop in the environment, such as malaria, schistosomiasis and fasciolosis)1. Crucially, many transmission routes through water, food, soil and vectors or intermediate hosts are not only associated with meteorological drivers like air temperature and rainfall, but are also mediated by environmental and landscape characteristics, such as hydrologic transport for cholera2, the persistence of water pools for malaria3, the hydrological regime for schistosomiasis4 and soil moisture for fasciolosis5. These characteristics may be distributed unevenly in space, change rapidly over time, act at different scales, and directly and/or indirectly affect multiple disease components, often resulting in complex disease dynamics1,6,7,8.

Controlling environmentally transmitted diseases is becoming increasingly challenging. For many of these infections, no commercial vaccines are yet available, and control relies entirely on drug administration. However, as long as environmental conditions remain suitable for transmission, reinfection may occur rapidly after treatment9. Moreover, the widespread emergence of drug resistance, due to overreliance on a single medicine, threatens the efficacy and sustainability of current treatment-based control strategies for an increasing range of infections globally, such as schistosomiasis, fasciolosis and other Neglected Tropical Diseases9,10,11,12,13. Finally, for many diseases, this problem is aggravated by frequent misuse and overuse of drugs linked to altered epidemiological patterns (such as the emergence of infections at new times and places) caused, at least partly, by climate and land-use changes14,15,16,17. Indeed, the response to an increased disease challenge is often increased use of treatment, which accelerates the development of drug resistance18.

As climate change accelerates and disease agents become ever more resistant to drugs, devising more holistic strategies, rather than relying exclusively on treatment, is becoming a key concern. The role that environmental conditions play in driving disease transmission may offer opportunities to use environmental interventions as complementary, or even alternative, strategies to drug administration to reduce disease burdens9,19. To explore the potential of environmental management for risk reduction, we need models based on a better mechanistic understanding of the link between disease transmission and its underlying drivers20,21,22,23,24,25. Indeed, the impact of new environmental control strategies on current and future disease transmission at large scales cannot be tested in the field, but requires mechanistic models for what-if analyses26,27. Crucially, such models need to represent on-the-ground environmental characteristics (beyond meteorological variables alone): those that often directly control epidemiological processes (at least for the transmission routes through water/food/soil/vectors/hosts mentioned above), as well as those that decision-makers might be able to manipulate locally to contribute to sustainable and effective control1,19,28.

However, while the role of on-the-ground environmental processes in mediating disease risk responses to weather factors is increasingly acknowledged (e.g.2,3,4,25,28,29), most current studies either consider them only empirically (e.g.30,31,32) or assume meteorological drivers of disease risk only (e.g.33,34,35), usually focusing on temperature and rainfall characteristics alone6,15,23,36. Arguably, partly as a consequence, strategies targeting the environmental stage of the pathogen to complement medical approaches are still poorly developed9, despite showing promising results against diseases like schistosomiasis28,37.

Therefore, in this study, focusing on fasciolosis in the UK, we explore opportunities for environmental management as a control strategy through mechanistic modelling, while considering the diversity of disease drivers across this heterogeneous domain. While rapidly emerging in humans in other parts of the world, fasciolosis in the UK mainly affects livestock, currently costing the agricultural sector approximately £300 M per year in lost production38. Moreover, considerable changes in its seasonality and spread have been observed in recent decades and attributed to changing climatic patterns14,39,40,41. A significant part of the life cycle of the liver fluke, the parasite responsible for the disease, takes place in the environment and is strongly affected by soil moisture (which varies with factors like topography and rainfall) and air temperature. Importantly, these factors determine habitat suitability for the liver fluke’s amphibious intermediate snail hosts and thus control disease risk, with saturated soil and moderate temperatures favoring transmission (5,42; SI Appendix). As resistance to available drugs against fasciolosis is increasingly reported, avoiding infection by disrupting the parasite life cycle and reducing environmental suitability for transmission has often been advocated11,12. However, the practical difficulty of evaluating the effectiveness of such interventions in the field while controlling for confounding factors, together with the previous lack of environment-based mechanistic liver fluke models for what-if analyses at relevant scales, has not allowed stakeholders to fully explore the potential of environmental management for risk reduction. Here, we simulate the risk of liver fluke infection for the recent period 2006–2015 over 935 UK catchments using the newly developed and validated mechanistic Hydro-Epidemiological model for Liver Fluke (HELF,24). 
We assess the sensitivity of disease risk to its underlying environmental drivers by estimating, using ANalysis Of VAriance (ANOVA), the contribution of meteorological and topographic characteristics, and their two-way interactions, to seasonal disease risk across 9 administrative regions. Finally, we investigate the potential of environmental strategies by analyzing where they can reduce risk and how they compare with current treatment-based control. Specifically, (i) we evaluate the effect on risk of infection of using the most efficient drugs currently available (assuming no resistance)43,44, and (ii) we assess the effectiveness of two environmental interventions that have been studied empirically, but whose potential impact on disease transmission has not yet been quantified across larger regions: fencing off high-risk areas to prevent livestock from grazing at high-risk times, and soil drainage11,18,45,46,47.

Results

Simulated UK-wide disease risk

Disease risk is quantified as the abundance of metacercariae on pasture, i.e. the parasite life-cycle stage that is infective to livestock hosts. Risk values simulated with HELF across Great Britain differ markedly between winter and summer (Fig. 1). In winter, weather conditions allow infective metacercariae to be present on pasture only in the south of the country, where temperatures are milder, whereas in summer risk values are significantly higher across all regions. Specifically, summer median risk is highest on the west coast of England and Wales (where rainfall is abundant even during the warmer months), lower in the South East, which in summer can become too warm and dry for parasite survival and development, and lower still in Scotland, where temperatures can remain relatively unfavorable even in the mildest summer months. These results suggest that weather factors are important controls on risk of infection, and they agree with current understanding and data. For example, one of the largest UK studies on liver fluke prevalence found the highest infection levels in the wetter western areas of the country, which have historically provided ideal weather conditions for disease transmission41. Within regions, simulated summer risk values are also highly variable, especially in the South West of England and West Wales (SW) and North West England (NW): even though summer risk is generally high, some areas still have a lower abundance of metacercariae. This can be due to dynamic weather effects on development within the parasite life cycle, to landscape heterogeneity (e.g. areas at the bottom of a valley are more prone to saturation, and therefore at higher risk, than areas with similar weather but located on a steep hillside), and to interactions between the two. 
This is also consistent with existing datasets, which show significant differences in disease prevalence between neighboring areas within climatically homogeneous regions, already suggesting that factors other than meteorological conditions may affect liver fluke prevalence41.

Seasonal abundance of metacercariae, i.e. disease risk simulated using HELF, averaged over 2007–2015, across 9 UK regions. Boxplots represent the variability in disease risk between catchments within each region. In the map (created using Matlab R2019a), ungauged catchments (i.e. with no hydrological data over the simulation period) are masked in grey. SW: South West of England and West Wales; Mid: rest of Wales and the Midlands; NE: North East of England; NScot: North of Scotland; WScot: West of Scotland; SE: South East of England; EAng: East Anglia; EScot: East of Scotland; NW: North West of England.

Disease risk sensitivity to environmental drivers

Results of ANOVA at the regional level identify the main controlling factors of disease risk across areas and help explain the large variability we see in summer. Figure 2, which presents the top three drivers of summer disease risk per region, shows that temperature (T, in red) is more important in northern areas and is the main control on disease risk in Scotland, whereas further south, variability in rainfall-related characteristics (in blue) explains more of the risk differences between catchments. Importantly, two-way interactions between the analyzed factors (combined in Fig. 2 into a single term, INT, in yellow) play a significant role in disease transmission across all areas. INT is the dominant control in 5 of the 9 regions, suggesting that, without considering interactions, the importance of individual factors may be overestimated. In the flat, low-lying areas in the south east of the country (South East of England and East Anglia; Figure S1), it is the interaction between weather factors themselves that explains most of the variability in risk, whereas along the west coast of England and Wales disease risk is more sensitive to interactions between meteorological characteristics and topography (Table S1). Potentially less limiting weather conditions in these western areas than in the south east (i.e. less extreme warm temperatures and more abundant rainfall), coupled with greater topographic variability (i.e. both flat and hilly terrain), give topography a stronger role in modifying meteorological impacts, with flatter areas in valleys saturating more easily, favouring intermediate host habitats and thus disease transmission. Notably, in the Scottish regions, among the coldest and wettest in the country, disease risk is mainly limited by temperature, but topography (TOPO, in green) also emerges individually as an important driver, with steep vs. flat terrain creating differences in disease risk even between areas close to each other, which highlights potential opportunities for disease control through environmental management.

Average percent contribution of the top three environmental drivers to summer disease risk variability simulated using HELF, per region. Rainfall-related characteristics are in blue (RD = number of rainy days and R = rainfall); temperature-related variables are in red (P = potential evapotranspiration and T = temperature); landscape factors are in green (TOPO = topography); and two-way interactions between factors (here combined into a single term INT) are in yellow. Regions are defined in Fig. 1.

Efficacy of current treatment-based control

Figure 3 shows the effect on risk of infection (simulated using HELF) of treating animals twice per year (in January and April) with the most efficient drug currently available (90% efficacy), assuming no resistance. On average, this strategy reduces summer disease risk by 65% (Fig. 3a), and for most catchments risk is reduced by even more than 70%. However, because weather and environmental conditions and their seasonality differ across our domain, treatment has different effects on the abundance of infective metacercariae, and therefore on disease risk, across catchments (range: 45–84.9%). Looking at the impact of treatment on disease risk over time (Fig. 3b), in addition to risk being significantly reduced, the rise in metacercarial abundance on pasture is delayed by approximately one month, and the peak of infection is shifted later in the year (from approximately August–September to October) as a result of drug administration. Overall, these results agree with experience and suggest that treatment-based control would be effective in limiting risk across UK regions if changing climatic and weather patterns were not increasing opportunities for transmission, and if resistance to available drugs were not developing rapidly12,14.
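The percent reduction and the shift in peak month described above can be illustrated with a short sketch. It assumes, as our reading of the text rather than the authors' code, that the reduction is the relative difference between treated and untreated July–September mean abundance; the function names are hypothetical, and the monthly values in the usage example are invented for illustration, not HELF output.

```python
import numpy as np

MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]
SUMMER = [6, 7, 8]  # zero-based column indices of July, August and September


def summer_reduction(untreated, treated):
    """Percent reduction in mean July-September abundance, per catchment.

    Both inputs have shape (n_catchments, 12): monthly mean abundance of
    infective metacercariae on pasture without and with treatment.
    """
    base = np.asarray(untreated, dtype=float)[:, SUMMER].mean(axis=1)
    with_treatment = np.asarray(treated, dtype=float)[:, SUMMER].mean(axis=1)
    return 100.0 * (1.0 - with_treatment / base)


def peak_month(monthly):
    """Name of the month with the highest abundance (first one if tied)."""
    return MONTHS[int(np.argmax(monthly))]


# Hypothetical single-catchment example (not simulated values).
untreated = np.array([[0, 0, 0, 0, 1, 3, 6, 9, 9, 5, 2, 0]])
treated = np.array([[0, 0, 0, 0, 0, 0.5, 1.5, 2.5, 3, 3.5, 1, 0]])

print(summer_reduction(untreated, treated))  # about 70.8% lower summer risk
print(peak_month(untreated[0]), "->", peak_month(treated[0]))  # Aug -> Oct
```

Using the relative difference of seasonal means keeps the metric independent of the absolute abundance level, so catchments with very different baseline risk can be compared on the same percentage scale.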

Effect of treating livestock twice per year (in January and April) with the current most efficient antiparasitic drug (90% efficacy), assuming no resistance, on: (a) summer disease risk across all 935 analyzed catchments; (b) the monthly abundance of infective metacercariae on pasture (i.e. disease risk), averaged across all catchments and years.

Effectiveness of environmental interventions

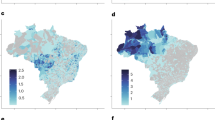

Simulating disease risk using HELF also allows us to investigate how different sensitivities to environmental drivers may translate into different opportunities for risk reduction through environmental management across areas. For example, summer risk of infection can be reduced by temporarily excluding grazing livestock from high-risk fields, although our results show that the percentage of catchment area that must be fenced off to match the risk reduction achieved by drug treatment varies from 23.8% to 42.1% across UK regions (Fig. 4a; see also Figure S2 for a comparison between a dry and a wet year). In each catchment, this strategy consists of fencing off areas starting from those most prone to saturation, which are most likely to provide favorable habitats for the intermediate snail hosts and are therefore most suitable for disease transmission (i.e. flat areas at the bottom of valleys). The lowest percentages are found in Scotland (23.8%), where topographic variability is highest, so snail habitats are likely to be most localized, and where risk of infection is generally lower because of less favorable temperatures. In the comparatively warmer but still hilly South West of England and West Wales, the percentage of land to be avoided is similar (26.3%), consistent with the suggestion that fencing may be particularly helpful in regions with larger topographic variation rather than where the landscape is mostly flat47. In contrast, the largest fractions of land to be excluded from grazing are in the south east of our domain, specifically in East Anglia, where on average 42.1% of catchment area has to be fenced off. Areas in this region are among the driest and warmest in the country, which would suggest relatively limited summer risk and therefore less need for fencing than on the west coast. 
However, these areas are also the flattest part of Great Britain (Figure S1), which explains why large portions of land must be fenced off to isolate saturated fields and match the effect of treatment: whenever weather conditions allow, saturated areas and snail habitats are widespread rather than confined to valley bottoms as in higher-relief regions. As an alternative to temporary fencing, Fig. 4b shows, for each region, the percentage increase in soil drainage needed to match as closely as possible the reduction in summer risk of infection achieved using treatment. With this intervention, which in our study, unlike fencing, is implemented at the catchment level, differences in opportunities for risk reduction across UK areas are less pronounced. As with fencing, more drainage would be required in the warmer south of the country than in Scotland (where, although rainfall is not limiting, temperatures remain comparatively unfavorable), but the increase in drainage needed to match treatment is approximately 8% compared to current conditions across all analyzed regions. This result suggests that, in principle, drainage could provide a valuable alternative to current treatment-based control, at least where large percentages of catchment area would otherwise have to be fenced off.
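The fencing rule described above is essentially a greedy ranking: topographic-index (TI) classes are fenced in decreasing order of propensity to saturate until the target reduction is reached. The sketch below makes that explicit under a strong simplifying assumption of ours, namely that a fenced-off class simply stops contributing to the area-weighted catchment risk (in HELF itself the effect would be simulated dynamically). The drainage search is likewise a hypothetical grid search over candidate increases, where `simulate` stands in for a full model run; the function names and the toy exponential response in the usage example are invented for illustration.

```python
import math

import numpy as np


def percent_to_fence(area_frac, class_risk, target_reduction):
    """Percent of catchment area to fence, wettest classes first.

    area_frac: area fraction of each TI class (summing to 1), ordered from the
        highest TI (most prone to saturation) to the lowest.
    class_risk: summer metacercarial abundance in each class without fencing.
    target_reduction: percent risk reduction to match (e.g. from treatment).
    Simplification (assumption): a fenced class no longer contributes to the
    area-weighted catchment risk.
    """
    contrib = np.asarray(area_frac, float) * np.asarray(class_risk, float)
    total = contrib.sum()
    if total == 0:
        return 0.0  # no risk to reduce
    fenced, removed = 0.0, 0.0
    for frac, c in zip(area_frac, contrib):
        if 100.0 * removed / total >= target_reduction:
            break  # target already reached, stop fencing
        fenced += frac
        removed += c
    return 100.0 * fenced


def closest_drainage_increase(simulate, target_reduction, candidates):
    """Candidate drainage increase (%) whose risk reduction is closest to target.

    simulate: hypothetical callable returning summer risk for a given percent
        increase in drainage (0 means current conditions).
    """
    base = simulate(0.0)

    def gap(d):
        return abs(100.0 * (1.0 - simulate(d) / base) - target_reduction)

    return min(candidates, key=gap)


def toy(d):
    """Invented toy response: risk decays exponentially with extra drainage."""
    return 10.0 * math.exp(-0.15 * d)


# Hypothetical catchment with four TI classes (illustrative numbers only).
print(round(percent_to_fence([0.1, 0.2, 0.3, 0.4], [10, 6, 2, 1], 65), 1))  # 30.0
print(closest_drainage_increase(toy, 65, range(0, 21)))  # 7
```

The greedy order mirrors the strategy in the text (wettest areas first); a real assessment would rerun the epidemiological model for each fencing or drainage scenario rather than rely on the static weighting used here.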

Effectiveness of environmental interventions (maps created using Matlab R2019a): (a) percentage of catchment area that would have to be excluded from livestock grazing to reduce summer risk of infection by at least the same percentage achieved using treatment (averaged across catchments within each region, for 2013 as an example year within our simulation period; see also Figure S2 for a comparison with a wetter year); (b) percentage increase in drainage, compared to current conditions, needed to match as closely as possible the reduction in summer risk of infection achieved using treatment (averaged across catchments within each region).

Discussion

This study is the first to consider environmental controls on fasciolosis beyond weather characteristics across a large, heterogeneous domain, and to do so mechanistically (rather than through empirical, correlation-based relationships). Specifically, instead of focusing only on temperature- and rainfall-related variables, as in previous correlation-based large-scale liver fluke modelling studies (e.g.33,34,35), we also account for soil moisture patterns, which are assumed to vary with heterogeneous topography and which directly control habitat suitability for parasite development and disease transmission5,42. Moreover, in contrast to existing studies that do include environmental controls and management when investigating risk factors at specific times and locations (e.g.30,31,32), we consider these mechanistically. This is a first step towards assessing opportunities for environmental management as a disease control strategy across the UK and beyond. On-the-ground environmental characteristics, unlike climate and weather patterns, may be modifiable, or “reasonably amenable to management or change given current knowledge and resources”, towards reducing infection levels19,28. Furthermore, only mechanistic modelling allows management scenarios and their impacts on disease transmission to be evaluated at large scales and over long time horizons, under current and potential future conditions20,21,22,23,24,25,26,27, which is urgently needed to support long-term planning and policy-making at the national level (e.g. see the UK National Animal Disease Information System, NADIS48).

Our simulations of liver fluke risk over UK catchments, and our regional analysis of disease sensitivity to environmental factors, show that, while weather remains a key driver across the country, topography emerges as an important control in specific areas (Figs. 1, 2). First, the finding that drivers other than meteorological ones come into play at more regional scales confirms speculation in previous empirical studies on fasciolosis, as well as findings for other environment-driven diseases. Fox et al. warn against using liver fluke risk forecasting models that only account for climatic drivers (e.g.42) at regional levels because, at these scales, many non-climatic factors become relevant to parasite survival and transmission34. Similarly, Liang et al. find that, within a climatologically homogeneous region in China, land use and characteristics of the irrigation system are the main drivers of human schistosomiasis infection28. Second, the fact that landscape heterogeneity may alter the risk of liver fluke infection, and its sensitivity to climatic and meteorological variability, suggests that future UK disease control strategies will need to account for it explicitly. Specifically, simulations of disease risk based on models with no representation of landscape heterogeneity (such as the empirical Ollerenshaw Index (42, SI), the basis of current industry-standard forecasts48) may have limited utility going forward over regions with more pronounced topographic variability. Finally, our finding that landscape heterogeneity most often controls disease risk through interactions with weather variables is consistent with current knowledge of how the parasite life cycle depends on amphibious intermediate snail hosts, which live in saturated areas whose extent varies with topography5,42. 
Moreover, the fact that regional between-catchment variation in risk is explained by different environmental characteristics, and by interactions between them, suggests that regionalized control policies and practices may be more effective than one-size-fits-all national strategies.

Given the role of landscape heterogeneity in driving risk of infection alongside meteorological factors, our findings on the effectiveness of environmental interventions have important implications for disease control. Fencing off high-risk areas to avoid grazing during high-risk periods has often been called for as an aid to current drug-based control, given increasing climate-driven changes in disease patterns and reports of treatment failure11,18,45,46,47, although the current European farm subsidy structure, whereby loss of productive grazed land is financially penalized, may discourage its implementation. That this strategy provides greater benefits in terms of risk reduction in Scotland and along the west coast of southern England and Wales (Fig. 4a) is of interest because these areas: (i) are characterized by extensive grazing (Figure S1); (ii) are those where treatment is most common44 and drug resistance is prevalent12; and (iii) are either those historically associated with the highest liver fluke prevalence (e.g.41 and Fig. 1), or those where the disease is expanding rapidly with warming climates40. These are therefore the areas where treatment is particularly expected to become unsustainable in the future. The percentages of land to fence off to achieve at least the same risk reduction as treatment are substantial, ranging on average from approximately 24% of catchment area up to more than 40% in the flattest regions (and higher in wetter than in drier years, Figure S2). This may make the intervention seem uneconomical or impractical under current conditions. However, these values depend on the treatment option implemented, and our current scenario reflects the maximum reduction in risk obtainable with the most efficient drugs available, assuming no resistance (Fig. 3). 
In reality, farmers may treat at different times, with different products (often in combination) that have lower efficacy and face increasing resistance43,44. Moreover, the percentages we calculate represent the proportion of land to fence off to match or outperform treatment using this strategy alone, whereas in practice temporary fencing would most likely be combined with (targeted) treatment as part of an integrated approach, still helping to reduce reliance on drugs and delay the development of resistance18. Our hydro-epidemiological mechanistic modelling approach could also be extended to evaluate potential side benefits of management strategies beyond disease risk reduction, providing a holistic perspective that has so far been elusive (e.g. hydrologic ecosystem services could be derived from re-using fenced-off high-risk areas for tree planting, which in turn might also reduce flood risk or create additional habitats for flora and fauna), and/or to inform novel fenceless grazing systems (by guiding the identification of high-risk areas to exclude from grazing through invisible GPS fences), enabling dynamic management of livestock and disease risk under highly variable conditions49,50. The second environmental intervention we investigate, drainage, is also often mentioned in the literature as a possible alternative to treatment, but it is more controversial for multiple reasons. Our results suggest that a relatively small increase in drainage compared to current conditions (reducing the amount of water that contributes to soil saturation at the catchment scale) could achieve risk reductions similar to those currently obtained through drug administration across UK regions (Fig. 4b). 
Although drainage is implemented in our model at the catchment level, without differentiating between areas more and less prone to saturation, in practice this strategy would require different proportions of land to be drained in flatter vs. higher-relief catchments. A detailed assessment of the costs of such an intervention would also be needed to evaluate its economic feasibility. In the UK, current agri-environment programs, such as the Environmentally Sensitive Area scheme operated by the Department for Environment, Food and Rural Affairs, increasingly discourage drainage for environmental reasons, e.g. to preserve wetlands that provide habitats for endangered flora and fauna39. Moreover, despite its known potential usefulness against liver fluke, this strategy is often considered prohibitively expensive45,46,47. On the other hand, some permanent artificial land drainage channels, not accounted for in our analysis, are already in place in England and Wales, including in low-lying areas in East Anglia51,52. Drainage might also become a competitive option for risk reduction in the long run as farmers are increasingly faced with changing climatic conditions and drug resistance. For example, altered rainfall and temperature patterns might require such frequent treatment in some areas that drug administration becomes too expensive and ineffective, making drainage a more valuable alternative. Ultimately, the optimal strategy will likely be farm-specific and depend not only on agricultural policy, but also on local herd, logistic and economic factors (which could be included in HELF to provide decision support at farm level), such as the long-term costs and benefits of less intensive disease control strategies18,44.

In conclusion, our work demonstrates the feasibility of environmental management as a control strategy against fasciolosis in UK livestock and supports its uptake, with wider implications for the fight against environmentally transmitted infectious diseases anywhere1. It is increasingly acknowledged that drugs alone will not be enough to eliminate disease transmission in the future9,37. We show that mechanistic hydro-epidemiological models provide an integrated approach to investigating disease dynamics that recognizes the complexities underpinning transmission and can quantify the role of disease risk drivers, including weather-landscape interactions6,7. Such a deeper understanding of how meteorological and on-the-ground environmental processes shape disease epidemiology is key to informing new and sustainable solutions to infectious disease control in our changing world. We demonstrate that what-if scenarios including different environmental interventions and management strategies can be tested over large space–time domains, which is crucial to support their potential inclusion in national long-term plans for optimal disease control. These advances address an urgent need as global climate change increasingly alters disease seasonality and spread14,15,16,17, and drug resistance develops rapidly10,13.

Methods

Data

The dataset we use includes meteorological, hydrological and Digital Elevation Model (DEM) data. Our domain consists of 935 hydrological catchments across Great Britain (i.e. England, Wales and Scotland) for which both meteorological and hydrological data are available for a recent decade, 2006–2015. Gridded (1 km resolution) daily time series of observed rainfall and minimum/maximum temperature are obtained from CEH-CHESS53. For each catchment, spatially averaged rainfall and minimum/maximum temperature time series are derived from this gridded dataset using the grid cells that overlap the catchment area. Streamflow data for the same 10-year period are obtained for all catchments from the National River Flow Archive54. Finally, gridded DEM data for Great Britain, with a spatial resolution of 50 m, are obtained from NextMap55 and used as a basis for digital terrain analysis to derive a topographic map for each catchment as in56 (see DEM data and catchments over Great Britain, together with a land cover map, in Figure S1).
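The spatial averaging step can be sketched as a masked mean over the grid. The text does not state whether cells are weighted by their overlap with the catchment, so the optional `weights` argument (e.g. overlap fractions) is an assumption, and the function name is hypothetical; reading the CEH-CHESS files themselves is not shown.

```python
import numpy as np


def catchment_series(grid, overlap_mask, weights=None):
    """Daily catchment-average series from a gridded daily field.

    grid: array (n_days, n_rows, n_cols), e.g. 1 km daily rainfall.
    overlap_mask: boolean array (n_rows, n_cols), True where a cell
        overlaps the catchment.
    weights: optional array (n_rows, n_cols), e.g. fraction of each cell
        inside the catchment; if None, overlapping cells count equally.
    """
    grid = np.asarray(grid, dtype=float)
    mask = np.asarray(overlap_mask, dtype=bool)
    if not mask.any():
        raise ValueError("catchment does not overlap any grid cell")
    cells = grid[:, mask]  # shape (n_days, n_overlapping_cells)
    if weights is None:
        return cells.mean(axis=1)
    return np.average(cells, axis=1, weights=np.asarray(weights, float)[mask])


# Two days on a 2 x 3 grid; three cells overlap the catchment.
grid = np.arange(12, dtype=float).reshape(2, 2, 3)
mask = np.array([[True, False, True], [False, False, True]])
print(catchment_series(grid, mask))  # [2.333..., 8.333...]
```

Boolean indexing over the two spatial axes keeps the time axis intact, so the same mask can be reused for rainfall and for minimum and maximum temperature.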

Disease risk model

We simulate disease risk using the recently developed and tested Hydro-Epidemiological model for Liver Fluke (HELF)24. HELF describes mechanistically (at a daily time step) how the impact of rainfall on the parasite life cycle is mediated by environmental characteristics through soil moisture, which is known to directly drive disease transmission, mainly through its control on the habitat of the liver fluke’s intermediate snail host5,42. The model assumes that topographic variability is the strongest landscape control on soil moisture, and thus on snail habitat distribution; it estimates the propensity of an area to saturate by calculating a Topographic Index (\(TI\)), and discretizes the distribution of \(TI\) values in a catchment into classes, from the highest, most prone to saturation, to the lowest, assumed least likely to saturate57. The saturation state of each class then becomes an input to the parasite life-cycle component of HELF, which uses it, together with air temperature, to calculate the abundance of metacercariae on pasture, i.e. the parasitic stage that infects grazing animals when ingested. This abundance is thus an indicator of environmental suitability for disease transmission to grazing livestock (referred to as “disease risk” throughout the paper for simplicity). HELF is run over each of the 935 catchments in our domain for 2006–2015 using the dataset described above, assuming a scenario of continuous livestock grazing and no disease management, as explained in24. Simulations for the first year are discarded as a warm-up period, so that soil moisture states in the model can establish themselves. Disease risk results are then aggregated from daily to seasonal values (mean over July–September for summer and January–March for winter), and from \(TI\) classes to the catchment scale (mean abundance of infective metacercariae, weighted by the frequency of \(TI\) classes). 
Details on the model set-up for application over Great Britain and on model calibration are given in SI.
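The \(TI\) discretization and the aggregation steps described above can be sketched as follows. This is an illustration, not HELF’s code: it assumes the standard TOPMODEL form of the index57, \(TI = \ln(a/\tan\beta)\), with \(a\) the upslope contributing area per unit contour length and \(\beta\) the local slope, and it uses equal-width \(TI\) classes. The per-class daily metacercariae output (`meta_by_class`) and all function names are hypothetical.

```python
import numpy as np
from datetime import date

def topographic_index(specific_area, slope_rad, min_tan=1e-4):
    """TOPMODEL-style index ln(a / tan(beta)); flat cells are clipped to min_tan."""
    tan_b = np.maximum(np.tan(np.asarray(slope_rad, dtype=float)), min_tan)
    return np.log(np.asarray(specific_area, dtype=float) / tan_b)

def ti_class_frequencies(ti, n_classes):
    """Area fraction of equal-width TI classes, ordered from highest TI (wettest) to lowest."""
    counts, _edges = np.histogram(ti, bins=n_classes)
    return counts[::-1] / counts.sum()

def seasonal_risk(dates, meta_by_class, freqs, months, warmup_year):
    """Frequency-weighted catchment risk averaged over `months`, for each year.

    meta_by_class: (n_days, n_classes) metacercariae per TI class, columns ordered like freqs.
    Returns {year: seasonal mean}, skipping the warm-up year.
    """
    # Weighted mean across TI classes for every day (freqs sum to 1)
    daily = np.asarray(meta_by_class, dtype=float) @ np.asarray(freqs, dtype=float)
    out = {}
    for year in sorted({d.year for d in dates} - {warmup_year}):
        sel = [i for i, d in enumerate(dates) if d.year == year and d.month in months]
        if sel:
            out[year] = float(daily[sel].mean())
    return out

# Toy example: two TI classes, four simulated days
freqs = ti_class_frequencies([1.0, 1.0, 1.0, 3.0], n_classes=2)  # [0.25 0.75]
dates = [date(2006, 7, 1), date(2007, 7, 1), date(2007, 8, 1), date(2007, 1, 1)]
meta = [[4, 0], [4, 0], [0, 4], [8, 8]]
summer = seasonal_risk(dates, meta, freqs, months=(7, 8, 9), warmup_year=2006)
print(summer)  # {2007: 2.0}
```

Winter values follow from the same call with `months=(1, 2, 3)`; averaging the per-year values then gives the multi-year seasonal risk.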

ANOVA

ANalysis Of VAriance (ANOVA) is a statistical technique for partitioning the observed variance in a variable of interest (the response variable) into contributions from individual drivers (factors) and their interactions. It has been widely used, for example for uncertainty estimation in climate change impact studies and for dominant control analysis (e.g.58,59). The response variable in our study is the seasonal catchment-average disease risk simulated using HELF (mean over the 9 years of the simulation period, excluding the 2006 warm-up year). The factors used for variance decomposition are air temperature, potential evapotranspiration, rainfall, number of rainy days and topography (specifically, the mean \(TI\) value of each catchment), as well as their two-way interactions. To perform ANOVA, each factor is grouped into two levels, each with a similar number of catchments and a similar level of disease risk variability (to satisfy the ANOVA assumption of homogeneous variances). This set-up provides multiple observations of the response variable for each combination of factor levels, making it possible to estimate the contribution of interactions. In ANOVA, the total variation in the response variable to be attributed to the different factors is expressed through the total sum-of-squares. This is split into main effects, corresponding to individual drivers, and interaction terms, related to non-additive or non-linear effects58. The contribution of each factor to disease risk variability can therefore be calculated as the ratio of its (partial) sum-of-squares to the total sum-of-squares, multiplied by 100 to give a percent contribution. The higher the contribution, the greater the factor’s role in controlling disease risk. More details are provided in the SI. We carry out the analysis at the regional scale to better capture the spatial distribution of dominant disease risk drivers.
Specifically, we divide Great Britain into nine regions that resemble, as closely as possible, the standard areas for which the UK NADIS currently provides liver fluke risk forecasts based on a widely used empirical climate-based model48. These areas, in turn, are based on the districts used by the Met Office when generating climatologies for the UK60: South East of England (SE), East Anglia (EAng), South West of England and West Wales (SW), the rest of Wales and the Midlands (Mid), North West and North East of England (NW and NE), and West, East and North of Scotland (WScot, EScot, NScot).
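A minimal sketch of the variance decomposition, under two stated assumptions: each factor is split at its median into two levels coded -1/+1 (this gives groups of similar size but does not by itself enforce similar risk variability, as the grouping described above does), and the partial sum-of-squares of each term is computed as the increase in residual sum-of-squares when that term alone is dropped from a least-squares model containing all main effects and two-way interactions. The factor names and functions are hypothetical.

```python
import itertools
import numpy as np

def two_level_codes(x):
    """Split a factor at its median into two levels coded -1 (low) / +1 (high)."""
    x = np.asarray(x, dtype=float)
    return np.where(x > np.median(x), 1.0, -1.0)

def _rss(X, y):
    """Residual sum-of-squares of an ordinary least-squares fit of y on X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)

def anova_contributions(factors, y):
    """Percent contribution of main effects and two-way interactions.

    factors: dict mapping factor name -> raw value per catchment.
    y: response per catchment (e.g. seasonal mean disease risk).
    """
    y = np.asarray(y, dtype=float)
    coded = {name: two_level_codes(v) for name, v in factors.items()}
    terms = dict(coded)
    for a, b in itertools.combinations(coded, 2):
        terms[f"{a}:{b}"] = coded[a] * coded[b]  # two-way interaction column
    names = list(terms)
    X_full = np.column_stack([np.ones_like(y)] + [terms[n] for n in names])
    rss_full = _rss(X_full, y)
    ss_total = float(((y - y.mean()) ** 2).sum())
    # Column 0 is the intercept, so term i sits in column i + 1
    return {
        name: 100.0 * (_rss(np.delete(X_full, i + 1, axis=1), y) - rss_full) / ss_total
        for i, name in enumerate(names)
    }

# Toy balanced design where risk depends on temperature only
temp = [-1, -1, 1, 1, -1, -1, 1, 1]
rain = [-1, 1, -1, 1, -1, 1, -1, 1]
pct = anova_contributions({"temp": temp, "rain": rain}, [3.0 * v for v in temp])
```

Applying the function separately to the catchments of each of the nine regions gives regional contributions. With an unbalanced design the percentages need not sum to 100, because the residual and any non-orthogonality between terms are not allocated to any single factor.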

Disease control strategies

Using HELF, we implement disease control strategies as follows:

1. Treatment. A recent survey throughout Great Britain and Ireland shows that almost 70% of farmers routinely treat their animals against liver fluke44. Triclabendazole, the most commonly used drug, can exceed 90% efficacy against all parasitic stages in livestock, preventing pasture contamination for up to 12 weeks. Based on this, and on available industry guidelines (e.g.43), we assume that farmers treat animals twice per year, on January 1st and April 1st, using triclabendazole and assuming no drug resistance. This approach is meant to reflect the maximum reduction in disease risk that can currently be achieved in the field through treatment (other available products reduce pasture contamination for shorter periods43, and resistance would reduce drug efficacy in general). More details on this strategy and on its implementation within HELF are provided in the SI.

2. Fencing off high-risk areas to prevent animals from grazing during high-risk periods. Traditionally, the period of highest infection risk in the UK is late summer, after temperatures have generally become more favorable for the parasite life cycle to progress within the snail hosts, yielding infective metacercariae on pasture38. The areas at highest risk are those most prone to saturation (i.e. particularly flat areas at the bottom of valleys), which can support large snail populations as well as other critical stages of the life cycle5. We therefore simulate this intervention by sequentially removing summer infective metacercariae from \(TI\) classes (i.e. setting them to zero), starting from the class with the highest \(TI\) value, which saturates first. Then, for each catchment, we use the risk reduction achieved through treatment as a benchmark and estimate the percentage of catchment area that would have to be fenced off to reach the same or a lower risk level. Results are evaluated at the regional level using the nine regions defined above.

3. Drainage to permanently reduce soil moisture. For each catchment, we simulate the effect of drainage by varying a parameter in the hydrological component of HELF that represents the catchment-level transmissivity of the soil when saturated to the surface (parameter LnTe in24). Increasing this parameter increases the subsurface contribution to catchment discharge and decreases soil moisture in the catchment. Unlike the implementation of fencing, we cannot assume here that the areas most prone to saturation are drained first: drainage as implemented acts at the catchment scale. The reduction in soil moisture, in turn, decreases habitat suitability for the snail hosts and free-living parasitic stages, and therefore reduces the abundance of infective metacercariae on pasture. Specifically, for each catchment in our domain, we increase this parameter by 1–50% of its calibrated value, using 20 uniformly distributed values, and run HELF with each value while keeping all other model parameters at their calibrated values. We then identify the percentage increase in drainage needed to best match the risk level achieved through treatment and, again, evaluate results at the regional level.
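The three strategies can be sketched as simple post-processing or parameter-scan steps. This is an illustrative sketch, not HELF’s implementation (which is described in the SI). Treatment is represented, as an assumption, by scaling pasture contamination by (1 - efficacy) for 12 weeks (84 days) after each dose. Fencing removes summer metacercariae from the wettest \(TI\) classes first until the treatment benchmark is met. Drainage scans 20 uniformly spaced increases of 1–50% in the transmissivity parameter, with `run_model` a hypothetical stand-in for a full model run.

```python
from datetime import date, timedelta
import numpy as np

# 1. Treatment (assumed representation): scale pasture contamination by
#    (1 - efficacy) for `protection_days` after each treatment date.
def contamination_multiplier(day, treatment_dates, efficacy=0.9, protection_days=84):
    for t in treatment_dates:
        if t <= day < t + timedelta(days=protection_days):
            return 1.0 - efficacy
    return 1.0

# 2. Fencing: zero summer metacercariae class by class, wettest (highest TI) first,
#    until the frequency-weighted catchment risk is at or below the treatment target.
def fenced_area_pct(summer_meta, freqs, target):
    meta = np.asarray(summer_meta, dtype=float)  # per TI class, highest TI first
    f = np.asarray(freqs, dtype=float)           # class area fractions, same order
    for k in range(len(f) + 1):                  # k = number of classes fenced off
        if float(meta[k:] @ f[k:]) <= target:
            return 100.0 * float(f[:k].sum())
    return 100.0                                 # only reached for a negative target

# 3. Drainage: increase the transmissivity parameter by lo-hi % (n values) and keep
#    the increase whose simulated risk is closest to the treatment target.
def best_drainage_pct(run_model, calibrated, target, n=20, lo=1.0, hi=50.0):
    pcts = np.linspace(lo, hi, n)
    risks = np.array([run_model(calibrated * (1.0 + p / 100.0)) for p in pcts])
    return float(pcts[np.argmin(np.abs(risks - target))])

# Usage with toy inputs
schedule = [date(2007, 1, 1), date(2007, 4, 1)]  # twice-yearly doses from the text
days_2007 = [date(2007, 1, 1) + timedelta(days=i) for i in range(365)]
protected = sum(contamination_multiplier(d, schedule) < 1.0 for d in days_2007)
fence = fenced_area_pct([10, 5, 2, 1], [0.1, 0.2, 0.3, 0.4], target=1.5)
drain = best_drainage_pct(lambda p: 10.0 - p, calibrated=2.0, target=7.5)
print(protected, round(fence, 1), round(drain, 2))  # 168 30.0 24.21
```

With the schedule above, 168 days of 2007 fall inside a protection window (1 January to 25 March and 1 April to 23 June), so the two 12-week windows do not overlap.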

Data availability

Underlying datasets are publicly available and referenced within the paper.

References

Eisenberg, J. N. S., Desai, M. A., Levy, K., Bates, S. J., Liang, S., Naumoff, K. & Scott, J. C. Environmental determinants of infectious disease: A framework for tracking causal links and guiding public health research. Environ. Health Perspect. 115(8), 1216–1223. https://doi.org/10.1289/ehp.9806 (2007).

Bertuzzo, E., Azaele, S., Maritan, A., Gatto, M., Rodriguez-Iturbe, I. & Rinaldo, A. On the space-time evolution of a cholera epidemic. Water Resour. Res. 44(1), 1–8. https://doi.org/10.1029/2007WR006211 (2008).

Bomblies, A., Duchemin, J. B. & Eltahir, E. A. B. Hydrology of malaria: Model development and application to a Sahelian village. Water Resour. Res. 44(12), 1–26. https://doi.org/10.1029/2008WR006917 (2008).

Perez-Saez, J., Mande, T., Ceperley, N., Bertuzzo, E., Mari, L., Gatto, M. & Rinaldo, A. Hydrology and density feedbacks control the ecology of intermediate hosts of schistosomiasis across habitats in seasonal climates. Proc. Natl. Acad. Sci. USA 113(23), 6427–6432. https://doi.org/10.1073/pnas.1602251113 (2016).

van Dijk, J., Sargison, N. D., Kenyon, F. & Skuce, P. J. Climate change and infectious disease: Helminthological challenges to farmed ruminants in temperate regions. Animal 4(3), 377–392. https://doi.org/10.1017/s1751731109990991 (2010).

Parham, P. E., Waldock, J., Christophides, G. K., Hemming, D., Agusto, F., Evans, K. J., … Michael, E. Climate, environmental and socio-economic change: Weighing up the balance in vector-borne disease transmission. Philos. Trans. R. Soc. B 370(1665), 20130551. https://doi.org/10.1098/rstb.2013.0551 (2015).

Cable, J., Barber, I., Boag, B., Ellison, A. R., Morgan, E. R., Murray, K., … Booth, M. Global change, parasite transmission and disease control: Lessons from ecology. Philos. Trans. R. Soc. B 372(1719), 20160088. https://doi.org/10.1098/rstb.2016.0088 (2017).

McIntyre, K. M., Setzkorn, C., Hepworth, P. J., Morand, S., Morse, A. P. & Baylis, M. Systematic assessment of the climate sensitivity of important human and domestic animals pathogens in Europe. Sci. Rep. 7(1), 1–10. https://doi.org/10.1038/s41598-017-06948-9 (2017).

Garchitorena, A., Sokolow, S. H., Roche, B., Ngonghala, C. N., Jocque, M., Lund, A., … De Leo, G. A. Disease ecology, health and the environment: A framework to account for ecological and socio-economic drivers in the control of neglected tropical diseases. Philos. Trans. R. Soc. B 372, 20160128. https://doi.org/10.1098/rstb.2016.0128 (2017).

Webster, J. P., Molyneux, D. H., Hotez, P. J. & Fenwick, A. The contribution of mass drug administration to global health: Past, present and future. Philos. Trans. R. Soc. B 369(1645), 20130434. https://doi.org/10.1098/rstb.2013.0434 (2014).

Beesley, N. J., Caminade, C., Charlier, J., Flynn, R. J., Hodgkinson, J. E., Martinez-Moreno, A., … Williams, D. J. L. Fasciola and fasciolosis in ruminants in Europe: Identifying research needs. Transbound. Emerg. Dis. 65, 199–216. https://doi.org/10.1111/tbed.12682 (2018).

Kamaludeen, J., Graham-Brown, J., Stephens, N., Miller, J., Howell, A., Beesley, N. J., … Williams, D. Lack of efficacy of triclabendazole against Fasciola hepatica is present on sheep farms in three regions of England, and Wales. Vet. Rec. 184(16), 502. https://doi.org/10.1136/vr.105209 (2019).

WHO. https://www.who.int/news-room/fact-sheets/detail/antimicrobial-resistance (2019).

Mas-Coma, S., Valero, M. A. & Bargues, M. D. Climate change effects on trematodiases, with emphasis on zoonotic fascioliasis and schistosomiasis. Vet. Parasitol. 163(4), 264–280. https://doi.org/10.1016/j.vetpar.2009.03.024 (2009).

Altizer, S., Ostfeld, R. S., Johnson, P. T. J., Kutz, S. & Harvell, C. D. Climate change and infectious diseases: From evidence to a predictive framework. Science 341(6145), 514–519. https://doi.org/10.1126/science.1239401 (2013).

Siraj, A. S., Santos-Vega, M., Bouma, M. J., Yadeta, D., Ruiz Carrascal, D. & Pascual, M. Altitudinal changes in malaria incidence in highlands of Ethiopia and Colombia. Science 343(6175), 1154–1159. https://doi.org/10.1126/science.1244325 (2014).

Sokolow, S. H., Jones, I. J., Jocque, M., La, D., Cords, O., Knight, A., … De Leo, G. A. Nearly 400 million people are at higher risk of schistosomiasis because dams block the migration of snail-eating river prawns. Philos. Trans. R. Soc. B 372(1722), 20160127. https://doi.org/10.1098/rstb.2016.0127 (2017).

Morgan, E. R., Charlier, J., Hendrickx, G., Biggeri, A., Catalan, D., von Samson-Himmelstjerna, G., … Vercruysse, J. Global change and helminth infections in grazing ruminants in Europe: Impacts, trends and sustainable solutions. Agriculture 3(3), 484–502. https://doi.org/10.3390/agriculture3030484 (2013).

Prüss-Ustün, A., Wolf, J., Corvalan, C., Bos, R. & Neira, M. Preventing Disease Through Healthy Environments: A Global Assessment of the Burden of Disease from Environmental Risks (World Health Organization, 2016).

Eisenberg, J. N. S., Brookhart, M. A., Rice, G., Brown, M. & Colford, J. M. Disease transmission models for public health decision making: Analysis of epidemic and endemic conditions caused by waterborne pathogens. Environ. Health Perspect. 110(8), 783–790. https://doi.org/10.1289/ehp.02110783 (2002).

Lloyd-Smith, J. O., George, D., Pepin, K. M., Pitzer, V. E., Pulliam, J. R. C., Dobson, A. P., … Grenfell, B. T. Epidemic dynamics at the human–animal interface. Science 326(5958), 1362–1367. https://doi.org/10.1126/science.1177345 (2009).

Mellor, J. E., Levy, K., Zimmerman, J., Elliott, M., Bartram, J., Carlton, E., … Nelson, K. Planning for climate change: The need for mechanistic systems-based approaches to study climate change impacts on diarrheal diseases. Sci. Total Environ. 548–549, 82–90. https://doi.org/10.1016/j.scitotenv.2015.12.087 (2016).

Wu, X., Lu, Y., Zhou, S., Chen, L. & Xu, B. Impact of climate change on human infectious diseases: Empirical evidence and human adaptation. Environ. Int. 86, 14–23. https://doi.org/10.1016/j.envint.2015.09.007 (2016).

Beltrame, L., Dunne, T., Vineer, H. R., Walker, J. G., Morgan, E. R., Vickerman, P., … Wagener, T. A mechanistic hydro-epidemiological model of liver fluke risk. J. R. Soc. Interface 15(145), 20180072. https://doi.org/10.1098/rsif.2018.0072 (2018).

Rinaldo, A., Gatto, M. & Rodriguez-Iturbe, I. River networks as ecological corridors: A coherent ecohydrological perspective. Adv. Water Resour. 112, 27–58. https://doi.org/10.1016/j.advwatres.2017.10.005 (2018).

Mahmoud, M., Liu, Y., Hartmann, H., Stewart, S., Wagener, T., Semmens, D., … Winter, L. A formal framework for scenario development in support of environmental decision-making. Environ. Model. Softw. https://doi.org/10.1016/j.envsoft.2008.11.010 (2009).

Wagener, T., Sivapalan, M., Troch, P. A., McGlynn, B. L., Harman, C. J., Gupta, H. V., … Wilson, J. S. The future of hydrology: An evolving science for a changing world. Water Resour. Res. 46, W05301. https://doi.org/10.1029/2009WR008906 (2010).

Liang, S., Seto, E. Y. W., Remais, J. V., Zhong, B., Yang, C., Hubbard, A., … Spear, R. C. Environmental effects on parasitic disease transmission exemplified by schistosomiasis in western China. Proc. Natl. Acad. Sci. USA 104(17), 7110–7115. https://doi.org/10.1073/pnas.0701878104 (2007).

Mari, L., Ciddio, M., Casagrandi, R., Perez-Saez, J., Bertuzzo, E., Rinaldo, A., … Gatto, M. Heterogeneity in schistosomiasis transmission dynamics. J. Theor. Biol. 432, 87–99. https://doi.org/10.1016/j.jtbi.2017.08.015 (2017).

Knubben-Schweizer, G., Rüegg, S., Torgerson, P., Rapsch, C., Grimm, F., Hässig, M., … Braun, U. Control of bovine fasciolosis in dairy cattle in Switzerland with emphasis on pasture management. Vet. J. 186, 188–191. https://doi.org/10.1016/j.tvjl.2009.08.003 (2010).

McCann, C. M., Baylis, M. & Williams, D. J. L. The development of linear regression models using environmental variables to explain the spatial distribution of Fasciola hepatica infection in dairy herds in England and Wales. Int. J. Parasitol. 40(9), 1021–1028. https://doi.org/10.1016/j.ijpara.2010.02.009 (2010).

Selemetas, N., Phelan, P., O’Kiely, P. & de Waal, T. The effects of farm management practices on liver fluke prevalence and the current internal parasite control measures employed on Irish dairy farms. Vet. Parasitol. 207(3–4), 228–240. https://doi.org/10.1016/j.vetpar.2014.12.010 (2015).

Ollerenshaw, C. B. The approach to forecasting the incidence of fascioliasis over England and Wales 1958–1962. Agric. Meteorol. 3(1–2), 35–53. https://doi.org/10.1016/0002-1571(66)90004-5 (1966).

Fox, N. J., White, P. C. L., McClean, C. J., Marion, G., Evans, A. & Hutchings, M. R. Predicting impacts of climate change on Fasciola hepatica risk. PLoS ONE 6(1), e16126. https://doi.org/10.1371/journal.pone.0016126 (2011).

Caminade, C., van Dijk, J., Baylis, M. & Williams, D. Modelling recent and future climatic suitability for fasciolosis in Europe. Geospat. Health 9(2), 301–308. https://doi.org/10.4081/gh.2015.352 (2015).

Lo Iacono, G., Armstrong, B., Fleming, L. E., Elson, R., Kovats, S., Vardoulakis, S. & Nichols, G. L. Challenges in developing methods for quantifying the effects of weather and climate on water-associated diseases: A systematic review. PLoS Negl. Trop. Dis. 11(6), e0005659. https://doi.org/10.1371/journal.pntd.0005659 (2017).

Lo, N. C., Gurarie, D., Yoon, N., Coulibaly, J. T., Bendavid, E., Andrews, J. R. & King, C. H. Impact and cost-effectiveness of snail control to achieve disease control targets for schistosomiasis. Proc. Natl. Acad. Sci. USA 115(4), E584–E591. https://doi.org/10.1073/pnas.1708729114 (2018).

Williams, D. J. L., Howell, A., Graham-Brown, J., Kamaludeen, J., & Smith, D. Liver fluke—An overview for practitioners. Cattle Pract. 22 (2014).

Pritchard, G. C., Forbes, A. B., Williams, D. J. L., Salimi-Bejestani, M. R. & Daniel, R. G. Emergence of fasciolosis in cattle in East Anglia. Vet. Rec. 157, 578–582. https://doi.org/10.1136/vr.157.19.578 (2005).

Kenyon, F., Sargison, N. D., Skuce, P. J. & Jackson, F. Sheep helminth parasitic disease in south eastern Scotland arising as a possible consequence of climate change. Vet. Parasitol. 163(4), 293–297. https://doi.org/10.1016/j.vetpar.2009.03.027 (2009).

McCann, C. M., Baylis, M. & Williams, D. J. L. Seroprevalence and spatial distribution of Fasciola hepatica-infected dairy herds in England and Wales. Vet. Rec. 166(20), 612–617. https://doi.org/10.1136/vr.b4836 (2010).

Ollerenshaw, C. B. & Rowlands, W. T. A method of forecasting the incidence of fascioliasis in Anglesey. Vet. Rec. 71(29), 591–598 (1959).

Fairweather, I. & Boray, J. C. Fasciolicides: Efficacy, actions, resistance and its management. Vet. J. 158, 81–112 (1999).

Morgan, E. R., Hosking, B. C., Burston, S., Carder, K. M., Hyslop, A. C., Pritchard, L. J., … Coles, G. C. A survey of helminth control practices on sheep farms in Great Britain and Ireland. Vet. J. 192(3), 390–397. https://doi.org/10.1016/j.tvjl.2011.08.004 (2012).

Mitchell, G. Update on fasciolosis in cattle and sheep. In Pract. 24(7), 378–385 (2002).

Skuce, P. J. & Zadoks, R. N. Liver fluke: A growing threat to UK livestock production. Cattle Pract. 21(2), 138–149 (2013).

Scotland’s Rural College (SRUC). Technical Note 677: Treatment and Control of Liver Fluke (2016).

National Animal Disease Information System (NADIS). https://www.nadis.org.uk/parasite-forecast.aspx (2019).

Markus, S. B., Bailey, D. W. & Jensen, D. Comparison of electric fence and a simulated fenceless control system on cattle movements. Livestock Sci. 170, 203–209. https://doi.org/10.1016/j.livsci.2014.10.011 (2014).

Marini, D., Llewellyn, R., Belson, S. & Lee, C. Controlling within-field sheep movement using virtual fencing. Animals 8(3), 31. https://doi.org/10.3390/ani8030031 (2018).

Marshall, E. J. P., Wade, P. M. & Clare, P. Land drainage channels in England and Wales. Geogr. J. 144(2), 254–263 (1978).

Robinson, M. & Armstrong, A. C. The extent of agricultural field drainage in England and Wales, 1971–80. Trans. Inst. Brit. Geogr. 13(1), 19–28 (1988).

Robinson, E. L., Blyth, E. M., Clark, D. B., Finch, J. & Rudd, A. C. Trends in atmospheric evaporative demand in Great Britain using high-resolution meteorological data. Hydrol. Earth Syst. Sci. 21(2), 1189–1224. https://doi.org/10.5194/hess-21-1189-2017 (2017).

National River Flow Archive (NRFA). NERC CEH. https://nrfa.ceh.ac.uk/ (2019).

Intermap Technologies. NEXTMap British Digital Terrain 50m resolution (DTM10) Model Data by Intermap, NERC Earth Observation Data Centre. http://catalogue.ceda.ac.uk/uuid/f5d41db1170f41819497d15dd8052ad2 (2009).

Coxon, G., Freer, J., Lane, R., Dunne, T., Howden, N. J. K., Quinn, N., … Woods, R. DECIPHeR v1: Dynamic fluxEs and ConnectIvity for Predictions of HydRology. Geosci. Model Dev. 12, 2285–2306. https://doi.org/10.5194/gmd-12-2285-2019 (2019).

Beven, K., Lamb, R., Quinn, P., Romanowicz, R. & Freer, J. TOPMODEL. In Computer Models of Watershed Hydrology (ed. Singh, V. P.) 627–668 (Water Resource Publications, 1995).

Vetter, T., Huang, S., Aich, V., Yang, T., Wang, X., Krysanova, V. & Hattermann, F. Multi-model climate impact assessment and intercomparison for three large-scale river basins on three continents. Earth Syst. Dyn. 6(1), 17–43. https://doi.org/10.5194/esd-6-17-2015 (2015).

Shen, C., Niu, J. & Phanikumar, M. S. Evaluating controls on coupled hydrologic and vegetation dynamics in a humid continental climate watershed using a subsurface-land surface processes model. Water Resour. Res. 49(5), 2552–2572. https://doi.org/10.1002/wrcr.20189 (2013).

MetOffice. https://www.metoffice.gov.uk/research/climate/maps-and-data (2017).

Acknowledgements

This work is funded as part of the Water Informatics Science and Engineering Centre for Doctoral Training (WISE CDT) under EPSRC grant no. EP/L016214/1. Partial support for T.W. was provided by a Royal Society Wolfson Research Merit Award. H.R.V. was funded by the BBSRC LoLa Consortium, “BUG: Building Upon the Genome” (Project reference: BB/M003949/1) and the University of Liverpool’s Institute of Infection and Global Health.

Author information

Contributions

L.B. and T.W. designed and performed most of the research; H.R.V., J.G.W., E.R.M. and P.V. helped design disease control strategies; L.B., H.R.V., J.G.W., E.R.M., P.V. and T.W. analyzed results; L.B. wrote the paper with contributions from all other authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Beltrame, L., Rose Vineer, H., Walker, J.G. et al. Discovering environmental management opportunities for infectious disease control. Sci Rep 11, 6442 (2021). https://doi.org/10.1038/s41598-021-85250-1

DOI: https://doi.org/10.1038/s41598-021-85250-1
