Abstract

The perception of our body in space is flexible and manipulable. The predictive brain hypothesis explains this malleability as a consequence of the interplay between incoming sensory information and our body expectations. However, given the interaction between perception and action, we might also expect that actions would arise due to prediction errors, especially in conflicting situations. Here we describe a computational model, based on the free-energy principle, that forecasts involuntary movements in sensorimotor conflicts. We experimentally confirm those predictions in humans using a virtual reality rubber-hand illusion. Participants generated movements (forces) towards the virtual hand, regardless of its location with respect to the real arm, with little to no forces produced when the virtual hand overlaid their physical hand. The congruency of our model predictions and human observations indicates that the brain-body is generating actions to reduce the prediction error between the expected arm location and the new visual arm. This observed unconscious mechanism is an empirical validation of the perception–action duality in body adaptation to uncertain situations and evidence of the active component of predictive processing.


Introduction

Self-body perception is a malleable complex construct, crucial to our interaction with the environment, that emerges from the integration of multisensory stimuli that the brain receives from the physical body1,2,3. The different sensory channels continuously stream information about the state (physiological) and the configuration (postural and in relation to the environment) of the body, and this information needs to be processed and integrated online in order to assure a successful interaction with the dynamic environment in which we live and act4. Experimentally induced bodily illusions provide a compelling demonstration of such flexibility, showing how it is possible to transiently induce aberrant bodily experiences—distorted body proportion and impossible postures5,6, illusory movements7, and the embodiment of external objects8 —by manipulating ad-hoc the spatiotemporal correlations of bodily stimuli from different sensory channels.

Hence, one key approach to the understanding of self-body perception is the investigation of body ownership illusions (BOIs)8, where external bodily shaped objects like rubber (or virtual) hands are processed as part of one’s own body. These illusions show how the brain can flexibly accommodate quite coarse sensory conflicts while preserving the integrity of the body percept. In the classic rubber-hand illusion (RHI) experiment9, a rubber hand is placed next to the real hidden hand and the illusion is elicited by stimulating both hands with spatiotemporally congruent trains of visuotactile stroking. Once the illusion is established, visual stimuli from the rubber hand (e.g. strokes from a paintbrush10 or unexpected threatening events11) are processed as if “coming from” the real hand that keeps streaming proprioceptive and tactile sensations. An important element of body ownership illusions is indeed the visuo-proprioceptive binding that is established between visual stimuli from the fake hand and somatosensory stimuli from the physical one12,13. Crucially, the illusion is sustained even if the information about the spatial location of the hand provided by proprioception (real hand) and vision (rubber hand) is conflicting9,14; and experimental evidence shows how participants undergoing the illusion recalibrate the proprioceptive and visual encoding of the hand location towards the rubber hand15. This effect, known as proprioceptive drift, has been interpreted as a way to “explain away” the sensory conflict and can be neatly accounted for within predictive processing computational frameworks as the result of prediction error minimization16.

Effects akin to the proprioceptive drift observed in the classic RHI have been consistently reported across different experimental studies whenever the real and the fake limbs were spatially misaligned13,17,18,19. For example, during a full-body ownership illusion experienced over a virtual body initially collocated with the real one, participants previously instructed to be still showed an illusory perceived change in their posture after seeing their embodied avatar slowly moving into a new posture, even in the absence of actual movement13. In line with this, in a classic RHI experiment in which participants were explicitly instructed to keep their posture and not move, Asai and colleagues showed how participants undergoing the ownership illusion tend to unintentionally apply a force in the direction of the rubber hand20. Unfortunately, the experimental design was not able to fully disambiguate whether the observed forces were indeed active strategies for sensory conflict compensation or the result of body posture corrections due to a body midline effect15,21,22, as the only location tested was with the rubber hand placed close to the body midline. Besides, automatic and unconscious motor adjustments to reduce conflict between the vision of the arm and proprioception were also reported23. Still, if confirmed, this form of automatic compensatory movement can be regarded as an active strategy for suppressing the sensory conflict that may arise in bodily illusions, an alternative to “passive” perceptual recalibrations such as the proprioceptive drift.

Similar forms of active compensatory movements have also been observed during intentional actions in participants controlling the movements of an embodied virtual avatar. A clear example is the “self-avatar follower effect”19, a motor behavioral pattern observed in immersive virtual reality when users embody a virtual avatar and control it with a many-to-one visuomotor mapping. In particular, participants could control the extension and bending of the virtual arm, while the virtual shoulder rotation was controlled by a Virtual Reality (VR) system independently of the real shoulder configuration (so that the two arms were not spatially overlapping). Under this condition, when asked to perform a sequence of reaching actions, participants tended to integrate within the correct task execution an additional motor component irrelevant to the task, but functional to bringing the real arm closer to, or overlapping with, the virtual one. This motor component cannot be directly explained by optimal control theories of movement24,25 but finds instead a natural interpretation in the active inference framework26 as an active strategy for suppressing sensory prediction errors19. We hypothesize that the brain resolves the conflicting sensory information about the body posture by exerting involuntary active strategies that physically reduce the conflict, following a similar principle as the proprioceptive drift.

The aim of the current study is to provide a theoretical account for the active component of the RHI described above, consisting of an implicit (not intentional) attempt to reduce the visuo-proprioceptive conflict arising from the misalignment between the real and the embodied fake hand. We cast the problem in the normative framework of active inference27, implementing a model able to predict the force that an agent will produce at the level of the arm when exposed to a classic RHI configuration, where a spatial mismatch between the real and the fake hand is present and actual movement of the physical hand is “locked”. We further conducted an experimental study based on a customized setup integrating a robotic manipulandum with a VR system programmed to induce an immersive virtual version of the RHI28. The setup allowed us to systematically measure the generated force using a precise force-torque sensor. Force assessments can then be used for formally testing the hypothesis that the active effects observed during illusory ownership (e.g. the attempt to move the hand towards the embodied misplaced hand) are due to the minimization of the prediction error.

Active inference29 (AIF), a mathematical framework inspired by the Predictive (Bayesian) brain30 that accounts for both perception and action, has been successfully applied, for instance, to model goal-driven behavior in organisms31 and the physiology of dopamine32. Moreover, AIF accounts of intentional reaching behavior in humans have been proposed27 and investigated on humanoid robots33,34. Relevant for this work, it was shown how tuning the relative influence of the sensor modalities affected the motoric response in a hand-target phase matching in the presence of visuo-proprioceptive conflict35. Perceptual illusions in humans have been also investigated under this paradigm, such as the force-matching illusion36. In a previous study, we synthetically replicated the proprioceptive drift observed in the RHI16, supporting the general view that this perceptual recalibration results from the minimization of the prediction error produced by the visuo-proprioceptive spatial mismatch between the real and the rubber hands once the illusion is elicited. Here we extend this formulation to the active inference framework and show how the extended implementation can reproduce the experimentally observed forces applied by the hidden real arm in the direction of the rubber/virtual hand.

Results

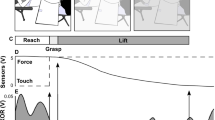

We first sketch the main features of the computational model and approach (Fig. 1A) and show the model predictions when simulating the virtual hand illusion (VHI). Model predictions are then compared with observations from a dedicated experiment (Fig. 1C) in which the VHI was elicited as in classical paradigms (via visuo-tactile stimulation, VT) and the force applied by the hidden arm during the illusion was measured. Although previous studies have shown that illusory ownership over a virtual hand spatially aligned with the real hand can be triggered also without the need for VT stimulation12, we opted for the classical paradigm because we needed the illusion to be sustained also in the presence of spatial conflict. Several studies have indeed shown how in these cases, synchronous VT stimulations trigger significantly stronger illusions with respect to conditions with any tactile stimuli37,38. In our study we need to retain a spatial conflict to facilitate the appearance of the proprioceptive drift, which in turn is the trigger for the active compensation strategies under testing. Results from simulations and experimental assessments were compared for different spatial configurations of the real and virtual hand (Fig. 1B), adopting different degrees of VT congruency (synchronous vs asynchronous) to control for the role of the ownership illusion. Altogether our results show that our AIF model can account for both passive (perceptual drift) and active (attempt to move the hand towards the embodied misplaced hand) effects observed in the laboratory.

Predictive brain approach and VHI experimental setup. (A) Schematic of the perceptual drifts (visual, proprioceptive) and the active component, and its relation to prediction minimization. Both the proprioceptive and visual drifts are produced by the same process of body estimation under visuo-proprioceptive mismatch and the active drift (forces towards the virtual hand) is a consequence of proprioception error minimization through the reflex arc pathway. (B) VHI conditions. At each trial the virtual hand was placed in one of the three different locations: Left (− 15 cm), Center (0 cm) and Right (+ 15 cm) with respect to the real hand location, i.e., the center corresponds to the real hand position. The real hand was placed 30 cm away from the body midline. (C) The perceptual stimulation was performed using immersive VR and a coin vibrator for the tactile stimulation. Lateral force measurements were recorded using a 6DOF force-torque sensor on the robotic manipulandum vBot.

Computational model predictions

We model perception and action as an inference process using an AIF27,39 based algorithm, sketched in Fig. 2A—see the details in “Methods”. During stimulation, the real hand location is hidden (grey hand) and thus, only proprioceptive cues provide information about the current body pose. The virtual hand is treated as a possible source of information and integrated into the arm posture estimation as a visual cue weighted by causality, which in turn was considered as a proxy for the ownership illusion and modulated as a function of VT synchrony. According to the model, the force at the level of the arm arises as a consequence of the prediction error generated in the multisensory integration process of the visual arm location input and the predicted arm location from the estimated body joint angles.

Computational model predictions. (A) Arm model description with one degree-of-freedom (DOF) and the VR hand as the visual input. Using the proprioceptive input \({s}_{p}\) (joint angle) and the prior information of the body state \(p(\mu )\) the model predicts the expected location of the hand \({g}_{v}\left(\mu \right)\). However, the visual information (virtual hand, \({s}_{v}\)), manipulated to appear in a different location, produces a sensory conflict: my hand is not at the predicted location. This produces an error that is propagated and generates the perceptual drifts and the active component. According to our hypothesis, the torque direction corresponds to reducing the prediction error: expected hand location minus the VR location. (B) Relation between the direction (sign) of the predicted force and the virtual hand location. Our model predicts a trend similar to the one shown by the purple line, e.g., negative force in the left condition implies that the attempted force is towards the virtual hand. (C) Mean and standard deviation of the predicted force with the active inference model for Left, Center and Right conditions. (D) Model predicted mean force for the synchronous condition. (E) Model predicted mean force for an ideal asynchronous condition.
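The mechanism described above can be illustrated with a minimal one-DOF sketch. It is not the implementation used in the study (see “Methods” for that); it only shows how a visual prediction error can shift the posture belief and, through the proprioceptive error, yield a force proxy directed towards the virtual hand. Several choices here are assumptions made for illustration: the visual mapping \({g}_{v}\left(\mu \right)=L\mathrm{sin}\left(\mu \right)\), the way the causality weight `kappa` scales the visual precision, the omission of the prior term, the force proxy `gain * pi_p * (mu - s_p)`, and all numerical values.

```python
import math

def simulate_force(s_v, q=0.0, length=0.4, pi_p=1.0, pi_v=1.0,
                   kappa=1.0, gain=1.0, dt=0.01, steps=5000):
    """Return (mu, force) for a locked one-DOF arm.

    s_v    : lateral position of the virtual hand (m), visual input
    q      : real joint angle (rad); the arm is locked, so it never changes
    length : limb length (m), hypothetical value
    pi_p   : proprioceptive precision (inverse variance), hypothetical
    pi_v   : visual precision, hypothetical
    kappa  : causality weight of the visual cue in [0, 1], hypothetical
    """
    s_p = q   # proprioceptive input equals the real joint angle
    mu = q    # the belief starts at the real posture
    for _ in range(steps):
        e_p = s_p - mu                      # proprioceptive prediction error
        e_v = s_v - length * math.sin(mu)   # visual prediction error
        dg = length * math.cos(mu)          # derivative of g_v with respect to mu
        # Perception: gradient descent of the belief on both weighted errors
        mu += dt * (pi_p * e_p + kappa * pi_v * dg * e_v)
    # Action proxy: push the sensed posture towards the believed posture.
    # The arm cannot move, so the push shows up as a force (arbitrary units).
    force = gain * pi_p * (mu - s_p)
    return mu, force

for name, offset in (("Left", -0.15), ("Center", 0.0), ("Right", 0.15)):
    mu, force = simulate_force(offset)
    print(f"{name:6s} belief={mu:+.4f} rad  force proxy={force:+.4f}")
```

Under these assumptions the sign of the force proxy follows the side of the virtual hand, it vanishes when the virtual hand overlays the real one, and it vanishes when `kappa` is zero.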

The simulated VHI experimental design had three different visual conditions (described in Fig. 1B), corresponding to three different locations of the virtual hand: Left, Right and Center (i.e. in the same location as the real hand). The output of the model is the predicted lateral force, being positive when it is towards the body midline (torso) and negative when it points away from the body. Figure 2B depicts the expected outcome depending on the force sign and the virtual hand location condition. In the left condition, a negative force indicates that the predicted force is towards the virtual hand and a positive one that the hand would move away from the virtual hand. In the right condition, this is inverted: a negative force implies that the attempted force would make the real hand go away from the virtual hand and a positive force indicates a force towards the virtual hand. In both left and right conditions zero force indicates no effect of the virtual hand location. Finally, in the center condition positive and negative forces would imply movement away from the virtual hand. In the presence of our proposed active strategies, we should see a trend similar to the one shown by the purple line.
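The sign convention above can be written down as a small lookup, which may help when reading the force plots. The function name, the string labels and the optional tolerance `tol` are hypothetical helpers introduced here for illustration; only the mapping between condition, force sign and direction comes from the text.

```python
def force_direction(condition, force, tol=0.0):
    """Classify a lateral force relative to the virtual hand.

    condition : "Left", "Center" or "Right" (virtual hand location)
    force     : lateral force, positive towards the body midline
    tol       : forces with |force| <= tol are treated as zero
    """
    if condition not in ("Left", "Center", "Right"):
        raise ValueError(f"unknown condition: {condition!r}")
    if abs(force) <= tol:
        return "no effect"
    if condition == "Center":
        # The virtual hand overlays the real one: any force moves away from it
        return "away"
    # Negative forces point to the Left hand, positive ones to the Right hand
    toward_sign = -1 if condition == "Left" else 1
    return "towards" if force * toward_sign > 0 else "away"

print(force_direction("Left", -0.04))   # towards
print(force_direction("Right", -0.04))  # away
```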

We simulated 20 participants (10 synchronous and 10 asynchronous). Participants' variability was modeled by assigning different proprioceptive weighting (i.e. the precision or inverse variance of the proprioceptive cue) and randomizing the length of the limb. The results, summarized in Fig. 2C-E, predict lateral forces in the direction of the virtual hand in all conditions. Figure 2C shows the mean force for all simulated participants split by the location of the virtual hand (Left in blue, Center in green and Right in red), indicating three differentiated patterns. As expected, in the Center condition, characterized by congruent visuo-proprioceptive information (i.e. no conflict) the model is compatible with no force applied (mean is not significantly different from zero). Conversely, when a spatial conflict is present, the model forecasts an action towards the artificial hand to correct the error of the predicted arm location, which we should observe in human participants. Furthermore, the model in the asynchronous condition predicts attenuated forces (Fig. 2D vs Fig. 2E), as the asynchronous condition was associated with a suppression of the ownership illusion and therefore a lower level of reciprocal causality between visual and proprioceptive stimuli.

Active RHI experiment with human participants

To validate the model predictions, we investigated the exerted forces of fourteen human participants in the VHI experiment presented in Fig. 1. Participants wore an immersive virtual headset through which they could see a virtual body placed in an anatomically plausible position with respect to their visual perspective. Analogously to the computational model experiment, participants were presented with three different conditions, corresponding to three different locations of the virtual hand (Fig. 1B): Left, Right and Center. Center corresponds to the same location of their real hand. Participants were instructed to keep their arm still; the real arm was placed on a locked manipulandum equipped with a 6DOF force-torque sensor (Fig. 1C) so that the implicit (non-intentional) horizontal forces applied by participants could be measured (sensitivity: 1/24 Newtons). As we were only interested in the active component of the illusory experience, to obtain enough force sample points for statistical robustness (120 samples per individual), no other typical measures of the RHI such as proprioceptive drift or subjective assessments were taken. However, to complement this analysis, we did a preliminary study with eight participants to assess the perceptual drift and the subjective level of body-ownership from the same experimental setup—See supplementary information.

Lateral forces analysis

We first describe the registered lateral forces with respect to time during stimulation averaged for all participants (Fig. 3) and second, we present the mean forces for each condition averaged for the stimulation interval 10 s–15 s (Fig. 4). Figure 3 shows the lateral force profile during the stimulation period of 40 s, averaged for all participants and trials. Although both synchronous and asynchronous conditions had clear right vs left force patterns, the variability was higher in the asynchronous case producing noisier force profiles.

Average participant lateral force exerted during stimulation for the synchronous and asynchronous conditions. The profile is shown separately for the three experimental conditions, Left (blue), Center (green) and Right (red), with solid lines indicating the mean value across participants and the shaded region representing the standard deviation.

Mean forces applied during stimulation for the different virtual hand location conditions: Left (blue), Center (green), Right (red). The center condition corresponds to the location of the real hand. (A) Mean and standard deviation of the force applied by all subjects for the three different virtual hand locations. (B) Mean force in the synchronous condition, where tactile (vibrator) and visual stimulation (ball hitting the hand) events were concurrent (i.e. both occurring within less than 100 ms). (C) Mean force in the asynchronous condition, where the tactile event was generated randomly along the trajectory of the ball. (D) Mean force registered during stimulation for each participant. Three consecutive points on the x-axis (L-blue, C-green, R-red) describe one subject. (E) Mean force histogram for the three virtual hand location conditions (all subjects).

Figure 4 shows the average force registered during the 10 s–15 s interval of the stimulation phase, together with its standard deviation, for all trials and participants in the Left (blue), Center (green) and Right (red) conditions. The marker indicates the mean force and the line the standard deviation. Mean forces in all conditions (Fig. 4A-C) showed differentiated directional force patterns towards the virtual hand, in line with the hypothesized trend (purple line in Fig. 2B). Figure 4D shows the mean force for each subject, indicating the variability of the force gains, and Fig. 4E shows the force histogram for all subjects split by VR arm location condition. Furthermore, the finding of non-null forces, comparable in intensity, in both left and right directions falsifies the hypothesis of body posture correction or body midline effect.

The rubber-hand illusion is active

Our study shows involuntary actions towards the virtual hand during the stimulation phase, despite the inhibitory control and the passive nature of the experiment (participants were instructed not to move). First, we tested the dependence of the applied force, as measured across all trials and participants, on the experimental factors: the virtual hand location, the mode of visuo-tactile stimulation and their interaction. For this, we ran a mixed-effect repeated-measures ANOVA with virtual hand location (3 levels) as a within-participants factor, VT stimulation modality (synchronous vs asynchronous) as a between-participants factor, and including the possible effect of their interaction. We found a significant main effect of the location of the virtual hand (F2,1674 = 16.05, p = 1.2 × 10⁻⁷). The VT stimulation mode was instead not significant, either as a main factor (F1,1674 = 1.77, p = 0.18) or in interaction with the virtual hand’s location (F2,1674 = 0.18, p = 0.83). We next ran a post-hoc analysis to test our specific hypothesis on the origin of the force dependence on the virtual hand location, i.e., a t-test of the aggregated data across trial repetitions for each participant, taking into account possible inter-individual differences. First, we validated the hypothesis that in the absence of spatial conflict (Center condition) there was no action, i.e., the forces are compatible with a zero mean distribution. A one-sample two-tailed t-test did not reject a zero mean for forces in the Center condition (t1,13 = − 1.33, p = 0.90). Second, we tested the hypothesis that in the lateral conditions (Left and Right) forces are different from zero and in the direction of the virtual hand. For this, we ran one-tailed paired t-tests contrasting forces in each of the lateral conditions (Right and Left) with those measured in the Center condition. In both cases, our hypothesis is supported by the data. For the Right condition the average force is F = 0.036 ± 0.0009 N, significantly larger (positive) than forces in the Center condition (t1,13 = 1.84, p = 0.041). For the Left condition the average force is F = − 0.039 ± 0.0009 N, significantly smaller (negative) than forces in the Center condition (t1,13 = − 2.00, p = 0.031).
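The post-hoc tests operate on one aggregated value per participant and condition. As a hedged illustration of the statistics involved, and not a reproduction of the analysis pipeline used in the study, the sketch below computes the one-sample and paired t statistics with the Python standard library. The function names are hypothetical, and p-values are deliberately left out because they require the Student t distribution, which a statistics package (for instance `scipy.stats.ttest_1samp` and `scipy.stats.ttest_rel`) would provide.

```python
import math
import statistics

def one_sample_t(values, mu0=0.0):
    """Return (t, df) for testing whether the mean of `values` equals mu0."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    # Standard error of the mean from the sample standard deviation
    sem = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - mu0) / sem, n - 1

def paired_t(condition_a, condition_b):
    """Return (t, df) for the paired difference condition_a - condition_b.

    Each list holds one mean force per participant, in the same order.
    """
    if len(condition_a) != len(condition_b):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    # A paired test is a one-sample test on the per-participant differences
    return one_sample_t(diffs, 0.0)
```

With per-participant mean forces, `one_sample_t(center)` corresponds to the Center test against zero, while `paired_t(right, center)` and `paired_t(left, center)` correspond to the lateral contrasts; the one-tailed decision then depends on the sign of t.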

The fact that the ANOVA revealed no effects of the VT stimulation modality indicates that the modulations of the force by the virtual hand location are similar in both synchronous and asynchronous conditions (Fig. 4B,C).

Model predictions versus human observations

Figure 5 shows the comparison between the mean forces registered in the human experiment and the ones predicted by the model. The model was able to predict the lateral forces exerted by the participants in the virtual rubber-hand illusion, i.e. for the synchronous group, for the left, center and right conditions. The directions were congruent in all location conditions. In both the left and the right conditions, forces were directed towards the virtual hand. In the center condition, no lateral forces are present. Statistical differences were only found between location conditions when comparing our model predicted forces with the human data using a two-sample t test (p < 0.05). A mismatch in the strength of the effect was found in the asynchronous condition. While the computational model predicted an attenuated response, in the human experiment similar gains were found in the synchronous and asynchronous conditions. This is also reflected in the statistical difference in the mean of the Left-Asynchronous condition between the model and the human data. This result might be because the VT stimulation adopted in our asynchronous condition was not able to suppress the causal link between the visual input and the body posture inference as intended (see “Discussion”). To validate this plausible interpretation of our experimental findings we performed additional simulations.

Comparison between the model predictions and human behavior (A) Mean force comparison between the human participants and the simulated ones with the active inference model for Left, Center and Right conditions. The continuous line corresponds to the human data and the dashed line with a lighter color to the model predictions. (B) Force comparison for the synchronous condition. (C) Force comparison for the asynchronous condition.

Visuo-tactile synchrony evaluation

We further studied the influence of the VT synchrony, taken as a proxy for the level of illusory ownership, on the predicted force. We evaluated the proposed computational model under different degrees of visuo-tactile synchronicity to, first, analyze whether the appearance of forces in the human experiment asynchronous condition may be due to the immersive setting and the formed implicit causal relation between the ball and the hand even using pseudo-random vibration intervals; and second, to assess the influence of the causal parameter \(\kappa\) in the visual term of the model.

In the classic RHI paradigm, the degree of VT temporal congruency (i.e. the relative timing of events) is known to modulate the illusory experience, and asynchronous conditions are typically adopted to implement control conditions where the illusion is not elicited9. However, it has been shown that some degree of asynchrony can be tolerated12,37 (e.g. delays up to about 300 ms40) without breaking the illusion. Furthermore, it has been shown that when experiencing an ownership illusion, the sensitivity threshold for detecting bodily-related VT asynchrony is dampened41. Figure 6A shows two sequences of synthetically generated VT events depending on the random strategy used. The visual event refers to the ball touching the hand and the tactile event to the vibration. In practice, in the real experiment, the randomness of the tactile event along the ball trajectory may yield undesired consequences, where the participant assigns causality to both stimuli. This is better captured by the weak asynchronous generation. Altogether, this experimental evidence highlights how the degree of VT asynchrony cannot be mapped one-to-one to a given degree of illusory ownership, which in turn enters our model via the causal link parameter \(\upkappa\). Thus, to explore the effect of different degrees of illusory ownership on the predicted force, we simulated ten participants varying the causality parameter \(\upkappa\), which depends on the synchrony of the events (see “Methods”), and compared the resulting forces to the ideal asynchronous condition (i.e. the one implying no causal link between visual and tactile stimuli, \(\upkappa = 0\)). Hence, a high \(\upkappa\) would imply that two events are likely to come from the same source. Figure 6B shows the mean forces and standard deviation of the simulated participants for the ideal asynchronous condition compared with those obtained when fixing the causality parameter to 0.3, 0.6 and 0.9.
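The point about random tactile timing can be made concrete with a small synthetic example. The sketch below is not the stimulus generator used in the experiment: the function names, the ball cycle duration `period` and the uniform sampling scheme are assumptions chosen for illustration. Only the 100 ms bound for synchronous events and the tolerance of about 300 ms come from the text.

```python
import random

def tactile_delays(n_events, mode, period=2.0, seed=0):
    """Return absolute visuo-tactile delays (s) for n_events ball contacts.

    mode   : "sync"  -> vibration within 100 ms of the visual contact
             "async" -> vibration at a uniformly random time in the ball cycle
    period : duration of one ball cycle in seconds (hypothetical value)
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    if mode == "sync":
        return [rng.uniform(0.0, 0.1) for _ in range(n_events)]
    if mode == "async":
        half = period / 2.0
        return [abs(rng.uniform(-half, half)) for _ in range(n_events)]
    raise ValueError(f"unknown mode: {mode!r}")

def fraction_within(delays, window=0.3):
    """Fraction of events whose delay falls inside the tolerance window (s)."""
    return sum(d <= window for d in delays) / len(delays)

sync = tactile_delays(10000, "sync")
asyn = tactile_delays(10000, "async")
print(f"sync  events within 300 ms: {fraction_within(sync):.2f}")
print(f"async events within 300 ms: {fraction_within(asyn):.2f}")
```

Under these assumptions a sizeable share of nominally asynchronous events still falls inside the tolerated window, which is one way a participant could come to assign a common cause to the two stimuli.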

Visuo-tactile stimulation synchrony study. (A) Synthetic visual and tactile events in the asynchronous condition depending on the random seed used to generate the vibrations. Y-axis defines how far in time the two events happen. (B) Average and standard deviation of the simulated force as obtained for 10 virtual participants each characterized by a different value of the causality parameter \(\upkappa\), which depends on the synchronicity of the visual and tactile events.
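To show why a larger \(\upkappa\) is expected to produce a larger force, the sketch below evaluates a closed-form steady state of a simplified one-DOF model. It is an illustration under stated assumptions rather than the model of “Methods”: the real joint angle is taken as zero, the visual mapping is linearized for small angles as \(L\mu\), the prior term is dropped, \(\upkappa\) multiplies the visual precision, and the force proxy is the precision-weighted proprioceptive error. Setting the belief update \({\pi }_{p}\left(0-\mu \right)+\upkappa {\pi }_{v}L\left({s}_{v}-L\mu \right)\) to zero gives \({\mu }^{*}=\upkappa {\pi }_{v}L{s}_{v}/\left({\pi }_{p}+\upkappa {\pi }_{v}{L}^{2}\right)\). All names and numerical values are hypothetical.

```python
def steady_state_force(kappa, s_v, length=0.4, pi_p=1.0, pi_v=1.0):
    """Force proxy (arbitrary units) at the steady state of the linearized model.

    kappa  : causality weight of the visual cue (0 = ideal asynchronous)
    s_v    : lateral position of the virtual hand (m)
    length : limb length (m), hypothetical
    pi_p   : proprioceptive precision, hypothetical
    pi_v   : visual precision, hypothetical
    """
    # Belief that balances the proprioceptive and the weighted visual error
    mu_star = kappa * pi_v * length * s_v / (pi_p + kappa * pi_v * length ** 2)
    # The real angle is zero, so the proprioceptive error equals mu_star
    return pi_p * mu_star

for kappa in (0.0, 0.3, 0.6, 0.9):
    right = steady_state_force(kappa, s_v=0.15)
    left = steady_state_force(kappa, s_v=-0.15)
    print(f"kappa={kappa:.1f}  left={left:+.4f}  right={right:+.4f}")
```

In this simplified form the force proxy is zero for \(\upkappa = 0\) and grows monotonically with \(\upkappa\), with opposite signs for the two lateral conditions.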

Therefore, one possible reason for observing forces in the asynchronous condition is that participants developed an implicit causal relation between the virtual arm and their own body even though the vibration was delivered at random times along the ball trajectory.

Discussion

We presented a computational model based on active inference that can predict the active component of the rubber-hand illusion, i.e. a tendency to generate involuntary forces towards the embodied virtual hand, observed in previous experiments and replicated here in more detail and with more factors controlled (under diverse configurations). Both model simulations and experimental results provide evidence for an active component of the RHI, which because of its static configuration has been overlooked for a long time. This evidence supports the basic interdependence of perception and action.

The present study suggests that humans may move their body to adjust their expected location with respect to other (visual) sensory inputs, which corresponds to reducing the prediction error. This means that the brain adapts to conflicting or uncertain information from the senses by unconsciously acting in the world. We speculate that the proposed theoretical framework can be useful for applications, such as rehabilitation, prosthetics, gaming, etc.

Previous computational models have focused on the perceptual effects of the RHI. For instance, the Bayesian causal model was able to predict the proprioceptive drift by fitting the likelihood to experimental data in humans42 and macaques43. Our previous predictive coding model16 was able not only to replicate the proprioceptive drift but also to show the potential mechanism and the dynamics behind the perceptual effect. The model presented here extends this approach with the inclusion of an active component. Our model, supported by the AIF mathematical framework, can account at the same time for three observed effects in the rubber-hand illusion: proprioceptive9 and visual15 drifts, and the active component which is the main focus of our study. The perceptual drifts, i.e., proprioceptive and visual, result from a perceptual estimate based on the integration of the two conflicting sensory information, so can be regarded as perceptual biases. The active component or drift (lateral forces) instead may emerge by the active proprioception error minimization through the reflex arc pathway.

Although the free energy principle26 (i.e. the basis behind AIF) has been widely proposed to account for several brain processes, such as perception and action27, interoception44 or self-recognition45,46,47, it has been difficult to find behavioral validation for its active component. The active inference formulation postulates that both perception and action minimize the surprise, formalized as the discrepancy between our beliefs and the real world. Our model predicts that subjects experiencing body illusions in the presence of a visuo-proprioceptive mismatch tend to generate actions (in the form of exerted forces in the arm) that reduce this discrepancy. During the VHI, the new visual input (virtual hand) is merged with proprioceptive information, generating a prediction error on the body location estimation16, which can be corrected by an active strategy that moves the physical hand to reduce the conflict. The predicted behavior was validated empirically in the human experiment. We observed and measured forces exerted by the physical hand in the direction of the virtual hand. That is, the body may reduce the error of the predicted body location, triggered by the new visual input, by moving the arm. Thus, this could be potential experimental evidence of the active inference framework48. However, we should be cautious regarding the model comparison, as other computational approaches could also fit our results. For instance, cross-modal learning49 could also retrieve these forces by means of sensory reconstruction. Moreover, there are relevant differences between the simplified joint model adopted here and the real musculoskeletal human body.

In contrast with our initial expectations, compensatory forces were observed in our “asynchronous” condition, which was initially expected to be a control condition in which no illusion occurs. The fact that we found a similar active effect (i.e. non-null forces in the direction of the virtual hand) in both synchronous and asynchronous conditions may be due to our implementation of asynchronous VT stimulation, where the associated sensory conflict might not have been strong enough to break the illusion. The ability of the proposed model to fit human behavioral data depends on the weighting of the visual cue, modulated by the causal parameter \(\kappa\), here taken as a proxy for the visuo-proprioceptive binding and, indirectly, the level of the experienced illusion. Thus, a limitation of this study is the missing relation between the observed active strategies, the proprioceptive drifts and the strength of the illusion, as we do not explicitly simulate the emergence of the ownership illusion. According to our model, we could expect correlations between the proprioceptive drift and the recorded forces, indicating that both effects may be driven by the same underlying process.

Several experiments reported that body ownership is not elicited during asynchronous stimulation28,50,51. Such evidence was nevertheless taken from experiments in which other sources of sensory conflict were present (e.g. the spatial mismatch in the classic RHI). In these cases, synchronous visuo-tactile stimulation is required as a trigger for eliciting illusory ownership, while asynchronous stimulation provides additional sensory conflict that keeps the illusion off. Other studies however have shown that VT stimulation is not necessary to trigger the illusion. In fact, ownership illusion can be triggered by congruent visuo-proprioceptive cues only, i.e. for the simple case of spatial overlap between the virtual and real body—as achievable in immersive virtual reality applications—with no further multisensory stimulation12. Furthermore, it was shown that once the illusion is established, e.g. through visuo-proprioceptive or visuo-motor triggers, incongruent visuo-tactile stimuli can be tolerated without breaking the illusion41,52. This result emerges as a consequence of the fact that the temporal window for VT integration expands due to the causal binding, established by the illusion, between visual stimuli seen on the virtual hand and somatosensory stimuli experienced through the physical hand41. It is therefore plausible that the asynchronous visuo-tactile stimulation adopted in our experiment as a control condition did not work as such. This may occur as a consequence of the enhanced immersivity/plausibility of our virtual setup, in combination with repeated exposures to conditions of perfect visuo-proprioceptive overlap between the virtual and the real hands. As reviewed above, previous works show that under this spatial configuration illusory ownership can be sustained even in presence of visuo-tactile asynchronies. 
Along these lines, it is possible that a residual sense of ownership was present even in our lateral conditions under visuo-tactile asynchronous stimulation. In this case, the lack of a significant difference between the forces recorded in the synchronous and asynchronous conditions is possibly due to a saturation effect, whereby even the low levels of illusory ownership elicited in the asynchronous condition may trigger perceptual displacements that saturate the active component at the level observed in the synchronous case (in which the exerted forces are very weak).

The interpretation above should nevertheless be taken with caution. Due to experimental requirements (see “Methods”), we did not include explicit assessments of the subjective experience of illusory body ownership. Although lateral configurations in our experiment induced a proprioceptive drift, which is the actual trigger for the force predicted by our model, we cannot properly correlate this drift with illusory ownership. There is no clear consensus in the literature about this relation. Some works describe a dissociation between ownership and proprioceptive drift based on the lack of a clear correlation between the two53. Such lack of correlation appears to be mainly associated with the fact that in visuotactile asynchronous conditions non-null drifts are observed while the reported sense of ownership drops significantly with respect to the synchronous condition. Still, other relevant studies reported clear correlations between subjective ratings of the illusory experience and objective measures of the proprioceptive drift54,55. In our pilot study (see Supplementary Material) we found that participants exposed to the asynchronous condition, besides displaying a proprioceptive drift, scored positive (although with lower values than in the synchronous condition) on the explicit question on hand ownership (Q3 – “There were moments in which I felt that the virtual hand was my own hand”). Similar results, with non-negative scores on ownership assessments in visuotactile asynchronous conditions (but significantly lower than in the synchronous condition), have been reported in previous studies37,41,53, including in works questioning the causal relationship between ownership and proprioceptive drift37,53. Altogether, this might suggest the presence of a residual degree of illusory ownership in our VR asynchronous condition. However, the possibility that the force we measured is dissociated from the sense of ownership cannot be excluded by our experiment alone.
In any case, seen in the larger framework of the experimental evidence on ownership illusion from the last decade, our results provide new evidence for the active component of the RHI and novel insights for understanding its underlying computational base.

Previous work has shown the strong link between self-perception and action. This coupling has been observed in reactive responses to the threat observed on an embodied fake body11,56. Further support comes from experimental evidence showing how the sense of agency over the movements of virtual hands or full body avatars enhances the sense of illusory body ownership52,57,58. Our results point to a possible complementary effect, i.e. to how the sense of body ownership can potentially affect motor behavior, depending on the sensory cues available. Further studies in which the subjective sense of ownership is explicitly assessed are nevertheless needed to ascertain this possibility.

Our model provides an active inference perspective on motor behavior, showing how the need to suppress sensory conflict could trigger movement as an active strategy to preserve the integrity of self-perception. We expect that such sensory-conflict-driven actions could affect motor performance in goal-directed tasks, as in the case of the self-avatar follower effect observed in VR settings19. Our proposed coupled perception–action approach would furthermore be advantageous for modeling adaptation during interaction, as world/body variability can be instantly taken into account while maintaining a coherent body pose estimation.

Future research needs to address the computational model behind the dynamics of illusory experience itself and more realistic visual input59. One possibility could be to integrate, in a hierarchical fashion, our AIF model with Bayesian causal inference models previously proposed for body ownership illusions8,42. These models are based on the assumption that the illusory sense of body ownership arises when the brain attributes the visual information from the rubber or virtual hand to the same entity that gives origin to somatosensory input. The causal inference model we propose here, instead, simulates the perceptual process inferring the location of the hand and the origin of actions that minimize sensory conflicts while assuming that the agent believes to some degree (represented by the \(\kappa\) parameter) that the visual and the proprioceptive/tactile sensory input are associated to the same origin. The observation of forces in the asynchronous condition in human experiments reflects the need for further testing the model under several control conditions and for integrating the two models. Even more importantly, observing the active component in a non-static environment will be an important step to corroborate the robustness of the proposed model. Heading in this direction, we plan to test the model in conditions where the real hand is able to move, and further when the virtual arm can be controlled by the agent.

Methods

Computational model

We model body perception as inferring the body posture by means of the sensation prediction errors (visual and proprioceptive) and the error in the predicted dynamics. Inspired by the fact that the RHI affects the perception of joint angles60, body posture is defined by the joint angles. To design the arm model we only consider one degree of freedom: the elbow, which rotates about the vertical axis (Fig. 2A). Thus, we define \({s}_{p}\) as the measured/observed joint angle of the elbow and \(\mu^{[0]} \in \left( { - \pi /2,\;\pi /2} \right)\) as the inferred elbow joint angle. The bracketed superscript reflects the order of the dynamics: \({\mu }^{[0]}\), \({\mu }^{[1]}\), \({\mu }^{[2]}\) represent the position, velocity and acceleration of the inferred brain variables61,62. Visual information is given by the horizontal location of the center of the virtual hand \({s}_{v}\). We further define a measurement of zero degrees as the arm being perpendicular to the horizontal axis and parallel to the body sagittal plane. Hence, left and right rotations are negative and positive respectively. Given the length of the arm \(L\), the generative model that predicts the hand visual location depending on the joint angle \({(\mu }^{\left[0\right]})\) is as follows:

where \(b\) is a localization perceptual bias (sampled from human data15) that depends on each participant and \({z}_{v}\) is normally distributed noise. The rest of the observations can be predicted with a Gaussian with mean 0. Thus, the observed sensations \({\varvec{s}}\) and the generative model \(g(\mu , \nu )\) that predicts the sensations are:

where \({s}_{p}\) and \(\dot{{s}_{p}}\) are the joint angle and velocity, \(\nu\) is the causal variable, i.e., the perceived location of the virtual hand, \({s}_{v}\) is the measured position of the virtual hand and \(Z\) is the noise associated with each variable. Note that during the RHI experiment the participant does not have visual access to their hand.
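As a minimal sketch of the visual generative model described above, the Python snippet below assumes that the predicted horizontal hand location follows \(g_v(\mu^{[0]}) = L\,\mathrm{sin}(\mu^{[0]}) + b\). This form is an assumption: it is consistent with the zero-angle convention (arm parallel to the sagittal plane) and with the mapping \(T(\mu^{[0]}) = -L\,\mathrm{sin}(\mu^{[0]}-\pi/2) = L\,\mathrm{cos}(\mu^{[0]})\) that appears in the description of the dynamics. The function names and the example values are illustrative and are not taken from the authors' implementation.

```python
import math

def g_v(mu, L, b=0.0):
    """Assumed visual generative model: predicted horizontal hand location (cm).

    mu: inferred elbow angle in radians (0 = arm parallel to the sagittal plane)
    L:  forearm length in cm
    b:  participant-specific localization bias in cm
    """
    return L * math.sin(mu) + b

def dg_v(mu, L):
    """Derivative of the assumed g_v with respect to mu; equals -L*sin(mu - pi/2)."""
    return L * math.cos(mu)

L_cm = 44.7  # illustrative forearm length, not a fitted value
print(g_v(0.0, L_cm))               # arm straight ahead: no lateral displacement
print(g_v(math.radians(20), L_cm))  # rotation to the right: positive location
```

Under this assumed form, left rotations give negative locations and right rotations positive ones, matching the sign convention stated in the text.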

The brain generative model \(f(\mu ,\;\rho )\) that drives the dynamics of the internal variables (state) does not expect any action as there is no task involved and it is modeled as a mass-spring system. Note that this function is an approximation of the real model of the arm:

where \(W\) is the associated noise of the process, \(m\) is the mass of the arm and \(\gamma\) is the viscosity parameter. In the case of having a task we would include a perceptual attractor in the sensory manifold and its transformation to the joint variables. For instance, we can include the virtual arm as the goal by substituting the second row of Eq. (3) with \(\left(T\left({\mu }^{\left[0\right]}\right)A\left({\mu }^{\left[0\right]}, \rho \right)-\gamma {\mu }^{\left[0\right]}\right)/m\), where \(A\left({\mu }^{\left[0\right]}, \rho \right)= \beta \left(\rho -{g}_{v}\left({\mu }^{\left[0\right]}\right)\right)\) and \(T\left({\mu }^{\left[0\right]}\right)= -L \mathrm{sin}({\mu }^{\left[0\right]}-\pi /2)\). However, in this case we do not have a desired goal.
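The goal-directed variant mentioned above can be sketched in Python as follows. The attractor \(A\), the mapping \(T\) and the combined row are transcribed from the expressions in the text; the visual mapping `g_v` is an assumed form (\(L\,\mathrm{sin}(\mu^{[0]}) + b\)), and all numerical values are hypothetical, chosen only to show the sign of the resulting term.

```python
import math

def g_v(mu, L, b=0.0):
    # Assumed visual mapping from elbow angle to horizontal hand location (cm).
    return L * math.sin(mu) + b

def attractor_row(mu0, rho, L, beta, gamma, m, b=0.0):
    """Second row of the dynamics when the virtual arm acts as a goal:
    (T(mu0) * A(mu0, rho) - gamma * mu0) / m, as written in the text."""
    A = beta * (rho - g_v(mu0, L, b))      # attractor defined in visual space
    T = -L * math.sin(mu0 - math.pi / 2)   # transformation to the joint variable
    return (T * A - gamma * mu0) / m

# Hypothetical values: goal 15 cm to the right or left of a straight arm.
right = attractor_row(mu0=0.0, rho=15.0, L=45.0, beta=0.01, gamma=1.0, m=1.0)
left = attractor_row(mu0=0.0, rho=-15.0, L=45.0, beta=0.01, gamma=1.0, m=1.0)
print(right, left)
```

With these illustrative values the term is positive for a goal on the right and negative for a goal on the left, i.e. it pulls the inferred angle towards the goal.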

We infer the elbow angle by optimizing the free-energy bound under the Laplace approximation63. Defining the error between the inferred brain variables and the dynamics generative model as \({{\varvec{e}}}_{\mu }={\varvec{\mu}}-f(\mu ,\rho )\), the differential equation that drives \({\varvec{\mu}}={\left[{\mu }^{\left[0\right]},{\mu }^{\left[1\right]}, {\mu }^{\left[2\right]}\right]}^{T}\) is:

Regarding the action, it only depends on the sensory input, as during the RHI the participant only relies on the proprioceptive input. Thus, we define its differential equation as:

where \({K}_{a}\) is the gain that modulates the step size of the differential equation (similar to gradient descent). Note that although we computed the action and used it as an estimate of the force applied at the level of the arm, we did not use it to update the real hand position, in order to simulate the constraint of the RHI where the real hand is fixed and cannot move.
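To illustrate the idea of computing an action without letting it move the hand, the sketch below integrates a simplified action update with Euler steps. The update rule is an assumption made for illustration: it descends the precision-weighted proprioceptive error and takes the sensitivity of the proprioceptive reading to the action as 1. The variable names, the gain and the step size are hypothetical and do not reproduce the authors' equation.

```python
def action_step(a, s_p, mu0, sigma2_p, K_a, dt):
    """One Euler step of an assumed action update:
    da/dt = -K_a * (s_p - mu0) / sigma2_p, taking ds_p/da = 1."""
    err = (s_p - mu0) / sigma2_p   # precision-weighted proprioceptive error
    return a + dt * (-K_a * err)

s_p = 0.0   # real elbow angle (rad); the hand is locked, so it never changes
mu0 = 0.1   # inferred angle, drifted towards a virtual hand on the right
a = 0.0     # accumulated action, read out as a proxy for the exerted force

for _ in range(100):
    a = action_step(a, s_p, mu0, sigma2_p=1.0, K_a=1.0, dt=0.01)
    # s_p is deliberately NOT updated with a: the real hand cannot move

print(a)
```

Because the sensed angle never follows the action, the error is not cancelled and the action keeps accumulating in the direction of the inferred (drifted) hand position, which is how a sustained force towards the virtual hand can arise in this simplified setting.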

Experiments have shown that during the RHI participants modify the precision of visual and proprioceptive cues14. Thus, we optimize their precision also to reduce the prediction error:

We set a minimum of \(\mathrm{exp}(1)\) and \(\mathrm{exp}(0.1)\) for visual and proprioception precision respectively.

Finally, we considered that the perception of the virtual hand location is another unobserved variable. Thus, the model also infers the visual horizontal location, allowing the blending of the real hand perception error with the virtual hand and therefore, producing a visual drift.

To generate individual differences between participants we fixed all parameters and we randomly selected the initial proprioceptive variance \({\upsigma }_{{s}_{p}}^{2}\sim \mathcal{N}(\mathrm{1,0.5})\). This means that each participant has a different precision of the proprioceptive cue. We further included the bias in the predicted real hand location based on reported data of RHI human experimentation (in cm) \(b\sim \mathcal{N}(\mathrm{0,4.2794})\). The length (in cm) of the forearm was drawn from a normal distribution \(L\sim \mathcal{N}(\mathrm{44.7208,2.4807})\). Bias and the forearm lengths data were taken from participants' real data15. During stimulation, we also introduced artificial noise in \({\mathrm{s}}_{\mathrm{p}}\) and \({\mathrm{s}}_{\mathrm{r}}\) readings.
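The sampling of simulated participants can be sketched as below. Two assumptions are made and should be checked against the provided notebook: the second argument of each \(\mathcal{N}(\cdot,\cdot)\) is treated as a standard deviation, and the sampled proprioceptive variance is clipped to a small positive value so that it remains a valid variance. The function and key names are illustrative.

```python
import random

def sample_participant(rng):
    """Draw the parameters of one simulated participant.

    Assumption: the second parameter of each normal distribution in the
    text is interpreted as a standard deviation.
    """
    # Initial proprioceptive variance; clipping is an added safeguard (assumption).
    sigma2_p = max(rng.gauss(1.0, 0.5), 1e-3)
    b = rng.gauss(0.0, 4.2794)       # localization bias, cm
    L = rng.gauss(44.7208, 2.4807)   # forearm length, cm
    return {"sigma2_p": sigma2_p, "b": b, "L": L}

rng = random.Random(0)   # fixed seed so the draw is reproducible
participants = [sample_participant(rng) for _ in range(14)]
for p in participants[:3]:
    print(p)
```

All other model parameters stay fixed across simulated participants, so differences in the predicted forces come only from these three draws and from the sensor noise.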

Finally, to generate the effect of synchronous and asynchronous stimulation on the illusion, and therefore on the predicted force, we introduced the parameter \(\kappa\) as the probability of touch and visual cues being generated by the same source, and hence as an estimate of the level of illusory ownership over the virtual hand8,42. The \(\kappa\) parameter weights the error between the expected visual location of the arm and the virtual hand location input: \(\kappa (\nu -{g}_{v}\left(\mu \right))\). The synchronous and asynchronous VT stimulation were synthetically implemented by producing tactile and visual events in the range of 100 ms in the synchronous condition and 800 ms otherwise34, and we considered these as two extreme cases where the illusion was either saturated or completely suppressed. Thus, a \(\kappa\) value of 1 was set for the synchronous case, meaning that the visual input is generated by our body, and a \(\kappa\) value of 0.3 was set for the asynchronous condition, assuming that in this case the virtual hand was perceived as an external object not related to the real body. We also performed additional simulations with different values of \(\kappa\) to explore how different degrees of illusion intensity would affect the forces applied at the level of the real arm.
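The role of \(\kappa\) as a weight on the visual prediction error can be illustrated with a few lines of Python. The expression is the one given in the text; the locations used in the example are hypothetical.

```python
def weighted_visual_error(kappa, nu, g_v_mu):
    """kappa * (nu - g_v(mu)): visual prediction error scaled by the
    probability that vision and touch share a common source."""
    return kappa * (nu - g_v_mu)

nu = 15.0      # perceived virtual-hand location, cm (illustrative)
g_v_mu = 0.0   # predicted visual location of the real hand, cm (illustrative)

for kappa in (1.0, 0.3, 0.0):
    print(kappa, weighted_visual_error(kappa, nu, g_v_mu))
```

A \(\kappa\) of 1 passes the full visual error to the inference, 0.3 passes less than a third of it, and 0 removes the influence of the virtual hand altogether, which is why the predicted force scales with the assumed strength of the illusion.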

Devices

Subjects were seated with their shoulders restrained against the back of a chair by a shoulder harness. Their hand and forearm rested on a flat surface attached to the vBOT robotic manipulandum64, with the forearm supported against gravity by an air sled (top view, Fig. 7; lateral view, Fig. 1C). The robotic manipulandum was locked to prevent movements of the participants’ hand while measuring the applied force. Position and force data were sampled at 1 kHz. Endpoint forces at the handle were measured using an ATI Nano 25 6-axis force-torque transducer (ATI Industrial Automation, NC, USA). The position of the vBOT handle was calculated from joint-position sensors (58SA; IED) on the motor axes. Visual feedback was provided using a virtual reality (VR) device (Oculus Rift V1, Facebook technology). While participants wore the VR system, any visual information about the real body location was blocked. The virtual environment was designed and programmed in C# using the Unity engine. The virtual environment and body size were fixed for all participants (e.g. the length of the VR arm was always the same). Tactile feedback was provided with commercially available coin vibrators (model 10B27.3018) placed on the middle point of the participant’s hand dorsum, controlled with an embedded chip and connected via USB to the VR engine. The timing of the vibration events was controlled in relation to a visual animation of a virtual ball bouncing on the dorsum of the virtual hand. A random number was generated every time the ball contacted the virtual arm. This random number was translated into the time at which the tactile event was triggered along the trajectory of the ball.

Ethics

Informed consent was obtained from all participants, and the study was approved by the ethics committee of the Medical Faculty of the Technical University of Munich, and adhered to the Declaration of Helsinki. The study was performed in accordance with the ethical guidelines of the German Psychological Society (DGPs).

Participants

Fourteen right-handed volunteers (seven male and seven female) from 18 to 40 years old with normal or corrected-to-normal vision participated in this study. Handedness was evaluated using the Edinburgh Handedness Inventory. No participant had neurological or psychological disorders, virtual sickness, or skin hypersensitivity, as indicated by self-report. A pretest with the vibrator was performed on each participant's hand, and each participant gave a written statement that the vibrator did not harm them. We ensured that participants were not wearing nail polish and had no remarkable visual features on the left or right forearm and hand. Participants' height was between 1.6 and 1.9 m to fit within the constraints of the experimental apparatus. Participants were compensated with eight euros per hour.

Experimental design and procedure

First, we randomly assigned seven participants to the synchronous condition and seven to the asynchronous condition. In total, we registered 1680 trials or samples across all participants. The sequence of conditions was generated randomly in blocks, as described in Fig. 8.

The center condition was always fixed at the beginning, the middle and the end of every block. Four trials of the left or right condition were randomly selected between the center conditions. The center condition was placed in the middle to reduce the localization bias (i.e. the effect of the proprioceptive drift induced in the previous trial on the following ones) produced when stimulating in the left and right conditions.

Each trial corresponded to 40 s of VT stimulation during which the force was recorded. To register the samples, we performed 10 blocks per participant, separated by short breaks. Each block was composed of 12 trials (4 trials for each virtual hand location condition: left, center, right). In total, each participant performed 120 trials (40 per virtual hand location condition).
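The trial counts reported in this section follow directly from the block structure, as the short check below shows. The variable names are illustrative; the numbers are the ones stated in the text.

```python
participants = 14
blocks_per_participant = 10
trials_per_block = 12                      # 4 per virtual-hand location
locations = ("left", "center", "right")
trial_duration_s = 40

trials_per_participant = blocks_per_participant * trials_per_block
trials_per_location = trials_per_participant // len(locations)
total_trials = participants * trials_per_participant
stimulation_per_block_min = trials_per_block * trial_duration_s / 60

print(trials_per_participant)     # 120
print(trials_per_location)        # 40
print(total_trials)               # 1680
print(stimulation_per_block_min)  # 8.0
```

The 8 min of stimulation per block excludes the time spent on the fixation cross between trials.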

Participants were seated on the experiment chair, in front of a table as described in Fig. 7, following the instructions of the experimenter. Once the chair was adjusted to the needed height, the experimenter attached the vibrator to the dorsum of the hand. Participants then wore the Oculus Rift VR system. The left hand rested on the vBOT manipulandum and the right hand on the table (Fig. 7). Participants were asked to stay relaxed and still in the chair.

The initial location of the left hand was fixed for all participants and was programmed in the manipulandum. Each trial consisted of two phases, and a resting period followed at the end of each block:

1. Cross: At the beginning of each trial, participants were asked to look straight ahead at a cross that appeared in a random location around the middle of the scene, to avoid the use of the head angle as a prior cue.

2. Stimulation: While participants were looking at the cross, a virtual arm in the Left, Center or Right condition appeared in the virtual scene with a ball on top of the hand. After the arm appeared, participants were asked to look down at the index finger of the left hand. Participants could only see the virtual hand once they looked down. In the synchronous condition, every time the ball touched the virtual hand there was a tactile stimulus through the vibrator (a touch event approximately every two seconds). In the asynchronous condition, the vibration occurred with a random delay after the visual stimulation.

3. Resting: At the end of each block, participants rested for 1 min, alternating between removing the VR system and moving the arms without removing it.

We tested both synchronous and asynchronous VT stimulation. In the synchronous condition, the contact of the ball with the virtual hand and the vibration on the real hand occurred within the temporal window for perceived simultaneity (less than 100 ms difference). In the asynchronous condition, vibration events were generated at a random time between the visual hit and the moment the ball reached its highest point.
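The two stimulation regimes can be sketched as a delay sampler. This is an illustration under stated assumptions rather than the Unity/C# code used in the experiment: the bounce is taken to be symmetric with a period of about 2 s (so the apex is reached about 1 s after contact), delays are drawn uniformly, and the function name and parameters are hypothetical.

```python
import random

def vibration_delay(condition, rng, bounce_period_s=2.0, sync_window_s=0.1):
    """Delay (s) between the ball touching the virtual hand and the vibration."""
    if condition == "synchronous":
        # Within the window for perceived simultaneity.
        return rng.uniform(0.0, sync_window_s)
    if condition == "asynchronous":
        # Anywhere between the visual hit and the apex of the bounce
        # (apex time assumes a symmetric bounce).
        time_to_apex = bounce_period_s / 2.0
        return rng.uniform(0.0, time_to_apex)
    raise ValueError(f"unknown condition: {condition}")

rng = random.Random(1)
print([round(vibration_delay("synchronous", rng), 3) for _ in range(3)])
print([round(vibration_delay("asynchronous", rng), 3) for _ in range(3)])
```

Note that, under a uniform draw, some asynchronous delays will fall inside the simultaneity window, which is one way an asynchronous condition implemented like this could end up weaker than intended.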

Data were analyzed offline using MATLAB (R2019a) for data cleaning and preprocessing and R for statistical testing. For force measurements we performed offset removal by subtracting the mean force over each 9-min block of trials (containing equal numbers of all three condition types) from the force data, to ensure that a bias in the force values was not introduced through shifts in participant limb posture. The impact and interaction of experimental factors on the observed forces were assessed with a mixed-effects repeated-measures ANOVA, while paired t-tests were run for specific post-hoc hypothesis testing.
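The per-block offset removal can be sketched as follows. The original preprocessing was done in MATLAB; this Python version is only an illustration of the operation, with made-up force values and a hypothetical function name.

```python
def remove_block_offset(forces_by_block):
    """Subtract each block's mean force from every sample in that block."""
    corrected = []
    for block in forces_by_block:
        mean = sum(block) / len(block)
        corrected.append([f - mean for f in block])
    return corrected

# Two hypothetical blocks of lateral force samples (N), with different offsets.
blocks = [[0.5, 0.7, 0.3], [1.2, 1.0, 1.4]]
corrected = remove_block_offset(blocks)
print(corrected)
```

Since each block contains the same number of left, center and right trials, subtracting the block mean removes slow postural offsets without cancelling the difference between conditions.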

Code and data

For reproducibility of the results, we provide an instance of the developed model (in Python, on Google Colab) with fixed parameters that can be executed at the following link (http://therobotdecision.com/papers/RHI_AI_vPub.html), and a Jupyter notebook in the supplementary material. Force data that support the findings are provided in the supplementary material. Manipulandum raw data are available from the corresponding author upon request.

References

Graziano, M. S. A. & Botvinick, M. How the brain represents the body: Insights from neurophysiology and psychology. In Common Mechanisms in Perception and Action: Attention and Performance (eds Prinz, W. & Hommel, B.) 136–157 (Oxford University Press, Oxford, 2002).

Ehrsson, H. H. The Concept of Body Ownership and Its Relation to Multisensory Integration. In The New Handbook of Multisensory Processes (ed. Stein, B. E.) 775–792 (MIT Press, Cambridge, 2011).

Blanke, O. Multisensory brain mechanisms of bodily self-consciousness. Nat. Rev. Neurosci. 13, 556–571 (2012).

De Vignemont, F. Mind the Body: An Exploration of Bodily Self-Awareness (Oxford University Press, Oxford, 2018).

Lackner, J. R. Some proprioceptive influences on the perceptual representation of body shape and orientation. Brain 111(Pt 2), 281–297 (1988).

Ehrsson, H. H., Kito, T., Sadato, N., Passingham, R. E. & Naito, E. Neural substrate of body size: Illusory feeling of shrinking of the waist. PLoS Biol. 3, e412 (2005).

Goodwin, G. M., McCloskey, D. I. & Matthews, P. B. C. Proprioceptive illusions induced by muscle vibration: Contribution by muscle spindles to perception? Science 175, 1382–1384 (1972).

Kilteni, K., Maselli, A., Kording, K. P. & Slater, M. Over my fake body: Body ownership illusions for studying the multisensory basis of own-body perception. Front. Hum. Neurosci. 9, 141 (2015).

Botvinick, M. & Cohen, J. Rubber hands ‘feel’ touch that eyes see. Nature 391, 756 (1998).

Zeller, D., Litvak, V., Friston, K. J. & Classen, J. Sensory processing and the rubber hand illusion—an evoked potentials study. J. Cogn. Neurosci. 27, 573–582 (2015).

Ehrsson, H. H., Wiech, K., Weiskopf, N., Dolan, R. J. & Passingham, R. E. Threatening a rubber hand that you feel is yours elicits a cortical anxiety response. Proc. Natl. Acad. Sci. U. S. A. 104, 9828–9833 (2007).

Maselli, A. & Slater, M. The building blocks of the full body ownership illusion. Front. Hum. Neurosci. 7, 83 (2013).

Maselli, A. & Slater, M. Sliding perspectives: Dissociating ownership from self-location during full body illusions in virtual reality. Front. Hum. Neurosci. 8, 693 (2014).

Makin, T. R., Holmes, N. P. & Ehrsson, H. H. On the other hand: Dummy hands and peripersonal space. Behav. Brain Res. 191, 1–10 (2008).

Fuchs, X., Riemer, M., Diers, M., Flor, H. & Trojan, J. Perceptual drifts of real and artificial limbs in the rubber hand illusion. Sci. Rep. 6, 24362 (2016).

Hinz, N.-A., Lanillos, P., Mueller, H. & Cheng, G. Drifting perceptual patterns suggest prediction errors fusion rather than hypothesis selection: Replicating the rubber-hand illusion on a robot. In 2018 Joint IEEE 8th International Conference on Development and Learning and Epigenetic Robotics (ICDL-EpiRob) 125–132 (IEEE, 2018).

Asai, T. Feedback control of one’s own action: Self-other sensory attribution in motor control. Conscious. Cogn. 38, 118–129 (2015).

Burin, D., Kilteni, K., Rabuffetti, M., Slater, M. & Pia, L. Body ownership increases the interference between observed and executed movements. PLoS One 14(1), e0209899 (2019).

Gonzalez-Franco, M., Cohn, B., Ofek, E., Burin, D. & Maselli, A. The Self-Avatar Follower Effect in Virtual Reality. In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) 18–25 (IEEE, 2020).

Asai, T. Illusory body-ownership entails automatic compensative movement: For the unified representation between body and action. Exp. Brain Res. 233, 777–785 (2015).

Kammers, M. P. M., de Vignemont, F., Verhagen, L. & Dijkerman, H. C. The rubber hand illusion in action. Neuropsychologia 47, 204–211 (2009).

Wann, J. P. & Ibrahim, S. F. Does limb proprioception drift? Exp. Brain Res. 91, 162–166 (1992).

Abdulkarim, Z. & Ehrsson, H. H. Recalibration of hand position sense during unconscious active and passive movement. Exp. Brain Res. 236, 551–561 (2018).

Todorov, E. & Jordan, M. I. Optimal feedback control as a theory of motor coordination. Nat. Neurosci. 5, 1226–1235 (2002).

Franklin, D. W. & Wolpert, D. M. Computational mechanisms of sensorimotor control. Neuron 72, 425–442 (2011).

Friston, K. The free-energy principle: A unified brain theory? Nat. Rev. Neurosci. 11, 127–138 (2010).

Friston, K. J., Daunizeau, J., Kilner, J. & Kiebel, S. J. Action and behavior: A free-energy formulation. Biol. Cybern. 102, 227–260 (2010).

Slater, M., Pérez Marcos, D., Ehrsson, H. & Sanchez-Vives, M. V. Towards a digital body: The virtual arm illusion. Front. Hum. Neurosci. 2, 6 (2008).

Friston, K., FitzGerald, T., Rigoli, F., Schwartenbeck, P. & Pezzulo, G. Active inference: A process theory. Neural Comput. 29, 1–49 (2017).

Clark, A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 36, 181–204 (2013).

Tschantz, A., Seth, A. K. & Buckley, C. L. Learning action-oriented models through active inference. PLoS Comput. Biol. 16, e1007805 (2020).

Friston, K. J. et al. Dopamine, affordance and active inference. PLoS Comput Biol 8, e1002327 (2012).

Oliver, G., Lanillos, P. & Cheng, G. An empirical study of active inference on a humanoid robot. IEEE Trans. Cogn. Dev. Syst. https://doi.org/10.1109/TCDS.2021.3049907 (2021).

Lanillos, P. & Cheng, G. Adaptive robot body learning and estimation through predictive coding. In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 4083–4090 (IEEE, 2018).

Limanowski, J. & Friston, K. Active inference under visuo-proprioceptive conflict: Simulation and empirical results. Sci. Rep. 10, 1–14 (2020).

Brown, H., Adams, R. A., Parees, I., Edwards, M. & Friston, K. Active inference, sensory attenuation and illusions. Cogn. Process. 14, 411–427 (2013).

Longo, M. R., Cardozo, S. & Haggard, P. Visual enhancement of touch and the bodily self. Conscious. Cogn. 17, 1181–1191 (2008).

Guterstam, A., Larsson, D. E. O., Zeberg, H. & Ehrsson, H. H. Multisensory correlations-not tactile expectations-determine the sense of body ownership. PLoS ONE 14, 1–16 (2019).

Bogacz, R. A tutorial on the free-energy framework for modelling perception and learning. J. Math. Psychol. 76, 198–211 (2017).

Shimada, S., Fukuda, K. & Hiraki, K. Rubber hand illusion under delayed visual feedback. PLoS One 4, e6185 (2009).

Maselli, A., Kilteni, K., López-Moliner, J. & Slater, M. The sense of body ownership relaxes temporal constraints for multisensory integration. Sci. Rep. 6, 30628 (2016).

Samad, M., Chung, A. J. & Shams, L. Perception of body ownership is driven by Bayesian sensory inference. PLoS One 10(2), e0117178 (2015).

Fang, W. et al. Statistical inference of body representation in the macaque brain. Proc. Natl. Acad. Sci. 116, 20151–20157 (2019).

Seth, A. K. Interoceptive inference, emotion, and the embodied self. Trends Cogn. Sci. 17, 565–573 (2013).

Apps, M. A. J. & Tsakiris, M. The free-energy self: A predictive coding account of self-recognition. Neurosci. Biobehav. Rev. 41, 85–97 (2014).

Lanillos, P., Pages, J. & Cheng, G. Robot self/other distinction: Active inference meets neural networks learning in a mirror. In 24th European Conference on Artificial Intelligence (ECAI 2020) (2020).

Hoffmann, M., Wang, S., Outrata, V., Alzueta, E. & Lanillos, P. Robot in the mirror: Toward an embodied computational model of mirror self-recognition. KI Künstliche Intelligenz https://doi.org/10.1007/s13218-020-00701-7 (2021).

Pezzulo, G., Rigoli, F. & Friston, K. Active inference, homeostatic regulation and adaptive behavioural control. Prog. Neurobiol. 134, 17–35 (2015).

Calvert, G. A. Crossmodal processing in the human brain: Insights from functional neuroimaging studies. Cereb. Cortex 11, 1110–1123 (2001).

Tsakiris, M. My body in the brain: A neurocognitive model of body-ownership. Neuropsychologia 48, 703–712 (2010).

Ehrsson, H. H., Spence, C. & Passingham, R. E. That’s my hand! Activity in premotor cortex reflects feeling of ownership of a limb. Science 305, 875–877 (2004).

Brugada-Ramentol, V., Clemens, I. & de Polavieja, G. G. Active control as evidence in favor of sense of ownership in the moving Virtual Hand Illusion. Conscious. Cogn. 71, 123–135 (2019).

Rohde, M., Di Luca, M. & Ernst, M. O. The rubber hand illusion: Feeling of ownership and proprioceptive drift do not go hand in hand. PLoS One 6, e21659 (2011).

Kalckert, A. & Ehrsson, H. H. The moving rubber hand illusion revisited: Comparing movements and visuotactile stimulation to induce illusory ownership. Conscious. Cogn. 26, 117–132 (2014).

Abdulkarim, Z. & Ehrsson, H. H. No causal link between changes in hand position sense and feeling of limb ownership in the rubber hand illusion. Attent. Percept. Psychophys. https://doi.org/10.3758/s13414-015-1016-0 (2015).

Petkova, V. & Ehrsson, H. If I were you: Perceptual illusion of body swapping. PLoS One 3(12), e3832 (2008).

van den Bos, E. & Jeannerod, M. Sense of body and sense of action both contribute to self-recognition. Cognition 85, 177–187 (2002).

Kalckert, A. & Ehrsson, H. H. Moving a rubber hand that feels like your own: A dissociation of ownership and agency. Front. Hum. Neurosci. 6, 40 (2012).

Rood, T., van Gerven, M. & Lanillos, P. A deep active inference model of the rubber-hand illusion. In International Workshop on Active Inference. European Conference on Machine Learning (ECML/PKDD 2020) (2020).

Butz, M. V., Kutter, E. F. & Lorenz, C. Rubber hand illusion affects joint angle perception. PLoS One 9, e92854 (2014).

Buckley, C. L., Kim, C. S., McGregor, S. & Seth, A. K. The free energy principle for action and perception: A mathematical review. J. Math. Psychol. 81, 55–79 (2017).

Sancaktar, C., van Gerven, M. & Lanillos, P. End-to-end pixel-based deep active inference for body perception and action. In IEEE International Conference on Development and Learning and on Epigenetic Robotics (2020).

Friston, K., Mattout, J., Trujillo-Barreto, N., Ashburner, J. & Penny, W. Variational free energy and the Laplace approximation. Neuroimage 34, 220–234 (2007).

Howard, I. S., Ingram, J. N. & Wolpert, D. M. A modular planar robotic manipulandum with end-point torque control. J. Neurosci. Methods 181, 199–211 (2009).

Acknowledgements

The authors would like to thank Gordon Cheng for his advice and support, and Mohamad Atayi for the development of the VR environment and his help in the preliminary study. This work was partially supported by the MSCA SELFCEPTION project (www.selfception.eu), EU H2020 Grant No. 741941. A.M. was supported by the Office of Naval Research Global (ONRG, Award N62909-19-1-2017).

Author information

Contributions

P.L. conceived the idea, developed the computational model and performed the model analysis. P.L., S.F. and D.F. designed and planned the experiments. P.L. and S.F. collected the data. A.M. and D.F. performed the human analysis. P.L. and A.M. drafted the manuscript. P.L. and D.F. designed the figures. All authors revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lanillos, P., Franklin, S., Maselli, A. et al. Active strategies for multisensory conflict suppression in the virtual hand illusion. Sci Rep 11, 22844 (2021). https://doi.org/10.1038/s41598-021-02200-7

DOI: https://doi.org/10.1038/s41598-021-02200-7