Abstract

The BB84 quantum key distribution (QKD) combined with decoy-state method is currently the most practical protocol, which has been proved secure against general attacks in the finite-key regime. Thereinto, statistical fluctuation analysis methods are very important in dealing with finite-key effects, which directly affect secret key rate, secure transmission distance and most importantly, the security. There are two tasks of statistical fluctuation in decoy-state BB84 QKD. One is the deviation between expected value and observed value for a given expected value or observed value. The other is the deviation between phase error rate of computational basis and bit error rate of dual basis. Here, we provide the rigorous and optimal analytic formula to solve the above tasks, resulting to higher secret key rate and longer secure transmission distance. Our results can be widely applied to deal with statistical fluctuation in quantum cryptography protocols.

Similar content being viewed by others

Introduction

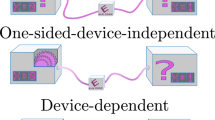

So far, there have existed many kinds of protocols describing how quantum key distribution (QKD)1,2 works, such as the Bennett–Brassard—1984 (BB84)3, Bennett–Brassard–Mermin—19924, Bennett—19925, six-state6, continuous variable7,8 and measurement-device-independent9,10,11 protocols. Although different protocols contain different processes, they all serve the same purpose to guarantee that two parties, named Alice and Bob, can share a string of key data through a channel fully controlled by an eavesdropper, named Eve3. Unlike some computational assumptions, these protocols are all proven to be secure with fundamental physical laws in the recent years12,13,14,15,16,17,18, which shows the great advantage in information transmitting that QKD holds. BB84 stands out as the most important protocol due to its best overall performance. However, implementations of the BB84 protocol differ from the original theoretical proposal. For an ideal single-photon source is not available yet, in actuality, a weak pulsed laser source is in place of it. Nevertheless, there is a critical flaw in the weak pulsed laser source that an non-negligible part of laser pulses contains more than one photon, which will be exploited by Eve through the photon-number-splitting (PNS) attack19. To address this drawback with high channel loss, the decoy-state method is introduced20,21,22.

The source will generate the phase-randomized coherent state in decoy-state method, which can be regarded as the mixed photon number state. The essence of the decoy state idea can be summarized as that the yield (bit error rate) of n-photon in signal state is equal to that in decoy state. However, this equal-yield condition can only be established under the asymptotic-key regime. The expected value of yield (bit error rate) of n-photon in signal state and decoy state are identical while the corresponding observed value cannot be assumed to be the same in the finite-key regime. By exploiting the decoy-state method, one can establish the linear system of equations about the expected values to obtain expected value of yield (bit error rate) of the single-photon component, where we need estimate the expected value of some parameters given by the known observed values. Actually, the observed value of yield (bit error rate) of the single-photon component in the key extraction data is what we really need, where we must estimate the observed value given by the known expected value.

The Gaussian analysis method23 is first proposed to deal with the the deviation between expected value and observed value given by the known observed value. The Gaussian analysis method is not rigorous because of the identically distributed assumption, which can only be valid in the collective attack. Resulting the extracted secret key cannot be secure against the coherent attack. Recently, the multiplicative form Chernoff bound24 and Hoeffding inequality25 methods are proposed to remove the identically distributed assumption, respectively. However, there is a considerable gap between the secret key rate bounds obtained from Chernoff–Hoeffding method and that obtained from the Gaussian analysis. In order to close this gap, the inverse solution Chernoff bound method26 is presented, which achieves a similar performance with Gaussian analysis. Here, we should point out that the inverse solution Chernoff bound method also seems to be not rigorous. An important assumption in Chernoff bound is that one should have the prior knowledge of expected value. However, the problem that we have in hand is the opposite that we need to estimate expected value for a given observed value. This is why the multiplicative form Chernoff bound is somehow complex and carefully tailored. A direct criterion is that the lower bound result of inverse solution Chernoff bound is superior to the Gaussian analysis when one has a small observed value. Note that the result of Gaussian analysis should be optimal because the identically distributed assumption is a special case.

For BB84 protocol, one need bound the the conditional smooth min-entropy27, which relates to the phase error rate. The phase error rate cannot be directly observed, which can only be estimated by using the random sampling without replacement theory for security against the general attacks. A hypergeometric distribution method28 is first proposed to deal with the deviation between phase error rate of computational basis and bit error rate of dual basis in the finite-key regime. By using the inequality scaling technique, a numerical equation solution by using Shannon entropy function29 is acquired to estimate the phase error rate. Based on this, an analytical solution is obtained when the data size is large25. A looser analytical solution is using the Serfling inequality24. By exploiting the Ahrens map for Hypergeometric distribution, one uses Clopper–Pearson confidence interval30 replace the Serfling inequality. Recently, a specifically tailored analytical solution is acquired31, which achieves a big advantage compared to Serfling inequality. Here, we should point out that the specifically tailored analytical solution31 for random sampling without replacement is incorrect. The inequality scaling of binomial coefficient and Eq. (11) in supplementary information of Ref.31 is wrong.

In order to further improve the secret key rate in the case of high-loss, some authors of us have developed the tightest method to solve the above two tasks of statistical fluctuation32. Thereinto, the numerical equation of Chernoff bound is used to estimate the observed value for a given expected value. A numerical equation of Chernoff bound’s variant is exploited to obtain the expected value for a given observed value. A numerical equation relating to the hypergeometric distribution is directly applied to acquire the phase error rate for a given bit error rate. These numerical equation solutions are very tight but they are very inconvenient to use. On the one hand, it will be very time consuming if we optimize the system parameters globally by solving transcendental equations. On the other hand, it is a challenge to solve transcendental equations for each time post-processing in commercial QKD system with hardware. In this work we present the optimal analytical formulas to solve the two tasks of statistical fluctuation by using the rigorous inequality scaling technique. Furthermore, we establish the complete finite-key analysis for decoy-state BB84 QKD with composable security. The simulation results show that the secret key rate and secure transmission distance of our method have a significant advantage compared with previous rigorous methods.

Results

Statistical fluctuation analysis

We let \(x^*\) be the expected value, x be the observed value, \({\underline{x}}\) and \({\overline{x}}\) be the lower and upper bound of x. Here, we first introduce the numerical equation result of Ref.32. Then we present the tight analytical formulas by using the rigorous inequality scaling technique, which are the slightly looser bounds than those obtained by solving equations.

Random sampling without replacement

Let \(X_{n+k}\)\(:=\{x_1,x_2,...,x_{n+k}\}\) be a string of binary bits with \(n+k\) size, in which the number of bits value is unknown. Let \(X_k\) be a random sample (without replacement) bit string with k size from \(X_{n+k}\). Let \(\lambda \) be the probability of bit value 1 observed in \(X_k\). Let \(X_n\) be the remaining bit string, where the probability of bit value 1 observed in \(X_n\) is \(\chi \). Then, in this article, we let \(C^j_i=\frac{i!}{j!(i-j)!}\) be the binomial coefficient. For any \(\epsilon > 0\), we have the upper tail \(\mathrm{Pr}[\chi \ge \lambda + \gamma ^{U}] \le \epsilon \), where \(\gamma ^{U}\) represents \(\gamma ^{U}(n,k,\lambda ,\epsilon )\) and \(\gamma ^{U}\) is the positive root of the following equation32

Calculating Eq. (1), we get numerical results of \(\gamma ^{U}\), corresponding to the upper bound of the random sampling without replacement. Solving transcendental equation Eq. (1) is usually very complicated. Here, we are going to make use of some techniques mathematically to get rigorous tight analytical result. Detailed proof can be found in “Methods” section. For the upper tail, let \(0<\lambda <\chi \le 0.5\), we have the analytical result

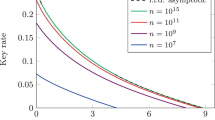

where \(A=\max \{n,k\}\) and \(G=\frac{n+k}{nk}\ln {\frac{n+k}{2\pi nk\lambda (1-\lambda )\epsilon ^{2}}}\). Therefore, the upper bound of \(\chi \) can be given by \(\chi =\lambda +\gamma ^{U}\) with a failure probability \(\epsilon \). Figure 1 shows the comparison results between our method and previous method24,25,26,32, which means that our analytic result is optimal and closes to the numerical results.

Comparison of the random sampling without replacement for five methods: our analytic result, analytic result with Serfling inequality24, approximate analytic result25, numerical result with Shannon entropy function26 and optimal numerical result with binomial coefficient32. Let \(n=10^{5}\) and failure probablity \(\epsilon =10^{-10}\).

Chernoff bound

Let \(X_1, X_2...,X_N\) be a set of independent Bernoulli random variables that satisfy \(\mathrm{Pr}(X_i=1)=p_i\) (not necessarily equal), and let \(X:=\sum _{i=1}^NX_i\). The expected value of X is denoted as \(x^*:=E[X]=\sum _{i=1}^Np_i\). An observed value of X is represented as x for a given trial. Note that, we have \(x\ge 0\), \(x^*\ge 0\), \(x^*\) is known and x is unknown. For any \(\epsilon >0\), we have the upper tail \(\mathrm{Pr}[x\ge (1+\delta ^{U})x^*]\le \epsilon \), where \(\delta ^{U}\) represents \(\delta ^{U}(x^{*},\epsilon )\) and \(\delta ^{U}>0\) is the positive root of the following equation32

For any \(\epsilon >0\), we have the lower tail \(\mathrm{Pr}[x\le (1-\delta ^{L})x^{*}]\le \epsilon \), where \(\delta ^{L}\) represents \(\delta ^{L}(x^{*},\epsilon )\) and \(0<\delta ^{L}\le 1\) is the positive root of the following equation32

By solving Eqs. (3) and (4), we get numerical results of \(\delta ^{U}\) and \(\delta ^{L}\), corresponding to the upper bound and lower bound. Solving transcendental equations Eqs. (3) and (4) are usually very complicated. For the upper tail, by using the inequality \(\ln (1+\delta ^{U})>2\delta ^{U}/(2+\delta ^{U})\) in Eq. (3), we have the analytical result

where we let \(\beta =\ln \epsilon ^{-1}\). For the lower tail, by using the inequality \(-\ln (1-\delta ^{L})<\delta ^{L}(2-\delta ^{L})/[2(1-\delta ^{L})]\) in Eq. (4), we have the analytical result

Therefore, the lower and upper bound of observed value x for a given expected value \(x^*\) can be given by \({\overline{x}}=x^{*}+\frac{\beta }{2}+\sqrt{2\beta x^{*}+\frac{\beta ^2}{4}}\) and \({\underline{x}}=x^{*}-\sqrt{2\beta x^{*}}\) with a failure probability \(\epsilon \), respectively. Note that we must have the lower bound \({\underline{x}}\ge 0\). The analytic result of upper bound in Eq. (5) is also acquired in Ref.26 while we obtain more optimal lower bound in Eq. (6).

Variant of Chernoff bound

Let \(X_1, X_2...,X_N\) be a set of independent Bernoulli random variables that satisfy \(\mathrm{Pr}(X_i=1)=p_i\) (not necessarily equal), and let \(X:=\sum _{i=1}^NX_i\). The expected value of X is denoted as \(x^*:=E[X]=\sum _{i=1}^Np_i\). An observed outcome of X is represented as x for a given trial. Note that, we have \(x\ge 0\), \(x^*\ge 0\), x is known and \(x^*\) is unknown. For any \(\epsilon >0\), we have the upper tail \(\mathrm{Pr}[x^{*}\le x+\Delta ^{U}]\), where we use \(\Delta ^{U}\) represents \(\Delta ^{U}(x,\epsilon )\) and \(\Delta ^{U}\) is the positive root of the following equation32

For any \(\epsilon >0\), we have the upper tail \(\mathrm{Pr}[x^{*}\ge x+\Delta ^{L}]\), where \(\Delta ^{L}\) represents \(\Delta ^{L}(x,\epsilon )\) and \(\Delta ^{L}\) is the positive root of the following equation32

By solving Eqs. (7) and (8), we get numerical results of \(\Delta ^{U}\) and \(\Delta ^{L}\), corresponding to the upper bound and lower bound. Solving transcendental equations Eqs. (7) and (8) are usually very complicated. For the upper tail, by using the inequality \(\ln \left( 1+\frac{\Delta ^{U}}{x}\right) <\frac{\Delta ^{U}}{x}\left( 2+\frac{\Delta ^{U}}{x}\right) /\left[ 2\left( 1+\frac{\Delta ^{U}}{x}\right) \right] \) in Eq. (7), we have the analytical result

For the lower tail, by using the inequality \(\ln \left( 1+\frac{\Delta ^{L}}{x}\right) >2\frac{\Delta ^{L}}{x}/\left( 2+\frac{\Delta ^{L}}{x}\right) \) in Eq. (8), we have the analytical result

Therefore, the lower and upper bound of expected value \(x^{*}\) for a given observed value x can be given by \({\overline{x}}^{*}=x+\beta +\sqrt{2\beta x+\beta ^2}\) and \({\underline{x}}^{*}=x-\frac{\beta }{2}-\sqrt{2\beta x+\frac{\beta ^2}{4}}\) with a failure probability \(\epsilon \), respectively. Note that we must have the lower bound \({\underline{x}}^{*}\ge 0\). Utilizing a simple function transformation, the numerical result of upper bound \({\overline{x}}^{*}\) with Eq. (7) is the same as (Eq. (28) in this paper) in Ref.26, while the analytic result of upper bound is more optimal in this work. The numerical result of lower bound \({\underline{x}}^{*}\) with Eq. (8) is different from that in Ref.26, and the difference between two analytic results of lower bound is only \(\beta \). However, we should point out that our result is always inferior to the Gaussian analysis, while the result of Ref.26 is superior to the Gaussian analysis given a small observed value, details can be found in Fig. 2. It means that our result is rigorous while that of Ref.26 is not. The case of small observed value is very important since the vacuum state is widely used in decoy-state method, especially for the experiment of measurement-device-independent QKD33.

Finite-key analysis for decoy-state BB84 QKD

We exploit our statistical fluctuation analysis methods to deal with finite-key effects against coherent attacks25,34 for BB84 QKD with two decoy states. Note that the four-intensity protocol35 usually has better performance. Compared with previous results24,25,26, we provide the complete extractable secret key formula. For example, the number of vacuum component events, the number of single-photon component events, and the phase error rate associated with the single-photons component events are all required to use observed values in the extractable secret key formula, while all or part of them are taken as the expected values in Ref.24,25,26. Obviously, they are observed values, for instance, the QKD system with single-photon source27.

The asymmetric coding BB84 protocol, based on which we consider our protocol, means that the bases \({\mathsf {Z}}\) and \({\mathsf {X}}\) are chosen with biased probabilities, both when Alice prepare the quantum states and when Bob measure those states. Furthermore, intended to simplifying the protocol a little, we let the secret key be extracted only if Alice and Bob both choose the \({\mathsf {Z}}\) basis. Also, for the same purpose, the protocol will be built on the transmission of phase-randomized laser pulses and makes use of vacuum and weak decoy states. Below we provide a detailed description of the protocol with active basis choosing.

-

1.

Preparation The first three steps are repeated by Alice and Bob for \(i=1,\ldots ,N\) until the conditions in the reconciliation step are satisfied. Alice will prepare weak coherent pulse and encode under the \(\{{\mathsf {Z}},{\mathsf {X}}\}\) basis, along with an intensity \(k \in \{\mu ,\nu ,0 \}\). Let the probability of choosing \({\mathsf {Z}}\) and \({\mathsf {X}}\) basis be \(p_z\) and \(p_x=1-p_z\). Simultaneously, the probabilities of selecting intensities are \(p_{\mu }\), \(p_{\nu }\) and \(p_{0}=1-p_{\mu }-p_{\nu }\), respectively. Then Alice sends the weak coherent pulse to Bob through the insecure quantum channel.

-

2.

Measurement When receiving the pulse, Bob also chooses a basis \({\mathsf {Z}}\) and \({\mathsf {X}}\) with probabilities \(q_{\mathrm{z}}\) and \(q_{\mathrm{x}}=1-q_{\mathrm{z}}\), respectively. Then, he measures the state with two single-photon detectors in that basis. An effective event represents at least one detector click. For double detector click event, he acquires a random bit value.

-

3.

Reconciliation Alice and Bob share the effective event, basis and intensity information with each other using an authenticated classical channel. We use the following sets \({\mathcal {Z}}_{k}\) (\({\mathcal {X}}_{k}\)), which identifies signals where both Alice and Bob select the basis \({\mathsf {Z}}\) (\({\mathsf {X}}\)) for k intensity. Then, they check for \(|{\mathcal {Z}}_{k}| \ge n^{{\mathsf {Z}}}_{k}\) and \(|{\mathcal {X}}_{k}| \ge n^{\mathsf {X}}_{k}\) for all values of k. They repeat step 1 to step 3 until these conditions are satisfied. We remark that the vacuum state prepared by Alice has no basis information.

-

4.

Parameter estimation After reconciling the basis and intensity choices, Alice and Bob will select a size of \(n^{{\mathsf {Z}}}=n^{{\mathsf {Z}}}_{\mu }+n^{{\mathsf {Z}}}_{\nu }\) to get a raw key pair \(({\mathbf{Z}}_{\mathrm{A}},{\mathbf{Z}}_{\mathrm{B}})\). All sets are used to compute the number of vacuum events \(s_0^{\mathsf {Z}}\) and single-photon events \(s_1^{\mathsf {Z}}\) and the phase error rate of single-photon events \(\phi _1^{\mathsf {Z}}\) in \({\mathbf{Z}}_{\mathrm{A}}\). After that, a condition should be met that the phase error rate \(\phi _1^{\mathsf {Z}}\) is less than \( \phi _{{\mathrm{tol}}}\), where \(\phi _{\mathrm{tol}}\) is a predetermined phase error rate. If not, Alice and Bob abort the results and get started again. Otherwise, they move on to step 5.

-

5.

Postprocessing First, Alice and Bob operate an error correction, where they reveal at most \(\lambda _{\mathrm{EC}}\) bits of information. Then, an error-verification step is performed using a random universal\(_{2}\) hash function that announces \(\lceil \log _2\frac{1}{\varepsilon _{\mathrm{cor}}}\rceil \) bits of information36, where \(\varepsilon _{\mathrm{cor}}\) is the probability that a pair of nonidentical keys passes the error-verification step. At last, there is a privacy amplification on their keys to get a secret key pair (\(\mathbf{S }_{\mathrm{A}}\),\(\mathbf{S }_{\mathrm{B}}\)), both of which are \(\ell \) bits, by using a random universal\(_{2}\) hash function.

Before stating how to calculate the security bound, we will spell out our security criteria, i.e., the so-called universally composable framework37. We have two criteria (\(\varepsilon _{\mathrm{cor}}\) and \(\varepsilon _{\mathrm{sec}}\)) to determine how secure of our protocol. If \(\Pr [{\mathbf{S}}_{\mathrm{A}}\not ={\mathbf{S}}_{\mathrm{B}}] \le \varepsilon _{\mathrm{cor}}\), which means the secret keys are identical except with a small probability \(\varepsilon _{\mathrm{cor}}\), we can call it is \(\varepsilon _{\mathrm{cor}}\)-correct. Meanwhile, if \((1-p_{\mathrm{abort}})\Vert \rho _{\mathrm{AE}}-U_{\mathrm{A}} \otimes \rho _{\mathrm{E}}\Vert _1/2 \le \varepsilon _{\mathrm{sec}}\), we can call it is \(\varepsilon _{\mathrm{sec}}\)-secret. Thereinto, \(\rho _{\mathrm{AE}}\) is the classical-quantum state describing the joint state of \({\mathbf{S}}_{\mathrm{A}}\) and \({\mathbf{E}}\), \(U_{\mathrm{A}}\) is the uniform mixture of all possible values of \({\mathbf{S}}_{\mathrm{A}}\), and \(p_{\mathrm{abort}}\) is the probability that the protocol aborts. This security criterion guarantees that the pair of secret keys can be unconditionally safe to use, we can call the protocol is \(\varepsilon \)-secure if it is \(\varepsilon _{\mathrm{cor}}\)-correct and \(\varepsilon _{\mathrm{sec}}\)-secret with \(\varepsilon _{\mathrm{cor}}+\varepsilon _{\mathrm{sec}}\le \varepsilon \).

The protocol is \(\varepsilon _{\mathrm{sec}}\)-secret if the secret key of length \(\ell \) satisfies25

where \(h(x):=-x\log _2x-(1-x)\log _2(1-x)\) is the binary Shannon entropy function. Note that observed values \({\underline{s}}_0^{\mathsf {Z}}\), \({\underline{s}}_1^{\mathsf {Z}}\) and \({\overline{\phi }}_1^{\mathsf {Z}}\) are the lower bound for the number of vacuum events, the lower bound for the number of single-photon events, and the upper bound for the phase error rate associated with the single-photons events in \({\mathbf{Z}}_{\mathrm{A}}\), respectively. Here, we simply assume an error correction leakage \(\lambda _{\mathrm{EC}} = n^{{\mathsf {Z}}}\zeta h(E^{{\mathsf {Z}}})\), with the efficiency of error correction \(\zeta = 1.22\) and the bit error rate \(E^{{\mathsf {Z}}}\) in \(({\mathbf{Z}}_{\mathrm{A}},{\mathbf{Z}}_{\mathrm{B}})\).

Let \(n^{{\mathsf {Z}}}_{k}\) and \(n^{\mathsf {X}}_{k}\) are the observed number of bit in set \({\mathcal {Z}}_{k}\) and \({\mathcal {X}}_{k}\). Let \(m^{{\mathsf {Z}}}_{k}\) and \(m^{\mathsf {X}}_{k}\) denote the observed number of bit error in set \({\mathcal {Z}}_{k}\) and \({\mathcal {X}}_{k}\). Note that one cannot obtain the \(m^{{\mathsf {Z}}}_{\mu }\) and \(m^{{\mathsf {Z}}}_{\nu }\), which we just hypothetically use to estimate the error correction information. The bit error rate is \(E^{{\mathsf {Z}}}=(m^{{\mathsf {Z}}}_{\mu }+m^{{\mathsf {Z}}}_{\nu })/n^{{\mathsf {Z}}}\). By using the decoy-state method for finite sample sizes, we can have the lower bound on the expected numbers of vacuum event \({\underline{s}}_0^{{\mathsf {Z}}^*}\) and single-photon event \({\underline{s}}_1^{{\mathsf {Z}}^{*}}\) in \({\mathbf{Z}}_{\mathrm{A}}\),

where \({\underline{n}}_0^{{\mathsf {Z}}^*}\) and \({\underline{n}}_\nu ^{{\mathsf {Z}}^*}\) (\({\overline{n}}_\mu ^{{\mathsf {Z}}^*}\) and \({\overline{n}}_0^{{\mathsf {Z}}^*}\)) are the lower (upper) bound of expected values associated with the observed values \(n_0^{{\mathsf {Z}}}\) and \(n_\nu ^{{\mathsf {Z}}}\) (\(n_\mu ^{{\mathsf {Z}}}\) and \(n_0^{{\mathsf {Z}}}\)). We can also calculate the lower bound on the expected number of single-photon event \({\underline{s}}_1^{\mathsf {X}^{*}}\) and the upper bound on the expected number of bit error \({\underline{t}}_1^{\mathsf {X}^{*}}\) associated with the single-photon event in \({\mathcal {X}}_{\mu }\cup {\mathcal {X}}_{\nu }\),

where we use a fact that expected value \(m_{0}^{\mathsf {X}^*}\equiv n_{0}^{\mathsf {X}^*}/2\). Parameters \({\underline{n}}_0^{\mathsf {X}^*}\) and \({\underline{n}}_\nu ^{\mathsf {X}^*}\) (\({\overline{n}}_\mu ^{\mathsf {X}^*}\), \({\overline{n}}_0^{\mathsf {X}^*}\) and \({\overline{m}}_{\nu }^{\mathsf {X}^{*}}\)) are the lower (upper) bound of expected values associated with the observed values \(n_0^{\mathsf {X}}\) and \(n_\nu ^{\mathsf {X}}\) (\(n_\mu ^{\mathsf {X}}\), \(n_0^{\mathsf {X}}\) and \(m_{\nu }^{\mathsf {X}}\)). The nine expected values \({\underline{n}}_0^{{\mathsf {Z}}^*}\), \({\underline{n}}_\nu ^{{\mathsf {Z}}^*}\), \({\overline{n}}_\mu ^{{\mathsf {Z}}^*}\), \({\overline{n}}_0^{{\mathsf {Z}}^*}\), \({\underline{n}}_0^{\mathsf {X}^*}\), \({\underline{n}}_\nu ^{\mathsf {X}^*}\), \({\overline{n}}_\mu ^{\mathsf {X}^*}\), \({\overline{n}}_0^{\mathsf {X}^*}\) and \({\overline{m}}_{\nu }^{\mathsf {X}^{*}}\) can be obtained by using the variant of Chernoff bound with Eqs. (9) and (10) for each parameter with failure probability \(\varepsilon _{\mathrm{sec}}/23\), for example, \({\underline{n}}_\nu ^{{\mathsf {Z}}^*}=n_\nu ^{{\mathsf {Z}}}-\Delta ^{L}(n_\nu ^{{\mathsf {Z}}},\varepsilon _{\mathrm{sec}}/23)\).

Once acquiring the four expected values \({\underline{s}}_0^{{\mathsf {Z}}^*}\), \({\underline{s}}_1^{{\mathsf {Z}}^*}\), \({\underline{s}}_1^{\mathsf {X}^*}\) and \({\overline{t}}_1^{\mathsf {X}^{*}}\), one can exploit the Chernoff bound with Eqs. (5) and (6) to calculate the corresponding observed values \({\underline{s}}_0^{{\mathsf {Z}}}\), \({\underline{s}}_1^{{\mathsf {Z}}}\), \({\underline{s}}_1^{\mathsf {X}}\) and \({\overline{t}}_1^{\mathsf {X}}\) for each parameter with failure probability \(\varepsilon _{\mathrm{sec}}/23\), for example, \({\underline{s}}_1^{{\mathsf {Z}}}={\underline{s}}_1^{{\mathsf {Z}}^*}(1-\delta ^{L}({\underline{s}}_1^{{\mathsf {Z}}^*},\varepsilon _{\mathrm{sec}}/23))\). By using the random sampling without replacement with Eq. (2), one can calculate the upper bound of hypothetically observed phase error rate associated with the single-photon events in \({\mathbf{Z}}_{\mathrm{A}}\),

In order to show the performance of our method in terms of the secret key rate and the secure transmission distance, we consider a fiber-based QKD system model with active basis choosing measurement. We use the widely used parameters of a practical QKD system38, as listed in Table 1. For a given experiment, one can directly acquire the parameters \(n_{k}^{{\mathsf {Z}}}\), \(n_{k}^{\mathsf {X}}\), \(m_{k}^{{\mathsf {Z}}}\) and \(m_{k}^{\mathsf {X}}\). For simulation, we can use the formulas \(n_k^{\mathsf {Z}}=Np_{k}p_{z}q_{z} Q_k^{\mathsf {Z}}\), \(n_k^\mathsf {X}=Np_{k}p_{x}q_{x} Q_k^\mathsf {X}\), \(m_k^{\mathsf {Z}}=Np_{k}p_{z}q_{z} E_k^{\mathsf {Z}}Q_k^{\mathsf {Z}}\) and \(m_k^\mathsf {X}=Np_{k}p_{x}q_{x} E_k^\mathsf {X}Q_k^\mathsf {X}\), where \(Q_{k}^{{\mathsf {Z}}}\) and \(Q_{k}^{\mathsf {X}}\) are the gain of Z and X basis when Alice chooses optical pulses with intensity k. For vacuum state without basis information, we should reset \(n_0^{\mathsf {Z}}=Np_{0}q_{z} Q_0^{\mathsf {Z}}\), \(n_0^\mathsf {X}=Np_{0}q_{x} Q_0^\mathsf {X}\), \(m_0^{\mathsf {Z}}=Np_{0}q_{z} E_0^{\mathsf {Z}}Q_0^{\mathsf {Z}}\) and \(m_0^\mathsf {X}=Np_{0}q_{x} E_0^\mathsf {X}Q_0^\mathsf {X}\). \(E_{k}^{{\mathsf {Z}}}\) and \(E_{k}^{\mathsf {X}}\) are the bit error rate of Z and X basis when Alice chooses optical pulses with intensity k. Without loss of generality, these gain and bit error rate parameters can be given by23

where we assume that those observed values for different parameters can be denotes by their asymptotic values without Eve’s disturbance. \(\eta =\eta _{d}\times 10^{-\alpha L/10}\) is the overall efficiency with the fiber length L and single-photon detector (Table 1).

To show the advantage of our results compared with previous works24,25,26, we drew the curves about the secret key rate \(\ell /N\) as function of the fiber length, as shown in Fig. 3. For a given number of signals \(10^{10}\), only ten seconds in 1 GHz system, we optimize numerically \(\ell /N\) over all the free parameters. For fair comparison, we add a step about from expected value to observed value estimation for all curves, which is not taken into account in Refs.24,25. The corresponding methods of Refs.23,24,25,26 to deal with statistical fluctuation can be summarized in Methods. Note that the black dashed line uses the Gaussian analysis to obtain expected value instead of the inverse solution Chernoff bound method26. The simulation results show that the secret key rate and secure transmission distance of our method have significant advantage under the security against the general attacks.

Conclusion

In this work, we proposed the almost optimal analytical formulas to deal with the statistical fluctuation under the security against the general attacks. Analytical formulas of classical postprocessing can be expediently used in practical system, which do not introduce complex calculations of resource consumption. Our methods can directly increase the performance without changing the quantum process, which should be widely used to quantum cryptography protocols against the finite-size effects. In order to compare with previous works, we establish the complete finite-key analysis for decoy-state BB84 QKD, including from observed value to expected value, from expected value to observed value and from the observed bit error of \({\mathsf {X}}\) basis to hypothetical observed phase error of \({\mathsf {Z}}\) basis. We remark that the joint constraint method39 can further decrease the statistical fluctuation. However, we do not consider this issue in this paper due to the lack of the analytical solutions, which is difficult to implement in commercial systems. The secret key rate of decoy-state BB84 QKD is linear scaling with channel transmittance \(\eta \), which has been shown by the repeaterless PLOB bound40.

Methods

Proof of random sampling without replacement

Here, we use the technique of Ref.31 to acquire the correct analytical results. We remark that the result of Ref.31 is wrong due to the incorrect inequality scaling about binomial coefficient and Eq. (11) in supplementary information of Ref.31. Specifically, a sharp double inequality for binomial coefficient can be given by Ref.41,

where \(m>p\ge 1\) and \(x\ge 1\). Ref.31 directly substitutes \(p = \alpha \), \(m = 1\) and \(x=n\) to give a inequality about binomial coefficient. Actually, one has \(\alpha \le 0.5\) in the calculation of the phase error rate. Therefore, one cannot simply let \(p=\alpha \). Besides, there is a minus sign in Eq. (11) in supplementary information of Ref.31, thus, one cannot directly exploit \(\lambda \) to replace \(\lambda _{\mathrm{all}}\).

For the upper tail, the failure probability \(\epsilon \) can be bound by29,31,32 \(C_{k}^{k \lambda } C_{n}^{n \chi }/C_{n+k}^{(n+k)y}\), where \(y=\lambda +\frac{n}{n+k} \gamma \) and \(\chi =\lambda +\gamma \). Let \(F(\alpha , n)=\frac{\alpha ^{-\alpha n}(1-\alpha )^{-(1-\alpha ) n}}{\sqrt{2 \pi n \alpha (1-\alpha )}}\) and the sharp double inequality for binomial coefficient41

where we let \(p=1\), \(\alpha =\frac{1}{m}\) and \(mx=n\) in Eq. (16). One can give the following inequality for failure probability

where Shannon entropy function \(h(x)=-x \log _{2} x-(1-x) \log _{2}(1-x)\). Note that one can prove \(e^{\left( \frac{1}{8(n+k)y}+\frac{1}{12k}-\frac{1}{12k\lambda +1}-\frac{1}{12k(1-\lambda )+1}+\frac{1}{12 n}-\frac{1}{12 n\chi +1}-\frac{1}{12 n(1-\chi )+1}\right) }<1\) and \(\sqrt{\frac{y(1-y) }{\chi (1-\chi )}}<1\) for \(n,k>0\) and \(0<\lambda<y<\chi \le 0.5\). Thereby, the inequality can be given by

By using Taylor expanding for the case of \(n\ge k\), we have \(n h(\chi )+k h(\lambda )-(n+k) h(y) \le \frac{h^{\prime \prime }(y)}{2} \frac{\gamma ^{2} n k}{n+k}\), where \(h^{\prime \prime }(y)=-\frac{1}{y(1-y) \ln 2}\). Therefore, by solving a quadratic equation with one unknown, we have

where parameter \(G=\frac{n+k}{nk}\ln {\frac{n+k}{2\pi nk\lambda (1-\lambda )\epsilon ^{2}}}\). By using Taylor expanding for the case of \(n\le k\), we have the following inequalities \(n h(\chi )+k h(\lambda )-(n+k) h(y)\le n h(\lambda )+k h(\chi )-(n+k) h(z)\le \frac{h^{\prime \prime }(z)}{2} \frac{\gamma ^{2} n k}{n+k}\) where \(z=\lambda +\frac{k}{n+k} \gamma \) and \(h^{\prime \prime }(z)=-\frac{1}{z(1-z) \ln 2}\). Therefore, by solving a quadratic equation with one unknown, we have

Note that the above result is always true for all \(n,k>0\) and \(0<\lambda <\chi \le 0.5\).

Method in Ref.24

The upper bound of the random sampling without replacement can be calculated by using the Serfling inequality,

The upper bound and lower bound of expected value for a given observed value can be calculated by using the multiplicative form Chernoff bound as follows. We always can obtain the worst lower bound of expected value, \(\mu _{\mathrm{L}}=x-\sqrt{N/2\ln {\epsilon ^{-1}}}\), where N is the total number of random variables. Let \(test_1\), \(test_2\) and \(test_3\) denote, respectively, the following three conditions: \(\mu _{\mathrm{L}}\ge \frac{32}{9}\ln (2\epsilon _{1}^{-1})\), \(\mu _{\mathrm{L}}>3\ln \epsilon _{2}^{-1}\) and \(\mu _{\mathrm{L}}>\left( \frac{2}{2e-1}\right) ^{2}\ln \epsilon _{2}^{-1}\), and let \(g(x,y)=\sqrt{2x\ln {y^{-1}}}\). Now:

-

1.

When \(test_1\) and \(test_2\) are fulfilled, we have that \(\Delta ^{U}=g(x, \epsilon _{1}^4/16)\) and \(\Delta ^{L}=g(x, \epsilon _{2}^{3/2})\).

-

2.

When \(test_1\) and \(test_3\) are fulfilled (and \(test_2\) is not fulfilled), we have that \(\Delta ^{U}=g(x, \epsilon _{1}^4/16)\) and \(\Delta ^{L}=g(x, \epsilon _{2}^{2})\).

-

3.

When \(test_1\) is fulfilled and \(test_3\) is not fulfilled, we have that \(\Delta ^{U}=g(x, \epsilon _{1}^4/16)\) and \(\Delta ^{L}=\sqrt{N/2\ln {\epsilon _{2}^{-1}}}\).

-

4.

When \(test_1\) is not fulfilled and \(test_2\) is fulfilled, we have that \(\Delta ^{U}=\sqrt{N/2\ln {\epsilon _{1}^{-1}}}\) and \(\Delta ^{L}=g(x, \epsilon _{2}^{3/2})\).

-

5.

When \(test_1\) and \(test_2\) are not fulfilled, and \(test_3\) is fulfilled, we have that \(\Delta ^{U}=\sqrt{N/2\ln {\epsilon _{1}^{-1}}}\) and \(\Delta ^{L}=g(x, \epsilon _{2}^{2})\).

-

6.

When \(test_1\), \(test_2\) and \(test_3\) are not fulfilled, we have that \(\Delta ^{U}=\sqrt{N/2\ln {\epsilon _{1}^{-1}}}\) and \(\Delta ^{L}=\sqrt{N/2\ln {\epsilon _{2}^{-1}}}\)

To simplify this simulation, we consider the case of \(\epsilon =\epsilon _{1}=\epsilon _{2}\). For all observed value x, we make \({\overline{x}}^{*}=x+\Delta ^{U}\) and \({\underline{x}}^{*}=x-\Delta ^{L}\), where

Note that it is not rigorous in Eq. (23) for small x.

Method in Ref.25

The upper bound of the random sampling without replacement can be calculated by

where the result is true only when n and k are large.

The upper bound and lower bound of expected value for a given observed value can be calculated by using the tailored Hoeffding inequality for decoy-state method. Let \(x_{k}\) be the observed value for k intensity and \(X=\sum _{k}x_{k}\). Therefore, we have \({\overline{x}}_{k}^{*}=x_{k}+\Delta ^{U}\) and \({\underline{x}}_{k}^{*}=x_{k}-\Delta ^{L}\), where

Note that the deviation is the same for all intensities of k, which will lead large fluctuation for small intensity, especially vacuum state.

Method in Ref.26

The upper bound of the random sampling without replacement can be calculated by using the following transcendental equation,

The upper bound and lower bound of expected value for a given observed value can be calculated by using the Gaussian analysis. Therefore, we have \({\overline{x}}^{*}=x+\Delta ^{U}\) and \({\underline{x}}^{*}=x-\Delta ^{L}\) with

where \(a=\mathrm{erfcinv}(b)\) is the inverse function of \(b=\mathrm{erfc}(a)\) and \(\mathrm{erfc}(a)=\frac{2}{\sqrt{\pi }}\int _{a}^{\infty }e^{-t^2}dt\) is the complementary error function.

Furthermore, the upper bound and lower bound of expected value for a given observed value can also be calculated by using the inverse solution Chernoff bound. Therefore, we have \({\overline{x}}^{*}=x/(1-\delta ^{U})\) and \({\underline{x}}^{*}=x/(1+\delta ^{L})\), where \(\delta ^{U}\) and \(\delta ^{L}\) can be obtained by using the following transcendental equation,

while the slightly looser analytic result can be given by

and

Through simple calculation, the upper bound and lower bound are \({\overline{x}}^{*}=x+\frac{3}{2}\beta +\sqrt{2\beta x+\frac{9}{4}\beta ^{2}}\) and \({\underline{x}}^{*}=x+\frac{\beta }{2}-\sqrt{2\beta x+\frac{\beta ^{2}}{4}}\), respectively.

References

Pirandola, S. et al. Advances in Quantum Cryptography. arXiv:1906.01645.

Xu, F., Ma, X., Zhang, Q., Lo, H.-K. & Pan, J.-W. Secure quantum key distribution with realistic devices. Rev. Mod. Phys. 92, 025002 (2020).

Bennett, C. H. & Brassard, G. Quantum cryptography: Public key distribution and coin tossing. in Proceedings of the Conference on Computers, Systems and Signal Processing, 175–179 (IEEE Press, New York, 1984).

Bennett, C. H., Brassard, G. & Mermin, N. D. Quantum cryptography without Bells theorem. Phys. Rev. Lett. 68, 557 (1992).

Bennett, C. H. Quantum cryptography using any two nonorthogonal states. Phys. Rev. Lett. 68, 3121 (1992).

Bruß, D. Optimal eavesdropping in quantum cryptography with six states. Phys. Rev. Lett. 81, 3018 (1998).

Grosshans, F. & Grangier, P. Continuous variable quantum cryptography using coherent states. Phys. Rev. Lett. 88, 057902 (2002).

Weedbrook, C. et al. Quantum cryptography without switching. Phys. Rev. Lett. 93, 170504 (2004).

Braunstein, S. L. & Pirandola, S. Side-channel-free quantum key distribution. Phys. Rev. Lett. 108, 130502 (2012).

Lo, H.-K., Curty, M. & Qi, B. Measurement-device-independent quantum key distribution. Phys. Rev. Lett. 108, 130503 (2012).

Wang, X.-B. Three-intensity decoy-state method for device-independent quantum key distribution with basis-dependent errors. Phys. Rev. A 87, 012320 (2013).

Mayers, D. Unconditional security in quantum cryptography. J. ACM 48, 351–406 (2001).

Lo, H.-K. & Chau, H. F. Unconditional security of quantum key distribution over arbitrarily long distances. Science 283, 2050–2056 (1999).

Shor, P. W. & Preskill, J. Simple proof of security of the bb84 quantum key distribution protocol. Phys. Rev. Lett. 85, 441 (2000).

Tamaki, K., Koashi, M. & Imoto, N. Unconditionally secure key distribution based on two nonorthogonal states. Phys. Rev. Lett. 90, 167904 (2003).

Renner, R. Security of quantum key distribution. Int. J. Quant. Inf. 6, 1–127 (2008).

Koashi, M. Simple security proof of quantum key distribution based on complementarity. New J. Phys. 11, 045018 (2009).

Tomamichel, M. & Renner, R. Uncertainty relation for smooth entropies. Phys. Rev. Lett. 106, 110506 (2011).

Brassard, G., Lütkenhaus, N., Mor, T. & Sanders, B. C. Limitations on practical quantum cryptography. Phys. Rev. Lett. 85, 1330 (2000).

Hwang, W.-Y. Quantum key distribution with high loss: Toward global secure communication. Phys. Rev. Lett. 91, 057901 (2003).

Wang, X.-B. Beating the photon-number-splitting attack in practical quantum cryptography. Phys. Rev. Lett. 94, 230503 (2005).

Lo, H.-K., Ma, X. & Chen, K. Decoy state quantum key distribution. Phys. Rev. Lett. 94, 230504 (2005).

Ma, X., Qi, B., Zhao, Y. & Lo, H.-K. Practical decoy state for quantum key distribution. Phys. Rev. A 72, 012326 (2005).

Curty, M. et al. Finite-key analysis for measurement-device-independent quantum key distribution. Nat. Commun. 5, 3732 (2014).

Lim, C. C. W., Curty, M., Walenta, N., Xu, F. & Zbinden, H. Concise security bounds for practical decoy-state quantum key distribution. Phys. Rev. A 89, 022307 (2014).

Zhang, Z., Zhao, Q., Razavi, M. & Ma, X. Improved key-rate bounds for practical decoy-state quantum-key-distribution systems. Phys. Rev. A 95, 012333 (2017).

Tomamichel, M., Lim, C. C. W., Gisin, N. & Renner, R. Tight finite-key analysis for quantum cryptography. Nat. Commun. 3, 634 (2012).

Hayashi, M. Practical evaluation of security for quantum key distribution. Phys. Rev. A 74, 022307 (2006).

Fung, C.-H.F., Ma, X. & Chau, H. Practical issues in quantum-key-distribution postprocessing. Phys. Rev. A 81, 012318 (2010).

Lucamarini, M., Dynes, J. F., Fröhlich, B., Yuan, Z. & Shields, A. J. Security bounds for efficient decoy-state quantum key distribution. IEEE J. Sel. Top. Quant. Electron. 21, 6601408 (2015).

Korzh, B. et al. Provably secure and practical quantum key distribution over 307 km of optical fibre. Nat. Photon. 9, 163 (2015).

Yin, H.-L. & Chen, Z.-B. Finite-key analysis for twin-field quantum key distribution with composable security. Sci. Rep. 9, 17113 (2019).

Yin, H.-L. et al. Measurement-device-independent quantum key distribution over a 404 km optical fiber. Phys. Rev. Lett. 117, 190501 (2016).

Hayashi, M. & Nakayama, R. Security analysis of the decoy method with the Bennett–Brassard 1984 protocol for finite key lengths. New J. Phys. 16, 063009 (2014).

Yu, Z.-W., Zhou, Y.-H. & Wang, X.-B. Reexamination of decoy-state quantum key distribution with biased bases. Phys. Rev. A 93, 032307 (2016).

Wegman, M. N. & Carter, J. L. New hash functions and their use in authentication and set equality. J. Comput. Syst. Sci. 22, 265–279 (1981).

Müller-Quade, J. & Renner, R. Composability in quantum cryptography. New J. Phys. 11, 085006 (2009).

Gobby, C., Yuan, Z. & Shields, A. Quantum key distribution over 122 km of standard telecom fiber. Appl. Phys. Lett. 84, 3762–3764 (2004).

Zhou, Y.-H., Yu, Z.-W. & Wang, X.-B. Making the decoy-state measurement-device-independent quantum key distribution practically useful. Phys. Rev. A 93, 042324 (2016).

Pirandola, S., Laurenza, R., Ottaviani, C. & Banchi, L. Fundamental limits of repeaterless quantum communications. Nat. Commun. 8, 15043 (2017).

Stanica, P. Good lower and upper bounds on binomial coefficients. J. Inequal. Pure Appl. Math. 2, 30 (2001).

Acknowledgements

We gratefully acknowledge support from the National Natural Science Foundation of China under Grant No. 61801420, the Fundamental Research Funds for the Central Universities and the Nanjing University.

Author information

Authors and Affiliations

Contributions

H.-L.Y. and Z.-B.C. conceived and designed the study. H.-L.Y., M.-G.Z. and J. G. make the proof. H.-L.Y., Y.-M.X. and Y.-S.-L. simulate the result. All the authors did participate to manuscript writing.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yin, HL., Zhou, MG., Gu, J. et al. Tight security bounds for decoy-state quantum key distribution. Sci Rep 10, 14312 (2020). https://doi.org/10.1038/s41598-020-71107-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-71107-6

This article is cited by

-

Single-emitter quantum key distribution over 175 km of fibre with optimised finite key rates

Nature Communications (2023)

-

Statistical verifications and deep-learning predictions for satellite-to-ground quantum atmospheric channels

Communications Physics (2022)

-

More optimal relativistic quantum key distribution

Scientific Reports (2022)

-

Finite key effects in satellite quantum key distribution

npj Quantum Information (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.