Abstract

Development of paper-based microfluidic devices that perform colorimetric measurements requires quantitative image analysis. Because the design geometries of paper-based microfluidic devices are not standardized, conventional methods for performing batch measurements of regularly spaced areas of signal intensity, such as those for well plates, cannot be used to quantify signal from most of these devices. To streamline the device development process, we have developed an open-source program called ColorScan that can automatically recognize and measure signal-containing zones from images of devices, regardless of output zone geometry or spatial arrangement. This program, which measures color intensity with the same accuracy as standard manual approaches, can rapidly process scanned device images, simultaneously measure identified output zones, and effectively manage measurement results to eliminate requirements for time-consuming and user-dependent image processing procedures.


Introduction

Paper-based microfluidic devices enable measurement capabilities for a number of fields, from clinical diagnostics1 to environmental management2 and food quality monitoring,3 by employing a variety of detection strategies with different signal output formats. These self-contained analytical systems are typically fabricated from paper patterned with hydrophobic barriers, made from materials such as wax,4 photoresist,5 glue,6 or PDMS.7 Patterned paper layers can be stacked8 or folded9 to create three-dimensional fluidic networks, which enable measurement of target analytes by automating complex liquid handling protocols. Depending on the selected signal formation strategy and analysis method, paper-based microfluidic devices can provide qualitative, semi-quantitative, or quantitative results.10

Paper-based platforms that employ electrochemical,11 fluorescence,12 and chemiluminescence13 detection strategies enable quantitative measurements, but generally require secondary equipment, such as a portable potentiostat14 or handheld UV source.15 To enable measurements without any requirements for specialized external equipment, many developers design devices using colorimetric detection strategies. Qualitative measurements (i.e., on/off sensors) may be interpreted by visual inspection,8,16 and readout zones may be compared to printed read guides1 or designed to provide distance-based outputs17,18,19,20 to enable semi-quantitative measurements. Image analysis is used to characterize assay performance21 during the device development process, but can also be performed at the point-of-use by smartphone applications22 to provide quantitative measurements while reducing user training requirements.23 These applications may operate using algorithms that are specific to the geometry of the device being analyzed or from unpublished code that is not available for modification,24 requiring developers of paper-based devices to develop their own software tools or rely on manual image analysis protocols.

As a critical component of paper-based assay development, especially for qualitative and semi-quantitative devices, image analysis facilitates investigation of device design criteria that determine how a user may interpret developed signal. During the prototyping process, device readout zones are typically imaged using a flatbed scanner or camera, and the acquired images are analyzed to inform device fabrication and assay conditions to provide sufficient analytical performance of the device. Images of device output zones are often measured using free and open-source tools (e.g., ImageJ25, Fiji26) that support user-developed plugins27,28 for application-specific analyses. Although numerous plugins exist, available options do not facilitate streamlined analysis of paper-based output zones of different colors and geometries. While manual approaches for image analysis of colorimetric signals have broad utility for general measurement needs across many fields, application of these tools for analysis of paper-based devices is labor-intensive, user dependent, and time consuming. Our program effectively packages the capabilities of existing general color analysis techniques and applies them towards solving the specific challenges facing the interpretation of paper-based assays (e.g., non-standardized zone numbers and geometries).

For example, to analyze a paper-based output zone in ImageJ, a user must first define a region of interest (ROI) for analysis (e.g., using the “Oval” tool for a circular zone). This region is typically defined on a magnified view of a high-resolution (e.g., 600–800 dpi)8,29 image. At high magnification, it can be difficult for a user to differentiate between the signal-containing area and surrounding material (e.g., hydrophobic wax barrier). To avoid introducing bias in measured signal intensities, this region must also be centered on the output zone so that the selected area does not contain any undesired surrounding material and adequately captures any non-uniform distribution of signal. When the ROI has been placed in the desired location, the output zone may be analyzed by a selected method (e.g., “RGB Measure”)30. After a single measurement is complete, the ROI can be moved to or recreated on the next output zone so that the measurement process can be repeated. The area and placement of this region must be consistent throughout the analysis process so that measurements are consistent across output zones. Measured values can then be copied or exported for further statistical analysis. The reproducibility of these results may vary by software user, as size and placement of the ROI are both manually defined for individual measurements.

Because the device development process typically requires analysis of replicate results across many conditions, potentially necessitating hundreds of devices, image analysis and data processing can be substantially labor-intensive and time-consuming for device developers. For complex devices, these requirements can inhibit broad screening of fabrication or use conditions (e.g., channel geometry, reagent storage, sample volume). Automated image processing can reduce the time required for, and improve the precision of, measurements of colorimetric paper-based assays, but existing ImageJ plugins and available tools are not compatible with the device-specific design geometries31 and spatial arrangements of color localization found in most paper-based devices.

Existing ImageJ plugins, such as “ReadPlate”32, enable automated analysis of images of well plates with standard configurations (e.g., 96-well plates). This plugin streamlines analysis by allowing the user to define a grid of circular regions of interest that is superimposed on the well plate image. The grid is created by defining the number of rows and columns in the well plate, the pixel coordinates of bounding wells (e.g., wells A1 and H12), and the diameter of each analysis spot. Because paper-based devices are designed in custom, non-linear geometries33 according to their intended performance and function, their output zones typically do not follow the spatial arrangement of commercial liquid handling tools. Other ImageJ plugins, including “Template Matching”34 and “Template Matching and Slice Alignment”35, can perform automated recognition of desired image features based on a user-generated reference template or selection. These tools are designed to recognize the extent of agreement between a reference template and a larger image and may not be sufficient for recognizing multiple shapes or colors within a single image.
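For comparison, the regular grid that a ReadPlate-style plugin superimposes can be sketched as a linear interpolation between the pixel coordinates of the two bounding wells. This is a minimal Python sketch under that assumption (an axis-aligned, evenly spaced plate), not the plugin's own code; the helper name `plate_grid` is hypothetical.

```python
def plate_grid(rows, cols, first, last):
    """Return {well_label: (x, y)} for a regular plate layout.

    Hypothetical helper: assumes wells lie on an axis-aligned rectangular
    grid whose corner wells (e.g., A1 and H12) sit at pixel coordinates
    `first` and `last`.
    """
    (x0, y0), (x1, y1) = first, last
    # Spacing between adjacent well centers along each axis.
    dx = (x1 - x0) / (cols - 1) if cols > 1 else 0.0
    dy = (y1 - y0) / (rows - 1) if rows > 1 else 0.0
    grid = {}
    for r in range(rows):
        for c in range(cols):
            label = f"{chr(ord('A') + r)}{c + 1}"
            grid[label] = (x0 + c * dx, y0 + r * dy)
    return grid


# A 96-well plate (8 rows x 12 columns) with A1 at (100, 100) and H12 at (1200, 800).
wells = plate_grid(8, 12, (100, 100), (1200, 800))
print(len(wells), wells["A1"], wells["H12"], wells["A2"])
```

Every region position follows from two coordinates and two counts, which is why this approach cannot describe output zones that do not sit on a rectangular lattice.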

Since the development of early paper-based devices, cellular phones have been used to enable quantitative analysis of colorimetric signal21. As smartphone technologies and quantitative measurement accessories36,37 have advanced over time, many groups have written custom applications to quantify signal from paper-based devices at the point-of-care22,38,39,40,41,42. Smartphone image analysis applications are typically tailored to the geometries of individual paper-based assays43 and cannot be used to measure output zones that differ from those of the original device. In many cases, the positions of test zones are detected using registration marks patterned within the paper device22,44. These recognition algorithms do not independently identify the positions of signal formation and instead analyze known areas of signal formation. Additionally, the source code for these applications is not always published with scientific manuscripts24, and the resulting lack of modifiable open-source options requires developers of paper-based devices to either (i) create their own analysis software or (ii) rely on existing inefficient options throughout the assay development process.

To address this shortcoming, we have developed a free, open-source program called ColorScan that enables streamlined, automated analysis of paper-based microfluidic devices. This Python-based program automatically identifies and measures signal-containing zones of any geometry or color from images of paper-based devices. Our tool provides a variety of quantitative measurement options based on user-specified criteria and effectively manages data, even providing cropped images of output zones paired with measured results to facilitate figure creation. To verify the performance of this software, we compared the consistency and time requirements of our tool to manual measurements completed using ImageJ. Our software, which has the potential to simplify the time- and labor-intensive process of quantitative image analysis for paper-based devices, is freely available31,45,46 as an easy-to-use Python program to facilitate widespread use and further improvement by other developers of colorimetric sensors.

Experimental design

Identification of desired software features

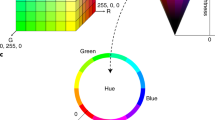

We designed ColorScan to automate the workflow of our image analysis process, which consists of three main steps: (i) selection of a region of interest, (ii) color intensity measurement within the selected region, and (iii) management of measured results. When a paper-based device is imaged to facilitate analysis, arbitrary placement or rotation of the device on the scanner bed or within the camera’s field of view can lead to variability of output zone position across replicate devices. Manual selection of analysis regions can be performed regardless of zone position but is time-consuming, while patterned registration marks for automated analysis programs place design constraints on device developers. We designed ColorScan to automatically recognize colored regions of an image based on hue (i.e., the color or shade of the output zone), saturation (i.e., the purity of the color; weakly saturated pixels appear gray), and value (i.e., the brightness of the output zone). This recognition step is not dependent on the spatial location of colored pixels within the image file, enabling automated analysis of devices imaged in any orientation.

Manual analysis protocols, in addition to being labor-intensive and user-dependent, usually allow an image to be measured in only a single color space. Device images are typically acquired in the RGB color space, requiring conversion to determine whether another color space (e.g., HSV, CIELAB)47,48 is more sensitive to the signal formed by a particular sensor or more intuitive for interpretation by visual inspection49. Standard image analysis approaches also require measurement results to be manually tracked and compiled into spreadsheets for processing. We developed ColorScan not only to automate analysis in multiple color spaces but also to manage results effectively by organizing them in a common location (i.e., a .csv file) for direct comparison during the assay development process. To streamline comparison of measurement results to their respective output zones, as well as process device images for presentation or publication, we configured ColorScan to crop and save an image of each measured output zone. The file name of each image is labeled so that it may be paired with its numerical measurement results.
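As an illustration of what such a conversion involves, the sketch below expresses one 8-bit pixel in RGB, HSV, and CIELAB using only the Python standard library. It assumes sRGB input, a D65 white point, and the standard sRGB-to-XYZ matrix; these are assumptions of this sketch, not statements about ColorScan's settings, and the helper names are hypothetical.

```python
import colorsys

# D65 reference white in CIE XYZ (Y normalized to 1); an assumption of this sketch.
XN, YN, ZN = 0.95047, 1.00000, 1.08883


def srgb_to_linear(c):
    """Undo the sRGB gamma curve for one channel in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def rgb_to_lab(r, g, b):
    """Convert 8-bit sRGB to CIELAB (L* from 0 to 100) under D65."""
    rl, gl, bl = (srgb_to_linear(v / 255.0) for v in (r, g, b))
    # Linear sRGB -> CIE XYZ with the standard sRGB/D65 matrix.
    x = 0.4124 * rl + 0.3576 * gl + 0.1805 * bl
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    z = 0.0193 * rl + 0.1192 * gl + 0.9505 * bl

    def f(t):
        # CIE piecewise cube-root function.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / XN), f(y / YN), f(z / ZN)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def describe(r, g, b):
    """Return one pixel expressed in RGB, HSV (hue in degrees), and CIELAB."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return {"RGB": (r, g, b), "HSV": (h * 360, s, v), "LAB": rgb_to_lab(r, g, b)}


print(describe(128, 0, 128))  # a purple pixel
```

OpenCV's `cv2.cvtColor` rescales 8-bit results (for example, hue to 0–179 and L* to 0–255), so values reported by an OpenCV-based tool may differ in scale from the ones this sketch prints.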

Computational analysis approach

To make our software broadly accessible and readily modifiable, we chose to develop it using Python50, a programming language that is used in a wide range of fields, has a large assortment of libraries, and is compatible with a number of third-party modules. We used OpenCV51, an extensive open-source computer vision library, in conjunction with NumPy52, an array manipulation and numerical operation library, to perform image processing tasks. We also developed a graphical user interface, using the Python TkInter library53, to ensure that ColorScan would be accessible to a broad user base without requiring device developers to be experienced in coding. The source script for our Python-based program is available for download at our group’s GitHub page54, and we have included a detailed User Guide document, including instructions for downloading and using Python, as part of the Supplementary Information.

The first step in our image analysis protocol (Fig. 1A) is identification of the colorimetric signal contained by the output zones of a paper-based device. We designed ColorScan to automatically recognize the color of output zones against the contrasting color of the patterned hydrophobic barrier, which is black wax in our devices. The software masks the area around the zones by temporarily converting the image to the HSV (i.e., Hue, Saturation, Value) color-space, and excluding pixels below minimum saturation and value thresholds defined by the user. This masking step produces a binarized image (Fig. 1B), in which color-containing pixels within the device output zones are identified for further refinement.
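The masking rule can be sketched per pixel: convert to HSV and keep only pixels whose saturation and value both clear the user's thresholds. In OpenCV this would typically be `cv2.cvtColor(image, cv2.COLOR_BGR2HSV)` followed by `cv2.inRange`; the dependency-free sketch below uses `colorsys` instead, and the threshold scale (fractions from 0 to 1) and the sample pixel colors are assumptions for illustration.

```python
import colorsys


def hsv_mask(image, min_saturation, min_value):
    """Binarize an RGB image: 1 where a pixel is colored and bright enough.

    `image` is a list of rows of (r, g, b) tuples with 8-bit channels.
    Thresholds are fractions in [0, 1]; ColorScan's own slider ranges are
    not specified here and may differ.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            _, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            # Black wax fails the value test; gray or white paper fails the saturation test.
            mask_row.append(1 if s >= min_saturation and v >= min_value else 0)
        mask.append(mask_row)
    return mask


BLACK_WAX = (20, 20, 20)
WHITE_PAPER = (240, 240, 235)
PINK_SIGNAL = (230, 90, 150)

image = [
    [BLACK_WAX, BLACK_WAX, BLACK_WAX],
    [BLACK_WAX, PINK_SIGNAL, WHITE_PAPER],
]
print(hsv_mask(image, min_saturation=0.25, min_value=0.3))
```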

Figure 1. ColorScan image analysis protocol. (A) Scanned image of paper-based microfluidic device output zones filled with dyes. (B) A binarized image generated using value and saturation masking thresholds identifies areas of the image that contain colorimetric signal. (C) The masked image is blurred to smooth zone boundaries before contour detection. (D) A contour identification step highlights the borders of all white areas within the image (magenta). A reference contour (yellow) can be selected for identification of similar contours. (E) Identified contours that are sufficiently similar in size and shape to the reference contour are highlighted in light blue. (F) An analysis region defined within the reference contour is applied to all similar contours to support batch measurement of identical analysis regions. Scale bar is 4 mm.

To reduce the jaggedness of the masked output zone edges, ColorScan performs a box blur (Fig. 1C) based on a user-defined kernel size. Next, the software runs OpenCV’s contour-identification algorithm, which is based on work by Suzuki and Abe55, on the binarized image to define the boundaries of the areas where color intensity measurements may be performed (Fig. 1D). To reduce computation time, we set an arbitrary cutoff that discards contours enclosing fewer than five pixels. This threshold effectively determines the minimum feature size that ColorScan can detect; because the code is open source, users can modify this threshold to suit their needs. The maximum feature size is, in principle, the size of the image itself. At this point, the user selects a reference contour to facilitate identification of similar objects for analysis. ColorScan compares the sizes (i.e., pixel area contained within the border) and shapes, defined by the Hu moment invariants56, of the reference contour and all of the identified contours based on thresholds set by the user in the graphical user interface. In this comparison, the OpenCV shape-matching function computes the sum of absolute differences between each of the seven (log-scaled) Hu invariants for the two contours under comparison (i.e., the reference contour and another contour) and produces a score indicating how similar the two contours are. Contours with scores within the user-defined thresholds are selected for batch analysis (Fig. 1E).
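The size-and-shape comparison can be sketched without OpenCV. OpenCV itself provides `cv2.moments`, `cv2.HuMoments`, and `cv2.matchShapes`; to stay dependency-free, the sketch below recomputes Hu invariants from a filled pixel region (whereas `cv2.moments` on a contour integrates over the polygon outline, so values differ slightly) and scores them like OpenCV's `CONTOURS_MATCH_I2` method, summing absolute differences of sign(h)·log10|h|. Which matching method ColorScan uses, and how its Size Tolerance is defined, are not stated here; `is_similar` and its relative-area rule are hypothetical.

```python
import math

EPS = 1e-5  # invariants this small are skipped, as OpenCV's matchShapes does


def hu_invariants(pixels):
    """Seven Hu moment invariants of a filled region given as (x, y) pixels."""
    n = len(pixels)
    xbar = sum(x for x, _ in pixels) / n
    ybar = sum(y for _, y in pixels) / n

    def eta(p, q):
        # Normalized central moment (translation- and scale-normalized).
        mu = sum((x - xbar) ** p * (y - ybar) ** q for x, y in pixels)
        return mu / n ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03
    return [
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        a ** 2 + b ** 2,
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2)
        + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2)
        - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2),
    ]


def log_scaled(h):
    """sign(h) * log10(|h|), the transform OpenCV applies before comparing."""
    return (1 if h > 0 else -1) * math.log10(abs(h))


def shape_score(ref, cand):
    """Sum of |m_ref - m_cand| over log-scaled Hu invariants (0 = same shape)."""
    score = 0.0
    for h_ref, h_cand in zip(hu_invariants(ref), hu_invariants(cand)):
        if abs(h_ref) > EPS and abs(h_cand) > EPS:
            score += abs(log_scaled(h_ref) - log_scaled(h_cand))
    return score


def is_similar(ref, cand, size_tol, shape_tol):
    """Hypothetical filter: relative area difference and shape score within tolerance."""
    size_ok = abs(len(cand) - len(ref)) / len(ref) <= size_tol
    return size_ok and shape_score(ref, cand) <= shape_tol


def rect(x0, y0, w, h):
    """All pixels of a filled w x h rectangle whose top-left corner is (x0, y0)."""
    return [(x, y) for x in range(x0, x0 + w) for y in range(y0, y0 + h)]


square = rect(0, 0, 10, 10)
print(is_similar(square, rect(50, 30, 10, 10), 0.1, 0.05))  # → True (same shape, moved)
print(is_similar(square, rect(0, 0, 25, 4), 0.1, 0.05))     # → False (same area, new shape)
```

Because both checks must pass, a strip with the same pixel area as the reference is still rejected on shape, and a scaled copy of the reference is rejected on size.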

For device designs that use a colored hydrophobic barrier feature to provide contrast for visual interpretation of assay results8,57, the software may recognize these features as part of the output zone. To ensure that inclusion of barrier edge pixels does not bias measurement results, we developed a zone refinement tool that allows the user to geometrically constrain the reference contour to exclude undesired image features. This constraint needs to be defined manually only on the reference contour and is then applied to all similar contours before analysis. This feature also allows the user to select regular polygonal geometries in addition to common circular output zones. Performing this step provides direct control of the exact size and position of each analysis region in a batch measurement (Fig. 1F).

Once the user is satisfied that all of the output zones, and only the output zones, have been selected, the software measures all of the pixels within each contour. Results can be presented as average color intensities in up to three color spaces (RGB, HSV, and CIELAB) and as histograms of RGB intensities, depending on user-defined preferences. Measurements are exported to a .csv file and may be compared to saved images of output zones, which are automatically cropped from the full image based on the bounding rectangle of each output zone contour and saved with their associated index identifier to facilitate data curation and presentation.
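The measurement and bookkeeping step can be sketched as follows: average each channel over a zone's pixels, take the zone's bounding rectangle (the quantity `cv2.boundingRect` returns for a contour), and write one indexed CSV row whose crop file name shares that index. The column names and the `device_zoneN.png` naming scheme are hypothetical, the crops are kept in memory rather than written with an image library such as `cv2.imwrite`, and histograms and non-RGB color spaces are omitted for brevity.

```python
import csv
import io


def measure_zone(image, pixels):
    """Mean R, G, B over a zone plus its bounding rectangle (x, y, w, h)."""
    n = len(pixels)
    sums = [0, 0, 0]
    for x, y in pixels:
        for i, channel in enumerate(image[y][x]):
            sums[i] += channel
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    bbox = (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)
    return [s / n for s in sums], bbox


def crop(image, bbox):
    """Cut the bounding rectangle out of a row-major image."""
    x, y, w, h = bbox
    return [row[x:x + w] for row in image[y:y + h]]


def export(image, zones, stream, stem="device"):
    """Write one CSV row per zone; the crop file name shares the zone index."""
    writer = csv.writer(stream)
    writer.writerow(["zone", "crop_file", "mean_R", "mean_G", "mean_B"])
    crops = {}
    for index, pixels in enumerate(zones, start=1):
        means, bbox = measure_zone(image, pixels)
        name = f"{stem}_zone{index}.png"
        crops[name] = crop(image, bbox)  # a real tool would save this with an image library
        writer.writerow([index, name, *(round(m, 2) for m in means)])
    return crops


demo = [[(255, 255, 255)] * 3 for _ in range(2)]
demo[0][1] = (120, 40, 160)
buffer = io.StringIO()
export(demo, [[(1, 0)]], buffer)
print(buffer.getvalue())
```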

Design of software and graphical user interface

We designed ColorScan to have a simple graphical user interface that would streamline the image analysis process and improve consistency across users. The first step in using ColorScan is selecting an image. Selecting an image using the “Select Image” button displays it in the main window of the software (Fig. 2A). The program supports most image file types, the full list of which can be found in the OpenCV documentation58. The image in the main ColorScan window updates as options in the Analysis Menu window (Fig. 2B) are adjusted. This window is opened with the “Analysis” button after image selection, and its features are spatially arranged, from top to bottom, in the sequence that they should be used to complete image analysis. At the beginning of the analysis process, only the masking controls are available in the Analysis Menu window. Additional features become available as each step in the analysis sequence is completed.

First, the value slider is used to mask the area surrounding the output zones, highlighting pixels above a selected brightness threshold. Next, the saturation slider is used to refine the masked areas by filtering out unsaturated pixels (i.e., those close to grayscale) so that only pixels containing color are shown. After identification of the output zones, the blur options can be used to smooth the mask edges and reduce the granularity of the areas to be analyzed. The “Dilate” and “Erode” buttons expand and shrink the masked and blurred areas, respectively, allowing for further refinement before selection of the output zone contours. Masking and blurring parameters can be saved into a named preset, which can be loaded during subsequent analyses to facilitate rapid, consistent analysis of assay replicates.
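The effect of the blur, “Dilate,” and “Erode” controls on a binary mask can be sketched in plain Python. OpenCV provides `cv2.blur`, `cv2.dilate`, and `cv2.erode` for these operations; the sketch below simplifies edge handling, uses a fixed 3 x 3 neighborhood for dilation and erosion, and re-thresholds the blurred mask at 0.5, all of which are assumptions rather than ColorScan's documented behavior.

```python
def box_blur(mask, k):
    """Mean filter with a k x k window (k odd); edges use only the pixels that exist."""
    h, w, r = len(mask), len(mask[0]), k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [mask[j][i]
                      for j in range(max(0, y - r), min(h, y + r + 1))
                      for i in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = sum(window) / len(window)
    return out


def rethreshold(blurred, level=0.5):
    """Turn a blurred mask back into 0/1 (assumed cutoff of 0.5)."""
    return [[1 if v >= level else 0 for v in row] for row in blurred]


def _morph(mask, combine):
    """Apply max (dilate) or min (erode) over each 3 x 3 neighborhood."""
    h, w = len(mask), len(mask[0])
    return [[combine(mask[j][i]
                     for j in range(max(0, y - 1), min(h, y + 2))
                     for i in range(max(0, x - 1), min(w, x + 2)))
             for x in range(w)] for y in range(h)]


def dilate(mask):
    """Grow the masked area by one pixel."""
    return _morph(mask, max)


def erode(mask):
    """Shrink the masked area by one pixel."""
    return _morph(mask, min)


speck = [[1 if (x, y) == (2, 2) else 0 for x in range(5)] for y in range(5)]
print(rethreshold(box_blur(speck, 3)))
```

Blurring and re-thresholding removes an isolated one-pixel speck, and a dilation followed by an erosion (a morphological closing) fills small gaps while largely preserving zone size.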

Once masking parameters include all of the desired output zones, the user can find the contours of those zones using the “Find Contours” button. This selects all color-containing regions in the image, from which the user may select a reference contour by clicking on it. The “Find Similar Contours” button selects all regions that are similar in size and shape to the reference contour for analysis. The Size Tolerance and Shape Tolerance values should be adjusted so that only the device output zones are highlighted. After these zones are selected for analysis, clicking the “Refine Zones” button opens a separate window that enables geometric restriction of the analysis area within each output zone.
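Selecting a reference by clicking amounts to finding the region that contains the clicked pixel. `cv2.findContours` does this with Suzuki–Abe border following and returns outlines (and `cv2.pointPolygonTest` can test a click against one); the sketch below swaps in a simpler technique, flood-fill connected-component labeling that returns filled pixel sets, and applies the five-pixel minimum described above. The 4-connectivity and the helper names are assumptions.

```python
from collections import deque

MIN_AREA = 5  # mirrors the five-pixel cutoff described in the text


def find_regions(mask):
    """Label 4-connected groups of 1-pixels; return a list of pixel lists."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y0 in range(h):
        for x0 in range(w):
            if mask[y0][x0] and not seen[y0][x0]:
                seen[y0][x0] = True
                queue, region = deque([(x0, y0)]), []
                while queue:  # breadth-first flood fill
                    x, y = queue.popleft()
                    region.append((x, y))
                    for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                        if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((nx, ny))
                if len(region) >= MIN_AREA:  # drop specks below the cutoff
                    regions.append(region)
    return regions


def region_at(regions, click):
    """Return the index of the region containing the clicked (x, y) pixel, or None."""
    for index, region in enumerate(regions):
        if click in region:
            return index
    return None


mask = [
    [1, 1, 1, 0, 0, 0, 0],
    [1, 1, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]
regions = find_regions(mask)
print([len(r) for r in regions], region_at(regions, (4, 3)))
```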

This tool is useful for cases where undesired pixels from the hydrophobic barrier edge (e.g., black wax) or a patterned contrast feature (e.g., colored rings) are selected by the masking and contour-finding steps but should not be included in the area that is being measured. To ensure that only signal from the paper-based assay is measured, the tools in the Refine Zone window allow the user to constrain the position, shape, and area of the analysis region within the reference contour. This analysis geometry is applied to all identified similar contours to provide a consistent measurement area and shape across all analysis regions. It also allows for better handling of potential problem cases where there is insufficient contrast between the color-containing regions of interest (i.e., the test zones) and the background color of the device (e.g., colored wax barriers or the paper itself), which may occur when the assay produces colors that are either very dark (low value) or very pale (low saturation). By refining the zone area, the user can exclude regions that would otherwise be erroneously included in the analysis region. This process can be optimized for a specific device geometry and then saved as a preset to facilitate objective and reproducible measurements across multiple images. These techniques, in combination with judicious device design features (e.g., colored rings printed around output zones to improve contrast), make consistent contour identification possible.
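A named preset can be as simple as a JSON file of parameters. The field names and default values below are hypothetical (ColorScan's actual preset format is not described here); the sketch only shows saving a preset and reloading it with any missing keys filled from defaults.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical preset fields and defaults, for illustration only.
DEFAULT_PRESET = {
    "min_value": 0.30,
    "min_saturation": 0.25,
    "blur_kernel": 5,
    "dilate_steps": 0,
    "erode_steps": 1,
    "size_tolerance": 0.10,
    "shape_tolerance": 0.05,
    "zone": {"shape": "circle", "radius_px": 40, "dx_px": 0, "dy_px": 0, "angle_deg": 0},
}


def save_preset(name, params, folder):
    """Write parameters to <folder>/<name>.json and return the path."""
    path = Path(folder) / f"{name}.json"
    path.write_text(json.dumps(params, indent=2, sort_keys=True))
    return path


def load_preset(name, folder):
    """Load a named preset, filling missing top-level keys from the defaults."""
    params = json.loads((Path(folder) / f"{name}.json").read_text())
    return {**DEFAULT_PRESET, **params}  # shallow merge: a saved "zone" replaces the default


with tempfile.TemporaryDirectory() as folder:
    path = save_preset("six_zone_device", DEFAULT_PRESET, folder)
    print(path.name, load_preset("six_zone_device", folder) == DEFAULT_PRESET)
```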

Importantly, the positional controls in the Refine Zone window (X Displacement, Y Displacement, Angle) can be used to define the analysis region at the end of a paper channel (Fig. 3). Many device designs21,22,57 contain detection zones at the ends of paper channels used for fluid distribution. While the white or grey color of these channels can be difficult to distinguish from colorimetric signal during masking steps in ColorScan, our zone refinement approach allows these features to be analyzed in a controlled, reproducible manner. After zone refinement, pressing the “Analyze” button completes automated measurement of all analysis regions selected by the user-defined criteria and exports results and zone images to the same directory as the original image.
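The positional controls can be sketched as placing one analysis polygon relative to each zone's centroid: the same offset, size, and rotation are reused for every similar zone, which is what makes selections at channel ends reproducible. The mapping of X Displacement, Y Displacement, and Angle onto `dx`, `dy`, and a polygon rotation is an assumption, as are the helper names; `cv2.pointPolygonTest` would be the OpenCV route for the inside test, whereas this sketch uses even-odd ray casting.

```python
import math


def region_vertices(center, radius, sides, dx=0.0, dy=0.0, angle_deg=0.0):
    """Vertices of a regular polygon centered dx, dy from `center`, rotated by angle_deg."""
    cx, cy = center[0] + dx, center[1] + dy
    start = math.radians(angle_deg)
    return [(cx + radius * math.cos(start + 2 * math.pi * k / sides),
             cy + radius * math.sin(start + 2 * math.pi * k / sides))
            for k in range(sides)]


def inside(point, polygon):
    """Even-odd ray-casting test for a point inside a polygon."""
    x, y = point
    result = False
    n = len(polygon)
    for i in range(n):
        (x1, y1), (x2, y2) = polygon[i], polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                result = not result
    return result


def centroid(pixels):
    n = len(pixels)
    return (sum(x for x, _ in pixels) / n, sum(y for _, y in pixels) / n)


def apply_region(zones, radius, sides, dx=0.0, dy=0.0, angle_deg=0.0):
    """Restrict every zone to the same polygon, placed relative to its own centroid."""
    restricted = []
    for pixels in zones:
        poly = region_vertices(centroid(pixels), radius, sides, dx, dy, angle_deg)
        restricted.append([p for p in pixels if inside(p, poly)])
    return restricted


zone = [(x, y) for x in range(21) for y in range(21)]
copy = [(x + 40, y + 5) for x, y in zone]
print([len(r) for r in apply_region([zone, copy], radius=3.5, sides=4)])
```

A regular polygon with many sides approximates a circular analysis region, and because the polygon is defined relative to each centroid, identical zones receive identical selections.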

Figure 3. ColorScan zone refinement tool. Detection zones at the ends of paper channels, like those in the paper-based microfluidic device shown above, can be precisely selected for measurement using the options in the Refine Zone window. Identical zone selections are propagated to the same relative positions in all contours that the user and program have identified as having the same size and shape as the reference contour. This allows for reproducible, precise analyses of replicate assays on multiplexed devices.

Results and Discussion

Color intensity measurements

We compared the user experience and measurement results provided by ColorScan to those of our standard image analysis approach, completed using ImageJ, to evaluate the performance of our custom software. To complete this comparison, we analyzed four replicate paper-based microfluidic devices (Fig. 4A), each containing six circular output zones, using both ImageJ and ColorScan. These four-layered devices, described in the Materials and Methods document as part of the Supplementary Information, contained internally stored and spatially separated reactants for six colorimetric reactions. When these devices were run with water, stored analytes and their reagents were rehydrated and delivered to output zones where colorimetric signal developed to indicate the presence of (i) cysteine, (ii) a neutral pH, (iii) sulfite, (iv) cobalt(II), (v) iron(II), or (vi) molybdate. Each of these zones was surrounded by a wax-printed contrast ring (Fig. 4B), which could be used to support signal interpretation by visual inspection. These devices were not designed to perform measurements for these analytes at the point-of-care, but the homogeneous (e.g., sulfite zone) and heterogeneous (e.g., cobalt(II) zone) distributions of signal intensity in their output zones are representative of signal formation patterns found in practical paper-based microfluidic devices. All devices were imaged using an Epson V600 Photo flatbed scanner at a resolution of 800 dpi. Scanning at lower resolutions is not expected to appreciably bias measurements acquired from a test zone: neighboring pixels that are resolved individually in a high-resolution image are effectively averaged together in a low-resolution image, so the zone mean is preserved. Practically, we suggest 300 dpi as a lower limit for images and scans.
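The averaging argument can be checked with an idealized model in which a lower-resolution scan averages non-overlapping blocks of high-resolution pixels. At 300 dpi one pixel spans 25.4 mm / 300 ≈ 85 µm, so a 4 mm feature (the Fig. 1 scale bar) is still about 47 pixels across, compared with about 126 at 800 dpi. The sketch ignores scanner optics and resampling filters, and the zone values are invented for illustration.

```python
def downsample(image, factor):
    """Average non-overlapping factor x factor blocks (an idealized lower-dpi scan)."""
    rows, cols = len(image) // factor, len(image[0]) // factor
    return [[sum(image[y * factor + j][x * factor + i]
                 for j in range(factor) for i in range(factor)) / factor ** 2
             for x in range(cols)]
            for y in range(rows)]


def zone_mean(image):
    """Mean of all pixel values in a single-channel zone."""
    values = [v for row in image for v in row]
    return sum(values) / len(values)


# Hypothetical 8 x 8 single-channel zone with a striped, non-uniform signal.
zone = [[100 + 10 * ((x + y) % 3) for x in range(8)] for y in range(8)]
for factor in (1, 2, 4):
    print(factor, zone_mean(downsample(zone, factor)))
```

When the blocks tile the zone exactly, the mean of the block averages equals the mean of the original pixels, so the zone average is unchanged; pixels that straddle the zone boundary are where real resolution effects would appear.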

Figure 4. Paper-based microfluidic devices used to complete ColorScan performance evaluation. (A) A scanned image of a strip of four replicate devices was measured in the RGB color space using ImageJ and ColorScan. (B) Each device contained output zones that provided six different colorimetric signals. These zones were bordered by wax-printed contrast rings, a common feature of paper-based devices that are designed to be interpreted by visual inspection. Scale bars in both (A) and (B) are 6 mm.

To complete our performance evaluation, we began by manually measuring the pixel intensity of each output zone in ImageJ using the “RGB Measure” tool30. We created a circular region of interest on the scanned device image, manually measured the output zones in the numerical order shown in Fig. 4B, and then compiled measurement results in a Microsoft Excel spreadsheet. To acquire images of each output zone, as ColorScan does automatically, we manually cropped selected features from the scanned device image using Adobe Photoshop. In total, our manual measurement and image processing steps took approximately 24 min. ColorScan analysis of the same image took only 2 min (roughly a 12-fold reduction) and automatically provided an organized spreadsheet of results and cropped images of output zones. This is a reasonable analysis time for a trained ColorScan user, and we expect the time investment required for users to familiarize themselves with the software to be minimal. Unlike manual analysis performed using ImageJ, the time required to perform automated measurements in ColorScan does not significantly depend on the number of output zones being measured, offering substantial time savings over conventional methods. Measurement results obtained in the RGB color space using each program are shown in Fig. 5. Further details of our performance evaluation, including additional comparisons for the HSV and CIELAB color spaces, are available in the Materials and Methods document of the Supplementary Information.

Figure 5. ColorScan performance evaluation. Solid and diagonally striped bars represent ImageJ and ColorScan measurement results, respectively, performed in each channel of the RGB color space. Spot numbers correspond to the diagram shown in Fig. 4B. Error bars represent standard deviation of four replicate measurements of each numbered output zone.

For each set of output zones measured in the RGB color space, mean pixel intensities acquired using ColorScan were consistent with values obtained using ImageJ. On average, ColorScan and ImageJ values differed by 0.7%, with a maximum difference of 1.8% for the blue channel intensity of the purple-colored signal in output spot 6 of each device (Table 1). These differences may be related to minor inconsistencies in analysis region position or size in each approach, or to the computational methods used to average pixel intensity in each program. Additionally, the variance of mean pixel intensity values for replicate output zones is comparable for measurements performed using ColorScan and ImageJ (Table S3). These results indicate that ColorScan, in addition to providing a user-friendly approach for streamlined image analysis, yields pixel intensity measurements consistent with those of standard image analysis programs.
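Summary statistics of this kind can be computed from paired channel means in a few lines. The reference used for the percentages is not stated here, so the sketch assumes differences relative to the ImageJ value; the numbers are illustrative placeholders, not data from Table 1.

```python
def percent_difference(colorscan, imagej):
    """Relative difference (%) of a ColorScan value from the matching ImageJ value."""
    return abs(colorscan - imagej) / imagej * 100


def summarize(pairs):
    """Mean and maximum percent difference over (colorscan, imagej) pairs."""
    diffs = [percent_difference(c, i) for c, i in pairs]
    return sum(diffs) / len(diffs), max(diffs)


# Illustrative channel means only; these are not values measured in this study.
pairs = [(201.0, 200.0), (98.0, 100.0), (150.0, 150.0)]
print(summarize(pairs))
```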

Conclusions

We have developed an open-source computer program designed specifically to facilitate the automated analysis of colorimetric signals in paper-based microfluidic devices. This program, ColorScan, independently identifies locations of signal formation in images of devices to enable simultaneous measurement of replicate output zones arrayed in any geometry. We intended this approach to address outstanding challenges facing the development of paper-based assays. (i) Because assays conducted in paper devices are not restricted to any set zone size, shape, or spatial orientation, time-intensive manual data analysis can create an arbitrary obstacle to conducting large numbers of replicates or comprehensively evaluating device design criteria. (ii) Manual analyses are user-dependent and difficult to scale; in ColorScan, the use of presets enables reproducible, scalable analyses. ColorScan operates identically whether an image contains few or numerous zones or numerous devices, or whether data sets span numerous images. ColorScan requires minimal user intervention, offers substantial time savings over manual image analysis methods (e.g., via ImageJ), and provides accurate and reproducible pixel intensity measurements in multiple color spaces (RGB, HSV, and CIELAB). Moreover, ColorScan effectively manages measurement results by (i) tagging each identified test zone with a unique identifier; (ii) exporting measurements to a .csv file; (iii) creating histograms of values within each zone, as both .csv tables and .png plot images; (iv) compiling averages and standard deviations for each zone and color space; and (v) generating cropped and centered images of each logged zone to facilitate the design of publication-quality figures. By automating user-dependent aspects of the image analysis process, this tool improves the consistency and speed of color intensity measurements to support evaluation of assay conditions and design criteria during the development of paper-based microfluidics.
Finally, as an open-source tool, ColorScan can facilitate transparency of data analysis techniques.

References

Pollock, N. R. et al. A paper-based multiplexed transaminase test for low-cost, point-of-care liver function testing. Sci. Transl. Med. 4, 152ra129. https://doi.org/10.1126/scitranslmed.3003981 (2012).

Hofstetter, J. C. et al. Quantitative colorimetric paper analytical devices based on radial distance measurements for aqueous metal determination. Analyst 143, 3085–3090. https://doi.org/10.1039/c8an00632f (2018).

Jokerst, J. C. et al. Development of a paper-based analytical device for colorimetric detection of select foodborne pathogens. Anal. Chem. 84, 2900–2907. https://doi.org/10.1021/ac203466y (2012).

Carrilho, E., Martinez, A. W. & Whitesides, G. M. Understanding wax printing: a simple micropatterning process for paper-based microfluidics. Anal. Chem. 81, 7091–7095. https://doi.org/10.1021/ac901071p (2009).

Martinez, A. W., Phillips, S. T., Butte, M. J. & Whitesides, G. M. Patterned paper as a platform for inexpensive, low-volume, portable bioassays. Angew. Chem. Int. Ed. 46, 1318–1320. https://doi.org/10.1002/anie.200603817 (2007).

Cardoso, T. M. G. et al. Versatile fabrication of paper-based microfluidic devices with high chemical resistance using scholar glue and magnetic masks. Anal. Chim. Acta 974, 63–68. https://doi.org/10.1016/j.aca.2017.03.043 (2017).

Bruzewicz, D. A., Reches, M. & Whitesides, G. M. Low-cost printing of poly(dimethylsiloxane) barriers to define microchannels in paper. Anal. Chem. 80, 3387–3392. https://doi.org/10.1021/ac702605a (2008).

Schonhorn, J. E. et al. A device architecture for three-dimensional, patterned paper immunoassays. Lab Chip 14, 4653–4658. https://doi.org/10.1039/c4lc00876f (2014).

Liu, H. & Crooks, R. M. Three-dimensional paper microfluidic devices assembled using the principles of origami. J. Am. Chem. Soc. 133, 17564–17566. https://doi.org/10.1021/ja2071779 (2011).

Fernandes, S. C., Walz, J. A., Wilson, D. J., Brooks, J. C. & Mace, C. R. Beyond wicking: expanding the role of patterned paper as the foundation for an analytical platform. Anal. Chem. 89, 5654–5664. https://doi.org/10.1021/acs.analchem.6b03860 (2017).

Nie, Z. et al. Electrochemical sensing in paper-based microfluidic devices. Lab Chip 10, 477–483. https://doi.org/10.1039/B917150A (2010).

Carrilho, E., Phillips, S. T., Vella, S. J., Martinez, A. W. & Whitesides, G. M. Paper microzone plates. Anal. Chem. 81, 5990–5998. https://doi.org/10.1021/ac900847g (2009).

Ge, L., Wang, S., Song, X., Ge, S. & Yu, J. 3D Origami-based multifunction-integrated immunodevice: low-cost and multiplexed sandwich chemiluminescence immunoassay on microfluidic paper-based analytical device. Lab Chip 12, 3150–3158. https://doi.org/10.1039/C2LC40325K (2012).

Delaney, J. L., Doeven, E. H., Harsant, A. J. & Hogan, C. F. Use of a mobile phone for potentiostatic control with low cost paper-based microfluidic sensors. Anal. Chim. Acta 790, 56–60. https://doi.org/10.1016/j.aca.2013.06.005 (2013).

Connelly, J. T., Rolland, J. P. & Whitesides, G. M. “Paper machine” for molecular diagnostics. Anal. Chem. 87, 7595–7601. https://doi.org/10.1021/acs.analchem.5b00411 (2015).

Kim, S. & Sikes, H. D. Liposome-enhanced polymerization-based signal amplification for highly sensitive naked-eye biodetection in paper-based sensors. ACS Appl. Mater. Interfaces 11, 28469–28477. https://doi.org/10.1021/acsami.9b08125 (2019).

Pratiwi, R. et al. A selective distance-based paper analytical device for copper(II) determination using a porphyrin derivative. Talanta 174, 493–499. https://doi.org/10.1016/j.talanta.2017.06.041 (2017).

Yamada, K., Citterio, D. & Henry, C. S. “Dip-and-read” paper-based analytical devices using distance-based detection with color screening. Lab Chip 18, 1485–1493. https://doi.org/10.1039/c8lc00168e (2018).

Gerold, C. T., Bakker, E. & Henry, C. S. Selective distance-based K+ quantification on paper-based microfluidics. Anal. Chem. 90, 4894–4900. https://doi.org/10.1021/acs.analchem.8b00559 (2018).

Berry, S. B., Fernandes, S. C., Rajaratnam, A., DeChiara, N. S. & Mace, C. R. Measurement of the hematocrit using paper-based microfluidic devices. Lab Chip 16, 3689–3694. https://doi.org/10.1039/C6LC00895J (2016).

Martinez, A. W. et al. Simple telemedicine for developing regions: camera phones and paper-based microfluidic devices for real-time, off-site diagnosis. Anal. Chem. 80, 3699–3707. https://doi.org/10.1021/ac800112r (2008).

Lopez-Ruiz, N. et al. Smartphone-based simultaneous pH and nitrite colorimetric determination for paper microfluidic devices. Anal. Chem. 86, 9554–9562. https://doi.org/10.1021/ac5019205 (2014).

Phillips, E. A., Moehling, T. J., Bhadra, S., Ellington, A. D. & Linnes, J. C. Strand displacement probes combined with isothermal nucleic acid amplification for instrument-free detection from complex samples. Anal. Chem. 90, 6580–6586. https://doi.org/10.1021/acs.analchem.8b00269 (2018).

Dryden, M. D. M., Fobel, R., Fobel, C. & Wheeler, A. R. Upon the shoulders of giants: open source hardware and software in analytical chemistry. Anal. Chem. 89, 4330–4338. https://doi.org/10.1021/acs.analchem.7b00485 (2017).

ImageJ. https://imagej.nih.gov/ij/ (Accessed August 13, 2019).

Fiji: ImageJ, with "Batteries Included". https://fiji.sc/ (Accessed August 13, 2019).

Guzmán, C., Bagga, M., Kaur, A., Westermarck, J. & Abankwa, D. ColonyArea: an ImageJ plugin to automatically quantify colony formation in clonogenic assays. PLoS ONE 9, e92444. https://doi.org/10.1371/journal.pone.0092444 (2014).

Della Mea, V., Baroni, G. L., Pilutti, D. & Di Loreto, C. SlideJ: An ImageJ plugin for automated processing of whole slide images. PLoS ONE 12, e0180540. https://doi.org/10.1371/journal.pone.0180540 (2017).

Martinez, A. W., Phillips, S. T. & Whitesides, G. M. Three-dimensional microfluidic devices fabricated in layered paper and tape. Proc. Natl. Acad. Sci. USA 105, 19606–19611. https://doi.org/10.1073/pnas.0810903105 (2008).

Rasband, W. RGB Measure. https://imagej.nih.gov/ij/plugins/rgb-measure.html (2004).

DeChiara, N. S., Wilson, D. J. & Mace, C. R. An open software platform for the automated design of paper-based microfluidic devices. Sci. Rep. 7, 16224. https://doi.org/10.1038/s41598-017-16542-8 (2017).

Delfino, J. M. ReadPlate. https://imagej.nih.gov/ij/plugins/readplate/index.html (2015).

Wilson, D. J. & Mace, C. R. Reconfigurable pipet for customized, cost-effective liquid handling. Anal. Chem. 89, 8656–8661. https://doi.org/10.1021/acs.analchem.7b02556 (2017).

O’Dell, W. Template matching. https://imagej.nih.gov/ij/plugins/template-matching.html (2005).

Tseng, Q. Template matching and slice alignment—ImageJ plugins. https://sites.google.com/site/qingzongtseng/template-matching-ij-plugin#downloads (Accessed August 14, 2019).

Zhu, H., Yaglidere, O., Su, T.-W., Tseng, D. & Ozcan, A. Cost-effective and compact wide-field fluorescent imaging on a cell-phone. Lab Chip. https://doi.org/10.1039/C0LC00358A (2011).

Laksanasopin, T. et al. A smartphone dongle for diagnosis of infectious diseases at the point of care. Sci. Transl. Med. 7, 273re1. https://doi.org/10.1126/scitranslmed.aaa0056 (2015).

Guan, L. et al. Barcode-like paper sensor for smartphone diagnostics: an application of blood typing. Anal. Chem. 86, 11362–11367. https://doi.org/10.1021/ac503300y (2014).

Delaney, J. L., Hogan, C. F., Tian, J. & Shen, W. Electrogenerated chemiluminescence detection in paper-based microfluidic sensors. Anal. Chem. 83, 1300–1306. https://doi.org/10.1021/ac102392t (2011).

Jalal, U. M., Jin, G. J. & Shim, J. S. Paper–plastic hybrid microfluidic device for smartphone based colorimetric analysis of urine. Anal. Chem. 89, 13160–13166. https://doi.org/10.1021/acs.analchem.7b02612 (2017).

Güder, F. et al. Paper-based electrical respiration sensor. Angew. Chem. Int. Ed. 55, 5727–5732. https://doi.org/10.1002/anie.201511805 (2016).

Choi, J. R. et al. An integrated paper-based sample-to-answer biosensor for nucleic acid testing at the point of care. Lab Chip 16, 611–621. https://doi.org/10.1039/C5LC01388G (2016).

Yetisen, A. K., Martinez-Hurtado, J. L., Garcia-Melendrez, A., da Cruz Vasconcellos, F. & Lowe, C. R. A smartphone algorithm with inter-phone repeatability for the analysis of colorimetric tests. Sens. Actuators B Chem. 196, 156–160. https://doi.org/10.1016/j.snb.2014.01.077 (2014).

Koh, A. et al. A soft, wearable microfluidic device for the capture, storage, and colorimetric sensing of sweat. Sci. Transl. Med. 8, 366ra165. https://doi.org/10.1126/scitranslmed.aaf2593 (2016).

Sanka, R., Lippai, J., Samarasekera, D., Nemsick, S. & Densmore, D. 3DμF—interactive design environment for continuous flow microfluidic devices. Sci. Rep. 9, 9166. https://doi.org/10.1038/s41598-019-45623-z (2019).

Kong, D. S. et al. Open-source, community-driven microfluidics with metafluidics. Nat. Biotechnol. 35, 523–529. https://doi.org/10.1038/nbt.3873 (2017).

Cantrell, K., Erenas, M. M., de Orbe-Payá, I. & Capitán-Vallvey, L. F. Use of the hue parameter of the hue, saturation, value color space as a quantitative analytical parameter for bitonal optical sensors. Anal. Chem. 82, 531–542. https://doi.org/10.1021/ac901753c (2010).

Lathwal, S. & Sikes, H. D. Assessment of colorimetric amplification methods in a paper-based immunoassay for diagnosis of malaria. Lab Chip 16, 1374–1382. https://doi.org/10.1039/C6LC00058D (2016).

Shen, L., Hagen, J. A. & Papautsky, I. Point-of-care colorimetric detection with a smartphone. Lab Chip 12, 4240–4243. https://doi.org/10.1039/c2lc40741h (2012).

Welcome to Python.org. https://www.python.org/ (Accessed August 20, 2019).

OpenCV. https://opencv.org/ (Accessed August 20, 2019).

van der Walt, S., Colbert, S. C. & Varoquaux, G. The NumPy array: a structure for efficient numerical computation. Comput. Sci. Eng. 13, 22–30. https://doi.org/10.1109/MCSE.2011.37 (2011).

tkinter—Python interface to Tcl/Tk. https://docs.python.org/3/library/tkinter.html (Accessed August 20, 2019).

Mace Lab GitHub Repository for ColorScan. https://github.com/MaceLab/ColorScan.

Suzuki, S. & Abe, K. Topological structural analysis of digitized binary images by border following. Comput. Vis. Graph. Image Process. 30, 32–46. https://doi.org/10.1016/0734-189X(85)90016-7 (1985).

Hu, M.-K. Visual pattern recognition by moment invariants. IRE Trans. Inf. Theory 8, 179–187. https://doi.org/10.1109/TIT.1962.1057692 (1962).

Meredith, N. A., Volckens, J. & Henry, C. S. Paper-based microfluidics for experimental design: screening masking agents for simultaneous determination of Mn(II) and Co(II). Anal. Methods 9, 534–540. https://doi.org/10.1039/C6AY02798A (2017).

Reading and Writing Images—OpenCV 3.0.0-dev documentation. https://docs.opencv.org/3.0-beta/modules/imgcodecs/doc/reading_and_writing_images.html#imread (Accessed August 15, 2019).

Acknowledgements

This material is based upon work supported by the National Science Foundation under Grant No. CBET-1846846. This work was additionally supported by Tufts University and a generous gift from James Kanagy. We are grateful to the Tufts Faculty Research Awards Committee for providing expenses required to publish this work in an open-access journal. We thank Mace Lab group members, specifically Keith Baillargeon, Paul Lawrence, Jessica Brooks, and Lara Murray, for testing the analysis script and user interface throughout the design process.

Author information

Contributions

D.J.W. conceived the idea and R.W.P. wrote and compiled the software. D.J.W., R.W.P., and C.R.M. designed software features and wrote the manuscript. D.J.W. prepared paper-based devices and completed analyses.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Parker, R.W., Wilson, D.J. & Mace, C.R. Open software platform for automated analysis of paper-based microfluidic devices. Sci Rep 10, 11284 (2020). https://doi.org/10.1038/s41598-020-67639-6

DOI: https://doi.org/10.1038/s41598-020-67639-6
