Abstract

Remote earthquake triggering is a well-established phenomenon. Triggering is commonly identified from statistically significant increases in earthquake rate coincident with the passage of seismic energy. In establishing rate changes, short-duration earthquake catalogs are commonly used, and triggered sequences are not typically analyzed within the context of background seismic activity. Using 500 mainshocks and four 33-year-long western USA earthquake catalogs, we compare the ability of three different statistical methods to identify remote earthquake triggering. Counter to many prior studies, we find that remote dynamic triggering is rare (conservatively, <2% of the time). For the mainshocks associated with remote rate increases, the spatial and temporal signatures of triggering differ. We find that a rate increase coincident in time with the arrival of mainshock energy is, on its own, insufficient to conclude that dynamic triggering occurred. To classify dynamically triggered sequences, we suggest moving away from strict statistical-significance thresholds and instead using a compatibility assessment that weighs multiple factors, such as spatial and temporal indicators.


Introduction

Clear examples of remote dynamic triggering include increases in local earthquake rates over large areal extents, as observed following the Mw 7.3 1992 Landers, California, earthquake1; the Mw 7.9 2002 Denali Fault, Alaska, earthquake2,3,4,5; and the Mw 7.4 1999 Izmit, Turkey, earthquake6. In these three examples the rate increases were not subtle: local earthquake rates rose significantly, coincident in time with the passage of the surface waves from the triggering mainshocks. There are many other documented cases of remote dynamic triggering (see reviews and references therein7,8,9,10). In many of these, the seismicity rate change is subtle and its spatial extent very localized. Causality is assumed primarily because local events are either coincident with the passage of the surface waves, as determined by the presence of local earthquakes in the wavefield11,12,13,14,15, or initiate within several hours after the passage of the mainshock seismic waves, which has led to the concept of delayed dynamic triggering16,17,18,19. The prevalence of mainshocks identified as generating remote, small-magnitude earthquakes has led to two hypotheses: (1) remote dynamic triggering is ubiquitous13, and (2) dynamically triggered events are small, often below the magnitude of completeness (Mc), so identifying them requires catalog enhancement14,20,21,22,23,24.

The difference between the obvious remote triggering following the Landers, Denali Fault, and Izmit earthquakes and the subtler instances of triggering, including delayed dynamic triggering, raises two questions: what are the indicators of dynamic triggering, and do different indicators imply different physical mechanisms generating the triggered earthquakes? Here, we step back and first ask whether a statistically significant rate change is sufficient to serve as the sole indicator of dynamic triggering. Within the statistics community there is currently a debate over removing “statistical significance” from the lexicon of scientific study25. This movement argues that we should stop relying on a fixed significance value, such as a p-value of 0.05 or a 95% confidence interval (both arbitrarily chosen to begin with), and instead move toward a compatibility assessment. In our analysis, we examine statistical significance in the context of the spatial characteristics of the triggered events and the temporal behavior of the rate changes (factors also examined by Aiken et al.21).

In the initial cases of dynamic triggering, the rate of earthquakes increased over a large spatial extent1,2,3,4,5,6. For these examples, it appears that either some fundamental property of the passing seismic energy (its amplitude or frequency) drives the triggering and/or large areas of the crust are critically stressed and primed for earthquakes26,27. This has led many to look for a triggering threshold22,28,29 or other characteristics of the dynamic waves30,31 that could be used to anticipate remote dynamic triggering. However, in many subsequent studies the change in earthquake rate is subtler and its spatial extent more localized. For these cases, it seems plausible that the driving force is spatially confined, perhaps related to the interaction between the incoming waves and the orientation of local faults12,21,32,33,34,35 or to the activation of fluids16,19,36.

Assessing dynamic triggering

One of the main challenges of remote dynamic triggering studies is determining the background seismicity rate and, from it, which rate changes are significant. Researchers have used many different statistical approaches. Commonly, these statistics compare windows before and after the arrival of the transient mainshock energy, with window lengths ranging from as short as ±1 to ±3 hours22,37 to ±several days4,38,39. To identify rate changes, many of these methods compare the number of events in the window preceding the arrival of mainshock energy with the number in the window following it.

The most commonly cited statistic in dynamic earthquake triggering studies is the beta-statistic40,41. This statistic was originally developed to identify periods of earthquake quiescence. However, because it measures the difference between the number of earthquakes observed in a post-window and the number predicted for that window from the pre-window rate, normalized by the variability expected from the pre-window rate, it can also be used to find periods of increased seismicity. Beta is defined as:

\[ \beta = \frac{N_{\mathrm{post}} - \Lambda}{\sqrt{\Lambda}} \]

where Ta and Tb are the window lengths for counting the number of earthquakes after (Npost) and before (Npre) the time of interest, respectively, and \(\Lambda = N_{\mathrm{pre}}\,\frac{T_a}{T_b}\) is the expected number of events in the post-window.
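To make the definition concrete, here is a small Python sketch of the beta-statistic exactly as written above, with the Poisson normalization by √Λ. The helper `beta_statistic` is hypothetical, not code from this study. It also shows why the pre-window must contain at least one earthquake: with Npre = 0, Λ = 0 and beta is undefined.

```python
import math

def beta_statistic(n_pre, n_post, t_pre, t_post):
    """Beta-statistic with the Poisson normalization sqrt(Lambda).

    n_pre, n_post: event counts in the pre-window (length t_pre = Tb)
    and the post-window (length t_post = Ta); both lengths in one unit.
    """
    if n_pre < 1:
        # Lambda = 0 makes the statistic undefined, which is why the
        # derivation assumes at least one event in the pre-window.
        raise ValueError("beta is undefined when the pre-window is empty")
    expected = n_pre * t_post / t_pre  # Lambda: predicted post-window count
    return (n_post - expected) / math.sqrt(expected)

# Two events in the 5-hour pre-window, six in the 5-hour post-window.
print(round(beta_statistic(2, 6, 5.0, 5.0), 2))
```

For equal 5-hour windows, Λ = Npre, so going from 2 to 6 events gives β = 4/√2 ≈ 2.83, above the Gaussian threshold of 2 but below the minimum threshold of 2.7 reported for Alaskan volcanoes only by a small margin.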

In originally deriving the beta-statistic, it was assumed that (1) the dataset was stationary (a declustered seismic catalog) and (2) the pre-event window used to determine the rate contained at least one earthquake. The second assumption limits how short the windows can be, and Matthews and Reasenberg40 cautioned that significant anomalies found for short windows be treated “suspiciously.” They also suggested that, when evaluating the significance of the beta-statistic, one examine the distribution of beta over an interval. Prejean and Hill39 investigated beta-statistic thresholds using Monte Carlo resampling of data from Alaskan volcanoes and found that beta thresholds ranged from 2.7 to 16. Importantly, their minimum value of 2.7 is larger than the beta threshold of 2 often used when assuming a Gaussian distribution42.

Alternative statistics to measure rate changes include the Z-statistic43,44,45. The Z-statistic, like the beta-statistic, compares the rate of events in two different time windows using a stationary catalog:

\[ Z = \frac{N_{\mathrm{post}}/T_a - N_{\mathrm{pre}}/T_b}{\sqrt{N_{\mathrm{post}}/T_a^{2} + N_{\mathrm{pre}}/T_b^{2}}} \]

where Npre, Npost, Tb and Ta are as defined above. Here, the difference in the mean event rate between the two time windows is normalized by the square root of the summed variances, and the 95% and 99% significance thresholds are 1.96 and 2.58, respectively44. Note that if, when calculating the beta-statistic, Tb and Ta are combined to predict the number of events in Ta (\(\Lambda = (N_{\mathrm{pre}} + N_{\mathrm{post}})\,\frac{T_a}{T_a + T_b}\)) and the difference is normalized by the variance in this rate39, then the calculated value more closely approximates a Z-statistic46.
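A minimal Python sketch of the Z-statistic as written above, treating each window count as Poisson so that the variance of a rate N/T is N/T². The helper name `z_statistic` is hypothetical, and the critical values are computed from the standard normal distribution rather than hard-coded.

```python
import math
from statistics import NormalDist

# Two-sided critical values of the standard normal distribution.
Z_95 = NormalDist().inv_cdf(0.975)  # about 1.960
Z_99 = NormalDist().inv_cdf(0.995)  # about 2.576

def z_statistic(n_pre, n_post, t_pre, t_post):
    """Z-statistic for a change between pre- and post-window event rates.

    Counts are treated as Poisson, so the variance of a rate N/T is N/T**2.
    """
    rate_pre = n_pre / t_pre
    rate_post = n_post / t_post
    variance = n_pre / t_pre**2 + n_post / t_post**2
    if variance == 0:
        raise ValueError("Z is undefined when both windows are empty")
    return (rate_post - rate_pre) / math.sqrt(variance)

z = z_statistic(n_pre=2, n_post=8, t_pre=5.0, t_post=5.0)
print(f"Z = {z:.2f}; exceeds 95% threshold: {z > Z_95}")
```

With equal 5-hour windows, Z reduces to (Npost − Npre)/√(Npre + Npost), so even a fourfold jump from 2 to 8 events gives Z ≈ 1.90, just short of the 95% threshold.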

A third statistic assumes a Poisson distribution and calculates the difference from the mean (DFM)13,23,24. Because a Poisson distribution assumes a constant rate, the number of events in the Tb window is taken as the mean, and the standard deviation (σ) is the square root of this value. When the mean is estimated from a large number of samples, 95% and 99% significance correspond to 1.96σ and 2.58σ, respectively, so significant rate increases are flagged when Npost > Npre + 1.96σ (95%) or Npost > Npre + 2.58σ (99%).
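The DFM test reduces to a one-line comparison. The sketch below uses a hypothetical helper, `dfm_flags_increase`, and includes the convention adopted in this study of setting Npre to one when the pre-window is empty, so that σ is never zero.

```python
import math

def dfm_flags_increase(n_pre, n_post, k=1.96, min_pre=1):
    """Difference-from-the-mean (DFM) test for a post-window rate increase.

    The pre-window count is the Poisson mean and sigma = sqrt(mean).
    k = 1.96 for 95% or 2.58 for 99%. min_pre replaces an empty
    pre-window count with one, following the convention in this study.
    """
    mean = max(n_pre, min_pre)
    sigma = math.sqrt(mean)
    return n_post > mean + k * sigma

# (Npre, Npost) pairs, flagged at the 95% and 99% levels.
for n_pre, n_post in [(0, 2), (0, 3), (2, 5)]:
    print(n_pre, n_post,
          dfm_flags_increase(n_pre, n_post),
          dfm_flags_increase(n_pre, n_post, k=2.58))
```

With an empty pre-window, three post-window events already exceed the 95% threshold (1 + 1.96 = 2.96), which illustrates how this test operates on very small numbers in 5-hour windows.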

The three statistics described above depend on the ability to reliably predict the rate of earthquakes from a chosen time window. For comparison, we propose a fourth, empirically derived statistic in which we characterize the background rate over three-year windows using the distribution of the number of events in a shorter window (here, 5 hours). Before doing so, we construct a uniform catalog by correcting for Mc (see the full explanation in the Data and Methods section). From the distribution of the number of events in the 5-hour windows, we use percentiles to identify threshold counts that are exceeded less than 5% and 1% of the time.

Identified cases of dynamic triggering

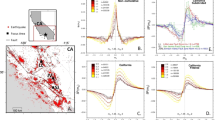

Using 500 mainshocks (Supplementary Data 1) and 33 years of Mc-corrected regional earthquake catalog data from four regions (Fig. 1; catalogs are described in detail in the Data and Methods section), we compare instances of significant rate changes identified by three statistical methods: DFM, the Z-statistic, and the proposed empirical statistic. We do not include the beta-statistic because, given the short window duration (5 hours), Npre is zero for many (1570 of 2000; 78.5%) mainshock–region pairs. In these cases the beta-statistic cannot be calculated, and we heed the warning about assigning significance when using short windows40. For the DFM method, if Npre is zero, we assign it a value of one to preclude cases in which fewer than two events could be called triggering. Using the three chosen statistics, we identify 38 and 24 rate increases coincident with a mainshock at the 95% and 99% significance levels, respectively (Fig. 2, Supplementary Table S1 and Supplementary Data Files 1 and 2).

Figure 1. (top left inset) The 500 mainshocks used in this study. Of these, 489 M ≥ 7.0 are teleseismic mainshocks (stars) and 11 are M ≥ 6.7 earthquakes along the North American west coast (circles). Triggering mainshocks determined using the empirical method at the 95% (pink, N = 19) and 99% (red, N = 12) significance levels are also shown. (basemap) Map of the four study regions (labeled). Figure generated using GMT 5.4.4 (ref. 56; http://gmt.soest.hawaii.edu/doc/5.4.4/gmt.html).

Figure 2. Statistical results at the (a–d) 95% and (e–h) 99% significance levels for the empirical (triangles), Z-statistic (circles), and difference-from-the-mean (x) methods. N is the total number of triggering mainshocks identified by any of the methods (different methods may identify the same mainshock as triggering). Nunique is the number of unique mainshocks.

Applying the DFM method using the short, 5-hour, pre-event time window identifies the most instances of rate increases coincident with the arrival of the seismic waves, 38 and 24, at the 95% and 99% confidence levels, respectively. However, because of the short 5-hour duration of the observation window (the duration used in many dynamic triggering studies13,23,24,43), this method is inherently plagued by the statistics of small numbers. In addition, there is no correction for variance in these data. The results from the proposed empirical method and the Z-statistic flag fewer triggering mainshocks than the DFM method.

If dynamic triggering preferentially affects small-magnitude earthquakes, as previous studies have suggested20,34,47, further enhancement of these regional catalogs may reveal more instances of potential dynamic triggering. However, the Mc levels of the regional earthquake catalogs used in this study are already relatively low (<2.5 for all time periods and <1.5 for most; Supplementary Fig. S2). The differences among the statistical methods can largely be attributed to how fully each characterizes the seismicity rate: within a 3-year catalog (empirical statistic) versus a 10-hour catalog (Z-statistic) or a 5-hour catalog (DFM statistic). For example, in the Yellowstone and Anza catalogs the long-term background rate can be high, leading to high threshold values (>6; triggering less likely), whereas in the Utah and Montana catalogs the earthquake rate can be low, leading to low threshold values (<4; triggering more likely).

Notably, all three statistical methods identify previously documented cases of remote triggering in Utah and Yellowstone following the 2002 Mw 7.9 Denali Fault, Alaska, earthquake2,3,4,5, in Utah following the 1992 Mw 7.3 Landers, California, earthquake1, and in Anza following the 2010 Mw 7.2 El Mayor Cucapah earthquake47 (Fig. 2 and Supplementary Data Files 2 and 3). Newly identified cases of dynamic triggering include Yellowstone following the 1985 Mw 8.0 Mexico City earthquake and the 2000 Mw 7.9 Sumatra earthquake, and Anza following the 2001 Mw 7.0 Guam earthquake and perhaps the deep 2013 Mw 8.3 Sea of Okhotsk earthquake (Fig. 2). Other potential cases of remote triggering are discussed below.

Triggering in a regional context

Thus far, we have identified increases in rates of local earthquakes coincident with seismic waves from distant mainshocks. Next, we examine these rate increases in the context of the cumulative time history of earthquakes over a ±1-month period, and we also examine the spatial footprint of the triggered events. As previously noted for the triggered earthquakes in Utah following the 2002 Denali Fault earthquake, what set that sequence apart was not just the rate increase but the widespread distribution of events within a short time window and the tendency of this widespread seismicity to form clusters4.

For each mainshock deemed capable of remote triggering, we categorize the time history into four classes (Fig. 3). The signature of class one, a rapid, step-like increase in seismicity relative to the background rate, is most consistent with triggering following the Landers and Denali Fault earthquakes (Fig. 4). In class two, the cumulative event time history over a two-month window encompassing the mainshock shows multiple bursts of seismic activity (Fig. 5). In some cases the burst cycle initiates with the dynamic energy of the remote mainshock, but in other cases cyclical bursts were already an ongoing feature of the data. In class three, a rate change that is clearly visible in the cumulative time history occurs during an ongoing sequence (Fig. 6). In class four, there is no visible change in the cumulative time history over the two-month period, which is often the case when the flagged increase involves small numbers (e.g., a rate change from 2 events in the pre-window to 4 events in the post-window). Only the DFM method flags such subtle rate changes. We also classify the spatial extent of the events occurring in the Npost window: widespread (Fig. 4b,f,h), single isolated clusters (Fig. 5b,d,f), a signature between widespread and isolated (Fig. 6d), and too few to speculate (Npost ≤ 2). Of note, temporal class one sequences tend to exceed the statistical thresholds by the largest factors, and their triggered events tend to have the largest spatial footprints. Sequences in temporal classes two and three tend to activate single source zones.

Figure 3. Temporal (color) and spatial (hue) behavior for mainshocks with a rate increase (Fig. 2) at the (a–d) 95% and (e–h) 99% significance levels. Temporal classes are shown and labeled in the legend. Spatial patterns (numbers in legend): widespread (1, darker hue), between widespread and single source zones (2, middle hue), and single source zones (3, lightest hue). N is the number of triggering mainshocks shown in each panel.

When looking at the increases identified by multiple statistical measurements (Figs. 2 and 3), we find that Anza rate changes are often the result of step increases (temporal class 1), while in Montana increases are primarily restricted to ongoing sequences (temporal class 3). For Utah and Yellowstone, there is a mix of temporal types and variability in the spatial extent of the rate-increase events. With three exceptions, bursty sequences are significant only at the 95% level, and it is not clear whether these small bursts of seismicity are the result of dynamic energy or of ongoing background seismicity. One of the exceptions is in Utah following the 2012 Mw 7.2 Sumatra earthquake (Fig. 5a,b), when there were three distinct local sequences during the two-month period: the first in southern Utah, the second in northern Utah, and the third in central Utah, which was coincident with the arrival of the mainshock’s seismic waves in the region. Because the bursts in the cumulative time plot correspond to spatially distinct regions, this 2012 rate increase could be argued to be either a step increase or simply background seismicity. The other exceptions are in Yellowstone following the 2003 Mw 7.6 Scotia Sea earthquake (Fig. 5c,d) and the 2012 Mw 7.4 Oaxaca, Mexico, earthquake (Fig. 5e,f). For the 2003 mainshock, there were 5–6 sequences within the two-month time frame. Spatially, there are local clusters of events, but the seismicity is diffuse over this two-month period. The cluster corresponding to the rate increase began ~1 hr after the mainshock origin time with an ML 2.7 earthquake followed by four smaller shocks. For the 2012 event, there were three larger and several smaller sequences within the two-month period. Again, the seismicity is quite diffuse (as is typical in Yellowstone), with a few localized clusters. One of these clusters began ~3 hr after the mainshock origin time with an ML 2.0 earthquake followed by three smaller shocks. These examples demonstrate the additional scrutiny needed to assess whether triggering truly occurred.

After close examination, we find that only 24 to 38 of the 2000 mainshock–region pairs (500 mainshocks in four regions), or 1%–2%, are coincident with significant increases in earthquake rates at the 99% and 95% levels, respectively, and may represent remote dynamic triggering. Of these, only 8 are consistent with a temporal step change coincident with the arrival of the mainshock energy. Notably, the rate change for all eight is significant at both the 95% and 99% levels. Eleven and three (95% and 99% levels, respectively) of the sequences are bursty and, as discussed, not easily associated with dynamic triggering. For the remaining 11 sequences, the rate increase occurs within an ongoing sequence. While these are difficult to conclusively associate with the passage of dynamic stresses, it is notable that, as in the step-increase cases, all 11 are significant at both the 95% and 99% levels. Overall, we find that Yellowstone and Utah are more susceptible to remote triggering than Anza and Montana. Yellowstone and Utah exhibit all three temporal classes, whereas rate changes in Anza exhibit step increases and those in Montana exhibit enhancements within ongoing sequences. Limiting our mainshocks to shallow events (≤100 km)14,29,44, which can generate larger surface waves, does not appreciably change these results (see Supplementary Table S2).

Implications for dynamic triggering

There is precedent in the seismological community for using changes in local earthquake rates alone as an indicator of remote dynamic triggering. Here, we explore whether an increase in earthquake rate is always indicative of dynamic triggering. For example, should temporally bursty sequences be treated the same as temporal step increases? Can deep earthquakes trigger remote crustal earthquakes, as suggested by the Anza rate increase following the ~600 km deep 2013 Mw 8.3 Sea of Okhotsk earthquake? And should increases within ongoing sequences count as remote dynamic triggering?

A second issue is the appropriateness of the statistics and of the window length used to determine the background earthquake rate. The beta-, Z-, and DFM statistics all assume that the catalog consists of independent events (i.e., is stationary), which in practice requires declustering. However, declustering may be counterproductive, because the goal of dynamic triggering studies is to identify what could be termed remote aftershocks, precisely the dependent events that declustering removes; these statistics should therefore be used with caution, especially with short window lengths. We instead propose an empirical approach to describing the rates. A potential disadvantage of the empirical approach is that it requires a longer-duration catalog. However, because a longer catalog is used, the distribution of earthquake rates can be established from a large sample: thousands of windows, each with a rate measurement. The longer catalog can also be used to establish Mc. Not correcting for Mc can significantly change the triggering results48. When we apply the Z-statistic and DFM to catalogs not corrected for Mc, 30–50% additional events (false positives) are flagged using the Z-statistic and 100–200% using DFM (see Supplementary Table S1). These findings suggest it is essential to apply these statistics to catalogs that contain only events above the Mc level.

A third issue raised here is what constitutes a significant increase. If significance is set at 95%, more sequences are identified as potentially triggered, but many of them are bursty or within ongoing sequences. If significance is set at 99%, some triggered sequences may be missed, including the recently established triggering in Anza following the 2010 El Mayor Cucapah earthquake47. We suggest following the lead of statisticians who have recently called into question the usefulness and validity of statistical significance. In doing so, we contend that the seismological community should move away from hard-set rules of statistical significance and toward the concept of compatibility25. If we follow this path, however, we will also need to define indicators of dynamic triggering beyond rate. These could include the spatial and temporal classes identified in this and other papers21. Our findings suggest down-weighting the link to dynamic triggering for bursty sequences and increasing the weight for widespread spatial triggering. Other factors to consider include peak ground velocity and strain changes14,17,29,30,35,47,48,49,50 and the orientation of incoming seismic waves with respect to local faults12,21,32,33,34,35.

Moving forward, future research on remote earthquake triggering should de-emphasize the beta-statistic and DFM and move beyond exploring only rate changes in narrow time windows. Additionally, a change in rate should be only one of many indicators used to identify remote earthquake triggering. Here, we place the most confidence in sequences that exhibit a strong step increase in rate and/or triggered seismicity with a distinct spatial footprint.

Data and Methods

Data

The mainshock earthquake catalog includes global and regional seismic data from 01 January 1985 through 31 December 2017 (33 years). These data include 11 earthquakes of M ≥ 6.7 from within the western US and Canada (24° ≤ latitude ≤ 51°; −131° ≤ longitude ≤ −109°) and 489 global earthquakes of M ≥ 7 (Fig. 1). We search for remote triggering in four locations: Anza, CA; Montana; Utah; and Yellowstone (Supplementary Table S3). For the Utah catalog, we exclude mining-induced seismicity (MIS; see Supplementary Table S4 for polygon boundaries) in central Utah. MIS is often strongly correlated with production rate51, so fully understanding triggering in the MIS region would first require a means of accounting for rate variations that result from production efforts. In the four regional catalogs, the reported magnitudes are typically local (ML) or duration (Md) magnitudes. In rare instances, for some of the larger earthquakes, moment (Mw) or body-wave (Mb) magnitudes are also reported. Less than 1% of the events in the regional catalogs lack assigned magnitudes; for these events, we assume the magnitudes are below Mc.

Magnitude of completeness

We divide the 33-year regional catalogs into 3-year sub-catalogs, for a total of 44 sub-catalogs (11 per region) across the four study regions. For each 3-year sub-catalog, we create a histogram of the earthquake magnitudes and determine Mc from the mode of the histogram (Supplementary Fig. S1). When computing Mc, we use only the ML and Md events in each of the four target catalogs. We are careful, however, to check whether the catalog for a given region has different ML and Md magnitude distributions. We take this precaution because previous work found that in some catalogs, especially for small-magnitude events (<1.5), Md is consistently larger than ML52,53. If this is the case in our data, then combining the two distributions would produce a double peak in the magnitude histogram. To mitigate this effect, when there are multiple peaks, we choose the larger value as the completeness level, recognizing that this approach will underestimate the completeness level. This method of calculating Mc approximates the Maximum Curvature Technique54.
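A minimal sketch of the mode-based Mc estimate with the larger-peak rule, assuming magnitudes reported to 0.1 units. The function name `mc_histogram_mode` and the `min_peak_fraction` cut-off (which decides which local maxima count as separate peaks, so that small noise bumps are ignored) are hypothetical choices, not parameters from the study.

```python
from collections import Counter

def mc_histogram_mode(magnitudes, bin_width=0.1, min_peak_fraction=0.5):
    """Estimate Mc as the mode of the magnitude histogram.

    If the histogram has more than one prominent peak (for example, mixed
    ML and Md populations), return the peak at the larger magnitude.
    A local maximum counts as a peak only if its height is at least
    min_peak_fraction of the tallest bin (hypothetical cut-off).
    """
    counts = Counter(round(m / bin_width) for m in magnitudes)  # bin index
    lo, hi = min(counts), max(counts)
    heights = [counts.get(i, 0) for i in range(lo, hi + 1)]
    tallest = max(heights)
    peaks = []
    for j, h in enumerate(heights):
        left = heights[j - 1] if j > 0 else 0
        right = heights[j + 1] if j + 1 < len(heights) else 0
        if h >= left and h >= right and h >= min_peak_fraction * tallest:
            peaks.append(lo + j)
    # Larger-magnitude peak wins; rounding removes float noise.
    return round(max(peaks) * bin_width, 10)

single = [0.5] * 3 + [0.8] * 10 + [0.9] * 6 + [1.0] * 4 + [1.2] * 2
mixed = [0.6] * 8 + [0.7] * 3 + [1.3] * 9 + [1.4] * 4
print(mc_histogram_mode(single), mc_histogram_mode(mixed))
```

In the `mixed` example the histogram has prominent peaks at 0.6 and 1.3, and the rule returns 1.3, mimicking the case where a second magnitude population sits above the first.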

We determine Mc for our 3-year catalogs to appropriately account for changing Mc levels (Supplementary Fig. S2). We evaluate data in 3-year blocks, and discard all data below the Mc level. For the other statistical methods, we follow two approaches: (1) use the Mc corrected catalogs in order to directly compare the empirical statistic with the other statistics and (2) use all available data to mimic how the Z-statistic and DFM are typically applied.
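The block-wise completeness filter can be sketched as follows, assuming each event is a (time, magnitude) pair and that the 3-year blocks are aligned to calendar years starting in 1985 (1985–1987, 1988–1990, and so on). The names `block_index` and `keep_complete` are hypothetical. Events without a magnitude are dropped, matching the assumption in the Data section that they fall below Mc.

```python
from datetime import datetime

def block_index(t, start_year=1985, block_years=3):
    """Index of the 3-year sub-catalog containing time t (1985-1987 -> 0)."""
    return (t.year - start_year) // block_years

def keep_complete(events, mc_by_block):
    """Keep events at or above the Mc of their own 3-year block.

    events: iterable of (datetime, magnitude or None).
    mc_by_block: dict mapping block index -> Mc for that block.
    """
    return [
        (t, m) for t, m in events
        if m is not None and m >= mc_by_block[block_index(t)]
    ]

events = [
    (datetime(1985, 6, 1), 1.2),
    (datetime(1986, 1, 1), 0.9),   # below the block-0 Mc of 1.0
    (datetime(1990, 3, 1), 1.0),
    (datetime(1990, 3, 2), None),  # no magnitude: treated as below Mc
]
print(keep_complete(events, {0: 1.0, 1: 0.8}))
```

Because each block carries its own Mc, an event of a given magnitude can be kept in one block and discarded in another, which is the point of recomputing Mc every three years.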

Proposed empirical statistical method

In the new empirical method, the first goal is to establish the expected number of events within a chosen window length; here we use 5 hours, to overlap with the arrival of mainshock energy and to be consistent with previous studies13,23,24,48. Using the Mc-refined three-year catalogs, we count the number of events in each 5-hour time window, sliding the window forward in 1-hour steps. In this way, the first window spans 00:00 to 05:00, the second 01:00 to 06:00, the third 02:00 to 07:00, and so on. The 3-year time span is one day longer when the dataset contains a leap year, but in all cases the number of windows exceeds 26,000. We assign the number of events within each window (Ncount) to a timestamp at the start of the window (Supplementary Fig. S3).
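A sketch of the sliding-window count, assuming event times are expressed in hours since the start of the sub-catalog and that each window is half-open, [start, start + 5 h). The helper `sliding_window_counts` is hypothetical; it uses binary search on the sorted times, so each window costs O(log n).

```python
from bisect import bisect_left

def sliding_window_counts(event_hours, span_hours, window=5, step=1):
    """Count events in [start, start + window) for start = 0, step, ...

    event_hours: event times in hours since the start of the sub-catalog.
    Returns (start_hour, n_count) pairs, with each count stamped at the
    start of its window.
    """
    times = sorted(event_hours)
    counts = []
    start = 0
    while start + window <= span_hours:
        lo = bisect_left(times, start)
        hi = bisect_left(times, start + window)
        counts.append((start, hi - lo))
        start += step
    return counts

print(sliding_window_counts([0.5, 1.2, 4.9, 5.0, 7.5], span_hours=10))
```

For a 3-year sub-catalog without a leap day (26,280 hours), this yields 26,276 windows, consistent with the figure of more than 26,000 given above.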

We next build a histogram of Ncount values. For all regions and all 3-year sub-catalogs, the 5-hour windows predominantly contain zero earthquakes (Supplementary Fig. S4). The distributions are right-skewed, making traditional measures of mean and standard deviation less meaningful. To identify outliers, we use the empirical distribution of Ncount values to compute the cumulative sum of the percentages for increasing Ncount, and identify threshold values (Nthresh) at which the cumulative sum equals or exceeds 95% and 99% (Supplementary Fig. S4). With this information, we catalog the timestamps with elevated seismicity rates relative to the expected rate over the associated 3-year window. This method avoids the need to decluster the catalog, because we assume that the 3-year window is long enough that elevated earthquake rates, such as aftershock sequences and swarms, are averaged out.
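The threshold search amounts to walking up the empirical cumulative distribution of Ncount until it reaches the target percentage. In this sketch (hypothetical helper `empirical_threshold`), the level is passed as an integer percentage so that the comparison uses exact integer arithmetic rather than floating-point fractions.

```python
from collections import Counter

def empirical_threshold(n_counts, level_percent):
    """Smallest Ncount at which the cumulative share of windows
    reaches level_percent (e.g. 95 or 99)."""
    if not n_counts:
        raise ValueError("need at least one window count")
    hist = Counter(n_counts)
    total = len(n_counts)
    cumulative = 0
    for n in sorted(hist):
        cumulative += hist[n]
        # Integer form of: 100 * cumulative / total >= level_percent
        if 100 * cumulative >= level_percent * total:
            return n
    return max(hist)  # only reached if level_percent > 100

# Toy distribution of 100 windows, dominated by zero counts.
counts = [0] * 90 + [1] * 5 + [2] * 3 + [3] + [5]
print(empirical_threshold(counts, 95), empirical_threshold(counts, 99))
```

In this toy distribution, 90 of the 100 windows contain no events, so the 95% threshold is only one event and the 99% threshold is three.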

To determine time periods coincident with the arrival of the mainshock energy, we compute when the first teleseismic waves arrive at the centroid of each study region using the travel-time toolkit TauP55. Using this as the onset, we next determine whether any Ncount values within ±5 hours of the arrival of the seismic waves from the remote mainshock exceed the 95% and 99% thresholds (Supplementary Fig. S3). All mainshocks associated with elevated counts are flagged for further analysis. For flagged cases, we compare the number of events in the 5-hour pre-window (Npre) to the number of events in the 5-hour post-window (Npost). If Npre > Npost, we rule out triggering. Importantly, for triggering cases we require not only that Npost > Nthresh but also that Npre < Nthresh. Given this secondary restriction, our 95% level results will not necessarily be a subset of the 99% level results.
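The flagging rules can be expressed as two small predicates: a screening step that looks for any window stamped within ±5 hours of the wave arrival whose count exceeds Nthresh, and the final test Npre < Nthresh < Npost. Both helper names are hypothetical, and the thresholds in the example are made-up values chosen to show why the 95% results need not be a subset of the 99% results.

```python
def elevated_near_arrival(window_counts, arrival_hour, n_thresh, half_width=5):
    """True if any window stamped within +/- half_width hours of the
    arrival contains more than n_thresh events.

    window_counts: iterable of (start_hour, n_count) pairs.
    """
    return any(
        n > n_thresh
        for start, n in window_counts
        if abs(start - arrival_hour) <= half_width
    )

def flag_triggering(n_pre, n_post, n_thresh):
    """Final test for a flagged mainshock: Npre < Nthresh < Npost.

    The chained comparison also implies Npre < Npost, so cases with
    Npre > Npost are ruled out automatically.
    """
    return n_pre < n_thresh < n_post

# Same counts, different thresholds: not flagged at the lower (95%)
# threshold because the pre-window already reaches it, but flagged at
# the higher (99%) threshold.
print(flag_triggering(n_pre=2, n_post=5, n_thresh=2))  # hypothetical 95% Nthresh
print(flag_triggering(n_pre=2, n_post=5, n_thresh=4))  # hypothetical 99% Nthresh
```

The requirement that the pre-window stay below Nthresh guards against attributing an ongoing burst to the incoming waves, at the cost of making the two significance levels non-nested.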

Data availability

All data used in this work came from catalog holdings from the ANSS (last accessed June 2018) and USGS COMCAT (last accessed October 2018). Data for the mainshocks and for Anza, CA, Yellowstone, and Utah regional catalogs were retrieved from the COMCAT catalog (last accessed October 2018) https://earthquake.usgs.gov/earthquakes/search/. The Montana regional catalog was retrieved from the ANSS catalog search: http://www.quake.geo.berkeley.edu/anss/catalog-search.html.

References

Hill, D. P. et al. Seismicity in the western United States remotely triggered by the M 7.4 Landers, California, earthquake of June 28, 1992. Science 260, 1617–1623 (1993).

Gomberg, J., Bodin, P., Larson, K. & Dragert, H. Earthquake nucleation by transient deformations caused by the M = 7.9 Denali, Alaska, earthquake. Nature 427, 621–624 (2004).

Husen, S., Wiemer, S. & Smith, R. B. Remotely triggered seismicity in the Yellowstone National Park region by the 2002 Mw 7.9 Denali Fault earthquake, Alaska. Bull. Seismol. Soc. Am. 94, S317–S331 (2004).

Pankow, K. L., Arabasz, W. J., Pechmann, J. C. & Nava, S. J. Triggered seismicity in Utah from the 3 November 2002 Denali Fault earthquake. Bull. Seismol. Soc. Am. 94, S332–S347 (2004).

Prejean, S. et al. Remotely triggered seismicity on the United States west coast following the M 7.9 Denali fault earthquake. Bull. Seismol. Soc. Am. 94, S348–S359 (2004).

Brodsky, E. E., Karakostas, V. & Kanamori, H. A new observation of dynamically triggered regional seismicity: Earthquakes in Greece following the August 1999 Izmit, Turkey earthquake. Geophys. Res. Lett. 27, 2741–2744 (2000).

Brodsky, E. E. & van der Elst, N. J. The uses of dynamic earthquake triggering. Annu. Rev. Earth Planet. Sci. 42, 317–339 (2014).

Freed, A. M. Earthquake triggering by static, dynamic, and postseismic stress transfer. Annu. Rev. Earth Planet. Sci. 33, 335–367 (2005).

Hill, D. P. & Prejean, S. in Treatise on Geophysics Vol. 4, 2nd edn (ed. Kanamori, H.) 273–304 (Elsevier, 2015).

Prejean, S. G. & Hill, D. P. Dynamic triggering of earthquakes. In Encyclopedia of Complexity and Systems Science (ed. Meyers, R.) 2600–2621 (Springer, 2009).

Li, B., Ghosh, A. & Mendoza, M. M. Delayed and sustained remote dynamic triggering of small earthquakes in the San Jacinto Fault Region by the 2014 Mw 7.2 Papanoa, Mexico earthquake. Geophys. Res. Lett. 46, https://doi.org/10.1029/2019GL084604 (2019).

Tape, C., West, M., Silwal, V. & Ruppert, N. Earthquake nucleation and triggering on an optimally oriented fault. Earth Planet. Sci. Lett. 363, 231–241 (2013).

Velasco, A. A., Hernandez, S., Parsons, T. & Pankow, K. Global ubiquity of dynamic earthquake triggering. Nat. Geosci. 1, 375–379 (2008).

Wang, B., Harrington, R. M., Liu, Y., Kao, H. & Yu, H. Remote dynamic triggering of earthquakes in three unconventional Canadian hydrocarbon regions based on a multiple-station matched-filter approach. Bull. Seismol. Soc. Am. 109, 372–386 (2019).

West, M., Sánchez, J. J. & McNutt, S. R. Periodically Triggered Seismicity at Mount Wrangell, Alaska, After the Sumatra Earthquake. Science 308, 1144–1146 (2005).

Elkhoury, J. E., Brodsky, E. E. & Agnew, D. C. Seismic waves increase permeability. Nature 441, 1135–1138 (2006).

Johnson, C. W. & Bürgmann, R. Delayed dynamic triggering: Local seismicity leading up to three remote M ≥ 6 aftershocks of the 11 April 2012 M8.6 Indian Ocean earthquake. J. Geophys. Res.: Solid Earth 121, 134–151 (2016).

Parsons, T. A hypothesis for delayed dynamic earthquake triggering. Geophys. Res. Lett. 32, https://doi.org/10.1029/2004GL021811 (2005).

van der Elst, N. J., Savage, H. M., Keranen, K. M. & Abers, G. A. Enhanced remote earthquake triggering at fluid-injection sites in the midwestern United States. Science 341, 164–167 (2013).

Aiken, C. et al. Exploration of remote triggering: A survey of multiple fault structures in Haiti. Earth Planet. Sci. Lett. 455, 14–24, https://doi.org/10.1016/j.epsl.2016.09.023 (2016).

Aiken, C., Meng, X. & Hardebeck, J. Testing for the ‘predictability’ of dynamically triggered earthquakes in Geysers Geothermal Field. Earth Planet. Sci. Lett. 486, 129–140, https://doi.org/10.1016/j.epsl.2018.01.015 (2018).

Jiang, T., Peng, Z., Wang, W. & Chen, Q. F. Remotely triggered seismicity in continental China following the 2008 Mw 7.9 Wenchuan earthquake. Bull. Seismol. Soc. Am. 100, 2574–2589 (2010).

Linville, L., Pankow, K., Kilb, D. & Velasco, A. Exploring remote earthquake triggering potential across EarthScopes’ Transportable Array through frequency domain array visualization. J. Geophys. Res. Solid Earth 119, 8950–8963 (2014).

Velasco, A. A., Alfaro‐Diaz, R., Kilb, D. & Pankow, K. L. A Time‐Domain Detection Approach to Identify Small Earthquakes within the Continental United States Recorded by the USArray and Regional Networks. Bull. Seismol. Soc. Am. 106, 512–525 (2016).

Amrhein, V., Greenland, S. & McShane, B. Scientists rise up against statistical significance. Nature 567, 305–307 (2019).

Cox, R. T. Possible Triggering of Earthquakes by Underground Waste Disposal in the El Dorado, Arkansas Area. Seismol. Res. Lett. 62, 113–122 (1991).

Townend, J. & Zoback, M. D. How faulting keeps the crust strong. Geology 28, 399–402 (2000).

Kane, D. L., Kilb, D., Berg, A. S. & Martynov, V. G. Quantifying the remote triggering capabilities of large earthquakes using data from the ANZA Seismic network catalog (Southern California). J. Geophys. Res. Solid Earth 112, B11302 (2007).

van der Elst, N. J. & Brodsky, E. E. Connecting near-field and far-field earthquake triggering to dynamic strain. J. Geophys. Res. 115, B07311 (2010).

Brodsky, E. E. & Prejean, S. G. New constraints on mechanisms of remotely triggered seismicity at Long Valley Caldera. J. Geophys. Res. Solid Earth 110, B4 (2005).

Guilhem, A., Peng, Z. & Nadeau, R. M. High‐frequency identification of non‐volcanic tremor along the San Andreas Fault triggered by regional earthquakes. Geophys. Res. Lett. 37, https://doi.org/10.1029/2010GL044660 (2010).

Gonzalez‐Huizar, H., Velasco, A. A., Peng, Z. & Castro, R. R. Remote triggered seismicity caused by the 2011, M9.0 Tohoku‐Oki, Japan earthquake. Geophys. Res. Lett. 39 (2012).

Hill, D. P. Dynamic stresses, Coulomb failure, and remote triggering–Corrected. Bull. Seismol. Soc. Am. 102, 2313–2336 (2012).

Parsons, T., Kaven, J. O., Velasco, A. A. & Gonzalez‐Huizar, H. Unraveling the apparent magnitude threshold of remote earthquake triggering using full wavefield surface wave simulation. Geochem. Geophys. Geosyst. 13 (2012).

Pena Castro, A. F., Dougherty, S. L., Harrington, R. M. & Cochran, E. S. Delayed dynamic triggering of disposal-induced earthquakes observed by a dense array in Northern Oklahoma. J. Geophys. Res.: Solid Earth 124, 3766–3781 (2019).

Manga, M. & Wang, C.-Y. Earthquake hydrology. In Treatise on Geophysics 2nd edn, Vol. 4 (ed. Schubert, G.) 305–328 (Elsevier, 2015).

de Barros, L., Deschamps, A., Sladen, A., Lyon-Caen, H. & Voulgaris, N. Investigating Dynamic Triggering of Seismicity by Regional Earthquakes: The Case of the Corinth Rift (Greece). Geophys. Res. Lett. 44, https://doi.org/10.1002/2017GL075460 (2017).

Pollitz, F. F., Stein, R. S., Sevilgen, V. & Bürgmann, R. The 11 April 2012 east Indian Ocean earthquake triggered large aftershocks worldwide. Nature 490, 250–253 (2012).

Prejean, S. G. & Hill, D. P. The influence of tectonic environment on dynamic earthquake triggering: A review and case study on Alaskan volcanoes. Tectonophysics 745, 293–304 (2018).

Matthews, M. V. & Reasenberg, P. A. Statistical methods for investigating quiescence and other temporal seismicity patterns. Pure Appl. Geophys. 126, 357–372 (1988).

Marsan, D. & Wyss, M. Community Online Resource for Statistical Seismicity Analysis (2011).

Reasenberg, P. A. & Simpson, R. W. Response of regional seismicity to the static stress change produced by the Loma Prieta earthquake. Science 255, 1687–1690 (1992).

Aron, A. & Hardebeck, J. L. Seismicity Rate Changes along the Central California Coast due to Stress Changes from the 2003 M 6.5 San Simeon and 2004 M 6.0 Parkfield Earthquakes. Bull. Seismol. Soc. Am. 99, 2280–2292 (2009).

Habermann, R. E. Precursory seismicity patterns: Stalking the mature seismic gap. In Earthquake Prediction: An International Review (eds Simpson, D. W. & Richards, P. G.) 29–42 (AGU, 1981).

Habermann, R. E. Teleseismic detection in the Aleutian Island Arc. J. Geophys. Res. 88, 5056–5064 (1983).

Marsan, D. & Nalbant, S. Methods for measuring seismicity rate changes: A review and a study of how the Mw 7.3 Landers earthquake affected the aftershock sequence of the Mw 6.1 Joshua Tree earthquake. Pure Appl. Geophys. 162 (2005).

Ross, Z. E., Trugman, D. T., Hauksson, E. & Shearer, P. M. Searching for hidden earthquakes in Southern California. Science 364, 767–771 (2019).

Aiken, C. & Peng, Z. Dynamic triggering of microearthquakes in three geothermal/volcanic regions of California. J. Geophys. Res. Solid Earth 119, 6992–7009 (2014).

Gomberg, J. & Bodin, P. Triggering of the Ms = 5.4 Little Skull Mountain, Nevada, earthquake with dynamic strains. Bull. Seismol. Soc. Am. 84, 844–853 (1994).

Wang, B., Harrington, R. M., Liu, Y., Yu, H., Carey, A. & van der Elst, N. J. Isolated cases of remote dynamic triggering in Canada detected using cataloged earthquakes combined with a match-filter approach. Geophys. Res. Lett. 42, 5187–5196 (2015).

Boltz, M., Pankow, K. & McCarter, M. K. Fine details of mining-induced seismicity at the Trail Mountain Mine coal mine using modified hypocentral relocation techniques. Bull. Seismol. Soc. Am. 104, https://doi.org/10.1785/0120130011 (2014).

Bakun, W. H. Seismic moments, local magnitudes, and coda-duration magnitudes for earthquakes in central California. Bull. Seismol. Soc. Am. 74, 439–458 (1984a).

Bakun, W. H. Magnitudes and moments of duration. Bull. Seismol. Soc. Am. 74, 2335–2356 (1984b).

Wiemer, S. & Wyss, M. Minimum magnitude of completeness in earthquake catalogs: Examples from Alaska, the western United States, and Japan. Bull. Seismol. Soc. Am. 90, 859–869 (2000).

Crotwell, H. P., Owens, T. J. & Ritsema, J. The TauP Toolkit: Flexible seismic travel-time and ray-path utilities. Seismol. Res. Lett. 70, 154–160 (1999).

Wessel, P. & Smith, W. H. F. Free software helps map and display data. Eos Trans. AGU 72, 441–446 (1991).

Acknowledgements

We thank Chastity Aiken and two anonymous reviewers for their constructive comments that helped to improve this manuscript. This work also benefited from early reviews and discussions with Chris Johnson and Keith Koper. We also thank Jeanne Hardebeck, Joan Gomberg and Andrea Llenos for their thoughtful input. We thank Paul Roberson for work on figures. Funding for this work was provided by the National Science Foundation (NSF) under Grant Number EAR-1053376.

Author information

Contributions

K.P. devised the project, and the main conceptual ideas. K.P. and D.K. contributed to the final design and implementation of the research, to the analysis of the results, and to the writing of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pankow, K.L., Kilb, D. Going Beyond Rate Changes as the Sole Indicator for Dynamic Triggering of Earthquakes. Sci Rep 10, 4120 (2020). https://doi.org/10.1038/s41598-020-60988-2

DOI: https://doi.org/10.1038/s41598-020-60988-2
