Abstract

Quantum annealing is a generic solver for optimization problems that uses fictitious quantum fluctuation. The most groundbreaking progress in the research field of quantum annealing is its hardware implementation, i.e., the so-called quantum annealer, using artificial spins. However, the connectivity between the artificial spins is sparse and limited to a special network known as the chimera graph. Several embedding techniques have been proposed, but the number of logical spins, which represent the optimization problem to be solved, is drastically reduced. In particular, an optimization problem including fully or even partly connected spins can be embedded on the chimera graph only at a small size. In the present study, we propose an alternative approach to solve a large-scale optimization problem on the chimera graph via a well-known method in statistical mechanics called the Hubbard-Stratonovich transformation or its variants. The proposed method can deal with a fully connected Ising model without embedding on the chimera graph and leads to nontrivial results for the optimization problem. We tested the proposed method on the number partition problem, the solution of linear equations, and the traffic flow optimization problem in Sendai and Kyoto cities in Japan.


Introduction

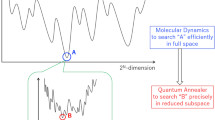

Quantum annealing (QA) is a generic algorithm aimed at solving optimization problems by exploiting the quantum tunneling effect. The scheme was originally proposed as an algorithm for numerical computation1 inspired by simulated annealing (SA)2 and exchange Monte-Carlo simulation3. Moreover, its experimental realization has been accomplished recently and has attracted significant attention. Quantum annealing is suited to optimization problems formulated with discrete variables. A well-known example is searching for the ground state of the spin-glass model, which corresponds to various types of optimization problems, such as the traveling salesman problem and the satisfiability problem4,5,6. In QA, we formulate the optimization problem as an Ising model and implement it in a time-dependent Hamiltonian, which takes the form of the formulated Ising model at the final time. The initial Hamiltonian consists only of the "driver" Hamiltonian, which induces quantum fluctuation. The frequently used driver Hamiltonian is the transverse field, which generates the superposition of the up and down spins. QA starts from the trivial ground state of the driver Hamiltonian. The quantum effect is then gradually turned off, so that at the end only the classical Hamiltonian with a nontrivial ground state remains. When the transverse field changes sufficiently slowly, the quantum adiabatic theorem ensures that we find the nontrivial ground state at the end of QA7,8,9. Numerous reports have stated that QA outperforms SA10,11,12. The performance possibly stems from the quantum tunneling effect penetrating the barriers of the potential energy. The protocol of QA has been realized in an actual quantum device using contemporary technology, namely, the quantum annealer13,14,15,16.
The output from the current version of the quantum annealer is not always the spin configuration in the ground state, owing to the limitations of the device and environmental effects17. Therefore, several protocols based on QA do not keep the system in the ground state as adiabatic quantum computation requires; rather, they employ a nonadiabatic counterpart18,19,20,21 or the thermal effect22. The quantum annealer has been tested for numerous applications, such as portfolio optimization23, protein folding24, the molecular similarity problem25, computational biology26, job-shop scheduling27, traffic optimization28, election forecasting29, machine learning30,31,32,33,34,35, and automated guided vehicles in plants36.

In addition, studies on implementing the quantum annealer to solve various problems have been performed31,32,33,37,38,39. The potential of QA might be boosted by nontrivial quantum fluctuation described by a nonstoquastic Hamiltonian, for which efficient classical simulation is intractable40,41,42,43,44.

The current version of the quantum annealer, the D-Wave 2000Q, employs the chimera graph, on which physical qubits are placed. The connections between the physical qubits are sparse and limited to the chimera graph. Several embedding techniques have thus been proposed, but the number of logical qubits, which represent the optimization problem to be solved, is drastically reduced37. In particular, an optimization problem whose Ising formulation includes fully or even partly connected spins can be embedded on the chimera graph only at a small size. This is one of the bottlenecks in using the D-Wave 2000Q. The problem will remain in the near future because the limited connectivity stems from the design of the quantum circuits, which are not yet flexible.

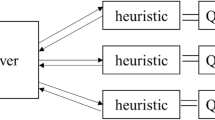

In the present study, we propose an alternative way to solve a large-scale optimization problem with fully connected interactions between the logical qubits, without dividing it into small subproblems and without embedding. In statistical mechanics, a well-known traditional technique to tackle fully connected interactions analytically is the Hubbard-Stratonovich transformation45,46 or its variants. This technique mitigates the difficulty of the fully connected interaction arising from a squared term by rewriting it as a linear term inside a Gaussian integral. Using this technique, we reformulate the original optimization problem with the squared term as another optimization problem with an equivalent linear term whose coefficient fluctuates stochastically. To determine the value of the coefficient, we need to estimate the expectation value conditioned on the coefficient in the previous step. To calculate these conditional expectation values, we can utilize the D-Wave 2000Q, which quickly outputs many samples of Ising-variable configurations. Therefore, instead of directly solving the original optimization problem, we construct an iterative technique involving two processes: (1) determination of the coefficient and (2) estimation of the expectation values via the D-Wave 2000Q. With our method, we can deal with a large-scale optimization problem even with limited connections between the physical qubits, as in the D-Wave 2000Q. In addition, our technique is not restricted to quantum annealers such as the D-Wave 2000Q. Complementary metal oxide semiconductor (CMOS) annealing, which has the same bottleneck47, is also within its range of application. Furthermore, our technique provides an alternative method to formulate combinatorial optimization problems with several constraints.
In this sense, even if full connectivity of the logical variables is realized in hardware, as in the Fujitsu digital annealer48, our technique is valuable for finding optimal solutions. All of the hardware listed above is special-purpose hardware designed to find the minimizer of a cost function written in the form of the Ising model and to generate samples quickly from particular distribution functions.

Our technique is closely related to our previous study on adaptive quantum Monte-Carlo simulation42. In that study, we showed that a fully connected antiferromagnetic interaction, which causes the sign problem under a naive classical-quantum mapping such as the Suzuki-Trotter decomposition49, can be transformed into a fluctuating transverse field without any sign problem by using the Hubbard-Stratonovich transformation. In this case, one needs to estimate the expectation value of the transverse magnetization by quantum Monte-Carlo simulation or, approximately, by a message-passing algorithm50. A similar approach is found in simulations of strongly correlated electrons51.

The remainder of the present paper is organized as follows. In the next section, we show how to transform an optimization problem with squared terms into an equivalent simplified model and describe our method. In the following sections, we test our method on the number partition problem, a typical problem with a fully connected interaction, and on solving an inverse problem from a small number of linear equations, which also involves fully connected interactions. In addition, we demonstrate several results for optimization problems under constraints, including traffic flow optimization. In the last section, we summarize our study.

Problem Setting

In QA, we formulate the optimization problem as the target Hamiltonian. In addition to the target Hamiltonian, we employ the driver Hamiltonian, which generates the quantum fluctuation driving the system. We consider a target Hamiltonian f(q), where q = (q1, q2, ..., qN) and each qi is a binary variable taking the value 0 or 1. The binary variable can be written in terms of the z-component of the Pauli matrices representing the logical qubits, namely, the Ising variables, as qi = (1 + σi)/2. Applying the transverse field to these longitudinal Ising variables generates superpositions that allow an efficient search for the ground state in various optimization problems. In practical use of QA, several sets of variables must satisfy constraints. As is often the case, these constraints are expressed by squared terms; this is called the penalty method in the context of optimization problems. We assume here that the target Hamiltonian consists of a sum of squared terms representing constraints of the form Fk(q) = Ck for all k, together with the other terms f0(q), as

$$f(\mathbf{q}) = f_0(\mathbf{q}) + \sum_{k} \frac{\lambda_k}{2}\left(F_k(\mathbf{q}) - C_k\right)^2$$ (1)

where λk is a predetermined parameter set to be relatively large because the squared terms express the constraints on the Ising variables. The squared terms yield fully connected interactions among the Ising variables because Fk(q) often consists of a summation over several elements of q.
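To see concretely why a squared constraint term produces couplings between every pair of variables, one can expand it into a QUBO matrix. The NumPy sketch below assumes a linear constraint \(F(\mathbf{q}) = \sum_i a_i q_i\) and the normalization \((\lambda/2)(F(\mathbf{q}) - C)^2\); the helper name `penalty_qubo` is our own and purely illustrative.

```python
import numpy as np

def penalty_qubo(a, C, lam):
    """Expand (lam/2) * (a @ q - C)**2 into q @ Q @ q + const for binary q.

    Assumes a linear constraint F(q) = a @ q (hypothetical helper for illustration).
    """
    a = np.asarray(a, dtype=float)
    Q = 0.5 * lam * np.outer(a, a)  # every pair (i, j) receives a coupling a_i * a_j
    # Binary variables satisfy q_i**2 == q_i, so the linear part -lam*C*a_i
    # can be absorbed into the diagonal.
    np.fill_diagonal(Q, 0.5 * lam * a**2 - lam * C * a)
    const = 0.5 * lam * C**2
    return Q, const

rng = np.random.default_rng(0)
a = rng.integers(1, 10, size=6)          # nonzero weights; a_i = 1 would give F = sum(q)
Q, const = penalty_qubo(a, C=12, lam=2.0)
off_diagonal = Q[~np.eye(6, dtype=bool)]
print(np.count_nonzero(off_diagonal))    # 30 = 6 * 5 nonzero entries: fully connected
```

On hardware with sparse connectivity, each of these couplings has to be realized through embedding, which is the bottleneck discussed below.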

We introduce several examples appearing in QA. In a practical application of the quantum annealer to reducing traffic congestion28, the squared term is employed as

where

and qμ,i = (1 + σμ,i)/2 represents the selection of the μ-th route for the i-th car, and Se,μ,i denotes whether road segment e is occupied by the μ-th route of the i-th car. To avoid traffic congestion, they also implement another squared term, as in the second term.

Furthermore, the cost function for inferring the N-dimensional original signal q0 from the output consisting of M linear combinations \({y}_{\mu }={\sum }_{k}{a}_{\mu k}{q}_{k}^{0}\), as in wireless communications and signal processing, is written as

One of the fascinating applications of QA is Q-Boost52, which selects relevant weak classifiers whose combination improves classification performance. In addition, squared terms stemming from the penalty method for the constraints appear as follows

where uk(xμ) denotes the k-th weak classifier, xμ is the μ-th data vector, and yμ is its label. To fix the number of selected classifiers to K, an additional squared term is employed as

In addition, the cost function itself is often expressed in the square form. One of the examples is the number partition problem, given as

where ni denotes one of the numbers to be divided into two groups. One group is assigned σi = +1 and the other σi = −1. The summation ∑i ni σi should be zero so that the numbers are divided into two groups with equal sums.
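The sketch below evaluates the number-partition cost from binary variables via σi = 2qi − 1 and finds the optimum by exhaustive search. It takes the cost as the plain squared sum \((\sum_i n_i \sigma_i)^2\), which is our assumption; the normalization in Eq. (7) may differ. Exhaustive search costs 2^N evaluations and serves only as a small-instance reference; the function names are illustrative.

```python
import itertools
import numpy as np

def partition_cost(n, q):
    """Squared imbalance (sum_i n_i * sigma_i)**2 with sigma_i = 2*q_i - 1."""
    sigma = 2 * np.asarray(q) - 1        # q_i in {0, 1}  ->  sigma_i in {-1, +1}
    return float(np.dot(n, sigma)) ** 2

def brute_force_partition(n):
    """Exhaustive search over all 2**N assignments; feasible only for small N."""
    best = min(itertools.product((0, 1), repeat=len(n)),
               key=lambda q: partition_cost(n, q))
    return np.array(best), partition_cost(n, best)

n = np.array([4, 5, 6, 7, 8])
q, cost = brute_force_partition(n)
print(q, cost)   # a perfect split {7, 8} vs {4, 5, 6} gives cost 0.0
```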

As exemplified above, to solve various optimization problems on the quantum annealer through the formulation of the quadratic unconstrained binary optimization (QUBO) problem, we often implement squared terms. However, the squared terms result in fully connected interactions among the Ising variables included in them. The fully connected interactions prevent efficient computation on the current version of the quantum annealer because it can realize only limited connections between artificial spins. For example, in the D-Wave 2000Q, we have to embed the original optimization problem with fully connected interactions into a sparse graph, known as the chimera graph, on the superconducting chip. Unfortunately, although the D-Wave 2000Q has over 2000 active physical qubits, the number of logical qubits that can be handled is reduced to about 60 in the worst case. This is one of the crucial bottlenecks of the current version of the quantum annealer. A different type of special-purpose hardware implementing the Ising model, namely, CMOS annealing, also has the same bottleneck47, whereas the Fujitsu digital annealer is free from this connectivity problem48. To avoid the difficulty of squared terms, previous studies proposed a driver Hamiltonian different from the transverse field53,54. They succeeded in enhancing the performance of QA, but their method cannot be employed on the current version of the quantum annealer. Below, we mitigate this difficulty in optimization problems with squared terms by using a standard method of statistical mechanics. The proposed method is available on the current version of the quantum annealer.

Reduction of Squared Terms

A well-known technique for reducing squared terms to linear ones is the Hubbard-Stratonovich transformation or its variants45,46. First, we write the partition function expressing the equilibrium state governed by our target Hamiltonian as

$$Z = \sum_{\mathbf{q}} \exp\left(-\beta f(\mathbf{q})\right)$$ (8)

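where β is the inverse temperature. Applying the Hubbard-Stratonovich transformation to the squared terms, we obtain another expression for the partition function:

$$Z = \sum_{\mathbf{q}} \int \prod_{k} Dz_k \exp\left(-\beta f_0(\mathbf{q}) + i\sum_{k}\sqrt{\beta\lambda_k}\, z_k \left(F_k(\mathbf{q}) - C_k\right)\right)$$ (9)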

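where \(\int Dz_k = \int dz_k \exp(-z_k^2/2)/\sqrt{2\pi}\). We then change the integration variable as \(z_k \to -i\sqrt{\beta/\lambda_k}\,\nu_k\). The resulting partition function is, up to a constant factor,

$$Z \propto \int \prod_k d\nu_k \sum_{\mathbf{q}} \exp\left(-\beta f_0(\mathbf{q}) + \beta \sum_k \nu_k \left(F_k(\mathbf{q}) - C_k\right) + \beta\sum_k \frac{\nu_k^2}{2\lambda_k}\right)$$ (10)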

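We obtain an effective Ising model with linear terms in the constraints and continuous variables ν = (ν1, ν2, ⋯), namely, the Lagrange multipliers. The effective Hamiltonian is

$$f(\mathbf{q}|\boldsymbol{\nu}) = f_0(\mathbf{q}) - \sum_k \nu_k\left(F_k(\mathbf{q}) - C_k\right) - \sum_k \frac{\nu_k^2}{2\lambda_k}$$ (11)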

The remaining problem is to minimize the effective Hamiltonian instead of the original Hamiltonian. This is the same technique as the Lagrange multiplier method for dealing with constraints in optimization problems, whereas the original formulation employing squared terms is the penalty method. In the penalty method, we have to take relatively large values of the coefficients λk to enforce the constraints. However, large coefficients are an obstacle to optimization on the current version of the quantum annealer because the range and resolution of the coefficients it accepts are limited. In our formulation, we can take the limit λk → ∞ in a straightforward way; instead of a large coefficient, the adaptive change of the multiplier νk enforces the constraints. As a "dual" problem, the effective Hamiltonian for ν is obtained as

$$f(\boldsymbol{\nu}) = -\sum_k \frac{\nu_k^2}{2\lambda_k} - \frac{1}{\beta}\log Z(\boldsymbol{\nu})$$ (12)

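where Z(ν) is the effective partition function defined as

$$Z(\boldsymbol{\nu}) = \sum_{\mathbf{q}} \exp\left(-\beta\left[f_0(\mathbf{q}) - \sum_k \nu_k\left(F_k(\mathbf{q}) - C_k\right)\right]\right)$$ (13)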

The effective Hamiltonian for ν is highly nontrivial. In other words, the complexity of the original optimization problem remains even in the dual problem with continuous variables. In the limit β → ∞, the relevant value of ν is the saddle point of the integrand in the partition function. The saddle point equation is given as

$$\left\langle F_k(\mathbf{q})\right\rangle_{\mathbf{q}} = C_k - \frac{\nu_k}{\lambda_k}$$ (14)

where the bracket denotes the expectation with respect to the probability distribution of q conditioned on the value of ν. Notice that, in general, the free energy of spin-glass models, which arise in the context of optimization problems, has many local minima. Thus, the saddle point is not unique. This is a consequence of the non-monotonic behavior of \(\langle F_k(\mathbf{q})\rangle_{\mathbf{q}}\) as a function of ν in spin-glass models. We emphasize that the complexity of finding the ground state remains even in our method. In this sense, the performance of our method strongly depends on the form of f0(q).
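The Hubbard-Stratonovich step used in this section rests on the Gaussian identity \(\int Dz\, \exp(iaz) = \exp(-a^2/2)\); with \(a = \sqrt{\beta\lambda_k}(F_k(\mathbf{q}) - C_k)\), the right-hand side is the Boltzmann factor of a squared term \((\lambda_k/2)(F_k - C_k)^2\). A quick numerical check in NumPy; the helper name, the parameter values, and the integration grid are arbitrary choices of ours.

```python
import numpy as np

def gaussian_average_of_phase(a, z_max=12.0, num=200_001):
    """Approximate int Dz exp(i*a*z), with Dz = dz * exp(-z**2/2) / sqrt(2*pi).

    A uniform Riemann sum on [-z_max, z_max] suffices because the Gaussian
    weight is negligible beyond |z| = 12.
    """
    z, dz = np.linspace(-z_max, z_max, num, retstep=True)
    integrand = np.exp(-z**2 / 2 + 1j * a * z) / np.sqrt(2 * np.pi)
    return integrand.sum() * dz

beta, lam, residual = 1.5, 2.0, 0.7          # residual plays the role of F_k(q) - C_k
a = np.sqrt(beta * lam) * residual
lhs = gaussian_average_of_phase(a)
rhs = np.exp(-beta * lam * residual**2 / 2)  # Boltzmann factor of (lam/2)*(F - C)**2
print(abs(lhs - rhs))                        # tiny: the identity holds numerically
```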

Our remaining task is to reach the saddle point by gradually changing the value of ν. To find the saddle point, one may utilize the steepest ascent method. We take β → ∞, so that the expectation value is evaluated with the Ising spin configurations in the ground state. Thus, the spin configurations can be sampled with the D-Wave 2000Q. In particular, a practical optimization problem has a nontrivial cost function f0(q), for which the computation of the expectation value \(\langle F_k(\mathbf{q})\rangle_{\mathbf{q}}\) is computationally demanding. To mitigate this difficulty, special-purpose machines are valuable. Notice that our technique is not restricted to the D-Wave 2000Q; it is also helpful for the Fujitsu digital annealer and the CMOS annealing chip to enhance the precision with which the constraints are satisfied.
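The gradient used in the steepest ascent can be checked on a toy instance: differentiating \(-\frac{1}{\beta}\log Z(\boldsymbol{\nu})\), where Z(ν) sums \(\exp(-\beta[f_0(\mathbf{q}) - \nu(F(\mathbf{q}) - C)])\) over all configurations, gives \(C - \langle F(\mathbf{q})\rangle\), which vanishes at the saddle point when the constraint is met on average. The sketch below enumerates all 2^N states of a small random instance at finite β; the single constraint F(q) = Σi qi, the random couplings in f0, and the helper name are illustrative assumptions.

```python
import itertools
import numpy as np

def free_energy_and_mean_F(E0, F_vals, C, nu, beta):
    """Exact -(1/beta) log Z(nu) and <F> for H_nu(q) = f0(q) - nu * (F(q) - C).

    E0 and F_vals hold f0(q) and F(q) for every configuration q.
    """
    H = E0 - nu * (F_vals - C)
    H_min = H.min()
    w = np.exp(-beta * (H - H_min))          # shifted Boltzmann weights (avoids overflow)
    free_energy = H_min - np.log(w.sum()) / beta
    mean_F = np.dot(w, F_vals) / w.sum()
    return free_energy, mean_F

N = 5
rng = np.random.default_rng(1)
J = np.triu(rng.normal(size=(N, N)), k=1)           # random pairwise couplings
states = np.array(list(itertools.product((0, 1), repeat=N)))
E0 = np.einsum("si,ij,sj->s", states, J, states)     # f0(q) = q^T J q for each state
F_vals = states.sum(axis=1)                          # constraint F(q) = sum_i q_i

C, beta, nu, eps = 2.0, 1.3, 0.4, 1e-5
f_plus, _ = free_energy_and_mean_F(E0, F_vals, C, nu + eps, beta)
f_minus, _ = free_energy_and_mean_F(E0, F_vals, C, nu - eps, beta)
_, mean_F = free_energy_and_mean_F(E0, F_vals, C, nu, beta)
print((f_plus - f_minus) / (2 * eps), C - mean_F)   # the two numbers agree
```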

Instead of directly manipulating the Hubbard-Stratonovich transformation, we may consider the variational free energy, namely, the Gibbs free energy. Let us consider the target Hamiltonian without the squared terms for the constraints, namely, f0(q). Then, the Gibbs-Boltzmann distribution is given as \(P(\mathbf{q}) = \exp(-\beta f_0(\mathbf{q}))/Z\), where Z is its normalization. We introduce the Kullback-Leibler (KL) divergence to measure the distance between a trial distribution function Q and P as

$$D_{\mathrm{KL}}(Q\|P) = \sum_{\mathbf{q}} Q(\mathbf{q}) \log\frac{Q(\mathbf{q})}{P(\mathbf{q})}$$ (15)

Here, we consider the trial distribution Q with minimum distance from P, subject to the constraints that its expectations satisfy

$$\sum_{\mathbf{q}} Q(\mathbf{q}) F_k(\mathbf{q}) = C_k \quad \forall k$$ (16)

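Minimizing the KL divergence under these constraints yields the Gibbs free energy

$$G = \min_{Q}\left\{E[Q] - \frac{1}{\beta}S[Q]\right\} \quad \text{subject to} \quad \sum_{\mathbf{q}} Q(\mathbf{q}) F_k(\mathbf{q}) = C_k$$ (17)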

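where E[Q] = ∑q Q(q) f0(q) and \(S[Q] = -\sum_{\mathbf{q}} Q(\mathbf{q})\log Q(\mathbf{q})\). Here, we introduce the Lagrange multipliers ν to solve the minimization problem under the constraints. The minimizer, which depends on ν, is obtained in a straightforward way as

$$Q(\mathbf{q}|\boldsymbol{\nu}) = \frac{1}{Z(\boldsymbol{\nu})}\exp\left(-\beta\left[f_0(\mathbf{q}) - \sum_k \nu_k\left(F_k(\mathbf{q}) - C_k\right)\right]\right)$$ (18)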

Then, the Gibbs free energy is written as

$$G(\boldsymbol{\nu}) = -\frac{1}{\beta}\log Z(\boldsymbol{\nu})$$ (19)

This corresponds to the effective Hamiltonian for ν in the limit λk → ∞. The Gibbs free energy is the starting point of the Plefka expansion, a systematic way to solve the Ising spin-glass model beyond the level of mean-field theory. In that approach, one considers the weak-interaction limit to compute the logarithm of the partition function. In contrast, we have an efficient sampler for estimating expectations of the Ising spin-glass model, such as the D-Wave 2000Q. Therefore, we do not need any approximation to proceed further with our formulation to solve the optimization problem under several constraints. When Fk(q) consists of a summation over several binary variables, all we have to do is apply longitudinal magnetic fields to realize the resulting effective Hamiltonian. Thus, we construct a simple algorithm to solve the optimization problem as follows:

Initialize the Lagrange multipliers \(\boldsymbol{\nu}^{t=0}\).

Compute the gradient and update the Lagrange multipliers as

$$\nu_k^{t+1} = \nu_k^{t} + \eta\left(C_k - \left\langle F_k(\mathbf{q})\right\rangle_{Q^t}\right)$$ (20)

where η is the step width of the gradient method to achieve the maximization and Qt is the trial distribution function with ν = νt.
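The two steps above can be sketched as follows, assuming linear constraints F(q) = Aq and replacing the annealer by exact enumeration of the trial distribution Qt at finite β, which is feasible only for a handful of variables. The names `make_exact_expectation` and `dual_ascent` are hypothetical; on hardware, `expect_F` would instead average Fk(q) over device samples.

```python
import itertools
import numpy as np

def make_exact_expectation(f0, A, C, beta):
    """Return nu -> <F(q)>_Q for Q(q) proportional to exp(-beta * (f0(q) - nu @ (A @ q - C))).

    Exact enumeration over {0,1}^N stands in for the annealer's samples.
    """
    N = A.shape[1]
    states = np.array(list(itertools.product((0, 1), repeat=N)))
    E0 = np.array([f0(q) for q in states])  # f0 does not depend on nu: compute once
    F_vals = states @ A.T                   # F_k(q) for every state, shape (2**N, K)

    def expect_F(nu):
        H = E0 - (F_vals - C) @ nu          # effective Hamiltonian for each state
        w = np.exp(-beta * (H - H.min()))   # shifted weights for numerical stability
        return (w / w.sum()) @ F_vals
    return expect_F

def dual_ascent(expect_F, C, nu0, eta, max_steps=20_000, tol=1e-6):
    """Iterate Eq. (20): nu_k <- nu_k + eta * (C_k - <F_k>) until the gradient vanishes."""
    nu = np.array(nu0, dtype=float)
    for step in range(max_steps):
        grad = C - expect_F(nu)
        if np.max(np.abs(grad)) < tol:
            break
        nu += eta * grad
    return nu, step

rng = np.random.default_rng(2)
N = 6
J = np.triu(rng.normal(size=(N, N)), k=1)

def f0(q):
    """Toy spin-glass-like cost with random pairwise couplings."""
    return q @ J @ q

A = np.ones((1, N))                         # one constraint: sum_i q_i = C
C = np.array([3.0])
expect_F = make_exact_expectation(f0, A, C, beta=1.0)
nu, steps = dual_ascent(expect_F, C, nu0=[0.0], eta=0.1)
print(nu, expect_F(nu))                     # <F> is driven to C = 3
```

The step width η = 0.1 is a conservative fixed choice for this toy case; the experiments below choose it by line search instead.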

Let us briefly describe the above procedure for a constraint on a simple summation, namely, Fk(q) = ∑i qi. For instance, the initial condition can be set with no bias on the system. Then, \(\langle F_k(\mathbf{q})\rangle_{Q^t}\) takes a finite value depending on f0(q). When \(\langle F_k(\mathbf{q})\rangle_{Q^t} > C_k\), \(\nu_k^t\) decreases to reduce \(\langle F_k(\mathbf{q})\rangle_{Q^t}\), and vice versa. The convergence depends on the update rate η; one may use a line search to choose η optimally and attain convergence efficiently. To satisfy the constraints, we need to estimate expectations for the Ising spin glass with the Hamiltonian f0(q) − ∑k νk Fk(q), in which the squared terms for the constraints are absent. This is much easier to implement on the D-Wave 2000Q and the CMOS annealing chip, whose graphs have finite connectivity. Notice that several optimization problems are written only in terms of squared terms, namely, f0(q) = 0. Then, the effective Hamiltonian consists only of linear terms, namely, local magnetic fields. In this case, a special-purpose device such as the D-Wave 2000Q is not needed to generate samples, because the ground state of local fields can be found spin by spin. From this perspective, our technique is most valuable when f0(q) is nontrivial.
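When every Fk is linear, Fk(q) = Σi Aki qi, the constraint part of the effective Hamiltonian, \(-\sum_k \nu_k (F_k(\mathbf{q}) - C_k)\), is a set of longitudinal fields plus a constant, so it adds no couplings to f0(q). The NumPy sketch below converts ν into those fields and, for the case f0(q) = 0, finds the ground state spin by spin; both helper names are hypothetical.

```python
import numpy as np

def constraint_fields(A, C, nu):
    """Write -sum_k nu_k * (A[k] @ q - C[k]) as h @ q + offset.

    Returns the per-variable linear coefficients h_i = -sum_k nu_k * A[k, i]
    and the constant offset = sum_k nu_k * C[k]; no pairwise terms appear.
    """
    A, C, nu = (np.asarray(x, dtype=float) for x in (A, C, nu))
    return -(nu @ A), nu @ C

def ground_state_fields_only(h):
    """Minimizer of h @ q over q in {0,1}^N: set q_i = 1 exactly where h_i < 0."""
    return (np.asarray(h) < 0).astype(int)

rng = np.random.default_rng(3)
A = rng.normal(size=(3, 8))
C = rng.normal(size=3)
nu = rng.normal(size=3)
h, offset = constraint_fields(A, C, nu)
q = ground_state_fields_only(h)
print(h @ q + offset, -nu @ (A @ q - C))    # identical values
```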

Experiments

We test our method on various problems. The first experiment is the selection of the K smallest values among N random values. The original cost function is written as

where hi takes random values following the uniform distribution. We set N = 2000 and K = 5. The squared term in Eq. (21) often appears in applications of the quantum annealer to optimization problems under constraints. In the standard approach, a problem such as Eq. (21) can be embedded on the chimera graph of the D-Wave 2000Q only up to 64 logical variables. In contrast, our technique can handle 2000 logical variables directly. In this case, because f0(q) = 0, we do not necessarily need samples from special-purpose devices; this is just a test for validating our technique. Moreover, the first term in Eq. (21) is so simple that the exact solution is obtained in a straightforward manner, which allows us to check the validity of our technique. The initial condition is set as ν0 = 0. In all the cases shown below, the step width of the steepest ascent is chosen by line search.
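Because the non-squared part of this cost is only linear, the ground state of the effective Hamiltonian can be written down directly. The sketch below assumes Eq. (21) takes the form \(\sum_i h_i q_i + (\lambda/2)(\sum_i q_i - K)^2\), which gives the effective Hamiltonian \(\sum_i (h_i - \nu) q_i + \nu K\) with ground state qi = 1 for hi < ν. In place of the line search, it uses a simple rule of our own: move ν by η in the direction of the gradient \(K - \sum_i q_i\) and halve η whenever that direction flips. The function name is hypothetical.

```python
import numpy as np

def select_k_smallest(h, K, eta0=0.5, max_steps=10_000):
    """Dual ascent on nu for sum_i h_i q_i subject to sum_i q_i = K.

    The ground state of sum_i (h_i - nu) q_i is q_i = 1 if h_i < nu, so no
    sampler is needed. Step rule (stand-in for a line search): move nu by eta
    along the sign of the gradient and halve eta when the sign flips.
    """
    nu, eta, prev_direction = 0.0, eta0, 0
    for step in range(max_steps):
        q = (h < nu).astype(int)                 # ground state of the effective Hamiltonian
        direction = int(np.sign(K - q.sum()))    # sign of the gradient K - sum_i q_i
        if direction == 0:                       # constraint satisfied: stop
            return q, nu, step
        if prev_direction != 0 and direction != prev_direction:
            eta /= 2                             # overshoot: shrink the step width
        nu += eta * direction
        prev_direction = direction
    raise RuntimeError("did not converge within max_steps")

rng = np.random.default_rng(0)
h = rng.uniform(size=2000)                       # N = 2000 as in the experiment
q, nu, steps = select_k_smallest(h, K=5)
print(np.flatnonzero(q), np.sort(np.argsort(h)[:5]))   # same indices
```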

As shown in Fig. 1, we confirm that our technique selects the K smallest values from N random variables and reaches the optimal solution. We plot the residual energy, which is the difference between the cost function and its minimum value.

The second experiment is performed on the number partition problem as in Eq. (7). Then, the effective Hamiltonian is

For the number partition problem, f0(q) = 0, so we do not need samples from a special-purpose device; again, this is just a test for validating our technique. We prepare 2000 integers and permute them randomly, because our available D-Wave 2000Q system has about 2000 active physical qubits. The standard formulation of the number partition problem as in Eq. (7) can be embedded on the chimera graph of the D-Wave 2000Q only up to 64 logical variables. In contrast, our method sets 2000 local magnetic fields, one for each physical qubit on the D-Wave 2000Q chip, without any embedding technique.

In Fig. 2, we plot the cost function (7), not the value of the effective Hamiltonian (22), at each step of our method. In addition, the right panel shows the multiplier νt. We employ steepest ascent to attain the saddle point. The saddle point is expected to be around ν = 0 for the number partition problem, because even a weak magnetic field forces all the spins to point in the same direction, whereas the spins in the optimal solution point in both directions. The initial condition is set to ν0 = 0.2. The result confirms that the optimal solution can be obtained by our technique.

Residual energy (left) and multiplier (right) at each step of our method for the number partition problem. The cross points represent the empirical average of the output from the D-Wave 2000Q, and the dashed curves denote the minimum value. The green line denotes the minimum unit of the residual energy, \((1/N)^2/2\), in the number partition problem. When the energy falls below the green line, the solution is optimal.

The third example is solving a linear equation, in other words, inferring the N-dimensional input from M linear combinations as in Eq. (4). The effective Hamiltonian is

and f0(q) = 0. Again, the effective Hamiltonian consists of only local magnetic fields. Thus, the D-Wave 2000Q can solve the inference problem for 2000-dimensional inputs. We prepare linear combinations of the original signal q0 as y = Aq0, where A is an M × N matrix whose elements follow the Gaussian distribution with zero mean and unit variance, and each \(q_i^0\) takes 0 or 1 with equal probability. When α = M/N > 0.633, a threshold obtained by statistical-mechanical analysis55, the N-dimensional original signal can be inferred from the M linear combinations by solving the optimization problem; in other words, the solution q coincides with the original signal q0. We set M/N = 0.8 and N = 2000. As shown in Fig. 3, we successfully achieve perfect reconstruction of the original input. We observe the residual energy and the mean squared error (MSE), defined as \(\|\mathbf{q} - \mathbf{q}_0\|_2^2/N\). When both the MSE and the residual energy become zero, perfect reconstruction is realized.
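For y = Aq0 with f0(q) = 0, the effective Hamiltonian \(-\sum_\mu \nu_\mu(\sum_k a_{\mu k} q_k - y_\mu)\) consists of the local fields \(h_k = \sum_\mu \nu_\mu a_{\mu k}\), so its β → ∞ ground state is qk = 1 wherever hk > 0. The sketch below iterates Eq. (20) with this deterministic ground state in place of the device's samples. This is our simplification, the function names are hypothetical, and for a random Gaussian A it need not reach perfect reconstruction; the example uses the trivial case A = I, where the iteration provably succeeds.

```python
import numpy as np

def solve_binary_linear(A, y, eta=0.5, max_steps=1000):
    """Dual ascent for A @ q = y with q in {0,1}^N and f0(q) = 0.

    Uses the ground state of the local-field Hamiltonian -(nu @ A) @ q instead of
    annealer samples (a deterministic simplification of the sampling step).
    """
    nu = np.zeros(A.shape[0])
    for step in range(max_steps):
        q = (nu @ A > 0).astype(int)       # q_k = 1 where the field h_k is positive
        residual = y - A @ q               # gradient C - <F> with C = y and F = A q
        if np.allclose(residual, 0):
            return q, step
        nu += eta * residual
    return q, max_steps

def mse(q, q0):
    """Mean squared error ||q - q0||_2^2 / N."""
    return np.mean((np.asarray(q) - np.asarray(q0)) ** 2)

rng = np.random.default_rng(4)
q0 = rng.integers(0, 2, size=10)
A = np.eye(10)                              # trivial instance: M = N, A = identity
q, steps = solve_binary_linear(A, A @ q0)
print(steps, mse(q, q0))                    # MSE is 0.0 for this trivial instance
```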

Residual energy (left) and MSE (right) at each step in our method for solving the linear equation. The same symbols are used as in Fig. 2 except for the green line. In this figure, the green line denotes zero.

The fourth example is essentially the same problem as the previous one, except that the original input has a two-dimensional structure, as shown in Fig. 4. This problem typically emerges in compressed sensing, where an original input is reconstructed from an insufficient number of outputs by using its sparsity as prior information. We take as q0 the two-dimensional structured data shown in Fig. 4, in which all the nonzero components are connected to each other. For α = 0.6, where the number of outputs is too small to recover the original input with the previous formulation, we employ the following Hamiltonian to infer the original input:

In this case, we regard the first term as f0(q), so sampling with the D-Wave 2000Q is efficient for evaluating the expectation value in the update Eq. (20). As shown in Fig. 5, our method solves the optimization problem in Eq. (24) even with insufficient outputs, M/N < 0.633.

Residual energy and MSE at each step in our method for inferring two-dimensional images. The same symbols are used as in Fig. 2 except for the green line. In this figure, the green line denotes zero.

The fifth example is the traffic flow optimization problem. Following the previous study28, we extract the route data from OpenStreetMap via osmnx56. We prepare candidate routes for each car: the shortest path and its variants. We then assign the binary variable qμ,i to each car i and route μ. Each car selects a single route, satisfying the constraints as in Eq. (2). Here, instead of the constraints, we decompose the quadratic term in f0(q) as follows

Then, the effective Hamiltonian can be reduced to the Ising model in the local-magnetic fields as

where hμ,i = ∑e νe Se,μ,i. Because we reduce f0(q) instead of the constraints, we can easily obtain the expectation value for the effective Hamiltonian without any sampling method, as follows

where \(\mu_i^{*} = \arg\max_{\mu} h_{\mu,i}\). The update equation for each ν then leads to a reasonable solution of the traffic flow optimization problem. However, the original optimization problem has many local minima. We may therefore sample the binary variables following the effective Hamiltonian while tuning the Lagrange multipliers ν. Below, we compare (i) the deterministic way using the expectation (27), (ii) classical sampling following the Gibbs-Boltzmann distribution, and (iii) sampling by the D-Wave 2000Q. The deterministic way quickly converges to a local minimum of the cost function. In the context of statistical mechanics, the deterministic way corresponds to the level of mean-field analysis; in this sense, it is a crude way to find an approximate solution. The other two methods go beyond the mean-field level because of sample fluctuation. We use classical sampling following the Gibbs-Boltzmann distribution and sampling by the D-Wave 2000Q only to select the route μ for each car i depending on the value of hμ,i, while the outputs satisfy the constraints. The essential difference between the two procedures lies in the intermediate dynamics: classical sampling is based on hopping between feasible solutions satisfying the constraints, whereas sampling by the D-Wave 2000Q is driven by the quantum tunneling effect. This difference appears in the quality of the resulting solutions; in our observations for this problem setting, the latter yields slightly better solutions than the former.
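Classical sampling in (ii) can be read as drawing, for each car independently, one route from a Gibbs-Boltzmann distribution over its candidates given the fields hμ,i, so every sample satisfies the single-route constraint. The NumPy sketch below assumes weights \(\exp(\beta h_{\mu,i})\), with the sign chosen so that β → ∞ recovers the deterministic choice \(\mu_i^* = \arg\max_\mu h_{\mu,i}\); the function name and the array layout h[i, μ] are ours, and random fields stand in for the actual hμ,i.

```python
import numpy as np

def sample_routes(h, beta, rng):
    """Draw one route per car with P(mu | car i) proportional to exp(beta * h[i, mu]).

    h has shape (n_cars, n_routes). Each car receives exactly one route, so the
    one-hot (single-route) constraint holds for every sample.
    """
    logits = beta * (h - h.max(axis=1, keepdims=True))   # shift for a stable exp
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    u = rng.random((h.shape[0], 1))                      # one uniform number per car
    idx = (p.cumsum(axis=1) < u).sum(axis=1)             # inverse-CDF sampling
    return np.minimum(idx, h.shape[1] - 1)               # guard against round-off

rng = np.random.default_rng(5)
h = rng.normal(size=(350, 3))            # 350 cars x 3 candidate routes, as in the text
routes = sample_routes(h, beta=2.0, rng=rng)
greedy = h.argmax(axis=1)                # deterministic choice mu_i* (beta -> infinity)
print(np.mean(routes == greedy))         # fraction of cars taking their best route
```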

The number of cars is set to 350, with 3 candidate routes for each car, extracted from actual maps. If we implement the optimization problem straightforwardly, the system contains 1050 spins with fully connected interactions, which cannot be solved directly by the D-Wave 2000Q. As a reference, we show the solution obtained by the Fujitsu digital annealer, because the original optimization problem is difficult to implement directly on the D-Wave 2000Q. We tune λ to attain the best solution from the Fujitsu digital annealer.

As shown in Fig. 6, we obtain lower-energy solutions using the D-Wave 2000Q than with the deterministic way and classical sampling. The results shown in Fig. 6 satisfy the constraint that each car selects a single route, because in this case we do not apply our method to reduce the quadratic term representing the constraints. If our method is applied to the constraints as well, attaining feasible solutions takes longer.

Energy at each step of our method for optimizing the traffic flow in Sendai city. The same symbols are used as in Fig. 2 except for the lines. In this figure, the red line denotes the result obtained by the deterministic way after a few steps, the green line denotes the minimum value obtained by classical sampling during 10 steps, and the black line represents the reference result attained by direct use of the Fujitsu digital annealer.

We test our method for traffic flow optimization in Sendai city. Sendai city and nearby areas were struck by the tsunami after the great earthquake in 2011. The optimal solution provides appropriate information for evacuation while avoiding traffic jams. The obtained solutions are plotted in Fig. 7. For comparison, we also plot the shortest-path policy, in which each car selects the shortest path between its starting and destination points. For reference, the resulting cost function is 920697 by the deterministic way, 848671 by classical sampling, and 830309 by our method with the D-Wave 2000Q, whereas the shortest-path policy results in 1050159.

Results in Sendai city obtained by (upper panel) the shortest-path policy and (lower panel) our method. The strength of the red color on each road (edge) represents the number of cars passing along it. The blue points are the starting and destination points. We test our method around the point at 38.28° north latitude and 140.92° east longitude.

In addition, we tested our method in Kyoto city, as shown in Fig. 8. In this case, the cost function was 1602847 by the deterministic way, 1288513 by classical sampling, and 1284577 by our method with the D-Wave 2000Q, whereas the shortest-path policy results in 1782220.

Results in Kyoto city obtained by (upper panel) the shortest-path policy and (lower panel) our method. The same symbols and lines are used as in Fig. 7. We tested our method around the point at 35.03° north latitude and 135.80° east longitude.

As demonstrated above, we solved traffic flow optimization problems exceeding the directly embeddable size on the D-Wave 2000Q. The quality of the results is essentially at the same level as that of the Fujitsu digital annealer, which can directly solve optimization problems with a large number of binary variables.

To investigate how many iterations our method typically takes, we ran it 1000 times each on the number partition problem (Eq. (7)) and on the inference of the N-dimensional input (Eq. (4)). Because we constructed these examples ourselves, their ground states are known a priori. As shown in Fig. 9, all runs converged to the ground state, typically within several dozen iterations.

Histogram of the number of iterations needed to solve Eq. (7) (left) and Eq. (4) (right). The horizontal axis denotes the number of iterations needed to attain the ground state. The left vertical axis shows the number of occurrences in 1000 runs, and the right one shows the corresponding ratio. The curves are obtained by kernel density estimation as a guide to the eye.
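The smooth curves in the histogram figure come from kernel density estimation. A minimal NumPy sketch of a Gaussian kernel density estimate is shown below; the bandwidth rule, the grid, and the synthetic Poisson-distributed iteration counts are assumptions made only for illustration and are not the data behind Fig. 9.

```python
import numpy as np


def gaussian_kde(samples, grid, bandwidth=None):
    """Gaussian kernel density estimate of `samples`, evaluated on `grid`.

    When `bandwidth` is None, the normal-reference rule of thumb
    h = 1.06 * std * n**(-1/5) is used (an assumption; the bandwidth used
    for the figure is not stated).
    """
    x = np.asarray(samples, dtype=float)
    n = x.size
    if bandwidth is None:
        bandwidth = 1.06 * x.std(ddof=1) * n ** (-0.2)
    # Standardized distance between every grid point and every sample.
    z = (np.asarray(grid, dtype=float)[:, None] - x[None, :]) / bandwidth
    return np.exp(-0.5 * z**2).sum(axis=1) / (n * bandwidth * np.sqrt(2.0 * np.pi))


# Synthetic iteration counts for 1000 runs (hypothetical, not the paper's data).
rng = np.random.default_rng(0)
iterations = rng.poisson(lam=30, size=1000)

counts = np.bincount(iterations)          # occurrences of each iteration count
ratio = counts / iterations.size          # the same histogram as a fraction of runs
grid = np.linspace(0, 80, 801)
density = gaussian_kde(iterations, grid)  # smooth guide-to-the-eye curve
```

To overlay the density on the count axis, multiply it by the number of runs, since the histogram bins have unit width.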

As the last example, we take a simple problem with double constraints, as often seen in practical optimization problems. The original cost function is written as

where \(h_{it}\) denotes randomly generated values, L is the linear size of the system, and the number of spins is N = L × L. This is a simplified version of double-constraint problems such as the traveling salesman problem, in which an agent visits each city i exactly once and visits exactly one city at each time t. To enforce this rule, the cost function includes the double constraints as its second and third terms in Eq. (28). If we naively use the D-Wave 2000Q to solve this problem, the number of spins is limited to N = 64, and thus the number of cities to L = 8. We instead consider the random-field Ising model with the double constraints in Eq. (28) to confirm the advantage of our method in satisfying such hard constraints. We implement the following effective Hamiltonian, which drastically increases the tractable number of spins up to L = 45, namely N = 2025, as

Figure 10 shows the results of our method in searching for the ground state of the original cost function (28).

Summation of the second and third terms at each iteration (left) and histogram of the number of iterations needed to solve Eq. (29) (right). The same symbols are used as in Figs. 3 and 9. In the left panel, the vertical axis denotes the summation of the second and third terms in Eq. (10), and the horizontal one represents the iteration step.
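Equation (28) is not reproduced in this section, so the sketch below assumes the common quadratic-penalty form with unit coefficients for its second and third terms, \(\sum_t(\sum_i q_{it}-1)^2+\sum_i(\sum_t q_{it}-1)^2\). It evaluates this constraint part, the kind of quantity tracked in the left panel of Fig. 10, and checks the spin counts quoted for L = 8 and L = 45. The function names are hypothetical.

```python
import numpy as np


def constraint_terms(q):
    """Assumed second + third terms of Eq. (28) for a binary L x L array q[i, t]:
    sum_t (sum_i q[i, t] - 1)**2 + sum_i (sum_t q[i, t] - 1)**2.
    Unit penalty coefficients are an assumption."""
    q = np.asarray(q, dtype=int)
    per_time = q.sum(axis=0) - 1  # for each t: cities occupied at time t, minus one
    per_city = q.sum(axis=1) - 1  # for each i: times city i is visited, minus one
    return int((per_time**2).sum() + (per_city**2).sum())


def number_of_spins(L):
    """N = L x L binary variables, one per (city, time) pair."""
    return L * L
```

The penalty vanishes exactly for permutation matrices, that is, when every city and every time step is used once.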

Summary

We proposed a technique that converts various optimization problems with squared terms into problems with only linear terms using the Hubbard-Stratonovich transformation. The squared terms hamper efficient computation when special-purpose hardware, such as the D-Wave 2000Q, is used to solve the optimization problems. Our method mitigates this difficulty: instead of manipulating the squared terms directly, we iteratively solve an optimization problem with linear terms and the remaining nontrivial terms. We tested our method on various examples. The first is selecting K variables under a random field, the second is the number partition problem, the third is solving linear equations, and the fourth is reconstructing structured data. These are optimization problems of finding feasible solutions that satisfy the constraints. Although our method can attain feasible solutions, it takes a long time to converge to them because a number of Lagrange multipliers need to be tuned. In this sense, the application of our method is very important.
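The idea summarized above, replacing a squared constraint by a linear term with an adjustable multiplier and then solving only a linear problem, can be sketched for the first example, selecting K variables under a random field. This is a hedged illustration rather than the paper's algorithm: the multiplier ν is tuned here by bisection (a substitute for the paper's iterative update), and the linear subproblem is solved exactly spin by spin instead of being sampled on hardware. The function name `select_k_by_multiplier` is hypothetical.

```python
import numpy as np


def select_k_by_multiplier(h, K, max_iter=200):
    """Choose K of N binary variables to minimize -sum_i h_i q_i.

    The constraint sum_i q_i = K is handled by a single multiplier nu that
    enters only linearly: the effective cost -sum_i (h_i + nu) q_i is
    minimized spin by spin (q_i = 1 iff h_i + nu > 0). nu is tuned by
    bisection (an assumed choice), which is valid because the number of
    selected spins is nondecreasing in nu.
    """
    h = np.asarray(h, dtype=float)
    if not 0 <= K <= h.size:
        raise ValueError("K must lie between 0 and len(h)")
    lo, hi = -h.max() - 1.0, -h.min() + 1.0  # selects 0 spins at lo, all N at hi
    for step in range(1, max_iter + 1):
        nu = 0.5 * (lo + hi)
        q = (h + nu > 0).astype(int)  # exact minimizer of the linear subproblem
        count = int(q.sum())
        if count == K:
            return q, nu, step
        if count < K:
            lo = nu  # too few spins selected: raise the field
        else:
            hi = nu  # too many spins selected: lower the field
    raise RuntimeError("no suitable multiplier found (ties in h?)")
```

Bisection works here only because the number of selected spins is monotone in ν, the same kind of monotonicity that the text relates to the Griffiths inequality for \({\left\langle {F}_{k}({\bf{q}})\right\rangle }_{{\bf{q}}}\).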

Apart from the previous four examples, the fifth is the application of our method to give a lower-energy solution satisfying the constraints. Here, a part of the original optimization problem is reduced to a linear term, which becomes the local field. In this case, we do not handle the constraints with our method, because they are easy to satisfy under the local field alone. Our method then leads to feasible solutions with lower energy. To attain feasible solutions, we proposed three methods: the first is a deterministic way to find local minima; the second is classical sampling that jumps between feasible solutions; and the third is sampling of the binary variables with the D-Wave 2000Q, whose samples do not necessarily satisfy the constraints. The third method therefore searches a wider range of candidate solutions, and it efficiently found better solutions than the first and second ones.

These types of applications generalize the straightforward application of our approach to a target Hamiltonian without any constraints. Setting \(f_0({\bf{q}}) = 0\) and C = 0, such a Hamiltonian is written as

where A is a QUBO matrix, Λ is the diagonal matrix of the eigenvalues \(\lambda_k\) (k = 1, 2, ⋯, N) of A, and U is the orthogonal matrix diagonalizing A. Then \({F}_{k}({\bf{q}})={{\bf{u}}}_{k}^{{\rm{T}}}{\bf{q}}\). In this case, the saddle-point equation is

where \({\bf{h}}={{\boldsymbol{\nu }}}_{k}^{{\rm{T}}}{{\bf{u}}}_{k}\) and

The Taylor expansion of the Gibbs free energy G(0) with respect to A then leads to the Plefka expansion. Truncating the expansion at second order yields a saddle-point equation corresponding to the Thouless-Anderson-Palmer (TAP) equation. In this sense, our approach is a generalization of mean-field analysis.
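The decomposition used here rests on the identity \({\bf q}^{\rm T}A{\bf q}=\sum_k\lambda_k F_k({\bf q})^2\) with \(F_k({\bf q})={\bf u}_k^{\rm T}{\bf q}\), valid for any real symmetric A; each squared term is then a candidate for the Hubbard-Stratonovich transformation. The NumPy check below uses a random symmetric matrix as a hypothetical QUBO matrix and takes \({\bf u}_k\) to be the kth column of U; signs and prefactors of the omitted equations are not reproduced.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 8
B = rng.normal(size=(N, N))
A = (B + B.T) / 2           # hypothetical symmetric QUBO matrix
lam, U = np.linalg.eigh(A)  # A = U diag(lam) U^T; column k of U is u_k


def quadratic_form(q):
    """q^T A q evaluated directly."""
    return float(q @ A @ q)


def sum_of_squares(q):
    """sum_k lambda_k F_k(q)^2 with F_k(q) = u_k^T q."""
    F = U.T @ q
    return float(np.sum(lam * F**2))


q = rng.integers(0, 2, size=N).astype(float)  # a random binary configuration
print(quadratic_form(q), sum_of_squares(q))   # agree up to rounding error
```

For binary variables \(q_i^2=q_i\), so the linear part of a QUBO can be placed on the diagonal of A before diagonalizing.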

In general, we may solve the optimization problem by changing the Lagrange multipliers iteratively. On the D-Wave 2000Q, fully connected interactions can be handled for up to 64 binary variables. Using our method, however, we can solve QUBO problems, including the Sherrington-Kirkpatrick model, a typical problem in spin-glass theory, with up to 2048 variables. Sampling under a given local field is an easy task. However, when the local fields change with the Lagrange multipliers, the sampling acquires a history, which crucially affects the quality of the resulting solutions. To solve the Ising spin-glass problem more efficiently, beyond naive mean-field theory, our method needs another ingredient that accounts for this history effect, analogous to the correction introduced in the TAP equation. The quantum tunneling effect might possibly remove the effect of the history. In our experience, the D-Wave 2000Q yields better solutions than the classical way of sampling. This will be detailed in a future study.
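The statement that sampling under a given local field is easy follows from factorization: with only linear terms, the Boltzmann distribution is a product over spins. A minimal sketch, assuming the cost \(-\sum_i h_i q_i\) for binary \(q_i\) and an inverse temperature β (both conventions chosen for illustration), draws exact samples without any Markov chain. The function name is hypothetical.

```python
import numpy as np


def sample_under_local_field(h, beta, n_samples, rng):
    """Exact samples of binary q from P(q) proportional to exp(beta * sum_i h_i q_i).

    With only linear terms the distribution factorizes, so every q_i is an
    independent Bernoulli variable with P(q_i = 1) = 1 / (1 + exp(-beta h_i)).
    Returns the samples and these marginal probabilities.
    """
    p = 1.0 / (1.0 + np.exp(-beta * np.asarray(h, dtype=float)))
    samples = (rng.random((n_samples, p.size)) < p).astype(int)
    return samples, p
```

The history effect discussed above arises only because h changes between iterations; this sketch covers the fixed-field case.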

As pointed out in the previous section, the performance of our method strongly depends on the form of \(f_0({\bf{q}})\). All the cases tested in the present study have the simple form \(f_0({\bf{q}}) = 0\) or a linear combination of the variables. In the traveling salesman problem, the interaction is \(f_0({\bf{q}})=\sum_{t}\sum_{i,j}d_{ij}\,q_{i,t}\,q_{j,t+1}\), where \(d_{ij}\) is the distance between different cities i and j. Because \(d_{ij} > 0\), the Griffiths inequality might not hold in this case. In other words, \({\left\langle {F}_{k}({\bf{q}})\right\rangle }_{{\bf{q}}}\) is not necessarily a monotonically increasing function of ν. Therefore, the current version of our method might not efficiently reach the ground state of typical hard optimization problems.
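For concreteness, the traveling-salesman interaction \(f_0({\bf q})\) can be evaluated directly for a binary assignment \(q_{i,t}\). The sketch below assumes that t + 1 wraps around to the first time step (a closed tour), which the text does not specify, and offers an open-path option; for a permutation matrix the value is simply the tour length. The function name `tsp_interaction` is hypothetical.

```python
import numpy as np


def tsp_interaction(q, d, cyclic=True):
    """f0(q) = sum_t sum_{i,j} d[i, j] * q[i, t] * q[j, t + 1] for binary q[i, t].

    cyclic=True assumes a closed tour (time t + 1 wraps around to 0);
    cyclic=False drops the term linking the last and first time steps.
    """
    q = np.asarray(q, dtype=float)
    d = np.asarray(d, dtype=float)
    if cyclic:
        cur, nxt = q, np.roll(q, -1, axis=1)  # nxt[:, t] = q[:, (t + 1) % L]
    else:
        cur, nxt = q[:, :-1], q[:, 1:]
    # Sum over cities i, j and time t of d[i, j] * cur[i, t] * nxt[j, t].
    return float(np.einsum("ij,it,jt->", d, cur, nxt))
```

For example, with three cities at positions 0, 1, and 3 on a line visited in the order 0, 2, 1, the closed tour gives 3 + 2 + 1 = 6 and the open path gives 5.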

We again emphasize that the original optimization problems solved in our study, which have fully connected interactions, cannot be embedded on the D-Wave 2000Q. In this sense, by reducing the squared terms that generate fully connected interactions, our method takes a step toward more difficult tasks on the D-Wave 2000Q. Our method can reveal the potential not only of the D-Wave 2000Q but also of the CMOS annealing chip: with it, these devices need not suffer from embedding the optimization problem on the sparse graph imposed by each piece of hardware. In addition, our method makes it possible to deal with four-body interactions. By reducing the four-body interactions to squared terms of two-body interactions via diagonalization, we can obtain an effective two-body interacting system. In this sense, our method extends the range of Ising models that can be solved with special-purpose hardware. Furthermore, our method is not restricted to solving optimization problems. Because our technique is based on statistical mechanics, it can also be used to perform efficient sampling at low temperatures. This may uncover the hidden potential of special-purpose hardware not only for solving optimization problems but also for Boltzmann machine learning.
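One way to read the reduction of four-body interactions by diagonalization is to treat each ordered pair (i, j) as a single index, so that \(\sum_{ijkl}J_{ijkl}q_iq_jq_kq_l={\bf p}^{\rm T}M{\bf p}\) with \(p_{(ij)}=q_iq_j\); diagonalizing the symmetrized M turns this into a weighted sum of squares of two-body expressions, each of which can then be linearized as above. The NumPy check below illustrates this reading with random, hypothetical couplings; it is not necessarily the construction intended in the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 4
J = rng.normal(size=(N, N, N, N))  # hypothetical four-body couplings J[i, j, k, l]


def four_body_energy(q):
    """sum_{i,j,k,l} J[i,j,k,l] q_i q_j q_k q_l evaluated directly."""
    return float(np.einsum("ijkl,i,j,k,l->", J, q, q, q, q))


# Treat the ordered pair (i, j) as one index: M[(i, j), (k, l)] = J[i, j, k, l].
M = J.reshape(N * N, N * N)
M = (M + M.T) / 2              # symmetrizing leaves p^T M p unchanged
mu, W = np.linalg.eigh(M)      # M = W diag(mu) W^T


def as_squared_two_body_terms(q):
    """sum_m mu_m G_m(q)^2 with the two-body expression G_m(q) = sum_{ij} W[(ij), m] q_i q_j."""
    p = np.outer(q, q).reshape(-1)  # p[(i, j)] = q_i q_j
    G = W.T @ p
    return float(np.sum(mu * G**2))
```

Both functions agree for any configuration q, so the four-body energy is rewritten exactly as squared two-body terms.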

References

Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355–5363, https://doi.org/10.1103/PhysRevE.58.5355 (1998).

Kirkpatrick, S., Gelatt, C. D. & Vecchi, M. P. Optimization by simulated annealing. Science 220, 671–680, https://doi.org/10.1126/science.220.4598.671 (1983).

Hukushima, K. & Nemoto, K. Exchange Monte Carlo method and application to spin glass simulations. J. Phys. Soc. Jpn. 65, 1604–1608, https://doi.org/10.1143/JPSJ.65.1604 (1996).

Monasson, R. & Zecchina, R. Statistical mechanics of the random k-satisfiability model. Phys. Rev. E 56, 1357–1370, https://doi.org/10.1103/PhysRevE.56.1357 (1997).

Monasson, R. Optimization problems and replica symmetry breaking in finite connectivity spin glasses. J. Phys. A: Math. Gen. 31, 513 (1998).

Mezard, M. & Montanari, A. Information, Physics, and Computation (Oxford University Press, Inc., New York, NY, USA, 2009).

Suzuki, S. & Okada, M. Residual energies after slow quantum annealing. J. Phys. Soc. Jpn. 74, 1649–1652, https://doi.org/10.1143/JPSJ.74.1649 (2005).

Morita, S. & Nishimori, H. Mathematical foundation of quantum annealing. J. Math. Phys. 49, https://doi.org/10.1063/1.2995837 (2008).

Ohzeki, M. & Nishimori, H. Quantum annealing: An introduction and new developments. J. Comput. Theor. Nanosci. 8, 963–971, https://doi.org/10.1166/jctn.2011.1776 (2011).

Santoro, G. E., Martoňák, R., Tosatti, E. & Car, R. Theory of quantum annealing of an Ising spin glass. Science 295, 2427–2430, https://doi.org/10.1126/science.1068774 (2002).

Martoňák, R., Santoro, G. E. & Tosatti, E. Quantum annealing of the traveling-salesman problem. Phys. Rev. E 70, 057701, https://doi.org/10.1103/PhysRevE.70.057701 (2004).

Baldassi, C. & Zecchina, R. Efficiency of quantum vs. classical annealing in nonconvex learning problems. Proc. Natl. Acad. Sci. 115, 1457–1462, https://doi.org/10.1073/pnas.1711456115 (2018).

Johnson, M. W. et al. A scalable control system for a superconducting adiabatic quantum optimization processor. Supercond. Sci. Technol. 23, 065004 (2010).

Berkley, A. J. et al. A scalable readout system for a superconducting adiabatic quantum optimization system. Supercond. Sci. Technol. 23, 105014 (2010).

Harris, R. et al. Experimental investigation of an eight-qubit unit cell in a superconducting optimization processor. Phys. Rev. B 82, 024511, https://doi.org/10.1103/PhysRevB.82.024511 (2010).

Bunyk, P. I. et al. Architectural considerations in the design of a superconducting quantum annealing processor. IEEE Trans. Appl. Supercond. 24, 1–10, https://doi.org/10.1109/TASC.2014.2318294 (2014).

Amin, M. H. Searching for quantum speedup in quasistatic quantum annealers. Phys. Rev. A 92, 052323 (2015).

Ohzeki, M. Quantum annealing with the Jarzynski equality. Phys. Rev. Lett. 105, 050401, https://doi.org/10.1103/PhysRevLett.105.050401 (2010).

Ohzeki, M., Nishimori, H. & Katsuda, H. Nonequilibrium work on spin glasses in longitudinal and transverse fields. J. Phys. Soc. Jpn. 80, 084002, https://doi.org/10.1143/JPSJ.80.084002 (2011).

Ohzeki, M. & Nishimori, H. Nonequilibrium work performed in quantum annealing. J. Phys.: Conf. Ser. 302, 012047 (2011).

Somma, R. D., Nagaj, D. & Kieferová, M. Quantum speedup by quantum annealing. Phys. Rev. Lett. 109, 050501 (2012).

Kadowaki, T. & Ohzeki, M. Experimental and theoretical study of thermodynamic effects in a quantum annealer. J. Phys. Soc. Jpn. 88, 061008, https://doi.org/10.7566/JPSJ.88.061008 (2019).

Rosenberg, G. et al. Solving the optimal trading trajectory problem using a quantum annealer. IEEE J. Sel. Top. Signal Process. 10, 1053–1060, https://doi.org/10.1109/JSTSP.2016.2574703 (2016).

Perdomo-Ortiz, A., Dickson, N., Drew-Brook, M., Rose, G. & Aspuru-Guzik, A. Finding low-energy conformations of lattice protein models by quantum annealing. Sci. Rep. 2, 571 (2012).

Hernandez, M. & Aramon, M. Enhancing quantum annealing performance for the molecular similarity problem. Quantum Inf. Process. 16, 133, https://doi.org/10.1007/s11128-017-1586-y (2017).

Li, R. Y., DiFelice, R., Rohs, R. & Lidar, D. A. Quantum annealing versus classical machine learning applied to a simplified computational biology problem. npj Quantum Inf. 4, 14, https://doi.org/10.1038/s41534-018-0060-8 (2018).

Venturelli, D., Marchand, D. J. J. & Rojo, G. Quantum annealing implementation of job-shop scheduling. arXiv:1506.08479 (2015).

Neukart, F. et al. Traffic flow optimization using a quantum annealer. Front. ICT 4, 29 (2017).

Henderson, M., Novak, J. & Cook, T. Leveraging adiabatic quantum computation for election forecasting. arXiv:1802.00069 (2018).

Crawford, D., Levit, A., Ghadermarzy, N., Oberoi, J. S. & Ronagh, P. Reinforcement learning using quantum Boltzmann machines. arXiv:1612.05695 (2016).

Arai, S., Ohzeki, M. & Tanaka, K. Deep neural network detects quantum phase transition. J. Phys. Soc. Jpn. 87, 033001, https://doi.org/10.7566/JPSJ.87.033001 (2018).

Takahashi, C. et al. Statistical-mechanical analysis of compressed sensing for Hamiltonian estimation of Ising spin glass. J. Phys. Soc. Jpn. 87, 074001, https://doi.org/10.7566/JPSJ.87.074001 (2018).

Ohzeki, M. et al. Quantum annealing: next-generation computation and how to implement it when information is missing. Nonlinear Theory and Its Appl., IEICE 9, 392–405, https://doi.org/10.1587/nolta.9.392 (2018).

Neukart, F., VonDollen, D., Seidel, C. & Compostella, G. Quantum-enhanced reinforcement learning for finite-episode games with discrete state spaces. Front. Phys. 5, 71, https://doi.org/10.3389/fphy.2017.00071 (2018).

Khoshaman, A., Vinci, W., Denis, B., Andriyash, E. & Amin, M. H. Quantum variational autoencoder. Quantum Sci. Technol. 4, 014001 (2018).

Ohzeki, M., Miki, A., Miyama, M. J. & Terabe, M. Control of automated guided vehicles without collision by quantum annealer and digital devices. Front. Comput. Sci. 1, 9, https://doi.org/10.3389/fcomp.2019.00009 (2019).

Okada, S., Ohzeki, M., Terabe, M. & Taguchi, S. Improving solutions by embedding larger subproblems in a D-Wave quantum annealer. Sci. Rep. 9, 2098, https://doi.org/10.1038/s41598-018-38388-4 (2019).

Okada, S., Ohzeki, M. & Tanaka, K. The efficient quantum and simulated annealing of Potts models using a half-hot constraint. arXiv:1904.01522 (2019).

Okada, S., Ohzeki, M. & Taguchi, S. Efficient partition of integer optimization problems with one-hot encoding. Sci. Rep. 9, 13036, https://doi.org/10.1038/s41598-019-49539-6 (2019).

Seki, Y. & Nishimori, H. Quantum annealing with antiferromagnetic fluctuations. Phys. Rev. E 85, 051112, https://doi.org/10.1103/PhysRevE.85.051112 (2012).

Seki, Y. & Nishimori, H. Quantum annealing with antiferromagnetic transverse interactions for the Hopfield model. J. Phys. A: Math. Theor. 48, 335301 (2015).

Ohzeki, M. Quantum Monte Carlo simulation of a particular class of non-stoquastic Hamiltonians in quantum annealing. Sci. Rep. 7, 41186 (2017).

Arai, S., Ohzeki, M. & Tanaka, K. Dynamics of order parameters of non-stoquastic Hamiltonians in the adaptive quantum Monte Carlo method. arXiv:1810.09943 (2018).

Okada, S., Ohzeki, M. & Tanaka, K. Phase diagrams of one-dimensional Ising and XY models with fully connected ferromagnetic and anti-ferromagnetic quantum fluctuations. J. Phys. Soc. Jpn. 88, 024802, https://doi.org/10.7566/JPSJ.88.024802 (2019).

Stratonovich, R. L. On a Method of Calculating Quantum Distribution Functions. Sov. Phys. Doklady 2, 416 (1957).

Hubbard, J. Calculation of partition functions. Phys. Rev. Lett. 3, 77–78, https://doi.org/10.1103/PhysRevLett.3.77 (1959).

Yamaoka, M. et al. A 20k-spin Ising chip to solve combinatorial optimization problems with CMOS annealing. IEEE J. Solid-State Circuits 51, 303–309, https://doi.org/10.1109/JSSC.2015.2498601 (2016).

Tsukamoto, S., Takatsu, M., Matsubara, S. & Tamura, H. An accelerator architecture for combinatorial optimization problems. FUJITSU Sci. Tech. J. 53, 8 (2017).

Suzuki, M. Relationship between d-dimensional quantal spin systems and (d + 1)-dimensional Ising systems: Equivalence, critical exponents and systematic approximants of the partition function and spin correlations. Prog. Theor. Phys. 56, 1454–1469, https://doi.org/10.1143/PTP.56.1454 (1976).

Ohzeki, M. Message-passing algorithm of quantum annealing with nonstoquastic Hamiltonian. J. Phys. Soc. Jpn. 88, 061005, https://doi.org/10.7566/JPSJ.88.061005 (2019).

White, S. R. et al. Numerical study of the two-dimensional Hubbard model. Phys. Rev. B 40, 506–516, https://doi.org/10.1103/PhysRevB.40.506 (1989).

Neven, H., Denchev, V. S., Rose, G. & Macready, W. G. QBoost: Large scale classifier training with adiabatic quantum optimization. In Hoi, S. C. H. & Buntine, W. (eds.) Proceedings of the Asian Conference on Machine Learning, vol. 25 of Proceedings of Machine Learning Research, 333–348 (PMLR, Singapore Management University, Singapore, 2012).

Hen, I. & Sarandy, M. S. Driver Hamiltonians for constrained optimization in quantum annealing. Phys. Rev. A 93, 062312, https://doi.org/10.1103/PhysRevA.93.062312 (2016).

Hen, I. & Spedalieri, F. M. Quantum annealing for constrained optimization. Phys. Rev. Appl. 5, 034007, https://doi.org/10.1103/PhysRevApplied.5.034007 (2016).

Tanaka, T. A statistical-mechanics approach to large-system analysis of CDMA multiuser detectors. IEEE Trans. Inf. Theory 48, 2888–2910 (2002).

Boeing, G. OSMnx: New methods for acquiring, constructing, analyzing, and visualizing complex street networks. Comput. Environ. Urban Syst. 65, 126–139, https://doi.org/10.1016/j.compenvurbsys.2017.05.004 (2017).

Acknowledgements

The authors would like to thank Masamichi J. Miyama, Shuntaro Okada, Shunta Arai, and Shu Tanaka for the fruitful discussions. The present work was financially supported by JSPS KAKENHI Grant No. 19H01095 and by the Next Generation High-Performance Computing Infrastructures and Applications R&D Program of MEXT. The research was partially supported by the National Institute of Advanced Industrial Science and Technology. We utilized the Fujitsu digital annealer by courtesy of Fujitsu Limited.

Author information

Contributions

M.O. performed the experiments, analyzed all the results, and wrote the manuscript.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ohzeki, M. Breaking limitation of quantum annealer in solving optimization problems under constraints. Sci Rep 10, 3126 (2020). https://doi.org/10.1038/s41598-020-60022-5

DOI: https://doi.org/10.1038/s41598-020-60022-5
