Abstract

A main goal in the analysis of a complex system is to infer its underlying network structure from time-series observations of its behaviour. The inference process is often done by using bi-variate similarity measures, such as the cross-correlation (CC) or mutual information (MI), however, the main factors favouring or hindering its success are still puzzling. Here, we use synthetic neuron models in order to reveal the main topological properties that frustrate or facilitate inferring the underlying network from CC measurements. Specifically, we use pulse-coupled Izhikevich neurons connected as in the Caenorhabditis elegans neural networks as well as in networks with similar randomness and small-worldness. We analyse the effectiveness and robustness of the inference process under different observations and collective dynamics, contrasting the results obtained from using membrane potentials and inter-spike interval time-series. We find that overall, small-worldness favours network inference and degree heterogeneity hinders it. In particular, success rates in C. elegans networks – that combine small-world properties with degree heterogeneity – are closer to success rates in Erdös-Rényi network models rather than those in Watts-Strogatz network models. These results are relevant to understand better the relationship between topological properties and function in different neural networks.

Similar content being viewed by others

Introduction

Network Neuroscience seeks to unravel the complex relationship between functional connectivity in neural systems (i.e., the correlated neural activity) and their underlying structure (e.g., the brain’s connectome); among other goals1,2,3,4,5. The functional connectivity is responsible for many tasks, such as segregation, transmission, and integration of information6,7. These (and other) tasks are found to be optimally performed by neural structures that show small-world properties2,4,6, which range from human brain connectomics5,8,9 to the Caenorhabditis elegans nematode neural networks10,11,12. In general, structural networks have been revealed by tracing individual neural processes, e.g., by diffusion tensor imaging or electron-microscopy— methods that are typically unfeasible for large neural networks. On the other hand, functional networks are revealed by performing reverse engineering on the time-series measurements of the neural activity, e.g., by using EEG or EMG recordings. These methods are known as network inference and are mainly affected by data availability and precision.

In general, network inference has been approached by means of bi-variate similarity measures, such as the pair-wise cross-correlation9,13,14, Granger Causality15,16,17, Transfer Entropy18,19,20, and mutual information21,22,23,24, to name a few. The main idea behind the similarity approach is that, units sharing a direct connection (namely, a functional or structural link exists that joins them) have particularly similar dynamics, whereas units that are indirectly connected (namely, a functional or structural link joining them is absent) are less likely to show similar dynamics. Although intuitive, this approach has found major challenges in neural systems due to their complex behaviour and structure connectedness, resulting in highly correlated dynamics from indirectly connected units and loosely correlated dynamics for directly connected units. Moreover, because most works have focused on maximising the inference success (in relation to its ability to discover the structural network) and/or optimising its applicability22,24,25,26, we are still unaware of which are the main underlying mechanisms that affect the inference results. Namely, differentiating the underlying structure with the functional connectivity – particularly with respect to establishing which of the different network properties are mainly responsible for hindering inference success rates.

In this work, we reveal that the degree of small-worldness is directly related to the success of correctly inferring the network of synthetic neural systems when using bi-variate similarity analysis. Our neural systems are composed of pulse-coupled Izhikevich maps and connected in network ensembles with different small-worldness values – but statistically similar to the C. elegans neural networks. The inference process is done by measuring the pair-wise cross-correlation and the mutual information between the neurons’ activity. We assess the inference effectiveness by means of receiver operating characteristic (ROC) analysis and, in particular, the true positive rate (TPR), which we show is the only relevant quantity under our inference framework. Our findings show that the TPR peaks around a critical coupling strength where the system transitions from synchronous bursting dynamics to a spiking incoherent regime. Specifically, we find that the highest TPR is for networks with significant small-worldness level. We analyse these results in terms of different topology choices, collective dynamics, neural activity observations (inter-spike intervals or membrane potentials), and time-series length. We expect that these results will help to understand better the role of small-worldness in brain networks, but also in other complex systems, such as climate networks27,28,29.

Results

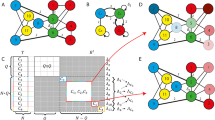

We infer the underlying network of a synthetic neural system by creating a binary matrix of 1s and 0s from the pair-wise cross-correlation (CC) – or mutual information (MI) – matrix of the signal-measurements. The resultant binary matrix represents the inferred connections that the neurons composing the system share, which we obtain by applying a threshold to the CC matrix. The threshold assumes that a strong [weak] similarity in the measured signals, i.e., a CC value above [below] the threshold, correspond to a 1[0] in the inferred adjacency matrix, suggesting that a direct [indirect] structural connection exists. In spite of this (seemingly) over-simplification, this binary process is broadly used in network inference4,5,9,22,24 and it tends to keep the most relevant information from the underlying connectivity. Moreover, when the underlying network is known, it allows to quantify how poorly or efficiently the bi-variate method performs in terms of the receiving operation characteristic (ROC) analysis30,31,32. In particular, we set the threshold such that the inferred network has the same density of connections, \(\rho =2M/N(N-1)\), as the underlying structure, where N is the network size and M is the number of existing links. This means that, in all of our results, we assume an a priori (minimal) knowledge about the underlying structure, namely, we require knowing \(\rho \) in order to choose the threshold such that the inferred network has the given \(\rho \).

The true positive rate, also known as sensitivity, is the proportion of correctly identified connections with respect to the total of existing connections30, i.e., \(TPR\equiv TP/(TP+FN)\), where \(TP\) is the number of true positives and \(FN\) is the number of false negatives. This quantity is part of the ROC analysis, which includes the true negative rate, \(TNR\), false positive rate, \(FPR\), and false negative rate, \(FNR=1-TPR\). Taken together, these variables quantify the performance of any method. However, when fixing the inferred network’s density of connections, \(\rho \), to match that of the underlying network, we can show that the \(TPR\) is the only relevant variable in the ROC analysis – all remaining quantities can be expressed in terms of the \(TPR\) and \(\rho \). For example, the \(TNR=1-FPR\), also known as specificity, can be expressed in terms of the \(TPR\) and \(\rho \) by

where we use the fact that \(TP+FN=M\) is the number of existing connections, \(TN+FP=N\,(N-1)/2-M\) is the number of non-existing connections, \(\rho =2M/N\,(N-1)\) is the density of connections, and \(TP+FP=M\) is the number of connections we keep fixed for the inferred matrix in order to maintain \(\rho \) invariant. Hence, as a result of our threshold choice, \(FN=FP\), implying that the \(FNR\equiv FN/(FN+TP)\) is identical to the false discovery rate, \(FDR\equiv FP/(FP+TP)\), and that the precision, \(PPV\equiv TP/(TP+FP)\) is identical to the TPR. Overall, these relationships mean that in order to quantify the inference success or failure, we can solely focus on studying how the TPR changes as the dynamical parameters and network structure change.

The following results are derived from time-series measurements of pulse-coupled Izhikevich maps interacting according to different network structures and coupling strengths, where each map’s uncoupled dynamic is set to bursting (see Methods for details on the map and network parameters). Pulse coupling is chosen because of its generality, which has been shown to allow the representation of several biophysical interactions33,34,35, and single parameter tuning, i.e., the coupling strength, \(\varepsilon \). In particular, we register the neurons’ membrane potentials (signals coming from the electrical impulses) and inter-spike intervals (time windows between the electrical pulses) of 10 randomly-set initial conditions and \(T=7\times {10}^{4}\) iterations, from which we discard the first 2 × 104 iterations as transient (we also analyse the effects of keeping shorter time-series).

Without losing generality, we restrict our analyses to connecting the neurons (maps) in symmetric Erdös-Rényi (ER)36 and Watts-Strogatz (WS)37 network ensembles that have identical size, N, and sparse density of connections, \(\rho \), similar to that of the C. elegans frontal and global neural network10,11,12. Specifically, when constructing the ER and WS network ensembles we set \({N}_{f}=131\) with \({\rho }_{f}\simeq 0.08\) (which corresponds to setting a mean degree, \({\langle \bar{k}\rangle }_{f}\simeq 10.5\)) or \({N}_{g}=277\) with \({\rho }_{g}\simeq 0.05\) (which corresponds to setting a mean degree, \({\langle \bar{k}\rangle }_{g}\simeq 13.8\)) to match the macroscopic characteristics of the C. elegans frontal and global networks, respectively. The reason behind this choice is that the C. elegans neural networks are one of the most cited examples of real-world small-world networks5,10,11,12,37, showing small average shortest paths connecting nodes and high clustering. Namely, this neural networks have a high small-worldness coefficient \(\sigma \), defined as the normalised ratio between the clustering coefficient and average path length38,39, but also show an heterogeneous degree distribution. More importantly, these network ensembles constitute a controlled setting where to compare and distinguish the main topological factors favouring or hindering the inference success, providing us with a reproducible framework to modify the network properties within each realisation.

We find that the resultant average sensitivity from these network ensembles is more significant, robust, and reliable on WS ensembles than on ER ensembles, pointing to a fundamental importance of the underlying small-worldness for a successful inference. In particular, Fig. 1 shows the resultant success rates –in (a) and (b) using the CC as our similarity measure and in (c) and (d) using the MI– for ER (dotted lines with unfilled squares) and WS (dotted lines with unfilled diamonds) ensembles, plus, a comparison with the results we obtain when using the C. elegans (CE) neural frontal (left panels) and global (right panels) network structure (continuous lines with filled circles). Specifically, Fig. 1(a,b) show the ensemble-averaged TPR results for N = 131 and N = 277 pulse-coupled Izhikevich maps, respectively, as a function of the coupling strength, ε, between the maps. From both panels we also note that the CE overall resultant success rates are closer to the ER ensemble-averaged TPR results than to the WS ensemble-averaged TPR results –in spite of the CE small-worldness coefficient for the N = 131 networks being the same as the WS, \(\sigma =2.80\). The results in Fig. 1 show how important the underlying degree distribution and small-worldness are in the generation of collective dynamics that can be analysed by means of a bi-variate inference method with a sufficiently high success rate.

Network inference success rates for different networks, coupling strengths, sizes and similarity measures. Panels (a,c) [Panels (b,d)] show the true positive rates, TPR, obtained using, respectively, cross correlation (CC) and mutual information (MI) measures to infer the networks connecting N = 131 [N = 277] pulse-coupled Izhikevich maps; map parameters are set such that their isolated dynamics is bursting (see Methods). The underlying connectivity structures correspond to Erdös-Rényi (ER), Watts-Strogatz (WS), or C. elegans (CE) frontal [global] neural networks. The TPR values for the ER and WS are ensemble –and initial-condition– averaged. Each of the 20 realisation with similar topological properties to that of the CE is repeated for 10 initial conditions. For the CE, the results are averaged only on the initial conditions. The TPR is found by comparing the true underlying network with the binary matrix obtained from the membrane potential time-series’ CC or MI (\(T=5\times {10}^{4}\) iterations) after fixing a threshold such that the inferred density of connections \({\rho }_{f}\) matches that of the CE: \({\rho }_{f}\simeq 0.08\) in the left panels and \({\rho }_{f}\simeq 0.05\) in the right panels. The horizontal dashed line in all panels is the random inference TPR, namely, the null hypothesis.

Comparing the \(TPR(\varepsilon )\) values in Fig. 1 when using CC – panels (a) and (b) – versus MI – panels (c) and (d)) –, we can see an overall improvement in the inference efficiency regardless of the network size. Specifically, MI achieves larger success rates than CC (\({\rm{\max }}\,{(TPR)}_{MI}\simeq 0.6\), whereas \({\rm{\max }}\,{(TPR)}_{CC}\simeq 0.5\)) for both network sizes as well as it allows to partly infer the network at coupling strengths where CC completely fails to do so; namely, for the interval where \(0.2 < \varepsilon < 0.25\) (CC for this point shows worse TPR values than making a random blind choice). This points to the known fact that the use of MI as a bi-variate similarity measure usually results in more successful and robust network inferences. Instead of trying to find an even better similarity measure that achieves a higher inference success rate, we highlight that we are mainly interested in establishing which are the main topological features that either enhance or hinder the bi-variate inference analysis.

In order to critically explore the significance that the underlying small-worldness has on the resultant inference, we fix the degree distribution and density of connections as we increase [decrease] \(\sigma \) in each of the 20 underlying ER [WS] network realisations using the rewiring method proposed in ref. 40 (see Methods). Figure 2 shows the resultant network inference – quantified by the \(TPR(\varepsilon ,\sigma )\) – after we make the isolated changes in the small-worldness coefficient, \(\sigma \), of the underlying structure for the \(N=131\) pulse-coupled Izhikevich maps (similar results are found for \(N=277\)). The ensemble-averaged inference results (colour coded curves) that we get from making this topological change to \(\sigma \) on the underlying ER and WS networked system are shown in Fig. 2(a,b), respectively. We can see from these panels that the highest \(TPR\) values are achieved for the largest \(\sigma \) values, meaning that the best inference happens for networks with large \(\sigma \). Also, we can see that there is a broad coupling strength interval (\(0.25\lesssim \varepsilon \lesssim 0.33\)) for both network classes that allows us to infer better than making blind random inference (dashed horizontal lines). From these panels, we note that network inference effectiveness increases robustly (namely, regardless of parameter changes) and significantly (namely, reliably across ensembles and random initial conditions) as the small-worldness, \(\sigma \), of the underlying structure is increased – whilst keeping its density of connections and degree distribution invariant. Consequently, in order to increase the inference success rates in the sparse ER networks, we need to increase the local clustering inter-connecting the maps. On the contrary, WS networks show optimal inference efficiency without modifying their clustering because of their inherent large small-worldness coefficient.

Network inference success rates as a function of coupling strength and small-worldness coefficient. Using map and network parameters set as in Fig. 1, panels (a,b) [panels (c,d)] show the ensemble and initial-condition averaged TPR as function of \(\varepsilon \) for N = 131 [N = 277] pulse-coupled Izhikevich maps in Erdös-Rényi (ER) and Watts-Strogatz (WS) network ensembles, respectively. A successive rewiring process40 is done to each network realisation in order to change its small-worldness coefficient, \(\sigma \), whilst maintaining the underlying density of connections and degree distribution invariant. The colour code indicates the resultant \(\sigma \) for each rewiring step that increases [panels (a,c)] or decreases [panels (b,d)] the networks’ small-worldness.

The coupling strength \({\varepsilon }^{\ast }\simeq 0.26\), which maximises the TPR, implies an average impulse per map of \({\varepsilon }^{\ast }/\langle k\rangle \simeq 0.025\). This coupling is associated to a collective regime with a loosely coupled dynamic, as we show in Fig. 3, since it corresponds to an average \(\mathrm{2.5 \% }\) synaptic increment of the membrane potential range (i.e., the difference between maximum and minimum membrane potential values) due to the action from neighbouring maps. The fact that our inference method recovers approximately \(\mathrm{50 \% }\) of all existing connections at \(\varepsilon \sim 0.26\) (\(TPR=0.5\)), implies that the \(FNR=0.5\), and from Eq. (1), this also implies that \(TNR\simeq 0.96\) and that \(FPR\simeq 0.04\) for \(\rho \simeq 0.08\). This means that for \(\varepsilon \sim 0.26\), the inference method is highly efficient in detecting true nonexistent connections (\(TNR\to 1\)) and falsely classifying these connections as existing ones (\(FPR\to 0\)). This efficiency is a consequence of sparse networks having more non-existing connections than existing connections (\(N\,(N-1)/2\gg M\)); as it happens in our ensembles. Hence, in sparse networks the challenge is to correctly identify the existing connections.

Collective dynamics for different coupling strengths and network structures. In panel (a) we show the ensemble-averaged order parameter, R, for the inter-spike intervals time-series of N = 131 maps connected using the C. elegans frontal neural network (CE, with small-worldness coefficient \(\sigma =2.8\)), Erdös-Rényi (ER, with \(\sigma =1.0\)) and Watts-Strogatz (WS, with \(\sigma =2.8\)) ensembles. For the ER networks, panels (b) (ε = 0.23) and (c) (ε = 0.26) show raster plots indicating the firing pattern of the coupled neuron maps before and after the abrupt drop in panel (a)’s R values. Panel (d) shows R for the rewired ER and WS networks, such that all these ensembles have \(\sigma =2.1\) (for comparison, black dots show R for the CE network also shown in panel (a)). Panels (e) (ε = 0.23) and (f) (\(\varepsilon =0.26\)) show the corresponding raster plots.

In spite of the similar results in Fig. 2 panels, we can distinguish a slight advantage in the ER networks ensemble-averaged \(TPR\) values [Fig. 2(a)] over the WS \(TPR\) values [Fig. 2(b)], which also appear when \(N=277\). We understand that differences in the inference results have to appear because of the dependence on the underlying degree distribution (as well as in the small-worldness), as it is observed in the results for the CE and ER networks shown in Fig. 1. In Fig. 2, the degree distributions correspond to those of the ER and WS networks respectively, which are kept invariant as the small-worldness of the underlying network is changed. However, the similarity in the results from Fig. 2(a,b) (for similar small-worldness values) can be explained due to the finite size systems, which make the ER and WS degree distributions similar (a similar behaviour is also observed for \(N=277\) – not shown here). We also note that the modified ER networks \(TPR\) results narrowly outperform WS inference results, where success rates reach values higher than \(\mathrm{50 \% }\) for coupling strengths close to \({\varepsilon }^{\ast }\simeq 0.26\). These \(TPR\) values are significantly higher than making a blind random inference of connections (dashed horizontal lines), which successfully recover only \(\simeq \mathrm{8 \% }\) of the existing connections.

All \(TPR\) curves share an abrupt increase in the success rate around a critical coupling strength of \({\varepsilon }^{\ast }\approx 0.26\). Figures 1 and 2 show this abrupt jump in the inference success for all networks analysed – though the exact value of ε* may vary slightly for each topology realisation. This sudden increase in the success rates points to a drastic change in the systems’ collective dynamics as the coupling strength is increased beyond ε*. In order to analyse the maps’ collective dynamics as a function of ε and the underlying topology, we compute the order parameter, \(R\), defined as the time-average of the squared difference between two inter-spike intervals (ISIs) time-series (i.e., the series of time differences between two consecutive spikes) summed over all pairs of ISIs. Specifically, \(R={\sum }_{j < i}\,{R}_{ij}\), with \({R}_{ij}={\langle {({T}_{i}-{T}_{j})}^{2}\rangle }_{t}\), where \({T}_{i}\) is the i-th neuron ISI time-series and \({\langle \cdot \rangle }_{t}\) is the time-average. This means that the \(R\) value is high [low] when the time series are different [similar].

In Fig. 3(a) we show how the order parameter \(R\) changes with the coupling strength, \(\varepsilon \), for different systems with \(N=131\) neurons (similar results are also found for \(N=277\) – not shown). The \(R\) values for the C. elegans (CE) frontal neural network structure are shown by the filled (black online) circles, whereas for the ER and WS networks, the ensemble-averaged and initial-condition averaged \(R\) values are shown by unfilled (blue online) diamonds and unfilled (red online) circles, respectively. These ensemble averages are calculated from \(20\) realisations, each one also averaged over \(10\) different randomly selected initial conditions. For the CE network, each \(R\) value is solely the average over \(10\) initial conditions. We can observe that close to \(\varepsilon \approx 0.25\), \(R\) decreases abruptly for all network structures – falling \(2\) orders in magnitude. This drop corresponds to a switch from a collective bursting regime to a lightly disordered spiking regime, which is more disordered than the apparently synchronous bursting dyna (\(\varepsilon < 0.25\)), but it is in fact partially coherent – a particularly suitable condition to perform a successful network inference22. For example, Fig. 3 panels (b) and (c) show the raster plots for ER networks with \(N=131\) maps coupled with \(\varepsilon =0.23\) and \(\varepsilon =0.26\), respectively. Similarly, Fig. 3(d) shows the same behaviour for the averaged \(R\) parameter in networks with ER and WS degree distributions but with different small-worldness levels (as previously described). This means that the collective dynamics’ abrupt change also happens for the modified networks, namely, the networks modified by our rewiring process to increase or decrease their overall small-world coefficient. Panels (e) and (f) show the resultant raster plots for \(\varepsilon =0.23\) and \(0.26\), respectively, for a realisation of an ER network with \(N=131\) maps and \(\sigma =2.1\).

Furthermore, we can see from Figs. 1 and 2 that the sensitivity falls rather smoothly for all networks as we increase ε beyond the critical value ε*. The reason behind this smooth change is that, as ε increases beyond ε*, the neurons gradually begin to fire in a more ordered spiking, namely, achieving synchronisation. Thus, partial coherence between the time-series vanishes and inference becomes impossible. The smooth decrease in sensitivity can be observed by the rate in which the order parameter decreases for \(\varepsilon \) larger than ε*, as in Fig. 3 panels (a) and (d). In general, the neural systems we analyse stay in a partially coherent spiking regime for an interval of coupling strength values (approximately between \(\varepsilon \approx 0.25\) and \(0.30\)), where network inference \(TPR\) values remain above the random line.

So far we have shown that increasing small-worldness favours network inference, obtaining success rates that appear robust to changes in the degree-distribution type (e.g., ER, WS, and CE), initial conditions, and similar for a broad coupling strength region. However, we can see from Fig. 1 that as the N increases from 131 (panel (a)) to 277 (panel (b)), the \(TPR\) drops significantly for the C. elegans networks. The reason for this drop comes from the broadness in the global CE neural-network’s degree-distribution. As we can see from Fig. 4, when N = 131 (panel (a)), all degree distributions are somewhat similar and narrow, but when N = 277 (panel (b)), the CE topology shows the presence of hubs and a long tailed distribution. This is why on Fig. 1(b), the \(TPR\) results for the N = 277 WS network ensemble are extremely similar to those \(TPR\) values when N = 131 in Fig. 1(a). Similarly, we can see the same resemblance in the \(TPR\) results for ER networks, which also hold a narrow degree distribution, as shown by the dashed curves in Fig. 4. On the contrary, the significant differences emerging from the CE degree distributions for N = 131 and N = 277 impact directly into the inference success rates. This leads us to believe that heterogeneity in the node degrees hinders network inference.

Average degree distributions of our neural network structures. Panel (a) [(b)] shows the N = 131 [N = 277] nodes degree-distributions for Erdös-Rényi (ER, dashed – blue online) and Watts-Strogatz (WS, continuous – pink online) ensembles, averaged over 20 network realisations. Also, the C. elegans (CE) frontal [global] neural network structure is shown with continuous black lines.

We find that the former results are also robust to changes in the time-series length. Moreover, our conclusions regarding small-worldness favouring inference still hold if one chooses a different time-series representation for the neural dynamics of each map. Namely, our findings hold for inter-spike intervals (ISI) as well as membrane potentials (see Supplementary Information for further details). Remarkably, we see that when using ISIs to measure the neural activity, inference success rates are significantly lower than when using membrane potentials – regardless of the particular topology or collective dynamics. However, these \(TPR\) values are more robust to changes in the topology realisation (i.e., different structures constructed with the same canonical models and parameters). This leads us to conclude that, using ISIs instead of membrane potentials, allows us to achieve worst inference success rates but with a more reliable outcome.

Discussion

In this work, we have shown that network inference methods based on the use of cross correlation (CC) or mutual information (MI) to measure similarities between components of a synthetic neural network are more effective when inferring small-world structures than other types of networks. This conclusion has broad implications, since CC and MI are widely used to reveal the underlying connectivity of real neural systems, such as the brain, and to gain information about long-range interaction in other systems, such as in climate networks. The effect that the topology has on the network inference success rates has been recently analysed in other networks. For example, in41 the authors show that the topological properties affect network inference performance in small, weighted, and directed gene-regulatory networks. Our results support that the topological properties of complex systems are of importance when attempting to infer the connectivity from observations of nodes’ behaviour.

We have shown that, for networks with similar degree distributions, the small-worldness level is the main topological factor affecting the inference success rates. The results shown in Figs. 1 and 2 account for the reliability and robustness of this conclusion. Also, in Fig. 1 we observe that our inferring method is consistently and robustly more successful when inferring Watts-Strogatz networks than the C. elegans (CE) neural structure, despite both having the same small-worldness level. This points to the effect that degree-distribution range has on the inference success, namely, the broader the degree distribution, the less effective the inference is. These conclusions complement other works, which are focused on obtaining high inference performances rather than studying the main topological factors affecting the success rates, such as refs. 42,43.

Our results show that the appearance of highly connected nodes (hubs), such as in the CE global network, is another important factor, hindering successful inference. In other words, we find that success rates are generally lower when inferring networks which have higher degree heterogeneity. This finding is relevant because real small-world networks, such as the C. elegans neural structure, often combine small-world properties with other complex features – such as the presence of hubs, hierarchies or rich clubs –, resulting in a higher degree heterogeneity with respect to the canonical Watts-Strogatz network model. In particular, hubs have been related to the phenomenon of hub synchronisation in brain networks44 and scale-free networks45. Hub synchronisation is particularly detrimental for network inference, since it leads to strong correlations between non-connected nodes and weak correlations between the hubs and their neighbouring nodes.

Regardless of the underlying structure, the coupling strength range that allows for a successful network inference using bi-variate similarity measures is located after a critical value, in which the system transitions from a collective dynamics with an apparently synchronous bursting regime to a collective asynchronous spiking. This transition has been reported to take place in C. elegans neural networks46 and corresponds to a partially-coherent state, which has been shown to be necessary for having successful network inference22. Figure 3 shows an abrupt change in the systems’ order parameter around the critical coupling strength, revealing a coherence-loss after the transition. Although we can only perform successful network inferences when the systems are in partial coherence, it is reasonable to assume that real neural systems are in such states, since they consistently transition between synchronous and asynchronous states in order to perform different tasks and cognitive functions1,2,3,4,5,6,46,47.

In future works it would be crucial to study how these conclusions extend to other coupling models and network structures. In ref. 47 the authors show how the combined action of two different coupling types (electrical and chemical synapses) can lead to novel dynamical regimes in neural systems with the C. elegans structure such as chimera states, which could play a key role in the development of brain diseases. In this context it would be relevant to study how these different coupling models and collective dynamics affect the relationship between the network’s small-worlndess level and the inference success rates that we have reported. Furthermore, it would be relevant to address the question of how our results extend to network structures with other degree distributions, such as scale-free networks.

Methods

Synthetic neural network model

Our synthetic neural model is the Izhikevich map33,34,35, which belongs to the bi-dimensional quadratic integrate-and-fire family. This map consists of a fast variable, \(v\), representing the membrane potential, and a slow variable, u, modelling changes in the conductance of the ionic channels. One of the main advantages of using Izhikevich maps is that it combines numerical efficiency (inherent to map-based models, which we can simply iterate to find their temporal evolution) with biological plausibility34. The isolated map equations of motion are given by

When different values of \(i\), \(c\), and \(d\), are fixed, the Izhikevich map can show extensive dynamical regimes, which have been observed in real neurons. We set the parameter values such that the regime exhibits bursting dynamics. Namely, d = 0, c = −58, and \(I=2\). However, when Izhikevich maps interact, the resulting single-neuron dynamics can differ significantly from the bursting regime.

The interactions are set to be pulsed via the fast variable, \(v\), and controlled by a global coupling-strength order parameter, \(\varepsilon \). This pulse-coupling type is able to represent many real neural interactions. With this model, every time a neuron spikes it sends a signal to the adjacent neurons (i.e., to all neurons that are connected to it), instantly advancing their membrane potentials by a constant value. Specifically, the dynamics for the \(n\)-th neuron is given by

where \({k}_{i}\) is the \(i\)-th node degree (i.e., its number of neighbours), \({A}_{ij}\) is the \(ij\)-th entry of the network’s adjacency matrix, \(\varepsilon \) is the coupling strength, and \(\delta (x)\) is the Kronecker’s delta-function. We iterate Eq. (3) \(7\times {10}^{4}\) steps from \(10\) random initial conditions for each topology and coupling strength, removing a transient of \(2\times {10}^{4}\) steps. In particular, the coupling term, \(\frac{\varepsilon }{{k}_{i}}{\sum }_{j\ne i}{A}_{ij}\delta ({v}_{j,n}-\mathrm{30)}\), acts as follows. If a connection between neurons \(i\) and \(j\) exists, then \({A}_{i,j}=1\) and neuron \(i\) receives an input of value \(\varepsilon /{k}_{i}\) every time neuron \(j\) reaches the threshold \({v}_{j,n}=30\). Otherwise, the neuron remains unchanged.

C. elegans neural structure

We use data from Dynamic Connectome Lab10,11 to construct the C. elegans’ frontal (N = 131 nodes) an global (N = 277 nodes) neural networks. These networks are represented by weighted and directed graphs, that we simplify by considering the unweighted non-directed versions. The reason behind this choice is to bring up-front the role of structure alone into our systems’ collective behavior. Under these symmetric considerations, we find that the network’s mean degree is \({\bar{k}}_{f}=10.5\) (\({\overline{k}}_{g}=13.8\)) for the frontal (global) connectome, with a sparse edge density of \({\rho }_{f}=0.08\) (\({\rho }_{g}=0.05\)). The average shortest-path length for our C. elegans frontal (global) neural network is \({\bar{l}}_{f}=2.5\) (\({\bar{l}}_{g}=2.6\)) and its clustering coefficient is \({C}_{f}=0.25\) (\(Cg=0.28\)). Both neural structures have short average path lengths (similar to Erdö-Rényi networks with equal edge density) and high clustering coefficients (similar to Watts-Strogatz networks with equal average degree), hence, they show small-world properties. The small-worldness coefficient of our C. elegans frontal (global) neural network is \({\sigma }_{f}=2.8\) (\({\sigma }_{g}=5.1\)), which falls within the expected small-world range (\(\sigma > 2\)).

Network ensembles

In order to study the role that small-worldness and degree heterogeneity have in the network inference results, we build two ensembles of 20 Erdös-Rényi (ER) adjacency matrices with N = 131 and N = 277 nodes respectively, and two ensembles of 20 Watts-Strogatz (WS) adjacency matrices. We choose the number of nodes in our network ensembles to match the sizes of the C. elegans (CE) frontal (N = 131 nodes) and global (N = 277) neural structures. We also tune the algorithms to build these networks such that they have the same edge densities as the CE frontal and global neural networks, namely, \(\rho =0.08\) and \(\rho =0.05\), respectively. In addition, the WS ensemble is also tuned such that the algorithm parameters produce networks with similar small-worldness levels to that of the CE networks. In what follows, \(\langle k\rangle \) denotes average among network ensembles, while \(\overline{k}\) expresses the average among nodes of a single network.

Our ER ensemble is built with a probability to linking nodes in each network of \(p=0.08\) (0.05) for N = 131 (N = 277) – values which are above the percolation transition. These probabilities yield mean degrees of \(\langle \overline{k}\rangle =10.5\) (\(\langle \overline{k}\rangle =13.8\)) for the N = 131 (N = 277) networks. In both cases, the variability within the ensemble of these mean degrees is \({\sigma }_{\overline{k}}=0.3\). We can corroborate that the nematode’s neural networks also have mean degrees falling within one standard deviation of the ER ensemble-averaged mean degrees. The clustering coefficient in the ER model is usually low (C = p in the thermodynamic limit), being \(\langle C\rangle =0.08\) (\(\langle C\rangle \mathrm{=0.05}\)) for our N = 131 (N = 277) network ensembles. The ER networks also hold a small average shortest-path length. In our ensembles, the shortest-path lengths are \(\langle \overline{l}\rangle =2.3\) (\(\langle \overline{l}\rangle =2.4\)) for the N = 131 (N = 277) networks. Correspondingly, the averaged small-worldness levels of our ER ensembles are \(\langle \sigma \rangle =1.0\) in both cases – as expected –, indicating the absence of small-world effect.

The Watts-Strogatz (WS) algorithm takes an initial ring configuration in which all nodes are linked to k/2 neighbours to each side, and then rewires all edges according to some probability p37. Using this model, we construct a network ensemble with link density and small-worldness levels similar to the CE neural networks. In particular, we choose a mean degree and rewiring probability that yields similar average path lengths and clustering coefficients as the CE neural structures. Specifically, we fix the mean degree at an integer value, namely, \(\langle k\rangle =10\) (14) for \(N=131\) (277) nodes. Then, for each rewiring probability p, we generate 20 adjacency matrices and calculate the mean average path length and clustering coefficients. Thus, for each rewiring probability p we have a point in the \([C,\langle l\rangle ]\) space. This allows us to choose the rewiring probability, \({p}^{\ast }\), which holds the closest point in the \([C,\langle l\rangle ]\) space to the CE networks values. For such \({p}^{\ast }\), our ensembles have shortest-path lengths of \(\langle \overline{l}\rangle =2.5\) (\(\langle \overline{l}\rangle =2.6\)), and clustering coefficients of \(C=0.4\) (\(C=0.28\)) when N = 131 (\(N=277\)). Correspondingly, our networks’ small-worldness levels are \(\langle \sigma \rangle =2.8\) (\(\langle \sigma \rangle =5.3\)) when N = 131 (N = 277). These values are similar to the CE small-worldness levels and indicate the presence of the small-world effect.

Controlling small-worldness

To obtain the results shown in Fig. 2 we build network ensembles that share the same degree distribution but have different small-worldness levels. We achieve that using the rewiring scheme proposed by Maslov et al. in40. This method consists in taking two pairs of connected nodes, say (a, b) and (c, d), removing their links and adding new crossed edges, for example (a, d) and (b, c), always checking that the new links were absent before and that the network remains connected after this process. This scheme preserves the degree of each node, hence the degree distribution remains invariant. However, this rewiring changes the clustering coefficient and average path length, thus, modifying the small-worldness coefficient, σ. To construct network ensembles with the same degree distributions but modified small-worldness, we start from the ER and WS network ensembles. Then, we perform multiple random rewiring steps – accepting or rejecting them – until we achieve a desired value for σ for each network realisation. Finally, we store the resultant networks as a modified ER or WS ensemble and repeat these steps to achieve another σ value. Consequently, we obtain network ensembles with ER and WS degree distributions but different small-worldness levels.

Bi-variate similarity measures

Our method to infer the underlying network of a synthetic neural system is done by creating binary matrices of 1s and 0s from the pair-wise cross-correlation or mutual information matrices of the signal measurements. Given two time-series, for example two membrane potentials \({v}^{\mathrm{(1)}}\) and \({v}^{\mathrm{(2)}}\), we calculate the pair-wise CC between them as

where T is the time-series length, \(\bar{v}\) is its time-average, and \({\sigma }_{v}\) is its standard deviation. The CC is able to detect linear relationships between time-series. Moreover, we take its absolute value in order to include anti-synchronous states, these are strongly correlated states, possibly due to an underlying connection between the neurons.

Alternatively, we can compare two signals by means of their mutual information, which performs better when the relationship between the time-series is non-linear. We find the mutual information between two membrane potentials, \({v}^{\mathrm{(1)}}\) and \({v}^{\mathrm{(2)}}\), from

where Hv is an estimate for the membrane potential’s Shannon entropy and \({H}_{({v}^{1},{v}^{2})}\) is an estimate for their joint Shannon entropy. Specifically, given a membrane potential time-series, v, we estimate its Shannon entropy by splitting the configuration space (i.e. the membrane potential possible values range) into 20 equally-sized bins and calculating the frequency fk in which the time-series falls within the k-th bin. We then calculate \({H}_{v}\equiv -{\sum }_{k}{f}_{k}log({f}_{k})\). Similarly, we estimate the joint Shannon entropy by splitting the two-dimensional configuration space in 400 (20 × 20) equally sized bins, and finding the frequency, fk,j, in which the joint (2-D) time-series falls within the bin [k, j]. Then, \({H}_{(u,v)}\equiv -{\sum }_{k}\,{\sum }_{j}\,{f}_{k,j}\,\log ({f}_{k,j})\).

References

Bassett, D. S. & Sporns, O. Network neuroscience. Nat. Neurosci. 20, 353 (2017).

Medaglia, J. D., Lynall, M.-E. & Bassett, D. S. Cognitive network neuroscience. J. Cognit. Neurosci. 27, 1471–1491 (2015).

Sporns, O. Contributions and challenges for network models in cognitive neuroscience. Nat. Neurosci. 17, 652 (2014).

Bullmore, E. & Sporns, O. Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 10, 186 (2009).

Sporns, O., Tononi, G. & Kötter, R. The human connectome: a structural description of the human brain. PLoS computational Biol. 1, e42 (2005).

Deco, G., Tononi, G., Boly, M. & Kringelbach, M. L. Rethinking segregation and integration: contributions of whole-brain modelling. Nat. Rev. Neurosci. 16, 430 (2015).

Sporns, O. Network attributes for segregation and integration in the human brain. Curr. Opin. Neurobiol. 23, 162–171 (2013).

Jorgenson, L. A. et al. The brain initiative: developing technology to catalyse neuroscience discovery. Philos. Trans. R. Soc. B: Biol. Sci. 370, 20140164 (2015).

Haimovici, A., Tagliazucchi, E., Balenzuela, P. & Chialvo, D. R. Brain organization into resting state networks emerges at criticality on a model of the human connectome. Phys. Rev. Lett. 110, 178101 (2013).

Varier, S. & Kaiser, M. Neural development features: Spatio-temporal development of the caenorhabditis elegans neuronal network. PLoS computational Biol. 7, e1001044 (2011).

Ren, Q., Kolwankar, K. M., Samal, A. & Jost, J. Stdp-driven networks and the c. elegans neuronal network. Phys. A: Stat. Mech. its Appl. 389, 3900–3914 (2010).

Antonopoulos, C. G., Fokas, A. S. & Bountis, T. C. Dynamical complexity in the c. elegans neural network. Eur. Phys. J. Spec. Top. 225, 1255–1269 (2016).

Eguiluz, V. M., Chialvo, D. R., Cecchi, G. A., Baliki, M. & Apkarian, A. V. Scale-free brain functional networks. Phys. Rev. Lett. 94, 018102 (2005).

Perrin, J. S. et al. Electroconvulsive therapy reduces frontal cortical connectivity in severe depressive disorder. Proc. Natl. Acad. Sci. 109, 5464–5468 (2012).

Bressler, S. L. & Seth, A. K. Wiener–granger causality: a well established methodology. Neuroimage 58, 323–329 (2011).

Ge, T., Cui, Y., Lin, W., Kurths, J. & Liu, C. Characterizing time series: when granger causality triggers complex networks. N. J. Phys. 14, 083028 (2012).

Sommerlade, L. et al. Inference of granger causal time-dependent influences in noisy multivariate time series. J. Neurosci. methods 203, 173–185 (2012).

Sun, J., Taylor, D. & Bollt, E. M. Causal network inference by optimal causation entropy. SIAM J. Appl. Dyn. Syst. 14, 73–106 (2015).

Villaverde, A. F., Ross, J., Moran, F. & Banga, J. R. Mider: network inference with mutual information distance and entropy reduction. PLoS one 9, e96732 (2014).

Tung, T. Q., Ryu, T., Lee, K. H. & Lee, D. Inferring gene regulatory networks from microarray time series data using transfer entropy. In Twentieth IEEE International Symposium on Computer-Based Medical Systems (CBMS’07), 383–388 (IEEE, 2007).

Basso, K. et al. Reverse engineering of regulatory networks in human b cells. Nat. Genet. 37, 382 (2005).

Rubido, N. et al. Exact detection of direct links in networks of interacting dynamical units. N. J. Phys. 16, 093010 (2014).

Tirabassi, G., Sevilla-Escoboza, R., Buldú, J. M. & Masoller, C. Inferring the connectivity of coupled oscillators from time-series statistical similarity analysis. Sci. Rep. 5, 10829 (2015).

Bianco-Martinez, E., Rubido, N., Antonopoulos, C. G. & Baptista, M. Successful network inference from time-series data using mutual information rate. Chaos: An. Interdiscip. J. Nonlinear Sci. 26, 043102 (2016).

Kramer, M. A., Eden, U. T., Cash, S. S. & Kolaczyk, E. D. Network inference with confidence from multivariate time series. Phys. Rev. E 79, 061916 (2009).

Friedman, N., Linial, M., Nachman, I. & Pe’er, D. Using bayesian networks to analyze expression data. J. computational Biol. 7, 601–620 (2000).

Tsonis, A. A., Swanson, K. L. & Roebber, P. J. What do networks have to do with climate? Bull. Am. Meteorol. Soc. 87, 585–596 (2006).

Donges, J. F., Zou, Y., Marwan, N. & Kurths, J. Complex networks in climate dynamics. Eur. Phys. J. Spec. Top. 174, 157–179 (2009).

Donges, J. F., Zou, Y., Marwan, N. & Kurths, J. The backbone of the climate network. EPL (Europhys. Lett. 87, 48007 (2009).

Brown, C. D. & Davis, H. T. Receiver operating characteristics curves and related decision measures: A tutorial. Chemom. Intell. Lab. Syst. 80, 24–38 (2006).

Fawcett, T. An introduction to roc analysis. Pattern Recognit. Lett. 27, 861–874 (2006).

Rogers, S. & Girolami, M. A bayesian regression approach to the inference of regulatory networks from gene expression data. Bioinforma. 21, 3131–3137 (2005).

Izhikevich, E. M. Simple model of spiking neurons. IEEE Trans. neural Netw. 14, 1569–1572 (2003).

Izhikevich, E. M. Which model to use for cortical spiking neurons? IEEE Trans. neural Netw. 15, 1063–1070 (2004).

Ibarz, B., Casado, J. M. & Sanjuán, M. A. Map-based models in neuronal dynamics. Phys. Rep. 501, 1–74 (2011).

Erdos, P. & Rényi, A. On the evolution of random graphs. Publ. Math. Inst. Hung. Acad. Sci. 5, 17–60 (1960).

Watts, D. J. & Strogatz, S. H. Collective dynamics of ‘small-world’networks. Nat. 393, 440 (1998).

Humphries, M. D. & Gurney, K. Network ‘small-world-ness’: a quantitative method for determining canonical network equivalence. PLoS one 3, e0002051 (2008).

Telesford, Q. K., Joyce, K. E., Hayasaka, S., Burdette, J. H. & Laurienti, P. J. The ubiquity of small-world networks. Brain connectivity 1, 367–375 (2011).

Maslov, S. & Sneppen, K. Detection of topological patterns in protein networks. In Genetic Engineering: Principles and Methods, 33–47 (Springer, 2004).

Muldoon, J. J., Yu, J. S., Fassia, M.-K. & Bagheri, N. Network inference performance complexity: a consequence of topological, experimental and algorithmic determinants. Bioinformatics (2019).

Ren, J., Wang, W.-X., Li, B. & Lai, Y.-C. Noise bridges dynamical correlation and topology in coupled oscillator networks. Phys. Rev. Lett. 104, 058701 (2010).

Kuroda, K., Ashizawa, T. & Ikeguchi, T. Estimation of network structures only from spike sequences. Phys. A: Stat. Mech. its Appl. 390, 4002–4011 (2011).

Vlasov, V. & Bifone, A. Hub-driven remote synchronization in brain networks. Sci. Rep. 7, 10403 (2017).

Pereira, T. Hub synchronization in scale-free networks. Phys. Rev. E 82, 036201 (2010).

Protachevicz, P. R. et al. Bistable firing pattern in a neural network model. Front. computational neuroscience 13 (2019).

Hizanidis, J., Kouvaris, N. E., Zamora-López, G., Díaz-Guilera, A. & Antonopoulos, C. G. Chimera-like states in modular neural networks. Sci. Rep. 6, 19845 (2016).

Acknowledgements

R.A.G. and N.R. acknowledge Comisión Sectorial de Investigación Científica (CSIC) research grant 97/2016 (ini_2015_nomina_m2). All authors acknowledge CSIC group grant “CSIC2018 - FID13 - grupo ID 722”.

Author information

Authors and Affiliations

Contributions

N.R. conceived the numerical experiments, analysed the results and contributed to the writing of the manuscript. R.A.G. conducted the numerical experiments, analysed the results, prepared all figures and wrote the main manuscript. A.M. contributed to the writing of the manuscript. C.C. contributed to the writing of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

García, R.A., Martí, A.C., Cabeza, C. et al. Small-worldness favours network inference in synthetic neural networks. Sci Rep 10, 2296 (2020). https://doi.org/10.1038/s41598-020-59198-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-59198-7

This article is cited by

-

Network structure from a characterization of interactions in complex systems

Scientific Reports (2022)

-

A network method to identify the dynamic changes of the data flow with spatio-temporal feature

Applied Intelligence (2022)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.