Abstract

We present an all-passive, transformable optical mapping (ATOM) near-eye display based on the “human-centric design” principle. By employing a diffractive optical element, a distorted grating, the ATOM display can project different portions of a two-dimensional display screen to various depths, rendering a real three-dimensional image with correct focus cues. Thanks to its all-passive optical mapping architecture, the ATOM display features a reduced form factor and low power consumption. Moreover, the system can readily switch between a real-three-dimensional and a high-resolution two-dimensional display mode, providing a task-tailored viewing experience for a variety of VR/AR applications.

Introduction

The emergence of virtual reality (VR) and augmented reality (AR) technologies opens a new way for people to access digital information. Despite tremendous advances, very few current VR/AR devices are designed to comply with the “human-centric design” principle1, a growing consensus that hardware design should center on human perception2. To meet this standard, a near-eye display must integrate displays, sensors, and processors in a compact enclosure while allowing user-friendly human-computer interaction. Among these four pillar requirements, the display plays a central role in creating a three-dimensional perception3 that mimics real-world objects.

Conventional near-eye three-dimensional displays are primarily based on computer stereoscopy4, which creates depth perception by presenting the two eyes with images that differ by parallax. The visual system fuses these two-dimensional images into a three-dimensional representation of the scene through binocular disparity cues. A long-standing problem in computer stereoscopy is the vergence-accommodation conflict5, in which the viewer is forced to reconcile conflicting depth cues, causing discomfort and fatigue. This problem originates from the mismatch between the fixed depth of the display screen (i.e., the accommodation distance) and the depths of the depicted scenes (i.e., the vergence distance) specified by the binocular disparity cues. This mismatch contradicts the viewer’s real-world experience, where these two distances are always identical.

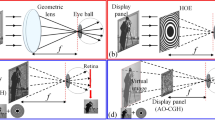

To mitigate the vergence-accommodation conflict, there are generally two strategies6. The first strategy, referred to as temporal multiplexing (varifocal)7,8,9,10,11,12, rapidly sweeps the focal plane of the projector either mechanically or electronically. Mechanical sweeping is normally performed either by adjusting the optical power of the eyepiece (e.g., with an acoustic lens13,14,15, a birefringent lens16 or an Alvarez lens17) or simply by shifting the axial distance between the display and the eyepiece18. Electronic sweeping of the focal plane, in turn, can be accomplished with various active optical devices, such as a liquid crystal lens and a deformable mirror device19,20,21. The varifocal effect can also be created by using multiple layers of spatial light modulators with directional backlighting22 and high-speed shutters23. In temporal-multiplexing-based displays, a series of two-dimensional images is presented sequentially at varied depths by high-speed display devices, such as a digital micromirror device (DMD). Despite their high resolution, temporal-multiplexing-based methods are limited by the fact that the product of the image dynamic range, the number of depth planes, and the volumetric display rate cannot exceed the maximum binary pattern rate of the DMD. For example, given a typical DMD maximum binary pattern rate of 20 kHz and six depth planes displayed at a volumetric refresh rate of 60 Hz, the dynamic range of each image is limited to about 55 grey levels (20,000/(6 × 60) ≈ 55.6), i.e., slightly less than 6 bits.

The second strategy, referred to as spatial multiplexing24,25,26, optically combines multiple panel images and simultaneously projects them towards either different depth planes (multifocal) or different perspective angles (light field) using devices such as a beam splitter27, a freeform prism28, a lenslet array29,30,31, a pinhole array32, a holographic optical element (HOE)33,34 or a liquid-crystal-on-silicon spatial light modulator (LCOS-SLM)35,36.

Compared with temporal multiplexing, spatial-multiplexing-based methods have an advantage in image dynamic range. Nonetheless, current implementations are plagued by various problems. For example, beam splitters usually lead to a bulky setup, making them unsuitable for wearable applications. The lenslet-array-based display (i.e., the light field display) suffers from low lateral resolution (102 × 102 pixels37; 146 × 78 pixels38) due to the trade-off between spatial and angular resolution39,40,41. In holographic displays, although the screen resolution and the number of perspective angles are decoupled, image quality is generally degraded by speckle noise42. Moreover, computing holographic patterns is computationally expensive, restricting their use in real-time display applications43,44,45. Lastly, the LCOS-SLM-based approach relies on an active optical component (the LCOS-SLM) to execute its core function, which increases both power consumption and the device’s form factor46.
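The bit-depth limit in the DMD example above follows directly from the product rule. A quick check in Python, assuming (as the product rule implies) that every grey level costs one equal-duration binary pattern; the function name `max_grey_levels` is illustrative and not part of any DMD toolkit:

```python
import math

def max_grey_levels(pattern_rate_hz: float, depth_planes: int, volume_rate_hz: float) -> int:
    """Upper bound on grey levels per depth image in a DMD-based temporal-multiplexing display.

    Assumes each grey level costs one binary pattern (equal-duration pulse-width
    modulation), so grey levels x depth planes x volume rate <= pattern rate.
    """
    return math.floor(pattern_rate_hz / (depth_planes * volume_rate_hz))

levels = max_grey_levels(20_000, 6, 60)   # 20 kHz DMD, six planes, 60 Hz volumes
bits = math.log2(levels)                  # equivalent bit depth
print(levels, round(bits, 2))             # 55 5.78
```

For 20 kHz, six planes, and 60 Hz, the rule allows 55 grey levels, slightly under 6 bits; halving the number of depth planes roughly doubles the available grey levels.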

To enable a compact near-eye three-dimensional display featuring both high resolution and high image dynamic range, we herein developed an all-passive, transformable optical mapping (ATOM) method. Like the LCOS-SLM-based approach, the ATOM display is based on spatial multiplexing: it simultaneously maps different parts of a two-dimensional display screen to varied depths. Therefore, the product of the lateral resolution and the number of depth planes equals the total number of display pixels at the input end. However, rather than using an LCOS-SLM, the ATOM display employs a passive diffractive optical element, a distorted grating, to achieve the two-dimensional-to-three-dimensional mapping. This all-passive optical architecture reduces both the power consumption and the device’s form factor. Moreover, to improve usability, we built the system on a transformable architecture that allows a simple switch between the three-dimensional and high-resolution two-dimensional display modes, providing a task-tailored viewing experience.

Operating Principle

We illustrate the operating principle of the ATOM display in Fig. 1. In the real-three-dimensional display mode, we divide the input screen into multiple sub-panels, each displaying a depth image. These images are then relayed by a 4f system with a distorted grating at the Fourier plane. Acting as an off-axis Fresnel lens, the distorted grating adds both linear and quadratic phase factors to the diffracted waves, directing the associated sub-panel images to different depths while shifting their centers towards the optical axis. Looking through the eyepiece, the viewer perceives these sub-panel images at different virtual depths. Moreover, by rendering the contents with a depth-blending algorithm47, we can provide continuous focus cues across a wide depth range.

Because the display screen is divided, given N depth planes, each depth image has only 1/N of the display screen’s native resolution, which reduces the field of view (FOV). For applications where a large FOV is preferred, we can transform the ATOM display into a high-resolution two-dimensional display simply by removing the distorted grating from the optical path and displaying a single plane image at the screen’s full resolution. This switching mechanism thus gives users the freedom to adapt the ATOM display to a specific task.
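To make the 1/N trade-off concrete, the sketch below estimates the pixel budget and horizontal FOV of one depth image versus the full screen. It assumes a unit-magnification relay to the eyepiece focal plane, sub-panels arranged side by side along the 912-pixel axis of the DLP4500 described in Methods, the 8 mm eyepiece from Methods, and a hypothetical pixel pitch of 7.6 µm; none of these values is taken from Table 1.

```python
import math

def subpanel_columns(native_columns: int, n_planes: int) -> int:
    """Columns left for each depth image when the screen is split into n_planes strips along x."""
    return native_columns // n_planes

def horizontal_fov_deg(image_width_mm: float, eyepiece_focal_mm: float) -> float:
    """Full horizontal FOV (degrees) of an image placed at the eyepiece front focal plane."""
    return math.degrees(2 * math.atan(image_width_mm / (2 * eyepiece_focal_mm)))

PIXEL_PITCH_MM = 0.0076   # hypothetical pixel pitch, for illustration only
NATIVE_COLUMNS = 912      # assumes the sub-panels are split along this axis
F_EYEPIECE_MM = 8.0       # eyepiece focal length from the Methods section

cols_3d = subpanel_columns(NATIVE_COLUMNS, 3)
fov_2d = horizontal_fov_deg(NATIVE_COLUMNS * PIXEL_PITCH_MM, F_EYEPIECE_MM)
fov_3d = horizontal_fov_deg(cols_3d * PIXEL_PITCH_MM, F_EYEPIECE_MM)
print(cols_3d)                            # 304
print(round(fov_2d, 1), round(fov_3d, 1))
```

Because the FOV depends on image width through an arctangent, cutting the width by three reduces the FOV by somewhat less than a factor of three.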

Methods

We implemented the ATOM display in reflection mode. The optical setup is shown in Fig. 2. At the input end, we used a green-laser-illuminated digital light projector (DLP4500, 912 × 1140 pixels, Texas Instruments) as the display screen. After passing through a beam splitter (50:50), the emitted light is collimated by an infinity-corrected objective lens (focal length, 100 mm; 2X M Plan APO, Edmund Optics). In the real-three-dimensional display mode, we place a reflective distorted grating at the Fourier plane (the back aperture of the objective lens) to modulate the phase of the incident light; in the high-resolution two-dimensional display mode, we replace the distorted grating with a mirror. The reflected light passes back through the objective lens and is reflected by the beam splitter, forming intermediate depth images (real-three-dimensional display mode) or a full-resolution two-dimensional image (high-resolution two-dimensional display mode) at the front focal plane of an eyepiece (focal length, 8 mm; EFL Mounted RKE Precision Eyepiece, Edmund Optics). The resultant system parameters for the high-resolution two-dimensional and real-three-dimensional display modes are shown in Table 1.
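A paraxial estimate links the objective and eyepiece focal lengths above to how strongly the grating must focus. In the sketch below, the grating plane is assumed to be relayed to the eye pupil by the objective and eyepiece acting as an afocal pair with lateral magnification f_eye/f_obj, so a weak power P added at the grating appears at the eye as P(f_obj/f_eye)². This is our own thin-lens approximation, not the design procedure behind Table 2, and the sign assigned to each diffraction order is a convention.

```python
def grating_power_for_shift(delta_diopters: float, f_obj_m: float, f_eye_m: float) -> float:
    """Optical power (1/m) the grating must supply to move the perceived image by delta_diopters.

    Paraxial thin-lens sketch: the objective and eyepiece form an afocal relay with
    lateral magnification f_eye/f_obj between the grating and the eye pupil, so a
    vergence P added at the grating reaches the eye as P * (f_obj / f_eye)**2.
    """
    return delta_diopters * (f_eye_m / f_obj_m) ** 2

F_OBJ_M = 0.100   # objective focal length (100 mm)
F_EYE_M = 0.008   # eyepiece focal length (8 mm)

for shift in (-2.0, 2.0):   # +/-1 orders placed 2 D on either side of the 0-order image
    power = grating_power_for_shift(shift, F_OBJ_M, F_EYE_M)
    print(f"shift {shift:+.0f} D -> grating focal length {1 / power:+.1f} m")
```

Under these assumptions, a ±2 diopter shift needs a grating focal length of roughly ±78 m: the grating is a very weak lens because the 100 mm/8 mm relay multiplies its power by about 156.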

As the enabling component, the distorted grating functions as a multiplexed off-axis Fresnel lens in the ATOM display. Although distorted gratings have long been used in microscopy48, wavefront sensing49, and optical data storage50, here we use one in a display system for the first time. Figure 3(a) illustrates the effect of a distorted grating on an optical system. Given a single object, the distorted grating introduces a different level of defocus into the wavefront of each diffraction order. When combined with a lens, the distorted grating modifies the lens’s focal length and laterally shifts the image for non-zero diffraction orders. Similarly, given multiple objects located in the same plane but at different lateral positions, the distorted grating can simultaneously project their different diffraction-order images onto various depths while keeping their centers aligned (Fig. 3(b)).

Figure 3. Image formation in a distorted-grating-based optical system. (a) Diffraction of a single object through a distorted grating. (b) Diffraction of multiple in-plane objects through a distorted grating. Only the on-axis diffracted images are illustrated. (c) Photograph of a distorted grating. A US dime is placed at the right for size reference.

The unique diffraction property above originates from the spatially varying shift of the grating lines, Δx(x, y) (Fig. 3(a)). The corresponding local phase shift for diffraction order m can be written as:

\[ \phi_m(x,y) = \frac{2\pi m\,\Delta x(x,y)}{d} + \frac{2\pi m\,x}{d} \tag{1} \]

where d is the period of the undistorted grating. On the right-hand side of Eq. 1, the first and second terms describe the contributions from the distortion and from the undistorted grating period, respectively. If the first (distortion) term has a quadratic form,

\[ \Delta x(x,y) = \frac{W d}{\lambda R^{2}}\left(x^{2}+y^{2}\right) \tag{2} \]

where R is the grating radius, W is the defocus coefficient, and λ is the wavelength, the corresponding phase change \({\phi }_{m}^{Q}\) is:

\[ \phi_m^{Q}(x,y) = \frac{2\pi m W}{\lambda R^{2}}\left(x^{2}+y^{2}\right) \tag{3} \]

This phase change can be regarded as that of a lens with an equivalent focal length
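
\[ f_m = \frac{R^{2}}{2 m W}, \qquad \phi_m^{Q}(x,y) = \frac{\pi\left(x^{2}+y^{2}\right)}{\lambda f_m} \tag{4} \]
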
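The sign of the diffraction order m thus determines the sign of the optical power of the distorted grating: the +1 and −1 orders experience equal and opposite defocus, while the 0 order experiences none.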
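The equivalence between the distortion phase and a thin lens can be checked numerically. The sketch below assumes that the quadratic distortion produces a phase 2πmW(x²+y²)/(λR²) and an equivalent focal length f_m = R²/(2mW), the form used for distorted gratings by Blanchard and Greenaway54; the radius, defocus coefficient, and wavelength are hypothetical illustration values.

```python
import math

def quadratic_phase(m: int, W: float, R: float, wavelength: float, x: float, y: float) -> float:
    """Phase (rad) added to order m by the quadratic distortion: 2*pi*m*W*(x^2+y^2)/(lambda*R^2)."""
    return 2 * math.pi * m * W * (x**2 + y**2) / (wavelength * R**2)

def equivalent_focal_length(m: int, W: float, R: float) -> float:
    """Focal length f_m = R^2 / (2 m W); the zero order gets no defocus (infinite f)."""
    if m == 0:
        return math.inf
    return R**2 / (2 * m * W)

def thin_lens_phase(f: float, wavelength: float, x: float, y: float) -> float:
    """Lens phase pi*(x^2+y^2)/(lambda*f) in the same sign convention (0 for f = inf)."""
    return math.pi * (x**2 + y**2) / (wavelength * f)

# Hypothetical values: 5 mm grating radius, 0.5 um defocus coefficient, 532 nm light
R, W, lam = 5e-3, 0.5e-6, 532e-9
for m in (-1, 0, 1):
    f = equivalent_focal_length(m, W, R)
    match = math.isclose(quadratic_phase(m, W, R, lam, 1e-3, 2e-3),
                         thin_lens_phase(f, lam, 1e-3, 2e-3), abs_tol=1e-12)
    print(m, f, match)
```

The ±1 orders get equal and opposite focal lengths and the 0 order gets none, which is why the 0-order image stays at the nominal plane.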

The second, undistorted term in Eq. 1 introduces a linear phase shift to the wavefront of the form:

\[ \phi_m^{L}(x,y) = \frac{2\pi m\,x}{d} = \frac{2\pi x \sin\theta}{\lambda} \tag{5} \]

where θ is the diffraction angle, given by the grating equation:

\[ d \sin\theta = m\lambda \tag{6} \]

Under the small-angle approximation, we relate the diffraction angle θ to the lateral shift \({l}_{{x}_{m}}\) of a sub-panel image in the ATOM display as:

\[ \theta \approx \frac{l_{x_m}}{f_{OBJ}} \tag{7} \]

where \({f}_{OBJ}\) is the focal length of the objective lens (Fig. 2).
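Together, the grating equation and the small-angle relation fix the undistorted period d needed to bring a side sub-panel onto the axis: d ≈ mλf_OBJ/l_xm. The sketch assumes a 532 nm wavelength (the text states only that the projector is green-laser illuminated), the 100 mm objective from Methods, and a hypothetical 2 mm lateral shift.

```python
import math

def diffraction_angle(m: int, wavelength: float, period: float) -> float:
    """Solve the grating equation d*sin(theta) = m*lambda for theta (radians)."""
    return math.asin(m * wavelength / period)

def lateral_shift(m: int, wavelength: float, period: float, f_obj: float) -> float:
    """Image shift at the objective focal plane, l = f_obj * theta (small-angle relation)."""
    return f_obj * diffraction_angle(m, wavelength, period)

def period_for_shift(shift: float, wavelength: float, f_obj: float, m: int = 1) -> float:
    """Undistorted grating period d = m * lambda * f_obj / l from the small-angle relations."""
    return m * wavelength * f_obj / shift

# Hypothetical target: move a side sub-panel 2.0 mm onto the axis, 532 nm, 100 mm objective
d = period_for_shift(2.0e-3, 532e-9, 0.100)
print(f"period = {d * 1e6:.1f} um")                                     # period = 26.6 um
print(f"check: shift = {lateral_shift(1, 532e-9, d, 0.100) * 1e3:.4f} mm")
```

The round-trip check differs from 2 mm only in the fourth decimal place, confirming that the small-angle approximation is adequate at these angles.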

Finally, combining Eqs 1–7 gives:
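
\[ \phi_m(x,y) = \frac{\pi\left(x^{2}+y^{2}\right)}{\lambda f_m} + \frac{2\pi\, l_{x_m}\, x}{\lambda f_{OBJ}} \tag{8} \]
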
Notably, Eq. 8 gives the phase pattern in closed form, directly in terms of the desired depth and lateral shift of each diffracted image. By contrast, in the OMNI display46, calculating the required phase pattern displayed on the LCOS-SLM requires an optimization for each depth image, which is computationally intensive and may become ill-posed as the number of depth planes increases.
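As an illustration of how a closed-form phase can become a fabricable binary mask, the sketch below thresholds the +1-order phase, assumed here to be π(x²+y²)/(λf_1) + 2πl·x/(λf_OBJ), at a 50% duty cycle. Because the m-th order of such a binary grating carries m times this phase, one pattern serves all orders. The parameter values and function names are hypothetical, and the authors’ actual mask layout may differ.

```python
import math

def order1_phase(x, y, f1, shift, f_obj, wavelength):
    """+1-order phase: quadratic (lens of focal length f1) plus linear (lateral shift) terms."""
    quadratic = math.pi * (x**2 + y**2) / (wavelength * f1)
    linear = 2 * math.pi * shift * x / (wavelength * f_obj)
    return quadratic + linear

def binary_mask(x, y, f1, shift, f_obj, wavelength):
    """1 (reflective) where the wrapped +1-order phase lies in [0, pi), else 0: 50% duty cycle."""
    phase = order1_phase(x, y, f1, shift, f_obj, wavelength)
    return 1 if phase % (2 * math.pi) < math.pi else 0

# Hypothetical parameters: f1 = 78 m, 2 mm shift, 100 mm objective, 532 nm
params = dict(f1=78.0, shift=2.0e-3, f_obj=0.100, wavelength=532e-9)
xs = [i * 0.5e-6 for i in range(-2000, 2000)]          # 0.5 um sampling across 2 mm
row = [binary_mask(x, 0.0, **params) for x in xs]
print(f"fill factor along y = 0: {sum(row) / len(row):.3f}")
```

The sampling must resolve the roughly 27 µm carrier period; on a coarser grid the computed fill factor would alias.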

In our prototype, we used only the +1, 0, and −1 diffraction orders and projected their associated images to 0, 2, and 4 diopters, respectively. The corresponding focal lengths and diffraction efficiencies51 of this binary amplitude grating with a 50% duty cycle were computed and are listed in Table 2. We calculated the structural parameters of the distorted grating (Table 3) and fabricated it as a reflective mask by direct laser writing on a soda-lime substrate with a highly reflective chrome coating (Fig. 3(c)).
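Under scalar thin-grating theory, a 0/1 amplitude grating (here, chrome versus bare substrate on a reflective mask) with duty cycle D sends D² of the incident power into the 0 order and [sin(πmD)/(πm)]² into order m. The sketch evaluates this for D = 0.5; real efficiencies depend on the fabricated profile, so these are idealized values rather than the entries of Table 2.

```python
import math

def binary_amplitude_efficiency(m: int, duty: float = 0.5) -> float:
    """Scalar thin-grating efficiency of order m for a 0/1 amplitude grating with the given duty cycle."""
    if m == 0:
        return duty ** 2
    return (math.sin(math.pi * m * duty) / (math.pi * m)) ** 2

eta = {m: binary_amplitude_efficiency(m) for m in (-2, -1, 0, 1, 2)}
print({m: round(v, 4) for m, v in eta.items()})
# Relative transmission an attenuator on the 0-order sub-panel would need to match the +/-1 orders
print(round(eta[1] / eta[0], 3))   # 0.405
```

The ratio 4/π² ≈ 0.41 is the idealized attenuation that would equalize the 0-order image with the ±1-order images; even orders vanish at a 50% duty cycle.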

System Evaluation

To demonstrate the high-resolution two-dimensional display mode, we captured a representative image at the intermediate image plane using a Sony Alpha 7S II digital camera (Fig. 4(a)). To evaluate the real-three-dimensional display performance, we carried out a simple depth-mapping experiment. We displayed the letters “A”, “B”, and “C” on the three sub-panels of the display screen (Fig. 4(b)) and captured the remapped images at the three designated depth planes (0, 2, and 4 diopters). To compensate for the intensity difference between the 0-order and ±1-order images, we applied a neutral density filter to the central sub-panel image to dim it. Figure 4(c–e) shows the remapped letter images at the three designated depths: each letter is in focus at its designated depth and blurred at the other depths.

To assess the focus cues provided by the ATOM display, we adopted a standard two-plane verification procedure47 and measured the modulation transfer function (MTF) at different accommodation distances. We placed the camera at the nominal working distance of the eyepiece and varied its axial position to mimic the accommodation of an eye. Two identical sub-panel images (slanted edge) were displayed on the input screen and projected to depth planes at 0 and 4 diopters with their centers aligned. The target depth was rendered at the dioptric midpoint (2 diopters) using linear depth-weighted blending, in which the intensity assigned to each depth plane decreases linearly with the dioptric distance between the target point and that plane, so the two planes receive equal weights at the midpoint. The experimentally measured accommodation distance is 2.05 diopters, closely matching the target value (Fig. 5(a)).

Next, we tested the system’s stability under the mechanical switch between the two display modes. To characterize the tolerance of the distorted grating to misalignment, we varied the grating’s position both laterally and axially and measured the corresponding display performance, again using the MTF as the metric and the two-plane characterization method above. The MTF decreases as the grating’s displacement from its nominal position increases (Fig. 5(b)). Given a threshold of ΔMTF = 0.1, the system can tolerate a shift of 2 mm along both the lateral and axial directions. This relatively loose tolerance favors low-cost production of the device.

Finally, we demonstrated the system’s capability to display a complex three-dimensional scene. Here, a linear depth-weighted blending algorithm was applied to generate the sub-panel images52. In brief, the intensity assigned to each depth plane decreases linearly with the dioptric distance between the rendered point and that plane, while the sum of the intensities over all depth planes is kept constant. To achieve uniform image brightness across the entire depth range, we applied a tent filter to the linear depth blending: the weight of each depth plane reaches its maximum at the plane’s nominal position and falls linearly to zero at the adjacent planes.
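The tent-filter blending described above can be sketched as follows. It assumes depths are given in diopters and that points outside the covered range are clamped to the nearest plane; the functions `tent_weights` and `blend` are illustrative and not the authors’ rendering code. The weights for each point sum to one, which keeps the total intensity constant across depth.

```python
def tent_weights(z, planes):
    """Linear (tent-filter) blending weights of one point at dioptric depth z over sorted planes."""
    planes = sorted(planes)
    if len(planes) == 1:
        return [1.0]
    z = min(max(z, planes[0]), planes[-1])          # clamp to the covered depth range
    weights = [0.0] * len(planes)
    for i in range(len(planes) - 1):
        lo, hi = planes[i], planes[i + 1]
        if lo <= z <= hi:
            t = (z - lo) / (hi - lo)                 # 0 at planes[i], 1 at planes[i + 1]
            weights[i], weights[i + 1] = 1.0 - t, t
            break
    return weights

def blend(image, depth_map, planes):
    """Split an intensity image (list of rows) into one sub-panel image per depth plane."""
    planes = sorted(planes)
    layers = [[[0.0] * len(row) for row in image] for _ in planes]
    for r, (img_row, depth_row) in enumerate(zip(image, depth_map)):
        for c, (value, z) in enumerate(zip(img_row, depth_row)):
            for k, w in enumerate(tent_weights(z, planes)):
                layers[k][r][c] = w * value
    return layers

print(tent_weights(2.0, [0.0, 4.0]))        # [0.5, 0.5]
print(tent_weights(3.0, [0.0, 2.0, 4.0]))   # [0.0, 0.5, 0.5]
```

The first call reproduces the equal weighting used for the two-plane midpoint test; the second shows a point between the 2 and 4 diopter planes shared only by those two planes.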

Based on the algorithm above, we generated sub-panel images for three designated depth planes (0, 2, and 4 diopters) for a three-dimensional scene (a tilted fence) and displayed them on the input screen. A camera was placed in front of the eyepiece with its focus adjusted to mimic the eye’s accommodation, and images were captured at a series of depths. The representative depth-fused images focused at the near end (4 diopters) and the far end (0 diopters) are shown in Fig. 6(a,b), respectively, closely matching the ground-truth depth map (Fig. 6(c)). To quantitatively assess the focusing effect, we measured the line spread of the fence image at the location indicated by the arrow. The corresponding values at the near focus and far focus are 70 pixels and 120 pixels, respectively.

Discussion and Conclusion

In summary, based on the human-centric design principle, we developed a compact ATOM near-eye display that provides correct focus cues closely mimicking the natural response of human eyes. By using a distorted grating to project the images of different sub-panels of a two-dimensional display screen to designated depths, we created a real three-dimensional image covering the depth range from 4 diopters to infinity. The use of all-passive optical components reduces the system size and power consumption, thereby improving wearability.

Because the input screen is divided, each depth image has a reduced pixel resolution, a common problem for spatial-multiplexing-based approaches46,53. To alleviate this problem, we built the system on a transformable architecture: we can switch between a high-resolution two-dimensional display mode and a multiple-plane three-dimensional display mode simply by removing the distorted grating from, or adding it to, the optical path, thereby providing task-tailored viewing experiences. We further envision that this problem can be mitigated by using an ultra-high-resolution (4K or 8K) micro-display panel at the input end, so that each sub-panel image still possesses a high pixel resolution after division. Such ultra-high-resolution micro-display panels are gradually becoming commercially available.

Although not demonstrated here, more depth planes can be enabled by using a distorted grating with periodic structures along two dimensions54. With such a two-dimensional diffractive element, we can perform lateral optical mapping along both the x and y axes, leading to more efficient use of the screen pixels. In the ideal case, the total number of remapped pixels equals that of the original display screen. For example, given an input screen of N × N pixels, an ATOM display with a two-dimensional distorted grating can project a total of nine depth images, each with N/3 × N/3 pixels and associated with a unique diffraction order (Lx, Ly), where Lx, Ly = 0, ±1.
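The pixel bookkeeping for the two-dimensional case is sketched below: an N × N screen is tiled into nine N/3 × N/3 sub-panels, one per order (Lx, Ly), and together they use every screen pixel. Which tile is assigned to which order depends on the grating’s orientation and sign convention, so the mapping in `subpanel_layout` is a hypothetical choice.

```python
from itertools import product

def subpanel_layout(n: int, orders=(-1, 0, 1)):
    """Tile an n x n screen into one square sub-panel per 2-D diffraction order (Lx, Ly)."""
    tile = n // len(orders)
    layout = {}
    for lx, ly in product(orders, orders):
        col, row = orders.index(lx), orders.index(ly)
        layout[(lx, ly)] = (col * tile, row * tile, tile, tile)   # (x0, y0, width, height)
    return layout

layout = subpanel_layout(1800)
print(len(layout), layout[(0, 0)])     # 9 (600, 600, 600, 600)
used = sum(w * h for _, _, w, h in layout.values())
print(used == 1800 * 1800)             # True
```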

In the current ATOM display prototype, we attenuate the 0-order image to compensate for the difference in diffraction efficiency, at the expense of reduced light throughput. To fully utilize the dynamic range of the display screen, we can instead employ a sinusoidal-phase distorted grating51 rather than a binary-amplitude one and build the system in transmission mode. Such a diffractive phase element allows an approximately uniform energy distribution among the 0 and ±1 orders, and it can be fabricated holographically by recording an interference pattern in a photoresist.
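The uniform three-order split follows from the standard result that a sinusoidal phase grating with peak phase amplitude a diffracts a fraction J_m(a)² of the light into order m. The sketch below finds the amplitude at which J_0² = J_1², using a power-series Bessel function so that only the standard library is needed; it is an idealized scalar estimate, not a design for the proposed grating.

```python
import math

def bessel_j(n: int, a: float, terms: int = 30) -> float:
    """Bessel function of the first kind J_n(a) for n >= 0, from its power series."""
    return sum(
        (-1) ** k / (math.factorial(k) * math.factorial(k + n)) * (a / 2) ** (2 * k + n)
        for k in range(terms)
    )

def sinusoidal_phase_efficiency(m: int, a: float) -> float:
    """Efficiency of order m for a phase profile a*sin(2*pi*x/d): J_m(a)^2, with J_-m^2 = J_m^2."""
    return bessel_j(abs(m), a) ** 2

def equal_three_order_depth(lo: float = 1.0, hi: float = 2.0, tol: float = 1e-12) -> float:
    """Bisect for the amplitude a where J_0(a) = J_1(a); both are positive on [1, 2]."""
    g = lambda a: bessel_j(0, a) - bessel_j(1, a)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(lo) * g(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

a = equal_three_order_depth()
print(f"a = {a:.3f} rad, eta_0 = eta_+1 = eta_-1 = {sinusoidal_phase_efficiency(1, a):.3f}")
```

At this amplitude, each of the three orders receives about 30% of the light (about 90% in total), compared with 25% and about 10% for an ideal 50%-duty binary-amplitude grating.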

Data Availability

The data that support the findings of this research project are available from the corresponding author upon request.

References

Jerald, J. The VR book: Human-centered design for virtual reality (Morgan & Claypool, 2015).

Kress, B. & Shin, M. Diffractive and holographic optics as optical combiners in head mounted displays. In Proceedings of the 2013 ACM conference on Pervasive and ubiquitous computing adjunct publication, 1479–1482 (ACM, 2013).

Sitzmann, V. et al. Saliency in VR: How do people explore virtual environments? IEEE Transactions on Vis. Comput. Graph. 24(4), 1633–1642 (2018).

Geng, J. Three-dimensional display technologies. Adv. Opt. Photonics 5, 456–535 (2013).

Hoffman, D. M., Girshick, A. R., Akeley, K. & Banks, M. S. Vergence–accommodation conflicts hinder visual performance and cause visual fatigue. J. Vis. 8(3), 33 (2008).

Hua, H. et al. Near-eye displays: state-of-the-art and emerging technologies. Three-Dimensional Imaging, Visualization, and Display 2010 and Display Technologies and Applications for Defense, Security, and Avionics IV. Vol. 7690, 769009 (International Society for Optics and Photonics, 2010).

Akşit, K. et al. Near-eye varifocal augmented reality display using see-through screens. ACM Transactions on Graphics (TOG) 36(6), 189 (2017).

Konrad, R. et al. Accommodation-invariant computational near-eye displays. ACM Transactions on Graphics (TOG) 36(4), 88 (2017).

Dunn, D. et al. Wide field of view varifocal near-eye display using see-through deformable membrane mirrors. IEEE Transactions on Vis. Comput. Graph. 23(4), 1322–1331 (2017).

Liu, S., Cheng, D. & Hua, H. An optical see-through head mounted display with addressable focal planes. 2008 7th IEEE/ACM International Symposium on Mixed and Augmented Reality, 33–42 (IEEE, 2008).

Sugihara, T. & Miyasato, T. 32.4: A Lightweight 3‐D HMD with Accommodative Compensation. In SID Symposium Digest of Technical Papers. Vol. 29, 927–930 (Wiley Online Library, 1998).

Dunn, D. et al. 10‐1: Towards Varifocal Augmented Reality Displays using Deformable Beamsplitter Membranes. In SID Symposium Digest of Technical Papers Vol. 49, 92–95 (Wiley Online Library, 2018).

Liu, S., Hua, H. & Cheng, D. A novel prototype for an optical see-through head-mounted display with addressable focus cues. IEEE Transactions on Vis. Comput. Graph. 16(3), 381–393 (2010).

Chang, J.-H. R., Kumar, B. V. K. V. & Sankaranarayanan, A. C. Towards multifocal displays with dense focal stacks. In SIGGRAPH Asia 2018 Technical Papers (ACM, 2018).

Rathinavel, K. et al. An Extended Depth-at-Field Volumetric Near-Eye Augmented Reality Display. IEEE Transactions on Vis. Comput. Graph. 24(11), 2857–2866 (2018).

Love, G. D. et al. High-speed switchable lens enables the development of a volumetric stereoscopic display. Opt. Express 17(18), 15716–15725 (2009).

Stevens, R. E. et al. Varifocal technologies providing prescription and VAC mitigation in HMDs using Alvarez lenses. In Digital Optics for Immersive Displays. Vol. 10676. (International Society for Optics and Photonics, 2018).

MacKenzie, K. J., Hoffman, D. M. & Watt, S. J. Accommodation to multiple‐focal‐plane displays: Implications for improving stereoscopic displays and for accommodation control. J. Vis. 10(8), 22 (2010).

McQuaide, S. C. et al. 50.4: Three‐dimensional Virtual Retinal Display System using a Deformable Membrane Mirror. In SID Symposium Digest of Technical Papers. Vol. 33, 1324–1327 (Wiley Online Library, 2002).

Hu, X. & Hua, H. High-resolution optical see-through multi-focal-plane head-mounted display using freeform optics. Opt. Express 22, 13896–13903 (2014).

Dunn, D. et al. Membrane AR: varifocal, wide field of view augmented reality display from deformable membranes. In ACM SIGGRAPH 2017 Emerging Technologies, 15 (ACM, 2017).

Wetzstein, G. et al. Tensor displays: compressive light field synthesis using multilayer displays with directional backlighting. DSpace@MIT (2012).

Maimone, A. & Fuchs, H. Computational augmented reality eyeglasses. In 2013 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 29–38 (IEEE, 2013).

Hua, H. & Liu, S. Depth-fused multi-focal plane displays enable accurate depth perception. Optical Design and Testing IV. Vol. 7849. (International Society for Optics and Photonics, 2010).

Llull, P. et al. Design and optimization of a near-eye multifocal display system for augmented reality. In Propagation through and Characterization of Distributed Volume Turbulence and Atmospheric Phenomena. (Optical Society of America, 2015).

Wu, W. et al. Content-adaptive focus configuration for near-eye multi-focal displays. In 2016 IEEE International Conference on Multimedia and Expo (ICME), 1–6 (IEEE, 2016).

Feng, J.-L., Wang, Y.-J., Liu, S.-Y., Hu, D.-C. & Lu, J.-G. Three-dimensional display with directional beam splitter array. Opt. Express 25, 1564–1572 (2017).

Cheng, D. et al. Design of a wide-angle, lightweight head-mounted display using free-form optics tiling. Opt. Lett. 36, 2098–2100 (2011).

Lanman, D. & Luebke, D. Near-eye light field displays. ACM Transactions on Graphics (TOG) 32, 220 (2013).

Zhang, H.-L. et al. Tabletop augmented reality 3d display system based on integral imaging. JOSA B 34, B16–B21 (2017).

Otao, K. et al. Light field blender: designing optics and rendering methods for see-through and aerial near-eye display. In SIGGRAPH Asia 2017 Technical Briefs (ACM, 2017).

Song, W. et al. Light field head-mounted display with correct focus cue using micro structure array. Chin. Opt. Lett. 10 (2014).

Kim, H.-j. et al. Three-dimensional holographic head mounted display using holographic optical element. In 2015 IEEE International Conference on Consumer Electronics (ICCE) (IEEE, 2015).

Ooi, C., Wei, N. M. & Ochiai, Y. Eholo glass: electroholography glass. a lensless approach to holographic augmented reality near-eye display. In SIGGRAPH Asia 2018 Technical Briefs (ACM, 2018).

Padmanaban, N., Konrad, R., Stramer, T., Cooper, E. A. & Wetzstein, G. Optimizing virtual reality for all users through gaze-contingent and adaptive focus displays. Proc. Natl. Acad. Sci. 114, 2183–2188 (2017).

Matsuda, N., Fix, A. & Lanman, D. Focal surface displays. ACM Transactions on Graphics (TOG) 36, 86 (2017).

Hua, H. & Javidi, B. A 3d integral imaging optical see-through head-mounted display. Opt. Express 22, 13484–13491 (2014).

Grzegorzek, M., Theobalt, C., Koch, R. & Kolb, A. (eds) Time-of-flight and depth imaging: sensors, algorithms and applications. Lecture Notes in Computer Science, vol. 8200 (Springer, 2013).

Huang, F.-C. et al. Eyeglasses-free display: towards correcting visual aberrations with computational light field displays. ACM Transactions on Graphics (TOG) 33(4), 59 (2014).

Yao, C. et al. Design of an optical see-through light-field near-eye display using a discrete lenslet array. Opt. Express 26(14), 18292–18301 (2018).

Tan, G. et al. Foveated imaging for near-eye displays. Opt. Express 26(19), 25076–25085 (2018).

Park, J.-H. & Kim, S.-B. Optical see-through holographic near-eye-display with eyebox steering and depth of field control. Opt. Express 26(21), 27076–27088 (2018).

Maimone, A., Georgiou, A. & Kollin, J. S. Holographic near-eye displays for virtual and augmented reality. ACM Transactions on Graphics (TOG) 36(4), 85 (2017).

Sun, P. et al. Holographic near-eye display system based on double-convergence light Gerchberg-Saxton algorithm. Opt. Express 26(8), 10140–10151 (2018).

Hamann, S. et al. Time-multiplexed light field synthesis via factored Wigner distribution function. Opt. Lett. 43(3), 599–602 (2018).

Cui, W. & Gao, L. Optical mapping near-eye three-dimensional display with correct focus cues. Opt. Lett. 42, 2475–2478 (2017).

Hu, X. & Hua, H. Design and assessment of a depth-fused multi-focal-plane display prototype. J. Disp. Technol. 10, 308–316 (2014).

Dalgarno, P. A. et al. Multiplane imaging and three dimensional nanoscale particle tracking in biological microscopy. Opt. Express 18, 877–884 (2010).

Roddier, F. Wavefront sensing and the irradiance transport equation. Appl. Opt. 29, 1402–1403 (1990).

Li, M., Larsson, A., Eriksson, N., Hagberg, M. & Bengtsson, J. Continuous-level phase-only computer-generated hologram realized by dislocated binary gratings. Opt. Lett. 21, 1516–1518 (1996).

Kress, B. C. Diffractive micro-optics. In Field Guide to Digital Micro-optics, 20–49 (SPIE Press, 2014).

Ravikumar, S., Akeley, K. & Banks, M. S. Creating effective focus cues in multi-plane 3d displays. Opt. Express 19, 20940–20952 (2011).

Huang, F.-C., Chen, K. & Wetzstein, G. The light field stereoscope: immersive computer graphics via factored near-eye light field displays with focus cues. ACM Transactions on Graphics (TOG) 34, 60 (2015).

Blanchard, P. M. & Greenaway, A. H. Simultaneous multiplane imaging with a distorted diffraction grating. Appl. Opt. 38, 6692–6699 (1999).

Acknowledgements

This work was supported in part by discretionary funds from UIUC and a research grant from Futurewei Technologies, Inc. The authors would also like to thank Ricoh Innovations for gifting the DMD modules.

Author information

Contributions

W.C. executed the experiments. L.G. initiated and supervised the project. W.C. and L.G. analyzed the experimental results. Both authors discussed the results and commented on the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cui, W., Gao, L. All-passive transformable optical mapping near-eye display. Sci Rep 9, 6064 (2019). https://doi.org/10.1038/s41598-019-42507-0
