Abstract

Photoplethysmography (PPG) is a simple, yet powerful technique to study blood volume changes by measuring light intensity variations. However, PPG is severely affected by motion artifacts, which hinder its trustworthiness. This problem is pressing in earables since head movements and facial expressions cause skin and tissue displacements around and inside the ear. Understanding such artifacts is fundamental to the success of earables for accurate cardiovascular health monitoring. However, the lack of in-ear PPG datasets prevents the research community from tackling this challenge. In this work, we report on the design of an ear tip featuring a 3-channel PPG and a co-located 6-axis motion sensor. This enables sensing PPG data at multiple wavelengths and the corresponding motion signature from both ears. Leveraging our device, we collected a multi-modal dataset from 30 participants while they performed 16 natural motions, including both head/face and full-body movements. This unique dataset will greatly support research towards making in-ear vital signs sensing more accurate and robust, thus unlocking the full potential of the next-generation PPG-equipped earables.

Background & Summary

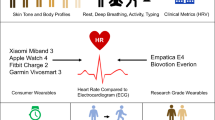

Monitoring vital signs, such as cardiovascular functions, heart rate, oxygen saturation, and blood pressure, through Photoplethysmography (PPG) is common across wearables like smartwatches1. Photoplethysmography, as suggested by its name, is an optical technique used to infer blood volumetric changes in the peripheral circulation. PPG is indeed a remarkable signal, which not only carries a wealth of clinical information (such as heart rate, heart rate variability, blood oxygen saturation, respiration rate, blood pressure, and artery characteristics2,3,4), but can also be used for non-medical applications such as authentication5 and drowsiness detection6.

At the same time, the past years have witnessed the widespread diffusion of a new family of wearables: smart earbuds (also known as earables). Earables are mostly known for their leisure applications (e.g., Apple AirPods), where they enhance the user’s auditory experience with, for instance, noise cancellation and spatially aware audio. However, they are also gaining traction across the research community for personal health monitoring7,8,9,10, activity recognition11,12,13,14, authentication15, and navigation16. Earables are poised to revolutionize the mobile health (mHealth) market17. Thanks to their proximity to the human sensorium (i.e., brain, ears, eyes, mouth, and nose), earables are in a unique position with respect to other, more traditional, wearables like smartwatches18. Indeed, earables have allowed the research community to investigate a number of novel applications such as monitoring cerebral activity during sleep through electroencephalography (EEG)19, tracking eye movements20, and tracking eating episodes, dietary and swallowing activities21.

Notably, previous works have explored PPG sensing in or around the ear focusing on specific applications. However, PPG signal acquisition is particularly challenging in the presence of either ambient light or motion. While the former can be mitigated by ambient light rejection modules (often already implemented in hardware), there still is no unanimously agreed technique to mitigate the latter without a considerable loss of information. Earlier works considered only motion artifacts (MA) arising from body movements, like walking or running22,23,24,25. However, the head and facial region consist of an intricate mesh of muscles and blood vessels that contract and relax with each of their movements. This induces unwanted noise and motion artifacts in the PPG signals recorded from the ear. The interaction between these motions and the signals recorded from in-ear PPG sensors remains entirely unexplored.

Very few openly available datasets feature PPG data from the ear26,27. However, there are no publicly available datasets that explore the effect of facial expressions and head movements on earables. Table 1 presents an overview of existing datasets in the literature that provide PPG signals collected at various body locations. A recent work27 proposed a method to remove motion artifacts for accurate heart rate and blood pressure estimation with PPG sensors placed on the ear lobes. However, it only studies the effect of body motion artifacts on the acquired PPG signals. Hence, there is a strong need for an open-source dataset studying the effect of facial motions on in-ear PPG signals.

To this end, this work aims at providing the research community with a novel, multi-modal dataset which, for the first time, allows studying the impact of body and head/face movements on both the morphology of the PPG wave captured at the ear and the estimation of vital signs. To accurately collect in-ear PPG data, coupled with a 6 degrees-of-freedom (DoF) motion signature, we prototyped and built a flexible research platform for in-the-ear data collection. The platform is centered around a novel ear-tip design which includes a 3-channel PPG sensor (green, red, infrared) and a 6-axis motion sensor (IMU: accelerometer and gyroscope) co-located on the same ear-tip. This allows the simultaneous collection of spatially distant (i.e., one tip in the left and one in the right ear) PPG data at multiple wavelengths and the corresponding motion signature, for a total of 18 data streams (3 PPG channels and 6 IMU axes per ear). Inspired by the Facial Action Coding System (FACS)28, we consider a set of potential sources of motion artifact (MA) caused by natural facial and head movements. Specifically, we gather data on 14 different head and facial motions, including head movements (nodding, shaking, tilting), eye movements (vertical eye movements, horizontal eye movements, brow raiser, brow lowerer, right eye wink, left eye wink), and mouth movements (lip puller, chin raiser, mouth stretch, speaking, chewing). We also collect motion and PPG data under two activities of different intensities that entail the movement of the entire body (walking and running), for a total of 16 motions. Together with in-ear PPG and IMU data, we collect several other vital signs such as heart rate, heart rate variability, and breathing rate from a medical-grade chest device.

With approximately 17 hours of data from 30 participants of mixed gender and ethnicity (mean age: 28.7 years, standard deviation: 5.3 years), our dataset empowers the research community to analyze the morphological characteristics of in-ear PPG signals with respect to motion, device positioning (left ear, right ear), and a set of configuration parameters with their corresponding data quality/power consumption trade-off. We envision that such a dataset could open the door to innovative filtering techniques to mitigate, and eventually eliminate, the impact of MA on in-ear PPG. We ran a set of preliminary analyses on the data and observed statistically significant morphological differences in the PPG signal across different types of motions when compared to a situation where there is no motion. These preliminary results represent the first step towards the detection of corrupted PPG segments and show the importance of studying how head/face movements impact PPG signals in the ear.

To the best of our knowledge, this is the first in-ear PPG dataset that covers a wide range of full-body and head/facial motion artifacts. Being able to study the signal quality and motion artifacts under such circumstances will serve as a reference for future research in the field, acting as a stepping stone to fully enable PPG-equipped earables.

Methods

To accurately analyze the in-ear PPG motion artifacts arising from head and facial motions, we design a controlled experiment and ask participants to perform a set of pre-defined body, head, and facial motions. We opted for a controlled study since it enables running a detailed analysis of the phenomenon under investigation and it is suitable for the reproducibility of the data collection procedure. In this section, we provide details regarding the study population, data collection procedure, and the collected data.

Participants

Thirty individuals (18 males, 12 females, 20–49 years of age, mean age: 28.7 years, standard deviation: 5.3 years) were recruited and voluntarily took part in the study. None of the participants had any underlying heart or respiratory condition, and all were in good health at the time of the study. We used the standard Fitzpatrick skin tone scale29 to group our participants based on skin tone. The scale includes 6 types, 1 being the lightest and 6 being the darkest. Although it is dominated by the type 2 skin tone (n = 18), our dataset also includes the type 1 (n = 2), type 3 (n = 4), type 4 (n = 4), and type 5 (n = 2) skin tone groups.

Before the study, the investigators briefed all the participants, who then gave their written consent (by completing an informed consent form) to release their data publicly. Every participant received a gift card as compensation upon completion of the study. The study was approved by the ethics board of the Department of Computer Science and Technology at the University of Cambridge (application number 1873).

Devices and setup

Given the lack of existing open-source in-ear PPG platforms, we designed a custom head-worn prototype (see Fig. 1c) to collect in-ear PPG signals with established and affordable hardware components. The prototype consists of an ESP32 microcontroller collecting sensor data from both the left and right ears. To facilitate PPG signal acquisition from inside the ear (Fig. 2), we fabricated a flexible PCB carrying a MAXM86161 (https://www.maximintegrated.com/en/products/sensors/MAXM86161.html) PPG sensor and an ST LSM6DSRX (https://www.st.com/en/mems-and-sensors/lsm6dsrx.html) IMU, as shown in Fig. 1a. The flexible PCB is interfaced via the I2C protocol to the ESP32 microcontroller for data acquisition. The MAXM86161 is a well-known 3-channel PPG sensor (green: 520 to 550 nm, red: 660 nm, infrared: 880 nm) designed for in-ear sensing applications. The IMU continuously records 3-axis accelerometer and 3-axis gyroscope data to capture the in-ear motion that occurs during facial expressions or head movements. Both sensors are sampled at 100 Hz. As shown in Fig. 1b, the flexible PCB containing the PPG sensor and the IMU was coated with soft silicone to resemble a typical ear tip, both to provide comfort while wearing the device and to keep it firmly in place within the ear during the various face/head motions. We used a transparent soft silicone gel to prevent any distortions in the acquired PPG signals. Figure 2 reports a drawing of the device when placed inside the ear canal.

(a) Flexible PCB implementation of our earbud featuring a MAXM86161 PPG sensor and a co-located ST LSM6DSRX IMU. (b) An in-ear soft earbud was realized by embedding the in-ear flexible PCB into a transparent silicone mold. (c) Head-worn data acquisition device consisting of an ESP32 microcontroller collecting data from in-ear PPG and IMU sensors in the left and right ear. (d) A participant wearing our earbud-based prototype and taking part in the data collection protocol.

PPG signal quality is not only affected by motion but also by the sensor’s configuration. Typically, sensors allow changing several parameters which affect the acquired signal and consequently the power consumed by the sensor. Given this trade-off, often, optimal parameters for signal quality are not the most efficient in terms of power consumption. To explore this aspect of PPG sensing, we configured our device to change the sensor parameters every 30 seconds. This way, by collecting data for 2 minutes for each motion session we could cycle through 4 different sets of configurations (Table 2). In particular, the MAXM86161 allows changing of three parameters: LED current which determines the brightness of the three LEDs, pulse width which is the time each LED is kept on during measurement, and the integration time which is the period during which the photodiode is active and sampling the reflected light. Notice that pulse width and integration time cannot be controlled individually and only 4 combinations of the two parameters are available in the sensor. As shown in Table 2, we have chosen 4 configurations that offer distinct power consumption profiles and should result in diverse SNR characteristics.
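The timing of the configuration cycle can be sketched as follows. This is a minimal illustration, assuming that the four configurations are applied in order, one per 30-second slot of a 2-minute session; the function name and the 1-based indexing are illustrative choices, and the actual parameter values live in Table 2.

```python
SESSION_S = 120  # each motion session lasts 2 minutes (from the text)
SLOT_S = 30      # the sensor configuration changes every 30 seconds (from the text)


def config_index(elapsed_s: float) -> int:
    """Return the 1-based index of the configuration active at `elapsed_s`.

    Assumes the configurations are applied in order, one per 30 s slot.
    """
    if not 0 <= elapsed_s < SESSION_S:
        raise ValueError("elapsed time is outside the 2-minute session")
    return int(elapsed_s // SLOT_S) + 1


if __name__ == "__main__":
    for t in (0, 45, 119.9):
        print(t, config_index(t))
```

In the released files the actual switch times are recorded explicitly, so this nominal schedule is only useful as a sanity check.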

As a ground truth, to collect vital signs from a reliable source not affected by motion artifacts, we rely on a Zephyr Bioharness 3.0 (https://www.zephyranywhere.com/), a portable, medical-grade (FDA approved30) ECG chest band. The participants wore the ECG band on their chests for the whole experiment.

Data collection protocol

After being briefed about the study, the participants put on the Zephyr Bioharness 3.0 ECG chest band and wore our in-ear data collection device on the head, placing the ear-tips in the left and right ear canals (Fig. 1). As in several prior works30,31, the Zephyr acts as the ground-truth device in our data collection. Starting from a resting pose (participants sitting still without any motion), we progressively asked the participants to repeatedly carry out individual movements. For the entire duration of each data collection session, one of the investigators stayed in the room with the participant (carefully observing social distancing and other COVID-19 precautions). We consider two main classes of motions: head/face movements and full-body movements. A summary of the data collection protocol (following the 2-minute-long still baseline) is reported in Fig. 3. Based on the inherent nature of the motions, head/face movements can be further categorized into one-shot and continuous movements.

1. One-shot motions: One-shot motions are not normally performed continuously; they often occur in normal social interactions as well as in the form of psychosomatic tics. The selection process for the one-shot motion artifacts was informed by both anatomy principles28 and previous work32,33,34. In building our dataset, we look at Action Units (AUs) that entail the movement of the head, the eyes (and the adjacent muscles), and the mouth. Specifically, we selected: (1) nod; (2) shake; and (3) tilt as head movements. The eye movements chosen were: (4) vertical eye movements; (5) horizontal eye movements; (6) brow raiser; (7) brow lowerer; (8) right eye wink; and (9) left eye wink. Finally, we investigated: (10) lip puller; (11) chin raiser; and (12) mouth stretch as mouth movements. We instructed the participants to repeat the one-shot movements roughly every 5 seconds.

2. Continuous motions: We also accounted for continuous head/face movements caused by common activities such as (13) chewing; and (14) speaking. Together with the one-shot movements, continuous movements are quite unique to ear-worn devices. In fact, when performing these, the complex mesh of facial muscles moves substantially and, therefore, these activities are likely to cause significant deformations of the tissues in and around the ear.

Apart from head/face motions, we also considered full-body activities such as (15) walking and (16) running, which give rise to well-known sources of noise35 in the PPG signal. The list of all the considered motion artifacts is reported in Table 4 and pictured in Fig. 4. Before each motion, the investigator demonstrated the gesture/activity to the participant. For all the conditions but the full-body movements (walking and running), we followed the wearable device validation guideline stipulated by the Consumer Technology Association36 and acquired PPG signals while the participants were seated in the upright position. During the resting condition, we instructed the participants to breathe normally without moving. The speaking condition consisted of a conversation in which the participant described a recent event to the investigator. The chewing condition was assessed by recording PPG data while the participant was chewing gum. For the full-body motion conditions, the participants were asked to walk and run at a set pace on a treadmill. We set the treadmill’s speed at 5 km/h for walking and 8 km/h for running. For each motion condition, we recorded 2 minutes of data, automatically changing the configuration of the PPG parameters every 30 seconds using the values described earlier. The length of the sessions was carefully chosen to be long enough to yield good-quality vital signs and yet not too tedious or strenuous for the participants.

Summary of the Facial Action Units (subset of the FACS) considered in the dataset65. The individuals depicted provided consent for the open publication of the images.

Collected data

We collected three types of data: (1) in-ear PPG signals from both the left and right ear, (2) in-ear IMU signals from both the left and right ear, and (3) ground-truth heart rate data from the Zephyr Bioharness 3.0 ECG chest band. Table 3 reports an overview of the characteristics of the devices used to collect the EarSet dataset. The table presents the type of data collected by each device as well as the sampling rate at which it was collected. EarSet contains data from 2 different devices (including the ECG chest band used as ground truth) placed at 2 distinct body locations (in-ear and chest). Accelerometer data is available at both body locations (ear and chest). More details on the collected raw data follow.

1. In-ear PPG signals: The in-ear PPG signals (19-bit analog-to-digital converted PPG values from the MAXM86161) were collected using our custom head-worn prototype at a sampling frequency of 100 Hz. Timestamps (with millisecond resolution) are available for each PPG sample from both the left and the right ear. For each motion artifact, the PPG signals were collected for 2 minutes. Every 30 seconds, the PPG configuration was changed in the order reported in Table 2. As explained earlier, PPG signals were collected at three different wavelengths: green (530 nm), red (660 nm), and infrared (880 nm).

2. In-ear IMU signals: The in-ear IMU signals (both 3-axis accelerometer and 3-axis gyroscope) were collected simultaneously with the PPG signals using our custom head-worn prototype at a sampling frequency of 100 Hz. Timestamps (with millisecond resolution) are available for each IMU record from both the left and the right ear. The IMU signals were also recorded continuously for each motion artifact session.

3. Zephyr ground-truth data: The Zephyr Bioharness 3.0 was worn by the participants on the chest and used to collect the ground-truth data. Specifically, the Zephyr provides heart rate (bpm), heart rate variability (ms), and ECG R-R interval (ms) at a sampling frequency of 1 Hz. In addition, the Zephyr provides raw 3-axis accelerometer data collected at a sampling frequency of 100 Hz. We also collect posture information (in degrees) at a sampling frequency of 1 Hz. The chest band also has a breathing sensor from which the raw breathing waveform (25 Hz) and the breathing/respiration rate (1 Hz) were collected.

PPG Features

Before delving into the detailed description of the dataset we collected, we summarize the signal processing techniques used with PPG signals. This lays the required signal processing foundation for understanding our dataset validation.

The most common biomarkers that can be derived from PPG are:

1. Heart rate: Peaks are detected from the AC component of the PPG signal to obtain the number of beats per minute. Typically, the raw PPG signal is band-pass filtered between [0.4 Hz, 4 Hz] to obtain the AC component corresponding to the heart rate.

2. Oxygen saturation (SpO2): Oxygenated hemoglobin absorbs less red light whereas deoxygenated hemoglobin absorbs less infrared light. Thus, the ratio R between the red and infrared light intensities measured by the PPG sensor can be used to estimate SpO2, where R is computed as follows:

   $$R=\frac{{R}_{red}}{{R}_{infrared}}=\frac{A{C}_{red}/D{C}_{red}}{A{C}_{infrared}/D{C}_{infrared}}$$ (1)

3. Heart rate variability (HRV): Heart rate variability is measured from the variation in the time difference between adjacent peaks in a PPG signal.

4. Respiration rate (RR): A synchrosqueezing transform (SST)37 is applied to the raw PPG signals to extract the respiration component (0.1–0.9 Hz). The number of peaks in the resulting respiration component of the PPG signals corresponds to the respiration rate (breaths per minute). Other techniques38 estimate the respiration rate with machine learning, using time domain and frequency domain features extracted from the PPG signal.

5. Blood pressure (BP): Blood pressure is typically computed by placing PPG sensors at two locations on the same artery (say, finger and wrist) and then measuring the time taken by the pulse wave to travel from one PPG location to the other (pulse transit time). BP is inversely related to the pulse transit time, which is obtained by calculating the peak time shifts between the two PPG sensors. In recent years, many machine learning and deep learning techniques39,40 have also been proposed to estimate blood pressure from the extracted PPG signal features.
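The first three biomarkers above can be sketched in a few lines once the pulse peaks have been located. The following is a minimal illustration, not the authors' pipeline: it assumes the peak times (in seconds) and the AC/DC values per wavelength are already available, and all function names are hypothetical. Mapping R from equation (1) to an SpO2 percentage needs a calibration curve that the text does not give, so it is left out.

```python
from statistics import mean


def inter_beat_intervals_ms(peak_times_s):
    """Time differences (ms) between adjacent PPG peaks."""
    return [(b - a) * 1000.0 for a, b in zip(peak_times_s, peak_times_s[1:])]


def heart_rate_bpm(peak_times_s):
    """Average heart rate derived from the mean inter-beat interval."""
    ibis = inter_beat_intervals_ms(peak_times_s)
    if not ibis:
        raise ValueError("at least two peaks are required")
    return 60000.0 / mean(ibis)


def ratio_of_ratios(ac_red, dc_red, ac_ir, dc_ir):
    """R from equation (1): (AC_red / DC_red) / (AC_infrared / DC_infrared)."""
    return (ac_red / dc_red) / (ac_ir / dc_ir)


if __name__ == "__main__":
    peaks = [0.0, 0.8, 1.6, 2.4]  # hypothetical peak times in seconds
    print(inter_beat_intervals_ms(peaks))
    print(heart_rate_bpm(peaks))
    print(ratio_of_ratios(2.0, 100.0, 4.0, 100.0))
```

HRV statistics are usually computed from the list of inter-beat intervals; which statistic to use is left open here.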

As seen from the above biomarkers, the time domain features of the PPG signal are essential to estimate heart rate, heart rate variability, and blood pressure. Some of the frequency domain features help in differentiating a normal sinus rhythm from an atrial fibrillation (AF) signal or an abnormal heart signal. In addition to the above-mentioned features, many techniques use features extracted from the first-order and second-order derivatives of the PPG signal to compute arterial stiffness41 and blood pressure40. The second-order derivative of a PPG signal provides useful information such as the location of the dicrotic notch, which precedes the diastolic peak and provides information regarding the blood flow dynamics (systolic and diastolic phases).

Table 5 shows the main feature categories required for several critical health sensing applications. In addition to the PPG signal features mentioned earlier, useful physiological features marked in Fig. 5 can also be derived from the PPG signal42. The following list describes in more detail these main features which are also the ones we use in our technical validation of how various head and facial expressions affect in-ear PPG signals:

1. Systolic phase: The amplitude of the systolic peak and the time at which the systolic peak is located in the PPG signal.

2. Diastolic phase: The amplitude of the diastolic peak and the time at which the diastolic peak is located in the PPG signal.

3. Ratio between systolic and diastolic phase: An indicator of abnormalities in blood pressure. It is also referred to as the augmentation index or reflection index.

4. Pulse width: The time between the beginning and end of a PPG pulse wave. It correlates with systemic vascular resistance.

5. Rise time: The time between the foot of the PPG pulse and the systolic peak.

6. Perfusion index (PI): The ratio of the pulsatile blood flow (AC component) to the non-pulsatile or static blood in peripheral tissue (DC component).

7. Dominant frequency: The dominant frequency of the PPG signal can give insights into the presence of artifacts at frequencies outside the heart rate frequency band [0.4 Hz, 4 Hz].

8. Spectral kurtosis: Also known as frequency domain kurtosis, it describes the distribution of the observed PPG signal frequencies around the mean and is a very useful indicator of the PPG signal quality.

9. Peak-to-peak magnitude variance: The variance of the difference in pulse wave amplitude between adjacent pulse waves.

10. Peak-time interval variance: The variance of the time interval between the peaks of adjacent PPG waves.
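Three of the features listed above reduce to simple arithmetic on per-pulse values. The sketch below is one possible reading of the definitions, under stated assumptions: pulse amplitudes and peak times are taken as already extracted, the population variance is used (the text does not say whether population or sample variance is intended), and the function names are hypothetical.

```python
from statistics import pvariance


def perfusion_index(ac_amplitude, dc_level):
    """PI as the ratio of the pulsatile (AC) to the static (DC) component."""
    return ac_amplitude / dc_level


def peak_to_peak_magnitude_variance(pulse_amplitudes):
    """Variance of the amplitude difference between adjacent pulse waves."""
    diffs = [b - a for a, b in zip(pulse_amplitudes, pulse_amplitudes[1:])]
    return pvariance(diffs)  # assumption: population variance


def peak_time_interval_variance(peak_times_s):
    """Variance of the time interval between adjacent pulse peaks."""
    intervals = [b - a for a, b in zip(peak_times_s, peak_times_s[1:])]
    return pvariance(intervals)  # assumption: population variance


if __name__ == "__main__":
    print(perfusion_index(5.0, 100.0))
    print(peak_to_peak_magnitude_variance([1.0, 2.0, 4.0]))
    print(peak_time_interval_variance([0.0, 1.0, 2.0, 3.0]))
```

A perfectly regular pulse train yields zero for both variances, which is why larger values hint at motion-corrupted segments.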

During the validation of our dataset, we show how the various motions and activities performed by the participants affect the features above. This demonstrates how head and facial motions could degrade the performance of health-related applications which rely on these features. We believe EarSet will help the research community in developing mitigation strategies for these motions and activities.

Data Records

The raw data can be found at Zenodo43. Data of each participant has been anonymized with the alphanumeric format: P#. We refer to this as a participant identifier. The dataset contains a folder for each participant and an additional file, Demographics.csv, containing the demographics (e.g., gender, age) and skin tone of each participant in an anonymous format. Within each participant folder, there are two other folders, namely, EARBUDS and ZEPHYR, which contain the raw data obtained from each device during data collection. Table 6 provides an overview and description of the main files inside a participant folder.

Earbuds data

The IMU and PPG data are split into different files for each activity considered. The IMU sensor used the same configuration for the entire recording, while the PPG cycled through the four configurations described in Table 2. Each transition to a new configuration is marked by a line in the format #<timestamp>, current:<curr>, tint:<tint>, where <timestamp> is the UNIX time with millisecond resolution, <curr> is the LED current in milliamperes, and <tint> is the integration time in microseconds (which also determines the pulse width). All data points after this line have been collected with the new sensor configuration. Notice that the first configuration does not have such a line at the beginning.
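A PPG file can be split into per-configuration blocks by scanning for these marker lines. The following is a minimal sketch: it assumes the marker follows exactly the format quoted above with an integer timestamp, treats every other non-empty line as an opaque data row (the column layout is not described here), and uses hypothetical names and example values throughout.

```python
import re

# Marker format quoted in the text: "#<timestamp>, current:<curr>, tint:<tint>"
MARKER = re.compile(
    r"^#\s*(\d+)\s*,\s*current:\s*([\d.]+)\s*,\s*tint:\s*([\d.]+)\s*$"
)


def split_by_configuration(lines):
    """Group data rows by the sensor configuration that produced them.

    Returns a list of (config, rows) tuples. `config` is None for the first
    block, which has no marker line, and otherwise a dict holding the UNIX
    timestamp (ms), LED current (mA), and integration time (microseconds).
    """
    segments = [(None, [])]
    for line in lines:
        line = line.strip()
        if not line:
            continue
        match = MARKER.match(line)
        if match:
            config = {
                "timestamp_ms": int(match.group(1)),
                "current_mA": float(match.group(2)),
                "tint_us": float(match.group(3)),
            }
            segments.append((config, []))
        else:
            segments[-1][1].append(line)
    return segments


if __name__ == "__main__":
    # Hypothetical file content, for illustration only.
    example = ["1000,10,20,30", "#2000, current:16, tint:29.4", "2010,11,21,31"]
    for config, rows in split_by_configuration(example):
        print(config, len(rows))
```

Keeping the rows as raw strings leaves the choice of parser (for example `csv` or pandas) to the reader.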

To use the data collected from earbuds, one should first convert the raw ACC data to milli-g by multiplying it by 0.061 and the raw GYRO data to milli-dps (degrees per second) by multiplying with 17.5. This is to convert the raw data coming from the sensor from an integer format to a more usable format (i.e., milli-g and milli-dps). The PPG data does not require any conversion.
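The conversion amounts to one multiplication per sample. A minimal sketch, using the two scale factors stated above; the function names and the additional conversion to g and dps are illustrative choices.

```python
ACC_MG_PER_COUNT = 0.061    # raw accelerometer count -> milli-g (from the text)
GYRO_MDPS_PER_COUNT = 17.5  # raw gyroscope count -> milli-dps (from the text)


def acc_raw_to_mg(raw):
    """Convert a raw accelerometer count to milli-g."""
    return raw * ACC_MG_PER_COUNT


def gyro_raw_to_mdps(raw):
    """Convert a raw gyroscope count to milli-degrees per second."""
    return raw * GYRO_MDPS_PER_COUNT


def acc_raw_to_g(raw):
    """Convert a raw accelerometer count to g."""
    return acc_raw_to_mg(raw) / 1000.0


def gyro_raw_to_dps(raw):
    """Convert a raw gyroscope count to degrees per second."""
    return gyro_raw_to_mdps(raw) / 1000.0


if __name__ == "__main__":
    print(acc_raw_to_mg(1000), gyro_raw_to_mdps(2))
```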

Zephyr data

The data from the Zephyr Bioharness is directly pre-processed by the device and provided at a 1 Hz granularity. Hence, data from this device can be used as is. Notably, in some instances, the first and last few data-points recorded by the Zephyr might present some artifacts due to the user wearing/removing the device.

Missing data

During the data collection, device malfunctions caused a minor loss of data. The PPG data relative to the mouth stretch activity for P0 and P27 is missing. Similarly, sensor configuration #4 is missing for P9 for the nod activity. In addition, the BRAmplitude data field recorded by the Zephyr is not present for users P17, P26, P27, P28, and P29. Finally, users P3, P4, P7, P8, and P10 have corrupted Zephyr data (notably, their IMU and PPG data from our prototype are still perfectly usable).

Technical Validation

In this section, we perform a preliminary analysis of the collected data to evaluate its technical validity. We independently processed the PPG signals from the 3 channels (green, red, infrared) recorded from the left and the right ears. The acquired PPG signals from the left and right ear were aligned on the time axis and stored in pandas DataFrames. Each DataFrame was then re-sampled at 100 Hz to ensure a consistent sampling rate. The start and the end of each DataFrame were trimmed to ensure that all DataFrames have the same length. Note that our preliminary exploration only focuses on the 4th set of LED configuration parameters (LED current 32 mA; pulse width 123.8 μs; integration time 117.3 μs), as described in our Methods.

Dataset outlook and template matching

First, we analysed EarSet to study how each facial motion artifact manifests differently in the collected in-ear PPG signals. Fig. 6 shows at a glance how two diverse facial movements, lip puller (a) and nod (b), have a very different impact on the PPG trace compared with a full-body movement like running (c), in which the signal is dominated by the running cadence rather than by the cardiac signal. Notably, we can observe substantial differences even between the two facial movements: while the impact of the lip puller appears very localized and aligned with the motion (as we can see from the variations along the gyroscope axes), the nod seems to have a more prolonged impact on the DC component of the PPG trace. By manually inspecting the data, we noticed that for a few [participant, motion] combinations, the PPG was not affected by artifacts. In particular, the vertical and horizontal movement of the eyes did not cause any artifact on the PPG signals. This is due to the limited involvement of the facial muscles, especially of those near the ears, during eye movements. Similarly, some participants could perform the left and right eye wink motions with only one eye or not at all. In other cases, the wink was subtle and hence did not result in any artifact in the corresponding PPG signal. For the rest of the analysis, we filtered out the [participant, motion] combinations for which the PPG was not affected by motion.

To deepen our investigation, and gain a better visual understanding of how the various motion artifacts affect the morphology of the PPG pulses, we relied on a template matching analysis42. In doing so, we crafted a template pulse by taking the average of all the pulses of each user when still. We then plotted the template pulse in red and used it as a reference against all the PPG pulses present in each motion session (plotted in gray). Figure 7 depicts the template matching analysis for shake (a), brow raiser (b), lip puller (c), and mouth stretch (d). The plots show how each of the considered movements affects the morphology of the PPG pulse differently, resulting in subtle, yet notable artifacts. Many applications rely on morphological features computed on the PPG signals42. Hence, such artifacts in the morphology of each pulse could lead to erroneous vitals estimation. We believe that our dataset represents a good resource for a more in-depth study and characterization of this issue for an emerging class of devices: earables equipped with health-related sensors.
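The template construction can be sketched as a point-wise average. This is a minimal illustration under two assumptions that the text does not spell out: the individual pulses have already been segmented and resampled to a common length, and a mean absolute deviation is used as a simple numeric stand-in for the visual comparison in Fig. 7. The function names are hypothetical.

```python
def build_template(still_pulses):
    """Point-wise average of equal-length pulses recorded while still."""
    if not still_pulses:
        raise ValueError("at least one pulse is required")
    length = len(still_pulses[0])
    if any(len(p) != length for p in still_pulses):
        raise ValueError("pulses must be resampled to a common length first")
    count = len(still_pulses)
    return [sum(p[i] for p in still_pulses) / count for i in range(length)]


def mean_abs_deviation(pulse, template):
    """Average absolute difference between one pulse and the template."""
    if len(pulse) != len(template):
        raise ValueError("pulse and template must have the same length")
    return sum(abs(x - t) for x, t in zip(pulse, template)) / len(template)


if __name__ == "__main__":
    template = build_template([[0.0, 1.0, 0.0], [0.0, 3.0, 0.0]])
    print(template)
    print(mean_abs_deviation([0.0, 5.0, 0.0], template))
```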

Handcrafted metrics extraction from EarSet

We proceeded with our exploration of the dataset by extracting handcrafted features commonly derived from PPG signals for the various health sensing applications listed in our Methods. For all the PPG signal metrics excluding the Perfusion Index, we applied a 4th-order Butterworth band-pass filter (low-cut = 0.4 Hz, high-cut = 4 Hz) for signal smoothing. To facilitate a fair comparison of the PPG signal metrics for each facial motion artifact available in EarSet, we normalized their values using a standard min-max normalization. We chose to normalize the metric values of each user’s motion artifacts independently. Specifically, normalizing every user independently allows us to retain the subject-dependent motion artifact characteristics as well as the unique blood vessel morphology of each user.
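The per-user normalization can be sketched as follows. This is a minimal illustration, assuming one metric value per (user, motion) pair stored in a nested dictionary; the data layout, the function name, and the choice of returning 0.0 when all of a user's values are equal are assumptions.

```python
def min_max_normalize_per_user(values_by_user):
    """Min-max normalize one metric independently for each user.

    `values_by_user` maps user id -> {motion name -> metric value}.
    """
    normalized = {}
    for user, by_motion in values_by_user.items():
        lowest = min(by_motion.values())
        highest = max(by_motion.values())
        span = highest - lowest
        normalized[user] = {
            # A constant metric carries no contrast; map it to 0.0 (assumption).
            motion: (value - lowest) / span if span else 0.0
            for motion, value in by_motion.items()
        }
    return normalized


if __name__ == "__main__":
    # Hypothetical metric values, for illustration only.
    raw = {"P0": {"still": 2.0, "nod": 4.0, "running": 10.0}}
    print(min_max_normalize_per_user(raw))
```

Because the minimum and maximum are taken per user, a value of 1.0 marks that user's most affected motion rather than a population-wide extreme.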

Figure 8 reports the empirical cumulative distribution function (ECDF) of how head/face and full-body movements impact the Peak-to-Peak Magnitude Variance (a), Peak-Time Interval Variance (b), Perfusion Index (c), and Spectral Kurtosis (d) of the in-ear PPG signal. Similar patterns can be observed for other metrics. For this analysis, we considered the normalized PPG signal metrics computed from both the left and the right ear for all the users. We can observe that the PPG signal metrics for the “still” situation remain consistent across the entire population. On the other hand, the head/face and full-body movements appear to have more widespread distributions as well as different patterns. This is especially true for full-body movements. Notably, the findings of the spectral kurtosis analysis (d) are also aligned with the literature42, showing higher values for clean PPG signals. This can be explained by the presence of sharper peaks in the Fourier spectrum of clean (still, in our case) PPG. These preliminary results suggest that different motion categories (i.e., head/face and full-body) create diverse artifacts in the PPG signal, and therefore it might be necessary to adopt dedicated approaches when applying signal filtering techniques. Our preliminary analysis of EarSet shows that our dataset is a good source to start exploring this avenue.
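An ECDF of a metric can be computed directly from the sorted values. A minimal sketch, with a hypothetical function name; plotting is left out.

```python
def ecdf(values):
    """Return (value, cumulative fraction) pairs of the empirical CDF."""
    ordered = sorted(values)
    count = len(ordered)
    return [(value, (rank + 1) / count) for rank, value in enumerate(ordered)]


if __name__ == "__main__":
    print(ecdf([0.3, 0.1, 0.2]))
```

A distribution concentrated in a narrow range, as reported for the still condition, shows up as a steep ECDF.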

Finally, we studied whether it is possible to spot differences between the individual motions using the PPG signals collected in EarSet. We began by looking at the Mean Absolute Error (MAE) between the PPG signal metrics extracted under the various motion artifacts and those of the “still” stationary PPG baseline. As we can see from Fig. 9, for the majority of the PPG signal metrics, there are statistically significant differences between the still baseline and most of the artifacts. As expected, more intense head/face movements, like tilt and mouth stretch, yield greater differences in the signal metrics computed against the still baseline. This is even more evident for full-body movements. Moreover, a comparison of data from the left (Fig. 9a) and right (Fig. 9b) ear hints at differences between the PPG signals collected from the two ears. Multi-site PPG signals from the ears have been largely understudied so far. We believe our dataset is the perfect starting point to further explore this area.

Heatmaps of how the various motion artifacts impact the handcrafted metrics extracted from the green PPG signal ((a) left ear; (b) right ear). The values reported in the heatmaps are the Mean Absolute Error (MAE) with respect to the still baseline. The heatmaps’ cells are annotated with a T whenever there is a statistically significant difference between the still baseline signal and the MA-corrupted one (p < 0.05).
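Each heatmap cell is an MAE between a metric under a given motion and the same metric in the still baseline. A minimal sketch of that computation is given below, assuming the values are paired per user (one value per user for the motion, one for the still baseline); the pairing scheme, function name, and numbers are assumptions for illustration. The statistical test behind the T annotations is not specified in this section and is therefore not reproduced here.

```python
def mean_absolute_error(motion_values, still_values):
    """MAE between paired metric values (e.g. one pair per user)."""
    if len(motion_values) != len(still_values):
        raise ValueError("inputs must be paired and of equal length")
    if not motion_values:
        raise ValueError("need at least one pair")
    total = sum(abs(m - s) for m, s in zip(motion_values, still_values))
    return total / len(motion_values)


# Hypothetical normalised metric values for three users.
still = [0.1, 0.2, 0.3]
mouth_stretch = [0.5, 0.7, 0.9]
mae = mean_absolute_error(mouth_stretch, still)
```

Repeating this for every (metric, motion) pair and arranging the results in a grid yields a heatmap with the same layout as the one described in the caption.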

Usage Notes

Data pre-processing

The data recorded from the Zephyr do not require additional processing, as they are already pre-processed (with the exception of ECGAmplitude and BRAmplitude, which can be easily pre-processed using the NeuroKit library).

However, the data collected from our earable prototype require pre-processing. First, the raw accelerometer data have to be converted to milli-g by multiplying by 0.061, and the raw gyroscope data have to be converted to milli-dps (dps: degrees per second) by multiplying by 17.5. This converts the raw IMU sensor data from an integer format to a more usable, standard format (i.e., milli-g and milli-dps). We then remove the direct current (DC) offset from the gyroscope data by applying a Butterworth band-pass filter (0.4–4 Hz cutoff). Second, the PPG signals can be pre-processed using the band-pass filtering options available in the HeartPy or NeuroKit libraries to extract HR, SpO2, etc.
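The IMU steps above could be implemented along the following lines with NumPy and SciPy. This is a sketch under stated assumptions: the scale factors and the 0.4–4 Hz band come from the text, while the sampling rate (`FS_HZ = 100.0`), the filter order for the gyroscope, the use of zero-phase filtering (`sosfiltfilt`), and the synthetic input are assumptions that should be replaced with the values appropriate to the actual recordings.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

ACC_MG_PER_LSB = 0.061     # from the text: raw accelerometer count -> milli-g
GYRO_MDPS_PER_LSB = 17.5   # from the text: raw gyroscope count -> milli-dps


def convert_imu(acc_raw, gyro_raw):
    """Scale raw integer IMU readings to milli-g and milli-dps."""
    acc_mg = np.asarray(acc_raw, dtype=float) * ACC_MG_PER_LSB
    gyro_mdps = np.asarray(gyro_raw, dtype=float) * GYRO_MDPS_PER_LSB
    return acc_mg, gyro_mdps


def bandpass(signal, fs, low=0.4, high=4.0, order=4):
    """Butterworth band-pass, applied forward and backward (zero phase).

    `order` is the value passed to scipy.signal.butter; the order used for
    the gyroscope is not stated in the text, so 4 is an assumption.
    """
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, signal, axis=0)


FS_HZ = 100.0  # ASSUMPTION: replace with the real IMU sampling rate

# Synthetic gyroscope trace: constant offset plus a 1 Hz oscillation.
t = np.arange(0, 20, 1 / FS_HZ)
gyro_raw = 50 + 200 * np.sin(2 * np.pi * 1.0 * t)

acc_mg, gyro_mdps = convert_imu([1000, -1000], gyro_raw)
gyro_filtered = bandpass(gyro_mdps, FS_HZ)
```

Second-order sections (`output="sos"`) are used because they are numerically more robust than transfer-function coefficients for narrow low-frequency bands. The same `bandpass` helper could be applied to the PPG channels, although HeartPy and NeuroKit provide their own filtering routines.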

EarSet dataset

The EarSet dataset is available on Zenodo43. Convenient libraries to pre-process and clean the physiological signals include HeartPy (https://python-heart-rate-analysis-toolkit.readthedocs.io/en/latest/) to extract heart rate data from PPG or ECG sensors, and NeuroKit (https://neurokit2.readthedocs.io/en/latest/index.html) and BrainFlow (https://github.com/brainflow-dev/brainflow) to analyze PPG and ECG signals.

We believe that the EarSet dataset will foster research of new solutions to problems such as:

- Motion Artifacts Filtering: The dataset enables the exploration of how subtle head and face motions affect in-ear IMU and PPG signals. First, this allows studying which facial movements cause significant degradation of the PPG signals and how they might affect the accuracy of vital signs estimation. Second, the dataset will motivate the design of sophisticated filtering techniques for in-ear PPG signals, targeted at eliminating head and facial motion artifacts.

- Sensor Location: EarSet offers a unique opportunity to study whether the availability of PPG sensors in both ears could improve the estimation of vital signs. Having access to independent streams of PPG signals from the left and right ears could highlight asymmetries in the way people perform head and facial movements. These findings could be exploited to design improved signal-filtering approaches.

- Sensor Configuration: Given the need for low power consumption in future earable devices, the dataset allows the exploration of how different PPG hardware configurations (including 3 wavelengths), each with specific power requirements, affect the acquired PPG signal quality. This has important implications for the design of future devices and processing pipelines.

- State-of-the-art Comparison: The dataset contains several physiological measurements from ECG signals measured using a Zephyr Bioharness 3.0 chest strap. This enables validation and benchmarking of vital signs estimation methods applied to in-ear data against state-of-the-art methods from commercial devices unaffected by head/facial motions.

While the EarSet dataset opens up novel opportunities for earable devices, our approach still has a few limitations and presents opportunities for further improvements. Our focus is to offer a dataset to investigate the impact of head/face motions, in addition to full-body activities, on in-ear PPG signal quality and vital signs estimation. Skin tone is an additional factor that could affect data quality35. Although EarSet offers diversity in skin tones, the acquired data does not follow a uniform distribution among the six categories of pigmentation29. Future work will consider expanding the dataset to include additional participants to uniformly cover all skin tones.

All our participants were healthy at the time of the data collection and had no heart-related conditions. Future data collection efforts will consider participants with underlying conditions that could affect the morphology of the PPG signal even without the presence of motion-related artifacts. Correctly distinguishing the two cases would significantly increase the trustworthiness of earable devices beyond commercial settings, with the potential to be applied in clinical settings. Additionally, manual assessment of the PPG signal quality by experts in the field would complement the dataset, enabling the development of automatic pipelines to estimate expert-grade clinical assessments.

Code availability

We provide the raw data files obtained during the data collection structured by a user identifier. We did not implement any specialized code to pre-process the data.

References

Castaneda, D., Esparza, A., Ghamari, M., Soltanpur, C. & Nazeran, H. A review on wearable photoplethysmography sensors and their potential future applications in health care. International journal of biosensors & bioelectronics 4, 195 (2018).

Imanaga, I., Hara, H., Koyanagi, S. & Tanaka, K. Correlation between wave components of the second derivative of plethysmogram and arterial distensibility. Japanese heart journal 39, 775–784 (1998).

Suganthi, L., Manivannan, M., Kunwar, B. K., Joseph, G. & Danda, D. Morphological analysis of peripheral arterial signals in takayasu’s arteritis. Journal of clinical monitoring and computing 29, 87–95 (2015).

Allen, J. Photoplethysmography and its application in clinical physiological measurement. Physiological measurement 28, R1 (2007).

Zhao, T. et al. Trueheart: Continuous authentication on wrist-worn wearables using ppg-based biometrics. In IEEE INFOCOM 2020-IEEE Conference on Computer Communications, 30–39 (IEEE, 2020).

Lee, B.-G., Jung, S.-J. & Chung, W.-Y. Real-time physiological and vision monitoring of vehicle driver for non-intrusive drowsiness detection. IET communications 5, 2461–2469 (2011).

Ferlini, A. et al. In-ear ppg for vital signs. IEEE Pervasive Computing 1–10, https://doi.org/10.1109/MPRV.2021.3121171 (2021).

Rupavatharam, S. & Gruteser, M. Towards in-ear inertial jaw clenching detection. In Proceedings of the 1st International Workshop on Earable Computing, 54–55 (2019).

Röddiger, T., Wolffram, D., Laubenstein, D., Budde, M. & Beigl, M. Towards respiration rate monitoring using an in-ear headphone inertial measurement unit. In Proceedings of the 1st International Workshop on Earable Computing, 48–53 (2019).

Truong, H., Montanari, A. & Kawsar, F. Non-invasive blood pressure monitoring with multi-modal in-ear sensing. In ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 6–10 (IEEE, 2022).

Prakash, J., Yang, Z., Wei, Y.-L. & Choudhury, R. R. Stear: Robust step counting from earables. In Proceedings of the 1st International Workshop on Earable Computing, 36–41 (2019).

Ma, D., Ferlini, A. & Mascolo, C. Oesense: Employing occlusion effect for in-ear human sensing. In Proceedings of the 19th Annual International Conference on Mobile Systems, Applications, and Services, MobiSys ‘21, 175–187, https://doi.org/10.1145/3458864.3467680 (Association for Computing Machinery, New York, NY, USA, 2021).

Kawsar, F., Min, C., Mathur, A. & Montanari, A. Earables for personal-scale behavior analytics. IEEE Pervasive Computing 17, 83–89 (2018).

Ferlini, A., Montanari, A., Mascolo, C. & Harle, R. Head motion tracking through in-ear wearables. In Proceedings of the 1st International Workshop on Earable Computing, 8–13 (2019).

Ferlini, A., Ma, D., Harle, R. & Mascolo, C. Eargate: gait-based user identification with in-ear microphones. In Proceedings of the 27th Annual International Conference on Mobile Computing and Networking, 337–349 (2021).

Ahuja, A., Ferlini, A. & Mascolo, C. PilotEar: Enabling In-Ear Inertial Navigation, 139–145 (Association for Computing Machinery, New York, NY, USA, 2021).

Ferlini, A., Ma, D., Qendro, L. & Mascolo, C. Mobile health with head-worn devices: Challenges and opportunities. IEEE Pervasive Computing (2022).

Choudhury, R. R. Earable computing: A new area to think about. In Proceedings of the 22nd International Workshop on Mobile Computing Systems and Applications, 147–153 (2021).

Nguyen, A. et al. A lightweight and inexpensive in-ear sensing system for automatic whole-night sleep stage monitoring. In Proceedings of the 14th ACM Conference on Embedded Network Sensor Systems CD-ROM, 230–244 (2016).

Hládek, L., Porr, B. & Brimijoin, W. O. Real-time estimation of horizontal gaze angle by saccade integration using in-ear electrooculography. Plos one 13, e0190420 (2018).

Bi, S. et al. Auracle: Detecting eating episodes with an ear-mounted sensor. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 2, 1–27 (2018).

Poh, M.-Z., Swenson, N. C. & Picard, R. W. Motion-tolerant magnetic earring sensor and wireless earpiece for wearable photoplethysmography. IEEE Transactions on Information Technology in Biomedicine 14, 786–794 (2010).

Passler, S., Müller, N. & Senner, V. In-ear pulse rate measurement: a valid alternative to heart rate derived from electrocardiography? Sensors 19, 3641 (2019).

Patterson, J. A., McIlwraith, D. C. & Yang, G.-Z. A flexible, low noise reflective ppg sensor platform for ear-worn heart rate monitoring. In 2009 sixth international workshop on wearable and implantable body sensor networks, 286–291 (IEEE, 2009).

Venema, B., Schiefer, J., Blazek, V., Blanik, N. & Leonhardt, S. Evaluating innovative in-ear pulse oximetry for unobtrusive cardiovascular and pulmonary monitoring during sleep. IEEE journal of translational engineering in health and medicine 1, 2700208–2700208 (2013).

Kalanadhabhatta, M., Min, C., Montanari, A. & Kawsar, F. Fatigueset: A multi-modal dataset for modeling mental fatigue and fatigability (2021).

Zhang, Q., Zeng, X., Hu, W. & Zhou, D. A machine learning-empowered system for long-term motion-tolerant wearable monitoring of blood pressure and heart rate with ear-ecg/ppg. IEEE Access 5, 10547–10561, https://doi.org/10.1109/ACCESS.2017.2707472 (2017).

Friesen, E. & Ekman, P. Facial action coding system: a technique for the measurement of facial movement. Palo Alto (1978).

Fitzpatrick, T. B. The validity and practicality of sun-reactive skin types i through vi. Archives of dermatology 124, 869–871 (1988).

Kim, J.-H., Roberge, R., Powell, J., Shafer, A. & Williams, W. J. Measurement accuracy of heart rate and respiratory rate during graded exercise and sustained exercise in the heat using the zephyr bioharness™. International journal of sports medicine 497–501 (2012).

Tailor, S. A., Chauhan, J. & Mascolo, C. A first step towards on-device monitoring of body sounds in the wild. In Adjunct Proceedings of the 2020 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2020 ACM International Symposium on Wearable Computers, 708–712 (2020).

Lee, S. et al. Automatic smile and frown recognition with kinetic earables. In Proceedings of the 10th Augmented Human International Conference 2019, AH2019, https://doi.org/10.1145/3311823.3311869 (Association for Computing Machinery, New York, NY, USA, 2019).

Verma, D., Bhalla, S., Sahnan, D., Shukla, J. & Parnami, A. Expressear: Sensing fine-grained facial expressions with earables. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 5, 1–28 (2021).

Choi, S. et al. Ppgface: Like what you are watching? earphones can “feel” your facial expressions. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 6, 1–32 (2022).

Bent, B., Goldstein, B. A., Kibbe, W. A. & Dunn, J. P. Investigating sources of inaccuracy in wearable optical heart rate sensors. NPJ digital medicine 3, 1–9 (2020).

Association, C. T. et al. Ansi/cta standard. physical activity monitoring for heart rate. Tech. Rep., ANSI/CTA-2065 (2018).

Dehkordi, P., Garde, A., Molavi, B., Ansermino, J. M. & Dumont, G. A. Extracting instantaneous respiratory rate from multiple photoplethysmogram respiratory-induced variations. Frontiers in Physiology 9, https://doi.org/10.3389/fphys.2018.00948 (2018).

Shuzan, M. N. I. et al. A novel non-invasive estimation of respiration rate from photoplethysmograph signal using machine learning model 2102.09483 (2021).

Tazarv, A. & Levorato, M. A deep learning approach to predict blood pressure from ppg signals 2108.00099 (2021).

Cao, Y., Chen, H., Li, F. & Wang, Y. Crisp-bp: Continuous wrist ppg-based blood pressure measurement. https://doi.org/10.1145/3447993.3483241 (2022).

Perpetuini, D. et al. Multi-site photoplethysmographic and electrocardiographic system for arterial stiffness and cardiovascular status assessment. Sensors 19, 5570 (2019).

Orphanidou, C. Signal quality assessment in physiological monitoring: state of the art and practical considerations. (2017).

Ferlini, A., Montanari, A., Balaji, A. N., Mascolo, C. & Kawsar, F. EarSet: A Multi-Modal In-Ear Dataset. Zenodo https://doi.org/10.5281/zenodo.8142332 (2023).

Biagetti, G. et al. Dataset from ppg wireless sensor for activity monitoring. Data in Brief 29, 105044, https://doi.org/10.1016/j.dib.2019.105044 (2020).

Reiss, A., Indlekofer, I., Schmidt, P. & Van Laerhoven, K. Deep ppg: Large-scale heart rate estimation with convolutional neural networks. Sensors 19, https://doi.org/10.3390/s19143079 (2019).

Jarchi, D. & Casson, A. J. Description of a database containing wrist ppg signals recorded during physical exercise with both accelerometer and gyroscope measures of motion. Data 2, https://doi.org/10.3390/data2010001 (2017).

Tan, C. W., Bergmeir, C., Petitjean, F. & Webb, G. I. Ieeeppg dataset. Zenodo https://doi.org/10.5281/zenodo.3902710 (2020).

Lee, H., Chung, H. & Lee, J. Motion artifact cancellation in wearable photoplethysmography using gyroscope. IEEE Sensors Journal 19, 1166–1175, https://doi.org/10.1109/JSEN.2018.2879970 (2019).

Schmidt, P., Reiss, A., Duerichen, R., Marberger, C. & Van Laerhoven, K. Introducing wesad, a multimodal dataset for wearable stress and affect detection. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, ICMI ‘18, 400–408, https://doi.org/10.1145/3242969.3242985 (Association for Computing Machinery, New York, NY, USA, 2018).

Pimentel, M. A. F. et al. Toward a robust estimation of respiratory rate from pulse oximeters. IEEE Transactions on Biomedical Engineering 64, 1914–1923, https://doi.org/10.1109/TBME.2016.2613124 (2017).

Rocha, L. G. et al. Real-time hr estimation from wrist ppg using binary lstms. In 2019 IEEE Biomedical Circuits and Systems Conference (BioCAS), 1–4 (IEEE, 2019).

Jarchi, D. et al. Estimation of hrv and spo2 from wrist-worn commercial sensors for clinical settings. In 2018 IEEE 15th International Conference on Wearable and Implantable Body Sensor Networks (BSN), 144–147 (IEEE, 2018).

Lu, S. et al. Can photoplethysmography variability serve as an alternative approach to obtain heart rate variability information? Journal of clinical monitoring and computing 22, 23–29 (2008).

Hoog Antink, C. et al. Accuracy of heart rate variability estimated with reflective wrist-ppg in elderly vascular patients. Scientific reports 11, 1–12 (2021).

Chang, H.-H. et al. A method for respiration rate detection in wrist ppg signal using holo-hilbert spectrum. IEEE Sensors Journal 18, 7560–7569 (2018).

Dubey, H., Constant, N. & Mankodiya, K. Respire: A spectral kurtosis-based method to extract respiration rate from wearable ppg signals. In 2017 IEEE/ACM International Conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE), 84–89 (IEEE, 2017).

Behar, J. et al. Sleepap: an automated obstructive sleep apnoea screening application for smartphones. IEEE journal of biomedical and health informatics 19, 325–331 (2014).

Korkalainen, H. et al. Deep learning enables sleep staging from photoplethysmogram for patients with suspected sleep apnea. Sleep 43, zsaa098 (2020).

Bonomi, A. G. et al. Atrial fibrillation detection using photo-plethysmography and acceleration data at the wrist. In 2016 computing in cardiology conference (cinc), 277–280 (IEEE, 2016).

Nemati, S. et al. Monitoring and detecting atrial fibrillation using wearable technology. In 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 3394–3397 (IEEE, 2016).

Miao, F., Wang, X., Yin, L. & Li, Y. A wearable sensor for arterial stiffness monitoring based on machine learning algorithms. IEEE Sensors Journal 19, 1426–1434 (2018).

Falter, M. et al. Accuracy of apple watch measurements for heart rate and energy expenditure in patients with cardiovascular disease: Cross-sectional study. JMIR mHealth and uHealth 7, e11889 (2019).

Balaji, A. N., Yuan, C., Wang, B., Peh, L.-S. & Shao, H. ph watch-leveraging pulse oximeters in existing wearables for reusable, real-time monitoring of ph in sweat. In Proceedings of the 17th Annual International Conference on Mobile Systems, Applications, and Services, 262–274 (2019).

Posada-Quintero, H. F. et al. Mild dehydration identification using machine learning to assess autonomic responses to cognitive stress. Nutrients 12, 42 (2020).

Facs - facial action coding system. https://imotions.com/blog/learning/research-fundamentals/facial-action-coding-system/ Last Accessed: 2023-03-08.

Author information

Contributions

All authors participated in the design of the study protocol. A.F., A.N.B. and A.M. conducted the experiments. F.K., A.M. and C.M. supervised the project. A.F. prepared a first draft of the manuscript. All authors edited, revised, and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Montanari, A., Ferlini, A., Balaji, A.N. et al. EarSet: A Multi-Modal Dataset for Studying the Impact of Head and Facial Movements on In-Ear PPG Signals. Sci Data 10, 850 (2023). https://doi.org/10.1038/s41597-023-02762-3

DOI: https://doi.org/10.1038/s41597-023-02762-3