Abstract

Different technologies can acquire data for gait analysis, such as optical systems and inertial measurement units (IMUs). Each technology has its drawbacks and advantages and fits best to particular applications. The presented multi-sensor human gait dataset comprises synchronized inertial and optical motion data from 25 participants free of lower-limb injuries, aged between 18 and 47 years. A smartphone and a custom microcontroller-based device with an IMU were attached to one of the participant’s legs to capture accelerometer and gyroscope data, and 42 reflective markers were taped over the whole body to record three-dimensional trajectories. The trajectories and inertial measurements were simultaneously recorded and synchronized. Participants were instructed to walk along a straight, level walkway at their normal pace. Ten trials per participant were recorded and pre-processed in each of two sessions, performed on different days. This dataset supports the comparison of gait parameters and properties of inertial and optical capture systems, and allows the study of gait characteristics specific to each system.

Measurement(s) | G Force • Spatial Orientation |

Technology Type(s) | Accelerometer • Optical Instrument |


Background & Summary

Gait analysis has been explored since the 17th century1. Advances in understanding human motion over the last centuries have allowed researchers to apply this analysis to many applications, such as clinical assessment, monitoring of sports and athletic performance, rehabilitation support, robotics research, and biometric recognition2. This type of analysis can use data acquired by different technologies, such as optical systems, inertial measurement units (IMUs), force platforms, force shoes, and computer vision techniques. Although some of these, such as optical systems and imaging techniques, are commonly used in most fields, each technology has its drawbacks and fits best to particular applications3,4,5,6,7.

One popular technology to acquire data for gait analysis is the optical motion capture system. It has minimal impact on the participant’s natural motion, as it does not require tethering any hardware to the individual8. This system also enables precise acquisition of physical movements for virtual modeling and accurate reconstruction of marker positions and participants’ geometry9. However, optical motion capture systems are usually expensive and require high-speed processing devices and a specific installation in a controlled space3.

Recently, IMUs have been considered an appropriate option for gait analysis because they mitigate these drawbacks of optical motion capture systems. They are typically more cost-effective, do not need a controlled environment, and can be used both indoors and outdoors. However, they have other limitations, such as greater susceptibility to drift caused by changes in motion direction and to low-frequency noise from small vibrations during capture. Also, the sensor attachment position significantly affects the estimation of gait parameters3,9,10,11.

Several important gait datasets comprising either optical motion capture data or inertial data have been made available in the prior literature12,13,14,15,16,17,18. However, to our knowledge, there are currently no datasets with data captured simultaneously from both systems, which would allow multi-modal gait analysis. This synchronized capture has proven helpful in specific applications, such as music gesture analysis19 and sports science20. In this work, we propose a multi-sensor gait dataset consisting of inertial and optical motion data, which aims to provide a basis for comparing and reasoning about human gait analysis using data from both systems.

The presented dataset comprises inertial and optical motion data from 25 participants free of lower-limb injuries, aged between 18 and 47 years. A smartphone and a custom microcontroller-based device with an IMU were attached to one of the participant’s legs to capture accelerometer and gyroscope data, and 42 reflective markers were taped over the whole body to record three-dimensional trajectories. The participants were instructed to walk along a straight, level walkway at their normal pace. The custom device communicates wirelessly with the computer to which the optical system was connected. This setup enabled recording and synchronizing the trajectories (acquired by the optical system) and the inertial measurements (acquired by the custom device and the smartphone). Ten trials per participant were recorded and pre-processed in each of two sessions, performed on different days. This amounts to 500 trials of three-dimensional trajectories, 500 trials of accelerometer and gyroscope readings from the custom device, and 500 trials of accelerations from the smartphone.

Beyond contributing a multi-sensor dataset that supports the comparison of gait parameters and properties of inertial and optical capture systems, the full-body marker set and the inertial sensors attached to the leg favor the study of gait characteristics specific to each system. Because each participant was captured on two different days, the dataset also allows the analysis of gait variations both between and within participants (i.e., inter- and intra-participant). This characteristic fosters investigations into the effectiveness of gait recognition and user profiling using inertial data.

Methods

Participants

Twenty-five participants (12 women, 12 men, and one with undeclared gender, aged between 18 and 45 years) took part in this study, which was conducted between December 2019 and February 2020. None of them reported injuries to either leg or medical conditions that would affect their gait or posture. The participants were either students of the School of Music at McGill University, members of CIRMMT, or authors of this work. McGill University’s Research Ethics Board Office approved this study (REB File # 198–1019), and all participants provided informed consent.

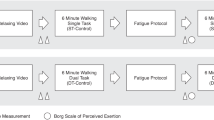

Experimental design

A proprietary optical system21 with 18 infrared Oqus 400 and Oqus 700 cameras, sampling at 100 Hz, was adopted to track the 3D trajectories of 42 reflective markers placed over the participants’ bodies. The marker set was based on the lower-limb IORGait model proposed by Leardini et al.22 and on simplified upper-limb and trunk Plug-in Gait models23. This marker set is depicted in Fig. 1, and each reflective marker and its anatomical landmark are described in Table 1. Because gait analysis usually focuses on lower-limb trajectories, the simplified upper-limb and trunk models are used only for skeleton reconstruction. The markers were placed by anatomical palpation of the landmarks reported in Table 1 at the beginning of each session and were not moved during the session’s trials. The proprietary motion capture software (Qualisys Track Manager - QTM)24 was employed to record and pre-process the 3D trajectories of the reflective markers. All trajectory measurements were acquired in millimeters.

A Nexus 5 Android-based smartphone with an InvenSense MPU-6515 six-axis IMU was used to capture accelerometer data. An Android application was designed and developed to read accelerations at a sampling rate of 100 Hz and store them in a comma-separated values (CSV) file. The accelerometer measurements provided by the Android platform are in units of m/s².

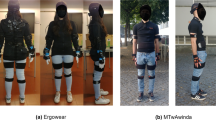

An InvenSense MPU-9250 IMU25 (a nine-axis device, of which only the accelerometer and gyroscope axes were used) mounted to an ESP8266 microcontroller (MCU) was used to capture accelerometer and gyroscope data. Firmware for the ESP8266 was developed using the Arduino Core libraries to read raw accelerometer and gyroscope data over the I2C protocol at a sampling rate of 100 Hz. The acceleration measurements were read in g units and converted into m/s² using g = 9.81 m/s². The gyroscope measurements are in units of °/s. The MCU board connected to the Wi-Fi network during its initialization. The inertial measurements were then read from the MPU-9250 and sent as Open Sound Control (OSC) packets over UDP at the defined rate. The smartphone and the MCU (screwed inside a custom-designed box) were attached to the leg with a band, as shown in Fig. 2. Five reflective markers were taped to the band to calibrate it as a rigid body in the motion capture system, and at least three of them were kept during the walking trials to track it as a six-degree-of-freedom object. These markers are also described in the first five rows of Table 1. Figure 3 shows the motion capture software’s visualization of the calibrated coordinate reference of the optical motion capture system, and of the smartphone and MCU attached to the leg being tracked as a rigid body. The MCU and smartphone were positioned so that their coordinate systems were aligned with the motion capture reference.
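The firmware streams each sample as an OSC packet over UDP. The Python sketch below models the OSC 1.0 message layout for a float-only message (null-terminated address padded to 4 bytes, a type-tag string such as `,ffffff`, then big-endian float32 arguments). The address `/imu` and the six-value argument order used in the example are hypothetical, since the paper does not specify the message format, and the actual firmware was written in Arduino C++.

```python
import struct


def _osc_pad(b: bytes) -> bytes:
    """Null-terminate an OSC string and pad it to a multiple of 4 bytes."""
    b += b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def encode_osc_floats(address: str, values) -> bytes:
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    return (_osc_pad(address.encode("ascii"))
            + _osc_pad(type_tags.encode("ascii"))
            + struct.pack(">" + "f" * len(values), *values))


def decode_osc_floats(packet: bytes):
    """Inverse of encode_osc_floats for messages with float-only arguments."""
    addr_end = packet.index(b"\x00")
    address = packet[:addr_end].decode("ascii")
    pos = (addr_end + 4) & ~3              # skip the terminator and padding
    tags_end = packet.index(b"\x00", pos)
    tags = packet[pos:tags_end].decode("ascii")
    if not tags.startswith(",") or set(tags[1:]) - {"f"}:
        raise ValueError("only float32 arguments are supported")
    pos = (tags_end + 4) & ~3
    n = len(tags) - 1
    values = struct.unpack(">" + "f" * n, packet[pos:pos + 4 * n])
    return address, values
```

A sender could transmit such a packet with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(packet, (host, port))`; UDP keeps per-sample latency low but does not guarantee delivery, which is one reason frame-level synchronization checks are useful.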

An integration API was designed and developed to receive data from the MCU over UDP and from the optical motion capture system through the real-time SDK provided by Qualisys26. This API acquired the MCU’s inertial measurements and the 3D trajectories independently but synchronously: it starts listening to the OSC packets from the MCU when the optical system begins data acquisition and stops listening when the optical system finishes. Because the integration API ensures that both acquisitions start and finish together, and both systems share the same sampling rate, their data streams are synchronized.
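The gating idea can be illustrated with a hypothetical sketch. It assumes that each IMU packet and each optical frame are time-stamped on the same host clock; the listener keeps only packets that arrive while the optical system is recording and labels each one with the most recent frame number. The function name and inputs are illustrative and are not the authors’ integration API.

```python
from bisect import bisect_right


def tag_imu_with_frames(imu_times, frame_times, frame_numbers):
    """Pair each IMU sample with the latest mocap frame received before it.

    imu_times, frame_times: ascending arrival times in seconds (same clock).
    frame_numbers: mocap frame number for each entry of frame_times.
    Returns (frame_number, imu_index) pairs; samples outside the recording
    window are dropped, mimicking a listener active only while recording.
    """
    if not frame_times:
        return []
    start, stop = frame_times[0], frame_times[-1]
    tagged = []
    for idx, t in enumerate(imu_times):
        if t < start or t > stop:
            continue  # packet arrived while the optical system was not recording
        k = bisect_right(frame_times, t) - 1  # latest frame at or before t
        tagged.append((frame_numbers[k], idx))
    return tagged
```

With both streams at 100 Hz, each frame would normally receive one IMU sample; repeated or skipped frame numbers in the output would point to jitter or lost UDP packets.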

Data acquisition

Two data acquisition sessions were performed for each participant, and each one lasted about one hour. The sessions were performed on different days. In each session, the following procedure was adopted:

1) Calibration of the systems: the optical motion capture system was calibrated with the wand calibration method, following the manufacturer’s instructions24. During calibration, the coordinate system was defined as follows: x was the direction in which the participant walked; y was orthogonal to x; and z was orthogonal to both, pointing toward the participant’s head. The MCU was also switched on at this point, and its communication with the computer was verified. Likewise, the Android application was started to read and store the accelerometer readings in a CSV file.

2) Participant preparation: the investigator showed the laboratory to the participant, explained the recording procedure, and asked the participant to sign the consent form. The participant then changed into tight-fitting clothes and wore a tight cap to cover their hair. The investigator attached the reflective markers presented in Fig. 1 to the participant’s skin or tight-fitting clothes using appropriate double-sided tape. Finally, the band holding the smartphone and the MCU box was attached to the participant’s leg.

3) Trials: the participant was asked to stand still at the beginning of the walkway for a few seconds to ensure that the systems were working and communicating properly. The participant was then asked to tap three times on the smartphone and MCU box with their index finger, on which an additional marker was placed. Next, the participant walked along a straight, level 5-m walkway at their normal pace. At the end of the walkway, the participant stopped and tapped on the smartphone and MCU box again. The acceleration peaks generated by these taps allow later verification of the data synchronization. Five trials were recorded with the band on the participant’s left leg, and five other trials with the band on their right leg.

4) Session ending: after the ten trials were recorded, the band and all the markers were removed from the participant’s body, and the Android application was stopped. The visualizations of the session recordings were shown to the participant, and the scheduling of the participant’s next session was confirmed.
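The taps performed in the Trials step produce short acceleration peaks. A minimal peak-picking sketch is shown below. It assumes a gravity-included (n, 3) accelerometer signal in m/s², and both the 5 m/s² deviation threshold and the 0.1 s minimum spacing between taps are hypothetical values. The same function could locate the taps in both the MCU and smartphone recordings to check their alignment.

```python
import numpy as np


def detect_taps(acc_xyz, fs=100.0, g=9.81, threshold=5.0, min_gap_s=0.1):
    """Return sample indices of tap-like peaks in a tri-axial accelerometer signal.

    acc_xyz: (n, 3) array in m/s^2 with gravity included.
    A sample is a peak when |‖a‖ - g| exceeds `threshold` and it is the
    largest deviation within +/- min_gap_s of itself.
    """
    acc = np.asarray(acc_xyz, dtype=float)
    dev = np.abs(np.linalg.norm(acc, axis=1) - g)  # deviation from rest
    half = max(1, int(round(min_gap_s * fs)))
    peaks = []
    for i in np.flatnonzero(dev > threshold):
        lo, hi = max(0, i - half), min(len(dev), i + half + 1)
        # keep local maxima only, and ignore duplicates of the same tap
        if dev[i] == dev[lo:hi].max() and (not peaks or i - peaks[-1] > half):
            peaks.append(int(i))
    return peaks
```

Comparing the first tap index found in each device’s recording gives a rough offset in samples; at 100 Hz, one sample corresponds to 10 ms.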

Data processing

The marker trajectories were labeled and gap-filled using the QTM software (QTM version 2018.1 (build 4220), RT Protocol versions 1.0–1.18 supported). For each missing trajectory segment, the investigator manually selected the best-fitting interpolation in the trajectory editor27. Polynomial interpolation was applied to gaps shorter than ten frames, and relational interpolation, which is based on the movement of surrounding markers, was adopted for more complex cases (e.g., occlusions caused by the alignment of both legs during the mid-stance and mid-swing phases). The trajectories were then smoothed, when necessary, by selecting a range of the trajectory in the QTM trajectory editor27 and applying the most appropriate filter. Local spikes were identified using an acceleration threshold of 10 m/s², and only these spikes were smoothed locally with the moving average filter of the QTM trajectory editor, so that the spikes were removed without affecting the movements. The beginning and end of trials containing substantial high-frequency noise were smoothed using a Butterworth low-pass filter with a 5 Hz cut-off frequency. This filter was applied only to the non-walking data, i.e., the first and last seconds of the trials, in which the participants were standing before starting and after finishing the walk, as detailed in the Subsection Data acquisition.

The filled and smoothed trajectories were then exported to the c3d (https://www.c3d.org) and Matlab file formats, imported into Matlab29, and processed using the MoCap Toolbox28. The exported trajectories were structured as MoCap data from the MoCap Toolbox and processed to extract the temporal section containing only walking data, i.e., removing the beginning and end of the trials. The second-order time derivatives (i.e., accelerations) of these walking data were estimated using the MoCap Toolbox and also structured as MoCap data. The raw inertial measurements recorded by the MCU and smartphone were exported into CSV files containing the full trials, i.e., the beginning, walking data, and end of the trials. Additionally, the corresponding walking sections were extracted from these inertial data and exported to CSV files. The walking sections obtained from the trajectories, the MCU’s inertial measurements, and the smartphone accelerations were verified to be temporally aligned.
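QTM’s trajectory editor performs spike smoothing interactively. As an offline approximation only, the sketch below flags frames whose second-difference acceleration exceeds the 10 m/s² threshold mentioned above and replaces each flagged frame with a centered moving average. The 5-frame window is an assumption, and QTM’s own filter implementation may differ.

```python
import numpy as np


def smooth_local_spikes(traj_mm, fs=100.0, acc_threshold=10.0, window=5):
    """Locally smooth spikes in an (n, 3) marker trajectory given in mm.

    Frames whose acceleration magnitude (central second difference, in m/s^2)
    exceeds `acc_threshold` are replaced by the mean of a centered window of
    the original samples. Returns (smoothed copy, indices of flagged frames).
    """
    traj = np.asarray(traj_mm, dtype=float)
    dt = 1.0 / fs
    acc = np.zeros_like(traj)
    # second difference approximates acceleration; /1000 converts mm to m
    acc[1:-1] = (traj[2:] - 2.0 * traj[1:-1] + traj[:-2]) / dt**2 / 1000.0
    spikes = np.flatnonzero(np.linalg.norm(acc, axis=1) > acc_threshold)
    out = traj.copy()
    half = window // 2
    for i in spikes:
        lo, hi = max(0, i - half), min(len(traj), i + half + 1)
        out[i] = traj[lo:hi].mean(axis=0)  # moving average on flagged frames only
    return out, spikes
```

Frames that are not flagged are left untouched, which matches the intent of smoothing spikes without affecting the movements; a single-frame spike also flags its two neighbors, because the second difference spreads over three frames.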

Data Records

All data are available from figshare30. They are organized in two folders: raw_data, containing the complete trials, and processed_data, storing the walking sections extracted from the raw data by removing the beginning and end of the trials. In both folders, each participant’s trial files are stored in a subfolder named after the participant identification, userID; the IDs range from 01 to 30 and are not necessarily consecutive. A total of 20 trials were recorded for each participant: 10 on day1 and 10 on day2.

Raw data

This folder stores the complete trials, including their beginning and end. These trials consist of 3D trajectories exported from the QTM software into c3d files and MCU inertial readings stored in CSV files. The c3d files are named capture_userID_dayD_TT_qtm.c3d, and the CSV files capture_userID_dayD_TT_imu.csv, in which:

- userID is the participant identification, as explained above;
- dayD refers to the first or second day of the participant’s trials, day1 or day2;
- TT is the trial number, i.e., 0001 to 0010, for each dayD.

The c3d labels are described in Table 1. All trajectories have dimension n×3 (where n is the number of recorded frames), and their values are real numbers. The columns of the CSV files are described in Table 2. The first column of each CSV file indicates the frame corresponding to each inertial measurement. In some cases, the frame count of the MCU’s inertial measurements does not start at 1, but these measurements remain synchronized with the frames of the marker trajectories.

Processed data

This folder stores the walking sections obtained from the complete trials by removing their beginning and end. The walking sections of the 3D trajectories were stored in Matlab files, whereas the corresponding sections of inertial readings from the MCU and smartphone were stored in CSV files. The Matlab files are named capture_userID_dayD_TT_qtm_walk.mat, and the CSV files capture_userID_dayD_TT_imu_walk.csv and capture_userID_dayD_TT_nexus_walk.csv, in which:

- userID is the participant identification, as explained above;
- dayD refers to the first or second day of the participant’s trials, day1 or day2;
- TT is the trial number, i.e., 0001 to 0010, for each dayD.
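The naming scheme can be parsed programmatically when iterating over the raw_data and processed_data folders. The regular expression below is a sketch that assumes userID contains no underscores. The `TrialFile` class and its field names are hypothetical conveniences, not part of the dataset.

```python
import re
from dataclasses import dataclass

# Assumes userID contains no underscores; adjust the pattern if it does.
_NAME = re.compile(
    r"^capture_(?P<user>[^_]+)_day(?P<day>[12])_(?P<trial>\d{4})"
    r"_(?P<source>qtm|imu|nexus)(?P<acc>_acc)?(?P<walk>_walk)?"
    r"\.(?P<ext>c3d|csv|mat)$"
)


@dataclass(frozen=True)
class TrialFile:
    user: str
    day: int
    trial: int
    source: str          # "qtm" (markers), "imu" (MCU) or "nexus" (smartphone)
    accelerations: bool  # True for *_qtm_acc_walk.mat files
    walking_only: bool   # True for processed_data (*_walk) files


def parse_trial_name(name: str) -> TrialFile:
    """Split a dataset file name into its userID, dayD and TT components."""
    m = _NAME.match(name)
    if m is None:
        raise ValueError(f"unexpected file name: {name}")
    return TrialFile(user=m["user"], day=int(m["day"]), trial=int(m["trial"]),
                     source=m["source"], accelerations=m["acc"] is not None,
                     walking_only=m["walk"] is not None)
```

For example, grouping parsed files by `(user, day, trial)` pairs each trajectory file with its MCU and smartphone counterparts.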

First, the c3d files containing the marker trajectories were read in Matlab, and their data were stored in a MoCap Toolbox structure array named MoCap data. The scheme and fields of this structure, together with the format and values of each field, are described in Table 4. Some fields are themselves structures, which are described in the columns to the right. Fields kept at the default values of the MoCap data structure are shown as empty or zero-valued in this table. Using the functions provided by the MoCap Toolbox, walking sections were extracted by analysing the x-axis trajectories of the foot markers (medial and lateral malleoli of both sides; details in Table 1) to find the frames at which the participant’s displacement begins and ends. These frame numbers are stored in the fields frame_init and frame_end of the structure other within the MoCap data.
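The exact criterion used with the MoCap Toolbox to locate frame_init and frame_end is not stated. One plausible sketch uses the mean x-coordinate of the malleolus markers and a hypothetical forward-speed threshold of 0.1 m/s, reporting 1-based frame numbers as in Matlab.

```python
import numpy as np


def find_walking_section(foot_x_mm, fs=100.0, speed_threshold=0.1):
    """Estimate (frame_init, frame_end) from foot-marker x trajectories.

    foot_x_mm: (n, k) x-coordinates in mm of the k malleolus markers.
    Returns 1-based frame numbers of the first and last frames at which the
    mean forward speed exceeds speed_threshold (m/s).
    """
    x = np.asarray(foot_x_mm, dtype=float).mean(axis=1) / 1000.0  # mean position in m
    speed = np.abs(np.gradient(x, 1.0 / fs))                      # forward speed in m/s
    moving = np.flatnonzero(speed > speed_threshold)
    if moving.size == 0:
        raise ValueError("no walking detected")
    # first and last supra-threshold frames, so brief slow phases
    # (e.g., double support) inside the walk do not split the section
    return int(moving[0]) + 1, int(moving[-1]) + 1
```

Averaging several foot markers reduces the effect of any single marker’s residual noise on the detected boundaries.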

Because the MCU’s inertial readings were synchronized to the trajectories through the corresponding frame numbers, the same beginning and ending frames were also used to section the MCU measurements. The CSV files storing the walking sections extracted from the MCU inertial data have the same columns and scheme as those presented in Table 2. The first value of the column named frame equals frame_init, and the last value equals frame_end from the MoCap data structure.
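Because each MCU row carries a frame number, which need not start at 1, the walking section can be selected with a frame mask rather than by row position. A minimal NumPy sketch with hypothetical function and argument names:

```python
import numpy as np


def slice_by_frames(frames, values, frame_init, frame_end):
    """Return the rows whose frame number lies in [frame_init, frame_end].

    frames: 1-D array from the CSV 'frame' column (may not start at 1).
    values: array with one row per entry of `frames`.
    """
    frames = np.asarray(frames)
    keep = (frames >= frame_init) & (frames <= frame_end)  # inclusive bounds
    return frames[keep], np.asarray(values)[keep]


def marker_rows(frames, first_marker_frame=1):
    """Convert frame numbers into 0-based row indices of a marker array,
    assuming the marker array's first row is frame `first_marker_frame`."""
    return np.asarray(frames) - first_marker_frame
```

Selecting by frame number keeps the MCU samples and marker rows paired even when the MCU frame count starts later than the optical recording.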

To facilitate comparisons between the MCU’s inertial readings and the marker data, second-order time derivatives (i.e., accelerations) were estimated from the markers’ trajectories in the walking sections. These data are also stored as MoCap data structures in Matlab files following the same nomenclature: capture_userID_dayD_TT_qtm_acc_walk.mat. The fields and values of this structure are described in Table 5. Its scheme, fields, and format are essentially the same as those of the trajectories’ MoCap data structure. However, the values in the field data are the estimated accelerations of the corresponding trajectories (in units of mm/s²), and the value 2 in the field timederOrder indicates that these data are second-order time derivatives.
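For readers working outside Matlab, marker accelerations can be approximated by differentiating the trajectories twice. The sketch below applies NumPy’s central-difference `np.gradient` twice. This is a swapped-in technique, not necessarily the differentiation scheme used by the MoCap Toolbox, and the result is in mm/s², so it must be divided by 1000 before comparison with the m/s² inertial data.

```python
import numpy as np


def second_derivative(traj_mm, fs=100.0):
    """Estimate accelerations (mm/s^2) of an (n, 3) trajectory sampled at fs Hz
    by applying a central-difference gradient twice along the time axis."""
    dt = 1.0 / fs
    vel = np.gradient(np.asarray(traj_mm, dtype=float), dt, axis=0)  # mm/s
    return np.gradient(vel, dt, axis=0)                               # mm/s^2
```

Note that an accelerometer measures specific force in its own frame, including gravity, so a meaningful comparison with marker-derived accelerations also requires accounting for gravity and the sensor orientation.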

Accelerations from the smartphone were not captured synchronously with the marker trajectories, and a single CSV file stored all the accelerometer measurements of the 10 trials performed by a participant on each day. This CSV file was split into walking sections by correlation analysis against the walking sections of the MCU accelerations, which were synchronized to the trajectories. Thus, the walking sections extracted from the smartphone accelerations do not contain frame numbers, although they are motion-aligned to the MCU’s accelerometer readings. The columns of the CSV files storing the walking sections of the smartphone accelerations are described in Table 3.
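The correlation-based splitting can be sketched as a lag search, under the assumptions that both devices sample at a uniform 100 Hz and that a single acceleration channel (or the magnitude) is compared. The authors’ exact correlation procedure is not described, so this is an illustration only.

```python
import numpy as np


def estimate_lag(reference, signal):
    """Return the integer lag (in samples) such that reference[n] ≈ signal[n + lag],
    found as the peak of the zero-mean full cross-correlation."""
    ref = np.asarray(reference, dtype=float) - np.mean(reference)
    sig = np.asarray(signal, dtype=float) - np.mean(signal)
    corr = np.correlate(sig, ref, mode="full")  # index i <-> lag i - (len(ref) - 1)
    return int(np.argmax(corr)) - (len(ref) - 1)
```

With an MCU walking section as `reference` and the full-day smartphone signal as `signal`, the matching smartphone section would be `signal[lag:lag + len(reference)]`. For hour-long recordings, an FFT-based correlation such as `scipy.signal.correlate(..., method="fft")` is faster; its lag convention can be checked with `scipy.signal.correlation_lags` before reusing the index arithmetic above.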

Technical Validation

The presented multi-sensor dataset consists of three sources: an optical motion capture system, an IMU mounted to an ESP8266 board, and an Android-based smartphone. For consistency, the same experienced investigator attached the markers, and the band holding the smartphone and the boxed MCU, to every participant’s body.

Optical motion capture system

The optical motion capture system was calibrated right before the beginning of each data acquisition session, as described in the Data acquisition subsection of the Methods. In all calibrations, the average residuals of each camera remained below 3 mm and were similar among the 18 cameras. Also, the standard deviation between the actual wand length and the length perceived by the cameras was at most 1 mm in the adopted calibrations. The gaps in the 3D trajectories of the markers were filled (i.e., all the trajectories are 100% tracked), and the average residuals of the 3D measured points fell below 4 mm.

MCU

The firmware was implemented using the Arduino Core libraries without any IMU library, to avoid library default filters being applied to the inertial measurements. In the gait literature, accelerometer scale ranges between ±2 g and ±8 g are commonly adopted31. The ±2 g range is enough to measure the maximum instantaneous accelerations of normal walking (below 1 g) and captures more gait detail, as it has the highest sensitivity (16384 LSB/g according to the MPU-9250 specification25). The ±250 °/s range was adopted for the gyroscope because it likewise offers the highest sensitivity scale factor (131 LSB/(°/s)). The firmware therefore reads the MPU-9250 accelerometer and gyroscope output registers and converts the raw values according to each sensor’s sensitivity.
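The conversion described above amounts to dividing each signed 16-bit register value by the sensitivity of the selected range. The Python sketch below mirrors that arithmetic for a 14-byte burst read starting at the MPU-9250’s ACCEL_XOUT_H register (0x3B), which returns accelerometer X/Y/Z, temperature, and gyroscope X/Y/Z as big-endian int16 values. The firmware itself is Arduino C++, so this is only an illustration of the arithmetic.

```python
import struct

G = 9.81                  # m/s^2, the value stated in the text
ACC_LSB_PER_G = 16384.0   # sensitivity at the ±2 g full-scale range
GYRO_LSB_PER_DPS = 131.0  # sensitivity at the ±250 °/s full-scale range


def convert_mpu9250_burst(raw14: bytes):
    """Convert a 14-byte burst read from ACCEL_XOUT_H (0x3B) onward into
    (acceleration in m/s^2, angular rate in °/s) tuples."""
    ax, ay, az, _temp, gx, gy, gz = struct.unpack(">7h", raw14)
    acc = tuple(v / ACC_LSB_PER_G * G for v in (ax, ay, az))
    gyro = tuple(v / GYRO_LSB_PER_DPS for v in (gx, gy, gz))
    return acc, gyro
```

At ±2 g, one LSB corresponds to 1/16384 g (about 0.0006 m/s²), which illustrates the finer resolution gained over wider ranges.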

Smartphone

The developed Android application uses the sensor routines provided by the Android platform32 and the Android Open Source Project (AOSP)33. The same ±2 g scale range used for the MPU-9250 accelerometer was set for the MPU-6515 through the Android Sensor API; this range also corresponds to a sensitivity of 16384 LSB/g for the MPU-6515. The Android API reads these accelerations from the IMU and provides them in units of m/s², including gravity.

Data synchronization

We quantified the latency of the communication stages of the adopted setup, which consists of the Qualisys optical motion capture system and a microcontroller-based device communicating over UDP, in the same capture laboratory. We conducted event-to-end tests on the critical components of this setup to determine its suitability for synchronization. This investigation indicated that the setup is suitable for synchronization, since both systems showed similar individual average latencies of around 25 ms34.

Usage Notes

The c3d files can be read by several free and open-source tools, such as EZC3D35 and Motion Kinematic & Kinetic Analyzer (MOKKA)36. The Matlab files store the MoCap data structure from the MoCap Toolbox28 and can be manipulated using the functions provided by this toolbox, which offers several routines to visualize the data, perform kinematic and kinetic analyses, and apply projections. The toolbox supports any marker set. The CSV files can be read using any text or spreadsheet editor, as well as with common functions in Matlab (https://www.mathworks.com/help/matlab/ref/readtimetable.html) or Python (https://pandas.pydata.org/pandas-docs/stable/reference/api/pandas.read_csv.html).
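As a Python starting point, the sketch below reads an inertial CSV with pandas and a c3d file with the ezc3d Python bindings. It relies only on the `frame` column named in the text; other column names should be taken from Tables 2 and 3, and ezc3d must be installed separately.

```python
import numpy as np
import pandas as pd


def load_inertial_csv(path_or_buffer):
    """Read an inertial CSV (path or file-like); index by 'frame' when present."""
    df = pd.read_csv(path_or_buffer)
    return df.set_index("frame") if "frame" in df.columns else df


def load_c3d_markers(path):
    """Return (labels, points), with points shaped (n_frames, n_markers, 3) in mm."""
    import ezc3d  # optional dependency, imported only when c3d files are read
    c3d = ezc3d.c3d(path)
    labels = c3d["parameters"]["POINT"]["LABELS"]["value"]
    points = c3d["data"]["points"][:3]  # drop the homogeneous 4th coordinate
    return labels, np.transpose(points, (2, 1, 0))
```

Indexing the MCU data by `frame` makes it straightforward to join with marker data whose row `i` corresponds to the frame number given in the CSV.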

Code availability

The developed Matlab and Python codes to process the data are freely available on the first author’s GitHub repository (https://github.com/geisekss/motion_capture_analysis). The MoCap Toolbox is freely available and extensively documented on the University of Jyväskylä website (https://www.jyu.fi/hytk/fi/laitokset/mutku/en/research/materials/mocaptoolbox).

References

Baker, R. The history of gait analysis before the advent of modern computers. Gait & posture 26, 331–342 (2007).

Muro-De-La-Herran, A., Garcia-Zapirain, B. & Mendez-Zorrilla, A. Gait analysis methods: An overview of wearable and non-wearable systems, highlighting clinical applications. Sensors 14, 3362–3394 (2014).

Gouwanda, D. & Senanayake, S. Emerging trends of body-mounted sensors in sports and human gait analysis. In 4th Kuala Lumpur International Conference on Biomedical Engineering, 715–718 (Springer, 2008).

Winter, D. Biomechanics and motor control of human movement, 4th edn (John Wiley & Sons, 2009).

Morris, R. & Lawson, S. A review and evaluation of available gait analysis technologies, and their potential for the measurement of impact transmission. Newcastle University (2010).

Ramirez-Bautista, J., Huerta-Ruelas, J., Chaparro-Cárdenas, S. & Hernández-Zavala, A. A review in detection and monitoring gait disorders using in-shoe plantar measurement systems. IEEE reviews in biomedical engineering 10, 299–309 (2017).

Yang, G., Tan, W., Jin, H., Zhao, T. & Tu, L. Review wearable sensing system for gait recognition. Cluster Computing 22, 3021–3029 (2019).

Kaufman, K. Future directions in gait analysis. Gait analysis in the science of rehabilitation 85–112 (1998).

Akhtaruzzaman, M., Shafie, A. & Khan, M. R. Gait analysis: Systems, technologies, and importance. Journal of Mechanics in Medicine and Biology 16, 1630003 (2016).

Mayagoitia, R., Nene, A. & Veltink, P. Accelerometer and rate gyroscope measurement of kinematics: an inexpensive alternative to optical motion analysis systems. Journal of biomechanics 35, 537–542 (2002).

Lee, J. B., Sutter, K. J., Askew, C. D. & Burkett, B. J. Identifying symmetry in running gait using a single inertial sensor. Journal of Science and Medicine in Sport 13, 559–563 (2010).

Moore, J., Hnat, S. & van den Bogert, A. An elaborate data set on human gait and the effect of mechanical perturbations. PeerJ 3, e918 (2015).

Fukuchi, C., Fukuchi, R. & Duarte, M. A public dataset of overground and treadmill walking kinematics and kinetics in healthy individuals. PeerJ 6, e4640 (2018).

Schreiber, C. & Moissenet, F. A multimodal dataset of human gait at different walking speeds established on injury-free adult participants. Scientific data 6, 1–7 (2019).

Ngo, T., Makihara, Y., Nagahara, H., Mukaigawa, Y. & Yagi, Y. The largest inertial sensor-based gait database and performance evaluation of gait-based personal authentication. Pattern Recognition 47, 228–237 (2014).

Gadaleta, M. & Rossi, M. Idnet: Smartphone-based gait recognition with convolutional neural networks. Pattern Recognition 74, 25–37 (2018).

Luo, Y. et al. A database of human gait performance on irregular and uneven surfaces collected by wearable sensors. Scientific data 7, 1–9 (2020).

Santos, G. et al. Manifold learning for user profiling and identity verification using motion sensors. Pattern Recognition 106, 107408 (2020).

Freire, S., Santos, G., Armondes, A., Meneses, E. & Wanderley, M. Evaluation of inertial sensor data by a comparison with optical motion capture data of guitar strumming gestures. Sensors 20, 5722 (2020).

Lapinski, M. et al. A wide-range, wireless wearable inertial motion sensing system for capturing fast athletic biomechanics in overhead pitching. Sensors 19, 3637 (2019).

Qualisys. Qualisys motion capture systems (accessed on June of 2022).

Leardini, A. et al. A new anatomically based protocol for gait analysis in children. Gait & posture 26, 560–571 (2007).

Davis, R. III, Ounpuu, S., Tyburski, D. & Gage, J. A gait analysis data collection and reduction technique. Human movement science 10, 575–587 (1991).

Qualisys. Qualisys track manager: Introduction. Gothenburg, Sweden (accessed on June of 2022).

InvenSense. MPU-9250 Product Specification Revision 1.1. California, USA (2016).

Qualisys. QTM Real-time Server Protocol Documentation Version 1.23. Gothenburg, Sweden (Accessed on June of 2022).

Qualisys. Qualisys track manager: Processing your data. Gothenburg, Sweden (accessed on June of 2022).

Burger, B. & Toiviainen, P. MoCap Toolbox – A Matlab toolbox for computational analysis of movement data. In Bresin, R. (ed.) Proceedings of the 10th Sound and Music Computing Conference, 172–178 (KTH Royal Institute of Technology, Stockholm, Sweden, 2013).

The Mathworks, Inc., Massachusetts, USA. MATLAB version 9.7.0.1296695 (R2019b) (2019).

Santos, G., Wanderley, M., Tavares, T. & Rocha, A. A multi-sensor and cross-session human gait dataset captured through an optical system and inertial measurement units, Figshare, https://doi.org/10.6084/m9.figshare.14727231 (2022).

Sprager, S. & Juric, M. Inertial sensor-based gait recognition: A review. Sensors 15, 22089–22127 (2015).

Developers, A. Motion sensors (Accessed on June of 2022).

Project, A. O. S. Sensors (Accessed on June of 2022).

Santos, G. et al. Comparative latency analysis of optical and inertial motion capture systems for gestural analysis and musical performance. In Proceedings of 21st New Interfaces for Musical Expression (NIME) 2021 conference, https://doi.org/10.21428/92fbeb44.51b1c3a1 (2021).

Michaud, B. & Begon, M. ezc3d: An easy c3d file i/o cross-platform solution for c++, python and matlab. Journal of Open Source Software 6, 2911 (2021).

Barré, A. Mokka: Motion kinematic and kinetic analyzer (Accessed on June of 2022).

Acknowledgements

We thank the CAPES DeepEyes project (# 88882.160443/2014-01) and FAPESP DéjàVu (# 2017/12646-3) for financial support. We also thank the CIRMMT staff and members for their support during this study, and all volunteers for their participation.

Author information


Contributions

G.S. designed the protocol, performed the data acquisition, and processed the data. M.W. provided the technical support and resources to establish the data collection. M.W. and G.S. discussed the experimental design. G.S., T.T. and A.R. contributed to the data analysis and the manuscript writing. All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Santos, G., Wanderley, M., Tavares, T. et al. A multi-sensor human gait dataset captured through an optical system and inertial measurement units. Sci Data 9, 545 (2022). https://doi.org/10.1038/s41597-022-01638-2


DOI: https://doi.org/10.1038/s41597-022-01638-2
