Abstract

Wearable technology is expanding for motion monitoring. However, open challenges still limit its widespread use, especially in low-cost systems. Most solutions are either expensive commercial products or lower-performance ad-hoc systems. Moreover, few datasets are available for the development of complete and general solutions. This work presents 2 datasets with low-cost and high-end Magnetic, Angular Rate, and Gravity (MARG) sensor data. They provide data for analyzing the complete inertial pose pipeline, from raw data, sensor-to-segment calibration, multi-sensor fusion, and skeleton kinematics, to the complete human pose. They contain data from 21 and 10 participants, respectively, performing 6 types of sequences with high variability, complex dynamics, and almost the complete range of motion. The data amount to 3.5 M samples, synchronized with a ground-truth inertial motion capture system. A method to evaluate data quality is also presented. This database may contribute to the development of novel algorithms for each processing step of the pipeline, with applications in inertial pose estimation, human movement forecasting, and motion assessment in industrial or rehabilitation settings. All data and code to process and analyze the complete pipeline are freely available.

Measurement(s) | Raw magnetic, angular rate, and acceleration signals from body motion • Full body kinematics |

Technology Type(s) | Inertial Motion Capture • Inertial Motion Capture (Xsens MVN Awinda) |

Factor Type(s) | Sex • Age • Body Mass • Body height |

Sample Characteristic - Organism | Homo sapiens |


Background

Inertial-based wearable technology is being quickly adopted for many applications requiring real-time estimation of body configuration (e.g. human motion analysis, ergonomic assessment, virtual interaction), owing to its relatively low cost and ease of use, while offering reasonable accuracy. Moreover, its independence from lighting and field of view leaves users unconstrained by predefined locations, enabling more natural movements and, unlike camera-based solutions, avoiding data privacy issues1,2.

Despite this widespread adoption, multiple challenges remain, particularly for applications requiring high accuracy (e.g. rehabilitation assessment) and for whole-body dynamics involving joints with multiple degrees of freedom2 in real-world settings, owing to: (i) complex dynamic movements over the whole range of joint motion; (ii) offset errors when converting from the sensor reference frame to the body segment frame; (iii) possible magnetic interference (when using magnetometer fusion) or yaw drift (when not using magnetometer fusion).

Most research has focused on just one of these aspects. Therefore, unified datasets/benchmarks with ample amounts of data, needed to develop and evaluate complete products, are missing1,2. Some datasets for inertial pose estimation analysis exist in the literature. However, they present limitations that prevent them from being directly applicable to real-world situations: (i) most datasets3,4,5 use the hardware/software of the commercial Xsens Inertial MoCap system (Xsens Technologies, B.V., The Netherlands6) for data acquisition. Therefore, the data has already been pre-processed to a higher quality level and is not representative of the lower-grade hardware/software used in most real-world applications1; (ii) these datasets are geared towards developing novel inertial fusion algorithms. However, they ignore the rest of the pipeline needed to create working pose estimation solutions from inertial data (e.g. sensor-to-segment calibration, magnetic rejection), which is necessary for end products and remains an open research problem1,2,7; (iii) most papers on inertial motion estimation have used a small number of sensors attached to individual limbs, mostly ignoring multi-sensor fusion. Hence, they present relatively simple whole-body dynamics, with participants focusing on lower- or upper-body movements and little full range of motion1,2,5; (iv) given the lack of large-scale full-body inertial pose datasets, Huang et al.3 relied on virtual IMU data obtained from large-scale MoCap databases8, differentiating the segments' linear and angular positions to obtain acceleration and gyroscope data, respectively, similar to an IMU. Nevertheless, this data does not contain real sensor characteristics (e.g. noise, bias), and no magnetometer data is available, which real systems need to prevent yaw drift during long-term use2.
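To make the virtual-IMU idea in point (iv) concrete, a minimal sketch follows. It assumes segment positions (N×3, metres) and sensor-to-world rotation matrices (N×3×3) sampled at a fixed rate, a Z-up world frame, and g = 9.81 m/s². The function names are hypothetical, and this is only an illustration of differentiating positions and orientations, not the procedure used by Huang et al.3.

```python
import numpy as np

GRAVITY = 9.81  # m/s^2, assumed magnitude; world frame assumed Z-up


def so3_log(R):
    """Rotation vector (axis * angle) of a rotation matrix.

    Valid for the small per-sample rotations of densely sampled data
    (the formula is ill-conditioned near 180 degrees).
    """
    cos_angle = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    angle = np.arccos(cos_angle)
    if angle < 1e-9:
        return np.zeros(3)
    skew = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return angle * skew / (2.0 * np.sin(angle))


def virtual_imu(positions, rotations, fs):
    """Synthesize accelerometer and gyroscope readings in the sensor frame.

    positions: (N, 3) sensor positions in the world frame [m]
    rotations: (N, 3, 3) sensor-to-world rotation matrices
    fs: sampling frequency [Hz]
    """
    positions = np.asarray(positions, dtype=float)
    dt = 1.0 / fs
    # Linear acceleration in the world frame by double numerical differentiation.
    acc_world = np.gradient(np.gradient(positions, dt, axis=0), dt, axis=0)
    # An accelerometer measures specific force (acceleration minus gravity);
    # with Z-up and gravity (0, 0, -g) this is acc_world + (0, 0, g).
    specific_force = acc_world + np.array([0.0, 0.0, GRAVITY])
    # Express it in the sensor frame: R^T v for every sample.
    acc_sensor = np.einsum("nji,nj->ni", rotations, specific_force)
    # Body-frame angular velocity from consecutive orientations:
    # R[n+1] = R[n] @ exp([w] * dt)  =>  w = log(R[n]^T R[n+1]) / dt.
    gyr_sensor = np.zeros((len(rotations), 3))
    for n in range(len(rotations) - 1):
        gyr_sensor[n] = so3_log(rotations[n].T @ rotations[n + 1]) / dt
    gyr_sensor[-1] = gyr_sensor[-2]  # repeat the last estimate to keep length N
    return acc_sensor, gyr_sensor
```

Unlike real MARG data, the output is noise-free and bias-free and contains no magnetometer channel, which is precisely the limitation noted above.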

To address some of these limitations, we present a novel dataset for inertial pose estimation. It differs from the existing literature by providing: (i) access to the full data pipeline, from raw data, sensor-to-segment calibration, multi-sensor fusion, and skeleton kinematics, to the complete human pose; (ii) high-variability, fast dynamic trials with almost complete range of motion; (iii) trials focusing on upper-body daily activities with natural movements; (iv) two datasets with 2 types of MARG sensors, one high-end (Xsens MTw) and the other low-cost (MPU9250), collected with a similar acquisition protocol to enable benchmarking; (v) a large amount of data (~3.5 M samples at 60 Hz) synchronized with a ground-truth (GT) inertial MoCap system.

This article is divided into 4 sections: (i) the methodology used to acquire and process the data; (ii) the database created with the data and its structure; (iii) brief validation results with baseline values; (iv) indications on where to access and use all the data and code used in this work.

Methods

This section describes the data collection process for the 2 datasets: the Ergowear dataset (D1) and the MTwAwinda dataset (D2). The acquisition of the first dataset (D1), concerning low-cost sensor data, focused on general upper-body indoor usage, as might be found in rehabilitation, office, or industrial settings. The Ergowear wearable9 provides data representative of low-cost systems and ad-hoc software, which can easily be built for many applications. Nevertheless, such systems make high accuracy challenging to achieve. This results not only from the low-cost hardware (relatively high noise and bias instability), but also from the lack of supporting technologies found in most higher-end sensors, namely Strapdown Integration10 (https://base.xsens.com/s/article/Understanding-Strapdown-Integration?language=en_US), self-calibrating sensors, magnetic rejection, and anti-slide straps, among others. For this reason, we conducted a second data acquisition (D2), following a similar protocol but using the high-end Xsens MTw Awinda sensors (Xsens Technologies, B.V., The Netherlands)6,10, which use these technologies to produce high-quality “raw” sensor data. These two datasets allow more comprehensive testing of new algorithms and benchmarking under different conditions, i.e., near-ideal hardware (Xsens) and easily accessible hardware.

Participants

Healthy participants from the University of Minho academic community were invited to participate in the 2 studies. In both cases, they were informed of the study's goal, details, protocol, and duration. To select and recruit the participants, the following inclusion criteria were defined: (i) being over 18 years old; (ii) presenting full motion control and no clinical history of motor injuries.

21 healthy participants (15 males and 6 females; body mass: 66.0 ± 7.8 kg; body height: 171 ± 8.6 cm; age: 25.0 ± 2.3 years, see Table 2) and 10 healthy participants (6 males and 4 females; body mass: 64.4 ± 8.5 kg; body height: 171 ± 8.1 cm; age: 24.2 ± 2.6 years, see Table 3) were recruited for the first and second data collections, respectively. All participants volunteered and provided written informed consent, according to the ethical conduct defined by the University of Minho Ethics Committee in Life and Health Sciences (CEICVS 006/2020), which follows the standards set by the Declaration of Helsinki and the Oviedo Convention. Participants' rights were preserved; as such, personal information that could identify them remains confidential and is not provided in this dataset.

Instrumentation and data collection

All data was collected in the School of Engineering of University of Minho.

For D1, the data collection was conducted inside a standard conference room, selected to present natural and representative conditions for indoor environments. The room contained tables, cabinets, and chairs, among other objects, and is expected to contain a non-homogeneous magnetic field.

The D2 data collection was conducted in an empty outdoor parking lot to minimize magnetic interference in the data. This second acquisition represents a best-case scenario, providing a representative estimate of what can be achieved under the right conditions. In both protocols, the participants were instructed to wear sneakers and tight-fitting clothes, such as tight jeans or leggings and shirts or strap tops.

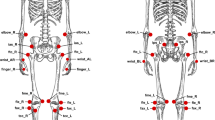

For D1, the participants were instrumented as follows: (i) donning of the full-body inertial motion tracking system MTw Awinda, used as GT. The seventeen MARG sensors were placed following the manufacturer's guidelines (https://base.xsens.com/s/article/Sensor-Placement-in-Xsens-Awinda-System?language=en_US) on the head, shoulders, chest, arms, forearms, wrists, waist, thighs, shanks, and feet, over bony landmarks, and secured with straps; (ii) donning of the Ergowear smart garment9. This system, still at the prototype stage, embeds 9 low-cost MARG sensors (MPU9250, Adafruit Industries) placed on the upper body: hands, forearms, arms, head, upper back (around the T4 vertebra), and lower back. After the Ergowear was donned, the responsible investigator placed duct tape over the sensors to reduce the probability of sensor sliding during the experimental protocol. Figure 1a illustrates a participant instrumented according to this experimental protocol.

For D2, each participant was instrumented in a similar way (Fig. 1b), as follows: (i) donning of the full-body inertial motion tracking system MTw Awinda, used as GT. The seventeen MARG sensors were placed following the manufacturer's guidelines (https://base.xsens.com/s/article/Sensor-Placement-in-Xsens-Awinda-System?language=en_US) on the head, shoulders, chest, arms, forearms, wrists, waist, thighs, shanks, and feet, over bony landmarks, and secured with straps; (ii) donning of a second full-body inertial motion tracking system MTw Awinda, placing each of its seventeen sensors directly on top of the corresponding sensor of the first system and securing it with straps. In this case, the Xsens MtManager acquisition software (Xsens Technologies, B.V., The Netherlands) was used to acquire the raw sensor data.

In both acquisitions, all sensors were always placed by the same researcher, ensuring repeatability in the instrumentation procedure and, thus, minimizing errors caused by misplacement.

In both acquisitions, GT data was synchronized and collected simultaneously to enable the evaluation of any algorithmic solution developed. It was provided by the commercial MoCap system (Xsens Awinda), which uses the MTw Awinda sensors with the Xsens MVN Analyze software (Xsens Technologies, B.V., The Netherlands, validated in11). This software integrates a biomechanical human model (MVN BIOMECH) to perform multi-sensor data fusion, along with better calibration routines, resulting in more accurate data.

Data collection included: (i) GT kinematic data, namely sensors' free acceleration, magnetic field, and orientation; segments' orientation, position, velocity, and acceleration; and joint angles. These were acquired at 60 Hz using the MVN Analyze software and are described in the user manual (https://www.xsens.com/hubfs/Downloads/usermanual/MVN_User_Manual.pdf); and (ii) raw MARG data from the sensors under evaluation in each dataset (the Ergowear in D1 and the second MTw Awinda set in D2). All data was synchronized in time using a hardware trigger.

Experimental protocol

After instrumenting the participants, their anthropometric data was collected according to the Xsens guidelines (https://base.xsens.com/s/article/Getting-Started-with-the-Awinda-in-MVN-Tutorial?language=en_US). These dimensions were entered into MVN Analyze to adjust the software's biomechanical model (MVN BIOMECH) to each participant. Then, the MVN BIOMECH model was calibrated in N-Pose. Calibration quality was ensured for each subject by repeating the calibration as many times as necessary until good quality was achieved.

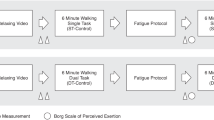

The participants were then instructed to perform 6 types of movement sequences (Fig. 2), each focusing on different movement dynamics. Each type was repeated 3 and 5 times for the D1 and D2 acquisitions, respectively. Repetitions share only the type of movement: participants moved freely, in most cases with very different dynamics, especially in the “random” trials. The 6 types of movement sequences are described below:

1. Calibration - sensor-to-segment calibration sequences focused on the upper body. Includes static poses, functional movements, and a dynamic calibration similar to the one used in the Xsens calibration (moving forward and then returning). The D1 dataset only contains the dynamic calibration.

2. Task - movements found in a simplified factory assembly task, where the user grabs small parts to assemble a product using both hands. The movements include reaching with both arms, with relatively small variability, with the user standing roughly in the same position.

3. Circuit - movements that mimic office dynamics while interacting with objects. The user sits in an office chair, works on the computer, moves some boxes around, walks, and then writes something on a board. These trials might contain additional magnetic interference from the objects interacted with.

4. Sequence - a sequence of movements that tries to cover the full range of motion of the human upper-body joints.

5. Random - the user performs free movements for 1.5 minutes. Examples of movements produced include dancing, imitating sports, randomly moving all limbs, pretending to do some task, and running.

6. Validation - isolated movements containing the maximum range of motion for each upper-body joint individually. Only collected in the Ergowear (D1) acquisition.

All trials started with the participants holding a static N-Pose for 5 seconds before executing the motions. For each dataset, trials were always initiated at the same location, as far as possible from sources of magnetic disturbance.

Additionally, in D2, a small set of additional trials was collected (1 repetition of each motion, with 5 participants) following the same protocol, both indoors and outdoors, to compare the results obtained in the presence of magnetic disturbances.

Dataset elaboration

Raw data

This protocol was used to collect 2.5 M and 1.0 M samples of data in the D1 and D2 datasets, respectively, at 60 Hz, across all movement sequences (Ergowear data is sampled at around 100 Hz, whereas the MTwAwinda and GT Xsens data are sampled at only 60 Hz). Each timestep contains raw accelerometer, gyroscope, and magnetometer data for each of the sensors, along with synchronized data exported from the Xsens MVN Analyze software, including sensor readings (magnetometer, gyroscope, accelerometer, and orientation) in the segment referential, center-of-mass location, joint kinematics (angle, velocity, acceleration), and segments' position and orientation (described in the user manual: https://www.xsens.com/hubfs/Downloads/usermanual/MVN_User_Manual.pdf).
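Because Ergowear packets arrive at roughly 100 Hz while the MTw Awinda and GT streams are at 60 Hz, the streams must share a common time base before they can be compared. A minimal sketch using per-channel linear interpolation over the recorded timestamps follows; the function name and the choice of linear interpolation are assumptions, not necessarily what the accompanying code does. Orientation quaternions would normally be interpolated with slerp instead.

```python
import numpy as np


def resample_to_rate(timestamps, data, target_fs=60.0):
    """Linearly interpolate irregularly sampled data onto a uniform grid.

    timestamps: (N,) strictly increasing sample times [s]
    data: (N,) or (N, C) samples, one column per channel
    Returns (new_timestamps, resampled_data) with shape (M,) and (M, C).
    """
    timestamps = np.asarray(timestamps, dtype=float)
    data = np.asarray(data, dtype=float)
    if data.ndim == 1:
        data = data[:, None]
    # Uniform grid starting at the first sample and covering the recording.
    n_out = int(np.floor((timestamps[-1] - timestamps[0]) * target_fs)) + 1
    new_t = timestamps[0] + np.arange(n_out) / target_fs
    # np.interp works on 1-D arrays, so interpolate each channel separately.
    resampled = np.column_stack(
        [np.interp(new_t, timestamps, data[:, c]) for c in range(data.shape[1])]
    )
    return new_t, resampled
```

Using the recorded timestamps, rather than assuming exactly 100 Hz, also absorbs the sampling-rate jitter of the prototype.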

Processed data

To validate the acquired data, multiple processing steps were performed to obtain the segments' orientations from the raw sensor data.

First, all sensors were calibrated to remove bias and scale factors on all axes, following standard methods from the MARG sensor literature12.
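The exact calibration procedure follows ref. 12 and is not reproduced here. As a simplified sketch of the idea, the gyroscope bias can be estimated as the mean of a static recording, and a per-axis accelerometer (or magnetometer) offset and scale can be estimated from the extreme readings of each axis when it points along and against the reference field. This min/max approach ignores cross-axis misalignment, and the function names are hypothetical.

```python
import numpy as np


def gyro_bias(static_gyr):
    """Gyroscope bias: mean angular rate while the sensor is at rest."""
    return np.mean(static_gyr, axis=0)


def offset_scale_from_extremes(samples, reference_magnitude=1.0):
    """Per-axis offset and scale from readings covering +/- each axis.

    samples: (N, 3) raw readings collected while each axis points both
             along and against the reference field (gravity or magnetic).
    reference_magnitude: field magnitude in the desired output units
                         (e.g. 1.0 to express the accelerometer in g).
    """
    max_v = samples.max(axis=0)
    min_v = samples.min(axis=0)
    offset = (max_v + min_v) / 2.0
    scale = (max_v - min_v) / (2.0 * reference_magnitude)
    return offset, scale


def apply_calibration(raw, offset, scale):
    """Remove offset and scale factor: calibrated = (raw - offset) / scale."""
    return (raw - offset) / scale
```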

Sensor-to-segment calibration was then performed, transforming the inertial data from the sensors' referential to the body segments' referential. Each segment's referential aligns with the world referential, in the NWU frame of reference, when the user holds the T-Pose. This is consistent with the frame of reference used by the GT Xsens Analyze software (https://www.xsens.com/hubfs/Downloads/usermanual/MVN_User_Manual.pdf), enabling direct comparison of the data. A simple static calibration7 method was used to obtain a reasonable transformation, using the static N-Pose data collected at the start of each trial (first 5 seconds). This method assumes a default sensor placement, which is then corrected in the pitch/roll axes using Earth's gravity vector as reference.
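A sketch of the gravity-based pitch/roll correction follows, under illustrative assumptions that are not the exact implementation of ref. 7 or of the accompanying code: `R_default` is the nominal sensor-to-segment rotation implied by the default placement, `acc_static` holds the accelerometer samples of the first 5 s of a trial (300 samples at 60 Hz), and `expected_up` is the direction of gravity's reaction in the segment frame during the N-Pose. The default +Z suits segments whose N-Pose orientation matches the T-Pose (e.g. trunk, head) but may not hold for the arms, whose expected direction would have to be passed explicitly. The correction is the smallest rotation aligning the measured and expected directions, so heading (yaw) is left untouched.

```python
import numpy as np


def skew(v):
    """Cross-product matrix [v]x such that skew(v) @ u == np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]])


def rotation_between(a, b):
    """Smallest rotation matrix mapping direction a onto direction b (Rodrigues)."""
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    v = np.cross(a, b)
    c = np.dot(a, b)
    s = np.linalg.norm(v)
    if s < 1e-12:
        if c > 0.0:
            return np.eye(3)  # already aligned
        # Opposite directions: rotate 180 degrees about any axis orthogonal to a.
        axis = np.cross(a, [1.0, 0.0, 0.0])
        if np.linalg.norm(axis) < 1e-6:
            axis = np.cross(a, [0.0, 1.0, 0.0])
        axis = axis / np.linalg.norm(axis)
        return 2.0 * np.outer(axis, axis) - np.eye(3)
    K = skew(v)
    return np.eye(3) + K + K @ K * ((1.0 - c) / s**2)


def static_sensor_to_segment(acc_static, R_default, expected_up=(0.0, 0.0, 1.0)):
    """Correct a default sensor-to-segment rotation in pitch/roll using gravity."""
    mean_acc = np.mean(acc_static, axis=0)
    # Measured "up" direction expressed in the segment frame via the default guess.
    measured_up = R_default @ (mean_acc / np.linalg.norm(mean_acc))
    R_corr = rotation_between(measured_up, np.asarray(expected_up, dtype=float))
    return R_corr @ R_default
```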

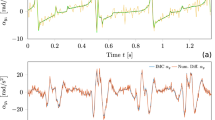

Finally, the sensor orientations (now expressed in the segment's referential) were calculated using a standard Madgwick13 complementary fusion filter.
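For reference, a compact sketch of one update step of the gyroscope-plus-accelerometer (IMU) variant of Madgwick's gradient-descent filter13 is shown below. The MARG variant, which uses the magnetometer, adds a second objective term for the Earth's magnetic field that is omitted here for brevity. Quaternions are [w, x, y, z] and rotate sensor-frame vectors into the Earth frame; gyroscope rates are in rad/s; the gain `beta` is an assumed value, not one reported for this dataset.

```python
import numpy as np


def quat_multiply(p, q):
    """Hamilton product of quaternions [w, x, y, z]."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return np.array([
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    ])


def madgwick_imu_update(q, gyr, acc, dt, beta=0.1):
    """One Madgwick IMU step; returns the updated unit quaternion."""
    # Rate of change predicted by the gyroscope: 0.5 * q * [0, omega].
    q_dot = 0.5 * quat_multiply(q, np.concatenate(([0.0], gyr)))
    acc_norm = np.linalg.norm(acc)
    if acc_norm > 0.0:
        ax, ay, az = acc / acc_norm
        w, x, y, z = q
        # Objective: gravity direction predicted in the sensor frame minus measurement.
        f = np.array([
            2.0 * (x * z - w * y) - ax,
            2.0 * (w * x + y * z) - ay,
            2.0 * (0.5 - x * x - y * y) - az,
        ])
        jacobian = np.array([
            [-2.0 * y, 2.0 * z, -2.0 * w, 2.0 * x],
            [2.0 * x, 2.0 * w, 2.0 * z, 2.0 * y],
            [0.0, -4.0 * x, -4.0 * y, 0.0],
        ])
        gradient = jacobian.T @ f
        grad_norm = np.linalg.norm(gradient)
        if grad_norm > 0.0:
            # Normalized gradient-descent correction, weighted by beta.
            q_dot = q_dot - beta * gradient / grad_norm
    q = q + q_dot * dt
    return q / np.linalg.norm(q)
```

Without the magnetometer term, yaw is driven by the gyroscope alone and will drift, which is why the MARG variant matters for long recordings.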

These orientations were then used, together with a kinematic skeleton of the subject, to obtain keypoint positions through forward kinematics (FK) for easy visualization. These data can also be compared to the GT data by resampling both to the same frequency and mapping the corresponding reference segments to match the predicted skeleton.
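Forward kinematics (FK) chains segment orientations and fixed segment offsets outward from a root joint. The sketch below assumes a simple tree given as a parent list (parents listed before children), a world-frame rotation matrix for the segment starting at each joint, and per-joint offset vectors expressed in the parent segment's frame. The skeleton layout and names are hypothetical and do not reproduce the MVN model.

```python
import numpy as np


def forward_kinematics(parents, offsets, rotations, root_position=(0.0, 0.0, 0.0)):
    """Compute world positions of skeleton keypoints.

    parents: parents[j] is the index of joint j's parent (-1 for the root);
             every parent must appear before its children.
    offsets: (J, 3) offset of joint j from its parent joint, expressed in the
             parent segment's frame (ignored for the root).
    rotations: (J, 3, 3) world orientation of the segment starting at each joint.
    """
    positions = np.zeros((len(parents), 3))
    for j, parent in enumerate(parents):
        if parent < 0:
            positions[j] = root_position
        else:
            # Child joint = parent joint + parent segment's rotated offset.
            positions[j] = positions[parent] + rotations[parent] @ offsets[j]
    return positions
```

For example, a shoulder-elbow-wrist chain with segment lengths of 0.30 m and 0.25 m hanging along -Z yields keypoints at z = 0, -0.30, and -0.55 m when all rotations are the identity.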

Calibration data

For the D2 dataset, offline sensor-to-segment calibration was performed for each subject using an optimization process14 that minimizes the mean squared error (MSE) between the sensor inertial data (accelerometer and gyroscope) and the respective GT trajectories, yielding relatively low orientation offsets. This was performed using multiple longer trials displaying slow dynamics with high variability (sequence and task trials), and resulted in lower error than the standard calibration trials. The transformation was assumed to be constant across trials for the same subject, since the sensors were firmly secured with straps with good grip.
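The optimization above relies on an evolution-strategy method14. As a simpler, swapped-in illustration of the same least-squares objective restricted to the gyroscope, the rotation R minimizing the MSE between R·ω_sensor and the GT angular velocity ω_GT (Wahba's problem) has a closed-form SVD (Kabsch) solution, sketched below. It assumes time-aligned, equally resampled (N, 3) arrays in consistent units; it is not the authors' implementation and ignores the accelerometer term, which also depends on lever arms and gravity.

```python
import numpy as np


def fit_rotation(source, target):
    """Rotation R minimizing sum_i ||target_i - R @ source_i||^2 (Kabsch).

    source, target: (N, 3) corresponding vectors, e.g. sensor-frame and
    GT segment-frame angular velocities at the same timesteps.
    """
    H = source.T @ target  # 3x3 sum of outer(source_i, target_i)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0, 1.0, d])
    return Vt.T @ D @ U.T


def rotation_mse(R, source, target):
    """Mean squared residual of the fitted rotation."""
    residual = target - source @ R.T
    return float(np.mean(np.sum(residual**2, axis=1)))
```

A closed-form fit is convenient for a noise-free sanity check, whereas a derivative-free optimizer, as used in the paper, can handle objectives that combine several signals and non-linear terms.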

Unfortunately, this approach did not work reliably for the Ergowear data, owing to unpredictable sensor displacement across trials. This resulted from the subject's movement and from the fact that the smart-garment prototype could not firmly secure the sensors to the participants' segments.

Data Records

All the collected data, i.e. raw and GT data, was organized in a database15, hosted on Zenodo, to enable reuse across the research community, and contains both Ergowear and MTwAwinda trials, i.e., D1 and D2 datasets, along with code to handle the data.

Each dataset is structured hierarchically in 5 levels (Fig. 3), following a similar organization that provides an intuitive and easy way to select the desired data: (i) level 0: Root, containing the participants' metadata, acquisition protocol, general dataset information, and a folder containing the trials; (ii) level 1: Subject, a folder for each of the participants and calibration files; (iii) level 2: Sequence, a folder for each type of sequence performed (calibration, task, circuit, sequence, random, and validation); (iv) level 3: Repetition, a folder for each repetition ID and possible annotations (e.g. identifying data corruption or longer trials); and (v) level 4: Data, the files containing the trial data and synchronized GT.
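The five-level hierarchy maps naturally onto nested directory iteration. A hedged sketch follows; the trials folder name (`trials`) and the assumption that every subject, sequence, and repetition is a sub-directory are hypothetical, so the actual names in the Zenodo archive15 should be checked before use.

```python
from pathlib import Path


def iter_trials(root, trials_dir="trials"):
    """Yield (subject, sequence, repetition, data_files) for every trial folder.

    root: level 0 (dataset root).
    trials_dir: hypothetical name of the folder holding level 1 (subjects),
                level 2 (sequences), and level 3 (repetitions).
    Loose files at the subject level (e.g. calibration files) are skipped.
    """
    base = Path(root) / trials_dir
    for subject in sorted(p for p in base.iterdir() if p.is_dir()):
        for sequence in sorted(p for p in subject.iterdir() if p.is_dir()):
            for repetition in sorted(p for p in sequence.iterdir() if p.is_dir()):
                # Level 4: the data files of this trial.
                files = sorted(p for p in repetition.iterdir() if p.is_file())
                yield subject.name, sequence.name, repetition.name, files
```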

D1 - Ergowear data

Each D1 trial folder contains two types of data files: the first acquired with the ad-hoc Ergowear software, and the second, the Xsens data, exported using the proprietary Xsens MVN Analyze software.

The Ergowear files are stored as plain-text (.txt) files with multiple columns: the packet index, MARG (accelerometer, gyroscope, and magnetometer) data over the 3 axes for each of the 9 IMUs, and a relative timestamp of the packets. Each file contains only around 3000 packets, so longer recordings are divided into multiple files, named in integer order (i.e. 1.txt, 2.txt, 3.txt, …). The data is sampled at a nominal 100 Hz.
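Because the Ergowear files are named 1.txt, 2.txt, …, a plain lexical sort would place 10.txt before 2.txt, so the files should be ordered by their integer stem before concatenation. The sketch below assumes whitespace-separated numeric columns in the order packet index, 9 IMUs × 9 MARG channels (assumed to be accelerometer, gyroscope, then magnetometer xyz), and timestamp; the separator, channel order, and function name are assumptions to verify against the dataset documentation.

```python
from pathlib import Path

import numpy as np

N_IMUS = 9
CHANNELS_PER_IMU = 9  # assumed order: acc xyz, gyr xyz, mag xyz


def load_ergowear_trial(trial_dir):
    """Concatenate the numbered .txt files of one Ergowear trial.

    Returns (packet_index, marg, timestamps), with marg of shape
    (N, N_IMUS, CHANNELS_PER_IMU).
    """
    files = [p for p in Path(trial_dir).glob("*.txt") if p.stem.isdigit()]
    files.sort(key=lambda p: int(p.stem))  # numeric, not lexical, order
    data = np.vstack([np.loadtxt(p, ndmin=2) for p in files])
    packet_index = data[:, 0].astype(int)
    marg = data[:, 1:1 + N_IMUS * CHANNELS_PER_IMU].reshape(-1, N_IMUS, CHANNELS_PER_IMU)
    timestamps = data[:, -1]
    return packet_index, marg, timestamps
```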

The Xsens data is contained in an Excel file (.xlsx) exported from the Xsens Analyze software and described in the user manual (https://www.xsens.com/hubfs/Downloads/usermanual/MVN_User_Manual.pdf). It contains multiple sheets, each with a different data modality, including not only the inertial sensor information but also segments' orientation, position, velocity, and acceleration, and joint angles. This data is sampled at 60 Hz.

D2 - MTwAwinda data

Each D2 trial folder also contains the Xsens Analyze files described above, plus data from the second set of Xsens MTw Awinda sensors, acquired with the Xsens MtManager software. To avoid ambiguity, this paper uses “MTwAwinda data” to refer to the raw data acquired with the Xsens MtManager software, and “Xsens Analyze data” to refer to the GT data.

The MTwAwinda files are stored as .csv files, one for each of the 17 sensors, as exported by the MtManager software, and each is named with the respective sensor ID. Each file contains columns with the following data: the packet index, MARG (accelerometer, gyroscope, and magnetometer) data over the 3 axes, and the sensor orientation quaternion (in the sensor referential).
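A hedged pandas sketch for loading one D2 trial follows: one DataFrame per sensor, keyed by sensor ID (the file stem), then trimmed to the packets present in every file so that rows line up across sensors. The packet-index column name (`PacketCounter`) and the absence of metadata lines above the header are assumptions about the MtManager export, exposed as parameters so they can be adjusted.

```python
from pathlib import Path

import pandas as pd


def load_mtw_trial(trial_dir, header_lines_to_skip=0):
    """Load one DataFrame per MTw sensor, keyed by sensor ID (file stem).

    header_lines_to_skip: metadata lines before the column header
    (0 is an assumption; adjust to the exported files).
    """
    frames = {}
    for path in sorted(Path(trial_dir).glob("*.csv")):
        frames[path.stem] = pd.read_csv(path, skiprows=header_lines_to_skip)
    return frames


def common_packets(frames, packet_column="PacketCounter"):
    """Keep only packets present in every sensor file (hypothetical column name)."""
    common = None
    for df in frames.values():
        ids = set(df[packet_column])
        common = ids if common is None else common & ids
    keep = sorted(common) if common else []
    return {
        sensor_id: df[df[packet_column].isin(keep)].reset_index(drop=True)
        for sensor_id, df in frames.items()
    }
```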

Technical Validation

The participants were instructed to follow the proposed protocol, and incorrect trials (e.g. performing the sequence trial incorrectly) were repeated. At the same time, they were instructed to perform the motions as freely and naturally as possible to avoid biasing the results. The responsible researcher supervised and guided the experiments.

Data from both datasets was visually and qualitatively inspected, both online during the acquisition and offline, in order to ensure its validity. Trials containing issues (e.g. data desynchronization, sensors dropping, etc…) were either repeated or discarded.

Baseline results

Following the method described in the “Processed data” subsection, the resulting skeletons can be visualized in 3D in Fig. 4, where the acquired data displays motion similar to the GT.

The average error obtained with this simple method is presented in Table 1 for each type of sequence and each dataset. Overall average errors of 27.46° and 7.83° were obtained for the Ergowear and MTwAwinda datasets, respectively.
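The exact error metric behind Table 1 is not restated in this section. One common choice for comparing estimated and GT segment orientations is the geodesic angle between unit quaternions, θ = 2·arccos(|⟨q_est, q_gt⟩|), averaged over timesteps and segments. The sketch below computes it in degrees and is offered as an assumption about the metric, not a reproduction of it.

```python
import numpy as np


def quaternion_angle_error_deg(q_est, q_gt):
    """Geodesic angle (degrees) between corresponding quaternions.

    q_est, q_gt: (..., 4) arrays. Taking the absolute value of the dot
    product makes q and -q (the same rotation) give zero error.
    """
    q_est = q_est / np.linalg.norm(q_est, axis=-1, keepdims=True)
    q_gt = q_gt / np.linalg.norm(q_gt, axis=-1, keepdims=True)
    dot = np.abs(np.sum(q_est * q_gt, axis=-1))
    return np.degrees(2.0 * np.arccos(np.clip(dot, 0.0, 1.0)))


def mean_angle_error_deg(q_est, q_gt):
    """Average orientation error over all timesteps and segments."""
    return float(np.mean(quaternion_angle_error_deg(q_est, q_gt)))
```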

Data limitations

Data inspection reveals high correlation between the Xsens GT and the collected data (see Baseline results). However, some issues were noticed that will inevitably lead to angle estimation errors. These were more pronounced in D1 (Ergowear acquisition) and minimized as much as possible in D2 (MTwAwinda acquisition): (i) trials contain lost data packets or occasional corrupted samples, ranging from 0% to as high as 9.5% in a few trials; (ii) magnetic disturbances are present throughout the trials, both from the surrounding environment (e.g. cabinets, table legs, building structure and wiring in the case of the indoor acquisition) and from objects interacted with (e.g. chair, laptop, stool, in both acquisitions); (iii) both datasets contain entire trials that were corrupted (and thus discarded), amounting to 35/1197 in D1 and 43/340 in D2; (iv) movement and soft-tissue artifacts are present which, although reduced as much as possible (especially in D2), cannot be completely removed; (v) the commercial MoCap system used as GT, although validated, still depends on good calibration and is affected by magnetic interference; it will thus contain higher errors than traditional optical MoCap systems16, adding to the computed errors.
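The lost-packet fraction mentioned in (i) can be estimated from the packet index column. The minimal sketch below assumes the index increments by one per packet and does not wrap around within a trial; a wrapping counter (e.g. a 16-bit one) would need to be unwrapped first. The function name is hypothetical.

```python
import numpy as np


def lost_packet_fraction(packet_index):
    """Fraction of expected packets missing from a non-wrapping packet index."""
    # np.unique sorts the indices and drops duplicated packets.
    received = np.unique(np.asarray(packet_index, dtype=np.int64))
    expected = received[-1] - received[0] + 1
    return 1.0 - len(received) / expected
```

For instance, indices [0, 1, 2, 4, 5, 9] span 10 expected packets of which 6 were received, giving a lost fraction of 0.4.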

Additionally, given the prototype state of the system, D1 (Ergowear acquisition) presents further issues: (i) inconsistent sampling frequency, which may oscillate around the expected 100 Hz (~95–105 Hz); (ii) relatively high gyroscope bias instability, caused by the use of low-cost MARG sensors (MPU9250) and the absence of Strapdown Integration, leading to lower-quality gyroscope estimates; (iii) sensor displacement artifacts, caused by the Ergowear sensors being placed over the jacket, which, although tight, might slide, especially near maximal extension, leading to variable sensor-to-segment offsets across timesteps and trials; (iv) the absence of shoulder sensors, which might be necessary for a better assessment of some upper-body movements.

Code availability

This database is accompanied by a folder with all the scripts used to process and handle the data described. It is openly hosted on Zenodo15.

Additionally, an extended code repository is available on GitHub (https://github.com/ManuelPalermo/HumanInertialPose.git) with updated code that not only processes the data described, but also calculates kinematics and visualizes and evaluates the resulting motions; it also offers extended support for general inertial pose estimation pipelines. All scripts are written in Python and are open source, released under the permissive MIT license for unrestricted usage.

We hope this dataset and associated code can further contribute to the development and evaluation of classic or data-driven inertial human pose estimation solutions, with applications, for example, in human movement understanding and forecasting, ergonomic assessment and gait/posture analysis.

References

Camomilla, V., Bergamini, E., Fantozzi, S. & Vannozzi, G. Trends supporting the in-field use of wearable inertial sensors for sport performance evaluation: A systematic review. Sensors (2018).

Lopez-Nava, I. H. & Munoz-Melendez, A. Wearable inertial sensors for human motion analysis: A review. IEEE Sensors Journal (2016).

Huang, Y. et al. Deep inertial poser: Learning to reconstruct human pose from sparse inertial measurements in real time. ACM Transactions on Graphics (Proc. SIGGRAPH Asia) (2018).

Trumble, M., Gilbert, A., Malleson, C., Hilton, A. & Collomosse, J. Total capture: 3d human pose estimation fusing video and inertial sensors. In 2017 British Machine Vision Conference (BMVC) (2017).

Luo, Y. et al. A database of human gait performance on irregular and uneven surfaces collected by wearable sensors. Scientific Data https://doi.org/10.1038/s41597-020-0563-y (2020).

Roetenberg, D., Luinge, H. & Slycke, P. Xsens mvn: Full 6dof human motion tracking using miniature inertial sensors. Xsens Motion Technologies BV, Tech. Rep (2009).

Choe, N., Zhao, H., Qiu, S. & So, Y. A sensor-to-segment calibration method for motion capture system based on low cost mimu. Measurement https://doi.org/10.1016/j.measurement.2018.07.078 (2019).

Mahmood, N., Ghorbani, N., Troje, N. F., Pons-Moll, G. & Black, M. J. AMASS: Archive of motion capture as surface shapes. In International Conference on Computer Vision (2019).

Resende, A. et al. Ergowear: an ambulatory, non-intrusive, and interoperable system towards a human-aware human-robot collaborative framework. In 2021 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), https://doi.org/10.1109/ICARSC52212.2021.9429796 (2021).

Paulich, M., Schepers, M., Rudigkeit, N. & Bellusci, G. Xsens mtw awinda: Miniature wireless inertial-magnetic motion tracker for highly accurate 3d kinematic applications https://doi.org/10.13140/RG.2.2.23576.49929 (2018).

Al-Amri, M. et al. Inertial measurement units for clinical movement analysis: reliability and concurrent validity. Sensors (2018).

Ribeiro, N. IMUs: validation, gait analysis and system’s implementation. Master’s thesis, University of Minho (2017).

Madgwick, S. O. H., Harrison, A. J. L. & Vaidyanathan, R. Estimation of imu and marg orientation using a gradient descent algorithm. In 2011 IEEE International Conference on Rehabilitation Robotics, https://doi.org/10.1109/ICORR.2011.5975346 (2011).

Hansen, N., Ostermeier, A. & Gawelczyk, A. On the adaptation of arbitrary normal mutation distributions in evolution strategies: The generating set adaptation. In ICGA, 57–64 (Citeseer, 1995).

Palermo, M., Cerqueira, S., André, J. & Santos, C. P. Complete Inertial Pose (CIP) Dataset, Zenodo, https://doi.org/10.5281/zenodo.5801927 (2022).

Huynh, D. Q. Metrics for 3d rotations: Comparison and analysis. Journal of Mathematical Imaging and Vision (2009).

Al-Amri, M. et al. Inertial measurement units for clinical movement analysis: Reliability and concurrent validity. Sensors https://doi.org/10.3390/s18030719 (2018).

Acknowledgements

This work is supported by: European Structural and Investment Funds in the FEDER component, through the Operational Competitiveness and Internationalization Programme (COMPETE 2020) [Project n° 39479; Funding Reference: POCI-01-0247-FEDER-39479]. Sara Cerqueira was supported by the doctoral Grant SFRH/BD/151382/2021, financed by the Portuguese Foundation for Science and Technology (FCT), under MIT Portugal Program.

Author information


Contributions

M.P., S.C., J.A. and C.P.S. conceived the data acquisition; M.P., S.C., J.A. and A.P. conducted the data acquisition; M.P. analyzed and processed the data; M.P. organized the database; M.P., S.C. and C.P.S. contributed to the manuscript’s edition; All authors reviewed the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Palermo, M., Cerqueira, S.M., André, J. et al. From raw measurements to human pose - a dataset with low-cost and high-end inertial-magnetic sensor data. Sci Data 9, 591 (2022). https://doi.org/10.1038/s41597-022-01690-y
