Abstract

Human body movements can convey a variety of emotions and can even be advantageous in certain life situations. However, how emotion is encoded in body movements has remained unclear. One reason is the lack of a public kinematic dataset of the human body expressing various emotions. Therefore, we aimed to produce a comprehensive dataset to assist in recognizing cues from all parts of the body that indicate six basic emotions (happiness, sadness, anger, fear, disgust, surprise) and neutral expression. The present dataset was created using a portable wireless motion capture system. Twenty-two semi-professional actors (half male) performed according to standardized guidance and preferred daily events. A total of 1402 recordings at 125 Hz were collected, consisting of the position and rotation data of 72 anatomical nodes. To our knowledge, this is currently the largest emotional kinematic dataset of the human body. We hope this dataset will contribute to multiple fields of research and practice, including social neuroscience, psychiatry, computer vision, and biometric and information forensics.

Measurement(s) | body movement coordination trait • emotion/affect behavior trait |

Technology Type(s) | motion capture system |

Factor Type(s) | emotion category • sex |

Sample Characteristic - Organism | Homo sapiens |

Machine-accessible metadata file describing the reported data: https://doi.org/10.6084/m9.figshare.12821150


Background & Summary

Recognizing human emotions is crucial to people’s survival and social communication. Emotions are expressed through various carriers and channels. Existing work in both psychology1 and computer science2,3 has mainly focused on faces and voices, as well as on emotional scenes (context, situations, and conditions). Although classical theories of emotion have highlighted the significance of body movements since the 19th century4,5, as de Gelder1 put it, “bodily expressions never occupied centre stage in emotion research.” In recent decades, psychologists have taken up this issue and found that body movements can yield recognition accuracy comparable to that of facial expressions under both static and dynamic conditions6,7. Moreover, body movements may be advantageous in emotional integration, where multiple emotional stimuli affect each other. For example, when someone is experiencing the most intense emotion of their life (e.g., winning an Olympic gold medal), observers cannot reliably recognize that emotion from the face alone and must rely on body cues8. Similar complexities arise from the interaction of emotional information with voice9 and scene10,11. Therefore, bodily indicators are essential for thorough emotion recognition.

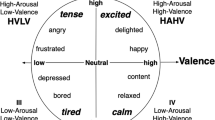

In previous studies, researchers have created emotional body movement sets using motion capture techniques. These stimulus sets record people’s emotional expressions while dancing, walking, and performing other actions7,12,13,14,15,16,17,18,19,20,21,22,23,24. However, most of these researchers published finished products (e.g., point-light displays, video clips) without the raw kinematic data, and these sets contain fewer recordings than sets of facial expressions25. Some sets consist only of arm movements and do not capture the whole body26. Moreover, some studies use only one scenario for a particular emotion (e.g., fear with a pursuing attacker)6,27, or the same situational guidance across all emotions (e.g., walking)14,28. In daily life, however, people do not always express their emotions in a single situation or through a single physical posture. Kinematic data contain rich information, such as the position and rotation of body segments, joint angles, and spatio-temporal gait parameters. Without access to such data, researchers have been restricted in studying how emotion is encoded in body movements and in relating quantitative measures for each joint or body segment to emotion dimensions (e.g., pleasant-unpleasant, valence; deactivated-activated, arousal)29. In short, there is a lack of public kinematic datasets of the human body expressing various emotions. To address this gap, we aimed to produce a comprehensive dataset to assist in recognizing emotional cues from all parts of the body in richer daily situations with greater ecological validity.

Taken together, we report a human body kinematic dataset of 1402 trials in which actors expressed six basic emotions (happiness, sadness, anger, fear, disgust, surprise) and neutral. Twenty-two semi-professional actors (half male) participated in this study. A low-cost, validated inertial motion capture system with 17 sensors was used30,31,32. The actors performed according to a standardized guide and carefully screened daily events. The resulting dataset contains the position and rotation data of 72 anatomical nodes, stored in the BioVision Hierarchy (BVH) format. This work expands the scope of emotion recognition and can help us better understand how humans convey emotion through body movements. To the best of our knowledge, this is currently the largest emotional kinematic dataset based on the whole human body. These data are expected to be reused widely and to foster progress in several fields, including social neuroscience, psychiatry, human-computer interaction, computer vision, and biometric and information forensics.

Methods

Preparation phase

Equipment and environment

The kinematic data were collected using a wireless motion capture system (Noitom Perception Neuron, Noitom Technology Ltd., Beijing, China) with 17 wearable sensors. This apparatus was connected to the Axis Neuron software (version 3.8.42.8591, Noitom Technology Ltd., Beijing, China) running on a laptop computer (Terrans Force T5 SKYLAKE 970 M 67SH1, Windows 10 operating system, Intel Core i7 6700HQ processor). The sensors, sampling at 125 Hz, were placed on the actors’ head, spine, and hips and bilaterally on the shoulders, upper and lower arms, hands, upper and lower legs, and feet (see Fig. 1a). The tasks were conducted in a quiet laboratory (see Fig. 1b). The actors performed on a 1 m × 1 m square stage located 0.5 m from a wall. Limiting the performance space in this way also constrained the horizontal distance between the actor and the object of the emotion, so that horizontal displacement did not differ greatly across recordings.
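The sensor layout and sampling rate can be summarized in a small sketch: three midline sensors plus seven left/right pairs give the 17 sensors, and 125 Hz corresponds to 0.008 s per frame, or about 750 frames for a six-second performance. The location names below are illustrative labels chosen here, not identifiers used by the Axis Neuron software.

```python
# Illustrative summary of the capture set-up described in the text.
# Location names are hypothetical labels, not Axis Neuron identifiers.
MIDLINE = ["head", "spine", "hips"]
BILATERAL = ["shoulder", "upper_arm", "lower_arm", "hand",
             "upper_leg", "lower_leg", "foot"]
SAMPLING_RATE_HZ = 125


def sensor_locations():
    """Return all sensor locations: midline sensors plus left/right pairs."""
    paired = [f"{side}_{part}" for part in BILATERAL for side in ("left", "right")]
    return MIDLINE + paired


def frame_time(rate_hz=SAMPLING_RATE_HZ):
    """Seconds between consecutive frames (the BVH 'Frame Time')."""
    return 1.0 / rate_hz


def expected_frames(seconds, rate_hz=SAMPLING_RATE_HZ):
    """Number of frames recorded in a performance of the given length."""
    return round(seconds * rate_hz)


if __name__ == "__main__":
    print(len(sensor_locations()))  # 17
    print(expected_frames(6))       # 750
```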

Scenarios

To guide the actors to perform in a typical and natural manner, we created 70 daily event scenarios (10 for each emotion and neutral; see Supplementary File 1) based on the basic concepts of emotions33,34,35 and previous research6,7,20,21,22,26,27,36. To test the validity of the scenarios, 70 college students (mean age = 23.10 years, SD = 1.64, 42 females) classified the emotion expressed in each scenario, presented in random order, in a seven-alternative forced-choice questionnaire (see Supplementary File 2). The five most recognizable scenarios for each emotion were retained (see Online-only Table 1), giving 35 scenarios for the recording phase. In addition, for the six emotions (but not neutral), free (non-scripted) performances were recorded, in which the actors spontaneously interpreted and expressed the emotion as they saw fit rather than being restricted by a scenario.
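The scenario screening can be sketched as follows, assuming each rater's answer is stored as the chosen category label. A scenario's accuracy is the proportion of raters who chose the intended emotion, and the five scenarios with the highest accuracy are kept for each of the seven categories (5 × 7 = 35). The function names and data layout are hypothetical, and ties are broken by input order, a detail the text does not specify.

```python
from statistics import mean, stdev


def scenario_accuracy(responses, intended):
    """Proportion of forced-choice responses that match the intended emotion."""
    return sum(r == intended for r in responses) / len(responses)


def select_top_scenarios(accuracy, k=5):
    """accuracy maps emotion -> {scenario name: accuracy}.
    Returns emotion -> the k scenario names with the highest accuracy.
    sorted() is stable even with reverse=True, so ties keep input order."""
    return {
        emotion: sorted(scores, key=scores.get, reverse=True)[:k]
        for emotion, scores in accuracy.items()
    }


def summarize_selection(accuracy, selected):
    """Mean and sample SD of accuracy across the retained scenarios."""
    summary = {}
    for emotion, names in selected.items():
        values = [accuracy[emotion][name] for name in names]
        summary[emotion] = (mean(values), stdev(values))
    return summary


if __name__ == "__main__":
    # Made-up accuracies for ten candidate scenarios of one emotion.
    demo = {"happiness": {f"s{i}": a for i, a in enumerate(
        [0.90, 0.99, 0.50, 1.00, 0.97, 0.60, 0.98, 0.70, 0.80, 0.95], start=1)}}
    chosen = select_top_scenarios(demo)
    print(chosen["happiness"])  # ['s4', 's2', 's7', 's5', 's10']
    print(summarize_selection(demo, chosen))
```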

Actors

Another group of 24 college students (mean age = 20.75 years, SD = 1.92; mean height = 1.69 m, SD = 0.07; mean weight = 58.52 kg, SD = 12.42; mean BMI = 20.25, SD = 3.30) was recruited from the drama and dance clubs of the Dalian University of Technology to perform as actors for this study (see Table 1). Actors F04 and F13 were excluded because they dropped out. All actors were physically and mentally healthy and right-handed. Each actor gave their written informed consent before performing and was told that their motion data were to be used only for scientific research. The study was approved by the Human Research Institutional Review Board of Liaoning Normal University in accordance with the Declaration of Helsinki (1991). After the recording phase, the actors were paid appropriately.

Recording phase

The actors wore black tights, and the 17 Neuron sensors were attached to the corresponding body locations. A four-step calibration procedure using four successive static poses was performed in the Axis Neuron software before the performances and whenever necessary (e.g., after a poor Wi-Fi signal or a rest) (see Fig. 2; for details, see https://neuronmocap.com/content/axis-neuron). To prevent inconsistencies in interpretation across actors, standardized instructions were given before each recording (see Supplementary File 3). The actors started in a neutral stance (i.e., facing forward with arms naturally at their sides). For each emotion, we successively asked the actors to give six-second performances: a free performance based on their own understanding of the emotion, and the scenario performances. The order of emotions was randomized, as was the order of scenarios within each emotion. When the actor was ready, we said “start” and the Axis Neuron software began recording the motion data at the same moment. After each performance, we reviewed the recording and evaluated its signal quality; some performances therefore had to be repeated several times. The recording phase took approximately two hours, and the actors could rest whenever they felt tired.

Four-step calibration procedure. (a) Steady pose. (b) A-pose. (c) T-pose. (d) S-pose. The figures are taken from another source with permission (https://neuronmocap.com/content/axis-neuron).
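The randomization in the recording phase can be sketched as a per-actor schedule generator. This sketch assumes the free performance precedes the scenario performances within each emotion (the text says only that both were requested successively). It uses the emotion codes from the file-naming scheme under Data Records and ignores repetitions. `recording_schedule` is a hypothetical helper, not the authors' procedure.

```python
import random

EMOTIONS = ["H", "SA", "A", "F", "D", "SU"]  # six basic emotions
NEUTRAL = "N"
SCENARIOS = [1, 2, 3, 4, 5]                  # retained scenarios per category


def recording_schedule(seed=None):
    """Return (emotion code, scenario ID) pairs for one actor.
    Scenario ID 0 is the free performance, recorded for emotions only."""
    rng = random.Random(seed)
    categories = EMOTIONS + [NEUTRAL]
    rng.shuffle(categories)                  # random order of categories
    schedule = []
    for category in categories:
        if category != NEUTRAL:
            schedule.append((category, 0))   # free performance (assumed first)
        order = SCENARIOS[:]
        rng.shuffle(order)                   # random scenario order within category
        schedule.extend((category, s) for s in order)
    return schedule


if __name__ == "__main__":
    plan = recording_schedule(seed=1)
    print(len(plan))  # 41 = 6 emotions x 6 performances + 5 neutral scenarios
```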

Data Records

A total of 1406 trials were collected. The commercial Axis Neuron software stores data in its own RAW file format. We exported these RAW files to BVH, a standard file format that can be analyzed with various software (e.g., 3ds Max, https://www.autodesk.com/products/3ds-max; MotionBuilder, https://www.autodesk.com/products/motionbuilder). Four RAW files were damaged during this process (F09D0V1, M04F4V2, M06N1V1, and M06SU1V1). The resulting human body kinematic dataset, available from PhysioNet37 (https://doi.org/10.13026/kg8b-1t49), therefore consists of 1402 trials expressing six emotions and neutral (mean duration = 7.22 s, SD = 1.57 s).

Each actor has their own folder, named with the actor ID (see Table 1), containing the BVH files for all emotions. Each trial is named systematically as “<actor_ID><emotion><scenario_ID><version>” (for details, see file_info.csv at https://doi.org/10.13026/kg8b-1t49), where “actor_ID” is the actor ID; “emotion” is happiness (H), sadness (SA), neutral (N), anger (A), disgust (D), fear (F), or surprise (SU); “scenario_ID” is 0 for the free performance or 1 to 5 for the corresponding scenario performance; and “version” is V followed by the repetition number. For example, M01D1V2 is the second recording of actor M01 performing disgust scenario 1.
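Because the emotion codes have different lengths (H versus SA), trial names are easiest to split with a regular expression. The minimal Python sketch below assumes that actor IDs are F or M followed by two digits (as in F09 and M06) and that the .bvh extension may or may not be present. The helper names are hypothetical; file_info.csv in the dataset remains the authoritative description.

```python
import re
from typing import NamedTuple

EMOTIONS = {"H": "happiness", "SA": "sadness", "N": "neutral", "A": "anger",
            "D": "disgust", "F": "fear", "SU": "surprise"}

# Assumed pattern: actor (F/M + two digits), emotion code, scenario 0-5,
# then "V" and the repetition number, with an optional .bvh extension.
TRIAL_NAME = re.compile(
    r"^(?P<actor>[FM]\d{2})(?P<emotion>SA|SU|H|N|A|D|F)"
    r"(?P<scenario>[0-5])V(?P<version>\d+)(?:\.bvh)?$"
)


class Trial(NamedTuple):
    actor: str
    emotion: str
    scenario: int   # 0 = free performance, 1-5 = scenario performance
    version: int    # repetition number


def parse_trial_name(name):
    """Split a trial name such as 'M01D1V2' into its four fields."""
    match = TRIAL_NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized trial name: {name!r}")
    return Trial(match["actor"], EMOTIONS[match["emotion"]],
                 int(match["scenario"]), int(match["version"]))


if __name__ == "__main__":
    print(parse_trial_name("M01D1V2"))
    # Trial(actor='M01', emotion='disgust', scenario=1, version=2)
```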

A BVH file is an ASCII text file with two sections, HIERARCHY and MOTION. The HIERARCHY section defines the joint tree, the name of each node, the number of channels, and the offsets between connected joints (i.e., the bone length of each body segment). In total, it defines 72 nodes (1 Root, 58 Joints, and 13 End Sites; see Fig. 3), which the Axis Neuron software computes from the 17 sensors (see Fig. 1a). The MOTION section stores the motion data: each line holds one frame, giving the position and rotation values of the joint nodes in the order defined in the HIERARCHY section. The main keywords in a BVH file are:

- HIERARCHY: beginning of the header section
- ROOT: the root node, located at the Hips (see Fig. 3)
- JOINT: a skeletal joint, defined relative to its parent joint (see Fig. 3)
- CHANNELS: number of channels, followed by the names of the node's position and rotation channels
- OFFSET: X, Y, and Z offsets of the segment relative to its parent joint
- End Site: end of a JOINT that has no child joint (see Fig. 3)
- MOTION: beginning of the second section
- Frames: number of frames
- Frame Time: duration of each frame in seconds (1/125 s = 0.008 s at 125 Hz)
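To check a file against the node counts given above (1 Root, 58 Joints, and 13 End Sites, 72 in total), the HIERARCHY keywords can be counted directly. The Python sketch below does this and also sums the CHANNELS declarations, which gives the number of values expected on each MOTION line. It is a minimal keyword scan that assumes one keyword per line, not a full BVH parser. The embedded sample is a toy three-node skeleton, not a file from the dataset.

```python
def count_bvh_nodes(text):
    """Count ROOT, JOINT and End Site entries in the HIERARCHY section and
    sum the CHANNELS declarations (values per MOTION line)."""
    counts = {"ROOT": 0, "JOINT": 0, "End Site": 0, "channels": 0}
    for line in text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        keyword = tokens[0]
        if keyword == "MOTION":
            break                          # hierarchy ends here
        if keyword in ("ROOT", "JOINT"):
            counts[keyword] += 1
        elif keyword == "End" and tokens[1:2] == ["Site"]:
            counts["End Site"] += 1
        elif keyword == "CHANNELS":
            counts["channels"] += int(tokens[1])
    counts["nodes"] = counts["ROOT"] + counts["JOINT"] + counts["End Site"]
    return counts


SAMPLE = """HIERARCHY
ROOT Hips
{
  OFFSET 0 0 0
  CHANNELS 6 Xposition Yposition Zposition Yrotation Xrotation Zrotation
  JOINT Spine
  {
    OFFSET 0 10 0
    CHANNELS 3 Yrotation Xrotation Zrotation
    End Site
    {
      OFFSET 0 5 0
    }
  }
}
MOTION
Frames: 1
Frame Time: 0.008
0 0 0 0 0 0 0 0 0
"""

if __name__ == "__main__":
    print(count_bvh_nodes(SAMPLE))
    # {'ROOT': 1, 'JOINT': 1, 'End Site': 1, 'channels': 9, 'nodes': 3}
```

For a file from this dataset, the "nodes" entry would be expected to equal 72.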

Technical Validation

Scenario

To make the actors’ performances more realistic and recognizable, we conducted a pilot study (see Methods) and retained the five daily events with the highest recognition accuracy for each emotion (mean ± SD, happiness: 0.994 ± 0.013; anger: 0.917 ± 0.040; sadness: 0.957 ± 0.026; fear: 0.971 ± 0.023; disgust: 0.906 ± 0.041; surprise: 0.871 ± 0.030; neutral: 0.920 ± 0.042).

Calibration

As described in the recording phase (see Methods), the motion capture system was calibrated with the four-step calibration procedure before the performances and as needed. We also reviewed and visually checked the quality of the motion signal and the naturalness of the performances trial by trial. When all sensors are properly calibrated, the pose of the model in the recording software matches the actor’s initial stance (i.e., facing forward with arms naturally at the sides; see Fig. 4a), and the spatial position of the model’s mass center is relatively stable across recordings. Otherwise, the model is deformed and the initial position of the mass center varies across performances. The first-frame position of the mass center across all recordings can therefore reflect calibration quality. To evaluate it, we used the Axis Neuron software to extract the X, Y, and Z positions of the mass center (see Fig. 4a) in the first frame of each recording (see https://physionet.org/content/kinematic-actors-emotions/2.1.0/). The distributions in all three dimensions were relatively concentrated, indicating that the actors’ initial states were consistent (see Fig. 4b–e) and suggesting good calibration quality in our study.

The location of the mass center and the three-dimensional distribution of the mass center in the first frame. (a) A large red transparent ball represents the mass center of the model in the recording software (this figure is taken from another source with permission, https://neuronmocap.com/content/axis-neuron). (b) 3D scatter of the first-frame mass center. (c) Distribution of the X position. (d) Distribution of the Y position. (e) Distribution of the Z position. Figure 4 shows that, after the calibration procedure, the initial spatial positions of the actors across all performances are relatively concentrated and consistent, reflecting good calibration quality in the present study.
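One way to quantify how concentrated the first-frame mass-center positions are is to summarize each axis and flag recordings that sit unusually far from the median position. The sketch below assumes the X, Y, and Z values have already been extracted (e.g., with the Axis Neuron software as described above). The distance threshold is a hypothetical, user-chosen value, and the check illustrates the idea rather than reproducing the analysis reported here.

```python
from statistics import mean, median, stdev


def axis_summary(positions):
    """positions: one (x, y, z) first-frame mass-center position per recording.
    Returns {'X': (mean, SD), 'Y': ..., 'Z': ...} using the sample SD."""
    columns = list(zip(*positions))
    return {axis: (mean(col), stdev(col)) for axis, col in zip("XYZ", columns)}


def flag_far_from_median(positions, max_distance):
    """Indices of recordings whose first-frame position lies more than
    max_distance (in the data's own units) from the per-axis median.
    max_distance is a user-chosen, hypothetical threshold."""
    center = [median(col) for col in zip(*positions)]
    flagged = []
    for i, point in enumerate(positions):
        distance = sum((p - c) ** 2 for p, c in zip(point, center)) ** 0.5
        if distance > max_distance:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Made-up positions; the last recording is deliberately displaced.
    demo = [(0, 100, 0), (1, 101, 0), (-1, 99, 0), (0, 100, 1), (30, 100, 0)]
    print(axis_summary(demo))
    print(flag_far_from_median(demo, 5.0))  # [4]
```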

Usage Notes

BVH files can be imported directly into 3ds Max (https://www.autodesk.com/products/3ds-max), MotionBuilder (https://www.autodesk.com/products/motionbuilder), and other 3D applications, so these data can be used to build avatars for virtual reality and augmented reality products. Previous studies on emotion recognition in computer and information science have mainly focused on faces and voices; the present dataset could therefore help improve current technologies and contribute to scientific advances. In psychiatry and psychology, researchers can also create experimental stimuli from the present data, such as emotional point-light displays that contain biological motion information38. Such materials have been applied to the study of social cognitive impairment and may contribute to the clinical diagnosis of autism spectrum disorders, schizophrenia, and other psychiatric conditions39,40.
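Building a point-light display from a BVH file requires the global position of every node in every frame. Forward kinematics provides this: a node's position is its parent's position plus the parent's global rotation applied to the node's OFFSET. The pure-Python sketch below assumes the common BVH convention that rotations are composed in the order the rotation channels are listed (e.g., R = Rz·Rx·Ry for "Zrotation Xrotation Yrotation"). It also assumes the root position is its OFFSET plus its position channels. The skeleton and frame layouts are hypothetical and would need to be filled from a parsed file.

```python
import math

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def axis_rotation(axis, degrees):
    """3x3 rotation matrix about the X, Y or Z axis (angle in degrees)."""
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    if axis == "X":
        return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]
    if axis == "Y":
        return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]
    if axis == "Z":
        return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    raise ValueError(f"unknown axis {axis!r}")


def matmul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotate(m, v):
    """Apply a 3x3 matrix to a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def global_positions(skeleton, rotations, root_translation):
    """skeleton: (name, parent or None, offset, rotation order such as 'ZXY'),
    with every parent listed before its children; End Sites use order ''.
    rotations: {name: {'X': deg, 'Y': deg, 'Z': deg}} for one frame.
    root_translation: the root's position channels for that frame.
    Returns {name: [x, y, z]}; plotting these as dots gives one point-light frame."""
    world_rot, world_pos = {}, {}
    for name, parent, offset, order in skeleton:
        local = IDENTITY
        for axis in order:  # compose in channel order, e.g. Rz @ Rx @ Ry
            angle = rotations.get(name, {}).get(axis, 0.0)
            local = matmul(local, axis_rotation(axis, angle))
        if parent is None:
            world_pos[name] = [o + t for o, t in zip(offset, root_translation)]
            world_rot[name] = local
        else:
            step = rotate(world_rot[parent], offset)
            world_pos[name] = [p + d for p, d in zip(world_pos[parent], step)]
            world_rot[name] = matmul(world_rot[parent], local)
    return world_pos


if __name__ == "__main__":
    chain = [("Hips", None, (0, 0, 0), "ZXY"),
             ("Spine", "Hips", (0, 1, 0), "ZXY"),
             ("Head_End", "Spine", (0, 1, 0), "")]
    pose = global_positions(chain, {"Hips": {"Z": 90}}, (0, 0, 0))
    print([round(v, 6) for v in pose["Head_End"]])  # approximately [-2, 0, 0]
```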

Some trials deserve special mention. Although we asked the actors to complete each performance within six seconds, some trials are in fact much shorter or longer, owing to individual differences in time perception or to operator error (e.g., 21.84 s for F07SA0V1 and 2.688 s for M01D1V2). Future studies should aim for greater consistency by avoiding such discrepancies where possible.

Creating more daily scenarios and recruiting more actors yielded richer data that reflect the variability of body movements and give researchers more options when selecting experimental materials. However, differences among the scenarios for the same emotion may introduce some “noise”. For example, if the object of anger is a nearby dog, the expression may be directed at a lower vertical level than if the object is a car speeding off. We will further examine the specific relationship between scenario-induced movements and subjective emotional experience (e.g., emotional intensity, valence, and arousal).

Because all the actors were told before performing that their motion data would be used only for scientific research, we followed PhysioNet’s recommendation and adopted a suitable license as the data use agreement (Restricted Health Data License 1.5.0, https://physionet.org/content/kinematic-actors-emotions/view-license/2.1.0/). Users therefore need to sign this agreement online before downloading and using the present dataset.

Code availability

The MATLAB code used to extract the number of frames from the BVH files and calculate trial durations is available at https://physionet.org/content/kinematic-actors-emotions/2.1.0/.
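The authors' MATLAB code is the reference implementation. For readers working in Python, the same computation might look like the sketch below. It reads the Frames and Frame Time lines from each BVH file's MOTION header and multiplies them, and it walks the per-actor folders described under Data Records. The function names are hypothetical. On the full dataset, the summary can be compared with the reported mean duration of 7.22 s (SD = 1.57 s).

```python
from pathlib import Path
from statistics import mean, stdev


def bvh_duration(text):
    """Trial duration in seconds: number of frames x frame time."""
    frames = frame_time = None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("Frames:"):
            frames = int(line.split(":", 1)[1])
        elif line.startswith("Frame Time:"):
            frame_time = float(line.split(":", 1)[1])
        if frames is not None and frame_time is not None:
            return frames * frame_time
    raise ValueError("Frames / Frame Time lines not found")


def durations_in(root):
    """Map each trial name (file stem) under root, searched recursively,
    to its duration in seconds."""
    return {path.stem: bvh_duration(path.read_text())
            for path in sorted(Path(root).rglob("*.bvh"))}


def summarize(durations):
    """Mean and sample SD of a mapping of trial name -> duration."""
    values = list(durations.values())
    return mean(values), stdev(values)


if __name__ == "__main__":
    header = "MOTION\nFrames: 336\nFrame Time: 0.008\n"
    print(round(bvh_duration(header), 3))  # 2.688, cf. M01D1V2 in Usage Notes
```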

References

de Gelder, B. Why bodies? Twelve reasons for including bodily expressions in affective neuroscience. Philos. T. R. Soc. B. 364, 3475–3484 (2009).

Schuller, B., Rigoll, G. & Lang, M. Hidden Markov model-based speech emotion recognition. 2003 International Conference on Multimedia and Expo, Vol I, Proceedings, 401–404 (2003).

Lalitha, S., Madhavan, A., Bhushan, B. & Saketh, S. Speech emotion recognition. 2014 International Conference on Advances in Electronics, Computers and Communications (ICAECC) (2014).

Darwin, C. The expression of the emotions in man and animals. (University of Chicago Press, 1872/1965).

James, W. T. The principles of psychology. (Holt, 1890).

de Gelder, B. & Van den Stock, J. The bodily expressive action stimulus test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Front. Psychol. 2, 181 (2011).

Atkinson, A. P., Dittrich, W. H., Gemmell, A. J. & Young, A. W. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception 33, 717–746 (2004).

Aviezer, H., Trope, Y. & Todorov, A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science 338, 1225–1229 (2012).

Atias, D. et al. Loud and unclear: Intense real-life vocalizations during affective situations are perceptually ambiguous and contextually malleable. J. Exp. Psychol. Gen. (2018).

Reschke, P. J., Knothe, J. M., Lopez, L. D. & Walle, E. A. Putting “context” in context: The effects of body posture and emotion scene on adult categorizations of disgust facial expressions. Emotion 18, 153–158 (2018).

Chen, Z. M. & Whitney, D. Tracking the affective state of unseen persons. Proc. Natl. Acad. Sci. USA 116, 7559–7564 (2019).

Alaerts, K., Nackaerts, E., Meyns, P., Swinnen, S. P. & Wenderoth, N. Action and emotion recognition from point light displays: An investigation of gender differences. Plos One 6, e20989 (2011).

Chouchourelou, A., Matsuka, T., Harber, K. & Shiffrar, M. The visual analysis of emotional actions. Soc. Neurosci. 1, 63–74 (2006).

Halovic, S. & Kroos, C. Walking my way? Walker gender and display format confounds the perception of specific emotions. Hum. Mov. Sci. 57, 461–477 (2018).

Lagerlof, I. & Djerf, M. Children’s understanding of emotion in dance. Eur. J. Dev. Psychol. 6, 409–431 (2009).

Lorey, B. et al. Confidence in emotion perception in point-light displays varies with the ability to perceive own emotions. Plos One 7, e42169 (2012).

Moore, D. G., Hobson, R. P. & Lee, A. Components of person perception: An investigation with autistic, non-autistic retarded and typically developing children and adolescents. Br. J. Dev. Psychol. 15, 401–423 (1997).

Ross, P. D., Polson, L. & Grosbras, M. H. Developmental changes in emotion recognition from full-light and point-light displays of body movement. Plos One 7, e44815 (2012).

Heberlein, A. S., Adolphs, R., Tranel, D. & Damasio, H. Cortical regions for judgments of emotions and personality traits from point-light walkers. J. Cogn. Neurosci. 16, 1143–1158 (2004).

Ma, Y., Paterson, H. M. & Pollick, F. E. A motion capture library for the study of identity, gender, and emotion perception from biological motion. Behav. Res. Methods 38, 134–141 (2006).

Walk, R. D. & Homan, C. P. Emotion and dance in dynamic light displays. Bull. Psychon. Soc. 22, 437–440 (1984).

Dittrich, W. H., Troscianko, T., Lea, S. E. & Morgan, D. Perception of emotion from dynamic point-light displays represented in dance. Perception 25, 727–738 (1996).

Troje, N. F. Decomposing biological motion: A framework for analysis and synthesis of human gait patterns. J. Vis. 2, 371–387 (2002).

Liu, S. L. et al. Multi-view laplacian eigenmaps based on bag-of-neighbors for RGB-D human emotion recognition. Inform. Sci. 509, 243–256 (2020).

Krumhuber, E. G., Skora, L., Küster, D. & Fou, L. A review of dynamic datasets for facial expression research. Emot. Rev. 9, 280–292 (2016).

Pollick, F. E., Paterson, H. M., Bruderlin, A. & Sanford, A. J. Perceiving affect from arm movement. Cognition 82, B51–B61 (2001).

Thoma, P., Soria Bauser, D. & Suchan, B. BESST (bochum emotional stimulus set)–a pilot validation study of a stimulus set containing emotional bodies and faces from frontal and averted views. Psychiatry Res. 209, 98–109 (2013).

Gross, M. M., Crane, E. A. & Fredrickson, B. L. Effort-shape and kinematic assessment of bodily expression of emotion during gait. Hum. Mov. Sci. 31, 202–221 (2012).

Yik, M. S. M., Russell, J. A. & Barrett, L. F. Structure of self-reported current affect: Integration and beyond. J. Pers. Soc. Psychol. 77, 600–619 (1999).

Sers, R. et al. Validity of the perception neuron inertial motion capture system for upper body motion analysis. Measurement 149 (2020).

Kim, H. S. et al. Application of a perception neuron system in simulation-based surgical training. J. Clin. Med. 8 (2019).

Robert-Lachaine, X., Mecheri, H., Muller, A., Larue, C. & Plamondon, A. Validation of a low-cost inertial motion capture system for whole-body motion analysis. J. Biomech. 99, 109520 (2020).

Ekman, P. & Cordaro, D. What is meant by calling emotions basic. Emot. Rev. 3, 364–370 (2011).

Arrindell, W. A., Pickersgill, M. J., Merckelbach, H., Ardon, A. M. & Cornet, F. C. Phobic dimensions .3. Factor analytic approaches to the study of common phobic fears - an updated review of findings obtained with adult subjects. Adv. Behav. Res. Ther. 13, 73–130 (1991).

Yoder, A. M., Widen, S. C. & Russell, J. A. The word disgust may refer to more than one emotion. Emotion 16, 301–308 (2016).

Clarke, T. J., Bradshaw, M. F., Field, D. T., Hampson, S. E. & Rose, D. The perception of emotion from body movement in point-light displays of interpersonal dialogue. Perception 34, 1171–1180 (2005).

Zhang, M. et al. Kinematic dataset of actors expressing emotions (version 2.1.0). PhysioNet https://doi.org/10.13026/kg8b-1t49 (2020).

Johansson, G. Visual-perception of biological motion and a model for its analysis. Percept. Psychophys. 14, 201–211 (1973).

Okruszek, L. It is not just in faces! Processing of emotion and intention from biological motion in psychiatric disorders. Front. Hum. Neurosci. 12 (2018).

Pavlova, M. A. Biological motion processing as a hallmark of social cognition. Cereb. Cortex 22, 981–995 (2012).

Acknowledgements

We also thank S. Liu and X. Yi for their contributions to data collation. This work was supported by the National Natural Science Foundation of China (31871106) and the Postgraduate Innovation Foundation of Liaoning Normal University (2020cx02).

Author information


Contributions

M.Z., S.L. and W.L. designed this study. M.Z., L.Y., K.Z., B.D., B.Z., S.C., X.J., S.G., Y.W. and B.W. collected the data. M.Z., L.Y., B.Z. and X.J. organized and analyzed the data. M.Z., L.Y., S.L., and W.L. wrote the manuscript. All authors approved the final version of the manuscript for submission.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Online-only Table

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

The Creative Commons Public Domain Dedication waiver http://creativecommons.org/publicdomain/zero/1.0/ applies to the metadata files associated with this article.

About this article

Cite this article

Zhang, M., Yu, L., Zhang, K. et al. Kinematic dataset of actors expressing emotions. Sci Data 7, 292 (2020). https://doi.org/10.1038/s41597-020-00635-7


DOI: https://doi.org/10.1038/s41597-020-00635-7
