Abstract

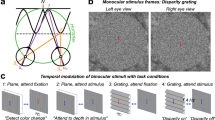

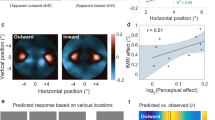

Sensory signals give rise to patterns of neural activity, which the brain uses to infer properties of the environment. For the visual system, considerable work has focused on the representation of frontoparallel stimulus features and binocular disparities. However, inferring the properties of the physical environment from retinal stimulation is a distinct and more challenging computational problem—this is what the brain must actually accomplish to support perception and action. Here we develop a computational model that incorporates projective geometry, mapping the three-dimensional (3D) environment onto the two retinae. We demonstrate that this mapping fundamentally shapes the tuning of cortical neurons and corresponding aspects of perception. For 3D motion, the model explains the strikingly non-canonical tuning present in existing electrophysiological data and distinctive patterns of perceptual errors evident in human behavior. Decoding the world from cortical activity is strongly affected by the geometry that links the environment to the sensory epithelium.
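To make the role of projective geometry concrete, the following is a minimal sketch and not the authors' model (their code is in the repository listed under Data and code availability). It assumes two pinhole eyes separated horizontally by a hypothetical interpupillary distance `ipd`, restricts the point to the horizontal (x, z) plane, and ignores eye movements. The names `retinal_velocities` and `sweep` are illustrative only. For a point at (x, z) moving with velocity (vx, vz), each eye sees the azimuth θ = atan2(x − e, z), where e is that eye's horizontal position, so the retinal angular velocity is dθ/dt = (z·vx − (x − e)·vz) / ((x − e)² + z²).

```python
import math


def retinal_velocities(x, z, vx, vz, ipd=0.065):
    """Return (left, right) angular velocities in rad/s of a moving point.

    Assumptions (not from the paper): eyes sit at (-ipd/2, 0) and (+ipd/2, 0),
    the point lies in the horizontal x-z plane, and the eyes do not move.
    Distances are in metres, velocities in metres per second.
    """
    velocities = []
    for eye_x in (-ipd / 2, +ipd / 2):
        dx = x - eye_x
        # Time derivative of azimuth atan2(dx, z) for this eye.
        velocities.append((z * vx - dx * vz) / (dx * dx + z * z))
    return tuple(velocities)


def sweep(x=0.0, z=0.5, speed=0.1, n=8):
    """Retinal velocities for n evenly spaced motion directions in the x-z plane.

    Direction 0 deg is rightward (+x); 90 deg is directly away from the observer (+z).
    Returns a list of (direction_deg, left_velocity, right_velocity) tuples.
    """
    rows = []
    for k in range(n):
        direction = 2 * math.pi * k / n
        vx = speed * math.cos(direction)
        vz = speed * math.sin(direction)
        left, right = retinal_velocities(x, z, vx, vz)
        rows.append((math.degrees(direction), left, right))
    return rows


if __name__ == "__main__":
    for direction_deg, left, right in sweep():
        print(f"{direction_deg:6.1f} deg  left={left:+.4f}  right={right:+.4f}")
```

Under these assumptions, lateral motion of a point on the midline produces equal velocities in the two eyes, whereas motion directly toward the observer produces much smaller velocities of opposite sign. The same 3D speed therefore maps onto very different pairs of retinal velocities depending on direction, which is the kind of geometric constraint the abstract refers to.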

Data and code availability

The modeling code and simulations, together with the human psychophysical data and analysis, are available at https://github.com/kbonnen/BinocularViewing3dMotion.

Acknowledgements

This research was funded by the National Eye Institute at the National Institutes of Health (EY020592, to L.K.C., A.C.H., and A.K.), the National Science Foundation (DGE-1110007, to K.B.), the Harrington Fellowship program (to K.B.), and the National Institutes of Health (T32 EY21462-6, to T.B.C. and J.A.W.). Special thanks to J. Fulvio (University of Wisconsin) and B. Rokers (New York University, Abu Dhabi) for many insightful conversations and for sharing their psychophysical data early on in this project. We thank J. Kaas (Vanderbilt University) for dispensation in referring to ‘MT’ instead of ‘middle temporal area’.

Author information

Contributions

T.B.C. and A.K. collected the electrophysiology data. K.B., L.K.C. and A.C.H. built the computational model. K.B., T.B.C., A.K., A.C.H. and L.K.C. interpreted the results from electrophysiology and modeling. K.B., A.C.H. and L.K.C. designed the human psychophysical experiments. K.B. and J.A.W. collected the human psychophysical data. K.B., J.A.W., A.C.H. and L.K.C. performed the analysis and interpreted the results. K.B., L.K.C. and A.C.H. wrote the paper. K.B., T.B.C., J.A.W., A.K., A.C.H. and L.K.C. edited the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information: Nature Neuroscience thanks Gregory DeAngelis, Anthony Norcia, and Jenny Read for their contribution to the peer review of this work.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figures 1–6 and Supplementary Video Captions 1 and 2.

Supplementary Video 1

Example motion cloud stimuli for free-fusing. These examples are high-contrast versions of the stimulus shown to subjects during our experiments. The spherical motion volume is shown up and to the right of fixation for more comfortable free-fusing but would be directly left or right of fixation in the actual experiment. This video shows eight motion epochs (0°, 45°, 90°, 135°, 180°, 225°, 270° and 315°).

Supplementary Video 2

Example motion cloud stimuli rendered in 2D with shading to simulate the binocular stimuli used in the experiment. This video is a 2D version of the stimulus rendered with shading on the dots to give a stronger sense of the depth percept. These example motion stimuli are high-contrast versions of the stimuli shown to subjects during our experiments. This video shows eight motion epochs (0°, 45°, 90°, 135°, 180°, 225°, 270° and 315°). An example of the motion indicator used during the experiment is shown below the stimulus and points in the direction of the motion. Additional indicator dots have been placed at the cardinal directions for reference (these were not present during the experiments). In the experiment, this indicator would appear below fixation after the motion epoch and subjects would use a knob to control which direction it pointed.

About this article

Cite this article

Bonnen, K., Czuba, T.B., Whritner, J.A. et al. Binocular viewing geometry shapes the neural representation of the dynamic three-dimensional environment. Nat Neurosci 23, 113–121 (2020). https://doi.org/10.1038/s41593-019-0544-7

DOI: https://doi.org/10.1038/s41593-019-0544-7
