Abstract

Analyzing vast textual data and summarizing key information from electronic health records imposes a substantial burden on how clinicians allocate their time. Although large language models (LLMs) have shown promise in natural language processing (NLP) tasks, their effectiveness on a diverse range of clinical summarization tasks remains unproven. Here we applied adaptation methods to eight LLMs, spanning four distinct clinical summarization tasks: radiology reports, patient questions, progress notes and doctor–patient dialogue. Quantitative assessments with syntactic, semantic and conceptual NLP metrics reveal trade-offs between models and adaptation methods. A clinical reader study with 10 physicians evaluated summary completeness, correctness and conciseness; in most cases, summaries from our best-adapted LLMs were deemed either equivalent (45%) or superior (36%) compared with summaries from medical experts. The ensuing safety analysis highlights challenges faced by both LLMs and medical experts, as we connect errors to potential medical harm and categorize types of fabricated information. Our research provides evidence of LLMs outperforming medical experts in clinical text summarization across multiple tasks. This suggests that integrating LLMs into clinical workflows could alleviate documentation burden, allowing clinicians to focus more on patient care.
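The abstract groups its quantitative metrics as syntactic, semantic and conceptual but does not name them here. One example of the syntactic kind is ROUGE (Lin, 2004, in the reference list). The sketch below computes ROUGE-L F1 from the longest common subsequence of candidate and reference tokens. It assumes lowercase whitespace tokenization and no stemming, and the function names are hypothetical. It is an illustration only, not the study's evaluation code, which is in the clin-summ repository.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence of two token lists (dynamic programming)."""
    # prev[j] holds the LCS length of the tokens of `a` processed so far and b[:j]
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0] * (len(b) + 1)
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr[j] = prev[j - 1] + 1  # extend the match on the diagonal
            else:
                curr[j] = max(prev[j], curr[j - 1])  # skip a token from a or b
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F1 with lowercase whitespace tokenization and no stemming (simplifying assumptions)."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)
```

For example, "no acute cardiopulmonary abnormality" and "no acute cardiopulmonary process" share a three-token subsequence out of four tokens each, so precision, recall and F1 are all 0.75. Because it credits only exact token overlap, a metric like this cannot reward paraphrases, which is why syntactic metrics are usually paired with semantic ones such as BERTScore (also in the reference list).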

Data availability

This study used six datasets, all publicly accessible at the provided references. Three of them require PhysioNet [81] access under their terms of use: MIMIC-CXR [73] (radiology reports), MIMIC-III [76] (radiology reports) and ProbSum [79] (progress notes). The other three do not require PhysioNet access: Open-i [72] (radiology reports), MeQSum [77] (patient questions) and ACI-Bench [41] (dialogue). For these, researchers can access the original versions via the provided references and our data via the GitHub repository at https://github.com/StanfordMIMI/clin-summ. Note that any further distribution of the datasets is subject to the terms of use and data-sharing agreements stipulated by the original creators.

Code availability

All code used for the experiments in this study is available in a GitHub repository (https://github.com/StanfordMIMI/clin-summ), which also links to the open-source models hosted by HuggingFace [87].

References

Golob, J. F. Jr, Como, J. J. & Claridge, J. A. The painful truth: the documentation burden of a trauma surgeon. J. Trauma Acute Care Surg. 80, 742–747 (2016).

Arndt, B. G. et al. Tethered to the EHR: primary care physician workload assessment using EHR event log data and time–motion observations. Ann. Fam. Med. 15, 419–426 (2017).

Fleming, S. L. et al. MedAlign: a clinician-generated dataset for instruction following with electronic medical records. Preprint at https://doi.org/10.48550/arXiv.2308.14089 (2023).

Yackel, T. R. & Embi, P. J. Unintended errors with EHR-based result management: a case series. J. Am. Med. Inform. Assoc. 17, 104–107 (2010).

Bowman, S. Impact of electronic health record systems on information integrity: quality and safety implications. Perspect. Health Inf. Manag. 10, 1c (2013).

Gershanik, E. F., Lacson, R. & Khorasani, R. Critical finding capture in the impression section of radiology reports. AMIA Annu. Symp. Proc. 2011, 465–469 (2011).

Gesner, E., Gazarian, P. & Dykes, P. The burden and burnout in documenting patient care: an integrative literature review. Stud. Health Technol. Inform. 264, 1194–1198 (2019).

Ratwani, R. M. et al. A usability and safety analysis of electronic health records: a multi-center study. J. Am. Med. Inform. Assoc. 25, 1197–1201 (2018).

Ehrenfeld, J. M. & Wanderer, J. P. Technology as friend or foe? Do electronic health records increase burnout? Curr. Opin. Anaesthesiol. 31, 357–360 (2018).

Sinsky, C. et al. Allocation of physician time in ambulatory practice: a time and motion study in 4 specialties. Ann. Intern. Med. 165, 753–760 (2016).

Khamisa, N., Peltzer, K. & Oldenburg, B. Burnout in relation to specific contributing factors and health outcomes among nurses: a systematic review. Int. J. Environ. Res. Public Health 10, 2214–2240 (2013).

Duffy, W. J., Kharasch, M. S. & Du, H. Point of care documentation impact on the nurse–patient interaction. Nurs. Adm. Q. 34, E1–E10 (2010).

Chang, C.-P., Lee, T.-T., Liu, C.-H. & Mills, M. E. Nurses’ experiences of an initial and reimplemented electronic health record use. Comput. Inform. Nurs. 34, 183–190 (2016).

Shanafelt, T. D. et al. Relationship between clerical burden and characteristics of the electronic environment with physician burnout and professional satisfaction. Mayo Clin. Proc. 91, 836–848 (2016).

Robinson, K. E. & Kersey, J. A. Novel electronic health record (EHR) education intervention in large healthcare organization improves quality, efficiency, time, and impact on burnout. Medicine (Baltimore) 97, e12319 (2018).

Toussaint, W. et al. Design considerations for high impact, automated echocardiogram analysis. Preprint at https://doi.org/10.48550/arXiv.2006.06292 (2020).

Brown, T. et al. Language models are few-shot learners. In Advances in Neural Information Processing Systems 33 https://proceedings.neurips.cc/paper/2020/file/1457c0d6bfcb4967418bfb8ac142f64a-Paper.pdf (NeurIPS, 2020).

Zhao, W. X. et al. A survey of large language models. Preprint at https://doi.org/10.48550/arXiv.2303.18223 (2023).

Bubeck, S. et al. Sparks of artificial general intelligence: early experiments with GPT-4. Preprint at https://doi.org/10.48550/arXiv.2303.12712 (2023).

Liang, P. et al. Holistic evaluation of language models. Trans. Mach. Learn. Res. (in the press).

Zheng, L. et al. Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena. Preprint at https://doi.org/10.48550/arXiv.2306.05685 (2023).

Wornow, M. et al. The shaky foundations of large language models and foundation models for electronic health records. NPJ Digit. Med. 6, 135 (2023).

Thirunavukarasu, A. J. et al. Large language models in medicine. Nat. Med. 29, 1930–1940 (2023).

Singhal, K. et al. Large language models encode clinical knowledge. Nature https://doi.org/10.1038/s41586-023-06291-2 (2023).

Tu, T. et al. Towards generalist biomedical AI. Preprint at https://doi.org/10.48550/arXiv.2307.14334 (2023).

Toma, A. et al. Clinical Camel: an open-source expert-level medical language model with dialogue-based knowledge encoding. Preprint at https://doi.org/10.48550/arXiv.2305.12031 (2023).

Van Veen, D. et al. RadAdapt: radiology report summarization via lightweight domain adaptation of large language models. In 22nd Workshop on Biomedical Natural Language Processing and BioNLP Shared Tasks 449–460 (Association for Computational Linguistics, 2023).

Mathur, Y. et al. SummQA at MEDIQA-Chat 2023: in-context learning with GPT-4 for medical summarization. Preprint at https://doi.org/10.48550/arXiv.2306.17384 (2023).

Saravia, E. Prompt engineering guide. https://github.com/dair-ai/Prompt-Engineering-Guide (2022).

Best practices for prompt engineering with OpenAI API. https://help.openai.com/en/articles/6654000-best-practices-for-prompt-engineering-with-openai-api (2023).

Chung, H. et al. Scaling instruction-finetuned language models. Preprint at https://doi.org/10.48550/arXiv.2210.11416 (2022).

Tay, Y. et al. UL2: unifying language learning paradigms. Preprint at https://doi.org/10.48550/arXiv.2205.05131 (2023).

Taori, R. et al. Stanford Alpaca: an instruction-following LLaMA model. https://github.com/tatsu-lab/stanford_alpaca (2023).

Han, T. et al. MedAlpaca—an open-source collection of medical conversational AI models and training data. Preprint at https://doi.org/10.48550/arXiv.2304.08247 (2023).

The Vicuna Team. Vicuna: an open-source chatbot impressing GPT-4 with 90%* ChatGPT quality. https://lmsys.org/blog/2023-03-30-vicuna/ (2023).

Touvron, H. et al. Llama 2: open foundation and fine-tuned chat models. Preprint at https://doi.org/10.48550/arXiv.2307.09288 (2023).

OpenAI. ChatGPT. https://openai.com/blog/chatgpt (2022).

OpenAI. GPT-4 technical report. Preprint at https://doi.org/10.48550/arXiv.2303.08774 (2023).

Lampinen, A. K. et al. Can language models learn from explanations in context? In Findings of the Association for Computational Linguistics: EMNLP 2022 https://aclanthology.org/2022.findings-emnlp.38.pdf (Association for Computational Linguistics, 2022).

Dettmers, T., Pagnoni, A., Holtzman, A. & Zettlemoyer, L. QLoRA: efficient finetuning of quantized LLMs. Preprint at https://doi.org/10.48550/arXiv.2305.14314 (2023).

Yim, W.-W. et al. ACI-BENCH: a novel ambient clinical intelligence dataset for benchmarking automatic visit note generation. Sci. Data https://doi.org/10.1038/s41597-023-02487-3 (2023).

Papineni, K., Roukos, S., Ward, T. & Zhu, W.-J. BLEU: a method for automatic evaluation of machine translation. In Proc. of the 40th Annual Meeting of the Association for Computational Linguistics https://dl.acm.org/doi/pdf/10.3115/1073083.1073135 (Association for Computing Machinery, 2002).

Walsh, K. E. et al. Measuring harm in healthcare: optimizing adverse event review. Med. Care 55, 436–441 (2017).

Zhang, T., Kishore, V., Wu, F., Weinberger, K. Q. & Artzi, Y. BERTScore: evaluating text generation with BERT. In International Conference on Learning Representations https://openreview.net/forum?id=SkeHuCVFDr (2020).

Strobelt, H. et al. Interactive and visual prompt engineering for ad-hoc task adaptation with large language models. IEEE Trans. Vis. Comput. Graph. 29, 1146–1156 (2022).

Wang, J. et al. Prompt engineering for healthcare: methodologies and applications. Preprint at https://doi.org/10.48550/arXiv.2304.14670 (2023).

Jozefowicz, R., Vinyals, O., Schuster, M., Shazeer, N. & Wu, Y. Exploring the limits of language modeling. Preprint at https://doi.org/10.48550/arXiv.1602.02410 (2016).

Chang, Y. et al. A survey on evaluation of large language models. ACM Trans. Intell. Syst. Technol. https://doi.org/10.1145/3641289 (2023).

Poli, M. et al. Hyena hierarchy: towards larger convolutional language models. In Proceedings of the 40th International Conference on Machine Learning 202, 1164 (2023).

Ding, J. et al. LongNet: scaling transformers to 1,000,000,000 tokens. Preprint at https://doi.org/10.48550/arXiv.2307.02486 (2023).

Lin, C.-Y. ROUGE: a package for automatic evaluation of summaries. In Text Summarization Branches Out 74–81 (Association for Computational Linguistics, 2004).

Ma, C. et al. ImpressionGPT: an iterative optimizing framework for radiology report summarization with ChatGPT. Preprint at https://doi.org/10.48550/arXiv.2304.08448 (2023).

Wei, S. et al. Medical question summarization with entity-driven contrastive learning. Preprint at https://doi.org/10.48550/arXiv.2304.07437 (2023).

Manakul, P. et al. CUED at ProbSum 2023: Hierarchical ensemble of summarization models. In The 22nd Workshop on Biomedical Natural Language Processing and BioNLP Shared Tasks 516–523 (Association for Computational Linguistics, 2023).

Yu, F. et al. Evaluating progress in automatic chest X-ray radiology report generation. Patterns 4, 100802 (2023).

Tang, L. et al. Evaluating large language models on medical evidence summarization. NPJ Digit. Med. 6, 158 (2023).

Johnson, A., Pollard, T. & Mark, R. MIMIC-III Clinical Database Demo (version 1.4). PhysioNet https://doi.org/10.13026/C2HM2Q (2019).

Omiye, J. A., Lester, J. C., Spichak, S., Rotemberg, V. & Daneshjou, R. Large language models propagate race-based medicine. NPJ Digit. Med. 6, 195 (2023).

Zack, T. et al. Assessing the potential of GPT-4 to perpetuate racial and gender biases in health care: a model evaluation study. Lancet Digit. Health 6, e12–e22 (2024).

Chen, M. X. et al. The best of both worlds: combining recent advances in neural machine translation. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) https://doi.org/10.18653/v1/P18-1008 (Association for Computational Linguistics, 2018).

Shi, T., Keneshloo, Y., Ramakrishnan, N. & Reddy, C. K. Neural abstractive text summarization with sequence-to-sequence models. ACM Trans. Data Sci. https://doi.org/10.1145/3419106 (2021).

Raffel, C. et al. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 21, 5485–5551 (2020).

Longpre, S. et al. The Flan collection: designing data and methods for effective instruction tuning. Preprint at https://doi.org/10.48550/arXiv.2301.13688 (2023).

Lehman, E. et al. Do we still need clinical language models? In Proceedings of Machine Learning Research 209, 578–597 (Conference on Health, Inference, and Learning, 2023).

Lim, Z. W. et al. Benchmarking large language models’ performances for myopia care: a comparative analysis of ChatGPT-3.5, ChatGPT-4.0, and Google Bard. EBioMedicine 95, 104770 (2023).

Rosoł, M., Gąsior, J. S., Łaba, J., Korzeniewski, K. & Młyńczak, M. Evaluation of the performance of GPT-3.5 and GPT-4 on the Medical Final Examination. Preprint at medRxiv https://doi.org/10.1101/2023.06.04.23290939 (2023).

Brin, D. et al. Comparing ChatGPT and GPT-4 performance in USMLE soft skill assessments. Sci. Rep. 13, 16492 (2023).

Deka, P. et al. Evidence extraction to validate medical claims in fake news detection. In Lecture Notes in Computer Science https://doi.org/10.1007/978-3-031-20627-6_1 (Springer, 2022).

Nie, F., Chen, M., Zhang, Z. & Cheng, X. Improving few-shot performance of language models via nearest neighbor calibration. Preprint at https://doi.org/10.48550/arXiv.2212.02216 (2022).

Hu, E. et al. LoRA: low-rank adaptation of large language models. Preprint at https://doi.org/10.48550/arXiv.2106.09685 (2021).

Peng, A., Wu, M., Allard, J., Kilpatrick, L. & Heidel, S. GPT-3.5 Turbo fine-tuning and API updates. https://openai.com/blog/gpt-3-5-turbo-fine-tuning-and-api-updates (2023).

Demner-Fushman, D. et al. Preparing a collection of radiology examinations for distribution and retrieval. J. Am. Med. Inform. Assoc. 23, 304–310 (2016).

Johnson, A. et al. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci. Data 6, 317 (2019).

Delbrouck, J.-B., Varma, M., Chambon, P. & Langlotz, C. Overview of the RadSum23 shared task on multi-modal and multi-anatomical radiology report summarization. In Proc. of the 22nd Workshop on Biomedical Language Processing https://doi.org/10.18653/v1/2023.bionlp-1.45 (Association for Computational Linguistics, 2023).

Demner-Fushman, D., Ananiadou, S. & Cohen, K. B. The 22nd Workshop on Biomedical Natural Language Processing and BioNLP Shared Tasks. https://aclanthology.org/2023.bionlp-1 (Association for Computational Linguistics, 2023).

Johnson, A. et al. MIMIC-IV. https://physionet.org/content/mimiciv/1.0/ (2020).

Ben Abacha, A. & Demner-Fushman, D. On the summarization of consumer health questions. In Proc. of the 57th Annual Meeting of the Association for Computational Linguistics https://doi.org/10.18653/v1/P19-1215 (Association for Computational Linguistics, 2019).

Chen, Z., Varma, M., Wan, X., Langlotz, C. & Delbrouck, J.-B. Toward expanding the scope of radiology report summarization to multiple anatomies and modalities. In Proc. of the 61st Annual Meeting of the Association for Computational Linguistics https://doi.org/10.18653/v1/2023.acl-short.41 (Association for Computational Linguistics, 2023).

Gao, Y. et al. Overview of the problem list summarization (ProbSum) 2023 shared task on summarizing patients’ active diagnoses and problems from electronic health record progress notes. In Proc. of the 22nd Workshop on Biomedical Natural Language Processing and BioNLP Shared Tasks https://doi.org/10.18653/v1/2023.bionlp-1.43 (Association for Computational Linguistics, 2023).

Gao, Y., Miller, T., Afshar, M. & Dligach, D. BioNLP Workshop 2023 Shared Task 1A: Problem List Summarization (version 1.0.0). PhysioNet. https://doi.org/10.13026/1z6g-ex18 (2023).

Goldberger, A. L. et al. PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals. Circulation 101, e215–e220 (2000).

Abacha, A. B., Yim, W.-W., Adams, G., Snider, N. & Yetisgen-Yildiz, M. Overview of the MEDIQA-Chat 2023 shared tasks on the summarization & generation of doctor–patient conversations. In Proc. of the 5th Clinical Natural Language Processing Workshop https://doi.org/10.18653/v1/2023.clinicalnlp-1.52 (2023).

Yim, W., Ben Abacha, A., Snider, N., Adams, G. & Yetisgen, M. Overview of the MEDIQA-Sum task at ImageCLEF 2023: summarization and classification of doctor–patient conversations. In CEUR Workshop Proceedings https://ceur-ws.org/Vol-3497/paper-109.pdf (2023).

Mangrulkar, S., Gugger, S., Debut, L., Belkada, Y. & Paul, S. PEFT: state-of-the-art parameter-efficient fine-tuning methods. https://github.com/huggingface/peft (2022).

Frantar, E., Ashkboos, S., Hoefler, T. & Alistarh, D. GPTQ: accurate post-training quantization for generative pre-trained transformers. Preprint at https://doi.org/10.48550/arXiv.2210.17323 (2022).

Loshchilov, I. & Hutter, F. Decoupled weight decay regularization. In International Conference on Learning Representations https://openreview.net/forum?id=Bkg6RiCqY7 (2019).

Wolf, T. et al. Transformers: state-of-the-art natural language processing. In Proc. of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations https://doi.org/10.18653/v1/2020.emnlp-demos.6 (Association for Computational Linguistics, 2020).

Soldaini, L. & Goharian, N. QuickUMLS: a fast, unsupervised approach for medical concept extraction. https://ir.cs.georgetown.edu/downloads/quickumls.pdf (2016).

Okazaki, N. & Tsujii, J. Simple and efficient algorithm for approximate dictionary matching. In Proc. of the 23rd International Conference on Computational Linguistics https://aclanthology.org/C10-1096.pdf (Association for Computational Linguistics, 2010).

Koo, T. K. & Li, M. Y. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J. Chiropr. Med. 15, 155–163 (2016).

Vallat, R. Pingouin: statistics in Python. J. Open Source Softw. 3, 1026 (2018).

Acknowledgements

Microsoft provided Azure OpenAI credits for this project via both the Accelerate Foundation Models Academic Research (AFMAR) program and a cloud services grant to Stanford Data Science. Additional compute support was provided by One Medical, which A.A. used as part of his summer internship. C.L. is supported by National Institutes of Health (NIH) grants R01 HL155410 and R01 HL157235, by the Agency for Healthcare Research and Quality grant R18HS026886, by the Gordon and Betty Moore Foundation and by the National Institute of Biomedical Imaging and Bioengineering under contract 75N92020C00021. A.C. receives support from NIH grants R01 HL167974, R01 AR077604, R01 EB002524, R01 AR079431 and P41 EB027060; from NIH contracts 75N92020C00008 and 75N92020C00021; and from GE Healthcare, Philips and Amazon.

Author information

Contributions

D.V.V. collected data, developed code, ran experiments, designed reader studies, analyzed results, created figures and wrote the manuscript. All authors reviewed the manuscript and provided meaningful revisions and feedback. C.V.U., L.B. and J.B.D. provided technical advice, in addition to conducting qualitative analysis (C.V.U.), building infrastructure for the Azure API (L.B.) and implementing the MEDCON metric (J.B.D.). A.A. assisted in model fine-tuning. C.B., A.P., M.P., E.P.R. and A.S. participated in the reader study as radiologists. N.R., P.H., W.C., N.A. and J.H. participated in the reader study as hospitalists. C.P.L., J.P. and A.S.C. provided student funding. S.G. advised on study design, for which J.H. and J.P. provided additional feedback. J.P. and A.S.C. guided the project, with A.S.C. serving as principal investigator and advising on technical details and overall direction. No funders or third parties were involved in study design, analysis or writing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Medicine thanks Kirk Roberts and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Ming Yang, in collaboration with the Nature Medicine team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

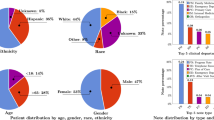

Extended Data Fig. 1 ICL vs. QLoRA.

Summarization performance comparing one in-context example (ICL) vs. QLoRA across all open-source models on patient health questions.
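Extended Data Fig. 1 compares ICL with QLoRA. QLoRA (Dettmers et al., in the reference list) trains low-rank adapters in the style of LoRA (Hu et al., also in the reference list) while the base model stays frozen in 4-bit quantized form. The plain-Python sketch below shows only the LoRA update for a single linear layer, h = Wx + (α/r)BAx. Quantization is omitted, and the helper names and toy matrices are hypothetical rather than taken from the study's code.

```python
Matrix = list[list[float]]
Vector = list[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """LoRA-style forward pass: h = W x + (alpha / r) * B (A x).

    W (d_out x d_in) stays frozen; only A (r x d_in) and B (d_out x r) are trained.
    In QLoRA, W would additionally be stored in quantized form (not modeled here).
    """
    r = len(A)  # adapter rank = number of rows in A
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]


def trainable_params(d_in: int, d_out: int, r: int) -> tuple[int, int]:
    """(full fine-tuning parameters, LoRA adapter parameters) for one weight matrix."""
    return d_in * d_out, r * (d_in + d_out)
```

LoRA initializes B to zero, so the adapted layer starts out identical to the frozen one. For a 4096 × 4096 projection with r = 8, the adapter trains 65,536 parameters instead of 16,777,216.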

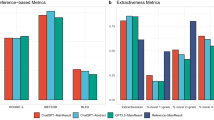

Extended Data Fig. 2 Quantitative results across all metrics.

Metric scores vs. number of in-context examples across models and datasets. We also include the best model fine-tuned with QLoRA (FLAN-T5) as a horizontal dashed line.
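Extended Data Fig. 2 plots metric scores against the number of in-context examples m. A minimal sketch of assembling such a prompt follows. The template, the `build_icl_prompt` name and the use of the first m examples are assumptions for illustration; this section does not give the study's prompt wording or how its examples were selected.

```python
def build_icl_prompt(instruction: str,
                     examples: list[tuple[str, str]],
                     query: str,
                     m: int) -> str:
    """Assemble a prompt with m in-context (input, summary) examples.

    Hypothetical template: the first m examples are used in order;
    the study's actual prompt and example selection may differ.
    """
    if not 0 <= m <= len(examples):
        raise ValueError(f"m must be between 0 and {len(examples)}, got {m}")
    parts = [instruction.strip()]
    for i, (source, summary) in enumerate(examples[:m], start=1):
        parts.append(f"Example {i}:\nInput: {source}\nSummary: {summary}")
    # The query ends with an open "Summary:" for the model to complete.
    parts.append(f"Input: {query}\nSummary:")
    return "\n\n".join(parts)
```

With m = 0 the prompt reduces to the instruction plus the query, which corresponds to the zero-example end of the x-axis; each increment of m adds one worked example and lengthens the prompt accordingly.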

Extended Data Fig. 3 Annotation: progress notes.

Qualitative analysis of two progress note summarization examples from the reader study. The table (lower right) contains reader scores for these examples and the task average across all samples.

Extended Data Fig. 4 Annotation: patient questions.

Qualitative analysis of two patient health question examples from the reader study. The table (lower left) contains reader scores for these examples and the task average across all samples.

Extended Data Fig. 5 Effect of model size.

Comparing Llama-2 (7B) vs. Llama-2 (13B). The dashed line denotes equivalence, and each data point corresponds to the average score of s = 250 samples for a given experimental configuration, that is, {dataset × m in-context examples}.
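In Extended Data Fig. 5, each point averages s = 250 samples for one {dataset × m} configuration and compares the two Llama-2 sizes. The sketch below shows that per-configuration aggregation and pairing. The record layout and function names are hypothetical, and placing the smaller model on the x-axis and the larger on the y-axis is an assumption.

```python
from collections import defaultdict
from statistics import mean


def config_means(records):
    """Mean score per (dataset, m) configuration.

    records: iterable of (dataset, m, score) tuples, one per evaluated sample
    (hypothetical layout; in the figure each configuration has s = 250 samples).
    """
    groups = defaultdict(list)
    for dataset, m, score in records:
        groups[(dataset, m)].append(score)
    return {cfg: mean(scores) for cfg, scores in groups.items()}


def paired_points(small_records, large_records):
    """(x, y) = (smaller-model mean, larger-model mean) for configurations both models share.

    On a scatter plot, points above the dashed y = x line are configurations
    where the larger model scored higher on average.
    """
    small, large = config_means(small_records), config_means(large_records)
    return {cfg: (small[cfg], large[cfg]) for cfg in sorted(small.keys() & large.keys())}
```

A point's vertical distance from the dashed line is the difference in mean score for that configuration; configurations evaluated for only one model are dropped from the comparison.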

Extended Data Fig. 6 Example: dialogue.

Example of the doctor–patient dialogue summarization task, including ‘assessment and plan’ sections generated by both a medical expert and the best model.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Van Veen, D., Van Uden, C., Blankemeier, L. et al. Adapted large language models can outperform medical experts in clinical text summarization. Nat Med 30, 1134–1142 (2024). https://doi.org/10.1038/s41591-024-02855-5

DOI: https://doi.org/10.1038/s41591-024-02855-5
