Abstract

Large-scale social networks are thought to contribute to polarization by amplifying people’s biases. However, the complexity of these technologies makes it difficult to identify the mechanisms responsible and evaluate mitigation strategies. Here we show under controlled laboratory conditions that transmission through social networks amplifies motivational biases on a simple artificial decision-making task. Participants in a large behavioural experiment showed increased rates of biased decision-making when part of a social network relative to asocial participants in 40 independently evolving populations. Drawing on ideas from Bayesian statistics, we identify a simple adjustment to content-selection algorithms that is predicted to mitigate bias amplification by generating samples of perspectives from within an individual’s network that are more representative of the wider population. In two large experiments, this strategy was effective at reducing bias amplification while maintaining the benefits of information sharing. Simulations show that this algorithm can also be effective in more complex networks.


Data availability

Experiment and simulation data for this study are available through the Open Science Framework (OSF) at https://doi.org/10.17605/OSF.IO/YTH5R.

Code availability

Code for all experiments, data analyses and simulations is available at https://doi.org/10.17605/OSF.IO/YTH5R, which contains an archived version of a GitHub repository holding all of the experiment code. All three experiments were built using Dallinger (Experiment 1: 5.1.0; Experiment 2: custom fork; Experiment 3: 9.0.0). The resampling algorithm in Experiments 2 and 3 was implemented in Python (3.9) using NumPyro (0.7.2). The resampling algorithm for the power analyses and the psychometric models of Experiment 1 were implemented in R using RStan (2.21.3). The predictions shown in Extended Data Fig. 1 were generated using MATLAB. Mixed-effects models were implemented in R (4.1.2) using lme4 (1.1.28). Network simulations were run in Python (3.10.4) using the NetworkX library (3.1).

References

Lerman, K. & Ghosh, R. Information contagion: an empirical study of the spread of news on Digg and Twitter social networks. In Fourth International AAAI Conference on Weblogs and Social Media (AAAI Press, 2010).

Bakshy, E., Rosenn, I., Marlow, C. & Adamic, L. The role of social networks in information diffusion. In Proceedings of the 21st international conference on World Wide Web 519–528 (ACM, 2012).

Lerman, K. Social information processing in news aggregation. IEEE Internet Comput. 11, 16–28 (2007).

Hermida, A. in The SAGE Handbook of Digital Journalism (eds Witschge, T. et al.) 81–94 (SAGE Publications, 2016).

Gainous, J. & Wagner, K. M. Tweeting to Power: The Social Media Revolution in American Politics (Oxford Univ. Press, 2013).

Burns, K. S. Celeb 2.0: How Social Media Foster Our Fascination with Popular Culture (ABC-CLIO, 2009).

Bakshy, E., Messing, S. & Adamic, L. A. Exposure to ideologically diverse news and opinion on Facebook. Science 348, 1130–1132 (2015).

Conover, M. et al. Political polarization on Twitter. Proc. International AAAI Conference on Web and Social Media 5, 89–96 (2011).

Pariser, E. The Filter Bubble: How the New Personalized Web Is Changing What We Read and How We Think (Penguin, 2011).

Levy, R. Social media, news consumption, and polarization: evidence from a field experiment. Am. Econ. Rev. 111, 831–870 (2021).

Cinelli, M., De Francisci Morales, G., Galeazzi, A., Quattrociocchi, W. & Starnini, M. The echo chamber effect on social media. Proc. Natl Acad. Sci. USA 118, e2023301118 (2021).

Shin, J. & Thorson, K. Partisan selective sharing: the biased diffusion of fact-checking messages on social media. J. Commun. 67, 233–255 (2017).

Settle, J. E. Frenemies: How Social Media Polarizes America (Cambridge Univ. Press, 2018).

Allcott, H., Gentzkow, M. & Yu, C. Trends in the diffusion of misinformation on social media. Res. Politics 6, 2053168019848554 (2019).

Allcott, H. & Gentzkow, M. Social media and fake news in the 2016 election. J. Econ. Perspect. 31, 211–236 (2017).

Tucker, J. A. et al. Social media, political polarization, and political disinformation: a review of the scientific literature. SSRN Electron. J. https://doi.org/10.2139/ssrn.3144139 (2018).

Yarchi, M., Baden, C. & Kligler-Vilenchik, N. Political polarization on the digital sphere: a cross-platform, over-time analysis of interactional, positional, and affective polarization on social media. Polit. Commun. 38, 98–139 (2021).

Brady, W. J., McLoughlin, K., Doan, T. N. & Crockett, M. J. How social learning amplifies moral outrage expression in online social networks. Sci. Adv. 7, eabe5641 (2021).

Eom, Y.-H. & Jo, H.-H. Generalized friendship paradox in complex networks: the case of scientific collaboration. Sci. Rep. 4, 1–6 (2014).

Alipourfard, N., Nettasinghe, B., Abeliuk, A., Krishnamurthy, V. & Lerman, K. Friendship paradox biases perceptions in directed networks. Nat. Commun. 11, 707 (2020).

Jackson, M. O. The friendship paradox and systematic biases in perceptions and social norms. J. Polit. Econ. 127, 777–818 (2019).

Balkin, J. M. How to regulate (and not regulate) social media. Knight Institute Occasional Paper Series https://knightcolumbia.org/content/how-to-regulate-and-not-regulate-social-media (2020).

Cusumano, M., Gawer, A. & Yoffie, D. Social media companies should self-regulate. Now. Harvard Business Review https://hbr.org/2021/01/social-media-companies-should-self-regulate-now (2021).

Lazer, D. M. et al. The science of fake news. Science 359, 1094–1096 (2018).

Bail, C. et al. Social-media reform is flying blind. Nature 603, 766 (2022).

Mason, W. & Watts, D. J. Collaborative learning in networks. Proc. Natl Acad. Sci. USA 109, 764–769 (2012).

Becker, J., Brackbill, D. & Centola, D. Network dynamics of social influence in the wisdom of crowds. Proc. Natl Acad. Sci. USA 114, E5070–E5076 (2017).

Jayles, B. et al. How social information can improve estimation accuracy in human groups. Proc. Natl Acad. Sci. USA 114, 12620–12625 (2017).

Rendell, L. et al. Why copy others? Insights from the social learning strategies tournament. Science 328, 208–213 (2010).

Smaldino, P. E. & Richerson, P. J. Human cumulative cultural evolution as a form of distributed computation. In Handbook of Human Computation (ed. Michelucci, P.) 979–992 (Springer, 2013).

Moussaïd, M., Brighton, H. & Gaissmaier, W. The amplification of risk in experimental diffusion chains. Proc. Natl Acad. Sci. USA 112, 5631–5636 (2015).

Luo, M., Hancock, J. T. & Markowitz, D. M. Credibility perceptions and detection accuracy of fake news headlines on social media: effects of truth-bias and endorsement cues. Commun. Res. 49, 171–195 (2022).

Kahneman, D., Slovic, P. & Tversky, A. Judgment under Uncertainty: Heuristics and Biases (Cambridge Univ. Press, 1982).

Hastorf, A. H. & Cantril, H. They saw a game; a case study. J. Abnorm. Soc. Psychol. 49, 129–134 (1954).

Dunning, D. & Balcetis, E. Wishful seeing: how preferences shape visual perception. Curr. Dir. Psychol. Sci. 22, 33–37 (2013).

Leong, Y. C., Hughes, B. L., Wang, Y. & Zaki, J. Neurocomputational mechanisms underlying motivated seeing. Nat. Hum. Behav. https://doi.org/10.1038/s41562-019-0637-z (2019).

Bruner, J. S. & Goodman, C. C. Value and need as organizing factors in perception. J. Abnorm. Soc. Psychol. 42, 33–44 (1947).

Balcetis, E. & Dunning, D. See what you want to see: motivational influences on visual perception. J. Pers. Soc. Psychol. 91, 612–625 (2006).

Griffiths, T. L. & Kalish, M. L. Language evolution by iterated learning with Bayesian agents. Cogn. Sci. 31, 441–480 (2007).

Rendell, L., Fogarty, L. & Laland, K. N. Rogers’ paradox recast and resolved: population structure and the evolution of social learning strategies. Evolution 64, 534–548 (2010).

Mesoudi, A. & Whiten, A. The multiple roles of cultural transmission experiments in understanding human cultural evolution. Philos. Trans. R. Soc. B 363, 3489–3501 (2008).

Miton, H. & Charbonneau, M. Cumulative culture in the laboratory: methodological and theoretical challenges. Proc. R. Soc. B 285, 20180677 (2018).

Boyd, R. & Richerson, P. J. Culture and the Evolutionary Process (Univ. Chicago Press, 1988).

Henrich, J. The Secret of Our Success: How Culture is Driving Human Evolution, Domesticating Our Species, and Making Us Smarter (Princeton Univ. Press, 2016).

Laland, K. Darwin’s Unfinished Symphony (Princeton Univ. Press, 2017).

Hardy, M. D., Krafft, P. M., Thompson, B. & Griffiths, T. L. Overcoming individual limitations through distributed computation: rational information accumulation in multigenerational populations. Top. Cogn. Sci. 14, 550–573 (2022).

Thompson, B., Van Opheusden, B., Sumers, T. & Griffiths, T. Complex cognitive algorithms preserved by selective social learning in experimental populations. Science 376, 95–98 (2022).

Olsson, A., Knapska, E. & Lindström, B. The neural and computational systems of social learning. Nat. Rev. Neurosci. 21, 197–212 (2020).

Acemoglu, D., Dahleh, M. A., Lobel, I. & Ozdaglar, A. Bayesian learning in social networks. Rev. Econ. Stud. 78, 1201–1236 (2011).

Liu, R. & Xu, F. Learning about others and learning from others: Bayesian probabilistic models of intuitive psychology and social learning. Adv. Child Dev. Behav. 63, 309–343 (2022).

Embretson, S. E. & Reise, S. P. Item Response Theory for Psychologists (Psychology Press, 2013).

Balietti, S., Getoor, L., Goldstein, D. G. & Watts, D. J. Reducing opinion polarization: effects of exposure to similar people with differing political views. Proc. Natl Acad. Sci. USA 118, e2112552118 (2021).

Guilbeault, D., Becker, J. & Centola, D. Social learning and partisan bias in the interpretation of climate trends. Proc. Natl Acad. Sci. USA 115, 9714–9719 (2018).

Bail, C. A. et al. Exposure to opposing views on social media can increase political polarization. Proc. Natl Acad. Sci. USA 115, 9216–9221 (2018).

Tokdar, S. T. & Kass, R. E. Importance sampling: a review. Wiley Interdisc. Rev. Comput. Stat. 2, 54–60 (2010).

Elvira, V., Martino, L., Luengo, D. & Bugallo, M. F. Generalized multiple importance sampling. Stat. Sci. 34, 129–155 (2019).

Mitchell, A., Gottfried, J., Kiley, J. & Matsa, K. E. Political polarization & media habits. Pew Research Center’s Journalism Project https://policycommons.net/artifacts/619536/political-polarization-media-habits/1600676 (2014).

Centola, D. & Macy, M. Complex contagions and the weakness of long ties. Am. J. Sociol. 113, 702–734 (2007).

Granovetter, M. S. The strength of weak ties. Am. J. Sociol. 78, 1360–1380 (1973).

Watts, D. J. Small Worlds: The Dynamics of Networks between Order and Randomness (Princeton Univ. Press, 2004).

Watts, D. J. & Strogatz, S. H. Collective dynamics of ‘small-world’ networks. Nature 393, 440–442 (1998).

Centola, D., Eguíluz, V. M. & Macy, M. W. Cascade dynamics of complex propagation. Physica A 374, 449–456 (2007).

Watts, D. J. The “new” science of networks. Annu. Rev. Sociol. 30, 243–270 (2004).

Feld, S. L. Why your friends have more friends than you do. Am. J. Sociol. 96, 1464–1477 (1991).

Mislove, A., Marcon, M., Gummadi, K. P., Druschel, P. & Bhattacharjee, B. Measurement and analysis of online social networks. In Proc. of the 7th ACM SIGCOMM Conference on Internet Measurement 29–42 (ACM, 2007).

Kumar, R., Novak, J. & Tomkins, A. Structure and evolution of online social networks. In Proc. of the 12th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 611–617 (ACM, 2006).

Dunbar, R. I., Arnaboldi, V., Conti, M. & Passarella, A. The structure of online social networks mirrors those in the offline world. Soc. Netw. 43, 39–47 (2015).

Whalen, A., Griffiths, T. L. & Buchsbaum, D. Sensitivity to shared information in social learning. Cogn. Sci. 42, 168–187 (2018).

Molavi, P., Tahbaz-Salehi, A. & Jadbabaie, A. Foundations of non-Bayesian social learning. Columbia Business School https://ssrn.com/abstract=2683607 (2017).

DeVito, M. A. From editors to algorithms: a values-based approach to understanding story selection in the Facebook news feed. Digit. Journal. 5, 753–773 (2017).

Lazer, D. The rise of the social algorithm. Science 348, 1090–1091 (2015).

Walter, N., Cohen, J., Holbert, R. L. & Morag, Y. Fact-checking: a meta-analysis of what works and for whom. Polit. Commun. 37, 350–375 (2020).

Guess, A. M. et al. A digital media literacy intervention increases discernment between mainstream and false news in the United States and India. Proc. Natl Acad. Sci. USA 117, 15536–15545 (2020).

Grimmelmann, J. The virtues of moderation. Yale J. Law Technol. 17, 42–109 (2015).

Arganda, S., Pérez-Escudero, A. & de Polavieja, G. G. A common rule for decision making in animal collectives across species. Proc. Natl Acad. Sci. USA 109, 20508–20513 (2012).

Hoffman, M. D. & Gelman, A. The no-U-turn sampler: adaptively setting path lengths in Hamiltonian Monte Carlo. J. Mach. Learn. Res. 15, 1593–1623 (2014).

Chmielewski, M. & Kucker, S. C. An MTurk crisis? Shifts in data quality and the impact on study results. Soc. Psychol. Personal. Sci. 11, 464–473 (2020).

Hagberg, A., Swart, P. & Schult, D. Exploring Network Structure, Dynamics, and Function using NetworkX (Los Alamos National Lab, 2008).

Acknowledgements

This work was made possible with funding T.L.G. received from the NOMIS Foundation. The funders had no role in study design, data collection and analysis, decision to publish or preparation of the paper.

Author information


Contributions

All authors contributed to designing the experiment, developing the mitigation algorithm, and writing the paper. M.D.H. and B.D.T. implemented and ran the experiments and simulations. M.D.H. analysed the results.


Ethics declarations

Competing interests

T.L.G. has previously received research funding from Facebook/Meta, a social media company. This funding was for a separate research project and has not supported this research. The authors declare no other competing interests.

Peer review

Peer review information

Nature Human Behaviour thanks the anonymous reviewers for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

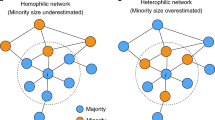

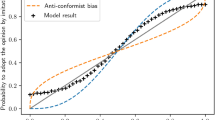

Extended Data Fig. 1 Stationary distributions for social networks.

(a) Stationary distributions of the proportion of people endorsing green as a function of the bias β towards green, for different levels of sensitivity to social information α. The bias produces a stationary distribution strongly skewed towards green, and the skew grows as α increases. (b) Average proportion of green judgements under this stationary distribution, compared with the bias of a single individual. The social network amplifies individual biases. Because the bias β and the stimulus information γd enter the equation for judgements in the same way, the model predicts that participating in a social network will exaggerate the effects of both: people will become more biased, but also more accurate. This analysis could equivalently be stated in terms of blue bias and blue judgements.
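The amplification in panel (b) can be reproduced qualitatively with a small Markov chain. The sketch below is illustrative only: the exact judgement equation is not given in this section, so it assumes a hypothetical logistic model in which each member of the next wave says green with probability logistic(β + γd + α(2k/n − 1)), where k of the n previous judgements were green, and it finds the stationary distribution over k by power iteration. The function names are hypothetical.

```python
import math


def logistic(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def binom_pmf(j: int, n: int, p: float) -> float:
    return math.comb(n, j) * p**j * (1.0 - p) ** (n - j)


def stationary_distribution(n: int, beta: float, alpha: float,
                            gamma_d: float = 0.0, iters: int = 2000) -> list[float]:
    """Stationary distribution over k, the number of green judgements in a wave of n.

    Hypothetical model (an assumption, not the paper's equation): everyone in the
    next wave independently says green with probability
    logistic(beta + gamma_d + alpha * (2k/n - 1)), where k is the number of green
    judgements observed in the previous wave.
    """
    # trans[k][j] = P(j green judgements next wave | k green judgements this wave)
    trans = []
    for k in range(n + 1):
        p = logistic(beta + gamma_d + alpha * (2.0 * k / n - 1.0))
        trans.append([binom_pmf(j, n, p) for j in range(n + 1)])
    # Power iteration pi <- pi @ trans from a uniform start; every entry of trans
    # is positive, so the chain is ergodic and the iteration converges.
    pi = [1.0 / (n + 1)] * (n + 1)
    for _ in range(iters):
        pi = [sum(pi[k] * trans[k][j] for k in range(n + 1)) for j in range(n + 1)]
    return pi


def mean_green_proportion(pi: list[float]) -> float:
    """Expected proportion of green judgements under distribution pi over k."""
    n = len(pi) - 1
    return sum((k / n) * w for k, w in enumerate(pi))


beta = 0.5
print("individual:", round(logistic(beta), 3))
for alpha in (0.0, 1.0, 2.0):
    pi = stationary_distribution(n=8, beta=beta, alpha=alpha)
    print("alpha =", alpha, "network:", round(mean_green_proportion(pi), 3))
```

With α = 0 the stationary mean equals the individual bias logistic(β); with α > 0 and β > 0 the social feedback pushes it higher, the qualitative pattern in panel (b). Because β and γd are simply added inside the logistic in this sketch, increasing γd is amplified in exactly the same way, matching the caption's point that networks should exaggerate accuracy as well as bias.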

Extended Data Fig. 2 Experiment 1 yoking structure and marked colour design.

All participants in the first wave were assigned to an asocial condition. In the second wave, social participants observed the judgements made by first-wave asocial participants in the same motivated condition and network index (see Methods, Experiment 1). At each wave, each network consisted of four participants with a marked colour of blue and four with a marked colour of green. A participant’s marked colour determined the colour of the dots in the stimulus (dot sizes and positions were fixed within a wave and network). To match this colour swapping, social information was also presented in terms of the participant’s marked colour. That is, if 5 of 8 people chose their marked colour in the previous wave, participants with a marked colour of green were told that 5 of 8 people chose green, and participants with a marked colour of blue were told that 5 of 8 people chose blue. Marked colour corresponded to motivated colour for participants in motivated conditions. Participants in neutral conditions were assigned marked colours using an identical process but were not informed of their marked colour.
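The colour-swapping scheme can be sketched as two small functions: one colours a shared dot layout for a given marked colour, and one reports the previous wave's judgements in terms of the viewer's marked colour. The `Judgement` and `Dot` types, the `is_marked` flag and the function names are hypothetical illustrations, not taken from the experiment code.

```python
from dataclasses import dataclass

OTHER = {"blue": "green", "green": "blue"}


@dataclass
class Judgement:
    marked_colour: str   # "blue" or "green"
    chose_marked: bool   # True if the participant chose their own marked colour


@dataclass
class Dot:
    x: float
    y: float
    size: float
    is_marked: bool      # hypothetical flag: drawn in the viewer's marked colour


def render_stimulus(marked_colour: str, dots: list[Dot]) -> list[tuple[float, float, float, str]]:
    """Colour the shared dot layout for one participant; positions and sizes are unchanged."""
    return [(d.x, d.y, d.size, marked_colour if d.is_marked else OTHER[marked_colour])
            for d in dots]


def social_message(viewer_marked_colour: str, previous_wave: list[Judgement]) -> str:
    """Report the previous wave's judgements in terms of the viewer's marked colour."""
    k = sum(j.chose_marked for j in previous_wave)
    return f"{k} of {len(previous_wave)} people chose {viewer_marked_colour}"


# Previous wave: 4 green-marked and 4 blue-marked participants; 5 of 8 chose their marked colour.
wave = ([Judgement("green", c) for c in (True, True, True, False)]
        + [Judgement("blue", c) for c in (True, True, False, False)])
print(social_message("green", wave))  # 5 of 8 people chose green
print(social_message("blue", wave))   # 5 of 8 people chose blue
```

Recording each judgement only as "chose marked colour or not" is what lets the same previous-wave tally be shown to both groups with the colour names swapped.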

Extended Data Fig. 3 Experiment 2 participant observations and estimated biases.

The resampling algorithm increased consensus between networks with induced biases towards green and blue. (a) Each bar shows the proportion of green judgements from the previous wave that each participant (n = 784 for both conditions) observed over all 16 trials of the experiment. Bars are arranged in descending order, and bar colour corresponds to the participant’s motivated colour. (b) Estimated participant biases and transmission rates in the Social/Resampling condition. In our resampling algorithm, each participant’s judgement could be propagated multiple times to a participant at the next wave. Rather than only propagating judgements made by those with low estimated bias, the algorithm transmitted each participant’s judgements at similar rates. Points show the estimated green biases of participants in the Asocial/Motivated (wave 1) and Social/Motivated (waves 2–7) condition and the number of times their judgements were transmitted.
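One way to read the resampling in panel (b) is as sampling importance resampling: weight each judgement by how likely an unbiased participant would have been to make it, relative to how likely it was given the participant's estimated bias, then draw the judgements to transmit in proportion to those weights. The sketch below assumes a hypothetical logistic IRT-style model, P(green) = logistic(θ̂ + b), with an estimated green bias θ̂ and a per-trial evidence term b; the published algorithm (implemented in NumPyro) may differ in its model and weighting details, and the function names are hypothetical.

```python
import math
import random


def logistic(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def importance_weight(chose_green: bool, theta_hat: float, b: float) -> float:
    """Target/proposal likelihood ratio for one judgement.

    Proposal: the participant's fitted model, P(green) = logistic(theta_hat + b).
    Target: the same judgement from an unbiased participant (theta = 0).
    """
    p_biased = logistic(theta_hat + b)
    p_unbiased = logistic(b)
    if chose_green:
        return p_unbiased / p_biased
    return (1.0 - p_unbiased) / (1.0 - p_biased)


def resample_judgements(judgements, theta_hat, b, k, rng):
    """Draw k judgements with replacement, with probability proportional to their weights.

    judgements: list of (participant_id, chose_green) pairs for one trial.
    theta_hat:  dict mapping participant_id -> estimated green bias.
    """
    weights = [importance_weight(g, theta_hat[pid], b) for pid, g in judgements]
    return rng.choices(judgements, weights=weights, k=k)


rng = random.Random(1)
theta_hat = {0: 1.5, 1: 1.5, 2: 1.5, 3: 0.0}           # three green-biased, one unbiased
trial = [(0, True), (1, True), (2, False), (3, False)]  # (participant_id, chose_green)
for pid, g in trial:
    print(pid, g, round(importance_weight(g, theta_hat[pid], b=0.0), 2))
print(resample_judgements(trial, theta_hat, b=0.0, k=4, rng=rng))
```

A biased participant's judgements are not simply discarded: judgements that go against their estimated bias receive weights above 1. Because an importance weight has expectation 1 under the distribution that generated the judgement, each participant's judgements are selected equally often in expectation when the fitted model is well calibrated, which is consistent with the similar transmission rates in panel (b).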

Extended Data Fig. 4 Simulation design.

For the network simulations and power analyses, we alternated between simulating participants’ judgements and the effects of our resampling procedure. We first fit the parameters \(\tilde{\Phi}\) of the oracle models to Experiment 1 data \(X_{e1}\) using Markov chain Monte Carlo. One model was fit to Asocial/Motivated participants, and one to Social/Motivated participants. For the power analyses, at each wave t, we used either the asocial (wave 1) or social (waves 2–8) oracle to sample participant biases \(\theta_t\) and simulate judgements \(X_t\) (this process is illustrated here). By contrast, for the network simulations, we sampled a single set of participant biases \(\theta\) using the social oracle model and used these parameters to simulate judgements \(X_t\) at each iteration (there was no population turnover in our network simulations). To simulate our resampling procedure, we then fit IRT parameters \(\hat{\theta}_t\) to the simulated judgements at each wave. As in Experiments 1 and 2, these IRT models did not have access to the ground truth or participants’ true biases. We used our fitted IRT model to determine the importance weights \(w_t\) for each judgement and to resample a set of judgements \(\tilde{X}_t\) to propagate to the next wave or iteration. For each simulation, we repeated this process for 8 waves (the same fitted oracle model was used in all simulations).
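The alternation described above (simulate judgements, estimate biases, weight, resample) can be sketched as a single loop. This is a toy version under stated assumptions: a hypothetical "oracle" that draws biases around +1 for a green-motivated network and −1 for a blue-motivated one, a hypothetical social term α(proportion green shown − 0.5), and, in place of the MCMC-fitted IRT model, a crude bias estimate (each participant's logit green rate minus the pooled rate across both networks) that, like the IRT models, never sees the ground truth or the true biases. It follows the power-analysis variant, drawing new biases at every wave; all names are hypothetical.

```python
import math
import random


def logistic(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def logit(p: float, eps: float = 1e-3) -> float:
    p = min(max(p, eps), 1.0 - eps)  # clip so all-green or all-blue rows stay finite
    return math.log(p / (1.0 - p))


def estimate_biases(X):
    """Crude stand-in for the IRT fit; uses no ground truth or true biases.

    Each participant's bias is their logit green rate minus the pooled logit
    green rate across all networks. Returns (estimates, pooled logit).
    """
    pooled = logit(sum(map(sum, X)) / sum(map(len, X)))
    return [logit(sum(row) / len(row)) - pooled for row in X], pooled


def weight(chose_green, theta_hat, c):
    """Importance weight: unbiased (theta = 0) over biased likelihood of one judgement."""
    p_b, p_u = logistic(theta_hat + c), logistic(c)
    return p_u / p_b if chose_green else (1.0 - p_u) / (1.0 - p_b)


def simulate(resample=True, n_per_net=8, n_trials=16, n_waves=8, alpha=2.0, seed=0):
    """Return the proportion of bias-aligned judgements at each wave."""
    rng = random.Random(seed)
    evidence = [rng.gauss(0.0, 1.0) for _ in range(n_trials)]  # hypothetical trial evidence
    nets = {"green": 1.0, "blue": -1.0}   # hypothetical mean bias of each network
    shown = {net: None for net in nets}   # judgements propagated from the previous wave
    history = []
    for _ in range(n_waves):
        # 1. Sample biases from the hypothetical oracle and simulate judgements X_t.
        X, owner = [], []
        for net, mu in nets.items():
            for _ in range(n_per_net):
                theta = rng.gauss(mu, 0.5)
                row = []
                for j, b in enumerate(evidence):
                    seen = shown[net]
                    s = 0.0 if seen is None else alpha * (sum(seen[j]) / len(seen[j]) - 0.5)
                    row.append(rng.random() < logistic(theta + b + s))
                X.append(row)
                owner.append(net)
        aligned = sum(x == (o == "green") for row, o in zip(X, owner) for x in row)
        history.append(aligned / sum(map(len, X)))
        # 2. Estimate biases from X_t alone.
        theta_hat, c = estimate_biases(X)
        # 3. Choose what each network sees next: copied (plain) or importance-resampled.
        for net in nets:
            idx = [i for i, o in enumerate(owner) if o == net]
            shown[net] = []
            for j in range(n_trials):
                pool = [X[i][j] for i in idx]
                if resample:
                    w = [weight(X[i][j], theta_hat[i], c) for i in idx]
                    pool = rng.choices(pool, weights=w, k=len(pool))
                shown[net].append(pool)
    return history


plain = simulate(resample=False)
resampled = simulate(resample=True)
for t, (p, r) in enumerate(zip(plain, resampled), start=1):
    print(f"wave {t}: plain {p:.3f}  resampled {r:.3f}")
```

Because both histories share a seed and the first wave is asocial, `plain[0]` and `resampled[0]` are identical; later waves diverge as resampling changes what each network observes. Pooling the bias estimate across oppositely motivated networks is what lets a fit without ground truth separate a shared network bias from the stimulus.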

Extended Data Fig. 5 Network simulation results.

Plots show the proportion of (a) bias-aligned and (b) correct judgements by iteration. Simulated participants in the first iteration made their judgements asocially. For each set of simulations, two histories were sampled starting at the second iteration: one where participants viewed the judgements made by their parents in the previous iteration (Social/Neutral), and one where these judgements were resampled using our algorithm (Social/Resampling). The same constructed networks were used for both histories, with one populated by simulated participants “paid” for blue dots and one by simulated participants “paid” for green. Error bars show the standard errors of the proportions (n = 204,800, 409,600 and 819,200 for each point in the networks with 64, 128 and 256 participants, respectively).
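The caption does not state how the standard errors were computed; a natural assumption is the binomial standard error of a proportion, \(\sqrt{p(1-p)/n}\). The sketch below evaluates it at p = 0.5, where it is largest, for the per-point n values in the caption, and also shows that each n is exactly 3,200 times the network size. The function name is hypothetical.

```python
import math


def proportion_se(p: float, n: int) -> float:
    """Binomial standard error of a proportion p estimated from n judgements."""
    return math.sqrt(p * (1.0 - p) / n)


# n per point from the caption; p = 0.5 gives the largest possible standard error.
for size, n in [(64, 204_800), (128, 409_600), (256, 819_200)]:
    print(f"{size} participants: n/size = {n // size}, max SE = {proportion_se(0.5, n):.5f}")
```

Even for the 64-participant networks the error bars are at most about 0.0011. This formula treats every judgement as independent; judgements from the same simulated participant or network are likely correlated, so it may understate the true uncertainty.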

Supplementary information

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hardy, M.D., Thompson, B.D., Krafft, P.M. et al. Resampling reduces bias amplification in experimental social networks. Nat Hum Behav 7, 2084–2098 (2023). https://doi.org/10.1038/s41562-023-01715-5


DOI: https://doi.org/10.1038/s41562-023-01715-5