Abstract

The ability of the brain to extract patterns from the environment and predict future events, known as statistical learning, has been proposed to interact in a competitive manner with prefrontal lobe-related networks and their characteristic cognitive or executive functions. However, it remains unclear whether these cognitive functions also possess a competitive relationship with implicit statistical learning across individuals and at the level of latent executive function components. In order to address this currently unknown aspect, we investigated, in two independent experiments (NStudy1 = 186, NStudy2 = 157), the relationship between implicit statistical learning, measured by the Alternating Serial Reaction Time task, and executive functions, measured by multiple neuropsychological tests. In both studies, a modest, but consistent negative correlation between implicit statistical learning and most executive function measures was observed. Factor analysis further revealed that a factor representing verbal fluency and complex working memory seemed to drive these negative correlations. Thus, the antagonistic relationship between implicit statistical learning and executive functions might specifically be mediated by the updating component of executive functions or/and long-term memory access.

Similar content being viewed by others

Introduction

Statistical learning (SL) is a fundamental function of human cognition that allows the implicit extraction of probabilistic regularities from the environment, even without intention, feedback, or reward, and is crucial for predictive processing1,2,3. SL contributes to the acquisition of language4, motor5,6, musical7,8,9 and social skills10,11,12, as well as habits13,14,15. SL can occur incidentally, without awareness and the intention to learn16,17,18,19,20,21. SL does not function in an isolated manner, but in either cooperative or competitive interactions with other cognitive processes2,22. Here, we aim to investigate the interaction between implicit SL and executive functions, and to determine which aspects of executive functions show a positive (cooperative) and which a negative (competitive) relationship with SL.

The competition hypothesis was coined in the framework of interactive memory systems22,23,24,25. According to this framework, learning can rely on either the basal ganglia-based procedural system, or the medial temporal lobe-based declarative system. Larger reliance on one implies a smaller reliance on the other. Initial evidence for this hypothesis has come both from animal26,27 and human neuroimaging studies28,29. Later, studies rooted in computational neuroscience and reinforcement learning also shed more light on the role of models in guiding learning in these different systems30. It was proposed that the distinction between the declarative and procedural systems might map onto the distinction between model-based and model-free learning algorithms31,32. Model-based learning processes build and make use of a model of the environment to flexibly guide choice, but are more computationally demanding. In contrast, model-free learning processes are computationally less expensive as they rely only on recent outcomes; however, this simplicity allows for less flexibility and sensitivity to contingency changes. The brain is assumed to arbitrate between these two learning systems in a dynamic fashion both during the completion of individual tasks29,33,34, and also throughout the lifespan35,36,37. Multiple possible arbitration mechanisms have been described, including direct neuroanatomical connections between basal ganglia and medial temporal cortex, differential effects of neuromodulators, and indirect cross-inhibition via prefrontal control processes24. The present study focuses on this last mechanism.

The top-down cognitive processes necessary for the flexible cognitive control of behaviour are often collectively referred to as executive functions (EF)38,39,40. These processes are usually required when we encounter novel, unusual, or constraining situations. They include cognitive functions such as attentional control, cognitive flexibility, cognitive inhibition and working memory updating38,41. Studies exploring the neural basis of EF consistently show that these cognitive processes rely heavily on the prefrontal cortex (PFC)42,43,44,45. Moreover, individual differences in EF ability have been associated with individual differences in local prefrontal neural activity46, as well as functional connectivity between PFC and other brain regions47. Here, we focus on the question of whether and how individual differences in executive functions might affect SL.

Prefrontal EF has been implicated in arbitrating between learning systems by multiple studies. Lee et al.34, for example, showed that lateral PFC and frontopolar cortex seem to encode the reliability associated with both model-based and model-free learning systems, as well as the output of the arbitration process. Importantly, their functional connectivity results also suggested that the arbitration mechanism might work primarily by suppressing model-free learning, when it deems model-based learning to be more beneficial. An intriguing possibility is that the procedural, model-free system is the ‘default’ learner that is overridden by PFC control involvement. This hypothesis seems to be in line with a series of results that show a negative relationship between SL and prefrontal lobe control processes at both the behavioural and the neural level. For instance, disruption of PFC function by transcranial magnetic stimulation48,49, by hypnosis50, or by cognitive fatigue51 and engagement of prefrontal lobe resources using dual task conditions52,53 all have been reported to increase SL performance. Moreover, multiple neuroimaging studies have also revealed that SL seems to be associated with generally decreased functional connectivity both within PFC circuits and between the PFC and other networks54,55. Our goal is to test whether weaker PFC-dependent executive functions could lead to better SL across individuals. That is, do people with relatively weaker executive functions have relatively better SL ability?

The little interindividual differences research carried out so far has suggested that PFC-dependent cognitive functions might relate negatively to SL ability. For instance, higher EF ability has been found to be negatively related to SL ability across individuals56. However, several studies have indicated that working memory is independent from SL57. Furthermore, some recent studies have found positive associations between SL and EF ability58,59. Therefore, the relationship between SL and PFC-supported cognitive functions needs further examination in order to disentangle the still somewhat puzzling relation between the two neurocognitive mechanisms60. The relationship between implicit SL and PFC-supported cognitive functions has been empirically explored in two studies56,58; however, both studies had relatively low sample sizes (22 and 40, respectively) leading to low statistical power61, and inability to establish reliable correlations between the tasks62. As neither study included a replication sample, the robustness of their results is also currently unknown. Furthermore, they only studied the relationship between EF and SL at the task level, without considering the possibility that a pattern might instead emerge at the level of latent EF components, tapped into by multiple tasks. Such latent variable approaches might also lead to relatively higher reliability estimates of cognitive abilities, which is extremely important in inter-individual differences research63. However, the latent structure of EF abilities is itself a contentious issue, with a recent large-scale analysis failing to find measurement models that consistently showed a good fit64.

In this study, we aimed to investigate the relationship between EF and SL, using two large, independent samples acquired in two different studies, offering an internal replication and a far larger overall sample size than previous studies. Both studies measured implicit SL ability using the alternating serial reaction time task (ASRT) (Fig. 1), a valid and reliable task of implicit SL65,66, and EF ability using a wide variety of well-characterized neuropsychological tasks. Reasoning in terms of competitive neurocognitive systems, we hypothesized that a negative correlation would be found between EF ability and SL performance. However, due to the heterogeneous findings in the literature regarding SL – EF relationships and the structure of EF itself, we did not formulate strong hypotheses regarding specific tasks and latent EF components. Instead, we focused on assessing EF – SL relationships in a data-driven manner, at both the level of individual EF tasks, as well as the level of latent EF components, tapped into by multiple tasks assessing EF, extracted by exploratory factor analysis.

a In Study 1, the task stimuli consisted of yellow arrows that pointed in one of the four cardinal directions. A fixation cross was presented between each arrow. b In Study 2, task stimuli were the head of a dog that appeared in one out of four different positions. c Each stimuli position can be coded with a number. Here, we have 1 = left, 2 = up, 3 = down, 4 = right for the arrows in Study 1, and 1 = left, 2 = center-left, 3 = center-right, 4 = right of the screen for the dog’s head in Study 2. d The stimulus presentation followed an eight-element sequence, in which pattern (P) and random (R) stimuli alternated. The sequence was presented a total of 10 times per block. e Sixty-four different triplets (runs of three consecutive stimuli) could result from the sequence structure. Some of the triplets appear more often than others. High-probability triplets could end in pattern or random element whereas low-probability triplets always ended with a random element. High- and low-probability triplets are denoted in green and yellow, respectively.

Results

Descriptive statistics of the samples are presented in Table 1. Histograms showing the distributions of all variables are presented in Supplementary Fig. 2 and Supplementary Fig. 3, for Study 1 and 2, respectively.

Factor analyses of EF measures

Besides investigating the relationship between performance on individual EF tasks and implicit SL, we aimed to investigate the relationship between implicit SL and general EF ability that might be captured by shared variance on all tasks. To extract such a common EF measure from the individual tasks, we used Maximum Likelihood Exploratory Factor Analysis (ML EFA). We opted for EFA in order to find a set of latent constructs that capture our specific set of EF measures, while remaining a priori agnostic about the exact structure of these components. The ML approach allows the computation of various goodness of fit indices that are unavailable to principal factor approaches, such as RMSEA, and is the recommended factor extraction method by multiple authors67,68,69.

Study 1

We established the factorability of the data using 3 approaches. Firstly, the diagonals of the anti-image correlation matrix of the data were all over 0.5, which suggests good factorability. Secondly, the overall Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy was 0.59, which is somewhat below the cut-off value of 0.6 originally suggested by Kaiser 70, but above the cut-off of 0.5, suggested by other authors71. Finally, Bartlett’s test of sphericity was significant (χ2 (36) = 145.029, p < 0.001), suggesting adequate factorability. Based on this, the data were deemed appropriate for factor analysis.

To determine the number of factors to extract, we relied on parallel analysis, and goodness of fit indices. Parallel analysis suggested that 1 factor was extractable, based on the comparison of the eigenvalues with randomly generated data (Fig. 2a). Thus, the single factor solution was selected.

a In Study 1 and b in Study 2. The observed eigenvalues are indicated by red dots. These were compared to the distribution of eigenvalues obtained from simulated data, indicated by violin plots and boxplots. Stars indicate factors for which the observed eigenvalue is larger than the 95th percentile of the simulated distributions, and thus were deemed extractable.

This factor explained 16% of the variance. The χ2 test of the null hypothesis that one factor is sufficient was not significant (χ2 (27) = 36.87, p = 0.098), suggesting that the null can be accepted, meaning that our single factor was sufficient in capturing the full dimensionality of the data. Goodness of fit indices were also indicative of good fit (RMSEA = 0.044, 95% CI = [0.000, 0.077]; SRMR = 0.062). Factor loadings are presented in Table 2. Factor 1 had high loadings from the three fluency variables and from CSPAN, confirming the pattern of bivariate correlations (Fig. 5a). We calculated factor scores for each subject, which are used in the analyses below.

Study 2

We proceeded in the same manner for Study 2. We followed the same approach to establishing the factorability of data, as in the analysis of Study 1. The diagonals of the anti-image correlation matrix of the data were all over 0.5. The overall KMO measure of sampling adequacy was 0.60, meeting both more conservative and more liberal cut-off criteria. Finally, Bartlett’s test of sphericity was significant (χ2 (10) = 54.591, p < 0.001). Based on this, the data were deemed appropriate for factor analysis.

To determine the number of factors to extract, we again relied on parallel analysis. Parallel analysis suggested that 2 factors are extractable, based on the comparison of their eigenvalues with random data (Fig. 2b). Thus, the 2 factor solution was selected.

These two factors explained 19% and 14% of the variance, respectively. The total variance explained of the model was thus 34%, somewhat more than the explained variance of the Study 1 EFA model. The χ2 test of the null hypothesis that 2 factors are sufficient was not significant (χ2 (1) = 1.40, p = 0.236), suggesting that the null can be accepted, meaning that our 2 factors are sufficient in capturing the full dimensionality of the data. Goodness of fit indices were also indicative of good fit (RMSEA = 0.050, 95% CI = [0.000, 0.227]; SRMR = 0.020), although the RMSEA had a noticeably larger CI, compared to the Study 1 EFA model. Factor loadings are presented in Table 3. Factor 1 had high loadings from the 2 fluency variables and a somewhat weaker loading from DSPAN. Factor 2 had high positive loadings from the CSPAN, DSPAN and Corsi tasks. Thus, the factor structure reflected the dissociation between the fluency and the short-term memory measures.

Implicit SL trajectories and EF factor scores

We measured implicit SL ability by the ASRT task (see Methods for details). In this task, participants have to respond as fast and as accurately as possible to a series of visually presented stimuli. Each stimulus can appear on four different spatial locations, corresponding to four different response buttons. Importantly, there is a hidden underlying structure in which low-probability triplets (less predictable sequences of three successive stimuli), and high-probability (more predictable sequences of three successive stimuli) stimuli are interspersed. Performance differences between the low- and high-probability triplets indicate implicit SL, and overall performance improvements, irrespective of triplet category indicate general visuomotor skill learning and task proficiency.

Study 1

Block wise median reaction time was used as the outcome variable in a linear mixed model including Triplet Type (Factor: high- vs. low-probability), Block (1-25), and EF factor 1 scores, as well as all their higher order interactions as fixed effects, and subject-specific correlated intercepts and slopes for Block, as random effects (see Methods and Fig. 1). The Triplet Type factor indicates differences in implicit SL, while main effects and interactions without the Triplet Type factor are interpreted as differences in general skill learning. Learning trajectories are plotted in Fig. 3a, and full model results are presented in Supplementary Table 1.

The model resulted in a statistically significant main effect of Triplet Type (F(1,8924) = 83.53, p < 0.001), with faster reaction times for high-probability triplets than for low-probability triplets (b = −1.42, 95% CI = [-1.73, -1.12], high: 365 ms, 95% CI = [361,369]; low: 368 ms, 95% CI = [364,372]). This effect indicates that on average, subjects engaged in implicit SL. There was a statistically significant main effect of Block (F(1,183.97) = 40.73, p < 0.001), with decreasing overall reaction times throughout the task (b = −0.31, 95% CI = [-0.41, -0.12]). This effect indicates that on average subjects also engaged in general skill learning, i.e., they became faster overall as the task went on, irrespective of triplet probabilities. There was a statistically significant main effect of EF factor 1 (F(1,184.01) = 7.99, p = 0.004), indicating overall faster reaction times in participants with higher EF ability (b = −6.76, 95% CI = [-11.48, -2.04]). There was a statistically significant Triplet Type*Block interaction (F(1,8924.00) = 10.24, p = 0.001). This stemmed from increasing implicit SL with time (steeper decrease in RT for high-, than for low-probability triplets; high: b = −0.38, 95% CI = [-0.49, -0.28]; low: b = −0.24, 95% CI = [-0.35, -0.14]; high – low contrast p = 0.001).

Importantly, there was a Triplet Type*EF factor 1 interaction (F(1,8924) = 4.54, p = 0.031). EF factor 1 scores significantly negatively correlated with average implicit SL learning scores (Fig. 4a, Pearson’s r = −0.156, 95% CI = [-0.293, -0.012], p = 0.034, Spearman’s rho = −0.154, 95% CI = [-0.292, -0.011], p = 0.036). The Bayes factor for the one-sided alternative hypothesis that the two variables are negatively correlated was BF-0 = 1.683, meaning that the data are ~1.7 times more likely to occur under the alternative hypothesis, indicating anecdotal evidence in favour of it. Robustness checks of the Bayes factor to varying prior distribution width are included in the .jasp files in Supplementary Materials. The lack of a statistically significant Epoch*EF factor 1 interaction indicates that EF ability did not influence general skill learning. Whereas the lack of a statistically significant three-way interaction between Triplet Type*Epoch*EF factor 1 indicates that EF ability influenced overall implicit SL throughout the whole task and not the learning trajectory itself.

Study 2

Block wise median reaction time was used as the outcome variable in a linear mixed model including Triplet Type (Factor: high- vs. low-probability), Block (1–45), and EF factor 1 and 2 scores, as well as all their higher order interactions as fixed effects, and subject-specific correlated intercepts and slopes for Block, as random effects. Learning trajectories are plotted in Fig. 3b, and full model results are presented in Supplementary Table 2.

The model resulted in a statistically significant main effect of Triplet Type (F(1,13810.00) = 1289.09, p < 0.001), with faster reaction times for high-probability triplets than for low-probability triplets (b = −6.25, 95% CI = [-6.59, -5.91], high: 371 ms, 95% CI = [367,376]; low: 384 ms, 95% CI = [379,388]). This effect indicates that on average, subjects engaged in implicit SL. There was a statistically significant main effect of Block (F(1,154.00) = 662.76, p < 0.001), with decreasing overall reaction times throughout the task (b = −1.12, 95% CI = [-1.20, -1.03]). This effect indicates that on average subjects also engaged in general skill learning, i.e., they became faster overall as the task went on, irrespective of triplet probabilities. There was a statistically significant main effect of EF factor 2 scores (F(1,154.03) = 9.67, p = 0.002), indicating overall faster reaction times in participants with higher EF ability (b = −9.84, 95% CI = [-16.08, -3.59]). There was a statistically significant Triplet Type*Block interaction (F(1,13810.00) = 156.70, p < 0.001). This stemmed from increasing implicit SL with time (steeper decrease in RT for high-, than for low-probability triplets; high: b = −1.28, 95% CI = [-1.37, -1.19]; low: b = −0.95, 95% CI = [-1.04, -0.86]; high – low contrast p < 0.001). There was a statistically significant Block*EF factor 1 interaction (F(1,154.00) = 8.31, p = 0.005), with a significantly smaller decrease in RT at higher EF factor 1 scores (slope of Block at 25th percentile of EF 1: b = −1.20, 95% CI = [-1.30 -1.09]; slope of Block at 75th percentile of EF1: b = −1.05, 95% CI = [-1.14, -0.95]; contrast p = 0.005). This indicates that contrary to Study 1, EF ability did influence general skill learning to some degree in Study 2 and that high EF ability was associated with somewhat weaker general skill learning in this sample.

There was once again a Triplet Type*EF factor 1 interaction (F(1,13810.00) = 5.26, p = 0.022). EF factor 1 scores significantly negatively correlated with average implicit SL learning scores, and with a similar effect size, as in Study 1 (Fig. 4b, Pearson’s r = −0.170, 95% CI = [-0.319, -0.014], p = 0.033, Spearman’s rho = −0.150, 95% CI = [-0.300, 0.007], p = 0.060). The Bayes factor for the one-sided alternative hypothesis that the two variables are negatively correlated was BF-0 = 1.875, meaning that the data are ~1.9 times more likely to occur under the alternative hypothesis, indicating anecdotal evidence in favour of it.

EF factor 2 scores did not have a strong association with implicit SL, as reflected by the lack of significant Triplet Type*EF factor 2 or Triplet Type*Block*EF factor 2 interactions. EF factor 2 scores did not correlate significantly with average implicit SL learning scores (Factor 2: Pearson’s r = −0.034, 95% CI = [-0.190, 0.123], p = 0.672, Spearman’s rho = −0.023, 95% CI = [-0.179, 0.134], p = 0.771, BF-0 = 0.145). Robustness checks of the Bayes factor to varying prior distribution width are included in the .jasp files in Supplementary Materials. They also did not seem to influence general skill learning, as reflected in the lack of a significant Block*EF factor 2 interaction. The lack of a statistically significant three-way interaction between Triplet Type*Block*EF factor 1 indicates that, like in Study 1, EF ability influenced overall implicit SL throughout the whole task and not the learning trajectory itself, validating our use of whole task learning scores in the correlational analyses for Study 2 as well.

Correlations between implicit SL ability and individual EF tasks

Study 1

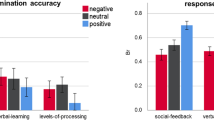

Bivariate Pearson’s correlations between individual EF tasks and average ASRT learning scores indicating implicit SL ability in Study 1 are presented in Fig. 5a. EF measures tended to be weakly or moderately positively correlated, the strongest relationships seemed to be between the fluency measures, and between the fluency measures and CSPAN.

a In Study 1 and b in Study 2. More negative and more positive correlations are indicated by red and blue backgrounds, respectively. The working memory and verbal fluency measures correlated moderately positively with each other, and weakly negatively with the ASRT task. P-values are not corrected for multiple comparisons. ***p < 0.001, **p < 0.01, *p < 0.05, +p < 0.10.

Implicit SL ability had generally negative correlations with individual EF measures, the strongest of these was with Action fluency (Pearson’s r = −0.165, 95% CI = [-0.302, -0.021], p = 0.025, Spearman’s rho = −0.162, 95% CI = [-0.299, -0.018], p = 0.027).

Study 2

Bivariate Pearson’s correlations between individual EF tasks and ASRT learning scores indicating implicit SL ability in Study 2 are presented in Fig. 5b. EF measures tended to be weakly or moderately positively correlated, again the fluency measures correlated with each other the strongest.

Implicit SL ability had generally negative correlations with individual EF measures, the strongest of these was with Semantic fluency (Pearson’s r = −0.167, 95% CI = [-0.315, -0.011], p = 0.037, Spearman’s rho = −0.145, 95% CI = [-0.295, 0.012], p = 0.070).

Continuously cumulating meta-analysis

Besides testing the hypothesis of a negative association between common EF ability and implicit SL in our two samples separately, we also ran a fixed-effect meta-analytic model, in order to pool evidence from both of our studies into a single effect size, while estimating their heterogeneity. Heterogeneity metrics revealed little between-studies variability in the effect, validating our choice of a fixed-effect model. The between-study heterogeneity variance was estimated at τ2 = 0, with an I2 value of 0%, Cochran’s Q test was also not statistically significant, Q(1) = 0.60, p = 0.440. The pooled effect size was negative and significantly different from zero, r = −0.115, 95% CI = [-0.218, -0.008], p = 0.035 (Fig. 6). The weights of Study 1 and Study 2 in the pooled effect were 54.3% and 45.7%, respectively. We also carried out this analysis using factor scores derived from all EF measures in both tasks, instead of just the shared ones (Supplementary Fig. 1). This analysis also yielded a pooled effect size, significantly more negative than 0, r = −0.162, 95% CI = [-0.264, -0.057], p = 0.003.

Pearson’s r between EF factor scores and implicit SL learning scores, along with its 95% CI is shown next to each individual study. Below, the pooled effect size from a fixed-effect meta-analytic model and its 95% CI is shown. Individual and total sample sizes are also indicated, as well as study weight. Factor scores were derived only from tasks that were shared by both studies.

Discussion

In this study, we explored how implicit SL relates to multiple components of prefrontal lobe-dependent EF. At the level of individual tasks, SL correlated negatively with most EF measures, the strongest of these correlations being with verbal fluency. At the level of latent EF components, an EF factor comprising verbal fluency and counting span scores in Study 1 and verbal fluency and digit span scores in Study 2 also correlated negatively with SL, with a modest, but similar effect size in both studies. Our results imply that individuals with better verbal fluency and working memory ability have lower implicit SL ability, suggesting that specific prefrontal lobe functions might interfere with implicit SL while still allowing for the acquisition of the underlying regularities. We aimed to improve upon previous studies and extend their results in several ways. We made use of far larger samples (NStudy1 = 186, NStudy2 = 157), giving us larger statistical power than any previous study in the field61. Our successful internal replication, using a second sample and a cumulative meta-analytic approach, speaks to the robustness of our results72. Our measurement of EF components at both the task and the latent variable level using exploratory factor analysis allowed for a more thorough characterization of this complex cognitive function38, and attenuated measurement error63, while remaining agnostic about the measurement model of EF64. Finally, we used an implicit SL task that has been shown to be both valid65 and reliable66, which is crucial, given the recent debate about the psychometric properties of commonly used implicit learning tasks73.

We interpret our results in the PFC-mediated competition model of declarative-procedural interactions22,24,25, and suggest that the observed negative relationship between EF and SL might be due to the suppression of model-free, procedural learning by prefrontal EF. Support for this theory has come not only from the effects of PFC disruption on learning48,49,50,51,52,53 and from computational modelling34 studies, but also from neural data. For example, a meta-analysis of multiple primate studies by Loonis et al.74 has revealed distinct patterns of post-choice oscillatory synchrony within PFC during implicit versus explicit learning, such that Delta/Theta band synchrony increased after correct choices during implicit learning, but after incorrect choices during explicit learning. Moreover, their results also suggested that whereas explicit learning was associated with increased synchrony between PFC and hippocampus in the alpha and beta bands, implicit learning was associated with decreased synchrony between PFC and caudate in the theta band. A similar pattern of results was revealed in humans by Voss et al.75, who showed that the use of a flexible, declarative learning strategy was linked to the interaction between the medial temporal lobe and the fronto-parietal attentional network, whereas the use of a more rigid, procedural learning strategy was linked to caudate nucleus fronto-parietal network interactions. Furthermore, neuroimaging studies also indicate that SL is associated with decreased functional connectivity within PFC circuits and between the PFC and other networks54,55.

In Study 1, the most parsimonious model consisted of a single factor, comprising fluency and counting span performances. The link between verbal fluency and working memory tasks is not surprising. Fluency scores have constantly been found to relate to verbal working memory76,77,78,79,80. Higher working memory capacity may aid in handling the cognitive load in fluency tasks81, while lower capacity may lead to more perseveration errors82. Shao et al.83 have also found that both category and letter fluency scores were uniquely predicted by updating in working memory. Similar findings that relate updating to verbal fluency performance were found by Gustavson et al.84. The factor structure uncovered by Fisk and Sharp 85 is also suggestive of this relationship. Although they loaded onto a separate factor more strongly, a factor representing updating also had quite high loadings from word fluency tasks. Thus, the factor in Study 1 may reflect updating. This makes sense when considering the demands of verbal fluency and counting span tasks. Both tasks require tracking and updating sequences of items. In verbal fluency tasks, individuals generate words fitting a specific category, requiring constant updating of working memory. Without updating, they may repeat words or struggle to generate new ones, affecting performance. Counting span tasks involve maintaining and updating a number sequence while performing a secondary task. Without updating, individuals may lose track of the sequence, negatively impacting performance. Updating appears to be a suitable explanation for the common factor in verbal fluency and counting span tasks. Although inhibition and set shifting have been proposed as significant factors in fluency tasks83, the absence of positive correlations between our measures of shifting (BCST) and inhibition (GNG) and other executive function tasks makes it challenging to consider them as appropriate explanations for factor 1.

While our general findings, suggestive of a negative relationship across individuals in EF and SL are in line with the competitive neurocognitive systems framework22,25,86, the fact that it is primarily for the updating component that this association was uncovered runs contrary to some previous results showing a positive relationship between working memory and SL58,87,88. The central idea of these studies was that larger working memory capacity might open up a larger “window” for serial order learning. However, as noted by Janacsek and Nemeth (2013)57, these effects seem primarily observed in explicit learning conditions and consolidation, rather than implicit learning of probabilistic representations per se. Our results imply that under implicit learning, EF updating might instead compete with SL. A possible explanation might be that updating might disrupt the stabilization of a simple predictive model allowing triplet learning in the ASRT task. However, we note that the nature of this kind of “updating” of implicit probabilistic representations is likely quite different from the explicit updating of items in working memory that is involved in EF tasks. Cognitive control, executive functions and working memory are related to a model-based computational strategy89,90. So our results might be interpretable as showing that more automatic, model-free learning is antagonistically related to model-based updating associated with goal-directed processes.

An alternative explanation to updating is that both working memory and fluency are strongly related to long-term memory access. Numerous theories and empirical evidence support the former91, while the fluency test merely involves retrieving words from the mental lexicon92. From this, we can speculate that if access to our long-term representations and models is poorer, it significantly enhances model-free learning because there is no interference from previous models in learning new patterns and predictions. This explanation aligns with the explanation provided by Ambrus et al.48, who attributed their results of inhibitory TMS-enhanced SL to difficulties in long-term memory access or the suppression of top-down processes. This might also explain the finding in Study 2, where only factor 1 exhibited a negative association with statistical learning, while factor 2 did not. Given that factor 2 is predominantly influenced by short-term memory tasks (specifically counting span, digit span, and Corsi block), it is conceivable that it predominantly reflects maintenance of information rather than executive long-term memory retrieval. This distinction suggests that the tasks comprising factor 2 may capture cognitive processes more focused on the immediate retention of information, rather than the retrieval of information from long-term memory stores. However, this hypothesis needs to be validated through studies that examine long-term memory access, cognitive control, and predictive processes not only through behavioural but also neuroimaging techniques within a single experimental design.

While our study has notable strengths, including the incorporation of two large, independent samples, multiple EF measures, and the use of data-driven latent component extraction, there are some limitations that need to be mentioned, and addressed in future research. Firstly, the effect sizes were relatively small. The correlations between individual EF tasks and implicit SL were especially small, with only one fluency task in each dataset reaching statistical significance. This likely stems partially from the wide array of factors that influence EF and SL ability, and partially from attenuated correlations due to measurement error. We have recently estimated the reliability of the ASRT task in similar settings as the current study, to be between 0.754 and 0.791. If we correct our correlation coefficients for attenuation with the lowest reliability value from that study, we obtain \(r=\frac{-0.155}{\sqrt{0.754}}=-0.179\) and \(r=\frac{-0.170}{\sqrt{0.754}}=-0.196\), which are likely better estimates of the true relationships. Secondly, to replicate the results obtained in Study 1, we re-analysed data from a sample previously collected by us, and first described in Kóbor et al. (2017)18. While we note that as this is an earlier study, which was not designed with the research question of this study in mind, we believe the differences between protocols do not meaningfully diminish the informativeness of our replication attempt. Indeed, the two primary differences are the different set of EF tasks, and the longer ASRT. Regarding the first, the results of Study 1 show that tasks tapping into the updating component of EF (CSPAN and verbal fluency) showed the clearest relationship with implicit SL. These are exactly the tasks that were shared between studies. Regarding the second study, we have previously shown that longer ASRT tasks lead to more reliable learning scores66, which are crucial for correlational research designs. Thus, if anything the longer task should increase the power of our replication attempt. However, the different response to stimulus intervals of the two studies does decrease their comparability. As a result, it would be beneficial for future studies and replication attempts to show a greater consistency across studies in the protocol. Finally and relatedly, the incomplete nature of our EF battery should also be addressed by future work. Our set of EF tasks did not cover the most widely accepted theoretical model of EF components in an equal manner, as for set shifting and inhibition, we only had the BCST and GNG tasks, respectively41. Thus, our results should be extended and confirmed by future studies with a more complete set of EF tasks, and a more theory-driven assessment of latent EF components, for example with Confirmatory Factor Analytic models. We note however, that the ubiquity of the Unity and Diversity model has been questioned recently64.

This study represents a comprehensive examination of the relationship between implicit SL and EF by integrating multiple datasets and analyzing large participant cohorts. We found that general executive function has a negative relationship with implicit SL, suggesting that individuals with better EF functions might be worse at acquiring probabilistic models of visuospatial information. Our results fall in line with the theoretical framework stating that implicit automatic processing works in competition with PFC functions. This study takes a step further by exploring which components of executive functioning might be at the core of this competition. Our results are indicative that it is the updating component that is driving this relationship. Our study highlights the importance of exploring the relationship between different cognitive abilities using larger sets of subjects and in a data-driven manner.

Methods

Participants

For Study 1, conducted in France, participants took part in a 2-day experiment. One hundred eighty nine healthy young adults members of the general public were recruited through online advertisement with the following criteria: participants were right-handed, aged under 35 years, and with no or limited musical training (as measured by practice inferior to 10 years). Participants declared not having active neurological or psychiatric conditions, and declared not to be taking any psychoactive medication. Among the 189 participants, two did not come back for the second session and one did not comply with the task instructions on the first session. Thus, the data from the remaining 186 subjects is presented in this study. All participants provided signed informed consent agreements and received financial compensation for their participation. The relevant institutional review board (i.e., the “Comité de Protection des Personnes, CPP Est I” ID: RCB 2019-A02510-57) gave ethical approval for the study.

In Study 2, conducted in Hungary, 180 university students took part in a multi-session experiment. Criteria were the same as in Study 1, with the exception of being under 35 and the limitations on the musical expertise. From this pool, we excluded 23 subjects who had missing data on any of the EF tasks. This study was approved by the United Ethical Review Committee for Research in Psychology (EPKEB) in Hungary (Approval number: 30/2012) and by the research ethics committee of Eötvös Loránd University, Budapest, Hungary. Descriptive statistics of both samples are presented in Table 1.

Measure of statistical learning: Alternating serial reaction time (ASRT) task

In Study 1, implicit SL was measured by a modified version of the ASRT task88,93 (Fig. 1a). In this task, participants were presented with a yellow arrow on the center of the screen pointing in one of four possible directions (left, up, down or right) for 200 ms. The presentation of the arrow was followed by a presentation of a fixation cross for 500 ms. Using a four-button Cedrus RB-530 response box, participants were instructed to press, as quickly as possible, the button corresponding to the direction of the arrow. Finger placement on each of the buttons of the response box was as follows: the up button had to be pressed with the left index finger; the down button had to be pressed with the right thumb; the right button had to be pressed with the right index; and the left button had to be pressed with left thumb. If participants responded correctly, the fixation cross would remain in the screen for another 750 ms. If participants did not answer or answered incorrectly, an exclamation mark or an “X” would appear for 500 ms, respectively, followed by a 250 ms fixation cross.

Unknowingly to the participants, the appearance of the stimuli followed a predetermined structure where pattern elements (P) alternated with random elements (R) (e.g. 4-R-2-R-3-R-1-R, where the numbers represent the predetermined position of the stimuli, and “R” represent a random position) (Fig. 1c, d). Due to the pattern elements (P) alternating with random elements (R) in the ASRT task, some triplets (runs of three trials) had a higher probability of occurrence than others (Fig. 1e). For example, in an 4-R-2-R-3-R-1-R sequence, the (4-3-2) or (3-4-1) triplets have a higher probability of occurrence compared to (2-3-2) or (4-3-1) triplets, as the former type of triplets can be found in P-R-P or in R-P-R structures whereas the second type of triplets can only occur in R-P-R structures. As a result, the former type of triplets are five times more likely to occur than the second type of triplets. Thus, the two types of triplets are referred to as high- and low-probability triplets, respectively. Previous studies using the ASRT task have consistently shown that, with increasing practice in the task, participants’ responses to the last element of high-probability triplets become faster compared to responses to the last element of low-probability triplets18,93,94. Importantly, this phenomenon occurs without the emergence of an explicit knowledge of the sequence structure as reported by the participants18,21,94. Thus, implicit SL in the task can be measured by computing the difference of reaction times between the last elements of high-probability triplets and the last element of low-probability triplets.

This first session consisted of 25 blocks of the ASRT task. There were 85 stimuli in each block, of which the first five were randomly ordered for practice purposes followed by 10 repetitions of the eight-element alternating sequence.

The ASRT task employed in Study 2 had four notable differences to the version used in Study 1. Firstly, in this version of the task, the stimuli (here, a drawing of a dog’s head instead of arrows) could appear in one of four horizontally arranged empty circles on the screen, instead of the four cardinal directions (Fig. 1b). Secondly, the responses corresponded to the Z, C, B, and M keys on a QWERTY keyboard (with the rest of the keys removed). Thirdly, the task consisted of 45 blocks. We previously observed that although acceptable levels of reliability emerge even with 25 blocks, longer tasks lead to more reliable learning scores, which might be crucial for the correlational analyses we planned here66. Finally, the timing of the stimuli differed. The task in Study 2 was self-paced (i.e., the target stimulus remained on the screen until the correct response key was pressed) with a response-to-stimulus interval of 120 ms. Despite the differences, as detailed below, robust learning was observed in both studies, with similar distribution of learning scores, except an overall lower mean learning score in Study 1.

Neuropsychological tests for Study 1

The neuropsychological assessments conducted in Study 1 aimed to comprehensively capture Miyake’s conceptualization of executive functions, specifically focusing on cognitive flexibility, inhibition, and updating41. To operationalize these constructs, a battery of established and reliable cognitive tests was employed. The attentional network test, the Berg Card Sorting Test, the Counting Span Task, the Go/No-Go Task, and three verbal fluency tasks were administered to systematically evaluate the targeted executive functions. The selection of these assessments was based on their well-documented validity and reliability in previous research80,95,96,97,98.

Attentional network test (ANT)

The ANT allowed us to measure the capacities of three distinct networks of attention: the alerting, orienting and executive networks99. This task required participants to determine, as fast as possible, whether a central arrow, presented in a set of five arrows, points to the left or to the right. The set of arrows can appear above or below a fixation cross and can be preceded or not by a spatial cue indicating their following location. Furthermore, the central arrow can be congruent (pointing in the same direction) or incongruent (pointing in a different direction) to the other arrows. We followed the standard calculation of network scores99. The alerting component of attention was calculated by subtracting the mean RT of the central cue conditions from the mean of the no-cue conditions for each participant. In a similar manner, the orienting component of attention was calculated by subtracting the mean RT of the spatial cue conditions from the mean RT the center cue conditions and the executive component of attention was calculated by subtracting the mean RT of all congruent conditions from the mean RT of all incongruent conditions. In this task, higher scores for the alerting, orienting and executive scores indicate better attentional performances in these three aspects of attention.

Berg card sorting task (BCST)

We measured set shifting or cognitive flexibility using the computerised version of the BCST.64 available in the Psychology Experiment Building Language (PEBL) software100. In this test, a set of four cards on the top of the screen are presented to the participant. Each card has three characteristics: the colour, the shape and the number of items on the card. The participant was told to match new cards to the cards on the top of the screen according to one of the three characteristics, but they were not told which one; however, they received feedback about whether each choice they made was right or wrong. Thus, the participant was required to find the correct rule (matching characteristic) needed to match the cards as quickly and as accurately as possible. The participant was informed that the rule could change during the task. Cognitive flexibility in the BCST was measured by counting the perseverative errors, meaning the amount of errors reflecting lack of adaptation following a rule switch.

Counting Span (CSPAN) task

Updating or working memory capacity was measured by the CSPAN101. In this task, different shapes (blue circles, blue squares, and yellow circles) appeared on the computer screen. The participants’ task was to count out loud and retain the amount of blue circles (targets) among the other shapes (distractors) in a series of images. Each image included three to nine blue circles, one to nine blue squares and one to five yellow circles. At the end of each trial, participants repeated orally the total number of targets presented in the image and if counting was correct, the experimenter passed to the following trial. When presented with a recall cue at the end of a set, participants had to rename the total number of targets of each image in their order of presentation. If the recall was correct, participants started a new set containing an extra image. The number of items presented in each image ranged from two to six. When participants made a mistake in the recall, the task was stopped and a new run, starting from a set with two trials, would start again. Each participant completed three runs of the task. Memory span capacity was computed as the mean of the highest set size the participant was able to recall correctly in the three runs.

Go No-go (GNG) task

We measured cognitive inhibition with the computerised version of the GNG task available in the Psychology Experiment Building Language (PEBL) software100. In the GNG task, participants were instructed to respond to certain stimuli (“go” stimuli) by clicking on a button as fast as possible and to refrain from clicking on other stimuli (“no-go” stimuli). In this version, participants were presented with a 2×2 array with four blue stars (one in the centre of each square of the array). Every 1500 ms, a stimulus (the letter P or R) would appear for 500 ms in the place of one of the blue stars. For the first half of the task, the letter P would be the “go” stimulus and the letter R would be the “no-go”. This rule would be then inverted in the second half of the task. Participants completed 320 trials. The ratio between “go” and “no-go” trials was 80:20, respectively. Cognitive inhibition capacity in the GNG task was measured by the d’:

A higher d’ indicated better cognitive inhibition.

Verbal fluency tasks

Verbal fluency was measured with three subtasks testing the lexical, semantic and action components of verbal fluency. In the lexical fluency subtask, participants were required to say as many words as possible starting with the letter P. The letter P is often used in the French version of the phonemic fluency102. In the semantic fluency subtask, participants were required to name animals, and in the action fluency subtask, isolated verbs describing an action realisable by a person. In each subtask, participants were instructed to say as many words as possible within 1 min while avoiding word repetitions, words with the same etymological root and proper nouns. Every time the participant disrespected one of these rules, an error would be counted. Each verbal fluency subtask has a score computed by subtracting the amount of errors from the total amount of words produced within 1 min. A higher score in each component of verbal fluency indicated a higher verbal fluency capacity.

Neuropsychological tests for Study 2

In Study 2, our attention shifted to a specific aspect of prefrontal lobe function, specifically targeting working memory. To comprehensively assess this cognitive domain, we employed a battery of well-established tasks, including the Counting Span Task, the Digit Span Task, the Corsi Block Tapping Task, and two verbal fluency tests. The careful selection of these tasks was grounded in their widespread utilization within the field and their demonstrated reliability in prior studies95,96,103.

Counting Span (CSPAN) task

The procedure for measuring working memory capacity with the CSPAN task was identical in the two studies.

Digit Span (DSPAN) task

Phonological short-term memory capacity was measured using the DSPAN task104. In this task, participants listened to and then repeated a list of digits enunciated by an experimenter. The task comprised seven levels of difficulty ranging from three to nine items. Each level comprised four different lists. If participants correctly recalled all items for at least three out of the four lists, they were permitted to move up a level. If participants could not recall at least three lists from the four lists correctly, the task ended. Memory span in the DSPAN task was considered to be the level (three to nine) at which the participant was still able to recall three from the four lists correctly. A higher memory span score is indicative of a better phonological short-term memory capacity.

Corsi blocks tapping task (Corsi) task

Visuo-spatial short-term memory capacity was assessed using the Corsi task105. In this task, nine cubes were placed in front of the participant in a fixed pseudo-random manner. The blocks were labelled with numbers only visible to the experimenter. The experimenter tapped a number of blocks in a specific sequence after which the participant had to tap the same blocks in the same order. Similarly to the DSPAN, the Corsi task comprised seven levels ranging from three to nine items. Four sequences were presented within a level. Memory span in the Corsi task was considered to be the level (three to nine) at which the participant was still able to recall three from the four lists correctly. A higher memory span score is indicative of a better visuo-spatial short-term memory capacity.

Verbal fluency tasks

Verbal fluency in Study 2 was measured with the Hungarian version of the task106. Procedure was similar to the one used in Study 1 with two main differences: the lexical fluency was tested using the letter K and the action fluency was not measured in this study.

Procedure

In Study 1 the experiment was organized over two sessions. During the first session, the ASRT task was administered. Participants were informed that the aim of the study was to study how an extended practice affected performance in a simple reaction time task. Therefore, participants were instructed to respond as fast and as accurately as they could. Participants were not given any information about the underlying structure of the task.

Participants completed the ASRT task in a soundproof experimental booth with a computer screen observable through a window and a four button (up, down, right left) response box placed over a table. Prior to the 25 blocks of the learning phase, the participants completed a three-block training phase where stimuli were completely random. Thus, the first three blocks contained no underlying sequence. This ensured that participants correctly understood the task instructions and familiarized themselves with the response keys. At the end of each block, participants received feedback on the screen reporting their accuracy and reaction time on the elapsed block. This was followed by a 15 s resting period. Participants were then free to choose when to start the next block.

The ANT, BCST, CSPAN, GNG and verbal fluency tasks were administered in the second experimental session. This session took ~1 h. In order to avoid a possible fatigue effect in a particular task, the order of presentation of each task was randomized over participants.

The procedure for Study 2 was similar. The ASRT task was administered in the first session and CSPAN, DSPAN, Corsi and verbal fluency tasks were administered in a second session.

Statistical analysis

Factor analyses

We first aimed to determine the potential latent structure of our sets of EF measures in a data-driven manner. Therefore, we investigated the factor structure of the EF measures in both of our datasets separately, using maximum likelihood exploratory factor analysis (ML EFA) with varimax rotation, as implemented in the psych package in R107, with default settings. To aid interpretation, the scores that reflect error percentages were subtracted from 100, so that higher values represent better performance in all variables. To assess the factorability of the data, we utilised 3 complementary approaches71. Firstly, we inspected the off-diagonal elements of the anti-image covariance matrix. If the dataset is appropriate for factor analysis, these elements should be all above 0.5071. Secondly, we computed the Kaiser-Meyer-Olkin (KMO) test of sampling adequacy70. The higher the overall KMO index, the more appropriate a factor analytic model is for the data. The original cut-off recommended by Kaiser, 197070 is 0.60, but other authors have also suggested 0.5071. Finally, we performed Bartlett’s test of sphericity, which tests the hypothesis that the sample correlation matrix came from a multivariate normal population in which the variables of interest are independent108. Rejection of the hypothesis is taken as an indication that the data are appropriate for analysis.

We determined the number of factors to extract using Horn’s parallel analysis109. This approach is based on comparing the eigenvalues of factors of the observed data with those of random data from a matrix of the same size. Factors with higher eigenvalues in the observed, than in the random data are kept. We used a more stringent criteria, and compared the observed eigenvalues to the 95th percentile, instead of the mean of the simulated distributions. Furthermore, our use of ML EFA also allowed us to calculate multiple fit indices of the applied factor analytic models. Following the recommendations of Fabrigar et al.68 and Hu & Bentler 110, we chose to focus on the Root Mean Square Error of Approximation (RMSEA) and the Standardized Root Mean Squared Residual (SRMR). According to a commonly used guideline, RMSEA values less than 0.05 constitute good fit, values in the 0.05–0.08 range acceptable fit, values in the 0.08–0.10 range marginal fit, and values greater than 0.10 poor fit. Similarly, SRMR values below 0.08 are generally considered indicators of good model fit111. After we determined the number of factors to extract, participant level factor scores were calculated for all factors, based on Thomson’s 112 regression method.

ASRT learning trajectories

ASRT task performance was assessed by calculating the median reaction times (RTs) of correct responses for high- and low-probability triplets separately in each epoch. We then computed learning scores for each epoch by subtracting the median RTs of high-probability triplets from the median RTs of low-probability triplets. A greater difference between high- and low-probability trials indicates greater learning. We excluded from the analysis trills (e.g., 2-1-2) and repetitions (e.g., 2-2-2) as participants show pre-existing tendencies to answer faster in these types of triplets. The first five trials (five warm-up random) of each block and trials with RTs below 100 ms were also removed from the analysis. These criteria led to the exclusion of 23.0% of all trials in study 1, and a comparable 23.3% of all trials in study 2.

To evaluate the trajectory of implicit SL and its relationship with the latent EF factor scores, we fit linear mixed models. Linear mixed models were fit with the mixed function from the afex package113, which enabled appropriate effects coding and accounted for interactions in the models114. The models predicted block-wise median RTs (ms) from three independent variables of Triplet Type, Block and EF factor scores, and contained all main effects and higher order interactions as fixed effects, as well as subject-specific correlated slopes for Block. Triplet Type was a factor variable, effects coded to reflect the high- and low-probability triplet categories, with Low-probability triplets being the reference category. Block and EF factor scores were treated as continuous variables and were mean centered before analysis to aid interpretation. Assumptions regarding linearity, homoscedasticity and normality of residuals were evaluated by scatterplots and QQ plots of residuals and were met in each case. Sample sizes in terms of total number of data points and of sampling units, random effects estimates, Nakagawa’s marginal and conditional R2115, and the adjusted ICC are all consistently reported in summary tables116.

Post-hoc contrasts were conducted with the emmeans R package117. For inference about fixed effects, we used Type III tests, relying on comparing nested models with the effect of interest either included or removed. For these tests, we used the Satterthwaite approximation of degrees of freedom118. Figures were created with ggplot2119. An alpha level of 0.05 was used throughout and all significance tests are two-tailed. Data were analysed with R version 4.2.3. and JASP version 0.16.3.

Correlations

For the correlational analyses, a single index of implicit SL score for each participant was obtained by averaging the learning scores across blocks. Previous results from our group have indicated that while the reliability of learning scores is low for individual blocks, averaging across at least 25 blocks leads to acceptable levels of reliability66. Correlations between this score and the individual EF tasks were then assessed. For the ASRT - EF factor score correlations that were of primary interest, we also calculated Bayes factors, using JASP (JASP Team, 2019), with default priors (stretched beta distribution with a width of 1). We tested the alternative hypothesis that the two variables are negatively correlated. We also tested the robustness of our Bayes factors to different prior widths.

Meta-analysis

In addition to testing our hypotheses in each of the two samples separately, following the recommendation of Braver et al.72, we also carried out a continuously cumulating meta-analysis (CCMA) of the two studies. A meta-analytic approach allows us to pool individual effects sizes into a single estimate, while quantifying their heterogeneity. In order to make the two studies comparable, in the two datasets separately, we extracted factor scores from a single factor ML EFA applied to the set of EF tasks that were shared in both studies. These were Lexical fluency, Semantic fluency and CSPAN. We then calculated the Pearson’s correlation between these EF factor scores and the implicit SL learning scores and ran our meta-analytic model on these effects. We fit a fixed-effect meta-analysis model implemented in the ‘meta’ R package120,121, using the inverse variance pooling method. We expected little between-study heterogeneity in the effect, therefore a priori, we decided on a fixed-effect model to test the average true effect in the two studies. We nevertheless estimated between-study heterogeneity using the REML estimator122. As reported below, commonly used measures of heterogeneity indicated little between-studies variability, validating our choice of a fixed-effect model. We relied on the REML estimate of the variance of the distribution of true effects, τ2, on the percentage of variability in the effect sizes that is not caused by sampling error, I2123, and on Cochran’s Q124, which can be used to test whether there is more variation than can be expected from sampling error alone.

Data availability

In accordance with the transparency and reproducibility principles, we provide information regarding the availability of data supporting the findings of this study. All data necessary to replicate the findings of this manuscript can be found on the OSF platform, at the following link: https://osf.io/2asnb/.

Code availability

In accordance with the transparency and reproducibility principles, we provide analysis codes supporting the findings of this study. All codes necessary to replicate our analyses can be found on the OSF platform, at the following link: https://osf.io/2asnb/.

References

Aslin, R. N. Statistical learning: a powerful mechanism that operates by mere exposure. WIREs Cogn. Sci. 8, e1373 (2017).

Conway, C. M. How does the brain learn environmental structure? ten core principles for understanding the neurocognitive mechanisms of statistical learning. Neurosci. Biobehav. Rev. 112, 279–299 (2020).

Kaufman, S. B. et al. Implicit learning as an ability. Cognition 116, 321–340 (2010).

Ullman, M. T. in Theories in Second Language Acquisition 953–968 (Routledge, 2020).

Hallgató, E., Győri-Dani, D., Pekár, J., Janacsek, K. & Nemeth, D. The differential consolidation of perceptual and motor learning in skill acquisition. Cortex 49, 1073–1081 (2013).

Verburgh, L., Scherder, E. J. A., van Lange, Pa. M. & Oosterlaan, J. The key to success in elite athletes? explicit and implicit motor learning in youth elite and non-elite soccer players. J. Sports Sci. 34, 1782–1790 (2016).

Rohrmeier, M. & Rebuschat, P. Implicit learning and acquisition of music. Top. Cogn. Sci. 4, 525–553 (2012).

Romano Bergstrom, J. C., Howard, J. H. & Howard, D. V. Enhanced implicit sequence learning in college‐age video game players and musicians. Appl. Cogn. Psychol. 26, 91–96 (2012).

Shook, A., Marian, V., Bartolotti, J. & Schroeder, S. R. Musical experience influences statistical learning of a novel language. Am. J. Psychol. 126, 95–104 (2013).

Baldwin, D., Andersson, A., Saffran, J. & Meyer, M. Segmenting dynamic human action via statistical structure. Cognition 106, 1382–1407 (2008).

Parks, K. M. A., Griffith, L. A., Armstrong, N. B. & Stevenson, R. A. Statistical learning and social competency: the mediating role of language. Sci. Rep. 10, 1–15 (2020).

Ruffman, T., Taumoepeau, M. & Perkins, C. Statistical learning as a basis for social understanding in children. Br. J. Dev. Psychol. 30, 87–104 (2012).

Graybiel, A. M. Habits, rituals, and the evaluative brain. Annu. Rev. Neurosci. 31, 359–387 (2008).

Horváth, K., Nemeth, D. & Janacsek, K. Inhibitory control hinders habit change. Sci. Rep. 12, 8338 (2022).

Szegedi-Hallgató, E. et al. Explicit instructions and consolidation promote rewiring of automatic behaviors in the human mind. Sci. Rep. 7, 4365 (2017).

Destrebecqz, A. & Cleeremans, A. Can sequence learning be implicit? new evidence with the process dissociation procedure. Psychon. Bull. Rev. 8, 343–350 (2001).

Fu, Q., Dienes, Z. & Fu, X. Can unconscious knowledge allow control in sequence learning? conscious. Cogn 19, 462–474 (2010).

Kóbor, A., Janacsek, K., Takács, Á. & Nemeth, D. Statistical learning leads to persistent memory: evidence for one-year consolidation. Sci. Rep. 7, 760 (2017).

Song, S., Howard, J. H. & Howard, D. V. Implicit probabilistic sequence learning is independent of explicit awareness. Learn. Mem. 14, 167–176 (2007).

Song, S., Howard, J. H. & Howard, D. V. Sleep does not benefit probabilistic motor sequence learning. J. Neurosci. 27, 12475–12483 (2007).

Vékony, T., Ambrus, G. G., Janacsek, K. & Nemeth, D. Cautious or causal? key implicit sequence learning paradigms should not be overlooked when assessing the role of DLPFC (Commentary on prutean et al). Cortex 148, 222–226 (2022).

Poldrack, R. A. & Packard, M. G. Competition among multiple memory systems: converging evidence from animal and human brain studies. Neuropsychologia 41, 245–251 (2003).

Batterink, L. J., Paller, K. A. & Reber, P. J. Understanding the neural bases of implicit and statistical learning. Top. Cogn. Sci. 11, 482–503 (2019).

Freedberg, M., Toader, A. C., Wassermann, E. M. & Voss, J. L. Competitive and cooperative interactions between medial temporal and striatal learning systems. Neuropsychologia 136, 107257 (2020).

Reber, A. S. & Allen, R. The Cognitive Unconscious: The First Half Century (Oxford University Press, 2022).

Eichenbaum, H., Fagan, A., Mathews, P. & Cohen, N. J. Hippocampal system dysfunction and odor discrimination learning in rats: Impairment of facilitation depending on representational demands. Behav. Neurosci. 102, 331–339 (1988).

Packard, M. G., Hirsh, R. & White, N. M. Differential effects of fornix and caudate nucleus lesions on two radial maze tasks: evidence for multiple memory systems. J. Neurosci. Off. J. Soc. Neurosci. 9, 1465–1472 (1989).

Poldrack, R. A., Prabhakaran, V., Seger, C. A. & Gabrieli, J. D. E. Striatal activation during acquisition of a cognitive skill. Neuropsychology 13, 564–574 (1999).

Poldrack, R. A. et al. Interactive memory systems in the human brain. Nature 414, 546–550 (2001).

Daw, N. D., Niv, Y. & Dayan, P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat. Neurosci. 8, 1704–1711 (2005).

Doll, B. B., Shohamy, D. & Daw, N. D. Multiple memory systems as substrates for multiple decision systems. Neurobiol. Learn. Mem. 117, 4–13 (2015).

Shohamy, D. & Daw, N. D. Habits and reinforcement learning. in The cognitive neurosciences, 5th edn (Eds. Michael S. G, George R. M) 591–603 (MIT Press, Cambridge, MA, US, 2014).

Doyon, J. et al. Contributions of the basal ganglia and functionally related brain structures to motor learning. Behav. Brain Res. 199, 61–75 (2009).

Lee, S. W., Shimojo, S. & O’Doherty, J. P. Neural computations underlying arbitration between model-based and model-free learning. Neuron 81, 687–699 (2014).

Decker, J. H., Otto, A. R., Daw, N. D. & Hartley, C. A. From creatures of habit to goal-directed learners: tracking the developmental emergence of model-based reinforcement learning. Psychol. Sci. 27, 848–858 (2016).

Nemeth, D., Janacsek, K. & Fiser, J. Age-dependent and coordinated shift in performance between implicit and explicit skill learning. Front. Comput. Neurosci. https://doi.org/10.3389/fncom.2013.00147 (2013).

Palminteri, S., Kilford, E. J., Coricelli, G. & Blakemore, S.-J. The computational development of reinforcement learning during adolescence. PLOS Comput. Biol. 12, e1004953 (2016).

Diamond, A. Executive functions. Annu. Rev. Psychol. 64, 135–168 (2013).

Koechlin, E. Prefrontal executive function and adaptive behavior in complex environments. Curr. Opin. Neurobiol. 37, 1–6 (2016).

Miyake, A. & Friedman, N. P. The nature and organization of individual differences in executive functions: four general conclusions. Curr. Dir. Psychol. Sci. 21, 8–14 (2012).

Miyake, A. et al. The unity and diversity of executive functions and their contributions to complex ‘frontal lobe’ tasks: a latent variable analysis. Cognit. Psychol. 41, 49–100 (2000).

Badre, D., Kayser, A. S. & D’Esposito, M. Frontal cortex and the discovery of abstract action rules. Neuron 66, 315–326 (2010).

Braver, T. S., Reynolds, J. R. & Donaldson, D. I. Neural mechanisms of transient and sustained cognitive control during task switching. Neuron 39, 713–726 (2003).

Koechlin, E., Ody, C. & Kouneiher, F. The architecture of cognitive control in the human prefrontal cortex. Science 302, 1181–1185 (2003).

Miller, E. K. & Cohen, J. D. An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24, 167–202 (2001).

Osaka, M. et al. The neural basis of individual differences in working memory capacity: an fMRI study. NeuroImage 18, 789–797 (2003).

Kondo, H., Osaka, N. & Osaka, M. Cooperation of the anterior cingulate cortex and dorsolateral prefrontal cortex for attention shifting. NeuroImage 23, 670–679 (2004).

Ambrus, G. G. et al. When less is more: enhanced statistical learning of non-adjacent dependencies after disruption of bilateral DLPFC. J. Mem. Lang. 114, 104144 (2020).

Smalle, E. H. M., Panouilleres, M., Szmalec, A. & Möttönen, R. Language learning in the adult brain: disrupting the dorsolateral prefrontal cortex facilitates word-form learning. Sci. Rep. 7, 13966 (2017).

Nemeth, D., Janacsek, K., Polner, B. & Kovacs, Z. A. Boosting human learning by hypnosis. Cereb. Cortex 23, 801–805 (2013).

Borragán, G., Slama, H., Destrebecqz, A. & Peigneux, P. Cognitive fatigue facilitates procedural sequence learning. Front. Hum. Neurosci. 10, 86 (2016).

Filoteo, J. V., Lauritzen, S. & Maddox, W. T. Removing the frontal lobes: the effects of engaging executive functions on perceptual category learning. Psychol. Sci. 21, 415–423 (2010).

Smalle, E. H. M., Daikoku, T., Szmalec, A., Duyck, W. & Möttönen, R. Unlocking adults’ implicit statistical learning by cognitive depletion. Proc. Natl. Acad. Sci. 119, e2026011119 (2022).

Park, J., Janacsek, K., Nemeth, D. & Jeon, H.-A. Reduced functional connectivity supports statistical learning of temporally distributed regularities. NeuroImage 260, 119459 (2022).

Tóth, B. et al. Dynamics of EEG functional connectivity during statistical learning. Neurobiol. Learn. Mem. 144, 216–229 (2017).

Virag, M. et al. Competition between frontal lobe functions and implicit sequence learning: evidence from the long-term effects of alcohol. Exp. Brain Res. 233, 2081–2089 (2015).

Janacsek, K. & Nemeth, D. Implicit sequence learning and working memory: correlated or complicated? Cortex 49, 2001–2006 (2013).

Park, J., Yoon, H.-D., Yoo, T., Shin, M. & Jeon, H.-A. Potential and efficiency of statistical learning closely intertwined with individuals’ executive functions: a mathematical modeling study. Sci. Rep. 10, 18843 (2020).

Petok, J. R., Dang, L. & Hammel, B. Impaired executive functioning mediates the association between aging and deterministic sequence learning. Aging Neuropsychol. Cogn. 0, 1–17 (2022).

Janacsek, K. & Németh, D. The puzzle is complicated: when should working memory be related to implicit sequence learning, and when should it not? (Response to Martini et al.). Cortex 64, 411–412 (2015).

Button, K. S. et al. Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14, 365–376 (2013).

Schönbrodt, F. D. & Perugini, M. At what sample size do correlations stabilize? J. Res. Personal. 47, 609–612 (2013).

Haines, N., Sullivan-Toole, H. & Olino, T. From classical methods to generative models: tackling the unreliability of neuroscientific measures in mental health research. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 8, 822–831 (2023).

Karr, J. E. et al. The unity and diversity of executive functions: a systematic review and re-analysis of latent variable studies. Psychol. Bull. 144, 1147–1185 (2018).

Buffington, J., Demos, A. P. & Morgan-Short, K. The reliability and validity of procedural memory assessments used in second language acquisition research. Stud. Second Lang. Acquis. 43, 635–662 (2021).

Farkas, B. C., Krajcsi, A., Janacsek, K. & Nemeth, D. The complexity of measuring reliability in learning tasks: an illustration using the alternating serial reaction time task. Behav. Res. Methods 56, 301–317 (2024).

Beavers, A. et al. Practical considerations for using exploratory factor analysis in educational research. Pract. Assess. Res. Eval. https://doi.org/10.7275/qv2q-rk76 (2019).

Fabrigar, L. R., Wegener, D. T., MacCallum, R. C. & Strahan, E. J. Evaluating the use of exploratory factor analysis in psychological research. Psychol. Methods 4, 272–299 (1999).

Goretzko, D., Pham, T. T. H. & Bühner, M. Exploratory factor analysis: current use, methodological developments and recommendations for good practice. Curr. Psychol. 40, 3510–3521 (2021).

Kaiser, H. F. A second generation little jiffy. Psychometrika 35, 401–415 (1970).

Dziuban, C. D. & Shirkey, E. C. When is a correlation matrix appropriate for factor analysis? some decision rules. Psychol. Bull. 81, 358–361 (1974).

Braver, S. L., Thoemmes, F. J. & Rosenthal, R. Continuously cumulating meta-analysis and replicability. Perspect. Psychol. Sci. 9, 333–342 (2014).

West, G., Vadillo, M. A., Shanks, D. R. & Hulme, C. The procedural learning deficit hypothesis of language learning disorders: we see some problems. Dev. Sci. 21, e12552 (2018).

Loonis, R. F., Brincat, S. L., Antzoulatos, E. G. & Miller, E. K. A meta-analysis suggests different neural correlates for implicit and explicit learning. Neuron 96, 521–534.e7 (2017).

Voss, M. W. et al. Effects of training strategies implemented in a complex videogame on functional connectivity of attentional networks. NeuroImage 59, 138–148 (2012).

Daneman, M. Working memory as a predictor of verbal fluency. J. Psycholinguist. Res. 20, 445–464 (1991).

Rende, B., Ramsberger, G. & Miyake, A. Commonalities and differences in the working memory components underlying letter and category fluency tasks: a dual-task investigation. Neuropsychology 16, 309–321 (2002).

Unsworth, N., Spillers, G. J. & Brewer, G. A. Variation in verbal fluency: a latent variable analysis of clustering, switching, and overall performance. Q. J. Exp. Psychol. 64, 447–466 (2011).

Unsworth, N. & Engle, R. W. Individual differences in working memory capacity and learning: evidence from the serial reaction time task. Mem. Cognit. 33, 213–220 (2005).

Woods, S. P. et al. Action (verb) fluency: test-retest reliability, normative standards, and construct validity. J. Int. Neuropsychol. Soc. JINS 11, 408–415 (2005).

Rosen, V. M. & Engle, R. W. The role of working memory capacity in retrieval. J. Exp. Psychol. Gen. 126, 211–227 (1997).

Azuma, T. Working memory and perseveration in verbal fluency. Neuropsychology 18, 69–77 (2004).

Shao, Z., Janse, E., Visser, K. & Meyer, A. S. What do verbal fluency tasks measure? predictors of verbal fluency performance in older adults. Front. Psychol. 5, 772 (2014).

Gustavson, D. E. et al. Integrating verbal fluency with executive functions: evidence from twin studies in adolescence and middle age. J. Exp. Psychol. Gen. 148, 2104–2119 (2019).

Fisk, J. E. & Sharp, C. A. Age-related impairment in executive functioning: updating, inhibition, shifting, and access. J. Clin. Exp. Neuropsychol. 26, 874–890 (2004).

Reber, P. J. The neural basis of implicit learning and memory: a review of neuropsychological and neuroimaging research. Neuropsychologia 51, 2026–2042 (2013).

Frensch, P. A. & Miner, C. S. Effects of presentation rate and individual differences in short-term memory capacity on an indirect measure of serial learning. Mem. Cognit. 22, 95–110 (1994).

Howard, J. H. & Howard, D. V. Age differences in implicit learning of higher order dependencies in serial patterns. Psychol. Aging 12, 634–656 (1997).

Otto, A. R., Gershman, S. J., Markman, A. B. & Daw, N. D. The curse of planning: dissecting multiple reinforcement-learning systems by taxing the central executive. Psychol. Sci. 24, 751–761 (2013).

Otto, A. R., Raio, C. M., Chiang, A., Phelps, E. A. & Daw, N. D. Working-memory capacity protects model-based learning from stress. Proc. Natl Acad. Sci. USA 110, 20941–20946 (2013).

Unsworth, N., Brewer, G. A. & Spillers, G. J. Working memory capacity and retrieval from long-term memory: the role of controlled search. Mem. Cognit. 41, 242–254 (2013).

Gaskell, M. G. & Altmann, G. The Oxford Handbook of Psycholinguistics 1st ed, Vol 880 (Oxford University Press, 2007).

Nemeth, D. et al. Sleep has no critical role in implicit motor sequence learning in young and old adults. Exp. Brain Res. 201, 351–358 (2010).

Janacsek, K., Fiser, J. & Nemeth, D. The best time to acquire new skills: age-related differences in implicit sequence learning across the human lifespan. Dev. Sci. 15, 496–505 (2012).

Conway, A. R. A. et al. Working memory span tasks: a methodological review and user’s guide. Psychon. Bull. Rev. 12, 769–786 (2005).

Harrison, J. E., Buxton, P., Husain, M. & Wise, R. Short test of semantic and phonological fluency: normal performance, validity and test-retest reliability. Br. J. Clin. Psychol. 39, 181–191 (2000).

Langner, R. et al. Evaluation of the reliability and validity of computerized tests of attention. PLoS One 18, e0281196 (2023).

Piper, B. J. et al. Reliability and validity of neurobehavioral function on the psychology experimental building language test battery in young adults. PeerJ 3, e1460 (2015).

Fan, J., McCandliss, B. D., Sommer, T., Raz, A. & Posner, M. I. Testing the efficiency and independence of attentional networks. J. Cogn. Neurosci. 14, 340–347 (2002).