Abstract

The impact of face masks on social cognition and interaction became a popular research topic during the long-lasting COVID-19 pandemic. The topic remains in the focus of attention beyond the pandemic, since face covering not only reduces the overall amount of available face information but also introduces biases and prejudices that affect social perception at large. Many questions are still open. One of them is whether the gender of beholders affects inferring emotions from faces covered by masks. Reading covered faces may be particularly challenging for individuals with mental disorders, most of which are gender-specific. Previous findings are not only sparse but also inconclusive, because most research has been conducted online, with samples heavily dominated by females. Here, in a face-to-face study, females and males were presented with a randomized set of faces covered by masks. In a two-alternative forced-choice paradigm, participants had to indicate the facial emotions displayed by posers. In general, the outcome dovetails with earlier findings that face masks affect emotion recognition unevenly: inferring some emotions suffers more severely than inferring others, with the most pronounced influence of mask wearing on disgust and close-to-ceiling recognition of fear and neutral expressions. Contrary to our expectations, however, males were overall more proficient in emotion recognition. In particular, males substantially excelled in inferring disgust. The findings help to understand gender differences in the recognition of disgust, the forgotten emotion of psychiatry, which is of substantial value for a wide range of mental disorders including schizophrenia. Watch Prof. Marina Pavlova discussing her work and this article: https://vimeo.com/860126397/5966610f49?share=copy.


Introduction

The impact of face masks on social cognition and interaction became a valuable and popular research topic during the long-lasting COVID-19 pandemic, with its compulsory mask-wearing regulations. The topic remains in the focus of research attention beyond the pandemic, since face coverings not only reduce the overall amount of available face information but also introduce perceptual biases and prejudices that affect efficient social interaction and mental health at large1,2.

For inferring most emotional expressions (in particular, subtle ones), complementary information from the upper and lower parts of the face is desirable. Indeed, in daily life, beholders habitually have access to plenty of facial cues. Yet, it is assumed that the lower part of a face is essential for the recognition of happiness and disgust, the upper part for anger and fear, and both for surprise and sadness3,4. The very first studies in the field already indicated that not all emotions are equally affected by medical face masks covering the lower portion of a face2,5. Irrespective of differences in cultural/ethnic background (East Asians prioritize global information and fixate more on the center of a face, the nose area, and less on the eyes and mouth than Westerners6,7,8), digitally superimposing masks on photographs of faces consistently leads to a substantial decrease in inferring sadness and, in particular, disgust, as well as in their perceived intensity and in confidence in recognition9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27. In the same vein, in UK residents of different ethnicity (Caucasian, Black, and Asian/Pacific observers), face masks are reported to primarily hamper inferring disgust and sadness, while also having a substantial impact on the recognition of happiness28. In Turkish university students, no effects of either mask pattern (angular or curvy) or mask color (black or white) on facial emotion recognition were found, with all types of masks most strongly affecting afraid/fearful and disgusted faces13. Findings obtained with separate groups tested in May 2020 and July 2021 indicate that the unfavorable influence of face masks on sadness and disgust recognition still persists after more than a year of the pandemic14; in other words, habituation to, or experience with, masked faces appears to have a rather negligible impact on face reading.
Notably, comparable effects of face covering are obtained with female faces photographed while expressing emotions with a face mask on and with faces onto which a mask was artificially superimposed (except for anger), with the poorest recognition of disgust and sadness16. Brief exposure (250 ms) to masked faces results in a basically similar pattern, with recognition of facial disgust affected most strongly, along with a rather limited impact on the recognition of anger29.

There is much less agreement concerning the emotional expressions that are most resistant to face mask wearing. Experimental studies single out neutral expressions9,11,14,21,24,28 and happiness19. The primacy of anger, in the sense of its robustness to face masks, is also emphasized20,24,26,30, although opposite effects are described as well9,10,12,15,17,29. This discrepancy may be attributable to methodological issues, in particular, to differences in emotion expression across posers (Fig. 1) or across the face databases used, such as the MPI FACES database or the Radboud Faces Database. In some studies, the visual input for emotional impressions is hardly comparable in terms of head tilt, roll, and yaw. Moreover, cultural differences in emotion expression and experience may contribute to the inconsistency of the findings. For example, face masks hamper the recognition of happiness in US American but not in Japanese individuals31.

Anger expressed with different involvement of the upper and lower face parts, as in an Italian poser (left panel; from Proverbio and Cerri, 2022, Front Neurosci; the Creative Commons Attribution [CC BY] license) and in a face taken from the Vienna Emotion Recognition Task, VERT-K, with subsequent modification (right panel; from Grahlow et al., 2022, PLoS ONE; the Creative Commons Attribution [CC BY] license), may lead to different conclusions when facial expressions are studied under unusual viewing conditions, for example, when they are hidden behind a mask.

A pattern of results similar to that obtained with static photographs has been demonstrated with more ecologically valid dynamic faces. In videos of dynamic faces, masks impair inferring sadness, disgust, and happiness, leaving neutral expressions, fear, and social (fake/dishonest/polite) smiling largely untouched32,33. Face masks affect ratings of the extent to which reward, affiliation, and dominance smiles in moving faces convey positive feelings, reassurance, and superiority, respectively34. Rather unexpectedly, however, social smiles are reported to appear more honest in masked than in unmasked dynamic faces33. Under usual viewing conditions, social smiles are conveyed primarily by the mouth, with indifferent, cold eyes, whereas shining, warm eyes make smiles real35. Yet even when covered by masks, true smiles are rated as happy and pleasant; in other words, the glow of a real smile still shows36. However, when hidden behind a mask, happiness is often mistaken for a neutral expression, a poker face37. Notably, individuals with higher empathic concern demonstrate higher recognition levels for disgust in masked faces, but not for other basic emotions29. In principle, this agrees with the findings that neither emotional intelligence nor self-reported emotional intelligence affects emotion recognition in masked or unmasked faces14. In the same vein, neither affective nor cognitive empathy is tied to emotion recognition in masked faces27.

Reading covered faces may be particularly challenging for individuals with mental, neurological, and psychosomatic disorders that were characterized by deficient non-verbal social cognition even before the pandemic2. However, the data are extremely sparse and controversial. Most neuropsychiatric conditions are gender-specific (gender being a social construct reflecting social norms, roles, biases, and practices) and/or sex-specific (sex being a neurobiological construct), with a skewed ratio: females and males are differently affected in terms of prevalence, clinical manifestation, and symptom severity. Major depressive disorder (MDD) shows a female preponderance, with around twice as many women affected as men38. By contrast, schizophrenia (SZ) more often affects males, with a male-to-female ratio ranging from 1.4 to 1.6, and males show an earlier age of onset, worse premorbid functioning, and a greater severity of negative symptoms39. Moreover, males and females with SZ may possess distinct profiles of social cognition and metacognition40,41.

This raises the question of whether individuals with mental disorders exhibit gender differences in reading faces covered by masks. To date, only a handful of studies have addressed this issue even in typically developing (TD) individuals, and the outcome is inconclusive. The primary reason is that most studies have been conducted online with samples heavily dominated by females. This makes revealing gender differences questionable: gender comparisons in unbalanced samples may lead to paradoxical statistical outcomes. Studies with gender-balanced designs either report the absence of gender differences in static14,17,42 and dynamic masked faces34, or reveal female superiority in reading covered faces42,43. Females rate negative emotions covered by face masks as more negative, and positive emotions as more positive, than males do44.
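One well-known form of such a paradox is Simpson's paradox. The Python sketch below uses entirely hypothetical trial counts (not data from this or any cited study) to show how it can arise: within each of two task conditions, male observers are slightly more accurate, yet because most female trials fall into the easier condition, pooling over conditions reverses the comparison. The condition labels and all counts are illustrative assumptions.

```python
# Hypothetical (correct responses, total trials) per condition and observer gender.
# Invented numbers, chosen only to illustrate Simpson's paradox.
counts = {
    "easy": {"female": (810, 900), "male": (92, 100)},
    "hard": {"female": (60, 100), "male": (558, 900)},
}


def accuracy(correct, total):
    """Proportion of correct responses."""
    return correct / total


def pooled_accuracy(gender):
    """Accuracy after collapsing over conditions, ignoring how trials are distributed."""
    correct = sum(counts[cond][gender][0] for cond in counts)
    total = sum(counts[cond][gender][1] for cond in counts)
    return accuracy(correct, total)


for cond, groups in counts.items():
    print(f"{cond}: female {accuracy(*groups['female']):.2f}, "
          f"male {accuracy(*groups['male']):.2f}")
print(f"pooled: female {pooled_accuracy('female'):.2f}, "
      f"male {pooled_accuracy('male'):.2f}")
# → easy: female 0.90, male 0.92
# → hard: female 0.60, male 0.62
# → pooled: female 0.87, male 0.65
```

Within each condition males lead by two percentage points, whereas the pooled comparison favors females by a wide margin. The reversal is driven purely by how trials are distributed across conditions, which is one reason gender-balanced sampling matters.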

Covering faces with masks leaves roughly the same amount of visual information for face reading as the Reading the Mind in the Eyes Test (RMET), which consists of photographs of the eyes together with the surrounding part of the face2,45. Recent work indicates that the RMET predicts the accuracy of facial affect recognition in masked faces, whereas the Tromsø Social Intelligence Scale (TSIS) does not46. Given the well-documented (small but reliable) female advantage in reading the language of the eyes as assessed by the RMET2,45,47, one can expect females to be more skillful in reading emotions in masked faces as well. The present work aimed to clarify whether the gender of perceivers affects inferring emotions in faces covered by masks.

Methods

Participants

Overall, 53 participants (25 females and 28 males) took part in the study. The data sets of two male participants had to be discarded, since a routine check prior to data analysis revealed that they were outliers, with overall recognition accuracy beyond ±3 standard deviations (SDs) of that of the other male participants. Thus, the data of 26 male participants entered data processing. None of the participants had head injuries, a history of mental disorders (including autism spectrum disorders (ASD), SZ, and MDD), or regular drug intake (medication). Males were aged 23.69 ± 3.90 years (mean ± SD; median, Mdn, 23 years; 95% confidence interval, CI [23.08, 24.31]; age range 18–33 years) and females 22.28 ± 3.34 years (Mdn, 22 years; 95% CI [21.74, 22.82]; age range 18–31 years), with no age difference between the groups (Mann–Whitney test, U = 261, p = 0.230, two-tailed, n.s.). As the task required proficient command of the language, German as a native language (mother tongue) served as an inclusion criterion. We also strove for homogeneity with respect to cultural background, which can potentially affect the reading of masked faces31. The number of participants was determined prior to the study based on the demands of statistical data processing. As in previous work48,49,50,51, gender was self-identified by participants; there were no female participants with extremely masculine appearance and behavior, and vice versa. All observers had normal or corrected-to-normal vision. Participants were tested individually and were naïve as to the purpose of the study. None had previous experience with such displays and tasks. The study was conducted in line with the Declaration of Helsinki and approved by the local Ethics Committee at the University of Tübingen Medical School. Informed written consent was obtained from all participants. Participation was voluntary, and the data sets were processed anonymously.
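The exclusion rule can be sketched as follows. The text does not spell out the exact computation, so this Python sketch assumes a leave-one-out reading of "beyond ±3 SDs of other male participants": each participant's overall accuracy is compared with the mean and sample SD of the remaining participants of the same gender. The function name and the accuracy values are hypothetical.

```python
from statistics import mean, stdev


def loo_outliers(scores, k=3.0):
    """Return indices of scores lying beyond mean +/- k*SD of the remaining scores.

    Assumption: a leave-one-out reading of the +/-3 SD rule; the study does not
    state the exact computation.
    """
    flagged = []
    for i, x in enumerate(scores):
        others = scores[:i] + scores[i + 1:]  # everyone except participant i
        m, sd = mean(others), stdev(others)   # mean and sample SD of the others
        if abs(x - m) > k * sd:
            flagged.append(i)
    return flagged


# Hypothetical overall accuracies for one gender group (not the study's data).
accuracies = [0.82, 0.85, 0.80, 0.84, 0.83, 0.81, 0.86, 0.84, 0.52]
print(loo_outliers(accuracies))  # → [8]
```

Leaving the candidate out keeps an extreme value from inflating the SD it is judged against. With the same hypothetical numbers, a whole-sample z-score gives |z| ≈ 2.6 for the last participant, so the two readings of the rule can lead to different exclusions.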

Face stimuli, task, and procedure

The original face stimuli without masks were taken from the MPI FACES database52 with project-specific permission and then modified by superimposing face masks with a graphics editor9. Frontal photographs of six Caucasian individuals (three female and three male) from three distinct age groups (young, middle, and older age) were used. Each depicted person displayed six emotional states (anger, disgust, fear, happiness, sadness, and neutrality; Fig. 2). A typical beige face mask (a so-called “community mask” commonly used during the COVID-19 pandemic) was applied to all faces by means of a graphics editor and adapted individually to fit each specific face. Realistic shadow effects were added to improve the naturalistic impression of the images with masked faces (Fig. 2). The stimulus set comprised 36 images (6 emotions × 2 genders × 3 age groups), each presented three times per session, resulting in a total of 108 trials. As the task was designed for later use in patients, unlike in previous work9,14, we used only two response alternatives (one correct and one incorrect) rather than all six possible ones. Using only two response alternatives considerably decreases task difficulty (in terms of decision-making complexity as well as reliance on language proficiency and comprehension) and test duration, both of which are desirable when examining patients. The response alternative pairs were chosen primarily on the basis of emotion confusion data9,14: angry/disgusted, fearful/sad, and neutral/happy. To avoid possible transfer and passive learning effects on emotion recognition, we used masked faces only.
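The design described above (36 images, three presentations each, fixed response-alternative pairs) can be sketched as a trial-list generator. This is a hypothetical illustration, not the Presentation script used in the study. The field names and age-group labels are our own, and we assume that each run shows every image once in a freshly shuffled order with the correct answer placed left or right at random. The text states only that 108 trials were presented in pseudo-randomized order in three runs.

```python
import random
from itertools import product

EMOTIONS = ["angry", "disgusted", "fearful", "sad", "neutral", "happy"]
POSER_GENDERS = ["female", "male"]
AGE_GROUPS = ["young", "middle", "older"]
# Response-alternative pairs named in the text; each emotion's foil is its partner.
PAIRS = [("angry", "disgusted"), ("fearful", "sad"), ("neutral", "happy")]
FOIL = {}
for a, b in PAIRS:
    FOIL[a], FOIL[b] = b, a


def build_session(n_runs=3, seed=None):
    """Return n_runs lists of 2AFC trials, each run showing all 36 images once."""
    rng = random.Random(seed)
    images = list(product(EMOTIONS, POSER_GENDERS, AGE_GROUPS))  # 6 x 2 x 3 = 36
    runs = []
    for _ in range(n_runs):
        run = []
        for emotion, gender, age in images:
            options = [emotion, FOIL[emotion]]
            rng.shuffle(options)  # correct answer lands left or right at random
            run.append({"emotion": emotion, "poser_gender": gender,
                        "poser_age": age, "left": options[0], "right": options[1]})
        rng.shuffle(run)  # plain shuffle; any extra pseudo-randomization constraints are unknown
        runs.append(run)
    return runs


session = build_session(seed=1)
print(len(session), sum(len(run) for run in session))  # → 3 108
```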

Participants were administered a computerized version of the emotion recognition task using Presentation software (Neurobehavioral Systems, Inc., Albany, CA, USA). The stimuli subtended a visual angle of 9.8° × 9.8° at an observation distance of 70 cm. They were presented one at a time for 2 s in pseudo-randomized order, in three runs separated by short breaks. A schematic representation of the experimental procedure (a single trial) is given in Fig. 3. Upon image offset, two words (the correct and the incorrect response) appeared on the right and left sides of a black screen. The position of the correct response varied randomly across trials. Participants were asked to respond as accurately but also as quickly as possible once the response screen appeared (with a time limit of 5 s). On each trial, they had to indicate the displayed emotion by pressing the key on the side of the correct response. Once a response was given (or the time limit elapsed), a white fixation cross appeared for a duration jittered between 1.5 and 2 s before the start of the next trial. Instructions were carefully explained to participants, and their understanding was verified in a pre-test (about ten trials) performed under the supervision of an examiner. No immediate feedback was provided. The testing lasted about 15 min.
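The reported visual angle can be translated into an approximate on-screen image size with the standard relation size = 2 · d · tan(θ/2), where d is the viewing distance and θ the visual angle. The paper states only the angle and the distance; the roughly 12 cm × 12 cm size below is our derivation under this geometry (image centred on the line of sight), not a value reported by the authors.

```python
import math


def stimulus_size_cm(visual_angle_deg, distance_cm):
    """Physical extent that subtends visual_angle_deg at distance_cm (centred on the line of sight)."""
    return 2 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2)


print(f"{stimulus_size_cm(9.8, 70):.1f} cm")  # → 12.0 cm
```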

Each trial started with the presentation of a white fixation cross in the middle of the screen for 1.5–2 s, followed by a 2-s presentation of one of six facial emotional expressions hidden behind a mask. After stimulus presentation, participants had to indicate the displayed emotion within 5 s in a 2AFC task by choosing one of two response options (correct and incorrect; for example, either disgusted [correct] or angry [incorrect] facial expression). The face image is presented for illustrative purposes only and was not used as experimental material in the present study.
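The trial timing above bounds the pure testing time. The sketch below uses only the durations stated in the text (1.5–2 s fixation, 2 s stimulus, at most 5 s for the response, 108 trials). The constant names are our own, and breaks, instructions, and the pre-test are deliberately excluded.

```python
FIXATION_S = (1.5, 2.0)  # jittered fixation cross (min, max)
STIMULUS_S = 2.0         # masked face on screen
RESPONSE_LIMIT_S = 5.0   # maximum response window
N_TRIALS = 108


def session_duration_bounds(n_trials=N_TRIALS):
    """Lower/upper bounds on summed trial time in seconds, excluding breaks and the pre-test.

    Lower bound: shortest fixation and an instantaneous response.
    Upper bound: longest fixation and every response timing out.
    """
    shortest = n_trials * (FIXATION_S[0] + STIMULUS_S)
    longest = n_trials * (FIXATION_S[1] + STIMULUS_S + RESPONSE_LIMIT_S)
    return shortest, longest


low, high = session_duration_bounds()
print(f"{low / 60:.1f}-{high / 60:.1f} min")  # → 6.3-16.2 min
```

The reported testing time of about 15 min, which also includes short breaks, lies within these bounds.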

Data processing and analysis

Prior to statistical data processing, the normality of data distributions was routinely examined using Shapiro–Wilk tests, with subsequent use of either parametric (for normally distributed data sets) or non-parametric statistics. For data sets that were not normally distributed, Mdns and 95% CIs are reported in addition to means and SDs. Statistical inference was accomplished by means of mixed-model analyses of variance (ANOVAs, the outcome of which is reported to be robust to violations of normality53,54,55) and post-hoc pairwise comparisons using Tukey’s honestly significant difference (HSD) tests with the software package JMP (Version 16, SAS Institute, Cary, NC, USA). Non-parametric statistics (Mann–Whitney test and Wilcoxon signed-rank test) were performed for between- and within-group comparisons, respectively, with MATLAB (version 2022a; MathWorks Inc., Natick, MA, USA).
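The study ran its non-parametric tests in MATLAB. As an illustration of what the between-group test computes, the sketch below implements a two-sided Mann–Whitney U test in standard-library Python, using the large-sample normal approximation with tie correction and without continuity correction. These approximation choices are our assumptions; exact or continuity-corrected p-values from statistical packages can differ slightly, and packages differ in which U (or rank sum) they report. The Shapiro–Wilk screening step is not reimplemented here (scipy.stats.shapiro provides one), and the input values are toy numbers.

```python
from statistics import NormalDist


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the block of tied values
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test via the normal approximation.

    Includes the tie correction but no continuity correction; U is reported for x.
    """
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    tie_sizes = {}
    for v in pooled:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_sizes.values())
    sigma = (n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))) ** 0.5
    z = (u1 - n1 * n2 / 2) / sigma
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return u1, z, p


u, z, p = mann_whitney_u([1, 2, 3], [4, 5, 6])
print(u, round(z, 2), round(p, 3))  # → 0.0 -1.96 0.05
```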

Results

Recognition accuracy

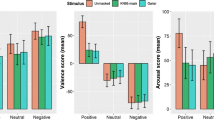

Individual correct response rates were submitted to a two-way mixed-model ANOVA with the within-subject factor Emotional Expression (angry, fearful, neutral, disgusted, happy, and sad) and the between-subject factor Observer Gender (female/male). The main effect of Gender was significant (F(1,245) = 4.17, p = 0.042; effect size, eta-squared η² = 0.079), albeit, contrary to our expectations, with higher emotion recognition accuracy in males than in females (Fig. 4). The main effect of Emotional Expression was highly significant (F(5,245) = 78.15, p < 0.0001; effect size, η² = 0.615). The Gender by Emotion interaction showed a trend toward significance (F(5,245) = 2.14, p = 0.062).
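The effect sizes reported for the main effect of Emotional Expression can be cross-checked against the F statistics with the standard conversion to partial eta-squared, η²p = F · df_effect / (F · df_effect + df_error). Whether the authors computed η² this way is our assumption. The sketch shows only that this conversion reproduces the reported values of 0.615 (accuracy) and 0.539 (response time, reported in the next subsection).

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta-squared from an F ratio: F*df_effect / (F*df_effect + df_error)."""
    return f * df_effect / (f * df_effect + df_error)


print(round(partial_eta_squared(78.15, 5, 245), 3))  # accuracy → 0.615
print(round(partial_eta_squared(57.33, 5, 245), 3))  # response time → 0.539
```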

In accord with previous findings (see “Introduction” section), the outcome shows that face masks disproportionately affect facial emotion recognition: the most recognizable (close to the ceiling level of performance, Fig. 4) were neutral expressions, fear, and happiness, whereas disgust, sadness, and anger turned out to be the least recognizable. As this analysis is beyond the focus of the present study, the outcome of post-hoc pairwise comparisons (two-tailed Tukey HSD, multiplicity adjusted) is provided in the Supplementary Material (Tables S1–S2). As seen from Table 1, which summarizes the outcome of the least squares means analysis (expressions with no differences in recognition are marked by the same letter), the most recognizable were neutral expressions and fear (with no difference between them, both marked by A), followed by happiness (marked by B), and then by sadness and anger (with no difference between them, both marked by C). Disgust was the most poorly recognized emotion.
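The letter coding used in Table 1 can be sketched as a simple "connecting letters" assignment. In this hypothetical Python illustration, the non-significant pairs (neutral/fear and sadness/anger) are taken from the description above, and every other pair is assumed to differ significantly. The letter D that the sketch assigns to disgust follows from that assumption; it is not stated in the text. This simplified version works only when non-different expressions form contiguous runs in the mean order; more general letter-display algorithms handle overlapping groupings.

```python
from itertools import combinations


def connecting_letters(order, significant):
    """Assign letters to labels sorted by mean (best first); shared letter = no significant difference.

    significant: set of frozensets, each a pair that differs significantly.
    Assumes non-different labels form contiguous runs in the sorted order.
    """
    def same(a, b):
        return frozenset((a, b)) not in significant

    n = len(order)
    runs = []
    for i in range(n):
        j = i
        # grow the run while the next label is non-different from every label already in it
        while j + 1 < n and all(same(order[k], order[j + 1]) for k in range(i, j + 1)):
            j += 1
        if not any(s <= i and j <= e for s, e in runs):  # skip runs nested in earlier ones
            runs.append((i, j))
    letters = {label: "" for label in order}
    for idx, (s, e) in enumerate(runs):
        for k in range(s, e + 1):
            letters[order[k]] += chr(ord("A") + idx)
    return letters


order = ["neutral", "fear", "happiness", "sadness", "anger", "disgust"]
not_different = {frozenset(("neutral", "fear")), frozenset(("sadness", "anger"))}
significant = {frozenset(pair) for pair in combinations(order, 2)} - not_different
print(connecting_letters(order, significant))
# → {'neutral': 'A', 'fear': 'A', 'happiness': 'B', 'sadness': 'C', 'anger': 'C', 'disgust': 'D'}
```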

Contrary to our expectations, males were more proficient than females in recognizing disgust (males, 0.61 ± 0.12, and females, 0.56 ± 0.19; t(49) = 3.34, p = 0.044, corrected, p = 0.007, uncorrected; Tukey HSD corrected, two-tailed; effect size Cohen’s d = 0.954). As seen in Fig. 4, no gender differences occurred for any other emotion. A further analysis (performed separately for female and male faces) showed that mainly female masked faces accounted for the difficulties in disgust recognition in both female and male beholders (Fig. 5). Both female and male participants recognized disgust substantially worse in female than in male masked faces (for females, Wilcoxon signed-rank test, z = 3.59, p < 0.0003, two-tailed, effect size d = 2.063; for males, z = 3.50, p < 0.0005, two-tailed, effect size d = 1.888). Consistent with the overall gender difference, males excelled in recognizing disgust in female faces and tended to be more proficient with male faces (for female faces, Mann–Whitney test, U = 231, p = 0.039; for male faces, U = 247, p = 0.072).
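The paper does not state how its effect sizes were computed. Two standard conversions reproduce the reported values. For the t-test, d = 2t/√df. For the Wilcoxon tests, r = z/√N followed by d = 2r/√(1 − r²), assuming N equals the number of observers in each group (25 females, 26 males). This is a consistency check under our assumptions, not a statement of the authors' procedure.

```python
import math


def d_from_t(t, df):
    """Cohen's d from an independent-samples t statistic: d = 2t / sqrt(df)."""
    return 2 * t / math.sqrt(df)


def d_from_z(z, n):
    """Cohen's d from a z statistic via r = z / sqrt(N), then d = 2r / sqrt(1 - r^2)."""
    r = z / math.sqrt(n)
    return 2 * r / math.sqrt(1 - r ** 2)


print(round(d_from_t(3.34, 49), 3))  # gender difference for disgust → 0.954
print(round(d_from_z(3.59, 25), 3))  # female observers, N = 25 → 2.063
print(round(d_from_z(3.50, 26), 3))  # male observers, N = 26 → 1.888
```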

Mean correct response rate for recognition of disgust hidden behind a mask in female (orange) and male (violet) participants, shown separately for female and male posers. Vertical bars represent ±SEM. Double asterisks indicate significant differences (p < 0.05); a single asterisk indicates a tendency (p = 0.07).

Response time

Individual response time (RT) values for correct responses were submitted to a two-way mixed-model ANOVA with the within-subject factor Emotional Expression (angry, fearful, neutral, disgusted, happy, and sad) and the between-subject factor Observer Gender (female/male). Note that the RT analysis plays only a secondary role, since participants had been asked to respond as soon as possible after stimulus offset. The main effect of Gender was not significant (F(1,245) = 1.22, p = 0.270, n.s.), whereas the main effect of Emotional Expression was highly significant (F(5,245) = 57.33, p < 0.0001; effect size, η² = 0.539). As seen in Fig. 4, in accord with the recognition accuracy analysis, the fastest responses were given for neutral expressions, fear, and happiness, while RTs for disgust and sadness were longer. The Gender by Emotion interaction failed to reach significance (F(5,245) = 0.97, p = 0.436, n.s.). Pairwise comparisons did not reveal gender differences in RT for any emotion.

Discussion

This work aimed to investigate the impact of gender on the recognition of facial emotions hidden behind a mask. The outcome indicates that: (i) Masks hamper the recognition of emotions (both accuracy and RT) to different degrees: while some emotions, such as happiness, fear, and neutral expressions, remain rather well recognizable even when a face is hidden behind a mask, others, such as anger, sadness and, in particular, disgust, are poorly recognizable. This is in close agreement with previous research2,5. (ii) Contrary to our expectations, however, males were, in general, more proficient than females in facial emotion recognition, in particular, in recognizing disgust behind a mask. A large body of earlier studies analyzing the impact of gender on reading covered faces has been conducted online with samples heavily dominated by females. Comparing genders in unbalanced samples may result in paradoxical statistical outcomes. A few studies (with gender-balanced designs) either report the absence of gender differences14,17 or reveal female superiority in reading covered faces43. In particular, women are reported to perform better for subtle expressions such as surprise and sadness, in both masked and whole-face conditions, whereas men excel in recognizing fear, especially in masked faces20. Research on reading the language of the eyes as assessed by the RMET also implies female superiority in reading masked faces45. However, this was not the case in the present study. Notably, males are reported to be more proficient than females in recognizing emojis (especially negative ones), while females are better at recognizing natural facial expressions56. The lack of fine-grained structure in emojis appears to favor males. Relying on such coarser cues may likewise benefit males when reading faces covered by masks.
(iii) Furthermore, compared with masked male faces, disgust expressed by masked female faces is particularly poorly recognized by both female and male beholders. These points are discussed in turn below.

Why is disgust in masked faces recognized so poorly?

It is widely believed that the eyes are the window to the soul45. Yet, in line with previous research, the present findings indicate that (i) not all emotions are equally affected by face masks covering the lower portion of a face, and (ii) digitally superimposing masks on photographs of faces consistently results in a substantial decrease in inferring sadness and disgust, as well as in their perceived intensity and in confidence in recognition9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27. The most probable reason is that disgust is expressed primarily by the lower part of the face, namely, by the mouth and nose3,4. In particular, disgust expressions predominantly consist of the nose wrinkle, lip corner pullers, and lower lip depressor34,57,58. Accordingly, longer fixation on the mouth is positively associated with recognition accuracy for disgust (as well as anger)59. Furthermore, disgust recognition benefits more than other basic emotions from audiovisual information as compared with video-only or audio-only conditions60. The eye region and its surroundings may look rather similar in sadness, disgust, and anger, which share similar activation of upper-face muscles (Fig. 2). This may lead to perceptual errors in which these emotions are mistaken for one another9,14,21,23.

Why do males excel in disgust recognition? Why is disgust less recognizable in female faces?

Recognition of disgust is heavily affected by a face mask in both female and male observers, although disgust is easily recognized by healthy people in unmasked faces9,14. The present study shows that disgust recognition is affected more severely in women than in men. One may ask why males are more proficient in reading disgust in covered faces. One possible explanation is that females and males use different gender-dependent perceptual strategies. For example, eye tracking indicates that females look at the eyes before looking at the mouth for angry, happy, and surprised, but not for disgusted, fearful, or sad facial expressions61. When information from the mouth is absent (hidden behind a mask), this strategy may be inefficient for disgust recognition. Different perceptual styles (either global/holistic or local) may also account for gender differences in reading disgust in masked faces. Females are believed to possess a rather holistic perceptual style, whereas males possess a rather local one, with an effortful piecemeal analysis of facial features and cues51,62,63,64,65,66,67. Presumably, face covering more heavily affects the holistic style, which requires seeing faces in their entirety, whereas a local processing style allows extracting subtle cues to disgust from the upper part of the face. This is in line with a study in Japanese individuals revealing that people who are capable of inferring complex mental states of others from subtle cues may be less susceptible to the negative impact of mask wearing25. Moreover, individuals with higher empathic concern demonstrate higher recognition levels for disgust in masked faces29. Reportedly, women not only experience emotional disgust more often but also spend more time attending to facial expressions of disgust than men do68. In our opinion, however, aversive behavior toward disgust seems more plausible.
In line with this, compared with female SZ patients, male individuals with SZ excel in recognizing disgust in unmasked faces69. This appears to agree with the present findings indicating that reading disgust in masked faces may be more demanding in female than in male faces, and for female than for male observers. One possible account is that female posers express disgust to an even greater degree with the lower part of the face, and to an even lesser degree with the upper part, than male posers do. These assumptions, however, call for further experimental support.

Reading covered faces in mental and neurological disorders

As mentioned earlier, reading covered faces may be particularly challenging for individuals with mental, neurological, neurodevelopmental, and psychosomatic disorders2. However, experimental evidence is sparse. In a comparison of patients with MDD, SZ, and bipolar disorder (BD) with TD individuals, patients with MDD and SZ exhibit the most difficulties in identifying subtle (but not intense) expressions of happiness70. While masks heavily impact the recognition of happiness and sadness in both TD and ASD individuals, reading of anger is unaffected in both groups. Yet, disgust recognition in masked faces is similarly diminished in both groups71. In the absence of high levels of comorbid alexithymia (difficulties in identifying and describing emotions experienced by oneself or others), no evidence is reported for deficient emotion recognition in masked faces in ASD72. By contrast, healthy individuals with higher scores on the AQ-10 (the 10-item Autism Spectrum Quotient) are less accurate and less confident in facial expression recognition, and perceive emotional expressions as less intense12. Yet another study in TD individuals reveals that reading emotions in masked faces is unrelated to alexithymia as assessed by the 20-item Toronto Alexithymia Scale, as well as to the expression of autistic traits as measured by the Autism Spectrum Quotient23. Individuals with developmental prosopagnosia (DP, a neurodevelopmental condition of neural and genetic origin characterized by lifelong deficits in face recognition73) exhibit the same level of facial emotion recognition in unmasked faces as neurotypical controls, but demonstrate deficits in subtle emotion recognition in masked faces, in particular, mistaking happiness for a neutral expression74. Facial emotion recognition is affected by masks in cognitively unimpaired patients with relapsing-remitting multiple sclerosis (MS); these patients also exhibit selective impairments in the recognition of fear in both unmasked and masked faces75.

In a nutshell, the primary novel outcome of the present study is that males are not outperformed by their female peers in reading basic emotions in faces covered by masks. In particular, reading disgust in masked faces is more demanding for females than for males, and in female than in male faces expressing disgust. These findings may help to explain gender/sex differences in disgust recognition, which is of substantial value for understanding and treating mental disorders. Disgust has been called the forgotten emotion of psychiatry76 and an emotion that explains everything77. Moreover, disgust is one of the primal emotions that define a uniquely human social cognitive domain57,58. Most importantly, a wide range of mental disorders (anxiety disorders, obsessive-compulsive disorder (OCD), specific phobias, depression, eating disorders, and body dysmorphia) are characterized by alterations in the expression and/or recognition of disgust. In response to disgust-eliciting (non-face, scenic) images, individuals with SZ exhibit altered brain activation in functional magnetic resonance imaging (fMRI), in particular, hyperactivation of the right temporal cortex78. In response to briefly exposed facial expressions of disgust, reduced fMRI activation of the insula is reported in patients with SZ; moreover, this activation is positively linked to social loneliness and negatively associated with agreeableness79. Many exciting research avenues remain to be explored for understanding the value of disgust for mental health.

Data availability

The data supporting the conclusions of this paper are either included in the paper or will be made available by the authors upon request to any qualified researcher.

References

Leder, J., Koßmann, L. & Carbon, C. C. Perceptions of persons who wear face coverings are modulated by the perceivers’ attitude. Front. Neurosci. 16, 988546 (2022).

Pavlova, M. A. & Sokolov, A. A. Reading covered faces. Cereb. Cortex 32, 249–265 (2022).

Bassili, J. N. Emotion recognition: the role of facial movement and the relative importance of upper and lower areas of the face. J. Pers. Soc. Psychol. 37, 2049–2058 (1979).

Wegrzyn, M., Vogt, M. S. L., Kireclioglu, B., Schneider, J. & Kissler, J. Mapping the emotional face. How individual face parts contribute to successful emotion recognition. PLoS ONE 12, e0177239 (2017).

Pavlova, M. A., Carbon, C. C., Coello, Y., Sokolov, A. A. & Proverbio, A. M. Editorial: Impact of face covering on social cognition and interaction. Front. Neurosci. 17, 1150604 (2023).

Blais, C., Jack, R. E., Scheepers, C., Fiset, D. & Caldara, R. Culture shapes how we look at faces. PLoS ONE 3, e3022 (2008).

Blais, C., Linnell, K. J., Caparos, S. & Estéphan, A. Cultural differences in face recognition and potential underlying mechanisms. Front. Psychol. 12, 627026 (2021).

Caldara, R. Culture reveals a flexible system for face processing. Curr. Dir. Psychol. Sci. 26, 249–255 (2017).

Carbon, C. C. Wearing face masks strongly confuses counterparts in reading emotions. Front. Psychol. 11, 566886 (2020).

Bani, M. et al. Behind the mask: emotion recognition in healthcare students. Med. Sci. Educ. 31, 1273–1277 (2021).

Noyes, E., Davis, J. P., Petrov, N. V., Brewer, R. & Ritchie, K. L. The effect of face masks and sunglasses on identity and expression recognition with super-recognizers and typical observers. R. Soc. Open Sci. 8, 201169 (2021).

Pazhoohi, F., Forby, L. & Kingstone, A. Facial masks affect emotion recognition in the general population and individuals with autistic traits. PLoS ONE 16, e0257740 (2021).

Blazhenkova, O., Dogerlioglu-Demir, K. & Booth, R. E. Masked emotions: do face mask patterns and colors affect the recognition of emotions? Cogn. Res. Princ. Implic. 7, 33 (2022).

Carbon, C. C., Held, M. J. & Schütz, A. Reading emotions in faces with and without masks is relatively independent of extended exposure and individual difference variables. Front. Psychol. 13, 856971 (2022).

Grahlow, M., Rupp, C. I. & Derntl, B. The impact of face masks on emotion recognition performance and perception of threat. PLoS ONE 17, e0262840 (2022).

Grenville, E. S. & Dwyer, D. E. Face masks have emotion-dependent dissociable effects on accuracy and confidence in identifying facial expressions of emotion. Cogn. Res. Princ. Implic. 7, 15 (2022).

Kim, G., Seong, S. R., Hong, S.-S. & Choi, E. Impact of face masks and sunglasses on emotion recognition in South Koreans. PLoS ONE 17, e0263466 (2022).

Kastendieck, T. & Hess, U. (Un)mask yourself! Effects of face masks on facial mimicry and emotion perception during the COVID-19 pandemic. Cogn. Emot. 36, 59–69 (2021).

Maiorana, N. et al. The effect of surgical masks on the featural and configural processing of emotions. Int. J. Environ. Res. Public Health 19, 2420 (2022).

Proverbio, A. M. & Cerri, A. The recognition of facial expressions under surgical masks: the primacy of anger. Front. Neurosci. 16, 864490 (2022).

Rinck, M., Primbs, M., Verpaalen, I. A. M. & Bijlstra, G. Face masks impair facial emotion recognition and induce specific emotion confusions. Cogn. Res. Princ. Implic. 7, 83 (2022).

Tsantani, M., Podgajecka, V., Brewer, R. & Cook, R. J. How does the presence of a surgical face mask impair the perceived intensity of facial emotions? PLoS ONE 17, e0262344 (2022).

Verroca, A., De Rienzo, C., Gambarota, F. & Sessa, P. Mapping the perception-space of facial expressions in the era of face masks. Front. Psychol. 13, 956832 (2022).

Gil, S. & Le Bigot, L. Emotional face recognition when a colored mask is worn: a cross-sectional study. Sci. Rep. 13, 956832 (2023).

Ikeda, S. Social sensitivity predicts accurate emotion inference from facial expressions in a face mask: a study in Japan. Curr. Psychol. https://doi.org/10.1007/s12144-023-04344-3 (2023).

Proverbio, A. M., Cerri, A. & Gallotta, C. Facemasks selectively impair the recognition of facial expressions that stimulate empathy: an ERP study. Psychophysiology 60, e14280 (2023).

Ventura, M. et al. Face memory and facial expression recognition are both affected by wearing disposable surgical face masks. Cogn. Process. 24, 43–57 (2022).

Cooper, H., Brar, A., Beyaztas, H., Jennings, B. J. & Bennetts, R. The effects of face coverings, own-ethnicity biases, and attitudes on emotion recognition. Cogn. Res. Princ. Implic. 7, 57 (2022).

Shepherd, J. & Rippon, D. The impact of briefly observing faces in opaque facial masks on emotion recognition and empathic concern. Q. J. Exp. Psychol. (Hove) 76, 404–418 (2022).

Levitan, C. A. et al. Mask wearing affects emotion perception. Iperception 13, 204166952211073 (2022).

Saito, T., Motoki, K. & Takano, Y. Cultural differences in recognizing emotions of masked faces. Emotion 23, 1648–1657 (2023).

Aguillon-Hernandez, N., Jusiak, R., Latinus, M. & Wardak, C. COVID-19 masks: a barrier to facial and vocal information. Front. Neurosci. 16, 982899 (2022).

Leitner, M. C., Meurer, V., Hutzler, F., Schuster, S. & Hawelka, S. The effect of masks on the recognition of facial expressions: a true-to-life study on the perception of basic emotions. Front. Psychol. 13, 933438 (2022).

Langbehn, A. T., Yermol, D., Zhao, F., Thorstenson, C. A. & Niedenthal, P. M. Wearing N95, surgical, and cloth face masks compromises the perception of emotion. Affect. Sci. 3, 105–117 (2022).

Bogodistov, Y. & Dost, F. Proximity begins with a smile, but which one? Associating non-Duchenne smiles with higher psychological distance. Front. Psychol. 8, 1374 (2017).

Sheldon, K. M., Goffredi, R. & Corcoran, M. The glow still shows: effects of facial masking on perceptions of Duchenne versus social smiles. Perception 50, 720–727 (2021).

Marini, M., Ansani, A., Paglieri, F., Caruana, F. & Viola, M. The impact of facemasks on emotion recognition, trust attribution and re-identification. Sci. Rep. 11, 5577 (2021).

Salk, R. H., Hyde, J. S. & Abramson, L. Y. Gender differences in depression in representative national samples: meta-analyses of diagnoses and symptoms. Psychol. Bull. 143, 783–822 (2017).

Santos, S., Ferreira, H. L., Martins, J., Gonçalves, J. C. S. & Castelo-Branco, M. Male sex bias in early and late onset neurodevelopmental disorders: shared aspects and differences in Autism Spectrum Disorder, Attention Deficit/Hyperactivity Disorder, and Schizophrenia. Neurosci. Biobehav. Rev. 135, 104577 (2022).

Ferrer-Quintero, M. et al. The effect of sex on social cognition and functioning in schizophrenia. NPJ Schizophr. 7, 57 (2021).

Kubota, R. et al. Sex differences in social cognition and association of social cognition and neurocognition in early course schizophrenia. Front. Psychol. 13, 867468 (2022).

Wong, H. K. & Estudillo, A. J. Face masks affect emotion categorisation, age estimation, recognition, and gender classification from faces. Cogn. Res. Princ. Implic. 7, 91 (2022).

Grundmann, F., Epstude, K. & Scheibe, S. Face masks reduce emotion-recognition accuracy and perceived closeness. PLoS ONE 16, e0249792 (2021).

Calbi, M. et al. The consequences of COVID-19 on social interactions: an online study on face covering. Sci. Rep. 11, 2601 (2021).

Pavlova, M. A. & Sokolov, A. A. Reading language of the eyes. Neurosci. Biobehav. Rev. 140, 104755 (2022).

Swain, R. H., O’Hare, A. J., Brandley, K. & Gardner, A. T. Individual differences in social intelligence and perception of emotion expression of masked and unmasked faces. Cogn. Res. Princ. Implic. 7, 54 (2022).

Baron-Cohen, S. et al. The “reading the mind in the eyes” test: complete absence of typical sex difference in ~400 men and women with autism. PLoS ONE 10, e0136521 (2015).

Isernia, S., Sokolov, A. N., Fallgatter, A. J. & Pavlova, M. A. Untangling the ties between social cognition and body motion: gender impact. Front. Psychol. 11, 138 (2020).

Pavlova, M. A. et al. Ties between reading faces, bodies, eyes, and autistic traits. Front. Neurosci. 16, 997263 (2022).

Kubon, J. et al. Neural circuits underpinning face tuning in male depression. Cereb. Cortex 33, 3827–3839 (2023).

Romagnano, V., Sokolov, A. N., Fallgatter, A. J. & Pavlova, M. A. Do subtle cultural differences sculpt face pareidolia? Schizophrenia 9, 28 (2023).

Ebner, N. C., Riediger, M. & Lindenberger, U. FACES - A database of facial expressions in young, middle-aged, and older women and men: development and validation. Behav. Res. Methods 42, 351–362 (2010).

Lix, L. M., Keselman, J. C. & Keselman, H. J. Consequences of assumption violations revisited: a quantitative review of alternatives to the one-way analysis of variance F test. Rev. Educ. Res. 66, 579–619 (1996).

Blanca, M. J., Alarcón, R., Arnau, J., Bono, R. & Bendayan, R. Non-normal data: is ANOVA still a valid option? Psicothema 29, 552–557 (2017).

Blanca, M., Arnau, J., García-Castro, F. J., Alarcón, R. & Bono, R. Non-normal data in repeated measures ANOVA: impact on Type I error and power. Psicothema 35, 21–29 (2023).

Dalle Nogare, L., Cerri, A. & Proverbio, A. M. Emojis are comprehended better than facial expressions, by male participants. Behav. Sci. 13, 278 (2023).

Rozin, P., Lowery, L. & Ebert, R. Varieties of disgust faces and the structure of disgust. J. Pers. Soc. Psychol. 66, 870–881 (1994).

Chapman, H. A. & Anderson, A. K. Understanding disgust. Ann. N. Y. Acad. Sci. 1251, 62–76 (2012).

Duran, N. & Atkinson, A. B. Foveal processing of emotion-informative facial features. PLoS ONE 16, e0260814 (2021).

Zheng, Y. & Hsiao, J. H. Differential audiovisual information processing in emotion recognition: an eye-tracking study. Emotion 23, 1028–1039 (2023).

Hall, J., Hutton, S. B. & Morgan, M. J. Sex differences in scanning faces: does attention to the eyes explain female superiority in facial expression recognition? Cogn. Emot. 24, 629–637 (2010).

McKone, E. et al. Importance of the inverted control in measuring holistic face processing with the composite effect and part-whole effect. Front. Psychol. 4, 33 (2013).

Boccia, M. et al. Why do you like Arcimboldo’s portraits? Effect of perceptual style on aesthetic appreciation of ambiguous artworks. Atten. Percept. Psychophys. 76, 1516–1521 (2014).

Boccia, M. et al. Do you like Arcimboldo’s? Esthetic appreciation modulates brain activity in solving perceptual ambiguity. Behav. Brain Res. 278, 147–154 (2015).

Pavlova, M. A., Scheffler, K. & Sokolov, A. N. Face-n-Food: gender differences in tuning to faces. PLoS ONE 10, e0130363 (2015).

Pavlova, M. A., Mayer, A., Hösl, F. & Sokolov, A. N. Faces on her and his mind: female and likable. PLoS ONE 11, e0157636 (2016).

Romagnano, V., Sokolov, A. N., Steinwand, P., Fallgatter, A. J. & Pavlova, M. A. Face pareidolia in male schizophrenia. Schizophrenia 8, 112 (2022).

Kraines, M. A., Kelberer, L. J. A. & Wells, T. T. Sex differences in attention to disgust facial expressions. Cogn. Emot. 31, 1692–1697 (2016).

Fernandez-Modamio, M. et al. Emotion recognition in patients with schizophrenia: the role of sex. Psicothema 32, 197–203 (2020).

Escelsior, A. et al. COVID-19 and psychiatric disorders: the impact of face masks in emotion recognition face masks and emotion recognition in psychiatry. Front. Psychiatry 13, 932791 (2022).

Ventura, M. et al. Investigating the impact of disposable surgical face‐masks on face identity and emotion recognition in adults with autism spectrum disorder. Autism Res. 16, 1063–1077 (2023).

Gehdu, B. K., Tsantani, M., Press, C., Gray, K. L. & Cook, R. Recognition of facial expressions in autism: effects of face masks and alexithymia. Q. J. Exp. Psychol. https://doi.org/10.1177/17470218231163007 (2023).

Grüter, T., Grüter, M. & Carbon, C. C. Neural and genetic foundations of face recognition and prosopagnosia. J. Neuropsychol. 2, 79–97 (2008).

Tsantani, M., Brewer, R. & Cook, R. J. New evidence of impaired expression recognition in developmental prosopagnosia. Cortex 154, 15–26 (2022).

Ziccardi, S., Crescenzo, F. & Calabrese, M. “What is hidden behind the mask?” Facial emotion recognition at the time of COVID-19 pandemic in cognitively normal multiple sclerosis patients. Diagnostics 12, 47 (2021).

Phillips, M. L., Senior, C., Fahy, T. & David, A. S. Disgust – the forgotten emotion of psychiatry. Br. J. Psychiatry 172, 373–375 (1998).

Young, M. How Disgust Explains Everything. The New York Times https://www.nytimes.com/2021/12/27/magazine/disgust-science.html (2021).

Garcia-Leon, M. A. et al. Altered brain responses to specific negative emotions in schizophrenia. Neuroimage Clin. 32, 102894 (2021).

Lindner, C. et al. Social alienation in schizophrenia patients: association with insula responsiveness to facial expressions of disgust. PLoS ONE 9, e85014 (2014).

Acknowledgements

We are grateful to the participants for their engagement in the study, to Valentina Romagnano, Annika Resch, and Alessandro Lecchi for assistance with the study and manuscript preparation, and to Dr. Michael Erb for his help with the stimulus presentation program. We acknowledge the support of the German Research Foundation (DFG, PA847/25-1 and FA331/31-1) to M.A.P. and A.J.F., respectively, and of the Reinhold Beitlich Foundation to M.A.P. and A.N.S. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. We acknowledge support by the Open Access Publishing Fund of the University of Tübingen.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

Conceived and designed the study: M.A.P. and A.N.S. Performed testing: J.M. Analyzed the data: J.M., A.N.S., and M.A.P. Contributed materials/analysis tools: M.A.P., C.C.C., A.N.S., and A.J.F. Participant recruitment: J.M. Wrote the paper: M.A.P., A.N.S., and J.M. All co-authors contributed to the writing and editing. Supervision and administration of the whole project: M.A.P.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pavlova, M.A., Moosavi, J., Carbon, CC. et al. Emotions behind a mask: the value of disgust. Schizophr 9, 58 (2023). https://doi.org/10.1038/s41537-023-00388-3

DOI: https://doi.org/10.1038/s41537-023-00388-3