Abstract

Quantum circuit algorithms often require architectural design choices analogous to those made in constructing neural and tensor networks. These tend to be hierarchical, modular and exhibit repeating patterns. Neural Architecture Search (NAS) attempts to automate neural network design through learning network architecture and achieves state-of-the-art performance. We propose a framework for representing quantum circuit architectures using techniques from NAS, which enables search space design and architecture search. We use this framework to justify the importance of circuit architecture in quantum machine learning by generating a family of Quantum Convolutional Neural Networks (QCNNs) and evaluating them on a music genre classification dataset, GTZAN. Furthermore, we employ a genetic algorithm to perform Quantum Phase Recognition (QPR) as an example of architecture search with our representation. Finally, we implement the framework as an open-source Python package to enable dynamic circuit creation and facilitate circuit search space design for NAS.

Similar content being viewed by others

Introduction

Machine learning using trainable quantum circuits provides promising applications for quantum computing1,2,3,4. Among various parameterised quantum circuit (PQC) models, the Quantum Convolutional Neural Network (QCNN) introduced in ref. 5 stands out for its shallow circuit depth, absence of barren plateaus6, and good generalisation capabilities7. It has been implemented experimentally8 and combines techniques from Quantum Error Correction (QEC), Tensor Networks (TNs) and deep learning. Research at this intersection has been fruitful, yielding deep-learning solutions for quantum many-body problems9,10,11,12, quantum-inspired insights for deep learning13,14,15 and equivalences between them16,17,18. Deep learning has been widely successful in recent years with applications spanning from content filtering and product recommendations to aided medical diagnosis and scientific research. Its main characteristic, learning features from raw data, eliminates the need for manual feature design by experts19. AlexNet20 demonstrated this and marked the shift in focus from feature design to architecture design21. Naturally, the next step is learning network architecture, which Neural Architecture Search (NAS) aims to achieve22. NAS has already produced state-of-the-art deep-learning models with automatically designed architectures21,23,24,25. NAS consist of three main categories: search space, search strategy and performance estimation strategy22. The search space defines the set of possible architectures that a search algorithm can consider, and carefully designed search spaces help improve search efficiency and reduce computational complexity26. Search space design often involves encoding architectures using a cell-based representation. Usually, a set of primitive operations, such as convolutions or pooling, are combined into a cell to capture some design motif (compute graph). Different cells are then stacked to form a complete architecture. Cell-based representations are popular because they can capture repeated motifs and modular design patterns, which are often seen in successful hand-crafted architectures. Similar patterns also appear in quantum circuit designs5,27,28,29,30,31. For example, Grant et al.27 use hierarchical architectures based on tensor networks to classify classical and quantum data. Similarly, Cong et al.5 use the multiscale entanglement renormalisation ansatz (MERA) as an instance of their proposed QCNN and discuss generalisations for quantum analogues of convolution and pooling operations. In this work, we formalise these design patterns by providing a hierarchical representation for quantum circuits. We use the QCNN as a basis and illustrate search space design and architecture search with NAS for PQCs.

The QCNN belongs to the class of hybrid quantum-classical algorithms, in which a quantum computer executes the circuit, and a classical computer optimises its parameters. Two key factors must be considered when using PQCs for machine learning: the method of data encoding (feature map)32,33 and the choice of a quantum circuit34,35,36. Both the challenge and objective are to find a suitable quantum circuit for a given feature map that is expressive and trainable33. The typical approach to finding a circuit is to keep the architecture (gates layout) fixed and to optimise continuous parameters such as rotation angles. Optimising architecture is referred to as variable structure ansatz in literature and is generally not the focus because of its computational complexity2. However, the architecture of a circuit can improve its expressive power and the effectiveness of initialisation techniques28. Also, the QCNN’s defining characteristic is its architecture, which we found to impact model performance significantly. Therefore, we look towards NAS to optimise architecture in a quantum circuit setting. This approach, sometimes referred to as quantum architecture search (QAS)37,38, has shown promising results for the variational quantum eigensolver (VQE)39,40,41,42, the quantum approximate optimisation algorithm (QAOA)43,44 and general architecture search37,38,45,46,47. However, these approaches are often task-specific or impose additional constraints, such as circuit topology or allowable gates, to make them computationally feasible. To the best of the author’s knowledge, there is currently no framework that can generate hierarchical architectures such as the QCNN without imposing such constraints.

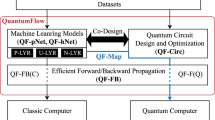

One problem with the cell-based representation for NAS is that the macro architecture, the sequence of cells, is fixed and must be chosen22. Recently, Liu et al.26 proposed a hierarchical representation as a solution, where a cell sequence acts as the third level of a multi-level hierarchy. In this representation, lower-level motifs act as building blocks for higher-level ones, allowing both macro and micro architecture to be learned. In this work, we follow a similar approach and represent a QCNN architecture as a hierarchy of directed graphs. On the lowest level are primitive operations such as convolutions and pooling. The second level consists of sequences of these primitives, such as convolution-pooling or convolution–convolution units. Higher-level motifs then contain sequences of these lower-level motifs. For example, the third level could contain a sequence of three convolution-pooling units, as seen in Fig. 1d. For the primitives, we define hyperparameters such as strides and pooling filters that control their architectural effect. This way, the representation can capture design motifs on multiple levels, from the distribution of gates in a single layer to overall hierarchical patterns such as tensor tree networks. We demonstrate this by generating a family of QCNN architectures based on popular motifs in literature. We then benchmark this family of models and show that alternating architecture has a greater impact on model performance than other modelling components. By alternating architecture, we mean the following: given a quantum circuit that consist of n unitary gates, an altered architecture consists of the same n gates rearranged in a different way on the circuit. The types of rearrangements may be changing which qubits the gates act upon, altering the order of gate occurrences, or adjusting larger architectural motifs, such as pooling specific qubits (stop using them) while leaving others available for subsequent gates and so on. We create architectural families to show the impact of alternating architecture, any two instances of the family will have the exact same unitaries, just applied in a different order on different qubits. Consider the machine-learning pipeline for classifying musical genres from audio signals, seen in Fig. 1. We start with a 30-s recording of a song (Fig. 1a) and transform it in two ways. The first is tabular form (Fig. 1b), derived from standard digital signal processing statistics of the audio signal. The second is image form (Fig. 1c), constructed using a Mel-frequency spectrogram. Both datasets are benchmarked separately, with their own data preprocessing and encoding techniques applied. For the tabular data, we test Principal Component Analysis (PCA) and tree-based feature selection before encoding it in a quantum state using either qubit, IQP, or amplitude encoding. Once encoded, we choose two-qubit unitary ansatzes (Supplementary Fig. 1) Um and Vm for the convolution and pooling primitives m = 1, 2, …, 6, as shown in Fig. 1d. We test them across different instances of an architecture family. Of all the components in this pipeline, alternating architecture, that is changing how each Um and each Vm are spread across the circuit, had the greatest impact on model performance. In addition to our theoretical framework, we implement it as an open-source Python package to enable dynamic QCNN creation and facilitate search space design for NAS. It allows users to experimentally determine suitable architectures for specific modelling setups, such as finding circuits that perform well under a specific noise or hardware configuration, which is particularly relevant in the Noisy Intermediate-Scale Quantum (NISQ)48 era. In addition, as more qubits become available, the hierarchical nature of our framework provides a natural way to scale up the same model. In summary, our contributions are the architectural representation for QCNNs, a Python package for dynamic QCNN creation, and experimental results on the potential advantage of architecture search in a quantum setting.

The machine-learning pipeline we implemented for music genre classification. Given an audio signal of a song (a), we generate two forms of data: tabular (b) and image (c). Each form has data preprocessing applied before being encoded into a quantum state (d). The QCNN circuit shown in (d) favours Principal Component Analysis (PCA) because qubits are pooled from bottom to top, and principal components are encoded from top to bottom. This architecture is an instance of the reverse binary tree family that we generated with our framework.

The remainder of this paper is structured as follows: we begin with our main results by summarising the architectural representation for QCNNs and then show the effect of alternating architecture, justifying its importance. We then provide an example of architecture search with our representation by employing an evolutionary algorithm to perform QPR. Following this, we give details of our framework by providing a mathematical formalism for the representation and describing its use. Next, with the formalism at hand, we show how it facilitates search space design by describing the space we created for the benchmark experiments. We then discuss generalisations of formalism and the applicability of our representation with search algorithms. After this, we elaborate on our experimental setup in “Methods”. Finally, we discuss applications and future steps.

Results

Architectural representation

Figure 2 shows our architectural representation for QCNNs. We define two-qubit unitary ansatzes from a given set of gates, and capture design motifs \({M}_{k}^{l}\) on different levels l of the hierarchy. On the lowest level l = 1, we define primitives which act as building blocks for the architecture. For example, a convolution operation with stride one is encoded as the directed graph \({M}_{1}^{1}\), and with stride three as \({M}_{2}^{1}\). The directed graph \({M}_{3}^{1}\) is a pooling operation that measures the bottom half of the circuit, and \({M}_{4}^{1}\) measures from the inside outwards. Combined, they can form higher-level motifs such as convolution-pooling units \({M}_{1}^{2}\) (e), convolution–convolution units \({M}_{2}^{2}\), or convolution-pooling-convolution units \({M}_{3}^{2}\). The highest level l = L contains only one motif \({M}_{1}^{L}\), the complete QCNN architecture. \({M}_{1}^{L}\) is a hierarchy of directed graphs fully specifying how to spread the unitary ansatzes across the circuit. This hierarchical representation is based on the one from ref. 26 for deep neural networks (DNNs), and allows for the capture of modularised design patterns and repeated motifs. The two lines of code (e) and (f) show the power of this representation as it is all that is required to create the entire QCNN circuit from Fig. 1d. The code comes from the Python package we implemented based on the work of this paper. It facilitates dynamic QCNN creation and search space design.

An overview of our architectural representation for QCNNs. From a given set of gates, we build two-qubit unitary ansatzes. The representation then captures design motifs \({M}_{k}^{l}\) on different levels l of the hierarchy. On the lowest level l = 1, we define primitives which act as building blocks for the architecture. For example, a convolution operation with stride one is encoded as the directed graph \({M}_{1}^{1}\). The directed graph \({M}_{3}^{1}\) is a pooling operation that measures the bottom half of the circuit. Combined, they form the level two motif (e): a convolution-pooling unit \({M}_{1}^{2}\). Higher-level motifs consist of combinations of lower-level motifs up until the final level l = L, which contains only one motif \({M}_{1}^{L}\), the complete QCNN architecture. \({M}_{1}^{L}\) is a hierarchy of directed graphs fully specifying how to spread the unitary ansatzes across the circuit. The two lines of code (e) and (f) show the power of this representation as it is all that is required to create the entire QCNN circuit from Fig. 1d. The code comes from the Python package we implemented based on the work of this paper. It facilitates dynamic QCNN creation and search space design.

Architectural impact

The details regarding specific notation and representation of the framework are given after this section, first we justify it with the following experimental results. We also give background on QCNNs in Supplementary Note 1 and on quantum machine learning in Supplementary Note 2 for more context. To illustrate the impact of architecture on model performance, we compare the fixed architecture from the experiments of ref. 29 to other architectures in the same family while keeping all other components the same. The only difference in each comparison is architecture (how the unitaries are spread across the circuit). The architecture in ref. 29 is represented within our framework as: (sc, F*, sp) = (1, even, 0) ↦ Qfree(8) + (Qconv(1) + Qpool(0, Feven)) × 3, see Algorithm 1. To evaluate their performance, we use the country vs rock genre pair, which proved to be one of the most difficult classification tasks from the 45 possible combinations. We compare eight unitary ansatzes with different levels of complexity, shown in Supplementary Fig. 1.

Table 1 shows the results of the comparisons, the reference architecture is as described above and the discovered alteration found via random search. We note the first important result, we improved the performance of every ansatz, in one case, by 18.05%, through the random search of the architecture space. Ansatz refers to the two-qubit unitary used for the convolution operation of a model. For example, the model in Fig. 1d is described by (1, right, 0) and ansatz (a) corresponds to U1, U2 and U3 being the circuit in Supplementary Fig. 1a. Each value represents the average model accuracy and standard deviation from 30 separate trained instances on the same held-out test set.

The second important result is that alternating architecture can improve model performance without increasing complexity. For instance, the best-performing model for the reference architecture is with ansatz (g), which has an average accuracy of 73.24%. However, this ansatz causes the model to have 10 × 3 = 30 parameters. In contrast, by alternating the architecture with the simplest ansatz (a), the model outperformed the best reference model with an average accuracy of 75.14% while only having 3 × 2 = 6 parameters. The parameter counts come from each model having N = 8 qubits and the same number of unitaries, 3N − 2 → 3(8) − 2 = 22, of which 13 are for convolutions. See “Search space design” and Algorithm 1 for more details. A model has three convolutions, and each convolution shares weights between its two-qubit unitaries. This means that the two-qubit unitary ansatz primarily determines the number of parameters to optimise for a model. For example, a model with ansatz (a) have 2 × 3 = 6 parameters to optimise because the ansatz has two parameters.

Another interesting result is for ansatz (c), the reference architecture could only obtain an average accuracy of 52.69%, indicating its inability to find any kind of local minimum during training, leading one to think it might be a barren plateau. But, the altered architecture was able to find a local minima and improve the average accuracy by 18.05%.

We would like to note that our primary objective in these experiments is to demonstrate the potential for performance improvement. As such, we only conducted random search for ~2 h on an i7-1165G7 processor for each ansatz. Consequently, for higher parameter ansatzes, which correspond to longer training times, the search space was less explored. This is likely the reason behind the observed decrease in performance improvement for larger parameter ansatzes. Therefore the observed improvements are all lower bounds for the potential performance increase from alternating architecture. We anticipate that significantly better architectures may still exist within the space. Table 2 presents the performance of the family of reverse binary trees (as described in Algorithm 1) for ansatz (a). Due to its quick training time, ansatz (a) was the only case for which we managed to exhaust the search space (168 architectures). In “Search space design”, we discuss how the size of the family can be easily increased or decreased. Each value represents the average accuracy of five trained instances on the country vs rock genre pair. The overall accuracy of the whole space is 63.11%, indicating that the reference architecture from Table 1 was close to the mean performance. The best-performing architecture in this space is (sc, F*, sp) = (6, left, 2), with an average accuracy of 75.93%. This is the alteration from Table 1 discovered through random search within the family of reverse binary trees. It seems that the combination of Fleft and sc = 6 performs particularly well for this task, with an average accuracy of 72.52%. In general, it appears that the convolution stride sc and pooling filter F* have the most significant impact on performance. It is also worth noting that convolution strides of sc = 3, 4, 5 performed poorly compared to the other values. The range of performance in this space goes from a minimum of 43.75% to a maximum of 75.93%, demonstrating the potential impact of architectural choices on model performance.

Finally, we compared the performance of two different architectures on the image data across all genres. This time, we used ansatz (g) to compare the \({F}_{m}^{{{{\rm{right}}}}}\) and \({F}_{m}^{{{{\rm{even}}}}}\) pooling filters, shown in Figs. 3 and 4. The image data is a low-resolution (8 × 32 = 256 = 28 pixels) spectrogram of the audio signal. We did not expect high accuracy from this data, but were interested in the variation of performance for different architectures. Figures 3 and 4 show the difficulty of some genre pairs. Interestingly, the \({F}_{m}^{{{{\rm{right}}}}}\) pooling filter outperformed the \({F}_{m}^{{{{\rm{even}}}}}\) filter on almost all genres. If we focus on the genre pairs that the models were able to classify, we see that \({F}_{m}^{{{{\rm{right}}}}}\) had 14 models that achieved an accuracy above 75%, compared to the 5 of \({F}_{m}^{{{{\rm{even}}}}}\). We also note that the image data had no PCA or tree-based feature selection applied to it, and the \({F}_{m}^{{{{\rm{right}}}}}\) filter was still favoured. A similar result was obtained with ansatz (a). This shows architecture impacts performance even on low-resolution data.

Architectural search

In this section, we present an example of applying our architectural representation in conjunction with evolutionary search to perform Quantum Phase Recognition (QPR). The specifics of the search algorithm can be found in "Generalisation and search” but we utilise an algorithm similar to the one employed in ref. 26. Mutations involve replacing a primitive within a motif with a randomly generated one, while crossover consists of combining two motifs end-to-end, if possible, or interweaving them otherwise. To facilitate comparison, we consider the same task and setup from the original QCNN paper5. The objective is to recognise a \({{\mathbb{Z}}}_{2}\times {{\mathbb{Z}}}_{2}\) symmetry-protected topological (SPT) phase for a ground state that belongs to a family of cluster-Ising Hamiltonians49:

Here, Xi, Zi are Pauli operators acting on the spin at site i and the SPT phase contains a S = 1 Haldane chain50. The ground state can belong to an SPT, paramagnetic or antiferromagnetic phase depending on the values of h1, h2, and J. Our goal is to identify a QCNN capable of distinguishing between SPT and other phases by measuring a single qubit. Following the approach in ref. 5, we consider a system of N = 15 spins and train a circuit on 40 equally spaced points along h2 = 0, where the ground state is known to be in the SPT phase when h1 ≤ 1. We also evaluate the circuit with the same sample complexity5:

where P represents the probability of measuring a non-zero expectation value and P0 = 0.5. Equation (2) calculates the minimum number of measurements required to be 95% confident that P ≠ 0.5, with P being the expectation value of the circuit U encoded with the ground state \(\left\vert {\psi }_{g}\right\rangle\) transformed into a probability: \(p=(\left\langle {\psi }_{g}\right\vert U\left\vert {\psi }_{g}\right\rangle +1)/2\). Therefore a well-performing QCNN will yield low values of \({M}_{\min }\) near the phase boundary for points within the SPT phase. We define the fitness of an architecture as a linear combination of the sample complexity values Min, Mmiddle for points in the SPT phase, and the mean squared error MSEout for points outside the boundary. Figure 5 illustrates the points considered for Min, Mmiddle and MSEout. During search we assigned the majority of the weight to Min as the goal is to develop a model that confidently identifies SPT phases near the boundary. To prevent a model from classifying all points as SPT, MSEout is included, while Mmiddle ensures overall good performance. Finally, during search we added a regularisation term for the number of parameters, to find well-performing architectures with low computational complexity.

Expectation values for the circuit found via evolutionary search for a system of N = 15 spins. Points represent a test set of 64 × 64 ground states for various h1 and h2 values of the Hamiltonian, J = 1. The inside, middle and outside points were used to evaluate an architecture’s fitness during search. The same colour scale as in5 is used to facilitate comparison.

Table 3 and Fig. 5 show the performance of the best architecture found during search. The search algorithm identified a QCNN with only 11 parameters, in contrast to the 1308 parameters of the original reference architecture. For points in the SPT phase near the boundary, the sample complexity of the discovered architecture (Min = 36.079) is lower than that of the reference (61.523), resulting in 25 fewer measurements required on average. Although the reference architecture exhibits slightly better sample complexity for points in the middle of the phase boundary (Mmiddle = 10.992) compared to the discovered architecture (Mmiddle = 13.253), and a marginally lower MSE for points outside the phase boundary (MSEout = 0.164 compared to MSEout = 0.167), the improvements in Min and the number of parameters are substantial and more advantageous. The discovered architecture is shown in Supplementary Fig. 2, and the phase diagram it generates is shown in Fig. 5. The search was conducted on a system equipped with two Intel Xeon E5-2640 processors (2.0 GHz) and 128 GB of RAM, and it took ~2 h to discover the final architecture (over 831 generations). Although we anticipate that extending the search may yield even better architectures, the primary goal of this experiment was to demonstrate a representative example of the search process and showcase the ease of obtaining promising results. This emphasises the potential advantages of architecture search in quantum computing tasks, where the computational cost of a circuit can be reduced while maintaining or even improving performance. We attribute this success to a well-defined search space, with our representation aiming to simplify the process of creating such spaces. Moreover, our representation allows for the incorporation of hardware constraints, facilitating the search for architectures that perform well on specific quantum devices. We believe this to be a necessary step towards the development of efficient quantum algorithms for real-world applications. By employing a well-structured representation and search space, we can streamline the process of discovering optimised quantum circuit architectures that are better suited for specific tasks and hardware.

Digraph formalism

We represent the QCNN architecture as a sequence of directed graphs, each acting as a primitive operation such as a convolution (Qconv) or pooling (Qpool). A primitive is the directed graph G = (Q, E); its nodes Q represent available qubits, and oriented edges E the connectivity of the unitary applied between a pair of them. The direction of an edge indicates the order of interaction for the unitary. For example, a CNOT gate with qubit i as control and j as target is represented by the edge from qubit i to qubit j. We also introduce other primitives, such as Qfree, that free up pooled qubits for future operations. The effect of a primitive is based on its hyperparameters and the effect of its predecessor. This way, their individual and combined architectural effects are captured, enabling them to be dynamically stacked one after another to form the second level l = 2 motifs. Stacking these stacks in different ways constitutes higher-level motifs until a final level l = L, where one motif constitutes the entire QCNN architecture. In the case of pooling, controlled unitaries are used in place of measurement due to the deferred measurement principle51. We define a QCNN architecture in Definition 1.

Definition 1. The k = 1, 2, …Kl motif on level l = 1, 2, …, L is the tuple \({M}_{k}^{l}=({M}_{j}^{l-1}| j\in \{1,2,\ldots ,{K}_{l-1}\})\). Motifs on the lowest level \({M}_{k}^{1}\) are primitive operations, which form the set \({M}^{(1)}=\{{M}_{1}^{1},{M}_{2}^{1},\ldots ,{M}_{{K}_{1}}^{1}\}\). At the highest level l = L there is only one motif \({M}_{1}^{L}\) which is a hierarchy of tuples. \({M}_{1}^{L}\) is flattened through an assemble operation: \(M={{{\rm{assemble}}}}({M}_{1}^{L})\) which encodes each primitive into a directed graph Gm = (Qm, Em), the nodes Qm are available qubits and edges Em the connectivity of unitaries applied between them. M describes the entire QCNN architecture, M = (G1, G2, …, G∣M∣).

Figure 2 shows example motifs on different levels for a QCNN. Higher-level motifs are tuples, and the lowest-level ones directed graphs. The dependence between successive motifs is specified in Definition 2.

Definition 2. Let x ∈ {c, p, f} indicate the primitive type for {Qconv, Qpool, Qfree} and \({M}_{1}^{L}\) be the highest level motif for a QCNN. Then \({{{\rm{assemble}}}}({M}_{1}^{L})\) flattens depth-wise into M = (G1, G2, …, G∣M∣) where \({G}_{m}=({Q}_{m}^{x},{E}_{m}^{x})\). G1 is always a Qfree(Nq) primitive specifying the number of available qubits with Nq. For m > 1, Gm is defined as:

with d−(i) and d+(i) referring to the indegree and outdegree of node i, respectively, and ⧹ to set difference.

We show this digraph perspective in Fig. 6, it is the data structure of the circuit in Fig. 1d. If the mth graph in M is a convolution, we denote its two-qubit unitary acting on qubit i and j as \({U}_{m}^{ij}(\theta )\). Similarly, for pooling, we notate the unitary as \({V}_{m}^{ij}(\theta )\). The action of \({V}_{m}^{ij}(\theta )\) is measuring qubit i (the control), which causes a unitary rotation V on qubit j (the target). With this figure and notational scheme in mind, Definition 2 reads as follows:

Graph view for the circuit architecture in Fig. 1d. The same two-qubit unitary is used in all layers for the convolution operation, i.e., \({U}_{m}^{ij}={U}_{m}.\) Similarly, in this example, we use the same two-qubit pooling unitaries \({V}_{m}^{ij}={V}_{m}\). The top left graph is \({G}_{1}=({Q}_{1}^{c},{E}_{1}^{c})\) with all eight qubits \({Q}_{1}^{c}\) available for the convolution operations \({U}_{1}^{ij},(i,j)\in {E}_{1}^{c}\). Below G1 is G2 with half the qubits of \({Q}_{2}^{p}\) measured, indicated by the ith indices of \({V}_{m}^{ij},(i,j)\in {E}_{2}^{p}\). For example, qubit \(8\in {Q}_{2}^{p}\) is measured and V2 applied to qubit \(1\in {Q}_{2}^{p}\) as indicated by \({V}_{2}^{81},(8,1)\in {E}_{2}^{p}\). This pattern repeats until one qubit remains in G6, which is measured and used to classify the music genre.

\({Q}_{m}^{x}\) is the set of available qubits for the mth primitive in M, where x ∈ {c, p, f} for convolution, pooling or Qfree respectively. The first primitive G1 is Qfree(Nq) which specifies the number of available qubits Nq for future operations. Any proceeding m > 1 primitive Gm only has access to qubits not measured up to that point. This is the previous primitive’s available qubits \({Q}_{m-1}^{x}\) if its type x ∈ {c, f} is a convolution or Qfree. Otherwise, for pooling, x = p, it’s the set difference: \({Q}_{m-1}^{x}\setminus \{i\in (i,j)\in {E}_{m-1}^{x}\}\) since the i indices during pooling \((i,j)\in {E}_{m}^{p}\) indicates measured qubits. This is visualised as small red circles in Fig. 6. The only way to make those qubits available again is through Qfree(Nf), which can be used to free up Nf qubits. For the convolution primitive, \({E}_{m}^{c}\) is the set of all pairs of qubits that have \({U}_{m}^{ij}(\theta )\) applied to them. Finally, for the pooling primitive, \({E}_{m}^{p}\) is the set of pairs of qubits that have pooling unitaries \({V}_{m}^{ij}(\theta )\) applied to them. The restriction is that if qubit i is measured, it cannot have any other rotational unitary V applied to it within the same primitive Gm. This means the indegree d− of node i is zero. Similarly, if qubit i is measured, it may only have one corresponding target, meaning that the outdegree d+ of node i is one. In the same vein, no target qubit j can be the control for another, d+(j) = 0. Every target qubit j have at least one corresponding control qubit i, d−(j)≥1. It is possible for multiple measured qubits to have the same target qubit, giving \({E}_{m}^{p}\) a surjective property.

Following this definition, we can express a convolution or pooling operation for the mth graph in M as:

Let \({\widetilde{W}}_{m}={\widetilde{U}}_{m}\,{{{\rm{or}}}}\,{\widetilde{V}}_{m}\) be the mth primitive in M based on whether it’s a convolution or pooling and the identity I if it’s a Qfree primitive. Then the state of the QCNN after one training run is:

We note that the choice of V is unrestricted, which means that within one layer each V can be a different rotation. Figure 1d shows a special case where the same V is used per layer, which is computationally favourable compared to using different ones. To enable weight sharing, the QCNN require convolution unitaries to be the same i.e., \({U}_{m}^{ij}={U}_{m}^{kh}\;{{{\rm{where}}}}\;(i,j)\in ,(k,h)\in {E}_{m}^{c}\). This formulation only regards one and two-qubit unitaries for convolutions, one-qubit unitaries being described with \({E}_{m}^{c}=(i,i),i\in {Q}_{m}^{c}\). In “Generalisation and search”, we extend it to multiple qubit unitaries.

After training, \(\left\vert \psi \right\rangle\) in Eq. (5) is measured based on the type of classification task, in this work, we focus on binary classification allowing us estimate \(\hat{y}\) by measuring the remaining or specified qubit in the computational basis:

We note that multi-class classification is also possible by measuring the other qubits and associating each with a different class outcome. Following this, we calculate the cost of a training run with \(C(y,\hat{y})\), then using numerical optimisation the cost is reduced by updating the parameters from Eqs. (3) to (4) and repeat the whole process until some local minimum is reached. Resulting in a model alongside a set of parameters to be used for classifying unseen data.

Controlling the primitives

We define basic hyperparameters that control the individual architectural effect of a primitive. There are two broad classes of primitives, special and operational. A special primitive has no operational effect on the circuit, such as Qfree. Its purpose is to make qubits available for future operational primitives and therefore has one hyperparameter Nf for this specification. Nf is typically an integer or set of integers corresponding to qubit numberings:

Each operational primitive has its own stride parameter analogous to classical CNNs. For a given stride s, each qubit gets paired with the one s qubits away modulo the number of available qubits. For example, a stride of 1 pairs each qubit with its neighbour. This depends on the qubit numbering used which is based on the circuit topology. For illustration purposes, we use a circular topological ordering, but any layout is possible as long as some ordering is provided for \({Q}_{1}^{f}\). For the convolution primitive, we define its stride sc ∈ {1, 2, 3, . . . } as:

Equation (10) captures the case where there are only two qubits available for a convolution and Eq. (11) when there is only one which implies the convolution unitaries only consist of single qubit gates. A stride of sc = 1 is a typical design motif for PQCs and the graph formalism allow for a simple way to capture and generalise it. To achieve translational invariance for all strides the two constraints: \(| {E}_{m}^{c}| =| {Q}_{m}^{c}|\) and \((i,j)\,\ne\, (k,h)\;{{{\rm{where}}}}\;(i,j)\in ,(k,h)\in {E}_{m}^{c}\) are added. Another option for translational invariance is a Qdense primitive, which only differs from Qconv in that \({E}_{m}^{c}\) generates all possible pairwise combinations of \({Q}_{m}^{c}\). This primitive is available in the python package but left out from the definition (because of its similarity).

Figure 7 shows different ways in which sc generate \({E}_{m}^{c}\) for \(| {Q}_{m}^{c}| =8\).

The pooling primitive has two hyperparameters, a stride sp and filter \({F}_{m}^{* }\). The filter indicates which qubits to measure and the stride how to pair them with the qubits remaining. We define the filter as a binary string:

For N = 8 qubits, the binary string \({F}_{m}^{* }=00001111\) translates to measuring the rightmost qubits, i.e., \(\{i| i\in {Q}_{m}^{p},i\ge 5\}\). Figure 6 is an example where the pattern \({F}_{2}^{* }=00001111\to {F}_{4}^{* }=0011\to {F}_{6}^{* }=01\) is used, visually the qubits are removed from bottom to top. Encoding filters as binary strings is useful since generating them becomes generating languages, enabling the use of computer scientific tools such as context free grammars and regular expressions to describe families of filters. Pooling primitives enable hierarchical architectures for QCNNs, and in “Search space design”, we illustrate how they can be implemented to create a family resembling reverse binary trees. The action of the filter is expressed as: \({F}_{m}^{* }\star {Q}_{m}^{p}={Q}_{m+1}^{x}\) where ⋆ slices \({Q}_{m}^{p}\) corresponding to the 0 indices of \({F}_{m}^{* }\), i.e., wi = 0(not measured). For example 010⋆{4, 7, 2} = {4, 2}. This example illustrates the case where an ordering was given to the set of available qubits to represent some specific topology of the circuit. Let \({Q}_{m+1}^{x}={F}_{m}^{* }\star {Q}_{m}^{p}\) then the pooling primitive stride sp = {1, 2, … } is defined as:

Search space design

We show how the digraph formalism facilitates QCNN generation and search space design. Grant et al.27 exhibited the success of hierarchical designs that resemble reverse binary trees. To create a space of these architectures, we only need three levels of motifs. The idea is to reduce the system size in half until one qubit remains while alternating between convolution and pooling operations. Given N qubits, a convolution stride sc, pooling stride sp and a pooling filter F* that reduce system size in half, a reverse binary tree QCNN is generated in Algorithm 1.

Algorithm 1

QCNN, reverse binary tree architecture.

Algorithm 1 shows how to create instances of this architecture family. First, two primitives are created on the first level of the hierarchy, a convolution operation \({M}_{1}^{1}\) and a pooling operation \({M}_{2}^{1}\). They are then sequentially combined on level two as \({M}_{1}^{2}=({M}_{1}^{1},{M}_{2}^{1})\) to form a convolution-pooling unit. The third-level motif \({M}_{1}^{3}\) repeats this second-level motif \({M}_{1}^{2}\) until the system only contains one qubit. This is \({\log }_{2}(N)\) repetitions for N qubits because we chose F* to reduce the system size in half during each pooling operation. The addition and multiplication symbols act as append and extend for tuples. For example, \({M}_{1}^{l}+{M}_{2}^{l}=({M}_{1}^{l},{M}_{2}^{l})\) and \({M}_{k}^{l}\times 3=({M}_{k}^{l},{M}_{k}^{l},{M}_{k}^{l})\) which allow for an intuitive way to build motifs. It is easy to expand the algorithm for more intricate architectures, for instance, by increasing the number of motifs per level and the number of levels. A valid level four motif for Algorithm 1 would be \({M}_{1}^{4}=({M}_{1}^{3}+{M}_{2}^{3})\times 3\), where \({M}_{2}^{3}={{{\rm{Qfree(4)}}}}+{M}_{2}^{2}+{M}_{1}^{2}\) and \({M}_{2}^{2}={M}_{1}^{1}\times 2\) which is the reverse binary tree architecture \({M}_{1}^{3}\) then two convolutions and one convolution-pooling unit on four qubits, all repeated three times. Motifs can also be randomly selected on each level to generate architectures. The python package we provide acts as a tool to facilitate architecture generation this way.

In more detail, we now analyse the family of architectures generated by Algorithm 1. First, we consider the possible pooling filters F* that reduce system size in half. It is equivalent to generating strings for the language \(A=\{w| w\;{{{\rm{has}\;{\rm{an}\;{\rm{equal}}\;{\rm{number}}\;{\rm{of}}\;{\rm{0s}}\;{\rm{and}}\;{\rm{1s}}}}}},| w| =| {Q}_{m}^{p}| \}\). Let \({N}_{m-1}=| {Q}_{m-1}^{x}|\) indicate the number of available qubits for the filter \({F}_{m}^{* }\). Then based on the \(\left(\begin{array}{l}4\\ 2\end{array}\right)=6\) possible equal binary strings of length four, we construct the following pooling filters:

where the exponent a3 ≡ {a} ∘ {a} ∘ {a} = aaa refers to the regular operation concatenation: A∘B = {xy∣x ∈ A, y ∈ B}. The pooling filter Finside yields 0110. Visually this pattern pools qubits from the inside (the middle of the circuit). See Fig. 8c. Figure 8a shows the repeated usage of Fright for pooling. This particular pattern is useful for data preprocessing techniques such as principal component analysis (PCA) since PCA introduces an order of importance to the features used in the model. Typically, the first principal component (which explains the most variance) is encoded on the first qubit, the second principal component on the second qubit and so on. Therefore, it makes sense to pool the last qubits and leave the first qubits in the model for as long as possible.

An example of how the hyperparameters of the primitives effect the circuit architecture of the family generated by Algorithm 1. Three are shown, the convolution stride sc, pooling stride sp and pooling filter F*. These are specified in “Controlling primitives”. Controlled-\({R}_{z}^{\theta }\) gates are used for convolutions and CNOTs for pooling as an example. The convolution stride sc determine how convolution unitaries are distributed across the circuit. Each convolution primitive typically consists of multiple unitaries and the QCNN requires them to be identical for weight sharing. The pooling stride sp determine how pooling unitaries are distributed, for a given pooling primitive, a portion of available qubits gets pooled via controlled unitary operations and sp dictates which controls match to which targets. The pooling filter F* dictates which qubits to pool according to some recursive pattern/mask. For example, circuit d) always pools the outside qubits during pooling primitives, resulting in the middle qubit making it to the end of the circuit.

If N = 8, sc = 1, sp = 0 and F* = Fright then Algorithm 1 generates the circuit in Fig. 1d, Fig. 2, Fig. 6f, and Fig. 8a. Specifically, Fig. 8 shows how different values for sc, spandF* generate different instances of the family using Algorithm 1. The possible combinations of N, sc, sp, F* represent the search space/family size. Since F* reduces system size in half, it’s required that the number of available qubits N is a power of two. Using integer strides causes the \(| {E}_{m}^{c}| =| {Q}_{m}^{c}|\) constraint (see “Controlling primitives”), which enable translational invariance. The complexity of the model(in terms of the number of unitaries used) then scales linearly with the number of qubits N available. Specifically, N qubits result in 3N − 2 number of unitaries. This is because of the geometric series: \(N(\frac{1}{{2}^{0}}+\frac{1}{{2}^{1}}+\cdots +\frac{1}{{2}^{{\log }_{2}N-1}})+N(\frac{1}{{2}^{1}}+\frac{1}{{2}^{2}}+\cdots +\frac{1}{{2}^{{\log }_{2}N-1}})\). Where the first sum is for convolution unitaries and the second for pooling.

Generalisation and search

The digraph formalism extends naturally to multi-qubit unitaries, enabling the representation of more intricate and larger-scale architectures. In general, a primitive with n-qubit unitaries is represented as a hypergraph G = (Q, E), where the edges E consist of n-tuples. We introduce two additional hyperparameters, step and offset, which control the construction of E. For instance, Fig. 9 shows three primitives, each with 3-qubit unitaries. The first two have a stride of one, meaning that each 3-qubit unitary connects to its neighbours. In contrast, the last primitive has a stride of three, connecting every third qubit within the unitary. The offset parameter determines the starting point for counting; Fig. 9a begins with the first qubit, while Fig. 9b starts with the third. The step parameter controls the position of the next unitary; for example, Fig. 9a, b has a step of three, skipping two qubits before creating another edge starting on the third qubit. Consequently, the primitives with 2-qubit unitaries we’ve been considering thus far are all special cases with a step of one and an offset of zero. Another aspect to consider is the execution order of the unitaries, which by default is the sequence in which the edges were created for a primitive. Our package introduces an additional hyperparameter to control this order. For example to execute the third edge of Fig. 9a first followed by edge five, four, one and two, a value of (3, 5, 4, 1, 2) can be passed to the edge order hyperparameter. Lastly, a boundary condition hyperparameter can also be specified, allowing for the definition of open or periodic boundaries for the qubits. This essentially determines whether edge creation is calculated in modulo with respect to the number of qubits or not, which in turn influences whether edge creation ceases when no further connections can be made based on the stride parameter.

Algorithm 2

Original QCNN from ref. 5.

The hyperparameters provided are sufficient to generate a diverse array of hierarchical architectures. For example, we demonstrate how to represent the original QCNN from ref. 5 within our framework in Algorithm 2. The arguments for each convolution and pooling primitive are stride, step, and offset. The Qdense primitive generates 2-qubit unitaries between all pairwise combinations of n + 1 qubits. Subsequently, the second primitive \({M}_{2}^{1}\) takes \({M}_{1}^{1}\) as its mapping, which just means it treats \({M}_{1}^{1}\) as a single n + 1-qubit unitary, and distributes it across the circuit with a stride of 1, step of n, and offset of n − 1. This is followed by n convolutions of n-qubit unitaries, each having an offset incremented by one from the previous. For n = 3 and N = 15, the first and last convolution is illustrated in Fig. 9a, b. Next, a pooling layer with n-qubit unitaries is applied, measuring the outer n − 1 qubits from each nth qubit, this corresponds to the filter \({F}^{* }=\{{1}^{\frac{n-1}{2}}0{1}^{\frac{n-1}{2}}\}\). Finally, a convolution is performed on all remaining qubits. In practice, each of these primitives is given a mapping for their corresponding unitary. The mappings of the original QCNN are based on 2v × 2v Gell–Mann matrices, where v indicates the number of qubits the unitary acts upon. For instance, the first unitary of the primitive \({M}_{2}^{1}\) operates on v = n + 1 qubits, \({M}_{3}^{1}\) on v = n qubits and pooling \({M}_{3}^{1}\) on n qubits where v = n − 1 to leave a qubit for the control. For \({M}_{5}^{1}\), v equals the number of remaining qubits. It’s easy to generate a family of architectures related to the original by providing the algorithm with different values of stride, step, offset, pooling filters, mappings and relaxing the dependence on n based on how large we want the search space to be.

Next, we discuss the applicability of search algorithms with our representation. The framework’s expressiveness is demonstrated in Fig. 2e, f, where only two lines of code are needed to specify a complete architecture, and in Fig. 10, which illustrates how to capture circuits from refs. 5,27. This expressiveness allows search algorithms to explore an extensive range of architectures and numerous design choices. Moreover, the modularity of the framework enables search algorithms to identify robust building blocks to combine into motifs, serving as the foundation for architectural designs. This is especially advantageous in the context of genetic algorithms, as it facilitates the definition of crossover and mutation operations in various ways. For example, mutations can involve adjusting a single hyperparameter of a primitive or replacing an entire primitive within a motif. Crossovers may include combining motifs at the same or different levels or interweaving two motifs by alternating their final sequence of primitives. In the case of reinforcement learning, modularity allows an agent to make decisions at multiple levels of granularity, enabling it to explore and exploit different combinations of primitives and motifs. Hill climbing algorithms can also leverage this modularity in various ways. For instance, we can generate a random fixed high-level motif, such as a MERA circuit, and then iteratively optimise the hyperparameters of each primitive within the motif. In each step, we adjust a hyperparameter to neighbouring values, evaluate the resulting objective values, and select the best configuration. Once we have updated all the hyperparameters of all primitives, we obtain a final motif, which can be used as a starting point for the next iteration. This approach of adjusting individual hyperparameters within a multi-level motif allows for incremental changes to the architecture. Such fine-grained modifications can be beneficial in approaches like Bayesian optimisation, where smoothness in objective values is advantageous. In addition, the hierarchical nature of our representation allows it to capture scale-invariant circuit motifs, as seen in the MERA (Fig. 10) and TTN (Fig. 8) circuits. This inherent capability promotes scalability: it enables search algorithms to start with smaller subsystems, then scale up to larger ones using only the ’Qfree’ primitive. As a result, there’s no need to re-establish such motifs for larger systems, leading to reduced computational costs and broadened possibilities for architectural exploration (provided there are scale-invariant features to the problem at hand). Lastly, the intuitive nature of the representation facilitates interpretability, for instance, in one experiment, we observed a spike in performance for a convolution stride of five. Upon further investigation, we discovered a strong correlation between features one and six, which was previously unknown. This insight informed future experiments and design choices for the problem at hand. The specific experiment pertains to a pulsar classification task in radio astronomy, which is currently undergoing further experimentation.

Example architectures from ref. 5 (c) and ref. 27 (d), generated using our Python package to demonstrate its expressibility, interpretability, and scalability. a The 15-qubit original QCNN is created with the first three parameters of each primitive being stride, step, and offset, respectively. The unitary U mappings employ generalised Gell–Mann matrices parametrised based on the number of qubits they act upon. Line 5, Qfree(15) + m1 + m2 + m3, controls the system size; applying the same architecture to N qubits only requires changing it to Qfree(N). To introduce a depth d parameter to the circuit, the last line should be modified to \({m_{4}\,*\,d\,+\,m_{5}}\). b A 16-qubit MERA circuit is generated. For an 8-qubit MERA circuit, the last line would be changed to \({{{\rm{Qfree}}}}(8)+{m}_{1}^{1}+{m}_{2}^{1}* 2\), and in general, \({{{\rm{Qfree}}}}(N)+{m}_{1}^{1}+{m}_{2}^{1}({\log }_{2}N-1)\) produces an N-qubit MERA circuit. These examples highlight the representation’s strengths: the essence of an architecture is captured with a few lines of code in a modular and understandable manner, and scaling up to larger systems is accomplished with minimal adjustments.

Finally, we present the evolutionary algorithm used in our experiments, which is based on the approach described in ref. 26 and detailed in Algorithm 3. We refer to an architecture as a genotype, and its fitness is determined by the sample complexity for both inside and middle points, as well as the mean squared error (MSE) for outside points in the test set (see Fig. 5). Specifically, \({{{\rm{fitness}}}}={c}_{1}\frac{{M}_{{{{\rm{in}}}}}}{{M}_{{{{\rm{cap}}}}}}+{c}_{2}\frac{{M}_{{{{\rm{middle}}}}}}{{M}_{{{{\rm{cap}}}}}}+{c}_{3}{{{{\rm{MSE}}}}}_{{{{\rm{out}}}}}+\lambda {n}_{p}\) where we cap Min and Mmiddle by some large value Mcap and np is the number of parameters required for the architecture. The weights c1, c2, and c3 sum to one, assigning importance to each term. Our experiments showed that setting c1 = 0.7, c2 = 0.05, and c3 = 0.25 led to generally well-performing architectures, we also chose Mcap = 500 since fit genotypes exhibit sample complexity below 100. We initialise the population with a pool of 100 random primitives (Qconv, Qpool, Qdense), each having random hyperparameters. Upon initialisation, we perform mutation and crossover operations based on tournament selection with a 5% selection pressure. After the selection, we mutate the fittest genotype by choosing one of its primitives and replacing it with a randomly generated one. The crossover operator acts on the two fittest individuals, attempting to combine them tail-to-head. If this is not possible, they are interleaved up to the point where they can be combined. Just like the approach in26, we do not remove any genotypes from the pool, leading to a more diverse population.

Algorithm 3

Evolutionary Search Algorithm

Discussion

This work has introduced a framework for representing hierarchical quantum circuits, enabling circuit generation and search space design. The framework is implemented as a readily available Python package that allows for the generation of complex quantum circuit architectures, which is particularly useful for Neural Architecture Search (NAS). Our numerical experiments illustrate the significance of alternating architectures for parameterised quantum circuits and present a pathway to enhance model performance without increasing its complexity.

Looking ahead, our primary goal is to investigate various search strategies leveraging this architectural representation, aiming to automate the discovery of quantum circuits tailored for a range of tasks. Although we’ve demonstrated the value of this representation for evolutionary algorithms, we’re keen to explore alternative search algorithms such as reinforcement learning or Bayesian optimisation.

Another interesting consideration is the theoretical analysis of QCNN architectures that generalise well across multiple datasets. Recently, it has been shown how symmetry can be used to inform the inductive biases of a model52,53, and we suspect that our numerical results stem from the search finding architectures that respect symmetries of the data. Symmetry is a natural starting point for creating primitives, the convolution primitive is already constrained by translational symmetry and additional primitives can be developed by considering other symmetries. This approach effectively narrows the search space, enabling a system to automatically discover general equivariant architectures that align well with the data. The framework also allows for the specification qubit orderings that correspond to physical hardware setups. Therefore, benchmarking the effect of noise on different architectures on NISQ devices would be a useful exploration.

Methods

Overview

Figure 1 gives a broad view of the machine-learning pipeline we implement for the benchmarks. There are various factors influencing model performance during such a pipeline. Each step, from a raw audio signal to a classified musical genre, contains various possible configurations, the influence of which propagates throughout the pipeline. For this reason, it is difficult to isolate any configuration and evaluate its effect on the model. With our goal being to analyse QCNN architectures (Fig. 1d) on the audio data, we perform random search in the family created by Algorithm 1 with different choices of circuit ansatz and quantum data encoding. These are evaluated on two different datasets: Mel spectrogram data (Fig. 1b) and 2D statistical data (Fig. 1c), both being derived from the same audio signal (Fig. 1a). We preprocess the data based on requirements imposed by the model implementation before encoding it into a quantum state. These configurations are expanded on below:

Data

We aimed to use a practical and widely applicable dataset for the data component and chose the well-known54 music genre dataset, GTZAN. It consists of 1000 audio tracks, each being a 30-s recording of some song. These recordings were obtained from radio, compact disks and compressed MP3 audio files55. Each is given a label of one of the following ten musical genres: blues, classical, country, disco, hip-hop, jazz, metal, pop, reggae, rock. Binary classification is used for the analysis of model performance across different architectures. Meaning there are \(\left(\begin{array}{l}10\\ 2\end{array}\right)=45\) possible genre pairs to build models from. Each pair is equally balanced since there are 100 songs for each genre. The dataset enables the comparison of 45 models per configuration within the audio domain.

Model implementation

For all experiments, we evaluate instances of Algorithm 1 with N = 8 qubits, resulting in 3(8) − 2 = 22 two-qubit unitaries. We test each model based on different combinations of model architecture, two-qubit unitary ansatz and quantum data encoding. The specific unitaries for Um are chosen from a set of eight ansatzes that were used in ref. 29. They are based on previous studies that explore the expressibility and entangling capability of parameterised circuits56, hierarchical quantum classifiers27 and extensions to the VQE57. These are shown in Supplementary Fig. 1, the ansatz for pooling also comes from ref. 29 and is shown in Supplementary Fig. 3. For quantum data encoding, we compare qubit encoding36 with IQP encoding58 on the tabular dataset. Amplitude encoding59 is used for the image data.

Each model configuration considers all 45 genre pairs for classification, for example, rock vs reggae. Cross entropy is used as the cost function \(C(y,\hat{y})\) during training, for rock vs reggae this would be:

where

\(\hat{{y}_{i}}\) is obtained from Eq. (6), i represents one observation, and both y, \(\hat{y}\) are all the observations in vector form.

Data creation

We benchmark the model against two different forms of data, namely tabular and image. To construct the dataset in tabular form, we extract specific features from each audio signal using librosa60 as shown in Fig. 1b. Each row represents a single audio track with its features as columns. The specific features extracted are those typically used by music information retrieval systems, namely: chroma frequencies, harmonic and percussive elements, Mel-frequency cepstral coefficients, root-mean-square, spectral bandwidth, spectral centroid, spectral roll-off, tempo and the zero crossing rate. See Supplementary Table 1 for a short description of these features. To construct the dataset in image form, we extract a Mel-frequency spectrogram (Fig. 1c) from each audio signal. The Mel scale is a non-linear transformation based on how humans perceive sound and is frequently used in speech recognition applications61. The spectrogram size depends on the number of qubits available for the QCNN. We can encode 2N values with amplitude encoding into a quantum state, where N is the number of available qubits. Using N = 8 qubits, we scale the image to 8 × 32 = 256 = 28 pixels, normalising each pixel between 0 and 1. The downscaling is done by binning the Mel frequencies into eight groups and taking the first three seconds of each audio signal.

Data preprocessing

Two primary forms of preprocessing are applied to the data before it is sent to the model: feature scaling and feature selection. The features are scaled using min-max scaling, where the range is based on the type of quantum data encoding used. For amplitude encoding, the data is scaled to the range [0, 1], qubit encoding to [0, π/2] and IQP encoding to [0, π]. Feature selection is only applied to the tabular data. Using qubit encoding with N = 8 qubits results in selecting eight features. Principal Component Analysis (PCA) and decision trees are used to perform the selection. The tree-based selection is used to compare against PCA to verify whether PCA does not heavily bias the model’s results.

Model evaluation

The model is trained with 70% of the data while 30% is held out as a test set to evaluate performance. During training, fivefold cross-validation is used on each model. The average classification accuracy and standard deviation of 30 separate trained instances are calculated on the test set as performance metrics.

Data availability

The music genre classification dataset analysed during the current study is available on TensorFlow Datasets, https://www.tensorflow.org/datasets/catalog/gtzan.

Code availability

The theoretical framework discussed in this paper has been implemented as an open-source Python package, which is available on GitHub at https://github.com/matt-lourens/hierarqcal. This package was used to generate all the circuits in the paper.

References

Benedetti, M., Lloyd, E., Sack, S. & Fiorentini, M. Parameterized quantum circuits as machine learning models. Quantum Sci. Technol. 4, 043001 (2019).

Cerezo, M. et al. Variational quantum algorithms. Nat. Rev. Phys. 3, 625–644 (2021).

Mangini, S., Tacchino, F., Gerace, D., Bajoni, D. & Macchiavello, C. Quantum computing models for artificial neural networks. Europhys. Lett. 134, 10002 (2021).

Bharti, K. et al. Noisy intermediate-scale quantum algorithms. Rev. Mod. Phys. 94, 015004 (2022).

Cong, I., Choi, S. & Lukin, M. D. Quantum convolutional neural networks. Nat. Phys. 15, 1273–1278 (2019).

Pesah, A. et al. Absence of barren plateaus in quantum convolutional neural networks. Phys. Rev. X 11, 041011 (2021).

Banchi, L., Pereira, J. & Pirandola, S. Generalization in quantum machine learning: a quantum information standpoint. PRX Quantum 2, 040321 (2021).

Herrmann, J. et al. Realizing quantum convolutional neural networks on a superconducting quantum processor to recognize quantum phases. Nat. Commun. 13, 4144 (2022).

Carleo, G. & Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science 355, 602–606 (2017).

Carrasquilla, J. & Melko, R. G. Machine learning phases of matter. Nat. Phys. 13, 431–434 (2017).

van Nieuwenburg, E. P. L., Liu, Y.-H. & Huber, S. D. Learning phase transitions by confusion. Nat. Phys. 13, 435–439 (2017).

Deng, D.-L., Li, X. & Sarma, S. D. Machine learning topological states. Phys. Rev. B 96, 195145 (2017).

Levine, Y., Sharir, O., Cohen, N. & Shashua, A. Quantum entanglement in deep learning architectures. Phys. Rev. Lett. 122, 065301 (2019).

Stoudenmire, E. & Schwab, D. J. Supervised learning with tensor networks. Adv. Neural Inf. Process. Syst. 29 https://papers.nips.cc/paper/2016/hash/5314b9674c86e3f9d1ba25ef9bb32895-Abstract.html (2016).

Deng, D.-L., Li, X. & Sarma, S. D. Quantum entanglement in neural network states. Phys. Rev. X 7, 021021 (2017).

Lin, H. W., Tegmark, M. & Rolnick, D. Why does deep and cheap learning work so well? J. Stat. Phys. 168, 1223–1247 (2017).

Mehta, P. & Schwab, D. J. An exact mapping between the variational renormalization group and deep learning. Preprint at http://arxiv.org/abs/1410.3831 (2014).

Levine, Y., Yakira, D., Cohen, N. & Shashua, A. Deep learning and quantum entanglement: Fundamental connections with implications to network design. Int. Conf. Learn. Represent. https://openreview.net/forum?id=SywXXwJAb (2018).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25 https://papers.nips.cc/paper_files/paper/2012/hash/c399862d3b9d6b76c8436e924a68c45b-Abstract.html (2012).

Zoph, B. & Le, Q. V. Neural architecture search with reinforcement learning. Int. Conf. Learn. Represent. https://openreview.net/forum?id=r1Ue8Hcxg (2017).

Elsken, T., Metzen, J. H. & Hutter, F. Neural architecture search: a survey. J. Mach. Learn. Res. 20, 1–21 (2019).

Real, E., Aggarwal, A., Huang, Y. & Le, Q. V. Regularized evolution for image classifier architecture search. Proc. AAAI Conf. Artif. Intell. 33, 4780–4789 (2019).

Zoph, B., Vasudevan, V., Shlens, J. & Le, Q. V. Learning transferable architectures for scalable image recognition. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 8697–8710 (IEEE, 2018).

Chen, L.-C. et al. Searching for efficient multi-scale architectures for dense image prediction. Adv. Neural Inf. Process. Syst. 31 https://papers.nips.cc/paper_files/paper/2018/hash/c90070e1f03e982448983975a0f52d57-Abstract.html (2018).

Liu, H., Simonyan, K., Vinyals, O., Fernando, C. & Kavukcuoglu, K. Hierarchical representations for efficient architecture search. Int. Conf. Learn. Represent. https://openreview.net/forum?id=BJQRKzbA- (2018).

Grant, E. et al. Hierarchical quantum classifiers. NPJ Quantum Inf. 4, 65 (2018).

Haug, T., Bharti, K. & Kim, M. Capacity and quantum geometry of parametrized quantum circuits. PRX Quantum 2, 040309 (2022).

Hur, T., Kim, L. & Park, D. K. Quantum convolutional neural network for classical data classification. Quantum Mach. Intell. 4, 3 (2022).

Oh, S., Choi, J. & Kim, J. A tutorial on quantum convolutional neural networks (QCNN). in International Conference on Information and Communication Technology Convergence 236–239 (IEEE, 2020).

Franken, L. & Georgiev, B. Explorations in quantum neural networks with intermediate measurements. In Proc. ESSAN, Vol. ES2020, 297–302 (2020).

McClean, J. R., Boixo, S., Smelyanskiy, V. N., Babbush, R. & Neven, H. Barren plateaus in quantum neural network training landscapes. Nat. Commun. 9, 4812 (2018).

Holmes, Z., Sharma, K., Cerezo, M. & Coles, P. J. Connecting ansatz expressibility to gradient magnitudes and barren plateaus. PRX Quantum 3, 010313 (2022).

Schuld, M., Sweke, R. & Meyer, J. J. Effect of data encoding on the expressive power of variational quantum-machine-learning models. Phys. Rev. A 103, 032430 (2021).

Abbas, A. et al. The power of quantum neural networks. Nat. Comput. Sci. 1, 403–409 (2021).

Schuld, M. Supervised quantum machine learning models are kernel methods. Preprint at https://arxiv.org/abs/2101.11020v2 (2021).

Zhang, S.-X., Hsieh, C.-Y., Zhang, S. & Yao, H. Differentiable quantum architecture search. Quantum Sci. Technol. 7, 045023 (2022).

Zhang, S.-X., Hsieh, C.-Y., Zhang, S. & Yao, H. Neural predictor based quantum architecture search. Mach. Learn. Sci. Technol. 2, 045027 (2021).

Grimsley, H. R., Economou, S. E., Barnes, E. & Mayhall, N. J. An adaptive variational algorithm for exact molecular simulations on a quantum computer. Nat. Commun. 10, 3007 (2019).

Tang, H. L. et al. Qubit-ADAPT-VQE: an adaptive algorithm for constructing hardware-efficient ansatze on a quantum processor. PRX Quantum 2, 020310 (2021).

Yordanov, Y. S., Armaos, V., Barnes, C. H. W. & Arvidsson-Shukur, D. R. M. Qubit-excitation-based adaptive variational quantum eigensolver. Commun. Phys. 4, 228 (2021).

Rattew, A. G., Hu, S., Pistoia, M., Chen, R. & Wood, S. A domain-agnostic, noise-resistant, hardware-efficient evolutionary variational quantum eigensolver. Preprint at https://arxiv.org/abs/1910.09694 (2020).

Zhu, L. et al. Adaptive quantum approximate optimization algorithm for solving combinatorial problems on a quantum computer. Phys. Rev. Res. 4, 033029 (2022).

Li, L., Fan, M., Coram, M., Riley, P. & Leichenauer, S. Quantum optimization with a novel Gibbs objective function and ansatz architecture search. Phys. Rev. Res. 2, 023074 (2020).

Ostaszewski, M., Grant, E. & Benedetti, M. Structure optimization for parameterized quantum circuits. Quantum 5, 391 (2021).

Du, Y., Huang, T., You, S., Hsieh, M.-H. & Tao, D. Quantum circuit architecture search for variational quantum algorithms. NPJ Quantum Inf. 8, 1–8 (2022).

Lu, Z., Shen, P.-X. & Deng, D.-L. Markovian quantum neuroevolution for machine learning. Phys. Rev. Appl. 16, 044039 (2021).

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Smacchia, P. et al. Statistical mechanics of the cluster Ising model. Phys. Rev. A 84, 022304 (2011).

Haldane, F. D. M. Nonlinear field theory of large-spin Heisenberg antiferromagnets: Semiclassically quantized solitons of the one-dimensional easy-axis néel state. Phys. Rev. Lett. 50, 1153–1156 (1983).

Nielsen, M. A. & Chuang, I. L.Quantum Computation and Quantum Information: 10th Anniversary Edition (Cambridge University Press, 2011).

Larocca, M. et al. Group-invariant quantum machine learning. PRX Quantum 3, 030341 (2022).

Meyer, J. J. et al. Exploiting symmetry in variational quantum machine learning. PRX Quantum 4, 010328 (2023).

Sturm, B. L. A survey of evaluation in music genre recognition. in Adaptive Multimedia Retrieval: Semantics, Context, and Adaptation, vol. 8382 of Lecture Notes in Computer Science (eds Nürnberger, A. et al.) 29–66 (Springer International Publishing, 2014).

George, T., Georg, E. & Perry, C. Automatic musical genre classification of audio signals. In Proc. ISMIR, Indiana, Vol. 144. https://ismir2001.ismir.net/papers.html (2001).

Sim, S., Johnson, P. D. & Aspuru-Guzik, A. Expressibility and entangling capability of parameterized quantum circuits for hybrid quantum-classical algorithms. Adv. Quantum Technol. 2, 1900070 (2019).

Parrish, R. M., Hohenstein, E. G., McMahon, P. L. & Martínez, T. J. Quantum computation of electronic transitions using a variational quantum eigensolver. Phys. Rev. Lett. 122, 230401 (2019).

Havlícek, V. et al. Supervised learning with quantum-enhanced feature spaces. Nature 567, 209–212 (2019).

Schuld, M. & Petruccione, F. Machine Learning with Quantum Computers (Springer International Publishing, 2021).

McFee, B. et al. librosa/librosa: 0.8.1rc2. https://doi.org/10.5281/zenodo.4792298 (2021).

Davis, S. & Mermelstein, P. Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences. IEEE Trans. Acoust. Speech Signal Process. 28, 357–366 (1980).

Acknowledgements

The development of the Python package was funded by Unitary Fund (https://unitary.fund/). Support from the Oppenheimer Memorial Trust, NICIS (National Integrated Cyber Infrastructure System) e-research grant QICSI7 and the South African Research Chair Initiative, Grant No. 64812 of the Department of Science and Innovation and the National Research Foundation of the Republic of South Africa is kindly acknowledged. This research was also supported by the Yonsei University Research Fund of 2022 (2022-22-0124), by the National Research Foundation of Korea (Grant No. 2022M3E4A1074591), and by the KIST Institutional Program (2E32241-23-010).

Author information

Authors and Affiliations

Contributions

M.L. designed the theoretical framework, performed the numerical experiments, and implemented the software. I.S. and F.P. supervised the research. D.P. and C.B. conceived the experiment and provided insights on the reference architecture. All authors reviewed and discussed the analyses and results and contributed towards writing the manuscript.

Corresponding author

Ethics declarations

Competing interests

C.B. is the Co-Founder of Data cybernetics, and Francesco Petruccione the Chair of Scientific Board and Co-Founder of QUNOVA computing. The remaining authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lourens, M., Sinayskiy, I., Park, D.K. et al. Hierarchical quantum circuit representations for neural architecture search. npj Quantum Inf 9, 79 (2023). https://doi.org/10.1038/s41534-023-00747-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41534-023-00747-z