Abstract

Quantum information needs to be protected by quantum error-correcting codes due to imperfect physical devices and operations. One would like to have an efficient and high-performance decoding procedure for the class of quantum stabilizer codes. A potential candidate is Gallager’s sum-product algorithm, also known as Pearl’s belief propagation (BP), but its performance suffers from the many short cycles inherent in a quantum stabilizer code, especially highly-degenerate codes. A general impression exists that BP is not effective for topological codes. In this paper, we propose a decoding algorithm for quantum codes based on quaternary BP with additional memory effects (called MBP). This MBP is like a recursive neural network with inhibitions between neurons (edges with negative weights), which enhance the perception capability of a network. Moreover, MBP exploits the degeneracy of a quantum code so that the most probable error or its degenerate errors can be found with high probability. The decoding performance is significantly improved over the conventional BP for various quantum codes, including quantum bicycle, hypergraph-product, surface and toric codes. For MBP on the surface and toric codes over depolarizing errors, we observe error thresholds of 16% and 17.5%, respectively.

Introduction

To demonstrate an interesting quantum algorithm, such as Shor’s factoring algorithm1, a quantum computer needs to implement more than \(10^{10}\) logical operations, which means that the error rate of each logical operation must be much less than \(10^{-10}\) (see ref. 2). With limited quantum devices and imperfect operations3,4, quantum information needs to be protected by quantum error-correcting codes to achieve fault-tolerant quantum computation5. If a quantum state is encoded in a stabilizer code6,7, the syndrome of an error that has occurred can be measured without disturbing the quantum information of the state. A quantum stabilizer code constructed from a sparse graph is favorable since it affords a two-dimensional layout or simple quantum error-correction procedures. Such codes include the families of surface and toric codes8, color codes9, random bicycle codes10, and generalized hypergraph-product (GHP) codes11,12.

For a general stabilizer code, the decoding problem of finding the most probable coset of degenerate errors with a given error syndrome is hard13,14, and an efficient decoding procedure with good performance is desired. The complexity of a decoding algorithm is usually a function of the code length N. Edmonds’ minimum-weight perfect matching (MWPM)15 can be used to decode a surface or toric code16,17,18,19, and the complexity of MWPM is \(O({N}^{3})\), which can be reduced to \(O({N}^{2})\) if local matching is used with minor performance loss19,20,21. Duclos-Cianci and Poulin proposed a renormalization group (RG) decoder, which uses a strategy analogous to the decoding of a concatenated code, to decode a toric (or surface) code with complexity proportional to \(N\log (\sqrt{N})\) (ref. 22). Both MWPM and RG can be generalized for color codes23,24,25,26,27.

On the other hand, most sparse quantum codes can be decoded by belief propagation (BP)10,28,29,30 or its variants with additional processes31,32,33,34,35. BP is an iterative algorithm and the decoding complexity per iteration is \(O(Nj)\) (refs. 30,36), where j is the mean column-weight of the check matrix of a quantum code. In general, an average number of iterations proportional to \(\log \log N\) is sufficient for BP decoding37,38. In practice, a maximum number of iterations \({T}_{\max }\) proportional to \(\log \log N\), up to a large enough constant, will be chosen. So the overall decoding complexity of BP is \(O(Nj{T}_{\max })\) or \(O(Nj\log \log N)\).

Although BP seems to have the lowest complexity, the long-standing problem is that BP does not perform well on quantum codes with high degeneracy unless additional complex processes are included31,33. (We say that a code has high degeneracy or is highly degenerate if it has many stabilizers of weight lower than its minimum distance.) The Tanner graph of a stabilizer code inevitably contains many short cycles, which deteriorate the message-passing process in BP10,31, especially for codes with high degeneracy33,34,39. Any message-passing or neural network decoder may suffer from this issue. One may consider variants of BP with additional effort in pre-training by neural networks40,41,42,43 or post-processing33,34 such as ordered statistics decoding (OSD)44, but these methods may not be practical for large codes. In this paper, we address this long-standing BP problem by devising an efficient quaternary BP decoding algorithm with memory effects (cf. Eq. (10)), abbreviated MBP, so that the degeneracy of quantum codes can be exploited. Moreover, many known decoders in the literature treat Pauli X and Z errors separately as binary errors, which may incur additional computation overhead or performance loss. MBP directly handles the quaternary errors.

The problem of hard-decision decoding of a classical code is like an energy-minimization problem in a neural network45, where an energy function measures the parity-check satisfaction (denoted by \({J}_{{\rm{S}}}\)).

It is known that BP has been used for energy minimization in statistical physics31,38,46. Moreover, an iterative decoder based on the gradient descent optimization of the energy function has been proposed47. These motivate us to consider a soft-decision generalization of the energy function with variables that are log-likelihood ratios (LLRs) of Pauli errors and make connections between BP and the gradient descent algorithm. We define an energy function with an additional term \({J}_{{\rm{D}}}\) that measures the distance between a recovery operator and the initial channel statistics. Then we show that BP in the log domain is like a gradient descent optimization for this generalized energy function but with more elegant step updates48. This explains why the conventional BP may work well on a nondegenerate quantum code10, since this is similar to the classical case47.

A highly-degenerate quantum code has many low-weight stabilizers, which correspond to local minima in the energy topology, so the conventional BP easily gets trapped in these local minima near the origin. This suggests that we should use a larger step (which can be controlled by message normalization)30. However, this alone is not enough, since the energy-minimization process may not converge if large steps are made. An observation from neural networks is that inhibitions (edges with negative weights) between neurons can enhance the perception capability of a network and improve the pattern-recognition accuracy49,50,51,52,53. MBP is mathematically formulated to have this inhibition functionality, which helps to resist wrong beliefs passing on the Tanner graph (due to short cycles37) or to effectively accelerate the search in a gradient descent optimization. An important feature of MBP is that no additional computation is required, and thus the complexity of MBP remains the same as that of the conventional BP with message normalization.

The performance of MBP can be further improved by choosing an appropriate step-size for each error syndrome. However, it is difficult to precisely determine the step-size. If the step-size is too large, MBP may return incorrect solutions or diverge. We propose to choose the step-size using an ε-net so the step-size can be determined adaptively. This adaptive scheme will be called AMBP. The overall complexity of AMBP is still \(O(Nj\log \log N)\) since the chosen ε is independent of N.

Another technique adopted in MBP is to use fixed initialization54,55. The energy function and energy topology are defined according to the channel statistics. If MBP performs well on a certain channel statistics (say, at a certain depolarizing rate ϵ0), it means that MBP can correctly determine most syndrome-and-error pairs on that topology. Thus it is better to decode using this energy topology, regardless of the true channel statistics. This technique works for any quantum code.

Computer simulations of MBP on various quantum codes are performed. Note that MBP naturally extends to a more complicated error model of simultaneous data and measurement errors56; however, perfect syndrome measurements are assumed in this paper since we focus on the algorithm and performance of BP on degenerate quantum codes. In RESULTS, we demonstrate the decoding of quantum bicycle codes10, a highly-degenerate GHP code33, and the (rotated) surface or toric codes57,58. Our simulation results show that MBP performs significantly better than the conventional BP. In particular, any degenerate error of the target error up to a stabilizer can also be the target and MBP is able to locate such errors. (See more discussions and examples about energy minimization and the memory effects of MBP in ref. 48).

Results

Computer simulations

Our main results are based on an efficient decoder for quantum codes, the quaternary MBP (MBP4) (see Algorithm 1). MBP4 has a configurable step-size, which is scaled by a positive constant \({\alpha }^{-1}\). For comparison, the conventional quaternary BP (BP4) is similar to MBP4 with α = 1 but without additional memory effects. MBP4 can be extended as AMBP4 (Algorithm 2). We simulate the decoding performances of various quantum codes by MBP4 and AMBP4 in the following. The message-update schedule will be denoted by a prefix parallel/serial28.

For an [[N, K, D]] quantum code that encodes K logical qubits into N physical qubits with minimum distance D, if any error of weight smaller than or equal to t is correctable, its logical error rate is

$${P}_{{\rm{BDD}}}(t)=1-\mathop{\sum }\limits_{j=0}^{t}\binom{N}{j}{\epsilon }^{j}{(1-\epsilon )}^{N-j}$$

at depolarizing rate ϵ, using bounded distance decoding (BDD). Let r × BDD denote the case that any N-fold Pauli error of weight \(\le \,t=\lfloor \frac{rD-1}{2}\rfloor\) is correctable so that the logical error rate is \({P}_{{{{\rm{BDD}}}}}(\lfloor \frac{rD-1}{2}\rfloor )\). If D is unknown, we may directly specify BDD with some t instead of r × BDD for comparison. Usually, a good classical decoding procedure has a correction radius between 1 × BDD and 2 × BDD37. However, the degeneracy of a quantum code is not considered in BDD; we may have decoding performance much better than 2 × BDD in the quantum case. In addition, the optimal achievable decoding performance is unknown13,14, so r × BDD serves as a good benchmark.
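As a rough illustration of how an r × BDD benchmark value can be evaluated, the sketch below computes the probability that an i.i.d. error of rate ϵ has weight larger than t, which is the BDD failure probability described above. The function names `bdd_radius` and `p_bdd` are hypothetical, and the terms are accumulated in the log domain only to avoid overflow of the binomial coefficients for code lengths such as N = 3786.

```python
import math

def bdd_radius(r, d):
    """Correction radius t = floor((r*D - 1) / 2) used for r x BDD."""
    return math.floor((r * d - 1) / 2)

def p_bdd(n, t, eps):
    """P(weight > t) when each of n qubits is in error independently with rate eps.

    Requires 0 < eps < 1. Each binomial term is evaluated through lgamma
    so that large binomial coefficients never overflow a float.
    """
    log_eps, log_1m = math.log(eps), math.log1p(-eps)
    tail = 0.0
    for j in range(t + 1, n + 1):
        log_term = (math.lgamma(n + 1) - math.lgamma(j + 1) - math.lgamma(n - j + 1)
                    + j * log_eps + (n - j) * log_1m)
        tail += math.exp(log_term)
    return tail

if __name__ == "__main__":
    # Example: a distance-16 code of length 882 at 1 x BDD and 8 x BDD.
    for r in (1, 8):
        t = bdd_radius(r, 16)
        print(r, t, p_bdd(882, t, 0.05))
```

Summing the upper tail directly, instead of subtracting the lower tail from 1, keeps small values such as those near the error floor from being lost to rounding.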

The mean weight of the rows in the check matrix of a quantum code is called the row-weight and denoted by k. If the row-weight is small, then the quantum code has many low-weight stabilizers. We say that a quantum code is more degenerate if the row-weight of its check matrix is smaller compared to the minimum distance of the code. We will see that MBP4 improves the conventional BP4 more when the tested code is more degenerate. In our simulations, the normalization factor α for the step-size in MBP4 is chosen to be roughly proportional to k and inversely proportional to the depolarizing rate ϵ. (See the analysis in ref. 48)

A relatively larger step-size may be needed for a highly-degenerate quantum code to decode those errors with a weight larger than the row-weight of the check matrix. We use an ε-net of α to adaptively determine the best value α* for each error syndrome (Algorithm 2, denoted as AMBP4). Since MBP4 is queried as a subroutine in AMBP4 at most \({\varepsilon }^{-1}\) times, the computation complexity of AMBP4 is higher. If ε is independent of N, then the asymptotic complexity remains the same. How to determine α* efficiently is worth further study.

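We briefly explain how to interpret the simulation results. Let \({{{{\mathcal{G}}}}}_{N}\) be the N-fold Pauli group, \({{{\mathcal{S}}}}\subset {{{{\mathcal{G}}}}}_{N}\) be the stabilizer group that defines a quantum code, and \({{{{\mathcal{S}}}}}^{\perp }\subset {\{I,X,Y,Z\}}^{\otimes N}\) denote the set of operators with phase +1 that commute with \({{{\mathcal{S}}}}\) in \({{{{\mathcal{G}}}}}_{N}\). Let \({n}_{{\rm{tot}}}\) be the number of tested error samples for a data point in the simulation of the performance curve of a code. Suppose that \({{{{\bf{E}}}}}^{(i)}\) and \({\hat{{{{\bf{E}}}}}}^{(i)}\in {\{I,X,Y,Z\}}^{\otimes N}\) are the tested and estimated errors, respectively, for \(i=1,2,\ldots ,{n}_{{\rm{tot}}}\). Denote

$${n}_{{\rm{0}}}=| \{i:{\hat{{{\bf{E}}}}}^{(i)}\,\ne\, {{{\bf{E}}}}^{(i)}\}| ,\quad {n}_{{\rm{e}}}=| \{i:{\hat{{{\bf{E}}}}}^{(i)}\,\notin\, \pm {{{\bf{E}}}}^{(i)}{{\mathcal{S}}}\}| ,\quad {n}_{{\rm{u}}}=| \{i:{\hat{{{\bf{E}}}}}^{(i)}{{{\bf{E}}}}^{(i)}\in {{{\mathcal{S}}}}^{\perp }\setminus \pm {{\mathcal{S}}}\}| .$$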

Empirically, we have the classical block error rate \(P(\hat{{{{\bf{E}}}}}\,\ne\, {{{\bf{E}}}})={n}_{{{{\rm{0}}}}}/{n}_{{{{\rm{tot}}}}}\), the quantum logical error rate \(P(\hat{{{{\bf{E}}}}}\,\notin\, \pm {{{\bf{E}}}}{{{\mathcal{S}}}})={n}_{{{{\rm{e}}}}}/{n}_{{{{\rm{tot}}}}}\), and the undetected error rate \(P(\hat{{{{\bf{E}}}}}{{{\bf{E}}}}\in {{{{\mathcal{S}}}}}^{\perp }\setminus \pm {{{\mathcal{S}}}})={n}_{{{{\rm{u}}}}}/{n}_{{{{\rm{tot}}}}}\).

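Since \((\hat{{{{\bf{E}}}}}\,\notin\, \pm {{{\bf{E}}}}{{{\mathcal{S}}}})\subseteq (\hat{{{{\bf{E}}}}}\,\ne\, {{{\bf{E}}}})\), by Bayes’ rule, we have

$$P(\hat{{{\bf{E}}}}\,\notin\, \pm {{\bf{E}}}{{\mathcal{S}}})=P(\hat{{{\bf{E}}}}\,\ne\, {{\bf{E}}})\times P(\hat{{{\bf{E}}}}\,\notin\, \pm {{\bf{E}}}{{\mathcal{S}}}\,| \,\hat{{{\bf{E}}}}\,\ne\, {{\bf{E}}})=\frac{{n}_{{\rm{0}}}}{{n}_{{\rm{tot}}}}\times \frac{{n}_{{\rm{e}}}}{{n}_{{\rm{0}}}}.\tag{5}$$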

Usually, a classical decoding strategy is to lower n0/ntot, which means that the target error needs to be accurately located from a given syndrome. Such a strategy has a limit in performance due to short cycles or strong degeneracy of the code. If a decoder converges to any one of the degenerate errors, the decoding succeeds. A better strategy also has to lower the ratio ne/n0, which will be called the error suppression ratio, by exploiting the degeneracy.
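To make the three counts concrete, the following sketch classifies each pair of tested and estimated errors and tallies n0, ne, and nu. It is only an illustration: Pauli strings are written as text over `IXYZ`, phases are ignored, the stabilizer group is given by a list of generators, and all function names are hypothetical. An estimate whose syndrome does not match is counted in ne, in line with treating non-converged samples as logical errors.

```python
# Symplectic (x, z) bits of single-qubit Paulis; phases are ignored.
BITS = {"I": (0, 0), "X": (1, 0), "Y": (1, 1), "Z": (0, 1)}
NAMES = {v: k for k, v in BITS.items()}

def multiply(a, b):
    """Component-wise product of two Pauli strings, up to a global phase."""
    return "".join(NAMES[(BITS[p][0] ^ BITS[q][0], BITS[p][1] ^ BITS[q][1])]
                   for p, q in zip(a, b))

def commutes(a, b):
    """True if the Pauli strings a and b commute."""
    return sum(BITS[p][0] * BITS[q][1] + BITS[p][1] * BITS[q][0]
               for p, q in zip(a, b)) % 2 == 0

def to_int(a):
    """Pack a Pauli string into an integer bit-vector over GF(2)."""
    v = 0
    for p in a:
        v = (v << 2) | (BITS[p][0] << 1) | BITS[p][1]
    return v

def in_stabilizer_group(generators, a):
    """True if a is a product of the generators (up to phase)."""
    basis = []
    for v in map(to_int, generators):
        for b in basis:
            v = min(v, v ^ b)  # clears the leading bit of b when it is set in v
        if v:
            basis.append(v)
    t = to_int(a)
    for b in basis:
        t = min(t, t ^ b)
    return t == 0

def classify(e, e_hat, generators):
    if e_hat == e:
        return "exact"
    residual = multiply(e, e_hat)
    if not all(commutes(residual, g) for g in generators):
        return "mismatch"  # syndrome of the estimate differs from the true one
    return "degenerate" if in_stabilizer_group(generators, residual) else "undetected"

def count(pairs, generators):
    labels = [classify(e, e_hat, generators) for e, e_hat in pairs]
    n_tot = len(labels)
    n_0 = sum(label != "exact" for label in labels)
    n_e = sum(label in ("mismatch", "undetected") for label in labels)
    n_u = labels.count("undetected")
    return n_tot, n_0, n_e, n_u
```

For instance, with generators `XXXX` and `ZZZZ` and true error `XIII`, the estimate `IXXX` differs from the target but is degenerate to it, so it raises n0 without raising ne.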

In the simulations, \({{{{\bf{E}}}}}^{(i)}\) is drawn from a memoryless depolarizing error model and then decoded as \({\hat{{{{\bf{E}}}}}}^{(i)}\). The pairs \(({{{{\bf{E}}}}}^{(i)},{\hat{{{{\bf{E}}}}}}^{(i)})\) are collected until we have 100 logical error events for a data point; otherwise, an error bar between two crosses is used to show the 95% confidence interval (1.96 times the standard error of the mean). If the maximum number of iterations \({T}_{\max }\) is reached but BP has not converged, the decoding stops, and this error sample is counted as a logical error. \({T}_{\max }\) is chosen to match the literature for comparison if it was specified. (Empirically, \({T}_{\max }\) is chosen to be much larger than the average number of iterations τ.) We will see that MBP4 significantly improves BP4 with better convergence speed (smaller τ) and lower logical error rate (with ne/n0 < 1).
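The error bars mentioned above can be sketched as follows. The code assumes that each sample is an independent Bernoulli trial, so that the standard error of the mean is \(\sqrt{\hat{p}(1-\hat{p})/{n}_{{\rm{tot}}}}\); this binomial form and the function name are assumptions for illustration and are not spelled out in the text.

```python
import math

def logical_error_estimate(n_e, n_tot, z=1.96):
    """Point estimate n_e/n_tot with a z * (standard error) interval.

    Assumes independent Bernoulli samples; z = 1.96 gives roughly a
    95% confidence interval under a normal approximation.
    """
    p = n_e / n_tot
    se = math.sqrt(p * (1.0 - p) / n_tot)
    return p, max(0.0, p - z * se), min(1.0, p + z * se)

if __name__ == "__main__":
    print(logical_error_estimate(100, 10**6))
```

With 100 logical error events the half-width is close to 20% of the estimate, which suggests why a fixed number of error events per data point is a convenient stopping rule.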

Bicycle codes

MacKay et al. constructed families of random bicycle codes, which are sparse-graph codes with performance possibly close to the quantum Gilbert–Varshamov rate10. To have an [[N, K]] random bicycle code, a row-weight k is chosen and two random circulant matrices are generated accordingly to define the check matrix of a quantum code of rate \(\frac{K}{N}\) (after proper row-deletion). Since the minimum distance of the code is no larger than k due to the code construction, it may have a high decoding error-floor when k is small. For [[3786, 946]] bicycle codes, MacKay et al. showed that a code of row-weight k ≥ 24 can have good BP decoding performance. However, the decoding complexity is lower for a check matrix with a smaller k and the syndrome measurements are simpler. Thus we would like to have a good decoder for random bicycle codes of small row-weight.

We first construct bicycle codes with the same parameters as in ref. 10. Figure 1a shows the conventional BP4 performance on [[3786, 946]] bicycle codes for row-weights k = 24, 20, 16, 12. It shows that the code of row-weight 24 is able to achieve a logical error rate of \(1{0}^{-4}\) before hitting the error-floor. Also shown in Fig. 1a are the performance curves from MacKay et al. using binary BP (BP2), which treats Pauli X and Z errors separately. It can be seen that BP4 performs better than BP2, because the correlations between X errors and Z errors are considered28.

a MBP4 with α = 1 (conventional BP4). b MBP4 with appropriate α > 1 chosen for each ϵ. c Average numbers of iterations in a (dotted lines) and b (solid lines). The [M04] curves in a are obtained using a conversion from bit-flip error rate to depolarizing error rate, based on Fig. 6 and Eq. (40) in ref. 10. In a and b, 100 logical error events are collected for each data point; or otherwise, an error bar between two crosses indicates the 95% confidence interval.

Now we show that the performance is significantly improved with MBP4, as shown in Fig. 1b. The error-floor performance is improved, and the code of row-weight 16 is able to achieve a logical error rate of \(1{0}^{-6}\). The minimum distance of a bicycle code is usually unknown, so it is hard to compare its performance with r × BDD for some r. However, we know that the minimum distance is no larger than k for a bicycle code. Thus the performance of a code with k = 16 is at most \({P}_{{{{\rm{BDD}}}}}(\lfloor \frac{16-1}{2}\rfloor )\) for 1 × BDD. On the other hand, the performance of MBP4 on the code of row-weight 16 is close to \({P}_{{\rm{BDD}}}(t)\) with t between 140 and 200 depending on the logical error rate as shown in Fig. 1b, which is better than 17 × BDD.

The average numbers of iterations are shown in Fig. 1c. The convergence behavior is good since the number of average iterations decreases when the physical error rate decreases. It can be seen that the number of average iterations using MBP4 for k = 12 decreases more than in the other three cases of k = 16, 20, and 24 since there are more lower-weight stabilizers and hence more low-weight degenerate errors.

Next, we study whether MBP4 improves the error suppression ratio ne/n0 defined in Eq. (5). Detailed error counts n0, ne, nu, and ntot of (M)BP4 on the two codes of k = 16 and k = 12 at depolarizing rate ϵ = 0.027, 0.037, and 0.049 are provided in Table 1. MBP4 has ne/n0 < 1 for these two codes if ϵ ≤ 0.049. Note that ne/n0 is small if the decoder finds degenerate errors most of the time. We observe that the decoder exploits the degeneracy more for a code with stabilizers of lower-weight. If the depolarizing rate is smaller, the ratio ne/n0 is smaller for MBP4 on both codes. For k ≤ 12, the minimum distance of a bicycle code would be too small to have a low error-floor.

We remark that the conventional BP4 has ne/n0 ≈ 1 for most cases when k ≥ 16. Also listed in Table 1 are the numbers of undetected errors, which are nonzero for k = 12. However, the ratio nu/ntot tends to be small.

To further improve the performance of these bicycle codes, we use AMBP4 with α* ∈ {2.4, 2.3, …, 0.5}. Herein we consider the serial schedule because it accelerates the message update and enlarges the error-correction radius in finite iterations. The performance curves in Fig. 1b are significantly improved, as shown in Fig. 2.

In the case of quantum communication, we may focus on a target logical error rate of \(1{0}^{-4}\) (see ref. 10), where quantum retransmission is possible if necessary59. Consider \(\epsilon =\frac{t}{N}\) for large N. The quantum Gilbert–Varshamov rate6,60 states that there exists a code of rate \(\frac{1}{4}\) with the target logical error rate at ϵ = 0.063. One can see from Fig. 2 that the [[3786, 946]] bicycle code with k = 16 has this target logical error rate at ϵ = 0.057, which is close to the quantum Gilbert–Varshamov rate.
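The quoted Gilbert–Varshamov point can be checked numerically with the sketch below. It assumes the asymptotic bound in the commonly used form \(R=1-{H}_{2}(\delta )-\delta {\log }_{2}3\) with relative distance \(\delta =D/N\approx 2t/N=2\epsilon\); this specific form and the function names are assumptions made for illustration, since the text only cites the bound.

```python
import math

def h2(x):
    """Binary entropy in bits."""
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return -x * math.log2(x) - (1.0 - x) * math.log2(1.0 - x)

def gv_rate(eps):
    """Assumed asymptotic quantum GV rate at eps = t/N, using delta = 2 * eps."""
    delta = 2.0 * eps
    return 1.0 - h2(delta) - delta * math.log2(3)

if __name__ == "__main__":
    print(gv_rate(0.063))  # close to the code rate 946/3786
```

Under these assumptions the rate at ϵ = 0.063 comes out near 1/4, consistent with the statement above.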

Generalized hypergraph-product code

Herein we consider the decoding of an [[882, 48, 16]] GHP code constructed in ref. 33. This code has row-weight 8, which is less than its minimum distance and is thus highly degenerate. The performance of this code under each decoding strategy is shown in Fig. 3. The conventional BP4, whether parallel or serial, does not perform well enough. On the other hand, we find that most errors can be decoded by MBP4 with α ∈ [1.2, 1.5]. The results can be further improved by AMBP4 with α* ∈ {1.5, 1.49, …, 0.5}, for both the parallel and serial schedules.

The maximum number of iterations is \({T}_{\max }=32\). The decoding error rate for curve \(P(\hat{{{{\bf{E}}}}}\,\ne\, {{{\bf{E}}}})\) is the classical block error rate, and for the other (non-BDD) curves, it is the logical error rate. The [PK19] curves are from ref. 33.

For reference, we also plot the performance curves in the literature33 in Fig. 3. The curve “[PK19] BP” is quaternary BP with a layered schedule and the curve “[PK19] BP-OSD-ω” is BP with OSD and additional post-processing. In addition to BP and OSD, BP-OSD-ω has to sort out \({2}^{\omega }\) errors in ω unreliable coordinates, so its complexity is high. For this [[882, 48, 16]] GHP code, as shown in Fig. 3, the performance of AMBP4 is better than BP-OSD-ω with ω = 15. The complexity of AMBP4 is low enough that we can simulate down to lower logical error rates.

We also plot several r × BDD performance curves for comparison. Observe that the curve of serial AMBP4 has a slope roughly aligned with 1 × BDD, but its performance is close to 8 × BDD at a logical error rate of \(1{0}^{-6}\), since more low-weight errors are corrected. We also draw the curve of the classical block error rate \(P(\hat{{{{\bf{E}}}}}\,\ne\, {{{\bf{E}}}})={n}_{{{{\rm{0}}}}}/{n}_{{{{\rm{tot}}}}}\). It becomes the logical error rate after multiplication by the ratio ne/n0. Figure 3 shows that the improvement by the ratio ne/n0 is quite significant, which means that AMBP4 is able to exploit the code degeneracy to have better performance.

Surface and toric codes

In this subsection, we simulate the surface codes with a 45° rotation for lower overhead57,58. Our analysis can be applied to rotated toric codes as well.

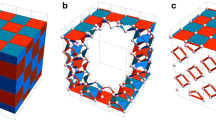

An \([[{L}^{2},1,L]]\) surface code for an odd integer L can be defined on an L × L square lattice. Figure 4a provides an example of L = 5. A stabilizer generator of a surface code is of weight 2 or 4, independent of the minimum distance. Consequently, a large surface code is highly-degenerate. As mentioned in the Introduction, the conventional BP cannot handle highly-degenerate quantum codes since there could be many errors of similar likelihood, so BP will hesitate among these errors. The decoding performance curves of the conventional (parallel) BP4 and (serial) MBP4 on several surface codes are shown in Fig. 5. It can be seen that the conventional BP4 does not work well on these surface codes. Moreover, the logical error rate is worse for a surface code with a larger minimum distance.

a \([[{L}^{2},1,L]]\) surface code with L = 5. b \([[{L}^{2},2,L]]\) toric code with L = 4. In both figures, a qubit is represented by a yellow box numbered from 1 to N. Since the toric code is defined on a torus, there are orange boxes on the right and bottom in b, each representing the qubit of the same number. An X- or Z-type stabilizer is indicated by a label W ∈ {X, Z} between its neighboring qubits. For example, in a, the label X between qubits 1 and 2 is \({X}_{1}{X}_{2}\) and the label Z between qubits 1, 2, 6, and 7 is \({Z}_{1}{Z}_{2}{Z}_{6}{Z}_{7}\).

On the other hand, serial MBP4 is able to decode the surface codes, as shown in Fig. 5. For L = 17, the decoding performance of serial MBP4 with α = 0.65 is around 1 × BDD to 2 × BDD, which agrees with Gallager’s expectation on BP decoding of classical codes37.

How MBP4 decodes the surface codes is examined as follows. As previously discussed, ne/n0 would be small if a decoder can find degenerate errors of the target, which is indeed the case for MBP4, as shown in Fig. 6a. We also observe undetected error events in the serial MBP4 decoding. For the conventional BP4, we have ne/n0 ≈ 1 and the undetected error rate ≈ 0 for L > 7. Thus the improvement of serial MBP4 over BP4 comes at the cost of some undetected error events, as shown in Fig. 6b. (A similar phenomenon was also observed in the neural BP decoder42.) This unwanted phenomenon is not surprising, since a large step-size is used so that BP may jump too far, causing logical errors. (We remark that this is not a random search, or otherwise the ratio ne/n0 would be as large as 3/4 since there are four logical operators, I, X, Y, and Z, for a logical qubit.) However, the undetected error rate is smaller for larger L, so this is acceptable for the purpose of fault-tolerant quantum computation. Figure 6c compares the average numbers of iterations for serial MBP4 and conventional BP4. It can be seen that serial MBP4 uses fewer iterations than the conventional BP4, and yet the performance of serial MBP4 is better. This means that the convergence behavior of serial MBP4 is more accurate and the computation is more economical and effective.

Next, we verify that the runtime of MBP4 is O(Nj) per iteration. First, consider the toric codes with mean column-weight j = 4. We test serial MBP4 with α = 0.75 at depolarizing rate of 0.32 on one core (4.9 GHz) of an Intel i9-9900K machine. The average runtime per iteration is shown in Fig. 7, which is obviously linear in N. Then we consider the surface codes, which have a mean column-weight slightly smaller than 4. As expected, the average runtime per iteration is again linear in N and the slope is smaller than that for the toric codes, as shown in Fig. 7.

Although MBP4 succeeds in decoding topological codes in our simulations, it is also observed that the performance of MBP4 on surface codes saturates for large L, i.e., the slope of the performance curve does not increase as L increases. For better decoding performance, we use AMBP4 with α* ∈ {1.0, 0.99, …, 0.5}, and the performance for L = 17 is greatly improved, as shown in Fig. 5. In Fig. 8, we plot the performance of AMBP4 for each surface code of lattice size L ∈ {3, 5, 7, …, 17} and an error threshold of about 16% is observed. Similarly, a slightly higher error threshold of roughly 17.5% can be observed on the toric codes, using AMBP4 decoding48.

Finally, we compare various polynomial-time decoders in terms of error thresholds and computation complexity in Table 2. Let \(\epsilon_{\text{surf}}\) and \(\epsilon_{\text{toric}}\) denote the error thresholds for the surface and toric codes, respectively. Certain decoders can approach the quantum hashing bound (which is roughly 18.9%; refs. 6,60,61) for the surface or toric codes, but they will not be considered due to their high complexities62,63,64. MWPM achieves \(\epsilon_{\text{surf}}\approx \epsilon_{\text{toric}}=15.5\%\) (refs. 17,19). RG combined with BP (RG-BP) achieves \(\epsilon_{\text{toric}}=16.4\%\) (ref. 22). The matrix product states (MPS) decoder achieves \(17\% \le \epsilon_{\text{surf}}\le 18.5\%\) with a specified complexity of \(O({N}^{2})\) (ref. 65). Union-find (UF) has complexity almost linear in N, but its decoding performance is slightly worse than MWPM66. BP-assisted MWPM (BP-MWPM) has high thresholds for both the surface and toric codes, but its complexity is \(O({N}^{2.5})\) (ref. 32). AMBP4 achieves roughly \(\epsilon_{\text{surf}}=16\%\) and \(\epsilon_{\text{toric}}=17.5\%\), and its complexity is only \(O(N\log \log N)\). Thus AMBP4 is very competitive in both decoding performance and computation complexity.
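The hashing-bound value quoted above can be reproduced with a short calculation. The sketch below solves \(1-H(1-p,\,p/3,\,p/3,\,p/3)=0\) for the depolarizing rate p by bisection; the function names are hypothetical.

```python
import math

def hashing_rate(p):
    """1 - H(1-p, p/3, p/3, p/3) in bits for the depolarizing channel."""
    if p <= 0.0:
        return 1.0
    return 1.0 + (1.0 - p) * math.log2(1.0 - p) + p * math.log2(p / 3.0)

def hashing_threshold(lo=1e-9, hi=0.5, iters=60):
    """Bisection for the rate p at which the hashing rate crosses zero."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if hashing_rate(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    print(hashing_threshold())
```

The root lies near p ≈ 0.189, which is the reference point against which the thresholds in this comparison are usually read.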

Discussion

We analyzed the energy topology of BP and proposed an efficient BP decoding algorithm for quantum codes called MBP. MBP exploits the degeneracy of a quantum code by finding degenerate errors of the target. MBP is competitive in both decoding performance and computation complexity. The reader can find a detailed comparison of the thresholds and complexities of MWPM- or BP-based decoders on various topological codes (including color codes and XZZX codes) over depolarizing errors in Table II of ref. 67.

It is known that BP can be treated as a recurrent neural network (RNN)68. Similarly, our MBP induces an RNN with inhibition without the pre-training process. This may explain why RNN decoders can work on degenerate codes42. Thus, one may consider an MBP-based neural network decoder, which naturally generalizes the BP-based neural networks42,68. One would have an adjustable parameter \({\alpha }_{mn,i}\) for each edge (m, n) at iteration i.

In AMBP4, one has to find a proper value for α*. An efficient strategy to select α* is desired. A clue is that α* should be related to the properties of the error syndrome. For example, a syndrome vector of high weight usually corresponds to an error of high weight and a smaller value of α should be chosen.

Our decoder can be extended for fault-tolerant quantum computation with imperfect quantum gates, following the initial study of BP decoding for both data and syndrome errors56. This is our ongoing work.

Methods

BP with additional memory effects (MBP)

Decoding an [[N, K]] quantum code subject to an (unknown) error \({{{\bf{E}}}}\in {{{{\mathcal{G}}}}}_{N}\) is to estimate an \(\hat{{{{\bf{E}}}}}\in \pm {{{\bf{E}}}}{{{\mathcal{S}}}}\), given a check matrix \({{\bf{S}}}\in {\{I,X,Y,Z\}}^{M\times N}\) (where M ≥ N − K), a syndrome \({{\bf{z}}}\in {\{0,1\}}^{M}\), a real α > 0, and initial LLRs \({\{{{{{\mathbf{\Lambda }}}}}_{n} = ({{{{\mathbf{\Lambda }}}}}_{n}^{X},{{{{\mathbf{\Lambda }}}}}_{n}^{Y},{{{{\mathbf{\Lambda }}}}}_{n}^{Z})\in {{\mathbb{R}}}^{3}\}}_{n = 1}^{N}\) of the error rate at each qubit (see ref. 30). The error syndrome \({{\bf{z}}}\in {\{0,1\}}^{M}\) is defined by

where \({{\bf{S}}}_{m}\) is the m-th row of S. For simplicity, an \({{\bf{E}}}\in {\{I,X,Y,Z\}}^{\otimes N}\) is represented by \({{\bf{E}}}=({{\bf{E}}}_{1},{{\bf{E}}}_{2},\ldots ,{{\bf{E}}}_{N})\in {\{I,X,Y,Z\}}^{N}\).
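A minimal sketch of the syndrome computation is given below, assuming the usual convention that the m-th syndrome bit is the parity of the positions at which the error anticommutes with the m-th row of S (two single-qubit Paulis anticommute when both are non-identity and different). The function names and the use of text strings over `IXYZ` are illustrative choices, not notation from the paper.

```python
def anticommute(a, b):
    """True if single-qubit Paulis a and b anticommute."""
    return a != "I" and b != "I" and a != b

def syndrome(error, checks):
    """Binary syndrome of an N-fold Pauli error for a list of check rows."""
    return [sum(anticommute(e, s) for e, s in zip(error, row)) % 2
            for row in checks]

if __name__ == "__main__":
    # Check matrix of the five-qubit code, used here only as a small example.
    checks = ["XZZXI", "IXZZX", "XIXZZ", "ZXIXZ"]
    print(syndrome("XIIII", checks))
```

A stabilizer always yields the all-zero syndrome, which is why degenerate errors cannot be told apart from the syndrome alone.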

The LLR value \({\mathbf{\Lambda}}_{n}^{W}\triangleq \ln ({{\bf{p}}}_{n}^{I}/{{\bf{p}}}_{n}^{W})\) for W ∈ {X, Y, Z} is initialized by a distribution vector \({{{{\bf{p}}}}}_{n}=({{{{\bf{p}}}}}_{n}^{I},{{{{\bf{p}}}}}_{n}^{X},{{{{\bf{p}}}}}_{n}^{Y},{{{{\bf{p}}}}}_{n}^{Z})=(1-{\epsilon }_{0},\frac{{\epsilon }_{0}}{3},\frac{{\epsilon }_{0}}{3},\frac{{\epsilon }_{0}}{3})\) for independent depolarizing errors. The value of \({\epsilon }_{0}\) can be the channel error rate ϵ or an independent fixed value in [0, 1]. When the LLR is initialized by a fixed value, this is referred to as fixed initialization.

We denote \({{{\mathcal{N}}}}(m)=\{n:{{{{\bf{S}}}}}_{mn}\,\ne\, I\}\) and \({{{\mathcal{M}}}}(n)=\{m:{{{{\bf{S}}}}}_{mn}\,\ne\, I\}\). Define functions \({\lambda }_{W}:{{\mathbb{R}}}^{3}\to {\mathbb{R}}\)

for W ∈ {X, Y, Z}. Also define an operation ⊞ : for a set of k real scalars \({a}_{1},{a}_{2},\ldots ,{a}_{k}\in {\mathbb{R}}\),

We may simplify the notation \({{{\mathcal{M}}}}(n)\setminus \{m\}\) as \({{{\mathcal{M}}}}(n)\setminus m\).
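For orientation, the two building blocks can be sketched as follows. The displayed definitions are not reproduced in this text, so the forms used here are assumptions based on standard log-domain BP: \({\lambda }_{W}\) is taken as the LLR that a qubit error commutes rather than anticommutes with W, and ⊞ as the usual tanh rule. All function names are hypothetical.

```python
import math

def initial_llr(eps0):
    """ln(p^I / p^W) for a depolarizing prior (1 - eps0, eps0/3, eps0/3, eps0/3)."""
    return math.log((1.0 - eps0) / (eps0 / 3.0))

def lam(w, gamma):
    """Assumed form: ln[(1 + exp(-gamma[w])) / sum_{v != w} exp(-gamma[v])]."""
    num = 1.0 + math.exp(-gamma[w])
    den = sum(math.exp(-gamma[v]) for v in "XYZ" if v != w)
    return math.log(num / den)

def boxplus(values):
    """Assumed form: 2 * atanh(prod_k tanh(a_k / 2))."""
    prod = 1.0
    for a in values:
        prod *= math.tanh(a / 2.0)
    prod = max(-1.0 + 1e-15, min(1.0 - 1e-15, prod))  # keep atanh finite
    return 2.0 * math.atanh(prod)

if __name__ == "__main__":
    g = {w: initial_llr(0.1) for w in "XYZ"}
    print(lam("Z", g), boxplus([0.7, -1.2]))
```

Under these assumptions, a depolarizing prior with \({\epsilon }_{0}=0.1\) gives \({\lambda }_{Z}=\ln 14\), the log-ratio of the probability of {I, Z} to that of {X, Y}.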

Algorithm 1:

Quaternary MBP (MBP4)

Input: \({{\bf{S}}}\in {\{I,X,Y,Z\}}^{M\times N}\), \({{\bf{z}}}\in {\{0,1\}}^{M}\), \({T}_{\max }\in {{\mathbb{Z}}}_{+}\), a real α > 0, and initial LLRs \({\{({{{{\mathbf{\Lambda }}}}}_{n}^{X},{{{{\mathbf{\Lambda }}}}}_{n}^{Y},{{{{\mathbf{\Lambda }}}}}_{n}^{Z})\in {{\mathbb{R}}}^{3}\}}_{n = 1}^{N}\).

Initialization. For n = 1 to N and \(m\in {{{\mathcal{M}}}}(n)\), let

Horizontal Step. For m = 1 to M and \(n\in {{{\mathcal{N}}}}(m)\), compute

Vertical Step. For n = 1 to N and W ∈ {X, Y, Z}, compute

- (Hard Decision.) Let \(\hat{{{{\bf{E}}}}}=({\hat{{{{\bf{E}}}}}}_{1},{\hat{{{{\bf{E}}}}}}_{2},\ldots ,{\hat{{{{\bf{E}}}}}}_{N})\), where \({\hat{{{{\bf{E}}}}}}_{n}=I\) if \({{{{\mathbf{\Gamma }}}}}_{n}^{W} \,>\, 0\) for all W ∈ {X, Y, Z}, and \({\hat{{{{\bf{E}}}}}}_{n}=\arg \mathop{\min }\limits_{W\in \{X,Y,Z\}}{{{{\mathbf{\Gamma }}}}}_{n}^{W}\), otherwise.

- If \(\langle \hat{{{{\bf{E}}}}},{{{{\bf{S}}}}}_{m}\rangle ={{{{\bf{z}}}}}_{m}\,\forall \,m\), halt and return “CONVERGE”;

- Otherwise, if the maximum number of iterations \({T}_{\max }\) is reached, halt and return “FAIL”;

- (Fixed Inhibition.) Otherwise, for n = 1 to N, \(m\in {{{\mathcal{M}}}}(n)\), and W ∈ {X, Y, Z}, compute

$${{{{\mathbf{\Gamma }}}}}_{n\to m}^{W}={{{{\mathbf{\Gamma }}}}}_{n}^{W}-\,\langle W,{{{{\bf{S}}}}}_{mn}\rangle {{{{\mathbf{\Delta }}}}}_{m\to n}.\tag{10}$$

- Repeat from the horizontal step.
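The overall flow of Algorithm 1 can be sketched in code as follows, for a parallel schedule. Only the hard decision, the stopping rules, and the fixed inhibition of Eq. (10) are taken directly from the steps above; the initialization, the horizontal update, and the vertical update are assumed to follow standard log-domain quaternary BP with the summed check messages scaled by 1/α, and the forms of `lam` and `boxplus` are likewise assumptions. The function names and the string representation of Paulis are hypothetical.

```python
import math

XZ = {"I": (0, 0), "X": (1, 0), "Y": (1, 1), "Z": (0, 1)}

def anti(a, b):
    """1 if single-qubit Paulis a and b anticommute, else 0."""
    return (XZ[a][0] * XZ[b][1] + XZ[a][1] * XZ[b][0]) % 2

def lam(w, g):
    """Assumed form: LLR that the qubit error commutes (vs anticommutes) with w."""
    return math.log((1.0 + math.exp(-g[w]))
                    / sum(math.exp(-g[v]) for v in "XYZ" if v != w))

def boxplus(values):
    """Assumed tanh rule; the product is clamped so that atanh stays finite."""
    prod = 1.0
    for a in values:
        prod *= math.tanh(a / 2.0)
    prod = max(-1.0 + 1e-15, min(1.0 - 1e-15, prod))
    return 2.0 * math.atanh(prod)

def mbp4(S, z, eps0, alpha=1.0, t_max=50):
    """Parallel-schedule sketch. S: list of Pauli strings, z: list of 0/1 bits."""
    M, N = len(S), len(S[0])
    llr0 = math.log((1.0 - eps0) / (eps0 / 3.0))
    nbr_n = [[n for n in range(N) if S[m][n] != "I"] for m in range(M)]  # N(m)
    nbr_m = [[m for m in range(M) if S[m][n] != "I"] for n in range(N)]  # M(n)
    # Initialization (assumed): Gamma_{n->m} = Lambda_n.
    g_nm = {(n, m): {w: llr0 for w in "XYZ"} for n in range(N) for m in nbr_m[n]}
    e_hat = "I" * N
    for _ in range(t_max):
        # Horizontal step (assumed): check-to-variable messages Delta_{m->n}.
        delta = {}
        for m in range(M):
            for n in nbr_n[m]:
                ext = [lam(S[m][k], g_nm[(k, m)]) for k in nbr_n[m] if k != n]
                delta[(m, n)] = (-1) ** z[m] * boxplus(ext)
        # Vertical step (assumed): summed messages are scaled by 1/alpha.
        gam = [{w: llr0 + sum(delta[(m, n)] for m in nbr_m[n]
                              if anti(w, S[m][n])) / alpha
                for w in "XYZ"} for n in range(N)]
        # Hard decision and syndrome check.
        e_hat = "".join("I" if min(g.values()) > 0 else min("XYZ", key=g.get)
                        for g in gam)
        if all(sum(anti(e_hat[n], S[m][n]) for n in nbr_n[m]) % 2 == z[m]
               for m in range(M)):
            return e_hat, True
        # Fixed inhibition, Eq. (10): subtract the unscaled Delta_{m->n}.
        for (n, m), g in g_nm.items():
            for w in "XYZ":
                g[w] = gam[n][w] - anti(w, S[m][n]) * delta[(m, n)]
    return e_hat, False
```

On a toy check matrix with rows `ZZI` and `IZZ` and syndrome (1, 0), calling `mbp4(["ZZI", "IZZ"], [1, 0], 0.1)` returns a weight-one estimate on the first qubit; X and Y are equally likely there, and the sketch breaks the tie in favor of X.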

Motivated by the energy topology of a degenerate quantum code and gradient descent energy optimization, we propose MBP4 in Algorithm 1. MBP4 has variable-to-check messages \({\lambda }_{{{{{\bf{S}}}}}_{mn}}({{{{\mathbf{\Gamma }}}}}_{n\to m})\) and check-to-variable messages \({{\mathbf{\Delta }}}_{m\to n}\), similarly to the log-BP in ref. 30; however, the message \({{\mathbf{\Delta }}}_{m\to n}\) is used differently when generating \({{{{\mathbf{\Gamma }}}}}_{n\to m}^{W}\) in Eq. (10). In particular, we can rewrite Eq. (10) as

The term \(-\langle W,{{\bf{S}}}_{mn}\rangle {{\mathbf{\Delta }}}_{m\to n}\) is called inhibition, which provides adequate strength to resist the wrong beliefs looping in the short cycles. Unlike refs. 28,30, where the corresponding inhibition is also scaled by 1/α, we suggest keeping this inhibition strength (Eq. (11)), since this part is the belief inherited from check node m, and it must remain unchanged when we update the belief in variable n so that the decoding is less affected by the short cycles. Consequently, this introduces additional memory effects in MBP. How to choose the factor α is an intriguing question; see ref. 48 for more discussion. For reference, MBP4 can also be defined in the linear domain.

Remark 1

In Algorithm 1, one can verify that

It is more efficient to update \({\lambda }_{{{\bf{S}}}_{mn}}({\mathbf{\Gamma }}_{n\to m})\) in this way: for each n, computing \({\lambda }_{{{\bf{S}}}_{mn}}({\mathbf{\Gamma }}_{n})\) needs at most three computations of \({\lambda }_{{{\bf{S}}}_{mn}}(\cdot )\), one for each Smn ∈ {X, Y, Z}, whereas directly computing \({\lambda }_{{{\bf{S}}}_{mn}}({\mathbf{\Gamma }}_{n\to m})\) needs \(| {\mathcal{M}}(n)|\) (usually ≥3) computations of \({\lambda }_{{{\bf{S}}}_{mn}}(\cdot )\). In addition, the computation in the horizontal step can be simplified as in Remarks 1 and 4 of ref. 30. The MBP4 complexity per iteration is then proportional to the number of edges Nj, and thus the overall complexity is \(O(Nj{T}_{\max })\), or \(O(Nj\log \log N)\) when \({T}_{\max }\) is chosen proportional to \(\log \log N\).
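
The saving described in Remark 1 can be checked numerically. The sketch below assumes that the scalar message is the log-likelihood ratio \({\lambda }_{W}({\mathbf{\Gamma }})=\ln \left[(1+{e}^{-{\Gamma }^{W}})/{\sum }_{W^{\prime} \ne W}{e}^{-{\Gamma }^{W^{\prime} }}\right]\), which is our reading of the log-BP of ref. 30 and is not spelled out in this section. Under that assumption, applying Eq. (10) shifts \({\lambda }_{{{\bf{S}}}_{mn}}\) by exactly \(-{\mathbf{\Delta }}_{m\to n}\), so one evaluation of λ per Pauli type can be shared by all neighboring checks. The function names and the numerical values are illustrative only.

```python
import math

def lam(w, gamma):
    """Assumed log-BP scalar message; w in {0, 1, 2} indexes X, Y, Z."""
    numerator = 1.0 + math.exp(-gamma[w])
    denominator = sum(math.exp(-g) for k, g in enumerate(gamma) if k != w)
    return math.log(numerator / denominator)

def inhibit(gamma, s, delta):
    """Eq. (10) on one edge: subtract delta from the components W != S_mn.

    For a non-identity S_mn, <W, S_mn> = 1 exactly when W differs from S_mn.
    """
    return [g - (delta if k != s else 0.0) for k, g in enumerate(gamma)]

gamma_n = [0.7, -1.2, 2.5]   # (Gamma_n^X, Gamma_n^Y, Gamma_n^Z), arbitrary values
s_mn, delta_mn = 2, 0.9      # S_mn = Z and an arbitrary check-to-variable message

direct = lam(s_mn, inhibit(gamma_n, s_mn, delta_mn))   # lambda of Gamma_{n->m}
shortcut = lam(s_mn, gamma_n) - delta_mn               # reuse lambda of Gamma_n
```

The two quantities agree up to floating-point rounding, which is why λ only needs to be evaluated once per Pauli type at each variable node.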

Adaptive MBP

Herein we propose a variation of MBP4 with α chosen adaptively, as shown in Algorithm 2. The value of α controls the search radius of MBP4. Typically, a fixed α is chosen so that BP focuses on an error-correction region between 1 × BDD and 2 × BDD. For highly-degenerate codes, we intend to correct errors in a much wider region, and thus we need to consider variations in α. More specifically, α should be chosen according to the given syndrome vector. Precisely determining a required value of α helps to achieve the desired performance, but in general, it is difficult to do so. Generating a solution by referring to multiple instances of the decoder is an important technique in Monte Carlo sampling methods (cf. parallel tempering in ref. 62), as well as in neural networks (cf. Fig. 4 of ref. 68). This is like using an ε-net of α. Thus we conduct multiple instances of MBP4 with different values of α, and choose a valid (syndrome-matched) solution with the largest (the most conservative) value of α. This value of α is adaptively chosen and denoted by α*, so Algorithm 2 is referred to as AMBP4. For this algorithm, the prefix parallel/serial is used to indicate the schedule type of the oracle function MBP4.

Note that in Algorithm 2 each value of αi is tested in a sequential manner; these αi’s can be tested in parallel if the physical resources for implementation are available.
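
A parallel evaluation of the candidate values could be organized as in the following Python sketch. It runs every candidate α concurrently and then keeps the converged result with the largest α, which matches the selection rule described above. The oracle signature, the placeholder oracle `toy_mbp4`, and the use of a thread pool are assumptions of this sketch; for a CPU-bound pure-Python decoder, a process pool or a vectorized implementation would likely be needed to obtain a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor

def ambp4_parallel(S, z, T_max, Lambda, alphas, mbp4, workers=None):
    """Run the oracle for all alphas at once; alphas must be in decreasing order."""
    alphas = list(alphas)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as `alphas`.
        results = list(pool.map(lambda a: mbp4(S, z, T_max, a, Lambda), alphas))
    for alpha, (status, E_hat) in zip(alphas, results):
        if status == "CONVERGE":
            return "SUCCESS", E_hat, alpha      # largest converged alpha
    last_estimate = results[-1][1] if results else None
    return "FAIL", last_estimate, None

def toy_mbp4(S, z, T_max, alpha, Lambda):
    """Hypothetical stand-in for MBP4: pretends to converge only if alpha <= 0.8."""
    return ("CONVERGE" if alpha <= 0.8 else "FAIL"), ["I"]

status, E_hat, alpha_star = ambp4_parallel(
    None, None, 100, None, [1.0, 0.9, 0.8, 0.7], toy_mbp4
)
```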

Algorithm 2: Adaptive MBP4 (AMBP4)

Input: \({{\bf{S}}}\in {\{I,X,Y,Z\}}^{M\times N}\), \({{\bf{z}}}\in {\{0,1\}}^{M}\), \({T}_{\max }\in {{\mathbb{Z}}}_{+}\), \({\{{\mathbf{\Lambda }}_{n} = ({\mathbf{\Lambda }}_{n}^{X},{\mathbf{\Lambda }}_{n}^{Y},{\mathbf{\Lambda }}_{n}^{Z})\in {{\mathbb{R}}}^{3}\}}_{n = 1}^{N}\), a sequence of real values \({\alpha }_{1} > {\alpha }_{2} > \cdots > {\alpha }_{l} > 0\), and an oracle function MBP4.

Initialization: Let i = 1.

MBP Step: Run MBP\({}_{4}({{{\bf{S}}}},\,{{{\bf{z}}}},\,{T}_{\max },\,{\alpha }_{i},\,\{{{{{\mathbf{\Lambda }}}}}_{n}\})\), which will return “CONVERGE” or “FAIL” with estimated \(\hat{{{{\bf{E}}}}}\in {\{I,X,Y,Z\}}^{N}\).

Adaptive Check:

-

If the return indicator is “CONVERGE”, then return “SUCCESS” (with valid \(\hat{{{\bf{E}}}}\) and α* = αi);

-

Let i ← i + 1. If i > l, return “FAIL” (with invalid \(\hat{{{{\bf{E}}}}}\));

-

Otherwise, repeat from the MBP Step.
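
The control flow of Algorithm 2 can be sketched in Python as follows. The oracle is passed in as a function that returns a status string and an estimate; its signature, the validation of the α sequence, and the placeholder oracle `toy_mbp4` are assumptions of this sketch rather than a definitive implementation.

```python
def ambp4(S, z, T_max, Lambda, alphas, mbp4):
    """Sequential AMBP4: try alpha_1 > alpha_2 > ... > alpha_l > 0 in order."""
    alphas = list(alphas)
    decreasing = all(a > b for a, b in zip(alphas, alphas[1:]))
    if not alphas or alphas[-1] <= 0 or not decreasing:
        raise ValueError("alphas must be a strictly decreasing positive sequence")
    E_hat = None
    for alpha in alphas:                        # MBP Step for i = 1, ..., l
        status, E_hat = mbp4(S, z, T_max, alpha, Lambda)
        if status == "CONVERGE":                # Adaptive Check
            return "SUCCESS", E_hat, alpha      # alpha* = alpha_i
    return "FAIL", E_hat, None                  # i > l: no alpha converged

calls = []

def toy_mbp4(S, z, T_max, alpha, Lambda):
    """Hypothetical stand-in for MBP4: pretends to converge only if alpha <= 0.8."""
    calls.append(alpha)
    return ("CONVERGE" if alpha <= 0.8 else "FAIL"), ["I"]

status, E_hat, alpha_star = ambp4(None, None, 100, None, [1.0, 0.9, 0.8, 0.7], toy_mbp4)
```

Because the candidates are tried in decreasing order and the loop stops at the first convergence, the returned α* is the largest candidate value that yields a syndrome-matched estimate, and smaller values are never evaluated.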

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Shor, P. W. Algorithms for quantum computation: Discrete logarithms and factoring. In Proc. 35th Annual Symposium on Foundations of Computer Science (FOCS). 124–134 (IEEE, 1994).

Suchara, M. et al. QuRE: The quantum resource estimator toolbox. In Proc. IEEE 31st International Conference on Computer Design (ICCD). 419–426 (IEEE, 2013).

Wang, Y. et al. Single-qubit quantum memory exceeding ten-minute coherence time. Nat. Photonics 11, 646–650 (2017).

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505–510 (2019).

Shor, P. W. Fault-tolerant quantum computation. In Proc. 37th Annual Symposium on Foundations of Computer Science (FOCS). 56–65 (IEEE Computer Society, 1996).

Gottesman, D. Stabilizer Codes and Quantum Error Correction. Ph.D. thesis, California Institute of Technology (1997).

Calderbank, A. R., Rains, E. M., Shor, P. W. & Sloane, N. J. A. Quantum error correction via codes over GF(4). IEEE Trans. Inf. Theory 44, 1369–1387 (1998).

Kitaev, A. Y. Fault-tolerant quantum computation by anyons. Ann. Phys. 303, 2–30 (2003).

Bombin, H. & Martin-Delgado, M. A. Topological quantum distillation. Phys. Rev. Lett. 97, 180501 (2006).

MacKay, D. J. C., Mitchison, G. & McFadden, P. L. Sparse-graph codes for quantum error correction. IEEE Trans. Inf. Theory 50, 2315–2330 (2004).

Tillich, J.-P. & Zémor, G. Quantum LDPC codes with positive rate and minimum distance proportional to the square root of the blocklength. IEEE Trans. Inf. Theory 60, 1193–1202 (2014).

Kovalev, A. A. & Pryadko, L. P. Quantum Kronecker sum-product low-density parity-check codes with finite rate. Phys. Rev. A 88, 012311 (2013).

Kuo, K.-Y. & Lu, C.-C. On the hardnesses of several quantum decoding problems. Quantum Inf. Process. 19, 1–17 (2020).

Iyer, P. & Poulin, D. Hardness of decoding quantum stabilizer codes. IEEE Trans. Inf. Theory 61, 5209–5223 (2015).

Edmonds, J. Paths, trees, and flowers. Can. J. Math. 17, 449–467 (1965).

Dennis, E., Kitaev, A., Landahl, A. & Preskill, J. Topological quantum memory. J. Math. Phys. 43, 4452–4505 (2002).

Wang, C., Harrington, J. & Preskill, J. Confinement-Higgs transition in a disordered gauge theory and the accuracy threshold for quantum memory. Ann. Phys. 303, 31–58 (2003).

Raussendorf, R., Harrington, J. & Goyal, K. A fault-tolerant one-way quantum computer. Ann. Phys. 321, 2242–2270 (2006).

Wang, D. S., Fowler, A. G., Stephens, A. M. & Hollenberg, L. C. L. Threshold error rates for the toric and planar codes. Quantum Inf. Comput. 10, 456–469 (2010).

Fowler, A. G., Whiteside, A. C. & Hollenberg, L. C. L. Towards practical classical processing for the surface code. Phys. Rev. Lett. 108, 180501 (2012).

Fowler, A. G. Minimum weight perfect matching of fault-tolerant topological quantum error correction in average O(1) parallel time. Quantum Inf. Comput. 15, 145–158 (2015).

Duclos-Cianci, G. & Poulin, D. Fast decoders for topological quantum codes. Phys. Rev. Lett. 104, 050504 (2010).

Wang, D. S., Fowler, A. G., Hill, C. D. & Hollenberg, L. C. L. Graphical algorithms and threshold error rates for the 2d color code. Quantum Inf. Comput. 10, 780–802 (2010).

Bombin, H., Duclos-Cianci, G. & Poulin, D. Universal topological phase of two-dimensional stabilizer codes. N. J. Phys. 14, 073048 (2012).

Delfosse, N. Decoding color codes by projection onto surface codes. Phys. Rev. A 89, 012317 (2014).

Sarvepalli, P. & Raussendorf, R. Efficient decoding of topological color codes. Phys. Rev. A 85, 022317 (2012).

Stephens, A. M. Efficient fault-tolerant decoding of topological color codes. Preprint at https://arxiv.org/abs/1402.3037 (2014).

Kuo, K.-Y. & Lai, C.-Y. Refined belief propagation decoding of sparse-graph quantum codes. IEEE J. Sel. Areas Inf. Theory 1, 487–498 (2020).

Kuo, K.-Y. & Lai, C.-Y. Refined belief-propagation decoding of quantum codes with scalar messages. In Proc. IEEE Globecom Workshops 1–6 (IEEE, 2020).

Lai, C.-Y. & Kuo, K.-Y. Log-domain decoding of quantum LDPC codes over binary finite fields. In IEEE Transactions on Quantum Engineering 1–15 (IEEE, 2021).

Poulin, D. & Chung, Y. On the iterative decoding of sparse quantum codes. Quantum Inf. Comput. 8, 987–1000 (2008).

Criger, B. & Ashraf, I. Multi-path summation for decoding 2D topological codes. Quantum 2, 102 (2018).

Panteleev, P. & Kalachev, G. Degenerate quantum LDPC codes with good finite length performance. Quantum 5, 585 (2021).

Roffe, J., White, D. R., Burton, S. & Campbell, E. T. Decoding across the quantum LDPC code landscape. Phys. Rev. Res. 2, 043423 (2020).

Grospellier, A., Grouès, L., Krishna, A. & Leverrier, A. Combining hard and soft decoders for hypergraph product codes. Quantum 5, 432 (2021).

Davey, M. & MacKay, D. Low-density parity check codes over GF(q). IEEE Commun. Lett. 2, 165–167 (1998).

Gallager, R. G. Low-Density Parity-Check Codes. Research Monograph Series (MIT Press, 1963).

MacKay, D. J. C. Good error-correcting codes based on very sparse matrices. IEEE Trans. Inf. Theory 45, 399–431 (1999).

Raveendran, N. & Vasić, B. Trapping sets of quantum LDPC codes. Quantum 5, 562 (2021).

Torlai, G. & Melko, R. G. Neural decoder for topological codes. Phys. Rev. Lett. 119, 030501 (2017).

Krastanov, S. & Jiang, L. Deep neural network probabilistic decoder for stabilizer codes. Sci. Rep. 7, 11003 (2017).

Liu, Y.-H. & Poulin, D. Neural belief-propagation decoders for quantum error-correcting codes. Phys. Rev. Lett. 122, 200501 (2019).

Maskara, N., Kubica, A. & Jochym-O’Connor, T. Advantages of versatile neural-network decoding for topological codes. Phys. Rev. A 99, 052351 (2019).

Fossorier, M. & Lin, S. Soft-decision decoding of linear block codes based on ordered statistics. IEEE Trans. Inf. Theory 41, 1379–1396 (1995).

Bruck, J. & Blaum, M. Neural networks, error-correcting codes, and polynomials over the binary n-cube. IEEE Trans. Inf. Theory 35, 976–987 (1989).

Yedidia, J. S., Freeman, W. T. & Weiss, Y. Constructing free-energy approximations and generalized belief propagation algorithms. IEEE Trans. Inf. Theory 51, 2282–2312 (2005).

Lucas, R., Bossert, M. & Breitbach, M. On iterative soft-decision decoding of linear binary block codes and product codes. IEEE J. Sel. Areas Commun. 16, 276–296 (1998).

Kuo, K.-Y. & Lai, C.-Y. Exploiting degeneracy in belief propagation decoding of quantum codes. Preprint at https://arxiv.org/abs/2104.13659 (2021).

Hopfield, J. J. Neurons with graded response have collective computational properties like those of two-state neurons. Proc. Nat. Acad. Sci. USA 81, 3088–3092 (1984).

Hopfield, J. J. & Tank, D. W. “Neural” computation of decisions in optimization problems. Biol. Cybern. 52, 141–152 (1985).

Hopfield, J. J. & Tank, D. W. Computing with neural circuits: a model. Science 233, 625–633 (1986).

Van den Bout, D. E. & Miller, T. K. Improving the performance of the Hopfield-Tank neural network through normalization and annealing. Biol. Cybern. 62, 129–139 (1989).

Marcus, C. M., Waugh, F. R. & Westervelt, R. M. Nonlinear dynamics and stability of analog neural networks. Phys. D. 51, 234–247 (1991).

Hagiwara, M., Fossorier, M. P. C. & Imai, H. Fixed initialization decoding of LDPC codes over a binary symmetric channel. IEEE Trans. Inf. Theory 58, 2321–2329 (2012).

Sutskever, I., Martens, J., Dahl, G. & Hinton, G. On the importance of initialization and momentum in deep learning. In Proc. 30th International Conference on Machine Learning (ICML) 1139–1147 (PMLR, 2013).

Kuo, K.-Y., Chern, I.-C. & Lai, C.-Y. Decoding of quantum data-syndrome codes via belief propagation. In Proc. IEEE International Symposium on Information Theory (ISIT) 1552–1557 (IEEE, 2021).

Bombin, H. & Martin-Delgado, M. A. Optimal resources for topological two-dimensional stabilizer codes: Comparative study. Phys. Rev. A 76, 012305 (2007).

Horsman, C., Fowler, A. G., Devitt, S. & Van Meter, R. Surface code quantum computing by lattice surgery. N. J. Phys. 14, 123011 (2012).

Yu, N., Lai, C.-Y. & Zhou, L. Protocols for packet quantum network intercommunication. In IEEE Transactions on Quantum Engineering (2021).

Ekert, A. & Macchiavello, C. Quantum error correction for communication. Phys. Rev. Lett. 77, 2585 (1996).

Bennett, C. H., DiVincenzo, D. P., Smolin, J. A. & Wootters, W. K. Mixed-state entanglement and quantum error correction. Phys. Rev. A 54, 3824 (1996).

Wootton, J. R. & Loss, D. High threshold error correction for the surface code. Phys. Rev. Lett. 109, 160503 (2012).

Bombin, H., Andrist, R. S., Ohzeki, M., Katzgraber, H. G. & Martín-Delgado, M. A. Strong resilience of topological codes to depolarization. Phys. Rev. X 2, 021004 (2012).

Ohzeki, M. Error threshold estimates for surface code with loss of qubits. Phys. Rev. A 85, 060301 (2012).

Bravyi, S., Suchara, M. & Vargo, A. Efficient algorithms for maximum likelihood decoding in the surface code. Phys. Rev. A 90, 032326 (2014).

Delfosse, N. & Nickerson, N. H. Almost-linear time decoding algorithm for topological codes. Quantum 5, 595 (2021).

Kuo, K.-Y. & Lai, C.-Y. Comparison of 2D topological codes and their decoding performances. In Proc. IEEE International Symposium on Information Theory (ISIT) 186–191 (IEEE, 2022).

Nachmani, E. et al. Deep learning methods for improved decoding of linear codes. IEEE J. Sel. Top. Signal Process. 12, 119–131 (2018).

Acknowledgements

C.-Y.L. was supported by the National Science and Technology Council in Taiwan under Grants MOST110-2628-E-A49-007, MOST111-2628-E-A49-024-MY2, MOST111-2119-M-A49-004, and MOST111-2119-M-001-002.

Author information

Contributions

C.-Y.L. and K.-Y.K. formulated the initial idea and developed the theory. K.-Y.K. performed the simulations. All co-authors contributed to the preparation of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kuo, KY., Lai, CY. Exploiting degeneracy in belief propagation decoding of quantum codes. npj Quantum Inf 8, 111 (2022). https://doi.org/10.1038/s41534-022-00623-2
