Abstract

Soft strain sensors hold great potential for emerging human–machine interfaces. However, their real-world applications have been limited by challenges such as low reproducibility, susceptibility to environmental noise, and short lifetimes, which are attributed to nanotechnologies, including microfabrication techniques. In this study, we present a computer vision-based optical strain (CVOS) sensor system that integrates computer vision with streamlined microfabrication techniques to overcome these challenges and facilitate real-time multiaxial strain mapping. The proposed CVOS sensor consists of an easily fabricated soft silicone substrate with micro-markers and a tiny camera for highly sensitive marker detection. Real-time multiaxial strain mapping allows complex multi-directional strain patterns to be measured and distinguished, which gives the proposed CVOS sensor higher scalability. Our results indicate that the proposed CVOS sensor is a promising approach for the development of highly sensitive and versatile human–machine interfaces that can operate long-term under real-world conditions.

Introduction

Soft sensors, which offer high sensitivity, a large working range, and flexibility that overcome the limitations of traditional metallic strain sensors, have sparked great interest in a broad array of applications1,2,3,4,5,6. Soft strain sensors typically utilize changes in the electrical resistance or capacitance induced by their shape deformation. Various piezoresistive- and piezocapacitive-type flexible strain sensors have been suggested using carbon nanotubes (CNTs)7,8,9,10,11,12, nanoparticles13,14,15, nanowires16,17,18, thin metal films19,20,21, conductive polymers22,23, or laser-induced graphene (LIG)24,25,26,27 as the conductive layer. Although these sensors differ in their materials and fabrication methods, they show large working ranges and high sensitivity compared with conventional metallic sensors. However, despite their remarkable performance, these sensors have limited applications in the real world because of their low reproducibility from complex micro/nanofabrication processes, vulnerability to environmental noise, and performance degradation with repeated use or over time. The low reproducibility can be attributed to the randomness of the materials and the sophisticated fabrication methods, which adversely affect the yield rate and overall fabrication costs. Nanomaterials contain randomness caused by their uneven spatial and size distributions28,29,30. In addition, nano- and microfabrication methods are extremely difficult to optimize because of the large influence of human factors and external environmental factors such as temperature, humidity, and vibration at such small scales28,31. Meanwhile, environmental noise from the ambient temperature and curvature during operation adversely influences the sensor signal13,32,33. For soft strain sensors, the signal integrity can largely depend on the ambient temperature, which can vary significantly under actual operating conditions. Moreover, the deformation of a sensor can lead to a continuously varying curvature.
This changes the alignment of the nanomaterials, which can affect the gauge factor (GF) and linearity34,35,36. Finally, the performance degradation can be attributed to the damage or deformation of the conductive layer with repeated stretching and compression of the sensor in the case of extremely thin substrates19,37. Similarly, the performance of conductive hydrogel-based sensors may be degraded by water loss over time38,39. These remaining challenges must be addressed by the next generation of flexible strain sensors for wider application under real-world conditions.

Past attempts to overcome the above challenges have focused on developing materials and fabrication methods. While those efforts have been successful to some extent, it is doubtful that nanotechnology alone is the optimal solution. Recently, optical strain sensors28,40,41, which detect an optical response instead of an electrical response, have been proposed to address the structural vulnerabilities of the conductive materials in existing piezoresistive and piezocapacitive strain sensors. Optical strain sensors are based on the concept of piezotransmittance, in which light detectors measure changes in the transmittance of an elastomer layer as its thickness varies under stretching. Experimental results in those previous studies have demonstrated high sensor-to-sensor uniformity and repeatability, but the transmittance is affected by the direction or intensity of the external light source. Thus, a sensing interface is needed that yields a stable response by automatically correcting for the error factors of flexible surfaces.

Vision technologies have made remarkable progress, and their advances have been accelerated by the emergence of artificial intelligence (AI)42,43,44. We hypothesized that AI-based vision technology can be integrated with optical strain sensors to enhance their accuracy and automaticity and facilitate their fabrication. Here, we report a computer-vision-based optical strain (CVOS) sensor to address the challenges faced by current soft sensor technologies: reproducibility, vulnerability, and repeatability. The proposed sensor integrates three technologies: a nonmetal sensor, which can be simply fabricated by using laser processing to apply regular micro-marker patterns on an Ecoflex film; close-up imaging using an assembly of commercially available parts to capture micro-markers; and advanced vision using an automated response correction algorithm to correct various error factors in the captured images such as the focusing distance, hysteresis, and bending state. As the proposed sensor stretches, the micro-markers move in the stretched direction. Then, the optical system adaptively recognizes the change in the micro-markers by object-tracking and sensor state detection. Furthermore, the integration of vision technology and strain sensors provides a significant advantage through the implementation of multiaxial strain mapping, enabling the swift determination of the magnitude and orientation of intricate strains applied to the strain sensors. This represents a significant advantage over previously reported strain sensors, as the direction of the strain applied to the sensor can be thoroughly detected with greater precision, thereby expanding the potential range of applications by providing more comprehensive information.

Previously reported strain sensors were typically constrained to measuring the degree of deformation, which limited their scalability. In contrast, multiaxial strain mapping facilitates simultaneous identification and quantification of deformations occurring in diverse directions, providing scalability for extending applications in real-world environments characterized by complex strains. Our findings suggest that the proposed CVOS sensor can meet the requirements of real-world applications (i.e., high reproducibility, high sensitivity, long-term operability, and multiaxial strain mapping) that conventional material-based sensors cannot.

Results

Design and working mechanism of the CVOS sensor

Figure 1 shows the design and working mechanism of the proposed CVOS sensor. The CVOS sensor consists of two subsystems (Fig. 1a): the sensing part comprising micro-markers on an Ecoflex film, and the optical system to capture changes in the marker positions due to sensor deformation. The sensing part consists of a square-shaped white Ecoflex film with micro-markers. The opaque white color of the Ecoflex film increases the micro-marker detection rate by preventing unnecessary light reflection and penetration. The symmetric square design (size: 14 mm × 14 mm) makes it easier to predict the response of the sensor to loads such as a bending strain or biaxial strain. The micro-markers had an average diameter of 528.11 ± 23.58 µm (mean ± standard deviation (SD), measured from 32 samples), which ensures reliable detection by the optical system. Furthermore, the distance between micro-markers (670.23 ± 18.2 µm) was minimized to the operational limit of the laser marking machine, enabling the optical system to recognize as many markers as possible at once. The optical system comprises a tiny camera, a compact microscope lens, and a light source (LED). The optical distortion caused by changes in the light path is corrected by a camera calibration process. The light source makes the surface of the sensor visible to the camera, which allows the optical system to operate even under low-light conditions. This configuration enables the optical system to capture micro-markers at a resolution of 640 × 480 pixels at very close distances (3–6 mm). Figure 1b presents the working mechanism of the CVOS sensor. The relative pixel changes of the micro-markers in response to the applied strain are measured by the optical system and are processed in real time by the automated response correction algorithm. The red dotted box represents the field of view (FOV) of the camera. The algorithm tracks specific micro-markers in the FOV and corrects the response based on the sensor state.
Figure 1c depicts the motion of micro-markers during the occurrence of multiaxial strain. Through the analysis of the movement of micro-markers, the CVOS sensor can detect the direction and magnitude of complex strains.

a Design (Scale bar: 100 µm). b Strain detection mechanism. c Movement of the micro-marker according to multiaxial strain direction. d Images of micro-markers captured by the optical system to detect the applied tensile strain. e Comparison of micro-marker positions obtained via numerical simulations and measurements.

Figure 1d displays images captured by the optical system and the detected strain based on the micro-markers (green boxes). Because the FOV is fixed, increasing the applied strain caused the center points of the micro-markers (red dots) to move in the direction of the applied strain. This demonstrates that the positions of the micro-markers can be used to detect the applied strain. As shown in Fig. 1e, the predicted positions of the micro-markers were almost the same as their measured positions (mean absolute error = 4.8 pixels). These results indicate that the response of the CVOS sensor can be predicted by numerical simulations.

Design of the real-time strain analysis algorithm

Figure 2a shows a flowchart of the custom-built automated response correction algorithm. Current research on computer vision has focused on deep learning-based image processing, which has high computational costs. In our case, however, we have a controlled and ideal optical environment (i.e., regular pattern of objects, constant focal length, and fixed direction of light source), which makes it possible to design a low-cost image processing algorithm suitable for mobile processors. The algorithm comprises a series of processes for micro-marker detection: estimation of the current sensor state (curvature and loading/unloading), tracking of micro-markers, machine learning-based response correction, and multiaxial strain mapping. These processes are executed sequentially, and a corrected response is derived in real time according to the current sensor state.

The raw image of the micro-markers can include several sources of noise, such as an unbalanced contrast due to the direction of the light source, manufacturing error, and impurities on the surface of the sensing part. Figure 2b shows the micro-marker detection process, which includes several image filters to enhance the image properties and reduce noise. The contour approximation method is applied to the filtered image to detect micro-markers based on bounding boxes (green boxes). Figure 2c presents the visual information used to select the initial micro-markers of interest (MOIs). The relative change in pixels of the MOIs represents the sensor response to the applied strain. In this case, the initial MOIs were nine micro-markers located around the center of the image in the no-strain state. The use of multiple MOIs prevents a completely incorrect sensor response even if several MOIs are tracked incorrectly. Figure 2d illustrates the curvature state detection process, which is based on the analysis of Voronoi cells. This process is discussed in detail in the paragraph related to Fig. 4.
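The detection and MOI selection steps described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: a plain connected-component search stands in for the filtering and contour approximation steps, and the function names, the fixed `threshold`, and the assumption that markers appear darker than the film are all hypothetical.

```python
from collections import deque

def detect_markers(image, threshold=128):
    """Return bounding boxes (x0, y0, x1, y1) of dark blobs in a grayscale image.

    `image` is a list of rows of 0-255 values. Pixels darker than `threshold`
    are treated as marker pixels (an assumption made for this sketch).
    """
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or image[y][x] >= threshold:
                continue
            # Flood-fill one 4-connected blob and grow its bounding box.
            queue = deque([(x, y)])
            seen[y][x] = True
            x0 = x1 = x
            y0 = y1 = y
            while queue:
                cx, cy = queue.popleft()
                x0, x1 = min(x0, cx), max(x1, cx)
                y0, y1 = min(y0, cy), max(y1, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and image[ny][nx] < threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((x0, y0, x1, y1))
    return boxes

def box_center(box):
    """Center point of a bounding box."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) / 2, (y0 + y1) / 2)

def select_initial_mois(boxes, image_size, count=9):
    """Pick the `count` markers whose centers lie nearest the image center."""
    cx, cy = (image_size[0] - 1) / 2, (image_size[1] - 1) / 2

    def squared_distance(box):
        bx, by = box_center(box)
        return (bx - cx) ** 2 + (by - cy) ** 2

    return sorted(boxes, key=squared_distance)[:count]
```

Selecting the nine markers nearest the image center mirrors the no-strain MOI choice described in the text; a real pipeline would apply the image filters before thresholding.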

The CVOS sensor accurately predicts the micro-marker positions outside the FOV by using a special object-tracking method that takes advantage of the controlled vision environment and uniform micro-pattern (Supplementary Video 1). General object-tracking methods focus on tracking several objects within the FOV. Figure 3a, b illustrates the limitations of this approach. One limitation is the detection error at the image corners, which arises because the system cannot capture the full micro-marker there or because the intensity of the light source changes. Moreover, if the outer MOIs are located outside or close to the edge of the FOV, they are no longer tracked, and adjacent markers are tracked instead. Consequently, the response of the outer MOIs contains errors (Fig. 3c). We designed a virtual MOI so that the positions of outer MOIs can be predicted according to the positions of inner MOIs when the outer MOIs exceed the threshold area at the image corners (Fig. 3d, e). Figure 3f illustrates that the special object-tracking method using the virtual MOI could estimate the response of outer MOIs outside the FOV. These results suggest that the special object-tracking method extends the working range of the sensor.
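One way to picture the virtual-MOI idea is the sketch below. It is an illustration under stated assumptions, not the published algorithm: MOIs are matched frame to frame by Euclidean distance, and an outer MOI is replaced by a linear extrapolation from the two MOIs beside it in the same row whenever the predicted position falls inside a border band of `margin` pixels. The extrapolation rule, the `margin` parameter, and all function names are hypothetical.

```python
import math

def track_nearest(previous, detections):
    """Match each previous MOI center to its nearest detected center (Euclidean)."""
    return [min(detections, key=lambda d: math.dist(p, d)) for p in previous]

def in_threshold_area(point, image_size, margin):
    """True if the point lies within `margin` pixels of the image border, or outside it."""
    x, y = point
    width, height = image_size
    return (x < margin or y < margin
            or x > width - 1 - margin or y > height - 1 - margin)

def virtual_moi(inner, middle):
    """Extrapolate the outer MOI from two MOIs in the same row.

    Assumes the marker spacing stays uniform along the row, so the outer
    marker lies as far beyond `middle` as `inner` lies before it.
    """
    return (2 * middle[0] - inner[0], 2 * middle[1] - inner[1])

def update_row(previous_row, detections, image_size, margin):
    """Track one row of MOIs, given as [inner, middle, outer], for one frame.

    Returns the updated row and a flag telling whether the outer MOI is virtual.
    """
    inner, middle = track_nearest(previous_row[:2], detections)
    predicted_outer = virtual_moi(inner, middle)
    if in_threshold_area(predicted_outer, image_size, margin):
        # Too close to the border to detect reliably: use the prediction.
        return [inner, middle, predicted_outer], True
    outer = track_nearest([previous_row[2]], detections)[0]
    return [inner, middle, outer], False
```

Because the virtual position depends only on markers that are still well inside the image, the response of the outer MOI remains available after the physical marker has left the FOV.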

a Concept of inner MOIs [0,3,6] and outer MOIs [2,5,8]. b Limitation of general object-tracking methods. c Relative pixel change of MOI groups with the general object-tracking method at 0–40% strain. d Concept of the threshold area at the image corners. e Concept of virtual MOIs [2,5,8]. f Relative pixel change of MOI groups with the object-tracking method using virtual MOIs at 0–40% strain.

A real-time strain map, comprising the local strain direction and magnitude, can be generated via micro-marker matching. By using micro-markers as feature points, the complex algorithms otherwise required to identify feature points between images can be bypassed, which enables real-time strain map generation. Finally, we employed a partitioning method, dividing the image into four quadrants and comparing the micro-markers in each quadrant to determine the strain direction within that specific region.
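The quadrant partitioning can be sketched as follows. This is an assumed formulation rather than the authors' code: matched marker centers from a reference frame and the current frame are grouped by the quadrant of the reference position, and the mean displacement of each group gives a direction and a magnitude. The quadrant numbering (1 = upper right, then counter-clockwise), the angle convention (degrees counter-clockwise from the +x axis, with y pointing up), and the function name are hypothetical.

```python
import math

def strain_map(reference, current, image_size):
    """Mean displacement direction (degrees) and magnitude (pixels) per quadrant.

    `reference` and `current` are equally ordered lists of matched marker
    centers in image coordinates (x to the right, y downwards).
    Quadrants without markers map to None.
    """
    cx, cy = image_size[0] / 2, image_size[1] / 2
    sums = {q: [0.0, 0.0, 0] for q in (1, 2, 3, 4)}
    for (x0, y0), (x1, y1) in zip(reference, current):
        if x0 >= cx:
            quadrant = 1 if y0 < cy else 4
        else:
            quadrant = 2 if y0 < cy else 3
        entry = sums[quadrant]
        entry[0] += x1 - x0
        entry[1] += y0 - y1  # flip the sign so that +y points up
        entry[2] += 1
    result = {}
    for quadrant, (dx, dy, count) in sums.items():
        if count == 0:
            result[quadrant] = None
            continue
        dx, dy = dx / count, dy / count
        angle = math.degrees(math.atan2(dy, dx)) % 360
        result[quadrant] = (angle, math.hypot(dx, dy))
    return result
```

Under an equibiaxial stretch about the image center, for example, the four quadrant directions point away from the center along the diagonals, whereas a uniaxial stretch yields directions aligned with the loading axis.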

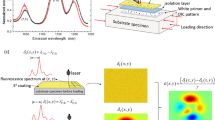

The curvature state of the sensor is detected by using a Voronoi diagram and is classified as linear or bending (Fig. 4a). Bending can be further categorized into in-plane bending, where the sensor bends within its own plane, and out-of-plane bending, where the sensor bends in the vertical direction; however, our study primarily focuses on out-of-plane bending due to its significant impact on sensor response. The linear state is the most important consideration for strain sensors and refers to when the strain sensor is attached to a plane surface. The bending state is correlated to the curvature radius and angle, and it refers to when the strain sensor is attached to a curved surface. The micro-markers in the captured image and Voronoi diagram show different shapes depending on the curvature state (Supplementary Fig. 1). The Voronoi diagram divides a plane into regions close to each original point45. In this study, the center points of the MOIs were used as the original points in the Voronoi diagram, which comprised Voronoi vertices (orange points), edges (black line), and original points (blue points). Our previous research showed that Voronoi cells, which are regions surrounded by Voronoi edges in the Voronoi diagram, are suitable indicators of the influence of micro-markers. This is because the areas of Voronoi cells have high linearity (R2 = 0.96) with the applied strain compared with the areas of the MOI (R2 = 0.39) or MOI rectangles (R2 = 0.94) (Supplementary Fig. 2). In addition, the Voronoi diagram can reduce the computational cost of numerical simulations because the convex and concave states are the same. Figure 4b presents the average and standard deviation of the Voronoi cell area depending on the curvature radius. The trend for the areas of the Voronoi cells can be used to predict the curvature state of the sensor. Figure 4c, d shows the finite element method (FEM) results for simulations of the curvature state. 
The sensor response in the bending state was calculated based on simulations in the linear state with a high prediction accuracy. At 80% strain, the sensor responses in the linear and bending states differed by up to 3%. These results strongly suggest that the response in the bending state can be obtained by correcting the response in the linear state.
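The Voronoi cell area used as the state indicator can be computed without a geometry library. The sketch below is an illustration, not the authors' code: it builds the cell of one MOI center by clipping a bounding rectangle with the perpendicular bisector toward every other center, then applies the shoelace formula. The function names and the choice of bounding rectangle are assumptions.

```python
def clip_half_plane(polygon, a, b, c):
    """Keep the part of a convex polygon that satisfies a*x + b*y <= c."""
    result = []
    count = len(polygon)
    for i in range(count):
        p, q = polygon[i], polygon[(i + 1) % count]
        fp = a * p[0] + b * p[1] - c
        fq = a * q[0] + b * q[1] - c
        if fp <= 0:
            result.append(p)
        if (fp < 0 < fq) or (fq < 0 < fp):
            # The edge crosses the boundary line: add the intersection point.
            t = fp / (fp - fq)
            result.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return result

def voronoi_cell(site, others, bounds):
    """Voronoi cell of `site` among `others`, clipped to bounds (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = bounds
    cell = [(x0, y0), (x1, y0), (x1, y1), (x0, y1)]
    sx, sy = site
    for ox, oy in others:
        # Points closer to `site` than to (ox, oy) satisfy a*x + b*y <= c.
        a, b = 2 * (ox - sx), 2 * (oy - sy)
        c = ox * ox + oy * oy - sx * sx - sy * sy
        cell = clip_half_plane(cell, a, b, c)
    return cell

def polygon_area(polygon):
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    count = len(polygon)
    for i in range(count):
        x0, y0 = polygon[i]
        x1, y1 = polygon[(i + 1) % count]
        total += x0 * y1 - x1 * y0
    return abs(total) / 2
```

For a regular 3 × 3 grid of MOI centers, the central cell is a rectangle whose area equals the product of the two pitches, so stretching the grid along one axis scales the area in proportion. This is consistent with the high linearity between cell area and applied strain reported above.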

Characteristics of the CVOS sensor

The various characteristics of the CVOS sensor were analyzed. The response of piezoresistive or piezocapacitive strain sensors is related to the change in resistance or capacitance, whereas the response of the CVOS sensor is related to the change in pixels. Therefore, the GF of the CVOS sensor is defined as follows:

\[\mathrm{GF}=\frac{\Delta {MOI}/{MOI}_{0}}{\varepsilon }\]

where \({MOI}_{0}\) is the center coordinate of the initial MOI, \(\Delta {MOI}\) is the difference between the center coordinates of the initial MOI and the current MOI, and ε is the strain. Figure 5a shows that the CVOS sensor had a high GF (503.4), low hysteresis (0.9%), and high linearity (R2 > 0.99) over a large working range (ε = 0–81%). The high linearity and low hysteresis indicate that the sensor response could be predicted with sufficient accuracy. These remarkable characteristics can be attributed to the automated response correction algorithm and to the sensing part comprising a single material. The CVOS sensor performance was affected by the distance of the lens from the sensing part, the size of the sensing part, and the pitch of the pattern (Supplementary Fig. 3, Supplementary Table 1, Supplementary Table 2 and Supplementary Table 3). In this test, the sensing part had a square shape (14 mm × 14 mm), the distance of the lens from the sensing part was 5 mm, and the pitch of the pattern was 700 µm. In addition, the four samples showed similar performances with a mean absolute percentage error (MAPE) of 3.1% (Fig. 5b). The MAPE is defined as follows:

\[\mathrm{MAPE}=\frac{100\% }{n}\sum_{t=1}^{n}\left|\frac{{S}_{t}-{AS}_{t}}{{S}_{t}}\right|\]

where \(n\) is the number of data points, \({S}_{t}\) is the response of the sensor, and \({AS}_{t}\) is the response of another sensor.
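As a worked illustration of the two metrics, the sketch below assumes that the GF takes the usual form (relative change of the MOI coordinate divided by the strain) and that the MAPE takes its standard form (mean of the absolute relative differences, in percent). The function names and the sample numbers are hypothetical, and the units of the pixel response follow whatever convention is applied to the inputs.

```python
def gauge_factor(moi_initial, moi_current, strain):
    """GF = (delta_MOI / MOI_0) / strain for one coordinate axis."""
    return ((moi_current - moi_initial) / moi_initial) / strain

def mape(responses, other_responses):
    """Mean absolute percentage error between two sensors' responses.

    `responses` plays the role of S_t and `other_responses` that of AS_t.
    """
    count = len(responses)
    total = sum(abs((s - a) / s) for s, a in zip(responses, other_responses))
    return 100.0 * total / count
```

For instance, a coordinate that moves from 10 to 15 pixels at a strain of 0.25 gives `gauge_factor(10.0, 15.0, 0.25) == 2.0`, and two sensors reading `[100, 200]` and `[110, 190]` differ by a MAPE of about 7.5%.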

a Relative pixel change during a loading–unloading cycle at 0–81% strain. b Sensor-to-sensor uniformity for four samples at 0–81% strain. c Response to strain in the range 0–77% strain. d Very small (0.19–0.31%) strain detection. e MOI position according to strain using the object-tracking method with virtual MOIs. f Strain mapping according to multiaxial strain. g Comparison between test results of the initial cycle and after 1000, 5000, and 10,000 cycles. h The comparison of the gauge factor and sensing range of the strain sensors with those of the previously reported flexible strain sensors.

The high uniformity was attributed to the CVOS sensor being fabricated by precise laser processing without complicated chemical processes. Figure 5c shows the dynamic response of the CVOS sensor to various strains in the working range. The sensor demonstrated good response and recovery properties. Figure 5d illustrates that the CVOS sensor is capable of detecting extremely small strains (0.19%, 0.25%, and 0.31%), which are close to the detection limit of the most recently developed strain sensors (Supplementary Fig. 4 and Supplementary Table 4). Figure 5e shows the MOI positions of the CVOS sensor according to the strain. All micro-markers (green boxes) were clearly detected, and the MOIs (red boxes) moved in the stretched direction. When the strain was over 60%, virtual MOIs (red dotted boxes) were generated, which shows that the object-tracking method worked as designed. Figure 5f displays the strain map of the CVOS sensor as a function of multiaxial strain. The average strain direction for each quadrant, which corresponded well with the intended direction in the experiment, was measured from 63 samples: quadrant 1 = 223.52 ± 26.55°, quadrant 2 = 168.47 ± 20.23°, quadrant 3 = 130.44 ± 35.86°, and quadrant 4 = 226.41 ± 25.21° (mean ± SD). Figure 5g shows the test results after 1000, 5000, and 10,000 cycles at five loading–unloading curves in comparison with the initial cycle for a strain range of 0–60%. The curves of each cycle were nearly identical, which demonstrates the high reliability and durability of the CVOS sensor. As a whole, the superior sensing performance, high uniformity among sensors, and robustness of the CVOS sensor collectively position it as a competitive option for human–machine interfaces, compared to earlier strain sensors using diverse sensing mechanisms and materials (Fig. 5h and Supplementary Table 4).

Sampling rate

The sampling rate of the automated response correction algorithm was investigated according to the operating environment. Tests were run for 10 min. For the image processing, the sampling rate was expressed in frames per second (FPS). Supplementary Table 5 indicates that the proposed algorithm had a high sampling rate of 83 FPS when running on a PC but only 15 FPS on an embedded board (Raspberry Pi 4). The CVOS sensor had a somewhat lower sampling rate than piezoresistive or piezocapacitive strain sensors. However, previous studies46,47 have shown that a sampling rate of 10–20 Hz (or FPS) is sufficient for monitoring human motions. A higher sampling rate can be realized by reducing the image size (e.g., from 640 × 480 pixels to 320 × 240 pixels). In this case, lowering the image resolution increased the sampling rate to 30 FPS, although the GF decreased to 251.7 and the sensor’s limit of detection doubled to 0.38%. If an embedded board with a high-performance CPU is available, the sampling rate can be increased to a level that can monitor all human motions, including skin-level motions. The embedded boards in this test used old CPUs designed in 2012 (Raspberry Pi Zero 2) and 2015 (Raspberry Pi 4).

Experimental demonstration

The mechanical stability and repeatability of the CVOS sensor guarantee its practical applicability (see Supplementary Video 2 and Supplementary Video 3). We built a real-time motion monitoring system comprising the CVOS sensor and a body brace to demonstrate its practical application. The optical system of the CVOS sensor was packaged in a 3D-printed structure to maintain a constant distance of 5 mm from the sensing part. The CVOS sensor measured the bending of the elbow, wrist, and knee with high repeatability and stability (Fig. 6a–c). In the case of wrist and knee monitoring, the CVOS sensors were integrated into a strap-type body brace, facilitating a strong coupling with the body, while in the case of elbow monitoring, a non-strap-type body brace was used. The strap-type body brace tightly fastens to specific parts of the body using bands, which can introduce strain measurements that deviate from the body’s original movements such as Fig. 6b, c (i.e., a significant increase in y-axis strain compared to expectations). Given the widespread use of strap-type body braces in practical rehabilitation and similar purposes, these findings offer valuable insights for the integration of body braces and sensors for practical applications. Figure 6d shows the response trends of the CVOS sensor and inertial measurement unit (IMU) sensor for the axis related to the rotation of the forearm (full IMU data are shown in Supplementary Fig. 5). The trends of the CVOS and IMU sensors were almost the same for forearm rotation motions (i.e., supination and pronation). The results confirmed that the CVOS sensor could detect forearm rotation like the IMU sensor. This is because the CVOS sensor can detect surface deformation with high sensitivity and biaxial sensing performance. This is significant because few studies have considered detecting body rotation using a single flexible strain sensor. 
The random bias drift of the IMU sensor, which builds up over time, affects its performance48, whereas the performance of the CVOS sensor is not affected over time. Therefore, the CVOS sensor may become a feasible alternative to IMU sensors for long-term monitoring of body motions, including rotation.

A strain sensor should be able to distinguish complex body motions, which have various directions and angles, for real-world applicability to rehabilitation. The real-time motion monitoring system based on the CVOS sensor was tested on its ability to detect elbow flexion and extension in two directions: moving toward the chest (Case 1) and moving toward the humerus bone in the arm (Case 2). The cases were distinguished by the movement direction of the glenohumeral joint. Figure 6e presents the monitoring results of the bending angle and movement direction. The relative pixel change along the x-axis was almost the same in both cases. However, the relative pixel change along the y-axis varied greatly in Case 2 but did not change significantly in Case 1. Therefore, the CVOS sensor could clearly classify the two bending motions according to the movement direction. The IMU sensor also clearly detected the body rotation and bending angle in Case 2 (Supplementary Fig. 6). These results demonstrate the versatility of the CVOS sensor and its potential for various real-world applications such as in healthcare.

One of the most significant findings of this study is the multiaxial strain mapping function of the CVOS sensor to detect complex strains that are typically challenging to classify with previously reported strain sensor systems (see Supplementary Video 4). Figure 6f displays the results of monitoring different shoulder movements, including flexion, extension, abduction, internal rotation, external rotation, and adduction. Unlike previously reported strain sensors, which tend to focus solely on strain magnitude, the CVOS sensor’s multiaxial strain mapping function allows for greater precision and thorough strain direction detection, thereby enabling the classification of various movements. Although classifying movements based solely on the 2-axis strain magnitude can be challenging due to the similarity of responses between some movements, we successfully classified all six movements using the strain direction of each quadrant obtained from the multiaxial strain mapping. These results suggest that strain direction, an often-overlooked feature in strain sensing, can have a significant impact on the capture of complex strain patterns in real-world applications, and the ability of the CVOS sensor to rapidly capture strains makes it particularly well-suited for this task.

Discussion

The proposed CVOS sensor is based on integrating computer vision with a simple sensor fabrication method. The sensor comprises an easily reproducible polymer-based sensing part with micro-markers, an off-the-shelf optical system to detect the micro-markers, and an automated response correction algorithm that analyzes the detected micro-markers and automatically corrects the response according to sensor state. The simple and concise laser-based fabrication method improves reproducibility and repeatability by eliminating the need for highly specialized methods to fabricate a conductive layer. In addition, the CVOS sensor has a much longer lifetime than current soft strain sensors because it does not suffer performance degradation due to factors such as water loss of a conductive hydrogel or degradation of a conductive layer. Next, the micro-marker alignment facilitates numerical prediction of their coordinates with high accuracy (mean absolute error = 4.8 pixels in this study). Positions of the micro-marker move according to the sensor deformation and can be used intuitively for image processing and sensor state detection. The optical system can capture close-up images with microscale resolution using only a tiny camera, commercial lens, and LED light source. The optical system and regular micro-marker patterns provide a controlled optical environment, which minimizes image processing costs. For example, we used a simple object-tracking method based on the Euclidean distance. Furthermore, the automated response correction algorithm guarantees a reliable biaxial response even with various error factors such as the hysteresis and bending state. In addition to the aforementioned features, the proposed CVOS sensor offers the ability to perform multiaxial strain mapping. The multiaxial strain mapping function is useful to distinguish various movements, which was difficult to achieve with existing strain sensor systems.

The proposed CVOS sensor demonstrated detection performance with a high GF (503.4), low hysteresis (0.9%), low detection limit (0.19%), and high linearity (R2 > 0.99) over a large working range (ε = 0–81%). In particular, the CVOS sensor maintained its performance even after tests of more than 10,000 cycles, which demonstrates its readiness for real-world applications. In experiments, we integrated the CVOS sensor in a brace to demonstrate its potential for monitoring body motions such as the flexion and extension of the limbs. Furthermore, the proposed sensor could measure complex strains, including shoulder movement and rotational motions such as supination and pronation, unlike previous works that only focused on measuring stretching motions49. The multiaxial strain mapping function of the CVOS system can quickly capture the intensity and direction of various strains and can be used to comprehensively monitor the motions of body parts such as the shoulder, elbow, wrist, knee, and forearm. The capability of a single CVOS sensor to rapidly detect the direction and rotation of complex strains offers a competitive advantage over previous approaches, which typically require a large-scale sensor array consisting of two or more sensors. The performance and versatility of the CVOS sensor indicate that it can serve as a disruptive solution to the many constraints of current soft strain sensors. We project that the proposed CVOS sensor can be combined with rehabilitative programs to enable feedback-based treatment and provide a pathway for more effective rehabilitation therapies.

Moreover, the applications of the CVOS sensor are not limited to rehabilitation devices; the sensor can be generalized to other human–machine interface devices by customizing the design parameters and algorithm. While our demonstration of the CVOS sensor focused on sensing body motions, we envision the CVOS sensor as a scalable sensing solution for various industrial applications because design parameters such as the size of the sensing part and micro-markers, image resolution, and focal length are easily customizable depending on the purpose. The current curvature state detection algorithm based on the Voronoi diagram focuses only on out-of-plane bending, but it has the potential to be extended to detect complex bending states including in-plane bending. The AI architecture and image processing are also generic and thus are easily modified. In this study, we used a computationally light algorithm to facilitate operation on a mobile processor, but computationally heavy algorithms such as deep learning can be used if combined with a high-performance computing device. Future research will involve developing a softer device platform that minimizes the number of rigid components for healthcare and industrial applications. This is an exciting prospect not only for human–machine interfaces but also for other industrial applications that require accurate motion sensing. Our approach of combining AI and microfabrication technology is a critical step toward achieving this goal.

Methods

Sensing part

Ecoflex (Ecoflex 0030, Smooth-On Inc., Macungie, PA, USA) prepolymer was prepared by mixing a base, curing agent, and white silicone pigment (Silc Pig, Smooth-On Inc., Macungie, PA, USA) at a weight ratio of 1:1:0.01. The prepolymer was poured into a 3D-printed mold and cured at room temperature (25 °C) for >6 h to form a white Ecoflex film with a thickness of 500 µm. A CO2 laser-marking machine (CM30D, Lasers Co. Ltd., Republic of Korea) was then used to fabricate the micro-markers on the white Ecoflex film. The laser-marking machine scanned the film along a programmed scanning pattern comprising circular micro-markers and the edge of the sensing part. The laser power, scanning speed, and number of scanning loops were fixed at 15 W, 20 mm/s, and 2 cycles, respectively. With these parameters, the white Ecoflex film suffered no significant thermal damage. Because the micro-markers were made by burning the surface of the film with the laser, the process generated impurities that would interfere with micro-marker detection. To remove such impurities, we cleaned the surface of the white Ecoflex film by repeatedly applying and peeling off Scotch tape.

Optical system

The optical system of the CVOS sensor comprised a tiny camera (IMX219 Auto Focus Camera Module, ArduCAM, China), compact microscope lens (iMicro C, Shanghai Qingying E&T LLC, China), and LED light source (LUW/G/B30243, HSUKWANG, China).

Calibration of the camera lens assembly

When an additional lens was attached to the camera, additional calibration was required because the captured images suffered from pincushion distortion (Supplementary Fig. 7). Several images of a circular grid pattern were captured as a target from different viewpoints so that the relationship between the pattern coordinates in the global coordinate system and those in the image coordinate system could be analyzed. The calibration parameters were then calculated by using OpenCV through its Python interface. Finally, the black boundary of the image was removed, and the image was resized.

Numerical simulations

The changes in micro-marker positions upon tensile deformation were simulated by using ANSYS. The Ecoflex 0030 sensing part was assumed to be a linear elastic material with a Young’s modulus (E) of 126.23 kPa and a Poisson’s ratio (ν) of 0.49. Young’s modulus was measured using a universal testing machine (UNITEST M1, TEST ONE Co. Ltd., Republic of Korea), and Poisson’s ratio was set to a typical value for Ecoflex 0030 (ref. 50). As a boundary condition, one of the edges was fixed, and the displacement of the opposite edge was controlled according to the simulation condition (ε = 0%, 10%, …, 70%, 80%). The micro-marker positions were exported from the numerical simulation results.

Real-time strain analysis algorithm

Micro-marker detection

First, contrast-limited adaptive histogram equalization was applied to the original image, which improves the contrast and enhances the edge definition of the micro-markers by redistributing pixel intensities according to local image histograms51. Second, unsharp masking was used to sharpen the edges of the micro-markers, and a Gaussian blur filter was used to remove image noise. The image was convolved with the following sharpen filter:

Third, the image boundaries were removed. The image had a black boundary caused by the misalignment of the optical system with the commercial lens and camera. We removed the black boundary because it acted as noise in the bounding box extraction process. Fourth, the image was binarized by using Otsu’s method52 to separate the micro-markers and the Ecoflex substrate into distinct colors. Finally, bounding boxes of the micro-markers were extracted from the binarized image. Bounding boxes that were too small or too large were discarded.
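The binarization step can be illustrated with a minimal pure-Python sketch of Otsu's threshold selection. It is an illustration only, not the authors' code: the function names `otsu_threshold` and `binarize`, the tiny synthetic image, and the convention that dark (laser-burned) pixels map to 1 are all assumptions made for this example.

```python
def otsu_threshold(histogram):
    """Return the grey level t that maximises the between-class variance.

    Pixels with value <= t form the dark class; pixels > t form the bright class.
    """
    total = sum(histogram)
    weighted_total = sum(level * count for level, count in enumerate(histogram))
    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the dark class so far
    sum0 = 0  # sum of intensities in the dark class so far
    for t, count in enumerate(histogram):
        w0 += count
        sum0 += t * count
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, variance undefined
        mean0 = sum0 / w0
        mean1 = (weighted_total - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_var, best_t = between, t
    return best_t


def binarize(pixels, threshold):
    """Map dark pixels (assumed to be markers) to 1 and the substrate to 0."""
    return [[1 if p <= threshold else 0 for p in row] for row in pixels]


# Hypothetical 4 x 4 greyscale patch: one dark marker on a bright film.
image = [
    [220, 220, 220, 220],
    [220, 30, 35, 220],
    [220, 32, 30, 220],
    [220, 220, 220, 220],
]
hist = [0] * 256
for row in image:
    for p in row:
        hist[p] += 1

t = otsu_threshold(hist)
mask = binarize(image, t)
```

In a real pipeline the bounding boxes would then be extracted from `mask` and filtered by size; the size limits are not given in the text and are left out here.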

Deciding initial MOIs

In the no-strain state, the Euclidean distance \(d\) was calculated between the center coordinates of the image and the center coordinates of each micro-marker:

\[d=\sqrt{{({x}_{c}-{x}_{m})}^{2}+{({y}_{c}-{y}_{m})}^{2}}\]

where \(({x}_{c},{y}_{c})\) are the center coordinates of the image and \(({x}_{m},{y}_{m})\) are the center coordinates of each micro-marker in Euclidean space. Then, the nine micro-markers with the smallest Euclidean distances were assigned individual IDs. The selected micro-markers were designated as MOIs, and their initial coordinates were saved.
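As a hedged illustration of this selection step, the sketch below ranks marker centers by their distance to the image center and keeps the nine closest. The function name `select_initial_mois`, the use of half the image size as the center, and the synthetic 5 × 5 marker grid are assumptions for the example, not details from the paper.

```python
import math


def select_initial_mois(marker_centers, image_size, count=9):
    """Return {id: (x, y)} for the `count` markers nearest the image center."""
    xc, yc = image_size[0] / 2.0, image_size[1] / 2.0
    ranked = sorted(
        marker_centers,
        key=lambda m: math.hypot(m[0] - xc, m[1] - yc),
    )
    # IDs are assigned in order of increasing distance from the center.
    return {moi_id: center for moi_id, center in enumerate(ranked[:count])}


# Hypothetical 5 x 5 marker grid, 100 px pitch, in a 640 x 480 image.
markers = [(120 + 100 * i, 40 + 100 * j) for i in range(5) for j in range(5)]
initial_mois = select_initial_mois(markers, (640, 480))
```

With this synthetic grid the nine selected markers form the central 3 × 3 block, and ID 0 is the marker at the image center.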

Object-tracking with virtual MOI generation

The MOIs were tracked by comparing the center coordinates of the MOIs in the previous frame with the center coordinates of the micro-markers in the current frame using the Euclidean distance. The micro-marker with the smallest Euclidean distance to each MOI became that MOI in the current frame. Moreover, if an outer MOI exceeded the set threshold, its coordinates were predicted according to those of an inner MOI:

where t is the current frame and \(t-1\) is the previous frame.
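The nearest-neighbor part of this tracking step can be sketched as follows. This is a minimal sketch under stated assumptions: the function name `track_mois` and the sample coordinates are hypothetical, and the virtual-MOI prediction for outer markers is deliberately left out because its formula is not available in the text.

```python
import math


def track_mois(previous_mois, current_centers):
    """Match each MOI from frame t-1 to its nearest marker in frame t.

    previous_mois: {id: (x, y)} from the previous frame.
    current_centers: list of (x, y) marker centers detected in the current frame.
    """
    updated = {}
    for moi_id, (px, py) in previous_mois.items():
        updated[moi_id] = min(
            current_centers,
            key=lambda c: math.hypot(c[0] - px, c[1] - py),
        )
    return updated


previous = {0: (100, 100), 1: (200, 100)}
detected = [(203, 101), (102, 99), (400, 400)]
current = track_mois(previous, detected)
```

Here `current` keeps the same IDs as `previous`, so each MOI can be followed across frames even though the detector returns markers in arbitrary order.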

Detection of the loading–unloading state

The loading–unloading state was calculated from the difference between the responses of the previous and current frames. If the difference was positive, the sensor was in a loading state; if it was negative, the sensor was in an unloading state.
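A minimal sketch of this rule is given below. The function name `loading_state` and the handling of a zero difference (reported here as "unchanged") are assumptions, since the text specifies only the positive and negative cases.

```python
def loading_state(previous_response, current_response):
    """Classify the sensor state from the change in response between frames."""
    difference = current_response - previous_response
    if difference > 0:
        return "loading"
    if difference < 0:
        return "unloading"
    return "unchanged"  # assumption: the zero case is not defined in the text


states = [loading_state(a, b) for a, b in [(10, 12), (12, 9), (9, 9)]]
```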

Detection of the curvature state based on the Voronoi diagram

The original image was converted into a Voronoi diagram by using the center points of the micro-markers. Only the inner points of the Voronoi diagram were used to calculate the curvature state, and the outer points were excluded because they formed a virtual area with virtual points that were not visible in the image. Finally, the average and standard deviation of the measured Voronoi cell areas were used to calculate the degree of curvature. If the standard deviation exceeded 300, the sensor was judged to be in a bending state, and the curvature radius was estimated according to the average value. The trend line was used to estimate the curvature radius:
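The thresholding part of this subsection (not the trend-line fit) can be sketched as follows. The sketch assumes the inner Voronoi cell areas have already been computed; the function name `curvature_state`, the use of the population standard deviation, and the sample area values are assumptions for illustration.

```python
import statistics

BENDING_STD_THRESHOLD = 300  # threshold on the standard deviation, from the text


def curvature_state(inner_cell_areas):
    """Return (is_bending, mean_area, std_area) for the inner Voronoi cells."""
    mean_area = statistics.mean(inner_cell_areas)
    # Assumption: population standard deviation; the text does not say which.
    std_area = statistics.pstdev(inner_cell_areas)
    return std_area > BENDING_STD_THRESHOLD, mean_area, std_area


flat = curvature_state([10000, 10050, 9950, 10000])
bent = curvature_state([9000, 10000, 11000, 10000])
```

When the first element of the result is true, the mean area would then be fed into the trend line to estimate the curvature radius.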

Fully connected network

A simple artificial neural network was trained by using a dataset combining performance test data and simulation data. The simulation data reflected the hysteresis of the sensor based on the performance test data. The features of the dataset comprised the curvature state, curvature radius, loading–unloading state, and MOI positions of the sensor. The model had two hidden layers consisting of 10 nodes each. TensorFlow (Python) was used for model development. The model was then converted with TensorFlow Lite to reduce its size (25.7 KB → 1.73 KB) before it was mounted on the embedded board.
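To make the network size concrete, the following pure-Python sketch builds an untrained fully connected model with two hidden layers of 10 nodes and runs a forward pass. Several details are assumptions rather than facts from the paper: the 21-element input (three state features plus nine MOIs with two coordinates each), the ReLU activations, the single output, and the random initial weights. It is not the authors' TensorFlow model.

```python
import random


def init_layer(n_in, n_out, rng):
    """Create one dense layer as (weights, biases) with small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def build_model(n_features, hidden=(10, 10), n_outputs=1, seed=0):
    """Stack dense layers: n_features -> 10 -> 10 -> n_outputs."""
    rng = random.Random(seed)
    sizes = [n_features, *hidden, n_outputs]
    return [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]


def predict(model, features):
    """Forward pass; ReLU on hidden layers (assumed), linear output."""
    x = list(features)
    for index, (weights, biases) in enumerate(model):
        x = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        if index < len(model) - 1:
            x = [max(0.0, v) for v in x]
    return x


def count_parameters(model):
    return sum(len(w) * len(w[0]) + len(b) for w, b in model)


N_FEATURES = 3 + 9 * 2  # assumed layout: 3 state features + 9 MOIs x (x, y)
model = build_model(N_FEATURES)
output = predict(model, [0.0] * N_FEATURES)
n_params = count_parameters(model)
```

Under these assumptions the model has only a few hundred parameters, which is consistent with the text's point that a light model suits an embedded board.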

Multiaxial strain mapping

First, corresponding points between the initial and current positions of the micro-markers were identified using a FLANN (Fast Library for Approximate Nearest Neighbors)-based matcher. Second, the homography matrix was calculated using the RANSAC (RANdom SAmple Consensus) method based on the obtained corresponding points. Third, the maps were initialized as empty arrays, and the computation coordinates were defined based on a specified grid size. In this research, a grid of 63 points (7 × 9) was employed. Finally, the grid points were transformed using the homography matrix, and the strain map and strain direction map were determined based on the differences between the transformed points and the original grid points. The strain map was computed as the Euclidean distance between the transformed and original points, while the strain direction map was derived using the inverse tangent.
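The last two steps can be sketched in pure Python, assuming a 3 × 3 homography matrix `H` has already been estimated (the matching and RANSAC steps are not reproduced here). The function names, the placement of grid points at cell centers, and the reading of 7 × 9 as rows × columns are assumptions for this example.

```python
import math


def apply_homography(H, point):
    """Transform a 2D point with a 3 x 3 homography (homogeneous division)."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    tx = (H[0][0] * x + H[0][1] * y + H[0][2]) / w
    ty = (H[1][0] * x + H[1][1] * y + H[1][2]) / w
    return tx, ty


def strain_maps(H, width, height, rows=7, cols=9):
    """Return (magnitude_map, direction_map) on a rows x cols grid.

    Magnitude is the Euclidean distance between transformed and original
    grid points (in pixels); direction is atan2(dy, dx) in radians.
    """
    magnitude = [[0.0] * cols for _ in range(rows)]
    direction = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            x = (c + 0.5) * width / cols   # grid point at cell center (assumed)
            y = (r + 0.5) * height / rows
            tx, ty = apply_homography(H, (x, y))
            dx, dy = tx - x, ty - y
            magnitude[r][c] = math.hypot(dx, dy)
            direction[r][c] = math.atan2(dy, dx)
    return magnitude, direction


# Hypothetical homography: 10% stretch along x about the image center (x = 450).
stretch = 1.1
H = [[stretch, 0.0, 450.0 * (1.0 - stretch)], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
magnitude_map, direction_map = strain_maps(H, width=900, height=700)
```

For this stretch the magnitude grows linearly away from the center column, and the directions point along +x on the right half and along -x on the left half.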

Strain direction analysis

The pixels were partitioned into four quadrants, and for each quadrant, the main strain direction was determined using a weighted average. In this method, each pixel is weighted in proportion to the magnitude of its strain.
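One way to realize such a magnitude-weighted average is sketched below. It uses a weighted vector sum (a circular mean) so that angles near ±π average sensibly; this choice, the function names, and the split of the map at its middle row and column are assumptions, since the text does not give the exact formula.

```python
import math


def main_direction(magnitudes, directions):
    """Magnitude-weighted mean direction (radians) via a weighted vector sum."""
    sx = sum(m * math.cos(a) for m, a in zip(magnitudes, directions))
    sy = sum(m * math.sin(a) for m, a in zip(magnitudes, directions))
    return math.atan2(sy, sx)


def quadrant_directions(magnitude_map, direction_map):
    """Return the main strain direction for each of the four map quadrants."""
    rows, cols = len(magnitude_map), len(magnitude_map[0])
    mid_r, mid_c = rows // 2, cols // 2  # assumed split point
    spans = {
        "top_left": (range(0, mid_r), range(0, mid_c)),
        "top_right": (range(0, mid_r), range(mid_c, cols)),
        "bottom_left": (range(mid_r, rows), range(0, mid_c)),
        "bottom_right": (range(mid_r, rows), range(mid_c, cols)),
    }
    result = {}
    for name, (row_span, col_span) in spans.items():
        mags = [magnitude_map[r][c] for r in row_span for c in col_span]
        dirs = [direction_map[r][c] for r in row_span for c in col_span]
        result[name] = main_direction(mags, dirs)
    return result


# Two pixels: a weak strain along +x and a three times stronger one along +y.
example = main_direction([1.0, 3.0], [0.0, math.pi / 2])
```

In `example` the result leans toward +y because that pixel carries three times the weight.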

Setup for the performance test

The mechanical properties of the proposed CVOS sensor were measured by using a universal testing machine on 14 mm × 14 mm, 17 mm × 17 mm, and 20 mm × 20 mm samples. The tiny camera, compact microscope lens, and LED light source were combined into a 3D-printed structure for mounting on the universal testing machine. In this test, an embedded board was used for real-time image processing and as a power supply for the LED light source. The overall performance test setup is shown in Supplementary Fig. 8.

Setup for the experimental demonstration

This experiment was approved by the institutional review board of POSTECH (PIRB-2022-E042). The experiments involving human subjects were performed with the full consent of the volunteers, including the publication of identifiable images. All participants provided written informed consent. The components of the optical system were assembled into a specially designed 3D-printed structure. The tiny camera was connected to an embedded board (Raspberry Pi 4, Raspberry Pi Foundation, United Kingdom) by using a cable. The LED light source was connected to the embedded board with a bias voltage of 3 V. Finally, the optical system and sensing part were integrated into the body brace. An IMU sensor (WT901BLECL, WitMotion Shenzhen Co., Ltd, China) was attached to the forearm to compare its detection performance with that of the CVOS sensor (Supplementary Fig. 9).

Data availability

All data are available within the article or available from the authors upon reasonable request.

Code availability

The code for real-time multiaxial strain mapping using computer vision integrated optical sensors is available on GitHub at https://github.com/HongSungUk/CVOS-sensor.

References

Yamada, T. et al. A stretchable carbon nanotube strain sensor for human-motion detection. Nat. Nanotechnol. 6, 296–301 (2011).

Qiu, Y. et al. Nondestructive identification of softness via bioinspired multisensory electronic skins integrated on a robotic hand. npj Flex Electron. 6, 1–10 (2022).

Yin, R., Wang, D., Zhao, S., Lou, Z. & Shen, G. Wearable sensors-enabled human–machine interaction systems: from design to application. Adv. Funct. Mater. 31, 2008936 (2021).

Jin, T. et al. Triboelectric nanogenerator sensors for soft robotics aiming at digital twin applications. Nat. Commun. 11, 5381 (2020).

Chu, M. et al. Respiration rate and volume measurements using wearable strain sensors. npj Digit. Med. 2, 8 (2019).

Dinh, T. et al. Stretchable respiration sensors: advanced designs and multifunctional platforms for wearable physiological monitoring. Biosens. Bioelectron. 166, 112460 (2020).

Wang, R. et al. Cost-effective fabrication of transparent strain sensors via micro-scale 3D printing and imprinting. Nanomaterials (Basel) 12, 120 (2021).

Park, S. J., Kim, J., Chu, M. & Khine, M. Flexible piezoresistive pressure sensor using wrinkled carbon nanotube thin films for human physiological signals. Adv. Mater. Technol. 3, 1700158 (2018).

Wang, Y. et al. Hierarchically buckled Ti3C2Tx MXene/carbon nanotubes strain sensor with improved linearity, sensitivity, and strain range for soft robotics and epidermal monitoring. Sens. Actuators B 368, 132228 (2022).

Ko, Y., Kim, J. S., Vu, C. C. & Kim, J. Ultrasensitive strain sensor based on pre-generated crack networks using Ag nanoparticles/single-walled carbon nanotube (SWCNT) hybrid fillers and a polyester woven elastic band. Sensors 21, 2531 (2021).

Kim, K. H. et al. Enhancement of linearity range of stretchable ultrasensitive metal crack strain sensor: Via superaligned carbon nanotube-based strain engineering. Mater. Horiz. 7, 2662–2672 (2020).

Nag, A., Alahi, M. E. E., Mukhopadhyay, S. C. & Liu, Z. Multi-walled carbon nanotubes-based sensors for strain sensing applications. Sensors (Basel) 21, 1261 (2021).

Choi, Y. K. et al. Wearable anti-temperature interference strain sensor with metal nanoparticle thin film and hybrid ligand exchange. Nanoscale 14, 8628–8639 (2022).

Zhang, W., Liu, Q. & Chen, P. Flexible strain sensor based on carbon black/silver nanoparticles composite for human motion detection. Materials (Basel) 11, 1836 (2018).

Cheng, H. W., Yan, S., Shang, G., Wang, S. & Zhong, C. J. Strain sensors fabricated by surface assembly of nanoparticles. Biosens. Bioelectron. 186, 113268 (2021).

Luan, J., Wang, Q., Zheng, X., Li, Y. & Wang, N. Flexible metal/polymer composite films embedded with silver nanowires as a stretchable and conductive strain sensor for human motion monitoring. Micromachines (Basel) 10, 372 (2019).

Gong, S. et al. A wearable and highly sensitive pressure sensor with ultrathin gold nanowires. Nat. Commun. 5, 3132 (2014).

Kumar, K. S. et al. Stretchable and sensitive silver nanowire-hydrogel strain sensors for proprioceptive actuation. ACS Appl. Mater. Interfaces 13, 37816–37829 (2021).

Jung, H. et al. Nano-cracked strain sensor with high sensitivity and linearity by controlling the crack arrangement. Sensors (Basel) 19, 2834 (2019).

Park, C. et al. One-step laser encapsulation of nano-cracking strain sensors. Sensors (Basel) 18, 2673 (2018).

Jeon, H., Hong, S. K., Kim, M. S., Cho, S. J. & Lim, G. Omni-purpose stretchable strain sensor based on a highly dense nanocracking structure for whole-body motion monitoring. ACS Appl. Mater. Interfaces 9, 41712–41721 (2017).

Kang, S., Rachim, V. P., Baek, J. H., Lee, S. Y. & Park, S. M. A flexible patch-type strain sensor based on polyaniline for continuous monitoring of pulse waves. IEEE Access 8, 152105–152115 (2020).

Rachim, V. P., Kang, S., Baek, J. H. & Park, S. M. Unobtrusive, cuffless blood pressure monitoring using a soft polymer sensor array with flexible hybrid electronics. IEEE Sens. J. 21, 10132–10142 (2021).

Wang, W. et al. Fingerprint-inspired strain sensor with balanced sensitivity and strain range using laser-induced graphene. ACS Appl. Mater. Interfaces 14, 1315–1325 (2022).

Xia, S. Y. et al. Laser-induced graphene (LIG)-based pressure sensor and triboelectric nanogenerator towards high-performance self-powered measurement-control combined system. Nano Energy 96, 107099 (2022).

Jeong, S. Y. et al. Highly skin-conformal laser-induced graphene-based human motion monitoring sensor. Nanomaterials (Basel) 11, 951 (2021).

Rachim, V. P. et al. A scalable laser-centric fabrication of an epidermal cardiopulmonary patch. Adv. Mater. Technol. 7, 2200242 (2022).

Gu, J. et al. Self-powered strain sensor based on the piezo-transmittance of a mechanical metamaterial. Nano Energy 89, 106447 (2021).

Naruse, M. et al. Randomness in highly reflective silver nanoparticles and their localized optical fields. Sci. Rep. 4, 6077 (2014).

Zebarjadi, M., Esfarjani, K., Bian, Z. & Shakouri, A. Low-temperature thermoelectric power factor enhancement by controlling nanoparticle size distribution. Nano Lett. 11, 225–230 (2011).

Li, Y. et al. Low-cost data glove based on deep-learning-enhanced flexible multiwalled carbon nanotube sensors for real-time gesture recognition. Adv. Intell. Syst. 4, 2200128 (2022).

Marin, B. C. et al. Graphene-metal composite sensors with near-zero temperature coefficient of resistance. ACS Omega 2, 626–630 (2017).

Lee, S., Kim, E. M. & Lim, Y. Near-zero temperature coefficient of resistance of hybrid resistor fabricated with carbon nanotube and metal alloy. Sci. Rep. 9, 7763 (2019).

Kwon, Y. et al. Effects of bending strain and crack direction on crack-based strain sensors. Smart Mater. Struct. 29, 115007 (2020).

Kanao, K. et al. Highly selective flexible tactile strain and temperature sensors against substrate bending for an artificial skin. RSC Adv. 5, 30170–30174 (2015).

Xu, X. L., Li, S. X., Yang, Y., Sun, X. C. & Xia, H. High-performance strain sensor for detection of human motion and subtle strain by facile fabrication. Measurement 189, 110658 (2022).

Zhang, S. et al. Highly stretchable, sensitive, and flexible strain sensors based on silver nanoparticles/carbon nanotubes composites. J. Alloy. Compd. 652, 48–54 (2015).

Wang, Z., Cong, Y. & Fu, J. Stretchable and tough conductive hydrogels for flexible pressure and strain sensors. J. Mater. Chem. B 8, 3437–3459 (2020).

Zhang, W. et al. Anti-freezing, water-retaining, conductive, and strain-sensitive hemicellulose/polypyrrole composite hydrogels for flexible sensors. J. Mater. Res. Technol. 14, 555–566 (2021).

Gu, J., Kwon, D., Ahn, J. & Park, I. Wearable strain sensors using light transmittance change of carbon nanotube-embedded elastomers with microcracks. ACS Appl. Mater. Interfaces 12, 10908–10917 (2020).

Zhai, Q. & Yang, Y. Highly stretchable variable-transmittance skin for ultrasensitive and wearable strain sensing. Adv. Mater. Technol. 2, 1700161 (2017).

Zuo, C. et al. Deep learning in optical metrology: a review. Light Sci. Appl. 11, 1–54 (2022).

Genty, G. et al. Machine learning and applications in ultrafast photonics. Nat. Photonics 15, 91–101 (2021).

Xu, S. et al. Computer vision techniques in construction: a critical review. Arch. Comput. Methods Eng. 28, 3383–3397 (2021).

Aurenhammer, F. Voronoi Diagrams—a survey of a fundamental geometric data structure. ACM Comput. Surv. 23, 345–405 (1991).

Liu, K. C., Hsieh, C. Y., Hsu, S. J. P. & Chan, C. T. Impact of sampling rate on wearable-based fall detection systems based on machine learning models. IEEE Sens. J. 18, 9882–9890 (2018).

Zhang, S. et al. Activity classification using the Genea: Optimum sampling frequency and number of axes. Med. Sci. Sports Exerc. 44, 2228–2234 (2012).

Faisal, A. I. et al. Monitoring methods of human body joints: state-of-the-art and research challenges. Sensors (Basel) 19, 2629 (2019).

Lee, J. H., Chee, P. S., Lim, E. H., Low, J. H. & Nguyen, N. T. A stretchable kirigami-inspired self-powered electroactive sensor for tensile strain and torsion sensing. Adv. Eng. Mater. 24, 2100961 (2022).

Lee, M., Lee, S. & Lim, S. Electromagnetic control by actuating kirigami-inspired shape memory alloy: thermally reconfigurable antenna application. Sensors (Basel) 21, 3026 (2021).

Pizer, S. M. et al. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 39, 355–368 (1987).

Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man. Cybern. 9, 62–66 (1979).

Acknowledgements

This research was supported by the Pioneer Research Center Program through the National Research Foundation (NRF) of Korea funded by the Ministry of Science, ICT & Future Planning (2022M3C1A3081294), NRF of Korea funded by the Korean government and Ministry of Science and ICT (MSIT) (2020R1A2C2005385), Basic Science Research Program through the NRF of Korea funded by the Ministry of Education (2020R1A6A1A03047902), National R&D Program through the NRF of Korea funded by the Ministry of Science and ICT (2020M3H4A1A02084830) and the MSIT under the ICAN (ICT Challenge and Advanced Network of HRD) program (IITP-2022-2020-0-01822) supervised by the IITP (Institute of Information & Communications Technology Planning & Evaluation).

Author information

Contributions

S.H. and S.M.P. conceived this study. S.H. designed the hardware, developed fabrication methods, developed algorithms, conducted FEM simulations, and analyzed the data. S.H. and J.H.P. designed and conducted experiments. S.M.P. and V.P.R. supervised the data analysis. S.H. wrote the manuscript under the supervision of S.M.P. All authors were involved in the discussion and finalization of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hong, S., Rachim, V.P., Baek, JH. et al. Real-time multiaxial strain mapping using computer vision integrated optical sensors. npj Flex Electron 7, 30 (2023). https://doi.org/10.1038/s41528-023-00264-1

DOI: https://doi.org/10.1038/s41528-023-00264-1