Abstract

Continued research on epidermal electronic sensors aims to develop sophisticated platforms that reproduce the key multimodal responses of human skin, with the ability to sense various external stimuli such as pressure, shear, torsion, and touch. Such applications rely on algorithmic interpretation to analyze the complex stimulus shape and magnitude and the various moduli of the epidermis, which requires multiple complex equations for the attached sensor. In this work, we integrate silicon piezoresistors with a customized deep learning data process that enables precise evaluation and assessment of various stimuli without such complexities. Outperforming conventional vanilla deep regression models, the customized regression and classification models predict the magnitude of the external force, the epidermal hardness, and the object shape with an average mean absolute percentage error of <15% and an accuracy of 96.9%, respectively. The technical capability of the deep learning-aided sensor and the resulting accurate data processing provide an important foundation for future sensory electronic systems.


Introduction

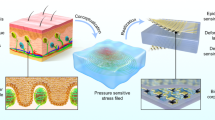

Somatosensory systems, such as the mechanoreceptors and nociceptors located throughout the body’s surface, play critical roles in detecting physical stimuli from the environment. Damage to these receptors leads to inaccurate translation of stimuli and thus exposes the individual to potentially serious health hazards such as hyperalgesia1,2 and post-burn pruritus3. An emerging class of artificial tactile systems that mimic the sensing modalities of these receptors offers versatile functions for the transduction of dynamic stimuli, with widespread applications including artificial electronic skin4,5, haptic interfaces6,7, and voice recognition8,9. Recent examples of these systems combine exploratory efforts in materials (e.g., metals, semiconductors, nanomaterials, liquid metals, and carbon materials), transduction mechanisms (e.g., capacitive, piezoelectric, and piezoresistive), and algorithmic methods to interpret simultaneous, varying stimuli. For instance, tactile sensors based on two interlocked nanofibers10, micro-structured pyramid arrays11, and three-dimensional piezoresistors12 have demonstrated the ability to extract separate force parameters (e.g., pressure, shear, bending, and/or strain) from combined, simultaneous stimuli. Although these sensors are often tuned to a sensitivity beyond the sensing threshold of human skin, their linear response only within a short sensing range and the complicated algorithms needed to interpret their data remain key limitations. Many factors, including complex stimulus shape and magnitude and the various moduli of the skin, significantly affect the electrical performance of the sensors and make the data difficult to interpret. These factors also require each tactile sensor to be calibrated for every use, which is a further disadvantage.

Recent research on methods to analyze dynamic and varying stimuli has aimed to implement machine learning models, such as hidden Markov models13, k-nearest neighbors14, and deep convolutional neural networks15,16, for signal processing. The resulting tactile systems with artificial neural networks offer potential use in gesture recognition and voice detection, tasks that are difficult to handle with conventional, complicated algorithmic data processing because recognition accuracy is reduced by nonlinear sensing responses, undesired parasitic inputs, and interfering noise. In one example, integrating a convolutional and a sparse neural network for visual sensing data improved pattern recognition in computer vision, particularly for images that were noisy or under- or over-exposed17. Similarly, an in-sensor adaptive learning capability classified gestures in real time through hyperdimensional computation of electromyography signals18. However, these machine learning-aided tactile systems, which classify static body positions, lack essential capabilities of cutaneous receptors: they fail to differentiate not only complex external stimuli, but also the physical conditions (e.g., modulus and hardness) of the skin.

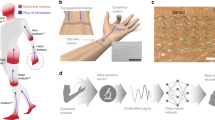

This report demonstrates a deep learning-aided, ultrathin (<20 µm), and flexible tactile system that uses a piezoresistive single-crystalline silicon nanomembrane (SINM) to capture multiple external stimuli and assess the physical conditions of the regions of interest (Fig. 1a). Owing to the large gauge factor of the SINM, the system offers the high sensitivity and reliability needed to predict (1) the magnitude of the external force, (2) the hardness of the substrate to which the sensor is attached, and (3) the shape of the stimulating object, all from the sensor’s electrical response. Furthermore, to improve prediction accuracy over a conventional spectrogram-based model, we designed a customized deep neural network that processes the input signal transformed by the continuous wavelet transform (CWT) (Fig. 1b). The key feature of this system is therefore its sensitive response to, and analysis of, external physical stimuli under nonlinear and dynamic experimental conditions, which traditional sensing systems do not provide. The system could potentially be adapted to a wide range of applications, including artificial prostheses, robotic interfaces, electronic skins, and structural health monitoring.

Fig. 1. a The schematic illustrates the mechanical activation of sensory receptors in the surface of the skin and the associated neuronal action potentials that result in the interpretation of stimuli in the somatosensory cortex (left). The artificial sensor with an artificial neural network yields machine-aided recognition of the different stimulator types and of the mechanical modulus of the substrate to which the sensor is attached (right). b Block diagrams compare the sequence of stimulator recognition in the conventional (top) and the deep learning-aided (bottom) artificial sensing systems. The latter includes an artificial neural network that classifies the digitized output pattern from the flexible piezoresistive sensing module.

Results

Piezoresistive mechanosensory system

The fabrication of the artificial mechanosensory system exploits the concept demonstrated for flexible piezoresistive sensors, where a boron-doped SINM on a thin polyimide substrate (thickness of 12.5 µm) offers a large gauge factor ranging from 20 to 100 (refs. 12,19,20; Supplementary Fig. 1). The SINM in this platform is typically oriented along [110], because its sensitivity is roughly ten times that of the [100] orientation. A representative boron-doped SINM piezoresistor (200 µm length and 10 µm width) in Fig. 2a, b shows effective gauge factors of ~50 and ~−15 for longitudinal tensile and horizontal compressive strain, respectively, comparable to a previously reported sensor with a doping concentration of ~5 × 10¹⁸ cm⁻³ (ref. 21). Here, the Poisson effect in the latter case contracts the substrate, thereby reducing the resistance of the SINM. Because the length of the piezoresistors is aligned with the longitudinal stretching direction, the longitudinal change in resistance is more pronounced than the horizontal response arising from the Poisson effect (Supplementary Fig. 2). The [100] oriented SINM shows a fractional change in resistance of less than 2% (Fig. 2b) for both tensile and compressive strains, owing to the relatively small shift of the heavy- and light-hole energy bands in k-space and the negligible redistribution of holes22,23. Mechanical modeling theoretically verifies the intrinsic gauge factor of the [110] oriented piezoresistor (Fig. 2c and Supplementary Fig. 2). When the [110] oriented piezoresistor is subjected to horizontal and longitudinal stretching, the modulus mismatch between the substrate and the SINM yields SINM strains of εSINM = −0.31% at 1% horizontal stretching and εSINM = 0.98% at 1% longitudinal stretching, giving an intrinsic gauge factor of ~50 for the SINM (∆R/R0 = GF × εSINM (%), Supplementary Fig. 2b). According to the strain contours, the strain distribution in the silicon piezoresistor becomes more uniform away from its junctions with the gold interconnects (Supplementary Fig. 2c). The sensor also exhibits minimal electrical hysteresis (<0.1%), as shown in Fig. 2d, where the responses under loading and unloading are consistent.
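As a quick consistency check, the effective gauge factors quoted above can be recovered from the FEA strain values: multiplying the intrinsic gauge factor (~50) by the strain actually transferred to the SINM per 1% of applied substrate strain gives the effective gauge factor for each loading direction. This is a minimal sketch that assumes the linear relation ∆R/R0 = GF × εSINM holds; the function name is illustrative.

```python
def effective_gauge_factor(intrinsic_gf, eps_sinm_pct, eps_applied_pct):
    """Effective GF = (dR/R0) / applied substrate strain, with dR/R0 = GF_intrinsic * eps_SINM."""
    dr_over_r_pct = intrinsic_gf * eps_sinm_pct  # fractional resistance change, in %
    return dr_over_r_pct / eps_applied_pct

# SINM strains for 1% applied substrate strain (FEA values quoted in the text)
gf_long = effective_gauge_factor(50, 0.98, 1.0)    # longitudinal stretching
gf_horiz = effective_gauge_factor(50, -0.31, 1.0)  # horizontal stretching (Poisson contraction)
print(f"longitudinal: {gf_long:.1f}, horizontal: {gf_horiz:.1f}")
# → longitudinal: 49.0, horizontal: -15.5
```

Both values agree with the measured effective gauge factors of ~50 and ~−15.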

Fig. 2. a Optical image of the single-crystalline silicon piezoresistors in two orientations, [110] and [100], which differ in sensitivity. b Fractional change in resistance as a function of longitudinal and transverse strain; the slope represents the sensing behavior and gauge factor. c Computed strain distributions in a piezoresistor for 1% strain applied to the flexible polyimide substrate in the horizontal (top) and longitudinal (bottom) directions. d Response of the [110] silicon piezoresistor to the application and release of longitudinal strain. e Schematic of the fabrication of the strain gauge in a Wheatstone bridge configuration using two [110] and two [100] silicon piezoresistors on a 12.5 µm thick polyimide substrate. f Exploded view highlighting the key sensing element and functional layers of the strain gauge in a thin-sheet geometry with a total thickness of ~15 µm. g Optical photograph of the piezoresistive sensor. The inset shows an enlarged view of the red dotted box, showing the piezoresistors in a Wheatstone bridge configuration. h Change in output voltage of the Wheatstone bridge as a function of longitudinal and transverse strain. i Optical photograph of the strain gauge during cycles of bending and stretching. j Output voltage of the Wheatstone bridge at different stages of fatigue testing, involving 1000 bending cycles at the different radii indicated in the legend. k Computed strain distribution in the metal interconnect bent to the critical radius of 0.78 mm.

A Wheatstone bridge configuration is preferable for the strain gauge because it minimizes interfering temperature inputs and allows a voltage follower to be integrated before the data acquisition system24,25. Here, the magnitude and sign of the output voltage correspond to the magnitude and type (i.e., compressive or tensile) of the stimulus, respectively. However, a key limitation of a Wheatstone bridge built only from [110] oriented SINMs becomes apparent when the device produces identical voltage outputs under different stimulus conditions (e.g., the x-axis concave and y-axis convex bending conditions in Supplementary Fig. 3, left), leading to ambiguous interpretation. Figure 2e shows the fabrication procedure that overcomes this limitation through multiple transfer printing steps that align [100] and [110] oriented SINMs in the same longitudinal direction (Supplementary Fig. 4). The resulting system, in which sensing elements with different gauge factors are integrated in a Wheatstone bridge configuration (Fig. 2f, g), can discriminate between bending along the y-axis and the x-axis (Supplementary Fig. 3, right). Moreover, the proposed bridge configuration produces different voltage outputs for longitudinal and horizontal stretching (Supplementary Fig. 5a). The voltage gauge factors of −1.8 and 0.4 for the former and the latter, respectively, are consistent with the sensitivity calculated analytically from the strain response of a single resistor, as described in the Methods section (Fig. 2h). By contrast, when the bridge consists only of [110] oriented SINMs, the four resistors share the same gauge factor, so the bridge shows a minimal response to both horizontal and longitudinal stretching (Supplementary Fig. 5b).
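The benefit of mixing orientations can be illustrated with an idealized bridge. This is a sketch under stated assumptions, not the authors' circuit: the topology V_out = V_in × (R1/(R1 + R2) − R4/(R3 + R4)) is assumed so that R1 and R3 push the output in the same direction, the [110] resistors (R1, R3) use the gauge factor of ~50 from the text, and the [100] resistors (R2, R4) use a hypothetical small gauge factor of 2. It does not reproduce the reported voltage gauge factors (−1.8 and 0.4), whose sign and scale depend on circuit details not given here; it only shows why identical arms cancel while mixed arms do not.

```python
def bridge_output(r1, r2, r3, r4, v_in=1.0):
    """Wheatstone bridge output as the difference of two voltage dividers.
    Assumed topology: V_out = V_in * (R1/(R1+R2) - R4/(R3+R4))."""
    return v_in * (r1 / (r1 + r2) - r4 / (r3 + r4))

def strained(r0, gf, strain):
    """Resistance under strain from dR/R0 = GF * strain (strain as a fraction)."""
    return r0 * (1.0 + gf * strain)

R0 = 1000.0    # nominal resistance in ohms (hypothetical)
GF_110 = 50.0  # [110] piezoresistor, longitudinal (from the text)
GF_100 = 2.0   # [100] piezoresistor (hypothetical small value)
eps = 0.001    # 0.1% longitudinal strain applied to all four resistors

# Mixed bridge: [110] in R1/R3, [100] in R2/R4
mixed = bridge_output(strained(R0, GF_110, eps), strained(R0, GF_100, eps),
                      strained(R0, GF_110, eps), strained(R0, GF_100, eps))
# All-[110] bridge: every arm changes by the same fraction, so the dividers stay balanced
uniform = bridge_output(*(strained(R0, GF_110, eps) for _ in range(4)))
print(f"mixed: {mixed:.4f} V/V, all-[110]: {uniform:.4f} V/V")
# → mixed: 0.0234 V/V, all-[110]: 0.0000 V/V
```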

The fatigue cycling test depicted in Fig. 2i is essential for confirming the mechanical and electrical robustness of the sensor, given the intrinsic brittleness of silicon and the accumulation of strain during mechanical deformation. The sensor withstands up to 1000 bending cycles at bending radii from 16.5 to 9 mm with negligible changes in the output voltage (Fig. 2j and Supplementary Fig. 6). Here, the strain calculated from the output voltage at each bending radius from 9 to 16.5 mm (εcal = V/1.8, where V is the output voltage and 1.8 is the magnitude of the longitudinal voltage GF), together with the corresponding bending strain (ε; see Eq. 5 in the Methods section), matches the results (εmax Au and εmax Si) of finite element analysis (FEA) modeling (Supplementary Fig. 7a, b). The FEA indicates that the minimum bending radius before silicon and gold reach their fracture limits is less than 1 mm, well below the bending radii encountered under normal operating conditions (e.g., 16.5–9 mm). Under bending deformation, the maximum strains, which remain well below the fracture thresholds, occur at the junction between the gold interconnect (εmax Au) and the Si piezoresistor (εmax Si), as detailed in Fig. 2k and Supplementary Fig. 7c, respectively. This mechanically robust construction and electrically reliable operation are consistent with previous sensors tested using a similar sensing element and substrate12,19,20.

Deep learning-aided data regression

The output signal of the sensor (Fig. 3a) responds nonlinearly to the magnitude of the external stimulus applied by a force gauge (Mark-10), and the response varies with the hardness of the target substrate to which the sensor is attached. Because outputs in the nonlinear response regime are hard to predict, most conventional sensors are restricted to a limited operating range in which a simple, linear relationship can be determined unambiguously for specific parameters (e.g., magnitudes of pressure, bending, or stretching) (Fig. 3a). Here, when the sensor is attached to six substrates of different hardness (Shore A 4, 8, 38, 58, 64, and 69) and subjected to an external normal force, the device yields a nonlinear response, as depicted in Fig. 3b; for example, the hardest substrate (Shore A 69) produces a slope of 21 Ω/Pa from 0 to 0.1 kPa and 4 Ω/Pa from 0.1 to 1 kPa.
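To see why a single linear calibration fails, the two slopes reported for the Shore A 69 substrate can be treated as an idealized piecewise-linear curve. This is a simplification for illustration only: the measured curve is smooth, and every substrate has its own curve, so even the exact inverse below would be valid only for a substrate whose hardness is already known.

```python
def response_ohm(p_pa):
    """Idealized Shore A 69 response: 21 ohm/Pa up to 100 Pa, then 4 ohm/Pa up to 1 kPa."""
    if p_pa <= 100.0:
        return 21.0 * p_pa
    return 21.0 * 100.0 + 4.0 * (p_pa - 100.0)

def invert_piecewise(dr_ohm):
    """Exact inverse of response_ohm (both slopes are positive, so it is one-to-one)."""
    if dr_ohm <= 2100.0:
        return dr_ohm / 21.0
    return 100.0 + (dr_ohm - 2100.0) / 4.0

dr = response_ohm(1000.0)   # response at 1 kPa
linear_guess = dr / 21.0    # naive calibration using only the low-pressure slope
print(dr, round(linear_guess, 1), invert_piecewise(dr))
# → 5700.0 271.4 1000.0
```

A linear calibration fitted in the low-pressure regime underestimates a 1 kPa stimulus by almost a factor of four.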

Fig. 3. a Illustration of the sensor deformation as the attached elastomer bends under external stimuli. b Relationship among the three parameters (i.e., stimulus magnitude, substrate hardness, and sensor output), with a separate color for each substrate hardness. c Overall structure of our model: a stacked hierarchy with initial and final dense layers and five block operations (details in d and e). The inputs to each block operation are ‘Input 1’, the feature maps of the previous layer, and ‘Input 2’, the feature maps of all preceding layers except ‘Input 1’. d Conventional implementation of the block operation, where each group receives a divided part of input 1 and the whole of input 2 for the group dense operation. e Customized implementation of the block operation, where input 1 and input 2 are fed to a group dense and a general dense operation, respectively; the equally sized outputs of the two operations are added to form the final output. f Epoch-loss graph of the training and validation sets for predicting substrate hardness from the given sensor output and mechanical stimulus. g Epoch-loss graph of the training and validation sets for predicting sensor output from the given hardness and mechanical stimulus. h Scatterplot of true and predicted values of substrate hardness for the test set. i Scatterplot of true and predicted values of sensor output for the test set.

To overcome these limitations, we employed deep learning-aided data processing, in which a deep neural network serves as a nonlinear function approximator that infers unknown nonlinear parameters from the known conditions. In this scenario, a simple sequence of fully connected layers (i.e., a conventional vanilla deep regression model) is not well suited to learning complex relations, because early-stage information is diluted and the layers are flooded with information as the model deepens and widens26,27 (Supplementary Fig. 8). To resolve the issue of information dilution, we developed a customized architecture based on the stacking structure of the DenseNet model28. Each layer in this model receives the feature maps of the preceding layers as inputs and passes its own feature maps to the subsequent layers. Applying this structure to a network with L layers increases the number of connections between layers from L to \(\frac{L(L+1)}{2}\). This dense connectivity reinforces the gradient flow, thus preventing the dilution of early-stage information. Furthermore, our model regulates the overflow of information with a custom group dense operation that processes each group of input features independently, similar to group convolution29. Because the groups are independent, each group can focus on different patterns in the input signal, which prevents information overflow.
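The group dense operation and the connection count can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: activations are omitted, the weights are random, and only the group count of six comes from the text (the feature sizes of 36 are illustrative). Perturbing one input feature shows that only the outputs of its own group change, and the parameter count shows the saving relative to a full dense layer.

```python
import random

def dense(x, weights, bias):
    """Fully connected map without activation: y_j = sum_i x_i * W[i][j] + b_j."""
    return [sum(xi * row[j] for xi, row in zip(x, weights)) + bias[j]
            for j in range(len(bias))]

def group_dense(x, group_weights, group_biases):
    """Split x into equal groups and apply an independent dense map to each group.
    No weight connects one group's inputs to another group's outputs."""
    g = len(group_weights)
    size = len(x) // g
    out = []
    for k in range(g):
        out.extend(dense(x[k * size:(k + 1) * size], group_weights[k], group_biases[k]))
    return out

def connections(n_layers):
    """Direct layer-to-layer connections: plain stack vs. DenseNet-style stacking."""
    return n_layers, n_layers * (n_layers + 1) // 2

rng = random.Random(0)
G, IN, OUT = 6, 36, 36  # six groups as in the text; feature sizes are illustrative
w = [[[rng.gauss(0.0, 0.1) for _ in range(OUT // G)] for _ in range(IN // G)] for _ in range(G)]
b = [[0.0] * (OUT // G) for _ in range(G)]
x = [rng.random() for _ in range(IN)]
y = group_dense(x, w, b)

x_perturbed = x[:]
x_perturbed[0] += 1.0  # change one feature that belongs to group 0
y_perturbed = group_dense(x_perturbed, w, b)
changed = [j for j in range(OUT) if abs(y[j] - y_perturbed[j]) > 1e-12]
print(len(y), max(changed) < OUT // G)             # → 36 True  (only group 0 responds)
print(IN * OUT, G * (IN // G) * (OUT // G))        # → 1296 216 (full vs. group weights)
print(connections(5))                              # → (5, 15)
```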

Simply combining the two architectures, i.e., stacking sequential group dense layers, does not allow information to be exchanged between groups. To take advantage of both architectures without limiting the interaction among groups, we designed our model as a sequence of blocks with two distinct inputs: (1) ‘input 1’, the feature maps of the preceding layer, and (2) ‘input 2’, the feature maps of all earlier layers except input 1, as shown in Fig. 3c, d. Based on this model, an initial architecture, block structure A, divides input 1 into separate groups while supplying the whole of input 2 to every group. All groups in a block can then process the other groups’ features, because they share all the preceding feature maps, and they also extract distinct features by each focusing on its own part of the newly generated feature maps. Because every group in a block processes the features of both input 1 and input 2, our model effectively fuses the stacking and group dense architectures.

The further modified structure B, shown in Fig. 3e, overcomes limitations of structure A (Fig. 3d), such as the overhead of copying and concatenating input 2 into every group in the forward path. All connections from input 2 in structure B are designed to be equivalent to those in structure A. This equivalence is obtained by feeding input 2 into a general dense operation whose output has the same size as the output generated from input 1 by the group dense operation, and then adding the two outputs. We set the number of groups to six. With the channel size of the initial dense layer set to 36, the channel size of the k-th subsequent block is defined as 36 × (k + 1), where k ranges from 1 to 5.
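The claimed equivalence between structures A and B follows from linearity: in A, group k computes [x1_k, x2] · [W1_k; W2_k] = x1_k · W1_k + x2 · W2_k, and the second term for all groups together is a single dense map of input 2 whose weight matrix places the W2_k blocks side by side. The sketch below checks this numerically; it is an illustration under stated assumptions (biases and activations omitted, since adding a bias and a nonlinearity after the sum preserves the equivalence; only the group count of six is from the text, other sizes are illustrative), not the authors' code.

```python
import random

def matvec(x, w):
    """Row vector x (length n) times matrix w (n rows, m columns)."""
    return [sum(xi * row[j] for xi, row in zip(x, w)) for j in range(len(w[0]))]

def block_a(x1, x2, w1, w2):
    """Structure A: group k sees its slice of input 1 concatenated with ALL of input 2."""
    g, size = len(w1), len(x1) // len(w1)
    out = []
    for k in range(g):
        group_input = x1[k * size:(k + 1) * size] + x2  # input 2 is copied into every group
        out.extend(matvec(group_input, w1[k] + w2[k]))  # stacked weights [W1_k; W2_k]
    return out

def block_b(x1, x2, w1, w2):
    """Structure B: group dense on input 1, one general dense on input 2, outputs added."""
    g, size = len(w1), len(x1) // len(w1)
    grouped = []
    for k in range(g):
        grouped.extend(matvec(x1[k * size:(k + 1) * size], w1[k]))
    # General dense weight: the per-group W2_k blocks placed side by side (column-wise).
    w2_full = [sum((w2[k][i] for k in range(g)), []) for i in range(len(x2))]
    return [p + q for p, q in zip(grouped, matvec(x2, w2_full))]

rng = random.Random(1)
G, OUT_G, N1, N2 = 6, 6, 36, 72  # six groups (from the text); other sizes are illustrative
w1 = [[[rng.gauss(0, 1) for _ in range(OUT_G)] for _ in range(N1 // G)] for _ in range(G)]
w2 = [[[rng.gauss(0, 1) for _ in range(OUT_G)] for _ in range(N2)] for _ in range(G)]
x1 = [rng.random() for _ in range(N1)]  # input 1: feature map of the previous layer
x2 = [rng.random() for _ in range(N2)]  # input 2: all earlier feature maps
ya, yb = block_a(x1, x2, w1, w2), block_b(x1, x2, w1, w2)
print(len(ya), max(abs(p - q) for p, q in zip(ya, yb)) < 1e-9)  # → 36 True
```

Structure B computes the same outputs without building G concatenated copies of input 2, which is the forward-path overhead the text attributes to structure A.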

To evaluate the prediction performance of the proposed method, 180 samples (30 samples × 6 substrates) were split into training, validation, and test sets in a 4:1:1 ratio (120, 30, and 30 samples, respectively). The data set includes three interdependent variables: the sensor output, the substrate hardness, and the magnitude of the pressure applied directly to the sensor. The model predicts any one of these variables from the other two, with which it is nonlinearly related. For example, when the sensor output and the applied pressure are known, the model can accurately resolve the hardness of the substrate, and vice versa. The mean absolute percentage error (MAPE) on the test data set was used to evaluate the prediction accuracy for this regression problem (Supplementary Table 1). Figure 3f–i shows epoch-loss graphs and scatter plots for the predicted hardness and sensor output. The loss curves in Fig. 3f, g show that our model converges for the prediction of substrate hardness across the entire range from Shore A 4 to 69 and for the prediction of sensor output under normal pressures from 0 to 1 kPa, respectively. By effectively learning the relationship among the three parameters, our model accurately predicts the target substrate hardness and the sensor output with MAPEs of 13% and 4.14% (Fig. 3h, i), outperforming the vanilla dense model, with MAPEs of 29.6% and 20.28% (Supplementary Fig. 8c, e). Comparisons with other existing algorithms in terms of MAPE are reported in Supplementary Table 2.
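The evaluation metric and the 4:1:1 split can be written out as follows. This is a minimal sketch assuming a plain shuffled split of the 180 sample indices (the text does not say whether the split was stratified by substrate) and the standard MAPE definition, which is undefined when a true value is zero.

```python
import random

def mape(y_true, y_pred):
    """Mean absolute percentage error in %; assumes no true value is zero."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)

def split_411(samples, seed=0):
    """Shuffle and split into train/validation/test sets with a 4:1:1 ratio."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_train, n_val = n * 4 // 6, n // 6
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]

train, val, test = split_411(range(180))  # 30 samples x 6 substrates
print(len(train), len(val), len(test))    # → 120 30 30
print(mape([10.0, 20.0, 40.0], [11.0, 18.0, 40.0]))  # two 10% errors and one exact hit
```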

Deep learning-aided data classification

Under multi-point stimulus conditions, the time-series sensor output is determined by the nonlinear relationship among the parameters (e.g., stimulus magnitude, substrate hardness, and sensor output) at each stimulus point. Classifying an object’s shape from the signals produced by such complex stimuli calls for a data-driven method, because designing an explicit logic algorithm is too complex. Using a deep learning-based classifier, the joint system (i.e., sensor, signal processing, and deep learning) can effectively classify objects based on the varying stimulus (Fig. 4a). In this experiment, instead of precisely controlling the pressure as in the regression experiment described above, each object was pressed by hand directly onto a skin-mimicking elastic material (Shore A 30), on the assumption that sensory receptors are present on the surface of the skin.

Fig. 4. a Illustration of the mechanical deformation and the corresponding change in the sensor. Pressure applied at the stimulus point leads to nonlinear behavior in the sensor signal. b Overall data processing sequence in three steps: (1) cropping the 1D time-series data; (2) converting the cropped signal into a 2D image by wavelet transform; (3) transfer learning of a pre-trained CNN with the processed 2D images. c Epoch-loss graph of the five-fold cross-validation results, showing the mean and standard deviation at each epoch, for the classification of the mechanically stimulating objects. d Epoch-accuracy graph of the five-fold cross-validation results, showing the mean and standard deviation at each epoch. e Confusion matrix of the combined test-set classification results of the models trained in each cross-validation round, with representative raw signals.

The signals obtained from the sensor are converted into images to facilitate noise filtering of the raw data. The signal, segmented into time windows, is transformed into images by the CWT (see Supplementary Fig. 9 for the spectrogram), which represents information in both the time and frequency domains and is therefore better suited to complex data analysis than the Fourier transform, which returns only the frequency domain. For a raw signal x(t), the wavelet transform is defined with respect to a given mother wavelet ψ:

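\[ W_{a,b} = \frac{1}{\sqrt{a}} \int_{-\infty}^{\infty} x(t)\, \psi^{*}\!\left(\frac{t-b}{a}\right) \mathrm{d}t \]

where a, b, and \(W_{a,b}\) are the scale factor, the translation, and the wavelet transform, respectively, and \(\psi^{*}\) is the complex conjugate of ψ. We computed the CWT using an analytic Morse wavelet as the mother wavelet, with a symmetry parameter of 3 and a time-bandwidth product of 60.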
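The transform can be sketched in the frequency domain, where the generalized Morse wavelet has the closed form Ψ(ω) = c·ω^β·exp(−ω^γ) for ω > 0 and zero otherwise, with γ = 3 (symmetry) and β = 60/γ = 20 (so that βγ equals the time-bandwidth product of 60). This is a dependency-free sketch, not the authors' pipeline: it uses a plain O(n²) DFT, circular boundaries, 1/√a normalization, and a peak-normalizing constant of 2; production tools (these parameter values coincide with the default of MATLAB's cwt, although the text does not say which software was used) may normalize differently. A pure tone should respond most strongly near the scale ω_peak/ω₀, where ω_peak = (β/γ)^(1/γ).

```python
import cmath
import math

def morse_hat(omega, gamma=3.0, p2=60.0):
    """Generalized Morse wavelet in the frequency domain (analytic: zero for omega <= 0).
    gamma is the symmetry parameter and p2 = beta * gamma the time-bandwidth product;
    the constant 2 * (e * gamma / beta) ** (beta / gamma) makes the peak value equal 2."""
    if omega <= 0.0:
        return 0.0
    beta = p2 / gamma
    log_norm = math.log(2.0) + (beta / gamma) * (1.0 + math.log(gamma / beta))
    return math.exp(log_norm + beta * math.log(omega) - omega ** gamma)

def cwt_morse(x, scales, gamma=3.0, p2=60.0):
    """CWT of a real sequence x at the given scales (in samples), computed in the
    frequency domain with 1/sqrt(a) normalization and circular boundaries."""
    n = len(x)
    # Plain DFT keeps the sketch dependency-free; an FFT would be used in practice.
    spectrum = [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
                for k in range(n)]
    rows = []
    for a in scales:
        # Only positive frequencies survive the analytic wavelet.
        weighted = {k: spectrum[k] * math.sqrt(a) * morse_hat(a * 2.0 * math.pi * k / n, gamma, p2)
                    for k in range(1, n // 2)}
        # Inverse DFT: one coefficient per translation b.
        rows.append([sum(c * cmath.exp(2j * math.pi * k * b / n) for k, c in weighted.items()) / n
                     for b in range(n)])
    return rows

# Usage: a tone at 8 cycles per 128 samples (omega_0 = pi/8) peaks near omega_peak/omega_0 ~ 4.8.
n = 128
x = [math.cos(2.0 * math.pi * 8 * t / n) for t in range(n)]
scales = [2, 3, 4, 5, 6, 8, 10]
coeffs = cwt_morse(x, scales)
energy = [sum(abs(c) for c in row) / n for row in coeffs]
print(scales[energy.index(max(energy))])  # → 5
```

Stacking |W| over scales and translations gives the 2D image that is passed to the classifier.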

For accurate classification, we designed a deep learning system based on EfficientNet30, which achieves high accuracy on the ImageNet 2012 dataset31. In transfer learning to other public datasets (e.g., CIFAR-10 and CIFAR-100 (ref. 32), Birdsnap33, Flowers34, Oxford-IIIT Pets35, and Food-101 (ref. 36)), EfficientNet achieved an average accuracy 0.28% higher than NASNet-A37 and Inception-v438, with a 4.7× average reduction in model parameters (ref. 30). These results show that EfficientNet offers high accuracy and flexibility in transfer learning39, making it a suitable base model for the classification task in this study (Fig. 4b).

This joint system (sensor, CWT, and EfficientNet) effectively captured features from the time-series signal to classify objects. The evaluation used five objects (earbud, cotton swab, lip balm, stick, and door lock; Supplementary Fig. 9), with 160 samples collected for each. For each object, 35 randomly selected samples formed the test set, and the remaining 125 samples were used for training with k-fold cross-validation. A k of five or ten is known to give an accuracy estimate with low bias40; we therefore set k to five, with 25 samples per object in each fold. The final test accuracy is the average of the test-set accuracies of the five cross-validation models. The model converges (Fig. 4c) and reaches a top-1 accuracy of 96.9% (Fig. 4d), surpassing the 94.2% accuracy obtained with spectrogram images (Supplementary Fig. 9d). The figures, which show the mean and standard deviation over the five folds at each epoch, show validation accuracy exceeding training accuracy. This phenomenon can have several causes; a common one is an unbalanced or overly easy validation set. Cross-validation experiments were performed to rule out this possibility: as the standard deviation decreases and the accuracy converges, all data set configurations show similar training progress (Fig. 4c, d). These results indicate that the higher validation accuracy was caused not by properties of the validation set, but by the use of strong augmentation and regularization. During training, the model is subjected to these rigorous settings because of its complexity and the high variance of the data; during validation, the model is no longer constrained and therefore performs better, even on data it has not seen. Without augmentation and regularization, the validation accuracy does not exceed the training accuracy (Supplementary Fig. 10a), but overfitting reduces the final test accuracy by 1.8%. The augmented training samples are shown in Supplementary Fig. 10b. Augmentation and regularization are therefore essential to developing an effective classification system.
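The sample bookkeeping described above can be sketched as a per-object (stratified) split: 35 held-out test samples and five folds of 25 for each object. The per-object fold assignment and the placeholder sample ids are assumptions for illustration; the text states only the per-object test size and k = 5.

```python
import random

def split_object(samples, n_test=35, k=5, seed=0):
    """Hold out n_test random samples as the test set and cut the rest into k equal folds."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    test, rest = items[:n_test], items[n_test:]
    size = len(rest) // k
    return test, [rest[i * size:(i + 1) * size] for i in range(k)]

def cv_round(splits, r):
    """Round r: fold r of every object validates; the other folds of every object train."""
    train = [s for _, folds in splits.values()
             for i, fold in enumerate(folds) if i != r for s in fold]
    val = [s for _, folds in splits.values() for s in folds[r]]
    return train, val

objects = ["earbud", "cotton swab", "lip balm", "stick", "door lock"]
# Sample ids are placeholders for the 160 CWT images recorded per object.
splits = {name: split_object([(name, i) for i in range(160)], seed=j)
          for j, name in enumerate(objects)}
test_sets = [t for t, _ in splits.values()]
train, val = cv_round(splits, r=0)
print(sum(len(t) for t in test_sets), len(train), len(val))  # → 175 500 125
```

Each of the five rounds trains one model; the reported test accuracy is the mean of those five models' accuracies on the shared test sets.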

Despite these favorable results, the current system is less effective at classifying objects with crowded multiple stimulus points. Figure 4e shows that the proposed model achieves a recall of >0.98 for all objects except the door lock. Unlike the other objects, the combination door lock has adjacent protruding convex buttons (Supplementary Fig. 11), so the external physical stimulus is crowded onto multiple points when the lock is placed on the sensor. In this case, the sensor responds to the combined bending of the elastic material caused by all the stimulus points: rather than each stimulus being registered by an adjacent sensor, a single sensor measures the overall bending. Furthermore, when the combination lock is placed on the sensor, the points of contact do not touch the elastic material simultaneously, and their order varies between trials (e.g., A → B → C and B → C → A produce different signals, where A, B, and C denote stimulus points). We expect that attaching multiple sensors at different locations would allow the sensor adjacent to each stimulus point to provide information on the corresponding stimulus and thereby overcome these limitations. Among the classified objects, the signals from the lip balm and the stick closely resemble each other over short time series; to show the contrast, Supplementary Fig. 12 presents the long time-series signals of the two objects along with five CWT images of each object’s signal.

Discussion

The current study demonstrates not only the viability of the epidermal sensor for classifying mechanical stimuli and substrate modulus, but also the versatility of the deep learning-integrated system, which can be readily adapted to applications beyond mechanical sensing. The sensing module can be modified for use in biochemical (e.g., sweat chemistry, blood oxygen saturation, and diabetes), biophysiological (e.g., electrocardiogram, electroencephalogram, and electromyogram), and biophysical (e.g., flow, temperature, and shear) sensors for monitoring health or fitness conditions. The ultrathin SINM investigated in this study is a candidate material for highly sensitive and flexible sensing components. Furthermore, the deep learning-aided data processing described here provided accurate regression and classification that can simplify pre-clinical and clinical studies involving complex data sets. Specifically, three variables with a nonlinear relationship (sensor output, substrate hardness, and pressure) were predicted by the customized deep neural network with a significantly lower prediction error than the vanilla models. In addition, as shown in this study, a sensor with a linear response and a limited sensing range can classify dynamic and complex stimulus shapes with an accuracy of 96.9% when combined with deep learning. Altogether, integrating deep learning with a flexible and sensitive sensor provides a powerful framework for future sensory systems in which miniaturized artificial intelligence is integrated into a single sensing module, offering an alternative to algorithmic approaches to bio-data interpretation. The adaptability of the system also allows wireless protocols to be integrated, expediting the overall experimental process. Combining deep learning with the Internet of Things (IoT) paves the way for research in which bio-data are remotely monitored and interpreted.

Methods

Device fabrication

Supplementary Figs. 1 and 4 illustrate the fabrication of a strain gauge with [110] and [100] silicon piezoresistors, respectively. The process begins with doping (boron, 950 °C for 5 min) the top silicon layer (thickness of 200 nm) of a silicon-on-insulator wafer (SOITEC Inc.) to form an electrically conductive piezoresistive element. Removing the buried oxide layer by immersion in hydrofluoric acid releases the top silicon layer, enabling transfer printing of the SINM onto the target substrate (polyimide, 12.5 μm thick). Here, SINMs in the two orientations, [110] and [100], can be transferred sequentially and aligned with each other (Supplementary Fig. 1). Afterwards, photolithography followed by reactive ion etching (SF₆, 50 mTorr, 20 sccm, 50 W, 200 s) defines the silicon piezoresistors. Electron beam evaporation (Cr/Au, 5/200 nm thick) and wet etching create the metal interconnects. A spin-coated top polyimide layer (~1.5 μm thick, HD MicroSystems) insulates the strain gauge. Finally, the electrical contact regions are defined by photolithography and reactive ion etching (O₂, 100 mTorr, 100 W, 20 sccm, 15 min).

Sensor characterization

To measure the piezoresistive response, the electrical contact regions were connected to a customized printed circuit board (PCB) connector by bonding an anisotropic conductive film (ACF) cable (Elform Inc., USA). A universal testing machine (UTM 3343, Instron Co., USA) performed tensile testing of the device, with its jaws firmly holding both ends of the device. While tensile strain from 0 to 1.0% was applied to the device along the x- and y-directions, a digital multimeter (NI PXI‐4071, National Instruments, USA) monitored the change in electrical resistance of the sensor. Cyclic bending at different radii (Supplementary Fig. 6) was performed with a flexural endurance tester (IPC, CK-700FET) at 0.32 Hz. The test involved buckling the device to radii of curvature of 9, 10.5, 12, and 16.5 mm over 1000 cycles.

Sensing response to the bending strain

The fractional change of each resistance (R1–R4 in Supplementary Fig. 4c, d) in the Wheatstone bridge can be expressed as:

$$\frac{\Delta R}{R_0} = \mathrm{GF} \cdot \varepsilon$$

where ΔR/R0 is the fractional change of resistance, ε is the mechanical strain, and GF is the gauge factor of each piezoresistor. Under uniaxial longitudinal strain, a positive change in resistance at R1 and R3 corresponds to tensile strain, whereas a negative change at R1 and R3 corresponds to compressive strain. The change in the output voltage from the Wheatstone bridge can then be expressed as:

where Vin and Vout are the input and output voltages of the Wheatstone bridge, respectively. The pure bending test in Fig. 2i–k induces a tensile strain

$$\varepsilon = \frac{h}{2r}$$

along the outer surface of the sensor, where h is the thickness of the sensor and r is the bending radius. The FEA results show the bending-induced strain in the metal electrode and the silicon element for bending radii from 5 mm down to 0.5 mm. A bending radius of about 0.8 mm is the critical radius at which the strain in the metal electrode reaches 1% (Supplementary Fig. 7).
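For quick sanity checks alongside FEA, the bending relation and a Wheatstone bridge can be evaluated in a few lines. This sketch assumes the textbook surface-strain estimate ε = h/(2r) for a homogeneous plate bent about its mid-plane, and a hypothetical bridge wiring in which R1 and R3 sit in opposite arms so that increases in either raise Vout; the actual wiring is shown in Supplementary Fig. 4 and may differ. The closed form ignores the multilayer stack, so it does not replace the FEA.

```python
def surface_bending_strain(thickness_m: float, radius_m: float) -> float:
    """Surface strain of a homogeneous plate bent about its mid-plane: h / (2r)."""
    return thickness_m / (2.0 * radius_m)


def radius_for_strain(thickness_m: float, target_strain: float) -> float:
    """Bending radius at which the surface strain reaches target_strain."""
    return thickness_m / (2.0 * target_strain)


def bridge_output(v_in: float, r1: float, r2: float, r3: float, r4: float) -> float:
    """Output of an assumed (hypothetical) bridge wiring.

    Divider A: R1 on top, R2 on the bottom.
    Divider B: R4 on top, R3 on the bottom.
    Vout = V_B - V_A, so increases in R1 or R3 raise Vout and increases in
    R2 or R4 lower it. For small changes around a balanced bridge,
    Vout / Vin ≈ (ΔR1/R - ΔR2/R + ΔR3/R - ΔR4/R) / 4.
    """
    v_a = v_in * r2 / (r1 + r2)
    v_b = v_in * r3 / (r3 + r4)
    return v_b - v_a
```

For example, with a 1 V excitation and R1 and R3 each raised by 1% from 1000 Ω, `bridge_output(1.0, 1010.0, 1000.0, 1010.0, 1000.0)` gives 10/2010 ≈ 0.004975 V, close to the small-signal estimate of 0.005 V.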

Finite element analysis

Three-dimensional (3D) FEA in the Abaqus FEA software suite was used to predict the mechanical deformations and strain distributions of the SINM device. Four-node composite shell elements were used for the multilayer structure. For uniaxial stretching simulations, the stretching load was prescribed at two opposite edges of the polyimide substrate, in either the horizontal or the longitudinal direction. For bending simulations, the bending load was prescribed at two opposite edges without constraining lateral deformation. These simulations provide the deformed 3D shape and the strain distributions at different locations in the SINM structure; the strain levels in the silicon and gold were monitored and remained well below their fracture strains. Silicon and polyimide were modeled as linear elastic materials: Young's modulus ESi = 130 GPa and Poisson's ratio νSi = 0.27 for silicon; EPI = 2.5 GPa and νPI = 0.34 for polyimide. Gold (Au) was modeled as an idealized elastoplastic material without hardening (von Mises yield stress of 234 MPa, corresponding to a yield strain of ~0.3%) with EAu = 78 GPa and νAu = 0.44.
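The quoted yield strain of ~0.3% follows directly from the gold parameters: 234 MPa / 78 GPa = 0.003. The sketch below evaluates that ratio and a one-dimensional, elastic-perfectly-plastic stress response under monotonic loading. It is a simplification for illustration only; the FEA uses a 3D von Mises model in Abaqus, which this code does not reproduce.

```python
import math

E_AU_PA = 78e9          # Young's modulus of gold used in the FEA
SIGMA_Y_AU_PA = 234e6   # von Mises yield stress of gold used in the FEA


def yield_strain(youngs_modulus_pa: float, yield_stress_pa: float) -> float:
    """Strain at which a linear elastic material first reaches its yield stress."""
    return yield_stress_pa / youngs_modulus_pa


def uniaxial_stress_epp(strain: float,
                        youngs_modulus_pa: float = E_AU_PA,
                        yield_stress_pa: float = SIGMA_Y_AU_PA) -> float:
    """Uniaxial stress of an elastic-perfectly-plastic (no hardening) material
    under monotonic loading: linear up to yield, then capped at the yield stress.
    Unloading and reversed loading are not modeled."""
    elastic_stress = youngs_modulus_pa * abs(strain)
    return math.copysign(min(elastic_stress, yield_stress_pa), strain)
```

Because there is no hardening, any strain beyond ~0.3% leaves the gold stress pinned at 234 MPa, which is why the FEA tracks the electrode strain separately from the stress.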

Regression and classification experiments

The first regression test (Fig. 3) used a vertical stage with a force gauge (Mark-10) to apply approximately consistent pressure to the strain gauge attached to various elastomers (polydimethylsiloxane with Shore A hardness values of 4, 8, 38, 58, 64, and 69). The resulting output patterns and magnitudes associated with the deformation of the strain gauge were measured with digital multimeters (NI-USB 4065). The second test, for classification (Fig. 4), used strain gauges mounted on a thin elastomer (polydimethylsiloxane, 1 mm thick). Arbitrary objects (earbud, cotton swab, lip balm, stick, and door lock) placed on the strain gauge generated distinct output patterns. The objects were pressed directly by the user's hand, reflecting the assumption that sensory receptors are mechanically activated at the surface of the skin in real use rather than in a controlled laboratory setting.

Data processing

To learn the relationship among the three parameters (i.e., stimulus magnitude, substrate hardness, and sensor output), the stimulus magnitude x was expanded into five features: the original value and four scaled versions, $\ln(x + 1)$, $x^{0.8}$, $x^{0.5}$, and $x^{0.2}$. After scaling, the data were z-score normalized using the mean and standard deviation of the training data.
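The feature expansion and normalization can be sketched with NumPy as below. Two details are assumptions, not stated in the text: magnitudes are non-negative (the fractional powers require it), and the standard deviation is the population value (ddof = 0). Fitting the statistics on the training split only, then reusing them for validation and test data, avoids leaking information from the held-out data.

```python
import numpy as np


def expand_magnitude(x):
    """Stack x with ln(x + 1), x**0.8, x**0.5 and x**0.2 (x must be >= 0)."""
    x = np.asarray(x, dtype=float)
    if np.any(x < 0):
        raise ValueError("fractional powers assume non-negative magnitudes")
    return np.stack([x, np.log1p(x), x ** 0.8, x ** 0.5, x ** 0.2], axis=-1)


class ZScore:
    """z-score normalizer whose statistics come from the training split only."""

    def fit(self, train):
        train = np.asarray(train, dtype=float)
        self.mean_ = train.mean(axis=0)
        std = train.std(axis=0)                   # population std (assumption)
        self.std_ = np.where(std > 0, std, 1.0)   # guard constant columns
        return self

    def transform(self, data):
        return (np.asarray(data, dtype=float) - self.mean_) / self.std_
```

A typical use is `scaler = ZScore().fit(expand_magnitude(train_x))`, followed by `scaler.transform(expand_magnitude(test_x))`, where `train_x` and `test_x` are hypothetical arrays of stimulus magnitudes.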

For the classification test in Fig. 4, the continuous wavelet transform (CWT) of each signal (450 sampling points) used a window size, i.e., symmetry parameter (gamma), of 3 and a time-bandwidth product of 60. We also applied data preprocessing and augmentation methods in PyTorch, including random resized cropping (patches of 8–100% of the original image)41, random horizontal flipping, color jittering, and RandAugment (N = 2 and M = 5)42. The data were RGB-normalized using the mean and standard deviation of the ImageNet2012 dataset31.
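The parameter names in the CWT description (symmetry parameter γ and time-bandwidth product P²) match those of the generalized Morse wavelet, and γ = 3 with P² = 60 coincides with the default Morse wavelet of MATLAB's `cwt`. The NumPy sketch below assumes that wavelet, reads the reported "window size" of 3 as γ = 3, and computes an analytic CWT by FFT with the wavelet peak normalized to 2. The scale grid is hypothetical, and converting the resulting scalogram into a 600 × 600 RGB image for the network is not shown.

```python
import numpy as np


def morse_wavelet_hat(omega, gamma=3.0, time_bandwidth=60.0):
    """Generalized Morse wavelet in the frequency domain (zero for omega <= 0).

    beta follows from the time-bandwidth product P**2 = beta * gamma, and the
    amplitude is normalized so that the peak value equals 2.
    """
    beta = time_bandwidth / gamma
    omega = np.asarray(omega, dtype=float)
    psi = np.zeros_like(omega)
    pos = omega > 0
    # log of 2 * (e*gamma/beta)**(beta/gamma) * omega**beta * exp(-omega**gamma),
    # evaluated in the log domain to avoid overflow at large omega
    log_amp = np.log(2.0) + (beta / gamma) * (1.0 + np.log(gamma) - np.log(beta))
    psi[pos] = np.exp(log_amp + beta * np.log(omega[pos]) - omega[pos] ** gamma)
    return psi


def morse_peak_frequency(gamma=3.0, time_bandwidth=60.0):
    """Radian frequency (rad/sample at scale 1) where the mother wavelet peaks."""
    beta = time_bandwidth / gamma
    return (beta / gamma) ** (1.0 / gamma)


def cwt_morse(signal, scales, gamma=3.0, time_bandwidth=60.0):
    """CWT computed in the frequency domain.

    Returns a complex array of shape (len(scales), len(signal)); a wavelet at
    scale s responds most strongly to omega = morse_peak_frequency() / s.
    """
    x = np.asarray(signal, dtype=float)
    x_hat = np.fft.fft(x)
    omega = 2.0 * np.pi * np.fft.fftfreq(x.size)  # rad/sample, FFT ordering
    coeffs = np.empty((len(scales), x.size), dtype=complex)
    for i, s in enumerate(scales):
        coeffs[i] = np.fft.ifft(x_hat * morse_wavelet_hat(s * omega, gamma, time_bandwidth))
    return coeffs
```

With this normalization, a unit-amplitude sinusoid produces a scalogram magnitude close to 1 at the matching scale, so `np.abs(cwt_morse(x, scales))` can be rescaled to an image without per-scale correction.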

MAPE (Metric)

The mean absolute percentage error (MAPE) is a common metric for evaluating the prediction accuracy of regression models and is expressed as:

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{t=1}^{n}\left|\frac{A_t - F_t}{A_t}\right|$$

where At and Ft are the actual and predicted values, respectively, and n is the number of samples. The formula reports the prediction accuracy as a percentage, which can be interpreted as shown in Supplementary Table 1.
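A direct translation of the MAPE definition is sketched below, assuming plain Python sequences of equal length. Because each term divides by At, MAPE is undefined when an actual value is zero; raising an error in that case, rather than silently skipping samples, is a design choice of this sketch and is not stated in the source.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("actual and predicted must be non-empty and equally long")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((a - f) / a) for a, f in zip(actual, predicted))
    return 100.0 * total / len(actual)
```

For example, `mape([100, 200], [110, 180])` returns 10.0, since each prediction is off by 10% of its actual value.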

Model configuration

For the regression task in Fig. 3, we trained our model for 800 epochs with a batch size of twelve. The model utilizes RMSProp optimizer with 0 for the decay, 0 for the momentum, 0.99 for the batch norm momentum, 0.0005 for the initial learning rate, Swish activation43, and

for the loss function of regression.
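The regression settings above can be mapped onto PyTorch as sketched below. Two points are assumptions. First, a batch-norm momentum of 0.99 follows the TensorFlow/Keras convention (weight on the running average), whereas the `momentum` argument of PyTorch's `BatchNorm1d` weights the new batch statistic, so the equivalent PyTorch value is 1 − 0.99 = 0.01. Second, "decay" is read here as weight decay, and RMSProp's smoothing constant is not reported, so PyTorch's default is kept. The two-layer network and its input width are hypothetical, and the loss is left out because its expression is not given in the text. Swish with β = 1 is available in PyTorch as `nn.SiLU`.

```python
import torch
from torch import nn


class HypotheticalRegressor(nn.Module):
    """Small MLP used only to illustrate the reported training settings."""

    def __init__(self, in_features: int, hidden: int = 64):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_features, hidden),
            # Keras-style BN momentum 0.99 corresponds to PyTorch momentum 0.01
            nn.BatchNorm1d(hidden, momentum=1.0 - 0.99),
            nn.SiLU(),  # Swish with beta = 1, i.e. x * sigmoid(x)
            nn.Linear(hidden, 1),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)


model = HypotheticalRegressor(in_features=6)  # input width is hypothetical
optimizer = torch.optim.RMSprop(
    model.parameters(),
    lr=5e-4,           # initial learning rate 0.0005
    momentum=0.0,      # momentum 0
    weight_decay=0.0,  # "decay" 0, read here as weight decay (assumption)
)
EPOCHS, BATCH_SIZE = 800, 12  # as reported for the regression task
```

Getting the batch-norm convention wrong in a port (using 0.99 directly in PyTorch) would make the running statistics track almost only the latest batch, which can noticeably change evaluation-mode predictions.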

For the classification task in Fig. 4, the final three blocks and the fully connected layer of the EfficientNet-B7 model30 were fine-tuned while the parameters of the remaining layers were kept fixed. With a dropout ratio of 0.5, we trained the model for 75 epochs with a batch size of eight and an image resolution of 600. The model used the RMSProp optimizer with 0 for the decay, 0 for the momentum, 0.99 for the batch norm momentum, 0.001 for the initial learning rate, and Swish activation43. Cross-entropy loss was used as the loss function for classification.
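Partial fine-tuning of this kind amounts to switching off gradients for every parameter except those in the chosen modules, then passing only the trainable parameters to the optimizer. The helper below is a generic PyTorch sketch; its name is hypothetical, and it is demonstrated on a small toy network rather than EfficientNet-B7. With torchvision's EfficientNet implementation (an assumption; the source does not state which implementation was used), the trainable modules might be `model.features[-3:]` and `model.classifier`, although whether "final three blocks" means the last three stages or the last three MBConv blocks is not specified.

```python
import torch
from torch import nn


def freeze_all_but(model: nn.Module, trainable_modules) -> list:
    """Freeze every parameter of `model` except those inside `trainable_modules`.

    Returns the list of parameters that remain trainable.
    """
    for p in model.parameters():
        p.requires_grad = False
    trainable = []
    for module in trainable_modules:
        for p in module.parameters():
            p.requires_grad = True
            trainable.append(p)
    return trainable


# Toy stand-in for a backbone followed by a head for 5 object classes
toy = nn.Sequential(
    nn.Linear(16, 32), nn.SiLU(),
    nn.Linear(32, 32), nn.SiLU(),
    nn.Dropout(p=0.5),           # dropout ratio 0.5, as reported
    nn.Linear(32, 5),
)
params = freeze_all_but(toy, [toy[2], toy[5]])
optimizer = torch.optim.RMSprop(params, lr=1e-3, momentum=0.0)
criterion = nn.CrossEntropyLoss()

# One illustrative training step on random data (batch size 8, as reported)
inputs, labels = torch.randn(8, 16), torch.randint(0, 5, (8,))
frozen_before = toy[0].weight.detach().clone()
optimizer.zero_grad()
loss = criterion(toy(inputs), labels)
loss.backward()
optimizer.step()
```

Note that batch-norm layers inside frozen blocks still update their running statistics in training mode; calling `.eval()` on those submodules is a common way to keep them fixed as well.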

Training tools

A digital multimeter (NI-USB 4065) and a customized LabVIEW program were used for data collection. For training, we used the tensor processing libraries of the PyTorch framework (1.7.0+cu101) on Python (3.6.9). The platform ran Linux 4.19.112+ (x86_64, Ubuntu 18.04 bionic) with a Tesla P100 graphics processing unit.

Data availability

All data are available in the main text or supplementary materials. All information can be requested from one of the corresponding authors.

Code availability

Code for the machine learning model used in this study is available on GitHub (https://github.com/thckdfhr/Epidermal-piezoresistive-structure-with-deep-learning-assisted-data-translation). Additional information can be requested from one of the corresponding authors.

References

Seal, R. P. et al. Injury-induced mechanical hypersensitivity requires C-low threshold mechanoreceptors. Nature 462, 651–655 (2009).

Treede, R. D. Neurogenic hyperalgesia: Illuminating its mechanisms with an infrared laser. J. Physiol. 594, 6441–6442 (2016).

Yang, Y. S. et al. Increased expression of three types of transient receptor potential channels (TRPA1, TRPV4, and TRPV3) in burn scars with post-burn pruritus. Acta Derm. Venereol. 95, 20–24 (2015).

Hua, Q. et al. Skin-inspired highly stretchable and conformable matrix networks for multifunctional sensing. Nat. Commun. 9, 244 (2018).

You, I. et al. Artificial multimodal receptors based on ion relaxation dynamics. Science 370, 961–965 (2020).

Jung, Y. H., Kim, J. H. & Rogers, J. A. Skin‐integrated vibrohaptic interfaces for virtual and augmented reality. Adv. Funct. Mater. 31, 2008805 (2021).

Yu, X. et al. Skin-integrated wireless haptic interfaces for virtual and augmented reality. Nature 575, 473–479 (2019).

Kang, D. et al. Ultrasensitive mechanical crack-based sensor inspired by the spider sensory system. Nature 516, 222–226 (2014).

Park, B. et al. Dramatically enhanced mechanosensitivity and signal-to-noise ratio of nanoscale crack-based sensors: Effect of crack depth. Adv. Mater. 28, 8130–8137 (2016).

Pang, C. et al. A flexible and highly sensitive strain-gauge sensor using reversible interlocking of nanofibres. Nat. Mater. 11, 795–801 (2012).

Choi, D. et al. A highly sensitive tactile sensor using a pyramid-plug structure for detecting pressure, shear force, and torsion. Adv. Mater. Technol. 4, 1800284 (2019).

Won, S. M. et al. Multimodal sensing with a three-dimensional piezoresistive structure. ACS Nano 13, 10972–10979 (2019).

Hatzipantelis, E. & Penman, J. The use of hidden Markov models for condition monitoring electrical machines. In Proc. 1993 Sixth International Conference on Electrical Machines and Drives (Conf. Publ. No. 376), 91–96 (IET, 1993).

Saini, I., Singh, D. & Khosla, A. QRS detection using K-Nearest Neighbor algorithm (KNN) and evaluation on standard ECG databases. J. Adv. Res. 4, 331–344 (2013).

He, R. et al. Automatic detection of atrial fibrillation based on continuous wavelet transform and 2D convolutional neural networks. Front. Physiol. 9, 1206 (2018).

Zhao, Y., Cheng, J., Zhan, P. & Peng, X. ECG classification using deep CNN improved by wavelet transform. Comput. Mater. Contin. 64, 1615–1628 (2020).

Tian, C. et al. Deep learning on image denoising: An overview. Neural Netw. 131, 251–275 (2020).

Moin, A. et al. A wearable biosensing system with in-sensor adaptive machine learning for hand gesture recognition. Nat. Electron. 4, 54–63 (2020).

Han, S. et al. Battery-free, wireless sensors for full-body pressure and temperature mapping. Sci. Transl. Med. 10, eaan4950 (2018).

Kim, D. H. et al. Electronic sensor and actuator webs for large-area complex geometry cardiac mapping and therapy. Proc. Natl Acad. Sci. USA 109, 19910–19915 (2012).

Won, S. M. et al. Piezoresistive strain sensors and multiplexed arrays using assemblies of single-crystalline silicon nanoribbons on plastic substrates. IEEE Trans. Electron Devices 58, 4074–4078 (2011).

Nguyen, T. et al. Advances in ultrasensitive piezoresistive sensors: From conventional to flexible and stretchable applications. Mater. Horiz. 8, 2123–2150 (2021).

Kleimann, P., Semmache, B., Le Berre, M. & Barbier, D. Stress-dependent hole effective masses and piezoresistive properties of p-type monocrystalline and polycrystalline silicon. Phys. Rev. B 57, 8966–8971 (1998).

Lu, N., Lu, C., Yang, S. & Rogers, J. A. Highly sensitive skin-mountable strain gauges based entirely on elastomers. Adv. Funct. Mater. 22, 4044–4050 (2012).

Shin, J. et al. Bioresorbable pressure sensors protected with thermally grown silicon dioxide for the monitoring of chronic diseases and healing processes. Nat. Biomed. Eng. 3, 37–46 (2019).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770–778 (IEEE, 2016).

Zagoruyko, S. & Komodakis, N. Wide residual networks. In Proc. British Machine Vision Conference (BMVC), 87.1–87.12 (BMVA Press, 2016).

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. In Proc. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2261–2269 (IEEE, 2017).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105 (2012).

Tan, M. & Le, Q. V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proc. 36th International Conference on Machine Learning (ICML), 6105–6114 (PMLR, 2019).

Russakovsky, O. et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 115, 211–252 (2015).

Krizhevsky, A. & Hinton, G. Learning multiple layers of features from tiny images. http://www.cs.utoronto.ca/kriz/learning-features-2009-TR.pdf (2009).

Berg, T. et al. Birdsnap: large-scale fine-grained visual categorization of birds. In Proc. 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019–2026 (IEEE, 2014).

Nilsback, M.-E. & Zisserman, A. Automated flower classification over a large number of classes. In Proc. 2008 Sixth Indian Conference on Computer Vision, Graphics & Image Processing, 722–729, https://doi.org/10.1109/ICVGIP.2008.47 (2008).

Parkhi, O. M., Vedaldi, A., Zisserman, A. & Jawahar, C. V. Cats and dogs. In Proc. 2012 IEEE Conference on Computer Vision and Pattern Recognition, 3498–3505, https://doi.org/10.1109/CVPR.2012.6248092 (2012).

Bossard, L., Guillaumin, M. & Van Gool, L. Food-101 – mining discriminative components with random forests. In Proc. European Conference on Computer Vision (ECCV), 446–461 (Springer, Cham, 2014).

Zoph, B., Vasudevan, V., Shlens, J. & Le, Q. V. Learning transferable architectures for scalable image recognition. In Proc. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8697–8710, https://doi.org/10.1109/CVPR.2018.00907 (2018).

Szegedy, C., Ioffe, S., Vanhoucke, V. & Alemi, A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proc. 31st AAAI Conference on Artificial Intelligence, 4278–4284 (AAAI Press, 2017).

Zhuang, F. et al. A comprehensive survey on transfer learning. Proc. IEEE 109, 43–76 (2021).

Rodriguez, J. D., Perez, A. & Lozano, J. A. Sensitivity analysis of k-fold cross validation in prediction error estimation. IEEE Trans. Pattern Anal. Mach. Intell. 32, 569–575 (2010).

Szegedy, C. et al. Going deeper with convolutions. In Proc. 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 1–9 (IEEE, 2015).

Cubuk, E. D., Zoph, B., Shlens, J. & Le, Q. V. Randaugment: Practical automated data augmentation with a reduced search space. In Proc. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 3008–3017 (IEEE, 2020).

Ramachandran, P., Zoph, B. & Le, Q. V. Swish: A self-gated activation function. Preprint at https://arxiv.org/abs/1710.05941v1 (2017).

Acknowledgements

S.M.W. and J.H.K. would like to acknowledge the support of the MSIT (Ministry of Science and ICT), Korea, under the ICT Creative Consilience program (IITP-2020-0-01821). S.M.W., J.H.K., and H.W.B. acknowledge support from National Research Foundation of Korea (NRF) grants funded by the Korea government (MSIP; Ministry of Science, ICT & Future Planning; grant nos. NRF-2021R1C1C1009410 and NRF-2022R1A4A3032913). S.M.W. would also like to express gratitude for support from the Nano Material Technology Development Program (2020M3H4A1A03084600) through the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT of Korea. J.H.K. was partly supported by an Institute of Information & Communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (IITP-2021-0-02068). J.U.K. and T.-i.K. were supported by a National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT; NRF-2018M3A7B4071110). This work was also supported by Samsung Electronics Co., Ltd.

Author information

Contributions

C.S., J.U.K., and H.L. contributed equally to this work. C.S., J.U.K., H.L., S.U.P., H.K., and D.K. performed the experiments; S.H., C.S., T.-i.K., W.H.L., and Y.P. analyzed the data; H.W.B., J.H.K., and S.M.W. led this work; and C.S., J.U.K., H.L., B.W.B., J.H.K., and S.M.W. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

So, C., Kim, J.U., Luan, H. et al. Epidermal piezoresistive structure with deep learning-assisted data translation. npj Flex Electron 6, 70 (2022). https://doi.org/10.1038/s41528-022-00200-9
