Abstract

Accurately capturing the architecture of single lithium-ion electrode particles is necessary for understanding their performance limitations and degradation mechanisms through multi-physics modeling. Information is drawn from multimodal microscopy techniques to artificially generate LiNi0.5Mn0.3Co0.2O2 particles with full sub-particle grain detail. Statistical representations of particle architectures are derived from X-ray nano-computed tomography data supporting an ‘outer shell’ model, and sub-particle grain representations are derived from focused-ion beam electron backscatter diffraction data supporting a ‘grain’ model. A random field model used to characterize and generate the outer shells, and a random tessellation model used to characterize and generate grain architectures, are combined to form a multi-scale model for the generation of virtual electrode particles with full-grain detail. This work demonstrates the possibility of generating representative single electrode particle architectures for modeling and characterization that can guide synthesis approaches of particle architectures with enhanced performance.

Introduction

To enable widespread electrification of passenger cars, lithium(Li)-ion batteries must achieve high-energy densities, high rates, and long cycle lives1. The design of electrode architectures is known to significantly influence the rate performance and energy density of electrodes2,3,4 but it is not well understood how the architecture of single particles influences their performance. For example, the most widely used positive electrode in Li-ion batteries consists of LiNi1−x−yMnyCoxO2 (NMC) particles of various stoichiometries. The majority of NMC electrodes consist of polycrystalline particles where each grain within the particle facilitates transport of Li along 2D planes. When each grain is oriented randomly relative to its neighbor, one can envisage a sub-particle tortuosity where Li travels along the 2D plane of one grain and changes direction as it enters and travels along the 2D plane of the next grain, and so on as the particle continues to lithiate or delithiate5. Anisotropic expansion or contraction of the layered crystal structure of NMC occurs when the grains lithiate or delithiate. This expansion and contraction can lead to sub-particle mechanical strains6,7,8, particle cracking9,10, and accelerated capacity fade7,8. Furthermore, the morphology and orientation of grains have been shown to greatly affect the rate capability of the electrode, where particles with grains oriented with exposed edge facets that transport Li radially inward, display superior rate performance, and longer life11,12,13.

This knowledge presents an opportunity for sub-particle architectures to be designed in such a way to improve rate performance and reduce degradation within electrodes, where the efficacy of various particle architectures could be evaluated through 3D multi-physics models. However, to construct an accurate model of a particle, it is necessary to retrieve or generate realistic particle architectures from experimental data. X-ray nano- and micro-computed tomography (CT) has been demonstrated to be a powerful tool for non-destructively imaging and thereafter quantifying the morphological properties of many particles simultaneously2,9,14,15,16,17,18,19,20 but falls short of capturing the detailed morphology of individual grains within particles and is incapable of determining the orientation of the grains.

Recently, focused-ion beam electron backscatter diffraction (FIB-EBSD) has emerged as an effective means to quantify the morphology and orientation of sub-particle grains in 3D5,21, filling the capability gap of quantifying sub-particle grain detail. Merging the respective strengths of multiple techniques to compile datasets has been shown to enhance characterization capabilities, as demonstrated by merging X-ray nano-CT with FIB-SEM data22, or X-ray nano-CT with ptychographic CT19 to reconstruct images with particle detail (from X-ray nano-CT) and detail on the conductive carbon and binder (from FIB-SEM or ptychography).

Here, we leverage data from both X-ray nano-CT and FIB-EBSD to generate artificial but representative single-particle architectures complete with grain morphological details by parametric stochastic modeling. Using stochastic geometry models23 for describing the 3D nano- and microstructure of various kinds of materials has several advantages. From a model that has been calibrated to image data, it is possible to generate virtual materials, so-called digital twins, the 3D morphology of which is statistically similar to that of real materials observed in tomographic image data24. Moreover, systematic variation of model parameters allows for the generation of a wide range of different virtual materials with predefined morphological properties. Then, the simulated 3D nano- and microstructures can be used as geometry input for (spatially resolved) numerical simulations, i.e., for virtual materials testing to study the influence of a material’s geometry on its physical properties25,26. Furthermore, using parameter regression it is possible to predict model parameters and thus material properties based on parameters of manufacturing processes27.

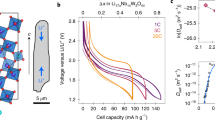

In the present paper, we combine two kinds of stochastic 3D models which represent NMC particles on different length scales to obtain a multi-scale model for both the outer shell and grain architecture of NMC particles. This workflow is visualized in Fig. 1. For modeling the outer shell of NMC particles, we consider a random field on the unit sphere28, which is fitted to particles observed in CT data27,29,30. The fitted model can then generate virtual, but realistic outer particle shells. To model the grain architecture depicted in the FIB-EBSD data, we consider a random tessellation driven by a random marked point process23. Therefore, we first determine a parametric representation of the grains in the FIB-EBSD data using so-called Laguerre tessellations which are a generalization of Voronoi tessellations31. Then, a so-called Matérn cluster process23 with conditionally independent marks is fitted to the parametric Laguerre representation of FIB-EBSD data, resulting in a stochastic 3D model for the grain architecture of NMC particles. Finally, in a last step both models are combined to obtain a multi-scale model from which we can generate virtual NMC particles together with their inner grain architecture. Thus, this work demonstrates how realistic particle architectures can be generated from experimental image data and sets the basis for future modeling efforts of single-particle geometries with tailored particle and grain characteristics.

Using FIB-EBSD data depicting the grain architecture of an NMC particle (top, left), a 3D grain architecture model is developed which generates digital twins of the segmented FIB-EBSD data (top, middle). On the other hand, using nano-CT data depicting a large number of NMC particles (bottom, left), a 3D model for the outer shell of NMC particles is developed which generates random particle shells (bottom, middle). By combining both models, a stochastic multi-scale model is obtained for both the outer shell and the grain architecture of NMC particles (right).

Results

In this section, we describe and quantitatively validate the stochastic 3D model and its individual components used in the present paper for modeling geometrical features of NMC particles at different length scales. To this end, we first describe the imaging procedures and the image processing steps which were deployed for capturing a statistically relevant number of both outer particle shells (using nano-CT) and sub-particle grains (using FIB-EBSD). Then, the stochastic models are described from which random outer particle shells and random grain architectures can be generated, respectively. Both models are combined into a multi-scale model for the outer shell and grain architecture of NMC particles. Finally, the stochastic geometry models described in this section are calibrated to image data and validated.

3D imaging of NMC particles and their grain architecture

The electrode particles analyzed in this work consisted of TODA LiNi0.5Mn0.3Co0.2O2 (NMC532) particles in a 70 μm thick electrode coating of 90 wt% NMC532, 5 wt% C45 Timcal conductive carbon, and 5 wt% PVdF binder, on a 20 μm thick aluminum current collector. The electrode sheet was manufactured at Argonne National Laboratory’s Cell Analysis, Modeling, and Prototyping facility. The TODA NMC532 particles had a size distribution with D10, D50, and D90 of 7.1, 9.3, and 12.1 μm, respectively.

For imaging the outer shell of such NMC particles, a sample was laser-milled into a pillar of ~80 μm diameter using a technique described previously32. The pillar of electrode material was then imaged using X-ray nano-CT with a quasi-monochromatic beam of 5.4 keV in absorption contrast mode (Zeiss Ultra 810, Zeiss Xradia XRM, Pleasanton, CA, USA) with a voxel size of 128 nm. The image was reconstructed using the Zeiss XRM Reconstructor software package that is based on filtered back projection. The reconstructed grayscale data were then used for segmenting the NMC particles and characterizing their morphology. A resource for micro- and nano-CT data of battery electrode architectures is the NREL Battery Microstructure Library33.

For the forthcoming analyses, we consider the raw reconstructed CT data to be a map \({I}^{{\rm{CT}}}:{{\mathcal{W}}}^{{\rm{CT}}}\to {\mathbb{R}}\), where \({{\mathcal{W}}}^{{\rm{CT}}}=\{1,\ldots ,499\}\times \{1,\ldots ,508\}\times \{1,\ldots ,1616\}\), see Fig. 2a. Then, ICT(x) corresponds to the grayscale value of ICT of the voxel \({\bf{x}}\in {{\mathcal{W}}}^{{\rm{CT}}}\). For simplicity’s sake, we extend the domain of ICT to \({{\mathbb{Z}}}^{3}\), i.e., we consider \({I}^{{\rm{CT}}}:{{\mathbb{Z}}}^{3}\to {\mathbb{R}}\) with ICT(x) = 0 if \({\bf{x}} \; \notin \; {{\mathcal{W}}}^{{\rm{CT}}}.\) In order to quantitatively analyze the image data and stochastically model the outer shape of NMC particles, we have to compute a particle-wise segmentation map from ICT. Therefore, we first crop the image data ICT, followed by denoising using a Gaussian filter with standard deviation 4, see Fig. 2b34. Then, the image is binarized using Otsu’s method35, i.e., we obtain an image \({B}^{{\rm{CT}}}:{{\mathbb{Z}}}^{3}\to \{0,1\}\) with

Then, the binary image (cf. Fig. 2c) is segmented into individual particles using the procedure described in Spettl et al.36. The resulting segmentation of the CT data is visualized in Fig. 2d. Since some particles are cut off by the cuboid sampling window, we do not consider them in our subsequent analysis—resulting in n = 239 fully imaged particles as a basis for modeling the outer shape. From here on, we denote the segmented particles by \({P}_{1}^{{\rm{CT}}},\ldots ,{P}_{n}^{{\rm{CT}}}:{{\mathbb{Z}}}^{3}\to \{0,1\}\) which are given by

for each i = 1, …, n. Note that this representation of particles allows us to easily check, whether some point x on the grid \({{\mathbb{Z}}}^{3}\) belongs to a particle \({P}_{i}^{{\rm{CT}}}\). However, we also have to make such inquiries for points in-between gridpoints, i.e., for any \({\bf{x}}\in {{\mathbb{R}}}^{3}\). To do so, we extend the maps \({P}_{i}^{{\rm{CT}}}\) to \({{\mathbb{R}}}^{3}\) using nearest-neighbor interpolation, i.e., putting

where ⌈xi⌋ denotes the closest integer to xi with ⌈xi⌋ = xi − 0.5 if 2xi is an odd integer. The resulting particles \({P}_{1}^{{\rm{CT}}},\ldots ,{P}_{n}^{{\rm{CT}}}\) will be used for modeling the outer shape of NMC particles.
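The binarization and nearest-neighbor steps described above can be illustrated with a minimal sketch. It is not the authors' pipeline: the Gaussian denoising and the particle-wise segmentation are omitted, the gray values are held in a plain list, and the convention that voxels strictly above the Otsu threshold are foreground is an assumption. The function names `otsu_threshold`, `binarize`, `round_half_down`, and `lookup` are hypothetical.

```python
import math


def otsu_threshold(values):
    """Return the gray value t that maximizes the between-class variance
    when the values are split into {v <= t} and {v > t}."""
    levels = sorted(set(values))
    n, total = len(values), sum(values)
    best_t, best_var = levels[0], -1.0
    for t in levels[:-1]:  # the largest level would leave the upper class empty
        low = [v for v in values if v <= t]
        w0 = len(low) / n
        m0 = sum(low) / len(low)
        m1 = (total - sum(low)) / (n - len(low))
        between = w0 * (1.0 - w0) * (m0 - m1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def binarize(values):
    """Assumed convention: 1 (particle) above the threshold, 0 otherwise."""
    t = otsu_threshold(values)
    return [1 if v > t else 0 for v in values]


def round_half_down(x):
    """Closest integer to x; half-integers map to x - 0.5, as in the text."""
    return math.ceil(x - 0.5)


def lookup(particle_voxels, x):
    """Nearest-neighbor extension of a voxel set to a real-valued point x."""
    key = tuple(round_half_down(c) for c in x)
    return 1 if key in particle_voxels else 0
```

Here a particle is stored as a set of integer voxel coordinates, so `lookup` answers whether an arbitrary point in space belongs to the particle by rounding each coordinate to the grid.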

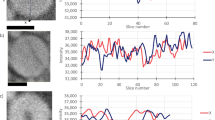

Top row: Volumetric cutouts of raw (a), denoised (b), binarized (c), and segmented (d) CT data containing many full electrode particles. Figures a–d use the same length scale. Bottom row: Volumetric cutouts of raw (e), aligned (f), and segmented (g) FIB-EBSD data of a single particle showing morphological detail of numerous sub-particle grains. Figures e–g use the same length scale.

In addition to the CT data, further measurements were performed for mapping the inner grain architecture of NMC particles. For that purpose, NMC particles were removed from the electrode sheet by partially dissolving the electrode binder with N-Methyl-2-pyrrolidone solvent, wiping the partially dissolved region with a rubber spatula, and smearing the electrode from the spatula onto a copper sheet in order to disperse individual electrode particles for analysis. Single particles with good electrical connection were identified on the copper sheet using Scanning Electron Microscopy (SEM) and were targeted for FIB-EBSD, which was conducted using an FEI Helios NanoLab 600i equipped with an EDAX-EBSD detector. A 30 kV 2.5 nA Ga beam was used for FIB-slicing of ca. 50 nm width and the EBSD measurements were taken at 50 nm steps in the x and y directions. Diffraction patterns were fit to a trigonal crystal system (space group R-3m) with a = b = 2.87 Å, and c = 14.26 Å to obtain orientation data from the measurements. The software produced text files containing spatially resolved confidence index, image quality (IQ), and Bunge-Euler angle data.

For modeling the grain architecture, we utilize the segmentation of the FIB-EBSD data obtained in Furat et al.21. Therefore, we give a short overview of the segmentation procedure which was used to obtain a grain-wise segmentation of the partially imaged NMC particle21. First, the stack of IQ images was aligned to obtain a 3D image, see Fig. 2e, f. Then, the grain boundaries were manually labeled in four different slices of the 3D image. To enhance the grain boundaries in the entire 3D image, a convolutional neural network, namely a 3D U-Net37,38 with a modified loss function, was trained to predict grain boundaries from the IQ image. Finally, a grain-wise segmentation of the 3D image was achieved by applying a marker-based watershed algorithm36,39 to the neural network’s grain boundary predictions, see Fig. 2g.

Stochastic 3D model for the outer particle shell

In this section, we describe the stochastic model which is used to generate random outer particle shells being statistically similar to those of the segmented particles \({P}_{i}^{{\rm{CT}}}\). Therefore, NMC particles are assumed to be pore-free with the constraint that each line segment between any point within the particle and another fixed point (e.g. particle centroid) is contained in the particle. This assumption limits the modeling to so-called star-shaped particles, which reduces the complexity for modeling the 3D outer particle shell while still providing a reasonably flexible family of outer shell shapes and sizes27,29. Thus, for each particle \({P}_{i}^{{\rm{CT}}}\) observed in the CT data, we assume that there is some point \({{\bf{s}}}_{i}\in {{\mathbb{R}}}^{3}\) with \({P}_{i}^{{\rm{CT}}}({{\bf{s}}}_{i})=1\) such that for any other point \({\bf{s}}\in {P}_{i}^{{\rm{CT}}}\) the segment from si to s is fully contained in the particle, i.e., the equation

holds for all t \(\in\) [0, 1]. Furthermore, we assume that for each particle \({P}_{i}^{{\rm{CT}}}\) the so-called star point si can be chosen as the particle’s centroid \({\overline{{\bf{x}}}}_{i}\) which is given by

where \(\#\{{\bf{x}}\in {{\mathbb{Z}}}^{3}:{P}_{i}^{{\rm{CT}}}({\bf{x}})=1\}\) denotes the number of voxels associated with \({P}_{i}^{{\rm{CT}}}\). Star-shaped particles \({P}_{i}^{{\rm{CT}}}\) have the advantage that they can be represented by a radius function \({r}_{i}^{{\rm{CT}}}:{S}^{2}\to [0,\infty )\) which is easier to model stochastically, where \({S}^{2}=\{{\bf{x}}\in {{\mathbb{R}}}^{3}:\,\Vert {\bf{x}}\Vert =1\}\) denotes the unit sphere and ∥⋅∥ is the Euclidean norm. The radius function \({r}_{i}^{{\rm{CT}}}\) assigns to any orientation vector u \(\in\) S2 the distance between the star point si and the particle’s boundary in direction u. More precisely, the radius function \({r}_{i}^{{\rm{CT}}}\) is given by

Thus, we replace the volumetric (discretized) representation \({P}_{i}^{{\rm{CT}}}\) by the surface representation \({r}_{i}^{{\rm{CT}}}\). In practice, we will consider a discretized surface representation of \({r}_{i}^{{\rm{CT}}}\), i.e., we will consider evaluation vectors \(({r}_{i}^{{\rm{CT}}}({{\bf{u}}}_{1}),\ldots ,{r}_{i}^{{\rm{CT}}}({{\bf{u}}}_{m}))\) of the radius function for some gridpoints u1, …, um \(\in\) S2. In other words, we transfer the data from a 3D voxel grid in space to a 2D grid on the unit sphere, which reduces complexity and is advantageous for modeling purposes.
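As a rough illustration of this change of representation, the sketch below computes the centroid of a voxelized particle and evaluates its radius function along a given direction by marching outward until the nearest voxel no longer belongs to the particle. The ray-marching scheme, the step size, and the names `centroid` and `radius` are assumptions made for illustration only; the source does not state how the radius functions were evaluated numerically.

```python
import math


def round_half_down(x):
    """Closest integer to x; half-integers are rounded down."""
    return math.ceil(x - 0.5)


def centroid(voxels):
    """Arithmetic mean of the voxel coordinates of a particle."""
    n = len(voxels)
    return tuple(sum(v[d] for v in voxels) / n for d in range(3))


def radius(voxels, star, u, step=0.05, n_steps=100000):
    """Distance from the star point to the particle boundary in direction u.

    Marches along star + t * u and returns the first t whose nearest
    voxel is outside the particle (accuracy is limited by `step`).
    """
    for k in range(n_steps):
        t = k * step
        p = tuple(round_half_down(star[d] + t * u[d]) for d in range(3))
        if p not in voxels:
            return t
    return n_steps * step


# Usage: a digital ball of radius 5 voxels centered at the origin.
ball = {
    (i, j, k)
    for i in range(-6, 7)
    for j in range(-6, 7)
    for k in range(-6, 7)
    if i * i + j * j + k * k <= 25
}
star = centroid(ball)
r_x = radius(ball, star, (1.0, 0.0, 0.0))
```

Evaluating `radius` at a fixed set of directions u1, …, um yields the evaluation vector of the radius function for one particle.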

The goal of the stochastic model described below is to define a random function r : S2 → [0,\(\infty\)) on the unit sphere S2, which statistically behaves similarly as the radius functions \({r}_{1}^{{\rm{CT}}},\ldots ,{r}_{n}^{{\rm{CT}}}\) computed from tomographic image data. Note that, from a representation as a radius function r we can determine the corresponding particle \(P:{{\mathbb{R}}}^{3}\to \{0,1\}\) uniquely up to the position of its star point by

where \({\bf{o}}=(0,0,0)\in {{\mathbb{R}}}^{3}\).

The stochastic model which we use for describing the outer particle shell is a random field {X(u) : u \(\in\) S2} on the unit sphere S2 28. Note that X(u) is a real-valued random variable for each orientation vector u \(\in\) S2. Since we are solely interested in modeling the shape of the particle shell and not in its location nor its orientation in space, we assume our random field to be isotropic, i.e., for any rotation matrix R \(\in\) SO(3) the random fields {X(u) : u \(\in\) S2} and {X(Ru) : u \(\in\) S2} have the same distribution. Specifically, we will fit a mixture of Gaussian random fields to resemble the radius functions \({r}_{1}^{{\rm{CT}}},\ldots ,{r}_{n}^{{\rm{CT}}}\) computed from tomographic image data. To make our paper more self-contained, we first explain the notion of an isotropic Gaussian random field on the unit sphere S2.

A random field {Z(u) : u \(\in\) S2} is called Gaussian if for any m > 0 and pairwise distinct orientation vectors u1, …, um \(\in\) S2 the random vector \(\left(Z({{\bf{u}}}_{1}),\ldots ,Z({{\bf{u}}}_{m})\right)\) follows a multivariate normal distribution40. Then, the random field {Z(u) : u \(\in\) S2} is uniquely defined by its mean function \(\mu :{S}^{2}\to {\mathbb{R}}\) and its covariance function \(\sigma :{S}^{2}\times {S}^{2}\to {\mathbb{R}}\) which are given by

and

where \({\mathbb{E}}[\cdot ]\) denotes the expected value. For isotropic Gaussian random fields the mean function μ is constant—thus, it is described by a single numerical parameter which we denote by \(\mu \in {\mathbb{R}}\). Moreover, in the isotropic case the covariance function of a Gaussian random field has the representation

where P0, P1, … denote the Legendre polynomials and u1 ⋅ u2 is the inner product of u1 and u240,41. The coefficients a0, a1, … ≥ 0 are called the angular power spectrum and they, together with the mean value μ, uniquely determine the isotropic Gaussian random field {Z(u) : u \(\in\) S2}. In other words, the model parameters of an isotropic Gaussian random field on the unit sphere are the mean value μ and the angular power spectrum \({({a}_{\ell })}_{\ell = 0}^{\infty }.\) For computational reasons, we only consider Gaussian random fields such that \(a_{\ell}\) = 0 for all \(\ell\) > L, where L > 0 is some fixed integer. Then, the angular power spectrum of these random fields is characterized by a finite-dimensional vector a = (a0, …, aL). Consequently, the covariance function σ of {Z(u) : u \(\in\) S2} is given by

Note that for the fitting procedure described below it is necessary to consider the finite-dimensional distributions of an isotropic Gaussian random field {Z(u) : u \(\in\) S2} with mean value \(\mu \in {\mathbb{R}}\) and angular power spectrum \({\bf{a}}=({a}_{0},{a}_{1},\ldots ,{a}_{L})\in {{\mathbb{R}}}^{L+1}\). More precisely, for any fixed integer m > 0, let u1, …, um be pairwise distinct points on S2. Then, the random vector \(\left(Z({{\bf{u}}}_{1}),\ldots ,Z({{\bf{u}}}_{m})\right)\) has a multivariate normal distribution with mean vector \({\boldsymbol{\mu }}=(\mu ,\ldots ,\mu )\in {{\mathbb{R}}}^{m}\) and covariance matrix

Specifically, assuming that Σ is not singular, i.e. \(\det ({{\Sigma }})\ne 0\), the probability density function \({f}_{\mu ,{\bf{a}}}:{{\mathbb{R}}}^{m} \to [0,\infty )\) of \(\left(Z({{\bf{u}}}_{1}),\ldots ,Z({{\bf{u}}}_{m})\right)\) is given by

Thus, for simulating such a Gaussian random field at some (arbitrary, but fixed) evaluation points u1, …, um \(\in\) S2 we have to draw samples from a multivariate normal distribution.
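The simulation step can be sketched as follows. The covariance matrix is built from a truncated angular power spectrum via Legendre polynomials, and a sample is drawn with a Cholesky factor. Two points are assumptions rather than statements from the source: the prefactor (2ℓ + 1)/(4π) in front of each Legendre term is one common normalization and may differ from the one used in the paper, and the small diagonal jitter is added only for numerical safety. All function names are hypothetical.

```python
import math
import random


def legendre(L, x):
    """Return [P_0(x), ..., P_L(x)] using the Bonnet recurrence."""
    p = [1.0, x]
    for l in range(1, L):
        p.append(((2 * l + 1) * x * p[l] - l * p[l - 1]) / (l + 1))
    return p[: L + 1]


def covariance_matrix(points, a):
    """Covariance matrix at unit vectors `points` for power spectrum `a`.

    Assumed normalization: sum_l a_l * (2l + 1) / (4 pi) * P_l(u_i . u_j).
    """
    L, m = len(a) - 1, len(points)
    cov = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(m):
            dot = sum(p * q for p, q in zip(points[i], points[j]))
            dot = max(-1.0, min(1.0, dot))  # guard against rounding errors
            pl = legendre(L, dot)
            cov[i][j] = sum(
                a[l] * (2 * l + 1) / (4 * math.pi) * pl[l] for l in range(L + 1)
            )
    return cov


def cholesky(A, jitter=1e-12):
    """Lower-triangular C with C C^T approximately equal to A."""
    m = len(A)
    C = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in range(i + 1):
            s = A[i][j] - sum(C[i][k] * C[j][k] for k in range(j))
            if i == j:
                C[i][j] = math.sqrt(max(s, 0.0) + jitter)
            else:
                C[i][j] = s / C[j][j]
    return C


def sample_field(points, mu, a, rng):
    """Draw (Z(u_1), ..., Z(u_m)) from the multivariate normal distribution."""
    C = cholesky(covariance_matrix(points, a))
    z = [rng.gauss(0.0, 1.0) for _ in points]
    return [mu + sum(C[i][k] * z[k] for k in range(i + 1)) for i in range(len(points))]
```

For example, `sample_field(points, 5.0, [1.0, 0.5, 0.25], random.Random(0))` returns one radius value per evaluation point.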

Note that in particular, according to Eq. (13), the random variable Z(u) is normally distributed with mean value μ and variance σ(u, u) for each u \(\in\) S2. However, the histograms of the values \({r}_{1}^{{\rm{CT}}}({\bf{u}}),\ldots ,{r}_{n}^{{\rm{CT}}}({\bf{u}})\) of the radius functions extracted from the segmented image data for any fixed direction u \(\in\) S2 cannot be fitted by the density function of a normal distribution, see Fig. 3. Specifically, the histogram shown in Fig. 3 indicates a multimodal distribution which motivates using mixtures of Gaussian random fields to model the outer shell of particles. More precisely, we randomly select among K > 0 Gaussian random fields and generate an outer shell by drawing a sample from the selected model. To this end, let {Z(1)(u) : u \(\in\) S2}, …, {Z(K)(u) : u \(\in\) S2} be independent, isotropic Gaussian random fields with mean values μ(1), …, μ(K) and angular power spectra \({{\bf{a}}}^{(1)}={({a}_{\ell }^{(1)})}_{\ell = 0}^{L},\ldots ,{{\bf{a}}}^{(K)}={({a}_{\ell }^{(K)})}_{\ell = 0}^{L}\), respectively. We call the random fields {Z(1)(u) : u \(\in\) S2}, …, {Z(K)(u) : u \(\in\) S2} the components of the mixture. In order to model the random selection of a component, we introduce further parameters p1, …, pK ≥ 0 with p1 + ⋯ + pK = 1 which correspond to the probability of selecting a component. Then, the mixture {X(u) : u \(\in\) S2} of K Gaussian random fields is given by

with the vector θ = (L, K, p1, …, pK, μ(1), …, μ(K), a(1), …, a(K)) of model parameters. Furthermore, the finite-dimensional probability density function \({f}_{{\boldsymbol{\theta }}}:{{\mathbb{R}}}^{m}\to [0,\infty )\) of the random field {X(u) : u \(\in\) S2} for some evaluation points u1, …, um \(\in\) S2 is given by

where \({f}_{{\mu }^{(1)},{{\bf{a}}}^{(1)}},\ldots ,{f}_{{\mu }^{(K)},{{\bf{a}}}^{(K)}}\) are the corresponding probability densities of {Z(1)(u) : u \(\in\) S2}, …, {Z(K)(u) : u \(\in\) S2} given in Eq. (13), respectively. To generate a realization of {X(u) : u \(\in\) S2} at the evaluation points u1, …, um we just have to randomly pick a component according to the probabilities p1, …, pK and then draw a sample from the corresponding multivariate normal distribution.
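The two-stage sampling just described, and the corresponding mixture density, can be written down in a few lines. This is a generic sketch: the component samplers and densities are passed in as callables, and the names `sample_mixture` and `mixture_density` are hypothetical.

```python
import random


def sample_mixture(probs, samplers, rng):
    """Pick component k with probability probs[k], then sample from it.

    Returns the selected index together with the drawn realization.
    """
    k = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    return k, samplers[k](rng)


def mixture_density(x, probs, densities):
    """Finite-dimensional mixture density: sum_k p_k * f_k(x)."""
    return sum(p * f(x) for p, f in zip(probs, densities))


# Usage with two toy one-dimensional components.
rng = random.Random(1)
samplers = [lambda r: [r.gauss(4.0, 0.5)], lambda r: [r.gauss(6.0, 0.5)]]
component, shell_values = sample_mixture([0.3, 0.7], samplers, rng)
```

In the setting of the text, each sampler would return the vector of radius values at the evaluation points u1, …, um for one Gaussian random field.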

Now that we have defined the mixture {X(u) : u \(\in\) S2} of Gaussian random fields, we describe how its parameter vector θ = (L, K, p1, …, pK, μ(1), …, μ(K), a(1), …, a(K)), for any fixed pair L, K > 0, can be fitted to data. Recall that previously in this section we described the procedure for representing particles extracted from tomographic image data as radius functions \({r}_{1}^{{\rm{CT}}},\ldots ,{r}_{n}^{{\rm{CT}}}:{S}^{2}\to {\mathbb{R}}\). Now, we describe how to fit the parameter vector θ such that the realizations of the model {X(u) : u \(\in\) S2} statistically resemble the radius functions \({r}_{1}^{{\rm{CT}}},\ldots ,{r}_{n}^{{\rm{CT}}}:{S}^{2}\to {\mathbb{R}}\). To this end, let u1, …, um \(\in\) S2 be some pairwise distinct evaluation points. Then, the random field {X(u) : u \(\in\) S2} is fitted by determining an optimal parameter vector \(\widehat{{\boldsymbol{\theta }}}\) for which the evaluations \({{\bf{r}}}_{1}^{{\rm{CT}}}=\left({r}_{1}^{{\rm{CT}}}({{\bf{u}}}_{1}),\ldots ,{r}_{1}^{{\rm{CT}}}({{\bf{u}}}_{m})\right),\ldots ,{{\bf{r}}}_{n}^{{\rm{CT}}}=\left({r}_{n}^{{\rm{CT}}}({{\bf{u}}}_{1}),\ldots ,{r}_{n}^{{\rm{CT}}}({{\bf{u}}}_{m})\right)\) are most likely with respect to the distribution of the random vector \(\left(X({{\bf{u}}}_{1}),\ldots ,X({{\bf{u}}}_{m})\right)\). More precisely, the optimal parameter \(\widehat{{\boldsymbol{\theta }}}\) is obtained by maximizing the (log-)likelihood function, i.e., by

where fθ denotes the probability density of \(\left(X({{\bf{u}}}_{1}),\ldots ,X({{\bf{u}}}_{m})\right)\) given in Eq. (15) and ΘL,K is the set of admissible model parameters for fixed L, K > 0. Since the probability density fθ is differentiable with respect to the remaining model parameters in θ, the optimization problem given in Eq. (16) can be solved using gradient-descent algorithms42. However, since the number of model parameters can be—depending on the number K of components and the number L + 1 of angular power spectrum coefficients per component—relatively large, we require a good initial parameter constellation for applying gradient-descent algorithms. Therefore, we present a method for determining a good initial parameter vector θinit for performing optimization.
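The objective that such an optimizer would evaluate is the mixture log-likelihood of the observed evaluation vectors. A minimal sketch is given below, assuming the component log-densities are available as callables. The log-sum-exp trick is a standard numerical safeguard and is not mentioned in the source; the names `log_sum_exp` and `mixture_log_likelihood` are hypothetical.

```python
import math


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in values))


def mixture_log_likelihood(data, probs, log_densities):
    """Sum over observations r of log(sum_k p_k * f_k(r)).

    `log_densities[k](r)` must return log f_k(r); components with
    probability zero are skipped.
    """
    total = 0.0
    for r in data:
        terms = [
            math.log(p) + log_f(r)
            for p, log_f in zip(probs, log_densities)
            if p > 0.0
        ]
        total += log_sum_exp(terms)
    return total


def std_normal_logpdf(x):
    """Log-density of the one-dimensional standard normal distribution."""
    return -0.5 * x * x - 0.5 * math.log(2.0 * math.pi)
```

Maximizing this value over the admissible parameters, for instance with a gradient-based routine, corresponds to the fitting problem stated in the text.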

Instead of directly fitting the mixture {X(u) : u \(\in\) S2} of Gaussian random fields to the evaluations \({{\bf{r}}}_{1}^{{\rm{CT}}},\ldots ,{{\bf{r}}}_{n}^{{\rm{CT}}}\) by solving the optimization problem given in Eq. (16), we first fit a mixture of finite-dimensional Gaussian random vectors to \({{\bf{r}}}_{1}^{{\rm{CT}}},\ldots ,{{\bf{r}}}_{n}^{{\rm{CT}}}\). Then, from this fit we derive an initial parameter constellation θinit \(\in\) ΘL,K to solve Eq. (16). To this end, we briefly describe the construction of mixtures of finite-dimensional Gaussian random vectors, which is similar to the construction of mixtures of Gaussian random fields described in Eq. (14).

Let Z1, …, ZK be pairwise independent, normally distributed random vectors taking values in \({{\mathbb{R}}}^{m}\), with mean vectors \({{\boldsymbol{\mu }}}^{(1)},\ldots ,{{\boldsymbol{\mu }}}^{(K)}\in {{\mathbb{R}}}^{m}\) and non-singular covariance matrices \({{{\Sigma }}}^{(1)},\ldots ,{{{\Sigma }}}^{(K)}\in {{\mathbb{R}}}^{m\times m}\). Thus, for k = 1, …, K, their probability densities fk are given by

for any \({\bf{x}}\in {{\mathbb{R}}}^{m}\). Furthermore, let p1, …, pK ≥ 0 be some probabilities with p1 + ⋯ + pK = 1. Then, the mixture X of the finite-dimensional Gaussian random vectors Z1, …, ZK is given by

The parameters of the distribution of X—namely the mixing probabilities p1, …, pK, the mean vectors μ(1), …, μ(K) and the covariance matrices Σ(1), …, Σ(K)— can be fitted to the evaluation vectors \({{\bf{r}}}_{i}^{{\rm{CT}}}=({r}_{i}^{{\rm{CT}}}({{\bf{u}}}_{1}),\ldots ,{r}_{i}^{{\rm{CT}}}({{\bf{u}}}_{m}))\in {{\mathbb{R}}}^{m}\), i = 1, …, n, using the so-called expectation-maximization algorithm43.
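To make the expectation-maximization step concrete, the following sketch fits a Gaussian mixture in one dimension. This is a deliberate simplification: the text fits m-dimensional vectors with full covariance matrices, whereas here each observation is a single number and each component has a scalar variance. The function names and the variance floor `min_var` are assumptions.

```python
import math
import random


def normal_pdf(x, mu, var):
    """Density of the one-dimensional normal distribution N(mu, var)."""
    return math.exp(-0.5 * (x - mu) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def em_gmm_1d(data, means, variances, probs, n_iter=50, min_var=1e-6):
    """Expectation-maximization for a one-dimensional Gaussian mixture."""
    means, variances, probs = list(means), list(variances), list(probs)
    K = len(means)
    for _ in range(n_iter):
        # E-step: responsibility of each component for each observation.
        resp = []
        for x in data:
            w = [probs[k] * normal_pdf(x, means[k], variances[k]) for k in range(K)]
            total = sum(w)
            resp.append([wk / total for wk in w])
        # M-step: re-estimate weights, means, and variances.
        for k in range(K):
            nk = sum(r[k] for r in resp)
            probs[k] = nk / len(data)
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var, min_var)
    return probs, means, variances


# Usage on synthetic bimodal data.
rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(100)] + [rng.gauss(10.0, 1.0) for _ in range(100)]
probs, means, variances = em_gmm_1d(data, [1.0, 8.0], [1.0, 1.0], [0.5, 0.5])
```

The sketch has no guard against a component losing all of its responsibility, which a production implementation would need.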

Note that the distribution of the random vector X, fitted in this manner, does not yet describe the outer particle shells extracted from CT data sufficiently well. First of all, there can be some k \(\in\) {1, …, K} such that the components of the mean vector μ(k) are not identical, which is in contradiction with the assumption of isotropy. Furthermore, the random vector X does not describe the behavior of the outer shell at evaluation points u \(\in\) S2 such that u ≠ ui for all i = 1, …, m. Therefore, in the next step, we describe how to determine a parameter constellation θinit \(\in\) ΘL,K of a mixture {X(u) : u \(\in\) S2} of isotropic Gaussian random fields which is in some sense similar to the random vector X at the evaluation points u1, …, um, i.e., for which it holds that (X(u1), …, X(um)) ≈ X.

First, for each k = 1, …, K, we determine the parameters of an isotropic Gaussian random field {Z(k)(u) : u \(\in\) S2} with (Z(k)(u1), …, Z(k)(um)) ≈ Zk. The mean value \({\mu }^{(k)}\in {\mathbb{R}}\) of {Z(k)(u) : u \(\in\) S2} is chosen as the average of the components of the mean vector \({{\boldsymbol{\mu }}}^{(k)}=({\mu }_{1}^{(k)},\ldots ,{\mu }_{m}^{(k)})\), i.e., we put

The angular power spectrum \({{\bf{a}}}^{(k)}=({a}_{0}^{(k)},\ldots ,{a}_{L}^{(k)})\in {{\mathbb{R}}}^{L+1}\) of {Z(k)(u) : u \(\in\) S2} is chosen such that the covariance matrix \({(\sigma ({{\bf{u}}}_{i},{{\bf{u}}}_{j}))}_{i,j = 1}^{m}\), see Eq. (12), in some sense coincides with the covariance matrix Σ(k) of the corresponding multivariate normal distribution, i.e., we have to solve a system of linear equations with unknowns \({a}_{0}^{(k)},\ldots ,{a}_{L}^{(k)}\) where the equations are given by

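for each i, j = 1, …, m. Furthermore, we have the constraints \({a}_{0}^{(k)},\ldots ,{a}_{L}^{(k)}\ge 0\). This system of linear equations does not necessarily have an exact solution. However, using an iterative algorithm44 we can determine a good solution a(k) in a least-squares sense. Then, the initial value θinit for solving the optimization problem in Eq. (16) is given by

θinit = (L, K, p1, …, pK, μ(1), …, μ(K), a(1), …, a(K)),

where p1, …, pK are the mixing probabilities fitted by the expectation-maximization algorithm, and μ(k) and a(k) are the averaged mean values and the least-squares angular power spectra determined above.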
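A non-negative least-squares problem of this kind can be solved in several ways. The sketch below uses projected gradient descent, which is a stand-in chosen for brevity and not necessarily the iterative algorithm cited in the text. In the present setting, each row of the matrix `A` would correspond to a pair (i, j) of evaluation points, each column to a degree ℓ, and `b` would hold the matching entries of Σ(k). The function name is hypothetical.

```python
def nnls_projected_gradient(A, b, n_iter=2000):
    """Minimize ||A a - b||^2 subject to a >= 0 by projected gradient descent."""
    rows, cols = len(A), len(A[0])
    # 1 / trace(A^T A) never exceeds 1 / lambda_max(A^T A), so it is a safe step.
    step = 1.0 / sum(A[i][j] ** 2 for i in range(rows) for j in range(cols))
    a = [0.0] * cols
    for _ in range(n_iter):
        residual = [
            sum(A[i][j] * a[j] for j in range(cols)) - b[i] for i in range(rows)
        ]
        grad = [sum(A[i][j] * residual[i] for i in range(rows)) for j in range(cols)]
        # Gradient step followed by projection onto the non-negative orthant.
        a = [max(0.0, a[j] - step * grad[j]) for j in range(cols)]
    return a


# Usage: the unconstrained solution has a negative second entry,
# so the constraint is active there.
A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [1.0, -1.0, 0.5]
a_hat = nnls_projected_gradient(A, b)
```

The fixed step size keeps the sketch simple; faster solvers exist for large systems.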

Stochastic 3D model for the grain architecture using Laguerre tessellations

In this section, we explain our stochastic modeling approach, which is used to simulate the 3D grain architecture inside NMC particles, and its calibration to the FIB-EBSD data described above. For this, we consider a certain sampling window \({\mathcal{W}}\subset {{\mathbb{R}}}^{3}\), being a cuboid \({\mathcal{W}}=[{a}_{1},{a}_{2}]\times [{b}_{1},{b}_{2}]\times [{c}_{1},{c}_{2}]\) for some \({a}_{1},{a}_{2},{b}_{1},{b}_{2},{c}_{1},{c}_{2}\in {\mathbb{R}}\) with a1 < a2, b1 < b2, c1 < c2, and employ (random) tessellations to randomly partition the sampling window \({\mathcal{W}}\) into non-overlapping cells, each of which corresponds to one of the grains in the FIB-EBSD data. In combination with the stochastic model of the outer shells described in the previous section, we are then able to present a holistic stochastic model for complete NMC particles, along with their inner 3D grain architecture.

To parametrically subdivide the sampling window \({\mathcal{W}}\subset {{\mathbb{R}}}^{3}\) into cells, Laguerre tessellations—generalizations of the well-known Voronoi tessellation31,45—are used. For this purpose, for any \({\bf{s}},{\bf{x}}\in {{\mathbb{R}}}^{3}\) and \(w\in {\mathbb{R}}\), the power distance pow(x, (s, w)) (also called Laguerre distance function) is considered, which is defined by

A Laguerre tessellation \({\mathcal{T}}\) of \({\mathcal{W}}\) with \({n}_{{\mathcal{T}}}\) cells is fully characterized by a sequence of weighted generating points \({\mathcal{G}}={\{({{\bf{s}}}_{i},{w}_{i})\}}_{i = 1}^{{n}_{{\mathcal{T}}}}\) with seed points \({{\bf{s}}}_{i}\in {{\mathbb{R}}}^{3}\) and weights \({w}_{i}\in {\mathbb{R}}\) for \(i=1,\ldots ,{n}_{{\mathcal{T}}}\). The ith cell \({C}_{i}^{{\rm{L}}}\) of the Laguerre tessellation \({\mathcal{T}}\) is then defined as

For simplicity, we use the notation

to indicate whether a point \({\bf{x}}\in {\mathcal{W}}\) belongs to the ith Laguerre cell. It can be shown that Laguerre cells are convex polyhedra. Note that for modeling non-convex grains other tessellation models, e.g., Johnson–Mehl tessellations46,47 can be deployed analogously. Furthermore, note that the seed points roughly determine where the corresponding cell manifests (though depending on the weights, the seed point might actually not belong to the cell, or the cell might even be empty), and that high additive weights compared to those of neighboring generating points lead to larger cells. A big advantage of Laguerre tessellations is that they provide much more flexibility compared to simpler Voronoi tessellations through their additional weights. For more details on this topic, see e.g. Lautensack and Zuyev48.
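The role of the weights can be illustrated with a short sketch that assigns a point to a Laguerre cell. It assumes the standard form of the power distance, i.e., the squared Euclidean distance to the seed point minus the weight, which is consistent with the statement that larger weights lead to larger cells. The function names and the tie-breaking rule (lowest index wins) are assumptions.

```python
def power_distance(x, seed, weight):
    """Assumed form of the power distance: ||x - seed||^2 - weight."""
    return sum((xi - si) ** 2 for xi, si in zip(x, seed)) - weight


def laguerre_cell_index(x, generators):
    """Index of the generator (seed, weight) with the smallest power distance to x."""
    return min(
        range(len(generators)),
        key=lambda i: power_distance(x, generators[i][0], generators[i][1]),
    )


# Usage: with equal weights the tessellation reduces to a Voronoi tessellation.
point = (2.5, 0.0, 0.0)
voronoi_like = [((0.0, 0.0, 0.0), 0.0), ((4.0, 0.0, 0.0), 0.0)]
weighted = [((0.0, 0.0, 0.0), 5.0), ((4.0, 0.0, 0.0), 0.0)]
index_equal = laguerre_cell_index(point, voronoi_like)  # closer to the second seed
index_weighted = laguerre_cell_index(point, weighted)   # first cell has grown
```

Labeling every voxel of the sampling window with `laguerre_cell_index` gives a discretized tessellation that can be compared with segmented image data.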

In summary, Laguerre tessellations allow us to parametrically describe a system of convex grains. For stochastic modeling, it is thus sufficient to come up with a random sequence of seed points and weights. With these, the spatial extents of the simulated grains can then be easily computed. However, before we can discuss the stochastic model for the 3D grain architecture inside NMC particles and how to calibrate it to experimental image data, it is necessary to find a representation of the grains in the segmented FIB-EBSD data (Fig. 4a) in terms of a Laguerre tessellation, see Fig. 4b. We will then use the extracted seed points and weights as a basis for the modeling.

Analogous to the representation of particles \({\{{P}_{i}^{{\rm{CT}}}\}}_{i = 1}^{n}\) extracted from segmented CT data which has been introduced above, we consider the ith grain of the experimental FIB-EBSD data as a map \({C}_{i}^{{\rm{E}}}:{{\mathbb{Z}}}^{3}\to \{0,1\}\) given by

with i = 1, …, nE and nE the number of grains in the sampling window \({\mathcal{W}}\subset {{\mathbb{R}}}^{3}\). Again, nearest neighbor interpolation can be used to extend the domain of \({C}_{i}^{{\rm{E}}}\) to the continuous Euclidean space. Furthermore, let \({{\mathcal{X}}}^{{\rm{F}}}={\{{{\bf{x}}}_{j}^{{\rm{F}}}\}}_{j = 1}^{{n}_{{\rm{F}}}}\in {{\mathbb{Z}}}^{3\times {n}_{{\rm{F}}}}\) be the coordinates of voxels that belong to the foreground of the image data, i.e., for each j = 1, …, nF there is an integer i = 1, …, nE such that \({C}_{i}^{{\rm{E}}}({{\bf{x}}}_{j}^{{\rm{F}}})=1\), where nF denotes the number of foreground voxels in \({\mathcal{W}}\).

At its heart, fitting a Laguerre tessellation to image data is an optimization problem of finding the generators that minimize the discrepancy between the tessellation and the image data. The ground truth for that is given by the grain labels \({\{({C}_{1}^{{\rm{E}}}({{\bf{x}}}_{j}^{{\rm{F}}}),\ldots ,{C}_{{n}_{{\rm{E}}}}^{{\rm{E}}}({{\bf{x}}}_{j}^{{\rm{F}}}))\}}_{j = 1}^{{n}_{{\rm{F}}}}\). We employ a volume-based discrepancy measure which counts all voxels where the experimental FIB-EBSD image data and the discretized Laguerre tessellation have different labels. To be more precise, the value of the objective function \(E:{\left({{\mathbb{R}}}^{3}\times {\mathbb{R}}\right)}^{{n}_{{\rm{E}}}}\to [0,\infty )\) for a sequence of generators \({\mathcal{G}}={\{{{\bf{g}}}_{i}\}}_{i = 1}^{{n}_{{\rm{E}}}}\) with \({{\bf{g}}}_{i}=({{\bf{s}}}_{i},{w}_{i})\in {{\mathbb{R}}}^{3}\times {\mathbb{R}}\) is given by

where the cells \({C}_{1}^{{\rm{L}}},\ldots ,{C}_{{n}_{{\rm{E}}}}^{{\rm{L}}}\) of the Laguerre tessellation \({\mathcal{T}}\) depend on the choice of the generators in \({\mathcal{G}}\) such that \({n}_{{\mathcal{T}}}\) = nE. The corresponding fitting problem is thus to determine an optimal sequence of generators \({{\mathcal{G}}}_{{\rm{opt}}}\) defined as

$${{\mathcal{G}}}_{{\rm{opt}}}=\mathop{{\rm{argmin}}}\limits_{{\mathcal{G}}\in {\left({{\mathbb{R}}}^{3}\times {\mathbb{R}}\right)}^{{n}_{{\rm{E}}}}}E({\mathcal{G}}).$$(27)

Eq. (27) is probably the most natural choice to define the fitting problem, but in practice it is computationally expensive. In the following, we address this issue by slightly altering the original fitting problem.

It is easy to see that \({C}_{j}^{{\rm{L}}}({{\bf{x}}}^{{\rm{F}}})\) with \({{\bf{x}}}^{{\rm{F}}}\in {{\mathcal{X}}}^{{\rm{F}}}\) can be reformulated as

where \({{\rm{argmin}}}_{j}^{* }\) is the jth component of the \({n}_{{\mathcal{T}}}\)-dimensional vector-valued argmin function, i.e.,

$${{\rm{argmin}}}_{j}^{* }({\bf{z}})=\left\{\begin{array}{ll}1,&\,{\text{if}}\,\,{z}_{j}=\min \{{z}_{1},\ldots ,{z}_{{n}_{{\mathcal{T}}}}\},\\ 0,&\,{\text{else}},\end{array}\right.\quad {\text{for}}\,\,{\bf{z}}=({z}_{1},\ldots ,{z}_{{n}_{{\mathcal{T}}}})\in {{\mathbb{R}}}^{{n}_{{\mathcal{T}}}}.$$(29)

In cases where the minimum is not unique, i.e., there are indices \({j}_{1},{j}_{2}\in \{1,\ldots ,{n}_{{\mathcal{T}}}\}\) with j1 ≠ j2 and \({z}_{{j}_{1}}={z}_{{j}_{2}}\), only the component with the smaller index is set equal to 1. The function \({{\rm{argmax}}}^{* }\) is defined analogously.

Even though the Laguerre distance function pow is differentiable (with respect to the generators), the fact that argmin* in Eq. (28) is not makes the objective function E defined in Eq. (26) non-differentiable. This leaves us only with derivative-free optimization algorithms to solve Eq. (27), which in most cases converge more slowly than gradient-descent methods49. In order to increase efficiency, we slightly deviate from the original Laguerre tessellation formulation by replacing the argmin* function in Eq. (28) with a ‘softmin*’ function, i.e., a softmax* function applied to the negated argument

Here, the \({n}_{{\mathcal{T}}}\)-dimensional \({{\rm{softmax}}}^{* }\) function

$${{\rm{softmax}}}_{j}^{* }({\bf{z}})=\frac{\exp ({z}_{j})}{\mathop{\sum }\nolimits_{i = 1}^{{n}_{{\mathcal{T}}}}\exp ({z}_{i})},\qquad j=1,\ldots ,{n}_{{\mathcal{T}}}$$(31)

is a smooth version of the \({{\rm{argmax}}}^{* }\) function. So instead of returning a vector whose components are either 0 or 1, the \({{\rm{softmax}}}^{* }\) function is a vector-valued map where each component is a continuous function with values between 0 and 1. In fact its output vector \({{\rm{softmax}}}^{* }{\bf{z}}\) for some argument \({\bf{z}}\in {{\mathbb{R}}}^{{n}_{{\mathcal{T}}}}\) defines a discrete probability measure (i.e., the values of all components are between 0 and 1 and their sum is equal to 1), which assigns the highest probability to the index j if zj ≥ zi for all \(i\in \{1,\ldots ,{n}_{{\mathcal{T}}}\}\). The \({{\rm{softmax}}}^{* }\) function is often used for multi-class classification problems in machine learning50, which show many similarities with our tessellation fitting. With this, the value of a component of the vector \(({\widetilde{C}}_{1}^{{\rm{L}}}({{\bf{x}}}^{{\rm{F}}}),\ldots ,{\widetilde{C}}_{{n}_{{\mathcal{T}}}}^{{\rm{L}}}({{\bf{x}}}^{{\rm{F}}}))\) is thus the largest one if the corresponding generator has the shortest power distance to the given evaluation point xF. In addition, since \({{\rm{softmax}}}^{* }\) is a composition of differentiable functions and therefore differentiable, the function \({\widetilde{C}}_{j}^{{\rm{L}}}\) is differentiable, too, for each \(j\in \{1,\ldots ,{n}_{{\mathcal{T}}}\}\).
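The relaxation described above can be sketched in a few lines. The snippet assumes the standard softmax and the standard power distance (squared Euclidean distance minus weight); the generators and the evaluation point are hypothetical.

```python
import math


def softmax(z):
    """Numerically stable softmax of a list of floats."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def soft_membership(x, generators):
    """Smooth cell indicators: softmax applied to the negated power distances."""
    dists = [sum((a - b) ** 2 for a, b in zip(x, s)) - w for s, w in generators]
    return softmax([-d for d in dists])


# Three hypothetical generators (seed, weight).
generators = [((0.0, 0.0, 0.0), 0.0),
              ((4.0, 0.0, 0.0), 0.0),
              ((0.0, 4.0, 0.0), 1.0)]

probs = soft_membership((1.0, 0.5, 0.0), generators)

# The largest component belongs to the generator with the smallest power distance,
# so taking the argmax of the soft output recovers the hard (classical) label.
hard_label = max(range(len(probs)), key=probs.__getitem__)
```

Each component lies strictly between 0 and 1 and the components sum to 1, so the output can be read as a discrete probability distribution over cells.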

Because it is now no longer possible with \({\widetilde{C}}_{j}^{{\rm{L}}}\) to assign each voxel a unique label, it is necessary to also adapt the objective function. Thus, instead of E defined in Eq. (26), we consider the function \(\widetilde{E}:{\left({{\mathbb{R}}}^{3}\times {\mathbb{R}}\right)}^{{n}_{{\rm{E}}}}\to [0,\infty )\), where

with the (binary) cross-entropy loss function \(\ell :{[0,1]}^{2}\to [0,\infty )\) given by \(\ell (\tilde{y},y)=-y \, {\log}\, \tilde{y}-(1-y)\,{\log}\,(1-\tilde{y})\). Note that the cross-entropy loss is often used in machine learning50 to compare the output of a classifier with ground truth data, which is essentially the purpose it serves here: if an evaluation point xF belongs to the ith grain (i.e., \({C}_{i}^{{\rm{E}}}({{\bf{x}}}^{{\rm{F}}})=1\)), \({\widetilde{C}}_{i}^{{\rm{L}}}({{\bf{x}}}^{{\rm{F}}})\) also needs to be close to 1 in order to minimize \(\ell\), and vice versa. Note that the modified objective function \(\widetilde{E}\) is differentiable with respect to the Laguerre generators. The fitted sequence of generators \({\widetilde{{\mathcal{G}}}}_{{\rm{opt}}}\) can then be obtained by solving

$${\widetilde{{\mathcal{G}}}}_{{\rm{opt}}}=\mathop{{\rm{argmin}}}\limits_{{\mathcal{G}}\in {\left({{\mathbb{R}}}^{3}\times {\mathbb{R}}\right)}^{{n}_{{\rm{E}}}}}\widetilde{E}({\mathcal{G}}).$$(33)

In summary, we modified the original fitting problem, formulated in Eq. (27), to get a differentiable version of it in Eq. (33). This allows us to employ fast, gradient-based optimization algorithms, such as in our case, Adam51. The initial guess for the generators is computed using the procedure described in Spettl et al.52, which is based on a global optimization algorithm minimizing a fast, but less accurate objective function. Note that for the discretization of a fitted Laguerre tessellation, we compute the (unique) cell labels using the classical definition in Eq. (28).
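A differentiable objective of this kind can be sketched as follows. This is an illustration only: it assumes that the cross-entropy terms are simply summed over all foreground voxels and all cells (the normalization used in the paper may differ), and the clipping constant `EPS`, the voxels, and the generators are hypothetical.

```python
import math

EPS = 1e-12  # guards against log(0); an implementation detail assumed here


def soft_membership(x, generators):
    """Softmax of the negated power distances (squared distance minus weight)."""
    z = [-(sum((a - b) ** 2 for a, b in zip(x, s)) - w) for s, w in generators]
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def bce(y_soft, y_true):
    """Binary cross-entropy between a soft prediction and a 0/1 label."""
    y_soft = min(max(y_soft, EPS), 1.0 - EPS)
    return -y_true * math.log(y_soft) - (1.0 - y_true) * math.log(1.0 - y_soft)


def soft_objective(voxels, labels, generators):
    """Sum of cross-entropy terms over all voxels and all cells."""
    total = 0.0
    for x, label in zip(voxels, labels):
        probs = soft_membership(x, generators)
        total += sum(bce(p, 1.0 if i == label else 0.0)
                     for i, p in enumerate(probs))
    return total


# Four hypothetical foreground voxels belonging to two grains.
voxels = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (4.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
labels = [0, 0, 1, 1]

good = [((0.5, 0.0, 0.0), 0.0), ((4.5, 0.0, 0.0), 0.0)]  # seeds match the grains
bad = [((4.5, 0.0, 0.0), 0.0), ((0.5, 0.0, 0.0), 0.0)]   # seeds swapped

loss_good = soft_objective(voxels, labels, good)
loss_bad = soft_objective(voxels, labels, bad)
```

Generators that reproduce the ground-truth labels give a loss close to zero, whereas the swapped configuration is heavily penalized; a gradient-based optimizer such as Adam would move the generators toward the former.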

Once a sequence of generators \({\widetilde{{\mathcal{G}}}}_{{\rm{opt}}}={\{{\widetilde{{{\bf{g}}}_{}}}_{i}^{{\rm{opt}}}\}}_{i = 1}^{{n}_{{\mathcal{T}}}}\) with \({\widetilde{{{\bf{g}}}_{}}}_{i}^{{\rm{opt}}}=({{\bf{s}}}_{i},{w}_{i})\) fitted to the experimental FIB-EBSD data is obtained by Eq. (33), it acts as ground truth for the stochastic modeling of the 3D grain architecture using random Laguerre tessellations. In this way, a random subdivision of the sampling window \({\mathcal{W}}\subset {{\mathbb{R}}}^{3}\) into a sequence of random Laguerre cells \({\{{C}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\) is obtained, where \({N}_{{\mathcal{T}}}^{{\rm{M}}}\) denotes the random number of cells in \({\mathcal{W}}\). More precisely, an appropriately chosen set of random vectors \({\{{S}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\), a so-called point process23, is used for stochastic modeling of the sequence of seed points \({\{{{\bf{s}}}_{i}\}}_{i = 1}^{{n}_{{\mathcal{T}}}}\). The additive weights \({\{{w}_{i}\}}_{i = 1}^{{n}_{{\mathcal{T}}}}\) are modeled by (conditionally independent) real-valued random variables \({W}_{1}^{{\rm{M}}},\ldots ,{W}_{{N}_{{\mathcal{T}}}^{{\rm{M}}}}^{{\rm{M}}}\), which are characterized by a univariate probability distribution conditional on the seed points.

To establish a suitable parametric model for the sequence of random seed points \({\{{S}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\), we employ so-called Matérn cluster processes23 to describe the spatial clustering of seed points extracted from experimental FIB-EBSD data. Matérn cluster processes are comprised of two modeling components: First, points are drawn from a certain parent point process, which play the role of cluster centers. Then, an individual cluster of descendant points is created by a different (independent) point process at each of the previously generated locations of parent points. The parent points themselves are disregarded for the final point pattern. More specifically, a homogeneous Poisson point process with intensity λM > 0 is used to draw the cluster centers. For each cluster, the number of descendant points is drawn from a Poisson distribution with rate parameter μM > 0, and the descendant points are uniformly distributed in a sphere with radius rM > 0 surrounding the cluster center. Thus, the sequence of random seed points \({\{{S}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\) is characterized by three model parameters: λM, μM, and rM.
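The two-stage construction can be sketched with the standard library alone. The cubic window, its size, and the margin-based edge treatment are assumptions made for illustration; the parameter values are those reported in the model calibration section (in voxel units).

```python
import random


def poisson(rng, mean):
    """Poisson variate: number of unit-rate exponential arrivals in [0, mean]."""
    count, t = 0, rng.expovariate(1.0)
    while t <= mean:
        count += 1
        t += rng.expovariate(1.0)
    return count


def uniform_in_ball(rng, center, radius):
    """Uniform point in a 3D ball via rejection sampling from the bounding cube."""
    while True:
        offset = [rng.uniform(-radius, radius) for _ in range(3)]
        if sum(c * c for c in offset) <= radius * radius:
            return tuple(c + o for c, o in zip(center, offset))


def matern_cluster(rng, lam, mu, radius, window):
    """Simulate seed points in the box [0, window]^3.

    Parents are also drawn in a margin of width `radius` around the box so
    that clusters centred just outside the window still contribute.
    """
    lo, hi = -radius, window + radius
    n_parents = poisson(rng, lam * (hi - lo) ** 3)
    points = []
    for _ in range(n_parents):
        parent = tuple(rng.uniform(lo, hi) for _ in range(3))
        for _ in range(poisson(rng, mu)):
            p = uniform_in_ball(rng, parent, radius)
            if all(0.0 <= c <= window for c in p):
                points.append(p)  # only descendants are kept, parents are discarded
    return points


rng = random.Random(0)
# window=100.0 is a hypothetical cube edge length in voxels
seeds = matern_cluster(rng, lam=0.00108, mu=0.418, radius=2.58, window=100.0)
```

With these values the expected number of seed points in the window is about 0.00108 × 0.418 × 100³ ≈ 451.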

The intensity of the parent point process λM and the cluster size rM are estimated using the so-called minimum contrast method with respect to the pair correlation function23, a functional characteristic which statistically describes the distances between pairs of seed points, where a peak at a given distance indicates that inter-point distances of that length occur frequently. In this context, the minimum contrast method minimizes the discrepancy (with respect to the L2-norm) between the theoretical pair correlation function of the Matérn cluster process and the one estimated from the sequence of seed points \({\{{{\bf{s}}}_{i}\}}_{i = 1}^{{n}_{{\mathcal{T}}}}\). Finally, the third model parameter μM can be estimated in the following way. Since a closed formula for the overall intensity of Matérn cluster processes is known23, it can be set equal to \({n}_{{\mathcal{T}}}/| {\mathcal{W}}|\), i.e., the number of seed points of the ground truth divided by the volume of the sampling window \(| {\mathcal{W}}|\). The resulting equation can then be solved for the mean number of points in each cluster μM.
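The last step can be written out explicitly. The following is a sketch, assuming the standard result that a Matérn cluster process with parent intensity λM and Poisson-distributed cluster sizes of mean μM has overall intensity λM μM; the hat notation for estimates is introduced here for illustration only:

$${\lambda }^{{\rm{M}}}\,{\mu }^{{\rm{M}}}=\frac{{n}_{{\mathcal{T}}}}{| {\mathcal{W}}| }\quad \Longrightarrow \quad {\widehat{\mu }}^{{\rm{M}}}=\frac{{n}_{{\mathcal{T}}}}{{\widehat{\lambda }}^{{\rm{M}}}\,| {\mathcal{W}}| }.$$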

The next step is to model the additive weights \({\{{w}_{i}\}}_{i = 1}^{{n}_{{\mathcal{T}}}}\) of the Laguerre tessellation fitted in Eq. (33). The easiest way to do this would be to estimate the probability distribution of additive weights in the fitted Laguerre tessellation and to independently sample from that distribution when the stochastic grain architecture model is simulated. However, it turns out that this approach is too simple and does not capture the interdependencies of the fitted generators well enough. We therefore choose a different approach: For each random seed point \({S}_{i}^{{\rm{M}}}\), we draw the corresponding additive weight \({W}_{i}^{{\rm{M}}}\) conditionally on the random distance \({D}_{i}^{{\rm{M}}}\) to its nearest neighboring seed point. By doing so, it is possible to give seed points which are grouped together in a spatial cluster different weights than those points that are isolated. This leaves us with the question of how to model the joint probability distribution of the additive weights and the nearest neighbor distances, from which the conditional probability distribution can be computed directly. One possible answer can be given by means of copulas53.

A two-dimensional copula \(C:{[0,1]}^{2}\to [0,1]\) is a cumulative distribution function with [0, 1]-uniformly distributed marginal distributions. Sklar’s theorem53 states that for any two (real-valued) random variables X1 and X2 with the cumulative distribution functions \({F}_{{X}_{1}}\) and \({F}_{{X}_{2}}\) defined by \({F}_{{X}_{1}}({x}_{1})={\mathbb{P}}({X}_{1}\le {x}_{1})\) and \({F}_{{X}_{2}}({x}_{2})={\mathbb{P}}({X}_{2}\le {x}_{2})\) for all \({x}_{1},{x}_{2}\in {\mathbb{R}}\), respectively, there is a copula C such that their joint cumulative distribution function \({F}_{({X}_{1},{X}_{2})}\), defined by \({F}_{({X}_{1},{X}_{2})}({x}_{1},{x}_{2})={\mathbb{P}}({X}_{1}\le {x}_{1},{X}_{2}\le {x}_{2})\) for all \({x}_{1},{x}_{2}\in {\mathbb{R}}\), can be written as

$${F}_{({X}_{1},{X}_{2})}({x}_{1},{x}_{2})=C\left({F}_{{X}_{1}}({x}_{1}),{F}_{{X}_{2}}({x}_{2})\right).$$(34)

In practice, it is often beneficial to consider probability densities instead of cumulative distribution functions. So assuming that C, \({F}_{{X}_{1}}\), and \({F}_{{X}_{2}}\) are differentiable, by applying the chain rule to Eq. (34) the joint probability density \({f}_{({X}_{1},{X}_{2})}\) of (X1, X2) can be obtained as

$${f}_{({X}_{1},{X}_{2})}({x}_{1},{x}_{2})=c\left({F}_{{X}_{1}}({x}_{1}),{F}_{{X}_{2}}({x}_{2})\right)\,{f}_{{X}_{1}}({x}_{1})\,{f}_{{X}_{2}}({x}_{2})$$(35)

with \({f}_{{X}_{1}}\) and \({f}_{{X}_{2}}\) the probability densities of X1 and X2, respectively, and c the probability density of C, i.e.,

$$c({u}_{1},{u}_{2})=\frac{{\partial }^{2}}{\partial {u}_{1}\,\partial {u}_{2}}C({u}_{1},{u}_{2}).$$(36)

The conditional probability density \({f}_{({X}_{1}| {X}_{2})}\)(⋅∣x2) of X1 provided that X2 = x2 for some \({x}_{2}\in {\mathbb{R}}\) is then given by

$${f}_{({X}_{1}| {X}_{2})}({x}_{1}| {x}_{2})=c\left({F}_{{X}_{1}}({x}_{1}),{F}_{{X}_{2}}({x}_{2})\right)\,{f}_{{X}_{1}}({x}_{1}),$$(37)

see, e.g., Aas et al.54.
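The copula-based conditional density can be checked numerically on a toy example. The sketch below deliberately swaps in the Farlie–Gumbel–Morgenstern copula and unit-rate exponential marginals because their densities are simple closed forms; neither is the model used in this work, and all names and parameter values are hypothetical.

```python
import math


def fgm_copula_density(u, v, theta):
    """Density of the Farlie-Gumbel-Morgenstern copula, valid for |theta| <= 1."""
    return 1.0 + theta * (1.0 - 2.0 * u) * (1.0 - 2.0 * v)


def exp_cdf(x):
    """CDF of the unit-rate exponential distribution."""
    return 1.0 - math.exp(-x) if x > 0.0 else 0.0


def exp_pdf(x):
    """Density of the unit-rate exponential distribution."""
    return math.exp(-x) if x > 0.0 else 0.0


def conditional_density(x1, x2, theta):
    """f(x1 | x2) = c(F1(x1), F2(x2)) * f1(x1)."""
    return fgm_copula_density(exp_cdf(x1), exp_cdf(x2), theta) * exp_pdf(x1)


# Midpoint rule on [0, 40]: a valid conditional density integrates to one.
step = 0.001
mass = sum(conditional_density((k + 0.5) * step, 0.3, 0.8) * step
           for k in range(40000))
```

The same structure carries over to other parametric copulas and marginals: only the three ingredient functions need to be exchanged.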

In our case, we consider the nearest neighbor distance D0 of the typical seed point—a characteristic that describes the Euclidean distance from a seed point, selected at random, to its nearest neighbor among the remaining seed points23. Furthermore, we consider the additive weight W0 of the typical seed point. Thus, X1 corresponds to the weight W0 of the typical seed point, and X2 to its nearest neighbor distance D0. This allows us to model the univariate marginal distributions of the random vector (W0, D0) with parametric distributions first, and then the dependence structure of (W0, D0) using (parametric) copulas. This drastically facilitates the modeling of higher-dimensional random vectors compared to deriving their joint distributions directly.

Motivated by the statistical analysis performed below regarding model validation, for the sequence of generators \({\widetilde{{\mathcal{G}}}}_{{\rm{opt}}}={\{{\widetilde{{{\bf{g}}}_{}}}_{i}^{{\rm{opt}}}\}}_{i = 1}^{{n}_{{\mathcal{T}}}}\) with \({\widetilde{{{\bf{g}}}_{}}}_{i}^{{\rm{opt}}}=({{\bf{s}}}_{i},{w}_{i})\) fitted in Eq. (33) to the experimental FIB-EBSD data, we assume that (i) the random additive weights \({W}_{1}^{{\rm{M}}},\ldots ,{W}_{{N}_{{\mathcal{T}}}^{{\rm{M}}}}^{{\rm{M}}}\) of the grain architecture model are conditionally independent provided that the sequence of random seed points \({\{{S}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\) is given, (ii) the random additive weight \({W}_{i}^{{\rm{M}}}\) of the ith seed point depends on the random point pattern \({\{{S}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\) only via the random distance \({D}_{i}^{{\rm{M}}}\) of S\({}_{i}^{{\rm{M}}}\) to its nearest neighboring seed point, (iii) the probability density of the two-dimensional random vector (W0, D0) of additive weight and nearest neighbor distance of the typical seed point is parametrically modeled as follows, using the representation formula given in Eq. (35). In particular, we assume that the first component W0 can be modeled by a (shifted) inverse-gamma distribution. That is, its density \({f}_{{W}_{0}}\) is given by

with shape parameter αM > 0, scale parameter βM > 0 and location parameter \({\theta }^{{\rm{M}}}\in {\mathbb{R}}\). Here, Γ denotes the gamma function. The name of this distribution originates from the fact that the reciprocal of a gamma-distributed random variable is inverse-gamma-distributed (and vice versa). Furthermore, we use the fact that for the cumulative distribution function \({F}_{{D}_{0}}\) of the nearest neighbor distance D0 of the typical seed point from a Matérn cluster process the following formula holds55:

where V(d, rM, x) is the volume of the intersection of two balls with radii d and rM such that the distance between their centers is equal to x, and \(F:{\mathbb{R}}\to [0,1]\) is the cumulative distribution function of the so-called contact distance, which is given by

For modeling the joint distribution of the random vector (W0, D0) we employ a special case of the Tawn copula56, whose asymmetric behavior matches that observed in the data. The general definition of this copula is given by

with the parameters \({\psi }_{1}^{{\rm{M}}},{\psi }_{2}^{{\rm{M}}}\in [0,1]\) and κM > 1. In our case, we set \({\psi }_{1}^{{\rm{M}}}=1\).

To estimate the parameters \({\alpha }^{{\rm{M}}},{\beta }^{{\rm{M}}},{\theta }^{{\rm{M}}},{\psi }_{2}^{{\rm{M}}}\), and κM of the joint distribution of (W0, D0), we use the representation formula of the conditional probability density \({f}_{({W}_{0}| {D}_{0})}\) given in (37), i.e.,

maximizing the likelihood function

where \({\{{d}_{i}\}}_{i = 1}^{{n}_{{\mathcal{T}}}}\) is the sequence of nearest neighbor distances corresponding to the sequence of seed points \({\{{{\bf{s}}}_{i}\}}_{i = 1}^{{n}_{{\mathcal{T}}}}\).

In summary, the simulation of the 3D grain architecture is comprised of the following steps: First, the Matérn cluster process is simulated in the sampling window \({\mathcal{W}}\subset {{\mathbb{R}}}^{3}\) to get a realization \({\{{{\bf{s}}}_{i}^{{\rm{M}}}\}}_{i = 1}^{{n}_{{\mathcal{T}}}^{{\rm{M}}}}\) of the random Laguerre seed points \({\{{S}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\). Then, for each \({{\bf{s}}}_{i}^{{\rm{M}}}\), the distance \({d}_{i}^{{\rm{M}}}\) to its nearest neighbor within the point pattern \({\{{{\bf{s}}}_{i}^{{\rm{M}}}\}}_{i = 1}^{{n}_{{\mathcal{T}}}^{{\rm{M}}}}\) is computed and, conditional on \({d}_{i}^{{\rm{M}}}\), the corresponding additive weight \({w}_{i}^{{\rm{M}}}\) is drawn using the representation formula given in Eq. (43). With the set of generators \(\{({{\bf{s}}}_{1}^{{\rm{M}}},{w}_{1}^{{\rm{M}}}),\ldots ,({{\bf{s}}}_{{n}_{{\mathcal{T}}}^{{\rm{M}}}}^{{\rm{M}}},{w}_{{n}_{{\mathcal{T}}}^{{\rm{M}}}}^{{\rm{M}}})\}\), the final Laguerre tessellation can be discretized using Eq. (28) to obtain a realization \({\{{c}_{i}^{{\rm{M}}}\}}_{i = 1}^{{n}_{{\mathcal{T}}}^{{\rm{M}}}}\) of the stochastic grain architecture model \({\{{C}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\).
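The second and third steps can be sketched as follows. The conditional sampler `draw_weight` is a hypothetical placeholder for drawing from the fitted conditional weight distribution, which is not implemented here, and the seed points are toy values.

```python
import math


def nearest_neighbor_distances(points):
    """Brute-force O(n^2) nearest-neighbour distances (requires at least two points)."""
    return [min(math.dist(p, q) for j, q in enumerate(points) if j != i)
            for i, p in enumerate(points)]


def simulate_generators(seeds, draw_weight):
    """Attach a weight to every seed, drawn conditionally on its nearest-neighbour distance."""
    distances = nearest_neighbor_distances(seeds)
    return [(s, draw_weight(d)) for s, d in zip(seeds, distances)]


seeds = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]

# Placeholder sampler for illustration only: it returns the distance itself.
generators = simulate_generators(seeds, draw_weight=lambda d: d)
```

For point patterns with many thousands of seeds, a spatial index such as a k-d tree would be the more efficient choice for the neighbor search.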

Stochastic multi-scale model for the outer particle shell and grain architecture

In this section, we describe our approach to combine the outer shell model {X(u) : u \(\in\) S2} and the grain architecture model \({\{{C}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\), introduced in the previous sections, resulting in a multi-scale model for the outer shell and grain architecture of NMC particles, see Fig. 5. Note that the outer shell model {X(u) : u \(\in\) S2} is fitted to resemble the shape and size of NMC particles observed in nano-CT data, while the parameters of the random Laguerre tessellation \({\{{C}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\) are attuned to statistically represent the grain architecture of a single particle which was partially imaged with FIB-EBSD. Since the grain architecture of NMC particles might depend on their size, in the following we will consider a conditional version of the fitted outer shell model {X(u) : u \(\in\) S2} to only generate outer shells with a similar size as the NMC particle imaged using FIB-EBSD. Since the latter was only partially imaged, its true size is unknown. Therefore, in a first step, we describe an approach to estimate the particle size.

We start by computing a binary image \(B:{{\mathbb{Z}}}^{3}\to \{0,1\}\) depicting the (partially visible) outer shell of the NMC particle imaged with FIB-EBSD by

Then we fit the parameters of a sphere, i.e., the center \({{\bf{x}}}_{{\rm{c}}}\in {{\mathbb{R}}}^{3}\) and the diameter d > 0, to the outer shell image B by minimizing the discrepancy

where \({p}_{{{\bf{x}}}_{{\rm{c}}},d}({\bf{x}})\) denotes the point on a sphere with center xc and diameter d which is closest to x. Then, the fitted sphere’s diameter dopt = 7.44 μm is an estimate for the size of the particle which was partially imaged with FIB-EBSD. Using this size estimate, we generate virtual multi-scale particle morphologies according to the following scheme:

(i) For some m > 0 and pairwise distinct orientation vectors u1, …, um \(\in\) S2, generate realizations \({{\bf{r}}}^{{\rm{sim}}}\) of the random vector (X(u1), …, X(um)) until the volume-equivalent diameter \(d({{\bf{r}}}^{{\rm{sim}}})\), given by

$$d({{\bf{r}}}^{{\rm{sim}}})=\sqrt[3]{\frac{6V({{\bf{r}}}^{{\rm{sim}}})}{\pi }},$$(47)

belongs to the interval [0.98 dopt, 1.02 dopt], see Fig. 5a. Details on the computation of the volume \(V({{\bf{r}}}^{{\rm{sim}}})\) are given below.

(ii) Generate a discretized realization \({\{{c}_{i}^{{\rm{M}}}\}}_{i = 1}^{{n}_{{\mathcal{T}}}^{{\rm{M}}}}\) of the stochastic grain architecture model \({\{{C}_{i}^{{\rm{M}}}\}}_{i = 1}^{{N}_{{\mathcal{T}}}^{{\rm{M}}}}\), see Fig. 5b.

(iii) Keep only those cells \({c}_{i}^{{\rm{M}}}\) of the Laguerre tessellation \({\{{c}_{i}^{{\rm{M}}}\}}_{i = 1}^{{n}_{{\mathcal{T}}}^{{\rm{M}}}}\) for which the centroids, see Eq. (5), are located inside the outer shell defined by \({{\bf{r}}}^{{\rm{sim}}}\). We denote these cells by \({c}_{1}^{{\rm{I}}},\ldots ,{c}_{{n}_{{\rm{I}}}}^{{\rm{I}}}\).

(iv) The discretized multi-scale particle morphology \({P}^{{\rm{M}}}:{{\mathbb{Z}}}^{3}\to {\mathbb{R}}\) is then given by

$${P}^{{\rm{M}}}({\bf{x}})=\left\{\begin{array}{ll}i,&\,{\text{if}}\, {\bf{x}}\ \,{\text{belongs}}\, {\text{to}}\,\ {c}_{i}^{{\rm{I}}}\ \,{\text{for}}\, {\text{some}}\, i \in \{1, \ldots ,{n}_{{\rm{I}}}\},\\ 0,&\,{\text{else}}.\end{array}\right.$$(48)

Figure 5c visualizes a realization of the multi-scale particle model. Note that due to this construction scheme the outer shell of a realization PM differs from the outer shell which was generated in step (i). More precisely, this approach introduces surface roughness which similarly can be observed in FIB-EBSD data21.
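Steps (i) and (iii) of the scheme can be sketched as follows. The callables `sample_shell`, `shell_volume`, and `is_inside` are hypothetical stand-ins for the outer shell model, its volume estimate, and the inside test; in the toy usage a shell is simply a sphere described by its radius.

```python
import math
import random


def volume_equivalent_diameter(volume):
    """Diameter of the sphere that has the given volume."""
    return (6.0 * volume / math.pi) ** (1.0 / 3.0)


def draw_shell_with_size(sample_shell, shell_volume, d_target, tol=0.02,
                         max_tries=100000):
    """Step (i): redraw until d lies in [(1 - tol) d_target, (1 + tol) d_target]."""
    for _ in range(max_tries):
        shell = sample_shell()
        d = volume_equivalent_diameter(shell_volume(shell))
        if (1.0 - tol) * d_target <= d <= (1.0 + tol) * d_target:
            return shell
    raise RuntimeError("no shell of the requested size was drawn")


def keep_cells_inside(centroids, is_inside):
    """Step (iii): indices of cells whose centroid lies inside the outer shell."""
    return [i for i, c in enumerate(centroids) if is_inside(c)]


rng = random.Random(0)
D_OPT = 7.44  # fitted sphere diameter reported in the text (micrometres)

# Toy stand-in: shells are spheres with a uniformly distributed radius.
radius = draw_shell_with_size(
    sample_shell=lambda: rng.uniform(2.0, 5.0),
    shell_volume=lambda r: 4.0 / 3.0 * math.pi * r ** 3,
    d_target=D_OPT,
)

kept = keep_cells_inside(
    centroids=[(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)],
    is_inside=lambda c: math.sqrt(sum(v * v for v in c)) <= radius,
)
```

Selecting cells by their centroids rather than clipping them at the shell is what produces the rough surface mentioned above: cells near the boundary are kept or dropped as a whole.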

Model calibration

In this section, we describe the procedure for calibrating the model parameters of the stochastic geometry models for the outer shell and the grain architecture. The stochastic outer shell model was fitted using the Adam stochastic gradient-descent method51. First, we manually tuned the truncation parameter L in the series expansion given in Eq. (11) and the number K of Gaussian components, putting L = 15 and K = 7. For fitting the remaining model parameters, we chose m = 258 orientation vectors u1, …, um \(\in\) S2 which provide a triangular mesh on the unit sphere S2 57. The fitted model parameters p1, …, pK, μ(1), …, μ(K), a(1), …, a(K) are given in Table 1. Note that even though the model parameters of {X(u) : u \(\in\) S2} were fitted using m = 258 evaluation points, the realizations of the model can be evaluated at arbitrarily many sampling points.

The parameters of the grain architecture model were fitted using the procedure described above. With this, we get the following parameter values for the Matérn cluster process of seed points: λM = 0.00108, μM = 0.418, and rM = 2.58. Using the aforementioned maximum likelihood approach results in parameters for the distribution of the additive weights αM = 16.8, βM = 1247, θM = − 76.4, and for the copula κM = 1.25, \({\psi }_{2}^{{\rm{M}}}=0.372\). Note that the model calibration was performed based on the FIB-EBSD image data and uses its voxel length scale.
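To give a feeling for the fitted weight distribution, the following sketch evaluates and samples a shifted inverse-gamma distribution with the parameter values above. It assumes the standard parametrization, in which θM plus the reciprocal of a gamma-distributed variable scaled by βM is inverse-gamma distributed, and it samples from the marginal distribution only, not conditionally on nearest neighbor distances as in the full model. Function names are hypothetical.

```python
import math
import random

# Fitted values reported in the text (voxel units of the FIB-EBSD data).
ALPHA, BETA, THETA = 16.8, 1247.0, -76.4


def shifted_inverse_gamma_pdf(w, alpha=ALPHA, beta=BETA, theta=THETA):
    """Density of theta + Y with Y inverse-gamma(alpha, beta); zero for w <= theta."""
    y = w - theta
    if y <= 0.0:
        return 0.0
    log_pdf = (alpha * math.log(beta) - math.lgamma(alpha)
               - (alpha + 1.0) * math.log(y) - beta / y)
    return math.exp(log_pdf)


def sample_weight(rng, alpha=ALPHA, beta=BETA, theta=THETA):
    """Draw theta + beta / G with G ~ Gamma(shape=alpha, scale=1)."""
    return theta + beta / rng.gammavariate(alpha, 1.0)


rng = random.Random(1)
samples = [sample_weight(rng) for _ in range(20000)]
sample_mean = sum(samples) / len(samples)

# Mean of the shifted inverse-gamma distribution (finite for alpha > 1).
theoretical_mean = THETA + BETA / (ALPHA - 1.0)
```

Under this parametrization the weights are bounded below by θM = −76.4, and the empirical mean of the draws should lie close to `theoretical_mean`.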

In order to evaluate the goodness of fit of the grain architecture model with respect to the Laguerre tessellation fitted to FIB-EBSD data, we first consider various characteristics of the Laguerre generators which have been used for model fitting. The goodness of fit with respect to further characteristics, not used for model fitting, will be analyzed in the next section.

In Fig. 6a, the pair correlation functions of the seed points of the fitted Laguerre tessellation and those of a realization of the stochastic grain architecture model are shown. In addition, the theoretical pair correlation function of the fitted Matérn cluster process is depicted in Fig. 6a. It becomes clear that the pattern of seed points of the fitted Laguerre tessellation is far from the complete spatial randomness assumption (indicated as dashed line in Fig. 6a at the y-axis value 1). In fact, the high values of the pair correlation function for small distances indicate that the seed points are spatially clustered. The fitted Matérn cluster process captures this behavior quite well.

Fig. 6: Comparison of characteristics of the Laguerre tessellation fitted to FIB-EBSD data and the stochastic grain architecture model. Pair correlation function of seed points (a), probability densities of nearest neighbor distances (b) and additive weights (c), and two-dimensional (joint) density of additive weights and nearest neighbor distances of seed points (d). All distances are given in terms of the voxel length scale of FIB-EBSD data.

When considering the probability densities of the nearest neighbor distances in Fig. 6b, it is important to keep in mind that the theoretical one is fully specified by the Matérn cluster process and its parameters. While the theoretical density follows the general shape of the density, which has been estimated for the nearest neighbor distances of the seed points fitted to FIB-EBSD data (i.e., both densities are concentrated in roughly the same interval and the modes are at similar positions), the finer details are not fully captured. On the other hand, the (small) differences between the theoretical density and the density estimated for the simulated nearest neighbor distances are due to the finite sample size and the smoothing effect of the kernel density estimator.

The densities estimated for the additive weights of the Laguerre generators fitted to FIB-EBSD data and the simulated weights shown in Fig. 6c indicate a good fit. The small discrepancies can be explained by the fact that the latter are not drawn directly from the marginal distribution, but conditionally on the nearest neighbor distances of the corresponding seed points.

Finally, by considering a bivariate kernel density estimator for the joint density of nearest neighbor distances and additive weights, see Fig. 6d, a generally good correspondence can be observed. Most of the slight mismatches can be explained by the differences mentioned above between the theoretical and estimated densities of the nearest neighbor distances, cf. Fig. 6b.

Model validation

Having fitted the models for the outer shell and the grain architecture to the experimental data, model validation is performed by comparing probability distributions of size and shape characteristics of particles and grains extracted from tomographic image data to those of virtual particles and grains generated by the stochastic models. Note that these characteristics have not been used for parameter fitting.

For a quantitative validation of the fitted outer shell model {X(u) : u \(\in\) S2}, we first generate n discretized realizations, i.e., we independently draw n = 239 realizations \({{\bf{r}}}_{1}^{{\rm{sim}}},\ldots ,{{\bf{r}}}_{n}^{{\rm{sim}}}\) of the random vector \(\left(X({{\bf{u}}}_{1}),\ldots ,X({{\bf{u}}}_{m})\right)\). Then, we compare size and shape characteristics of the corresponding particles to those extracted from CT data. More precisely, for each outer particle shell, represented by an evaluation vector \({{\bf{r}}}^{{\rm{P}}}=({r}_{1}^{{\rm{P}}},\ldots ,{r}_{m}^{{\rm{P}}})\in {{\mathbb{R}}}^{m}\), we compute the volume-equivalent diameter d(rP) given by

$$d({{\bf{r}}}^{{\rm{P}}})=\sqrt[3]{\frac{6V({{\bf{r}}}^{{\rm{P}}})}{\pi }},$$(49)

where V(rP) denotes the volume of a particle corresponding to the evaluation vector rP. Since each entry \({r}_{i}^{{\rm{P}}}\) of the latter represents the radius of the corresponding particle in the direction of the orientation vector ui, it is impossible to determine the true volume of the particle based on a finite number of directional radii \({r}_{1}^{{\rm{P}}},\ldots ,{r}_{m}^{{\rm{P}}}\). Therefore, we now specify the procedure for computing an estimate V(rP) of the particle’s true volume.

Recall that the orientation vectors u1, …, um chosen in the previous section form a triangular mesh of the unit sphere. More precisely, the surface of the unit sphere is approximated by triangles, the vertices of which are given by certain triplets \(({{\bf{u}}}_{{i}_{1}},{{\bf{u}}}_{{i}_{2}},{{\bf{u}}}_{{i}_{3}})\). We denote this set of triangle vertices by \({\mathcal{V}}\subset {\{{{\bf{u}}}_{1},\ldots ,{{\bf{u}}}_{m}\}}^{3}\). Then, by scaling the vertices in \({\mathcal{V}}\) with the corresponding radii we obtain a triangular mesh \({{\mathcal{V}}}^{{\rm{P}}}\) of the outer particle shell, i.e.,

$${{\mathcal{V}}}^{{\rm{P}}}=\left\{\left({r}_{{i}_{1}}^{{\rm{P}}}{{\bf{u}}}_{{i}_{1}},{r}_{{i}_{2}}^{{\rm{P}}}{{\bf{u}}}_{{i}_{2}},{r}_{{i}_{3}}^{{\rm{P}}}{{\bf{u}}}_{{i}_{3}}\right):({{\bf{u}}}_{{i}_{1}},{{\bf{u}}}_{{i}_{2}},{{\bf{u}}}_{{i}_{3}})\in {\mathcal{V}}\right\}.$$(50)

From this representation of a particle we can easily compute an estimate for its volume by

$$V({{\bf{r}}}^{{\rm{P}}})=\sum _{({{\bf{v}}}_{1},{{\bf{v}}}_{2},{{\bf{v}}}_{3})\in {{\mathcal{V}}}^{{\rm{P}}}}{V}^{{\rm{tetra}}}({{\bf{v}}}_{1},{{\bf{v}}}_{2},{{\bf{v}}}_{3},{\bf{o}}),$$(51)

where \({V}^{{\rm{tetra}}}({{\bf{v}}}_{1},{{\bf{v}}}_{2},{{\bf{v}}}_{3},{\bf{o}})\) denotes the volume of a tetrahedron with vertices v1, v2, v3 and the origin \({\bf{o}}=(0,0,0)\in {{\mathbb{R}}}^{3}\). Note that the representation of a particle’s surface as a mesh \({{\mathcal{V}}}^{{\rm{P}}}\) allows for the estimation of its surface area. More precisely, by summing up the areas of the triangles represented by the triplets of vertices in \({{\mathcal{V}}}^{{\rm{P}}}\) we obtain an estimate A(rP) for the surface area of a particle represented by the evaluation vector rP.
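The volume and surface area estimates can be sketched on a deliberately coarse mesh. The example uses a regular octahedron (six directions, eight triangles) instead of the 258-point mesh of the paper; all function names are hypothetical, and taking absolute values of the tetrahedron volumes assumes a particle that is star-shaped with respect to the origin.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def mesh_from_radii(directions, radii, triangles):
    """Scale each unit direction by its radius and collect the triangle vertices."""
    vertices = [tuple(r * c for c in u) for u, r in zip(directions, radii)]
    return [(vertices[i], vertices[j], vertices[k]) for i, j, k in triangles]


def mesh_volume(mesh):
    """Sum of the volumes of the tetrahedra spanned by each triangle and the origin."""
    return sum(abs(dot(v1, cross(v2, v3))) / 6.0 for v1, v2, v3 in mesh)


def mesh_area(mesh):
    """Sum of the triangle areas."""
    return sum(0.5 * math.sqrt(dot(n, n))
               for n in (cross(sub(v2, v1), sub(v3, v1)) for v1, v2, v3 in mesh))


# Octahedron: directions +x, -x, +y, -y, +z, -z and one triangle per octant.
directions = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
triangles = [(i, j, k) for i in (0, 1) for j in (2, 3) for k in (4, 5)]

mesh = mesh_from_radii(directions, [1.0] * 6, triangles)
volume = mesh_volume(mesh)  # regular octahedron with unit radii: 4/3
area = mesh_area(mesh)      # regular octahedron with unit radii: 4 * sqrt(3)
```

Doubling all radii scales the volume by a factor of 8 and the area by a factor of 4, which is a quick sanity check for any mesh-based implementation.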

Inserting the estimate V(rP) of the particle volume given in Eq. (51) into Eq. (49) we computed the volume-equivalent diameters \(d({{\bf{r}}}_{1}^{{\rm{CT}}}),\ldots ,d({{\bf{r}}}_{n}^{{\rm{CT}}})\) and \(d({{\bf{r}}}_{1}^{{\rm{sim}}}),\ldots ,d({{\bf{r}}}_{n}^{{\rm{sim}}})\) of particles observed in CT and of model realizations, respectively. Figure 7a indicates a good fit between kernel density estimates of both samples.

Fig. 7: Probability densities of the volume-equivalent diameter (a) and sphericity (b) of particles observed in CT data (blue) and model realizations (red). Bivariate probability densities of the volume-equivalent diameter and sphericity of particles observed in CT data (c) and model realizations (d). The corresponding sphericity distributions under the condition that d = 7.44 μm (e).

To validate the shape of model realizations, we investigate the sphericity s(rP) of the outer particle shell represented by the evaluation vectors rP, which is given by

$$s({{\bf{r}}}^{{\rm{P}}})=\frac{{\pi }^{1/3}{\left(6V({{\bf{r}}}^{{\rm{P}}})\right)}^{2/3}}{A({{\bf{r}}}^{{\rm{P}}})}.$$(52)

The corresponding kernel density estimates of the sphericity values \(s({{\bf{r}}}_{1}^{{\rm{CT}}}),\ldots ,s({{\bf{r}}}_{n}^{{\rm{CT}}})\) and \(s({{\bf{r}}}_{1}^{{\rm{sim}}}),\ldots ,s({{\bf{r}}}_{n}^{{\rm{sim}}})\) are visualized in Fig. 7b which again indicates a good fit.
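For reference, a minimal sketch of the sphericity computation, assuming the common (Wadell) definition as the ratio of the surface area of the volume-equivalent sphere to the actual surface area; the function name is hypothetical.

```python
import math


def sphericity(volume, area):
    """Surface area of the volume-equivalent sphere divided by the actual surface area."""
    return math.pi ** (1.0 / 3.0) * (6.0 * volume) ** (2.0 / 3.0) / area


# A sphere of radius 2 attains the maximum value of one.
s_sphere = sphericity(4.0 / 3.0 * math.pi * 2.0 ** 3, 4.0 * math.pi * 2.0 ** 2)

# A unit cube is less spherical: (pi / 6)^(1/3), roughly 0.806.
s_cube = sphericity(1.0, 6.0)
```

Values close to 1 therefore indicate nearly spherical particles, while rougher or more elongated shapes give smaller values.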

Moreover, we computed bivariate kernel density estimates for pairs of volume-equivalent diameter and sphericity of outer particle shells. More precisely, the bivariate probability density corresponding to pairs of characteristics \((d({{\bf{r}}}_{1}^{{\rm{CT}}}),s({{\bf{r}}}_{1}^{{\rm{CT}}})), \, \ldots ,(d({{\bf{r}}}_{n}^{{\rm{CT}}}),s({{\bf{r}}}_{n}^{{\rm{CT}}}))\) computed from CT data is visualized in Fig. 7c, whereas the bivariate density computed from the characteristics \((d({{\bf{r}}}_{1}^{{\rm{sim}}}),s({{\bf{r}}}_{1}^{{\rm{sim}}})), \, \ldots ,(d({{\bf{r}}}_{n}^{{\rm{sim}}}),s({{\bf{r}}}_{n}^{{\rm{sim}}}))\) of realizations of the outer shell model is depicted in Fig. 7d. A visual comparison indicates a good match between both bivariate probability densities. The bivariate probability densities indicate that small particles are preferentially more spherical though with a higher variance in sphericity values, while larger particles have a narrower sphericity distribution. Such distributions are material-specific with different trends for other electrode materials as shown in a previous work58.

Recall that for the multi-scale model, we generated solely outer shells from the model {X(u) : u \(\in\) S2} for which the corresponding particles have a volume-equivalent diameter close to dopt = 7.44 μm. To validate the sphericity distribution of particles whose outer shells were generated with this specific volume-equivalent diameter we computed the conditional probability densities of the sphericity from the bivariate probability densities depicted in Fig. 7c, d under the condition that d = 7.44 μm. These conditional probability densities are depicted in Fig. 7e which also indicates a good fit.

Until now, we only compared characteristics of particles extracted from CT data to those of realizations of the outer shell model {X(u) : u \(\in\) S2}. However, these realizations are used for generating realizations PM of the multi-scale model which may result in a modified outer shell of PM since they are given by certain ensembles of grains. More precisely, the outer shell ∂PM of a multi-scale particle PM corresponds to the boundary of the union of its grains, i.e.,

Thus, the outer shell ∂PM does not necessarily coincide with the realization of the outer shell model {X(u) : u \(\in\) S2} which was used for generating PM. Therefore, we performed a further validation by comparing the shape of particles extracted from CT data to the shape of outer shells computed from realizations of the multi-scale model.

To do so, we generated (discretized) realizations \({P}_{1}^{{\rm{M}}},\ldots ,{P}_{n}^{{\rm{M}}}\) of the multi-scale model. For better comparability to the outer shells extracted from CT image data, we first applied the same degree of smoothing to the realizations \({P}_{1}^{{\rm{M}}},\ldots ,{P}_{n}^{{\rm{M}}}\) as was applied to the CT data during the image processing described above. A smoothed realization of PM is visualized in Fig. 8. Then, we computed the sphericity values of the smoothed versions of \({P}_{1}^{{\rm{M}}},\ldots ,{P}_{n}^{{\rm{M}}}\) and the corresponding kernel density estimate, see Fig. 7e, which indicates a slight underestimation of sphericity values for the multi-scale model.

Discretized realization of the outer shell model {X(u): u \(\in\) S2} (a), the corresponding realization of the multi-scale model (b). The discretized outer shell of the realization of the multi-scale model is depicted in (c). In (d) the outer shell depicted in (c) is visualized after applying the same degree of smoothing as for the CT data.

After the validation for the outer shell model, we now consider cell characteristics computed from realizations of the stochastic grain architecture model, which have not been used for model fitting, and compare them to those of the grains in the experimental FIB-EBSD data. These comparisons serve on the one hand as (further) measures for the goodness of fit of the fitted Laguerre tessellation with respect to the (segmented) FIB-EBSD image data, but also as validation for the stochastic model since grain-based characteristics have not been used for model fitting. A more comprehensive analysis of the FIB-EBSD image data was provided by Furat et al.21.

The probability density of volume-equivalent diameters of the fitted Laguerre cells, visualized in Fig. 9a, closely matches that computed from the experimental FIB-EBSD data, whereas the stochastic grain architecture model tends to produce more medium-sized grains and fewer very large grains. A similar behavior can be observed for the densities of grain/cell surface areas, see Fig. 9b. Regarding the sphericity of cells, the densities computed from a realization of the stochastic model and from its ground truth, the fitted Laguerre tessellation, match well, although some slight mismatches to the density computed from the FIB-EBSD image data are visible, see Fig. 9c. Note that for all three characteristics, the corresponding mean values are in close proximity, and most discrepancies come from slightly different skewness and tail behavior of the probability densities. The histogram of the numbers of cell neighbors for the simulated data, visualized in Fig. 9d, is shifted slightly to the right compared to the similarly distributed numbers of cell/grain neighbors in the fitted Laguerre and segmented FIB-EBSD data. In summary, together with a visual inspection of Fig. 4, a very good match between the fitted Laguerre tessellation and the (segmented) experimental FIB-EBSD data can be observed. Furthermore, the stochastic grain architecture model captures all considered structural characteristics reasonably well.

Grain-based characteristics of the segmented FIB-EBSD image data, the fitted Laguerre tessellation and of a realization of the stochastic grain architecture model. Estimated probability densities and mean values (dashed vertical lines) of the volume-equivalent diameter (a), surface area (b), and sphericity (c); and histograms of the number of grain neighbors (d).

Discussion

As shown in the previous section, the proposed multi-scale model for the outer shell and grain architecture of NMC particles represents the particle(s) mapped with CT and FIB-EBSD sufficiently well. However, in principle, modifications and further extensions of the outer shell model and the grain architecture model are possible, although their fitting and validation with respect to tomographic image data might then be computationally more expensive.

Regarding the outer shell model, the following modifications are possible. Recall that according to Eqs. (13) and (15) we have to sample from multivariate normal distributions to generate the values of a realization of the random field {X(u) : u \(\in\) S2} at some orientation vectors u1, …, um \(\in\) S2. However, the dimension of the multivariate normal distributions increases with the number of considered orientation vectors, i.e., when the realization is to be highly resolved, we have to draw from a high-dimensional normal distribution, which can be inefficient. In such cases it can be useful to utilize an alternative representation of Gaussian random fields {Z(u) : u \(\in\) S2}, namely a series expansion with respect to so-called spherical harmonics functions. More precisely, an isotropic Gaussian random field {Z(u) : u \(\in\) S2} on the unit sphere with mean value μ and angular power spectrum a = (a0, a1, …, aL) adheres to the representation

where the \(a_{\ell,k}\) are independent random variables with \({a}_{\ell ,0} \sim {\mathcal{N}}(\mu ,{a}_{\ell })\) for \(\ell\) ≥ 0 and \({\rm{Re}}({a}_{\ell ,k}),{\rm{Im}}({a}_{\ell ,k}) \sim {\mathcal{N}}(0,{a}_{\ell }/2)\) for \(\ell\), k > 0, see29,40. Note that the spherical harmonics functions \({Y}_{\ell ,k}:{S}^{2}\to {\mathbb{C}}\) are given by

where i denotes the imaginary unit and \(P_{\ell,k}\) are the associated Legendre functions59. The representation of {Z(u) : u \(\in\) S2} given in Eq. (54) has the advantage that we can first generate realizations of the random coefficients \(a_{\ell,k}\), the number of which does not depend on the number of evaluation points u1, …, um. Then, we can compute the values of the corresponding realization of the random field {Z(u) : u \(\in\) S2} at arbitrarily many orientation vectors, i.e., we can obtain highly resolved realizations of {Z(u) : u \(\in\) S2} without having to simulate high-dimensional normally distributed random vectors. The spherical harmonics representation given in Eq. (54) can also be utilized for simulating the fitted Gaussian mixture model {X(u) : u \(\in\) S2}. More precisely, we have to randomly pick a component according to the probabilities p1, …, pK introduced in Eq. (14) and then generate realizations of the spherical harmonics coefficients of the corresponding Gaussian model.
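A minimal sketch of this two-stage simulation is given below. Several choices here are assumptions of the sketch rather than statements about Eq. (54): the orthonormal normalization of the spherical harmonics with the Condon-Shortley phase (as implemented by `scipy.special.lpmv`), the treatment of the mean μ as a constant offset added to the field, and the example power spectrum. The function names `sph_harm_basis` and `sample_field` are hypothetical.

```python
import math
import numpy as np
from scipy.special import lpmv

def sph_harm_basis(ell, k, theta, phi):
    """Complex spherical harmonic Y_{ell,k} for k >= 0 (theta: polar angle, phi: azimuth)."""
    norm = math.sqrt((2 * ell + 1) / (4 * math.pi)
                     * math.factorial(ell - k) / math.factorial(ell + k))
    return norm * lpmv(k, ell, np.cos(theta)) * np.exp(1j * k * phi)

def sample_field(power, mu, theta, phi, rng):
    """Draw one realization of a real isotropic Gaussian field at the points (theta, phi)."""
    z = np.full(theta.shape, mu, dtype=float)        # assumption: mean added as an offset
    for ell, a_ell in enumerate(power):
        # The k = 0 coefficient is real with variance a_ell.
        z += rng.normal(0.0, math.sqrt(a_ell)) * sph_harm_basis(ell, 0, theta, phi).real
        for k in range(1, ell + 1):
            # Real and imaginary parts are independent N(0, a_ell / 2).
            coeff = complex(*rng.normal(0.0, math.sqrt(a_ell / 2), size=2))
            # The terms for k and -k are complex conjugates, so their sum is 2 Re(...).
            z += 2.0 * (coeff * sph_harm_basis(ell, k, theta, phi)).real
    return z

rng = np.random.default_rng(0)
m = 2000                                             # number of evaluation points
theta = np.arccos(rng.uniform(-1.0, 1.0, size=m))    # uniformly distributed on the sphere
phi = rng.uniform(0.0, 2.0 * math.pi, size=m)
power = [0.0, 0.5, 0.2, 0.1]                         # hypothetical angular power spectrum
z = sample_field(power, 1.0, theta, phi, rng)
```

Only (L + 1)² real random numbers are drawn per realization, regardless of m, which is the efficiency argument made above.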

The validation of the outer shell model {X(u) : u \(\in\) S2} indicates a good match between distributions of size/shape characteristics of model realizations and particles extracted from CT data, see Fig. 7. However, Fig. 7e indicates that the sphericity distribution of the outer shell of realizations PM of the multi-scale model is slightly underestimated. Therefore, further modifications to the outer shell model could be taken into account, which can ensure an even better match between the sphericity distributions of particles extracted from CT data and model realizations. More precisely, rejection sampling60 could be applied: Let fdata denote the (size-conditioned) probability density of the sphericity values of particles extracted from CT data. Furthermore, let fmodel be the corresponding probability density of some outer shell model {X(u) : u \(\in\) S2}, being, e.g., a Gaussian random field or a mixture of Gaussian random fields. Note that fmodel can be determined by extensive simulation, e.g., by generating a large number of outer shells from which the sphericity distribution can be estimated. For rejection sampling to be applicable the inequality fdata(x) ≤ Mfmodel(x) has to hold for each x \(\in\) [0, 1], where M > 1 is some constant. Then, the rejection sampling is performed as follows:

(i) Generate a realization \({{\bf{r}}}^{{\rm{sim}}}\) of (X(u1), …, X(um)) and a random number u \(\in\) [0, 1] drawn from the uniform distribution on the unit interval.

(ii) If \(u\,<\,\frac{{f}_{{\rm{data}}}(s({{\bf{r}}}^{{\rm{sim}}}))}{M{f}_{{\rm{model}}}(s({{\bf{r}}}^{{\rm{sim}}}))},\) the vector \({{\bf{r}}}^{{\rm{sim}}}\) is accepted as a realization of the rejection sampling scheme, otherwise go back to step (i).
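Steps (i) and (ii) can be sketched in a few lines. The example below is a toy illustration under stated assumptions: the "outer shell" is replaced by a single number in [0, 1], its "sphericity" is the number itself, fmodel is the uniform density, and fdata is a Beta(2, 2) density, for which M = 1.5 satisfies the required inequality. The function `rejection_sample` and all densities are hypothetical placeholders, not the fitted quantities of this work.

```python
import numpy as np

def rejection_sample(draw_shell, sphericity, f_data, f_model, M, rng, max_tries=100_000):
    """Return an accepted realization and the number of candidates that were needed."""
    for tries in range(1, max_tries + 1):
        r_sim = draw_shell(rng)                      # step (i): candidate realization
        u = rng.uniform()                            # step (i): uniform number on [0, 1)
        s = sphericity(r_sim)
        if u < f_data(s) / (M * f_model(s)):         # step (ii): acceptance test
            return r_sim, tries
    raise RuntimeError("no candidate accepted; check that f_data <= M * f_model")

# Toy stand-ins (assumptions, for illustration only).
draw_shell = lambda rng: rng.uniform()               # candidate "shell" is a scalar
sphericity = lambda r: r                             # its "sphericity" is the scalar itself
f_model = lambda x: 1.0                              # uniform density on [0, 1]
f_data = lambda x: 6.0 * x * (1.0 - x)               # Beta(2, 2) density, maximum 1.5
M = 1.5

rng = np.random.default_rng(0)
results = [rejection_sample(draw_shell, sphericity, f_data, f_model, M, rng)
           for _ in range(4000)]
accepted = np.array([r for r, _ in results])
tries = np.array([t for _, t in results])
```

In this toy setting the average number of candidates per accepted realization is close to M, and the accepted values follow the target density fdata.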

Note that the sphericity distribution of outer shells generated by rejection sampling matches fdata perfectly. However, since this modeling approach solely considers the sphericity value, it does not necessarily lead to a good match with distributions of other shape characteristics. In contrast, the model {X(u) : u \(\in\) S2} for the outer shell described in the present paper was not fitted directly to size and shape characteristics of particles extracted from CT data, but to the representation of outer shells as radius functions evaluated on a mesh of the unit sphere’s surface. In other words, the model {X(u) : u \(\in\) S2} was fitted directly to an (approximate) representation of the outer shell which is more exhaustive than the aggregated sphericity value. This limitation of the rejection sampling scheme described above could be remedied by considering multivariate probability densities which incorporate further particle shape characteristics. In this manner, more informative vectors of such characteristics can be used to represent outer shells. However, this can lead to an increase in the value M, which can make the simulation procedure computationally infeasible. This is because, on average, step (i) in the rejection sampling scheme has to be performed M times until a single realization is accepted.

Furthermore, note that due to the choice of the parametric covariance functions (see Eq. 20) for the individual components of the mixture {X(u) : u \(\in\) S2} of Gaussian random fields, the realizations of the outer shell model exhibit smooth surfaces. This can also be seen from the representation of the corresponding isotropic Gaussian random fields given in Eq. (54). However, in the context of the present paper, the smoothness of realizations of the outer shell model is not an issue, since the realizations resemble the particles depicted in CT data reasonably well. For Gaussian random fields on the sphere whose realizations exhibit a rough surface, we refer the reader to30, where various parametric covariance functions are considered to generate fractal surfaces. Alternatively, surface roughness can be introduced by additional subsequent modeling steps, e.g., the outer shell of realizations of the presented multi-scale model exhibits faceted surfaces, see Fig. 8c.

As described at the end of the “Results” section, the general fit of the grain architecture model is reasonably good. Nevertheless, modifications and extensions are also possible for this component of our multi-scale model. Note that most of the aforementioned discrepancies can be explained by the differences between the seed point pattern of the fitted Laguerre generators and that of the simulated data, and the resulting differences in the nearest neighbor distance distributions. An obvious solution is to fit the parameters of the Matérn cluster process by the minimum contrast method with respect to the nearest neighbor distance distribution instead of the pair correlation function. While this improves the quality of fit of the nearest neighbor distance distribution, it degrades the fit of the pair correlation function and accentuates the trend of the simulated cells being too large. Thus, the general fit of the simulated grain architecture would be worse compared to the results obtained by the approach proposed in the present paper.
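For readers unfamiliar with the seed point model, a minimal sketch of simulating a Matérn cluster process in a cubic window is given below. The parameter names and values (`kappa` for the parent intensity, `mu` for the mean number of points per cluster, `R` for the cluster radius) are hypothetical and are not the fitted values of this work. Edge effects are handled by also generating parents in a margin of width `R` around the window.

```python
import numpy as np

def matern_cluster(kappa, mu, R, side, rng):
    """Simulate a 3D Matern cluster process observed in the cube [0, side]^3."""
    lo, hi = -R, side + R                            # extended window for the parents
    n_parents = rng.poisson(kappa * (hi - lo) ** 3)
    parents = rng.uniform(lo, hi, size=(n_parents, 3))
    counts = rng.poisson(mu, size=n_parents)         # Poisson number of points per cluster
    centers = np.repeat(parents, counts, axis=0)
    n = centers.shape[0]
    # Uniform displacement in a ball of radius R: random direction, radius R * U^(1/3).
    direction = rng.normal(size=(n, 3))
    direction /= np.linalg.norm(direction, axis=1, keepdims=True)
    radius = R * rng.uniform(size=(n, 1)) ** (1.0 / 3.0)
    points = centers + radius * direction
    inside = np.all((points >= 0.0) & (points <= side), axis=1)
    return points[inside]                            # the parents themselves are not kept

rng = np.random.default_rng(0)
seeds = matern_cluster(kappa=0.05, mu=8.0, R=1.0, side=10.0, rng=rng)
```

The resulting pattern has intensity `kappa * mu`. Summary statistics such as the pair correlation function or the nearest neighbor distance distribution can then be estimated from `seeds` and compared with those of the fitted Laguerre generators.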

Another way to further improve the fit would be to employ a more sophisticated (seed) point process model. For example, instead of the Matérn cluster process, another type of random point process could be considered, such as a suitably chosen Gibbs point process23, which could better capture both the pair correlation function and the nearest neighbor distances of the fitted Laguerre generators. However, fitting the parameters of Gibbs point processes is computationally much more expensive than fitting the parameters of a Matérn cluster process. In addition, formulas for the nearest neighbor distance distribution of more sophisticated types of point processes (including Gibbs processes) are hard to derive theoretically. Since these formulas are necessary to model the conditional probability density of additive weights, they would have to be approximated, e.g., through Monte Carlo techniques. This would introduce a further source of error and complexity.