Abstract

Uncertainty quantification (UQ) in metal additive manufacturing (AM) has attracted tremendous interest in order to dramatically improve product reliability. Model-based UQ, which relies on the validity of a computational model, has been widely explored as a potential substitute for the time-consuming and expensive UQ solely based on experiments. However, its adoption in the practical AM process requires overcoming two main challenges: (1) the inaccurate knowledge of uncertainty sources and (2) the intrinsic uncertainty associated with the computational model. Here, we propose a data-driven framework to tackle these two challenges by combining high throughput physical/surrogate model simulations and the AM-Bench experimental data from the National Institute of Standards and Technology (NIST). We first construct a surrogate model, based on high throughput physical simulations, for predicting the three-dimensional (3D) melt pool geometry and its uncertainty with respect to AM parameters and uncertainty sources. We then employ a sequential Bayesian calibration method to perform experimental parameter calibration and model correction to significantly improve the validity of the 3D melt pool surrogate model. The application of the calibrated melt pool model to UQ of the porosity level, an important quality factor, of AM parts, demonstrates its potential use in AM quality control. The proposed UQ framework can be generally applicable to different AM processes, representing a significant advance toward physics-based quality control of AM products.

Similar content being viewed by others

Introduction

Additive manufacturing (AM) can offer tremendous time and cost advantages over traditional manufacturing processes especially in fabricating customized components with complex geometry1,2. However, the complicated layer-by-layer manufacturing process makes as-fabricated AM components prone to great variability in both quality and properties3. Consequently, it is extremely challenging to repeat the manufacture of a good-quality product in mass production, as evidenced by the interlaboratory AM tests4,5.

The variability in AM product quality is mostly caused by uncertainty propagation from various uncertainty sources existing in the complex AM process6. Typical uncertainty sources include natural variation in powder absorptivity, fluctuation in temperature boundary, uncertainty in powder particle properties, and many others7,8. To achieve quality control under uncertainty, uncertainty quantification (UQ) is usually employed by quantitatively correlating quality to uncertainty sources and process conditions, followed by process optimization9. This is typically termed as “type I robust design” in mechanical design, i.e., optimizing control factors (process conditions) to reduce quality variability caused by noise factors (uncertainty sources)10.

The melt pool and its geometrical characteristics, as one of the most important process signatures developed during an AM process11,12,13, are intimately associated with various properties of as-fabricated AM parts. The melt pool thus acts as an effective proxy for the overall product quality. A good predictive capability for the melt pool geometry can serve as a basis for the efficient, comprehensive UQ and quality control of an AM product. For example, the elongated melt pool would induce a nearby steep thermal gradient along the vertical building direction. It controls the thermal-gradient dependent grain growth and hence the columnar microstructure development within AM parts14. Such thermal gradients might also dictate the primary dendrite growth and thus the solidification textures at the sub-grain scale15. In addition, it is widely known that the melt pool cross-sectional area, which is typically measured by the pool width and depth, largely determines the porosity formation due to insufficient pool overlapping16. Therefore, the geometry of a melt pool is often used as the quantity of interest (QoI) in the UQ analysis of AM17,18,19.

Model-based AM UQ as shown in Fig. 1 is a much more cost-effective approach than experimental-based UQ, which completely relies on repetitive experiments while metal-based AM experiments are notoriously expensive. In this context, a data-driven surrogate model13,20 based on computational data is strongly preferred (see Fig. 1a), to provide instantaneous knowledge about a QoI, the melt pool in this case, under any AM condition (see Fig. 1b). The computationally much less expensive surrogate model is essential for a successful UQ, which requires a tremendous number of realizations of QoI using a Monte Carlo approach8. However, the current model-based UQ in metal AM still faces an enormous challenge: the model uncertainty of the predictive model6,8. Model uncertainty results in predictive inaccuracy and basically arises from two aspects, namely, (1) our inaccurate knowledge of uncertainty sources, and (2) bias of the predictive model itself. First, there are usually very limited data on uncertainty sources, and thus there is a lack of accurate distributions because their in-process measurements (e.g., fluctuating power absorptivity) are challenging due to the complexity of AM. Second, a computational model always contains a number of assumptions or simplifications compared to true physics. This leads to a discrepancy between the computational model and reality21. In the AM community, a UQ study is essentially the investigation of the variability in QoI originating from physics-based uncertainty sources by using the predictive model. To quantify the uncertainty of QoI in AM systems, one needs to ask one question, that is, can we reduce the model uncertainty to build a more accurate predictive model?

a AM thermal simulations at 300 sampling points are performed to provide a large training dataset of the pool length, width, and depth; b a data-driven melt pool surrogate model is built based on the simulation-obtained data; c experimental data are collected from AM-Bench tests25,30, for uncertainty reduction of melt pool model. The measured fluctuation information of the melt pool, represented by the interval data of pool length, are especially used in the current calibration. These experimental data of three dimensions of the melt pool are obtainable through a close collaboration between in situ monitoring and ex situ measurement; d a sequential Bayesian calibration method (i.e., SeCAV) is adopted to systematically quantify the uncertainty sources by filtering the misleading information contained in experimental data. The model bias of the as-built melt pool surrogate model is also corrected. Details of d can be seen in Fig. 3; e upon validation the calibrated melt pool surrogate model is capable of efficiently predicting three dimensions of the developed melt pool, as well as its fluctuation caused by uncertainty sources; f to illustrate the practical usefulness of the developed melt pool surrogate model, the predicted pool width and depth information can be incorporated into a porosity predictive model, to investigate the uncertainty of porosity formation and thus variability in porosity level among AM parts.

To our best knowledge, a melt pool surrogate model incorporating the full pool dimensions, length (L), width (W), and depth (D), does not exist. There has been a very recent attempt22 to develop the multi-fidelity melt pool surrogate model with the calibration of uncertain parameters by using limited experimental data. However, only experimental point data without measured melt pool fluctuations were used for model calibration, and thus it suffers from the data deficiency. Furthermore, all the experimental data are directly used for calibration without model validity assessment, potentially resulting in the inaccurate uncertainty reduction (UR) during experimental calibration.

A successful data-derived model for the melt pool requires the availability of a sufficient amount of quality data. For a surrogate model derived from physics-based simulations, it requires extensive simulations at sufficiently high resolution to provide reliable training data. This can be a challenge for carrying out computationally intensive three-dimensional simulations and post-processing large-scale high-fidelity data. On the other hand, acquiring three-dimensional experimental data of the melt pool and its fluctuations is difficult, demanding close synergy between in situ and ex situ measurements (see Fig. 1c). Finally, a successful melt pool surrogate model should also be able to make the best use of available data in the open data source.

In this study, we propose a sequential calibration and validation (SeCAV) method23 with model bias correction to reduce the uncertainty of the data-driven melt pool model (see Fig. 1d). It allows us to make efficient predictions on the 3D fluctuating melt pool geometry under arbitrary processing conditions (see Fig. 1e). We take advantage of extensive simulation-obtained data through high-throughput AM simulations9,24 and high-quality experiment data from the AM-Bench project at NIST25. It should be emphasized that while existing AM UQ analyses are mostly limited to the melt pool geometry, we integrate the calibrated melt pool surrogate model with a probabilistic porosity model (see Fig. 1f), to facilitate the UQ analysis of practical product properties.

Results

Terminologies for uncertainties in AM

To facilitate the discussions, we first explain the terminologies for UQ. We then present the data-driven melt pool model (Fig. 1b) based on the computational data (Fig. 1a) followed by the introduction of the UR for the initial melt pool model (Fig. 1d) by calibration of uncertainty source parameters and correction of model bias using the experimental data obtained in Fig. 1c. Finally, the UQ analysis of the porosity level is carried out with the aid of the calibrated melt pool model as shown in Fig. 1e, f.

Uncertainties are typically classified into two categories: aleatory and epistemic6. For example, for the uncertainty on the fluctuating power absorptivity, η, the power absorptivity, as well as other uncertainty sources, are both aleatory and epistemic. The aleatory uncertainty of the power absorptivity refers to the natural variability in the power absorptivity, and thus the uncertainty source parameter, η, is mathematically a distribution rather than a point value. On the other hand, the epistemic uncertainties are associated with artifacts by human and can be reduced. Therefore, we have limited knowledge of the accurate distribution of η due to the lack of a sufficient number of direct and repetitive measurements of power absorptivity. Model bias is also a type of epistemic uncertainty since it arises from a computational model not capturing all the physics involved and can thus be reduced through additional model correction.

The main objective of the UQ study in AM is to reduce epistemic uncertainty towards reliable quantification of the aleatory uncertainty and to determine how aleatory uncertainties from different sources propagate to the aleatory-type variabilities in QoI like the melt pool geometry or final product properties.

Melt pool surrogate model

As described in Fig. 1, we first perform 300 AM simulations at random training points by the Latin hypercube sampling method26. We then construct three initial melt pool surrogate models for the melt pool length, width, and depth, respectively. We conduct tenfold cross-validation to test the effectiveness of the surrogate models in capturing the relationship between the melt pool dimensions and the multiple inputs for the processing parameters. We split all the training data into ten groups with one group employed as test data and the other nine groups as training data for each cross-validation test. We adopt the Gaussian process (GP) model27, one of the most widely used and tested machine learning techniques, for surrogate modeling with the details of GP presented in Supplementary Note 3.

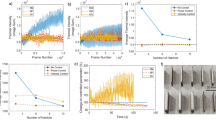

The cross-validation results in Fig. 2 show a very minor deviation of all points from the reference line of y = x, implying a high prediction accuracy of the surrogate model. The average relative errors for the melt pool length/width/depth prediction are, respectively, 5.74%, 3.26%, and 10.08%, giving us confidence that the computationally intensive AM simulations can be replaced by the constructed melt pool surrogate models.

Model UR

The as-built melt pool surrogate models require model UR, i.e., the calibration of the uncertainty source parameters and the correction of model bias. We employ ys(x, θ) and ye(x) to represent the AM simulation-derived surrogate model prediction and the corresponding experimental observation for the QoI, y. They are simply related by

where δ(x) is the model bias between the simulation model/surrogate model prediction and the experimental measurement, x represent controllable variables that can be precisely controlled during experiments (e.g., laser power and scanning speed), and θ is the uncertainty source parameters to be calibrated (see Table 1). The θ parameters are distribution-type in nature, and so is ye(x) by uncertainty propagation. The accurate distributions of θ are unknown and thus require inverse inference from limited experimental data ye(x). To confidently predict QoI and its uncertainty for any given x, we will perform the calibration of uncertain parameters θ and determine the model bias δ(x) to systematically reduce the model uncertainty.

We conduct the calibration of uncertain parameters employing Bayesian calibration28, which is one of the most popular methods for statistical inference (see Fig. 3a). The conventional Bayesian calibration, represented by Direct1 in this paper, yields a quantitative update of uncertainty based on probability theory. As shown in Fig. 3a, solving the Bayesian problem involves computing the posterior probability density function (PDF) of uncertain parameters f(θ∣ye) with the knowledge of the experimental data (i.e., ye) of QoI

where f(θ∣ye) represents the likelihood or probability of observing ye for the AM simulation model \(y_{\rm{s}}({\mathbf{x}},{\mathbf{\theta }})\) with respect to different specific values of \({\mathbf{\theta }}\) as shown in Fig. 3a. \(f({\mathbf{\theta }})\) is the prior PDF of uncertain parameters (i.e., before observing data \({\mathbf{y}}_{\rm{e}}\)), and the \(f({\mathbf{y}}_{\rm{e}})\) is the probability for the given evidence \({\mathbf{y}}_{\rm{e}}\) and is usually fixed. There are different methods for computing \(f({\mathbf{\theta }}\left| {{\mathbf{y}}_{\rm{e}}} \right.)\). Here, we employ the particle filter29 involving the prior sampling, likelihood weighting, and posterior resampling.

a The conventional Bayesian calibration (i.e., the direct method), b the SeCAV method for uncertain source parameters, and c model bias correction. The direct method in a updates the prior knowledge of the uncertain parameters listed in Table 1 using all the information (i.e., the likelihood) provided by the experimental data from AM benchmark tests (see Fig. 1c). In b, the SeCAV method implements the model validation and calibration in a sequential manner. In each iteration, the model validation serves as a filter to select the experimental data with the maximum confidence probability for the Bayesian inference. After that, the current posterior distributions of the uncertain parameters are updated by averaging the results of Bayesian inference and the previous posterior distribution, with the confidence probability as a weight factor. In c, the model bias is corrected by training a bias surrogate model with the bias data that is obtained at the maximum a posterior probability (MAP) estimation of the sequentially updated posterior distribution. Finally, the posterior prediction of the calibrated/updated melt pool model is calculated based on the results of calibration (i.e., \(f_{{\mathrm{SeCAV}}}({\mathbf{\theta }}\left| {{\mathbf{y}}_{\rm{e}}} \right.)\)) and correction (i.e., \(\delta ({\mathbf{x}})\)).

It should be pointed out that conventional Bayesian calibration ignores the uncertainty stemming from the model discrepancy (i.e., \(\delta ({\mathbf{x}})\) between the simulation model and the reality in Eq. (1)) as well as the potential measurement error while calculating the likelihood \(f({\mathbf{y}}_{\rm{e}}\left| {\mathbf{\theta }} \right.)\), which may result in inaccurate posterior distributions. To address this challenge, one can add a zero-mean random noise to capture the aforementioned uncertainty in the estimation of \(f({\mathbf{y}}_{\rm{e}}\left| {\mathbf{\theta }} \right.)\) as follows:

where \({\mathbf{y}}_{\rm{e}} = (y_{\rm{e}}^1,y_{\rm{e}}^2, \ldots ,y_{\rm{e}}^{N_{\rm{e}}})^T\), \(\mu _{\rm{s}}({\mathbf{x}}_{\rm{e}}^{i},{\mathbf{\theta }})\) and \(\sigma _{\rm{s}}^2({\mathbf{x}}_{\rm{e}}^{i},{\mathbf{\theta }})\) are, respectively, the mean prediction and variance of AM simulation-derived surrogate model, i.e., \(y_{\rm{s}}({\mathbf{x}},{\mathbf{\theta }})\), \(\sigma _{\rm{noise}}^2\) is the variance of the added random noise to account for all possible sources of uncertainty excluding surrogate prediction uncertainty. This kind of Bayesian method, namely Direct2, simultaneously updates the calibration parameters and the standard deviation of random noise using experimental data.

Moreover, we develop a SeCAV method23 that implements the physics-based model validation and Bayesian calibration by using each of experimental data (i.e., \({\mathbf{y}}_{\rm{e}}\)) of QoI in a sequential manner, as illustrated in Fig. 3b. In each iteration, the physics-based model validation is first conducted at each experimental data point to answer the question: to what degree can we trust the likelihood \(f({\mathbf{y}}_{\rm{e}}\left| {\mathbf{\theta }} \right.)\) and the Bayesian calibration result obtained based on \(f({\mathbf{y}}_{\rm{e}}\left| {\mathbf{\theta }} \right.)\). Therefore, a confidence probability, \(P_\lambda ({\mathbf{x}}_{\rm{e}}^{i})\), is calculated for each data by

where \(\varepsilon_{\rm{r}}({\mathbf{x}}_{\rm{e}}^{i})\left| {\mathbf{\theta }} \right.\) is the relative error of \(y_{\rm{s}}({\mathbf{x}}_{\rm{e}}^{i},{\mathbf{\theta }})\) with respect to \(y_{\rm{e}}({\mathbf{x}}_{\rm{e}}^{i})\), \(\varepsilon_{\rm{r}}^{\rm{c}}\) is a confidence threshold, \(\tilde f({\mathbf{\theta }})\) is the current prior distribution updated by the result of the previous iteration. In the sequential circle of Fig. 3b, the physics-based model validation serves as a filter to select the experimental data with the maximum confidence probability for the subsequent Bayesian calibration. A larger confidence probability means a more trust-worthy calibration result, and thus a larger weight will be given to the Bayesian calibration in weighted averaging. Otherwise, we retain the calibration result from the previous iteration. In ith iteration, the distribution of \({\mathbf{\theta }}\) is updated by

where \(({\mathbf{x}}_{\rm{e}}^{{i}_{\max }},y_{\rm{e}}^{{i}_{\max }})\) is the data with the maximum confidence probability, \(f_{{\mathrm{Baye}}}({\mathbf{\theta }}|y_{\rm{e}}^{{i}_{\max }})\) is the posterior distribution updated by Bayesian calibration using data \(({\mathbf{x}}_{\rm{e}}^{{i}_{\max }},y_{\rm{e}}^{{i}_{\max }})\). Such a weighted averaging in a sequentially updating manner from larger confidence to lower confidence enables a great trade-off between the accuracy and UR.

We further correct the bias between the melt pool model and true physics by building another GP model for the bias (see Fig. 3c). The training data, i.e., (x, δ(x)), are observed by fixing uncertain parameters θ at the values with the maximum posterior PDF. We finally achieve a reliable and accurate posterior prediction of melt pool surrogate model by integrating the simulation-derived surrogate model with respect to the posterior distribution of uncertain parameters and by adding the prediction of the bias surrogate model.

Calibration of uncertainty source parameters

We consider the five uncertainty source parameters θ listed in Table 1. An intuitive approach to determine their accurate distributions is based on copious point data obtained from a sufficient number of repetitive measurements. While directly measuring the uncertainty sources during the AM process (e.g., fluctuating laser power absorptivity) is often technically challenging and sometimes even impossible, we would inversely infer their distributions based on relatively easily measurable QoI, here the melt pool and its fluctuations. This is basically accomplished through experimental calibration of their assumed prior distributions.

As shown in Table 2, we use seven groups of experimental melt pool data (i.e., \(y_{\rm{e}}({\mathbf{x}})\)) taken from the AM-Bench project by NIST30. The pool fluctuation is represented by the interval data of measured pool length. We assume that the inferred uncertainty sources from the measured pool length alone should be trustworthy and shared for predicting the whole melt pool geometry. Because for a given AM machine, the variabilities in the pool length and the whole pool are caused by the same uncertainty sources. Note that the current study uses single-track AM data. Nonetheless, the present approach can be readily extended to multi-track AM scenarios with the availability of multi-track experimental data.

Figure 4 shows updated distributions (i.e., posterior distributions) of various uncertainty source parameters after experimental calibration, by using the direct and SeCAV methods, respectively. For all methods, the calibrated uncertain parameters exhibit apparently more concentrated distributions compared to their original assumed ones, i.e., there is a reduction of epistemic uncertainty via experimental calibration. However, the calibration results are rather different from each other. For the Direct1 method, the calibrated uncertain parameters tend toward quite narrowed distributions with spiky peaks, indicating little aleatory uncertainty or natural variability of these uncertainty sources. The Direct2 and SeCAV methods, however, leads to uncertainty parameters with relatively flat distributions, implying a great natural variability in those uncertainty sources. The results by Direct2 and SeCAV seem to be more plausible based on previous researches. For example, the Gaussian power distribution radius assumed in prior researches exhibits large differences, ranging from 5031 to 70 μm30, both for simulating a selective laser melting process based on an EOSINT M270 machine at NIST. For the selective electron beam (EB) melting process (based on an Arcam S12 machine), the adopted power absorptivity varies from 0.632–0.933. The distinctive values adopted by different groups of researchers basically may be considered as the aleatory uncertainty or natural variability.

The uncertainty source parameters, including a preheating temperature, b laser absorptivity, c Gaussian power distribution radius, d heat convection coefficient, and e ambient temperature, see a significant uncertainty reduction, presenting as a more concentrated distribution after experimental calibration.

The erroneous inference about uncertain parameters in the Direct1 method may stem from the model bias by the thermal model/GP model. It may also be due to the fact that the whole experimental data are directly used (see Fig. 3a) with potentially wrong information included, thus causing herein the over-calibration of uncertain parameters, which may further affect the prediction accuracy of the calibrated melt pool model (see Fig. 5), as detailed in the next subsection. Note that unlike the Direct1 method, it is hard to explicitly assess the inaccuracy of the Direct2 method in parameter calibration. Later, we will further investigate the finally calibrated models by the three methods.

The prior and posterior mean prediction of a melt pool length, b melt pool width, and c melt pool depth, as well as d–f the corresponding probability contours showing the prediction uncertainty. It is worth noting that prior prediction is directly estimated by the melt pool surrogate models (trained in Fig. 1b) with the assumed prior distributions of the uncertain parameters. The comparison results in c indicate that the melt pool depth prediction sees an increment of the prediction accuracy at the test data (No. 3) after uncertainty reduction. In d–f, the locations of black line/pentagram represent the true melt pool length, depth, and width of the No. 3 experimental data. Both the direct and SeCAV methods greatly reduce the prediction uncertainty, but SeCAV yields more accurate results.

A closer examination of SeCAV calibration results by adjusting the scale of plots (see insets in Fig. 4) shows obvious peaks or multi-peaks in the distributions of five uncertainty sources, but far less spiky than those from the direct Bayesian calibration results. These smoother peaks are reasonable because uncertainty sources fluctuate, and the fluctuations should naturally center around some specific expectations.

It can also be observed that different uncertainty sources exhibit different distribution characteristics such as the distribution width, indicating inherently different variation levels and patterns for different uncertainty sources. For example, ambient temperature and preheating temperature in the manufacturing chamber are supposed to show fewer fluctuations as a result of advanced control algorithms and, therefore, have smaller peaks with relatively narrow variations. We would like to note that uncertainty sources, in general, should fluctuate around one specific value like the setting value for the ambient temperature. However, here the multiple peaks are not calibration errors from the inadequacy of the SeCAV method. Instead, they are attributed to the limited experimental data, as well as to the further filtering for the useful information by the SeCAV method. Additional experimental data are expected to further reduce the aleatory uncertainties (probably leading to single-peak form) and improve the calibration results.

Bias correction of melt pool model

We further perform model bias correction using the maximum a posterior probability (MAP) estimated from the posterior distribution shown in Fig. 4. We then train another surrogate model for the model bias shown in Fig. 3c. It is shown that the uncertainty of the melt pool surrogate model is greatly reduced by using the posterior distributions shown in Fig. 4 and by incorporating the surrogate model of the model bias.

Figure 5 illustrates the influence of model UR on the prediction accuracy. Here we select the third cross-validation, in which case No. 3 experiment data is selected for validation while the remaining data are used for calibration. The parameter calibration and bias correction lead to a better agreement between the prediction surface and experimental data at No. 3 data in Fig. 5a–c. Table 3 also indicates the clear improvement (i.e., the posterior prediction is closer to the true value) of prediction accuracy, which emphasizes the necessity of experimental calibration in Fig. 1c for the UQ analysis after the melt pool surrogate modeling in Fig. 1b. Figure 5d–f provides the joint probability contours of the posterior predictions considering their uncertainty with the No. 3 test data, which will be utilized by the subsequent UQ of porosity level. They demonstrate that the prediction uncertainty is reasonably reduced by SeCAV compared to the prior prediction and that of direct methods.

A comparison between the direct and SeCAV methods illustrates their different efficacy in UR. The predicted mean width and depth using the SeCAV method are close to the true values whereas the direct methods show relatively large errors. The inaccurate prediction for the direct methods stems largely from the incorrect parameter calibration in Fig. 4. It thus implicitly demonstrate the relative inaccuracy of the Direct2 method in earlier parameter calibration. We obtain similar conclusions by comparing the joint probability contours of the posterior distribution for the predicted melt pool length and width, or the predicted melt pool length and depth from the two methods.

Figure 6 summarizes all the seven leave-one-out cross-validation results by using direct and SeCAV methods. Overall the predictions from the surrogate model calibrated by using the SeCAV method match well with experimental measurements. In Fig. 6b, c, nearly all point data (measured pool width and depth) are located within the predicted intervals. However, in Fig. 6a, comparing the predicted and measured pool length reveals a general overestimation of the variability in the melt pool. This overestimation might be alleviated or even eliminated with the availability of additional experimental data by further UR and enhanced inference of uncertainty sources. Nevertheless, for all seven cases in Fig. 6a, the currently predicted intervals well encompass practical pool length variations, indicative of our experimental calibration proceeding in the correct direction. For the calibrated surrogate model by using the direct methods, one can easily notice large errors in the estimations of the uncertainty source induced fluctuations; see Fig. 6a. Due to inaccurate inference about uncertainty source parameters, the calibrated surrogate model by using the Direct1 method would subsequently suggest near-zero variability in melt pool length, which is clearly contradictory to the experimental facts. The prediction errors are thus essentially caused by the aggressive and improper parameter calibration of the direct method in the presence of misleading experimental information. The Direct2 method shows clearly improved results but is still less accurate than the SeCAV method in predicting the fluctuation of pool length in Fig. 6a.

We compare the three calibrated models in predicting: a pool length, b pool width, and c pool depth. It should be pointed out that box-plots in blue should not be mistaken as lines; instead, they just reflect near-zero fluctuations of pool geometry predicted by the calibrated surrogate model using the Direct1 method.

It is noteworthy that a few outliers can be observed especially in case 5 (see Fig. 6b, c). They are explained by the rather complex physics underlying AM processes. Case 5 involves an extreme combination of very high laser power and slow scanning velocity, thereby likely triggering the known keyhole-mode melting34. This led to the melt pool development greatly deviating from the general tendency with laser power and scanning speed. To resolve this issue, dense experimental data in and near this special processing condition are desired, which will improve the surrogate model to better capture such special physics via improved model bias correction.

UQ of porosity level

The main objective for building a melt pool surrogate model is to assist the estimation and control of the practical AM product properties. Therefore, we further integrate our calibrated melt pool surrogate model and a probabilistic porosity predictive model (see Fig. 1e, f), to examine the porosity formation and its uncertainty over the P–V space.

Figure 7 plots the porosity level of AM parts as a function of the processing conditions. It can be seen that the average porosity level of AM parts, μ(a), would increase with decreased laser power and increased scanning speed. This is intuitively expected because small melt pools are usually formed under these conditions leading to a higher possibility of insufficient pool overlapping and thus porosity formation. Interestingly, the variability in porosity level among parts, σ(a), follows a similar trend; that is, AM parts show a greater σ(a) by decreasing laser power and increasing scanning velocity. There is typically a trade-off between minimizing the variation and bringing the mean on target35. For example, in the objective function, these two objects are usually assigned specific weights, depending on their individual significance in a particular design problem. However, it is shown that such a trade-off does not exist in controlling the porosity level within AM parts. The association of μ(a) and σ(a) with process parameters are relatively simple and similar to one another. It is clear that minimizing μ(a) leads to the minimization of σ(a). This is largely attributed to the AM porosity control problem itself, in which case the pool size and thus lack-of-fusion porosity formation has a simple, monotonical relationship with process conditions (laser power and scanning speed). This leads to a relatively simple relationship of μ(a) and σ(a) with process conditions. In addition, once the pool cross-sectional area achieves a critical size, fully dense AM parts (a = 0) with zero lack-of-fusion porosity are obtained, causing the porosity level to plateau, as seen in the 3D surface in Fig. 7a. Mathematically, this plateau would not allow any variability of porosity level therein when we are trying to introduce perturbations, for example, by uncertainty sources in practice. This in turn results in the plateau (σ(a) = 0) of σ(a) surface over a similar P–V space. Regarding those facts, when one performs process optimization or robust design, minimal mean and minimal variation of porosity level would be achieved simultaneously and exclusively in the shared plateau region, i.e., combinations of high laser power and low scanning speed.

a The mean and b standard deviation of porosity level of AM parts as a function of laser power and scanning speed. Here either the average or standard deviation of porosity level is meant for a bunch of AM parts manufactured under a specific power–velocity combination or processing condition. Note that, the variability in porosity level among AM parts of the same bunch is basically caused by two-step uncertainty propagation from uncertainty sources, which first causes the fluctuation of melt pool development and then the uncertainty of porosity formation within the AM part.

Discussion

There have been numerous recent reports on data-driven modeling techniques (especially machine learning based36,37) in AM, aiming at accelerating quality prediction and process optimization. These efforts require high-quality AM-related data. The AM-Bench project led by NIST is exclusively devoted to developing a continuing series of highly controlled AM benchmark tests. While AM experiments are expensive, AM-Bench offers a data source of high-quality experimental data. Here, we emphasize advantages by adopting the experimental data from the AM-Bench project. The AM-Bench project provides a fairly wide range of experimental data in addition to the melt pool data used in this study. They include in-process cooling rates, thermal field data, residual elastic strains, microstructure (e.g., grain size, aspect ratio, and primary arm spacing), and many more25. The complete data cover different phases of the entire AM process, allowing modelers to build and test various multi-level multi-physical simulation models in a level-by-level manner. This, for example, could aid in a smooth extension of the current melt pool model to a well-validated multi-scale AM model in the near future. One may otherwise acquire all wanted data such as the three dimensions of a melt pool compiled from multiple data sources, for which data scattering can exist due to machine-to-machine variability, different human interventions among operators, etc. This results in inconsistency and poor quality of collected data sets. On the contrary, the AM-Bench project offers highly controlled and rich AM experimental data that can meet various model testing needs.

In addition to high-quality experimental databases, advanced model UR techniques enabling the best use of the databases are also critical to building and updating a useful data-driven surrogate model. In spite of the direct and SeCAV methods used in this work, many other methods, such as the optimization-based calibration38, the well-known KOH framework39, and its variants40,41, have been proposed to build an accurate data-driven model. The optimization-based calibration method is strongly affected by the potentially wrong information if the bias between the simulation model and true physics is large, while the KOH framework and its variants may be misled by the assumed prior distribution of the uncertainty source parameters due to a lack of knowledge. It has been observed that SeCAV is able to make full use of the experimental data for reducing the prediction uncertainty and improving the prediction accuracy of a data-driven model23. In addition, SeCAV is not highly sensitive to the assumed prior interval of calibration parameter; see the illustrative example in Supplementary Note 5.

As mentioned above, the melt pool is closely related to various properties of AM product, such as columnar grain microstructure, solidification texture at sub-grain scale, and lack-of-fusion porosity. The present study has shown that a melt pool surrogate model may be linked to an analytical porosity model to understand and control the lack-of-fusion porosity within AM parts. The proposed surrogate model can also be integrated with some other types of AM models as well as experiment-derived empirical rules, enabling quick estimation of various practical properties. For example, the melt pool cross-sectional area scales with the solidification cooling rate42, which governs the columnar-to-equiaxed transition of the grain structure of an AM part. For example, it was shown that by maintaining a constant melt pool width, a constant average beta grain size can be achieved, with a scaling factor of ~20 grains per melt pool width43. Furthermore, increasing the melt pool width would increase the time for heat to conduct from the melt pool into its surrounding powders, thus resulting in a partially molten powder that subsequently increases surface roughness44. The proposed melt pool surrogate model in this work may be applied to model all of these microstructure processes and estimate their influences on AM parts.

In summary, a data-driven three-dimensional melt pool model for AM is developed by extensive high-throughput high-resolution simulations of melt pools and the AM-Bench data provided by NIST. The SeCAV method was demonstrated to be the most effective in the UR of the as-built melt pool surrogate model, including the calibration of uncertainty source parameters and correction of model bias by intelligently filtering the misleading part. The measured uncertainty information of melt pools was used for the rigorous quantification of uncertainty source parameters via inverse inference. The uncertainties of as many as five uncertainty sources have been quantified. Different uncertainty sources exhibit different variation levels and patterns, instead of simple uniform distributions as usually assumed. With the expected availability of additional melt pool data (e.g., fluctuations of the whole 3D pool and pools developed in keyholing conditions) and continuous update of the NIST AM-Bench database, it will lead to further UR of uncertainty sources and improved model correction. The calibrated melt pool surrogate model can be applied to the UQ of a range of microstructure evolution processes and their influence on properties in AM, including the porosity level. This work presents a clear path towards developing surrogate models for UQ and control of practically meaningful properties of AM.

Methods

Finite-element-based thermal model

In the metallic AM process, a high-power moving energy sources, such as laser or EB, is usually applied. This results in a high-temperature melt pool that fuses metal powders together upon solidification. In this study, a finite-element-based heat transfer model incorporating a moving heat source is utilized to predict the in-process temperature field development, from which geometrical information of the developed melt pool is extracted based on the T = Tsolidus isothermal line; see Fig. 1a.

Probabilistic porosity model

In recent years, numerous porosity predictive models, including analytical models and multi-physical simulation models, have been developed for the AM process. They cover the quantitative and/or qualitative predictions of different types of pores formed in the AM process, such as keyhole-melting caused porosity, inter-track lack-of-fusion porosity, and surface porosity due to unstable melt flow. For illustration purposes, in this study, a probabilistic porosity model45 is adopted to predict the lack-of-fusion porosity level within as-built AM components, with the melt pool width and depth information fed from the calibrated melt pool surrogate model. Other porosity models like the computationally heavy physical simulation model can be also chosen depending on research interests, but a surrogate model may need to be trained to replace the physics-based porosity model for efficient UQ. In this study, the selected porosity model itself is essentially a lightweight analytical model, thus ready-to-go for UQ analysis.

Model UR

As mentioned early, while well-trained for the AM simulation, the data-driven surrogate model is not recommended to directly predict the physics responses during the UQ analysis. Due to the presence of the uncertainty source parameters as well as the unavoidable model bias between the AM simulation and the true physics, the predictive ability of the surrogate model necessarily requires to be improved through model UR, i.e., the calibration of uncertainty source parameters and correction of the model bias using the true physics data. To achieve the improvement, the SeCAV method is employed to infer the distribution of the uncertain parameters. At the same time, the model bias is corrected by constructing another bias surrogate model with MAP estimation.

For details on the above three models, readers are referred to Supplementary Notes 1, 2, and 4, respectively.

Data availability

The simulation-obtained 3D melt pool data used to build the initial surrogate models in this paper are available online at https://github.com/zwang586/AM-3Dpool.

Code availability

The code will be available upon request and should be used only for the purpose of replicating the results in this study.

References

Laureijs, R. E. et al. Metal additive manufacturing: cost competitive beyond low volumes. J. Manuf. Sci. Eng. 139, 081010 (2017).

Huang, S. H., Liu, P., Mokasdar, A. & Hou, L. Additive manufacturing and its societal impact: a literature review. Int. J. Adv. Manuf. Technol. 67, 1191–1203 (2013).

Nath, P., Hu, Z. & Mahadevan, S. Multi-level uncertainty quantification in additive manufacturing. Solid Freeform Fabr. 922–937 (2017).

Slotwinski, J. A., Luecke, W. E., Lass, E. & Possolo, A. M. Interlaboratory mechanical property study for Cobalt-Chromium alloy Made by Laser Powder-Bed-Fusion Additive Manufacturing. https://doi.org/10.6028/NIST.TN.2006 (2018).

Brown, C. U. et al. Interlaboratory study for nickel alloy 625 made by laser powder bed fusion to quantify mechanical property variability. J. Mater. Eng. Perform. 25, 3390–3397 (2016).

Hu, Z. & Mahadevan, S. Uncertainty quantification in prediction of material properties during additive manufacturing. Scr. Mater. 135, 135–140 (2017).

Moges, T., Ameta, G. & Witherell, P. A review of model inaccuracy and parameter uncertainty in laser powder bed fusion models and simulations. J. Manuf. Sci. Eng. 141, 040801 (2019).

Hu, Z. & Mahadevan, S. Uncertainty quantification and management in additive manufacturing: current status, needs, and opportunities. Int. J. Adv. Manuf. Technol. 93, 2855–2874 (2017).

Wang, Z. et al. A data-driven approach for process optimization of metallic additive manufacturing under uncertainty. J. Manuf. Sci. Eng. 141, 081004 (2019).

Chen, W., Allen, J. K., Tsui, K.-L. & Mistree, F. A procedure for robust design: minimizing variations caused by noise factors and control factors. J. Mech. Des. 118, 478–485 (1996).

Mani, M. et al. Measurement Science Needs for Real-time Control of Additive Manufacturing Powder Bed Fusion Processes. https://doi.org/10.6028/NIST.IR.8036 (2015).

Yeung, H., Yang, Z. & Yan, L. A Meltpool prediction based scan strategy for powder bed fusion additive manufacturing. Addit. Manuf. 35, 101383 (2020).

Mondal, S., Gwynn, D., Ray, A. & Basak, A. Investigation of melt pool geometry control in additive manufacturing using hybrid modeling. Metals 10, 683 (2020).

Antonysamy, A. A., Meyer, J. & Prangnell, P. Effect of build geometry on the β-grain structure and texture in additive manufacture of Ti 6Al 4V by selective electron beam melting. Mater. Charact. 84, 153–168 (2013).

Wei, H., Mazumder, J. & DebRoy, T. Evolution of solidification texture during additive manufacturing. Sci. Rep. 5, 16446 (2015).

Qiu, C. et al. Influence of laser processing strategy and remelting on surface structure and porosity development during selective laser melting of a metallic material. Metall. Mater. Trans. A 50, 4423–4434 (2019).

Tapia, G. et al. Uncertainty propagation analysis of computational models in laser powder bed fusion additive manufacturing using polynomial chaos expansions. J. Manuf. Sci. Eng. 140, 121006 (2018).

Lopez, F., Witherell, P. & Lane, B. Identifying uncertainty in laser powder bed fusion additive manufacturing models. J. Mech. Des. 138, 114502 (2016).

Kamath, C. Data mining and statistical inference in selective laser melting. Int. J. Adv. Manuf. Technol. 86, 1659–1677 (2016).

Roy, M. & Wodo, O. Data-driven modeling of thermal history in additive manufacturing. Addit. Manuf. 32, 101017 (2020).

Ricciardi, D. E., Chkrebtii, O. A. & Niezgoda, S. R. Uncertainty quantification accounting for model discrepancy within a random effects bayesian framework. Integrating Mater. Manuf. Innov. 9, 181–198 (2020).

Olleak, A. & Xi, Z. Calibration and validation framework for selective laser melting process based on multi-fidelity models and limited experiment data. J. Mech. Des. 142, 1–35 (2019).

Jiang, C. et al. A sequential calibration and validation framework for model uncertainty quantification and reduction. Comput. Methods Appl. Mech. Eng. 368, 113172 (2020).

Wang, Z. et al. Uncertainty quantification in metallic additive manufacturing through physics-informed data-driven modeling. JOM 71, 2625–2634 (2019).

NIST. Additive Manufacturing Benchmark Test Series (AM-Bench). https://www.nist.gov/ambench (accessed 2020).

Helton, J. C. & Davis, F. J. Latin hypercube sampling and the propagation of uncertainty in analyses of complex systems. Reliab. Eng. Syst. Saf. 81, 23–69 (2003).

Rasmussen, C. E. & Williams, C. K. Gaussian Processes for Machine Learning. (MIT Press, Cambridge, MA, 2006).

Hu, Z., Mahadevan, S. & Ao, D. Uncertainty aggregation and reduction in structure–material performance prediction. Comput. Mech. 61, 237–257 (2018).

Arulampalam, M. S., Maskell, S., Gordon, N. & Clapp, T. A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking. IEEE Trans. Signal Process. 50, 174–188 (2002).

Ghosh, S. et al. Single-track melt-pool measurements and microstructures in Inconel 625. JOM 70, 1–6 (2018).

Keller, T. et al. Application of finite element, phase-field, and CALPHAD-based methods to additive manufacturing of Ni-based superalloys. Acta Mater. 139, 244–253 (2017).

Al-Bermani, S., Blackmore, M., Zhang, W. & Todd, I. The origin of microstructural diversity, texture, and mechanical properties in electron beam melted Ti-6Al-4V. Metall. Mater. Trans. A 41, 3422–3434 (2010).

Cheng, B., Price, S., Lydon, J., Cooper, K. & Chou, K. On process temperature in powder-bed electron beam additive manufacturing: model development and validation. J. Manuf. Sci. Eng. 136, 061018 (2014).

DebRoy, T. & David, S. Physical processes in fusion welding. Rev. Mod. Phys. 67, 85 (1995).

Chen, W., Wiecek, M. M. & Zhang, J. Quality utility—a compromise programming approach to robust design. J. Mech. Des. 121, 179–187 (1999).

Razvi, S. S., Feng, S., Narayanan, A., Lee, Y.-T. T. & Witherell, P. in ASME 2019 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference. (American Society of Mechanical Engineers Digital Collection, 2019). https://asmedigitalcollection.asme.org/IDETC-CIE/proceedings-abstract/IDETCCIE2019/59179/V001T02A040/1069728.

Baturynska, I., Semeniuta, O. & Martinsen, K. Optimization of process parameters for powder bed fusion additive manufacturing by combination of machine learning and finite element method: a conceptual framework. Proc. CIRP 67, 227–232 (2018).

Cha, S.-H. Comprehensive survey on distance/similarity measures between probability density functions. Int. J. Math. Mod. Meth. Appl. Sci. 1, 1 (2007).

Kennedy, M. C. & O’Hagan, A. Bayesian calibration of computer models. J. Roy. Stat. Soc. Ser. B 63, 425–464 (2001).

Arendt, P. D., Apley, D. W. & Chen, W. Quantification of model uncertainty: calibration, model discrepancy, and identifiability. J. Mech. Des. 134, 100908 (2012).

Higdon, D., Nakhleh, C., Gattiker, J. & Williams, B. A Bayesian calibration approach to the thermal problem. Comput. Methods Appl. Mech. Eng. 197, 2431–2441 (2008).

Narra, S. P., Cunningham, R., Beuth, J. & Rollett, A. D. Location specific solidification microstructure control in electron beam melting of Ti-6Al-4V. Addit. Manuf. 19, 160–166 (2018).

Gockel, J., Fox, J., Beuth, J. & Hafley, R. Integrated melt pool and microstructure control for Ti–6Al–4V thin wall additive manufacturing. Mater. Sci. Technol. 31, 912–916 (2015).

Mumtaz, K. & Hopkinson, N. Selective laser melting of thin wall parts using pulse shaping. J. Mater. Process. Technol. 210, 279–287 (2010).

Tang, M., Pistorius, P. C. & Beuth, J. L. Prediction of lack-of-fusion porosity for powder bed fusion. Addit. Manuf. 14, 39–48 (2017).

Acknowledgements

The research is financially supported by a startup fund from the Department of Mechanical Engineering at the University of Michigan-Dearborn, Propelling Original Data Science (PODS) Grants from Michigan Institute of Data Science (MIDAS), and National Science Foundation (NSF) Grant CMMI-1662864. The extensive simulations were supported through computational resources and services provided by Advanced Research Computing at the University of Michigan, Ann Arbor.

Author information

Authors and Affiliations

Contributions

L.C. and Z.H. conceived the idea and designed the research. Z.W. carried out thermal simulations and collected experimental data. C.J. worked on the surrogate modeling and experimental calibration. P.W.L. helped revise the paper. Z.W., C.J., W.H.Y., and Y.Z. prepared the figures and analyzed the results. Z.W. and C.J. wrote the text of the paper. Z.H. supervised C.J. and Y.Z. for all data-driven modeling related work, and L.C. supervised Z.W. and W.H.Y. for physical simulation and experiment-related work involved in this paper. M.F.H and L.Q.C. contributed by consultation and discussion about the whole work. All authors reviewed and approved the paper.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, Z., Jiang, C., Liu, P. et al. Uncertainty quantification and reduction in metal additive manufacturing. npj Comput Mater 6, 175 (2020). https://doi.org/10.1038/s41524-020-00444-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41524-020-00444-x

This article is cited by

-

Preliminary results for a data-driven uncertainty quantification framework in wire + arc additive manufacturing using bead-on-plate studies

The International Journal of Advanced Manufacturing Technology (2023)

-

Uncertainty-aware mixed-variable machine learning for materials design

Scientific Reports (2022)

-

Uncertainty calibration and quantification of surrogate model for estimating the machining distortion of thin-walled parts

The International Journal of Advanced Manufacturing Technology (2022)

-

An efficient semi-analytical extreme value method for time-variant reliability analysis

Structural and Multidisciplinary Optimization (2021)