Abstract

Humans’ ability to adapt and learn relies on reflecting on past performance. These experiences form latent representations called internal states that induce movement variability that improves how we interact with our environment. Our study uncovered temporal dynamics and neural substrates of two states from ten subjects implanted with intracranial depth electrodes while they performed a goal-directed motor task with physical perturbations. We identified two internal states using state-space models: one tracking past errors and the other past perturbations. These states influenced reaction times and speed errors, revealing how subjects strategize from trial history. Using local field potentials from over 100 brain regions, we found large-scale brain networks such as the dorsal attention and default mode network modulate visuospatial attention based on recent performance and environmental feedback. Notably, these networks were more prominent in higher-performing subjects, emphasizing their role in improving motor performance by regulating movement variability through internal states.


Introduction

Professional athletes represent the pinnacle of motor control and precision, but they too fall victim to slight variations in their movement. Movement variability is traditionally viewed as a byproduct of noise accumulated by the motor system1. However, there is emerging evidence that this variability is purposefully orchestrated by the brain to facilitate learning and adaptation2,3,4,5. For example, more movement variability through exploration leads to faster learning and better performance6,7. The decision to explore—as opposed to exploit—an environment to gather information to inform future behavior through learning lends itself naturally to movement variability. Not only does this information depend on the present, but also on internalized factors that account for the accumulation of past experience. These factors are commonly represented as a methodological construct of memory called internal states. For example, movement variability is influenced by motivation8,9, confidence10,11, and emotion12,13,14. Future behavior is therefore the culmination of current information and internal states.

With their impact on behavior so apparent, it is surprising how ambiguous internal states are in motor control compared to other fields such as decision-making. To date, research into decision-making has used statistical models to explore relationships between behavioral variability and internal states15,16,17,18 with the aim of finding evidence of the brain encoding states related to uncertainty19, bias20, trial history21, and impulsivity22. Like decision-making, the goal of motor control is to produce actions that optimize outcomes in the presence of uncertainty23. Whether those actions are decisions or movements, variability and internal states are inherent to both. Therefore, we speculated that internal states are encoded in regions that are not specific to motor control (i.e., nonmotor regions). Indeed, decision-making tasks that require movements find that regions involved with sensorimotor integration encode their internal states as opposed to motor regions20,21. In this context, an emerging consensus is that movement variability originates from both the planning24 and execution phases25 of movement. However, the gap in our understanding of motor control becomes apparent when one asks how internal states evolve, where they are encoded in the brain, and how they affect performance.

Two challenges need to be overcome to address these questions. The first challenge is determining the internal states based on behavior. To date, direct measures of the brain’s internal states have remained elusive26. Unlike temperature, internal states are not directly measurable biological phenomena. Researchers have tried to capture measures of such states using methods including self-reporting27, galvanic skin conductance28, heart rate variability29,30, and pupil size31. However, these measures are context-dependent32, vary between individuals30, and can take on the order of minutes to compute28,30, whereas internal states can change within seconds33. By comparison, decision-making studies rely on observable behavior such as reaction times, decisions, and outcomes to derive their internal states. Therefore, motor control studies would also be ideal for deriving internal states due to their abundant movement-related data. Even so, since these measures are all byproducts of the brain, the question arises as to why internal states are not measured directly from the brain.

This leads to our second challenge, which is identifying where internal states are encoded in the brain. As previously mentioned, research on decision-making supports the view that internal states are encoded by diverse brain regions involving multiple systems (e.g., sensory and memory)19,20,21,22. Whole-brain imaging with high temporal resolution would be ideal for capturing diverse brain structures and rapidly evolving internal states. Most work in humans has used non-invasive neural imaging, such as functional Magnetic Resonance Imaging (fMRI). These studies report the occurrence of co-varying behavioral and neurological variability2,4,34. However, the limitations of the temporal resolution of fMRI35, compounded by the limited space in which subjects must perform a natural movement, make it difficult to link neural correlates of internal states to behavior34. What is needed is millisecond resolution with whole-brain coverage.

To address these challenges, we combined high-quality measurements of natural reaching movements with high-spatial and temporal resolution neural StereoElectroEncephaloGraphy (SEEG) recordings. Specifically, ten human subjects implanted with intracranial depth electrodes performed a simple motor task that elicited movement variability during planning and execution. We estimated two internal states using state-space models trained on measurable behavioral data: the error state accumulates based on past errors to convey overall performance and the perturbed state accumulates based on past perturbations to convey environmental uncertainty. Adding these states improved our ability to estimate trial-by-trial reaction times and speed errors over using stimuli alone. Our approach also granted us access to latent terms that predicted subject performance and provided insight into subject strategy. Remarkably, we found neural evidence of the brain encoding each of the internal states in relatively distinct large-scale brain networks. Specifically, large-scale brain networks, such as the Dorsal Attention Network (DAN) and Default Network (DN), were linked to the error and perturbed state. We also have preliminary evidence linking the encoding strength and functional connectivity of these networks back to subject performance and strategy.

Results

To investigate the coupling between motor variability and internal states, we devised a goal-directed reaching task that elicited movement variability both within and between human subjects. We first characterized this variability for our population of subjects during both planning and execution based on trial conditions. Then, to account for this variability, we built a simple behavioral model that incorporated dynamic internal states as accumulating trial history that evolve over time. Using computational methods, we used this model to explain differences in strategy across subjects by comparing their session performance to how they used internal states to inform future behavior. Finally, using recordings from intracranial depth electrodes implanted in the same subjects, we investigated the relationship between neural activity in large-scale brain networks and the encoding of these internal states.

Motor task produced variability between and within subjects

Subjects performed a motor task using a robotic manipulandum for a virtual monetary reward using their dominant hand (Table 1). The task consisted of reaching movements towards a target at an instructed speed despite the possibility of physical perturbations (Fig. 1a). This task was designed to elicit movement variability both between- and within-subjects. We quantified this variability for the planning and execution phases of movement by calculating reaction time (RT) and speed error (SE), respectively. An overlay of RT and SE for each subject is shown in Fig. 1b, c. Group data comparisons confirmed the presence of between-subject variability in both RT and SE. That is, there was a main effect of subject on RT (three-way ANOVA: F(9, 27) = 7.24, p = 2.85 × 10−3, partial η2 = 0.87) and SE (three-way ANOVA: F(9, 45) = 13.17, p = 4.09 × 10−12, partial η2 = 0.62). We then used standard deviations (STD) to quantify the within-subject variability, where the absence of variability would mean STD equal to 0. Indeed, within-subject variability was consistently found across all subjects, meaning no subject was able to exactly reproduce the same movement across trials. All subjects had a non-zero STD for RT and SE, with subject 6 having the highest variability for RT and subject 2 having the highest variability for SE (Supplementary Tables 1–2). Combined, our motor task successfully produced behavior that varied between- and within-subjects.

Summary of behavior during motor task shows variability within and between subjects that is independent of trial conditions yet correlates with their session performance. a A detailed timeline of epochs shown to subjects on a computer screen during an example trial. The name of each epoch is labeled above and the time each epoch was presented is displayed in the bottom right corner. The conditions (speed, direction, perturbation) for this example of a correct trial are fast, up, and unperturbed. Epochs were grouped into movement phases (planning, execution, feedback). Time-series of the observed (b) reaction time (RT) and (c) speed error (SE) across all trials and subjects. Subject 6 is colored black and the remainder of the subjects are colored gray. Observed (d) RT and (e) SE for all trials and subjects separated by trial conditions. Each marker represents the behavior of a subject during a trial for the specified condition. The arrows indicate the interpretation of the behavior relative to the average. Subject 6 is colored black and the remainder of the subjects are colored gray. The gray dotted line in (d) indicates the average population RT (0.80 s). The gray dotted lines in (e) indicate the tolerance of SE between -0.13 and 0.13, where markers between these lines represent correct trials. Comparison of the variability of the observed (f) RT and (g) SE and performance across subjects. Standard deviation (STD) was used to quantify variability. Each marker is labeled by the subject it represents. Subject 6 is colored black and the remainder of the subjects are colored gray. The least-squares line is marked as the gray dashed line. Average session performance (51%) is marked by the vertical gray dotted line. There is a correlation between SE variability and session performance (two-tailed Pearson correlation: r = − 0.88, p = 7.42 × 10−4), in which better performers have fewer variable errors, but not between RT variability and session performance. 
Source data are provided as a Source Data file.

We then investigated whether differences in trial conditions could explain within-subject variability. Figure 1d, e shows the distributions of the RT and SE for the population separated based on the trial condition. Starting with the planning phase, the trial conditions that influenced RT were speed and direction. We expected subjects to change their RT based on the instructed speed. Indeed, there was a main effect of speed on RT (three-way ANOVA: F(1, 9) = 9.74, p = 0.012, partial η2 = 0.52), meaning subjects reacted more quickly for fast trials than slow trials. We also found a main effect of target direction on RT (three-way ANOVA: F(3, 27) = 4.59, p = 0.0012, partial η2 = 0.32). Specifically, subjects reacted more slowly when the target was up compared to when the target was down (post-hoc Tukey’s: p = 0.0005) or right (post-hoc Tukey’s: p = 0.0022). However, we did not find a significant interaction between speed and direction. For the execution phase, the trial conditions that influenced SE were speed and perturbation. As a group, we found a main effect of perturbation type on SE (three-way ANOVA: F(5, 45) = 23.23, p = 9.56 × 10−12, partial η2 = 0.71), with the exception of unperturbed compared to towards trials regardless of speed (Supplementary Table 10). We also found that RT does not significantly influence SE. Taken together, these results support a model of planning and execution using RT and SE that is both subject-specific and based on trial conditions. Supplementary Table 3 contains the complete summary of the trial conditions each subject experienced. See Supplementary Tables 4–10 for the complete statistical test results.

We also found that performance differentiates variability between subjects. As previously described, variability was quantified using the STD of each behavior, where a higher STD corresponds to more variable behavior. Session performance of each subject was quantified as the percent of correct trials over all completed trials. Average session performance was 51%. Figure 1f, g shows how each subject’s variability in RT and SE relates to their performance. Specifically, we found that subjects with higher session performance had less variable SE (two-tailed Pearson correlation: r = − 0.88, p = 7.42 × 10−4).
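The variability and performance measures described above can be illustrated with a minimal sketch. It assumes that a trial counts as correct when the absolute SE is within the 0.13 tolerance shown in Fig. 1e (whether the boundary is inclusive is our assumption), and it uses the sample standard deviation. The subject labels, the toy speed errors, and the helper names `pearson_r` and `session_performance` are hypothetical and are not taken from the study.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def session_performance(speed_errors, tolerance=0.13):
    """Percent of trials whose speed error lies within the tolerance band."""
    correct = sum(1 for e in speed_errors if abs(e) <= tolerance)
    return 100.0 * correct / len(speed_errors)


# Hypothetical speed errors per trial for three made-up subjects.
toy_speed_errors = {
    "A": [0.05, -0.10, 0.02, 0.20, -0.04],
    "B": [0.30, -0.25, 0.10, 0.40, -0.35],
    "C": [0.15, -0.12, 0.01, 0.22, -0.30],
}

# Within-subject variability (sample STD) and session performance per subject.
variability = [statistics.stdev(v) for v in toy_speed_errors.values()]
performance = [session_performance(v) for v in toy_speed_errors.values()]

# Across-subject relationship between SE variability and performance.
r = pearson_r(variability, performance)
print(performance, round(r, 2))
```

In this toy data the subject with the most variable SE also has the lowest performance, so the correlation is negative, which is the direction of the relationship reported above.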

In summary, even though all the subjects encountered the same trial conditions, their behavior varied which, in turn, affected their performance. Therefore, factors other than trial conditions must be influencing their performance.

Internal states capture movement variability

Using computational methods, we then developed a model to account for the variability that we observed between subjects (see Methods). Specifically, to account for variability not captured by trial conditions, we added two internal states. The first internal state was the error state, which accumulates the speed errors during past trials to keep track of how well a subject was accomplishing the instructed speed. The second internal state was the perturbed state, which accumulates the presence of perturbations during past trials to convey environmental uncertainty.

To combine trial conditions and internal states, we used the state-space model illustrated in Fig. 2a. Each behavior (Eqs. (1) and (2)) was calculated as a linear combination of the trial conditions and internal states (Eqs. (3) and (4)). These equations were then used to simultaneously estimate the behavior and internal states for all subjects. We were interested in examining (i) if our estimates follow the observed behavior, (ii) the characteristics that internal states and trial conditions independently capture, and (iii) how their internal states uniquely evolve to impact behavior. The model results of all subjects are shown in Supplementary Figs. 1–5. All model weights are in Supplementary Table 11.
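As a rough illustration of this structure, the sketch below accumulates trial history into two states and combines them linearly with a single trial condition. It is not the model defined by Eqs. (1)–(4) in the Methods: the leaky-accumulation form, the `retention` parameter, the weight names, and all numbers are assumptions chosen only to show how accumulated history can shift an estimate.

```python
def accumulate_state(inputs, retention=0.8):
    """Leaky running sum of past trial outcomes (assumed form).

    The value stored for trial t uses only trials before t, so the state
    reflects the history available when trial t begins.
    """
    states, x = [], 0.0
    for u in inputs:
        states.append(x)
        x = retention * x + u
    return states


def estimate_behavior(speed_flags, error_state, perturbed_state, w):
    """Linear combination of one trial condition and the two states."""
    return [
        w["intercept"] + w["speed"] * s + w["error"] * e + w["perturbed"] * p
        for s, e, p in zip(speed_flags, error_state, perturbed_state)
    ]


# Hypothetical toy session of four trials.
speed_errors = [0.2, 0.2, -0.1, 0.0]  # positive = slower than instructed
perturbed = [1, 0, 1, 0]              # 1 = trial was perturbed
speed_flags = [1, 0, 1, 0]            # 1 = fast trial, 0 = slow trial

error_state = accumulate_state(speed_errors, retention=0.5)
perturbed_state = accumulate_state(perturbed, retention=0.5)

# Made-up weights; a negative error weight shortens RT after slow movements.
weights = {"intercept": 0.8, "speed": -0.1, "error": -0.5, "perturbed": 0.05}
rt_hat = estimate_behavior(speed_flags, error_state, perturbed_state, weights)
print(error_state, perturbed_state, rt_hat)
```

Because each state is built only from earlier trials, two trials with identical conditions can still receive different estimates, which is the property the internal states are meant to capture.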

a Block diagram of our dynamical model, representing the brain, that models behavior to capture movement variability. Internal states are outlined by the dotted line to highlight their latent feedback structure in the model. b–d Examination of model variables for subject 6 reveals underlying dynamics from the internal states that lead to the improvement of estimated behavior from the model observed across all subjects. The black triangle marks trial 109. b Time-series of the observed (gray solid line) and estimated (black solid line) reaction time (RT) ± the 95% confidence interval (gray shaded area) over all trials for subject 6. The gray dotted line marks their average RT (1.07 s). c Time-series of the conditions (orange solid line) and states (purple solid line) over all trials for subject 6. The gray dotted line marks their average RT. Adding the conditions and states together yields the estimated RT in (b). d Time-series of the error state (blue solid line) and perturbed state (pink solid line) over all trials for subject 6. For demonstrative purposes, the states are not weighted but are scaled by their standard deviation. The gray dotted line marks 0. Adding the weighted combination of error and perturbed states together yields the states in (c). Goodness-of-fit for the (e) RT and (f) speed error (SE) models across all subjects measured using the coefficient of determination between the observed and estimated behavior, which ranges between 0% (worst) and 100% (best). We compared the behavioral models with internal states (With states) to a model with the same trial conditions but without internal states (Without states). Each marker is labeled by the subject it represents. Subject 6 is outlined in black. Adding internal states significantly improved the goodness-of-fit of the RT (two-tailed paired-sample t-test: t(9) = − 3.71, p = 0.0048) and SE (two-tailed paired-sample t-test: t(9) = − 5.62, p = 0.00032) model for all subjects, as larger values are better. 
Source data are provided as a Source Data file.

Overall, we first found that the estimated behavior follows the observed behavior. We found a significant positive correlation between the estimated and observed behavior in 9 out of 10 subjects for RT (range of two-tailed Pearson correlation values: 0.3 − 0.78, Supplementary Fig. 1) and in 10 out of 10 subjects for SE (range of two-tailed Pearson correlation values: 0.42 − 0.86, Supplementary Fig. 2). Only subject 1’s RT was not statistically significant (two-tailed Pearson correlation: r = 0.19, p = 0.065). To illustrate the relationship between the estimated and observed behavior, consider subject 6, whose session performance was around the population average. Figure 2b shows subject 6’s RT across all their trials, with their observed behavior in gray and their estimated behavior in black. Indeed, our estimates followed key features from their observed behavior (two-tailed Pearson correlation: r = 0.73, p = 4.75 × 10−23), including trial-to-trial changes and gradual changes such as between trials 100 and 125. For example, the estimate on trial 109 (black triangle) matches what was observed; subject 6 reacted faster than their average.

Next, we explored which parts of our model allowed the estimates to follow the observed behavior. Within the model, trial conditions accounted for the trial-by-trial changes and internal states accounted for gradual changes across all subjects (Supplementary Fig. 6a, b) for both RT (Supplementary Fig. 3) and SE (Supplementary Fig. 4). As such, the states captured the accumulation of subtle changes each subject exhibited. For example, the states (purple) for RT monotonically increased over trials for subjects 4, 5, 7, and 10 (Supplementary Fig. 3). This feature reproduced subjects progressively reacting slower possibly due to fatigue (Supplementary Fig. 1), which could not be replicated by the portion of the model responsible for trial conditions (orange). As another example, Fig. 2c shows the estimated RT of subject 6 separated into the trial conditions (orange) and internal states (purple). On trial 109 (black triangle) with the conditions slow and up, subject 6 should have reacted slower than average. However, the states outweighed the conditions, as demonstrated in subject 6 by their faster reaction time. By incorporating both trial conditions and internal states, our model captured features essential in realistic behavior that neither would be able to convey independently.

To determine why subjects behaved the way they did despite trial conditions, we next investigated the structure of the two internal states to understand their dynamics. The monotonic nature of the states observed in the population was carried over by one or both states for most subjects (Supplementary Fig. 5). We found the perturbed state (pink) to be responsible for the monotonic structure of the RT for subjects 4, 5, 7, and 10. This result suggests that these subjects reacted slower out of hesitancy originating from the accumulation of perturbations, making them less certain about their environment. Even subjects with nonmonotonic states exhibited trials with uncertainty. For example, Fig. 2d shows error state (blue) and perturbed state (pink) across all trials for subject 6. Their error and perturbed state on trial 109 (black triangle) are both positive, indicating that they recently moved slower than instructed and were perturbed. The perturbed state conveyed that recent successions of perturbations caused subject 6 to react and move faster in an attempt to counteract the uncertain environment. Overall, this data suggests that the internal states grant access to latent information about subjects.

Finally, we looked at our population of subjects to test whether adding internal states improved our ability to explain movement variability over using trial conditions alone, by comparing the coefficient of determination (a goodness-of-fit metric that measures the proportion of the behavioral variability that can be explained by the model variables) of the model with states to one without states. Figure 2e, f shows that adding the internal states to the model significantly improved the estimation of both RT (two-tailed paired-sample t-test: t(9) = − 3.71, p = 0.0048) and SE (two-tailed paired-sample t-test: t(9) = − 5.62, p = 0.00032) across all subjects. The higher percentage means more of the variability is accounted for by the model variables. For example, the goodness-of-fit for subject 6 (black) improved by 16%. However, the model structure fits some subjects better than others. Specifically, subjects 1 through 4 had outlying model performance for RT (Fig. 2e) compared to other subjects. This is notable because these subjects also had the lowest session performance. Since their model performance was low prior to adding internal states, this indicates that their RT varied with factors other than speed and direction, which could also explain their low session performance. Despite this, adding internal states also improved the deviance (Supplementary Fig. 6c, d) and 10-fold cross-validation using the fitted internal states for RT (Supplementary Fig. 6e). This, in addition to the fact that adding internal states improved their model fit and the inlying goodness-of-fit of their SE model, still supports the validity of interpreting their RT model.
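The comparison described here can be sketched in a few lines. The example assumes the common definition of the coefficient of determination, 1 − SS_res/SS_tot, and computes the paired-sample t statistic on per-subject differences (without states minus with states, which gives a negative t when adding states helps). The goodness-of-fit values are made up for illustration, and the helper names are hypothetical.

```python
import math
import statistics


def r_squared(observed, estimated):
    """Coefficient of determination, assuming 1 - SS_res / SS_tot."""
    mean_obs = statistics.fmean(observed)
    ss_res = sum((o - e) ** 2 for o, e in zip(observed, estimated))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def paired_t(a, b):
    """Paired-sample t statistic and degrees of freedom for a minus b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical per-subject goodness-of-fit values (as proportions).
r2_without_states = [0.10, 0.20, 0.15, 0.30]
r2_with_states = [0.25, 0.30, 0.35, 0.40]

t_stat, dof = paired_t(r2_without_states, r2_with_states)
print(round(t_stat, 2), dof)
```

The statistic alone does not give a p-value; that requires the t-distribution with `dof` degrees of freedom, for example through `scipy.stats.ttest_rel` if SciPy is available.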

In summary, internal states are essential for completely capturing movement variability. They conveyed slow evolving characteristics in the behavior, caused by retained trial history, that were not accounted for by the trial conditions.

Learning from previous trials improves performance

The values of the weights on model variables reveal what each subject prioritized when they varied their behavior. Thus, we explored whether we could use our model to uncover different strategies used by subjects. Specifically, we hypothesized that subjects with higher session performance learned selective information from previous trials. Indeed, we found that they learned from the error state but not the perturbed state. We tested this by considering the relationship between subjects’ weights on the internal states and session performance as a population (see Supplementary Fig. 7 for all model variables).

We found a negative correlation between the weights on the error state and session performance for both RT in Fig. 3a (two-tailed Pearson correlation: r = − 0.63, p = 0.05) and SE in Fig. 3b (two-tailed Pearson correlation: r = − 0.74, p = 0.01). Hence, higher performance corresponded to negative weights on the error state whereas lower performance corresponded to positive weights. Negative weights would cause high performing subjects to reduce their RT and SE after accumulating positive errors by moving slower than instructed. Under the same circumstances, lower performing subjects would continue to react and move more slowly than instructed due to the positive weights on the error state, thereby accumulating more errors. These results indicate that subjects with high performance adjusted their behavior to improve outcomes while subjects with low performance maintained their error tendencies. This finding suggests that higher performers learn based on feedback.

A comparison between the internal state weights and session performance reveals that higher performers learn from error and become more vigilant after perturbations. The larger the magnitude of the weight, the larger the impact the internal state has on behavior. The sign of the weight determines the direction of the impact the internal state has on behavior. Each marker is labeled by the subject. Average session performance (51%) is marked by the vertical gray dotted line. A weight of 0 (i.e., internal state does not impact behavior) is marked by the horizontal gray dotted line. The least-squares line is marked as the gray dashed line. All relationships were quantified using a two-tailed Pearson correlation without adjusting for multiple comparisons. a There is a significant relationship (two-tailed Pearson correlation: r = − 0.63, p = 0.05) between session performance and weight of error state on RT. Higher performers countered their error by reacting faster than average (0 > βSE) and lower performers maintained their error by reacting slower than average (0 < βSE) after moving too slow. b There is a significant relationship (two-tailed Pearson correlation: r = − 0.74, p = 0.01) between session performance and weight of error state on speed error (SE). Higher performers tended to move too fast (0 > βSE) and lower performers tended to move too slow (0 < βSE) after moving too slow. c There is not a significant relationship between session performance and weight of perturbed state on RT. Most subjects hesitated after perturbation trials by reacting slower (0 < βP). d There is not a significant relationship between session performance and weight of perturbed state on SE. Half of the subjects hesitated by moving slower (0 < βP) and the other half hastened by moving faster (0 > βP) after perturbation trials. Refer to Supplementary Fig. 6 and Supplementary Table 11 for all the weights. Source data are provided as a Source Data file.

Alternatively, we did not foresee subjects learning from perturbed trials to improve their session performance due to the unpredictability of these trials. Indeed, Fig. 3c, d show that there was no relationship between the weight of the perturbed state and session performance on RT and SE, respectively. Instead, we found that subjects responded by either hesitating (i.e., react slower or move too slow) or counteracting (i.e., react faster or move too fast) for subsequent trials when they perceived the environment to be uncertain. More than half of the subjects positively weighted the perturbed state on RT, indicating hesitation to react. In terms of SE, we found half of the subjects moved slower (positive weight) in response to perturbation history while the other half moved faster (negative weight). We suggest that those who moved faster did so because they were exerting more force in their future movement in case they were perturbed.

In summary, our model could account for differences in subjects’ strategies related to session performance, where higher performance corresponded to learning to counteract errors directly based on previous feedback. Though we could not find a complementary strategy against perturbations, we did find that subjects either hesitated or hastened to react when they perceived the environment to be uncertain. Another key strategy was speed, where larger magnitudes of weights on speed correlated with high session performance (Supplementary Fig. 7c).

Large-scale brain networks encode internal states

Our combined experimental and modeling results provide support for the proposal that internal states can account for behavioral variability, within- and between-subjects. We next asked whether it was possible to gain insight into neural correlates of these internal states. More specifically, we asked whether such states are encoded by large-scale brain networks. To do this, we first assessed whether we could identify a set of brain regions linked to each internal state, and then determined which regions preferentially map to distinct large-scale brain networks related to session performance.

As a result of our unique experimental procedure, we had access to neural recordings from intracranial depth electrodes from each human subject simultaneously as they performed the motor task that was used to derive their internal states. Subjects were implanted with electrodes by clinicians to localize the epileptogenic zone for treatment. Illustrated in Fig. 4a, this granted us access to local field potential activity from a broad coverage of nonmotor regions, where we hypothesized the brain encoded internal states. These regions were first labeled anatomically using semi-automated electrode localization before being mapped to large-scale brain networks (see Methods, Supplementary Fig. 8a, b).

a Subjects were implanted with multiple intracranial depth electrodes. The electrode coverage is visualized by mapping subject-specific coordinates of each channel onto a template brain called cvs_avg35_inMNI152 from Freesurfer94. Electrodes are made up of multiple channels. For example, the channel clinically called X4 for subject 6, located in the right intraparietal sulcus (IPS R), is outlined in black where “A” means anterior and “P” means posterior. b The spectrogram of channel X4 in IPS R for subject 6 for three trials during Speed Instruction, time-locked to its onset (white solid line). It is represented in the time-frequency domain. The color of each pixel represents the normalized power. c Time-series of the error state (blue solid line) for subject 6. The black upward-pointing triangle marks the value of the error state for the trials corresponding to the spectrograms in (b). The non-parametric cluster statistic identified a significant cluster (outlined by the solid black line in (b)) where power correlated with the error state across all channels in the IPS R in the population. For example, the power in the cluster increases for large positive magnitudes of the error state (too slow) in trials 29 and 109 but decreases when the error state is large and negative (too fast) in trial 77. d The statistical map over the time-frequency domain from the non-parametric cluster statistic between the IPS R and the error state across the population during Speed Instruction. Each pixel represents the hierarchical average across channels and subjects of the two-tailed t-statistic of the two-tailed Spearman’s correlation between the error state and spectrogram. The white solid line marks where data was time-locked to epoch onset. Clusters were found as adjacent time-frequency windows that show a significant correlation (outlined in black). 
e There is a significant relationship (two-tailed Spearman’s correlation: r = − 0.31, p = 3.87 × 10−4) between the average normalized power in the cluster and the error state for the example in (b). This correlation coefficient also represents the encoding strength of this channel. The black star markers correspond to the trials in (c). The least-squares line is marked as the gray dashed line. Source data are provided as a Source Data file.

To identify the neural correlates amongst these regions with an unsupervised and data-driven method, we used a non-parametric cluster statistic36 (see Methods) between the spectral data of each region and each internal state across the population (Fig. 4b, c). This method finds windows of time (relative to epoch onset) and frequency (between 1 and 200 Hz) in which the spectral power of a region significantly correlates with the internal state across trials as demonstrated in Fig. 4d. The statistics provided us with two sets of neural correlates, one for each internal state.

Our modeling results showed that subjects weighed internal states differently, primarily based on their session performance. We suspected that this would be reflected in the brain for each subject by how well these regions encoded the states through the strength of their neural correlates. The degree to which a subject encodes an internal state in a region, which we called the encoding strength, was quantified by correlating the average power within the time-frequency window (from the population statistic) to the state on a trial-by-trial basis (see Methods). Figure 4e shows an example of how the encoding strength is obtained for a channel in subject 6 using the neural correlate from Fig. 4b–d.
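
As a rough illustration of this computation, the following Python sketch averages the normalized power inside a time-frequency window for each trial and takes the magnitude of the Spearman correlation with the state. The data layout (`spectrogram[frequency][time]` per trial), the function names, and the toy values are assumptions made for the example and do not reproduce our analysis code.

```python
from statistics import mean


def rank(values):
    """Return 1-based ranks, assigning tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman correlation, computed as the Pearson correlation of ranks."""
    return pearson(rank(x), rank(y))


def window_power(spectrogram, f_idx, t_idx):
    """Average normalized power over one time-frequency window of a trial."""
    return mean(spectrogram[f][t] for f in f_idx for t in t_idx)


def encoding_strength(trial_spectrograms, state, f_idx, t_idx):
    """Magnitude of the trial-by-trial correlation between power and state."""
    power = [window_power(s, f_idx, t_idx) for s in trial_spectrograms]
    return abs(spearman(power, state))


# Toy example (made-up values): power falls as the state rises.
state = [0.5, -1.0, 2.0, 0.0]
trials = [[[-s, -s], [-s, -s]] for s in state]
strength = encoding_strength(trials, state, f_idx=range(2), t_idx=range(2))
print(round(strength, 3))  # 1.0
```

Taking the magnitude means that a strongly negative correlation counts as strong encoding, so the sign of the relationship has to be reported separately.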

Dorsal attention network encodes error state

First, we found that the error state was encoded primarily by regions in the DAN (see Table 2 for details and Fig. 5a for visualization). The regions in DAN (dark blue) included the right intraparietal sulcus (IPS R), right middle temporal gyrus (MTG R), left superior frontal gyrus (SFG L), left superior parietal lobule (SPL L), and right superior temporal sulcus (STS R). The second most prominent network was the visual network (yellow), which included the right parieto-occipital sulcus (POS R), left anterior transverse collateral sulcus (ATCS L), right cuneus (Cu R), and right inferior temporal gyrus (ITG R). Some regions from other networks also appeared, such as the right angular gyrus (AG R) and right posterior-dorsal cingulate gyrus (dPCC R) in DN as well as the right supramarginal gyrus (SMG R) from the Ventral Attention Network (VAN). However, in the regions we recorded from, the majority of the regions that encoded the error state were located in DAN. Seven of the 15 grouped clusters found to encode the error state belonged to the DAN. We verified the primacy of DAN encoding the error state over DN by comparing the distribution of the magnitude of their cluster statistics, where higher values indicate stronger clusters. Indeed, we found DAN to have a higher average cluster statistic than DN (two-tailed two-sample t-test: t(53) = 2.32, p = 0.024; Supplementary Fig. 8c).

Summary of regions found by our analysis that encode the (a) error state and (d) perturbed state. They are highlighted on an inflated template brain called cvs_avg35_inMNI152 from Freesurfer94 based on the large-scale brain network they belong to: dorsal attention (DAN) in dark blue, default (DN) in red, somatomotor in green, ventral attention (VAN) in light blue, visual in yellow, and none in black. The light gray represents the gyri and the dark gray represents the sulci. The white represents regions that were included in our analysis but were not found to encode the state based on performance. Subjects encoded the (b) error state (two-tailed Pearson correlation: r = 0.66, p = 1.80 × 10−8) and (e) perturbed state (two-tailed Pearson correlation: r = 0.69, p = 2.71 × 10−14) in their neural activity based on their session performance. Each marker represents the average encoding strength of a region for a subject and is colored by the network the region belongs to. An encoding strength of 1 means the state is strongly encoded by the activity of a region whereas 0 means the state is not encoded. Average session performance (51%) is marked by the vertical gray dotted line. The least-squares line is marked as the gray dashed line. Subjects with higher performance had neural activity that modulated significantly more with either internal state than subjects with lower performance. Examples of subjects with high and low session performance for the (c) error state and (f) perturbed state: the left superior parietal lobule (SPL L) in the DAN, compared between subject 9 and subject 4, and the right angular gyrus (AG R), compared between subject 10 and subject 1. Each marker represents a trial, with the corresponding neural activity of the cluster for a channel in the region and normalized state value. The gray dashed line represents the least-squares line.
The two-tailed Spearman’s correlation (r) and p-value (p) are included in each panel, where the correlation magnitude was used as the encoding strength. Source data are provided as a Source Data file.

Two groups of regions emerged based on (i) when during the trial their activity correlated with the error state and (ii) in which frequency bands (see Methods). One half of these regions encoded the error state throughout the session as persistent activity (activity that continues across all movement phases) in the hyper gamma frequency band (100–200 Hz). This activity was negatively correlated with the error state, meaning these regions exhibited higher deactivation when a subject moved slower than instructed. The other half encoded the error state as phasic activity (activity isolated to either planning, execution, or feedback) in frequency bands <15 Hz. This activity was positively correlated with the error state for most regions. Overall, these regions use both persistent and phasic activity to encode the error state.

Recall our result above showing that, depending on their session performance, subjects used opposing strategies in how they used their error state to change how they reacted in future trials (Fig. 3a, b). We next investigated whether this relationship would be reflected in how strongly these regions encode the state. Since higher performance corresponded to subjects learning from their errors, we expected a positive correlation between encoding strength and session performance. We found this to be true (two-tailed Pearson correlation: r = 0.66, p = 1.80 × 10−8), as shown in Fig. 5b with DAN in dark blue, DN in red, VAN in light blue, and the visual network in yellow. For example, the SPL L, a key hub of DAN, modulates its neural activity based on the error state for a subject with above average performance (subject 9) but not for a subject with below average performance (subject 4) (Fig. 5c).

Default and dorsal attention networks encode perturbed state

Second, we further found that the perturbed state was encoded primarily by regions in the DN and DAN (see Table 3 for details and Fig. 5d for visualization). The regions in DN (red) included the right angular gyrus (AG R), left anterior cingulate gyrus (ACG L), right middle temporal gyrus (MTG R), right posterior-dorsal cingulate gyrus (dPCC R), and right superior temporal sulcus (STS R). The regions in DAN (blue) included IPS R and SPL L. As with the error state, regions in the visual network (yellow) also encoded the perturbed state. They consisted of the right cuneus (Cu R), right inferior temporal gyrus (ITG R), left fusiform gyrus (FuG L), and right parieto-occipital sulcus (POS R). Other networks that appeared included the VAN (SMG R) and even the somatomotor network through the left subcentral gyrus (SubCG L). The subcortical region right hippocampus (Hippo R) briefly encoded the perturbed state after execution. However, since we were only interested in large-scale brain networks of the neocortex, Hippo R was not classified in this study. Although the majority of regions we found to encode the perturbed state were in DN, we did not find a significant difference between the magnitude of the cluster statistics in DN and DAN (two-tailed two-sample t-test: t(22) = 0.16, p = 0.87; Supplementary Fig. 8d).

The regions in DN and DAN that encoded the perturbed state did so with phasic activity in frequency bands under 15 Hz (i.e., delta, theta, alpha). Most regions had activity that was positively correlated with the perturbed state; trials with a high perturbed state (associated with recent perturbations) coincided with higher activation in DN and DAN.

Recall our earlier result in which subjects alter their behavior (i.e., hesitate or hasten) with regard to the perturbed state. Although there was no consistent strategy based on session performance, we still asked whether there would be a neurological relationship between session performance and how the perturbed state was encoded. As with the error state, we expected subjects with above average performance to have higher encoding strength because they generally weighted the perturbed state with a higher magnitude than those with below average performance (Fig. 3c–d). Indeed, Fig. 5e shows the networks that modulate their neural activity with the perturbed state based on session performance (two-tailed Pearson correlation: r = 0.69, p = 2.71 × 10−14). This figure also shows regions from other networks, which include the DAN in dark blue, VAN in light blue, somatomotor network in green, and visual network in yellow. For example, as a hub for the DN, the AG R would increase activity when the perturbed state was high (i.e., after perturbation trials) for subjects with high session performance (subject 10) but not for subjects with low session performance (subject 1) (Fig. 5f). Even though our models could not find a consistent strategy based on session performance, subjects with above-average performance still modulated their activity to match the perturbed state.

Connectivity correlates with performance and strategy

Recall that the weight a subject puts on their internal states provides insight into their learning strategies. Since subjects encode the internal states in distinct networks, we hypothesized that these strategies would further be reflected by the functional connectivity of the networks that we identified as encoding both the internal state and session performance. Simply put, pairs of regions that are spatially separate yet whose neural activity is correlated are functionally connected37. We quantified functional connectivity by correlating the average power within the time-frequency window used for encoding strength on a trial-by-trial basis for each channel per subject (Fig. 6a, b). Taking the average of these correlations resulted in a value that summarizes the functional connectivity between a pair of regions called subject connectivity strength. Population connectivity strength, found by averaging subject connectivity strength across all pairs, is summarized in Supplementary Fig. 9.
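
The two levels of this measure can be sketched in Python as follows. The sketch assumes that each channel is summarized by a list of per-trial average window power, that channel connectivity strength is the magnitude of the Spearman correlation between two such lists, and that subject connectivity strength is the mean over the resulting matrix. The function names and toy values are hypothetical and are not our analysis code.

```python
from statistics import mean


def rank(values):
    """Return 1-based ranks, assigning tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman correlation, computed as the Pearson correlation of ranks."""
    rx, ry = rank(x), rank(y)
    mx, my = mean(rx), mean(ry)
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / (sxx * syy) ** 0.5


def channel_connectivity(region_a, region_b):
    """Matrix of |Spearman r| for every channel pair across two regions.

    Each region is a list of channels; each channel is a list holding the
    average window power of every trial, in the same trial order.
    """
    return [[abs(spearman(a, b)) for b in region_b] for a in region_a]


def subject_connectivity_strength(matrix):
    """Average of all channel connectivity strengths for one subject."""
    return mean(v for row in matrix for v in row)


# Toy data (made-up values): two channels per region, four trials each.
region_a = [[1.0, 2.0, 3.0, 4.0], [4.0, 3.0, 2.0, 1.0]]
region_b = [[2.0, 4.0, 6.0, 8.0], [1.0, 3.0, 2.0, 4.0]]
matrix = channel_connectivity(region_a, region_b)
strength = subject_connectivity_strength(matrix)
print(round(strength, 3))  # 0.9
```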

a Average normalized power for channels in the right intraparietal sulcus (IPS R) and right middle temporal gyrus (MTG R) of subject 6 across trials. The value for each trial comes from averaging the normalized power in the time-frequency windows identified by the non-parametric cluster statistic (Table 2 and Table 3). This figure uses the results of the error state. b Matrix of channel connectivity strengths found by taking the magnitude of two-tailed Spearman’s correlation value between the average normalized power of channels in IPS R and MTG R. Values close to 1 (red) indicate high correlations and values close to 0 (yellow) indicate low correlations. c Comparison between subject connectivity strength (found by taking the average of the channel connectivity strength) and session performance reveals high-performing subjects engaged in stronger connectivity between IPS R and MTG R (two-tailed Pearson correlation: r = 0.96, p = 0.17). The correlation value represents the performance connectivity strength. Directed graph depicting performance connectivity strength across regions for (d) error state and (e) perturbed state. The regions are colored by the large-scale brain network they belong to: dorsal attention (DAN) in dark blue, default (DN) in red, ventral attention (VAN) in light blue, and visual in yellow. Only pairs with positive relationships between connectivity strength and session performance are shown (i.e., higher performance with higher subject connectivity). The magnitude of the correlation between connectivity strength and session performance is depicted by the thickness of the edge; the higher the correlation, the thicker the edge. Values below 0.5 were truncated. The regions are ordered by when they encode each state and its phase is labeled along the circumference, demonstrating synchrony across time. See Supplementary Fig. 9 for full performance connectivity. Source data are provided as a Source Data file.

Unfortunately, we were not able to observe all possible pairs of regions because either the pair was not represented in the data set, or too few subjects had the pair (we required n ≥ 3) to draw any conclusions. Based on the behavioral results, we were interested in the variability of connectivity between subjects. Specifically, since connectivity strength is subject-specific, we expected it to vary with how subjects performed and with the different strategies we observed earlier. For example, subjects with high performance will exhibit higher subject connectivity strength between key regions in distinctive networks (Fig. 6c).
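
A minimal Python sketch of this subject-level comparison is given below. It assumes that each pair of regions has one connectivity strength and one session performance value per subject, skips pairs with fewer than three subjects, and keeps only pairs whose Pearson correlation is at least 0.5, mirroring the truncation used for display in Fig. 6d, e. The function names, the dictionary layout, and all numbers are made up for illustration.

```python
from statistics import mean


def pearson(x, y):
    """Pearson correlation; returns None if either input has zero variance."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return None
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / (sxx * syy) ** 0.5


def performance_connectivity(strengths, performance, min_subjects=3):
    """Correlate subject connectivity strength with session performance.

    Returns None when fewer than min_subjects subjects have the pair.
    """
    if len(strengths) != len(performance):
        raise ValueError("one performance value is needed per subject")
    if len(strengths) < min_subjects:
        return None
    return pearson(strengths, performance)


def edges_to_draw(pairs, cutoff=0.5):
    """Keep pairs whose connectivity rises with performance (r >= cutoff)."""
    edges = {}
    for pair, (strengths, performance) in pairs.items():
        r = performance_connectivity(strengths, performance)
        if r is not None and r >= cutoff:
            edges[pair] = r
    return edges


# Made-up values: (subject connectivity strengths, session performance in %).
pairs = {
    ("IPS R", "MTG R"): ([0.2, 0.5, 0.8], [40, 55, 70]),
    ("SPL L", "Cu R"): ([0.6, 0.3], [45, 60]),          # too few subjects
    ("AG R", "SMG R"): ([0.7, 0.4, 0.1], [40, 55, 70]),  # negative relation
}
edges = edges_to_draw(pairs)
print({pair: round(r, 3) for pair, r in edges.items()})
```

With so few subjects per pair, a high correlation coefficient does not imply statistical significance, which is why these values are treated only as descriptive edge weights.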

Our results above demonstrated that high session performance was accompanied by subjects compensating their behavior in response to the error state. Using the set of regions that encode the error state, Fig. 6d further shows that subjects with high performance favored connectivity between regions in the DN (red), DAN (dark blue), VAN (light blue), and visual network (yellow). We found that regions that encoded with persistent activity connected to regions that encoded with phasic activity. Specifically, the persistent activity from DAN (IPS R and SPL L) and VAN (SMG R) projected mainly to regions in the visual network during key phases of the trial (i.e., planning, execution, feedback). This result suggests that the error state is held and distributed by the attention networks to modulate visual attention. An increase in such attention could account for the counteracting behavior observed in top performers, such as reacting faster after moving slower than instructed (Fig. 3a). We also observed a significant correlation between the persistent activity and phasic activity during feedback for the SPL L, which is a key hub of DAN. This relationship implies that persistent and phasic activity have different roles when encoding the error state. Perhaps the phasic activity represents the various sensory processes (depending on the phase) used to extrapolate information about the error state and the persistent activity holds this information in memory for accessibility by other regions. Then, the connection within SPL L could be an example of updating between integration and memory. Overall, subjects with high session performance favored connections between DAN and visual networks to encode the error state. These connections could account for how these subjects learned from their errors, specifically by updating their memory based on visual feedback to modulate attention in future trials.

These results also revealed that subjects with high session performance altered how they reacted (i.e., hesitate or hasten) in response to the perturbed state. Figure 6e further shows the connectivity between the regions encoding the perturbed state that was favored by subjects with higher session performance. Notably, we found more connections for the perturbed state than for the error state. This can be interpreted as the perturbed state being more disruptive to their behavior and requiring the integration of sensorimotor pathways for interpretation during planning and feedback. In general, most of the connections we found were between DN and the visual network. This relationship could allow for the communication of visual information from feedback to be available for the DN during planning, such as to update their expectation about the environment of the motor task. This information could then be projected to regions in the visual network and VAN to plan their future behavior. For example, dPCC R (hub of DN) during early planning projects to SMG R (hub of VAN) during late planning and early execution. Taken together, these results suggest that the DN could be modulating bottom-up visual attention based on the perturbed state. There are also notable connections between DN and DAN: IPS R and dPCC during planning as well as IPS R from planning to MTG R during feedback. These junctions suggest points when DN and DAN communicate task-relevant information that could also aid in modulating visual attention. Overall, high session performance favored connectivity between DN and other relevant networks whose activity modulates based on the perturbed state during planning and feedback, which subjects could have used to adapt their responsiveness to perturbations, as we observed in their behavior.

Discussion

In the present study, we first identified two internal states, based on error and environmental history, that induce movement variability in humans. The degree to which states contribute to an individual’s variability reveals different strategies based on session performance regarding how they used their states to inform future behavior. Remarkably, we then found that these internal states were linked to encoding in large-scale brain networks, DAN and DN. Taken together, our findings reveal differences in large-scale brain networks that can distinguish subjects by their session performance: (i) high performers modulate network activity on a trial-by-trial basis with respect to their internal states and (ii) their learning strategy is supported by explicit connections within and between networks during phases of movement.

The general effects of error and the environment on movement variability are well documented in motor control. Traditionally, the goal of optimal feedback control is to minimize error during movement, where larger errors require more variability to correct the movement38,39. By accounting for the accumulation of errors from trial to trial, we also found that variability scaled based on error history for those with above average session performance. In everyday life, we adapt our behavior to fit the environment based on prior experience. However, it is difficult to adapt when disturbances are rare and unpredictable. Fine and Thoroughman found that it is difficult for subjects to learn how to respond to disturbances that occur in <20% of trials40. They proposed an adaptive switch strategy that depends on the environmental dynamics: ignore performance from trials with rare disturbances and learn when they are common41. Complementarily, we found that subjects responded in trials after perturbations by either reacting hesitantly or vigorously. Nevertheless, their strategy was not a predictor of how well they performed our reaching task, which can be further explored in future studies.

To learn our speed-instructed motor task, subjects needed to keep track of errors using working memory. Some of their errors were self-inflicted (e.g., forgetting the speed instruction) while others were caused by unexpected perturbations. In either case, we found that our subjects learned the task by monitoring their history of errors to decide when to allocate attention using the DAN. Our findings are in agreement with our current knowledge about DAN, specifically for its control of visuospatial attention42. Other studies have found that it activates before and during expected (top-down) as well as after unexpected (bottom-up) visual stimuli43. Therefore, DAN appears to combine bottom-up information (i.e., unexpected perturbations) with top-down information (i.e., self-inflicted errors) when deciding how much attention to allocate for future trials.

More specifically, we found that DAN encoded using two frequency patterns: activating below 15 Hz and deactivating in frequencies above 100 Hz. The former was found when subjects either recently moved faster than instructed (to which they responded by slowing down) or after recently perturbed trials. This finding is reminiscent of the speed-accuracy trade-off phenomenon. Speed-accuracy trade-off is observed during motor learning as a form of behavioral variability where subjects must balance moving faster at the cost of making more errors to optimize performance44. This phenomenon innately requires tracking history45. This suggests that DAN is not only tracking history but is modulating it for learning. Studies using fMRI also corroborate our observation. Increases in Blood-Oxygen-Level-Dependent (BOLD) signal relative to baseline in DAN have been reported when subjects were instructed to prioritize speed (instructed as fast or slow) over accuracy during a response interference speed-accuracy trade-off task46. Another study using an anti-saccade task found that DAN activation, measured through BOLD signal, positively correlated with RT (i.e., more activation when slowing down) and was lowest on trials with large errors (which compares to our task when the error state would be close to zero)47. In a visually guided motor sequence learning task, DAN activated (as measured through BOLD signal) in response to large errors during early learning, which the authors related to active visuospatial attention when first learning the sequence48. Taken together, our results directly implicate DAN as a network for encoding and tracking history.

The persistent activity of DAN that we found in frequencies above 100 Hz is characteristic of working memory49,50,51. During motor planning in tasks with working memory, DAN has been shown to maintain task-relevant information, such as target location, during delay periods using persistent activity in both whole-brain and single unit recordings52,53. DAN has also been linked to working memory closely tied to visual attenuation related to memory load and top-down memory attention control during visual working memory tasks54. Though our task does not explicitly study working memory, given the evidence, our results suggest that DAN is tracking the accumulation of past errors in working memory. This would also support our connectivity results as information held in working memory can be easily accessed by multiple systems, such as for sensorimotor integration or visual processing, for recalling and updating55,56,57.

Finally, we found that the encoding strength of regions in the DAN and functional connectivity between these regions, along with the visual network, scaled based on the subject’s overall performance. Though these results are preliminary, we linked this with observations that subjects have different strategies. Those with above average session performance more strongly encoded error history in and between the regions in the DAN. Hence, they were more engaged in the task and modulated their attention based on the error state. This led to them slowing down after they moved too quickly and vice versa as predicted by their model weights. Meanwhile, below average performers have poor attentional control and memory capacity and thus did not learn from their mistakes. Studies have shown that poor overall behavioral performance is related to decreased activity in DAN58,59,60,61,62 (called out of the zone63), fluctuations in attention and working memory known as a lapse in attention64,65,66, and poor connectivity in DAN67,68. Clinically, studies of Attention Deficit Hyperactivity Disorder (ADHD) found compromised performance during working memory tasks is related to poor attentional control in DAN-related regions69,70, similar to what we observed in our poor performers.

The random perturbations made our task more difficult by creating uncertainty in the environment. Our models show that subjects also kept track of past perturbations suggesting possible attempts to learn the environment. We found that regions whose activity correlated with the perturbed state were in DN and DAN. They did so primarily in the frequency bands theta and alpha (<15 Hz). The involvement of DAN and the perturbed state was discussed with the error state for its connection to allocating attention. As for DN, it encoded the perturbed state by increasing activity when the environment was perceived to be more uncertain. This function of the DN is similar to a recent study by Brandman et al.71 in which they found that the DN activated immediately following unexpected stimuli in the form of surprising events during movie clips. They suggested that the DN could be involved in prediction-error representation, which our results also support. Furthermore, they also found that DN coactivated with the hippocampus during unexpected stimuli. This finding parallels a previous report from our dataset which demonstrated activation of the hippocampus in response to motor uncertainty72. A proposed process model of reinforcement learning incorporates regions in the DN and hippocampus that predict and evaluate the semantic knowledge about the environment to inform future behavior73. Taken together, our findings suggest that we captured the DN responding to the unexpected stimuli by updating semantic knowledge about the environment which informs future behavior based on the perturbed state.

Behaviorally, our model did not establish a link between subjects’ performance and how they handled past perturbations, but our analysis of neural activity revealed that subjects with high session performance demonstrated increased activation of regions in DN in response to the history of perturbations as well as correlated activity across regions between trials. Since subjects have no control over the perturbations, we speculate that they attempted to learn how to react in a way that optimizes their performance. Although they were applied to random trials and in random directions, perturbations were only applied during the beginning of the movement. Therefore, subjects can learn to prepare themselves in a way they see fit. Our preliminary findings suggest that high performers effectively implement their new semantic knowledge about the environment to explore different approaches to prepare for the possibility of future perturbations. DN becomes more activated during early learning48, particularly when motor imagery is used74. In fact, athletes (i.e., experts or high performers) have been shown to activate the DN when employing strategies that decrease variability, resulting in stable performance during movement68. The phenomenon, known as in the zone63, has been linked to DN activation with consistent performance associated with preparedness75,76 and vigilance77. Hence, activation of DN indicates those subjects are prepared for the chance of a perturbation. Taken together, these findings suggest that high performers react to uncertainty by heightening vigilance through activation and connectivity in DN in conjunction with the VAN and the visual network.

Observing movement variability in the form of motor error is common in motor control, with paradigms typically focused on aspects of motor learning. Numerous motor control studies have found, directly or indirectly, the involvement of regions in DAN and working memory53,56,78. In fact, our results align with classic motor control reports when considering their results in terms of networks. For example, Diedrichsen et al.79 identified neural correlates of error in DAN and visual networks, represented by SPL and POS respectively, identical to ours. Gnadt & Andersen52 observed persistent activity in IPS (hub for DAN) in relation to target location during the delay in motor planning, connecting their results to memory. In a study directed towards large-scale brain networks, DAN activation during early learning was correlated with decreased error rate, which the authors related to active visuospatial attention when first learning the sequence48.

This study highlights the complexity of behavioral and neural data as well as how challenging it is to disentangle internal states from other processes. Nevertheless, we acknowledge several limitations inherent to our approach. First, it is possible that some of our neural data include results from epileptic brain regions in which activity could differ from comparable regions in healthy humans, despite precautions we took to minimize this possibility as discussed in Methods. The effects of anti-epileptic medications are another confounding variable that could have influenced the magnitude of the results, though subjects ceased their medications during clinical investigation. At this time, the only ethical method to record from the brain necessary for our study using SEEG depth electrodes in humans is while they are implanted for clinical purposes. Second, our behavioral data were limited by the design of the motor task and trial conditions, including two speeds, four directions, and few perturbation trials. Since internal states rely on the accumulation of history across trials, the validity of a state such as the perturbed state does not depend on the number of perturbed trials but rather the overall number of trials. For example, if no trials were perturbed, then the perturbed state would remain 0. Future experiments could explore other trial conditions, like introducing obstacles into the task space or other stimuli such as audio, or varying the probability of perturbations. One could also imagine designing a study that picks trial conditions to produce the desired variability from a subject based on model inferences about their internal states. Third, we focused on using a simple modeling approach, which raises the possibility that a key factor in a subject’s behavioral variability may be absent from our model.
Conversely, this simplicity allows for other variables, such as other trial conditions or internal states, to be easily designed and integrated to create a model for a variety of behavioral tasks. Finally, we want to emphasize that the performance-related results should be taken as preliminary as the sample sizes for these statistics were limited to the number of subjects implanted in the same regions. Hence, studies with larger sample sizes are needed to make any conclusive statements.

In conclusion, our findings provide a fresh viewpoint for motor control research. Behaviors are readily measured every day from devices such as smartphones. Our results raise the possibility that the underlying history of measured behaviors could be used to make inferences about a person’s brain state without needing to collect electrophysiological data, saving time and money in the health field for personalized medicine80 or business ventures such as sports81. Future studies in motor control should consider the effect of these networks on motor control and should account for the effects of internal states as we found that they play a significant role in governing behavior and its variability.

Methods

Recording neural data from humans

Ten human subjects (seven females and three males; mean age of 34 years) were implanted with intracranial SEEG depth electrodes and performed our motor task at the Cleveland Clinic. These subjects elected to undergo a surgical procedure for clinical treatment of their epilepsy to identify an Epileptogenic Zone (EZ) for possible resection. Details of the handedness and clinical information of each subject are listed in Table 1. The study protocol, including experimental paradigms and collection of relevant clinical and demographic data, was approved by the Cleveland Clinic Institutional Review Board. Subject criteria required volunteering individuals to be over the age of 18 with the ability to provide informed consent and able to perform the motor task. A data-sharing agreement between the Cleveland Clinic and Johns Hopkins University was approved by the legal teams of both institutions. Other than the experiment, no alterations were made to their clinical care. We excluded two additional subjects who attempted to perform the task but failed to complete it.

Each subject was implanted with 8–14 stereotactically-placed depth electrodes (PMT® Corporation, USA). Each electrode had 8–16 electrode channels (henceforth referred to as channels) spaced 1.5 mm apart. Each channel was 2 mm long with a diameter of 0.8 mm. Depth electrodes were inserted using a robotic surgical implantation platform (ROSA®, Medtech®, France) in either an oblique or orthogonal orientation. This procedure granted access to broad intracranial recordings in a three-dimensional arrangement, which included lateral, intermediate, and/or deep cortical as well as subcortical structures82. The day prior to surgery, volumetric preoperative Magnetic Resonance Imaging (MRI) scans (T1-weighted, contrasted with MultiHance®, 0.1 mmol kg−1 of body weight) were obtained to plan safe electrode trajectories that avoided vascular structures. Postoperative Computed Tomography (CT) scans were obtained and coregistered with preoperative MRI scans to verify electrode placement following implantation82. Electrophysiological data in the form of Local Field Potential (LFP) activity were collected onsite in the Epilepsy Monitoring Unit (EMU) at the Cleveland Clinic using the clinical electrophysiology acquisition system (Neurofax EEG-1200, Nihon Kohden, USA) with a sampling rate of 2 kHz referencing an exterior channel affixed to the skull. Each recording session was also determined to be free of any ictal activity.

Inducing movement variability using our motor task

Our motor task was a center-out delay arm reach in which subjects won virtual money by controlling a cursor on a screen to reach a target with an instructed speed despite a chance of encountering a random physical perturbation72,83,84,85. Subjects performed this task in the EMU using a behavioral control system, which consisted of three elements: a computer screen, an InMotion2 robotic manipulandum (Interactive Motion Technologies, USA), and a Windows-based laptop computer83. The computer screen (640 × 480 px) was used to display the visual stimuli to the subject. Subjects were seated ~60 cm in front of the screen. The robotic manipulandum allowed for precise tracking of the arm position in a horizontal two-dimensional plane relative to the subject. During the motor task, the subject moved the manipulandum within this plane to control the position of a cursor on the computer screen. The laptop computer ran the motor task using a MATLAB-based software tool called MonkeyLogic (version 2.72)86.

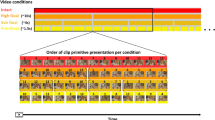

During the session, subjects completed as many trials as they could in 30 min. A complete trial consisted of eight epochs, each distinguishable by unique visual stimuli shown in Fig. 1a. Subjects began each trial with an instructed speed, indicating whether they were supposed to move fast or slow (Speed Instruction). Next, subjects moved their cursor to a target in the center of the screen (Fixation). Once centered, subjects were presented with a target in one of the four possible directions (Show Target). A random delay followed, during which subjects could not move their cursor out of the center until cued to do so. This cue was signaled by the target changing color from gray to green (Go Cue). After their cursor left the center (Movement Onset), there was a chance that a constant perturbation would interrupt their movement. Subjects were still expected to reach the target with the correct speed despite the perturbation. Once they reached (Hit Target) and held their cursor in the target, subjects were immediately presented with feedback on their trial speed compared to the instructed speed (Speed Feedback). The reward they were shown depended on whether they matched the instructed speed or not (Show Outcome). An image of an American $5 bill was presented for correct trials while the same image overlaid by a red X was presented for incorrect trials. It should be noted that subjects did not receive any monetary reward for participating in this task. Based on the design of the experiment, epochs were grouped into the traditional phases of motor control: Planning includes Speed Instruction, Fixation, Show Target, and Go Cue; Execution includes Movement Onset and Hit Target; and Feedback includes Speed Feedback and Show Outcome.

Subjects could fail a trial for any of the following reasons: not acquiring the center during Fixation, leaving the center before Go Cue, failing to leave the center after Go Cue, or failing to reach the target after Movement Onset. Regardless of the reason, the rest of the trial was aborted and subjects were presented with a red X before moving to the next trial.

We only used completed trials (i.e., trials in which the Speed Feedback was reached) for our study. Subjects were aware that perturbations would be applied. Additionally, subjects were allowed as much time as they wanted to practice the motor task before the session began, which included the speeds, directions, and perturbations.

At the end of each session, the session performance of each subject was calculated as 100*(Number of completed trials with correct speed)/(Number of completed trials). Session performance of 0% means the subject achieved the correct speed on none of their trials and session performance of 100% means the subject achieved the correct speed on all of their trials. To differentiate the performance of a trial (i.e., correct or incorrect) from the session (i.e., percent of correct trials), we refer to the former as trial performance.

Three trial conditions varied from trial to trial: the instructed speed, the instructed target direction, and the type of perturbation. Speed refers to the binary condition categorizing the instructed speed (fast, slow). Either speed was equally likely for each trial. In practice, each speed category corresponds to a range of values expressed as a percentage of a subject-specific maximum speed. This maximum was measured during calibration just before the experiment, when subjects were told to move the cursor as quickly as possible from the center to a right target over five trials. Fast trials accepted 66.67 ± 13.33% and slow trials accepted 33.33 ± 13.33% of their calibration speed. Direction refers to the four possible locations of the target relative to the center of the computer screen (down, right, up, left). Each location was equally likely for each trial. Perturbation refers to the type of perturbation, if any, that was experienced during the trial (unperturbed, towards, away). Each trial had a 20% probability of a perturbation being applied with a random force between 2.5 and 15 N at a random angle, both selected from a uniform distribution. The perturbation was physically applied to the subject using the robotic manipulandum and persisted until the subject was shown their feedback. Perturbations were categorized based on the angle at which they were applied relative to the target direction: towards or away. All other trials were unperturbed. The summary of the trial conditions experienced by each subject is listed in Supplementary Table 3.
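The sampling scheme described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the task's actual MonkeyLogic code: the function names `sample_trial` and `speed_in_window` are hypothetical, the perturbation angle is assumed to be uniform over the full circle, and the rule that labels a perturbation as towards or away is not specified in the text, so it is left out.

```python
import random

# Acceptance windows as (center, half-width), in percent of calibration speed.
SPEED_WINDOWS = {"fast": (66.67, 13.33), "slow": (33.33, 13.33)}
DIRECTIONS = ("down", "right", "up", "left")
PERTURBATION_PROBABILITY = 0.20
FORCE_RANGE_N = (2.5, 15.0)


def sample_trial(rng):
    """Draw the conditions of one trial (hypothetical helper)."""
    perturbed = rng.random() < PERTURBATION_PROBABILITY
    return {
        "speed": rng.choice(("fast", "slow")),   # equally likely
        "direction": rng.choice(DIRECTIONS),     # equally likely
        "perturbed": perturbed,
        "force_n": rng.uniform(*FORCE_RANGE_N) if perturbed else None,
        # Assumption: the angle is uniform over the full circle, in degrees.
        "angle_deg": rng.uniform(0.0, 360.0) if perturbed else None,
    }


def speed_in_window(speed, percent_of_calibration):
    """Return True if a trial speed falls inside the accepted range."""
    center, half_width = SPEED_WINDOWS[speed]
    return abs(percent_of_calibration - center) <= half_width
```

For example, `speed_in_window("fast", 70.0)` is true, whereas a movement at 50% of calibration speed falls outside both windows and would be incorrect for either instruction.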

In addition to trial conditions, we also tracked two continuous values that reflected movement variability from trial to trial: Reaction Time (RT) and Speed Error (SE). The RT (in seconds) was quantified as the time it took for a subject to move their cursor out of the center after Go Cue. The SE was quantified as the difference between the middle of the range of the instructed speed (0.33 or 0.67) and their trial speed. The trial speed was found by dividing the constant distance between the center and the target (in pixels) by the total time between Go Cue and Hold Target (in seconds), then scaling it by their calibration speed. The SE can take on a value between −0.67 and 0.67, where a positive SE means the subject moved slower than instructed (i.e., too slow), a negative SE means the subject moved faster than instructed (i.e., too fast), and an SE between −0.13 and 0.13 means the subject was within the acceptable range for the trial to be correct. The summaries of the RT and SE for each subject are listed in Supplementary Tables 1 and 2.
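The definitions of RT, SE, trial performance, and session performance can be illustrated with a short Python sketch. All function and argument names here are hypothetical, and treating the ±0.13 bounds as inclusive is an assumption, since the text does not say how boundary values were handled.

```python
INSTRUCTED_MIDPOINT = {"slow": 0.33, "fast": 0.67}
TOLERANCE = 0.13  # half-width of the accepted range, as a fraction of calibration speed


def reaction_time(go_cue_s, movement_onset_s):
    """Time (s) between Go Cue and the cursor leaving the center."""
    return movement_onset_s - go_cue_s


def trial_speed(distance_px, go_cue_s, target_s, calibration_speed_px_per_s):
    """Trial speed as a fraction of the subject's calibration speed."""
    return (distance_px / (target_s - go_cue_s)) / calibration_speed_px_per_s


def speed_error(speed, trial_speed_fraction):
    """Positive SE means too slow; negative SE means too fast."""
    return INSTRUCTED_MIDPOINT[speed] - trial_speed_fraction


def is_correct(se):
    # Assumption: the bounds of the accepted range are inclusive.
    return abs(se) <= TOLERANCE


def session_performance(trial_correct):
    """Percent of completed trials performed at the correct speed."""
    if not trial_correct:
        raise ValueError("session has no completed trials")
    return 100.0 * sum(trial_correct) / len(trial_correct)
```

As a usage example, a subject who moves at half of their calibration speed on a fast trial has an SE of about 0.17 (too slow, incorrect), while the same movement on a slow trial gives an SE of about −0.17 (too fast, incorrect).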

To test the main effects and interactions that subjects and trial conditions had on RT and SE, we constructed a multi-way ANOVA for each. For RT, we used a three-way ANOVA with subject (n = 10), speed (n = 2), and direction (n = 4) as the independent factors. For SE, we used a three-way ANOVA with subject (n = 10), the combination of speed and perturbation, called type of perturbation (n = 6), and RT as the independent factors. Subjects were treated as a random variable for both tests, and the RT factor in the SE test was treated as a continuous variable. Any initial results that indicated significant differences were followed up by post-hoc Tukey’s comparisons. The results of these tests are reported in Results and shown in Supplementary Tables 4–10.

Estimating internal states to capture movement variability

We sought to construct a behavioral model to capture movement variability based on data collected during our goal-directed center-out delay arm reach motor task. The behavioral data consisted of any quantifiable measurements from the motor task, namely the trial conditions, RTs, and SEs.

Our system follows the framework outlined in Fig. 2a. It takes on the structure of a state-space representation and consists of three basic elements for each trial t: outputs, inputs, and internal states. Based on the design of our motor task, we assume that a movement on every trial goes through two phases: planning and execution. These are represented by the two boxes in Fig. 2a. The inputs of planning are speed and direction, while the output is RT. The inputs of execution are perturbation and the RT from planning, while the output is SE. Internal states are drawn as a black dashed line for illustrative purposes. They provide feedback for both planning and execution because the internal states are updated based on trial history (such as past performance or trial conditions). This information then flows through our system to affect the outputs. Though it is not labeled, the dotted line from planning to execution also carries the inputs from the planning system (speed and direction) to the downstream system. Therefore, speed and direction are also available for modeling SE. In contrast, perturbation is not available for modeling RT because perturbations happen after RT, although the history of the trial conditions from previous trials is available through the internal states.

The outputs, RT and SE, are denoted \(y_{t}^{\mathrm{RT}}\in \mathbb{R}\) and \(y_{t}^{\mathrm{SE}}\in \mathbb{R}\), respectively. They are directly measured from the behavioral data during the motor task. The RT was normalized using the z-score before any modeling was performed so each subject followed a standard normal distribution (i.e., \(N(0,1)\)). The SE innately followed a continuous uniform distribution (i.e., \(U_{[-0.67,0.67]}\)) and was not normalized. Refer to Supplementary Tables 1 and 2 for the summary of outputs. The inputs are the trial conditions of the motor task: speed, direction, and perturbation. They are also directly measured. They are described as categorical variables, denoting speed as \(u_{t}^{\mathrm{S}}\in \{\text{fast, slow}\}\), direction as \(u_{t}^{\mathrm{D}}\in \{\text{down, right, up, left}\}\), and perturbation as \(u_{t}^{\mathrm{P}}\in \{\text{unperturbed, towards, away}\}\). The internal states we defined are the error state and perturbed state, denoted \(x_{t}^{\mathrm{SE}}\in \mathbb{R}\) and \(x_{t}^{\mathrm{P}}\in \mathbb{R}\), respectively.
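The preprocessing described above (per-subject z-scoring of RT and categorical coding of the inputs) might look like the following Python sketch. The helper names are hypothetical, and two choices are assumptions: the population standard deviation is used for the z-score, and the categorical inputs are coded as indicator (one-hot) vectors. The text specifies neither.

```python
from statistics import mean, pstdev

SPEED_LEVELS = ("fast", "slow")
DIRECTION_LEVELS = ("down", "right", "up", "left")
PERTURBATION_LEVELS = ("unperturbed", "towards", "away")


def zscore(values):
    """Standardize one subject's RTs to zero mean and unit variance."""
    mu = mean(values)
    sd = pstdev(values)  # assumption: population standard deviation
    if sd == 0:
        raise ValueError("cannot z-score a constant sequence")
    return [(v - mu) / sd for v in values]


def one_hot(value, levels):
    """Indicator coding of a categorical trial condition (assumed coding)."""
    if value not in levels:
        raise ValueError(f"unknown level: {value!r}")
    return [1.0 if value == level else 0.0 for level in levels]
```

The SE is left on its original scale, consistent with the statement that it was not normalized.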

Our system is broken down into the phases of planning and execution (Fig. 2a). The behavioral outputs of planning and execution are RT87 and SE25, respectively. They are separated by a delay for maximal separation88. It is important to note that the states remain constant from planning to execution since neither state has information to update until after a movement is complete. Each phase is associated with its own mathematical function expressing its output as a linear combination of the states and inputs available on trial t. The task begins with the planning phase, written as:
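The displayed equation is not present in this version of the text. A reconstruction consistent with the description that follows is given here; the weight symbols and the indicator notation \(\mathbf{1}[\cdot]\) are assumptions rather than the authors' notation:

\[
y_{t}^{\mathrm{RT}} = \beta_{0}^{\mathrm{RT}} + \beta_{1}^{\mathrm{RT}}\, x_{t}^{\mathrm{SE}} + \beta_{2}^{\mathrm{RT}}\, x_{t}^{\mathrm{P}} + \sum_{s} \beta_{\mathrm{S},s}^{\mathrm{RT}}\, \mathbf{1}[u_{t}^{\mathrm{S}} = s] + \sum_{d} \beta_{\mathrm{D},d}^{\mathrm{RT}}\, \mathbf{1}[u_{t}^{\mathrm{D}} = d] + \epsilon_{t}^{\mathrm{RT}}
\]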

where \(\epsilon_{t}^{\mathrm{RT}}\) is an independent normal random variable with zero mean and variance \(\sigma_{\mathrm{RT}}^{2}\in \mathbb{R}_{\ge 0}\). In other words, the planning equation defines the output of planning on trial t as RT, which is the linear combination of a constant, error state, perturbed state, speed, and direction on trial t, scaled by their respective weights (β’s). This is followed by the execution phase written as:
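The displayed equation is likewise not present in this version of the text. A reconstruction consistent with the description that follows is given here; as before, the weight symbols and the indicator notation \(\mathbf{1}[\cdot]\) are assumptions rather than the authors' notation:

\[
y_{t}^{\mathrm{SE}} = \beta_{0}^{\mathrm{SE}} + \beta_{1}^{\mathrm{SE}}\, x_{t}^{\mathrm{SE}} + \beta_{2}^{\mathrm{SE}}\, x_{t}^{\mathrm{P}} + \beta_{3}^{\mathrm{SE}}\, y_{t}^{\mathrm{RT}} + \sum_{s,p} \beta_{s,p}^{\mathrm{SE}}\, \mathbf{1}[u_{t}^{\mathrm{S}} = s,\ u_{t}^{\mathrm{P}} = p] + \epsilon_{t}^{\mathrm{SE}}
\]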

where \(\epsilon_{t}^{\mathrm{SE}}\) is an independent normal random variable with zero mean and variance \(\sigma_{\mathrm{SE}}^{2}\in \mathbb{R}_{\ge 0}\). The execution equation defines the output of execution on trial t as SE, which is the linear combination of a constant, error state, perturbed state, RT, and the combination of speed and perturbation on trial t, scaled by their respective weights (β’s). By our definition, RT will always be available as an input for SE. Though speed is not a direct input to execution (Fig. 2a), it also carries over from planning. We found the combination of speed and perturbation captured SE well compared with any other linear combination of trial conditions. This combination is supported by intuition as well as by the literature89. On one hand, a slow trial with an away perturbation could help subjects reduce the magnitude of their SE by forcing them to move slower. On the other hand, a fast trial with an away perturbation could make it harder to match the speed, making a positive SE (too slow) more believable.
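To make the structure of the two observation equations concrete, the following Python sketch evaluates them for a single trial. It is an illustration under assumptions, not the fitted model: the dictionary layout of the weights, the function names, and any numerical values supplied by the caller are hypothetical. The same state values are passed to both phases, reflecting that the states stay constant from planning to execution.

```python
import random


def planning_mean(w, x_se, x_p, speed, direction):
    """Noise-free RT: constant + states + speed and direction effects."""
    return (w["const"] + w["x_se"] * x_se + w["x_p"] * x_p
            + w["speed"][speed] + w["direction"][direction])


def execution_mean(w, x_se, x_p, rt, speed, perturbation):
    """Noise-free SE: constant + states + RT + speed-by-perturbation effect."""
    return (w["const"] + w["x_se"] * x_se + w["x_p"] * x_p
            + w["rt"] * rt + w["speed_x_pert"][(speed, perturbation)])


def simulate_trial(w_rt, w_se, sigma_rt, sigma_se, x_se, x_p,
                   speed, direction, perturbation, rng):
    """Draw (RT, SE) for one trial; the states are shared by both phases."""
    rt = planning_mean(w_rt, x_se, x_p, speed, direction) + rng.gauss(0.0, sigma_rt)
    se = (execution_mean(w_se, x_se, x_p, rt, speed, perturbation)
          + rng.gauss(0.0, sigma_se))
    return rt, se
```

Because RT is generated first and then passed into the execution equation, the sketch mirrors the ordering of the two phases: perturbation never enters the RT computation, while RT and speed are both available when computing SE.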

To capture the history of subjects' speed errors during the task, we used SE to update the error state: