Abstract

Subseasonal forecasting—predicting temperature and precipitation 2 to 6 weeks ahead—is critical for effective water allocation, wildfire management, and drought and flood mitigation. Recent international research efforts have advanced the subseasonal capabilities of operational dynamical models, yet temperature and precipitation prediction skills remain poor, partly due to stubborn errors in representing atmospheric dynamics and physics inside dynamical models. Here, to counter these errors, we introduce an adaptive bias correction (ABC) method that combines state-of-the-art dynamical forecasts with observations using machine learning. We show that, when applied to the leading subseasonal model from the European Centre for Medium-Range Weather Forecasts (ECMWF), ABC improves temperature forecasting skill by 60–90% (over baseline skills of 0.18–0.25) and precipitation forecasting skill by 40–69% (over baseline skills of 0.11–0.15) in the contiguous U.S. We couple these performance improvements with a practical workflow to explain ABC skill gains and identify higher-skill windows of opportunity based on specific climate conditions.

Introduction

Improving our ability to forecast both weather and climate is of interest to many sectors of the economy and government agencies, from the local to the national level. Weather forecasts 0–10 days ahead and climate forecasts seasons to decades ahead are currently used operationally in decision making, and the accuracy and reliability of these forecasts have improved consistently in recent decades1. However, many critical applications, including water allocation, wildfire management, and drought and flood mitigation, require subseasonal forecasts, with lead times beyond 10 days and up to a season2,3. Given the changing nature of the climate and the increasing frequency of extreme weather events, there is a social and scientific consensus regarding the importance and urgency of providing reliable subseasonal forecasts4,5.

Subseasonal forecasting lies in a challenging intermediate domain between shorter-term weather forecasting (an initial-value problem) and longer-term climate forecasting (a boundary value problem). Skillful subseasonal forecasting requires capturing the complex dependence between local weather conditions, typically described by numerical weather models, and global climate variables, usually part of long-range seasonal forecasts2. The intertwined dynamics of initial-value prediction problems and boundary forcing phenomena led subseasonal forecasting to long be considered a predictability desert6, more difficult than either short-term weather forecasting or long-term climate prediction. Recent studies, however, have highlighted important sources of predictability on subseasonal timescales, including oscillatory modes such as El Niño–Southern Oscillation and the Madden–Julian oscillation (MJO), large-scale anomalies in, e.g., soil moisture or sea ice, and external forcing4,7. These predictability sources are imperfectly understood and imperfectly represented in weather and climate models8 and hence represent an opportunity for more skillful subseasonal forecasting.

The challenges of subseasonal forecasting are particularly apparent for precipitation forecasts9. Precipitation is governed by both macro-scale dynamical processes of the atmosphere and complex microphysical processes, some of which are still not fully understood8. In addition, precipitation is oftentimes a very local phenomenon, working over a much finer scale than the resolution employed by subseasonal dynamical models. This scale incompatibility, in concert with suboptimal process representation and incomplete process understanding, results in dynamical models falling short of predictability limits for forecasting precipitation4,8. Generating precipitation forecasts for longer seasonal horizons is an even more daunting task. In this case, dynamical models show considerable biases in precipitation and wind fields. These biases arise from the parameterization of key physical processes associated with deep convective cloud systems10 and, when combined with chaotic dynamics and imperfectly represented sources of predictability, translate into rapidly decreasing skill for precipitation forecasts.

Bridging the gap between short-term and seasonal forecasting has been the focus of several recent large-scale research efforts to advance the subseasonal capabilities of operational physics-based models9,11,12. However, despite these advances, dynamical models still suffer from persistent systematic errors, which limit the skill of temperature and precipitation forecasts for longer subseasonal lead times. Low skill at these time horizons has a palpable practical impact on the utility of subseasonal forecasts for policy planners and stakeholders.

To counter the observed systematic errors of physics-based models on the subseasonal timescale, there have been parallel efforts in recent years to demonstrate the value of machine learning and deep learning methods for improved subseasonal forecasting accuracy13,14,15,16,17,18,19,20,21,22,23,24,25. While these works demonstrate the promise of learned models for subseasonal forecasting, they also highlight the complementary strengths of physics- and learning-based approaches and the opportunity to combine those strengths to improve forecasting skill15,20,21,22.

To harness the complementary strengths of physics- and learning-based models, we introduce a hybrid dynamical-learning framework for improved subseasonal forecasting. In particular, we learn to adaptively correct the biases of dynamical models and apply our adaptive bias correction (ABC) to improve the skill of subseasonal temperature and precipitation forecasts. ABC is an ensemble of three low-cost, high-accuracy machine learning models introduced in this work: Dynamical++, Climatology++, and Persistence++. Each model trains only on past temperature, precipitation, and forecast data and outputs corrections for future forecasts tailored to the site, target date, and dynamical model. Dynamical++ and Climatology++ learn site- and date-specific offsets for dynamical and climatological forecasts by minimizing forecasting error over adaptively selected training periods. Persistence++ additionally accounts for recent weather trends by combining lagged observations, dynamical forecasts, and climatology to minimize historical forecasting error for each site. More details on each component model can be found in “Methods” section. Correction alone is no substitute for improved understanding and representation of predictability sources, and we therefore view ABC as a complement for improved dynamical modeling. Fortunately, as an adaptive correction, ABC automatically benefits from scientific improvements to its dynamical model inputs while learning to compensate for their residual systematic errors.

ABC can be applied operationally as a computationally inexpensive enhancement to any dynamical model forecast, and we use this property to substantially reduce the forecasting errors of eight operational dynamical models, including the state-of-the-art ECMWF model. ABC also improves upon the skill of classical and recently developed bias corrections from the subseasonal forecasting literature including quantile mapping26,27,28,29, locally estimated scatterplot smoothing (LOESS)27,30, and neural network22 approaches. We couple these performance improvements with a practical workflow for explaining ABC skill gains using Cohort Shapley31 and identifying higher-skill windows of opportunity5 based on relevant climate variables. To facilitate future deployment and development, we release our ABC model and workflow code through the subseasonal_toolkit Python package.

Results

Improved precipitation and temperature prediction with adaptive bias correction

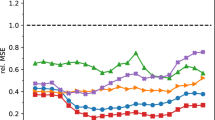

Figure 1 highlights the advantage of ABC over raw dynamical models when forecasting accumulated precipitation and averaged temperature in the contiguous U.S. Here, ABC is applied to the leading subseasonal model, ECMWF, to each of seven operational models participating in the Subseasonal Experiment [SubX11], and to the mean of the SubX models. Subseasonal forecasting skill, measured by uncentered anomaly correlation, is evaluated at two forecast horizons, weeks 3–4 and weeks 5–6, and averaged over all available forecast dates in the 4-year span of 2018–2021. We find that, for each dynamical model input and forecasting task, ABC leads to a pronounced improvement in skill. For example, when applied to the U.S. operational Climate Forecast System Version 2 (CFSv2), ABC improves temperature forecasting skill by 109–289% (over baseline skills of 0.08–0.17) and precipitation skill by 165–253% (over baseline skills of 0.05–0.07). When applied to the leading ECMWF model, ABC improves temperature skill by 60–90% (over baseline skills of 0.18–0.25) and precipitation skill by 40–69% (over baseline skills of 0.11–0.15). Moreover, for precipitation, even lower-skill models like CCSM4 have improved skill that is comparable to the best dynamical model after the application of ABC. Overall, despite significant variability in dynamical model skill, ABC consistently reduces the systematic errors of its input model, bringing forecasts closer to observations for each target variable and time horizon. Similar forecast improvement is observed when stratifying skill by season (see Supplementary Fig. S1).

Across the contiguous U.S. and the years 2018–2021, ABC provides a pronounced improvement in skill for each SubX or ECMWF dynamical model input and each forecasting task (a–d). The error bars display 95% bootstrap confidence intervals. Models without forecast data for weeks 5–6 are omitted from the bottom panels.

In Supplementary Fig. S2, we compare ABC with three additional subseasonal debiasing baselines (detailed in “Methods” section): quantile mapping26,27,28,29, LOESS debiasing27,30, and a recently proposed neural network debiasing scheme trained jointly on temperature and precipitation inputs [NN-A22]. Given the same dynamical model inputs, ABC improves upon the skill of each baseline for each target variable and forecast horizon.

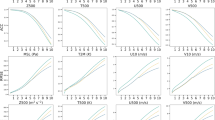

We next examine the spatial distribution of skill for CFSv2, ECMWF, and their ABC-corrected counterparts at three forecast horizons in Fig. 2. At the shorter-term horizon of weeks 1–2, both CFSv2 and ECMWF have reasonably high skill throughout the contiguous U.S. However, skill drops precipitously for both models when moving to the subseasonal forecast horizons (weeks 3–4 and 5–6). This degradation is particularly striking for precipitation, where prediction skill drops to zero or to negative values in the central and northeastern parts of the U.S. For temperature prediction, CFSv2 has a skill of zero across a broad region of the East, while ECMWF produces isolated pockets of zero skill in the west. At these subseasonal timescales, ABC provides consistent improvements across the U.S. that either double or triple the mean skill of CFSv2 and increase the mean skill of ECMWF by 40–90% (over baseline skills of 0.11–0.25). Similar improvements are observed when ABC is applied to the SubX multimodel mean (see Supplementary Fig. S3). In addition, common skill patterns across models are apparent that are consistent with higher precipitation predictability in the Western U.S. than in the Eastern U.S. and higher temperature predictability on the coasts than in the center of the country.

Across the contiguous U.S. and the years 2018–2021, dynamical model skill drops precipitously at subseasonal timescales (weeks 3–4 and 5–6), but adaptive bias correction (ABC) attenuates the degradation, doubling or tripling the skill of CFSv2 (a, b) and boosting ECMWF skill 40–90% over baseline skills of 0.11–0.25 (c, d). Taking the same raw model forecasts as input, ABC also provides consistent improvements over operational debiasing protocols, tripling the precipitation skill of debiased CFSv2 and improving that of debiased ECMWF by 70% (over a baseline skill of 0.11). The average temporal skill over all forecast dates is displayed above each map.

Notably, ABC also improves over standard operational debiasing protocols (labeled debiased CFSv2 and debiased ECMWF in Fig. 2), tripling the average precipitation skill of debiased CFSv2 and increasing that of debiased ECMWF by 70% (over a baseline skill of 0.11). As seen in Supplementary Fig. S4, ABC additionally improves upon the quantile mapping, LOESS, and neural network debiasing baselines, doubling the ECMWF precipitation skill of the best-performing baseline and improving the ECMWF temperature skill by 37% (over a baseline skill of 0.25).

A practical implication of these improvements for downstream decision-makers is an expanded geographic range for actionable skill, defined here as spatial skill above a given sufficiency threshold. For example, in Fig. 3, we vary the weeks 5–6 sufficiency threshold from 0 to 0.6 and find that ABC consistently boosts the number of locales with actionable skill over both raw and operationally debiased CFSv2 and ECMWF. We observe similar gains for weeks 3–4 in Supplementary Fig. S5 and for ABC correction of the SubX multimodel mean in Supplementary Fig. S6.
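
As a minimal sketch of this definition, the share of grid points with actionable skill can be computed by counting the sites whose skill exceeds the sufficiency threshold. The function name and the per-site skill values in the usage example are illustrative assumptions, not part of the released code or the reported results.

```python
def actionable_fraction(site_skills, threshold):
    """Fraction of sites whose skill lies strictly above the sufficiency threshold."""
    if not site_skills:
        raise ValueError("at least one site skill is required")
    return sum(1 for skill in site_skills if skill > threshold) / len(site_skills)


if __name__ == "__main__":
    # Hypothetical per-site skills, used only to exercise the function.
    toy_skills = [-0.1, 0.2, 0.35, 0.7]
    # Sweep the sufficiency threshold from 0 to 0.6 in steps of 0.1.
    for step in range(7):
        threshold = step / 10
        print(threshold, actionable_fraction(toy_skills, threshold))
```

Sweeping the threshold in this way traces out one curve per forecasting model, which is the kind of comparison the actionable-skill analysis relies on.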

We emphasize that our results, like those of refs. 21,22,27,28,29, focus on improved deterministic forecasting: outputting a more accurate point estimate of a future weather variable. The complementary paradigm of probabilistic forecasting instead predicts the distribution of a weather variable, i.e., the probability that a variable will fall above or below any given threshold. Ideally, one would employ a tailored approach to probabilistic debiasing that directly optimizes a probabilistic skill metric to output a corrected distribution. However, there is a simple, inexpensive way to convert the output of ABC into a probabilistic forecast. Given any ensemble of dynamical model forecasts (e.g., the control and perturbed forecasts routinely generated operationally), one can train ABC on the ensemble mean, apply the learned bias corrections to each ensemble member individually, and use the empirical distribution of those bias-corrected forecasts as the probabilistic forecasting estimate. In Supplementary Figs. S7–S10, we present two standard probabilistic skill metrics—the continuous ranked probability score (CRPS) and the Brier skill score (BSS) for above normal observations32, defined in Supplementary Methods—and observe that, for each target variable, forecast horizon, and season, ABC improves upon the BSS and CRPS of ECMWF, LOESS debiasing, and quantile mapping debiasing.
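
The conversion described above can be sketched as follows. This is an illustration under a strong simplifying assumption: a single additive offset, learned from past ensemble means, stands in for the learned ABC correction, whose actual form is given in “Methods” section. All function names are hypothetical.

```python
def learn_offset(past_ensemble_means, past_observations):
    """Additive correction learned from ensemble-mean forecasts (a stand-in for ABC)."""
    n = len(past_ensemble_means)
    if n == 0 or n != len(past_observations):
        raise ValueError("need equally many past forecasts and observations")
    return sum(past_observations) / n - sum(past_ensemble_means) / n


def correct_members(members, offset):
    """Apply the correction learned on the ensemble mean to each member individually."""
    return [member + offset for member in members]


def probability_above(corrected, threshold):
    """Empirical probability that the target variable exceeds the threshold."""
    return sum(1 for value in corrected if value > threshold) / len(corrected)
```

The empirical distribution of the corrected members then serves as the probabilistic forecast, for instance by evaluating `probability_above` at the climatological tercile boundary for an above-normal event.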

As evidenced in Fig. 4, an important component of the overall accuracy of ABC is the reduction of the systematic bias introduced by dynamical model deficiencies. Figure 4 presents the spatial distribution of this bias by plotting the average difference between forecasts and observations over all forecast dates. The precipitation maps reveal a wet bias over the northern half of the U.S. for CFSv2 (average bias: 8.32 mm) and a dry bias over the southeastern part of the U.S. for ECMWF (average bias: −8.12 mm). In this case, ABC eliminates the CFSv2 wet bias (average bias: −0.46 mm) and slightly alleviates the ECMWF dry bias (average bias: −6.24 mm). For temperature, we observe a cold bias over the eastern half of the U.S. for CFSv2 (average bias: −1.2 °C) and notice a mixed pattern of cold and warm biases over the western half of the U.S. for ECMWF (average bias: −0.30 °C). In this case, although ABC does not eliminate these biases entirely, it reduces the magnitude of the cold eastern bias by bringing CFSv2 forecasts closer to observations (average bias: −0.18 °C) and reduces the mixed ECMWF bias (average bias: −0.04 °C).

We observe comparable bias reductions when ABC is applied to the SubX multimodel mean in Supplementary Fig. S11, improved dampening of bias relative to quantile mapping, LOESS, and neural network baselines in Supplementary Fig. S12, and improved dampening of bias relative to operationally debiased temperature and CFSv2 precipitation in Supplementary Fig. S13. Since each bias correction is based on historical data and weather is non-stationary, the remaining residual bias patterns may be indicative of recent regional shifts in average temperature or precipitation, e.g., decreased average precipitation in the Southeastern U.S. or increased average temperature on the country’s coasts.

Identifying statistical forecasts of opportunity

The results presented so far evaluate overall model skill, averaged across all forecast dates. However, there is a growing appreciation that subseasonal forecasts can benefit from selective deployment during “windows of opportunity,” periods defined by observable climate conditions in which specific forecasters are likely to have higher skill5. In this section, we propose a practical opportunistic ABC workflow that uses a candidate set of explanatory variables to identify windows in which ABC is especially likely to improve upon a baseline model. The same workflow can be used to explain the skill improvements achieved by ABC in terms of the explanatory meteorological variables.

The opportunistic ABC workflow is based on the equitable credit assignment principle of Shapley33 and measures the impact of explanatory variables on individual forecasts using Cohort Shapley31 and overall variable importance using Shapley effects34 (see “Methods” section for more details). We use these Shapley measures to determine the contexts in which ABC offers improvements, in terms of climate variables with known relevance for subseasonal forecasting accuracy. As a running example, we use our workflow to explain the skill differences between ABC-ECMWF and debiased ECMWF when predicting precipitation in weeks 3–4. As our candidate explanatory variables, we use Northern Hemisphere geopotential heights (HGT) at 500 and 10 hPa, the phase of the MJO, Northern Hemisphere sea ice concentration, global sea surface temperatures, the multivariate El Niño–Southern Oscillation index (MEI.v2)35, and the target month. All variables are lagged as described in “Methods” section to ensure that they are observable on the forecast issuance date.

We first use Shapley effects to determine the overall importance of each variable in explaining the precipitation skill improvements of ABC-ECMWF. As shown in Supplementary Fig. S14, the most important explanatory variables are the first two principal components (PCs) of 500 hPa geopotential height, the MJO phase, the second PC of 10 hPa geopotential height, and the first PC of sea ice concentration. These variables are consistent with the literature exploring the dominant contributions to subseasonal precipitation. The 500 hPa geopotential height plays a crucial role in conveying information about the thermal structure of the atmosphere and indicates synoptic circulation changes36. The MJO phase influences weather and climate phenomena within both the tropics and extratropics, resulting in a global influence of MJO in modulating temperature and precipitation37. The 10 hPa geopotential height is a known indicator of polar vortex variability leading to lagged impacts on sea level pressure, surface temperature, and precipitation2. Finally, sea ice concentration has a strong impact on surface turbulent heat fluxes and therefore near-surface temperatures38.

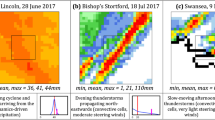

We next use Cohort Shapley to identify the contexts in which each variable has the greatest impact on skill. For example, Fig. 5 summarizes the impact of the first 500 hPa geopotential heights PC (hgt_500_pc1) on ABC-ECMWF skill improvement. This display divides our forecasts into 10 bins, determined by the deciles of hgt_500_pc1, and computes the probability of positive impact in each bin. We find that hgt_500_pc1 is most likely to have a positive impact on skill improvement in decile 1, which features a positive Arctic Oscillation (AO) pattern, and least likely in decile 9, which features AO in the opposite phase. The ABC-ECMWF forecast most impacted by hgt_500_pc1 in decile 1 is also preceded by a positive AO pattern and replaces the wet debiased ECMWF forecast with a more skillful dry pattern in the west. Similarly, Fig. 6 summarizes the impact of the MJO phase (mjo_phase) on ABC-ECMWF skill improvement. Importantly, while skill improvement is sometimes achieved with an especially skillful ABC forecast (as in Fig. 5), it can also be achieved by recovering from an especially poor baseline forecast. The latter is what we see at the bottom of Fig. 6, where the highest impact ABC forecast avoids the strongly negative skill of the baseline debiased ECMWF forecast.
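
The decile summary can be sketched as below, taking the per-forecast Cohort Shapley impacts as given. The rank-based decile assignment, the function names, and the omission of the bootstrap confidence intervals are simplifications of this sketch rather than details drawn from the released workflow.

```python
def decile_bins(values):
    """Assign each value to a decile 1..10 by rank (ties broken by input order)."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    bins = [0] * n
    for rank, index in enumerate(order):
        bins[index] = rank * 10 // n + 1
    return bins


def positive_impact_probability(values, impacts):
    """Per-decile fraction of forecasts on which the variable had a positive impact."""
    if len(values) != len(impacts):
        raise ValueError("one impact is required per explanatory value")
    bins = decile_bins(values)
    probabilities = {}
    for decile in range(1, 11):
        members = [imp for b, imp in zip(bins, impacts) if b == decile]
        if members:  # a decile can be empty when there are fewer than 10 forecasts
            probabilities[decile] = sum(1 for imp in members if imp > 0) / len(members)
    return probabilities
```

The decile with the largest probability marks the context in which the variable most often helps, and the smallest marks the context in which it most often hurts.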

a To summarize the impact of hgt_500_pc1 on ABC-ECMWF skill improvement for precipitation weeks 3–4, we divide our forecasts into 10 bins, determined by the deciles of hgt_500_pc1, and display above each bin map the probability of positive impact in each bin along with a 95% bootstrap confidence interval. The highest probability of positive impact is shown in blue, and the lowest probability of positive impact is shown in red. We find that hgt_500_pc1 is most likely to have a positive impact on skill improvement in decile 1, which features a positive Arctic Oscillation (AO) pattern, and least likely in decile 9, which features AO in the opposite phase. b The forecast most impacted by hgt_500_pc1 in decile 1 is also preceded by a positive AO pattern and replaces the wet debiased ECMWF forecast with a more skillful dry pattern in the west.

a To summarize the impact of mjo_phase on ABC-ECMWF skill improvement for precipitation weeks 3–4, we compute the probability of positive impact and an associated 95% bootstrap confidence interval in each lagged MJO phase bin and adopt the methodology of ref. 53 to create an MJO phase space diagram. The highest probabilities of positive impact (those falling within the confidence interval of the highest probability overall) are shown in blue and the lowest probability of positive impact is shown in red. We find that positive impact on skill improvement is most common in phases 2, 4, 5, and 8 and least common in phase 1. b The forecast most impacted by mjo_phase in phases 2, 4, 5, and 8 avoids the strongly negative skill of the debiased ECMWF baseline.

Finally, we use the identified contexts to define windows of opportunity for operational deployment of ABC. Indeed, since all explanatory variables are observable on the forecast issuance date, one can selectively apply ABC when multiple variables are likely to have a positive impact on skill and otherwise issue a default, standard forecast (e.g., debiased ECMWF). We call this selective forecasting model opportunistic ABC. How many high-impact variables should we require when defining these windows of opportunity? We say a variable is “high-impact” if the positive impact probability for its decile or bin is within the confidence interval of the highest probability overall. Requiring a larger number of high-impact variables will tend to increase the skill gains of ABC but simultaneously reduce the number of dates on which ABC is deployed. Figure 7 illustrates this trade-off for ABC-ECMWF and shows that opportunistic ABC skill is maximized when two or more high-impact variables are required. With this choice, ABC is used for approximately 81% of forecasts and debiased ECMWF is used for the remainder. Figure 8 summarizes the complete opportunistic ABC workflow, from the identification of windows of opportunity through the selective deployment of either ABC or a default baseline forecast for a given target date.
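
The selection rule can be sketched as follows. The high-impact bins for hgt_500_pc1 (decile 1) and mjo_phase (phases 2, 4, 5, and 8) in the usage example follow Figs. 5 and 6; the bin listed for hgt_10_pc2, the function names, and the data layout are hypothetical and serve only to illustrate the logic.

```python
def count_high_impact(context, high_impact_bins):
    """Number of explanatory variables whose current bin is flagged as high-impact."""
    return sum(
        1
        for variable, current_bin in context.items()
        if current_bin in high_impact_bins.get(variable, set())
    )


def opportunistic_forecast(abc_forecast, baseline_forecast, context,
                           high_impact_bins, min_high_impact=2):
    """Issue the ABC forecast inside a window of opportunity, else the baseline."""
    if count_high_impact(context, high_impact_bins) >= min_high_impact:
        return abc_forecast
    return baseline_forecast


if __name__ == "__main__":
    high_impact_bins = {
        "hgt_500_pc1": {1},           # decile 1, as in Fig. 5
        "mjo_phase": {2, 4, 5, 8},    # phases 2, 4, 5, and 8, as in Fig. 6
        "hgt_10_pc2": {3},            # hypothetical bin, for illustration only
    }
    context = {"hgt_500_pc1": 1, "mjo_phase": 4, "hgt_10_pc2": 7}
    print(opportunistic_forecast("ABC-ECMWF", "debiased ECMWF", context, high_impact_bins))
```

Because every entry of `context` is observable on the issuance date, the rule can be evaluated before the forecast is issued.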

Here we focus on forecasting precipitation in weeks 3–4. a When more explanatory variables fall into high-impact deciles or bins (e.g., the blue bins of Figs. 5 and 6), the mean skill of ABC-ECMWF improves, but the percentage of forecasts using ABC declines. b The overall skill of opportunistic ABC is maximized when ABC-ECMWF is deployed for target dates with two or more high-impact variables and standard debiased ECMWF is deployed otherwise.

Discussion

Dynamical models have shown increasing skill in accurately forecasting the weather39, but they still contain systematic biases that compound on subseasonal timescales and suppress forecast skill40,41,42,43. ABC learns to correct these biases by adaptively integrating dynamical forecasts, historical observations, and recent weather trends. When applied to the leading subseasonal model from ECMWF, ABC improves forecast skill by 60–90% (over baseline skills of 0.18–0.25) for temperature and 40–69% (over baseline skills of 0.11–0.15) for precipitation. The same approach substantially reduces the forecasting errors of seven additional operational subseasonal forecasting models as well as their multimodel mean, with less skillful input models performing nearly as well as the ECMWF model after applying the ABC correction. This finding suggests that systematic errors in dynamical models are a primary contributor to observed skill differences and that ABC provides an effective mechanism for reducing these heterogeneous errors. Because ABC is also simple to implement and deploy in real-time operational settings, ABC represents a computationally inexpensive strategy for upgrading operational models, while conserving valuable human resources.

While the learned correction of systematic errors can play an important role in skill improvement, it is no substitute for scientific improvements in our understanding and representation of the processes underlying subseasonal predictability. As such, we view ABC as a complement for improved dynamical model development. Fortunately, ABC is designed to be adaptive to model changes. As operational models are upgraded, process models improve, and systematic biases evolve, our ABC training protocol is designed to ingest the upgraded model forecasts and hindcasts reflecting those changes.

To capitalize on higher-skill forecasts of opportunity, we have also introduced an opportunistic ABC workflow that explains the skill improvements of ABC in terms of a candidate set of environmental variables, identifies high-probability windows of opportunity based on those variables, and selectively deploys either ABC or a baseline forecast to maximize expected skill. The same workflow can be applied to explain the skill improvements of any forecasting model and, unlike other popular explanation tools44,45, avoids expensive model retraining, requires no generation of additional forecasts beyond those routinely generated for operational or hindcast use, and allows for explanations in terms of variables that were not explicitly used in training the model.

Overall, we find that correcting dynamical forecasts using ABC yields an effective and scalable strategy to optimize the skill of the next generation of subseasonal forecasting models. We anticipate that our hybrid dynamical-learning framework will benefit both research and operations, and we release our open-source code to facilitate future adoption and development.

Methods

Dataset

All data used in this work was obtained from the SubseasonalClimateUSA dataset46. The spatial variables were interpolated onto a 1.5° × 1.5° latitude-longitude grid, and all daily observations (with two exceptions noted below) were aggregated into 2-week moving averages. As ground-truth measurements, we extracted daily gridded observations of average 2-meter temperature in °C47 and precipitation in mm48,49,50. For our explanatory variables, we obtained the daily PCs of 10 and 500 hPa stratospheric geopotential height51 extracted from global 1948–2010 loadings, the daily PCs of sea surface temperature and sea ice concentration52 using global 1981–2010 loadings, the daily MJO phase53, and the bimonthly MEI.v235,54,55. Precipitation was summed over 2-week periods, and the MJO phase was not aggregated. Finally, we extracted twice-weekly ensemble mean forecasts of temperature and precipitation from the ECMWF S2S dynamical model6 and ensemble mean forecasts of temperature and precipitation from seven models participating in the SubX project, including five coupled atmosphere-ocean-land dynamical models (NCEP-CFSv2, GMAO-GEOS, NRL-NESM, RSMAS-CCSM4, ESRL-FI) and two models with atmosphere and land components forced with prescribed sea surface temperatures (EMC-GEFS, ECCC-GEM)11. The SubX multimodel mean forecast was obtained by calculating, for each target date, the mean prediction over all available SubX models using the most recent forecast available from each model within a 6-day lookback window. Each candidate SubX model is represented by its ensemble mean forecast. Two sets of candidate models are considered. When calculating the SubX multimodel mean for weeks 1–2 and weeks 3–4, we consider NCEP-CFSv2, GMAO-GEOS, NRL-NESM, RSMAS-CCSM4, ESRL-FI, EMC-GEFS, and ECCC-GEM. When generating the mean forecast for weeks 5–6, we consider NCEP-CFSv2, GMAO-GEOS, NRL-NESM, and RSMAS-CCSM4 only, as the remaining models do not produce forecast data for weeks 5–6.
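
The multimodel mean construction can be sketched as below. The data layout (a dictionary of per-model forecasts keyed by issuance date), the function name, and the treatment of the lookback window as inclusive of both endpoints are assumptions of this sketch.

```python
from datetime import date, timedelta


def multimodel_mean(model_forecasts, reference_date, lookback_days=6):
    """Average each model's most recent forecast issued within the lookback window.

    model_forecasts maps a model name to {issuance_date: forecast_value}, where
    each value is that model's ensemble mean forecast for the same target period.
    """
    earliest = reference_date - timedelta(days=lookback_days)
    latest_values = []
    for by_date in model_forecasts.values():
        eligible = [d for d in by_date if earliest <= d <= reference_date]
        if eligible:  # models with no forecast in the window are skipped
            latest_values.append(by_date[max(eligible)])
    if not latest_values:
        raise ValueError("no model issued a forecast within the lookback window")
    return sum(latest_values) / len(latest_values)
```

Skipping models without a forecast in the window mirrors the use of all available models for each target date.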

Forecasting tasks and skill assessment

We consider two prediction targets: average temperature (°C) and accumulated precipitation (mm) over a 2-week period. These variables are forecasted at two time horizons: 15–28 days ahead (weeks 3–4) and 29–42 days ahead (weeks 5–6). We forecast each variable at G = 376 grid points on a 1.5° × 1.5° grid across the contiguous U.S., bounded by latitudes 25N–50N and longitudes 125W–67W. To provide the most realistic assessment of forecasting skill56, all predictions in this study are formed in a real forecast manner that mimics operational use. In particular, to produce a forecast for a given target date, all learning-based models are trained and tuned only on data observable on the corresponding forecast issuance date.

For evaluation, we adopt the exact protocol of the recent Subseasonal Climate Forecast Rodeo competition, run by the U.S. Bureau of Reclamation in partnership with the National Oceanic and Atmospheric Administration, U.S. Geological Survey, U.S. Army Corps of Engineers, and California Department of Water Resources57. In particular, for a 2-week period starting on date t, let \(\mathbf{y}_{t}\in \mathbb{R}^{G}\) denote the vector of ground-truth measurements \(y_{t,g}\) for each grid point g and \(\hat{\mathbf{y}}_{t}\in \mathbb{R}^{G}\) denote a corresponding vector of forecasts. In addition, define climatology \(\mathbf{c}_{t}\) as the average ground-truth values for a given month and day over the years 1981–2010. We evaluate each forecast using uncentered anomaly correlation skill57,58,

$$\mathrm{skill}(\hat{\mathbf{y}}_{t},\mathbf{y}_{t})=\frac{\langle \hat{\mathbf{y}}_{t}-\mathbf{c}_{t},\,\mathbf{y}_{t}-\mathbf{c}_{t}\rangle }{\Vert \hat{\mathbf{y}}_{t}-\mathbf{c}_{t}\Vert_{2}\,\Vert \mathbf{y}_{t}-\mathbf{c}_{t}\Vert_{2}}\in [-1,1],$$

with a larger value indicating higher quality. For a collection of target dates, we report average skill using progressive validation59 to mimic operational use.
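
For concreteness, the skill metric is the cosine similarity between the forecast anomalies and the observed anomalies, both taken relative to climatology. A minimal sketch follows; the function names are illustrative, and the handling of an all-zero anomaly vector (raising an error) is a choice of this sketch rather than part of the evaluation protocol.

```python
import math


def anomaly_correlation_skill(forecast, truth, climatology):
    """Uncentered anomaly correlation between forecast and observed anomalies."""
    forecast_anomaly = [f - c for f, c in zip(forecast, climatology)]
    truth_anomaly = [y - c for y, c in zip(truth, climatology)]
    inner = sum(a * b for a, b in zip(forecast_anomaly, truth_anomaly))
    norm_forecast = math.sqrt(sum(a * a for a in forecast_anomaly))
    norm_truth = math.sqrt(sum(b * b for b in truth_anomaly))
    if norm_forecast == 0.0 or norm_truth == 0.0:
        raise ValueError("skill is undefined when an anomaly vector is all zeros")
    return inner / (norm_forecast * norm_truth)


def average_skill(forecasts, truths, climatologies):
    """Mean skill over a collection of target dates."""
    skills = [
        anomaly_correlation_skill(f, y, c)
        for f, y, c in zip(forecasts, truths, climatologies)
    ]
    return sum(skills) / len(skills)
```

Because the anomalies are not re-centered across grid points, a forecast that simply reproduces climatology has zero anomaly everywhere and earns no credit for capturing the seasonal cycle.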

Operational ECMWF, CFSv2, and SubX debiasing

We bias correct a uniformly weighted ensemble of the ECMWF control forecast and its 50 ensemble forecasts following the ECMWF operational protocol60: for each target forecast date and grid point, we bias correct the 51-member ensemble forecast by subtracting the equal-weighted 11-member ECMWF ensemble reforecast averaged over all dates from the last 20 years within ±6 days from the target month-day combination and then adding the average ground-truth measurement over the same dates.

Following ref. 57, we bias correct a uniformly weighted 32-member CFSv2 ensemble forecast, formed from four model initializations averaged over the eight most recent 6-hourly issuances, in the following way: for each target forecast date and grid point, we bias correct the 32-member ensemble forecast by subtracting the equal-weighted 8-member CFSv2 ensemble hindcast averaged over all dates from 1999 to 2010 inclusive matching the target day and month and then adding the average ground-truth measurement over the same dates. We bias correct the SubX multimodel mean forecast in an identical manner, using the SubX multimodel mean reforecasts from 1999 to 2010 inclusive.
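
Both operational protocols share the same arithmetic at each grid point: subtract the mean reforecast over a set of matching historical dates and add back the mean observation over those dates. The sketch below illustrates this for a single grid point. The data layout, the function names, and the mapping of a February 29 target to February 28 in non-leap years are assumptions of this sketch.

```python
from datetime import date, timedelta


def matching_dates(target, years, window_days):
    """Dates in `years` within +/- window_days of the target month and day."""
    dates = []
    for year in years:
        try:
            center = target.replace(year=year)
        except ValueError:
            # Assumption of this sketch: Feb 29 maps to Feb 28 in non-leap years.
            center = date(year, 2, 28)
        for offset in range(-window_days, window_days + 1):
            dates.append(center + timedelta(days=offset))
    return dates


def debias(forecast, target, reforecasts, observations, years, window_days=0):
    """Subtract the mean reforecast and add the mean observation over matching dates.

    reforecasts and observations map dates to values at one grid point.
    """
    dates = [
        d for d in matching_dates(target, years, window_days)
        if d in reforecasts and d in observations
    ]
    if not dates:
        raise ValueError("no reforecast/observation pairs in the matching window")
    mean_reforecast = sum(reforecasts[d] for d in dates) / len(dates)
    mean_observed = sum(observations[d] for d in dates) / len(dates)
    return forecast - mean_reforecast + mean_observed
```

Under these assumptions, the ECMWF protocol corresponds to `years=range(target.year - 20, target.year)` with `window_days=6`, and the CFSv2 and SubX protocols to `years=range(1999, 2011)` with `window_days=0`.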

Adaptive bias correction

ABC is a uniformly weighted ensemble of three machine learning models, Dynamical++, Climatology++, and Persistence++, detailed below. A schematic of ABC model input and output data can be found in Supplementary Fig. S15, and supplementary algorithm details can be found in Supplementary Methods.

Dynamical++ (Algorithm S1) is a three-step approach to dynamical model correction: (i) adaptively select a window of observations around the target day of year and a range of issuance dates and lead times for ensembling based on recent historical performance, (ii) form an ensemble mean forecast by averaging over the selected range of issuance dates and lead times, and (iii) bias correct the ensemble forecast for each site by adding the mean value of the target variable and subtracting the mean forecast over the selected window of observations. Unlike standard debiasing strategies, which employ static ensembling and bias correction, Dynamical++ adapts to heterogeneity in forecasting error by learning to vary the amount of ensembling and the size of the observation window over time.

For a given target date t⋆ and lead time l⋆, the Dynamical++ training set \({\mathcal{T}}\) is restricted to data fully observable one day prior to the issuance date, that is, to dates t ≤ t⋆ − l⋆ − L − 1, where L = 14 represents the forecast period length. For each target date, Dynamical++ is run with the hyperparameter configuration that achieved the smallest mean progressive geographic root mean squared error (RMSE) over the preceding 3 years. Here, progressive indicates that each candidate model forecast is generated using all training data observable prior to the associated forecast issuance date. We considered every configuration with spans s ∈ {0, 14, 28, 35} (the span is the number of days included on each side of the target day of year), number of averaged issuance dates d⋆ ∈ {1, 7, 14, 28, 42}, and leads \({\mathcal{L}}=\{29\}\) for the weeks 5–6 lead time and \({\mathcal{L}}\in \{\{15\},[15,22],[0,29],\{29\}\}\) for the weeks 3–4 lead time.
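The following sketch illustrates three ingredients of Dynamical++ in isolation: selecting training dates within a span of the target day of year, applying the additive bias correction over that window, and picking the configuration with the smallest mean recent RMSE. It is not Algorithm S1. The helper names, the wrap-around at the new year, the fixed 365-day year, and the (span, number of issuance dates) configuration keys are all assumptions made for illustration.

```python
import numpy as np

def doy_window_mask(days_of_year, target_doy, span, year_length=365):
    """Mark training dates whose day of year lies within `span` days of the target."""
    diff = np.abs(np.asarray(days_of_year) - target_doy)
    circular = np.minimum(diff, year_length - diff)  # assumption: wrap around the new year
    return circular <= span

def bias_correct(ensemble_mean, past_forecasts, past_truth, mask):
    """Add the mean observation and subtract the mean forecast over the window."""
    return ensemble_mean + past_truth[mask].mean(axis=0) - past_forecasts[mask].mean(axis=0)

def pick_config(rmse_history):
    """Return the configuration with the smallest mean RMSE over recent target dates."""
    return min(rmse_history, key=lambda cfg: np.mean(rmse_history[cfg]))

# Hypothetical toy data: 4 training dates, G = 1 grid point.
days = np.array([1, 10, 360, 180])
mask = doy_window_mask(days, target_doy=3, span=14)
past_fc = np.array([[2.0], [4.0], [6.0], [100.0]])
past_obs = np.array([[1.0], [3.0], [5.0], [0.0]])
corrected = bias_correct(np.array([10.0]), past_fc, past_obs, mask)

# Keys are (span, number of averaged issuance dates); values are recent RMSEs.
best = pick_config({(14, 7): [1.0, 2.0], (0, 1): [1.2, 1.4]})
```

Day 360 falls inside the window around day 3 only because of the wrap-around, while day 180 is excluded, so the out-of-season forecast of 100 never enters the bias estimate.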

Inspired by climatology, Climatology++ (Algorithm S2) makes no use of the dynamical forecast and rather outputs the historical geographic median (if the user-supplied loss function is RMSE) or mean (if loss = MSE) of its target variables over all days in a window around the target day of year. Unlike a static climatology, Climatology++ adapts to target variable heterogeneity by learning to vary the size of the observation window and the number of training years over time.

For a given target date t⋆ and lead time l⋆, the Climatology++ training set \({\mathcal{T}}\) is restricted to data fully observable one day prior to the issuance date, that is, to dates t ≤ t⋆ − l⋆ − L − 1, where L = 14 represents the forecast period length. For each target date, Climatology++ is run with the hyperparameter configuration that achieved the smallest mean progressive geographic RMSE over the preceding 3 years. All spans s ∈ {0, 1, 7, 10} were considered. All precipitation configurations used the geographic MSE loss and all available training years. All temperature configurations used the geographic RMSE loss and either all available training years or Y = 29 training years. For lead times shorter than subseasonal (e.g., weeks 1–2), Climatology++ is excluded from the ABC forecast and only Dynamical++ and Persistence++ are averaged.

Persistence++ (Algorithm S3) accounts for recent weather trends by fitting an ordinary least-squares regression per grid point to optimally combine lagged temperature or precipitation measurements, climatology, and a dynamical ensemble forecast. For a given target date t⋆ and lead time l⋆, the Persistence++ training set \({\mathcal{T}}\) is restricted to data fully observable one day prior to the issuance date, that is, to dates t ≤ t⋆ − l⋆ − L − 1, where L = 14 represents the forecast period length. In Algorithm S3, the set \({\mathcal{L}}\) represents the full set of subseasonal lead times available in the dataset, i.e., \({\mathcal{L}}=[0,29]\).
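A per-grid-point least-squares fit of this kind can be sketched as follows. The feature layout (P predictors per grid point, such as lagged measurements, climatology, and a dynamical ensemble forecast), the intercept term, and the function names are assumptions for illustration; Algorithm S3 in the Supplementary Methods remains the authoritative description.

```python
import numpy as np

def fit_persistence_pp(features, targets):
    """Fit one ordinary least-squares model per grid point.

    features: (T, G, P) predictors for T training dates and G grid points
    targets:  (T, G) observed target values
    Returns coefficients of shape (G, P + 1), with the intercept stored last.
    """
    n_dates, n_grid, n_feat = features.shape
    coefs = np.empty((n_grid, n_feat + 1))
    for g in range(n_grid):
        # Assumption: include an intercept column alongside the P predictors.
        design = np.column_stack([features[:, g, :], np.ones(n_dates)])
        coefs[g] = np.linalg.lstsq(design, targets[:, g], rcond=None)[0]
    return coefs

def predict_persistence_pp(coefs, new_features):
    """Apply the fitted per-grid-point models to (G, P) predictors for one date."""
    design = np.column_stack([new_features, np.ones(len(new_features))])
    return np.sum(design * coefs, axis=1)

# Synthetic noise-free check: the fit should recover the generating coefficients.
rng = np.random.default_rng(0)
feats = rng.normal(size=(50, 3, 2))
true_coefs = np.array([[1.0, 2.0, 0.5], [0.0, -1.0, 3.0], [2.0, 2.0, -2.0]])
targets = np.einsum("tgp,gp->tg", feats, true_coefs[:, :2]) + true_coefs[:, 2]
fitted = fit_persistence_pp(feats, targets)
prediction = predict_persistence_pp(fitted, feats[0])
```

Fitting each grid point separately lets the regression weights differ by location, at the cost of ignoring spatial correlation between neighboring points.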

Debiasing baselines

NN-A (ref. 22) learns a non-linear mapping between daily bias-corrected CFSv2 precipitation and temperature and observed precipitation and temperature for the contiguous U.S. In particular, the model’s inputs (predictors) consist of the CFSv2 bias-corrected ensemble mean for total precipitation and temperature anomalies as well as the observed climatologies for precipitation and temperature. The model’s target variables (predictands) are observed temperature anomalies and total precipitation. The daily target variables are converted to a 2-week mean and a 2-week total, respectively, and are predicted simultaneously for the entire forecast domain. NN-A is a neural network with a single hidden layer consisting of K = 200 hidden neurons. This architecture enables the model to account for non-linear relationships among input and target variables as well as the spatial dependency and co-variability that characterize these variables. For a given lead time, the NN-A model was trained on all available data from January 2000 to December 2017 (inclusive) save for those dates that were unobservable on the issuance date associated with a January 1, 2018, target date. Each NN-A model was trained using the Adam algorithm (ref. 61) for 10,001 epochs without dropout as in ref. 22 and used ReLU activations and the default batch size (32) and learning rate (0.001) from TensorFlow (ref. 62).

LOESS debiasing (ref. 30) adds a correction to a dynamical model forecast using locally estimated scatterplot smoothing (LOESS). Using all dates prior to 2018 with available ground-truth measurements and (re)forecast data, the measurements for each month-day combination (save February 29) are averaged, resulting in a sequence of 365 values. The same is done for the forecasts. A local linear regression is run on each of these sequences using a fraction of 0.1 of the points to fit each value. The end result is two smoothed sequences of 365 values, one for the measurement data and one for the forecast data. The entrywise difference (for temperature) or ratio (for precipitation) between these sequences is used as a correction that is added to (for temperature) or multiplied with (for precipitation) the forecasts made in 2018 and beyond, based on the target forecast day and month. Note that the locality of the smoothed corrections, which only use consecutive days in the calendar year and do not wrap around from December to January, ensures that every forecast is made using only training data observable on the forecast issuance date.
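To make the smoothing step concrete, here is a small NumPy sketch of a local linear regression over a day-of-year sequence, followed by the additive or multiplicative correction. The tricube weighting is the conventional LOESS kernel but is an assumption about the original implementation, as are the function names and the minimum neighborhood size of three points.

```python
import numpy as np

def loess_smooth(values, frac=0.1):
    """Local linear regression over a sequence, without wrap-around at the ends."""
    values = np.asarray(values, dtype=float)
    n = len(values)
    x = np.arange(n, dtype=float)
    k = max(int(np.ceil(frac * n)), 3)  # number of neighbors used for each fit
    smoothed = np.empty(n)
    for i in range(n):
        dist = np.abs(x - x[i])
        idx = np.argsort(dist, kind="stable")[:k]
        # Tricube weights: 1 at the center, 0 at the farthest neighbor (assumed kernel).
        w = (1.0 - (dist[idx] / dist[idx].max()) ** 3) ** 3
        sw = np.sqrt(w)
        # Center x at the fit location so the intercept is the fitted value.
        design = np.column_stack([np.ones(k), x[idx] - x[i]]) * sw[:, None]
        beta = np.linalg.lstsq(design, values[idx] * sw, rcond=None)[0]
        smoothed[i] = beta[0]
    return smoothed

def loess_correction(obs_by_day, fcst_by_day, multiplicative, frac=0.1):
    """Per-day correction: difference (temperature) or ratio (precipitation)."""
    smooth_obs = loess_smooth(obs_by_day, frac)
    smooth_fcst = loess_smooth(fcst_by_day, frac)
    return smooth_obs / smooth_fcst if multiplicative else smooth_obs - smooth_fcst

# Hypothetical 50-day toy sequences standing in for the 365 month-day averages.
day = np.arange(50.0)
fcst = 0.1 * day + 5.0
additive = loess_correction(fcst + 2.0, fcst, multiplicative=False, frac=0.2)
ratio = loess_correction(3.0 * fcst, fcst, multiplicative=True, frac=0.2)
```

A corrected temperature forecast would then be the raw forecast plus the entry of `additive` for the target day, and a corrected precipitation forecast would be the raw forecast times the matching entry of `ratio`.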

Quantile mapping (ref. 26) corrects a base dynamical forecast by aligning the quantiles of forecast and measurement data. For our training set, we use all dates prior to 2018 with available ground-truth measurements and (re)forecast data. For a given grid point, target date, and dynamical model forecast, we first identify the quantile rank of the forecasted value amongst all training set forecasts issued for the same month-day combination. If the quantile rank exceeds 90%, we replace its value with 90%; if the quantile rank falls below 10%, we replace its value with 10%. We then add to the forecast the corresponding quantile of the training set measurements for the target month and day and subtract the corresponding quantile of the training set forecasts for the target month and day. In the case of precipitation, if the resulting value is negative, we set the forecast to zero.
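The quantile mapping steps can be sketched for a single grid point and month-day combination as follows. The empirical rank definition (fraction of training forecasts at or below the forecast value), the default linear interpolation in `np.quantile`, and the function name are assumptions made for illustration.

```python
import numpy as np

def quantile_map(forecast, train_forecasts, train_truth, is_precip=False):
    """Shift a forecast by the gap between observed and forecast quantiles."""
    train_forecasts = np.asarray(train_forecasts, dtype=float)
    train_truth = np.asarray(train_truth, dtype=float)
    # Assumed rank definition: share of training forecasts at or below the forecast.
    rank = np.mean(train_forecasts <= forecast)
    rank = np.clip(rank, 0.1, 0.9)  # cap the rank at the 10% and 90% levels
    corrected = forecast + np.quantile(train_truth, rank) - np.quantile(train_forecasts, rank)
    if is_precip:
        corrected = max(corrected, 0.0)  # precipitation cannot be negative
    return float(corrected)

# Hypothetical training data: forecasts run 2 units below the observations.
train_fc = np.arange(1.0, 11.0)
train_obs = train_fc + 2.0
mid_range = quantile_map(5.0, train_fc, train_obs)
beyond_range = quantile_map(100.0, train_fc, train_obs)  # rank is capped at 90%
dry = quantile_map(5.0, train_fc, train_fc - 20.0, is_precip=True)
```

Capping the rank keeps forecasts that lie outside the training range from being corrected with poorly estimated tail quantiles.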

Cohort Shapley and Shapley effects

Cohort Shapley and Shapley effects use Shapley values to quantify the impact of variables on outcomes. Shapley values are based on work in game theory (ref. 33) exploring how to assign appropriate rewards to individuals who contribute to an outcome as part of a team. When applied to explanatory variables, Shapley values can be thought of as roughly analogous to the coefficients in a linear regression. Importantly, unlike linear regression coefficients, Shapley values are applicable in settings where the interaction among variables is highly non-linear. The procedure for computing Shapley values involves testing how much a change to one explanatory variable influences a target outcome. These tests are carried out by measuring how the target outcome varies when a given explanatory variable changes in the context of subsets of other explanatory variables.
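The subset-based procedure can be illustrated with the classical Shapley value of a small cooperative game, computed exactly by enumerating every subset of the other players. This is the generic game-theoretic definition, not the cohort-based value function used by Cohort Shapley; the three-player toy game and all names below are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(players, value):
    """Exact Shapley values by enumerating all coalitions of the other players."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                total += weight * (value(coalition | {p}) - value(coalition))
        phi[p] = total
    return phi

def toy_value(coalition):
    """Toy payoff: 'a' adds 10, 'b' adds 20, and together they add 30 more."""
    total = 0.0
    if "a" in coalition:
        total += 10.0
    if "b" in coalition:
        total += 20.0
    if {"a", "b"} <= coalition:
        total += 30.0
    return total

phi = shapley_values(["a", "b", "c"], toy_value)
```

The interaction bonus of 30 is split evenly between `a` and `b`, the player `c` that never changes the payoff receives zero, and the three values sum to the payoff of the full coalition.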

Shapley effects (ref. 34) are a specific instantiation of the general Shapley value principle, designed for measuring variable importance. For a given outcome variable to be explained (for example, the skill difference between ABC-ECMWF and operationally debiased ECMWF measured on each forecast date) and a collection of candidate explanatory variables (for example, relevant meteorological variables observed at the time of each forecast’s issuance), the Shapley effects are overall measures of variable importance that quantify how much of the outcome variable’s variance is explained by each candidate explanatory variable. Cohort Shapley values (ref. 31) provide a more granular application of Shapley values by quantifying the impact of each explanatory variable on the measured outcome of each individual forecast.

Opportunistic ABC workflow

Here we detail the steps of the opportunistic ABC workflow illustrated in Fig. 8 using ECMWF as an example of dynamical input. The same workflow applies to any other dynamical input.

1. Identify a set of V candidate explanatory variables. Here we use the temporal variables enumerated in ref. 15 (Fig. 2) augmented with the first two PCs of 500 hPa geopotential heights and the target month. To ensure that the workflow can be deployed operationally, we use lagged observations with lags chosen so that each variable is observable on the forecast issuance date. MEI.v2 is lagged by 45 days when forecasting weeks 3–4 and by 59 days for weeks 5–6. The other variables are lagged by 30 days for weeks 3–4 and by 44 days for weeks 5–6.

2. Compute the temporal skill difference between ABC-ECMWF and debiased ECMWF for each target date in the evaluation period.

3. For each continuous explanatory variable (e.g., hgt_500_pc2), divide the evaluation period forecasts into 10 bins, determined by the deciles of the explanatory variable. For each categorical variable (e.g., mjo_phase), divide the forecasts into bins determined by the categories (e.g., MJO phases).

4. Use the cohortshapley Python package to compute overall variable importance (measured by Shapley effects) and forecast-specific variable impact values explaining the skill differences.

5. Within each variable bin, compute the fraction of forecasts with positive Cohort Shapley impact values. Report that fraction as an estimate of the probability of positive variable impact, and compute a 95% bootstrap confidence interval. Flag all bin probabilities within the confidence interval of the highest probability bin as high impact; similarly, flag all bin probabilities within the confidence interval of the lowest probability bin as low impact. The remaining bins, those that fall outside of both confidence intervals, have an intermediate impact and are not flagged as either low or high impact. Visualize and interpret the highest and lowest impact bins.

6. Identify the forecast most impacted by the explanatory variable in the high-impact bins. Visualize the ABC-ECMWF and debiased ECMWF forecasts and the associated explanatory variable for that target date.

7. For each k ∈ {0, …, V}, compute opportunistic ABC skill when k or more explanatory variables fall into high-impact bins. Let k⋆ represent the integer at which opportunistic ABC skill is maximized.

8. At each future forecast issuance date, deploy ABC-ECMWF if k⋆ or more explanatory variables fall into high-impact bins and deploy debiased ECMWF otherwise.
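Two of the workflow steps lend themselves to a short sketch: the per-bin probability of positive impact with a bootstrap interval (step 5) and the choice of k⋆ (steps 7 and 8). The percentile bootstrap, the number of resamples, and the reading of opportunistic skill as the average skill when ABC is deployed on dates with at least k high-impact variables and the debiased baseline is deployed otherwise are assumptions; the function names and toy numbers are hypothetical.

```python
import numpy as np

def positive_fraction_ci(impacts, n_boot=1000, seed=0):
    """Fraction of positive impact values with a 95% percentile bootstrap interval."""
    rng = np.random.default_rng(seed)
    positive = np.asarray(impacts) > 0
    # Resample the forecasts in the bin with replacement (assumed bootstrap scheme).
    boot = rng.choice(positive, size=(n_boot, positive.size), replace=True).mean(axis=1)
    lower, upper = np.quantile(boot, [0.025, 0.975])
    return float(positive.mean()), float(lower), float(upper)

def choose_k_star(high_counts, abc_skill, baseline_skill, n_vars):
    """Pick the threshold k that maximizes average skill of the switching rule."""
    high_counts = np.asarray(high_counts)
    best_k, best_skill = 0, -np.inf
    for k in range(n_vars + 1):
        # Deploy ABC when at least k variables are in high-impact bins.
        deployed = np.where(high_counts >= k, abc_skill, baseline_skill)
        if deployed.mean() > best_skill:
            best_k, best_skill = k, float(deployed.mean())
    return best_k, best_skill

# Hypothetical toy inputs for one bin and four target dates.
fraction, lower, upper = positive_fraction_ci([1.0, -1.0, 2.0, 3.0])
k_star, skill = choose_k_star(
    high_counts=[0, 1, 2, 3],
    abc_skill=np.array([0.1, 0.2, 0.5, 0.6]),
    baseline_skill=np.array([0.3, 0.3, 0.3, 0.3]),
    n_vars=3,
)
```

In the toy example ABC only beats the baseline on dates with two or more high-impact variables, so the rule settles on a threshold of 2.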

Data availability

The SubseasonalClimateUSA dataset used in this study has been deposited on Microsoft Azure and is available for download via the subseasonal_data Python package: https://github.com/microsoft/subseasonal_data. This work is based on S2S data. S2S is a joint initiative of the World Weather Research Programme (WWRP) and the World Climate Research Programme (WCRP). The original S2S database is hosted at ECMWF as an extension of the TIGGE database. We acknowledge the agencies that support the SubX system, and we thank the climate modeling groups (Environment Canada, NASA, NOAA/NCEP, NRL and University of Miami) for producing and making available their model output. NOAA/MAPP, ONR, NASA, NOAA/NWS jointly provided coordinating support and led the development of the SubX system. Source Data are provided with this paper.

Code availability

Python 3 code replicating all experiments and analyses in this work is available at https://github.com/microsoft/subseasonal_toolkit.

References

Troccoli, A. Seasonal climate forecasting. Meteorol. Appl. 17, 251–268 (2010).

Merryfield, W. J. et al. Current and emerging developments in subseasonal to decadal prediction. Bull. Am. Meteorol. Soc. 101, E869–E896 (2020).

White, C. J. et al. Potential applications of subseasonal-to-seasonal (S2S) predictions. Meteorol. Appl. 24, 315–325 (2017).

National Academies of Sciences Engineering and Medicine. in Next Generation Earth System Prediction: Strategies for Subseasonal to Seasonal Forecasts (The National Academies Press, Washington, DC, 2016).

Mariotti, A. et al. Windows of opportunity for skillful forecasts subseasonal to seasonal and beyond. Bull. Am. Meteorol. Soc. 101, E608–E625 (2020).

Vitart, F., Robertson, A. W. & Anderson, D. L. Subseasonal to seasonal prediction project: bridging the gap between weather and climate. Bull. World Meteorol. Organ. 61, 23 (2012).

L’Heureux, M. L., Tippett, M. K. & Becker, E. J. Sources of subseasonal skill and predictability in wintertime California precipitation forecasts. Weather Forecast. 36, 1815–1826 (2021).

Reyniers, M. in Quantitative Precipitation Forecasts based on Radar Observations: Principles, Algorithms and Operational Systems (Institut Royal Météorologique de Belgique Brussel, Belgium, 2008).

Vitart, F. et al. The subseasonal to seasonal (S2S) prediction project database. Bull. Am. Meteorol. Soc. 98, 163–173 (2017).

Chantry, M., Christensen, H., Dueben, P. & Palmer, T. Opportunities and challenges for machine learning in weather and climate modelling: hard, medium and soft AI. Philos. Trans. R. Soc. A 379, 20200083 (2021).

Pegion, K. et al. The subseasonal experiment (SubX): a multimodel subseasonal prediction experiment. Bull. Am. Meteorol. Soc. 100, 2043–2060 (2019).

Lang, A. L., Pegion, K. & Barnes, E. A. Introduction to special collection: “bridging weather and climate: subseasonal-to-seasonal (S2S) prediction”. J. Geophys. Res. Atmos. 125, e2019JD031833 (2020).

Li, L., Schmitt, R. W., Ummenhofer, C. C. & Karnauskas, K. B. Implications of North Atlantic sea surface salinity for summer precipitation over the US Midwest: mechanisms and predictive value. J. Clim. 29, 3143–3159 (2016).

Cohen, J. et al. S2S reboot: an argument for greater inclusion of machine learning in subseasonal to seasonal forecasts. WIREs Clim. Change 10, e00567 (2019).

Hwang, J., Orenstein, P., Cohen, J., Pfeiffer, K. & Mackey, L. Improving subseasonal forecasting in the western U.S. with machine learning. in Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, ser. KDD ’19, 2325–2335 (Association for Computing Machinery, New York, NY, USA) https://doi.org/10.1145/3292500.3330674 (2019).

Arcomano, T. et al. A machine learning-based global atmospheric forecast model. Geophys. Res. Lett. 47, e2020GL087776 (2020).

He, S., Li, X., DelSole, T., Ravikumar, P. & Banerjee, A. Sub-seasonal climate forecasting via machine learning: challenges, analysis, and advances. Proceedings of the AAAI Conference on Artificial Intelligence 35, 169–177 (2021).

Yamagami, A. & Matsueda, M. Subseasonal forecast skill for weekly mean atmospheric variability over the northern hemisphere in winter and its relationship to midlatitude teleconnections. Geophys. Res. Lett. 47, e2020GL088508 (2020).

Wang, C. et al. Improving the accuracy of subseasonal forecasting of China precipitation with a machine learning approach. Front. Earth Sci. 9, 659310 (2021).

Kim, M., Yoo, C. & Choi, J. Enhancing subseasonal temperature prediction by bridging a statistical model with dynamical Arctic Oscillation forecasting. Geophys. Res. Lett. 48, e2021GL093447 (2021).

Kim, H., Ham, Y., Joo, Y. & Son, S. Deep learning for bias correction of MJO prediction. Nat. Commun. 12, 1–7 (2021).

Fan, Y., Krasnopolsky, V., van den Dool, H., Wu, C.-Y. & Gottschalck, J. Using artificial neural networks to improve CFS week 3-4 precipitation and 2-meter air temperature forecasts. Weather and Forecasting; https://journals.ametsoc.org/view/journals/wefo/aop/WAF-D-20-0014.1/WAF-D-20-0014.1.xml (2021).

Watson-Parris, D. Machine learning for weather and climate are worlds apart. Philos. Trans. R. Soc. A 379, 20200098 (2021).

Weyn, J. A., Durran, D. R., Caruana, R. & Cresswell-Clay, N. Sub-seasonal forecasting with a large ensemble of deep-learning weather prediction models. J. Adv. Modeling Earth Syst. 13, e2021MS002502 (2021).

Srinivasan, V., Khim, J., Banerjee, A. & Ravikumar, P. Subseasonal climate prediction in the western US using Bayesian spatial models. in Proceedings of the Thirty-Seventh Conference on Uncertainty in Artificial Intelligence, ser. Proceedings of Machine Learning Research vol. 161, July 27–30 (eds de Campos, C. & Maathuis, M. H.) 961–970 (PMLR, 2021).

Panofsky, H. A. & Brier, G. W. in Some Applications of Statistics to Meteorology (Pennsylvania State University Press, University Park, PA, USA, 1968).

Monhart, S. et al. Skill of subseasonal forecasts in Europe: effect of bias correction and downscaling using surface observations. J. Geophys. Res. Atmos. 123, 7999–8016 (2018).

Baker, S. A., Wood, A. W. & Rajagopalan, B. Developing subseasonal to seasonal climate forecast products for hydrology and water management. JAWRA J. Am. Water Resour. Assoc. 55, 1024–1037 (2019).

Li, W. et al. Evaluation and bias correction of S2S precipitation for hydrological extremes. J. Hydrometeorol. 20, 1887–1906 (2019).

Cleveland, W. S. & Devlin, S. J. Locally weighted regression: an approach to regression analysis by local fitting. J. Am. Stat. Assoc. 83, 596–610 (1988).

Mase, M., Owen, A. B. & Seiler, B. Explaining Black Box Decisions by Shapley Cohort Refinement; https://arxiv.org/abs/1911.00467 (2019).

Hersbach, H. Decomposition of the continuous ranked probability score for ensemble prediction systems. Weather Forecast. 15, 559–570 (2000).

Shapley, L. S. A value for n-person games. in Contributions to the Theory of Games (AM-28), Volume II (eds Kuhn, H. W. & Tucker, A. W.) 307–318 (Princeton University Press, Princeton, 1953).

Song, E., Nelson, B. L. & Staum, J. Shapley effects for global sensitivity analysis: theory and computation. SIAM/ASA J. Uncertain. Quantif. 4, 1060–1083 (2016).

Wolter, K. & Timlin, M. S. Monitoring ENSO in COADS with a seasonally adjusted principal component index. in Proc. of the 17th Climate Diagnostics Workshop 52–57 (1993).

Christidis, N. & Stott, P. A. Changes in the geopotential height at 500 hPa under the influence of external climatic forcings. Geophys. Res. Lett. 42, 10–798 (2015).

Woolnough, S. J. Chapter 5 – the Madden-Julian Oscillation. in Sub-Seasonal to Seasonal Prediction (eds Robertson, A. W. & Vitart, F.) 93–117 (Elsevier); https://www.sciencedirect.com/science/article/pii/B978012811714900005X (2019).

Chevallier, M., Massonnet, F., Goessling, H., Guémas, V. & Jung, T. Chapter 10 – the role of sea ice in sub-seasonal predictability. in Sub-Seasonal to Seasonal Prediction (eds Robertson, A. W. & Vitart, F.) 201–221 (Elsevier); https://www.sciencedirect.com/science/article/pii/B9780128117149000103 (2019).

Bauer, P., Thorpe, A. & Brunet, G. The quiet revolution of numerical weather prediction. Nature 525, 47–55 (2015).

Intergovernmental Panel on Climate Change. Evaluation of Climate Models 741–866 (Cambridge University Press, 2014).

Zadra, A. et al. Systematic errors in weather and climate models: nature, origins, and ways forward. Bull. Am. Meteorol. Soc. 99, ES67–ES70 (2018).

Zhang, L., Kim, T., Yang, T., Hong, Y. & Zhu, Q. Evaluation of subseasonal-to-seasonal (S2S) precipitation forecast from the North American multi-model ensemble phase II (NMME-2) over the contiguous US. J. Hydrol. 603, 127058 (2021).

Dutra, E., Johannsen, F. & Magnusson, L. Late spring and summer subseasonal forecasts in the northern hemisphere midlatitudes: biases and skill in the ECMWF model. Monthly Weather Rev. 149, 2659–2671 (2021).

Ribeiro, M. T., Singh, S. & Guestrin, C. “Why should I trust you?”: explaining the predictions of any classifier. in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, ser. KDD ’16 1135–1144 (Association for Computing Machinery, New York, NY, USA, 2016).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. in Advances in Neural Information Processing Systems Vol. 30 (eds Guyon, I. et al.) (Curran Associates, Inc., 2017).

SubseasonalClimateUSA dataset. Subseasonal data Python package; https://github.com/microsoft/subseasonal_data (2021).

Fan, Y. & van den Dool, H. A global monthly land surface air temperature analysis for 1948-present. J. Geophys. Res. Atmos. 113, D1 (2008).

Xie, P. et al. A gauge-based analysis of daily precipitation over East Asia. J. Hydrometeorol. 8, 607–626 (2007).

Chen, M. et al. Assessing objective techniques for gauge-based analyses of global daily precipitation. J. Geophys. Res. Atmos. 113, D4 (2008).

Xie, P., Chen, M. & Shi, W. CPC unified gauge-based analysis of global daily precipitation. in 24th Conf. on Hydrology Vol. 2 (Amer. Meteor. Soc, Atlanta, GA, 2010).

Kalnay, E. et al. The NCEP/NCAR 40-year reanalysis project. Bull. Am. Meteorol. Soc. 77, 437–472 (1996).

Reynolds, R. W. et al. Daily high-resolution-blended analyses for sea surface temperature. J. Clim. 20, 5473–5496 (2007).

Wheeler, M. C. & Hendon, H. H. An all-season real-time multivariate MJO index: development of an index for monitoring and prediction. Monthly Weather Rev. 132, 1917–1932 (2004).

Wolter, K. & Timlin, M. S. Measuring the strength of ENSO events: how does 1997/98 rank? Weather 53, 315–324 (1998).

Wolter, K. & Timlin, M. S. El Niño/Southern Oscillation behaviour since 1871 as diagnosed in an extended multivariate ENSO index (MEI.ext). Int. J. Climatol. 31, 1074–1087 (2011).

Risbey, J. S. et al. Standard assessments of climate forecast skill can be misleading. Nat. Commun. 12, 4346 (2021).

Nowak, K., Webb, R., Cifelli, R. & Brekke, L. Sub-seasonal climate forecast rodeo. in 2017 AGU Fall Meeting, New Orleans, LA, 11–15 Dec (2017).

Wilks, D. S. Statistical Methods in the Atmospheric Sciences, ser. Vol. 100 (International Geophysics. Academic Press, 2011).

Blum, A., Kalai, A. & Langford, J. Beating the hold-out: bounds for k-fold and progressive cross-validation. in Proceedings of the Twelfth Annual Conference on Computational Learning Theory 203–208 (1999).

ECMWF. Re-forecast for medium and extended forecast range (accessed 29 June 2022); https://www.ecmwf.int/en/forecasts/documentation-and-support/extended-range/re-forecast-medium-and-extended-forecast-range (2022).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. in 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7–9, 2015, Conference Track Proceedings (eds Bengio, Y. & LeCun, Y.) (2015).

Abadi, M. et al. TensorFlow: large-scale machine learning on heterogeneous systems; https://www.tensorflow.org/ (2015).

Acknowledgements

We thank Jessica Hwang for her suggestion to explore Cohort Shapley. This work was supported by Microsoft AI for Earth (S.M. and G.F.); the Climate Change AI Innovation Grants program (S.M., P.O., G.F., J.C., E.F., and L.M.), hosted by Climate Change AI with the support of the Quadrature Climate Foundation, Schmidt Futures, and the Canada Hub of Future Earth; FAPERJ (Fundação Carlos Chagas Filho de Amparo à Pesquisa do Estado do Rio de Janeiro) grant SEI-260003/001545/2022 (P.O.); NOAA grant OAR-WPO-2021-2006592 (G.F., J.C., and L.M.); and the National Science Foundation grant PLR-1901352 (J.C.).

Author information

Contributions

S.M., P.O., G.F., J.C., E.F., and L.M. designed the research, discussed the results, and contributed to the writing of the manuscript. S.M., P.O., G.F., M.O., and L.M. performed model runs. S.M., P.O., and L.M. analyzed model outputs and generated figures.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mouatadid, S., Orenstein, P., Flaspohler, G. et al. Adaptive bias correction for improved subseasonal forecasting. Nat Commun 14, 3482 (2023). https://doi.org/10.1038/s41467-023-38874-y
