Abstract

Open quantum systems have been shown to host a plethora of exotic dynamical phases. Measurement-induced entanglement phase transitions in monitored quantum systems are a striking example of this phenomena. However, naive realizations of such phase transitions requires an exponential number of repetitions of the experiment which is practically unfeasible on large systems. Recently, it has been proposed that these phase transitions can be probed locally via entangling reference qubits and studying their purification dynamics. In this work, we leverage modern machine learning tools to devise a neural network decoder to determine the state of the reference qubits conditioned on the measurement outcomes. We show that the entanglement phase transition manifests itself as a stark change in the learnability of the decoder function. We study the complexity and scalability of this approach in both Clifford and Haar random circuits and discuss how it can be utilized to detect entanglement phase transitions in generic experiments.

Similar content being viewed by others

Introduction

Entanglement entropy in closed quantum systems that thermalize generally tends to increase until reaching a volume-law behavior with entanglement spread throughout the system1,2. Coupling to a bath profoundly changes the internal evolution of the system3, which in turn can suppress the growth of entanglement and correlations within the system to an area-law behavior4,5. A prominent example of such systems is random quantum circuits with intermediate measurements6,7,8,9,10. In these circuits, where the unitary time evolution of the system is interspersed by quantum measurements, the competition between unitary and non-unitary elements leads to a measurement-induced phase transition (MIPT) between a pure phase with an area-law and a mixed phase with a volume-law entanglement behavior11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33. Such entanglement phase transitions are only accessible when the density matrix is conditioned on the measurement outcomes while they are hidden from any observable which can be expressed as a linear function of the density matrix. On the other hand, to experimentally probe observables which are non-linear functions of the density matrix, one naively needs to reproduce multiple copies of the same state. However, due to intrinsic randomness in measurement outcomes, this naive approach requires repeating the experiment exponentially many times (in system size)10,26.

Building on the close connection between measurement-induced entanglement phase transitions and quantum error correction11,12,34,35,36,37, a possible workaround to this obstacle was found in ref. 38 for purification transitions, which generically coincide with area-to-volume-law entanglement transitions in random circuit models without symmetry or topological order27. It was shown how to probe these phase transitions through purification dynamics of an ancilla reference qubit that is initially entangled to local system degrees of freedom. Subsequently, the time dependence of the entanglement entropy of the reference qubits signifies the phase transition properties11,15,38. To employ this method, one needs to find the density matrix of reference qubits conditioned on the measurement outcomes of the circuit. Hence, the final objective of this approach is to obtain a “decoder” that maps the measurement outcomes to the density matrix of the reference qubit. However, such decoders are only known and implemented for special classes of circuits such as stabilizer circuits9. For more generic circuits like Haar-random circuits, finding an analytical solution to this problem is likely unfeasible.

Here, motivated by the recent successful applications of machine learning algorithms in quantum sciences39 and especially optimizing quantum error correction codes and quantum decoders40,41,42,43,44,45,46,47,48, we provide a generic neural network (NN) approach that can efficiently find the aforementioned decoders. First, we sketch our physically motivated NN architecture. Although we use numerical simulations of Clifford circuits to show the efficacy of our NN decoder, we argue that in principle the same decoder with slight modifications should work for any generic circuit. We investigate the complexity of our learning task by studying the number of circuit runs required for training the neural network decoder. Importantly, we show that the learning task only needs measurement outcomes inside a rectangle encompassing the statistical light-cone19,38 of the reference qubit. Furthermore, we demonstrate that by studying the temporal behavior of the learnability of the quantum trajectories, one can estimate the critical properties of the phase transition. We also verify that for large circuits one can train the NN over smaller circuits which provides evidence for the scalability of our method. Finally, we explain how our method can be applied to generic circuits with Haar random gates, and we study the temporal behavior of the averaged entanglement entropy for two values of measurement rate in the area-law and volume-law phases for a small ensemble of such circuits.

Results

Model

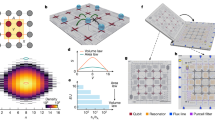

The circuits that we study have a brickwork structure as in Fig. 1, with L qubits. We consider time evolution with T time steps with repetitive layers of two-qubit random unitary gates, followed by a round of single-site measurements of the Pauli Z operators at each site with probability p. As one tune p past some critical value pc, there is a phase transition from a volume-law entanglement behavior (p < pc) to an area-law behavior (p > pc) and a logarithmic scaling at the critical point (p = pc). Crucially for this work, this phase transition is also manifested in the time dependence of the entanglement entropy of a reference qubit entangled with the system SQ(t)38. SQ(T), averaged over many circuit runs, is known as the coherent quantum information and plays a crucial role in the fundamental theory of quantum error correction49. For polynomials in system-size circuit depths, SQ(T) maintains a finite value in the volume-law phase and vanishes in the area-law phase. The protocol we use to probe SQ(t) is illustrated in Fig. 1a. Starting from a pure product state, we make a Bell pair out of the qubit in the middle and an ancilla reference qubit. Throughout the paper, we use periodic boundary conditions for the circuit.

a Brickwall structure of a hybrid circuit with random two-qubit Clifford gates interspersed with projective Z measurements and with periodic boundary conditions. \({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}}\) denotes the measurement outcome matrix with matrix elements mi = {0, ± 1} (mi = 0 when the corresponding qubit is not measured, and mi = ± 1 when a qubit’s Pauli Z is measured). Here, T = 3 for this example. b Neural network architecture: We use convolutional neural networks composed of C: convolutional, P: pooling, and F: fully connected layers, trained on quantum trajectories. The neural network implements a decoder function that predicts the measurement result for the reference qubit σp using the measurement record in the circuit \({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}}\) as input.

Decoder

To find SQ(T) in the experiment, we need to find the density matrix of the reference qubit at time T, which is a vector inside the Bloch sphere and can be specified by its three components 〈σX〉, 〈σY〉 and 〈σZ〉. Therefore, probing the phase transition can be viewed as the task of finding a decoder function \({F}_{{{{{{{{\mathcal{C}}}}}}}}}\) for a given circuit \({{{{{{{\mathcal{C}}}}}}}}\), such that

where \({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}}\) is the set of circuit measurement outcomes. Let \({p}_{{\rm {P}}}(m|{{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}})\) for P ∈ {X, Y, Z} denote the probability of getting reference qubit outcome m = ± 1 when measuring σP of the reference qubit after time t = T, conditioned on the measurement outcomes \({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}}\). Since \(\langle {\sigma }_{{\rm {P}}}\rangle={\sum }_{m=\pm 1}m\,{p}_{{\rm {P}}}(m|{{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}})\), the problem of finding the decoder \({F}_{{{{{{{{\mathcal{C}}}}}}}}}\) is equivalent to finding the probability distributions \({p}_{{\rm {P}}}(m|{{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}})\) for P ∈ {X, Y, Z}.

Deep learning algorithm

Instead of finding \({p}_{{\rm {P}}}(m|{{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}})\) analytically for a given circuit \({{{{{{{\mathcal{C}}}}}}}}\), we plan to use ML methods to learn these functions from a set of sampled data points which in principle could be obtained from experiments. The task of learning conditional probability distributions is known as the probabilistic classification task in ML literature50,51. Let us fix the circuit \({{{{{{{\mathcal{C}}}}}}}}\) and the Pauli P. A sample data point is a pair of \(({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}},m)\) for a single run of the circuit where \({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}}\) is the circuit measurement outcomes and m is the of outcome of measuring the reference qubit in the σP basis at the end of the circuit. By repeating the experiment Nt times, we can generate a training set of Nt data points. By training a neural network using this data set, we obtain a neural network representation of the function \({p}_{{\rm {P}}}(m|{{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}})\).

Framing the problem as a probabilistic classification task does not necessarily mean that the learning task would be efficient. Indeed, given that the number of different possible \({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}}\) outcomes scales exponentially with the system size, one would naively expect that the minimum required Nt should also scale exponentially for the learning task to succeed, i.e., we need to run the circuit exponential number of times to generate the required training data set. However, the crucial point made in ref. 38 is that, when the reference qubit is initially entangled locally to the system, its density matrix at the end of the circuit only depends on the measurement outcomes that lie inside a statistical light cone, and up to a depth bounded by the correlation time that is finite in the system size away from the critical point. Hence, for a typical circuit away from the critical point, the function \({p}_{{\rm {P}}}(m|{{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}})\) depends only on a finite number of elements in \({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}}\) and that makes the learning task feasible.

To show the effectiveness of this method, we test our decoder using data points gathered from numerical simulation of Clifford circuits with pc = 0.160(1)8, which enables us to study circuits of large enough sizes. Due to Clifford dynamics, the reference qubit either remains completely mixed at t = T or it is purified along one of the Pauli axis. This means the measurement outcome of σP at the end of the circuit is either deterministic or completely random. Therefore, it is more natural to view the problem as a hard classification task (rather than probabilistic) where we train the neural network to determine the measurement outcome of σP (see the “Methods” section). Note, if the reference qubit is purified at the end of the circuit, then the decoder can in principle learn the decoding function while, if it is not, then the measurement outcomes are completely random, leading to an inevitable failure of the hard classification. Thus, the purification phase transition shows itself as a learnability phase transition. It is worth noting that we are only changing how we interpret the output of the NN, i.e. we pick the label with the highest probability, so the same NN architecture can be used for more generic gate sets. For simplicity, we also only look at the data points corresponding to the basis P in which the reference qubit is purified. In an experiment, the purification axis is not known, so one needs to train the NN for each of the three choices of P; if the learning task fails for all of them, it means the qubit is totally mixed. Otherwise, the learning task will succeed for one axis and fail for the other two (Note, for a fixed Clifford circuit, the purification axis does not depend on \({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}}\)), which means the reference qubit is purified.

Since locality plays an important role in purification dynamics, we employ a particular deep learning52,53,54 architecture called convolutional neural networks (CNN) that are efficient in detecting local features in image recognition applications55. In utilizing these networks the input data is treated as a snapshot as in Fig. 1b with each pixel treated as a feature of the NN and the label of each image is the measurement outcome of σP.

Learning complexity

For a fixed circuit \({{{{{{{\mathcal{C}}}}}}}}\), we start the training procedure by training the NN with a given number of labeled quantum trajectory measurements and then evaluate its performance in predicting the labels of new randomly generated trajectories produced by the same circuit \({{{{{{{\mathcal{C}}}}}}}}\). The learning accuracy 1−ϵl is the probability that the NN predicts the right label. The minimum number of training samples denoted by M(ϵl) to reach a specified learning error ϵl can provide an empirical measure of the learning complexity of the decoder function \({F}_{{{{{{{{\mathcal{C}}}}}}}}}\)56. In what follows, we fix the learning error of each circuit to be ϵl = 0.02.

In performing this analysis, different learning settings can be considered. Intuitively, for a fixed circuit, we expect the purification time of the reference qubit, tp, after which the reference qubit’s state does not alter any further, to play an important role in determining M. Therefore, in our first learning setup, we consider a conditional learning scheme where for a given measurement rate, we select quantum circuits based on their purification time tp, which allows us to study the effect of the system size on the learning complexity. Moreover, we discard measurement outcomes corresponding to measurements performed after tp. This is to say that for each tp, measurement outcomes outside a mask with width L and height tp will be masked. Here, we note that given Nc circuits with the same purification time, in addition to the learning efficiency of each circuit, we need to fix the learning inaccuracy averaged over Nc circuits, δl, which we fix to be δl = 20%. We remark that this number is larger than ϵl since some of the conditionally selected circuits have not been learned.

In the second setting, we remove the conditioning constraint and only consider the overall complexity of the learning task when we randomly generate circuits for a given p in a completely unconditional manner. The two schemes can be related using the probability distribution rp of the purification time as shown in Fig. 2a and explained more concretely in the methods section. We should emphasize that a conditional learning scheme is only a tool for studying the complexity of the learning problem for Clifford circuits. For probing the phases and phase transitions in both Clifford and Haar circuits, we use the unconditional learning scheme. Note that since the reference qubit is entangled locally at the beginning, there is always a finite probability that it will be purified in early times. In the mixed phase, the distribution has an exponentially small tail until exponentially long times (both in system size) whereas, in the pure phase, the ancilla purifies in a constant time independent of system size. Inspired by the approximate locality structure of hybrid circuits38, we also consider a light-cone learning scheme, where we train the NN using only the measurement outcomes inside a box centered in the middle (see below). In Fig. 2b, we compare the complexity of the conditional learning task in the pure and mixed phases both by using the light-cone box (main) and whole circuit (inset) measurement data. For each purification time and p, we consider Nc = 20 different circuits and we average over their minimum required training numbers to calculate \(\bar{M}({\epsilon }_{{\rm {l}}},{\delta }_{{\rm {l}}})\), and show the standard deviation as the error bar. Here, for all the curves, we observe an approximate exponential growth of \(\bar{M}({\epsilon }_{{\rm {l}}},{\delta }_{{\rm {l}}})\) as a function of the purification time tp. By comparing the mixed and pure phases, we notice that the conditional learning task is more complicated in the pure phase than the mixed phase, which is expected since, all else being equal, there are more measurements in the pure phase. Additionally, as shown in the inset, we find that learning with light-cone data is less complicated than using all the measurement outcomes. These behaviors can be understood by recognizing that to learn the decoder we need to explore the domain of the mapping in Eq. (1) whose size scales exponentially with 2pTL.

a Distribution of purified circuits as a function of the purification time for different measurement rates p = 0.05 (mixed phase), p = pc ≃ 0.16 (critical value), p = 0.5 (pure phase) with L = 16 qubits and Nc = 107 random circuits. b and c Averaged the number of quantum trajectories required for learning the reference qubit after conditioning on the purification time tp, for p = 0.1 (mixed phase) and p = 0.3 (pure phase). Averaging is performed over Nc = 20 circuits for each tp and error bars are set according to the standard deviation. In b we have circuits with L = 128 qubits. In the main plot measurement outcomes from inside the fixed light-cone box are used for training while for the inset we use the measurement outcomes from the whole circuit. In c we have circuits with L = 128 qubits (solid-line) and L = 64 qubits (dashed-line) with p = 0.1 in the main plot and p = 0.3 in the inset. d Ratio of learned circuits as a function of a number of quantum trajectories with L = 64 and for different p without conditioning on the purification time with Nc = 103 circuits for each p.

In Fig. 2c we compare the system size dependence of the complexity in the two phases with L = 64,128 where we train our networks with the light-cone data. We note that since the size of the light-cone box for a fixed tp is independent of the system size, we expect the asymptotic complexity to be independent of the system size. Our numerical observation is partially in agreement with this theoretical expectation. In the “Methods” section, this point has been studied further where we explicitly depict the system size dependence of the complexity for circuits with experimentally relevant system sizes L = {16, 32, 64, 128}. In the “Methods” section, we also obtain similar complexity results for circuits with initial states scrambled by a high-depth random Clifford circuit.

In the final step, we consider the unconditional learning task. Figure 2d shows the ratio of circuits that can be learned, denoted by Rl, as a function of Nt, with the circuit depth fixed at T = 10.

After an initial fast growth in Rl, the learning procedure slows down. This can be understood by noting that exponentially more samples are required to learn the decoder for circuits with longer purification time. Moreover, the saturation value for each p is bounded by the ratio of circuits that are purified by time T, which can be expressed as

where rp is the purification rate plotted in Fig. 2a.

Dynamics of coherent information

We can utilize the NN decoder to study the critical properties of the phase transition. For a fixed circuit configuration c with a given p, let ρc and \({s}_{c}(t)=-{{{{{{{\rm{tr}}}}}}}}({\rho }_{c}{\log }_{2}{\rho }_{c})\) denote its density matrix and von Neumann entropy of the reference qubit after time t, respectively. Based on this definition, we let \({S}_{{\rm {Q}}}(t)=-\mathop{\sum }\nolimits_{c=1}^{{N}_{c}}\frac{1}{{N}_{c}}{{{{{{{\rm{tr}}}}}}}}({\rho }_{c}{\log }_{2}{\rho }_{c})\) denote the average entropy of the reference qubit after time t, i.e., the coherent quantum information of the system with 1 encoded qubit. We may assume on general grounds that SQ(t) follows an early-time exponential decay e−λt with λ following the scaling form:

where z and ν are the dynamical and correlation length critical exponents respectively9. In stabilizer circuits, the density matrix of the reference qubit will be either purified completely with sc = 0, or will be in a totally mixed state with sc = 1. Since SQ(t) and the ratio of purified circuits Rp(t) are related by SQ = 1−Rp, we can estimate SQ(t) by the ratio of learnable circuits of depth t in the unconditional scheme described above. We denote the estimated value of SQ(t) from learning by \({\tilde{S}}_{{\rm {Q}}}\). More concretely: (1) For each given p and L we generate Nc = 103 random circuits and we evolve them for \(T \sim {{{{{{{\mathcal{O}}}}}}}}(10)\) time steps that do not scale with the system size and record the measurement outcomes \({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}},(2)\) At the end of this time evolution, we measure the spin of the reference qubits along the purification axis, m, (3) For each circuit we use the corresponding labeled data \(({{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}},m)\) and we train our neural network with this data to make future predictions. We note that since in this approach, there is no constraint in generating the circuits and their quantum trajectories, this procedure can be directly applied to experimental data without requiring any post-selection or conditioning procedure.

In Fig. 3a we compare the temporal behavior of the coherent information obtained from an ideal decoder and the NN decoder introduced here where for each p we consider Nc = 103 different circuit configurations. As demonstrated in Fig. 3a, in the mixed phase the learned entanglement entropy closely follows the simulated entanglement entropy, while in the pure phase, the two curves start to deviate from each other after a few time steps. This behavior is consistent with previous observations in Fig. 2 where we demonstrated that the learning task is easier in the mixed phase. Since at the critical point this phase transition can be described by a 1 + 1-D conformal field theory6,8, the dynamical critical exponent can be fixed in advance z = 1 and correspondingly we define the scaled time τ = t/L. Furthermore, since the argument of the scaling function f on the right-hand side of Eq. (3) becomes independent of L at pc, we expect to see a crossing in

when it is plotted for different system sizes. Here, τd = td/L is the differentiation time which should be sufficiently large. In Fig. 3b, we evaluate the decay rate obtained by learning, \({\tilde{\lambda }}_{{\tau }_{{\rm {d}}}}\), for three different system sizes, L = {32, 48, 64}, at τd = 1/16 using \({\tilde{S}}_{{\rm {Q}}}\). The corresponding times are td = {2, 3, 4} for which the deviation of the learned and simulated coherent information is negligible. Here, we notice an approximate crossing in the region 0.1 ≲ pc ≲ 0.15 signaling a phase transition in this region.

a Comparing the temporal behavior for a circuit with L = 32 qubits in the mixed (p = 0.1) and pure (p = 0.3) phases averaged over Nc = 103 circuit configurations for each p. The dashed and solid lines are achieved from learning quantum trajectories, and exact simulation of the circuits, respectively. Each point in these curves has a statistical error <2%. b Scaled temporal rate of the learned entanglement entropy, \(L{\tilde{\lambda }}_{{\tau }_{{\rm {d}}}}\), as a function of the measurement rate at a fixed scaled time τd = td/L = 1/16. Inset: Collapsing the curves for \({L}^{z}{\tilde{\lambda }}_{{\tau }_{{\rm {d}}}}\) as a function of \(\tilde{p}=(p-{p}_{{\rm {c}}}){L}^{z/\nu }\), using pc = 0.13, ν = 1.5, and z = 1. c Inverse fitting error as a function of ν and pc for z = 1. d Inverse fitting error as a function of ν and z for pc = 0.13. In c and d yellow areas show the best parameter estimates for the phase transition.

More systematically, we may find the best-estimated values of the critical data by collapsing the decay rate curves according to the scaling ansatz in Eq. (3). In particular after fixing z = 1, we can search simultaneously for pc and ν so that the fitting error of the regression curve would be minimized (see the “Methods” section). The inverse error has been plotted as a function of pc and ν in Fig. 3c where we observe that the lowest error corresponds to the region pc ≃ 0.13, ν ≃ 1.5. Similarly, we can examine our assumption about the conformal symmetry of the transition, by fixing pc = 0.13, and allowing ν and z to vary as in Fig. 3d. Here, we observe that the lowest error corresponds to the region around ν ≃ 1.5, z ≃ 1. Using the obtained estimates, namely, ν ≃ 1.5, z ≃ 1, and pc ≃ 0.13, in the inset of Fig. 3b we collapse the three curves of \({L}^{z}\tilde{\lambda }\) as a function of \(\tilde{p}=(p-{p}_{{\rm {c}}}){L}^{z/\nu }\). In the “Methods” section, we search simultaneously over all three parameters and find that the best estimates for the critical data are in the region pc = 0.14 ± 0.03, z = 0.9 ± 0.15, and ν = 1.5 ± 0.3. Once the error margins are considered, these results are consistent with the results obtained from the half-chain entanglement entropy, z = 1, pc ≃ 0.16, and ν ≃ 1.36,8. However, in order to differentiate this phase transition from the percolation phase transition57, more precise results for the critical exponents are required. Additionally, we verify our learning results by comparing them with the results obtained from exact simulations of SQ(t), where we demonstrate that by increasing L, td, and Nc, the phase transition parameters can be determined more accurately.

Scalability of learning

An important feature of a practical decoder is the possibility of training it on small circuits and then utilizing it for decoding larger circuits. Here, due to the approximate locality of the temporal evolution of the random hybrid circuits, one can examine the scalability of the decoders in a concrete manner. For a given circuit with L qubits, we generate smaller circuits with LB < L number of qubits which have identical gates as the original circuit in a rectangular narrow strip around the middle qubit which is entangled to the reference qubit. The geometry of the two sets of circuits is displayed in Fig. 4a where the depth of the two sets of circuits is chosen to be equal. Here, for each p we generate Nc large circuits with L = {32, 64} and T = 10-time steps. We also only consider those circuits that are learnable using measurement outcomes from the original circuit. Next, for each of these circuits, for LB = {4, 8, ⋯ , 20} we generate their corresponding smaller circuits and we run them to generate Nt = 5 × 103 quantum trajectories. In the training step, we use the quantum trajectories produced from the smaller circuits to train our neural networks. In the testing step, however, we use these neural networks to make predictions for the quantum trajectories obtained from the larger circuits. As we observe in Fig. 4b, by increasing LB the ratio of the circuits that can be learned by the smaller circuits’ NNs increases. Also, consistent with the effective light-cone picture, we see that for both system sizes, L = {32, 64}, the largest required LB to reach almost full efficiency, according to the light cone condition can be determined by LB ≳ 2T which in our case corresponds to LB = 20. This demonstration provides evidence that independent of the system size, the light-cone-trained NNs can be used for learning larger circuits.

a Predicting the decoder function of a circuit using the neural network trained by the measurement outcomes inside the small circuit in the orange box. b Fraction of experiments that can be learned using smaller circuits of width LB. When the ratio is 1, that means that there is no benefit in the training from increasing LB. Main: L = 64 and Nc = 100. Inset: L = 32 and Nc = 200.

Generalization to Haar random circuits

To benchmark the methods, we have focused on Clifford circuits, which have two important simplifications for our learning procedure. First, the purification axis is independent of the measurement outcomes and the learning only needs to be performed along one of the {X, Y, Z} axes in the Bloch sphere. In addition, the purification occurs at the specific layer of the circuit. Therefore, it is important to test our results in more generic Haar random circuits, where the purification axis can be along any radius in the Bloch sphere and purification dynamics occurs throughout the circuit evolution15. Here, we show how to adapt our method to Haar random circuits to see clear evidence of the two phases. We leave the study of critical properties of the entanglement phase transition with our method for future work.

To obtain the decoder function \({F}_{{{{{{{{\mathcal{C}}}}}}}}}\) for generic circuits, we need to create three independent sets of labeled data for measuring σi with i ∈ {X, Y, Z} obtained from quantum trajectories. Next, these three sets of labeled measurement data, represented by \(\{{{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}}^{i},{m}_{i}\}\), are used to train three independent neural networks to produce the probability distribution of reference qubit density matrix expectation values \({p}_{i}(m|{{{{{{{{\mathcal{M}}}}}}}}}_{{\rm {T}}})\). Consequently, given new quantum trajectories, the trained pi’s will be employed to estimate 〈σi〉. Finally, using standard density matrix tomography methods, such as the maximum likelihood estimation of the density matrix of a single qubit58, we can obtain the most likely physical density matrix associated with the predicted 〈σi〉’s. An illustrative example of the learning dynamics in the two phases for a small number of circuits is shown in Fig. 5 where we study SQ(t) and its learned value as a function of time for a circuit with L = 8 qubits in the two phases (pc ≈ 0.17 for this model15). We see from this example that our NN decoder straightforwardly generalizes to generic quantum circuits and using a larger circuit ensemble and quantum trajectories it should be possible to study the phase transition properties.

Discussion

We first note that since in our approach obtaining the critical exponents is obtained from the temporal behavior of the learning efficiency at long times, the most important obstacle in obtaining more accurate results for the critical exponents is the low efficiency of our learning algorithms for deep circuits in the area law phase. Hence, an intriguing possibility is to find state-of-art neural network architectures that are more efficient in learning deep circuits with local data37. Similarly, implementing neural network decoders for other MIPTs such as systems with long-range interactions30, and symmetric MIPT59, is an immediate extension of this work. As an alternative main future direction to explore, we note that from an experimental perspective, it is possible to incorporate different errors, which are common in the realization of the two-qubit gates and/or measurement processes, in our machine learning framework. Another intriguing question is to investigate whether it is possible to use our decoder approach for MIPTs where it is not equivalent to purification transitions. In the context of quantum error correction and fault tolerance, the purification dynamics in measurement-induced phase transitions lead to a rich set of examples of dynamically generated quantum error-correcting codes11,34,60,61. Designing similar decoders as considered here for other types of dynamically generated logical qubits is a rich avenue of investigation. We also highlight that our empirical complexity results raise interesting questions about the complexity of learning an effective Hamiltonian description32,62,63 of the measurement outcome distributions for monitored quantum systems. Finally, we note that improving our neural network algorithms to find the optimal decoder and investigating the applicability of unsupervised machine learning techniques for this problem is left for future studies64,65.

Methods

Quantum dynamics

The dynamics of hybrid circuits considered in this work in general can be described using the quantum channel formalism. The wave function of the circuit, denoted by \(\left|{\psi }_{{\rm {S}}}\right\rangle\) at the beginning of time evolution is entangled to a reference qubit. Formally, the time evolution of the system under this setting can be modeled using Kraus operators66,

where mt, Ut and \({P}_{t}^{{m}_{t}}\), denote the measurement outcomes, unitary gates, and projective measurements at the tth layer of the circuit, respectively. We also denote the set of all measurement outcomes in different layers via \(\overrightarrow{m}\). The corresponding evolution of the density matrix, ρ, can be described via the following quantum channel:

For our purpose, to generate the quantum trajectories we need to consider the time evolution of the system at the level of the wave functions. Under an arbitrary unitary operator U, the wave function evolves as

For projective measurements, we consider a complete set of orthogonal projectors with eigenvalues labeled by m satisfying \({\sum }_{m}{P}_{t}^{m}=1\) and \({P}_{t}^{m}{P}_{t}^{{m}^{{\prime} }}={\delta }_{m{m}^{{\prime} }}{P}_{t}^{m}\) under which the wave function evolves as,

In simulating the time evolution of the wave functions, we use random unitaries sampled from the Clifford group where, under any conjugation operation, the Pauli group is mapped to itself67. Such circuits, according to the Gottesman–Knill theorem, can be classically simulated in polynomial times in the system size68,69.

Implementation of deep learning algorithms

In this work, we mainly used convolutional neural networks for learning the decoder function. These networks are composed of several interconnected convolutional and pooling layers. The convolutional layer uses the locality of the input data to create new features from a linear combination of adjacent features through a convolution process. These layers are followed by pooling layers which reduce the number of features. Finally, a fully connected layer is used to associate a label to the newly generated features, thus classifying the data. These layers can be repeated a number of times for more complicated input data. Our neural network architecture symbolically displayed in Fig. 1b consists of eight layers whose hyperparameters are chosen by an empirical parametric search to optimize the learning accuracy when the number of samples are smaller than 5 × 104. From left to right these layers include: (1) a convolutional layer with a Lq/2 filters where Lq is the number of qubits with a kernel size of 4 × 4, and a stride size of 1 × 1 with a rectified linear unit (ReLu) activation function, (2) a convolutional layer with a Lq/2 filters where Lq is the number of qubits with a kernel size of 3 × 3, and a stride size of 1 × 1 with a Relu activation function, (3) a maximum pooling layer with a window size of 2 × 2 to decrease the dimension of the input data, (4) a dropout layer with a dropping rate of rd = 0.2 to prevent overfitting, (5) a flattening layer to convert the data into a one-dimensional vector, (6) a dense fully connected layer with a Relu activation function whose number of output neurons is variable and is determined according to the number of training samples, Nn = 512*(1 + 2⌊Nt/2000⌋) where ⌊x⌋ denotes the floor function of x, (7) a dropout layer with a dropping rate of rd = 0.2, (8) a dense fully connected layer with a sigmoid activation function which generates the prediction for the spin of the reference qubit. Finally, since we have a classification problem, the loss function for comparing the predicted labels and the actual labels is a binary cross-entropy function. Using this loss function, for training our neural network model, we use the Adam optimization algorithm with a learning rate l = 0.001. The implementation of our neural network layers and their optimization was done by the Python deep-learning packages TensorFlow and Keras.

Scaling analysis and estimation of critical exponents

The critical exponents of this measurement-induced phase transition can be investigated from the decay rate of the reference qubit’s entanglement entropy denoted by λ, which has the scaling (see Eq. (3))

While in the main text, we fixed z = 1 based on the assumption of conformal invariance, here, we perform the analysis with z allowed to vary. To find the best combination of the critical data that collapses our data according to this ansatz, we compare the normalized mean squared errors (NMSE), εNMSE such that the best fit is obtained when \({\varepsilon }_{{{{{{{{\rm{NMSE}}}}}}}}}^{-1}\) is maximized70. In particular, for a given pc, ν, and z, using cubic polynomials we first find the regression curve of y ≡ Lzλ as a function of (p−pc)Lz/ν, and then we evaluate the corresponding value of the mean squared error between y and the best-fitted value of it \(\hat{y}\). We point out that in order to compare mean squared errors for different combinations of (pc, ν, z), we have to normalize the data by defining dimensionless deviations and then evaluate the NMSE for different combinations of critical data according to \({\varepsilon }_{{{{{{{{\rm{NMSE}}}}}}}}}={\sum }_{i}{({\hat{y}}_{i}-{y}_{i})}^{2}/{y}_{i}^{2}\) where the summation is performed over data for all the measurement rates and system sizes.

The results of this analysis are displayed in Fig. 6, where we have plotted \({\varepsilon }_{{{{{{{{\rm{NMSE}}}}}}}}}^{-1}\) as a function of ν and pc for six different values of z ranging from 0.75 to 1.25. Based on the subplots in this figure, we observe that the highest values for \({\varepsilon }_{{{{{{{{\rm{NMSE}}}}}}}}}^{-1}\) are obtained for z ≃ 0.85 − 0.95 which is quite close to the value expected from theoretical results based on conformal symmetry z = 1. Allowing \({\varepsilon }_{{{{{{{{\rm{NMSE}}}}}}}}}^{-1}\) to vary within almost 10% of its maximum value, we obtain following range for the best fits of the critical data, pc = 0.14 ± 0.03, ν = 1.5 ± 0.3, and z = 0.9 ± 0.15.

Maximum inverse normalized mean squared error \({\varepsilon }_{{{{{{{{\rm{NMSE}}}}}}}}}^{-1}\) of the learned decay rate \(\tilde{\lambda }\) when evaluated for different combinations of z, pc and ν can be used for finding the best collapse of different \(\tilde{\lambda }\) curves. Colormap of the intensity \({\varepsilon }_{{{{{{{{\rm{NMSE}}}}}}}}}^{-1}\) plotted as a function of fitted pc and ν for different dynamical scaling exponent z displayed on the top of each subplot. The best fits (highest inverse error) are obtained for z = 0.85, and z = 0.95.

Finally, we compare our results with the results obtained directly from exact numerical simulations of Clifford circuits without employing our learning scheme. The results of such simulations for the decay rates for different system sizes have been displayed in Fig. 7. In the left subplot we have shown the results for the same system sizes as used for our learning simulations where we observe a crossing of the curves at pc ≃ 0.13 which supports our results obtained from the learning scheme. Furthermore, in the right subplot we observe that for larger system sizes, the obtained crossing of the curves is around pc ≃ 0.16 which is very close to the results obtained from half-chain entanglement entropy6,8. Accordingly, we expect that by increasing L and Nc, the estimates obtained from our learning scheme should improve.

Entanglement entropy rate calculated by exact stabilizer simulation of Clifford circuits is obtained for different system sizes as a function of the measurement rate. The crossing point of the scaled decay rates represents the critical measurement rate. (Left) The dimensionless time is τd = td/L = 1/16 and the system sizes are the same as those used for the learning protocol simulations L = 32, 48, 64. (Right) Scaled decay rates of the entanglement entropy of the reference qubit were obtained for larger system sizes L = 64, 128, 256.

Key measurements in Clifford circuits

Consider a hybrid Clifford circuit \({{{{{{{\mathcal{C}}}}}}}}\) which has M Pauli measurements. Imagine applying this circuit on an initial stabilizer state which is entangled to a reference qubit. Assume that as a result of this, the reference qubit disentangles and purifies into the \(\left|P;{p}_{{\rm {R}}}\right\rangle\) state, where P is one of the Paulis and pR = ± 1 determines which eigenvector of P the reference qubit has been purified into. Let s1, ⋯ , sM = ± 1 denote the measurement outcomes for a single run of the circuit. If we run the same circuit again, the ancilla will purify in the same basis P, but we may get different pR as well as different si. The goal is to understand the relation between the value of pR and the measurement outcomes \({\{{s}_{i}\}}_{i=1}^{M}\).

When a Pauli string is measured on a stabilizer state, the result is either predetermined (in case the Pauli string is already a member of the stabilizer group up to a phase) or it is ±1 with equal probability. We call the former determined measurements and the latter undetermined measurements. Note that in a stabilizer circuit, whether a measurement is determined or undetermined is independent of previous measurement outcomes. Therefore, for a given circuit \({{{{{{{\mathcal{C}}}}}}}}\) and a fixed ordering of performing measurements, it is well-defined to label measurements as either determined or undetermined without referring to a specific circuit run.

The following is a straightforward result of the Gottesmann–Knill theorem:

Corollary

There exists a unique subset of undetermined measurement results \(\{{s}_{{j}_{1}},\cdots \,,{s}_{{j}_{m}}\}\) (which we call key measurements) such that,

where c = ± 1 is the same for all circuit runs. We call this set the key measurements set.

Note that since key measurements are undetermined measurements, their value are independent of each other. Hence, to predict pR from undetermined measurement outcomes with any accuracy better than 1/2, one needs to have access to all key measurement results.

Each determined measurement can be seen as a constraint between previous undetermined measurement outcomes. Specifically, if si is a determined measurement result for some i it means that there is some fixed \({c}^{{\prime} }=\pm 1\) (independent of circuit run) and a subset of undetermined measurements \(\{{s}_{{j}_{1}^{{\prime} }},\cdots \,,{s}_{{j}_{m}^{{\prime} }}\}\) such that

The similarity to the Corollary is not accidental: if the reference qubit is purified in the P Pauli basis, it means that measuring it in the P basis would be a determined measurement.

The existence of these constraints then means that if we relax the condition of the measurements being undetermined in Corollary, then the set of key measurements is no longer unique; we may be able to replace some measurement outcomes in Eq. (10) with a product of others using the constraints between measurement outcomes.

Relation between conditional and unconditional learning schemes

Here, under certain conditions, we argue that the results of the two learning schemes as displayed in Fig. 2 are related to each other. In particular, using the purification-time distribution of the circuits in Fig. 2a, learnability Rl(Nt), is related to the purification ratio rp(tp). In what follows to make our analysis more intelligible, we assume that the learning error is nearly vanishing, ϵl ≃ 0. Next, we need to study the averaged learning efficiency of our decoder which for a given tp and Nt we denote by ηl(tp, Nt). For a given tp and Nt, this quantity is related to the averaged inaccuracy introduced in the text by ηl = 1−δl. To proceed, we employ a simplifying assumption that is approximately consistent with our numerical results. More concretely, we imagine a decoder with a sharp step-like behavior for ηl(tp, Nt) as a function of Nt. Using the Heaviside theta function θH(x), we suppose ηl(tp, Nt) = θH(Nt−M(tp)) where M(tp) is the minimum number of training samples to reach full efficiency for t ≤ tp. From the definitions, it follows straightforwardly that

where \({t}_{{\rm {p}}}^{{{{{{{{\rm{Max}}}}}}}}}({N}_{t})\) is the maximum purification time that can be learned for a given Nt. However, this quantity can be evaluated by inverting the function M(t) according to \({t}_{{\rm {p}}}^{{{{{{{{\rm{Max}}}}}}}}}({N}_{t})={M}^{-1}({N}_{t})\) where M−1(Nt) is the inverse function of M(tp). Now, we notice that M(tp) after averaging over different circuits, can be read from the averaged minimum number of training samples in Fig. 2b. Therefore, by integrating the information in Fig. 2a and b plus ηl(tp, Nt), one can explain the behavior of Rl(Nt) in Fig. 2d. Here, although we do not have the explicit form of ηl(tp, Nt), we use the step-like behavior as an approximation which is justifiable due to the exponential behaviors of the complexity as a function of the purification time. Thus, using Eq. (12) as a plausible approximation for the learnability of our decoder, we expect that during the initial fast growth of the curves in Fig. 2a, learned circuits mostly belong to the circuits with short purification times. However, since for longer purification times, an exponentially large number of training samples is required, the initial exponential growth is followed by a slow learning curve. Therefore, in Fig. 2d, we observe that deep in the pure phase where the majority of circuits have a short purification time, Rl asymptotically approaches one.

Complexity results for scrambled initial states

Here, we present our results for the circuits scrambled by a high-depth random Clifford circuit. Concretely, to obtain such states, we first run our circuits with the initial product states only with two-qubit random Clifford gates in the absence of any measurements. This unitary time evolution creates a highly entangled state after T ~ L time steps with an entanglement entropy proportional to the system size. Next, we entangle the reference qubit to one of the circuit’s qubits and run the same circuit in the presence of two-qubit gates and random measurements. As shown in ref. 11, there is a purification phase transition such that for p < pc the subsystem entanglement entropy of the circuit after T ~ L still has a volume-law behavior while for p > pc, its entanglement entropy is negligible. Using such initially mixed states, the complexity results are displayed in Fig. 8. Here, as in Fig. 4, we observe a nearly exponential behavior with the purification time. Furthermore, we notice that the conditional learning scheme is more difficult in the pure phase compared to the mixed phase. By comparing the inset and main plots, we also observe that learning with the light-cone data requires fewer training samples. Finally, by comparing Figs. 2b and 8 we observe that learning the circuits with scrambled initial conditions requires more training samples than the circuits with product state initial conditions.

Average of the minimum number of training samples required for learning the reference qubit’s state when using circuits with scrambled initial states is plotted after conditioning on the purification time tp, for p = 0.1 (mixed phase) and p = 0.3 (pure phase). Averaging is performed over Nc = 20 circuits for each tp and error bars are set according to the standard deviation. We have circuits with L = 128 qubits. In the main plot measurement outcomes from inside the fixed light-cone box are used for training while for the inset we use the measurement outcomes from the whole circuit.

Finally, we present further results for the system-size dependence of the sampling complexity of our approach in Fig. 9 where we only use the light-cone measurement outcomes. The x-axis represents the system size which includes L = {16, 32, 64, 128}. Different curves represent different purification times spanning tp = {1, ⋯ , 6}. In the left panel of this figure, we have displayed our results for p = 0.3 corresponding to the area-law phase and in the right we have displayed our results for the volume-law phase with p = 0.1. Our results after taking the error bars into account can be indicative of a nearly system-size independent behavior. However, we should note that since the NN decoder that we have employed for these simulations is not necessarily the optimum decoder, we expect some deviation from an exact system-size independent behavior. Changing the system size by a factor of 8, the sample complexity increases by a factor of 2 on average and a factor of 4 on the tails. For more definitive results, we need to consider larger ensembles of circuits with larger Nc and also increase the system size, which would be beyond the scope of this work.

Average of the minimum number of training samples required for learning the reference qubit using light-cone data as a function of the system sizes and different purification times. Averaging is performed over Nc = 20 circuits for each tp and error bars are set according to the standard deviation. (Left) Results for the area-law phase with p = 0.3. (Right) Results for the volume-law phase with p = 0.1.

Data availability

Source data for figures in the main text are provided with this paper. Data that support the plots within this paper and other findings of this study are generated and protected by the Extreme Science and Engineering Discovery Environment (XSEDE), at the Pittsburgh Supercomputing Center and are available from the corresponding author upon request. Source data are provided with this paper.

Code availability

The code used for this study is available on https://github.com/Hossein-D/BrickwallCliffordCircuit.

References

Kim, H. & Huse, D. A. Ballistic spreading of entanglement in a diffusive nonintegrable system. Phys. Rev. Lett. 111, 127205 (2013).

Nandkishore, R. & Huse, D. A. Many-body localization and thermalization in quantum statistical mechanics. Annu. Rev. Condens. Matter Phys. 6, 15 (2015).

Breuer, H.-P. et al. The Theory of Open Quantum Systems (Oxford University Press on Demand, 2002).

Bauer, B. & Nayak, C. Area laws in a many-body localized state and its implications for topological order. J. Stat. Mech. Theory Exp. 2013, P09005 (2013).

Serbyn, M., Papić, Z. & Abanin, D. A. Local conservation laws and the structure of the many-body localized states. Phys. Rev. Lett. 111, 127201 (2013).

Skinner, B., Ruhman, J. & Nahum, A. Measurement-induced phase transitions in the dynamics of entanglement. Phys. Rev. X 9, 031009 (2019).

Li, Y., Chen, X. & Fisher, M. P. A. Quantum Zeno effect and the many-body entanglement transition. Phys. Rev. B 98, 205136 (2018).

Li, Y., Chen, X. & Fisher, M. P. A. Measurement-driven entanglement transition in hybrid quantum circuits. Phys. Rev. B 100, 134306 (2019).

Noel, C. et al. Measurement-induced quantum phases realized in a trapped-ion quantum computer. Nat. Phys. 18, 760 (2021).

Koh, J. M., Sun, S.-N., Motta, M. & Minnich, A. J. Experimental realization of a measurement-induced entanglement phase transition on a superconducting quantum processor. Preprint at arXiv:2203.04338 (2022).

Gullans, M. J. & Huse, D. A. Dynamical purification phase transition induced by quantum measurements. Phys. Rev. X 10, 041020 (2020).

Choi, S., Bao, Y., Qi, X.-L. & Altman, E. Quantum error correction in scrambling dynamics and measurement-induced phase transition. Phys. Rev. Lett. 125, 030505 (2020).

Jian, C.-M., You, Y.-Z., Vasseur, R. & Ludwig, A. W. Measurement-induced criticality in random quantum circuits. Phys. Rev. B 101, 104302 (2020).

Bao, Y., Choi, S. & Altman, E. Theory of the phase transition in random unitary circuits with measurements. Phys. Rev. B 101, 104301 (2020).

Zabalo, A. et al. Critical properties of the measurement-induced transition in random quantum circuits. Phys. Rev. B 101, 060301 (2020).

Tang, Q. & Zhu, W. Measurement-induced phase transition: a case study in the nonintegrable model by density-matrix renormalization group calculations. Phys. Rev. Res. 2, 013022 (2020).

Fuji, Y. & Ashida, Y. Measurement-induced quantum criticality under continuous monitoring. Phys. Rev. B 102, 054302 (2020).

Turkeshi, X., Fazio, R. & Dalmonte, M. Measurement-induced criticality in (2 + 1)-dimensional hybrid quantum circuits. Phys. Rev. B 102, 014315 (2020).

Ippoliti, M., Gullans, M. J., Gopalakrishnan, S., Huse, D. A. & Khemani, V. Entanglement phase transitions in measurement-only dynamics. Phys. Rev. X 11, 011030 (2021).

Lavasani, A., Alavirad, Y. & Barkeshli, M. Measurement-induced topological entanglement transitions in symmetric random quantum circuits. Nat. Phys. 17, 342 (2021).

Sang, S. & Hsieh, T. H. Measurement-protected quantum phases. Phys. Rev. Res. 3, 023200 (2021).

Van Regemortel, M., Cian, Z.-P., Seif, A., Dehghani, H. & Hafezi, M. Entanglement entropy scaling transition under competing monitoring protocols. Phys. Rev. Lett. 126, 123604 (2021).

Buchhold, M., Minoguchi, Y., Altland, A. & Diehl, S. Effective theory for the measurement-induced phase transition of Dirac fermions. Phys. Rev. X 11, 041004 (2021).

Bao, Y., Choi, S. & Altman, E. Symmetry enriched phases of quantum circuits. Ann. Phys. 435, 168618 (2021). special issue on Philip W. Anderson.

Jian, S.-K., Liu, C., Chen, X., Swingle, B. & Zhang, P. Measurement-induced phase transition in the monitored Sachdev-ye-Kitaev model. Phys. Rev. Lett. 127, 140601 (2021).

Czischek, S., Torlai, G., Ray, S., Islam, R. & Melko, R. G. Simulating a measurement-induced phase transition for trapped-ion circuits. Phys. Rev. A 104, 062405 (2021).

Potter, A. C. & Vasseur, R. Entanglement dynamics in hybrid quantum circuits. In Bayat, A., Bose, S. & Johannesson, H. (eds) Entanglement in Spin Chains. Quantum Science and Technology. https://doi.org/10.1007/978-3-031-03998-0_9 (Springer, Cham., 2022).

Turkeshi, X. Measurement-induced criticality as a data-structure transition. Phys. Rev. B 106, 144313 (2021).

Block, M., Bao, Y., Choi, S., Altman, E. & Yao, N. Y. Measurement-induced transition in long-range interacting quantum circuits. Phys. Rev. Lett. 128, 010604 (2022).

Minato, T., Sugimoto, K., Kuwahara, T. & Saito, K. Fate of measurement-induced phase transition in long-range interactions. Phys. Rev. Lett. 128, 010603 (2022).

Müller, T., Diehl, S. & Buchhold, M. Measurement-induced dark state phase transitions in long-ranged fermion systems. Phys. Rev. Lett. 128, 010605 (2022).

Van Regemortel, M. et al. Monitoring-induced entanglement entropy and sampling complexity. Phys. Rev. Res. 4, L032021 (2022).

Koh, J. M., Sun, S.-N., Motta, M. & Minnich, A. J. Experimental realization of a measurement-induced entanglement phase transition on a superconducting quantum processor. arXiv preprint arXiv:2203.04338 (2022).

Gullans, M. J., Krastanov, S., Huse, D. A., Jiang, L. & Flammia, S. T. Quantum coding with low-depth random circuits. Phys. Rev. X 11, 031066 (2021).

Fan, R., Vijay, S., Vishwanath, A. & You, Y.-Z. Self-organized error correction in random unitary circuits with measurement. Phys. Rev. B 103, 174309 (2021).

Li, Y. & Fisher, M. P. A. Statistical mechanics of quantum error correcting codes. Phys. Rev. B 103, 104306 (2021).

Yoshida, B. Decoding the entanglement structure of monitored quantum circuits. arXiv preprint arXiv:2109.08691 (2021).

Gullans, M. J. & Huse, D. A. Scalable probes of measurement-induced criticality. Phys. Rev. Lett. 125, 070606 (2020).

Carrasquilla, J. Machine learning for quantum matter. Adv. Phys.: X 5, 1797528 (2020).

Torlai, G. & Melko, R. G. Neural decoder for topological codes. Phys. Rev. Lett. 119, 030501 (2017).

Krastanov, S. & Jiang, L. Deep neural network probabilistic decoder for stabilizer codes. Sci. Rep. 7, 1 (2017).

Baireuther, P., O’Brien, T. E., Tarasinski, B. & Beenakker, C. W. Machine-learning-assisted correction of correlated qubit errors in a topological code. Quantum 2, 48 (2018).

Andreasson, P., Johansson, J., Liljestrand, S. & Granath, M. Quantum error correction for the toric code using deep reinforcement learning. Quantum 3, 183 (2019).

Nautrup, H. P., Delfosse, N., Dunjko, V., Briegel, H. J. & Friis, N. Optimizing quantum error correction codes with reinforcement learning. Quantum 3, 215 (2019).

Liu, Y.-H. & Poulin, D. Neural belief-propagation decoders for quantum error-correcting codes. Phys. Rev. Lett. 122, 200501 (2019).

Flurin, E., Martin, L. S., Hacohen-Gourgy, S. & Siddiqi, I. Using a recurrent neural network to reconstruct quantum dynamics of a superconducting qubit from physical observations. Phys. Rev. X 10, 011006 (2020).

Sweke, R., Kesselring, M. S., van Nieuwenburg, E. P. & Eisert, J. Reinforcement learning decoders for fault-tolerant quantum computation. Mach. Learn.: Sci. Technol. 2, 025005 (2020).

Wang, Z., Rajabzadeh, T., Lee, N. & Safavi-Naeini, A. H. Automated discovery of autonomous quantum error correction schemes. PRX Quantum 3, 020302 (2022).

Schumacher, B. & Nielsen, M. A. Quantum data processing and error correction. Phys. Rev. A 54, 2629 (1996).

Niculescu-Mizil, A. & Caruana, R. Predicting good probabilities with supervised learning. In Proc. 22nd International Conference on Machine Learning, ICML ’05 625–632 (Association for Computing Machinery, New York, NY, USA, 2005).

Guo, C., Pleiss, G., Sun, Y. & Weinberger, K. Q. On calibration of modern neural networks. In Proc. 34th International Conference on Machine Learning Research Vol. 70 (eds Precup, D. & Teh, Y. W.) 1321–1330 (PMLR, 2017).

Hinton, G. E. & Salakhutdinov, R. R. Reducing the dimensionality of data with neural networks. Science 313, 504 (2006).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436 (2015).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

Lawrence, S., Giles, C. L., Tsoi, A. C. & Back, A. D. Face recognition: a convolutional neural-network approach. IEEE Trans. Neural Netw. 8, 98 (1997).

Bairey, E., Guo, C., Poletti, D., Lindner, N. H. & Arad, I. Learning the dynamics of open quantum systems from their steady states. N. J. Phys. 22, 032001 (2020).

Stauffer, D. & Aharony, A. Introduction to Percolation Theory (Taylor & Francis, 2018).

James, D. F. V., Kwiat, P. G., Munro, W. J. & White, A. G. Measurement of qubits. Phys. Rev. A 64, 052312 (2001).

Barratt, F., Agarwal, U., Potter, A. C., Gopalakrishnan, S. & Vasseur, R. Transitions in the learnability of global charges from local measurements. arXiv preprint arXiv:2206.12429 (2022).

Brown, W. & Fawzi, O. Short random circuits define good quantum error correcting codes. In 2013 IEEE International Symposium on Information Theory (ISIT), 346 (2013).

Hastings, M. B. & Haah, J. Dynamically generated logical qubits. Quantum 5, 564 (2021).

Anshu, A., Arunachalam, S., Kuwahara, T. & Soleimanifar, M. Sample-efficient learning of interacting quantum systems. Nat. Phys. 17, 931 (2021).

Haah, J., Kothari, R. & Tang, E. Optimal learning of quantum Hamiltonians from high-temperature Gibbs states. 2022 IEEE 63rd Annual Symposium on Foundations of Computer Science (FOCS). pp. 135–146, https://doi.org/10.1109/FOCS54457.2022.00020 (Denver, CO, USA, 2022).

Huang, H.-Y., Kueng, R. & Preskill, J. Predicting many properties of a quantum system from very few measurements. Nat. Phys. 16, 1050 (2020).

Kuo, E.-J. & Dehghani, H. Unsupervised learning of interacting topological and symmetry-breaking phase transitions. Phys. Rev. B 105, 235136 (2022).

Nielsen, M. A. & Chuang, I. Quantum Computation and Quantum Information (Cambridge University Press, 2002).

Gottesman, D. Theory of fault-tolerant quantum computation. Phys. Rev. A 57, 127 (1998).

Gottesman, D. The Heisenberg representation of quantum computers. arXiv preprint quant-ph/9807006 (1998).

Aaronson, S. & Gottesman, D. Improved simulation of stabilizer circuits. Phys. Rev. A 70, 052328 (2004).

Cichosz, P. Data Mining Algorithms: Explained Using R. (John Wiley & Sons, 2014).

Towns, J. et al. Xsede: accelerating scientific discovery. Comput. Sci. Eng. 16, 62 (2014).

Acknowledgements

We acknowledge stimulating discussions with Alireza Seif, David Huse, Pradeep Niroula, Crystal Noel, Grace Sommers, and Christopher White. We acknowledge support from the National Science Foundation QLCI grant OMA-2120757. This work is supported by a collaboration between the US DOE, and other agencies. This material is based upon work supported by the U.S. Department of Energy, Office of Science, National Quantum Information Science Research Centers, Quantum Systems Accelerator, the National Science Foundation (JQI-PFC-UMD and QLCI grant OMA2120757). H.D. and M.H. acknowledge support from ARO W911NF2010232, AFOSR FA9550-19-1-0399, and Simons and Minta Martin foundations. This work used the Extreme Science and Engineering Discovery Environment (XSEDE), supported by the grant number PHY210049, at the Pittsburgh Supercomputing Center (PSC)71. M.H. thanks ETH Zurich for their hospitality during the conclusion of this work.

Author information

Authors and Affiliations

Contributions

All authors contributed to this work extensively and to the writing of the manuscript. The computational analysis and simulations have been conducted by the corresponding author.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Evert van Nieuwenburg and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Source data

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dehghani, H., Lavasani, A., Hafezi, M. et al. Neural-network decoders for measurement induced phase transitions. Nat Commun 14, 2918 (2023). https://doi.org/10.1038/s41467-023-37902-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-023-37902-1

This article is cited by

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.