Abstract

Causality detection likely misidentifies indirect causations as direct ones, due to the effect of causation transitivity. Although several methods in traditional frameworks have been proposed to avoid such misinterpretations, there is still a lack of feasible methods for distinguishing direct causations from indirect ones in the challenging situation where the variables of the underlying dynamical system are non-separable and weakly or moderately interacting. Here, we solve this problem by developing a data-based, model-independent method of partial cross mapping based on an articulated integration of three tools from nonlinear dynamics and statistics: phase-space reconstruction, mutual cross mapping, and partial correlation. We demonstrate our method by using data from different representative models and real-world systems. As direct causations are key to the fundamental underpinnings of a variety of complex dynamics, we anticipate our method to be indispensable in unlocking and deciphering the inner mechanisms of real systems in diverse disciplines from data.

Introduction

Causal interactions are fundamental underpinnings in natural and engineering systems, as well as in social, economic, and political systems. In such systems, details are typically unknown and only time series are available. Correctly identifying causal relations among the dynamical variables generating the time series provides a window through which the inner dynamics of the target system may be probed. A number of methods have been developed for this purpose, such as those based on the celebrated Granger causality1,2,3,4,5, the entropy6,7,8,9,10,11, the dynamical Bayesian inference12,13,14,15, and the mutual cross mapping (MCM)16,17,18,19,20,21, with applications to real-world systems5,7,22,23,24,25,26,27,28,29,30,31. If the system contains two independent variables only, the causal relation between them is straightforwardly direct. However, for a complex system with a large number of interacting nodes connected with each other in a networked fashion, two kinds of causation can arise: direct and indirect. In particular, if there is a direct link between two nodes, the detected causal relation between them can contain a direct component and an indirect one through other nodes in the network as a result of the generic phenomenon of causation transitivity (see Fig. 1). Even for two nodes that are not directly connected, a causal relation may be detected, but it must be indirect. Eliminating indirect causal influences so as to ascertain direct causal links is of paramount importance, as the latter constitute the basis for modeling, predicting, and controlling the system. Previous studies made significant advances in detecting direct causal links to reconstruct the underlying true causal network based on the concept of partial transfer entropy or its linear Gaussian version, the conditional Granger causality, which resulted in many successful data-mining applications in related fields32,33,34,35,36,37,38. 
Combining these methods with graphical models, recent studies further provided a visible and comprehensive description of causal relations among the variables of interest36,38,39. However, mathematically, none of these methods is directly applicable in situations where the relevant dynamical variables are non-separable, so that the information from any variable cannot be separated easily in a prediction framework (see “Methods” for the rigorous concept of non-separability). In real-world nonlinear systems, non-separability is ubiquitous among system variables17. To our knowledge, the problem of ascertaining direct causation by removing indirect causal influences for general complex dynamical systems has not been fully studied and remains outstanding.

Fig. 1. (a) There is a directional interaction between variables X and Y, but Z is an independent variable. (b) The variables X, Y, and Z constitute a one-directional causal chain with an indirect causal link from X to Y. (c) The variables constitute a causal loop, where every two neighboring variables have, in two opposite directions, a direct and an indirect causal link, respectively. (d) For a network with many interacting variables, more indirect causal links would be falsely identified as direct causal links.

In this paper, we develop a data-based, model-free method of partial cross mapping (PCM) to eliminate indirect causal influences in situations where non-separability is allowed to be present. The central idea is to integrate three basic data analysis methods from nonlinear dynamics and statistics: classic phase-space reconstruction, MCM, and partial correlation, to detect direct causal links for complex and nonlinear networked systems. The method is validated using various benchmark systems. Its applications to real-world systems lead to new insights into their dynamical underpinnings. The method provides a solution to the long-standing, crucial problem with existing causality detection methods: misidentifying indirect causal influences as direct ones. Because of its unprecedented ability to eliminate indirect causation, this method can be a powerful tool to understand and model complex dynamical systems.

Results

Direct and indirect causal links

To illustrate the difference between direct and indirect causal links, we first consider a toy system of three variables with different interaction structures. If only two variables interact in one direction and the third one is isolated (Fig. 1a), then the previous methods can be effective for identifying the direct causal link16,17,18,19,20,21. However, when the three variables constitute a unidirectional causal chain (Fig. 1b), applying any of the previous methods to the time series from a pair of variables would detect a false direct link between the two non-neighboring variables X and Y in Fig. 1b (see “Methods” for a false link induced by transitivity). When the three variables constitute a causal loop (Fig. 1c), every two neighboring variables may have an indirect causal link in addition to the direct one in the opposite direction. In this case, previous methods would falsely identify any actual indirect link as a direct one. In addition to the above three representative interaction structures for the three variables, all the other possible modes are introduced and investigated systematically in Supplementary Note 1. Moreover, with more observable variables, the likelihood that indirect causal links are incorrectly regarded as direct ones increases substantially (Fig. 1d).

Partial cross mapping

To overcome this problem, we propose the PCM method. The key idea is to examine the consensus between one time series and its cross map prediction from the other with conditioning on the part that is transferred from the third variable. For the convenience of describing our method clearly, we consider the simple case of three variables (X, Y, and Z) causally interacting with each other in a unidirectional chain (Fig. 2a). Let \(X={\left\{{x}_{t}\right\}}_{t = 1}^{L}\), \(Y={\left\{{y}_{t}\right\}}_{t = 1}^{L}\), and \(Z={\left\{{z}_{t}\right\}}_{t = 1}^{L}\) be the corresponding time series of length L. Using Takens–Mañé’s delay-coordinate embedding40,41, we obtain three shadow manifolds: \({M}_{X}={\{{{\bf{x}}}_{t}\}}_{t = r}^{L}\), \({M}_{Y}={\{{{\bf{y}}}_{t}\}}_{t = r}^{L}\), and \({M}_{Z}={\{{{\bf{z}}}_{t}\}}_{t = r}^{L}\) with the vectors

\[{{\bf{x}}}_{t}={({x}_{t},{x}_{t-{\tau }_{x}},\ldots ,{x}_{t-({E}_{x}-1){\tau }_{x}})}^{\top },\quad {{\bf{y}}}_{t}={({y}_{t},{y}_{t-{\tau }_{y}},\ldots ,{y}_{t-({E}_{y}-1){\tau }_{y}})}^{\top },\quad {{\bf{z}}}_{t}={({z}_{t},{z}_{t-{\tau }_{z}},\ldots ,{z}_{t-({E}_{z}-1){\tau }_{z}})}^{\top },\]

where Ex, Ey, and Ez are the respective embedding dimensions, τx, τy, and τz are the time lags, and \(r={\max }_{\xi = x,y,z}\{1+({E}_{\xi }-1){\tau }_{\xi }\}\). These parameters of embedding dimensions and time lags can be computationally determined by the method of false nearest neighbor (FNN) and delayed mutual information (DMI), respectively. More advanced techniques can also be utilized20,42. In general, for any pair of variables ξ and η ∈ {x, y, z}, we set \({\hat{{\mathcal{N}}}}^{{\boldsymbol{\xi }}}({{\boldsymbol{\eta }}}_{t})=\{{{\boldsymbol{\eta }}}_{t^{\prime} }| {{\boldsymbol{\xi }}}_{t^{\prime} }\in {\mathcal{N}}({{\boldsymbol{\xi }}}_{t})\}\), where \({\mathcal{N}}({{\boldsymbol{\xi }}}_{t})\) is a set containing a fixed number (usually taken as Eξ + 1, which is the minimum number of points needed for a bounded simplex in an Eξ-dimensional space43) of nearest neighboring points of ξt in the corresponding shadow manifold. For \({\boldsymbol{\xi }}={\boldsymbol{\eta }},{\hat{{\mathcal{N}}}}^{{\boldsymbol{\xi }}}({{\boldsymbol{\eta }}}_{t})\) becomes \({\mathcal{N}}({{\boldsymbol{\eta }}}_{t})\). For \({\boldsymbol{\xi }} \, \ne \, {\boldsymbol{\eta }},{\hat{{\mathcal{N}}}}^{{\boldsymbol{\xi }}}({{\boldsymbol{\eta }}}_{t})\) becomes a cross mapping neighborhood from \({\mathcal{N}}({{\boldsymbol{\xi }}}_{t})\) (for an illustrative example, see the horizontal arrows from MY to MX in Fig. 2a). The dependence from \({\mathcal{N}}({{\boldsymbol{\eta }}}_{t})\) to \({\hat{{\mathcal{N}}}}^{{\boldsymbol{\xi }}}({{\boldsymbol{\eta }}}_{t})\) characterizes the causal influence from the variable producing ηt to the variable producing ξt. Previously developed heuristic measures for quantifying such dependence and causal influence16,17,18,20,21 constitute the MCM framework. 
We exploit the correlation coefficient17 between ηt and \({\hat{{\boldsymbol{\eta }}}}_{t}^{{\boldsymbol{\xi }}}={\mathbb{E}}[{\hat{{\mathcal{N}}}}^{{\boldsymbol{\xi }}}({{\boldsymbol{\eta }}}_{t})]\), where \({\hat{{\boldsymbol{\eta }}}}_{t}^{{\boldsymbol{\xi }}}\) is the mapping from ξt and \({\mathbb{E}}[\cdot ]\) is an operation taking appropriately weighted average over all the points in a given set. Specifically, if the correlation coefficient \({\varrho }_{{\rm{C}}}=\left|{\rm{Corr}}({{\bf{x}}}_{t},{\hat{{\bf{x}}}}_{t}^{{\bf{y}}})\right|\) is larger than an empirical threshold T, the MCM method will stipulate that there is a causal influence from X to Y. MCM complements the field of causality analysis in pairwise non-separable dynamical systems. However, due to causation transitivity, the causal link detected by MCM can be either direct or indirect, as illustrated in Fig. 2a. Additionally, since causation manifests its influence in a certain time delay, we search for an optimal time delay that maximizes the causation (i.e., the obtained correlation coefficient ϱC) between a translated Y and X (see “Methods” for a detailed description)20.
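
The embedding and cross-mapping steps described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `delay_embed` and `cross_map_estimate`, the exponential distance weighting, the use of scalar targets instead of full delay vectors, and the logistic-map test signal are all choices made here for the example.

```python
import numpy as np

def delay_embed(series, E, tau):
    """Rows are delay vectors (s_t, s_{t-tau}, ..., s_{t-(E-1)tau})."""
    s = np.asarray(series, dtype=float)
    start = (E - 1) * tau  # first index that has a full history
    cols = [s[start - j * tau: len(s) - j * tau] for j in range(E)]
    return np.column_stack(cols)

def cross_map_estimate(source, target, E=2, tau=1):
    """Estimate `target` from nearest neighbours on the shadow manifold of `source`.

    Returns the aligned true target values and their cross-map estimates.
    """
    M = delay_embed(source, E, tau)
    tgt = np.asarray(target, dtype=float)[(E - 1) * tau:]
    # Pairwise distances between all delay vectors of the source manifold
    dist = np.linalg.norm(M[:, None, :] - M[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)          # a point is not its own neighbour
    k = E + 1                               # neighbourhood size used in the text
    idx = np.argsort(dist, axis=1)[:, :k]
    d = np.take_along_axis(dist, idx, axis=1)
    # Assumed weighting: exponential in distance relative to the closest neighbour
    w = np.exp(-d / np.maximum(d[:, :1], 1e-12))
    est = (w * tgt[idx]).sum(axis=1) / w.sum(axis=1)
    return tgt, est

# Toy check: y is an invertible function of a chaotic logistic series x,
# so the manifold of y should recover x almost perfectly.
x = np.empty(500)
x[0] = 0.4
for t in range(1, 500):
    x[t] = 3.9 * x[t - 1] * (1.0 - x[t - 1])
y = 2.0 * x + 1.0

x_true, x_hat = cross_map_estimate(y, x, E=2, tau=1)
rho_c = abs(np.corrcoef(x_true, x_hat)[0, 1])
```

Here `rho_c` plays the role of the MCM index ϱC for the link from X to Y: the manifold of the putative effect Y is used to estimate the putative cause X.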

Fig. 2. (a) For the illustrative setting of three variables interacting in a unidirectional causal chain, the MCM method maps \({\mathcal{N}}({{\bf{y}}}_{t})\) to the left circled region \({\hat{{\mathcal{N}}}}^{{\bf{y}}}({{\bf{x}}}_{t})\) in MX, where the estimated \({\hat{X}}^{Y}\) is close to the true X, denoting full causal information from X to Y and leading to erroneous identification of the indirect causal link as the direct link. (b) For our proposed PCM method, partial correlation coefficient between X and \({\hat{X}}^{Y}\) is calculated by conditioning on the information about \({\hat{X}}^{{\hat{Z}}^{Y}}\), which is mapped from \({\mathcal{N}}({{\bf{y}}}_{t})\) through MZ and then to the right circled region \({\hat{{\mathcal{N}}}}^{{{\bf{z}}}^{{\bf{y}}}}({{\bf{x}}}_{t})\) in MX in a, denoting indirect information flow. Geometrically, ϱC corresponds to the cosine of the angle between X and \({\hat{X}}^{Y}\) in the entire space, while ϱD is the projection of ϱC onto the subspace orthogonal to \({\hat{X}}^{{\hat{Z}}^{Y}}\). Because ϱC ≥ ϱD, the example in a corresponds to the sketch on the right side of b, where the projection is close to a right angle, leading to a near-zero value of ϱD.

Heuristically, ϱC, as defined above, represents the cosine of the angle between X and \({\hat{X}}^{Y}\) in the entire space, as shown in Fig. 2b. In order to distinguish the existence of the causation transitivity, we consider the projection of ϱC onto the information space orthogonal to the indirect information that is induced by the causation transitivity. To this end, we formulate our PCM framework (see “Methods” and Supplementary Fig. 1 for detailed formulations and practical instructions). First, for a time series pair Z and translated \({Y}_{{\tau }_{i}}=\{{y}_{t+{\tau }_{i}}\}\) with possible time delay candidates τi(i = 1, 2, …, m), we apply the conventional MCM method to determine the optimal time delay \({\tau }_{i}={\tau }_{{i}_{1}}\), which maximizes the correlation coefficient \({\rm{Corr}}(Z,{\hat{Z}}^{{Y}_{{\tau }_{i}}})\). Correspondingly, the obtained mapping \({\hat{Z}}^{{Y}_{{\tau }_{{i}_{1}}}}\) from \({Y}_{{\tau }_{{i}_{1}}}\) is denoted by \({\hat{Z}}^{Y}\) for simplicity. The next step is to repeat the procedure to the time series pair X and the translated \({\hat{Z}}_{{\tau }_{i}}^{Y}\) so as to obtain the optimal time delay \({\tau }_{{i}_{2}}\), as well as the mapping \({\hat{X}}^{{\hat{Z}}_{{\tau }_{{i}_{2}}}^{Y}}\) from \({\hat{Z}}_{{\tau }_{{i}_{2}}}^{Y}\), which maximizes the coefficient \({\rm{Corr}}(X,{\hat{X}}^{{\hat{Z}}_{{\tau }_{i}}^{Y}})\). 
We denote the obtained mapping by \({\hat{X}}^{{\hat{Z}}^{Y}}\); it is acquired from a successive MCM procedure and characterizes the indirect information flow through Z. Repeating the above procedure for the time series pair X and the translated \({Y}_{{\tau }_{i}}\) then yields \({\hat{X}}^{Y}\), which characterizes all causal information from X to Y. With these two mappings, we introduce the correlation index \({\varrho }_{{\rm{D}}}=\left|{\rm{Pcc}}(X,{\hat{X}}^{Y}| {\hat{X}}^{{\hat{Z}}^{Y}})\right|\) to measure the direct causation from X to Y conditioned on the indirect causation through Z, where Pcc( ⋅ , ⋅ ∣ ⋅ ) is the partial correlation coefficient describing the degree of association between the first two variables with information about the third variable removed44. This index contrasts with the MCM index \({\varrho }_{{\rm{C}}}=\left|{\rm{Corr}}(X,{\hat{X}}^{Y})\right|\). Note that we search for the strongest causation over the candidate time delays in every MCM procedure above. As a consequence, ϱD can be regarded intuitively as the projection of ϱC onto the information space orthogonal to the indirect information \({\hat{X}}^{{\hat{Z}}^{Y}}\) (Fig. 2b), and thus eliminates the indirect causal influence.
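
To make the difference between the two indices concrete, the following sketch computes a full and a partial correlation on synthetic stand-in signals. It assumes the standard first-order partial correlation formula; the function name `partial_corr` and the synthetic data are illustrative only and do not come from the paper. The array `indirect` stands in for the mapping that carries the indirect information, and both other signals are built so that they share nothing except that component.

```python
import numpy as np

def partial_corr(a, b, c):
    """First-order partial correlation of a and b with c removed."""
    r_ab = np.corrcoef(a, b)[0, 1]
    r_ac = np.corrcoef(a, c)[0, 1]
    r_bc = np.corrcoef(b, c)[0, 1]
    return (r_ab - r_ac * r_bc) / np.sqrt((1.0 - r_ac**2) * (1.0 - r_bc**2))

rng = np.random.default_rng(0)
n = 2000
indirect = rng.normal(size=n)                   # stand-in for the indirect mapping
x = indirect + 0.3 * rng.normal(size=n)         # stand-in for X
x_hat_y = indirect + 0.3 * rng.normal(size=n)   # stand-in for the cross-map estimate of X

rho_c = abs(np.corrcoef(x, x_hat_y)[0, 1])          # full correlation (MCM-style index)
rho_d = abs(partial_corr(x, x_hat_y, indirect))     # partial correlation (PCM-style index)
```

In this constructed case the full correlation is large while the partial correlation is close to zero, which mirrors the situation of a purely indirect link.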

For three causally interacting variables X, Y, and Z, we generally have ϱC ≥ ϱD. Setting an empirical threshold 1 > T ≫ 0, we have three cases for the order of the correlation index: ϱC ≥ ϱD ≥ T, ϱC ≥ T ≫ ϱD, and T > ϱC ≥ ϱD, corresponding, respectively, to the three causal relations: a direct causal link from X to Y, a sole indirect causal link from X to Y, and the absence of any causal link from X to Y. The index ϱD thus characterizes the degree to which direct causal links can be ascertained while eliminating the possibility of indirect links. For the example in Fig. 2a, the causal interaction of X and Y belongs to the second case above, which can be inferred from the correlation index in the same order as ϱC ≥ T ≫ ϱD. In real applications, it can happen that the causal signals in transition are not strong enough, making the values of ϱC ≳ T and ϱD close to that of T. In such a case, the detection of direct causal links becomes more sensitive to the value of T. To overcome this difficulty, we introduce γ = ϱD/ϱC to measure the proximity of the two index values. The closer the proximity to one, the higher the possibility of the existence of a direct causal link. Multiple tests45,46,47 have been conducted to ensure statistical reliability.
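
The three cases can be expressed as a small decision rule. The sketch below is a simplification: it treats any ϱD below T as "indirect" rather than requiring ϱD to be much smaller than T, and the names `classify_link` and `direct_ratio` as well as the default threshold are assumptions made for illustration.

```python
def classify_link(rho_c, rho_d, threshold=0.5):
    """Map the pair (rho_C, rho_D) to one of the three cases in the text."""
    if rho_c < threshold:
        return "none"        # T > rho_C >= rho_D: no causal link detected
    if rho_d >= threshold:
        return "direct"      # rho_C >= rho_D >= T: direct causal link
    return "indirect"        # rho_C >= T > rho_D: only an indirect link

def direct_ratio(rho_c, rho_d):
    """Ratio gamma = rho_D / rho_C; values near one favour a direct link."""
    return rho_d / rho_c if rho_c > 0 else 0.0
```

For example, `classify_link(0.9, 0.1)` falls into the indirect case, while `direct_ratio(0.8, 0.4)` gives 0.5, a value for which the threshold alone would be a borderline guide.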

The framework of PCM can be generalized to networked systems with an arbitrary number of interacting variables: X, Y, Z1, …, Zs (s ≥ 2) (e.g., Fig. 1d). With the full correlation between X and \({\hat{X}}^{Y}\), we calculate their partial correlation coefficient, denoted as \({\varrho }_{{{\rm{D}}}_{1}}=\left|{\rm{Pcc}}(X,{\hat{X}}^{Y}| \{{\hat{X}}^{{\hat{Z}}^{iY}}| i=1,\ldots ,s\})\right|\), by removing the information of the cross mapping variables from the s variables Z1, …, Zs, where \({\varrho }_{{{\rm{D}}}_{1}}\) is a first-order measure for distinguishing the direct from indirect causal link from X to Y. The motivation and formalization for extending this measure to higher orders are described in the “Methods” section. We emphasize here that strongly coupled (synchronized) variables in nonlinear systems are not in the scope of the PCM framework, because in this circumstance the complete system collapses to the cause system sub-manifold, and the effect variable becomes an observation function on the cause system, where bidirectional causation will always be computationally detected17. In addition, theoretically our PCM framework is based on the Takens–Mañé theorem, which is applicable only for autonomous systems. Data entirely recorded from nonautonomous systems are therefore not directly suitable for this framework48, but our method can be applied to some nonautonomous systems. In particular, it can be numerically used to detect piecewise causations with data from switching systems where the switching points can be located and each duration between consecutive switching points is sufficiently long. Also, our framework is suitable for some forced systems and/or systems with weak or moderate noise, because some generalized embedding theorems support the soundness of our framework49,50. 
As for an important kind of nonautonomous system, viz., dynamical oscillators with time-evolving coupling functions and/or with various types of noise, the dynamical Bayesian inference with a carefully chosen set of basis functions can provide practical solutions14. Possible future research topics include combining the above mutually complementary methods for causation detection in more general dynamical systems without knowing explicit model equations but with highly complex interaction structures.
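
For the first-order measure with several conditioning variables, a partial correlation given a set of signals can be computed from regression residuals. The sketch below uses this common residual-based approach as an assumption; the paper's own computation may differ, and `partial_corr_multi` and the synthetic signals are hypothetical names and data introduced for illustration.

```python
import numpy as np

def partial_corr_multi(a, b, conditioning):
    """Correlation of a and b after linearly regressing out every conditioning signal."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Design matrix: intercept plus one column per conditioning signal
    C = np.column_stack([np.ones(len(a))] +
                        [np.asarray(c, dtype=float) for c in conditioning])
    res_a = a - C @ np.linalg.lstsq(C, a, rcond=None)[0]
    res_b = b - C @ np.linalg.lstsq(C, b, rcond=None)[0]
    return np.corrcoef(res_a, res_b)[0, 1]

rng = np.random.default_rng(1)
n = 3000
z1 = rng.normal(size=n)   # stand-in for the indirect mapping through Z1
z2 = rng.normal(size=n)   # stand-in for the indirect mapping through Z2
a = z1 + z2 + 0.5 * rng.normal(size=n)
b = z1 + z2 + 0.5 * rng.normal(size=n)

raw = abs(np.corrcoef(a, b)[0, 1])
rho_d1 = abs(partial_corr_multi(a, b, [z1, z2]))
```

Because `a` and `b` share only the two conditioning components, `raw` is large while `rho_d1` is close to zero.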

Ascertaining direct causation in benchmark systems

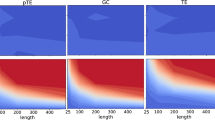

To validate our PCM method, we use the following benchmark system of three interacting species: \(x_{t}=x_{t-1}(\alpha_{x}-\alpha_{x}x_{t-1}-\beta_{xy}y_{t-1})+\epsilon_{x,t}\), \(y_{t}=y_{t-1}(\alpha_{y}-\alpha_{y}y_{t-1}-\beta_{yx}x_{t-1}-\beta_{yz}z_{t-1})+\epsilon_{y,t}\), and \(z_{t}=z_{t-1}(\alpha_{z}-\alpha_{z}z_{t-1}-\beta_{zx}x_{t-1})+\epsilon_{z,t}\), for αx = 3.6, αy = 3.72, and αz = 3.68, where ϵi,t (i ∈ {x, y, z}) are white noise of zero mean and standard deviation 0.005. Different choices of the coupling parameters βxy, βyx, βyz, and βzx can lead to distinct interacting modes (Fig. 3a). From the time series, we compute the MCM and PCM indices, ϱC and ϱD, respectively, for detecting the causal link from X to Y, with results listed in Fig. 3b, c. While there are cases where both methods are effective at detecting the direct causal links, for the causal chain and the causal loop structures with the threshold value T = 0.5, the PCM method succeeds in discriminating the indirect causal links, while the MCM method, which does not eliminate the influence of causation transitivity, clearly fails. As further shown in Supplementary Note 2, the PCM performance is more robust than that of the MCM method with respect to variations in the value of T, making the PCM method applicable to real-world systems when there is little or no a priori knowledge for assigning a proper value of T. The results in Fig. 3b, c have also been verified by using multiple-testing corrections. Additionally, for all the other possible interaction structures of three species, including the representative network motifs: fan-in, fan-out, and cascading structures51,52, our systematic studies show that the PCM method detects the causations accurately in every case (see Supplementary Note 1). More importantly, we systematically conducted comparison studies with the Granger causality, the transfer entropy and all their conditional extensions to detect the causations for the above three-species system and tested their robustness against different noise levels and time series lengths. 
As clearly shown in Supplementary Note 3, the PCM outperforms those existing methods which are, in principle, suitable only for variables satisfying the separability condition. We also provide a comparison study between the PCM framework and the dynamical Bayesian inference in Supplementary Note 3. Both methods have their own particular advantages and could be used in a complementary manner. All these results systematically demonstrate the general and particular usefulness of our method in the typical situation where the variables of dynamical systems are non-separable.
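
The benchmark system is straightforward to simulate. The sketch below follows the three update equations and the stated growth rates and noise level; the coupling values (0.1), the initial condition, the series length, and the function name `simulate_species` are assumptions chosen here for a well-behaved illustration, since the coupling values used for Fig. 3 are not given in this section.

```python
import numpy as np

def simulate_species(n, beta_xy=0.0, beta_yx=0.0, beta_yz=0.0, beta_zx=0.0,
                     noise_sd=0.005, seed=0):
    """Simulate the three-species logistic system; columns are x, y, z."""
    ax, ay, az = 3.6, 3.72, 3.68            # growth rates stated in the text
    rng = np.random.default_rng(seed)
    out = np.empty((n, 3))
    out[0] = (0.4, 0.4, 0.4)                # assumed initial condition
    for t in range(1, n):
        x, y, z = out[t - 1]
        ex, ey, ez = rng.normal(0.0, noise_sd, size=3)
        out[t, 0] = x * (ax - ax * x - beta_xy * y) + ex
        out[t, 1] = y * (ay - ay * y - beta_yx * x - beta_yz * z) + ey
        out[t, 2] = z * (az - az * z - beta_zx * x) + ez
    return out

# Causal chain X -> Z -> Y: only beta_zx and beta_yz are nonzero (assumed values)
chain = simulate_species(1000, beta_zx=0.1, beta_yz=0.1)
```

Other interaction modes are obtained by switching different coupling parameters on or off, for example a nonzero `beta_yx` alone for a single direct link from X to Y.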

Fig. 3. (a) Three distinct interaction modes of the system. (b) Causal links from X to Y detected by the MCM method, which contain false direct causation for the second and the third interaction modes. (c) Direct causal links detected by the PCM method, which successfully excludes the false direct causations in b. The 100 trials, each of length 1000, are randomly selected from a time series of length 5000, where the sampling rate is 1 Hz so that the length matches exactly the time unit of the system. The average is calculated over the results of these randomly selected trials. The phase-space reconstruction parameters are E = 4 and τ = 1. Here superscripts of ϱC and ϱD denote the specified causal direction.

Additionally, we validate the effectiveness of the PCM method in a network model containing eight interacting species. As shown in Supplementary Fig. 10, the direct causal network can be reconstructed faithfully while the indirect links are all eliminated successfully by setting appropriate values of T. In contrast, with the same values of T, the MCM method produces a dense network containing direct, indirect, and even erroneous causal links. We also find that the ratio γ = ϱD/ϱC can be used to improve the detection accuracy even for relatively small values of the threshold T (Supplementary Note 4). Moreover, selecting a practically effective threshold value is considerably more feasible and robust in our PCM method (see Supplementary Fig. 11, and Supplementary Note 5 for detailed information on statistical tests and methods for threshold selection). The robustness tests of PCM against the time series lengths and the noise scales also show good effectiveness even with small data size and relatively strong noise in this model (Supplementary Note 3). These additional results demonstrate the power of our PCM method in detecting direct links and accurately reconstructing the underlying causal networks from multivariate time series.

Detecting direct causation in real-world networks

We test gene regulatory networks with gene expression data available from DREAM4 in silico Network Challenge53,54,55. There are five networks with different, synthetically produced structures. Each network has 100 genes. We use the software GeneNetWeaver56 to randomly select 20 interacting genes, where each gene has 10 realizations of 21 gene expression time series data. Figure 4a presents one gene regulatory network (see Supplementary Fig. 12 for the others). For each gene, we combine all realizations as one time series for phase-space reconstruction. We compare the direct causal links detected by PCM with the a priori known edges of the five networks and calculate the respective ROC (receiver operating characteristic) curves (Fig. 4b). We find that the average area under the five ROC curves is approximately 0.75, indicating high detection accuracies of direct links in gene regulatory networks even with small data sets, a task for which PCM outperforms the MCM method (see Supplementary Note 6).
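
The area under an ROC curve for a set of candidate links can be computed directly from the detection scores and the known edges. The sketch below uses the rank-based (Mann-Whitney) form of the AUROC, which is equivalent to integrating the ROC curve; the function name `auroc` and the toy scores are illustrative placeholders, not results from the paper.

```python
import numpy as np

def auroc(scores, labels):
    """AUROC as the probability that a true edge outscores a non-edge (ties count 1/2)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos = scores[labels]       # scores of true edges
    neg = scores[~labels]      # scores of absent edges
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

# Placeholder scores (e.g. PCM indices per candidate link) and ground-truth edge labels
perfect = auroc([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0])
partial = auroc([0.9, 0.2, 0.8, 0.1], [1, 1, 0, 0])
```

In the second call one true edge is ranked below one non-edge, so three of the four edge/non-edge pairs are ordered correctly.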

Fig. 4. (a) One of the five gene regulatory networks with 20 interacting genes from GeneNetWeaver. Each red (blue) arrow represents an activating (inhibitory) effect. (b) ROC curves characterizing the PCM detection performance. The corresponding AUROCs are also indicated. The reconstruction parameters are E = 2 and τ = 1. (c) A food chain network of three plankton species, where the direction of each red arrow represents a prey to predator interaction. (d) The PCM indices (the color region framed by red boxes) signifying successful detection of the direct causal links (for E = 4 and τ = 1). A relatively weak but direct causal link (the yellow arrow in c) from Rotifers to Pico cyanobacteria is identified through the index framed by the yellow box. (e) Results on all successfully detected interactions between air pollutants and cardiovascular diseases (red box) for E = 7 and τ = 1. (f) The reconstructed causal network from the results in e. All detection results are verified using multiple testing corrections.

We next consider the food chain network of three plankton species: Pico cyanobacteria, Rotifers and Cyclopoids, with the prey–predator relations indicated in Fig. 4c. The oscillatory population data are selected from an 8-year mesocosm experiment of a plankton community isolated from the Baltic Sea57,58,59. Our PCM method yields six indices for all the possible causal links, and we preserve the links with index values ≳10⁻¹ and discard the other links (see Supplementary Note 5 for issues on threshold selection). This leads to two direct causal links, which agree with the ground truth of the original network (Fig. 4d). Remarkably, our PCM method successfully excludes the indirect link from Pico cyanobacteria to Cyclopoids. For this network, there is also a weak direct link from Rotifers to Pico cyanobacteria, and our method is indeed able to detect it (verified with multiple-testing corrections). This reveals that the actual prey–predator hierarchy does not necessarily match the direct causal links among the species. For example, while predators hunt prey, a predator through hunting can significantly influence the prey populations when they are not tremendously abundant. In such a case, the predator can be regarded as the causal source, giving rise to the third relatively weak but direct causal link.

Our third real-world example is from the recorded data of air pollution and hospital admission of cardiovascular diseases in Hong Kong from 1994 to 1997 (see Supplementary Note 6)60,61,62. As shown in Fig. 4e, f, our PCM method uncovers that only two pollutants, namely nitrogen dioxide and respirable suspended particulates, are detected as the major causes of cardiovascular diseases. Neither sulfur dioxide nor ozone has been identified as a cause of the diseases, which is consistent with previous results20,63. Our method reveals a unidirectional causal relation from ozone to sulfur dioxide, but the detected causal relations among the recognized pollutants are bidirectional. These detected causal relations may be either direct or indirect, because data on other factors, such as temperature, humidity, and wind speed, which can be common causes of some pollutants, are not completely available (e.g., the fan-out interaction mode shown in Supplementary Fig. 2).

We also apply the PCM method to further real-world examples, including gene expression data related to the circadian rhythms and electroencephalography data of the human brain, in Supplementary Note 7. All the results demonstrate the broad applicability of our method to data sets of different scales, and indeed reveal new viewpoints on the dynamical underpinnings of real-world systems.

Discussion

To summarize, by exploiting both dynamical and statistical features of the observed data, our method offers two major advantages: detecting direct causality based on PCM and handling the non-separability problem based on Takens–Mañé’s embedding theorem. Variables of a nonlinear dynamical system are generally considered non-separable due to their intertwined nonlinear nature. Specifically, in contrast to the existing methods for detecting causation, which either misidentify indirect causal links as direct ones or fail due to a violation of the condition of separability, we develop a method theoretically and computationally to solve this outstanding problem, coping with the situation for which the existing frameworks cannot work effectively. The central idea lies in examining the consensus between one time series and its cross map prediction from the other with conditioning on the part that is transferred from the third variable. Our method is capable of not only distinguishing direct from indirect causal influences but also removing the latter. A virtue of our method is that it is generally applicable to nonlinear dynamical networks without requiring the condition of separability, which complements the missing part of causality analysis (see Supplementary Table 3). In fact, the concept of causality in dynamical systems is different from the widely accepted traditional statistical viewpoint that X causes Y if and only if an intervention in X has an effect on Y. Due to the non-separability, causality in dynamical systems should have a different formalization, which in the simplest way can be intuitively interpreted as a coupling term from X to Y in the system’s equations. Further theoretical interpretations regarding this new framework will be included in our future work. Finally, our PCM method is validated by applying it to a number of real-world systems, yielding new insights into the dynamics of these systems. 
Unambiguous identification of direct causal links with indirect causal influence eliminated is a key to understanding and accurately modeling the underlying system, and our framework therefore provides a vehicle to achieve this goal.

Methods

The concept of non-separability

We illustrate the concept of non-separability by using a general continuous-time dynamical system:

where the state variable \({\bf{x}}(t)={[{x}_{1}(t),{x}_{2}(t),\ldots ,{x}_{n}(t)]}^{\top }\) evolves inside a compact manifold \({{\mathcal{M}}}_{x}\), forming an attractor \({\mathcal{A}}\) with a dimension \({d}_{{\mathcal{A}}}\). Here, \({d}_{{\mathcal{A}}}\) can be computed as the box-counting dimension of \({\mathcal{A}}\). The dynamics with an initial value \({{\bf{x}}}_{0}\in {{\mathcal{M}}}_{x}\) are denoted by x(t) = φt(x0), where φt(⋅) is regarded as a flow along the manifold \({{\mathcal{M}}}_{x}\). According to Takens–Mañé’s embedding theory and its fractal generalizations, one can, with probability one, reconstruct the system with a positive delay τ and a smooth observation function \(h:{{\mathcal{M}}}_{x}\to {\mathbb{R}}\) in the sense that the delay-coordinate map \({\Gamma }_{h,\varphi ,\tau }({\bf{x}})={[h({\bf{x}}),h({\varphi }_{-\tau }({\bf{x}})),h({\varphi }_{-2\tau }({\bf{x}})),\ldots ,h({\varphi }_{-(L-1)\tau }({\bf{x}}))]}^{\top }\) is generically an embedding map as long as \(L \, > \, 2{d}_{{\mathcal{A}}}\). Particularly for direct illustration, we take the observation function h(x) as a simple coordinate function: h(x) = xi, where xi is the ith component of x. Thus, we have \({\bf{y}}(t)={[{x}_{i}(t),{x}_{i}(t-\tau ),\ldots ,{x}_{i}(t-(L-1)\tau )]}^{\top }\) and also have the manifold \({{\mathcal{M}}}_{x}\) mapped to the shadow manifold \({{\mathcal{M}}}_{y}\) by the embedding map Γ. Since the embedding map is one to one, the dynamics ψτ on the shadow manifold \({{\mathcal{M}}}_{y}\) are topologically conjugated with the dynamics φτ on \({{\mathcal{M}}}_{x}\), that is,

On the one hand, system (1) implies that the future dynamics of one specific component, say xj with j = i or j ≠ i, is governed by

and thus depends on the history of all the components x1, x2, …, xn. On the other hand, the relation in (2) implies the other fact that as long as the embedding map Γ exists, the future dynamics of xj is also governed by

and thus only depends on the history of one variable xi and on the embedding map Γ as well.

Generically, it is possible to make a prediction of xj(t + τ) based only on the observation of one variable, and this prediction could be as perfect as the prediction using the information of all the variables x1(t), x2(t), …, xn(t) of the system (this undermines the idea of Granger causality and its extensions). Thus, Takens–Mañé’s embedding theory reveals that, in such a deterministic nonlinear dynamical system, the information of the whole dynamical system could be generically injected into only one single variable and thus could be reconstructed from the observation data of that variable. This motivates the concept of non-separability, that is, one generally cannot remove the information of some variable from the other variables when any prediction is made for the dynamical system. This also reveals that the methods based on prediction frameworks, such as the Granger causality, the transfer entropy, and all their extensions, are mathematically not suitable for dealing with time series data produced by nonlinear dynamical systems where non-separability always exists among the internal variables. A toy example showing how GC fails in non-separable systems can be found in the Supplementary Materials of ref. 17.
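
For reference, the relations invoked in this argument can be written compactly as follows. This is a sketch in standard notation rather than a reproduction of Eqs. (1)–(4): it assumes that system (1) has the generic autonomous form with a vector field \({\bf{f}}\) (a symbol introduced here), and it uses \({\bf{y}}(t)=\Gamma ({\bf{x}}(t))\) together with the conjugacy between ψτ and φτ stated above.

```latex
\begin{aligned}
% assumed generic form of the continuous-time system
\dot{\mathbf{x}}(t) &= \mathbf{f}(\mathbf{x}(t)), \\
% topological conjugacy induced by the embedding map
\psi_{\tau} &= \Gamma \circ \varphi_{\tau} \circ \Gamma^{-1}, \\
% prediction from the full state
x_j(t+\tau) &= \left[\varphi_{\tau}(\mathbf{x}(t))\right]_j, \\
% the same prediction from the delay vector of the single variable x_i
x_j(t+\tau) &= \left[\Gamma^{-1}\!\left(\psi_{\tau}(\mathbf{y}(t))\right)\right]_j .
\end{aligned}
```

The last two lines agree because \(\psi_{\tau}(\mathbf{y}(t)) = \Gamma(\varphi_{\tau}(\mathbf{x}(t))) = \mathbf{y}(t+\tau)\), so applying \(\Gamma^{-1}\) recovers \(\mathbf{x}(t+\tau)\) from the history of one variable alone.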

Transitivity arousing indirect causation

To investigate how transitivity gives rise to indirect causation, we consider a heuristic logistic model of three species coupled in the following manner:

where the three species X = {xt}, Z = {zt} and Y = {yt} are interacting in a causal chain, denoted by X → Z → Y, and the coupling strengths βzx and βyz are nonzero.

Now, we shift the second equation in (5) by one time step and substitute it into the last equation in (5), which yields:

Also, the last equation in (5) can be rearranged as:

so that

Then, a substitution of Eq. (8) into Eq. (6) gives:

Consequently, this equation, together with the first equation in (5), forms a unidirectional causal relation from X to Y. This causation is, however, indirect: it is induced by transitivity, and in discrete-time dynamical systems its influence arrives with a time delay.
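As a worked sketch of the substitution steps above, suppose the chain takes a standard coupled logistic form. The growth-rate symbols \(r_x\), \(r_z\), \(r_y\) and the shorthand \(w_{t-1}\) are assumptions introduced here for illustration; only the couplings \(\beta_{zx}\) and \(\beta_{yz}\) come from the text, and the published forms of Eqs. (5)–(9) may differ in detail. The rearrangement assumes \(y_t\ne 0\) and \(y_{t-1}\ne 0\).

```latex
\begin{aligned}
&\text{(5)}\quad x_{t+1}=x_t\,(r_x-r_x x_t),\qquad
z_{t+1}=z_t\,(r_z-r_z z_t-\beta_{zx}x_t),\qquad
y_{t+1}=y_t\,(r_y-r_y y_t-\beta_{yz}z_t),\\
&\text{(6)}\quad y_{t+1}=y_t\,\bigl[r_y-r_y y_t-\beta_{yz}\,z_{t-1}\,(r_z-r_z z_{t-1}-\beta_{zx}x_{t-1})\bigr],\\
&\text{(7)}\quad \beta_{yz}z_t=r_y(1-y_t)-\frac{y_{t+1}}{y_t},\\
&\text{(8)}\quad z_{t-1}=\frac{1}{\beta_{yz}}\Bigl[r_y(1-y_{t-1})-\frac{y_t}{y_{t-1}}\Bigr],\\
&\text{(9)}\quad y_{t+1}=y_t\,\Bigl[r_y-r_y y_t-w_{t-1}\Bigl(r_z-\frac{r_z}{\beta_{yz}}\,w_{t-1}-\beta_{zx}x_{t-1}\Bigr)\Bigr],
\qquad w_{t-1}:=r_y(1-y_{t-1})-\frac{y_t}{y_{t-1}}.
\end{aligned}
```

Under these assumptions, the last line contains \(x_{t-1}\) but no z term, which makes explicit that the influence of X reaches Y only through the chain and with a delay of two time steps.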

The PCM method of first order and higher order

We now formulate the PCM framework formally (see Supplementary Fig. 1 for a schematic graph of the PCM procedure). The first step is to translate the time series Y = {yt} by time steps τi (i = 1, 2, …, m), generating m translated variables denoted as \({Y}_{{\tau }_{i}}=\{{y}_{t+{\tau }_{i}}\}\). For each time series pair \({Y}_{{\tau }_{i}}\) and Z, we apply the conventional MCM method (see the practical steps below) to obtain the mapping \({\hat{Z}}^{{Y}_{{\tau }_{i}}}\) from \({Y}_{{\tau }_{i}}\) and calculate the correlation coefficient \({\rm{Corr}}(Z,{\hat{Z}}^{{Y}_{{\tau }_{i}}})\). For simplicity, we let \({\hat{Z}}^{Y}\) denote the mapping \({\hat{Z}}^{{Y}_{{\tau }_{{i}_{1}}}}\) with

\[{\tau }_{{i}_{1}}=\mathop{\arg \max }\limits_{{\tau }_{i}}\,{\rm{Corr}}(Z,{\hat{Z}}^{{Y}_{{\tau }_{i}}}).\tag{10}\]

The next step is to repeat the procedure for the time series pair of the translated \({\hat{Z}}_{{\tau }_{i}}^{Y}\) and X so as to obtain the mapping \({\hat{X}}^{{\hat{Z}}_{{\tau }_{i}}^{Y}}\) from \({\hat{Z}}_{{\tau }_{i}}^{Y}\), and to set \({\hat{X}}^{{\hat{Z}}^{Y}}\) as \({\hat{X}}^{{\hat{Z}}_{{\tau }_{{i}_{2}}}^{Y}}\) with

\[{\tau }_{{i}_{2}}=\mathop{\arg \max }\limits_{{\tau }_{i}}\,{\rm{Corr}}(X,{\hat{X}}^{{\hat{Z}}_{{\tau }_{i}}^{Y}}).\tag{11}\]

The resulting \({\hat{X}}^{{\hat{Z}}^{Y}}\) represents the indirect information flow. By directly applying MCM to the translated \({Y}_{{\tau }_{i}}\) and X, we obtain \({\hat{X}}^{Y}\), which represents all the information transferred from X to Y and is shorthand for \({\hat{X}}^{{Y}_{{\tau }_{{i}_{3}}}}\) with

\[{\tau }_{{i}_{3}}=\mathop{\arg \max }\limits_{{\tau }_{i}}\,{\rm{Corr}}(X,{\hat{X}}^{{Y}_{{\tau }_{i}}}).\tag{12}\]

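We now introduce the correlation indices:

\[{\varrho }_{{\rm{C}}}=\left|{\rm{Corr}}(X,{\hat{X}}^{Y})\right|,\qquad {\varrho }_{{\rm{D}}}=\left|{\rm{Pcc}}(X,{\hat{X}}^{Y}\,|\,{\hat{X}}^{{\hat{Z}}^{Y}})\right|,\]

where Pcc( ⋅ , ⋅ ∣ ⋅ ) is the partial correlation coefficient, which describes the degree of association between the first two variables after the information about the third variable has been removed. We review the definition of the partial correlation coefficient here. For time series X, Y, and Z1, …, Zs, the partial correlation coefficient between X and Y conditioned on Z1 is

\[{\rm{Pcc}}(X,Y\,|\,{Z}_{1})=\frac{{\rm{Corr}}(X,Y)-{\rm{Corr}}(X,{Z}_{1})\,{\rm{Corr}}(Y,{Z}_{1})}{\sqrt{\left[1-{\rm{Corr}}{(X,{Z}_{1})}^{2}\right]\left[1-{\rm{Corr}}{(Y,{Z}_{1})}^{2}\right]}}.\]

The partial correlation coefficient between X and Y conditioned on both Z1 and Z2 is

\[{\rm{Pcc}}(X,Y\,|\,{Z}_{1},{Z}_{2})=\frac{{\rm{Pcc}}(X,Y\,|\,{Z}_{1})-{\rm{Pcc}}(X,{Z}_{2}\,|\,{Z}_{1})\,{\rm{Pcc}}(Y,{Z}_{2}\,|\,{Z}_{1})}{\sqrt{\left[1-{\rm{Pcc}}{(X,{Z}_{2}\,|\,{Z}_{1})}^{2}\right]\left[1-{\rm{Pcc}}{(Y,{Z}_{2}\,|\,{Z}_{1})}^{2}\right]}},\]

and the partial correlation coefficient between X and Y conditioned on more variables can be defined recursively. For the computation of the partial correlation coefficient and more information on it, see refs. 44,64.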
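The recursive definition of the partial correlation coefficient can be sketched in a few lines. This is an illustrative implementation rather than the authors' code: the function names `pcc` and `pcc_residual` are hypothetical, and the second function uses the standard equivalent route of correlating least-squares residuals, included only as a cross-check.

```python
import numpy as np

def pcc(x, y, conds):
    """Partial correlation of x and y given a list of conditioning series (recursive formula)."""
    if len(conds) == 0:
        return np.corrcoef(x, y)[0, 1]
    *rest, z = conds                     # peel off the last conditioning series
    r_xy = pcc(x, y, rest)
    r_xz = pcc(x, z, rest)
    r_yz = pcc(y, z, rest)
    return (r_xy - r_xz * r_yz) / np.sqrt((1 - r_xz**2) * (1 - r_yz**2))

def pcc_residual(x, y, conds):
    """Same quantity, computed as the correlation of residuals after regressing on conds."""
    A = np.column_stack([np.ones(len(x))] + list(conds))
    rx = x - A @ np.linalg.lstsq(A, x, rcond=None)[0]
    ry = y - A @ np.linalg.lstsq(A, y, rcond=None)[0]
    return np.corrcoef(rx, ry)[0, 1]

# Synthetic example: x and y are related only through z1 and z2.
rng = np.random.default_rng(0)
z1, z2 = rng.normal(size=2000), rng.normal(size=2000)
x = z1 + 0.5 * z2 + rng.normal(size=2000)
y = z1 - 0.5 * z2 + rng.normal(size=2000)
r_plain = np.corrcoef(x, y)[0, 1]
r_partial = pcc(x, y, [z1, z2])
print(r_plain, r_partial)
```

In this synthetic example the plain correlation is clearly nonzero, whereas the partial correlation conditioned on both common drivers is close to zero.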

To provide detailed instructions for our method, we summarize the practical steps here:

Procedure A: MCM for detecting causation from \(U={\{{u}_{t}\}}_{t = 1}^{L}\) to \(V={\{{v}_{t}\}}_{t = 1}^{L}\):

1. Reconstruct the phase space by delay-coordinate embedding for the time series U and V. The reconstruction parameters (embedding dimensions Eu, Ev and time lags τu, τv) can be selected by the FNN algorithm and by the method of DMI, respectively (see Supplementary Note 5);

2. For each time index t, find the set of neighboring points \({\mathcal{N}}({{\bf{v}}}_{t})\) of \({{\bf{v}}}_{t}\) (Ev + 1 nearest neighbors are used, since this is the minimum number of points needed for a bounded simplex in an Ev-dimensional space43);

3. Find the corresponding points in \({{\mathcal{M}}}_{U}\) that have the same time indexes as the points in \({\mathcal{N}}({{\bf{v}}}_{t})\) and calculate their weighted average (the weights are determined by the distances between the points in \({\mathcal{N}}({{\bf{v}}}_{t})\) and \({{\bf{v}}}_{t}\), which defines the operation \({\mathbb{E}}[\cdot ]\)) to obtain the estimated \({\hat{{\bf{u}}}}_{t}^{{\bf{v}}}\);

4. Use an appropriate index (such as \({\varrho }_{{\rm{C}}}=\left|{\rm{Corr}}({{\bf{u}}}_{t},{\hat{{\bf{u}}}}_{t}^{{\bf{v}}})\right|\)) to characterize the agreement between the estimated time series \({\hat{U}}^{{V}_{0}}\) (the subscript 0 indicates that V is not translated, for consistency with the notation that follows) and the original time series U, which measures the causation from U to V.
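The four steps of Procedure A can be sketched as follows. This is a simplified illustration, not the authors' implementation: it estimates the scalar series \({u}_{t}\) rather than the full vector \({{\bf{u}}}_{t}\) (so only the shadow manifold of V is built), it uses one embedding dimension `E` and lag `lag` instead of values selected by FNN and DMI, and it assumes the exponential distance weighting commonly used in cross mapping, which the text does not specify. The names `delay_embed` and `mcm_skill` are hypothetical.

```python
import numpy as np

def delay_embed(x, E, lag):
    """Rows are [x(t), x(t - lag), ..., x(t - (E - 1) * lag)]."""
    n = len(x) - (E - 1) * lag
    return np.column_stack([x[(E - 1 - k) * lag:(E - 1 - k) * lag + n] for k in range(E)])

def mcm_skill(u, v, E=2, lag=1):
    """Cross map U from the shadow manifold of V and return |Corr(u, u_hat)|."""
    u, v = np.asarray(u, float), np.asarray(v, float)
    Mv = delay_embed(v, E, lag)                        # step 1: reconstruct M_V
    u_al = u[(E - 1) * lag:]                           # u on the same time indexes as Mv
    d = np.linalg.norm(Mv[:, None, :] - Mv[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)                        # a point is not its own neighbor
    idx = np.argsort(d, axis=1)[:, :E + 1]             # step 2: E + 1 nearest neighbors
    dn = np.take_along_axis(d, idx, axis=1)
    w = np.exp(-dn / np.maximum(dn[:, :1], 1e-12))     # assumed exponential weights
    w /= w.sum(axis=1, keepdims=True)
    u_hat = (w * u_al[idx]).sum(axis=1)                # step 3: weighted average
    return abs(np.corrcoef(u_al, u_hat)[0, 1])         # step 4: agreement index

# Sanity checks on toy data: a chaotic logistic series mapped onto itself,
# and two independent noise series.
x = np.empty(500)
x[0] = 0.4
for t in range(499):
    x[t + 1] = 3.8 * x[t] * (1 - x[t])
rho_same = mcm_skill(x, x)

rng = np.random.default_rng(0)
rho_noise = mcm_skill(rng.random(500), rng.random(500))
print(rho_same, rho_noise)
```

The pairwise distance matrix makes this sketch quadratic in the series length, which is acceptable for a few hundred points; a tree-based neighbor search would be the usual choice for longer series.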

Procedure B: PCM for detecting direct causation from X to Y conditioning on Z:

1. Translate the time series Y with different candidate time delays τi (i = 1, 2, …, m) to generate \({Y}_{{\tau }_{i}}=\{{y}_{t+{\tau }_{i}}\}\);

2. For each pair Z and \({Y}_{{\tau }_{i}}\), perform Procedure A to obtain \({\rm{Corr}}(Z,{\hat{Z}}^{{Y}_{{\tau }_{i}}})\), and let \({\hat{Z}}^{Y}\) denote \({\hat{Z}}^{{Y}_{{\tau }_{{i}_{1}}}}\), where the time delay \({\tau }_{{i}_{1}}\) maximizes \({\rm{Corr}}(Z,{\hat{Z}}^{{Y}_{{\tau }_{i}}})\) as in (10);

3. Translate the time series \({\hat{Z}}^{Y}\) with different candidate time delays τi (i = 1, 2, …, m) to generate \({\hat{Z}}_{{\tau }_{i}}^{Y}\);

4. For each pair X and \({\hat{Z}}_{{\tau }_{i}}^{Y}\), perform Procedure A to obtain \({\rm{Corr}}(X,{\hat{X}}^{{\hat{Z}}_{{\tau }_{i}}^{Y}})\), and let \({\hat{X}}^{{\hat{Z}}^{Y}}\) denote \({\hat{X}}^{{\hat{Z}}_{{\tau }_{{i}_{2}}}^{Y}}\), where the time delay \({\tau }_{{i}_{2}}\) maximizes \({\rm{Corr}}(X,{\hat{X}}^{{\hat{Z}}_{{\tau }_{i}}^{Y}})\) as in (11);

5. For each pair X and \({Y}_{{\tau }_{i}}\), perform Procedure A to obtain \({\rm{Corr}}(X,{\hat{X}}^{{Y}_{{\tau }_{i}}})\), and let \({\hat{X}}^{Y}\) denote \({\hat{X}}^{{Y}_{{\tau }_{{i}_{3}}}}\), where the time delay \({\tau }_{{i}_{3}}\) maximizes \({\rm{Corr}}(X,{\hat{X}}^{{Y}_{{\tau }_{i}}})\) as in (12);

6. Use \({\varrho }_{{\rm{D}}}=\left|{\rm{Pcc}}(X,{\hat{X}}^{Y}| {\hat{X}}^{{\hat{Z}}^{Y}})\right|\) to measure the direct causation from X to Y conditioned on Z.
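Procedure B can be sketched by chaining the cross mapping of Procedure A with the first-order partial correlation. The code below is a hedged illustration under several assumptions that are not in the text: the candidate delays are the non-negative shifts 0, …, `max_shift`, a single embedding dimension and lag are shared by all series, scalar series are cross mapped with exponential weights, and all series are trimmed to a common time window before the indices are computed. All function names and the parameter values of the toy chain X → Z → Y are hypothetical.

```python
import numpy as np

def delay_embed(x, E, lag):
    n = len(x) - (E - 1) * lag
    return np.column_stack([x[(E - 1 - k) * lag:(E - 1 - k) * lag + n] for k in range(E)])

def cross_map(source, target, E, lag):
    """Estimate `target` from the shadow manifold of `source` (Procedure A, steps 1-3)."""
    M = delay_embed(source, E, lag)
    tgt = target[(E - 1) * lag:]
    d = np.linalg.norm(M[:, None, :] - M[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    idx = np.argsort(d, axis=1)[:, :E + 1]
    dn = np.take_along_axis(d, idx, axis=1)
    w = np.exp(-dn / np.maximum(dn[:, :1], 1e-12))    # assumed exponential weights
    w /= w.sum(axis=1, keepdims=True)
    return (w * tgt[idx]).sum(axis=1)

def best_cross_map(effect, cause, E, lag, max_shift):
    """Cross map `cause` from `effect` translated by s = 0..max_shift; keep the best s."""
    n, off, best = len(effect) - max_shift, (E - 1) * lag, None
    for s in range(max_shift + 1):
        est = cross_map(effect[s:s + n], cause[:n], E, lag)
        r = np.corrcoef(cause[off:n], est)[0, 1]
        if best is None or r > best[0]:
            best = (r, est)
    return best[1]                                    # aligned with cause[off:n]

def partial_corr(x, y, z):
    r_xy, r_xz, r_yz = (np.corrcoef(a, b)[0, 1] for a, b in ((x, y), (x, z), (y, z)))
    return (r_xy - r_xz * r_yz) / np.sqrt((1 - r_xz**2) * (1 - r_yz**2))

def pcm(x, y, z, E=3, lag=1, max_shift=3):
    """Return (rho_C, rho_D) for the candidate link X -> Y conditioned on Z."""
    off = (E - 1) * lag
    z_hat = best_cross_map(y, z, E, lag, max_shift)             # steps 1-2
    x_al = x[off:off + len(z_hat)]                              # x on the time axis of z_hat
    x_hat_zy = best_cross_map(z_hat, x_al, E, lag, max_shift)   # steps 3-4
    x_hat_y = best_cross_map(y, x, E, lag, max_shift)           # step 5
    m = len(x_hat_zy)
    x_ref, x_hat_y = x_al[off:off + m], x_hat_y[off:off + m]    # common time window
    rho_c = abs(np.corrcoef(x_ref, x_hat_y)[0, 1])
    rho_d = abs(partial_corr(x_ref, x_hat_y, x_hat_zy))         # step 6
    return rho_c, rho_d

# Toy causal chain X -> Z -> Y built from coupled logistic maps (assumed parameters).
n = 400
x, z, y = np.empty(n), np.empty(n), np.empty(n)
x[0], z[0], y[0] = 0.4, 0.3, 0.2
for t in range(n - 1):
    x[t + 1] = x[t] * (3.8 - 3.8 * x[t])
    z[t + 1] = z[t] * (3.72 - 3.72 * z[t] - 0.1 * x[t])
    y[t + 1] = y[t] * (3.68 - 3.68 * y[t] - 0.1 * z[t])
rho_c, rho_d = pcm(x, y, z)
print(rho_c, rho_d)
```

Because of the trimming, the value of ϱC returned here is computed on a slightly shorter window than a standalone run of Procedure A would use; the two should nevertheless be close for series of a few hundred points.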

Note that in every MCM procedure above we search over the candidate time delays for the strongest causation. For consistency, all MCM results in this study are based on the same strategy. Moreover, it is possible to characterize the causal relations among variables over a distribution of time delays (i.e., a causal spectrum). This fuller causal description is left for future work.

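As described above, the first-order PCM method for networked systems of more than three interacting variables X, Y, Z1, …, Zs (s ≥ 2) (e.g., Fig. 1d) can be defined as follows, and the high-order methods can be derived from it:

\[{\varrho }_{{{\rm{D}}}_{1}}=\left|{\rm{Pcc}}\left(X,{\hat{X}}^{Y}\,|\,{\hat{X}}^{{\hat{Z}}_{1}^{Y}},{\hat{X}}^{{\hat{Z}}_{2}^{Y}},\ldots ,{\hat{X}}^{{\hat{Z}}_{s}^{Y}}\right)\right|.\]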

In complex dynamical networks, indirect causation can also be transferred through more than one variable (e.g., through two variables, X → Z1 → Z2 → Y). The high-order PCM method is designed to characterize this situation. In particular, we calculate the correlation coefficient between X and \({\hat{X}}^{Y}\), and the partial correlation coefficient between them after removing the information carried by the cross mappings through two variables out of the s variables Z1, …, Zs. The partial correlation coefficient

effectively represents a second-order measure for distinguishing direct causal links from X to Y from indirect ones that are transferred through two mediating variables. Analogously, the nth-order measure, denoted by \({\varrho }_{{{\rm{D}}}_{n}}\), can be defined through any combination of n mediating variables from Z1, …, Zs as

Together with \({\varrho }_{{\rm{C}}},{\varrho }_{{{\rm{D}}}_{n}}\,(n=1,\ldots ,s)\) and the PCM measure

reflecting the proximity of all these coefficients, we obtain higher-order PCM methods for detecting direct causal links in large networks. However, for a relatively large order n, the number of possible combinations of n mediating variables is very large. We will study the computation and application of the high-order methods in future work; in this study, we consider only the first-order problem.

In practice, the partial correlation procedure runs into computational problems if the network is relatively large, so that a large conditioning set must be taken into account. In this case, we can select several nodes Zi that maximize \({\varrho }_{{\rm{C}}}^{X\to {Z}_{i}}+{\varrho }_{{\rm{C}}}^{{Z}_{i}\to Y}\) (or \(\min \{{\varrho }_{{\rm{C}}}^{X\to {Z}_{i}},{\varrho }_{{\rm{C}}}^{{Z}_{i}\to Y}\}\)), which indicates a high probability that an indirect link through Zi exists, and condition on these nodes. Moreover, if we know a priori that the network is sparse, that is, indirect connections are rare, we can also condition on Z1, …, Zs one at a time and take the minimum value of \({\varrho }_{{\rm{D}}}^{X\to Y| {Z}_{i}}\) as the final result.
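The two heuristics in this paragraph reduce to a ranking and a minimum. The sketch below assumes that the pairwise MCM indices have already been computed and stored in arrays indexed by candidate mediator; the function names and the numerical values are hypothetical and serve only to show how the two scoring rules can disagree.

```python
import numpy as np

def select_conditioning_nodes(rho_x_to_z, rho_z_to_y, k, rule="sum"):
    """Return the indices of the k mediators Z_i with the largest score.

    rule="sum" scores by rho_C(X->Z_i) + rho_C(Z_i->Y);
    rule="min" scores by min(rho_C(X->Z_i), rho_C(Z_i->Y)).
    """
    a = np.asarray(rho_x_to_z, dtype=float)
    b = np.asarray(rho_z_to_y, dtype=float)
    score = a + b if rule == "sum" else np.minimum(a, b)
    order = np.argsort(-score, kind="stable")      # descending score, ties by index
    return order[:k].tolist()

def sparse_network_index(rho_d_given_each_z):
    """For sparse networks: condition on one Z_i at a time and keep the minimum rho_D."""
    return float(np.min(rho_d_given_each_z))

# Hypothetical MCM indices for three candidate mediators Z_1, Z_2, Z_3
rho_xz = [0.5, 1.0, 0.6]
rho_zy = [0.5, 0.2, 0.3]
print(select_conditioning_nodes(rho_xz, rho_zy, k=1, rule="sum"))   # [1]
print(select_conditioning_nodes(rho_xz, rho_zy, k=1, rule="min"))   # [0]
print(sparse_network_index([0.4, 0.1, 0.3]))                        # 0.1
```

The "min" rule favors mediators for which both legs of the indirect path are strong, whereas the "sum" rule can be dominated by a single strong leg, as the example shows.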

Moreover, the PCM idea can be developed further or varied by replacing the partial correlation with other measures that characterize conditional dependence. For example, the coefficient of determination (denoted r2) is a possible choice: it can serve as an index estimated directly from the cross-map neighbors to parcel out the effect size of each contributing factor. Another heuristic idea is that, for an indirect causal influence X → Z → Y, cutting off either the link X → Z or the link Z → Y is enough to eliminate the whole indirect information flow, which provides another variation of the PCM framework. These variations are left for future work.

Data availability

The data sets generated during and/or analyzed during the current study are all available from the corresponding author on reasonable request. The links/references for the public data sets used and analyzed during the current study are all provided in Supplementary Information.

Code availability

The code for the PCM framework developed in this article, together with usage instructions, is publicly available at https://github.com/Partial-Cross-Mapping.

References

1. Granger, C. W. Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37, 424–438 (1969).

2. Geweke, J. F. Measurement of linear dependence and feedback between multiple time series. J. Am. Stat. Assoc. 77, 304–313 (1982).

3. Geweke, J. F. Measures of conditional linear dependence and feedback between time series. J. Am. Stat. Assoc. 79, 907–915 (1984).

4. Ding, M., Chen, Y. & Bressler, S. L. In Handbook of Time Series Analysis 437–460 (Wiley, Hoboken, 2006).

5. Guo, S., Ladroue, C. & Feng, J. In Frontiers in Computational and Systems Biology 83–111 (Springer, New York, 2010).

6. Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 85, 461 (2000).

7. Vicente, R., Wibral, M., Lindner, M. & Pipa, G. Transfer entropy-a model-free measure of effective connectivity for the neurosciences. J. Comput. Neurosci. 30, 45–67 (2011).

8. Cover, T. M. & Thomas, J. A. Elements of Information Theory (Wiley, Hoboken, 2012).

9. Sun, J., Cafaro, C. & Bollt, E. M. Identifying the coupling structure in complex systems through the optimal causation entropy principle. Entropy 16, 3416–3433 (2014).

10. Cafaro, C., Lord, W. M., Sun, J. & Bollt, E. M. Causation entropy from symbolic representations of dynamical systems. Chaos 25, 043106 (2015).

11. Sun, J., Taylor, D. & Bollt, E. M. Causal network inference by optimal causation entropy. SIAM J. Appl. Dyn. Syst. 14, 73–106 (2015).

12. Duggento, A., Stankovski, T., McClintock, P. V. & Stefanovska, A. Dynamical Bayesian inference of time-evolving interactions: from a pair of coupled oscillators to networks of oscillators. Phys. Rev. E 86, 061126 (2012).

13. Stankovski, T., Duggento, A., McClintock, P. V. & Stefanovska, A. A tutorial on time-evolving dynamical Bayesian inference. Eur. Phys. J. Spec. Top. 223, 2685–2703 (2014).

14. Stankovski, T., Ticcinelli, V., McClintock, P. V. & Stefanovska, A. Coupling functions in networks of oscillators. New J. Phys. 17, 035002 (2015).

15. Stankovski, T., Pereira, T., McClintock, P. V. & Stefanovska, A. Coupling functions: universal insights into dynamical interaction mechanisms. Rev. Mod. Phys. 89, 045001 (2017).

16. Schiff, S. J., So, P., Chang, T., Burke, R. E. & Sauer, T. Detecting dynamical interdependence and generalized synchrony through mutual prediction in a neural ensemble. Phys. Rev. E 54, 6708 (1996).

17. Sugihara, G. et al. Detecting causality in complex ecosystems. Science 338, 496–500 (2012).

18. Ma, H., Aihara, K. & Chen, L. Detecting causality from nonlinear dynamics with short-term time series. Sci. Rep. 4, 7464 (2014).

19. Jiang, J.-J., Huang, Z.-G., Huang, L., Liu, H. & Lai, Y.-C. Directed dynamical influence is more detectable with noise. Sci. Rep. 6, 24088 (2016).

20. Ma, H. et al. Detection of time delays and directional interactions based on time series from complex dynamical systems. Phys. Rev. E 96, 012221 (2017).

21. Harnack, D., Laminski, E., Schünemann, M. & Pawelzik, K. R. Topological causality in dynamical systems. Phys. Rev. Lett. 119, 098301 (2017).

22. Joskow, P. L. & Rose, N. L. In Handbook of Industrial Organization, Vol. 2, 1449–1506 (Elsevier, Amsterdam, 1989).

23. Kamiński, M., Ding, M., Truccolo, W. A. & Bressler, S. L. Evaluating causal relations in neural systems: Granger causality, directed transfer function and statistical assessment of significance. Biol. Cybern. 85, 145–157 (2001).

24. Banos, R. et al. Optimization methods applied to renewable and sustainable energy: a review. Renew. Sust. Energ. Rev. 15, 1753–1766 (2011).

25. Brockmann, D. & Helbing, D. The hidden geometry of complex, network-driven contagion phenomena. Science 342, 1337–1342 (2013).

26. Deyle, E. R. et al. Predicting climate effects on Pacific sardine. Proc. Natl Acad. Sci. USA 110, 6430–6435 (2013).

27. Van Nes, E. H. et al. Causal feedbacks in climate change. Nat. Clim. Change 5, 445 (2015).

28. Tsonis, A. A. et al. Dynamical evidence for causality between galactic cosmic rays and interannual variation in global temperature. Proc. Natl Acad. Sci. USA 112, 3253–3256 (2015).

29. Hirata, Y. et al. Detecting causality by combined use of multiple methods: climate and brain examples. PLoS ONE 11, e0158572 (2016).

30. Ma, H., Leng, S., Aihara, K., Lin, W. & Chen, L. Randomly distributed embedding making short-term high-dimensional data predictable. Proc. Natl Acad. Sci. USA 115, E9994–E10002 (2018).

31. Leng, S., Xu, Z. & Ma, H. Reconstructing directional causal networks with random forest. Chaos 29, 093130 (2019).

32. Guo, S., Seth, A. K., Kendrick, K. M., Zhou, C. & Feng, J. Partial Granger causality-eliminating exogenous inputs and latent variables. J. Neurosci. Methods 172, 79–93 (2008).

33. Frenzel, S. & Pompe, B. Partial mutual information for coupling analysis of multivariate time series. Phys. Rev. Lett. 99, 204101 (2007).

34. Zhao, J., Zhou, Y., Zhang, X. & Chen, L. Part mutual information for quantifying direct associations in networks. Proc. Natl Acad. Sci. USA 113, 5130–5135 (2016).

35. Runge, J., Heitzig, J., Petoukhov, V. & Kurths, J. Escaping the curse of dimensionality in estimating multivariate transfer entropy. Phys. Rev. Lett. 108, 258701 (2012).

36. Schelter, B. et al. Direct or indirect? Graphical models for neural oscillators. J. Physiol. 99, 37–46 (2006).

37. Nawrath, J. et al. Distinguishing direct from indirect interactions in oscillatory networks with multiple time scales. Phys. Rev. Lett. 104, 038701 (2010).

38. Runge, J. Causal network reconstruction from time series: from theoretical assumptions to practical estimation. Chaos 28, 075310 (2018).

39. Runge, J., Petoukhov, V. & Kurths, J. Quantifying the strength and delay of climatic interactions: the ambiguities of cross correlation and a novel measure based on graphical models. J. Clim. 27, 720–739 (2014).

40. Takens, F. In Dynamical Systems and Turbulence, Warwick 1980, 366–381 (Springer, New York, 1981).

41. Mañé, R. In Dynamical Systems and Turbulence, Warwick 1980, 230–242 (Springer, New York, 1981).

42. Kantz, H. & Schreiber, T. Nonlinear Time Series Analysis, Vol. 7 (Cambridge Univ. Press, Cambridge, 2004).

43. Sugihara, G. & May, R. M. Nonlinear forecasting as a way of distinguishing chaos from measurement error in time series. Nature 344, 734 (1990).

44. Bailey, N. T. Statistical Methods in Biology (Cambridge Univ. Press, Cambridge, 1995).

45. Noble, W. S. How does multiple testing correction work? Nat. Biotechnol. 27, 1135 (2009).

46. Shaffer, J. P. Multiple hypothesis testing. Annu. Rev. Psychol. 46, 561–584 (1995).

47. Lancaster, G., Iatsenko, D., Pidde, A., Ticcinelli, V. & Stefanovska, A. Surrogate data for hypothesis testing of physical systems. Phys. Rep. 748, 1–60 (2018).

48. Clemson, P. T. & Stefanovska, A. Discerning non-autonomous dynamics. Phys. Rep. 542, 297–368 (2014).

49. Stark, J. Delay embeddings for forced systems. I. Deterministic forcing. J. Nonlinear Sci. 9, 255–332 (1999).

50. Stark, J., Broomhead, D. S., Davies, M. & Huke, J. Delay embeddings for forced systems. II. Stochastic forcing. J. Nonlinear Sci. 13, 519–577 (2003).

51. Milo, R. et al. Network motifs: simple building blocks of complex networks. Science 298, 824–827 (2002).

52. Alon, U. Network motifs: theory and experimental approaches. Nat. Rev. Genet. 8, 450 (2007).

53. Marbach, D. et al. Revealing strengths and weaknesses of methods for gene network inference. Proc. Natl Acad. Sci. USA 107, 6286–6291 (2010).

54. Marbach, D., Schaffter, T., Mattiussi, C. & Floreano, D. Generating realistic in silico gene networks for performance assessment of reverse engineering methods. J. Comput. Biol. 16, 229–239 (2009).

55. Prill, R. J. et al. Towards a rigorous assessment of systems biology models: the DREAM3 challenges. PLoS ONE 5, e9202 (2010).

56. Schaffter, T., Marbach, D. & Floreano, D. GeneNetWeaver: in silico benchmark generation and performance profiling of network inference methods. Bioinformatics 27, 2263–2270 (2011).

57. Benincà, E., Jöhnk, K. D., Heerkloss, R. & Huisman, J. Coupled predator–prey oscillations in a chaotic food web. Ecol. Lett. 12, 1367–1378 (2009).

58. Benincà, E. et al. Chaos in a long-term experiment with a plankton community. Nature 451, 822 (2008).

59. Neutel, A.-M., Heesterbeek, J. A. & de Ruiter, P. C. Stability in real food webs: weak links in long loops. Science 296, 1120–1123 (2002).

60. Lee, B.-J., Kim, B. & Lee, K. Air pollution exposure and cardiovascular disease. Toxicol. Res. (Seoul., Repub. Korea) 30, 71 (2014).

61. Wong, T. W. et al. Air pollution and hospital admissions for respiratory and cardiovascular diseases in Hong Kong. Occup. Environ. Med. 56, 679–683 (1999).

62. Fan, J. & Zhang, W. Statistical estimation in varying coefficient models. Ann. Stat. 27, 1491–1518 (1999).

63. Milojevic, A. et al. Short-term effects of air pollution on a range of cardiovascular events in England and Wales: case-crossover analysis of the MINAP database, hospital admissions and mortality. Heart 100, 1093–1098 (2014).

64. Baba, K., Shibata, R. & Sibuya, M. Partial correlation and conditional correlation as measures of conditional independence. Aust. N. Z. J. Stat. 46, 657–664 (2004).

Acknowledgements

W.L. is supported by the National Key R&D Program of China (No. 2018YFC0116600), by the National Natural Science Foundation of China (Nos 11925103 and 61773125), and by the STCSM (Nos 18DZ1201000, 19511132000, and 2018SHZDZX01). L.N.C. is supported by the National Key R&D Program of China (No. 2017YFA0505500), by the Strategic Priority Project of CAS (No. XDB38000000), by the Natural Science Foundation of China (Nos 31771476 and 31930022), and by Shanghai Municipal Science and Technology Major Project (No. 2017SHZDZX01). S.Y.L. and K.A. are supported by JSPS KAKENHI (No. JP15H05707) and by AMED (No. JP20dm0307009). Y.-C.L. is supported by ONR (No. N00014-16-1-2828). H.F.M. is supported by the National Key R&D Program of China (No. 2018YFA0801100) and the National Natural Science Foundation of China (No. 11771010). J.K. is supported by the project RF Government Grant 075-15-2019-1885.

Author information

Contributions

W.L. and L.N.C. conceived the idea; S.Y.L., H.F.M., W.L., K.A., and L.N.C. designed the research; S.Y.L., H.F.M., and W.L. performed the research; all authors, S.Y.L., H.F.M., J.K., Y.-C.L., W.L., K.A., and L.N.C., analyzed the data and wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information: Nature Communications thanks Aneta Stefanovska and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Leng, S., Ma, H., Kurths, J. et al. Partial cross mapping eliminates indirect causal influences. Nat Commun 11, 2632 (2020). https://doi.org/10.1038/s41467-020-16238-0

DOI: https://doi.org/10.1038/s41467-020-16238-0
