Abstract

Objectives

To evaluate the accuracy of virtual orthodontic setups in simulating treatment outcomes and to determine whether virtual setups should be used in orthodontic practice and education.

Materials and Methods

A systematic search was performed in five electronic databases (PubMed, Scopus, Embase, ProQuest Dissertations & Theses Global, and Google Scholar) for studies published from January 2000 to November 2022 to identify all potentially relevant evidence. The reference lists of identified articles were also screened for relevant literature. The last search was conducted on 30 November 2022.

Results

This systematic review included twenty-one articles, all of which were assessed as having a moderate risk of bias. The extracted data were categorized into three groups: (1) virtual setup and manual setup; (2) virtual setup and actual outcomes in clear aligner treatment; and (3) virtual setup and actual outcomes in fixed appliance treatment. There appeared to be statistically significant differences between virtual setups and actual treatment outcomes; however, the discrepancies were clinically acceptable.

Conclusion

This systematic review supports the use of orthodontic virtual setups, which showed clinically acceptable accuracy, and they should therefore be implemented in orthodontic practice and education. However, high-quality research is required to confirm the accuracy of virtual setups in simulating treatment outcomes.


Introduction

Orthodontic practice requires proper diagnosis and treatment planning to achieve the expected treatment outcomes. However, especially in complicated cases such as asymmetric extraction or multiple missing teeth, there is often more than one possible treatment option [1, 2]. These options should therefore be simulated to predict their expected outcomes. The traditional dental setup simulates orthodontic tooth movement by sectioning each tooth from a plaster model, moving the teeth to favorable positions, and then fixing them in place with wax [2,3,4]. However, this traditional setup requires considerable time and effort to complete.

Virtual orthodontic setups, or tooth movement simulations, have recently been adopted in orthodontics to overcome the challenges of the traditional approach. Like other digital orthodontic tools, virtual dental setups can be considered storage-space-friendly [5], damage-free [6, 7], and user-friendly [5, 7]. In addition, virtual setups allow several possible treatment plans for an orthodontic problem (e.g., extraction, interproximal reduction, or expansion to gain space for resolving crowding) to be simulated and their results visualized [8,9,10]. Virtual setups can also be superimposed on the initial model, so that the precise amount of tooth movement can be analyzed for each treatment option [11, 12]. Given these favorable characteristics, virtual orthodontic setups should be considered as a replacement for traditional plaster model setups.

Virtual orthodontic setups can support not only patient care but also orthodontic education. As there is evidence that visualizing tooth movement simulations can enhance communication during the discussion of orthodontic treatment plans [9, 11, 13], virtual setups could support discussions between orthodontic residents and their clinical advisors about the treatment outcomes of various orthodontic cases. Virtual setups can also be used in case conferences, where orthodontists present their treatment to colleagues for educational purposes [7]. With virtual setups, orthodontic patients with various craniofacial problems can be simulated, allowing orthodontic residents to gain experience and improve their cognitive competence in a safe environment through computer-generated tooth movement [14]. Consequently, virtual setups can play an important role as a technology-enhanced learning tool in orthodontic education.

Despite the advantages of virtual orthodontic setups, their precision and reliability appear to be a concern [15, 16]. Errors could arise at any step, including obtaining the digital model by either intraoral or plaster model scanning [17, 18], importing files into various simulation software [7, 19, 20], performing virtual tooth segmentation [21,22,23], moving teeth according to the optimal treatment plan in different software [24, 25], and measuring tooth movement [12, 26]. Although several studies have evaluated the reliability and accuracy of virtual orthodontic setups, this concern has not yet been comprehensively reviewed. As virtual orthodontic setups and simulation software have potential for clinical and educational purposes, this systematic review was conducted to evaluate their accuracy in simulating treatment outcomes.

Materials and methods

Review design

A systematic review was chosen to evaluate the accuracy of digital orthodontic setups by comparing their tooth movement simulations with the outcomes of actual treatment or manual setups, with the purpose of determining whether they should be used for clinical and educational purposes. This methodology allows researchers to analyze a body of evidence on a topic of interest. Systematic reviews apply scientific strategies to minimize potential bias when identifying all relevant evidence on a selected topic and when critically appraising and synthesizing it into a single comprehensive report [27,28,29]. A systematic review involves seven consecutive stages [27]: (1) identifying the research questions or purposes; (2) defining the research protocol; (3) systematically searching for relevant literature according to the inclusion and exclusion criteria; (4) extracting data into organized categories; (5) assessing the quality of all retrieved literature; (6) collating, summarizing, and reporting the results; and (7) interpreting the results. Systematic reviews can therefore provide valid conclusions and a valid evidence base for a selected topic.

Search strategy

The systematic search was performed across five electronic databases: PubMed, Embase, Scopus, ProQuest Dissertations & Theses (PQDT) Global, and Google Scholar. Google Scholar was included to capture gray literature on orthodontic virtual setups wherever possible. The reference lists of identified articles were also screened for relevant literature. Iterative searches were performed to refine the search strategy and search terms and so ensure the robustness of this review [30]. The search terms were developed according to the PICO approach and are detailed in Table 1. However, search terms for the comparison component were ultimately omitted to ensure that as many relevant articles as possible were identified. The last search for this systematic review was performed on 30 November 2022.

Inclusion and exclusion criteria

All types of empirical studies on the accuracy of virtual setups in orthodontics published from January 2000 to November 2022 were eligible for this review. Studies were excluded if they were not relevant to orthodontic tooth movement, if they did not report the outcomes of an accuracy assessment, or if they were not available in full text or in English. The inclusion and exclusion criteria are presented in Table 2.

Article selection

Systematic searches and article screening were conducted independently by two researchers (BS and KS), who also independently confirmed the eligibility of the identified articles after screening titles, abstracts, and full texts. Any disagreements on article selection were resolved through discussion with a third researcher (RC), with reference to the inclusion and exclusion criteria.

Risk of bias assessment for included articles

The strength of a systematic review depends on the inclusion of high-quality studies, so assessing the quality of the included articles is essential. Two researchers (BS and KS) independently assessed the quality and strength of evidence of the included articles using the grading protocol of the Swedish Council on Technology Assessment in Health Care (SBU) and the Centre for Reviews and Dissemination (CRD) [31], which grades evidence into three levels, as shown in Table 3. As in the article selection process, disagreements between the two researchers were resolved through discussion with the third researcher (RC). The appraisal of the included articles informed the level of evidence of this systematic review according to the SBU and CRD protocol (Table 4). This tool is user-friendly and suitable for a basic appraisal of the evidence in a systematic review [32]; it was therefore used as a checklist for determining the quality of the articles included in this review.

Risk of bias in the included articles was assessed using the Cochrane Collaboration’s ‘Risk Of Bias In Non-randomized Studies of Interventions (ROBINS-I)’ tool [33]. Because it is a domain-based assessment, this tool can be applied more widely than tools that focus only on clinical treatment interventions in experimental healthcare research. ROBINS-I assesses the risk of bias of each study in seven domains: bias due to confounding, bias in selection of participants into the study, bias in classification of interventions, bias due to deviations from intended interventions, bias due to missing data, bias in measurement of outcomes, and bias in selection of the reported result. Each included article was judged to be at low, moderate, high, or unclear risk of bias. The assessment indicated whether the evidence included in this systematic review was robust, in terms of both the research methodology and the reporting of the included articles.
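ROBINS-I guidance derives a study's overall judgment from its domain-level judgments: broadly, the overall risk of bias corresponds to the most severe rating given to any domain, so a study rated low or moderate in every domain, with at least one moderate rating, is at moderate risk overall. The Python sketch below illustrates that rule. It is an illustration only, not part of the published tool: it uses this review's labels (low, moderate, high, unclear) rather than the tool's own scale (low, moderate, serious, critical, no information), and the handling of "unclear" domains is an assumption.

```python
from __future__ import annotations

from enum import IntEnum


class Risk(IntEnum):
    """Ordered risk-of-bias levels, using the labels reported in this review."""
    LOW = 0
    MODERATE = 1
    HIGH = 2


# The seven ROBINS-I bias domains.
DOMAINS = (
    "confounding",
    "selection of participants",
    "classification of interventions",
    "deviations from intended interventions",
    "missing data",
    "measurement of outcomes",
    "selection of the reported result",
)


def overall_risk(judgments: dict[str, str]) -> str:
    """Return the overall judgment as the most severe domain-level judgment.

    Assumption for this sketch: an 'unclear' domain makes the overall result
    'unclear' unless another domain is already rated 'high'.
    """
    missing = set(DOMAINS) - set(judgments)
    if missing:
        raise ValueError(f"missing domains: {sorted(missing)}")
    levels = [judgments[d] for d in DOMAINS]
    rated = [Risk[level.upper()] for level in levels if level != "unclear"]
    if Risk.HIGH in rated:
        return "high"
    if "unclear" in levels:
        return "unclear"
    return max(rated).name.lower()


# Hypothetical article: low risk in every domain except confounding.
article = {domain: "low" for domain in DOMAINS}
article["confounding"] = "moderate"
print(overall_risk(article))  # moderate
```

Ranking the levels with an `IntEnum` keeps the "worst domain wins" rule to a single `max` call.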

Data extraction and synthesis

Data from the included articles were extracted into the following categories: type of virtual setup, research objectives, methodology, outcome measurement, key findings, author conclusions, and risk of bias assessment (Tables S1–S3). The data were then synthesized using a narrative approach, with the themes of this review constructed from the extracted information.

Results

Articles identified from the search

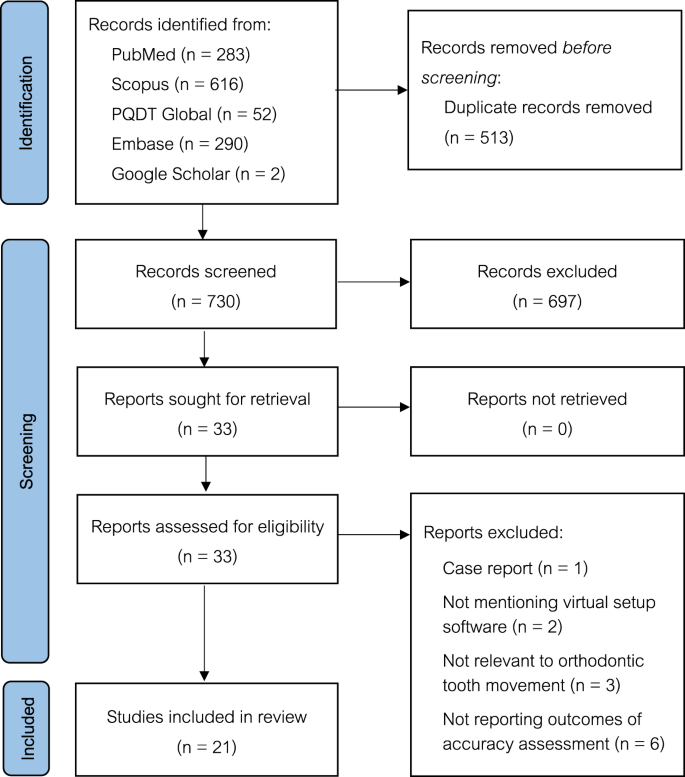

As presented in the PRISMA flow chart for study selection (Fig. 1), the electronic searches yielded 1241 articles from four databases (PubMed = 283, Scopus = 616, Embase = 290, and PQDT Global = 52), and two studies were identified from Google Scholar. No additional studies were identified from the reference lists of included articles. After 513 duplicates were removed, 730 titles and abstracts were screened against the inclusion and exclusion criteria. Following this initial screening, 33 articles were selected for full-text review, 12 of which were excluded because they were case reports, did not mention the virtual setup software, did not compare virtual setup with another technique, were not relevant to orthodontic tooth movement, or did not report the outcomes of an accuracy assessment. Consequently, 21 full-text articles were included in this systematic review.
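The study-selection counts reported above can be checked with a few lines of Python. This is a minimal sketch: the class name `PrismaFlow` and its fields are hypothetical, the two Google Scholar records are treated as "other sources" following the wording of the text, and the number of records excluded at title and abstract screening (730 minus 33) is derived here rather than reported in the text.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PrismaFlow:
    """Hypothetical container for the counts in a PRISMA flow chart."""
    database_hits: dict[str, int]
    other_sources: int
    duplicates_removed: int
    full_text_assessed: int
    full_text_excluded: int

    def identified(self) -> int:
        return sum(self.database_hits.values()) + self.other_sources

    def screened(self) -> int:
        return self.identified() - self.duplicates_removed

    def excluded_at_screening(self) -> int:
        # Derived value: records removed at title/abstract screening.
        return self.screened() - self.full_text_assessed

    def included(self) -> int:
        return self.full_text_assessed - self.full_text_excluded


flow = PrismaFlow(
    database_hits={"PubMed": 283, "Scopus": 616, "Embase": 290, "PQDT Global": 52},
    other_sources=2,  # Google Scholar
    duplicates_removed=513,
    full_text_assessed=33,
    full_text_excluded=12,
)
print(sum(flow.database_hits.values()), flow.identified(), flow.screened(),
      flow.excluded_at_screening(), flow.included())
# 1241 1243 730 697 21
```

Each stage is computed from the previous one, so a transcription error in any reported count shows up as a mismatch further down the flow.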

Quality of the articles included in this review

When the strength of the included evidence was evaluated with the SBU protocol, three articles reporting prospective clinical trials were considered to provide evidence of high value (Grade A) [34,35,36]. The other included articles, being retrospective studies, provided evidence of moderate value (Grade B). The overall level of evidence of this systematic review was therefore considered strong, with three articles of Grade A evidence and the remainder of Grade B. In the ROBINS-I assessment, all included articles were rated at low or moderate risk of bias in every domain, so each was judged to be at moderate overall risk of bias. Although no included study was rated as high quality (low risk of bias), the quality of the included evidence was not considered problematic, given that nearly all of the studies were non-randomized. The risks of bias arose mostly from confounding factors in the research designs, such as different setup providers [35, 37, 38], no mention of the setup provider [35, 39,40,41,42], varying degrees of malocclusion at the beginning of treatment [39, 42,43,44,45], and the use of additional mechanics [44,45,46,47]. In addition, outcome assessors were not blinded in 18 of the 21 included articles [25, 34,35,36,37,38, 40,41,42,43,44,45, 47,48,49,50,51,52]. Test-retest reliability was not assessed to confirm the reproducibility and reliability of the measurements in five articles [25, 34, 40, 41, 45]. Only one article assessed interrater reliability to confirm consistency between two assessors [44].

Study design of included articles

Most of the included studies were non-randomized retrospective studies (n = 17), with the exception of one prospective randomized clinical trial [34], two prospective non-randomized clinical studies [35, 36], and one retrospective randomized study [39]. Sample sizes ranged from ten to ninety-four, and the samples covered various orthodontic problems ranging from mild to severe malocclusion. Of the twenty-one studies included in this systematic review, three compared treatment outcomes between manual and virtual setups [37, 39, 48]. The remaining 18 articles compared virtual setups with actual treatment outcomes: ten evaluated the accuracy of virtual setups for clear aligners [25, 34,35,36, 38, 40, 41, 43, 49, 50], and eight evaluated their accuracy for fixed orthodontic appliances [42, 44,45,46,47, 51,52,53].

Virtual setup software used in included articles

Several software packages were used for virtual setups in the included articles. ClinCheck was the most frequently used, appearing in six articles [25, 34, 35, 40, 43, 49], followed by OrthoAnalyzer in five [37,38,39, 44, 47], SureSmile in three [42, 45, 53], and Maestro 3D in two [50, 51]. The other tooth movement simulation programs were 3Txer [48], Airnivol [36], Flash [25], OrthoDS 4.6 [41], eXceed [52], and uLab [46], each of which was used in only one article. In addition, four studies integrated cone-beam computed tomography (CBCT) data into the tooth movement simulation software to provide more precise information referenced to the patient's face and skull [44, 47, 49, 51].

Outcome measurements

To measure the accuracy of tooth movement simulation, the outcomes of virtual setups were compared with those of manual setups or actual treatment, and the differences between the two approaches were assessed in terms of linear intra-arch and interarch dimensions and angular dimensions. The comparisons were performed by digital software measurement [36,37,38, 40, 46, 50, 53], manual measurement [39, 48], or best-fit superimposition [25, 34, 35, 41,42,43,44,45, 47, 49, 51, 52]. Seven included studies explicitly defined threshold values for discrepancies between virtual setups and actual treatment with reference to the American Board of Orthodontics (ABO) model grading system [25, 34, 42, 43, 45, 47, 52]; in these articles, discrepancies greater than 0.5 mm for linear movements and greater than 2 degrees for angular movements were considered clinically significant. However, Smith et al. [53] considered a discrepancy of up to 2.5 degrees in tooth tip and torque to be a clinically acceptable variation.
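Several included studies measured discrepancies by superimposing the achieved model onto the virtual setup with a best-fit method and then comparing the differences with fixed thresholds. The Python sketch below illustrates that workflow under stated assumptions: corresponding landmark coordinates (in mm) are assumed to have been identified already, the rigid least-squares (Kabsch) fit stands in for the best-fit algorithms of the commercial software used in the included studies, and all function names are hypothetical. The angle function measures only the overall change in a tooth's long axis; splitting it into tip and torque components would require a tooth-specific reference frame that is not modeled here.

```python
import numpy as np

LINEAR_THRESHOLD_MM = 0.5    # ABO-based threshold used in seven included studies
ANGULAR_THRESHOLD_DEG = 2.0  # Smith et al. [53] instead accepted up to 2.5 degrees


def best_fit_transform(source, target):
    """Rigid least-squares (Kabsch) transform mapping source points onto target."""
    source, target = np.asarray(source, float), np.asarray(target, float)
    src_c, tgt_c = source.mean(axis=0), target.mean(axis=0)
    h = (source - src_c).T @ (target - tgt_c)  # 3x3 cross-covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = 1.0 if np.linalg.det(vt.T @ u.T) > 0 else -1.0  # avoid a reflection
    rot = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return rot, tgt_c - rot @ src_c


def linear_discrepancies(achieved, predicted):
    """Per-landmark distance (mm) after superimposing achieved onto predicted."""
    rot, shift = best_fit_transform(achieved, predicted)
    aligned = np.asarray(achieved, float) @ rot.T + shift
    return np.linalg.norm(aligned - np.asarray(predicted, float), axis=1)


def axis_angle_deg(axis_a, axis_b):
    """Angle (degrees) between two tooth long-axis vectors."""
    a, b = np.asarray(axis_a, float), np.asarray(axis_b, float)
    cos = a @ b / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def clinically_significant(discrepancy, threshold):
    """True when a discrepancy exceeds the chosen clinical threshold."""
    return abs(discrepancy) > threshold


# Hypothetical example: the achieved long axis is 2.2 degrees off the predicted one.
tilt = np.radians(2.2)
angle = axis_angle_deg([0, 0, 1], [0, np.sin(tilt), np.cos(tilt)])
print(clinically_significant(angle, ANGULAR_THRESHOLD_DEG))  # True
print(clinically_significant(angle, 2.5))                    # False
```

The example shows why the choice of threshold matters: the same 2.2-degree discrepancy is clinically significant under the ABO-based 2-degree criterion but acceptable under the 2.5-degree criterion of Smith et al.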

Accuracy of virtual setup

The accuracy of tooth movement simulations can be categorized into three groups according to the comparator: (1) the accuracy of virtual setups in simulating treatment outcomes compared with manual setups, (2) the accuracy of virtual setups in simulating the outcomes of clear aligner treatment, and (3) the accuracy of virtual setups in simulating the outcomes of fixed appliance treatment.

The accuracy of virtual setup in simulating treatment outcomes compared with manual setup

Three articles compared treatment outcomes between virtual and manual setups [37, 39, 48]. Two supported the accuracy of tooth movement simulation using OrthoAnalyzer and 3Txer software [37, 48], as virtual and manual setups provided comparable measurements of treatment outcomes. However, one article reported statistically significant differences in tooth movement simulation between virtual and conventional setups [39]: the printed virtual setup was less accurate than the conventional setup, with the small differences in accuracy attributed to printing technology, tooth collisions, and software limitations. The data extracted from the articles in this group are presented in Table S1.

The accuracy of virtual setup in simulating treatment outcomes of clear aligner treatment

Ten articles compared treatment outcomes between virtual setups and clear aligner treatment [25, 34,35,36, 38, 40, 41, 43, 49, 50], all of them in non-extraction, non-surgical cases. ClinCheck was the most frequently used software for clear aligner prediction [25, 34, 35, 40, 43, 49]; the other virtual setups were Flash [25], OrthoAnalyzer [38], OrthoDS 4.6 [41], Airnivol [36], and Maestro 3D [50]. There appeared to be discrepancies between the tooth movement simulations from these virtual setups and actual treatment outcomes.

All included studies demonstrated statistically significant differences between predicted and achieved tooth positions [25, 34, 35, 40, 43, 49]. Accuracy appeared to be higher in linear than in angular dimensions [25, 34] and higher in the transverse direction than in the vertical and sagittal directions [35, 49]. The most accurately predicted movement was tipping, especially of the maxillary and mandibular anterior teeth, followed by torque and rotation [36, 38, 41, 50]. Sorour et al. [25] also compared ClinCheck and Flash and found no clinically or statistically significant differences in accuracy and efficacy between the Invisalign and Flash aligner systems. The data extracted from the articles in this group are presented in Table S2.

The accuracy of virtual setup in simulating treatment outcomes of fixed appliance treatment

Eight articles compared treatment outcomes between virtual setups and fixed appliance treatment [42, 44,45,46,47, 51,52,53]. The tooth simulation software used in these articles included SureSmile [42, 45, 53], OrthoAnalyzer [44, 47], uLab [46], Maestro 3D [51], and eXceed [52]. The patients in these studies had more severe orthodontic problems than those in the clear aligner studies: five articles included extraction cases [42, 45, 46, 52, 53], while three evaluated orthodontic treatment combined with orthognathic surgery [44, 47, 51]. Only one article reported that an indirect bonding technique was used for orthodontic bracket placement [45].

The degree of accuracy varied depending on the software, tooth position, and type of tooth movement. SureSmile appeared to be more accurate in the mesiodistal and vertical directions than in the buccolingual direction, and there seemed to be clinically significant discrepancies in angular movements (tip and torque) for nearly all teeth [45, 53]. Its precision was highest for translational and rotational movements of the incisors and decreased from the anterior to the posterior region [42]. Studies of OrthoAnalyzer demonstrated a degree of accuracy similar to that of SureSmile. Although there were statistically significant discrepancies in tooth movement, they were not clinically significant, supporting its use in treatment plan discussions [44]. However, it may be less accurate in more complicated cases, especially for rotational and translational movements [47]. Studies of uLab [46], Maestro 3D [51], and eXceed [52] also reported statistically significant discrepancies in tooth movement simulation; however, these programs could still be used for treatment planning and outcome visualization because the discrepancies were clinically acceptable. The data extracted from the articles in this group are presented in Table S3.

Discussion

This systematic review was designed to include research published between January 2000 and November 2022. However, no identified article published between 2000 and 2012 met the inclusion and exclusion criteria. From 2013 to 2017, research focused on comparing the accuracy of tooth movement simulations with manual setups [37, 48] and with the actual outcomes of fixed appliance treatment [42, 45, 51, 53]. From 2018 to 2022, the research focus shifted to comparisons between virtual setups and clear aligner treatment, with seven of the ten aligner articles published in this period [25, 34,35,36, 40, 49, 50]. More recent publications have implemented CBCT superimposition to visualize roots and provide additional skeletal references [41, 44, 47, 49, 51, 53]. This suggests a shift in the use of virtual setups over this 10-year period, which could be driven by recent improvements in, and the increasing affordability of, tooth movement simulation software.

The accuracy of virtual setups in simulating orthodontic tooth movement reported in the included articles can be considered acceptable. The included articles demonstrated statistically significant differences between virtual setups and actual treatment outcomes, but the discrepancies were clinically acceptable in non-extraction and non-surgical cases. Virtual setups tended to be more predictable for translation [25, 34, 44, 45, 47] and tipping movements [38, 41, 49, 51]. This could result from the flexibility of clear aligner materials, which may make torque movement difficult to control. For clear aligners, simulated outcomes were more accurate in the transverse direction than in the vertical and sagittal directions [35, 49]. This could be because orthodontic treatment plans generally minimize changes in arch width to help achieve stable outcomes. The less accurate vertical and sagittal predictions could result from aligner thickness and improper anchorage control, respectively. In addition, because the tooth movement methods of the included articles were diverse, the accuracy of virtual setups was analyzed in three groups. However, malocclusions treated with fixed appliances tended to be more severe than those treated with clear aligners. As simulating more complicated orthodontic problems may introduce more inaccuracies, outcomes were difficult to compare across groups, especially between fixed appliances and clear aligners.

Several factors may cause tooth movement in virtual setups to differ from that in actual treatment. As mentioned, only a few included articles combined virtual setups with CBCT, so root movements were not simulated in most studies. Unrealistic tooth movements could therefore be simulated, as surrounding tissues and biological limitations might not be considered [39, 48, 54]. In other words, it should be emphasized that computer simulations place fewer restrictions on tooth movement than biology does. Bone density and root morphology could also affect orthodontic tooth movement [25, 36, 38, 43,44,45, 50]. Measurements extending into gingival areas also could not be assessed accurately because of soft tissue distortion in virtual setups [37, 39]. In addition, after digital segmentation, individually sectioned teeth in virtual setups appear smaller in mesiodistal width because the inner proximal parts of the model are hollow [48]. These limitations of virtual setups should be considered when performing tooth movement simulation.

Based on the findings of this systematic review, virtual setups should be implemented to simulate treatment plans in orthodontic practice. Tooth movement simulation allows orthodontists to review their treatment plans with adequate precision in patients with mild to moderate malocclusions [34, 35, 38, 42,43,44, 46, 50,51,52,53]. However, an actual treatment outcome can differ from a simulated outcome because of a number of factors [25, 36, 38, 43,44,45, 50]. Patient compliance could also affect the treatment outcome [34, 36, 44, 53]. Therefore, orthodontists should acknowledge the limitations of virtual setups when performing tooth movement simulation.

In addition to their advantages in clinical practice, virtual setups can support orthodontic education. They can provide a safe learning environment in which orthodontic residents repeatedly perform digital tooth movements and predict treatment outcomes in various orthodontic cases, with or without supervision, until they are competent for clinical practice. There is evidence of increasing use of tooth movement simulations in orthodontic education, where residents may use virtual setups to present and discuss their treatment plans with clinical advisors [14, 55, 56]. Moreover, virtual setups could be used in case conferences where orthodontic professionals discuss various cases with their colleagues [7, 11]. Therefore, given their clinically acceptable discrepancies, virtual setups should be used for educational purposes.

Most of the articles included in this systematic review were retrospective studies, and none was considered high quality. In addition, the research methodology of the included articles was heterogeneous, e.g., in tooth simulation software, orthodontic appliance, severity of malocclusion, and outcome measurements, which could influence the accuracy of virtual setups. As virtual setup is an operator-dependent procedure, the results of the included articles could be affected by the different orthodontists or laboratory technicians who performed the tooth movement simulations. Consequently, additional high-quality research with robust protocols is required to enable a meta-analysis confirming the accuracy of virtual setups. Further research investigating the effectiveness and feasibility of virtual setups in orthodontic practice and education should also be considered.

Conclusions

The available evidence demonstrates the clinically acceptable accuracy of orthodontic virtual setups in simulating treatment outcomes, especially in cases requiring less complex tooth movement. Therefore, virtual setups are suitable for implementation in both orthodontic practice and education, bearing in mind their limitations and discrepancies. However, because all included articles were at moderate risk of bias, further high-quality studies with homogeneous research and clinical protocols are required to confirm the accuracy and effectiveness of virtual setups in simulating treatment outcomes for different orthodontic problems.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Change history

12 September 2023

A Correction to this paper has been published: https://doi.org/10.1038/s41405-023-00169-1

References

Kesling HD. The diagnostic setup with consideration of the third dimension. Am J Orthod. 1956;42:740–8. https://doi.org/10.1016/0002-9416(56)90042-2.

Mattos CT, Gomes AC, Ribeiro AA, Nojima LI, Nojima Mda C. The importance of the diagnostic setup in the orthodontic treatment plan. Int J Orthod Milwaukee. 2012;23:35–9.

Araújo TM, Fonseca L, Caldas LD, Costa-Pinto RA. Preparation and evaluation of orthodontic setup. Dent Press J Orthod. 2012;17:146–65.

Kim S-H, Park Y-G. Easy wax setup technique for orthodontic diagnosis-a time-saving setup method is presented. J Clin Orthod. 2000;34:140–4.

Rheude B, Sadowsky PL, Ferriera A, Jacobson A. An evaluation of the use of digital study models in orthodontic diagnosis and treatment planning. Angle Orthod. 2005;75:300–4. https://doi.org/10.1043/0003-3219(2005)75[300:AEOTUO]2.0.CO;2.

Polido W. Digital impressions and handling of digital models: the future of dentistry. Dent Press J Orthod. 2010;15:18–22. https://doi.org/10.1590/S2176-94512010000500003.

Peluso MJ, Josell SD, Levine SW, Lorei BJ. Digital models: an introduction. Semin Orthod. 2004;10:226–38. https://doi.org/10.1053/j.sodo.2004.05.007.

Macchi A, Carrafiello G, Cacciafesta V, Norcini A. Three-dimensional digital modeling and setup. Am J Orthod Dentofac Orthop. 2006;129:605–10. https://doi.org/10.1016/j.ajodo.2006.01.010.

Palmer NG, Yacyshyn JR, Northcott HC, Nebbe B, Major PW. Perceptions and attitudes of Canadian orthodontists regarding digital and electronic technology. Am J Orthod Dentofac Orthop. 2005;128:163–7. https://doi.org/10.1016/j.ajodo.2005.02.015.

Ho CT, Lin HH, Lo LJ. Intraoral scanning and setting up the digital final occlusion in three-dimensional planning of orthognathic surgery: its comparison with the dental model approach. Plast Reconstr Surg. 2019;143:1027e–36e. https://doi.org/10.1097/PRS.0000000000005556.

Hou D, Capote R, Bayirli B, Chan DCN, Huang G. The effect of digital diagnostic setups on orthodontic treatment planning. Am J Orthod Dentofac Orthop. 2020;157:542–9. https://doi.org/10.1016/j.ajodo.2019.09.008.

Camardella LT, Vilella OV, Breuning KH, de Assis Ribeiro Carvalho F, Kuijpers-Jagtman AM, Ongkosuwito EM. The influence of the model superimposition method on the assessment of accuracy and predictability of setup models. J Orofac Orthop. 2021;82:175–86. https://doi.org/10.1007/s00056-020-00268-w.

Fleming PS, Marinho V, Johal A. Orthodontic measurements on digital study models compared with plaster models: a systematic review. Orthod Craniofac Res. 2011;14:1–16. https://doi.org/10.1111/j.1601-6343.2010.01503.x.

Sipiyaruk K, Kaewsirirat P, Santiwong P. Technology-enhanced simulation-based learning in orthodontic education: a scoping review. Dent Press J Orthod. 2023;28:e2321354.

Grauer D, Proffit WR. Accuracy in tooth positioning with a fully customized lingual orthodontic appliance. Am J Orthod Dentofac Orthop. 2011;140:433–43. https://doi.org/10.1016/j.ajodo.2011.01.020.

Fabels LN, Nijkamp PG. Interexaminer and intraexaminer reliabilities of 3-dimensional orthodontic digital setups. Am J Orthod Dentofac Orthop. 2014;146:806–11. https://doi.org/10.1016/j.ajodo.2014.09.008.

Park JM, Choi SA, Myung JY, Chun YS, Kim M. Impact of orthodontic brackets on the intraoral scan data accuracy. Biomed Res Int. 2016;2016:5075182. https://doi.org/10.1155/2016/5075182.

Pellitteri F, Albertini P, Vogrig A, Spedicato GA, Siciliani G, Lombardo L. Comparative analysis of intraoral scanners accuracy using 3D software: an in vivo study. Prog Orthod. 2022;23:21. https://doi.org/10.1186/s40510-022-00416-5.

Martin CB, Chalmers EV, McIntyre GT, Cochrane H, Mossey PA. Orthodontic scanners: what’s available? J Orthod. 2015;42:136–43. https://doi.org/10.1179/1465313315Y.0000000001.

Woo H, Jha N, Kim Y-J, Sung S-J. Evaluating the accuracy of automated orthodontic digital setup models. Semin Orthod. 2022. https://doi.org/10.1053/j.sodo.2022.12.010.

Im J, Kim JY, Yu HS, Lee KJ, Choi SH, Kim JH, et al. Accuracy and efficiency of automatic tooth segmentation in digital dental models using deep learning. Sci Rep. 2022;12:9429. https://doi.org/10.1038/s41598-022-13595-2.

Sinthanayothin C, Tharanont W. Orthodontics treatment simulation by teeth segmentation and setup. In Proceedings of the 2008 5th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Vol. 1. 2008. pp. 81–84. https://doi.org/10.1109/ECTICON.2008.4600377.

Sehrawat S, Kumar A, Grover S, Dogra N, Nindra J, Rathee S, et al. Study of 3D scanning technologies and scanners in orthodontics. Mater Today Proc. 2022;56:186–93. https://doi.org/10.1016/j.matpr.2022.01.064.

Dhingra A, Palomo JM, Stefanovic N, Eliliwi M, Elshebiny T. Comparing 3D tooth movement when implementing the same virtual setup on different software packages. J Clin Med. 2022;11:5351. https://doi.org/10.3390/jcm11185351.

Sorour H, Fadia D, Ferguson DJ, Makki L, Adel S, Hansa I, et al. Efficacy of anterior tooth simulations with clear aligner therapy: a retrospective cohort study of Invisalign and Flash aligner systems. Open Dent J. 2022;16. https://doi.org/10.2174/18742106-V16-E2205110.

Okunami TR, Kusnoto B, BeGole E, Evans CA, Sadowsky C, Fadavi S. Assessing the American Board of Orthodontics objective grading system: digital vs plaster dental casts. Am J Orthod Dentofac Orthop. 2007;131:51–6. https://doi.org/10.1016/j.ajodo.2005.04.042.

Wright RW, Brand RA, Dunn W, Spindler KP. How to write a systematic review. Clin Orthop Relat Res. 2007;455:23–9. https://doi.org/10.1097/BLO.0b013e31802c9098.

Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement. Onkologie. 2000;23:597–602. https://doi.org/10.1159/000055014.

Cook DJ, Sackett DL, Spitzer WO. Methodologic guidelines for systematic reviews of randomized control trials in health care from the Potsdam Consultation on Meta-Analysis. J Clin Epidemiol. 1995;48:167–71. https://doi.org/10.1016/0895-4356(94)00172-m.

Snyder H. Literature review as a research methodology: an overview and guidelines. J Bus Res. 2019;104:333–9. https://doi.org/10.1016/j.jbusres.2019.07.039.

Rossini G, Parrini S, Castroflorio T, Deregibus A, Debernardi CL. Efficacy of clear aligners in controlling orthodontic tooth movement: a systematic review. Angle Orthod. 2014;85:881–9. https://doi.org/10.2319/061614-436.1.

Bondemark L, Holm AK, Hansen K, Axelsson S, Mohlin B, Brattström V, et al. Long-term stability of orthodontic treatment and patient satisfaction: a systematic review. Angle Orthod. 2007;77:181–91. https://doi.org/10.2319/011006-16r.1.

Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928. https://doi.org/10.1136/bmj.d5928.

Al-Nadawi M, Kravitz ND, Hansa I, Makki L, Ferguson DJ, Vaid NR. Effect of clear aligner wear protocol on the efficacy of tooth movement. Angle Orthod. 2021;91:157–63. https://doi.org/10.2319/071520-630.1.

Lione R, Paoloni V, De Razza FC, Pavoni C, Cozza P. Analysis of maxillary first molar derotation with invisalign clear aligners in permanent dentition. Life (Basel). 2022;12:1495. https://doi.org/10.3390/life12101495.

D’Antò V, Bucci R, De Simone V, Ghislanzoni LH, Michelotti A, Rongo R. Evaluation of tooth movement accuracy with aligners: a prospective study. Materials. 2022;15:2646. https://doi.org/10.3390/ma15072646.

Barreto MS, Faber J, Vogel CJ, Araujo TM. Reliability of digital orthodontic setups. Angle Orthod. 2016;86:255–9. https://doi.org/10.2319/120914-890.1.

Lombardo L, Arreghini A, Ramina F, Huanca Ghislanzoni LT, Siciliani G. Predictability of orthodontic movement with orthodontic aligners: a retrospective study. Prog Orthod. 2017;18. https://doi.org/10.1186/s40510-017-0190-0.

González Guzmán JF, Teramoto Ohara A. Evaluation of three-dimensional printed virtual setups. Am J Orthod Dentofac Orthop. 2019;155:288–95. https://doi.org/10.1016/j.ajodo.2018.08.017.

Riede U, Wai S, Neururer S, Reistenhofer B, Riede G, Besser K, et al. Maxillary expansion or contraction and occlusal contact adjustment: effectiveness of current aligner treatment. Clin Oral Investig. 2021;25:4671–9. https://doi.org/10.1007/s00784-021-03780-4.

Zhang XJ, He L, Guo HM, Tian J, Bai YX, Li S. Integrated three-dimensional digital assessment of accuracy of anterior tooth movement using clear aligners. Korean J Orthod. 2015;45:275–81. https://doi.org/10.4041/kjod.2015.45.6.275.

Müller-Hartwich R, Jost-Brinkmann PG, Schubert K. Precision of implementing virtual setups for orthodontic treatment using CAD/CAM-fabricated custom archwires. J Orofac Orthop. 2016;77:1–8. https://doi.org/10.1007/s00056-015-0001-5.

Grünheid T, Loh C, Larson BE. How accurate is Invisalign in nonextraction cases? Are predicted tooth positions achieved? Angle Orthod. 2017;87:809–15. https://doi.org/10.2319/022717-147.1.

Baan F, de Waard O, Bruggink R, Xi T, Ongkosuwito EM, Maal TJJ. Virtual setup in orthodontics: planning and evaluation. Clin Oral Investig. 2020;24:2385–93. https://doi.org/10.1007/s00784-019-03097-3.

Larson BE, Vaubel CJ, Grünheid T. Effectiveness of computer-assisted orthodontic treatment technology to achieve predicted outcomes. Angle Orthod. 2013;83:557–62. https://doi.org/10.2319/080612-635.1.

Shantiya S. Comparison of digital treatment setups and final treatment outcomes of Class II Division 1 malocclusions treated with premolar extractions. UNLV Theses, Dissertations, Professional Papers, and Capstones. 2020;3955. https://doi.org/10.34917/19412168.

de Waard O, Baan F, Bruggink R, Bronkhorst EM, Kuijpers-Jagtman AM, Ongkosuwito EM. The prediction accuracy of digital orthodontic setups for the orthodontic phase before orthognathic surgery. J Clin Med. 2022;11:6141. https://doi.org/10.3390/jcm11206141.

Im J, Cha JY, Lee KJ, Yu HS, Hwang CJ. Comparison of virtual and manual tooth setups with digital and plaster models in extraction cases. Am J Orthod Dentofac Orthop. 2014;145:434–42. https://doi.org/10.1016/j.ajodo.2013.12.014.

Lin SY, Hung MC, Lu LH, Sun JS, Tsai SJ, Zwei-Chieng Chang J. Treatment of class II malocclusion with Invisalign®: A pilot study using digital model-integrated maxillofacial cone beam computed tomography. J Dent Sci. 2022. https://doi.org/10.1016/j.jds.2022.08.027.

Tepedino M, Paoloni V, Cozza P, Chimenti C. Movement of anterior teeth using clear aligners: a three-dimensional, retrospective evaluation. Prog Orthod. 2018;19. https://doi.org/10.1186/s40510-018-0207-3.

Kim JH, Park YC, Yu HS, Kim MK, Kang SH, Choi YJ. Accuracy of 3-dimensional virtual surgical simulation combined with digital teeth alignment: a pilot study. J Oral Maxillofac Surg. 2017;75:2441.e1–2441.e13. https://doi.org/10.1016/j.joms.2017.07.161.

Moreira FC, Vaz LG, Guastaldi AC, English JD, Jacob HB. Potentialities and limitations of computer-aided design and manufacturing technology in the nonextraction treatment of Class I malocclusion. Am J Orthod Dentofac Orthop. 2021;159:86–96. https://doi.org/10.1016/j.ajodo.2020.04.020.

Smith TL, Kusnoto B, Galang-Boquiren MT, BeGole E, Obrez A. Mesiodistal tip and faciolingual torque outcomes in computer-driven orthodontic appliances. J World Fed Orthod. 2015;4:63–70. https://doi.org/10.1016/j.ejwf.2015.04.001.

Camardella LT, Rothier EK, Vilella OV, Ongkosuwito EM, Breuning KH. Virtual setup: application in orthodontic practice. J Orofac Orthop. 2016;77:409–19. https://doi.org/10.1007/s00056-016-0048-y.

Rodrigues MAF, Silva WB, Barbosa Neto ME, Gillies DF, Ribeiro IMMP. An interactive simulation system for training and treatment planning in orthodontics. Comput Graph. 2007;31:688–97. https://doi.org/10.1016/j.cag.2007.04.010.

Tarraf NE, Ali DM. Present and the future of digital orthodontics. Semin Orthod. 2018;24:376–85. https://doi.org/10.1053/j.sodo.2018.10.002.

Funding

This study was supported by Mahidol University.

Author information

Contributions

Conceptualization: BS, RC, PS, TN, SPN, KS; Methodology: BS, RC, PS, KS; Formal analysis and investigation: BS, RC, KS; Writing—original draft preparation: BS, RC, KS; Writing—review and editing: BS, RC, PS, TN, SPN, KS. All authors have read and agreed to the submitted version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

In accordance with Institutional Review Board guidelines for the ethical conduct of research, ethics approval was not required for this study, as it was a systematic review of publicly accessible evidence.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sereewisai, B., Chintavalakorn, R., Santiwong, P. et al. The accuracy of virtual setup in simulating treatment outcomes in orthodontic practice: a systematic review. BDJ Open 9, 41 (2023). https://doi.org/10.1038/s41405-023-00167-3

DOI: https://doi.org/10.1038/s41405-023-00167-3