Abstract

Neuropsychiatric disorders pose a high societal cost, but their treatment is hindered by lack of objective outcomes and fidelity metrics. AI technologies and specifically Natural Language Processing (NLP) have emerged as tools to study mental health interventions (MHI) at the level of their constituent conversations. However, NLP’s potential to address clinical and research challenges remains unclear. We therefore conducted a pre-registered systematic review of NLP-MHI studies using PRISMA guidelines (osf.io/s52jh) to evaluate their models, clinical applications, and to identify biases and gaps. Candidate studies (n = 19,756), including peer-reviewed AI conference manuscripts, were collected up to January 2023 through PubMed, PsycINFO, Scopus, Google Scholar, and ArXiv. A total of 102 articles were included to investigate their computational characteristics (NLP algorithms, audio features, machine learning pipelines, outcome metrics), clinical characteristics (clinical ground truths, study samples, clinical focus), and limitations. Results indicate a rapid growth of NLP MHI studies since 2019, characterized by increased sample sizes and use of large language models. Digital health platforms were the largest providers of MHI data. Ground truth for supervised learning models was based on clinician ratings (n = 31), patient self-report (n = 29) and annotations by raters (n = 26). Text-based features contributed more to model accuracy than audio markers. Patients’ clinical presentation (n = 34), response to intervention (n = 11), intervention monitoring (n = 20), providers’ characteristics (n = 12), relational dynamics (n = 14), and data preparation (n = 4) were commonly investigated clinical categories. Limitations of reviewed studies included lack of linguistic diversity, limited reproducibility, and population bias. A research framework is developed and validated (NLPxMHI) to assist computational and clinical researchers in addressing the remaining gaps in applying NLP to MHI, with the goal of improving clinical utility, data access, and fairness.

Similar content being viewed by others

Introduction

Neuropsychiatric disorders including depression and anxiety are the leading cause of disability in the world [1]. The sequelae to poor mental health burden healthcare systems [2], predominantly affect minorities and lower socioeconomic groups [3], and impose economic losses estimated to reach 6 trillion dollars a year by 2030 [4]. Mental Health Interventions (MHI) can be an effective solution for promoting wellbeing [5]. Numerous MHIs have been shown to be effective, including psychosocial, behavioral, pharmacological, and telemedicine [6,7,8]. Despite their strengths, MHIs suffer from systemic issues that limit their efficacy and ability to meet increasing demand [9, 10]. The first is the lack of objective and easily administered diagnostics, which burden an already scarce clinical workforce [11] with diagnostic methods that require extensive training. A second is variable treatment quality [12]. Widespread dissemination of MHIs has shown reduced effect sizes [13], not readily addressable through supervision and current quality assurance practices [14,15,16]. The third is too few clinicians [11], particularly in rural areas [17] and developing countries [18], due to many factors, including the high cost of training [19]. As a result, the quality of MHI remains low [14], highlighting opportunities to research, develop and deploy tools that facilitate diagnostic and treatment processes.

Recent innovations in the fields of Artificial Intelligence (AI) and machine learning [20] offer options for addressing MHI challenges. Technological and algorithmic solutions are being developed in many healthcare fields including radiology [21], oncology [22], ophthalmology [23], emergency medicine [24], and of particular interest here, mental health [25]. An especially relevant branch of AI is Natural Language Processing (NLP) [26], which enables the representation, analysis, and generation of large corpora of language data. NLP makes the quantitative study of unstructured free-text (e.g., conversation transcripts and medical records) possible by rendering words into numeric and graphical representations [27]. MHIs rely on linguistic exchanges and so are well suited for NLP analysis that can specify aspects of the interaction at utterance-level detail for extremely large numbers of individuals, a feat previously impossible [28]. Typically unexamined characteristics of providers and patients are also amenable to analysis with NLP [29] (Box 1). NLP for MHI began with pre-packaged software tools [30], followed by more computationally intense deep neural networks [31], particularly large language models (i.e., attention-based architectures such as Transformers) [32], and other methods for identifying meaningful trends in large amounts of data. The diffusion of digital health platforms has made these types of data more readily available [33]. These data make it possible to study treatment fidelity [33], estimate patient outcomes [34], identify treatment components [35], evaluate therapeutic alliance [36], and gauge suicide risk [37] in a transformative way, sufficient to generate anticipation and apprehension regarding conversational agents [38]. Lastly, NLP has been applied to mental health-relevant contexts outside of MHI including social media [39] and electronic health records [40].

While these studies demonstrate NLP’s research potential, questions remain about its impact on clinical practice. A significant limiting factor is the current separation between two communities of expertise: clinical science and computer science. Clinical researchers possess domain knowledge on MHI but have difficulty keeping up with the rapid advances in NLP. The clearest reflection of this separation is the continued reliance of clinical researchers on traditional expert-based dictionary methods [30] versus the ongoing state-of-the-art developments in large language models within computer science [32]. Accordingly, while prior reviews provided insights into the growing role of machine learning in mental health [25, 41], they did not include peer-reviewed manuscripts from AI conferences where many advances in NLP are reported. In addition, NLP pipelines were not deconstructed into algorithmic components, limiting the ability to identify distinctive model features. Meanwhile, computer scientists and computational linguists are driving developments in NLP that, while methodologically advanced, are typically limited in their applicability to clinical service provision.

We therefore conducted a systematic review of NLP studies for mental health interventions, examining their algorithmic and clinical characteristics to promote the intersection between computer and clinical science. Our aim was threefold: 1) classify NLP methods deployed to study MHI; 2) identify clinical domains and use them to aggregate NLP findings; 3) identify limitations of current NLP applications to recommend solutions. We examined each manuscript for clinical components (setting, aims, transcript source, clinical measures, ground truths and raters) and key features of the NLP pipeline (linguistic representations and features, classification models, validation methods, and software packages). Finally, we explored common areas, biases, and gaps in the current NLP applications for MHI, and proposed a research framework to address these limitations.

Methods

Search protocol and eligibility

The systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines. The review was pre-registered, its protocol published with the Open Science Framework (osf.io/s52jh). The review focused on NLP for human-to-human Mental Health Interventions (MHI), defined as psychosocial, behavioral, and pharmacological interventions aimed at improving and/or assessing mental health (e.g., psychotherapy, patient assessment, psychiatric treatment, crisis counseling, etc.). We excluded studies focused solely on human-computer MHI (i.e., conversational agents, chatbots) given lingering questions related to their quality [38] and acceptability [42] relative to human providers. We also excluded social media and medical record studies as they do not directly focus on intervention data, despite offering important auxiliary avenues to study MHI. Studies were systematically searched, screened, and selected for inclusion through the Pubmed, PsycINFO, and Scopus databases. In addition, a search of peer-reviewed AI conferences (e.g., Association for Computational Linguistics, NeurIPS, Empirical Methods in NLP, etc.) was conducted through ArXiv and Google Scholar. The search was first performed on August 1, 2021, and then updated with a second search on January 8, 2023. Additional manuscripts were manually included during the review process based on reviewers’ suggestions, if aligning with MHI broadly defined (e.g., clinical diagnostics) and meeting study eligibility. Search string queries are detailed in the supplementary materials.

Eligibility and selection of articles

To be included, an article must have met five criteria: (1) be an original empirical study; (2) written in English; (3) vetted through peer-review; (4) focused on MHI; and (5) analyzed text data that was gathered from MHI (e.g., transcripts, message logs). Several exclusion criteria were also defined: (a) study of human-computer interventions; (b) text-based data not derived from human-to-human interactions (i.e., medical records, clinician notes); (c) social media platform content (e.g., Reddit); (d) population other than adults (18+); (e) did not analyze data using NLP; or (f) was a book chapter, editorial article, or commentary. Candidate manuscripts were evaluated against the inclusion and exclusion criteria initially based on their abstract and then on the full-text independently by two authors (JMZ and MM), who also assessed study focus and extracted data from the full text. Disagreement on the inclusion of an article or its clinical categorization was discussed with all the authors following full-text review. When more than one publication by the same authors used the same study aim and dataset, only the study with the most technical information and advanced model was included, with others classified as a duplicate and removed. Reasons for exclusion were recorded.

Data extraction

Studies that met criteria were further assessed to extract clinical and computational characteristics.

Setting and data

The MHI used to generate the data for NLP analyses. Treatment modality, digital platforms, clinical dataset and text corpora were identified.

Study focus

Goal of the study, and whether the study primarily examined conversational data from patients, providers, or from their interaction. Moreover, we assessed which aspect of MHI was the primary focus of the NLP analysis.

Ground truth

How the concepts of interest were operationalized in each study (e.g., measuring depression as PHQ-9 scores). Information on raters/coders, agreement metrics, training and evaluation procedures were noted where present. Information on ground truth was identified from study manuscripts and first order data source citations.

Natural language processing components

We extracted the most important components of the NLP model, including acoustic features for models that analyzed audio data, along with the software and packages used to generate them.

Classification model and performance

Where multiple algorithms were used, we reported the best performing model and its metrics, and when human and algorithmic performance was compared.

Reproducibility

Information on whether findings were replicated using an external sample separated from the one used for algorithm training, interpretability (e.g., ablation experiments), as well as if a study shared its data or analytic code.

Limitations and biases

A formal assessment of the risk of bias was not feasible in the examined literature due to the heterogeneity of study type, clinical outcomes, and statistical learning objectives used. Emerging limitations of the reviewed articles were appraised based on extracted data. We assessed possible selection bias by examining available information on samples and language of text data. Detection bias was assessed through information on ground truth and inter-rater reliability, and availability of shared evaluation metrics. We also examined availability of open data, open code, and for classification algorithms use of external validation samples.

Results

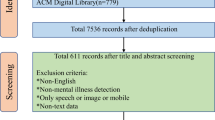

The initial literature screen delivered 19,756 candidate studies. After 4677 duplicate entries were removed, 15,078 abstracts were screened against inclusion criteria. Of these, 14,819 articles were excluded based on content, leaving 259 entries warranting full-text assessment. The screening process is reported in Fig. 1, with the final sample consisting of 102 studies (Table 1).

Study characteristics

Publication year

Results indicate a growth of NLP for MHI applications, with the first study appearing in 2010 and the majority being published between 2020–2022 (53.9%, n = 55). The median year of publication was 2020 (IQR = 2018–2021), a trend consistent with NLP advancements [32].

Setting and data

The majority of interventions consisted of synchronous therapy (53.9%, n = 55), with Motivational Interviewing as the most reported therapy modality (n = 20). These studies primarily involved face-to-face randomized controlled trials, traditional treatments, and collected therapy corpora (e.g., Alexander Street Corpus). Transcripts of clinical assessments, interviews, and structured tasks were another important source of textual data (20.6%, n = 21), elicited through the use of standardized prompts and questions. While most face-to-face studies used text data from manual transcripts, 18 studies used machine-transcription generated from audio sources [36, 43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59]. Online message-based interventions were the second largest setting (22.6%, n = 23), with text-data consisting of anonymized conversation logs between providers and patients. Sample sizes increased from less than 100 therapy transcripts [45, 60, 61] to over 100,000 [34, 62,63,64], with studies analyzing more than one million conversations [65, 66].

Ground truth

Clinicians provided ground truth ratings in the form of diagnoses, assessments, or suicide risk for 31 studies. Patients provided ground truth for 29 studies, through self-report measures of symptoms and functioning (n = 22), intervention feedback, and treatment alliance ratings. Students (n = 9), researchers (n = 6), crowd-workers (n = 3), and other raters (n = 26) provided treatment annotations and emotion/sentiment analysis. As the modal intervention, Motivational Interviewing Skills Codes (MISC) [67] annotations were the most prevalent source of provider/patient information. Thirty-two studies provided information on rater/coder agreement, with adequate inter-rater reliability across studies for frequent and aggregated codes. Only 20 studies provided information on the raters’ training or selection, with Sharma et al., describing in detail an interactive training consisting of instructions, supervision, evaluation, and final selection [62]. Combined human and deep-learning-based approaches were also explored as an alternative to producing a large amount of treatment-related labels. In particular, Ewbank and collegues [34, 35] used a hybrid approach to generate ground truth: human raters annotated a portion of sessions and an annotation model was based on their inputs to label a larger number of sessions.

Natural language processing and machine learning components

Multiple NLP approaches emerged, characterized by differences in how conversations were transformed into machine-readable inputs (linguistic representations) and analyzed (linguistic features). Linguistic features, acoustic features, raw language representations (e.g., tf-idf), and characteristics of interest were then used as inputs for algorithmic classification and prediction. Methods used mirrored the development of NLP tools through time (Fig. 2).

Language representation

The majority of studies (n = 53) tabulated the frequency of individual words through the use of lexicons or dictionaries. Forty-three studies (42.6%) used n-grams for language representation due to their simplicity and interpretability. Bag of Words and Term Frequency-Inverse Document Frequency (TF-IDF) were used by 30 studies to model word frequencies directly for classification purposes [37].

After raw word counts, Word Embeddings were the most commonly utilized language representation (n = 49, 48%), owing to its advantages for performing analytic operations. Lower-dimensional embeddings were primarily generated using word2vec and GloVe algorithms. With recent advances in deep learning, more sophisticated Transformer architectures (e.g., RoBERTa) produced contextualized embeddings, where the representation of a word or token depends on its surrounding context.

Model features

The most common linguistic features were based on lexicons (n = 43) computing the frequency of words by their membership in categories designed by domain experts. This approach is exemplified by software such as LIWC [30], and owes its diffusion in clinical research to its ease of use and low technological requirements. Another prevalent NLP task was sentiment analysis (n = 32), which generated feature scores for emotions (e.g., joy, annoyance) that are derived from lexicon-based methods and pre-trained models (e.g., VADER). Topic modeling (n = 16) also emerged as a widely used approach to identify common themes across clinical transcripts.

Deep learning features

More recent technological developments saw the rise of features based on deep neural networks (n = 40). The adoption of large language models grew in parallel with increases in computational power, the development of dedicated code libraries (e.g., Pytorch and Tensorflow), and increased availability of large MHI corpora (Fig. 2). Transformer models were the most used language models given their ability to generate contextually-meaningful linguistic features from sequences of text through the use of attention mechanisms, and to study the flow of individual talk turns [68], as well as its effects on overall session estimates [48].

Models deployed include BERT and its derivatives (e.g., RoBERTa, DistillBERT), sequence-to-sequence models (e.g., BART), architectures for longer documents (e.g., Longformer), and generative models (e.g., GPT-2). Although requiring massive text corpora to initially train on masked language, language models build linguistic representations that can then be fine-tuned to downstream clinical tasks [69]. Applications examined include fine-tuning BERT for domain adaptation to mental health language (MentalBERT) [70], for sentiment analysis via transfer learning (e.g., using the GoEmotions corpus) [71], and detection of topics [72]. Generative language models were used for revising interventions [73], session summarizations [74], or data augmentation for model training [70].

Acoustic features

Beyond the use of speech-to-text transcripts, 16 studies examined acoustic characteristics emerging from the speech of patients and providers [43, 49, 52, 54, 57,58,59,60, 75,76,77,78,79,80,81,82]. The extraction of acoustic features from recordings was done primarily using Praat and Kaldi. Engineered features of interest included voice pitch, frequency, loudness, formants quality, and speech turn statistics. Three studies merged linguistic and acoustic representations into deep multimodal architectures [57, 77, 80]. The addition of acoustic features to the analysis of linguistic features increased model accuracy, with the exception of one study where acoustics worsened model performance compared to linguistic features only [57]. Model ablation studies indicated that, when examined separately, text-based linguistic features contributed more to model accuracy than speech-based acoustics features [57, 77, 78, 80].

Clinical research categories

Three primary sources of data emerged from the examined studies: conversational data from patients (n = 45), another set from providers (n = 32), and a third set from patient-provider interactions (n = 21). In addition, four studies focused on improving NLP data pipelines [47, 74, 44, 83] (Fig. 3). Each of the three data sources were further divided into two subgroups according to study aims. The resulting six clinical categories are discussed further below and composed the central concepts of the integrative framework presented in the discussion.

Patient analysis (n = 45)

Clinical presentation (n = 34)

These studies assessed clinical characteristics evident in transcripts grounded in diagnostic ratings obtained by providers and self-reported symptoms from patients. The premise for these applications is the effect of neuropsychiatric disorders on speech (e.g., neologisms in schizophrenia), sentiment, and content (e.g., worry in anxiety) [29] that act as language-based markers of psychopathology. Serious Mental Illness (SMI). Eleven SMI applications used NLP markers to identify psychosis [51, 81, 84, 85] and bipolar [86] diagnoses (Accuracy 0.70 and 0.85), monitor symptoms [75, 87], detect psychotic episodes (F1 = 0.99) [88] and psychosis onset (AUC = 0.72) [89]. Negative symptoms [87, 90] and cognitive distortions [70] for SMI were detected using linguistic features, including connectedness emerging from graph analytics [90, 91]. Associations between linguistic features and neuroimaging were also examined [91, 92]. Depression and Anxiety. Examination of linguistic features showed that lexical diversity [66], the use of more affective language [93] and negative emotions [93, 94] are markers of depression and anxiety [49], and can be used to predict outcomes (QIDS-16 scores, Accuracy = 0.85) [95]. Sentence embeddings [77], n-grams and topics [96] were also used to assess depression and anxiety severity. In addition, linguistic features were able to detect symptoms beyond those typically captured by diagnostic screenings [64]. Post-Traumatic Stress Disorder (PTSD). Three studies focused on analyzing open-ended trauma narrative to accurately identify PTSD diagnosis [97] and symptom trajectories [98]. Of note, linguistic features from narratives collected one month after life-threatening traumatic events were shown to be predictive of future PTSD (AUC = 0.90) [79]. Affect Analysis. Six manuscripts focused on the automatic examination of affect, a component of clinical mental status evaluations. These studies examined emotions at the session- and utterance-level [82], emotional involvement (e.g., warmth) [99], and negativity [100], and emotional distress including exposure therapy hotspots [60]. Sentiment analysis performed similarly to human raters (Cohen’s K = 0.58) [101]. Across studies, the latest Transformer-based models were shown to capture emotional valiance [102] and associations with symptom ratings more accurately than other language features [71]. Suicide Risk. Another area of clinical interest was suicidality assessment (n = 4). While one study focused on lifetime history of suicidality [103], the majority used NLP to assess intentions of suicide or self-harm endorsed during interventions [37, 104, 105], one with sufficient accuracy to be deployed in a clinical setting (AUC = 0.83) [37].

Intervention response (n = 11)

Eleven studies examined linguistic markers of patient response related to treatment administration [106], outcome [107, 108], patient activation [109, 110], and between-session fluctuation of symptoms [108]. One study identified linguistic markers of behavioral activation in the treatment of depression of 10,000 patients (PHQ-9 scores; R2 = 75.5%) [109]. Three studies captured within-session responses to MHI by examining patients’ responses to provider interventions at utterance-level interactions [43, 57, 111]. Of note, Nook et al. showed that clustering a sample of 6,229 patients based on linguistic distance captured differences both in symptoms severity and treatment outcomes [112].

Provider analysis (n = 32)

Intervention monitoring (n = 20)

Most provider analyses focused on monitoring treatment fidelity. These studies segmented interventions into utterance-level elements based on treatment protocols. The majority of treatment fidelity studies examined adherence to Motivational Interviewing (MI) in clinical trial and outpatient settings [52, 54,55,56, 76, 113,114,115,116,117,118,119,120], with Flemotomos et al. also implementing automated MI fidelity evaluation in practice [52]. Taking advantage of the generative properties of Transformer models, Cao et al. [116]. designed a system that identified MI interventions (MISC codes; F1 = 0.65) and then forecasted the most likely upcoming intervention (based on the session’s history), with the goal of guiding providers. Other treatment fidelity studies examined the fidelity of Cognitive Behavioral Therapy (CBT) [34, 35, 53, 48] and digital health [121, 122] interventions, with two examining dialogue acts distinguishing different psychotherapy approaches [113, 123]. Treatment fidelity studies primarily relied on human annotators to produce session-level behavioral codes to then train treatment segmentation models. These codes describe the structure of a session compared to the treatment’s typical structure, which does not directly provide evidence for the effectiveness of specific interventions. A demonstration of the potential of directly examining treatment transcripts was shown in a study by Perez-Rosas et al. [55], where combined n-grams, lexicon, and linguistic features were as predictive of patient-rated quality as the use of behavioral codes (F1 = 0.87 with/out human annotations). Language models can also be used to generate treatment fidelity labels: Ewbank and colleagues [34] automatically segmented CBT sessions into intervention components (e.g., Socratic questioning), with varying degrees of accuracy (F1 = 0.22-0.94). They then showed how algorithmically identified CBT factors differentially increased the likelihood of engagement and symptom improvement (GAD-7 & PHQ-9 scores) for 17,572 patients.

Provider characteristics (n = 12)

Empathy. Seven studies focused on the assessment of empathy, given its role in establishing treatment alliance [16]. Early models assessed session-level empathy by examining behavioral codes [58, 59, 78, 124]. Contextual language models and larger datasets examined utterance-level empathy [68], also in specific expressive forms (i.e., reactions, interpretations, and explorations) with sufficient accuracy (EPITOME codes; F1 = 0.74) [62]. Similarly, Zhang and Danescu-Niculescu-Mizil [125] designed a model using 1.5 million crisis center conversations to identify whether providers responded to patients’ empathetically versus advancing toward concrete resolutions. In addition to measuring empathy, Transformer-based architectures have emerged as a tool for augmenting providers’ empathy, with one study using generative language models to suggest more empathic rewriting of text-based interventions [73]. Conversational skills. Five manuscripts examined the linguistic ability of providers [33, 50, 65, 125, 126]. One study [33] generated a model from 80,885 counseling interventions to extract therapist conversational factors, and showed how differences in content and timing of these factors predicted outcome (patient-reported helpfulness; AUC = 0.72). Importantly, conversational markers not only captured between-provider differences, but also found within-provider differences related to patients’ diagnoses [50] and as they gained clinical experience over time [65].

Patient-provider interaction analysis (n = 21)

Relational dynamics (n = 14)

Therapeutic alliance and ruptures. The study of patient-provider interactions primarily focused on analyzing therapeutic alliance, given its association with treatment outcomes [16]. Six studies sought to determine ratings of alliance strength [36, 46] or moments of rupture [127,128,129,130]. In one application, the NLP model detected patient ruptures unidentified by providers [129] and associated ruptures with decreases in emotional engagement between providers and patients [128]. Mutual affect analysis. Five interaction studies examined provider-therapist emotional convergence [131, 132] during the intervention, including defense mechanisms [133] and humor [45]. Tanana and colleagues examined a large corpus of therapy transcripts [102], and showed that an attention-based architecture captured therapists’ and patients’ valence interactions and their context more accurately (K = 0.48) than previous lexicon methods (K = 0.25 and K = 0.31) and human raters (K = 0.42). Linguistic coordination. Three studies focused on semantic similarity and linguistic coordination in therapeutic dyads given its association with positive outcomes [63, 134]. Researchers examined the association of linguistic coordination with affective behaviors across different treatment settings [134], and the role of linguistic synchrony in more effective interventions [135].

Conversation topics (n = 7)

Seven studies focused on identifying conversational themes emerging from treatment interactions [61, 136,137,138], including identifying functioning issues [139] and capturing conversational changes following the COVID-19 pandemic [72]. Imel et al. [140]. also showed how treatment topics accurately reflect differences in therapeutic approaches (Accuracy =0.87).

Limitations of reviewed studies

Bias towards English

Included studies overwhelmingly featured NLP analyses of English transcripts. English was the only source of conversational data for 87.3% of studies (n = 89). Of the remaining 13 manuscripts, three were Dutch [60, 85, 101], three were Hebrew [108, 127, 129], two were Cantonese [105, 130], two were German [44, 141], and Italian [61], Mandarin [88], and Polish [51] were each analyzed in a single study. This lack of linguistic diversity poses important questions on whether findings from the examination of English conversations can be generalized to other languages.

Limited reproducibility

While we reported algorithm performance where available, studies used different types of ground truth (e.g., psychiatrist-assessed [51] vs self-reported [122] autism) and reported different evaluation metrics (e.g., F-scores vs ROC AUC), which did not allow for meaningful direct comparisons across all studies. The examined studies were also limited in their availability of open data and open code: only a fraction of studies made their computational code (n = 16; 15.7%) or their data (n = 8; 7.8%) available. Although several studies provided graphical representations of their model architecture [52], information on algorithmic implementation, model hyper-parameters, and random seeds were typically left under-specified. Five deep learning studies mitigated this limitation by utilizing an interpretability algorithm to elucidate their models [71, 79, 85, 104, 122]. While data unavailability is understandable given concerns for patient privacy and remains a significant challenge to future work, the absence of detailed model information, shared evaluation metrics, and code is a critical obstacle to the replication and extension of findings to new clinical populations.

Population bias

A third limitation was the lack of sample diversity. Data for the studies were predominantly gathered from the US. Moreover, the majority of studies didn’t offer information on patient characteristics, with only 40 studies (39.2%) reporting demographic information for their sample. In addition, while many studies examined the stability and accuracy of their findings through cross-validation and train/test split, only 4 used external validation samples [89, 107, 134] or an out-of-domain test [100]. In the absence of multiple and diverse training samples, it is not clear to what extent NLP models produced shortcut solutions based on unobserved factors from socioeconomic and cultural confounds in language [142].

Discussion

In this systematic review we examined 102 applications of Natural Language Processing (NLP) for Mental Health Interventions (MHI) to evaluate their potential for informing research and practice on challenges experienced in the mental healthcare system. NLP methods are uniquely positioned to enhance language tasks with the potential to reduce provider burden, improve training and quality assurance, and more objectively operationalize MHI. To advance research in these areas, we highlight six clinical categories that emerged in the review. For the patient: (1) clinical presentation, including patient symptoms, suicide risk, and affect; and (2) intervention response, to monitor patient response during treatment. For the provider: (3) intervention monitoring, to evaluate the features of the administered treatment; and (4) provider characteristics, to study the person of the therapist and their conversational skills and traits. For patient-provider interactions: (5) relational dynamics, to evaluate alliance and relational coordination; and (6) conversational topics, to determine treatment content. In terms of language models, studies showed a shift from word count and frequency-based lexicon methods to context-sensitive deep neural networks. The growth of context-sensitive analyses appeared to follow increased prevalence of digital platforms and large corpora generated by telemedicine MHI. Acoustic features were another promising source of treatment data, although linguistic content was a richer source of information in the reviewed studies. Research in this area demonstrated progress in the areas of diagnostics, treatment specification, and the identification of contributors to outcome including the quality of the therapeutic relationship and markers of change for the patient. We propose integrating these disparate contributions into a single framework (NLPxMHI) to summarize promising avenues for increasing the utility of NLP for mental health service innovation.

NLPxMHI research framework

The goal of the NLPxMHI framework (Fig. 4) is to facilitate interdisciplinary collaboration between computational and clinical researchers and practitioners in addressing opportunities offered by NLP. It also seeks to draw attention to a level of analysis that resides between micro-level computational research [44, 47, 74, 83, 143] and macro-level complex intervention research [144]. The first evolves too quickly to meaningfully review, and the latter pertains to concerns that extend beyond techniques of effective intervention, though both are critical to overall service provision and translational research. The process for developing and validating the NLPxMHI framework is detailed in the Supplementary Materials.

A MHI transcripts corpus is reviewed for its representativeness. If deemed appropriate for the intended setting, the corpus is segmented into sequences, and the chosen operationalizations of language are determined based on interpretability and accuracy goals. Model features for the six distinct clinical categories are designed. If necessary, investigators may adjust their operationalizations, model goals and features. If no changes are needed, investigators report results for clinical outcomes of interest, and support results with sharable resources including code and data.

Demographic and sample descriptions for representativeness, fairness, and equity

Recent challenges in machine learning provide valuable insights into the collection and reporting of training data, highlighting the potential for harm if training sets are not well understood [145]. Since all machine learning tasks can fall prey to non-representative data [146], it is critical for NLPxMHI researchers to report demographic information for all individuals included in their models’ training and evaluation phases. As noted in the Limitations of Reviewed Studies section, only 40 of the reviewed papers directly reported demographic information for the dataset used. The goal of reporting demographic information is to ensure that models are adequately powered to provide reliable estimates for all individuals represented in a population where the model is deployed [147]. While the US-based population bias for papers in the review may not be easily overcome through expansion of international population data, US domestic research can reduce hidden population bias by reporting language-relevant demographic data for the samples studied, as such data may signal to other researchers’ findings influenced by dialect, geography, or a host of other factors. In addition to reporting demographic information, research designs may require over-sampling underrepresented groups until sufficient power is reached for reliable generalization to the broader population. Relatedly, and as noted in the Limitation of Reviewed Studies, English is vastly over-represented in textual data. There does appear to be growth in non-English corpora internationally and we are hopeful that this trend will continue. Within the US, there is also some growth in services delivered to non-English speaking populations via digital platforms, which may present a domestic opportunity for addressing the English bias.

There are additional generalizability concerns for data originating from large service providers including mental health systems, training clinics, and digital health clinics. These data are likely to be increasingly important given their size and ecological validity, but challenges include overreliance on particular populations and service-specific procedures and policies. Research using these data should report the steps taken to verify that observational data from large databases exhibit trends similar to those previously reported for the same kind of data. This practice will help flag whether particular service processes have had a significant impact on results. In partnership with data providers, the source of anomalies can then be identified to either remediate the dataset or to report and address data weaknesses appropriately. Another challenge when working with data derived from service organizations is data missingness. While imputation is a common solution [148], it is critical to ensure that individuals with missing covariate data are similar to the cases used to impute their data. One suggested procedure is to calculate the standardized mean difference (SMD) between the groups with and without missing data [149]. For groups that are not well-balanced, differences should be reported in the methods to quantify selection effects, especially if cases are removed due to data missingness.

Represent treatment as sequential actions

We recommend representing treatment as sequential actions taken by providers and patients, instead of aggregating data into timeless corpora, to reduce unnecessary noise, enhancing the precision of effect estimates for intervention studies [52, 71, 109]. The reviewed studies highlight the potential benefits of embedding textual units into time-delimited sequences [52]. Longitudinal designs, while admittedly more complex, can reveal dynamics in intervention timing, change, and individual differences [150], that are otherwise lost. For example, the relationship between a specific intervention and outcome is intricate, as timing and context are important moderators of beneficial effects [113, 114]. There are no universal rules for determining how to sequence data, however the most promising avenues are: (1) turn taking; (2) the span between outcome measures; (3) sessions; or (4) clinically meaningful events arising from within, or imposed from outside the treatment.

Operationalize language representations and estimate contribution of the six clinical categories

The systematic review identified six clinical categories important to intervention research for which successful NLP applications have been developed [151,152,153,154,155]. While each individually reflects a significant proof-of-concept application relevant to MHI, all operate simultaneously as factors in any treatment outcome. Integrating these categories into a unified model allows investigators to estimate each category’s independent contributions—a difficult task to accomplish in conventional MHI research [152]—increasing the richness of treatment recommendations. To successfully differentiate and recombine these clinical factors in an integrated model, however, each phenomenon within a clinical category must be operationalized at the level of utterances and separable from the rest. The reviewed studies have demonstrated that this level of definition is attainable for a wide range of clinical tasks [34, 50, 52, 54, 73]. Utterance-level operationalization exists for some therapy frameworks [153, 154], which can serve as exemplars to inform the specification process for other treatment approaches that have yet to tie aspects of speech to their proposed mechanisms of change and intervention. For example, it is not sufficient to hypothesize that cognitive distancing is an important factor of successful treatment. Researchers must also identify specific words in patient and provider speech that indicate the occurrence of cognitive distancing [112], and ideally just for cognitive distancing. This process is consonant with the essentials of construct and discriminant validity, with others potentially operative as well (e.g., predictive validity for markers of outcome, and convergent validity for related but complementary constructs). As research deepens in this area, we expect that there will be increasing opportunities for theory generation as certain speech elements, whether uncategorizable or derived through exploratory designs, remain outside of operationalized constructs of known theory.

Define model goals: interpretability and accuracy

Model interpretability is used to justify clinical decision-making and translate research findings into clinical policy [156]. However, there is a lack of consensus on the precise definition of interpretability and on the strategies to enhance it in the context of healthcare [157]. We suggest that enhancing interpretability through clinical review, model tuning, and generalizability is most likely to produce valid and trustworthy treatment decision rules [158] and to deliver on the personalization goals of precision medicine [159]. The reviewed studies show trade-offs between model performance and interpretability: lexicon and rule-based methods rely on predefined linguistic patterns, maximizing interpretability [33, 112], but they tend to be less accurate than deep learning models that account for more complex linguistic patterns and their context [71]. The interpretability of complex neural architectures, when deployed, should be improved at the instance-level to identify the words influencing model predictions. Methods include examining attention mechanisms, counterfactual explanations, and layer-wise relevance propagation. Surprisingly, only a handful of the reviewed studies implemented any of these techniques to enhance interpretability. Nevertheless, these methods don’t offer interpretation of the overall behavior of the model across all inputs. We expect that ongoing collaboration between clinical and computational domains will slowly fill in the gap between interpretability and accuracy through cyclical examination of model behavior and outputs. We also expect the current successes of large language models such as GPT-4 and LLaMa [160] to be further enhanced and made more clinically interpretable through training on data where relationships among the NLPxMHI categories and clinical outcomes is better understood. Meanwhile, the tradeoff between accuracy and interpretability should be determined based on research goals.

Results: clinically grounded insights and models

A sign of interpretability is the ability to take what was learned in a single study and investigate it in different contexts under different conditions. Single observational studies are insufficient on their own for generalizing findings [152, 161, 162]. Incorporating multiple research designs, such as naturalistic, experiments, and randomized trials to study a specific NLPxMHI finding [73, 163], is crucial to surface generalizable knowledge and establish its validity across multiple settings. A first step toward interpretability is to have models generate predictions from evidence-based and clinically grounded constructs. The reviewed studies showed sources of ground truth with heterogeneous levels of clinical interpretability (e.g., self-reported vs. clinician-based diagnosis) [51, 122], hindering comparative interpretation of their models. We recommend that models be trained using labels derived from standardized inter-rater reliability procedures from within the setting studied. Examples include structured diagnostic interviews, validated self-report measures, and existing treatment fidelity metrics such as MISC [67] codes. Predictions derived from such labels facilitate the interpretation of intermediary model representations and the comparison of model outputs with human understanding. Ad-hoc labels for a specific setting can be generated, as long as they are compared with existing validated clinical constructs. If complex treatment annotations are involved (e.g., empathy codes), we recommend providing training procedures and metrics evaluating the agreement between annotators (e.g., Cohen’s kappa). The absence of both emerged as a trend from the reviewed studies, highlighting the importance of reporting standards for annotations. Labels can also be generated by other models [34] as part of a NLP pipeline, as long as the labeling model is trained on clinically grounded constructs and human-algorithm agreement is evaluated for all labels.

Another barrier to cross-study comparison that emerged from our review is the variation in classification and model metrics reported. Consistently reporting all evaluation metrics available can help address this barrier. Modern approaches to causal inference also highlight the importance of utilizing expert judgment to ensure models are not susceptible to collider bias, unmeasured variables, and other validity concerns [155, 164]. A comprehensive discussion of these issues exceeds the scope of this review, but constitutes an important part of research programs in NLPxMHI [165, 166].

Sharable resources: data access

The most reliable route to achieving statistical power and representativeness is more data, which is challenging in healthcare given regulations for data confidentiality and ethical considerations of patient privacy. Technical solutions to leverage low resource clinical datasets include augmentation [70], out-of-domain pre-training [68, 70], and meta-learning [119, 143]. However, findings from our review suggest that these methods do not necessarily improve performance in clinical domains [68, 70] and, thus, do not substitute the need for large corpora. As noted, data from large service providers are critical for continued NLP progress, but privacy concerns require additional oversight and planning. Only a fraction of providers have agreed to release their data to the public, even when transcripts are de-identified, because the potential for re-identification of text data is greater than for quantitative data. One exception is the Alexander Street Press corpus, which is a large MHI dataset available upon request and with the appropriate library permissions. Access to richer datasets from current service providers typically require data use agreements that stipulate the extent of data use for researchers, as well as an agreement between patients and service providers for the use of their data for research purposes. While these practices ensure patient privacy and make NLPxMHI research feasible, alternatives have been explored. One such alternative is a data enclave where researchers are securely provided access to data, rather than distributing data to researchers under a data use agreement [167]. This approach gives the data provider more control over data access and data transmission and has demonstrated some success [168].

Limitations

While this review highlights the potential of NLP for MHI and identifies promising avenues for future research, we note some limitations. Although study selection bias was limited by pre-registered review protocol and by inclusion of peer-reviewed conference papers, theoretical considerations suggest possible publication bias in the selection of the reported results toward positive findings (i.e., file-drawer effect). In particular, this might have affected the study of clinical outcomes based on classification without external validation. Moreover, included studies reported different types of model parameters and evaluation metrics even within the same category of interest. As a result, studies were not evaluated based on their quantitative performance. Future reviews and meta-analyses would be aided by more consistency in reporting model metrics. Lastly, we expect that important advancements will also come from areas outside of the mental health services domain, such as social media studies and electronic health records, which were not covered in this review. We focused on service provision research as an important area for mapping out advancements directly relevant to clinical care.

Conclusions

NLP methods hold promise for the study of mental health interventions and for addressing systemic challenges. Studies to date offer a large set of proof-of-concept applications, highlighting the importance of clinical scientists for operationalizing treatment, and of computer scientists for developing methods that can capture the sequential and context-dependent nature of interventions. The NLPxMHI framework seeks to integrate essential research design and clinical category considerations into work seeking to understand the characteristics of patients, providers, and their relationships. Large secure datasets, a common language, and fairness and equity checks will support collaboration between clinicians and computer scientists. Bridging these disciplines is critical for continued progress in the application of NLP to mental health interventions, to potentially revolutionize the way we assess and treat mental health conditions.

Data availability

Data for the current study were sourced from reviewed articles referenced in this manuscript. Literature search string queries are available in the supplementary materials.

References

James SL, Abate D, Abate KH, Abay SM, Abbafati C, Abbasi N, et al. Global, regional, and national incidence, prevalence, and years lived with disability for 354 diseases and injuries for 195 countries and territories, 1990–2017: a systematic analysis for the Global Burden of Disease Study 2017. Lancet. 2018;392:1789–858.

Figueroa JF, Phelan J, Orav EJ, Patel V, Jha AK. Association of mental health disorders with health care spending in the medicare population. JAMA Netw Open. 2020;3:e201210.

Miranda J, McGuire TG, Williams DR, Wang P. Mental health in the context of health disparities. AJP. 2008;165:1102–8.

Health TLG. Mental health matters. Lancet Glob Health. 2020;8:e1352.

Association AP, others. American Psychiatric Association Practice Guidelines for the treatment of psychiatric disorders: compendium 2006. American Psychiatric Pub; 2006.

Cuijpers P, Driessen E, Hollon SD, van Oppen P, Barth J, Andersson G. The efficacy of non-directive supportive therapy for adult depression: a meta-analysis. Clin Psychol Rev. 2012;32:280–91.

Firth J, Torous J, Nicholas J, Carney R, Pratap A, Rosenbaum S, et al. The efficacy of smartphone-based mental health interventions for depressive symptoms: a meta-analysis of randomized controlled trials. World Psychiatry. 2017;16:287–98.

DeRubeis RJ, Siegle GJ, Hollon SD. Cognitive therapy versus medication for depression: treatment outcomes and neural mechanisms. Nat Rev Neurosci. 2008;9:788–96.

Cunningham PJ. Beyond parity: primary care physicians’ perspectives on access to mental health care. Health Aff. 2009;28:w490–w501.

SAMHSA. Key substance use and mental health indicators in the United States: results from the 2019 National Survey on Drug Use and Health. 2021 https://digitalcommons.fiu.edu/srhreports/health/health/32.

Wang PS, Aguilar-Gaxiola S, Alonso J, Angermeyer MC, Borges G, Bromet EJ, et al. Use of mental health services for anxiety, mood, and substance disorders in 17 countries in the WHO world mental health surveys. Lancet. 2007;370:841–50.

Insel TR. Digital phenotyping: a global tool for psychiatry. World Psychiatry. 2018;17:276–7.

Johnsen TJ, Friborg O. The effects of cognitive behavioral therapy as an anti-depressive treatment is falling: a meta-analysis. Psychol. Bull. 2015;141:747.

Kilbourne AM, Beck K, Spaeth-Rublee B, Ramanuj P, O’Brien RW, Tomoyasu N, et al. Measuring and improving the quality of mental health care: a global perspective. World Psychiatry. 2018;17:30–8.

Tracey TJG, Wampold BE, Lichtenberg JW, Goodyear RK. Expertise in psychotherapy: an elusive goal? Am Psychol. 2014;69:218–29.

Wampold BE, Imel I Zac E. The great psychotherapy debate: Models, methods, and findings. 2nd ed. Routledge/Taylor & Francis Group; 2015.

Douthit N, Kiv S, Dwolatzky T, Biswas S. Exposing some important barriers to health care access in the rural USA. Public Health. 2015;129:611–20.

Saraceno B, van Ommeren M, Batniji R, Cohen A, Gureje O, Mahoney J, et al. Barriers to improvement of mental health services in low-income and middle-income countries. Lancet. 2007;370:1164–74.

Heisler EJ, Bagalman E. The Mental Health Workforce: A Primer. 2018. https://ecommons.cornell.edu/handle/1813/79417 (Accessed 7 Oct 2021).

LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–44.

Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18:500–10.

Schaffter T, Buist DSM, Lee CI, Nikulin Y, Ribli D, Guan Y, et al. Evaluation of combined artificial intelligence and radiologist assessment to interpret screening mammograms. JAMA Netw Open. 2020;3:e200265.

Hogarty DT, Mackey DA, Hewitt AW. Current state and future prospects of artificial intelligence in ophthalmology: a review. Clin Exp Ophthalmol. 2019;47:128–39.

Schultebraucks K, Shalev AY, Michopoulos V, Grudzen CR, Shin S-M, Stevens JS, et al. A validated predictive algorithm of post-traumatic stress course following emergency department admission after a traumatic stressor. Nat Med. 2020;26:1084–8.

Dwyer DB, Falkai P, Koutsouleris N. Machine learning approaches for clinical psychology and psychiatry. Annu Rev Clin Psychol. 2018;14:91–118.

Jurafsky D, Martin JH. Speech and Language Processing: An introduction to speech recognition, computational linguistics and natural language processing. 1st ed. Prentice-Hall; 2008.

Manning C, Schutze H. Foundations of statistical natural language processing. 1st ed. MIT Press; 1999.

Imel ZE, Caperton DD, Tanana M, Atkins DC. Technology-enhanced human interaction in psychotherapy. J Counseling Psychol. 2017;64:385.

Oyebode F Sims’ symptoms in the mind: an introduction to descriptive psychopathology. Elsevier Health Sciences; 2008.

Tausczik YR, Pennebaker JW. The Psychological Meaning of Words: LIWC and Computerized Text Analysis Methods. J Lang Soc Psychol. 2010;29:24–54.

Cho K, van Merriënboer B, Bahdanau D, Bengio Y. On the properties of neural machine translation: Encoder–Decoder approaches. Proceedings of SSST-8, eighth workshop on syntax, semantics and structure in statistical translation. Doha, Qatar: Association for Computational Linguistics; 2014. p. 103–11.

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, et al. Attention is All you Need. In: Guyon I, Von Luxburg U, Bengio S, Wallach H, Fergus R, Vishwanathan S, Garnett R (eds). Advances in Neural Information Processing Systems. Curran Associates, Inc. Vol. 30, 2017. https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf.

Althoff T, Clark K, Leskovec J. Large-scale analysis of counseling conversations: an application of natural language processing to mental health. Trans. Assoc. Comput. Linguistics. 2016;4:463–76.

Ewbank MP, Cummins R, Tablan V, Bateup S, Catarino A, Martin AJ, et al. Quantifying the association between psychotherapy content and clinical outcomes using deep learning. JAMA Psychiatry. 2020;77:35–43.

Ewbank MP, Cummins R, Tablan V, Catarino A, Buchholz S, Blackwell AD. Understanding the relationship between patient language and outcomes in internet-enabled cognitive behavioural therapy: a deep learning approach to automatic coding of session transcripts. Psychother Res. 2021;31:300–12.

Goldberg SB, Flemotomos N, Martinez VR, Tanana MJ, Kuo PB, Pace BT, et al. Machine learning and natural language processing in psychotherapy research: alliance as example use case. J Counseling Psychol. 2020;67:438–48.

Bantilan N, Malgaroli M, Ray B, Hull TD. Just in time crisis response: suicide alert system for telemedicine psychotherapy settings. Psychother Res. 2021;31:289–99.

Miner AS, Shah N, Bullock KD, Arnow BA, Bailenson J, Hancock J. Key Considerations for Incorporating Conversational AI in Psychotherapy. Front Psychiatry. 2019;10:746.

Chancellor S, De Choudhury M. Methods in predictive techniques for mental health status on social media: a critical review. npj Digit Med. 2020;3:1–11.

Vaci N, Liu Q, Kormilitzin A, Crescenzo FD, Kurtulmus A, Harvey J, et al. Natural language processing for structuring clinical text data on depression using UK-CRIS. Evid-Based Ment Health. 2020;23:21–26.

Aafjes-van Doorn K, Kamsteeg C, Bate J, Aafjes M. A scoping review of machine learning in psychotherapy research. Psychother Res. 2021;31:92–116.

Morris RR, Kouddous K, Kshirsagar R, Schueller SM. Towards an artificially empathic conversational agent for mental health applications: System design and user perceptions. J Med Internet Res. 2018;20:e10148.

Aswamenakul C, Liu L, Carey KB, Woolley J, Scherer S, Borsari B. Multimodal analysis of client behavioral change coding in motivational interviewing. In: Proc. 20th ACM international conference on multimodal interaction. ACM: Boulder CO: ACM; 2018. p. 356–60.

Mieskes M, Stiegelmayr A. Preparing data from psychotherapy for natural language processing. Proceedings of the eleventh international conference on language resources and evaluation (LREC 2018). Miyazaki, Japan: European Language Resources Association (ELRA); 2018. https://aclanthology.org/L18-1458.

Ramakrishna A, Greer T, Atkins D, Narayanan S. Computational modeling of conversational humor in psychotherapy. In: Interspeech 2018. ISCA; 2018. p. 2344–48.

Martinez VR, Flemotomos N, Ardulov V, Somandepalli K, Goldberg SB, Imel ZE, et al. Identifying Therapist and Client Personae for Therapeutic Alliance Estimation. In: Interspeech 2019. 2019, ISCA, pp 1901–5.

Miner AS, Haque A, Fries JA, Fleming SL, Wilfley DE, Terence Wilson G, et al. Assessing the accuracy of automatic speech recognition for psychotherapy. npj Digit Med. 2020;3:82.

Chen Z, Flemotomos N, Singla K, Creed TA, Atkins DC, Narayanan S An automated quality evaluation framework of psychotherapy conversations with local quality estimates. Computer Speech Lang. 2022;75:101380.

Demiris G, Oliver DP, Washington KT, Chadwick C, Voigt JD, Brotherton S, et al. Examining spoken words and acoustic features of therapy sessions to understand family caregivers’ anxiety and quality of life. Int J Med Inform. 2022;160:104716.

Miner AS, Fleming SL, Haque A, Fries JA, Althoff T, Wilfley DE, et al. A computational approach to measure the linguistic characteristics of psychotherapy timing, responsiveness, and consistency. npj Ment Health Res. 2022;1:19.

Wawer A, Chojnicka I, Okruszek L, Sarzynska-Wawer J. Single and cross-disorder detection for autism and schizophrenia. Cogn Comput. 2022;14:461–73.

Flemotomos N, Martinez VR, Chen Z, Singla K, Ardulov V, Peri R, et al. Am I a good therapist? Automated evaluation of psychotherapy skills using speech and language technologies. CoRR, Abs. 2021;2102:10.3758.

Flemotomos N, Martinez VR, Chen Z, Creed TA, Atkins DC, Narayanan S. Automated quality assessment of cognitive behavioral therapy sessions through highly contextualized language representations. PLoS ONE. 2021;16:e0258639.

Min DJ, Pérez-Rosas V, Mihalcea R. Evaluating automatic speech recognition quality and its impact on counselor utterance coding. In: Proceedings of the seventh workshop on computational linguistics and clinical psychology: improving access. Association for Computational Linguistics; 2021. p. 159–68.

Pérez-Rosas V, Sun X, Li C, Wang Y, Resnicow K, Mihalcea R. Analyzing the quality of counseling conversations: the tell-tale signs of high-quality counseling. In: Proceedings of the eleventh international conference on language resources and evaluation (LREC 2018). 2018. European Language Resources Association (ELRA): Miyazaki, Japan https://aclanthology.org/L18-1591 (Accessed 9 Mar2022).

Pérez-Rosas V, Wu X, Resnicow K, Mihalcea R. What makes a good counselor? learning to distinguish between high-quality and low-quality counseling conversations. Proceedings of the 57th annual meeting of the association for computational linguistics. Florence, Italy: Association for Computational Linguistics; 2019. p. 926–35.

Tavabi L, Stefanov K, Zhang L, Borsari B, Woolley JD, Scherer S, et al. Multimodal automatic coding of client behavior in motivational interviewing. In: Proceedings of the 2020 international conference on multimodal interaction. ACM: Virtual Event Netherlands; 2020. p. 406–13.

Xiao B, Huang C, Imel ZE, Atkins DC, Georgiou P, Narayanan SS. A technology prototype system for rating therapist empathy from audio recordings in addiction counseling. PeerJ Comput Sci. 2016;2:e59.

Xiao B, Imel ZE, Georgiou PG, Atkins DC, Narayanan SS. ‘Rate My Therapist’: automated detection of empathy in drug and alcohol counseling via speech and language processing. PLoS ONE. 2015;10:e0143055.

Wiegersma S, Nijdam MJ, van Hessen AJ, Truong KP, Veldkamp BP, Olff M. Recognizing hotspots in brief Eclectic psychotherapy for PTSD by text and audio mining. Eur J Psychotraumatol. 2020;11:1726672.

Nitti M, Ciavolino E, Salvatore S, Gennaro A. Analyzing psychotherapy process as intersubjective sensemaking: an approach based on discourse analysis and neural networks. Psychother Res. 2010;20:546–63.

Sharma A, Miner AS, Atkins DC, Althoff T. A computational approach to understanding empathy expressed in text-based mental health support. Association for Computational Linguistics; 2020. p. 5263–76.

Wadden D, August T, Li Q, Althoff T. The effect of moderation on online mental health conversations. Proc Int AAAI Conf Web Soc Media. 2021;15:751–63.

Hull TD, Levine J, Bantilan N, Desai AN, Majumder MS. Analyzing digital evidence from a telemental health platform to assess complex psychological responses to the COVID-19 pandemic: content analysis of text messages. JMIR Form Res. 2021;5:e26190.

Zhang J, Filbin R, Morrison C, Weiser J, Danescu-Niculescu-Mizil C. Finding your voice: the linguistic development of mental health counselors. Proc. 57th Annual Meeting of the Association for Computational Linguistics. Florence, Italy: Association for Computational Linguistics; 2019. p. 936–47.

Wei J, Finn K, Templeton E, Wheatley T, Vosoughi S. Linguistic complexity loss in text-based therapy. In: Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. 2021. Association for Computational Linguistics; 2021. p. 4450–59.

Moyers T, Martin T, Catley D, Harris KJ, Ahluwalia JS. Assessing the integrity of motivational interviewing interventions: Reliability of the motivational interviewing skills code. Behav Cogn Psychother. 2003;31:177–84.

Wu Z, Helaoui R, Reforgiato Recupero D, Riboni D Towards Low-Resource Real-Time Assessment of Empathy in Counselling. In: Proceedings of the Seventh Workshop on Computational Linguistics and Clinical Psychology: Improving Access. 2021. Association for Computational Linguistics: Online, pp 204–16.

Alsentzer E, Murphy JR, Boag W, Weng W-H, Jin D, Naumann T, et al. Publicly Available Clinical. In Proceedings of the 2nd Clinical Natural Language Processing Workshop. Association for Computational Linguistics. 2019.

Ding X, Lybarger K, Tauscher J, Cohen T. Improving classification of infrequent cognitive distortions: domain-specific model vs. data augmentation. Proceedings of the 2022 conference of the North American chapter of the association for computational linguistics: human language technologies: Student Research Workshop. Seattle, Washington: Association for Computational Linguistics: Hybrid; 2022. p. 68–75.

Burkhardt H, Pullmann M, Hull T, Aren P, Cohen T. Comparing emotion feature extraction approaches for predicting depression and anxiety. Proceedings of the eighth workshop on computational linguistics and clinical psychology. Seattle, USA: Association for Computational Linguistics; 2022. p. 105–15.

Salmi S, Mérelle S, Gilissen R, van der Mei R, Bhulai S. Detecting changes in help seeker conversations on a suicide prevention helpline during the COVID− 19 pandemic: in-depth analysis using encoder representations from transformers. BMC Public Health. 2022;22:530.

Sharma A, Lin IW, Miner AS, Atkins DC, Althoff T. Towards facilitating empathic conversations in online mental health support: a reinforcement learning approach. In Proceedings of the Web Conference 2021, 2021. pp. 194–205.

Srivastava A, Suresh T, Lord SP, Akhtar MS, Chakraborty T. Counseling summarization using mental health knowledge guided utterance filtering. Proceedings of the 28th ACM SIGKDD conference on knowledge discovery and data mining. Washington, DC: ACM; 2022. p. 3920–30.

Arevian AC, Bone D, Malandrakis N, Martinez VR, Wells KB, Miklowitz DJ, et al. Clinical state tracking in serious mental illness through computational analysis of speech. PLoS ONE. 2020;15:e0225695.

Chen Z, Singla K, Gibson J, Can D, Imel ZE, Atkins DC, et al. Improving the prediction of therapist behaviors in addiction counseling by exploiting class confusions. ICASSP 2019—2019 IEEE international conference on acoustics, speech and signal processing (ICASSP). Brighton, United Kingdom: IEEE; 2019. p. 6605–9.

Mao K, Zhang W, Wang DB, Li A, Jiao R, Zhu Y, et al. Prediction of depression severity based on the prosodic and semantic features with bidirectional LSTM and Time Distributed CNN. IEEE Trans Affective Comput. 2022;1.

Pérez-Rosas V, Mihalcea R, Resnicow K, Singh S, An L. Understanding and predicting empathic behavior in counseling theraaspy. Proceedings of the 55th annual meeting of the association for computational linguistics (Volume 1: Long Papers). Vancouver, Canada: Association for Computational Linguistics; 2017. p. 1426–35.

Schultebraucks K, Yadav V, Shalev AY, Bonanno GA, Galatzer-Levy IR. Deep learning-based classification of posttraumatic stress disorder and depression following trauma utilizing visual and auditory markers of arousal and mood. Psychol Med. 2022;52:957–67.

Singla K, Chen Z, Flemotomos N, Gibson J, Can D, Atkins D, et al. Using prosodic and lexical information for learning utterance-level behaviors in psychotherapy. In: Interspeech 2018. ISCA; 2018. p. 3413-7.

Xu S, Yang Z, Chakraborty D, Tahir Y, Maszczyk T, Chua VYH, et al. Automatic verbal analysis of interviews with schizophrenic patients. 2018 IEEE 23rd international conference on digital signal processing (DSP). Shanghai, China: IEEE; 2018. p. 1–5.

Crangle CE, Wang R, Guimaraes MP, Nguyen MU, Nguyen DT, Suppes P. Machine learning for the recognition of emotion in the speech of couples in psychotherapy using the Stanford Suppes Brain Lab Psychotherapy Dataset. CoRR 2019. Preprint at http://arxiv.org/abs/1901.04110.

Carcone AI, Hasan M, Alexander GL, Dong M, Eggly S, Brogan Hartlieb K, et al. Developing machine learning models for behavioral coding. J Pediatr Psychol. 2019;44:289–99.

Just SA, Haegert E, Kořánová N, Bröcker A-L, Nenchev I, Funcke J, et al. Modeling Incoherent Discourse in Non-Affective Psychosis. Front Psychiatry. 2020;11:846.

Spruit M, Verkleij S, de Schepper K, Scheepers F. Exploring language markers of mental health in psychiatric stories. Appl Sci. 2022;12:2179.

Carrillo F, Mota N, Copelli M, Ribeiro S, Sigman M, Cecchi G, et al. Emotional Intensity analysis in Bipolar subjects. Preprint at http://arxiv.org/abs/1606.02231.

Alonso-Sánchez MF, Ford SD, MacKinley M, Silva A, Limongi R, Palaniyappan L. Progressive changes in descriptive discourse in First Episode Schizophrenia: a longitudinal computational semantics study. Schizophrenia. 2022;8:36.

Si D, Cheng SC, Xing R, Liu C, Wu HY. Scaling up prediction of psychosis by natural language processing. 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, 2019. pp. 339–47, https://doi.org/10.1109/ICTAI.2019.00055.

Corcoran CM, Carrillo F, Fernández-Slezak D, Bedi G, Klim C, Javitt DC, et al. Prediction of psychosis across protocols and risk cohorts using automated language analysis. World Psychiatry. 2018;17:67–75.

Mota NB, Ribeiro M, Malcorra BLC, Atídio JP, Haguiara B, Gadelha A. Happy thoughts: What computational assessment of connectedness and emotional words can inform about early stages of psychosis. Schizophrenia Res. 2022;259:38–47.

Palaniyappan L, Mota NB, Oowise S, Balain V, Copelli M, Ribeiro S, et al. Speech structure links the neural and socio-behavioural correlates of psychotic disorders. Prog Neuro-Psychopharmacol Biol Psychiatry. 2019;88:112–20.

Alonso-Sánchez MF, Limongi R, Gati J, Palaniyappan L. Language network self-inhibition and semantic similarity in first-episode schizophrenia: A computational-linguistic and effective connectivity approach. Schizophrenia Res. 2022;259:97–103.

Xezonaki D, Paraskevopoulos G, Potamianos A, Narayanan S. Affective conditioning on hierarchical networks applied to depression detection from transcribed clinical interviews. Preprint at http://arxiv.org/abs/2006.08336.

Dirkse D, Hadjistavropoulos HD, Hesser H, Barak A. Linguistic analysis of communication in therapist-assisted internet-delivered cognitive behavior therapy for generalized anxiety disorder. Cogn Behav Ther. 2015;44:21–32.

Carrillo F, Sigman M, Fernández Slezak D, Ashton P, Fitzgerald L, Stroud J, et al. Natural speech algorithm applied to baseline interview data can predict which patients will respond to psilocybin for treatment-resistant depression. J Affect Disord. 2018;230:84–6.

Howes C, Purver M, McCabe R. Linguistic indicators of severity and progress in online text-based therapy for depression. Proceedings of the workshop on computational linguistics and clinical psychology: from linguistic signal to clinical reality. Baltimore, Maryland, USA: Association for Computational Linguistics; 2014. p. 7–16.

He Q, Veldkamp BP, Glas CAW, de Vries T. Automated assessment of patients’ self-narratives for posttraumatic stress disorder screening using natural language processing and text mining. Assessment. 2017;24:157–72.

Son Y, Clouston SAP, Kotov R, Eichstaedt JC, Bromet EJ, Luft BJ, et al. World Trade Center responders in their own words: predicting PTSD symptom trajectories with AI-based language analyses of interviews. Psychol Med. 2021;53:918–26.

Weintraub MJ, Posta F, Arevian AC, Miklowitz DJ. Using machine learning analyses of speech to classify levels of expressed emotion in parents of youth with mood disorders. J Psychiatr Res. 2021;136:39–46.

Tseng S-Y, Baucom B, Georgiou P. Approaching human performance in behavior estimation in couples therapy using deep sentence embeddings. In: Interspeech 2017. ISCA; 2017. p 3291–95.

Provoost S, Ruwaard J, van Breda W, Riper H, Bosse T. Validating automated sentiment analysis of online cognitive behavioral therapy patient texts: an exploratory study. Front Psychol. 2019;10:1065.

Tanana MJ, Soma CS, Kuo PB, Bertagnolli NM, Dembe A, Pace BT, et al. How do you feel? Using natural language processing to automatically rate emotion in psychotherapy. Behav Res. 2021. https://doi.org/10.3758/s13428-020-01531-z.

Glauser T, Santel D, DelBello M, Faist R, Toon T, Clark P, et al. Identifying epilepsy psychiatric comorbidities with machine learning. Acta Neurol Scand. 2020;141:388–96.

Kshirsagar R, Morris R, Bowman S. Detecting and explaining crisis. Proceedings of the fourth workshop on computational linguistics and clinical psychology—from linguistic signal to clinical reality. Vancouver, BC: Association for Computational Linguistics; 2017. p. 66–73.

Xu Z, Xu Y, Cheung F, Cheng M, Lung D, Law YW, et al. Detecting suicide risk using knowledge-aware natural language processing and counseling service data. Soc Sci Med. 2021;283:114176.

Baggott MJ, Kirkpatrick MG, Bedi G, de Wit H. Intimate insight: MDMA changes how people talk about significant others. J Psychopharmacol. 2015;29:669–77.

Norman KP, Govindjee A, Norman SR, Godoy M, Cerrone KL, Kieschnick DW, et al. Natural language processing tools for assessing progress and outcome of two veteran populations: cohort study from a novel online intervention for posttraumatic growth. JMIR Form Res. 2020;4:e17424.

Shapira N, Lazarus G, Goldberg Y, Gilboa-Schechtman E, Tuval-Mashiach R, Juravski D, et al. Using computerized text analysis to examine associations between linguistic features and clients’ distress during psychotherapy. J Counseling Psychol. 2021;68:77–87.

Burkhardt HA, Alexopoulos GS, Pullmann MD, Hull TD, Areán PA, Cohen T. Behavioral activation and depression symptomatology: longitudinal assessment of linguistic indicators in text-based therapy sessions. J Med Internet Res. 2021;23:e28244.

Malins S, Figueredo G, Jilani T, Long Y, Andrews J, Rawsthorne M, et al. Developing an automated assessment of in-session patient activation for psychological therapy: codevelopment approach. JMIR Med Inf. 2022;10:e38168.

SPark S, Kim D, Oh A. Conversation model fine-tuning for classifying client utterances in counseling dialogues. 2019. IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), IEEE. p. 339–47.

Nook EC, Hull TD, Nock MK, Somerville LH. Linguistic measures of psychological distance track symptom levels and treatment outcomes in a large set of psychotherapy transcripts. Proc Natl Acad Sci USA. 2022;119:e2114737119.

Lee F-T, Hull D, Levine J, Ray B, McKeown K. Identifying therapist conversational actions across diverse psychotherapeutic approaches. Proceedings of the sixth workshop on computational linguistics and clinical psychology. Minneapolis, Minnesota: Association for Computational Linguistics; 2019. p. 12–23.

Atkins DC, Steyvers M, Imel ZE, Smyth P. Scaling up the evaluation of psychotherapy: evaluating motivational interviewing fidelity via statistical text classification. Implement Sci. 2014;9:49.

Can D, Marín RA, Georgiou PG, Imel ZE, Atkins DC, Narayanan SS. “It sounds like…”: A natural language processing approach to detecting counselor reflections in motivational interviewing. J Counseling Psychol. 2016;63:343–50.

Cao J, Tanana M, Imel ZE, Poitras E, Atkins DC, Srikumar V. Observing dialogue in therapy: categorizing and forecasting behavioral codes. Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics; 2019. p. 5599–611.

Pérez-Rosas V, Mihalcea R, Resnicow K, Singh S, Ann L, Goggin KJ, et al. Predicting counselor behaviors in motivational interviewing encounters. Proceedings of the 15th conference of the european chapter of the association for computational linguistics: volume 1, long papers. Valencia, Spain: Association for Computational Linguistics; 2017. p. 1128–37.

Tanana M, Hallgren KA, Imel ZE, Atkins DC, Srikumar V. A comparison of natural language processing methods for automated coding of motivational interviewing. J Subst Abus Treat. 2016;65:43–50.

Chen Z, Flemotomos N, Imel ZE, Atkins DC, Narayanan S. Leveraging open data and task augmentation to automated behavioral coding of psychotherapy conversations in low-resource scenarios. 2022. In Findings of the Association for Computational Linguistics: EMNLP 2022. 2022. p. 5787–95.

Wu Z, Helaoui R, Reforgiato Recupero D, Riboni D. Towards automated counselling decision-making: remarks on therapist action forecasting on the AnnoMI dataset. In: Interspeech 2022. ISCA; 2022. p. 1906–10.

Hudon A, Beaudoin M, Phraxayavong K, Dellazizzo L, Potvin S, Dumais A. Implementation of a machine learning algorithm for automated thematic annotations in avatar: A linear support vector classifier approach. Health Inform J. 2022;28:146045822211424.

Liu Z, Peach RL, Lawrance EL, Noble A, Ungless MA, Barahona M. Listening to mental health crisis needs at scale: using natural language processing to understand and evaluate a mental health crisis text messaging service. Front Digit Health. 2021;3:779091.

Mehta M, Caperton D, Axford K, Weitzman L, Atkins D, Srikumar V, et al. Psychotherapy is not one thing: simultaneous modeling of different therapeutic approaches. Proceedings of the eighth workshop on computational linguistics and clinical psychology. Seattle, USA: Association for Computational Linguistics; 2022. p. 47–58.

Gibson J, Can D, Xiao B, Imel ZE, Atkins DC, Georgiou P, et al. A deep learning approach to modeling empathy in addiction counseling; 2016. p. 1447-51.

Zhang J, Danescu-Niculescu-Mizil C. Balancing objectives in counseling conversations: advancing forwards or looking backwards. In: Proceedings of the 58th annual meeting of the association for computational linguistics. 2020. Association for Computational Linguistics 2020. p. 5276–89.

Goldberg SB, Tanana M, Imel ZE, Atkins DC, Hill CE, Anderson T. Can a computer detect interpersonal skills? Using machine learning to scale up the Facilitative Interpersonal Skills task. Psychother Res. 2021;31:281–8.

Atzil-Slonim D, Juravski D, Bar-Kalifa E, Gilboa-Schechtman E, Tuval-Mashiach R, Shapira N, et al. Using topic models to identify clients’ functioning levels and alliance ruptures in psychotherapy. Psychotherapy. 2021. https://doi.org/10.1037/pst0000362.