Abstract

Social determinants of health (SDoH) play a critical role in patient outcomes, yet their documentation is often missing or incomplete in the structured data of electronic health records (EHRs). Large language models (LLMs) could enable high-throughput extraction of SDoH from the EHR to support research and clinical care. However, class imbalance and data limitations present challenges for this sparsely documented yet critical information. Here, we investigated the optimal methods for using LLMs to extract six SDoH categories from narrative text in the EHR: employment, housing, transportation, parental status, relationship, and social support. The best-performing models were fine-tuned Flan-T5 XL for any SDoH mentions (macro-F1 0.71), and Flan-T5 XXL for adverse SDoH mentions (macro-F1 0.70). The benefit of adding LLM-generated synthetic data to training varied across model architecture and size, but it improved the performance of smaller Flan-T5 models (delta F1 +0.12 to +0.23). Our best fine-tuned models outperformed ChatGPT-family models in the zero- and few-shot settings, except GPT4 with 10-shot prompting for adverse SDoH. Fine-tuned models were less likely than ChatGPT to change their prediction when race/ethnicity and gender descriptors were added to the text, suggesting less algorithmic bias (p < 0.05). Our models identified 93.8% of patients with adverse SDoH, while ICD-10 codes captured 2.0%. These results demonstrate the potential of LLMs in improving real-world evidence on SDoH and assisting in identifying patients who could benefit from resource support.

Introduction

Health disparities have been extensively documented across medical specialties1,2,3. However, our ability to address these disparities remains limited due to an insufficient understanding of their contributing factors. Social determinants of health (SDoH) are defined by the World Health Organization as “the conditions in which people are born, grow, live, work, and age…shaped by the distribution of money, power, and resources at global, national, and local levels”4. SDoH may be adverse or protective, impacting health outcomes at multiple levels as they likely play a major role in disparities by determining access to and quality of medical care. For example, a patient cannot benefit from an effective treatment if they don’t have transportation to make it to the clinic. There is also emerging evidence that exposure to adverse SDoH may directly affect physical and mental health via inflammatory and neuro-endocrine changes5,6,7,8. In fact, SDoH are estimated to account for 80–90% of modifiable factors impacting health outcomes9.

SDoH are rarely documented comprehensively in the structured data of electronic health records (EHRs)10,11,12, creating an obstacle to research and clinical care. Instead, issues related to SDoH are most frequently described in the free text of clinic notes, which creates a bottleneck for incorporating these critical factors into databases to research the full impact and drivers of SDoH, and for proactively identifying patients who may benefit from additional social work and resource support.

Natural language processing (NLP) could address these challenges by automating the abstraction of these data from clinical texts. Prior studies have demonstrated the feasibility of NLP for extracting a range of SDoH13,14,15,16,17,18,19,20,21,22,23. Yet, there remains a need to optimize performance for the high-stakes medical domain and to evaluate state-of-the-art language models (LMs) for this task. In addition to anticipated performance changes scaling with model size, large LMs may support EHR mining via data augmentation. Across medical domains, data augmentation can boost performance and alleviate domain transfer issues and so is an especially promising approach for the nearly ubiquitous challenge of data scarcity in clinical NLP24,25,26. The advanced capabilities of state-of-the-art large LMs to generate coherent text open new avenues for data augmentation through synthetic text generation. However, the optimal methods for generating and utilizing such data remain uncertain. Large LM-generated synthetic data may also be a means to distill knowledge represented in larger LMs to more computationally accessible smaller LMs27. In addition, few studies assess the potential bias of SDoH information extraction methods across patient populations. LMs could contribute to the health inequity crisis if they perform differently in diverse populations and/or recapitulate societal prejudices28. Therefore, understanding bias is critical for future development and deployment decisions.

Here, we characterize optimal methods, including the role of synthetic clinical text, for SDoH extraction using large language models. Specifically, we develop models to extract six key SDoH: employment status, housing issues, transportation issues, parental status, relationship, and social support. We investigate the value of incorporating large LM-generated synthetic SDoH data during the fine-tuning stage. We assess the performance of large LMs, including GPT3.5 and GPT4, in zero- and few-shot settings for identifying SDoH, and we explore the potential for algorithmic bias in LM predictions. Our methods could yield real-world evidence on SDoH, assist in identifying patients who could benefit from resource and social work support, and draw attention to the under-documented impact of social factors on health outcomes.

Results

Model performance

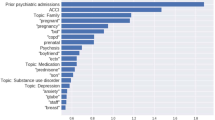

Table 1 shows the performance of fine-tuned models for both SDoH tasks on the radiotherapy test set. The best-performing model for any SDoH mention task was Flan-T5 XXL (3 out of 6 categories) using synthetic data (Macro-F1 0.71). The best-performing model for the adverse SDoH mention task was Flan-T5 XL without synthetic data (Macro-F1 0.70). In general, the Flan-T5 models outperformed BERT, and model performance scaled with size. However, although the Flan-T5 XL and XXL models were the largest models evaluated in terms of total parameters, they had the fewest tuned parameters because LoRA was used for their fine-tuning: 9.5 M and 18 M for Flan-T5 XL and XXL, respectively, compared to 110 M for BERT. The negative class generally had the best performance overall, followed by Relationship and Employment. Performance varied considerably across the models for the other classes.

For both tasks, the best-performing models with synthetic data augmentation used sentences from both rounds of GPT3.5 prompting. Synthetic data augmentation tended to lead to the largest performance improvements for classes with few instances in the training dataset and for which the model trained on gold-only data had very low performance (Housing, Parent, and Transportation).

The performance of the best-performing models for each task on the immunotherapy and MIMIC-III datasets is shown in Table 2. Performance was similar in the immunotherapy dataset, which represents a separate but similar patient population treated at the same hospital system. We observed a performance decrement in the MIMIC-III dataset, representing a more dissimilar patient population from a different hospital system. Performance was similar between models developed with and without synthetic data.

Ablation studies

The ablation studies showed a consistent deterioration in model performance across all SDoH tasks and categories as the volume of real gold SDoH sentences progressively decreased, although models that included synthetic data maintained performance at higher levels throughout and were less sensitive to decreases in gold data (Fig. 1, Supplementary Table 1). When synthetic data were included in the training, performance was maintained until ~50% of gold data were removed from the train set. Conversely, without synthetic data, performance dropped after about 10–20% of the gold data were removed from the train set, mimicking a true low-resource setting.

Performance in Macro-F1 of Flan-T5 XL models fine-tuned using gold data only (orange line) and gold and synthetic data (green line), as gold-labeled sentences are gradually reduced by undersample value from the training dataset for (a) the adverse social determinant of health (SDoH) mention task and (b) the any SDoH mention task. The full gold-labeled training set comprises 29,869 sentences, augmented with 1800 synthetic SDoH sentences, and tested on the in-domain RT test dataset. SDoH Social determinants of health.

Error analysis

The leading discrepancies between ground truth and model prediction for each task are in Supplementary Table 2. Qualitative analysis revealed 4 distinct error patterns: human annotator error; false positives and false negatives for Relationship and Support labels in the presence of any family mentions that did not correlate with the correct label; incorrect labels due to information that was present in the note but external to the sentence (and therefore not accessible to the model) or that required implied/assumed knowledge; and incorrect labeling of a non-adverse SDoH as an adverse SDoH.

ChatGPT-family model performance

When evaluating our fine-tuned Flan-T5 models on the synthetic test dataset against GPT-turbo-0613 and GPT4–0613, our model surpassed the performance of the top-performing 10-shot learning GPT model by a margin of Macro-F1 0.03 on the any SDoH task (p < 0.01), but fell short on the adverse SDoH task (p < 0.01) (Table 3, Fig. 2).

Comparison of model performance between our fine-tuned Flan-T5 models against zero- and 10-shot GPT. Macro-F1 was measured using our manually validated synthetic dataset. The GPT-turbo-0613 version of GPT3.5 and the GPT4–0613 version of GPT4 were used. Error bars indicate the 95% confidence intervals. LLM large language model.

Language model bias evaluation

Both fine-tuned Flan-T5 models and ChatGPT provided discrepant classification for synthetic sentence pairs with and without demographic information injected (Fig. 3). However, the discrepancy rate of our fine-tuned models was nearly half that of ChatGPT: 14.3% vs. 21.5% of sentence pairs for any SDoH (P = 0.007) and 9.9% vs. 18.2% of sentence pairs for adverse SDoH (P = 0.005) for fine-tuned Flan-T5 vs. ChatGPT, respectively. ChatGPT was significantly more likely to change its classification when a female gender was injected compared to a male gender for the Any SDoH task (P = 0.01); no other within-model comparisons were statistically significant. Sentences gold-labeled as Support for both any SDoH and adverse SDoH mentions were most likely to lead to discrepant predictions for ChatGPT (56.3% (27/48) and 21.0% (9/29), respectively). Employment gold-labeled sentences were most likely to lead to discrepant predictions for the any SDoH mention fine-tuned model (14.4% (13/90)), and Transportation gold-labeled sentences for the adverse SDoH mention fine-tuned model (12.2% (6/49)).
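The binary comparisons of discrepancy rates rely on 2-proportion z tests. The following is a minimal sketch of such a test using only the Python standard library. The function name and the counts in the example are hypothetical, and the pooled-variance form shown here is an assumption rather than a confirmed detail of the analysis.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test for a difference between two proportions.

    x1/n1 and x2/n2 are the discrepant-pair counts and totals for
    each model. Uses the pooled standard error (an assumption).
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts, not taken from the study.
z, p = two_proportion_z_test(20, 100, 40, 100)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A negative z here means the first model changed its prediction less often than the second.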

The proportion of synthetic sentence pairs with and without demographics injected that led to a classification mismatch, meaning that the model predicted a different SDoH label for each sentence in the pair. Results are shown across race/ethnicity and gender for (a) the any SDoH mention task and (b) the adverse SDoH mention task. Asterisks indicate statistical significance (P ≤ 0.05) by chi-squared tests for multi-class comparisons and 2-proportion z tests for binary comparisons. LLM large language model, SDoH Social determinants of health.

Comparison with structured EHR data

Our best-performing models for any SDoH mention correctly identified 95.7% (89/93) of patients with at least one SDoH mention, and 93.8% (45/48) of patients with at least one adverse SDoH mention (Supplementary Tables 3 and 4). SDoH entered as structured Z-codes in the EHR during the same timespan identified 2.0% (1/48) of patients with at least one adverse SDoH mention (all mapped Z-codes were adverse) (Supplementary Table 5). Supplementary Figs. 1 and 2 show that patient-level performance when using model predictions out-performed Z-codes by a factor of at least 3 for every label for each task (Macro-F1 0.78 vs. 0.17 for any SDoH mention and 0.71 vs. 0.17 for adverse SDoH mention).
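One plausible way to move from sentence-level predictions to the patient-level figures above is to take the union of labels over all of a patient's sentences. The sketch below illustrates that idea. The aggregation rule, function names, and label strings are assumptions for illustration and are not confirmed by the text.

```python
from collections import defaultdict


def patient_level_labels(sentence_labels):
    """Union sentence-level label sets into one label set per patient.

    sentence_labels: iterable of (patient_id, set_of_labels) pairs.
    """
    by_patient = defaultdict(set)
    for patient_id, labels in sentence_labels:
        by_patient[patient_id] |= set(labels)
    return dict(by_patient)


def patient_recall(gold, predicted):
    """Share of patients with at least one gold label who also have
    at least one predicted label (an assumed definition)."""
    positives = [pid for pid, labels in gold.items() if labels]
    found = [pid for pid in positives if predicted.get(pid)]
    return len(found) / len(positives) if positives else float("nan")


# Hypothetical toy data.
gold = patient_level_labels([("p1", {"EMPLOYMENT"}), ("p1", set()),
                             ("p2", {"HOUSING"}), ("p3", set())])
pred = patient_level_labels([("p1", set()), ("p1", {"EMPLOYMENT"}),
                             ("p2", set()), ("p3", set())])
print(patient_recall(gold, pred))
```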

Discussion

We developed multilabel classifiers to identify the presence of 6 different SDoH documented in clinical notes, demonstrating the potential of large LMs to improve the collection of real-world data on SDoH and support the appropriate allocation of resource support to patients who need it most. We identified a performance gap between a more traditional BERT classifier and larger Flan-T5 XL and XXL models. Our fine-tuned models outperformed ChatGPT-family models with zero- and few-shot learning for most SDoH classes and were less sensitive to the injection of demographic descriptors. Compared to diagnostic codes entered as structured data, text-extracted data identified 91.8% more patients with an adverse SDoH. We also contribute new annotation guidelines as well as synthetic SDoH datasets to the research community.

All of our models performed well at identifying sentences that do not contain SDoH mentions (F1 ≥ 0.99 for all). For any SDoH mentions, performance was worst for parental status and transportation issues. For adverse SDoH mentions, performance was worst for parental status and social support. These findings are unsurprising given the marked class imbalance for all SDoH labels—only 3% of sentences in our training set contained any SDoH mention. Given this imbalance, our models’ ability to identify sentences that contain SDoH language is impressive. In addition, these SDoH descriptions are semantically and linguistically complex. In particular, sentences describing social support are highly variable, given the variety of ways individuals can receive support from their social systems during care. Interestingly, our best-performing models demonstrated strong performance in classifying housing issues (Macro-F1 0.67), which was our scarcest label with only 20 instances in the training dataset. This speaks to the potential of large LMs in improved real-world data collection for very sparsely documented information, which is the most likely to be missed via manual review.

The recent advancements in large LMs have opened a pathway for synthetic text generation that may improve model performance via data augmentation and enable experiments that better protect patient privacy29. This is an emerging area of research that falls within a larger body of work on synthetic patient data across a range of data types and end-uses30,31. Our study is among the first to evaluate the role of contemporary generative large LMs for synthetic clinical text to help unlock the value of unstructured data within the EHR. We were particularly interested in synthetic clinical data as a means to address the aforementioned scarcity of SDoH documentation, and our findings may provide generalizable insights for the common clinical NLP challenge of class imbalance—many clinically important data are difficult to identify among the huge amounts of text in a patient’s EHR. We found variable benefits of synthetic data augmentation across model architecture and size; the strategy was most beneficial for the smaller Flan-T5 models and for the rarest classes where performance was dismal using gold data alone. Importantly, the ablation studies demonstrated that only approximately half of the gold-labeled dataset was needed to maintain performance when synthetic data was included in training, although synthetic data alone did not produce high-quality models. Of note, we aimed to understand whether synthetic data for augmentation could be automatically generated using ChatGPT-family models without additional human annotation, and so it is possible that manual gold-labeling could further enhance the value of these data. However, this would decrease the value of synthetic data in terms of reducing annotation effort.

Our novel approach to generating synthetic clinical sentences also enabled us to explore the potential for ChatGPT-family models, GPT3.5 and GPT4, for supporting the collection of SDoH information from the EHR. We found that fine-tuning LMs that are orders of magnitude smaller than ChatGPT-family models, even with our relatively small dataset, generally out-performed zero-shot and few-shot learning with ChatGPT-family models, consistent with prior work evaluating large LMs for clinical uses32,33,34. Nevertheless, these models showed promising performance given that they were not explicitly trained for clinical tasks, with the caveat that it is hard to make definite conclusions based on synthetic data. Additional prompt engineering could improve the performance of ChatGPT-family models, such as developing prompts that provide details of the annotation guidelines as done by Ramachandran et al.34. This is an area for future study, especially once these models can be readily used with real clinical data. With additional prompt engineering and model refinement, performance of these models could improve in the future and provide a promising avenue to extract SDoH while reducing the human effort needed to label training datasets.

It is well-documented that LMs learn the biases, prejudices, and racism present in the language they are trained on35,36,37,38. Thus, it is essential to evaluate how LMs could propagate existing biases, which in clinical settings could amplify the health disparities crisis1,2,3. We were especially concerned that SDoH-containing language may be particularly prone to eliciting these biases. Both our fine-tuned models and ChatGPT altered their SDoH classification predictions when demographics and gender descriptors were injected into sentences, although the fine-tuned models were significantly more robust than ChatGPT. Although not significantly different, it is worth noting that for both the fine-tuned models and ChatGPT, Hispanic and Black descriptors were most likely to change the classification for any SDoH and adverse SDoH mentions, respectively. This lack of significance may be due to the small numbers in this evaluation, and future work is critically needed to further evaluate bias in clinical LMs. We have made our paired demographic-injected sentences openly available for future efforts on LM bias evaluation.

SDoH are notoriously under-documented in existing EHR structured data10,11,12,39. Our findings that text-extracted SDoH information was better able to identify patients with adverse SDoH than relevant billing codes are in agreement with prior work showing under-utilization of Z-codes10,11. Most EHR systems have other ways to enter SDoH information as structured data, which may have more complete documentation; however, these did not exist for most of our target SDoH. Lybarger et al. evaluated other EHR sources of structured SDoH data and similarly found that NLP methods are a complementary source of SDoH information and were able to identify 10–30% of patients with tobacco, alcohol, and homelessness risk factors documented only in unstructured text22.

There have been several prior studies developing NLP methods to extract SDoH from the EHR13,14,15,16,17,18,19,20,21,40. The most common SDoH targeted in prior efforts include smoking history, substance use, alcohol use, and homelessness23. In addition, many prior efforts focus only on text in the Social History section of notes. In a recent shared task on alcohol, drug, tobacco, employment, and living situation event extraction from Social History sections, pre-trained LMs similarly provided the best performance41. Using this dataset, one study found that sequence-to-sequence approaches outperformed classification approaches, in line with our findings42. In addition to our technical innovations, our work adds to prior efforts by investigating SDoH which are less commonly targeted for extraction but nonetheless have been shown to impact healthcare43,44,45,46,47,48,49,50,51. We also developed methods that can mine information from full clinic notes, not only from Social History sections—a fundamentally more challenging task with a much larger class imbalance. Clinically-impactful SDoH information is often scattered throughout other note sections, and many note types, such as many inpatient progress notes and notes written by nurses and social workers, do not consistently contain Social History sections.

Our study has limitations. First, our training and out-of-domain datasets come from a predominantly white population treated at hospitals in Boston, Massachusetts, in the United States of America. This limits the generalizability of our findings. We could not exhaustively assess the many methods to generate synthetic data from ChatGPT. Instead, we chose to investigate prompting methods that could be easily reproduced by others and did not require extensive task-specific optimization, as this is likely not feasible for the many clinical NLP tasks for which one may wish to generate synthetic data. Incorporating real clinical examples in the prompt may improve the quality of the synthetic data and is an area of future research when large generative LMs become more widely available for use with protected health information and within the resource constraints of academic researchers and healthcare systems. Because we could not evaluate ChatGPT-family models using protected health information, our evaluations are limited to manually-verified synthetic sentences. Thus, our reported performance may not completely reflect true performance on real clinical text. Because the synthetic sentences were generated using ChatGPT itself, and ChatGPT presumably has not been trained on clinical text, we hypothesize that, if anything, performance would be worse on real clinical data. Finally, our models can only be as good as the annotated corpus. SDoH annotation is challenging due to its conceptually complex nature, especially for the Support tag, and labeling may also be subject to annotator bias52, all of which may impact ultimate performance.

Our findings highlight the potential of large LMs to improve real-world data collection and identification of SDoH from the EHR. In addition, synthetic clinical text generated by large LMs may enable better identification of rare events documented in the EHR, although more work is needed to optimize generation methods. Our fine-tuned models were less prone to bias than ChatGPT-family models and outperformed for most SDoH classes, especially any SDoH mentions, despite being orders of magnitude smaller. In the future, these models could improve our understanding of drivers of health disparities by improving real-world evidence and could directly support patient care by flagging patients who may benefit most from proactive resource and social work referral.

Methods

Data

Table 4 describes the patient populations of the datasets used in this study. Gender and race/ethnicity data and descriptors were collected from the EHR. These are generally collected either directly from the patient at registration, or by a provider, but the mode of collection for each data point was not available. Our primary dataset consisted of a corpus of 800 clinic notes from 770 patients with cancer who received radiotherapy (RT) at the Department of Radiation Oncology at Brigham and Women’s Hospital/Dana-Farber Cancer Institute in Boston, Massachusetts, from 2015 to 2022. We also created two out-of-domain test datasets. First, we collected 200 clinic notes from 170 patients with cancer who were treated with immunotherapy at Dana-Farber Cancer Institute and were not present in the RT dataset. Second, we collected 200 notes from 183 patients in the MIMIC (Medical Information Mart for Intensive Care)-III database53,54,55, which includes data associated with patients admitted to the critical care units at Beth Israel Deaconess Medical Center in Boston, Massachusetts from 2001 to 2008. This study was approved by the Mass General Brigham institutional review board, and consent was waived as this was deemed exempt from human subjects research.

Only notes written by physicians, physician assistants, nurse practitioners, registered nurses, and social workers were included. To maintain a minimum threshold of information, we excluded notes with fewer than 150 tokens across all provider types. This helped ensure that the selected notes contained sufficient textual content. For notes written by all providers save social workers, we excluded notes containing any section longer than 500 tokens to avoid excessively lengthy sections that might have included less relevant or redundant information. For physician, physician assistant, and nurse practitioner notes, we used a customized medSpacy56,57 sectionizer to include only notes that contained at least one of the following sections: Assessment and Plan, Social History, and History/Subjective.

In addition, for the RT dataset, we established a date range, considering notes within a window of 30 days before the first treatment and 90 days after the last treatment. Additionally, in the fifth round of annotation, we specifically excluded notes from patients with zero social work notes. This decision ensured that we focused on individuals who had received social work intervention or had pertinent social context documented in their notes. For the immunotherapy dataset, we ensured that there was no patient overlap between RT and immunotherapy notes. We also specifically selected notes from patients with at least one social work note. To further refine the selection, we considered notes with a note date within one month before or after the patient’s first social work note. For the MIMIC-III dataset, only notes written by physicians, social workers, and nurses were included for analysis. We focused on patients who had at least one social work note, without any specific date range criteria.

Prior to annotation, all notes were segmented into sentences using the syntok58 sentence segmenter and were additionally split at bullet points (“•”). This method was used for all notes in the radiotherapy, immunotherapy, and MIMIC datasets for sentence-level annotation and subsequent classification.
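The segmentation step can be illustrated with a minimal, standard-library-only sketch. It splits a note at bullet characters and then into rough sentences. The regular expression is a naive stand-in for the syntok segmenter, and both the function name and the order of the two splits are assumptions.

```python
import re

BULLET = "\u2022"  # the bullet character used as a split point


def split_note(text):
    """Split a note at bullet points, then into rough sentences.

    The regex is a simplified stand-in for a real sentence
    segmenter such as syntok; it will mishandle abbreviations.
    """
    sentences = []
    for chunk in text.split(BULLET):
        # Break after ., ! or ? when followed by whitespace.
        for piece in re.split(r"(?<=[.!?])\s+", chunk.strip()):
            if piece:
                sentences.append(piece)
    return sentences


# Hypothetical note text.
print(split_note("Lives alone. Works part time. \u2022 No car \u2022 Daughter drives her."))
```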

Task definition and data labeling

We defined our label schema and classification tasks by first carrying out interviews with subject matter experts, including social workers, resource specialists, and oncologists, to determine SDoH that are clinically relevant but not readily available as structured data in the EHR, especially as dynamic features over time. After initial interviews, a set of exploratory pilot annotations was conducted on a subset of clinical notes and preliminary annotation guidelines were developed. The guidelines were then iteratively refined and finalized based on the pilot annotations and additional input from subject matter experts. The following SDoH categories and their attributes were selected for inclusion in the project: Employment status (employed, unemployed, underemployed, retired, disability, student), Housing issue (financial status, undomiciled, other), Transportation issue (distance, resource, other), Parental status (if the patient has a child under 18 years old), Relationship (married, partnered, widowed, divorced, single), and Social support (presence or absence of social support).

We defined two multilabel sentence-level classification tasks:

1. Any SDoH mentions: The presence of language describing an SDoH category as defined above, regardless of the attribute.

2. Adverse SDoH mentions: The presence or absence of language describing an SDoH category with an attribute that could create an additional social work or resource support need for patients:

   - Employment status: unemployed, underemployed, disability

   - Housing issue: financial status, undomiciled, other

   - Transportation issue: distance, resources, other

   - Parental status: having a child under 18 years old

   - Relationship: widowed, divorced, single

   - Social support: absence of social support
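The relationship between the two tasks can be sketched as a lookup over the category and attribute schema described above. In this sketch the category identifiers, the attribute spellings (taken from the schema paragraph), and the function name are illustrative choices, not the authors' actual label vocabulary.

```python
# Category -> all attributes (any SDoH mention task).
SCHEMA = {
    "EMPLOYMENT": {"employed", "unemployed", "underemployed",
                   "retired", "disability", "student"},
    "HOUSING": {"financial status", "undomiciled", "other"},
    "TRANSPORTATION": {"distance", "resource", "other"},
    "PARENT": {"child under 18"},
    "RELATIONSHIP": {"married", "partnered", "widowed", "divorced", "single"},
    "SUPPORT": {"presence", "absence"},
}

# Category -> attributes counted as adverse (adverse SDoH mention task).
ADVERSE = {
    "EMPLOYMENT": {"unemployed", "underemployed", "disability"},
    "HOUSING": {"financial status", "undomiciled", "other"},
    "TRANSPORTATION": {"distance", "resource", "other"},
    "PARENT": {"child under 18"},
    "RELATIONSHIP": {"widowed", "divorced", "single"},
    "SUPPORT": {"absence"},
}


def task_labels(annotations):
    """Map one sentence's (category, attribute) annotations to the
    label lists for the any-SDoH and adverse-SDoH tasks."""
    any_labels, adverse_labels = set(), set()
    for category, attribute in annotations:
        if attribute not in SCHEMA[category]:
            raise ValueError(f"unknown attribute {attribute!r} for {category}")
        any_labels.add(category)
        if attribute in ADVERSE[category]:
            adverse_labels.add(category)
    return sorted(any_labels), sorted(adverse_labels)


print(task_labels([("EMPLOYMENT", "retired"), ("RELATIONSHIP", "widowed")]))
```

A sentence mentioning retirement therefore counts for the any SDoH task but not for the adverse SDoH task.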

After finalizing the annotation guidelines, two annotators manually annotated the RT corpus. In total, ten thousand one hundred clinical notes were annotated line-by-line using the annotation software Multi-document Annotation Environment (MAE v2.2.13)59. A total of 300/800 (37.5%) of the notes underwent dual annotation by two data scientists across four rounds. After each round, the data scientists and an oncologist performed discussion-based adjudication. Before adjudication, dually annotated notes had a Krippendorff’s alpha agreement of 0.86 and Cohen’s Kappa of 0.86 for any SDoH mention categories. For adverse SDoH mentions, notes had a Krippendorff’s alpha agreement of 0.76 and Cohen’s Kappa of 0.76. Detailed agreement metrics are in Supplementary Tables 6 and 7. A single annotator then annotated the remaining radiotherapy notes, the immunotherapy dataset, and the MIMIC-III dataset. Table 5 describes the distribution of labels across the datasets.

The annotation/adjudication team was composed of one board-certified radiation oncologist who completed a postdoctoral fellowship in clinical natural language processing, a Master’s-level computational linguist with a Bachelor’s degree in linguistics and 1-year prior experience working specifically with clinical text, and a Master’s student in computational linguistics with a Bachelor’s degree in linguistics. The radiation oncologist and Master’s-level computational linguist led the development of the annotation guidelines, and trained the Master’s student in SDoH annotation over a period of 1 month via review of the annotation guidelines and iterative review of pilot annotations. During adjudication, if ambiguity remained, we consulted the two Resource Specialists on the research team for input.

Data augmentation

We employed synthetic data generation methods to assess the impact of data augmentation for the positive class, and also to enable an exploratory evaluation of proprietary large LMs that cannot be downloaded locally and thus cannot be used with protected health information. In round 1, the GPT-turbo-0301 (ChatGPT) version of GPT3.5 was prompted via the OpenAI60 API to generate new sentences for each SDoH category, using sentences from the annotation guidelines as references. In round 2, in order to generate more linguistic diversity, the sample synthetic sentences output from round 1 were taken as references to generate another set of synthetic sentences. One hundred sentences per category were generated in each round. Supplementary Table 8 shows the prompts for each sentence label type.

Synthetic test set generation

The 538 synthetic sentences generated by prompting in iteration 1 were manually validated and used to evaluate ChatGPT, which cannot be used with protected health information. Of these, human review found 480 to have any SDoH mention and 289 to have an adverse SDoH mention (Table 5). For all synthetic data generation methods, no real patient data were used in prompt development or fine-tuning.

Model development

The radiotherapy corpus was split into a 60%/20%/20% distribution for training, development, and testing respectively. The entire immunotherapy and MIMIC-III corpora were held-out for out-of-domain tests and were not used during model development.

The experimental phase of this study focused on investigating the effectiveness of different machine learning models and data settings for the classification of SDoH. We explored one multilabel BERT model as a baseline, namely bert-base-uncased61, as well as a range of Flan-T5 models62,63 including Flan-T5 base, large, XL, and XXL, where XL and XXL used a parameter-efficient tuning method (low-rank adaptation (LoRA)64). Binary cross-entropy loss with logits was used for BERT, and cross-entropy loss for the Flan-T5 models. Given the large class imbalance, non-SDoH sentences were undersampled during training. We assessed the impact of adding synthetic data on model performance. Details on model hyper-parameters are in Supplementary Methods.

For sequence-to-sequence models, the input consisted of the input sentence with “summarize” prepended, and the target label (when used during training) was the text span of the label from the target vocabulary. Because the output did not always exactly correspond to the target vocabulary, we post-processed the model output using a simple split function on “,” and a dictionary mapping of observed mis-generations, e.g., “RELAT → RELATIONSHIP”. Examples of this label resolution are in Supplementary Methods.
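The post-processing step might look like the sketch below. Only the comma split and the “RELAT → RELATIONSHIP” mapping come from the text; the full label vocabulary in `TARGET_VOCAB`, the case normalization, and the choice to drop unrecognized tokens are assumptions made for illustration.

```python
# Assumed label vocabulary; only PARENT and RELATIONSHIP appear verbatim in the text.
TARGET_VOCAB = {
    "EMPLOYMENT", "HOUSING", "TRANSPORTATION",
    "PARENT", "RELATIONSHIP", "SUPPORT",
}

# Mapping from observed mis-generations to valid labels.
# "RELAT" is the example given in the text; further entries would be
# added as they are observed.
MISGENERATION_FIXES = {"RELAT": "RELATIONSHIP"}


def resolve_labels(generated):
    """Turn raw generated text into a de-duplicated list of valid labels."""
    resolved = []
    for piece in generated.split(","):
        token = piece.strip().upper()
        token = MISGENERATION_FIXES.get(token, token)
        # Tokens outside the vocabulary are dropped (an assumption).
        if token in TARGET_VOCAB and token not in resolved:
            resolved.append(token)
    return resolved
```

For example, `resolve_labels("PARENT, RELAT")` returns `["PARENT", "RELATIONSHIP"]`.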

Ablation studies

Ablation studies were carried out to understand the impact of manually labeled training data quantity on performance when synthetic SDoH data is included in the training dataset. First, models were trained using 10%, 25%, 40%, 50%, 70%, 75%, and 90% of manually labeled sentences; both SDoH and non-SDoH sentences were reduced at the same rate. The evaluation was on the RT test set.
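One way to build the ablation training sets is sketched below: the manually labeled SDoH and non-SDoH sentences are each subsampled at the same fraction, and the synthetic sentences are added in full. The data layout, the random sampling, and the function names are assumptions; only the list of fractions comes from the text.

```python
import random

# Fractions of manually labeled data listed in the text.
FRACTIONS = [0.10, 0.25, 0.40, 0.50, 0.70, 0.75, 0.90]


def subsample_gold(gold, fraction, seed=0):
    """Subsample SDoH and non-SDoH sentences at the same rate.

    gold: list of (sentence, labels) pairs; empty labels = non-SDoH.
    """
    rng = random.Random(seed)
    positives = [ex for ex in gold if ex[1]]
    negatives = [ex for ex in gold if not ex[1]]
    kept = []
    for group in (positives, negatives):
        kept.extend(rng.sample(group, round(fraction * len(group))))
    return kept


def ablation_training_sets(gold, synthetic, seed=0):
    """Return one training set per fraction, each including all synthetic data."""
    return {
        fraction: subsample_gold(gold, fraction, seed) + list(synthetic)
        for fraction in FRACTIONS
    }
```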

Evaluation

During training and fine-tuning, we evaluated all models on the RT development set and assessed their final performance using bootstrap sampling of the held-out RT test set. The number and size of bootstrap samples were chosen to achieve a standard error of macro-F1 of ±0.01. The mean and 95% confidence intervals were calculated from the resulting bootstrap samples. We also sampled to ensure that the standard error of the 95% confidence interval limits was <0.01, as follows. Each bootstrap sample matched the test data in size and was drawn with replacement. We then computed the 5th and 95th percentile values for each of the k samples from the resulting distributions. The standard deviation of these percentile values was then used to establish the precision of the confidence interval limits. Examples of the bootstrap sampling calculations are in Supplementary Methods.
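A minimal sketch of the bootstrap procedure is given below, assuming a generic `metric(y_true, y_pred)` callable such as macro-F1. The number of resamples (`n_boot`), the seed, and the linear-interpolation percentile are assumptions; the default percentile bounds of 5 and 95 follow the values stated in the text and are exposed as parameters.

```python
import random


def percentile(sorted_values, q):
    """Linearly interpolated percentile (q in [0, 100]) of a sorted list."""
    position = (len(sorted_values) - 1) * q / 100
    lower = int(position)
    upper = min(lower + 1, len(sorted_values) - 1)
    weight = position - lower
    return sorted_values[lower] + (sorted_values[upper] - sorted_values[lower]) * weight


def bootstrap_metric(y_true, y_pred, metric, n_boot=1000,
                     lower_q=5, upper_q=95, seed=0):
    """Return (mean, lower bound, upper bound) of a metric over bootstrap samples.

    Each resample has the same size as the test set and is drawn
    with replacement, as described in the text.
    """
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        scores.append(metric([y_true[i] for i in idx],
                             [y_pred[i] for i in idx]))
    scores.sort()
    mean = sum(scores) / len(scores)
    return mean, percentile(scores, lower_q), percentile(scores, upper_q)
```

Repeating this procedure k times and taking the standard deviation of the resulting bounds gives the precision check on the interval limits that the text describes.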

For each classification task, we calculated precision/positive predictive value, recall/sensitivity, and F1 (harmonic mean of recall and precision) as follows:

- Precision = TP/(TP + FP)
- Recall = TP/(TP + FN)
- F1 = (2*Precision*Recall)/(Precision+Recall)
- TP = true positives, FP = false positives, FN = false negatives
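The metric definitions above translate directly into code. The sketch below computes them per label and averages F1 across labels to obtain macro-F1 for a multilabel task. Representing each sentence's labels as a set, and returning 0 when a denominator is zero, are assumptions rather than details from the source.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = (2 * precision * recall) / (precision + recall)
    return precision, recall, f1


def macro_f1(y_true, y_pred, labels):
    """Unweighted mean of per-label F1.

    y_true, y_pred: lists of label sets, one set per sentence.
    """
    f1_scores = []
    for label in labels:
        tp = sum(label in t and label in p for t, p in zip(y_true, y_pred))
        fp = sum(label not in t and label in p for t, p in zip(y_true, y_pred))
        fn = sum(label in t and label not in p for t, p in zip(y_true, y_pred))
        f1_scores.append(precision_recall_f1(tp, fp, fn)[2])
    return sum(f1_scores) / len(f1_scores)
```

Because macro-F1 weights every label equally, a rare category with poor performance lowers the score as much as a common one, which matters under the class imbalance described earlier.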

Manual error analysis was conducted on the radiotherapy dataset using the best-performing model.

ChatGPT-family model evaluation

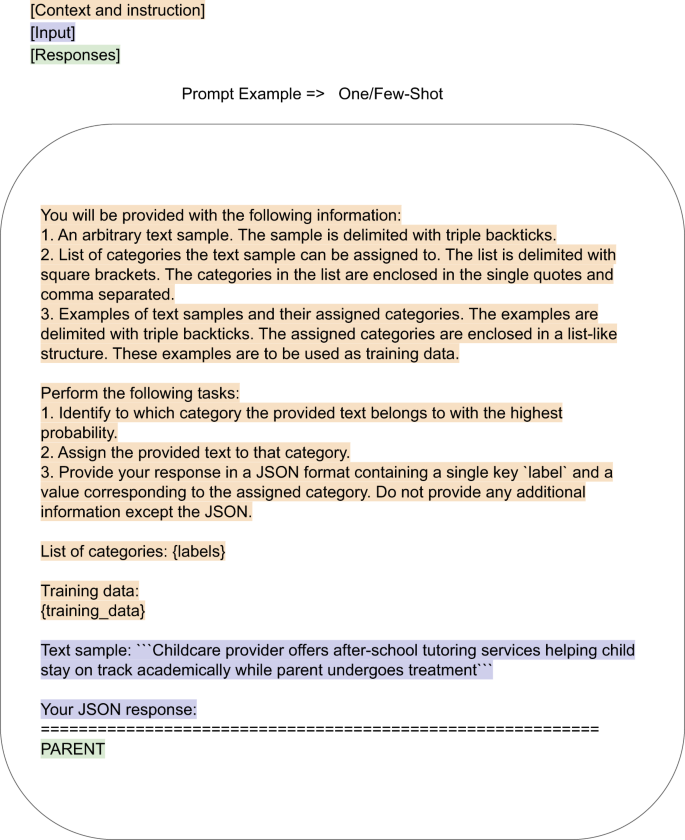

To evaluate ChatGPT, the Scikit-LLM65 multilabel zero-shot classifier and few-shot binary classifier were adapted to form a multilabel zero- and few-shot classifier (Fig. 4). A subset of 480 synthetic sentences, whose labels were manually validated, was used for testing. Test sentences were inserted into the following prompt template, which instructs ChatGPT to act as a multilabel classifier model and to label the sentences accordingly:

“Sample input: [TEXT]

Sample target: [LABELS]”

[TEXT] was the exemplar from the development/exemplar set.

[LABELS] was a comma-separated list of the labels for that exemplar, e.g. PARENT,RELATIONSHIP.
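To make the template concrete, the sketch below assembles a zero- or few-shot prompt from exemplars in the “Sample input / Sample target” format. The instruction wording and the function names are hypothetical and do not reproduce the Scikit-LLM prompt; only the exemplar format and the comma-separated label list come from the text.

```python
def format_exemplar(text, labels):
    """Render one exemplar in the 'Sample input / Sample target' format."""
    return "Sample input: {}\nSample target: {}".format(text, ",".join(labels))


def build_prompt(sentence, candidate_labels, exemplars=()):
    """Assemble a multilabel classification prompt.

    exemplars: iterable of (text, labels) pairs; leave empty for zero-shot.
    The instruction text below is a placeholder, not the wording used
    by the authors or by the Scikit-LLM package.
    """
    instruction = (
        "You are a multilabel text classifier. Assign every applicable "
        "label from this list: " + ", ".join(candidate_labels) + "."
    )
    parts = [instruction]
    parts.extend(format_exemplar(text, labels) for text, labels in exemplars)
    # The sentence to classify ends with an open target for the model to fill.
    parts.append("Sample input: {}\nSample target:".format(sentence))
    return "\n\n".join(parts)
```

Passing ten `(text, labels)` pairs as `exemplars` would correspond to the 10-shot setting, while an empty sequence gives the zero-shot prompt.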

Example of prompt templates used in the SKLLM package for GPT-turbo-0301 (GPT3.5) and GPT4 with temperature 0 to classify our labeled synthetic data. {labels} and {training_data} were sampled from a separate synthetic dataset, which was not human-annotated. The final label output is highlighted in green.

Of note, because we were unable to generate high-quality synthetic non-SDoH sentences, these classifiers did not include a negative class. We evaluated the most current ChatGPT model freely available at the time of this work, GPT-turbo-0613, as well as GPT4–0613, via the OpenAI API with temperature 0 for reproducibility.

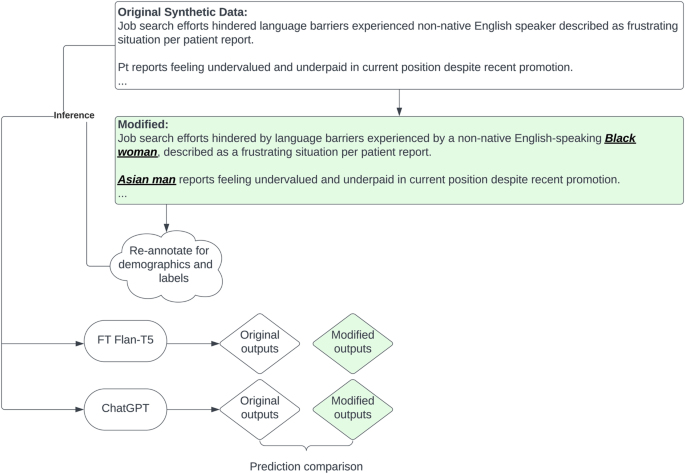

Language model bias evaluation

To test for bias in our best-performing models and in large LMs pre-trained on general text, we used GPT4 to insert demographic descriptors into our synthetic data, as illustrated in Fig. 5. GPT4 was supplied with our synthetically generated test sentences and prompted to insert demographic information into them. For example, a sentence starting with “Widower admits fears surrounding potential judgment…” might become “Hispanic widower admits fears surrounding potential judgment…”. The prompt was as follows (sentences were submitted in batches of 10 to ensure demographic variation):

“role”: “user”, “content”: “[ORIGINAL SENTENCE]\n swap the sentences patients above to one of the race/ethnicity [Asian, Black, white, Hispanic] and gender, and put the modified race and gender in bracket at the beginning like this \n Owner operator food truck selling gourmet grilled cheese sandwiches around town => \n [Asian female] Asian woman owner operator of a food truck selling gourmet grilled cheese sandwiches around town”

[ORIGINAL SENTENCE] was a sentence from a selected subset of our GPT3.5-generated synthetic data.

These sentences were then manually validated; 419 had any SDoH mention, and 253 had an adverse SDoH mention.
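Because the prompt asks GPT4 to put the inserted race and gender in brackets at the start of each rewritten sentence, the output can be split into a demographic tag and the modified sentence. The sketch below is one assumed way to do this; the regular expression and the choice to return `None` for untagged lines are illustrative, not taken from the source.

```python
import re

# Matches "[tag] rest of sentence", as in the example given in the prompt.
TAGGED_LINE = re.compile(r"^\s*\[([^\]]+)\]\s*(.+)$", re.DOTALL)


def parse_injected(line):
    """Split '[Asian female] Asian woman ...' into (tag, sentence).

    Returns None if the line does not start with a bracketed tag,
    so that malformed generations can be flagged for manual review.
    """
    match = TAGGED_LINE.match(line)
    if match is None:
        return None
    return match.group(1).strip(), match.group(2).strip()
```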

Comparison with structured EHR data

To assess the completeness of SDoH documentation in structured versus unstructured EHR data, we collected Z-codes for all patients in our test set. Z-codes are SDoH-related ICD-10-CM diagnostic codes; we used those that mapped most closely to our SDoH categories and were present as structured data for the radiotherapy dataset (Supplementary Table 9). Text-extracted patient-level SDoH information was defined as the presence of one or more labels in any note. We compared these patient-level labels to structured Z-codes entered in the EHR during the same time frame.
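The patient-level definition (one or more labels in any note) and the comparison against Z-codes can be sketched as follows. The data layout, the function names, and the use of a reference set of patients as the denominator are assumptions made for illustration.

```python
from collections import defaultdict


def patient_level_labels(note_predictions):
    """Union the labels of all notes belonging to each patient.

    note_predictions: iterable of (patient_id, labels) pairs, one per note.
    """
    by_patient = defaultdict(set)
    for patient_id, labels in note_predictions:
        by_patient[patient_id].update(labels)
    return dict(by_patient)


def capture_rate(reference_patients, found_patients):
    """Fraction of reference patients that a given source identifies."""
    reference = set(reference_patients)
    if not reference:
        return 0.0
    return len(reference & set(found_patients)) / len(reference)
```

Calling `capture_rate` once with the patients flagged from text and once with the patients who have a mapped Z-code gives two rates over the same denominator, which is the form of the comparison reported in the abstract.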

Statistical analysis

Macro-F1 performance for each model type developed with and without synthetic data, and for the ChatGPT-family model comparisons, was compared using the Mann–Whitney U test. The rate of discrepant SDoH classifications with and without the injection of demographic information was compared between the best-performing fine-tuned models and ChatGPT using chi-squared tests for multi-class comparisons and 2-proportion z tests for binary comparisons. A two-sided P ≤ 0.05 was considered statistically significant. Statistical analyses were carried out using the statistics package in SciPy (scipy.org). Python version 3.9.16 (Python Software Foundation) was used to carry out this work.
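For the binary comparison, the discrepancy rate and a two-sided 2-proportion z test can be sketched with the standard library alone. This is an illustrative pooled-variance implementation, not the SciPy-based analysis the authors ran; the function names and the definition of a discrepancy as any change in the predicted label set are assumptions.

```python
import math


def count_discrepant(preds_original, preds_injected):
    """Number of sentence pairs whose predicted label sets differ."""
    return sum(set(a) != set(b) for a, b in zip(preds_original, preds_injected))


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z test for the difference between two proportions.

    x1, x2: counts of discrepant predictions for each model.
    n1, n2: numbers of sentence pairs evaluated for each model.
    Returns (z, p_value) using the pooled standard error.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0  # identical, degenerate proportions
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```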

Data availability

The RT and immunotherapy datasets cannot be shared for the privacy of the individuals whose data were used in this study. All synthetic datasets used in this study are available at: https://github.com/AIM-Harvard/SDoH. The annotated MIMIC-III dataset is available after completion of a data use agreement at: https://doi.org/10.13026/6149-mb25 (ref. 66). The demographic-injected paired sentence dataset is available at: https://huggingface.co/datasets/m720/SHADR (ref. 67).

Code availability

The final annotation guidelines and all synthetic datasets used in this study are available at: https://github.com/AIM-Harvard/SDoH.

References

Lavizzo-Mourey, R. J., Besser, R. E. & Williams, D. R. Understanding and mitigating health inequities - past, current, and future directions. N. Engl. J. Med 384, 1681–1684 (2021).

Chetty, R. et al. The association between income and life expectancy in the United States, 2001-2014. JAMA 315, 1750–1766 (2016).

Caraballo, C. et al. Excess mortality and years of potential life lost among the black population in the US, 1999-2020. JAMA 329, 1662–1670 (2023).

Social determinants of health. http://www.who.int/social_determinants/sdh_definition/en/.

Franke, H. A. Toxic stress: effects, prevention and treatment. Children 1, 390–402 (2014).

Nelson, C. A. et al. Adversity in childhood is linked to mental and physical health throughout life. BMJ 371, m3048 (2020).

Shonkoff, J. P. & Garner, A. S. Committee on psychosocial aspects of child and family health, committee on early childhood, adoption, and dependent care & section on developmental and behavioral pediatrics. the lifelong effects of early childhood adversity and toxic stress. Pediatrics 129, e232–e246 (2012).

Turner-Cobb, J. M., Sephton, S. E., Koopman, C., Blake-Mortimer, J. & Spiegel, D. Social support and salivary cortisol in women with metastatic breast cancer. Psychosom. Med. 62, 337–345 (2000).

Hood, C. M., Gennuso, K. P., Swain, G. R. & Catlin, B. B. County health rankings: relationships between determinant factors and health outcomes. Am. J. Prev. Med 50, 129–135 (2016).

Truong, H. P. et al. Utilization of social determinants of health ICD-10 Z-codes among hospitalized patients in the United States, 2016-2017. Med. Care 58, 1037–1043 (2020).

Heidari, E., Zalmai, R., Richards, K., Sakthisivabalan, L. & Brown, C. Z-code documentation to identify social determinants of health among medicaid beneficiaries. Res. Soc. Adm. Pharm. 19, 180–183 (2023).

Wang, M., Pantell, M. S., Gottlieb, L. M. & Adler-Milstein, J. Documentation and review of social determinants of health data in the EHR: measures and associated insights. J. Am. Med. Inform. Assoc. 28, 2608–2616 (2021).

Conway, M. et al. Moonstone: a novel natural language processing system for inferring social risk from clinical narratives. J. Biomed. Semant. 10, 1–10 (2019).

Bejan, C. A. et al. Mining 100 million notes to find homelessness and adverse childhood experiences: 2 case studies of rare and severe social determinants of health in electronic health records. J. Am. Med. Inform. Assoc. 25, 61–71 (2017).

Topaz, M., Murga, L., Bar-Bachar, O., Cato, K. & Collins, S. Extracting alcohol and substance abuse status from clinical notes: the added value of nursing data. Stud. Health Technol. Inform. 264, 1056–1060 (2019).

Gundlapalli, A. V. et al. Using natural language processing on the free text of clinical documents to screen for evidence of homelessness among US veterans. AMIA Annu. Symp. Proc. 2013, 537–546 (2013).

Hammond, K. W., Ben-Ari, A. Y., Laundry, R. J., Boyko, E. J. & Samore, M. H. The feasibility of using large-scale text mining to detect adverse childhood experiences in a VA-treated population. J. Trauma. Stress 28, 505–514 (2015).

Han, S. et al. Classifying social determinants of health from unstructured electronic health records using deep learning-based natural language processing. J. Biomed. Inform. 127, 103984 (2022).

Rouillard, C. J., Nasser, M. A., Hu, H. & Roblin, D. W. Evaluation of a natural language processing approach to identify social determinants of health in electronic health records in a diverse community cohort. Med. Care 60, 248–255 (2022).

Feller, D. J. et al. Detecting social and behavioral determinants of health with structured and free-text clinical data. Appl. Clin. Inform. 11, 172–181 (2020).

Yu, Z. et al. A study of social and behavioral determinants of health in lung cancer patients using transformers-based natural language processing models. AMIA Annu. Symp. Proc. 2021, 1225–1233 (2021).

Lybarger, K. et al. Leveraging natural language processing to augment structured social determinants of health data in the electronic health record. J. Am. Med. Inform. Assoc. 30, 1389–1397 (2023).

Patra, B. G. et al. Extracting social determinants of health from electronic health records using natural language processing: a systematic review. J. Am. Med. Inform. Assoc. 28, 2716–2727 (2021).

Xu, D., Chen, S. & Miller, T. BCH-NLP at BioCreative VII Track 3: medications detection in tweets using transformer networks and multi-task learning. Preprint at https://arxiv.org/abs/2111.13726 (2021).

Chen, S. et al. Natural language processing to automatically extract the presence and severity of esophagitis in notes of patients undergoing radiotherapy. JCO Clin. Cancer Inf. 7, e2300048 (2023).

Tan, R. S. Y. C. et al. Inferring cancer disease response from radiology reports using large language models with data augmentation and prompting. J. Am. Med Inf. Assoc. 30, 1657–1664 (2023).

Jung, J. et al. Impossible distillation: from low-quality model to high-quality dataset & model for summarization and paraphrasing. Preprint at https://arxiv.org/pdf/2305.16635.pdf (2023).

Lett, E. & La Cava, W. G. Translating intersectionality to fair machine learning in health sciences. Nat. Mach. Intell. 5, 476–479 (2023).

Li, J. et al. Are synthetic clinical notes useful for real natural language processing tasks: a case study on clinical entity recognition. J. Am. Med. Inform. Assoc. 28, 2193–2201 (2021).

Chen, R. J., Lu, M. Y., Chen, T. Y., Williamson, D. F. K. & Mahmood, F. Synthetic data in machine learning for medicine and healthcare. Nat. Biomed. Eng. 5, 493–497 (2021).

Jacobs, F. et al. Opportunities and challenges of synthetic data generation in oncology. JCO Clin. Cancer Inf. 7, e2300045 (2023).

Chen, S. et al. Evaluation of ChatGPT family of models for biomedical reasoning and classification. Preprint at https://arxiv.org/abs/2304.02496 (2023).

Lehman, E. et al. Do we still need clinical language models? arXiv https://arxiv.org/abs/2302.08091 (2023).

Ramachandran, G. K. et al. Prompt-based extraction of social determinants of health using few-shot learning. In: Proceedings of the 5th Clinical Natural Language Processing Workshop, 385–393 (Association for Computational Linguistics, 2023).

Feng, S., Park, C. Y., Liu, Y. & Tsvetkov, Y. From pretraining data to language models to downstream tasks: tracking the trails of political biases leading to unfair NLP models. In: Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) 11737–11762 (Association for Computational Linguistics, 2023).

Zhao, J., Wang, T., Yatskar, M., Ordonez, V. & Chang, K.-W. Men also like shopping: reducing gender bias amplification using corpus-level constraints. In: Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing 2979–2989 (Association for Computational Linguistics, 2017).

Caliskan, A., Bryson, J. J. & Narayanan, A. Semantics derived automatically from language corpora contain human-like biases. Science 356, 183–186 (2017).

Davidson, T., Warmsley, D., Macy, M. & Weber, I. Automated hate speech detection and the problem of offensive language. In Proceedings of the Eleventh International AAAI Conference on Web and Social Media. 512–515 (Association for the Advancement of Artificial Intelligence, 2017).

Kharrazi, H. et al. The value of unstructured electronic health record data in geriatric syndrome case identification. J. Am. Geriatr. Soc. 66, 1499–1507 (2018).

Derton, A. et al. Natural language processing methods to empirically explore social contexts and needs in cancer patient notes. JCO Clin. Cancer Inf. 7, e2200196 (2023).

Lybarger, K., Yetisgen, M. & Uzuner, Ö. The 2022 n2c2/UW shared task on extracting social determinants of health. J. Am. Med. Inform. Assoc. 30, 1367–1378 (2023).

Romanowski, B., Ben Abacha, A. & Fan, Y. Extracting social determinants of health from clinical note text with classification and sequence-to-sequence approaches. J. Am. Med. Inform. Assoc. 30, 1448–1455 (2023).

Hatef, E. et al. Assessing the availability of data on social and behavioral determinants in structured and unstructured electronic health records: a retrospective analysis of a multilevel health care system. JMIR Med. Inf. 7, e13802 (2019).

Greenwald, J. L., Cronin, P. R., Carballo, V., Danaei, G. & Choy, G. A novel model for predicting rehospitalization risk incorporating physical function, cognitive status, and psychosocial support using natural language processing. Med. Care 55, 261–266 (2017).

Blosnich, J. R. et al. Social determinants and military veterans’ suicide ideation and attempt: a cross-sectional analysis of electronic health record data. J. Gen. Intern. Med. 35, 1759–1767 (2020).

Wray, C. M. et al. Examining the interfacility variation of social determinants of health in the veterans health administration. Fed. Pract. 38, 15–19 (2021).

Wang, L. et al. Disease trajectories and end-of-life care for dementias: latent topic modeling and trend analysis using clinical notes. AMIA Annu. Symp. Proc. 2018, 1056–1065 (2018).

Navathe, A. S. et al. Hospital readmission and social risk factors identified from physician notes. Health Serv. Res. 53, 1110–1136 (2018).

Kroenke, C. H., Kubzansky, L. D., Schernhammer, E. S., Holmes, M. D. & Kawachi, I. Social networks, social support, and survival after breast cancer diagnosis. J. Clin. Oncol. 24, 1105–1111 (2006).

Maunsell, E., Brisson, J. & Deschênes, L. Social support and survival among women with breast cancer. Cancer 76, 631–637 (1995).

Schulz, R. & Beach, S. R. Caregiving as a risk factor for mortality: the Caregiver health effects study. JAMA 282, 2215–2219 (1999).

Hovy, D. & Prabhumoye, S. Five sources of bias in natural language processing. Lang. Linguist. Compass 15, e12432 (2021).

Johnson, A., Pollard, T. & Mark, R. MIMIC-III Clin. database https://doi.org/10.13026/C2XW26 (2023).

Johnson, A. E. W. et al. MIMIC-III, a freely accessible critical care database. Sci. Data 3, 160035 (2016).

Goldberger, A. et al. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 101, e215–e220 (2000).

Eyre, H. et al. Launching into clinical space with medspaCy: a new clinical text processing toolkit in Python. AMIA Annu. Symp. Proc. 2021, 438–447 (2021).

MedspaCy · spaCy universe. medspaCy https://spacy.io/universe/project/medspacy.

Leitner, F. syntok: Text tokenization and sentence segmentation (segtok v2). (Github).

Multi-document annotation environment. MAE https://keighrim.github.io/mae-annotation/.

OpenAI API. http://platform.openai.com.

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Vol. 1 (Long and Short Papers) 4171–4186 (Association for Computational Linguistics, 2019).

Chung, H. W. et al. Scaling instruction-finetuned language models. Preprint at https://arxiv.org/abs/2210.11416 (2022).

Longpre, S. et al. The flan collection: designing data and methods for effective instruction tuning. arXiv https://arxiv.org/abs/2301.13688 (2023).

Hu, E. J. et al. LoRA: Low-Rank Adaptation of Large Language Models. International Conference on Learning Representations (2022).

Kondrashchenko, I. scikit-llm: seamlessly integrate powerful language models like ChatGPT into scikit-learn for enhanced text analysis tasks. (Github).

Guevara, M. et al. Annotation dataset of social determinants of health from MIMIC-III Clinical Care Database. Physionet, 1.0.0, https://doi.org/10.13026/6149-mb25 (2023).

Guevara, M. et al. SDoH Human Annotated Demographic Robustness (SHADR) Dataset. Huggingface, 2308.06354 (2023).

Acknowledgements

The authors acknowledge the following funding sources: D.S.B.: Woods Foundation, Jay Harris Junior Faculty Award, Joint Center for Radiation Therapy Foundation. T.L.C.: Radiation Oncology Institute, Conquer Cancer Foundation, Radiological Society of North America. I.F.: Diversity Supplement (NIH-3R01CA240582-01A1S1), NIH/NCI LRP, NRG Oncology Health Equity ASTRO/RTOG Fellow, CDA BWH Center for Diversity and Inclusion. G.K.S.: R01LM013486 from the National Library of Medicine, National Institute of Health. R.H.M.: National Institute of Health, ViewRay. H.A.: NIH-USA U24CA194354, NIH-USA U01CA190234, NIH-USA U01CA209414, and NIH-USA R35CA22052, and the European Union - European Research Council (866504). S.C., M.G., B.K., H.A., G.K.S., and D.S.B.: NIH-USA U54CA274516-01A1.

Author information

Contributions

M.G. and S.C.: conceptualization, data curation, formal analysis, investigation, methodology, visualization, writing—original draft, writing—review & editing. S.T.: data curation, formal analysis, investigation, methodology. T.L.C., I.F., B.H.K., S.M., J.M.Q.: data curation, investigation, writing—review & editing. M.G. and S.H.: data curation, methodology. H.J.W.L.A.: funding acquisition, writing—review & editing. P.J.C., G.K.S., and R.H.M.: conceptualization, investigation, methodology, writing—review & editing. D.S.B.: funding acquisition, conceptualization, data curation, formal analysis, investigation, methodology, supervision, writing—original draft, writing—review & editing.

Ethics declarations

Competing interests

M.G., S.C., S.T., T.L.C., I.F., B.H.K., S.M., J.M.Q., M.G., S.H.: none. H.J.W.L.A.: advisory and consulting, unrelated to this work (Onc.AI, Love Health Inc, Sphera, Editas, A.Z., and BMS). P.J.C. and G.K.S.: None. R.H.M.: advisory board (ViewRay, AstraZeneca), Consulting (Varian Medical Systems, Sio Capital Management), Honorarium (Novartis, Springer Nature). D.S.B.: Associate Editor of Radiation Oncology, HemOnc.org (no financial compensation, unrelated to this work); funding from American Association for Cancer Research (unrelated to this work).

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guevara, M., Chen, S., Thomas, S. et al. Large language models to identify social determinants of health in electronic health records. npj Digit. Med. 7, 6 (2024). https://doi.org/10.1038/s41746-023-00970-0
